Abstract

In recent years, smartphone users have increasingly watched live video and shared video content through online platforms (e.g., YouTube, Netflix). Continuous video streaming on mobile devices faces many challenges in network parameters, namely bandwidth estimation, congestion window, throughput, and delay, and transcoding is itself a challenging and time-consuming task. Performing these resource-intensive tasks on the mobile device is complicated; hence, the cloud is integrated with smartphones to provide Mobile Cloud Computing (MCC). To resolve these issues, we propose a novel framework called delay-aware bandwidth estimation and intelligent video transcoder in the mobile cloud. In this paper, we introduce four techniques, namely Markov Mobile Bandwidth Cloud Estimation (MMBCE), Cloud Dynamic Congestion Window (CDCW), Queue-based Video Processing for Cloud Server (QVPS), and Intelligent Video Transcoding for selecting Server (IVTS). To evaluate the performance of the proposed algorithms, we implemented a testbed using two mobile configurations and the Amazon Web Services (AWS) public cloud. The results in a real environment demonstrate that the proposed framework improves the QoS requirements and outperforms the existing algorithms. First, MMBCE uses the well-known Markov Decision Process (MDP) model to estimate the best bandwidth of a mobile device using a reward function, and improves the packet delivery ratio (PDR) by 50% compared with other algorithms. CDCW fits the congestion window and dynamically reduces packet loss; it produces 40% more goodput with a minimal packet loss ratio (PLR). Next, QVPS applies the M/M/S queueing model to reduce the video processing delay and calculate the total service time. Finally, IVTS applies the M/G/N model and reduces the utilization of the transcoding workload by 6% by intelligently selecting the transcoding server with the minimum workload. IVTS takes less time in both slow and fast modes.
The performance analysis and experimental evaluation show that the queueing model reduces the delay by 0.2 ms and the server's utilization by 20%. Hence, in this work, the cloud effectively minimizes delay to deliver good-quality video streaming on mobile devices.


1 Introduction

Traditional mobile phones play a significant role in effective communication, especially as a transmission medium. Although they support heterogeneous networks, they do not support many multimedia applications [1, 2]. Smartphone networks such as 5G and 6G, by contrast, support video streaming. The work in [3] briefly discusses the 6G wireless network, which operates with current technologies such as IoT, cloud and edge computing, and Big Data. To handle smart and secure buildings, the same work proposes a mechanism called Secure Cache Decision System (CDS) for wireless networks, which provides a safer internet service for data sharing and scalability [3]. Kaium et al. discuss the future of IoT management in the context of cloud, fog, and mobile edge computing [4]. Ahmed et al. provide a mechanism that helps IoT applications use fog computing services such as low latency. This model is mainly designed to achieve scalability, and partial validation is committed only in read and write transactions [5].

A smartphone must deliver good-quality video to its viewers. Delay and network performance are among the multimedia challenges faced by streaming video [6]. Apart from these QoS (Quality of Service) challenges, smartphones must cope with device constraints such as battery life and energy consumption, as well as network issues such as low bandwidth, latency, and packet loss. One solution is therefore to integrate smartphones with the cloud. Mobile Cloud Computing (MCC) provides on-demand services, and resource-constrained mobile devices offload tasks to the cloud, which saves energy and battery power on the device. The work in [7] offloads mobile application code to the cloud, which is then responsible for executing the tasks independently and in parallel. This reduces the battery consumption and execution time on the mobile device [8,9,10]. In recent years, cloud-based IoT applications have become useful in day-to-day life. For example, [11] introduces blockchain-based authentication and authorization services for smart city applications: security information is decentralized across the smart city ecosystem, and blockchain technology is used for management and access control, integrated within the FIWARE platform. Another work proposes a novel algorithm for watermark embedding and extraction using a synergetic neural network, which achieves a better PSNR efficiently compared with other models [12].

Lai et al. focus mainly on delay and packet loss as network parameters [13]. Hence, the available bandwidth must be estimated based on the profile of the mobile, that is, the history of the mobile already registered in the cloud server. The mobile user activates the cloud monitoring services, and the device manager maintains QoS parameters such as bandwidth, link capacity, network status, signal strength, buffering stream, and energy. Whenever the device state changes, the device information of the registered mobile user is updated in the cloud server, and the congestion window is fitted to the estimated bandwidth. There is a large body of research on bandwidth estimation [14], and many TCP congestion control algorithms exist in the literature [15,16,17,18,19,20]. Lai et al. show that reducing buffering in the transport layer helps deliver good-quality live video streaming on mobile devices [13]. For cloud networks, Leong et al. use the Round Trip Time (RTT) and video segments as inputs for bandwidth estimation in heterogeneous networks [21].

We propose MMBCE to estimate the bandwidth of a mobile device using an MDP. Here, the MDP models the available bandwidth of each video request from the mobile, with different actions and reward functions for each region or state. The reward function is derived with the Best First Reward Search (BFRS) algorithm using heuristic values, and the cloud server dynamically fits the congestion window. After the bandwidth is estimated, the proposed QVPS schedules video processing in the cloud server according to the estimated bandwidth, the arrival rate of video requests, and the queue length of each video server, using the M/M/S queueing model. QVPS identifies the video server with the minimum queue length to process each video request. This significantly reduces the computational workload and processing time of each video processor, which directly reduces the video processing delay. We also propose IVTS, which calculates the average output video chunk size and the average transcoding time of the server, and decides between transcoding modes by comparing the maximum delay with the average delay and the maximum chunk size with the average chunk size. After mode selection, the transcoding server selects the optimal transcoder. Thus, IVTS reduces both transcoding delay and resource utilization.

1.1 Contribution

1. We propose the MMBCE algorithm to estimate the bandwidth of the mobile in the cloud network.
2. We propose CDCW, a new congestion control algorithm that fits the congestion window dynamically in the cloud.
3. We compare MMBCE with the existing TCPHas, TCPW, Cubic, and Reno algorithms in terms of bandwidth, packet delivery ratio, and throughput.
4. We compare CDCW with existing congestion control algorithms in terms of PLR reduction and congestion window size.
5. We propose QVPS, which uses the M/M/S queueing model to schedule video processing and to minimize video server utilization and processing time.
6. We propose IVTS, which intelligently selects an optimal transcoding server; a comparative analysis with existing work shows that it reduces the transcoding delay.
7. Overall, the proposed algorithms improve the network QoS parameters and deliver high-quality video streaming in the mobile cloud.

This paper is organized as follows: Section 2 briefly covers background in three areas: (1) bandwidth estimation and congestion window, (2) queueing models, and (3) transcoding. Section 3 describes the proposed framework of delay-aware bandwidth estimation and intelligent video transcoder in the mobile cloud in detail. Section 4 covers the experimental setup, a case study, and performance metrics, and Section 5 presents the results and discussion. Finally, Section 6 concludes the paper with future work.

2 Related work

In this section, we review the Network QoS parameters, namely, Bandwidth estimation and congestion window, Queueing Model for video processing, and Transcoding.

2.1 Bandwidth estimation and congestion window

We first address bandwidth estimation in wireless networks and its influence on the parameters of TCP congestion control variants. Well-known TCP variants, namely Reno [22], Binary Increase Congestion control (BIC) [23], CUBIC [24], TCP Westwood (TCPW) [25], and TcpHas [26], have been analyzed and compared with the proposed work. The working of each algorithm is discussed below.

Reno

Among the TCP congestion control variants, TCP Tahoe detects packet loss only after a retransmission timer expires; the algorithm then slows down and the transmission rate decreases [15, 27, 28]. Tahoe uses the Slow Start, Congestion Avoidance, and Fast Retransmit techniques. Reno is similar to Tahoe, but it also treats the arrival of three duplicate acknowledgments (ACKs) at the sender as a sign of packet loss. Its Fast Recovery algorithm overcomes the drawback of waiting for a timeout by setting a threshold value and retransmitting the lost packet: after the third duplicate ACK, the threshold is set to half of the congestion window, and the congestion window is set to this threshold plus three times the Maximum Segment Size (MSS) [29], which reduces packet loss. However, in experimental scenarios where multiple packets are lost in the same window, Reno's throughput degrades. To address these challenges, a queueing delay-based rate control algorithm has been introduced. Lin et al. and Wang et al. observe that Reno cannot identify the impact of a packet loss, so full link utilization may not be achieved [30, 31]. Our analysis shows that Reno achieves low bandwidth with minimal delay. To improve on this, we move on to BIC.
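The Reno window rules described above can be sketched as a small state machine. This is a minimal illustration in the spirit of RFC 5681, not the implementation evaluated in this paper: the window is counted in segments (MSS units), FlightSize is approximated by cwnd, and the class name `RenoState` and its initial values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RenoState:
    cwnd: float = 1.0       # congestion window, in segments (MSS units)
    ssthresh: float = 64.0  # slow-start threshold, in segments (illustrative value)
    dup_acks: int = 0       # consecutive duplicate ACKs seen

    def on_new_ack(self) -> None:
        """Handle an ACK that acknowledges new data."""
        if self.dup_acks >= 3:
            # Leaving fast recovery: deflate the window back to ssthresh.
            self.cwnd = self.ssthresh
        elif self.cwnd < self.ssthresh:
            self.cwnd += 1.0              # slow start: +1 MSS per ACK
        else:
            self.cwnd += 1.0 / self.cwnd  # congestion avoidance: about +1 MSS per RTT
        self.dup_acks = 0

    def on_dup_ack(self) -> None:
        """Handle a duplicate ACK (fast retransmit / fast recovery)."""
        self.dup_acks += 1
        if self.dup_acks == 3:
            # Third duplicate ACK: halve the threshold, then add 3 MSS.
            self.ssthresh = max(self.cwnd / 2.0, 2.0)
            self.cwnd = self.ssthresh + 3.0
        elif self.dup_acks > 3:
            self.cwnd += 1.0              # inflate for each further duplicate ACK

    def on_timeout(self) -> None:
        """Retransmission timeout: fall back to slow start (Tahoe-like)."""
        self.ssthresh = max(self.cwnd / 2.0, 2.0)
        self.cwnd = 1.0
        self.dup_acks = 0
```

Starting from `cwnd = 20`, three duplicate ACKs give `ssthresh = 10` and `cwnd = 13`; the next new ACK deflates the window to 10. Because one halving is applied per recovery episode, several losses in the same window trigger repeated halvings or a timeout, which is the throughput problem noted above.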

BIC

Next, we discuss BIC, a packet loss-based TCP congestion control algorithm [23, 32]. BIC uses a binary search to find the target window: it searches between a minimum window and the maximum window size (the window at the last packet loss) and sets the window to the midpoint of the two. To simplify window growth and improve TCP friendliness, BIC was later extended into CUBIC.
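The binary-search increase of BIC can be sketched as follows. This is a simplified illustration that assumes no losses occur during growth, so the lower bound always moves up to the new window; the cap `s_max`, the stopping threshold `s_min`, and the function name `bic_growth` are illustrative assumptions, and BIC's "max probing" phase beyond the old maximum is not modeled.

```python
from typing import List


def bic_growth(w_min: float, w_max: float, s_max: float = 32.0,
               s_min: float = 0.01, rtts: int = 10) -> List[float]:
    """Trace the window over several RTTs of BIC's binary-search increase.

    w_min: window just after the last reduction (segments)
    w_max: window at which the last loss happened (segments)
    """
    cwnd = w_min
    trace = [cwnd]
    for _ in range(rtts):
        if w_max - w_min <= s_min:
            break  # search interval has converged on w_max
        midpoint = (w_min + w_max) / 2.0
        # Jump to the midpoint, but never grow by more than s_max per RTT.
        cwnd = min(midpoint, cwnd + s_max)
        # No loss is assumed in this sketch, so the lower bound moves up.
        w_min = cwnd
        trace.append(cwnd)
    return trace
```

For example, `bic_growth(80, 100, rtts=3)` returns `[80, 90.0, 95.0, 97.5]`: the window approaches the previous maximum quickly at first and then more and more cautiously.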

Cubic

CUBIC, also a packet loss-based congestion control algorithm, sets the congestion window size (cwnd) through a cubic function of the time elapsed since the last congestion event. The function has two shapes: a concave region below the previous maximum window and a convex region above it. While standard TCP grows the window with the slow start mechanism, CUBIC uses a Hybrid Slow Start mechanism [33], in which packet loss is identified based on ACKs and RTT. The main drawbacks of CUBIC are its time consumption and its higher bandwidth requirement.
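The cubic window function can be written out directly. This sketch uses the standard CUBIC form W(t) = C(t − K)³ + W_max with K = ∛(W_max(1 − β)/C), where t is the time in seconds since the last window reduction; the constants C = 0.4 and β = 0.7 follow the CUBIC RFCs and are assumptions here, since the text does not state the values used.

```python
def cubic_k(w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """Time (s) for the window to grow back to w_max after a reduction."""
    return (w_max * (1.0 - beta) / c) ** (1.0 / 3.0)


def cubic_window(t: float, w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """CUBIC congestion window (segments) t seconds after the last reduction."""
    k = cubic_k(w_max, c, beta)
    # Concave for t < k (approaching w_max), convex for t > k (probing beyond it).
    return c * (t - k) ** 3 + w_max
```

At `t = 0` the window equals β·W_max (70 segments for W_max = 100); it flattens out as it reaches W_max at `t = K` and then grows convexly, which corresponds to the two shapes described above.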

TCPWestwood (TCPW)

In this method, the sender analyzes the arrival rate of ACKs to estimate the RTT and the available bandwidth. A low RTT indicates low congestion, and vice versa. When packet loss occurs, TCP Westwood [25] computes a new BDP (Bandwidth Delay Product) from the estimated bandwidth and assigns it to the threshold instead of halving the threshold. Later, another scheme, Persistent Non-Congestion Detection, was introduced to determine congestion [34]. Westwood adjusts the congestion window to improve throughput. Instead of using a fixed window, we incorporate TcpHas to fit the congestion window.
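Westwood's key step, setting the threshold to the estimated BDP rather than halving it, can be sketched as below. This is a simplified illustration: an exponentially weighted moving average with weight `alpha` stands in for Westwood's original low-pass filter, and the class name, segment size, and initial window values are assumptions.

```python
class WestwoodSketch:
    """Simplified TCP Westwood sender state (window in segments, bandwidth in bytes/s)."""

    def __init__(self, seg_size_bytes: int = 1460, alpha: float = 0.9) -> None:
        self.seg_size = seg_size_bytes
        self.alpha = alpha            # EWMA weight; stands in for Westwood's filter
        self.bwe = 0.0                # bandwidth estimate, bytes per second
        self.rtt_min = float("inf")   # smallest RTT observed, seconds
        self.cwnd = 10.0              # congestion window, segments
        self.ssthresh = 64.0          # slow-start threshold, segments

    def on_ack(self, acked_bytes: int, interval_s: float, rtt_s: float) -> None:
        """Update the bandwidth estimate from the ACK arrival rate."""
        sample = acked_bytes / interval_s
        if self.bwe == 0.0:
            self.bwe = sample         # first sample initializes the estimate
        else:
            self.bwe = self.alpha * self.bwe + (1.0 - self.alpha) * sample
        self.rtt_min = min(self.rtt_min, rtt_s)

    def bdp_segments(self) -> float:
        """Estimated bandwidth-delay product, in segments."""
        return self.bwe * self.rtt_min / self.seg_size

    def on_three_dup_acks(self) -> None:
        # Threshold is set to the estimated BDP instead of cwnd / 2.
        self.ssthresh = max(2.0, self.bdp_segments())
        self.cwnd = min(self.cwnd, self.ssthresh)

    def on_timeout(self) -> None:
        self.ssthresh = max(2.0, self.bdp_segments())
        self.cwnd = 1.0
```

With an estimate of 1.46 MB/s and a minimum RTT of 50 ms, the BDP is 50 segments of 1460 bytes, so a window of 100 segments is reduced to 50 rather than being halved blindly. This matters on wireless links, where losses are not always caused by congestion.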

TcpHas

TcpHas is a recent TCP congestion control algorithm that estimates bandwidth and fits the congestion window. TcpHas estimates the optimal video quality level from the network bandwidth, the queueing delay, the history of quality levels, and the available bit rates. The sending rate is then set by a shaping function so that it matches the encoding bitrate of the estimated optimal quality level. Because this reduces the queueing delay and packet loss rate, it improves the QoS of live streaming [26, 35]. In our work, we aim to reduce the same parameters with the help of a queueing model. The survey above examines TCP congestion control variants for bandwidth estimation. The video packets are then processed in the video server according to the bandwidth using different queueing models, which we survey next.

2.2 Queueing model for video processing

Queueing theory provides mathematical models for measuring the queue length and the waiting time in a network [36, 37]. M/M/1, M/M/S, M/G/1, and M/G/N are some of the common queueing models. In the M/M/1 model, video requests arrive according to a Poisson process and service times are exponentially distributed. Its drawback is that a single server handles only one request at a time, so the waiting time increases. To solve this problem, the M/M/S model increases system performance by using multiple servers, and the M/M/S and M/G/N models are therefore used in multi-service cloud computing. These models minimize the processing delay and response time and reduce the average queue length compared with other models [38, 39]. The video packets in the queue are then ready to enter the transcoding server, so existing work on transcoding is reviewed next.

2.3 Transcoding

Nearly every commercial public cloud service, such as Spritdsp, Amazon Web Services (AWS), and Bitcodin, provides a transcoding service that helps users manage uploaded video files, so that a user can watch online video content at different resolutions on multiple devices [40].

The work in [41] jointly considers the transcoding and delivery of video in a Content Delivery Network (CDN) based on user priorities in different geographical areas and on video varieties. The works surveyed in [42, 43] do not address the capacity scaling problem [44]. In a micro data center, a heuristic dynamically schedules live transcoding jobs based on broadcast requests, allowing a small degradation in the output [45]. To overcome these problems, Lei Wei et al. introduced a Cloud-based Online Video Transcoding system (COVT) [46] that provides QoS-guaranteed video, using a profiling approach to obtain performance metrics for tasks running on different infrastructures. COVT designs its QoS metrics on a queueing model, and the experimental analysis shows that COVT dynamically resolves resource limitations and schedules tasks at runtime to guarantee QoS.

2.4 Motivation

In the literature, we find that TCP Tahoe detects packet loss only after a timeout occurs, whereas Reno does not detect packet loss within a single RTT. The remaining techniques, such as BIC, CUBIC, and TCP Westwood, commonly face fairness issues. TcpHas, a relatively new method, still needs some performance improvement in delivering live streaming services, especially when the network is stable. Our motivation is therefore to address the packet loss, delay, and network performance issues of existing techniques, which calls for a framework that handles bandwidth estimation, video processing, and transcoding, and streams good-quality video to mobile devices.

3 Delay-aware bandwidth estimation and intelligent video transcoder in mobile cloud

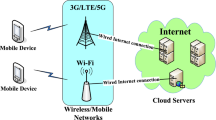

The proposed framework for delay-aware bandwidth estimation and intelligent video transcoder in the mobile cloud has two major components: (1) the mobile client and (2) the cloud server.

3.1 Mobile client

This component has two main elements, namely a video player and a decoder. Smart mobile users request different types of video over heterogeneous networks. For each video request, the decoder decodes the video content received from the cloud server with good quality, and the video is then streamed in the mobile's real-time player.

3.2 Cloud server

The cloud server manages three main components, namely the proposed MMBCE, QVPS, and IVTS. The mobile profile manager maintains the historical information of each mobile and helps estimate its bandwidth. MMBCE applies a Markov Decision Process (MDP) to obtain the available bandwidth, and BFRS in the server then estimates the bandwidth of the mobile in the cloud environment. To stream good-quality video, we define the action and reward function in each state to reach the goal state. CDCW then fits the congestion window dynamically, and QVPS processes the video according to the bandwidth. After video processing, IVTS transcodes the video content. Once transcoding completes, the cloud server streams the video to the mobile client. The three proposed components are discussed in the following subsections, starting with the working principle of MMBCE.

3.2.1 Bandwidth estimation process formulation

The cloud server estimates the bandwidth using information from the mobile profile manager. Based on the available bandwidth, a bandwidth estimation agent is assigned to each mobile. The agent works on the principle of reinforcement learning [47]. In the cloud environment (Fig. 1), the actual bandwidth of each mobile (state S) is passed to its agent, which then identifies the best bandwidth. For each bandwidth, the cloud environment in turn responds to the bandwidth estimation agent with a reward. The Markov Decision Process (MDP) is used to obtain better bandwidth estimates, together with the state transition behavior of the underlying Markov process [48]. The Markov chain used to derive the state transition model is defined as follows:

Markov Chain (Definition)

Let the state X denote the available bandwidth of all mobiles at different locations, with X ∈ d(a, b), where d(a, b) is the Euclidean distance for heterogeneous mobiles. The movement of each mobile is measured at discrete time intervals [49].

A Markov Decision Process (MDP) is defined as a four-tuple MDP = (X, A, TP, r), where X and A are the state and action spaces, TP(y | x, a) is the probability of a transition from state x to state y under action a, and r(x, a, y) is the reward of the transition (x, a, y). At each step, the estimated bandwidth is mapped to the actual bandwidth Bw.
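To make the four-tuple (X, A, TP, r) concrete, the sketch below encodes a toy MDP and computes the one-step expected reward R(x, a) = Σ_y TP(y | x, a) · r(x, a, y). Everything in it is hypothetical: two coarse bandwidth states, two actions, made-up transition probabilities, and a reward equal to the resulting bandwidth minus a small probing cost. The greedy (one-step) policy is for illustration only and is not the paper's BFRS procedure.

```python
import math
from typing import Dict, List, Tuple

STATES: List[str] = ["low", "high"]             # hypothetical bandwidth levels (X)
ACTIONS: List[str] = ["hold", "probe"]          # hypothetical actions (A)
BW_MBPS: Dict[str, float] = {"low": 2.0, "high": 8.0}
PROBE_COST = 0.5                                # hypothetical cost of probing

# TP[(x, a)][y] = probability of moving from state x to state y under action a.
TP: Dict[Tuple[str, str], Dict[str, float]] = {
    ("low", "hold"): {"low": 0.9, "high": 0.1},
    ("low", "probe"): {"low": 0.5, "high": 0.5},
    ("high", "hold"): {"low": 0.2, "high": 0.8},
    ("high", "probe"): {"low": 0.3, "high": 0.7},
}


def reward(x: str, a: str, y: str) -> float:
    """Hypothetical r(x, a, y): bandwidth reached, minus a cost for probing."""
    return BW_MBPS[y] - (PROBE_COST if a == "probe" else 0.0)


def check_tp() -> None:
    """Every row TP(. | x, a) must be a probability distribution."""
    for (x, a), row in TP.items():
        if not math.isclose(sum(row.values()), 1.0):
            raise ValueError(f"TP row for ({x}, {a}) does not sum to 1")


def expected_reward(x: str, a: str) -> float:
    """R(x, a) = sum over y of TP(y | x, a) * r(x, a, y)."""
    return sum(p * reward(x, a, y) for y, p in TP[(x, a)].items())


def greedy_policy() -> Dict[str, str]:
    """Pick, in each state, the action with the highest one-step expected reward."""
    return {x: max(ACTIONS, key=lambda a: expected_reward(x, a)) for x in STATES}
```

With these numbers, probing pays off in the low state (expected reward 4.5 versus 2.6), while holding is better in the high state (6.8 versus 5.7).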

The study of the MDP helps develop our bandwidth estimation algorithm, MMBCE, which is described as follows:

Algorithm 1 Description

In the cloud server, we propose MMBCE to measure the best available bandwidth for each video request of the mobile user. Three inputs are passed to MMBCE to evaluate the available bandwidth: the number of video requests, the segment size, and the RTT. The number of requests determines the number of states, and the available bandwidth of each state of the mobile is calculated from the segment size and the time interval. The states and their available bandwidths are then passed to the Best-First Search() procedure (Algorithm 2), whose output is assigned to EBw in Algorithm 1 (MMBCE). Next, the estimated bandwidth of each mobile is compared with the maximum bandwidth: if EBw is greater than the maximum, the maximum bandwidth is set to EBw and the action of that state is set to its available bandwidth. This comparison is repeated for all states of the mobile. Next, we determine the best first reward from the transition matrix and states. The BFRS function is as follows:
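The main loop of Algorithm 1 can be sketched as follows, assuming that the available bandwidth of each state is the segment size (in bits) divided by the measured interval (here, the RTT). The function names are hypothetical, and `estimate` is a placeholder for Algorithm 2: by default it returns the available bandwidth unchanged, so only the comparison-with-maximum logic is illustrated.

```python
from typing import Callable, Dict, List, Tuple


def available_bandwidth_bps(segment_size_bytes: int, interval_s: float) -> float:
    """Assumed per-state available bandwidth: bits delivered per second."""
    return segment_size_bytes * 8 / interval_s


def mmbce_sketch(
    segment_sizes: List[int],
    rtts: List[float],
    estimate: Callable[[int, float], float] = lambda state, bw: bw,  # stand-in for Algorithm 2
) -> Tuple[float, Dict[int, float]]:
    """Return the maximum estimated bandwidth and the actions recorded per state."""
    max_bw = 0.0
    actions: Dict[int, float] = {}
    # One state per video request, as in the description of Algorithm 1.
    for state, (seg, rtt) in enumerate(zip(segment_sizes, rtts)):
        avail = available_bandwidth_bps(seg, rtt)
        ebw = estimate(state, avail)  # EBw for this state
        if ebw > max_bw:
            # New maximum: record it and set this state's action to its bandwidth.
            max_bw = ebw
            actions[state] = avail
    return max_bw, actions
```

For three requests of 125,000, 250,000, and 187,500 bytes measured over one second each, the sketch returns a maximum of 2 Mbit/s and records actions only for the two states that raised the maximum.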

Description of Algorithm 2

The mobile network faces different challenges in traffic and time intervals. When different mobile users request videos from the cloud, estimating the bandwidth of every mobile becomes a complicated task. To overcome this issue, we use a Markov channel model in which the bandwidth is estimated as a time-varying random variable. The Markov model has two elements: (1) a transition matrix and (2) a state model. The transition probability matrix TPM (Refer 2) represents the total number of mobile transitions from each state i to state j. With n states, the TPM is an n × n matrix. The number of states and the available bandwidth received from Algorithm 1 are the inputs to Algorithm 2. Steps 6 to 10 make the corresponding entries in the transition matrix of available bandwidth, from the initial state to the total number of states. Steps 11 through 16 then count the number of requests for each state and fill the TPM. To assign the reward for each state, step 17 calls the reward function (Algorithm 3), and the reward is combined with the TPM. Finally, Algorithm 2 returns EBw as its output.
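A common way to fill a transition matrix from observations, consistent with the counting of transitions from state i to state j described above, is sketched below. The bandwidth thresholds used for discretization and the uniform fallback for states that were never left are illustrative assumptions, not details given in the text.

```python
from typing import List


def discretize(bw_mbps: float, edges: List[float]) -> int:
    """Map a bandwidth sample to a state index: the number of edges it reaches."""
    return sum(bw_mbps >= e for e in edges)


def estimate_tpm(states: List[int], n: int) -> List[List[float]]:
    """Count i -> j transitions in a state sequence and normalize each row."""
    counts = [[0] * n for _ in range(n)]
    for i, j in zip(states, states[1:]):
        counts[i][j] += 1
    tpm = []
    for row in counts:
        total = sum(row)
        # Assumption: a state never left gets a uniform row so the TPM stays stochastic.
        tpm.append([c / total for c in row] if total else [1.0 / n] * n)
    return tpm
```

For example, with hypothetical edges `[2.0, 5.0]` Mbit/s, samples of 1, 3, 4, 7, 3, and 1 Mbit/s map to states `[0, 1, 1, 2, 1, 0]`, and each row of the resulting 3 × 3 TPM sums to 1.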

Description of Algorithms 3 and 4

According to the MDP, a reward is added to the estimated bandwidth. Algorithm 3 selects the best reward using a heuristic (informed) search. It takes two inputs: the number of states and the TPM. A priority queue of vertices is initialized, and the maximum value is set to 999. The algorithm uses two states, namely the computing state and the goal state. Steps 8 through 11 compute the heuristic function for all vertices until the goal state is reached. Steps 13 through 14 insert each neighboring vertex v into the priority queue as a new computing node and mark it as unvisited.

The initial state is added to the priority queue as the first computing state, labeled as a visited node, and marked as 1. Steps 16 through 26 calculate the minimum heuristic value for every computing state and mark the goal state as visited. Step 16 checks whether the priority queue is empty. If it is not, the computing state is determined using the getStateWithMin_Heuristic() procedure, and the goal state is set to 1. Step 19 compares the goal state with the number of states; if it is less than or equal to the number of states, the condition in step 21 is checked. If this condition is satisfied, the vertex is added to the priority queue and marked as visited, and the goal state is set to 1. The goal state is then incremented, and the process repeats until the priority queue is empty. Finally, the minimum value is removed from the priority queue, which yields the final goal state.
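The priority-queue mechanics described for Algorithm 3 follow the pattern of greedy best-first search: repeatedly expand the state with the minimum heuristic value and push its unvisited neighbors. The sketch below shows that generic pattern with Python's `heapq`; the graph and heuristic values are hypothetical inputs (the paper derives them from the TPM), and the default heuristic of 999 mirrors the maximum value mentioned above.

```python
import heapq
import itertools
from typing import Dict, List, Optional

MAX_H = 999  # default heuristic for unknown states, mirroring the text's maximum value


def best_first_search(graph: Dict[int, List[int]], heuristic: Dict[int, float],
                      start: int, goal: int) -> Optional[List[int]]:
    """Greedy best-first search: always expand the state with minimum heuristic."""
    tie = itertools.count()  # tie-breaker so the heap never compares states directly
    frontier = [(heuristic.get(start, MAX_H), next(tie), start)]
    visited = {start}
    parent: Dict[int, Optional[int]] = {start: None}
    while frontier:  # repeat until the priority queue is empty
        _, _, state = heapq.heappop(frontier)  # state with the minimum heuristic
        if state == goal:
            path = []
            node: Optional[int] = state
            while node is not None:  # walk parent links back to the start
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nb in graph.get(state, []):
            if nb not in visited:
                visited.add(nb)
                parent[nb] = state
                heapq.heappush(frontier, (heuristic.get(nb, MAX_H), next(tie), nb))
    return None  # goal not reachable
```

On the graph `{0: [1, 2], 1: [3], 2: [3], 3: []}` with heuristics `{0: 3, 1: 1, 2: 2, 3: 0}`, the search expands state 1 before state 2 and returns the path `[0, 1, 3]`. Greedy best-first search is fast but does not guarantee an optimal path; A* would add the path cost to the priority.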

All four algorithms above are devised to calculate the best bandwidth estimate at different states in the heterogeneous network. Next, to minimize packet loss, the cloud dynamically adjusts the congestion window according to the estimated bandwidth. The steps are given in the CDCW algorithm as follows:

Description of Algorithm 5

The CDCW algorithm takes the estimated bandwidth from Algorithm 1 as input. The maximum mobile window size (MaxMW) is initialized to 64 kbps. When the cloud server receives three duplicate acknowledgments, it calculates MaxSZ. The congestion window is then fitted dynamically by comparing MaxMW with MaxSZ to avoid congestion. Through this algorithm, the cloud server minimizes packet loss according to the estimated bandwidth and congestion window. The video server in the cloud then receives the packets in its queue. The next section explains the operation of the video processing server in detail.
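The CDCW step can be sketched as below under two loudly stated assumptions, since the text does not define them: MaxSZ is taken to be the bandwidth-delay product of the estimated bandwidth EBw and the RTT, and the "64 k" MaxMW cap is treated as 64,000 bytes. The function name `cdcw_window` is hypothetical.

```python
def cdcw_window(ebw_bps: float, rtt_s: float, dup_acks: int,
                cwnd_bytes: float, max_mw_bytes: float = 64_000.0) -> float:
    """Return the next congestion window (bytes) for one CDCW-style update.

    Assumptions (not from the paper): MaxSZ = EBw * RTT (bandwidth-delay
    product, converted to bytes); MaxMW is a byte cap of 64,000.
    """
    if dup_acks < 3:
        # No loss signal: keep the current window, never above the mobile's cap.
        return min(cwnd_bytes, max_mw_bytes)
    max_sz = ebw_bps * rtt_s / 8.0  # hypothetical MaxSZ in bytes
    # Fit the window to whichever is smaller: the mobile cap or the estimated BDP.
    return min(max_mw_bytes, max_sz)
```

With EBw = 4 Mbit/s and an RTT of 100 ms, three duplicate ACKs fit the window to 50,000 bytes; at 8 Mbit/s the BDP (100,000 bytes) exceeds the cap, so the window is limited to 64,000 bytes.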

3.2.2 Video processing server

Assume that mobile users are in a heterogeneous network and send video requests to the cloud server. Because multiple mobile clients send requests, the request buffer is modeled as an M/M/S queue, and video processing takes place in the server. In this work, we apply a multi-server queueing system to accept requests from mobiles and provide the appropriate services in the cloud. This architecture is based on an open Jackson network [38, 50]. Table 1 lists the symbols and their descriptions.

Video Processing Server using M/M/S Queueing model formulation

Model: (M/M/S) : (\(\infty\)/FIFO) [51]. This model is based on the following assumptions:

- The number of video request arrivals follows a Poisson distribution with mean arrival rate Vλ.
- The service time is exponentially distributed with average service (response) rate AVμ.
- Arrivals come from an infinite population α.
- Video requests are served on a First-In, First-Out (FIFO) basis.

This model provides a solution that satisfies the demand of mobile users. It depends on the values of Vλ and AVμ: if Vλ ≥ S · AVμ, the waiting line of the Video Processing Server (VPS) grows without limit. The system must therefore satisfy the stability condition Vλ < S · AVμ, i.e., the traffic intensity ρ defined below must be less than 1.

The traffic intensity of the VPS is ρ = Vλ / (S · AVμ), which is the fraction of time the servers are busy; high traffic intensity keeps the server busy. The probability that the VPS is idle, i.e., that there are no video processes in the system, is VP0 = 1 − ρ. The probability of a single process in the server is VP1 = ρ VP0. Similarly, the probability of two processes in the server is VP2 = ρ VP1 = ρ² VP0, and in general the probability of n processes in the server is VPn = ρⁿ VP0.

The expected number of processes in the video server is given by,

The expected number of processes in the server queue is given by,

With an average video arrival rate Vλ, the average time between arrivals is \(\frac {1}{V_{\lambda }}\). Therefore, by Little's law, the mean waiting time in the queue, Wq, is the product of the average time between arrivals of video packets and the average queue length.

Similarly, the average waiting time in the server, Ws,

Expected length of non-empty queue,

Probability that there are n video segments in the server,

Probability that there is no video process in the cloud server,

Each Video Server (VS) queue provides a Video Processing Service Time (VPST), denoted VPST1, ..., VPSTn. This is formulated in the following equation:

where n is the number of VSs, and μVSR and γVAR are the parameters of the M/M/S model, representing the video service rate and the video arrival rate, respectively, with μVSR = μi for i = 1, 2, ..., S. When a new video request is forwarded to the video server, the probability that it must wait in the queue is given by Erlang's C formula [52]:

The M/M/S model equations above are used to calculate the total video processing service time in the QVPS algorithm, whose steps are explained as follows:

According to the bandwidth prediction of the cloud server, video packets arrive at the VPS. Table 2 lists the arrival rate, the queue length of the server, and the probability of an idle server, with the arrival rates of video packets examined for 2, 4, and 6 servers. In general, as the arrival rate of video packets increases, the probability of an idle server decreases. Table 2 shows the case of 2 servers. For the same arrival rate, increasing the number of VPSs increases the probability of an idle server by approximately 2% on average, and processing the same arrival rate with more servers automatically decreases the queue length. Hence, we chose 6 servers. This reduction results from applying the M/M/S queueing model in the VPS, and its overall advantage is reduced computational overhead and energy consumption. Thus, we significantly minimize the video processing delay. After the video packets are processed, the cloud server passes them to the transcoding server as follows.
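The trend reported for Table 2 (a higher idle probability and a shorter queue as servers are added at the same arrival rate) can be checked with the standard M/M/S formulas. The sketch below uses the textbook expressions for the idle probability P0, Erlang's C formula, the mean queue length Lq, and Little's law; the arrival and service rates in the usage example are hypothetical and do not reproduce the values in Table 2.

```python
import math
from typing import Dict


def mms_metrics(lam: float, mu: float, s: int) -> Dict[str, float]:
    """Standard M/M/S steady-state metrics.

    lam: mean arrival rate; mu: service rate per server; s: number of servers.
    """
    rho = lam / (s * mu)            # traffic intensity per server
    if rho >= 1.0:
        raise ValueError("unstable queue: need lam < s * mu")
    a = lam / mu                    # offered load in Erlangs
    tail = a ** s / (math.factorial(s) * (1.0 - rho))
    p0 = 1.0 / (sum(a ** n / math.factorial(n) for n in range(s)) + tail)
    erlang_c = tail * p0            # probability that an arriving request must wait
    lq = erlang_c * rho / (1.0 - rho)  # mean number waiting in the queue
    wq = lq / lam                   # Little's law: mean waiting time in the queue
    ws = wq + 1.0 / mu              # mean time in the system (wait + service)
    return {"rho": rho, "p0": p0, "erlang_c": erlang_c,
            "lq": lq, "wq": wq, "ws": ws, "ls": lam * ws}


if __name__ == "__main__":
    # Hypothetical load: 1.5 requests/s, each server completes 1 request/s.
    for s in (2, 4, 6):
        m = mms_metrics(1.5, 1.0, s)
        print(f"S={s}: P0={m['p0']:.4f}  Lq={m['lq']:.4f}  Wq={m['wq']:.4f}s")
```

For S = 1 these expressions reduce to the M/M/1 results P0 = 1 − ρ and Lq = ρ²/(1 − ρ). For the hypothetical load above, P0 rises and Lq falls as S grows from 2 to 6, which is the qualitative behavior described for Table 2.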

3.3 Transcoding server (TS)

The TS mainly focuses on two parameters, namely the Transcoding Mode (TM) and the video type (Vd). Mobile users frequently request different types of video, such as popular videos, news, and live sports. For example, Fédération Internationale de Football Association (FIFA) matches are streamed live, and the same request is made by mobile clients on various networks across regions of the world (from higher to lower networks). Here, the network determines the mode of operation: slow mode corresponds to a low spectrum, which results in delay, so fast mode is faster than slow mode. Having defined these two parameters, the received video is fragmented into chunks. The TS must process the Average Output of Video chunks (AOVc), and the following formulas [36, 46] calculate AOVc and the Average Video Transcoding Time (AVTT).

In Eqs. (19) and (20) above, the transcoding mode q ranges from 1 to TM and the video type ranges from 1 to Vd. The obtained values give the total time spent transcoding the video packets based on historical data. In the following section, AVTT and AOVc are mapped to each other; the two are directly proportional. As AVTT and AOVc grow, the transcoder works in the faster mode, which avoids additional delay. The overall transcoding mode distribution is evaluated against the maximum delay MaxD and the maximum transcoding video size MaxVsz, as given by the following conditions:

where Td is the transcoding delay of an individual TS, and TVSZ is the transcoded video size of the TS.

By applying the above equations, we propose the IVTS algorithm to choose the optimal transcoding server in different modes and then determine the transcoding delay and video segment size of each transcoder.

Description of IVTS

The intelligent video transcoder server proposed in this framework helps choose a transcoder. The algorithm's inputs are the transcoding mode, the history of transcoding times in each mode, and the historical average segment size, together with the maximum delay and the maximum transcoding video segment size. IVTS consists of two main processes. The first process continuously receives video requests 1 to n and checks the video segment size of each transcoder against the maximum video segment size; based on the condition in step 7, the transcoding server index is incremented. Steps 9 through 12 compare the transcoding delay with 0; if it is greater than zero, the transcoding delay (Td) and the video segment size of the individual server are calculated. The second process selects the transcoding mode, either fast mode or slow mode. Step 17 compares the maximum delay and VSZ with Td and TS, and based on this criterion, fast or slow mode is selected. In fast mode, the current queue length of each transcoder is computed in step 23, and the video segment is assigned to the transcoder with the minimum current queue. In slow mode, the incoming video segment waits in the queue: as per the condition in step 27, the video segment resides in the waiting queue and is assigned to VSZ. By intelligently distributing the workload across individual transcoders, IVTS reduces the burden on any single server and thus minimizes the transcoding delay.
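The two IVTS processes, mode selection and assignment to the least-loaded transcoder, can be sketched as follows. The mode rule used here (fast mode when the candidate transcoder's delay Td is within MaxD and the segment size is within MaxVsz, otherwise the segment joins a waiting queue) is a hypothetical reading of step 17, and the class and function names are illustrative. Workload is taken as the total bytes queued at each transcoder.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Transcoder:
    name: str
    delay_ms: float                                 # observed transcoding delay Td
    queue: List[int] = field(default_factory=list)  # pending segment sizes, bytes

    def workload(self) -> int:
        return sum(self.queue)


def select_mode(td_ms: float, vsz: int, max_d_ms: float, max_vsz: int) -> str:
    """Hypothetical rule: fast mode only if both delay and size are within limits."""
    return "fast" if td_ms <= max_d_ms and vsz <= max_vsz else "slow"


def ivts_assign(transcoders: List[Transcoder], vsz: int, max_d_ms: float,
                max_vsz: int, waiting: List[int]) -> Tuple[str, Optional[str]]:
    """Assign one video segment; return (mode, chosen transcoder or None)."""
    # Candidate is the transcoder with the minimum current workload.
    target = min(transcoders, key=lambda t: t.workload())
    mode = select_mode(target.delay_ms, vsz, max_d_ms, max_vsz)
    if mode == "fast":
        target.queue.append(vsz)
        return mode, target.name
    waiting.append(vsz)  # slow mode: the segment waits in the queue
    return mode, None
```

With three transcoders holding 300, 50, and 300 bytes of queued work, an 80-byte segment goes to the second one in fast mode, while a 500-byte segment exceeding a 100-byte MaxVsz is sent to the waiting queue. Balancing by total queued bytes rather than queue count is one design choice; counting segments would also fit the description.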

3.4 Delay

The framework targets delay, a key QoS parameter, in all three components. For each video request, MMBCE estimates the bandwidth, and the overall delay is then calculated by the equation below. The resulting VPS, VTS, and output delays are tabulated as follows:

In Table 3, with the number of servers fixed at 2, the response delay is 0.05 ms for a transcoding queue capacity of 25. Tables 3, 4, and 5 present the delay parameters for the video processor, the transcoding server, and the time taken to deliver the video packets to the mobile client. Delay degrades the delivered quality and causes buffering on the mobile. To overcome this issue, we propose an intelligent transcoder using the M/G/N queueing model. The M/M/S video processing server reduces the delay to 0.82 ms, which improves the QoS of the video.

4 Experimental setup and performance metrics

This section describes the experimental setup, the implementation of the mobile client, and the public cloud configuration (Table 6).

To evaluate the proposed video streaming algorithms, we built a realistic testbed that measures performance with a real video stream and an Android mobile phone. We implemented a video streaming application that requests video content from the web server over HTTP/1.1. The proposed framework was implemented using Samsung Galaxy mobile phones [53] and an AWS cloud server [54]. In Configuration 1, the client is a Samsung Galaxy S7 edge smartphone running Android 6.0 (Marshmallow), equipped with a dual-core 1.2 GHz Cortex-A9 CPU and 14GB RAM. In Configuration 2, the client is a Samsung Galaxy Note20 Ultra 5G running Android 10, with an octa-core CPU (2×2.73 GHz Mongoose M5, 2×2.50 GHz Cortex-A76, and 4×2.0 GHz Cortex-A55) and 12GB RAM. The minimum RTT is about 25.83 ms between the smartphone and the proxy and about 1.79 ms between the proxy and the server.

The experiments were run on a machine in our research lab with the system configuration listed in Table 6. Twitch TV and YouTube Live, two major real-time live streaming services, provided the video datasets used in this work. The goal is to synchronize smartphones with the cloud environment by using the estimated mobile bandwidth, efficient queue-based video processing, and intelligent transcoding, so that the video downloads smoothly without packet loss.

In the cloud server, the mobile profile manager maintains the historical information of each mobile, which helps MMBCE estimate the best bandwidth. The proposed queueing model QVPS was implemented on AWS. AWS goes beyond a classic hypervisor (e.g., VirtualBox, Xen, and VMware) by dynamically creating and configuring the virtual machines that supply computational resources, which makes it possible to meet QoS constraints during traffic peaks. As traffic grows, the arrival rate of requests to be served increases and AWS automatically creates new instances; when traffic decreases, the surplus servers are shut down and destroyed. The structure used for this experiment consists of servers created as virtual machines (VMs): the Entering Server (ES); the servicing nodes VPS1, ..., VPSn; a Database Server (DS) running MySQL; the Output Server (OS); and the client servers. The Twitch API provides parallel online video channels in Twitch, which supply online video services to the transcoding servers. We use the arrival rates observed on different days as the arrival rates for the different types of videos. After video processing, the IVTS algorithm minimizes the transcoding delay by intelligently distributing the workload across individual transcoders using the M/G/N queueing model.

The cloud server runs on the public cloud Amazon Web Services (AWS). The experiments were carried out on Amazon Elastic Compute Cloud (EC2) [55] to demonstrate the working principle in a public cloud. In AWS, each region (R) is divided into isolated availability zones (AZs). There are 8 regions globally. For our experimental setup, two regions, Asia Pacific and US West (Northern California), were used to demonstrate live video streaming. These two regions were chosen to examine network traffic over short and long geographic distances.

In the demo shown in Appendix 1, the user first chooses an Amazon Machine Image (AMI), then creates and launches an instance, and finally executes the existing algorithms and our proposed algorithms in this framework. To test the application, we used the AWS Device Farm testing service, with which the application was tested successfully on the Samsung Galaxy S7 edge. The video output played smoothly and with good quality on the mobile screen via the AWS platform, as shown in the screenshots in Appendix 1.

4.1 Analysis of industrial applications for video streaming

Cloud-based mobile live streaming is used in many applications, such as enterprise and corporate streaming, health care, social media live streaming, telecommunication, public safety, law enforcement, and government. Among these, we focus on health care, social media, and telecommunication. For example, during the coronavirus pandemic, people have relied heavily on telehealth services. Doctors in many healthcare organizations now use live video streaming for medical purposes such as surgical and diagnostic processes. Doctors also use live streaming to guide and educate new doctors, broadcast surgical procedures, and respond to patients via live chat. Similarly, the U.S. Department of Health and Human Services (HHS) uses live video to broadcast workshops, advisory council meetings, and other events to raise awareness of COVID-19 and promote public health.

For these industrial applications, resolution, frame rate, and video bit-rate settings are the basic requirements for streaming video on platforms such as YouTube Live, Twitch.tv, Netflix, Beam, and Hitbox, and the stream settings differ across platforms. We aim to deliver good-quality video on different streaming platforms using the estimated bandwidth. To this end, we analyzed two mobile configurations with different resolutions and frame rates, as shown in Table 7, and compared the performance of our proposed algorithms with existing TCP variants.

To test the usefulness and robustness of our methods, the two mobile configurations described above (the Samsung Galaxy S7 edge in Configuration 1 and the Samsung Galaxy Note20 Ultra 5G in Configuration 2) are used with the encoded videos listed in Table 7. The frame rates of Configurations 1 and 2 are set to 24 and 30 frames per second, respectively, and the duration of an individual segment is about 1000 ms and 3000 ms, respectively. In our scenario, 200 segments are selected for Configuration 1 and 100 segments for Configuration 2. Table 7 lists the bandwidth required for the two mobile configurations at the various resolutions and frame rates. The key idea of our approach is to estimate the bandwidth accurately to minimize packet loss and delay.

4.1.1 Case 1

Table 7 gives the exact bandwidth needed to stream good-quality video in Configuration 1 at 24 fps and 640×360 resolution. If this bandwidth is not available, packet loss and delay occur and the video quality drops. To avoid this, the cloud server plays a vital role in estimating the bandwidth of the mobile. We implemented existing TCP variants, namely Cubic, TCP Westwood (TCPW), and TCPHas, in the cloud server with the given configuration and compared their estimated bandwidth with that of our approach. At the low resolution, TCP Cubic, TCP Westwood, and TCPHas estimate 0.4, 0.52, and 0.78 Mbps, respectively, whereas the required bandwidth is 0.8112 Mbps, so none of the existing TCP variants estimates it accurately. In our work, we therefore use a Markov decision process (MDP) to estimate the bandwidth. The bandwidth of each link is divided into several regions. In the cloud environment, the actual bandwidth from Table 7 is passed to the agent of each mobile as its state S; the agent then identifies the best bandwidth, and for each bandwidth the cloud environment responds to the bandwidth estimation agent with a reward. The MDP thus provides better bandwidth estimation and captures the state-transition behavior of the underlying Markov process. MMBCE achieves 0.8 Mbps. The same analysis was made at the high resolution 1440×2560 with a frame rate of 24 in Configuration 1, where the bandwidth required to stream good-quality video is 11.179 Mbps. In the comparative study, Cubic, TCP Westwood, and TCPHas obtain 10.452, 9.28, and 10.56 Mbps, respectively, and do not reach the actual bandwidth. MMBCE, proposed in this work to solve this issue, achieves an estimated bandwidth of 11.002 Mbps.
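
The MDP-based estimation described above can be illustrated with a small value-iteration sketch. Everything concrete in it is an assumption for illustration: the four bandwidth regions in `levels`, the transition matrix `P`, and the reward (a penalty equal to the unused capacity when underestimating, doubled when overestimating, since overestimation causes packet loss). It is not MMBCE's actual reward function. Because the reported estimate does not change how the channel evolves in this toy model, the optimal action reduces to the best expected one-step reward, but value iteration still computes it correctly.

```python
from typing import List, Sequence, Tuple


def estimation_loss(actual: float, estimate: float, overshoot_penalty: float) -> float:
    """Assumed cost: unused capacity if we underestimate, a heavier
    penalty (standing in for packet loss) if we overestimate."""
    if estimate <= actual:
        return actual - estimate
    return overshoot_penalty * (estimate - actual)


def value_iteration(
    levels: Sequence[float],
    P: Sequence[Sequence[float]],
    overshoot_penalty: float = 2.0,
    gamma: float = 0.9,
    tol: float = 1e-9,
) -> Tuple[List[float], List[int]]:
    """States = bandwidth regions, actions = which region to report as the estimate.
    The reward depends on the next region t, drawn with probability P[s][t]."""
    n = len(levels)
    # Expected one-step reward of reporting levels[a] while in region s.
    r = [[-sum(P[s][t] * estimation_loss(levels[t], levels[a], overshoot_penalty)
               for t in range(n))
          for a in range(n)]
         for s in range(n)]
    V = [0.0] * n
    while True:
        # Bellman update: Q(s, a) = r(s, a) + gamma * sum_t P[s][t] * V[t].
        new_V = [max(r[s][a] + gamma * sum(P[s][t] * V[t] for t in range(n))
                     for a in range(n))
                 for s in range(n)]
        converged = max(abs(x - y) for x, y in zip(new_V, V)) < tol
        V = new_V
        if converged:
            break
    # Greedy policy with respect to the converged values.
    policy = [max(range(n), key=lambda a: r[s][a] + gamma * sum(P[s][t] * V[t] for t in range(n)))
              for s in range(n)]
    return V, policy


levels = [0.4, 0.6, 0.8, 1.0]   # hypothetical bandwidth regions (Mbps)
P = [[0.7, 0.3, 0.0, 0.0],      # hypothetical channel transition matrix
     [0.2, 0.6, 0.2, 0.0],
     [0.0, 0.2, 0.6, 0.2],
     [0.0, 0.0, 0.5, 0.5]]
V, policy = value_iteration(levels, P)
print([levels[a] for a in policy])  # [0.4, 0.6, 0.8, 0.8]
```

With these assumed numbers, the policy reports 0.8 Mbps rather than 1.0 Mbps in the top region, because the channel is likely to drop and overestimation is penalized more heavily than underestimation.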

4.1.2 Case 2

Configuration 2 adds one more high resolution, and the frame rate is fixed at 30. At the low resolution of 640×360 and 30 fps, the bandwidth required to stream good-quality video is 0.984 Mbps, whereas the existing algorithms Cubic, TCPW, and TCPHas estimate 0.60, 0.75, and 0.84 Mbps, respectively; MMBCE improves the estimate to 0.9 Mbps. Next, the comparison is made at the high resolution of 1440×3088 with 30 fps, for which Table 7 gives a required bandwidth of 16.7 Mbps. Cubic, TCPW, and TCPHas achieve 13.125, 13.810, and 14.065 Mbps, while MMBCE attains an estimated bandwidth of 15.992 Mbps, the closest to the actual bandwidth needed to deliver good-quality video.
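
The Case 1 and Case 2 figures can be compared directly by expressing each estimate as a percentage of the required bandwidth. The script below uses only the values reported above, in Mbps; the scenario labels are ours.

```python
# Required bandwidth and estimated bandwidth (Mbps) as reported in Cases 1 and 2.
RESULTS = {
    "Config 1, 640x360 @ 24 fps":   (0.8112, {"Cubic": 0.4,    "TCPW": 0.52,   "TCPHas": 0.78,   "MMBCE": 0.8}),
    "Config 1, 1440x2560 @ 24 fps": (11.179, {"Cubic": 10.452, "TCPW": 9.28,   "TCPHas": 10.56,  "MMBCE": 11.002}),
    "Config 2, 640x360 @ 30 fps":   (0.984,  {"Cubic": 0.60,   "TCPW": 0.75,   "TCPHas": 0.84,   "MMBCE": 0.9}),
    "Config 2, 1440x3088 @ 30 fps": (16.7,   {"Cubic": 13.125, "TCPW": 13.810, "TCPHas": 14.065, "MMBCE": 15.992}),
}


def coverage(required: float, estimates: dict) -> dict:
    """Each estimate as a percentage of the required bandwidth."""
    return {alg: 100.0 * est / required for alg, est in estimates.items()}


for scenario, (required, estimates) in RESULTS.items():
    pct = coverage(required, estimates)
    best = max(pct, key=pct.get)  # all estimates are below the requirement
    row = ", ".join(f"{alg} {p:.1f}%" for alg, p in pct.items())
    print(f"{scenario}: {row} -> closest: {best}")
```

Every reported estimate stays below the requirement, so the closest method is simply the one with the largest percentage; MMBCE covers about 91.5% to 98.6% of the required bandwidth across the four scenarios.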

For both mobile configurations, the CDCW algorithm in the cloud dynamically adjusts the congestion window according to the estimated bandwidth, which minimizes packet loss and improves goodput with minimal PLR. Following the bandwidth prediction of the cloud server, the video packets arrive at the VPS. We examined the arrival rates of video packets with 2, 4, and 6 servers. In general, as the packet arrival rate increases, the probability of an idle server decreases; conversely, for the same arrival rate, increasing the number of VPS instances raises the probability of idle servers, by approximately 2% on average. Overall, this reduces computational overhead and energy consumption and significantly lowers the video processing delay. After the video packets are processed, the cloud server hands them to the transcoding servers. The proposed IVTS algorithm chooses the optimal transcoding server using a different queueing model (M/G/N) and then determines the transcoding delay and video segment size of each transcoder. It continuously monitors and schedules the transcoders to reduce the workload and delay of each individual transcoder.

4.2 Performance evaluation metrics

Bandwidth estimation, packet delivery ratio, packet loss rate, congestion window, and throughput are the network QoS parameters used to evaluate the proposed algorithms. Tables 8, 9 and 12 compare the performance of MMBCE with other TCP algorithms, and Tables 10 and 11 show how CDCW reduces the PLR and improves the congestion window size, respectively.

Packet Delivery Ratio (PDR)

The Packet Delivery Ratio (PDR) is the ratio of the number of video packets received by the mobile to the total number of video packets sent from the cloud server, measured at different RTTs.

Goodput

Goodput is defined as the ratio of the number of video packets received by the mobile in sequence order to the total number of video packets sent by the cloud; when expressed as a rate, it is measured in bytes per second. When packet loss occurs, the lost packets are retransmitted, and these retransmissions consume bandwidth without increasing goodput.

Packet Loss Rate (PLR)

PLR is the number of video packets lost per unit of time. In some cases, the sender retransmits video packets because they were damaged or dropped.

Throughput

The throughput is defined as the transmission rate of video packets from the cloud server to the mobile client at the given time.
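
As a minimal sketch, the four metrics above can be computed from a packet trace. The `Packet` record, the toy trace, and the choice to count each sequence number once for goodput (so duplicate deliveries add to throughput but not to goodput) are illustrative assumptions; PLR follows the per-unit-time definition given above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Packet:
    seq: int                     # video packet sequence number
    size_bytes: int
    delivered: bool              # True if the packet reached the mobile client
    retransmission: bool = False # informational label for the trace


def qos_metrics(trace: List[Packet], duration_s: float) -> Dict[str, float]:
    """PDR (%), PLR (lost packets per second), throughput and goodput (bytes/s)."""
    sent = len(trace)
    delivered = [p for p in trace if p.delivered]
    lost = sent - len(delivered)
    first_copy: Dict[int, int] = {}
    for p in delivered:
        first_copy.setdefault(p.seq, p.size_bytes)  # duplicates do not add goodput
    return {
        "pdr_percent": 100.0 * len(delivered) / sent,
        "plr_per_s": lost / duration_s,
        "throughput_Bps": sum(p.size_bytes for p in delivered) / duration_s,
        "goodput_Bps": sum(first_copy.values()) / duration_s,
    }


# Toy 1-second trace: packet 2 is lost and resent; packet 3 is resent needlessly.
trace = [Packet(0, 1000, True), Packet(1, 1000, True), Packet(2, 1000, False),
         Packet(3, 1000, True), Packet(4, 1000, True),
         Packet(2, 1000, True, retransmission=True),
         Packet(3, 1000, True, retransmission=True)]
print(qos_metrics(trace, duration_s=1.0))
```

In this trace, six of seven transmissions arrive (PDR about 85.7%), one packet is lost per second, throughput counts all 6000 delivered bytes, and goodput counts only the 5000 bytes of distinct video data.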

5 Results and discussion

This section evaluates the estimated bandwidth, Packet Delivery Ratio (PDR), Packet Loss Rate (PLR), goodput, dynamic congestion window, and throughput for MMBCE and CDCW. It then analyzes the video processing server utilization and delay in QVPS, and finally the transcoding parameters: workload, mode, average output size, and delay.

5.1 Mobile bandwidth estimation

Figure 2 depicts the bandwidth estimated by different TCP variants. In the multi-flow distributed cloud environment, the estimated bandwidth varies as the file size and RTT vary. MMBCE applies a Markovian mathematical model to achieve higher bandwidth estimates than the other TCP algorithms. For example, for a 30 MB file with a 50 ms RTT, MMBCE reaches 0.5 Mbps and TCPHas 0.4 Mbps, a difference of 0.1 Mbps. Our algorithm provides the best bandwidth estimation by using a reward function, and the same holds for larger file sizes.

5.2 Packet delivery ratio

PDR is an important indicator of network performance: a higher PDR indicates better use of the available network capacity. PDR is measured as a percentage. Figure 3 plots PDR against RTT. For example, at the lowest RTT of 100 ms, the PDR of MMBCE and TCPHas is 50% and 48%, respectively, while the remaining congestion control schemes, TCPW, Reno, and Cubic, provide between 38% and 40%. MMBCE is therefore the most efficient and achieves the highest PDR. The same holds at the highest RTT of 600 ms, where MMBCE again provides a high PDR because the congestion window is dynamically fitted in the cloud to the bandwidth of the mobile. Hence, MMBCE improves PDR compared with the other algorithms.

5.3 Packet loss rate

Figure 4 compares the goodput of four congestion control algorithms, Cubic, TCPW, TCPHas, and CDCW, under different Packet Loss Rates (PLR). For example, at the lowest PLR of 0.00001, the goodput of TCPHas, TCPW, and Cubic is 25%, 23%, and 12%, respectively, whereas CDCW achieves 28%. TCPHas, TCPW, and CDCW differ only slightly in goodput because all three are designed for paths with a high Bandwidth Delay Product (BDP) and handle packet loss. A similar analysis at the highest PLR of 0.7% shows that CDCW produces 40% more goodput than the other algorithms. In general, goodput decreases gradually as PLR increases, since the algorithms respond to congestion loss by adjusting the congestion window; setting the window appropriately reduces packet loss. In TCP Cubic, a cubic function sets the CWND, which keeps it comparatively low. In CDCW, the congestion window is adjusted dynamically at the sender according to the estimated bandwidth. The main advantage of CDCW is that this dynamic adjustment improves goodput while keeping PLR minimal.
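
The general trend of goodput falling as PLR rises is also predicted by the well-known steady-state TCP model of Mathis et al., throughput ≈ (MSS/RTT)·(C/√p) with C = √(3/2). The sketch below evaluates that textbook bound, not CDCW, for an assumed MSS of 1460 bytes and an assumed RTT of 100 ms, at PLR values spanning the range in Fig. 4.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Steady-state TCP throughput bound (bits/s) from the Mathis et al. model.
    Only meaningful for small loss rates; ignores timeouts and window limits."""
    if not 0.0 < loss_rate < 1.0:
        raise ValueError("loss_rate must be in (0, 1)")
    c = math.sqrt(3.0 / 2.0)
    return (8 * mss_bytes / rtt_s) * c / math.sqrt(loss_rate)


for plr in (0.00001, 0.0001, 0.001, 0.007):  # 0.007 = the 0.7% upper end
    mbps = mathis_throughput_bps(mss_bytes=1460, rtt_s=0.100, loss_rate=plr) / 1e6
    print(f"PLR {plr:g}: about {mbps:.2f} Mbit/s")
```

Because the bound scales with 1/√p, raising PLR from 0.00001 to 0.007 (a 700-fold increase) lowers it by a factor of about √700 ≈ 26.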

5.4 Dynamic congestion window

The congestion window is a sending limit enforced by the sender, whereas the advertised window is the flow-control limit imposed by the receiver. Figure 5 shows how the window size of the various algorithms changes with RTT. In Fig. 5, the CDCW window grows steeply because it is adjusted dynamically based on segment size and estimated bandwidth. The windows of the other TCP variants, TCPW, Cubic, and Reno, increase slowly, while TCPHas differs only slightly from CDCW.
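
One plausible way to size a window from segment size and estimated bandwidth is the TCP Westwood rule, cwnd = BWE × RTTmin / MSS. The sketch below uses that rule as a stand-in; it is an assumption, not the published CDCW update. The example plugs in the 15.992 Mbps MMBCE estimate from Case 2 and assumes a 1460-byte MSS and an end-to-end minimum RTT of 27.62 ms (the 25.83 ms and 1.79 ms hops reported in Section 4, added together).

```python
import math


def bdp_window(bwe_bps: float, rtt_min_s: float, mss_bytes: int = 1460, floor: int = 2) -> int:
    """Window (in segments) that just fills the estimated bandwidth-delay product."""
    return max(floor, math.floor(bwe_bps * rtt_min_s / (8 * mss_bytes)))


def next_cwnd(cwnd: int, bwe_bps: float, rtt_min_s: float, loss: bool, mss_bytes: int = 1460) -> int:
    """One RTT of a hypothetical bandwidth-driven window update."""
    target = bdp_window(bwe_bps, rtt_min_s, mss_bytes)
    if loss:
        return min(cwnd, target)  # Westwood-style: fall back to the BDP, not cwnd/2
    if cwnd < target:
        return target             # jump straight to the estimated capacity
    return cwnd + 1               # then probe gently for extra bandwidth


BWE_BPS = 15.992e6   # MMBCE estimate, Configuration 2, 1440x3088 @ 30 fps
RTT_MIN_S = 0.02762  # assumed: 25.83 ms + 1.79 ms
cwnd, history = 2, [2]
for loss in [False, False, True, False]:
    cwnd = next_cwnd(cwnd, BWE_BPS, RTT_MIN_S, loss)
    history.append(cwnd)
print(history)  # [2, 37, 38, 37, 38]
```

A Reno-style halving at the same loss event would have dropped the window from 38 to 19 segments, well below the estimated path capacity of about 37 segments.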

5.5 Throughput

Figure 6 shows the throughput of MMBCE compared with other TCP variants. Because it reduces PLR, MMBCE achieves higher throughput than the existing algorithms and attains the maximum throughput among the TCP variants.

5.6 Video processing server queue utilization

Figure 7 compares the video server utilization under two queueing models, M/M/1 and M/M/S, as a function of the request arrival rate. The arrival rate ranges from 100 to 200 with different service rates, and queue utilization is expressed as a percentage. At an arrival rate of 100, the VPS server utilization is 91% under the M/M/1 model, whereas the M/M/S model in QVPS reduces it to 45% for the same arrival rate. This gap persists up to 200 requests. Under M/M/1, server utilization increases steadily with the arrival rate; under M/M/S, utilization is significantly lower because the proposed model adds processing servers. Figure 8 shows how the processing delay changes as the arrival rate increases for different numbers of servers.
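
The utilization figures above follow from the standard M/M/S formulas: utilization ρ = λ/(Sμ), and the probability that the system is empty is P0 = [Σ_{n=0}^{S-1} a^n/n! + a^S/(S!(1 - ρ))]^{-1} with a = λ/μ. The sketch assumes a per-server service rate μ = 110, chosen only because it reproduces the reported 91% M/M/1 utilization at λ = 100; with S = 2, the same λ then gives about 45%.

```python
import math


def utilization(lam: float, mu: float, s: int) -> float:
    """Average fraction of time each of the s servers is busy."""
    return lam / (s * mu)


def prob_empty(lam: float, mu: float, s: int) -> float:
    """P0 for an M/M/s queue (probability that no request is in the system)."""
    rho = utilization(lam, mu, s)
    if rho >= 1.0:
        raise ValueError("unstable queue: need lambda < s * mu")
    a = lam / mu  # offered load in Erlangs
    head = sum(a ** n / math.factorial(n) for n in range(s))
    tail = a ** s / (math.factorial(s) * (1.0 - rho))
    return 1.0 / (head + tail)


LAM, MU = 100.0, 110.0  # assumed arrival rate and per-server service rate
for s in (1, 2, 4, 6):
    print(f"S={s}: utilization {100 * utilization(LAM, MU, s):.1f}%, "
          f"P0 {prob_empty(LAM, MU, s):.3f}")
```

With μ fixed at 110, a single M/M/1 server would become unstable for λ ≥ 110, so covering the 100 to 200 range of Fig. 7 requires the different service rates mentioned in the text.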

5.7 Video processing delay

Figure 8 shows the processing delay for different numbers of servers. Increasing the number of servers (up to S = 6) reduces the video processing delay, which achieves the goal of the QVPS algorithm. Once video processing is complete, the next step is transcoding; for the heterogeneous network, experiments were performed for different workloads and modes.
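
The delay trend in Fig. 8 can be checked with the Erlang C formula for M/M/S: the probability of waiting is C = (a^S/S!)/(1 - ρ) · P0, the mean queueing delay is Wq = C/(Sμ - λ), and the mean response time is W = Wq + 1/μ. The rates below (λ = 100 and μ = 110 requests per second) are illustrative assumptions, not testbed measurements, so the sketch shows the shape of the trend rather than the values in Tables 3 to 5.

```python
import math


def mms_response_time(lam: float, mu: float, s: int) -> float:
    """Mean time in system W for an M/M/s queue, via the Erlang C formula."""
    rho = lam / (s * mu)
    if rho >= 1.0:
        raise ValueError("unstable queue: need lambda < s * mu")
    a = lam / mu
    head = sum(a ** n / math.factorial(n) for n in range(s))
    tail = a ** s / (math.factorial(s) * (1.0 - rho))
    erlang_c = tail / (head + tail)  # probability an arrival must wait
    wq = erlang_c / (s * mu - lam)   # mean waiting time in the queue
    return wq + 1.0 / mu             # plus the mean service time


LAM, MU = 100.0, 110.0  # assumed rates (requests per second)
for s in (1, 2, 4, 6):
    print(f"S={s}: mean response time {1000 * mms_response_time(LAM, MU, s):.2f} ms")
```

For S = 1 the formula reduces to the familiar M/M/1 result W = 1/(μ - λ), here 100 ms; adding servers drives W down toward the pure service time 1/μ ≈ 9.09 ms.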

5.8 Transcoding server workload

Figure 9 compares the transcoding server utilization of three approaches: Heuristic, COVT, and IVTS. IVTS utilizes 29% of the transcoders, while COVT uses 35%; IVTS thus reduces the transcoding workload utilization by 6 percentage points and uses only 13 transcoders overall. This reduction comes from the intelligent decision making of IVTS, which continuously monitors and schedules the transcoders to reduce the workload of each individual transcoder.

5.9 Transcoding mode

Figure 10 compares three video transcoding algorithms, IVTS, COVT, and Heuristic, across various video types over different time intervals. Sports video takes the most transcoding time, because on-demand requests from many viewers with heterogeneous mobiles, heavy network traffic, different encoding standards, and very large user demand cause delay in slow mode. IVTS always takes less time in both the slow and fast modes, because it spreads the workload across transcoders instead of placing a heavy load on a particular transcoder. IVTS schedules the workload evenly and thereby optimizes the transcoding time.

5.10 Transcoding average output size and delay

Figure 11 compares the average video output size of the different algorithms in video streaming, and Fig. 12 compares their average delay. IVTS selects the video transcoder based on the output segment size and thereby reduces the transcoding delay. Initially, the maximum segment size is fixed at 500 KB and the delay is set at 2 hours. In Algorithms 3 and 4, the parameters MaxD and MaxVSZ correspond to QoS (DMax) and QoS (SMax) in the figures, respectively. At the initial time of 0.5 hours, COVT produces 510 KB, whereas IVTS produces 500 KB, which equals SMax. IVTS always keeps the transcoder output segment size at QoS (SMax), whereas the existing Heuristic approach exceeds the maximum segment size at certain time intervals, which increases the transcoding delay. Because IVTS maintains a constant level, it minimizes delay and buffering. Turning to the average delay: once IVTS identifies the segment size of each video transcoder, execution begins.

Each video transcoder is scheduled based on the video output segment size, and the resulting average delay is plotted over different time intervals in Fig. 12. The figure compares IVTS with the traditional approaches COVT and Heuristic against the QoS bound (DMax), where the maximum delay is fixed at an average of 2 seconds. COVT and IVTS keep the average delay below QoS (DMax), whereas Heuristic runs at the upper bound of DMax. Hence, IVTS has the lowest delay compared with COVT and Heuristic.
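
For an M/G/N transcoding queue, exact waiting times have no simple closed form, but a standard two-moment (Allen–Cunneen) approximation scales the M/M/N waiting time by (1 + Cs²)/2, where Cs² is the squared coefficient of variation of the transcoding time; for N = 1 it coincides with the exact Pollaczek–Khinchine result. The sketch uses it to check a mean response time against the 2-second DMax. The arrival rate, mean transcoding time, and Cs² values are assumptions, and this is not necessarily the estimator used inside IVTS.

```python
import math


def mmc_wait(lam: float, mu: float, c: int) -> float:
    """Mean queueing delay Wq of an M/M/c queue (Erlang C)."""
    rho = lam / (c * mu)
    if rho >= 1.0:
        raise ValueError("unstable queue: need lambda < c * mu")
    a = lam / mu
    head = sum(a ** n / math.factorial(n) for n in range(c))
    tail = a ** c / (math.factorial(c) * (1.0 - rho))
    return (tail / (head + tail)) / (c * mu - lam)


def mgc_response(lam: float, mean_service_s: float, cs2: float, c: int) -> float:
    """Approximate mean response time of an M/G/c queue.

    Scales the M/M/c wait by (1 + cs2) / 2, where cs2 is the squared
    coefficient of variation of the service (transcoding) time."""
    mu = 1.0 / mean_service_s
    return (1.0 + cs2) / 2.0 * mmc_wait(lam, mu, c) + mean_service_s


D_MAX_S = 2.0         # QoS (DMax) from Fig. 12
LAM = 4.0             # assumed segment arrivals per second
MEAN_SERVICE_S = 0.9  # assumed mean transcoding time per segment
for n in (4, 5, 6):
    for cs2 in (0.5, 2.0):  # assumed: regular vs. highly variable transcoding times
        w = mgc_response(LAM, MEAN_SERVICE_S, cs2, n)
        verdict = "meets" if w <= D_MAX_S else "violates"
        print(f"N={n}, Cs^2={cs2}: W = {w:.2f} s ({verdict} DMax)")
```

Under these assumptions, four transcoders run at 90% utilization and miss DMax even for regular transcoding times, while a fifth transcoder brings the mean response time well under 2 seconds for both variability levels.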

6 Conclusion

In this paper, we proposed the MMBCE, CDCW, QVPS, and IVTS approaches to improve network and multimedia QoS. The first approach, MMBCE with CDCW, estimates the bandwidth with the help of an MDP to avoid congestion, and we compared it with existing approaches. The second approach, QVPS, uses an M/M/S queue to reduce the delay and server utilization of video processing. Third, IVTS intelligently selects the transcoding server using an M/G/N queueing model, which reduces the transcoding time efficiently. The experimental evaluation shows that these approaches reduce the overall system delay, and the queueing models in the cloud help deliver good-quality video. In the future, we intend to use the cloud server to minimize congestion in routers for mobile live video streaming.

References

Dinh HT, Lee C, Niyato D, Wang P (2013) A survey of mobile cloud computing: architecture, applications, and approaches. Wirel Commun Mobile Comput 13(18):1587–1611

Sanaei Z, Abolfazli S, Gani A, Buyya R (2014) Heterogeneity in mobile cloud computing: taxonomy and open challenges. IEEE Commun Surv Tutor 16(1):369–392

Stergiou CL, Psannis KE, Gupta B (2020) IoT-based big data secure management in the fog over a 6G wireless network. IEEE Internet Things J

Hossain K, Rahman M, Roy S (2019) IoT data compression and optimization techniques in cloud storage: current prospects and future directions. Int J Cloud Appl Comput (IJCAC) 9(2):43–59

Al-Qerem A, Alauthman M, Almomani A, Gupta B (2020) IoT transaction processing through cooperative concurrency control on fog–cloud computing environment. Soft Comput 24(8):5695–5711

Tamizhselvi S, Muthuswamy V (2014) Adaptive video streaming in mobile cloud computing. In: 2014 IEEE International conference on computational intelligence and computing research. IEEE, pp 1–4

Jadad HA, Touzene A, Day K, Alziedi N, Arafeh B (2019) Context-aware prediction model for offloading mobile application tasks to mobile cloud environments. Int J Cloud Appl Comput (IJCAC) 9(3):58–74

Ahmed E, Naveed A, Gani A, Ab Hamid SH, Imran M, Guizani M (2019) Process state synchronization-based application execution management for mobile edge/cloud computing. Futur Gener Comput Syst 91:579–589

Noor TH, Zeadally S, Alfazi A, Sheng QZ (2018) Mobile cloud computing: Challenges and future research directions. J Netw Comput Appl 115:70–85

Abdo JB, Demerjian J (2017) Evaluation of mobile cloud architectures. Pervasive Mob Comput 39:284–303

Esposito C, Ficco M, Gupta B (2021) Blockchain-based authentication and authorization for smart city applications. Inf Process Manag 58(2):102468

Li D, Deng L, Gupta B, Wang H, Choi C (2019) A novel cnn based security guaranteed image watermarking generation scenario for smart city applications. Inform Sci 479:432–447

Lai CF, Wang H, Chao HC, Nan G (2013) A network and device aware qos approach for cloud-based mobile streaming. IEEE Trans Multimed 15(4):747–757

Tamizhselvi S, Muthuswamy V (2015) A bayesian gaussian approach for video streaming in mobile cloud computing. Adv Nat Appl Sci 9(6 SE):470–478

Wang Z, Zeng X, Liu X, Xu M, Wen Y, Chen L (2016) Tcp congestion control algorithm for heterogeneous internet. J Netw Comput Appl 68:56–64

Lukaseder T, Bradatsch L, Erb B, Van Der Heijden RW, Kargl F (2016) A comparison of tcp congestion control algorithms in 10g networks. In: 2016 IEEE 41st conference on local computer networks (LCN). IEEE, pp 706–714

Wang J, Wen J, Zhang J, Xiong Z, Han Y (2016) Tcp-fit: An improved tcp algorithm for heterogeneous networks. J Netw Comput Appl 71:167–180

Jiang X, Jin G (2015) Cltcp: an adaptive tcp congestion control algorithm based on congestion level. IEEE Commun Lett 19(8):1307–1310

Parichehreh A, Alfredsson S, Brunstrom A (2018) Measurement analysis of tcp congestion control algorithms in lte uplink. In: 2018 Network Traffic Measurement and Analysis Conference (TMA). IEEE, pp 1–8

Callegari C, Giordano S, Pagano M, Pepe T (2014) A survey of congestion control mechanisms in linux tcp. In: Distributed computer and communication networks. Springer, pp 28–42

Leong WK, Wang Z, Leong B (2017) Tcp congestion control beyond bandwidth-delay product for mobile cellular networks. In: Proceedings of the 13th international conference on emerging networking experiments and technologies. ACM, pp 167–179

Jacobson V (1990) Modified tcp congestion control and avoidance algorithms. Technical Report 30

Xu L, Harfoush K, Rhee I (2004) Binary increase congestion control (bic) for fast long-distance networks. In: IEEE INFOCOM 2004, vol 4. IEEE, pp 2514–2524

Ha S, Rhee I, Xu L (2008) Cubic: a new tcp-friendly high-speed tcp variant. ACM SIGOPS Operat Syst Rev 42(5):64–74

Mascolo S, Casetti C, Gerla M, Sanadidi MY, Wang R (2001) Tcp westwood: Bandwidth estimation for enhanced transport over wireless links. In: Proceedings of the 7th annual international conference on Mobile computing and networking. ACM, pp 287–297

Metzger F, Liotou E, Moldovan C, Hoßfeld T (2016) Tcp video streaming and mobile networks: not a love story, but better with context. Comput Netw 109:246–256

Jacobson V (1995) Congestion avoidance and control. ACM SIGCOMM Comput Commun Rev 25(1):157–187

Fall K, Floyd S (1996) Simulation-based comparisons of tahoe, reno and sack tcp. ACM SIGCOMM Comput Commun Rev 26(3):5–21

Alrshah MA, Othman M, Ali B, Hanapi ZM (2014) Comparative study of high-speed linux tcp variants over high-bdp networks. J Netw Comput Appl 43:66–75

Lin S, Zhang X, Yu Q, Qi H, Ma S (2013) Parallelizing video transcoding with load balancing on cloud computing. In: 2013 IEEE International symposium on circuits and systems (ISCAS2013). IEEE, pp 2864–2867

Wang Z, Sun L, Wu C, Zhu W, Zhuang Q, Yang S (2015) A joint online transcoding and delivery approach for dynamic adaptive streaming. IEEE Trans Multimed 17(6):867–879

Liu S, Başar T, Srikant R (2008) Tcp-illinois: A loss- and delay-based congestion control algorithm for high-speed networks. Perform Eval 65(6-7):417–440

Mudassar A, Asri NM, Usman A, Amjad K, Ghafir I, Arioua M (2018) A new linux based tcp congestion control mechanism for long distance high bandwidth sustainable smart cities. Sustain Cities Soc 37:164–177

Chen Z, Liu Y, Duan Y, Liu H, Li G, Chen Y, Sun J, Zhang X (2015) A novel bandwidth estimation algorithm of tcp westwood in typical lte scenarios. In: 2015 IEEE/CIC International conference on communications in China (ICCC). IEEE, pp 1–5

Ameur CB, Mory E, Cousin B, Dedu E (2017) Tcphas: Tcp for http adaptive streaming. In: 2017 IEEE International conference on communications (ICC). IEEE, pp 1–7

Taha HA (2011) Operations research: an introduction, vol 790. Pearson/Prentice Hall, Upper Saddle River

Kleinrock L (1976) Queueing systems, volume 2: Computer applications, vol 66. Wiley, New York

Vilaplana J, Solsona F, Teixidó I, Mateo J, Abella F, Rius J (2014) A queuing theory model for cloud computing. J Supercomput 69(1):492–507

Nan X, He Y, Guan L (2014) Queueing model based resource optimization for multimedia cloud. J Vis Commun Image Represent 25(5):928–942

Baik E, Pande A, Zheng Z, Mohapatra P (2016) Vsync: Cloud based video streaming service for mobile devices. In: IEEE INFOCOM 2016-The 35th annual ieee international conference on computer communications. IEEE, pp 1–9

Cheng R, Wu W, Lou Y, Chen Y (2014) A cloud-based transcoding framework for real-time mobile video conferencing system. In: 2014 2nd IEEE international conference on mobile cloud computing, services, and engineering. IEEE, pp 236–245

Zheng Y, Wu D, Ke Y, Yang C, Chen M, Zhang G (2017) Online cloud transcoding and distribution for crowdsourced live game video streaming. IEEE Trans Circ Syst Vid Technol 27(8):1777–1789

Gao G, Zhang W, Wen Y, Wang Z, Zhu W (2015) Towards cost-efficient video transcoding in media cloud: Insights learned from user viewing patterns. IEEE Trans Multimed 17(8):1286–1296

Aparicio-Pardo R, Pires K, Blanc A, Simon G (2015) Transcoding live adaptive video streams at a massive scale in the cloud. In: Proceedings of the 6th ACM multimedia systems conference. ACM, pp 49–60

Oikonomou P, Koziri MG, Tziritas N, Loukopoulos T, Cheng-Zhong X (2019) Scheduling heuristics for live video transcoding on cloud edges. ZTE Commun 15(2):35–41

Wei L, Cai J, Foh CH, He B (2017) Qos-aware resource allocation for video transcoding in clouds. IEEE Trans Circ Syst Vid Technol 27(1):49–61

Sutton RS, Barto AG (2018) Reinforcement learning: An introduction. MIT Press, Cambridge

Xing M, Xiang S, Cai L (2014) A real-time adaptive algorithm for video streaming over multiple wireless access networks. IEEE J Sel Areas Commun 32(4):795–805

Wang HS, Moayeri N (1995) Finite-state markov channel-a useful model for radio communication channels. IEEE Trans Veh Technol 44(1):163–171

Jackson JR (1963) Jobshop-like queueing systems. Manage Sci 10(1):131–142

Nair AN, Jacob M, Krishnamoorthy A (2015) The multi server m/m/(s, s) queueing inventory system. Ann Oper Res 233(1):321–333

Kleinrock L (1975) Theory, volume 1, Queueing systems. Wiley-interscience, Hoboken

Samsung galaxy s7 edge android emulator. https://medium.com/@ssaurel/install-samsung-galaxy-s7-and-s7-edge-skins-in-your-android-emulator-846bbcd84d24

Amazon Web Services, Inc., Amazon Elastic Compute Cloud. http://aws.amazon.com/ec2/

Palmer M (2017) Amazon EC2 instances explained. [online] Available: https://24x7itconnection.com/2017/01/10/amazon-ec2-instances-explained

Appendix: 1

This section provides details of the implementation carried out in this work, with screenshots and demos. Figure 13 shows a screen captured from the Samsung Galaxy S7 used in the experiment, running the proposed framework on the cloud server (AWS). Here, the user requests a video from the cloud side, and the video is retrieved and delivered as good-quality live streaming.

Figure 14 shows the integration of the Samsung mobile with the public cloud, AWS. Figure 15 was captured while testing the proposed algorithms in the public cloud environment with AWS Device Farm. Figure 16 shows the video being delivered to the user through AWS Device Farm with the proposed algorithms applied, with the video content downloaded with better response.

Cite this article

Tamizhselvi, S., Muthuswamy, V. Delay - aware bandwidth estimation and intelligent video transcoder in mobile cloud. Peer-to-Peer Netw. Appl. 14, 2038–2060 (2021). https://doi.org/10.1007/s12083-021-01134-1
