Abstract

We collect and present in a unified way several results obtained in recent years on the elastic flow of curves and networks, trying to describe the state of the art of the subject. In particular, we give a complete proof of global existence and smooth convergence to critical points of the solution of the elastic flow of closed curves in \({\mathbb {R}}^2\). In the last section of the paper we also discuss a list of open problems.

1 Introduction

The study of geometric flows is a very flourishing mathematical field, and geometric evolution equations have been applied to a variety of topological, analytical and physical problems, in some cases with very fruitful results. In particular, great attention has been devoted to the analysis of the harmonic map flow, the mean curvature flow and the Ricci flow. Through the sustained efforts of the mathematical community, the understanding of these topics gradually improved, culminating in Perelman’s proof of the Poincaré conjecture by means of the Ricci flow, which completed Hamilton’s program. The enthusiasm for such a marvelous result encouraged more and more researchers to investigate properties and applications of general geometric flows, and the field branched out in various directions, including higher order flows, among which we mention the Willmore flow.

In the last two decades several authors have focused on the one-dimensional analog of the Willmore flow (see [26]): the elastic flow of curves and networks. The elastic energy of a regular and sufficiently smooth curve \(\gamma \) is a linear combination of the squared \(L^2\)-norm of the curvature \(\varvec{\kappa }\) and the length, namely

$$\begin{aligned} {\mathcal {E}}_\mu (\gamma ):=\int _\gamma \vert \varvec{\kappa }\vert ^2+\mu \,\mathrm {d}s, \end{aligned}$$

where \(\mu \ge 0\). In the case of networks (connected sets composed of \(N\in {\mathbb {N}}\) curves that meet at their endpoints in junctions of possibly different orders) the functional is defined in a similar manner: one sums the contributions of the curves (see Definition 2.1). Formally, the elastic flow is the \(L^2\)-gradient flow of the functional \({\mathcal {E}}\) (as we show in Section 2.3), and the solutions of this flow are the object of our interest in the current paper.
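As a quick numerical illustration of \({\mathcal {E}}_\mu \), the Python sketch below evaluates a discrete version of the energy on closed polygons. The discretization (squared turning angle at each vertex divided by the dual edge length, as a stand-in for \(\int \vert \varvec{\kappa }\vert ^2\,\mathrm {d}s\)) and the helper names `polygon_elastic_energy` and `circle` are our own illustrative assumptions, not taken from the works cited here. For a circle of radius R the exact value is \(2\pi /R+2\pi \mu R\), which among circles is minimized at \(R=1/\sqrt{\mu }\).

```python
import math

def polygon_elastic_energy(points, mu):
    """Discrete elastic energy of a closed polygon (illustrative helper).

    The bending term sums (turning angle)**2 / (dual edge length) over the
    vertices, a common stand-in for the integral of k**2 ds; the length term
    is mu times the perimeter.
    """
    n = len(points)
    # edge vectors p_{j+1} - p_j, indices taken mod n since the curve is closed
    edges = [(points[(j + 1) % n][0] - points[j][0],
              points[(j + 1) % n][1] - points[j][1]) for j in range(n)]
    lengths = [math.hypot(ex, ey) for ex, ey in edges]
    bending = 0.0
    for j in range(n):
        (ax, ay), (bx, by) = edges[j - 1], edges[j]  # the two edges meeting at vertex j
        turning = math.atan2(ax * by - ay * bx, ax * bx + ay * by)  # signed angle
        dual = 0.5 * (lengths[j - 1] + lengths[j])
        bending += turning ** 2 / dual
    return bending + mu * sum(lengths)

def circle(radius, n=2000):
    """n equally spaced vertices on a counterclockwise circle."""
    return [(radius * math.cos(2 * math.pi * j / n),
             radius * math.sin(2 * math.pi * j / n)) for j in range(n)]

print(polygon_elastic_energy(circle(2.0), mu=1.0))  # approx. 15.708 = 5*pi
```

With \(\mu =4\), for example, the discrete energy of `circle(0.5)` is close to \(8\pi =4\pi \sqrt{\mu }\) and is smaller than that of `circle(0.4)`.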

To the best of our knowledge the problem was first considered by Polden. In his Doctoral Thesis [46, Theorem 3.2.3.1] he proved that, if the initial datum is a smooth immersion of the circle in the plane, then there exists a smooth solution to the gradient flow problem for all positive times. Moreover, as time goes to infinity, it converges along subsequences to a critical point of the functional (either a circle, or a symmetric figure eight, or a multiple cover of one of these). Polden was also able to prove that if the winding number of the initial curve is \(\pm 1\) (for example, if the curve is embedded), then it converges to a unique circle [46, Corollary 3.2.3.3]. In the early 2000s Dziuk, Kuwert and Schätzle generalized the global existence and subconvergence result to \({\mathbb {R}}^n\), derived an algorithm to treat the flow and computed several numerical examples. Later the analysis was extended to non-closed curves, both with fixed endpoints and with non-compact branches. The problem for networks was first proposed in 2012 by Barrett, Garcke and Nürnberg [7].

Besides this specific problem, there are quite a few interesting variants. For instance, since for a regular \(C^2\) curve \(\gamma :I\rightarrow {\mathbb {R}}^2\) it holds \(\varvec{\kappa }=\partial _s \tau \), where \(\tau \) is the unit tangent vector and \(\partial _s\) denotes the derivative with respect to the arclength parameter s of the curve, we can introduce the tangent indicatrix: a scalar map \(\theta :I\rightarrow {\mathbb {R}}\) such that \(\tau =(\cos \theta ,\sin \theta )\). Then we can write the elastic energy in terms of the angle spanned by the tangent vector. Expressing the corresponding \(L^2\)-gradient flow by means of \(\theta \), one gets another geometric evolution equation. This is a second order gradient flow; it was first considered in [54] and then further investigated in [30, 31, 42, 45, 55].
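To make the passage to \(\theta \) explicit, here is the short computation relating \(\theta \) to the oriented curvature, using the convention (fixed in Section 2) that \(\nu \) is the counterclockwise rotation of \(\tau \) by \(\frac{\pi }{2}\):

```latex
\begin{aligned}
\tau &= (\cos\theta,\sin\theta), \qquad \nu = (-\sin\theta,\cos\theta),\\
\boldsymbol{\kappa} &= \partial_s\tau = \partial_s\theta\,(-\sin\theta,\cos\theta) = (\partial_s\theta)\,\nu,
\qquad\text{so}\qquad k=\partial_s\theta,\\
\mathcal{E}_\mu(\gamma) &= \int_\gamma |\boldsymbol{\kappa}|^2+\mu\,\mathrm{d}s
= \int_0^{\ell(\gamma)} (\partial_s\theta)^2\,\mathrm{d}s + \mu\,\ell(\gamma).
\end{aligned}
```

The bending part is thus a Dirichlet-type energy of \(\theta \), which is why the corresponding gradient flow for \(\theta \) is of second order.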

Critical points of the total squared curvature subject to fixed length are called elasticae, or elastic curves. Notice that for any \(\mu >0\) the elasticae are (up to homothety) exactly the critical points of the energy \({\mathcal {E}}\). Elasticae have been studied since Bernoulli and Euler, as the elastic energy was used as a model for the bending energy of an elastic rod [53], and more recently Langer and Singer contributed to their classification [27, 28] (see also [20, 32]).
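The role of homotheties can be seen through a scaling computation. It anticipates the equation \(2(\partial _s^\perp )^2\varvec{\kappa }+|\varvec{\kappa }|^2\varvec{\kappa }-\mu \varvec{\kappa }=0\) satisfied by closed critical points of \({\mathcal {E}}_\mu \) (see Section 2.3). For \(\rho >0\) let \(\gamma _\rho :=\rho \gamma \), with arclength \(s_\rho =\rho s\) and curvature vector \(\varvec{\kappa }_\rho \). The unit tangent, and hence the normal projection, is unchanged, while \(\partial _{s_\rho }=\rho ^{-1}\partial _s\) and \(\varvec{\kappa }_\rho =\rho ^{-1}\varvec{\kappa }\). Therefore

```latex
2(\partial_{s_\rho}^\perp)^2\boldsymbol{\kappa}_\rho
+|\boldsymbol{\kappa}_\rho|^2\boldsymbol{\kappa}_\rho
-\frac{\mu}{\rho^2}\,\boldsymbol{\kappa}_\rho
=\rho^{-3}\Big(2(\partial_s^\perp)^2\boldsymbol{\kappa}
+|\boldsymbol{\kappa}|^2\boldsymbol{\kappa}-\mu\,\boldsymbol{\kappa}\Big),
```

so \(\gamma \) is a closed critical point of \({\mathcal {E}}_\mu \) if and only if \(\rho \gamma \) is a critical point of \({\mathcal {E}}_{\mu /\rho ^2}\). For positive weights, changing \(\mu \) therefore amounts to a homothety.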

The \(L^2\)-gradient flow of \(\int \vert \varvec{\kappa }\vert ^2\,\mathrm {d}s\) when the curve is subjected to fixed length is studied in [12, 13, 21, 49].

It is also worth mentioning results about the Helfrich flow [17, 56], the elastic flow with constraints [25, 43, 44] and other fourth (or higher) order flows [1, 2, 36, 37, 57].

In the following table we collect some contributions on the elastic flow of curves (closed or open) and networks. The first column concerns papers containing detailed proofs of short time existence results; the initial datum may belong to a suitably chosen Sobolev or Hölder space, or be smooth. In the second column we place the articles that show existence for all positive times or that describe obstructions to such a result. When the flow exists globally, it is natural to ask about the behavior of the solutions as \(t\rightarrow +\infty \); papers that answer this question are in the third column. The ambient space may vary from article to article: it can be \({\mathbb {R}}^2\), \({\mathbb {R}}^n\), or a Riemannian manifold.

We refer also to the two recent PhD theses [38, 48].

The aim of this expository paper is to arrange (most of) this material in a unified form, proving in full detail the results for the elastic flow of closed curves and highlighting the differences with the other cases.

For simplicity we restrict to the Euclidean plane as ambient space. In Section 2 we define the flow, deriving the motion equation and the necessary boundary conditions for open curves and networks. In the literature curves that meet at junctions of order at most three are usually considered, while here the order of the junctions is arbitrary.

In Section 3 we show short time existence and uniqueness (up to reparametrizations) for the elastic flow of closed curves, assuming that the initial datum is Hölder-regular (Theorem 3.18). The notion of \(L^2\)-gradient flow gives rise to a fourth order parabolic quasilinear PDE, where the motion in the tangential direction is not specified. To obtain a non–degenerate equation we fix the tangential velocity, thus obtaining first a special flow (Definition 2.12). We find a unique solution of the special flow (Theorem 3.14) using a standard linearization procedure and a fixed point argument. A key point is then to ensure that solving the special flow is enough to obtain a solution to the original problem; how to overcome this issue is explained in Section 2.4. The short time existence result can be easily adapted to open curves (see Remark 3.15), but presents some extra difficulties in the case of networks, which we explain in Remark 3.16.

One interesting feature following from the parabolic structure of the elastic flow is that solutions are smooth for all (strictly) positive times. We give the idea of two possible lines of proof of this fact and refer to [15, 22] for the complete result.

Section 4 is devoted to the proof that the flow of either closed curves or open curves with fixed endpoints exists globally in time (Theorem 4.15). The situation for networks is more delicate: it depends on the evolution of the lengths of the curves composing the network and on the angles formed by the tangent vectors of the curves concurring at the junctions (Theorem 4.18).

In Section 5 we first show that, as time goes to infinity, the solutions of the elastic flow of closed curves converge along subsequences to stationary points of the elastic energy, up to translations and reparametrizations. We shall refer to this phenomenon as the subconvergence of the flow. We then discuss how the subconvergence can be promoted to full convergence of the flow, namely to the existence of the full asymptotic limit of the evolving flow as \(t\rightarrow +\infty \), up to reparametrizations (Theorem 5.4). The proof is based on the derivation and the application of a Łojasiewicz–Simon gradient inequality for the elastic energy.

We conclude the paper with a list of open problems.

2 The Elastic Flow

A regular curve \(\gamma \) is a continuous map \(\gamma :[a,b]\rightarrow {{{\mathbb {R}}}}^2\) which is differentiable on (a, b) and such that \(\vert \partial _x\gamma \vert \) never vanishes on (a, b). Without loss of generality, from now on we consider \([a,b]=[0,1]\).

In the sequel we will abuse the word “curve” to refer to the parametrization of a curve, to the equivalence class of its reparametrizations, or to its support in \({\mathbb {R}}^2\).

We denote by s the arclength parameter, and we will pass to the arclength parametrization of the curves when it is more convenient without further mention. We will also extensively use the arclength measure \(\mathrm {d}s\) when integrating with respect to the volume element \(\mu _g\) on [0, 1] induced by a regular rectifiable curve \(\gamma \); namely, given a \(\mu _g\)-integrable function f on [0, 1] it holds

$$\begin{aligned} \int _{[0,1]} f\,\mathrm {d}\mu _g=\int _0^1 f(x)\vert \partial _x\gamma (x)\vert \,\mathrm {d}x=\int _0^{\ell (\gamma )}f(s)\,\mathrm {d}s, \end{aligned}$$

where \(\ell (\gamma )\) is the length of the curve \(\gamma \).
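Integrals with respect to \(\mathrm {d}s\) can be computed in any regular parametrization by weighting with \(\vert \partial _x\gamma \vert \). A minimal numerical sketch (the helper `integrate_ds` and the test curve are illustrative assumptions): the curve \(\gamma (x)=(\cos (\pi (x+x^2)),\sin (\pi (x+x^2)))\) runs once around the unit circle with non-constant speed \(\pi (1+2x)\), yet the quadrature returns the length \(2\pi \) and \(\int _\gamma \gamma _1^2\,\mathrm {d}s=\pi \), exactly as in the arclength parametrization.

```python
import math

def integrate_ds(gamma, f, n=20000):
    """Approximate the integral of f along gamma: [0, 1] -> R^2 with respect to ds.

    Each cell [x_j, x_{j+1}] contributes f(midpoint) times the chord length
    |gamma(x_{j+1}) - gamma(x_j)|, a Riemann sum for int_0^1 f(x) |d_x gamma(x)| dx.
    """
    total = 0.0
    for j in range(n):
        a, b = j / n, (j + 1) / n
        (xa, ya), (xb, yb) = gamma(a), gamma(b)
        total += f(0.5 * (a + b)) * math.hypot(xb - xa, yb - ya)
    return total

def gamma(x):
    """Unit circle traversed once with non-constant speed |d_x gamma| = pi*(1 + 2x)."""
    phi = math.pi * (x + x * x)
    return (math.cos(phi), math.sin(phi))

length = integrate_ds(gamma, lambda x: 1.0)                      # approx. 2*pi
second_moment = integrate_ds(gamma, lambda x: gamma(x)[0] ** 2)  # approx. pi
print(length, second_moment)
```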

Definition 2.1

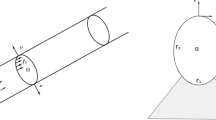

A planar network \({\mathcal {N}}\) is a connected set in \({\mathbb {R}}^2\) given by a finite union of images of regular curves \(\gamma ^i:[0,1]\rightarrow {{{\mathbb {R}}}}^2\), which may have endpoints of order one fixed in the plane and which meet at junctions of possibly different orders \(m\in {\mathbb {N}}_{\ge 2}\).

The order of a junction \(p\in {{{\mathbb {R}}}}^2\) is the number \(\sum _i \sharp \left( \{0,1\}\cap (\gamma ^i)^{-1}(p)\right) \).

As special cases of networks we find:

-

a single curve (either closed or not);

-

a network of three curves whose endpoints meet at two different triple junctions (the so-called Theta);

-

a network of three curves with one common endpoint at a triple junction and the other three endpoints of order one (the so-called Triod).

Notice that when it is more convenient, we will parametrize a closed curve as a map \(\gamma :{\mathbb {S}}^1\rightarrow {\mathbb {R}}^2\).

In order to calculate the integral of an N-tuple \(f=(f^1,\ldots ,f^N)\) of functions along the network \({\mathcal {N}}\) composed of the N curves \(\gamma ^i\) we adopt the notation

$$\begin{aligned} \int _{{\mathcal {N}}} f\,\mathrm {d}s:=\sum _{i=1}^N\int _0^1 f^i\vert \partial _x\gamma ^i\vert \,\mathrm {d}x. \end{aligned}$$

If \(\mu =(\mu ^1, \ldots ,\mu ^N)\) with \(\mu ^i\ge 0\), then the notation \(\int _{{\mathcal {N}}} \mu f\,\mathrm {d}s\) stands for \(\sum _{i=1}^N\int _0^1 \mu ^i f^i\vert \partial _x\gamma ^i\vert \,\mathrm {d}x\).

Let \(\gamma :[0,1]\rightarrow {\mathbb {R}}^2\) be a regular curve and \(f:(0,1)\rightarrow {\mathbb {R}}\) a Lebesgue measurable function. For \(p\in [1,\infty )\) we define

and

We will also use the \(L^\infty \)-norm

Whenever we are considering continuous functions, we identify the supremum norm with the \(L^\infty \) norm and denote it by \(\left\Vert \cdot \right\Vert _\infty \).

We remark here that, for the sake of notation, we will simply write \(\Vert \cdot \Vert _{L^p}\) instead of \(\Vert \cdot \Vert _{L^p({\,\mathrm {d}}s)}\), both for \(p\in [1,\infty )\) and \(p=\infty \), whenever there is no risk of confusion.

We will analogously write

for an N-tuple of functions f along a network \({\mathcal {N}}\).

Assuming that \(\gamma ^i\) is of class \(H^2\), we denote by \(\varvec{\kappa }^i:=\partial _s^2\gamma ^i\) the curvature vector of the curve \(\gamma ^i\), which is defined almost everywhere; the curvature is simply \(\kappa ^i:=\vert \varvec{\kappa }^i\vert \). We recall that in the plane we can write the curvature vector as \(\varvec{\kappa }^i=k^i \nu ^i\), where \(\nu ^i\) is the counterclockwise rotation by \(\frac{\pi }{2}\) of the unit tangent vector \(\tau ^i:=\vert \partial _x\gamma ^i\vert ^{-1}(\partial _x\gamma ^i)\) to the curve \(\gamma ^i\); then \(k^i\) is the oriented curvature.
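For a parametrization \(\gamma =(\gamma _1,\gamma _2)\) that is not by arclength, the oriented curvature can be computed as \(k=\langle \partial _x^2\gamma ,\nu \rangle /\vert \partial _x\gamma \vert ^2=(\partial _x\gamma _1\,\partial _x^2\gamma _2-\partial _x\gamma _2\,\partial _x^2\gamma _1)/\vert \partial _x\gamma \vert ^3\), a standard formula consistent with the counterclockwise choice of \(\nu \). The Python sketch below approximates the derivatives by central differences; the helper names are hypothetical. Reversing the orientation flips the sign of \(k\), while \(\kappa =\vert k\vert \) is unchanged.

```python
import math

def oriented_curvature(gamma, x, h=1e-4):
    """Oriented curvature k at parameter x, with nu = tau rotated by +pi/2.

    Uses k = (u'v'' - v'u'') / |gamma'|^3 for gamma = (u, v), with the
    derivatives approximated by central finite differences of step h.
    """
    (u0, v0), (u1, v1), (u2, v2) = gamma(x - h), gamma(x), gamma(x + h)
    du, dv = (u2 - u0) / (2 * h), (v2 - v0) / (2 * h)                  # first derivatives
    ddu, ddv = (u2 - 2 * u1 + u0) / h ** 2, (v2 - 2 * v1 + v0) / h ** 2  # second derivatives
    return (du * ddv - dv * ddu) / math.hypot(du, dv) ** 3

def ellipse(x, a=2.0, b=1.0):
    """Counterclockwise ellipse with semi-axes a and b, parametrized on [0, 1]."""
    return (a * math.cos(2 * math.pi * x), b * math.sin(2 * math.pi * x))

print(oriented_curvature(ellipse, 0.0))                # approx. 2.0 = a/b**2
print(oriented_curvature(lambda x: ellipse(-x), 0.0))  # approx. -2.0: reversed orientation
```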

Definition 2.2

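Let \(\mu ^i \ge 0\) be fixed for \(i\in \{1, \ldots , N\}\). The elastic energy functional \({\mathcal {E}}_\mu \) of a network \({\mathcal {N}}\) given by N curves \(\gamma ^i\) of class \(H^2\) is defined by

$$\begin{aligned} {\mathcal {E}}_\mu ({\mathcal {N}}):=\sum _{i=1}^N\int _{\gamma ^i}\vert \varvec{\kappa }^i\vert ^2+\mu ^i\,\mathrm {d}s=\int _{{\mathcal {N}}}\vert \varvec{\kappa }\vert ^2\,\mathrm {d}s+\mu \mathrm {L}({\mathcal {N}}), \end{aligned}$$(2.1)

where \(\mu \mathrm {L}({\mathcal {N}}):=\sum _{i=1}^N\mu ^i\ell (\gamma ^i)\) is named the weighted global length of the network \({\mathcal {N}}\).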

2.1 First Variation of the Elastic Energy

The first variation has been computed several times in full detail in the literature, both in the setting of closed curves and in that of networks. We refer for instance to [7, 35].

Let \(N\in {\mathbb {N}}\), \(i\in \{1,\ldots ,N\}\). Consider a network \({\mathcal {N}}\) composed of N curves, parametrized by \(\gamma ^i:[0,1]\rightarrow {\mathbb {R}}^2\) of class \(H^4\). In order to compute the first variation of the energy we may suppose that the curves meet at one junction, which is of order N, and that \(\gamma ^i(1)\) is some fixed point \(P^i\in {{{\mathbb {R}}}}^2\) for any i. That is,

$$\begin{aligned} \gamma ^1(0)=\cdots =\gamma ^N(0)\qquad \text {and}\qquad \gamma ^i(1)=P^i\quad \text {for every } i\in \{1,\ldots ,N\}. \end{aligned}$$

The case of networks with other possible topologies can be easily deduced from this one. We consider a variation \(\gamma ^i_\varepsilon =\gamma ^i+\varepsilon \psi ^i\) of each curve \(\gamma ^i\) of \({\mathcal {N}}\) with \(\varepsilon \in {\mathbb {R}}\) and \(\psi ^i:[0,1]\rightarrow {\mathbb {R}}^2\) of class \(H^2\). We denote by \({\mathcal {N}}_\varepsilon \) the network composed of the curves \(\gamma ^i_\varepsilon \), which are regular whenever \(|\varepsilon |\) is small enough. We need the variation to preserve the structure of the network \({\mathcal {N}}\): we want \({\mathcal {N}}_\varepsilon \) to still have one junction of order N, and we want to preserve the position of the other endpoints, \(\gamma ^i_\varepsilon (1)=P^i\). To this aim we require

$$\begin{aligned} \psi ^1(0)=\cdots =\psi ^N(0)\qquad \text {and}\qquad \psi ^i(1)=0\quad \text {for every } i\in \{1,\ldots ,N\}. \end{aligned}$$

By definition of the elastic energy functional of networks, we have

We introduce the operator \(\partial _s^\perp \) (that acts on a vector field \(\varphi \)) defined as the normal component of \(\partial _s\varphi \) along the curve \(\gamma \), that is \(\partial _s^\perp \varphi =\partial _s\varphi -\left\langle \partial _s\varphi ,\partial _s\gamma \right\rangle \partial _s\gamma \). Then a direct computation yields the following identities:

for any i on (0, 1), where s is the arclength parameter of \(\gamma _\varepsilon \) for any \(\varepsilon \). Therefore, evaluating at \(\varepsilon =0\), we obtain

Moreover, denoting by \(\partial _s^\perp (\cdot ): =\partial _s(\cdot )-\langle \partial _s (\cdot ),\tau \rangle \tau \), we have

then, using these identities and integrating (2.3) by parts twice, one gets

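For arbitrary fields \(\psi ^i\), we split \(\partial _s\psi ^i\) into normal and tangential components as

$$\begin{aligned} \partial _s\psi ^i=\left\langle \partial _s\psi ^i,\nu ^i\right\rangle \nu ^i+\left\langle \partial _s\psi ^i,\tau ^i\right\rangle \tau ^i. \end{aligned}$$

This allows us to write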

and we can then partially reformulate the first variation in terms of the oriented curvature and its derivatives:

2.2 Second Variation of the Elastic Energy

In this part we compute the second variation of the elastic energy functional \({\mathcal {E}}_\mu \). We are only interested in showing its structure and analyzing some of its properties, rather than computing it explicitly (for the full formula of the second variation we refer to [18, 47]). In fact, we will exploit the properties of the second variation only in the proof of the smooth convergence of the elastic flow of closed curves in Section 5. In particular, we will not need to keep track of boundary terms in the next computations.

Let \(\gamma :(0,1)\rightarrow {{{\mathbb {R}}}}^2\) be a smooth curve and let \(\psi :(0,1)\rightarrow {{{\mathbb {R}}}}^2\) be a vector field in \(H^4(0,1)\cap C^0_c(0,1)\), that is, \(\psi \) vanishes identically outside a compact set contained in (0, 1). In this setting, we can think of \(\gamma \) as a parametrization of part of an arc of a network or of a closed curve. We are interested in the second variation

$$\begin{aligned} \frac{\mathrm {d}^2}{\mathrm {d}\varepsilon ^2}\Big \vert _{\varepsilon =0}{\mathcal {E}}_\mu (\gamma +\varepsilon \psi ). \end{aligned}$$

By (2.4) we have

where \(\varvec{\kappa }_\varepsilon \) is the curvature vector of \(\gamma _\varepsilon =\gamma +\varepsilon \psi \), for any \(\varepsilon \) sufficiently small.

We further assume that \(\gamma \) is a critical point for \({\mathcal {E}}_\mu \) and that \(\psi \) is normal along \(\gamma \). Then

Using (2.2), if \(\phi _\varepsilon \) is a normal vector field along \(\gamma _\varepsilon \) for any \(\varepsilon \) and we denote \(\phi {:}{=}\phi _0\), a direct computation shows that

Hence \(\partial _\varepsilon |_{_{\varepsilon =0}} (\partial _s^\perp )^2 \varvec{\kappa }_\varepsilon \) can be computed by applying the above commutation rule twice, first with \(\phi _\varepsilon = \partial _s^\perp \varvec{\kappa }_\varepsilon \) and then with \(\phi _\varepsilon = \varvec{\kappa }_\varepsilon \). One easily obtains

where \(\Omega (\psi )\in L^2(\mathrm {d}s)\) is a normal vector field along \(\gamma \), depending only on \(k,\psi \) and their “normal derivatives” \(\partial _s^\perp \) up to the third order. Moreover the dependence of \(\Omega \) on \(\psi \) is linear. For further details on these computations we refer to [35].

Using (2.2) it is immediate to check that \(\partial _\varepsilon |_{_{\varepsilon =0}} \left( |\varvec{\kappa }_\varepsilon |^2 \varvec{\kappa }_\varepsilon - \mu \varvec{\kappa }_\varepsilon \right) \) yields terms that can be absorbed in \(\Omega (\psi )\). Therefore we conclude that

By polarization, we see that the second variation of \({\mathcal {E}}_\mu \) defines a bilinear form \(\delta ^2{\mathcal {E}}_\mu (\varphi ,\psi )\) given by

for any normal vector fields \(\varphi ,\psi \) of class \(H^4\cap C^0_c\) along \(\gamma \), where \(\gamma \) is a smooth critical point of \({\mathcal {E}}_\mu \).

2.3 Definition of the Flow

In this section we define the elastic flow for curves and networks. We formally derive it as the \(L^2\)-gradient flow of the elastic energy functional (2.1). We need to derive the normal velocity defining the flow. The reasons why a gradient flow is defined in terms of a normal velocity are related to the invariance of the energy functional under reparametrization; we will come back to this point in more detail in Section 2.4.

The analysis of the boundary terms appearing in the computation of the first variation plays an important role in the definition of the flow. Indeed, a correct definition of the flow relies on the fact that the velocity defining the evolution should be the opposite of the “gradient” of the energy. Hence we need to identify such a gradient from the formula of the first variation and, in turn, analyze the boundary terms appearing there.

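Suppose first that the network is composed only of one closed curve \(\gamma \in C^{\infty }([0,1],{\mathbb {R}}^2)\). This means that for every \(k\in {\mathbb {N}}\) we have \(\partial _x^k\gamma (0)=\partial _x^k\gamma (1)\), and \(\gamma \) can be seen as a smooth periodic function on \({{{\mathbb {R}}}}\). Then a variation field \(\psi \) is a periodic function as well, no further boundary constraints are needed, and the boundary terms in (2.4) vanish automatically. Hence (2.5) reduces to

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}\varepsilon }\Big \vert _{\varepsilon =0}{\mathcal {E}}_\mu (\gamma +\varepsilon \psi )=\int _\gamma \left\langle 2 (\partial ^\perp _s)^2 \varvec{\kappa } +|\varvec{\kappa }|^2 \varvec{\kappa } -\mu \varvec{\kappa },\psi \right\rangle \,\mathrm {d}s. \end{aligned}$$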
We have formally written the directional derivative of \({\mathcal {E}}_\mu \) in the direction \(\psi \) as the \(L^2\)-scalar product of \(\psi \) and the vector \(2 (\partial ^\perp _s)^2 \varvec{\kappa } +|\varvec{\kappa }|^2 \varvec{\kappa } -\mu \varvec{\kappa }\). Hence we can regard \(2 (\partial ^\perp _s)^2 \varvec{\kappa } +|\varvec{\kappa }|^2 \varvec{\kappa } -\mu \varvec{\kappa }\) as the gradient of \({\mathcal {E}}_\mu \). We then set the normal velocity driving the flow to be the opposite of such a gradient, that is

$$\begin{aligned} (\partial _t\gamma )^\perp =-2 (\partial ^\perp _s)^2 \varvec{\kappa } -|\varvec{\kappa }|^2 \varvec{\kappa } +\mu \varvec{\kappa }, \end{aligned}$$(2.6)

where, again, \((\cdot )^\perp \) denotes the normal component of the velocity \(\partial _t\gamma \) of the curve \(\gamma \):

$$\begin{aligned} (\partial _t\gamma )^\perp =\partial _t\gamma -\left\langle \partial _t\gamma ,\tau \right\rangle \tau . \end{aligned}$$
In \({\mathbb {R}}^2\) it is possible to express the evolution equation in terms of the scalar curvature:

$$\begin{aligned} \left\langle \partial _t\gamma ,\nu \right\rangle =-2\partial _s^2k-k^3+\mu k. \end{aligned}$$

This last equality can be directly deduced from (2.6). In this way we have derived an equation that describes the normal motion of each curve.
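The deduction of the scalar form uses the planar Frenet relations \(\partial _s\tau =k\nu \) and \(\partial _s\nu =-k\tau \) (the latter because \(\nu \) is the rotation of \(\tau \) by \(\frac{\pi }{2}\)). Writing \(\varvec{\kappa }=k\nu \), one computes

```latex
\begin{aligned}
\partial_s\boldsymbol{\kappa} &= (\partial_s k)\,\nu - k^2\,\tau
&&\Longrightarrow\quad \partial_s^\perp\boldsymbol{\kappa} = (\partial_s k)\,\nu,\\
\partial_s\big((\partial_s k)\,\nu\big) &= (\partial_s^2 k)\,\nu - k\,(\partial_s k)\,\tau
&&\Longrightarrow\quad (\partial_s^\perp)^2\boldsymbol{\kappa} = (\partial_s^2 k)\,\nu,
\end{aligned}
```

and \(|\varvec{\kappa }|^2\varvec{\kappa }=k^3\nu \). Taking the scalar product of (2.6) with \(\nu \) gives \(\left\langle \partial _t\gamma ,\nu \right\rangle =-2\partial _s^2k-k^3+\mu k\).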

We now consider, exactly as in Section 2.1, a network composed of N curves, parametrized by \(\gamma ^i:[0,1]\rightarrow {\mathbb {R}}^2\) with \(i\in \{1,\ldots ,N\}\), that meet at one junction of order N at \(x=0\) and have the endpoints at \(x=1\) fixed in \({\mathbb {R}}^2\). We denote by \({\mathcal {N}}_\varepsilon \) the network composed of the curves \(\gamma ^i_\varepsilon =\gamma ^i+\varepsilon \psi ^i\) with \(\psi ^i:[0,1]\rightarrow {\mathbb {R}}^2\) such that

$$\begin{aligned} \psi ^1(0)=\cdots =\psi ^N(0)\qquad \text {and}\qquad \psi ^i(1)=0\quad \text {for every } i\in \{1,\ldots ,N\}. \end{aligned}$$

Since the energy of a network is defined as the sum of the energies of its curves, it is reasonable to define the gradient of \({\mathcal {E}}_\mu \) as the sum of the gradients of the energies of the curves composing the network, which we have identified with the vectors \(2 (\partial ^\perp _s)^2 \varvec{\kappa }^i +|\varvec{\kappa }^i|^2 \varvec{\kappa }^i-\mu ^i \varvec{\kappa }^i\). Hence, a network is a critical point of the energy when the vectors \(2 (\partial ^\perp _s)^2 \varvec{\kappa }^i +|\varvec{\kappa }^i|^2 \varvec{\kappa }^i-\mu ^i \varvec{\kappa }^i\) vanish and the boundary terms in (2.5) are zero. Depending on the boundary constraints imposed on the network, i.e., its topology or possible fixed endpoints, we now aim to characterize the set of networks fulfilling boundary conditions that imply

Let us discuss the main possible cases of boundary conditions separately.

Curve with constraints at the endpoints

As we have mentioned before, if the network is composed of one curve which is not closed, then we fix its endpoints, namely \(\gamma (0)=P\in {\mathbb {R}}^2\) and \(\gamma (1)=Q\in {\mathbb {R}}^2\). As already shown in Section 2.1, to maintain the position of the endpoints we require \(\psi (0)=\psi (1)=0\), which automatically implies

in the computation of the first variation. On the other hand, we are free to choose \(\partial _s\psi \) as test fields in the first variation. Suppose for example that \(\partial _s\psi (0)=\nu \) (where \(\nu \) is the unit normal vector to the curve \(\gamma \)) and \(\partial _s\psi (1)=0\); then from (2.5) we obtain \(k(0)=0\), and so \(\varvec{\kappa }(0)=0\). Interchanging the roles of \(\partial _s\psi (0)\) and \(\partial _s\psi (1)\) we get \(k(1)=0\), that is \(\varvec{\kappa }(1)=0\).

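Hence we end up with the following set of conditions

$$\begin{aligned} {\left\{ \begin{array}{ll} \gamma (0)=P\\ \gamma (1)=Q\\ \varvec{\kappa }(0)=\varvec{\kappa }(1)=0, \end{array}\right. } \end{aligned}$$

known in the literature as natural or Navier boundary conditions.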

However, since the elastic energy functional is of second order, it is legitimate to also impose that the unit tangent vectors at the endpoints of the curve are fixed, namely that the curve is clamped. Hence we now have \(\gamma (0)=P, \gamma (1)=Q, \tau (0)=\tau _0,\tau (1)=\tau _1\) as constraints. This time the boundary conditions affect the class of test functions, requiring \(\partial _s\psi (0)=\partial _s\psi (1)=0\), which, together with \(\psi (0)=\psi (1)=0\), automatically sets the boundary terms in (2.5) to zero.

Networks

Without loss of generality we can consider networks with the structure described in Section 2.1. Indeed, boundary conditions for other possible topologies can be easily deduced from this case.

The possible boundary conditions at \(x=1\) are nothing but those we just described for a single curve with constraints at the endpoints. Thus we focus on the junction \(O=\gamma ^1(0)=\cdots =\gamma ^N(0)\). We can distinguish two subcases.

Neumann (so-called natural or Navier) boundary conditions

In this case we only require the network not to change its topology in a first variation. Choosing first \(\psi ^i(0)=0\) for every i, the remaining boundary term is

where the test functions \(\psi ^i\) appear differentiated. We can choose \(\partial _s\psi ^{1}(0)=\nu ^1(0)\) and \(\partial _s\psi ^i(0)=0\) for every \(i\in \{2,\ldots ,N\}\). This implies \(\varvec{\kappa }^1(0)=0\). Then, by the arbitrariness of the choice of i, we obtain

$$\begin{aligned} \varvec{\kappa }^i(0)=0 \end{aligned}$$(2.8)

for any \(i\in \{1, \ldots , N\}\).

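It remains to consider the last term of (2.5). Taking into account the condition (2.8) just obtained, by the arbitrariness of \(\psi ^1(0)=\cdots =\psi ^N(0)\) its vanishing reads

$$\begin{aligned} \sum _{i=1}^N\left( -2\partial ^\perp _s \varvec{\kappa }^i(0)+\mu ^i\tau ^i(0)\right) =0. \end{aligned}$$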
Dirichlet (so-called clamped) boundary conditions

As discussed above, also in the case of a network we can impose a condition on the tangents of the curves at their endpoints. As we saw in the clamped curve case, from the variational point of view this extra condition involves the unit tangent vectors, so an extra property of \(\partial _{s}\psi ^{i}\) is expected.

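At the junction we require the following \((N-1)\) conditions:

$$\begin{aligned} \left\langle \tau ^1(0),\tau ^2(0)\right\rangle =c^{1,2},\ \ldots ,\ \left\langle \tau ^{N-1}(0),\tau ^N(0)\right\rangle =c^{N-1,N}, \end{aligned}$$

that is, the angles between tangent vectors are fixed. We need the variation \({\mathcal {N}}_{\varepsilon }\) to satisfy the same conditions,

$$\begin{aligned} \left\langle \tau ^i_\varepsilon (0),\tau ^{i+1}_\varepsilon (0)\right\rangle =c^{i,i+1}\quad \text {for every } i\in \{1,\ldots ,N-1\}, \end{aligned}$$

for any \(|\varepsilon |\) small enough. This means that for every \(i,j\in \{1,\ldots , N\}\) we need that

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}\varepsilon }\Big \vert _{\varepsilon =0}\left\langle \tau ^i_\varepsilon (0),\tau ^j_\varepsilon (0)\right\rangle =0, \end{aligned}$$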
that implies

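where we used the notation \(\left( \psi ^i_{s}\right) ^\perp :=\left\langle \partial _{s}\psi ^i,\nu ^i\right\rangle \). So we impose

$$\begin{aligned} \left( \psi ^1_{s}\right) ^\perp (0)=\cdots =\left( \psi ^N_{s}\right) ^\perp (0). \end{aligned}$$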
Then the first boundary term of (2.5) reduces to

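Hence we find the following boundary conditions:

$$\begin{aligned} {\left\{ \begin{array}{ll} \sum _{i=1}^N k^i(0)=0\\ \sum _{i=1}^N \left( -2\partial ^\perp _s \varvec{\kappa }^{i}(0)- |\varvec{\kappa }^{i}(0)|^2\tau ^{i}(0) +\mu ^{i}\tau ^{i}(0)\right) =0. \end{array}\right. } \end{aligned}$$

In the end, whenever the network is composed of N curves, we have a system of N quasilinear fourth order equations in the parametrizations of the curves, which are not coupled to each other, together with coupled boundary conditions.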

We now need to briefly introduce the Hölder spaces that will appear in the definition of the flow.

Let \(N\in {\mathbb {N}}\), consider a network \({\mathcal {N}}\) composed of N curves with endpoints of order one fixed in the plane and the curves that meet at junctions of different order \(m\in {\mathbb {N}}_{\ge 2}\). As we have already said each curve of \({\mathcal {N}}\) is parametrized by \(\gamma ^i:[0,1]\rightarrow {\mathbb {R}}^2\). Let \(\alpha \in (0,1)\). We denote \(\gamma :=(\gamma ^1,\ldots ,\gamma ^N)\in ({\mathbb {R}}^2)^N\) and

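We will make extensive use of parabolic Hölder spaces (see also [51, §11, §13]). For \(k\in \{0,1,2,3,4\}\), \(\alpha \in (0,1)\) the parabolic Hölder space

$$\begin{aligned} C^{\frac{k+\alpha }{4}, k+\alpha }\left( [0,T]\times [0,1]\right) \end{aligned}$$

is the space of all functions \(u:[0,T]\times [0,1]\rightarrow {\mathbb {R}}\) that have continuous derivatives \(\partial _t^i\partial _x^ju\), where \(i,j\in {\mathbb {N}}\) are such that \(4i+j\le k\), for which the norm

$$\begin{aligned} \left\Vert u\right\Vert _{C^{\frac{k+\alpha }{4}, k+\alpha }}:=\sum _{4i+j\le k}\left\Vert \partial _t^i\partial _x^ju\right\Vert _\infty +\sum _{4i+j=k}\left[ \partial _t^i\partial _x^ju\right] _{0,\alpha }+\sum _{0<k+\alpha -4i-j<4}\left[ \partial _t^i\partial _x^ju\right] _{\frac{k+\alpha -4i-j}{4},0} \end{aligned}$$

is finite. We recall that for a function \(u:[0,T]\times [0,1]\rightarrow {\mathbb {R}}\) and for \(\rho \in (0,1)\) the semi-norms \([ u]_{\rho ,0}\) and \([ u]_{0,\rho }\) are defined as

$$\begin{aligned} [ u]_{\rho ,0}:=\sup _{(t,x),(t',x),\,t\ne t'}\frac{\vert u(t,x)-u(t',x)\vert }{\vert t-t'\vert ^\rho } \end{aligned}$$

and

$$\begin{aligned} [ u]_{0,\rho }:=\sup _{(t,x),(t,y),\,x\ne y}\frac{\vert u(t,x)-u(t,y)\vert }{\vert x-y\vert ^\rho }. \end{aligned}$$

Moreover, the space \(C^{\frac{\alpha }{4},\alpha }\left( [0,T]\times [0,1]\right) \) coincides with the space

$$\begin{aligned} C^{\frac{\alpha }{4}}\left( [0,T];C^0([0,1])\right) \cap C^0\left( [0,T];C^{\alpha }([0,1])\right) \end{aligned}$$

with equivalent norms.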
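The weight \(4i+j\) in the definition reflects the parabolic scaling of a fourth order equation: one time derivative counts as four space derivatives. The small Python sketch below (a hypothetical helper, for illustration only) lists the derivatives \(\partial _t^i\partial _x^j u\) that are required to be continuous in \(C^{\frac{k+\alpha }{4},k+\alpha }\).

```python
def parabolic_derivatives(k):
    """Pairs (i, j) with 4*i + j <= k, i.e. the derivatives d_t^i d_x^j u that
    must be continuous in the parabolic Hölder space of order (k + alpha)/4
    in time and k + alpha in space."""
    return [(i, j) for i in range(k // 4 + 1) for j in range(k - 4 * i + 1)]

print(parabolic_derivatives(4))  # [(0, 0), (0, 1), (0, 2), (0, 3), (0, 4), (1, 0)]
```

For \(k=4\), the space used for solutions of the flow, the only admissible time derivative is \(\partial _t u\) itself, matched by four space derivatives.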

We also define the spaces \(C^{\frac{k+\alpha }{4}, k+\alpha }([0,T]\times \{0,1\}, {\mathbb {R}}^m)\) to be \(C^{\frac{k+\alpha }{4}}([0,T], {\mathbb {R}}^{2m})\) via the isomorphism \(f\mapsto (f(t,0),f(t,1))^t\).

Definition 2.3

(Elastic flow) Let \(N\in {\mathbb {N}}\) and let \({\mathcal {N}}_0\) be an initial network composed of N curves parametrized by \(\gamma _0=(\gamma _0^1,\ldots ,\gamma _0^N)\in \mathrm {I}_N\), (possibly) with endpoints of order one and (possibly) with curves that meet at junctions of different order \(m\in {\mathbb {N}}_{\ge 2}\). Then a time dependent family of networks \({\mathcal {N}}(t)_{t\in [0,T]}\) is a solution to the elastic flow in the time interval [0, T] with \(T>0\) if there exists a parametrization

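with \(\gamma ^i\) regular, and such that for every \(t\in [0,T], x\in [0,1]\) and \(i\in \{1,\ldots ,N\}\) the system

$$\begin{aligned} {\left\{ \begin{array}{ll} (\partial _t\gamma ^i)^\perp =-2 (\partial ^\perp _s)^2 \varvec{\kappa }^i -|\varvec{\kappa }^i|^2 \varvec{\kappa }^i +\mu ^i \varvec{\kappa }^i\\ \gamma ^i(0,x)=\gamma ^i_0(x) \end{array}\right. } \end{aligned}$$(2.10)

is satisfied. Moreover, the system is coupled with suitable boundary conditions, corresponding to the possible cases discussed in the formulation of the first variation, as follows.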

-

If \(N=1\) and the curve \(\gamma _0\) is closed we require \(\gamma (t,x)\) to be closed and we impose periodic boundary conditions.

-

If \(N=1\) and the curve \(\gamma _0\) is not closed with \(\gamma _0(0)=P\in {\mathbb {R}}^2\), \(\gamma _0(1)=Q\in {\mathbb {R}}^2\) and we want to impose natural boundary conditions we require

$$\begin{aligned} {\left\{ \begin{array}{ll} \gamma (t,0)=P\\ \gamma (t,1)=Q\\ \varvec{\kappa }(t,0)=\varvec{\kappa }(t,1)=0. \end{array}\right. } \end{aligned}$$(2.11)

-

If \(N=1\) and the curve \(\gamma _0\) is not closed with \(\gamma _0(0)=P\in {\mathbb {R}}^2\), \(\gamma _0(1)=Q\in {\mathbb {R}}^2\) and we want to impose clamped boundary conditions, we require

$$\begin{aligned} {\left\{ \begin{array}{ll} \gamma (t,0)=P\\ \gamma (t,1)=Q\\ \tau (t,0)=\tau _0\\ \tau (t,1)=\tau _1. \end{array}\right. } \end{aligned}$$(2.12)

-

If N is arbitrary and \({\mathcal {N}}_0\) has one multipoint

$$\begin{aligned} \gamma _0^{i_1}(y_1)=\cdots =\gamma _0^{i_m}(y_m), \end{aligned}$$

with \((i_1,y_1),\ldots ,(i_m,y_m)\in \{1,\ldots ,N\}\times \{0,1\}\), and we want to impose natural boundary conditions, for every \(j\in \{1,\ldots ,m\}\) we require

$$\begin{aligned} {\left\{ \begin{array}{ll} \kappa ^{i_j}(t,y_j)=0\\ \sum _{j=1}^{m} \left( -2\partial ^\perp _s \varvec{\kappa }^{i_j}+\mu ^{i_j}\tau ^{i_j}\right) (t,y_j)=0. \end{array}\right. } \end{aligned}$$(2.13)

-

If N is arbitrary and \({\mathcal {N}}_0\) has one multipoint

$$\begin{aligned} \gamma _0^{i_1}(y_1)=\cdots =\gamma _0^{i_m}(y_m), \end{aligned}$$

with \((i_1,y_1),\ldots ,(i_m,y_m)\in \{1,\ldots ,N\}\times \{0,1\}\), where we want to impose clamped boundary conditions, we require

$$\begin{aligned} {\left\{ \begin{array}{ll} \left\langle \tau ^{i_1}(y_1),\tau ^{i_2}(y_2)\right\rangle =c^{1,2}\\ \ldots \\ \left\langle \tau ^{i_{m-1}}(y_{m-1}),\tau ^{i_m}(y_m)\right\rangle =c^{m-1,m}\\ \sum _{j=1}^m k^{i_j}(y_j)=0\\ \sum _{j=1}^m \left( -2\partial ^\perp _s \varvec{\kappa }^{i_j}(y_j)- |\varvec{\kappa }^{i_j}(y_j)|^2\tau ^{i_j}(y_j) +\mu ^{i_j}\tau ^{i_j}(y_j)\right) =0. \end{array}\right. } \end{aligned}$$(2.14)

Clearly, in the case of a network with several junctions and endpoints of order one fixed in the plane, one has to impose different boundary conditions (chosen among (2.11), (2.12), (2.13) and (2.14)) at each junction and endpoint.
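As a simple example, consider a Triod whose three curves are straight segments (so that \(\varvec{\kappa }^i\equiv 0\)), parametrized so that the triple junction corresponds to \(x=0\), with natural boundary conditions (2.13) at the junction and equal weights \(\mu ^1=\mu ^2=\mu ^3>0\). The curvature conditions hold trivially, and the third order condition reduces to \(\tau ^1(0)+\tau ^2(0)+\tau ^3(0)=0\) for the three unit vectors \(\tau ^i(0)\). A short computation recovers the familiar configuration:

```latex
\begin{aligned}
|\tau^1(0)+\tau^2(0)|^2 = |{-\tau^3(0)}|^2
&\;\Longrightarrow\; 2+2\left\langle \tau^1(0),\tau^2(0)\right\rangle = 1\\
&\;\Longrightarrow\; \left\langle \tau^1(0),\tau^2(0)\right\rangle = -\tfrac{1}{2},
\end{aligned}
```

and in the same way for the other two pairs, so the three segments meet pairwise at angles of 120 degrees.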

We give a name to the boundary conditions appearing in the definition of the flow. When there is a multipoint

$$\begin{aligned} \gamma ^{i_1}(t,y_1)=\cdots =\gamma ^{i_m}(t,y_m), \end{aligned}$$

with \((i_1,y_1),\ldots ,(i_m,y_m)\in \{1,\ldots ,N\}\times \{0,1\}\), we briefly refer to:

-

\(\gamma ^{i_1}(t,y_1)=\cdots =\gamma ^{i_m}(t,y_m)\) as concurrency condition;

-

\(\left\langle \tau ^{i_1}(y_1),\tau ^{i_2}(y_2)\right\rangle =c^{1,2}, \ldots , \left\langle \tau ^{i_{m-1}}(y_{m-1}),\tau ^{i_m}(y_m)\right\rangle =c^{m-1,m}\) as angle conditions;

-

either \(k^{i_j}(t,y_j)=0\) for every \(j\in \{1,\ldots ,m\}\) or \(\sum _{j=1}^m k^{i_j}(t,y_j)=0\) as curvature conditions;

-

\(\sum _{j=1}^m \left( -2\partial ^\perp _s \varvec{\kappa }^{i_j}(y_j)- |\varvec{\kappa }^{i_j}(y_j)|^2\tau ^{i_j}(y_j) +\mu ^{i_j}\tau ^{i_j}(y_j)\right) =0\) as third order condition.

When we have an endpoint of order one we refer to the condition involving the tangent vector as angle condition and the curvature as curvature condition.

Remark 2.4

In system (2.10) only the normal component of the velocity is prescribed. This does not mean that the tangential velocity is necessarily zero. We can equivalently write the motion equations as

$$\begin{aligned} \partial _t\gamma ^i=V^i\nu ^i+T^i\tau ^i, \end{aligned}$$

where \(V^i=-2\partial _s^2k^i-(k^i)^3+\mu ^ik^i\) and \(T^i\) are some (at least continuous) functions. In the case of a single closed curve or a single curve with fixed endpoints we can impose \(T\equiv 0\) (see Section 2.4).
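As a simple illustration of the normal velocity, consider a circle of radius R(t) centered at the origin and oriented counterclockwise, and assume (as an ansatz, consistent with the equation since \(k=1/R\) is constant along the curve, hence so is the normal velocity) that it remains such a circle with \(T\equiv 0\). Since \(\nu \) points inward, \(\partial _t\gamma =V\nu \) becomes the ODE \(R'=R^{-3}-\mu R^{-1}\), whose only positive equilibrium is \(R=1/\sqrt{\mu }\), and along the evolution \(\frac{\mathrm {d}}{\mathrm {d}t}{\mathcal {E}}_\mu =-\frac{2\pi }{R}\left( \mu -R^{-2}\right) ^2\le 0\). The Python sketch below integrates this ODE; the classical Runge–Kutta scheme, the step size and the helper names are our own illustrative choices.

```python
import math

def circle_radius_under_flow(R0, mu, T=10.0, dt=1e-3):
    """Radius of a circle evolving by the elastic flow, R' = R**-3 - mu/R.

    Integrated with the classical fourth-order Runge-Kutta method; returns
    the list of radii at times 0, dt, 2*dt, ..., T.
    """
    def rhs(R):
        return R ** -3 - mu / R

    radii = [R0]
    R = R0
    for _ in range(int(round(T / dt))):
        k1 = rhs(R)
        k2 = rhs(R + 0.5 * dt * k1)
        k3 = rhs(R + 0.5 * dt * k2)
        k4 = rhs(R + dt * k3)
        R += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        radii.append(R)
    return radii

def circle_energy(R, mu):
    """E_mu of a circle of radius R: bending 2*pi/R plus mu times length 2*pi*R."""
    return 2 * math.pi / R + 2 * math.pi * mu * R

radii = circle_radius_under_flow(R0=0.5, mu=1.0)
print(radii[-1])  # approx. 1.0 = 1/sqrt(mu)
```

Small circles expand and large circles shrink, both converging to the radius that minimizes \(2\pi /R+2\pi \mu R\).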

Definition 2.5

(Admissible initial network) A network \({\mathcal {N}}_0\) of N regular curves parametrized by \(\gamma =(\gamma ^1, \ldots ,\gamma ^N)\), \(\gamma ^i:[0,1]\rightarrow {\mathbb {R}}^2\) with \(i\in \{1, \ldots , N\}\), possibly with \(\ell \) endpoints of order one \(\{\gamma ^j(y_j)\}\) for some \((j,y_j)\in \{1, \ldots , N\}\times \{0,1\}\), and possibly with curves that meet at k different junctions \(\{O^p\}\) of order \(m\in {\mathbb {N}}_{\ge 2}\) at \(O^p=\gamma ^{p_1}(y_1)=\cdots =\gamma ^{p_m}(y_m)\) for some \((p_i,y_i)\in \{1, \ldots , N\}\times \{0,1\}, p\in \{1, \ldots ,k\}\), forming angles \(\alpha ^{p_i,p_{i+1}}\) between \(\nu ^{p_i}\) and \(\nu ^{p_{i+1}}\), is an admissible initial network if

-

(i)

the parametrization \(\gamma \) belongs to \(\mathrm {I}_N\);

-

(ii)

\({\mathcal {N}}_0\) satisfies all the boundary conditions imposed in the system: concurrency, angle, curvature and third order conditions;

-

(iii)

at each endpoint \(\gamma ^j(y_j)\) of order one it holds

$$\begin{aligned} 2\partial _s^2 k^j(y_j)+(k^j)^3(y_j)-\mu ^j k^j(y_j)=0; \end{aligned}$$

-

(iv)

the initial datum fulfills the non–degeneracy condition: at each junction

$$\begin{aligned} \mathrm {span}\{\nu ^{p_1}, \ldots ,\nu ^{p_m}\}={\mathbb {R}}^2; \end{aligned}$$

-

(v)

at each junction \(\gamma ^{p_1}(y_1)=\ldots =\gamma ^{p_m}(y_m)\) where at least three curves concur, consider two consecutive unit normal vectors \(\nu ^{p_i}(y_i)\) and \(\nu ^{p_k}(y_k)\) such that \(\mathrm {span}\{\nu ^{p_i}(y_i),\nu ^{p_k}(y_k)\}={{{\mathbb {R}}}}^2\). Then for every \(j\in \{1,\ldots ,m\}\), \(j\ne i\), \(j\ne k\) we require

$$\begin{aligned} \sin \theta ^i V^i(y_i)+\sin \theta ^k V^k(y_k) +\sin \theta ^j V^j(y_j)=0, \end{aligned}$$where \(\theta ^i\) is the angle between \(\nu ^{p_k}(y_k)\) and \(\nu ^{p_j}(y_j)\), \(\theta ^k\) between \(\nu ^{p_j}(y_j)\) and \(\nu ^{p_i}(y_i)\) and \(\theta ^{j}\) between \(\nu ^{p_i}(y_i)\) and \(\nu ^{p_k}(y_k)\).

Remark 2.6

The conditions (ii)–(iii)–(v) on the initial network are the so-called compatibility conditions. Together with the non-degeneracy condition, these conditions concern the boundary of the network, and so they are not required in the case of one single closed curve.

Remark 2.7

We refer to the conditions (iii) and (v) as fourth order compatibility conditions. We explain here how one derives condition (v) in the case of a junction \(\gamma ^1(0)=\ldots =\gamma ^m(0)\). Differentiating in time the concurrency condition we get \(\partial _t\gamma ^1(0) =\cdots =\partial _t\gamma ^m(0)\), or, in terms of the normal and tangential velocities \(V^1(0)\nu ^1(0)+T^1(0)\tau ^1(0)=\cdots =V^m(0)\nu ^m(0)+T^m(0)\tau ^m(0)\).

Without loss of generality we suppose that the concurring curves are labeled in a counterclockwise sense and that \(\mathrm {span}\{\nu ^1(0),\nu ^2(0)\}={\mathbb {R}}^2\). Then for every \(j\in \{3,\ldots ,m\}\) we have

where \(\theta ^1\) is the angle between \(\nu ^2(0)\) and \(\nu ^j(0)\), \(\theta ^2\) between \(\nu ^j(0)\) and \(\nu ^1(0)\) and \(\theta ^j\) between \(\nu ^1(0)\) and \(\nu ^2(0)\). Then

Hence for every \(j\in \{3,\ldots ,m\}\) we obtain \(\sin \theta ^1V^1(0)+\sin \theta ^2 V^2(0) +\sin \theta ^j V^j(0)=0\).
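The identity can also be read off directly from the geometry. All the curves share the velocity vector \(P=\partial _t\gamma ^i(0)\) at the junction, so \(V^i(0)=\langle P,\nu ^i(0)\rangle \) whatever the tangential terms are, and any three unit vectors in the plane satisfy \(\sin \theta ^1\nu ^1+\sin \theta ^2\nu ^2+\sin \theta ^j\nu ^j=0\) when the angles are taken as oriented (counterclockwise) angles. The Python sketch below checks this numerically; the random angles, the vector P and the helper names are illustrative assumptions, not part of the original argument.

```python
import math
import random


def unit(phi):
    """Unit vector forming the oriented angle phi with the x-axis."""
    return (math.cos(phi), math.sin(phi))


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def junction_residual(phi1, phi2, phij, P):
    """sin(th1) V1 + sin(th2) V2 + sin(thj) Vj for normals at angles phi1, phi2, phij.

    V^i = <P, nu^i> is the normal velocity induced by the common junction
    velocity P; th1, th2, thj are the oriented angles nu^2 -> nu^j,
    nu^j -> nu^1 and nu^1 -> nu^2.
    """
    th1, th2, thj = phij - phi2, phi1 - phij, phi2 - phi1
    V1, V2, Vj = (dot(P, unit(p)) for p in (phi1, phi2, phij))
    return math.sin(th1) * V1 + math.sin(th2) * V2 + math.sin(thj) * Vj


random.seed(0)
worst = 0.0
for _ in range(1000):
    phis = [random.uniform(0.0, 2.0 * math.pi) for _ in range(3)]
    P = (random.uniform(-1.0, 1.0), random.uniform(-1.0, 1.0))
    worst = max(worst, abs(junction_residual(*phis, P)))
print(f"largest residual over 1000 random junctions: {worst:.1e}")
```

The residual stays at round-off level, which reflects the fact that the tangential components \(T^i\) never enter the identity.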

Remark 2.8

To prove existence of solutions of class \(C^{\frac{4+\alpha }{4}, 4+\alpha }\) to the elastic flow of networks it is necessary to require the fourth order compatibility conditions for the initial datum. These conditions may seem unnatural, because they do not appear among the boundary conditions imposed in the system. It is actually possible to avoid them by defining the elastic flow of networks in a Sobolev setting. The price to pay is that in such a case a solution will be slightly less regular (see [22, 38] for details). Conversely, if we want a solution that is smooth up to \(t=0\), many more conditions must be imposed. These properties, the compatibility conditions of any order, are derived by repeatedly differentiating in time the boundary conditions and using the motion equation to substitute time derivatives with space derivatives (see [14, 15]).

2.4 Invariance Under Reparametrization

It is very important to remark on the consequences of the invariance under reparametrization of the energy functional for the resulting gradient flow. These effects actually occur whenever the starting energy is geometric, i.e., invariant under reparametrization. To be more precise, suppose that the time dependent family of closed curves parametrized by \(\gamma :[0,T]\times \mathrm {S}^1\rightarrow {\mathbb {R}}^2\) is a smooth solution to the elastic flow

and that the driving velocity \(\partial _t\gamma \) is normal along \(\gamma \). If \(\chi :[0,T]\times \mathrm {S}^1\rightarrow \mathrm {S}^1\) with \(\chi (t,0)=0\) and \(\chi (t,1)=1\) is a smooth one-parameter family of diffeomorphisms and \(\sigma (t,x){:}{=}\gamma (t,\chi (t,x))\), then it is immediate to check that \(\sigma \) solves

and W can be computed explicitly in terms of \(\chi \) and \(\gamma \). More importantly, one has that \(V_\sigma (t,x)\nu _\sigma (t,x) = V_\gamma (t,\chi (t,x))\nu _\gamma (t,\chi (t,x))\). Since \(W(t,x)\tau _\sigma (t,x)\) is a tangential term, \(\sigma \) itself is a solution to the elastic flow. Indeed its normal driving velocity \(\partial _t^\perp \sigma \) is the one defining the elastic flow on \(\sigma \). This is the reason why the definition of the elastic flow is given in terms of the normal velocity of the evolution only.

In complete analogy, if \(\beta :[0,T)\times \mathrm {S}^1 \rightarrow {\mathbb {R}}^2\) is smooth and solves

where \(\chi _0: \mathrm {S}^1\rightarrow \mathrm {S}^1\) is a diffeomorphism, then letting \(\psi :[0,T]\times \mathrm {S}^1\rightarrow \mathrm {S}^1\) be the smooth solution of

it immediately follows that \(\gamma (t,x){:}{=}\beta (t,\psi (t,x))\) solves (2.15).
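The invariance can be tested numerically on a geometric quantity such as the scalar curvature \(k=\det (\partial _x\gamma ,\partial _x^2\gamma )/|\partial _x\gamma |^3\) (signed with respect to the normal obtained by rotating \(\tau \) counterclockwise, a convention assumed here): if \(\sigma (x)=\gamma (\chi (x))\), then \(k_\sigma (x)=k_\gamma (\chi (x))\), while the parametrization-dependent speed \(|\partial _x\sigma |\) changes. The sketch below uses an ellipse and the diffeomorphism \(\chi (x)=x+\frac{0.1}{2\pi }\sin (2\pi x)\) of \(\mathrm {S}^1={\mathbb {R}}/{\mathbb {Z}}\); both are illustrative choices, and derivatives are approximated by central finite differences.

```python
import math


def gamma(x):
    """Ellipse with semi-axes 2 and 1, parametrized on R/Z."""
    return (2.0 * math.cos(2 * math.pi * x), math.sin(2 * math.pi * x))


def chi(x):
    """Diffeomorphism of R/Z with chi(0) = 0, chi(1) = 1 and chi' >= 0.9 > 0."""
    return x + 0.1 / (2 * math.pi) * math.sin(2 * math.pi * x)


def sigma(x):
    """The reparametrized curve sigma = gamma o chi."""
    return gamma(chi(x))


def derivatives(curve, x, h=1e-4):
    """Central finite-difference approximations of curve'(x) and curve''(x)."""
    p, c, m = curve(x + h), curve(x), curve(x - h)
    d1 = tuple((a - b) / (2 * h) for a, b in zip(p, m))
    d2 = tuple((a - 2 * q + b) / h**2 for a, q, b in zip(p, c, m))
    return d1, d2


def curvature(curve, x):
    """Signed curvature det(curve', curve'') / |curve'|^3 (parametrization free)."""
    (x1, y1), (x2, y2) = derivatives(curve, x)
    return (x1 * y2 - y1 * x2) / math.hypot(x1, y1) ** 3


def speed(curve, x):
    """|curve'(x)|, which does depend on the parametrization."""
    (x1, y1), _ = derivatives(curve, x)
    return math.hypot(x1, y1)


for x in (0.0, 0.1, 0.37, 0.8):
    print(f"x={x:.2f}  k_sigma={curvature(sigma, x):.6f}  "
          f"k_gamma(chi(x))={curvature(gamma, chi(x)):.6f}  "
          f"|sigma'|={speed(sigma, x):.4f}  |gamma'(chi(x))|={speed(gamma, chi(x)):.4f}")
```

The two curvature columns agree up to discretization error, whereas the two speed columns differ by the factor \(\partial _x\chi \).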

Something similar also holds in the general case of networks. First of all, it is easy to check that all possible boundary conditions are invariant under reparametrization (both at the multiple junctions and at the endpoints of order one). Concerning the velocity, we cannot impose the tangential velocity to be zero as in (2.15), but it remains true that if a time dependent family of networks parametrized by \(\gamma =(\gamma ^1,\ldots ,\gamma ^N)\) with \(\gamma ^i:[0,T]\times [0,1]\rightarrow {\mathbb {R}}^2\) is a solution to the elastic flow, then \(\sigma =(\sigma ^1,\ldots ,\sigma ^N)\) defined by \(\sigma ^i(t,x)=\gamma ^i(t, \chi ^i(t,x))\), with \(\chi ^i:[0,T]\times [0,1]\rightarrow [0,1]\) a time dependent family of diffeomorphisms such that \(\chi ^i(t,0)=0\) and \(\chi ^i(t,1)=1\) (together with suitable conditions on \(\partial _x\chi ^i(t,0)\), \(\partial _{x}^2\chi ^i(t,0)\) and so on), is still a solution to the elastic flow of networks. Indeed the velocities of \(\gamma ^i\) and \(\sigma ^i\) differ only by a tangential component.

Remark 2.9

We want to stress that at the junctions the tangential velocity is determined by the normal velocity.

Consider a junction of order m

$$\begin{aligned} \gamma ^1(t,0)=\cdots =\gamma ^m(t,0). \end{aligned}$$

Differentiating in time yields \(\partial _t\gamma ^1(t,0)=\ldots =\partial _t\gamma ^m(t,0)\) that, in terms of the normal and tangential motion V and T reads as

where \(j\in \{1, \ldots , m\}\) with \(m+1:=1\) and the argument (t, 0) is omitted from now on. Testing these identities with the unit tangent vectors \(\tau ^j\) leads to the system:

We call M the \(m\times m\)-matrix of the coefficients and \(R_1,\ldots , R_m\) its rows.

It is easy to see that

which is different from zero as long as the non-degeneracy condition is satisfied. Then the system has a unique solution, and so each \(T^i(t)\) can be expressed as a linear combination of \(V^1(t),\ldots , V^m(t)\).
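A minimal numerical sketch of this remark follows. Instead of the \(m\times m\) matrix M of the text it uses a smaller, equivalent route, under the assumption that all the \(V^i\) come from one common junction velocity P: if two normals, say \(\nu ^i\) and \(\nu ^j\), span \({\mathbb {R}}^2\), then P is the unique solution of \(\langle P,\nu ^i\rangle =V^i\), \(\langle P,\nu ^j\rangle =V^j\), and every \(T^l=\langle P,\tau ^l\rangle \) is a linear combination of normal velocities. The helper names and the convention \(\tau =(\cos \phi ,\sin \phi )\), \(\nu =(-\sin \phi ,\cos \phi )\) are hypothetical choices for illustration.

```python
import itertools
import math


def frame(phi):
    """Unit tangent tau = (cos phi, sin phi) and unit normal nu = tau rotated by +pi/2."""
    tau = (math.cos(phi), math.sin(phi))
    nu = (-math.sin(phi), math.cos(phi))
    return tau, nu


def det2(u, v):
    """Determinant of the 2x2 matrix with columns u and v."""
    return u[0] * v[1] - u[1] * v[0]


def tangential_from_normal(phis, V):
    """Recover T^1..T^m from V^1..V^m at a junction (hypothetical helper).

    phis: tangent angles of the m concurring curves at the junction.
    V:    their normal velocities, assumed to come from one common velocity P.
    """
    normals = [frame(p)[1] for p in phis]
    # Choose the pair of normals that is "most" linearly independent.
    i, j = max(itertools.combinations(range(len(phis)), 2),
               key=lambda ij: abs(det2(normals[ij[0]], normals[ij[1]])))
    det = det2(normals[i], normals[j])
    if abs(det) < 1e-12:
        raise ValueError("degenerate junction: the normals do not span R^2")
    (a, b), (c, d) = normals[i], normals[j]
    # Cramer's rule for the 2x2 system <P, nu^i> = V^i, <P, nu^j> = V^j.
    P = ((V[i] * d - b * V[j]) / det, (a * V[j] - c * V[i]) / det)
    return [P[0] * tau[0] + P[1] * tau[1] for tau, _ in map(frame, phis)]


phis = [0.0, 2 * math.pi / 3, 4 * math.pi / 3]   # a symmetric triple junction
P_true = (0.4, -1.1)                             # common junction velocity
V = [P_true[0] * nu[0] + P_true[1] * nu[1] for _, nu in map(frame, phis)]
print(tangential_from_normal(phis, V))
```

When all normals are parallel, the 2×2 system is singular and the function refuses to answer, mirroring the role of the non-degeneracy condition.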

Remark 2.10

The previous observations clarify that the only meaningful notion of uniqueness for a geometric flow like the elastic one is uniqueness up to reparametrization.

We can actually take advantage of the invariance by reparametrization of the problem to reduce system (2.10) to a non-degenerate system of quasilinear PDEs. Consider the flow of one curve \(\gamma \). As we said before, the normal velocity is a geometric quantity, namely \(\partial _t\gamma ^\perp = V\nu =-2\partial _s^2 k\nu -k^{3}\nu +\mu k\nu \). Computing this quantity in terms of the parametrization \(\gamma \) we get

We define

We can insert this choice of the tangential component of the velocity in the motion equation, which becomes

Consider now the boundary conditions: up to reparametrization, the clamped condition \(\tau (t,0)=\tau _0\) can be reformulated as \(\partial _x\gamma (t,0)=\tau _0\) and the curvature condition \(k=\varvec{\kappa }=0\) as \(\partial _x^2 \gamma (t,0)=0\). We can then extend this discussion to the flow of general networks, in order to define the so-called special flow.

Definition 2.11

(Admissible initial parametrization) We say that \(\varphi _0=(\varphi _0^1, \ldots , \varphi _0^N)\) is an admissible parametrization for the special flow if

-

the functions \(\varphi _0^i\) are of class \(C^{4+\alpha }([0,1];{\mathbb {R}}^2)\);

-

\(\varphi _0=(\varphi _0^1, \ldots , \varphi _0^N)\) satisfies all the boundary conditions imposed in the system;

-

at each endpoint of order one it holds \(V^i=0\) and \({\overline{T}}^i=0\) for any i;

-

at each junction it holds

$$\begin{aligned} V^i\nu ^i+{\overline{T}}^i\tau ^i=V^j\nu ^j+{\overline{T}}^j\tau ^j \end{aligned}$$for any i, j;

-

at each junction the non-degeneracy condition is satisfied;

where \({\overline{T}}^i\) is defined as in (2.17) for every i.

Definition 2.12

(Special flow) Let \(N\in {\mathbb {N}}\) and let \(\varphi _0=(\varphi _0^1,\ldots ,\varphi _0^N)\) be an admissible initial parametrization in the sense of Definition 2.11, (possibly) with endpoints of order one and (possibly) with junctions of different orders \(m\in {\mathbb {N}}_{\ge 2}\). Then a time dependent family of parametrizations \(\varphi _{t\in [0,T]}\), \(\varphi =(\varphi ^1,\ldots ,\varphi ^N)\) is a solution to the special flow if and only if for every \(i\in \{1,\ldots ,N\}\) the functions \(\varphi ^i\) are of class \(C^{\frac{4+\alpha }{4}, 4+\alpha }([0,T]\times [0,1];{\mathbb {R}}^2)\), for every \((t,x)\in [0,T]\times [0,1]\) it holds \(\partial _x\varphi ^i(t,x)\ne 0\), and the system

is satisfied, where \({\overline{T}}^i\) is defined as in (2.17) for any i. Moreover the following boundary conditions are imposed:

-

if \(N=1\) and \(\varphi _0\) is a closed curve, then we impose periodic boundary conditions;

-

if \(N=1\) and \(\varphi _0(0)=P,\varphi _0(1)=Q\), we can require either

$$\begin{aligned} {\left\{ \begin{array}{ll} \varphi ^1(t,0)=P\\ \varphi ^1(t,1)=Q\\ \partial _x^2\varphi ^1(t,0)=\partial _x^2\varphi ^1(t,1)=0, \end{array}\right. } \end{aligned}$$or

$$\begin{aligned} {\left\{ \begin{array}{ll} \varphi ^1(t,0)=P\\ \varphi ^1(t,1)=Q\\ \partial _x\varphi ^1(t,0)=\tau _0\\ \partial _x\varphi ^1(t,1)=\tau _1. \end{array}\right. } \end{aligned}$$

-

if N is arbitrary and \({\mathcal {N}}_0\) has one multipoint

$$\begin{aligned} \gamma _0^{i_1}(y_1)=\cdots =\gamma _0^{i_m}(y_m), \end{aligned}$$with \((i_1,y_1),\ldots ,(i_m,y_m)\in \{1,\ldots ,N\}\times \{0,1\}\) we can impose either

$$\begin{aligned} {\left\{ \begin{array}{ll} \partial _x^2\varphi ^{i_j}(t,y_j)=0\quad \text {for every}\, j\in \{1,\ldots ,m\}\\ \sum _{j=1}^{m} \left( -2\partial ^\perp _s \varvec{\kappa }^{i_j}+\mu ^{i_j}\tau ^{i_j}\right) (t,y_j)=0, \end{array}\right. } \end{aligned}$$or

$$\begin{aligned} {\left\{ \begin{array}{ll} \left\langle \tau ^{i_1}(y_1),\tau ^{i_2}(y_2)\right\rangle =c^{1,2}\\ \ldots \\ \left\langle \tau ^{i_{m-1}}(y_{m-1}),\tau ^{i_m}(y_m)\right\rangle =c^{m-1,m}\\ \sum _{j=1}^m k^{i_j}=0\\ \left\langle \partial _x^2\varphi ^{i_j}(t,y_j),\partial _x\varphi ^{i_j}(t,y_j)\right\rangle =0\quad \text {for every}\, j\in \{1,\ldots ,m\}\\ \sum _{j=1}^m \left( -2\partial ^\perp _s \varvec{\kappa }^{i_j}- |\varvec{\kappa }^{i_j}(y_j)|^2\tau ^{i_j}(y_j) +\mu ^{i_j}\tau ^{i_j}(y_j)\right) =0. \end{array}\right. } \end{aligned}$$

Lemma 2.13

Let \(\varphi _0=(\varphi _0^1, \ldots ,\varphi _0^N)\) be an admissible initial parametrization and \(\varphi _{t\in [0,T]}\), \(\varphi =(\varphi ^1, \ldots ,\varphi ^N)\) be a solution to the special flow. Then \({\mathcal {N}}_t=\cup _{i=1}^N\varphi ^i(t,[0,1])\) is a solution of the elastic flow of networks with initial datum \({\mathcal {N}}_0:=\cup _{i=1}^N\varphi ^i_0([0,1])\).

Proof

We show that \({\mathcal {N}}_0\) is an admissible initial network. Conditions (i) and (iv) are clearly satisfied, and so is condition (iii), because at the endpoints of order one \(0=-V^i=2\partial _s^2k^i+(k^i)^3-\mu ^i k^i\). Condition (ii) is also easy to obtain: \(\partial _x^2\varphi (y)=0\) implies \(k(y)=0\), \(\partial _x\varphi (y)=\tau ^*\) implies \(\tau =\tau ^*\), and all the other conditions are already satisfied by the special flow. At each junction \(\gamma ^{1}(y_1)=\cdots =\gamma ^{m}(y_m)\) of order at least three we consider two consecutive unit normal vectors \(\nu ^{i}(y_i)\) and \(\nu ^{k}(y_k)\) such that \(\mathrm {span}\{\nu ^{i}(y_i),\nu ^{k}(y_k)\}={\mathbb {R}}^2\). For every \(j\in \{1,\ldots ,m\}\), \(j\ne i\), \(j\ne k\) we call \(\theta ^i\) the angle between \(\nu ^k(0)\) and \(\nu ^j(0)\), \(\theta ^k\) the angle between \(\nu ^j(0)\) and \(\nu ^i(0)\) and \(\theta ^j\) the angle between \(\nu ^i(0)\) and \(\nu ^k(0)\), and we recall that

By testing (2.19) by \(\sin \theta ^j\tau ^k\) and by \(\cos \theta ^j\nu ^k\) and summing, we get

If instead we test (2.19) by \(\cos \theta ^j\tau ^k\) and by \(\sin \theta ^j\nu ^k\) and subtract the second equality from the first one, we get

Similarly, by testing (2.20) by \(\cos \theta ^k\nu ^j\) and by \(\sin \theta ^k\tau ^j\) and subtracting the second identity from the first, we have

Finally we test (2.20) by \(\cos \theta ^k\tau ^j\) and by \(\sin \theta ^k\nu ^j\) and sum, obtaining

With the help of the identities (2.21), (2.22), (2.23) and (2.24) and interchanging the roles of i, j, k we can write

and so for every \(j\in \{1,\ldots ,m\}\), \(j\ne i\), \(j\ne k\) we have \(\sin \theta ^iV^i+ \sin \theta ^kV^k+\sin \theta ^jV^j=0\), as desired.

The solution \({\mathcal {N}}\) admits a parametrization \(\varphi \) with the required regularity. As we have seen for the initial datum, the boundary conditions in Definition 2.12 imply the boundary conditions required in Definition 2.3. By definition of solution of the special flow, the parametrizations \(\varphi =(\varphi ^1,\ldots ,\varphi ^N)\) solve \(\partial _t\varphi ^i=V^i\nu ^i+{\overline{T}}^i\tau ^i\). Then

and thus all the properties of solution to the elastic flow are satisfied. \(\square \)

Lemma 2.14

Suppose that a closed curve parametrized by

is a solution to the elastic flow with admissible initial datum \(\gamma _0\in C^5([0,1])\). Then a reparametrization of \(\gamma \) is a solution to the special flow.

Proof

The proof follows by arguing as in the discussion at the beginning of the section, recalling in particular that reparametrizations only affect the tangential velocity. \(\square \)

The above result can be generalized to flow of networks as stated below.

Lemma 2.15

Suppose that a network \({\mathcal {N}}_0\) of N regular curves parametrized by \(\gamma =(\gamma ^1, \ldots ,\gamma ^N)\) with \(\gamma ^i:[0,1]\rightarrow {\mathbb {R}}^2\), \(i\in \{1,\ldots ,N\}\), is an admissible initial network. Then there exist N smooth functions \(\theta ^i:[0,1]\rightarrow [0,1]\) such that the reparametrization \(\left( \gamma ^i\circ \theta ^i\right) \) is an admissible initial parametrization for the special flow.

For the proof see [23, Lemma 3.31]. Moreover by inspecting the proof of Theorem 3.32 in [23] we see that the following holds.

Proposition 2.16

Let \(T>0\). Let \({\mathcal {N}}_0\) be an admissible initial network of N curves parametrized by \(\gamma _0=(\gamma _0^1, \ldots ,\gamma _0^N)\) with \(\gamma _0^i:[0,1]\rightarrow {\mathbb {R}}^2\), \(i\in \{1,\ldots ,N\}\). Suppose that \(({\mathcal {N}}(t))_{t\in [0,T]}\) is a solution to the elastic flow in the time interval [0, T] with initial datum \({\mathcal {N}}_0\) and that it is parametrized by regular curves \(\gamma =(\gamma ^1, \ldots ,\gamma ^N)\) with \(\gamma ^i:[0,T]\times [0,1]\rightarrow {\mathbb {R}}^2\). Then there exist \({\widetilde{T}}\in (0,T]\) and a time dependent family of reparametrizations \(\psi :[0,{\widetilde{T}}]\times [0,1]\rightarrow [0,1]\) such that \(\varphi (t,x):=(\varphi ^1(t,x),\ldots ,\varphi ^N(t,x))\) with \(\varphi ^i(t,x):=\gamma ^i(t,\psi (t,x))\) is a solution to the special flow in \([0,{\widetilde{T}}]\).

Remark 2.17

In the case of a single open curve, reducing to the special flow is particularly advantageous. Indeed, one passes from the degenerate problem (2.10), coupled with either quasilinear or fully nonlinear boundary conditions, to a non-degenerate system of quasilinear PDEs with linear and affine boundary conditions.

2.5 Energy Monotonicity

Let us name \(V^i:=-2\partial _ s^2 k^{i}-(k^i)^2k^i+\mu ^i k^i\) the normal velocity of a curve \(\gamma ^i\) evolving by elastic flow and denote the tangential motion by \(T^i\):

$$\begin{aligned} \partial _t\gamma ^i=V^i\nu ^i+T^i\tau ^i. \end{aligned}$$

Definition 2.18

We denote by \({{\mathfrak {p}}}_\sigma ^h(k)\) a polynomial in \(k,\dots ,\partial _s^h k\) with constant coefficients in \({\mathbb {R}}\) such that every monomial it contains is of the form

$$\begin{aligned} \prod _{l=0}^h (\partial _s^lk)^{\beta _l}\quad \text {with}\quad \sum _{l=0}^h(l+1)\beta _l = \sigma , \end{aligned}$$

where \(\beta _l\in {\mathbb {N}}\) for \(l\in \{0,\ldots ,h\}\) and \(\beta _{l_0}\ge 1\) for at least one index \(l_0\).
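The bookkeeping behind \({\mathfrak {p}}_\sigma ^h(k)\) is purely combinatorial. In the sketch below (the encoding is our own illustrative choice) a monomial \(\prod _l(\partial _s^lk)^{\beta _l}\) is stored as a set of pairs \((l,\beta _l)\), its weight is \(\sum _l(l+1)\beta _l\), and \(\partial _s\) is applied by the product rule. Every resulting monomial has weight \(\sigma +1\), which is why \(\partial _s\) maps polynomials of type \({\mathfrak {p}}_\sigma ^h(k)\) to polynomials of type \({\mathfrak {p}}_{\sigma +1}^{h+1}(k)\); in the same spirit, removing one factor \(\partial _s^jk\) from a monomial lowers its weight by \(j+1\).

```python
from collections import Counter


def monomial(beta):
    """Encode prod_l (d_s^l k)^beta_l, given as {l: beta_l}, as a hashable key."""
    return frozenset((l, b) for l, b in beta.items() if b > 0)


def weight(mono):
    """The index sigma = sum_l (l + 1) * beta_l of a monomial."""
    return sum((l + 1) * b for l, b in mono)


def d_s(poly):
    """Differentiate {monomial: coefficient} in s with the product rule."""
    out = Counter()
    for mono, coeff in poly.items():
        beta = dict(mono)
        for l, b in beta.items():
            # (d_s^l k)^b  ->  b * (d_s^l k)^(b-1) * d_s^(l+1) k
            new = dict(beta)
            new[l] -= 1
            new[l + 1] = new.get(l + 1, 0) + 1
            out[monomial(new)] += coeff * b
    return {m: c for m, c in out.items() if c != 0}


# k^4 - 2 k k_ss + 3 (k_s)^2: every monomial has weight 4
p = {monomial({0: 4}): 1, monomial({0: 1, 2: 1}): -2, monomial({1: 2}): 3}
dp = d_s(p)
print(sorted(weight(m) for m in p), sorted(weight(m) for m in dp))  # -> [4, 4, 4] [5, 5, 5]
```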

We notice that

By (2.2) the following result holds.

Lemma 2.19

If \(\gamma \) satisfies (2.25), the commutation rule

holds. The measure \(\mathrm {d}s\) evolves as

Moreover the unit tangent vector, the unit normal vector and the j-th derivatives of the scalar curvature of a curve satisfy

With the help of the previous lemma it is now possible to compute the derivative in time of a general polynomial \({\mathfrak {p}}_{\sigma }^h(k)\). By definition, every monomial composing \({\mathfrak {p}}_{\sigma }^h(k)\) is of the form \({\mathfrak {m}}(k)=C \prod _{l=0}^h (\partial _s^lk)^{\beta _l}\) with \(\sum _{l=0}^h(l+1)\beta _l = \sigma \). Then for every fixed \(j\in \{0,\ldots ,h\}\) the monomial \({\mathfrak {n}}(k)=C\beta _{j}(\partial _s^{j}k)^{\beta _j-1} \prod _{l\ne j, l=0}^h (\partial _s^lk)^{\beta _l}\) can be written as \({\mathfrak {n}}(k)={\tilde{C}}\prod _{l=0}^h (\partial _s^lk)^{\alpha _l}\) with \(\sum _{l=0}^h(l+1)\alpha _l = \sigma -j-1\). Differentiating \({\mathfrak {m}}(k)\) in time we have

where we used the product rule (2.26) and the structure of the monomial \({\mathfrak {n}}(k)\). Summing up the contribution of each monomial composing \({\mathfrak {p}}_{\sigma }^h(k)\) we have

Proposition 2.20

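Let \({\mathcal {N}}_t\) be a time dependent family of smooth networks composed of N curves, possibly with junctions and fixed endpoints in the plane. Suppose that \({\mathcal {N}}_t\) is a solution of the elastic flow. Then

$$\begin{aligned} \frac{\mathrm {d}}{\mathrm {d}t}{\mathcal {E}}({\mathcal {N}}_t)=-\sum _{i=1}^N\int _{\gamma ^i}(V^i)^2\,\mathrm {d}s. \end{aligned}$$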

Proof

Using the evolution laws collected in Lemma 2.19, we get

Integrating by parts twice the term \(\int 2kV_{ss}\) we obtain

It remains to show that the contribution of the boundary term in (2.32) equals zero, whatever boundary condition we decide to impose at the endpoint among the ones listed in Definition 2.3. The case of the closed curve is trivial.

Let us start with the case of an endpoint \(\gamma ^j(y)\) (with \(y\in \{0,1\}, j\in \{1,\ldots ,N\}\)) subject to the Navier boundary condition, namely \(k^j(y)=0\). The point remains fixed, which implies \(V^j(y)=T^j(y)=0\). The term \(2k^j(y)\partial _s V^j(y)\) vanishes because \(k^j(y)=0\).

Suppose instead that the curve is clamped at \(\gamma ^j(y)\) with \(\tau ^j(y)=\tau ^*\). Then, using Lemma 2.19, \(0=\partial _t\tau ^j(y)=(\partial _s V^j(y)+T^j(y)k^j(y))\nu ^j(y)\). Hence

which, combined with \(V^j(y)=T^j(y)=0\), implies that the boundary terms in (2.32) vanish.

Consider now a junction of order m where natural boundary conditions have been imposed. Up to inverting the orientation of the parametrizations of the curves, we suppose that all the curves concur at the junction at \(x=0\). The curvature condition \(k^i(0)=0\) with \(i\in \{1,\ldots ,m\}\) gives

Differentiating in time the concurrency condition \(\gamma ^1(0)=\cdots =\gamma ^m(0)\) we obtain

which, combined with the third order condition \(0=\sum _{i=1}^m \left( 2\partial _sk^i(0)\nu ^i(0)-\mu ^i\tau ^i(0)\right) \), gives

hence the boundary terms vanish and we get the desired result.

To conclude, consider a junction of order m, where the curves concur at \(x=0\) and suppose that we have imposed there clamped boundary conditions. In this case using the concurrency condition differentiated in time and the third order condition we find

Differentiating in time the angle condition

we have

and hence \(\partial _s V^i(0)+T^i(0)k^i(0)=\partial _s V^{i+1}(0)+T^{i+1}(0)k^{i+1}(0)\). Repeating the previous computation for every \(i\in \{2,\ldots ,m-1\}\) we get

that together with the curvature condition \(\sum k^i=0\) at the junction gives

Summing this last equality with (2.33), we see that the boundary terms vanish in this case as well. \(\square \)
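For a circle of radius R everything is explicit, which gives a quick sanity check of the monotonicity identity \(\frac{\mathrm {d}}{\mathrm {d}t}{\mathcal {E}}=-\int V^2\,\mathrm {d}s\) behind Proposition 2.20. Taking \({\mathcal {E}}=\int k^2+\mu \,\mathrm {d}s=2\pi /R+2\pi \mu R\) and the inner unit normal (so that \(k=1/R\)), the normal velocity is \(V=-k^3+\mu k\) and the radius obeys \(\dot R=-V=R^{-3}-\mu R^{-1}\). The sketch integrates this ODE with an explicit Euler scheme (an illustrative choice of ours) and compares the discrete energy decay with \(-\int V^2\,\mathrm {d}s=-2\pi RV^2\); the radius also approaches the critical value \(1/\sqrt{\mu }\).

```python
import math


def energy(R, mu):
    """E = int (k^2 + mu) ds for a circle of radius R (k = 1/R, length 2 pi R)."""
    return 2 * math.pi / R + 2 * math.pi * mu * R


def normal_velocity(R, mu):
    """V = -2 k_ss - k^3 + mu k on a circle, where k_ss = 0 and k = 1/R."""
    k = 1.0 / R
    return -k**3 + mu * k


def evolve(R0, mu, dt=1e-4, steps=200_000):
    """Explicit Euler for dR/dt = -V (inner normal).

    Returns the list of radii and the largest gap between the discrete dE/dt
    and the predicted rate -int V^2 ds = -2 pi R V^2.
    """
    R, radii, worst = R0, [R0], 0.0
    for _ in range(steps):
        V = normal_velocity(R, mu)
        R_new = R - dt * V
        dE_dt = (energy(R_new, mu) - energy(R, mu)) / dt
        worst = max(worst, abs(dE_dt + 2 * math.pi * R * V**2))
        R = R_new
        radii.append(R)
    return radii, worst


radii, worst = evolve(R0=2.0, mu=1.0)
print(f"final radius {radii[-1]:.6f} (critical radius 1/sqrt(mu) = 1)")
print(f"max |dE/dt + int V^2 ds| along the run: {worst:.1e}")
```

The gap is of order dt, as expected from a first-order scheme, and the energy decreases at every step.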

3 Short Time Existence

We prove a short time existence result for the elastic flow of closed curves. We then explain how it can be generalized to other situations and what the main difficulties are when one passes from one curve to networks.

3.1 Short Time Existence of the Special Flow

First of all we aim to prove the existence of a solution to the special flow. Omitting the dependence on (t, x), we can write the motion equation of a curve subject to (2.18) as

We linearize the highest order terms of the previous equation around the initial parametrization \(\varphi ^0\) obtaining

Definition 3.1

Given \(\varphi ^0:{\mathbb {S}}^1\rightarrow {\mathbb {R}}^2\) an admissible initial parametrization for (2.18), the linearized system about \(\varphi ^0\) associated to the special flow of a closed curve is given by

Here \((f,\psi )\) is a generic couple to be specified later on.

Let \(\alpha \in (0,1)\) be fixed. Whenever a curve \(\gamma \) is regular, there exists a constant \(c>0\) such that \(\inf _{x\in {\mathbb {S}}^1}\vert \partial _x\gamma \vert \ge c\). From now on we fix an admissible initial parametrization \(\varphi ^0\) with

Then for every \(j\in {\mathbb {N}}\) there holds

Definition 3.2

For \(T>0\) we consider the linear spaces

endowed with the norms

and we define the operator \({\mathcal {L}}_{T}:{\mathbb {E}}_T\rightarrow {\mathbb {F}}_T\) by

Remark 3.3

For every \(T>0\) the operator \({\mathcal {L}}_{T}:{\mathbb {E}}_T\rightarrow {\mathbb {F}}_T\) is well-defined, linear and continuous.

Theorem 3.4

Let \(\alpha \in (0,1)\), \((f,\psi )\in {\mathbb {F}}_T\). Then for every \(T>0\) the system (3.2) has a unique solution \(\varphi \in {\mathbb {E}}_T\). Moreover, for all \(T>0\) there exists \(C(T)>0\) such that if \(\varphi \in {\mathbb {E}}_T\) is a solution, then

Proof

See for instance [33, Theorem 4.3.1] and [51, Theorem 4.9]. \(\square \)

From the above theorem we get the following consequence.

Corollary 3.5

The linear operator \({\mathcal {L}}_{T}:{\mathbb {E}}_T\rightarrow {\mathbb {F}}_T\) is a continuous isomorphism.

By the above corollary, we can denote by \({\mathcal {L}}^{-1}_T\) the inverse of \({\mathcal {L}}_T\).

So far we have considered a fixed \(T>0\) and derived (3.3), where the constant C depends on T. We now show that, once an interval of time \((0, {\widetilde{T}}]\) with \({\widetilde{T}}>0\) is chosen, for every \(T\in (0, {\widetilde{T}}]\) it is possible to estimate the norm of \({\mathcal {L}}^{-1}_T\) with a constant independent of T.

Lemma 3.6

For all \({\widetilde{T}}>0\) there exists a constant \(c({\widetilde{T}})\) such that

Proof

Fix \({\widetilde{T}}>0\). For all \(T\in (0,{\widetilde{T}}]\) and every \((f,\psi )\in {\mathbb {F}}_T\) we define the extension operator \(E(f,\psi ):=({\widetilde{E}}f,\psi )\) by

It is clear that \(E(f,\psi )\in {\mathbb {F}}_{{\widetilde{T}}}\) and that \(\Vert E \Vert _{{\mathcal {L}}({\mathbb {F}}_T,{\mathbb {F}}_{{\widetilde{T}}})}\le 1\).

Moreover \({\mathcal {L}}^{-1}_{{\widetilde{T}}}(E(f,\psi ))_{\vert [0,T]}={\mathcal {L}}^{-1}_T(f,\psi )\) by uniqueness and then

\(\square \)

Definition 3.7

We define the affine spaces

In the following we denote by \(\overline{B_M}\) the closed ball of radius M and center 0 in \({\mathbb {E}}_T\).

Lemma 3.8

Let \({\widetilde{T}}>0\), \(M>0\), \(c>0\) and \(\varphi ^0\) an admissible initial parametrization with \(\inf _{x\in {\mathbb {S}}^1}\vert \partial _x\varphi ^0\vert \ge c\). Then there exists \({\widehat{T}}={\widehat{T}}(c,M)\in (0,{\widetilde{T}}]\) such that for all \(T\in (0,{\widehat{T}}]\) every curve \(\varphi \in {\mathbb {E}}^0_T\cap B_M\) is regular with

Moreover for every \(j\in {\mathbb {N}}\)

Proof

We have

with \(\vert \partial _x\varphi (t,x)-\partial _x \varphi ^0(x)\vert \le \left[ \varphi \right] _{\beta ,0}t^\beta \le Mt^\beta \), where \(\beta =\frac{3}{4}+\frac{\alpha }{4}\). Taking \({\widehat{T}}\) sufficiently small and passing to the infimum, we get the first claim. As a consequence

Then for \(j=1\) the second estimate follows directly by combining the estimate (3.5) with the definition of the norm \(\Vert \cdot \Vert _{C^{\frac{\alpha }{4},\alpha }([0,T]\times {\mathbb {S}}^1)}\). The case \(j\ge 2\) follows from the submultiplicativity of the norm. \(\square \)
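The choice of \({\widehat{T}}\) in the proof can be made explicit. If, for instance, one asks for \(\inf _x|\partial _x\varphi (t,x)|\ge c/2\) (the target bound c/2 is an illustrative assumption), then, since \(|\partial _x\varphi (t,x)|\ge c-Mt^\beta \), it suffices that \(Mt^\beta \le c/2\), i.e. \(t\le (c/(2M))^{1/\beta }\) with \(\beta =\frac{3}{4}+\frac{\alpha }{4}\). A minimal sketch, capping the result at \({\widetilde{T}}=1\):

```python
def regularity_time(c, M, alpha, T_tilde=1.0):
    """Largest T <= T_tilde with M * T**beta <= c / 2, where beta = 3/4 + alpha/4.

    For such T, |d_x phi(t, x)| >= c - M t**beta >= c / 2 on [0, T].
    """
    if not (0 < alpha < 1 and c > 0 and M > 0):
        raise ValueError("need 0 < alpha < 1, c > 0 and M > 0")
    beta = 0.75 + alpha / 4
    return min(T_tilde, (c / (2 * M)) ** (1 / beta))


T_hat = regularity_time(c=0.5, M=100.0, alpha=0.5)
print(T_hat)
```

As in the lemma, the resulting time depends only on c, M (and the fixed exponent \(\alpha \)), not on the particular curve in the ball.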

From now on we fix \({\widetilde{T}}=1\) and we denote by \({\hat{T}}={\hat{T}}(c,M)\) the time given by Lemma 3.8 for given c and M.

Definition 3.9

For every \(T\in (0,{\hat{T}}]\) we define the map

where the function \(f(\varphi ):=f(\partial _x^4\varphi ,\partial _x^3\varphi ,\partial _x^2\varphi ,\partial _x\varphi )\) is defined in (3.1). Moreover we introduce the map \({\mathcal {N}}_T\) given by \({\mathbb {E}}^0_T \ni \gamma \,\mapsto (N_{T}(\gamma ),\gamma \vert _{t=0})\).

Remark 3.10

We recall that f is given by

By Lemma 3.8, for \(\varphi \in {\mathbb {E}}^0_T\), we have that for all \(t\in [0,T]\) the map \(\varphi (t)\) is a regular curve. Hence \(N_{T}\) is well-defined. Furthermore, we notice that \({\mathcal {N}}_T\) maps \({\mathbb {E}}^0_T\) into \({\mathbb {F}}^0_T\).

The following lemma is a classical result on parabolic Hölder spaces. For a proof see for instance [33].

Lemma 3.11

Let \(k\in \{1,2,3\}\), \(T\in [0,1]\) and \(\varphi ,{\widetilde{\varphi }}\in {\mathbb {E}}_T^0\). We denote by \(\varphi ^{(4-k)}, {\widetilde{\varphi }}^{(4-k)}\) the \((4-k)\)–th space derivative of \(\varphi \) and \({\widetilde{\varphi }}\), respectively. Then there exist \(\varepsilon >0\) and a constant \({\widetilde{C}}\) independent of T such that

Definition 3.12

Let \(\varphi ^0\) be an admissible initial parametrization, \(c:=\inf _{x\in {\mathbb {S}}^1}\vert \partial _x \varphi ^0\vert \). For a positive M and a time \(T\in (0,{\widehat{T}}(c,M)]\) we define \({\mathcal {K}}_T:{\mathbb {E}}^0_T\cap \overline{B_M}\rightarrow {\mathbb {E}}^0_T\) by

Proposition 3.13

Let \(\varphi ^0\) be an admissible initial parametrization, \(c:=\inf _{x\in {\mathbb {S}}^1}\vert \partial _x \varphi ^0\vert \). Then there exists a positive radius \(M(\varphi ^0)>\Vert \varphi ^0\Vert _{C^{4+\alpha }}\) and a time \({\overline{T}}(c,M)\) such that for all \(T\in (0,{\overline{T}}]\) the map \({\mathcal {K}}_T:{\mathbb {E}}^0_T\cap \overline{B_M}\rightarrow {\mathbb {E}}^0_T\) takes values in \({\mathbb {E}}^0_T\cap \overline{B_M}\) and it is a contraction.

In the following proof constants may vary from line to line and depend on c, M and \(\Vert \varphi ^0\Vert _{C^{4+\alpha }}\).

Proof

Let \(M>0\) and \({\widetilde{T}}>0\) be arbitrary positive numbers. Let \({\widehat{T}}(c,M)\) be given by Lemma 3.8 and assume without loss of generality that \({\widehat{T}}(c,M)<\tfrac{1}{2} {\widetilde{T}}\). Let \(T\in (0,{\widehat{T}}(c,M)]\) be a generic time.

Clearly \({\mathcal {L}}_T^{-1}({\mathbb {F}}_T^0)\subseteq {\mathbb {E}}^0_T\) and \({\mathcal {K}}_T\) is well defined on \({\mathbb {E}}^0_T\cap \overline{B_M}\).

First we show that there exists a time \(T'\in (0,{\widehat{T}}(c,M))\) such that for all \(T\in (0,T']\), for every \(\varphi ,{\widetilde{\varphi }} \in {\mathbb {E}}^0_T\cap \overline{B_M}\), it holds

We begin by estimating

The highest order term in the above norm is

We can rewrite the above expression using the identity

We get

In order to control \(\left( \frac{1}{\vert \partial _x \varphi ^0 \vert ^2\vert \partial _x\varphi \vert } +\frac{1}{\vert \partial _x \varphi ^0 \vert \vert \partial _x\varphi \vert ^2}\right) \left( \frac{1}{\vert \partial _x \varphi ^0 \vert ^2} +\frac{1}{\vert \partial _x\varphi \vert ^2}\right) \) we use Lemma 3.8. Now we identify \(\varphi ^0\) with its constant in time extension \(\psi ^0(t,x):=\varphi ^0(x)\), which belongs to \({\mathbb {E}}_T^0\) for arbitrary T. Observe that \(\Vert \psi ^0\Vert _{{\mathbb {E}}_T} = \Vert \psi ^0\Vert _{C^{\frac{4+\alpha }{4},4+\alpha }} = \Vert \varphi ^0\Vert _{C^{4+\alpha }}\) is independent of T. Then making use of Lemma 3.11 we obtain

Then

Similarly, we obtain

The lower order terms of \(f(\varphi )-f({\widetilde{\varphi }})\) are of the form

with \(j\in \{2,6,8\}\) and with \(a,b,c,d,{\tilde{a}},{\tilde{b}},{\tilde{c}},{\tilde{d}}\) space derivatives up to order three of \(\varphi \) and \({\widetilde{\varphi }}\), respectively. Adding and subtracting the expression

to (3.10), we get

With the help of Lemma 3.11 we can estimate the first term of (3.11) in the following way:

The second and the third term of (3.11) can be estimated similarly by the Cauchy–Schwarz inequality. To obtain the desired estimate for the last term of (3.11) we proceed as for the second term of (3.7). We use the identities

for \(j\in \{6,8\}\) and Lemmas 3.8 and 3.11 and we finally get

Putting the above inequalities together we have

By Lemma 3.6, this implies that for all \(T\in (0,{\widehat{T}}(c,M)]\)

with \(0<\varepsilon <1\). Choosing \(T'\) small enough we can conclude that for every \(T\in (0,T']\) the inequality (3.6) holds.

In order to conclude the proof it remains to show that we can choose M sufficiently big so that \({\mathcal {K}}_T\) maps \({\mathbb {E}}^0_T\cap \overline{B_M}\) into itself.

As before we identify \(\varphi ^0(x)\) with its constant in time extension \(\psi ^0(t,x)\). Notice that the expressions \({\mathcal {K}}_T(\psi ^0)\) and \({\mathcal {N}}_T(\psi ^0)\) are then well defined.

As M is an arbitrary positive constant, let us choose M at the beginning, depending on \(\varphi ^0\) and \({\widetilde{T}}\) only, so that

and

where we used that \(\Vert (f(\varphi ^0),\varphi ^0)\Vert _{{\mathbb {F}}_T}\) is time independent and can therefore be estimated by a constant \(C(\varphi ^0)\) depending only on \(\varphi ^0\), and we also used Lemma 3.6. For \(T\in (0,T']\), as \(T'\le {\widehat{T}}(c,M) \le \tfrac{1}{2}{\widetilde{T}}-\delta \) for some positive \(\delta \), we also have

for any \(\varphi \in {\mathbb {E}}^0_T\cap \overline{B_M}\), where we used (3.12). It follows that by taking \(T\le T'\) sufficiently small, we have that \({\mathcal {K}}_T:{\mathbb {E}}^0_T\cap \overline{B_M} \rightarrow {\mathbb {E}}^0_T\cap \overline{B_M}\) and it is a contraction.

\(\square \)

Theorem 3.14

Let \(\varphi ^0\) be an admissible initial parametrization. There exists a positive radius M and a positive time T such that the special flow (2.18) of closed curves has a unique solution in \(C^{\frac{4+\alpha }{4},4+\alpha }\left( [0,T]\times {\mathbb {S}}^1\right) \cap \overline{B_M}\).

Proof

Choosing M and \({\overline{T}}\) as in Proposition 3.13, for every \(T\in (0,{\overline{T}}]\) the map \({\mathcal {K}}_T:{\mathbb {E}}_T^0\cap \overline{B_M}\rightarrow {\mathbb {E}}_T^0\cap \overline{B_M}\) is a contraction of the complete metric space \({\mathbb {E}}_T^0\cap \overline{B_M}\). Thanks to the Banach–Caccioppoli contraction theorem, \({\mathcal {K}}_T\) has a unique fixed point in \({\mathbb {E}}_T^0\cap \overline{B_M}\). By definition of \({\mathcal {K}}_T\), an element of \({\mathbb {E}}_T^0\cap \overline{B_M}\) is a fixed point for \({\mathcal {K}}_T\) if and only if it is a solution to the special flow (2.18) of closed curves in \(C^{\frac{4+\alpha }{4},4+\alpha }\left( [0,T]\times {\mathbb {S}}^1\right) \cap \overline{B_M}\). \(\square \)
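The structure of this argument (write the solution as a fixed point of a map and make the map a contraction by shrinking the time interval) can be seen on a toy problem. The sketch below is only an analogy, not the parabolic argument above: it applies Picard iteration to the ODE \(y'=y^2\), \(y(0)=1\), whose exact solution is \(1/(1-t)\), on \([0,0.2]\). There, on the ball \(\Vert y-1\Vert _\infty \le 1\), the map \(y\mapsto 1+\int _0^t y^2\) is a contraction with constant \(4T=0.8\). The grid size, tolerance and trapezoidal quadrature are arbitrary choices.

```python
def picard(T=0.2, n=2000, tol=1e-12, max_iter=500):
    """Fixed point of K(y)(t) = 1 + int_0^t y(s)^2 ds on a uniform grid of [0, T].

    Returns (grid, y, iterations). The integral uses the trapezoidal rule.
    """
    h = T / n
    t = [i * h for i in range(n + 1)]
    y = [1.0] * (n + 1)                 # start from the constant initial datum
    for it in range(1, max_iter + 1):
        new = [1.0]
        for i in range(1, n + 1):
            new.append(new[-1] + 0.5 * h * (y[i - 1] ** 2 + y[i] ** 2))
        gap = max(abs(a - b) for a, b in zip(new, y))
        y = new
        if gap < tol:
            return t, y, it
    raise RuntimeError("Picard iteration did not converge")


t, y, its = picard()
print(f"{its} iterations, y(T) = {y[-1]:.8f}, exact = {1 / (1 - t[-1]):.8f}")
```

For intervals approaching the blow-up time \(t=1\) the contraction estimate fails, which is the toy counterpart of the restriction to small T in Proposition 3.13.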

Remark 3.15

In order to prove an existence and uniqueness theorem for the special flow of curves with fixed endpoints subjected to natural or clamped boundary conditions, it is enough to repeat the previous arguments with some small adjustments.

In the case of Navier boundary conditions, we replace \({\mathbb {E}}_T\), \({\mathbb {E}}_T^0\), \({\mathbb {F}}_T\) and \({\mathbb {F}}_T^0\) by

where \(P,Q \in {{{\mathbb {R}}}}^2\) are the prescribed endpoints. In this case we introduce the linear operator

This modification allows us to treat the linear boundary conditions \(\partial _x^2\varphi (t,0)=\partial _x^2\varphi (t,1)=0\) and the affine ones \(\varphi (t,0)=P\), \(\varphi (t,1)=Q\).

In the case of clamped boundary conditions instead we have to take into account four vectorial affine boundary conditions. We modify the affine space \({\mathbb {E}}_T^0\) into

and

Finally the operator \({\mathcal {L}}_T\) in this case is

Remark 3.16

Unlike the case of endpoints of order one, at multipoints of higher order we also impose nonlinear boundary conditions (quasilinear or even fully nonlinear). Treating these terms is therefore harder: both the main equation and the boundary operator have to be linearized.

Consider, for instance, the elastic flow of a network composed of N curves that meet at two junctions, both of order N, subject to natural boundary conditions. The concurrency condition and the second order condition are already linear, whereas the third order condition is of the form

where we omit the dependence on (t, y) with \(y\in \{0,1\}\). The linearized version of the highest order term in the third order condition is:

where we denote by \(\nu _0\) the unit normal vector of the initial datum \(\varphi ^0\). Then, instead of (3.2), the linearized system associated with the special flow is

for \(i, j\in \{1,\ldots ,N\}\), \(j\ne i\), \(t\in [0,T]\), \(x\in [0,1]\), \(y\in \{0,1\}\).

The spaces introduced in Definitions 3.2 and 3.7 are replaced by

The operator \({\mathcal {L}}_{T}:{\mathbb {E}}_T\rightarrow {\mathbb {F}}_T\) becomes

and the operator that encodes the nonlinearities of the problem is \({\mathcal {N}}_{T}:{\mathbb {E}}^0_T\rightarrow {\mathbb {F}}^0_T\), which maps \(\varphi \) into the triple \((N^1_{T}(\varphi ),N^2_{T}(\varphi ),\varphi \vert _{t=0})\) with

where the functions \(f(\varphi ):=f(\partial _x^4\varphi ,\partial _x^3\varphi ,\partial _x^2\varphi ,\partial _x\varphi )\) and \(b(\varphi ):=b(\partial _x^3\varphi ,\partial _x^2\varphi ,\partial _x\varphi )\) are defined in (3.1) and in (3.13). The map \({\mathcal {K}}\) is defined accordingly. We do not describe here the details concerning the solvability of the linear system or the proof of the contraction property of \({\mathcal {K}}\); we refer to [23, Section 3.4.1].

3.2 Parabolic Smoothing

When dealing with parabolic problems, it is natural to investigate the regularization of the solutions of the flow. More precisely, we claim that the following holds.

Proposition 3.17

Let \(T>0\) and \(\varphi _0=(\varphi ^1_0, \ldots ,\varphi ^N_0)\) be an admissible initial parametrization (possibly) with endpoints of order one and (possibly) with junctions of different orders \(m\in {\mathbb {N}}_{\ge 2}\). Suppose that \(\varphi _{t\in [0,T]}\), \(\varphi =(\varphi ^1, \ldots ,\varphi ^N)\) is a solution in \({\mathbb {E}}_T\) to the special flow in the time interval [0, T] with initial datum \(\varphi _0\). Then the solution \(\varphi \) is smooth for positive times in the sense that

for every \(\varepsilon \in (0,T)\).

We now give a sketch of the proof in the case of closed curves. There are essentially two ways to prove the result: via the so-called Angenent parameter trick [4, 5, 11], or by means of the classical theory of linear parabolic equations [51].

Sketch of the proof.

To simplify the notation, let

Then the motion equation reads \(\partial _t\gamma ={\mathcal {A}}(\gamma )\). We consider the map

If we take \(\lambda =1\) and \(\gamma =\varphi \), the solution of the special flow, we get \(G(1,\varphi )=0\). The Fréchet derivative \(\delta G(1,\varphi )(0,\cdot ):{\mathbb {E}}_T\rightarrow C^{4+\alpha }({\mathbb {S}}^1;{\mathbb {R}}^2)\times C^{\frac{\alpha }{4},\alpha }([0,T]\times {\mathbb {S}}^1;{{{\mathbb {R}}}}^2)\) is given by

where \(F_\varphi \) is linear in \(\gamma \), involves \(\partial _x^3 \gamma ,\partial _x^2 \gamma \) and \(\partial _x\gamma \), and has coefficients depending on \(\partial _x\varphi ,\partial _x^2\varphi ,\partial _x^3\varphi \) and \(\partial _x^4\varphi \). Writing out the Fréchet derivative in detail requires a rather long computation, which we omit. Since the time derivative appears only as \(\partial _t\gamma \) and is not present in \({\mathcal {A}}(\gamma )\), one can formally follow the computations of Section 2.2.

It is possible to prove that \(\delta G(1,\varphi )(0,\cdot )\) is an isomorphism. This is equivalent to showing that, given any \(\psi \in C^{4+\alpha }({\mathbb {S}}^1;{\mathbb {R}}^2)\) and \(f\in C^{\frac{\alpha }{4},\alpha }([0,T]\times {\mathbb {S}}^1;{\mathbb {R}}^2)\), the system

has a unique solution.

Then the implicit function theorem implies the existence of an interval \((1-\varepsilon ,1+\varepsilon )\subseteq (0,\infty )\), a neighbourhood U of \(\varphi \) in \({\mathbb {E}}_T\) and a smooth function \(\Phi :(1-\varepsilon ,1+\varepsilon ) \rightarrow U\) with \(\Phi (1)=\varphi \) and

Given \(\lambda \) close to 1 consider

where \(\varphi \), as before, is a solution to the special flow. This satisfies \(G(\lambda ,\varphi _{\lambda })=0\). Moreover by uniqueness \(\varphi _{\lambda }=\Phi (\lambda )\). Since \(\Phi \) is smooth, this shows that \(\varphi _{\lambda }\) is a smooth function of \(\lambda \) with values in \({\mathbb {E}}_T\). This implies

from which we gain regularity in time of the solution \(\varphi \).

Then, using that \(\varphi \) solves the special flow and the structure of its motion equation, one can also improve the regularity in space.

We can then start a bootstrap to obtain that the solution is smooth for every positive time.
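The identity behind the parameter trick can be seen on a toy problem. A minimal Python sketch, assuming (as in the usual form of Angenent's trick) that the family is obtained by rescaling time, \(u_\lambda (t,x)=u(\lambda t,x)\), and replacing the special flow by the heat equation \(\partial _t u=\partial _x^2u\) on the circle only to have an explicit solution: \(u_\lambda \) then solves \(\partial _t u_\lambda =\lambda \partial _x^2u_\lambda \), and its derivative in \(\lambda \) at \(\lambda =1\) equals \(t\,\partial _t u\). The factor \(t\) is why the gain of regularity is only available for positive times. All function names are hypothetical.

```python
import math

def u(t, x):
    # Toy stand-in for the special flow: exp(-t) sin(x) solves u_t = u_xx on the circle.
    return math.exp(-t) * math.sin(x)

def u_scaled(lam, t, x):
    # Assumed time rescaling u_lam(t, x) = u(lam * t, x); it solves d_t u_lam = lam * d_xx u_lam.
    return u(lam * t, x)

def d_dlam_at_one(t, x, h=1e-5):
    # Central finite difference of lam -> u_lam(t, x) at lam = 1.
    return (u_scaled(1 + h, t, x) - u_scaled(1 - h, t, x)) / (2 * h)

def t_times_ut(t, x):
    # t * d_t u(t, x), from the explicit formula.
    return -t * math.exp(-t) * math.sin(x)

for t in (0.0, 0.5, 2.0):
    print(t, d_dlam_at_one(t, 1.0), t_times_ut(t, 1.0))
```

At \(t=0\) both quantities vanish, so the smooth dependence on \(\lambda \) carries no information at the initial time.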

Alternatively, one can show by induction that there exists \(\alpha \in (0,1)\) such that for all \(k\in {\mathbb {N}}\) and \(\varepsilon \in (0,T)\),

The case \(k=1\) is true because \(\varphi \in C^{\frac{4+\alpha }{4},4+\alpha }\left( [0,T]\times {\mathbb {S}}^1;{\mathbb {R}}^2\right) \) by Theorem 3.14.

Now assume that the assertion holds true for some \(k\in {\mathbb {N}}\) and consider any \(\varepsilon \in (0,T)\). Let \(\eta \in C_0^\infty \left( (\frac{\varepsilon }{2},\infty );{\mathbb {R}}\right) \) be a cut–off function with \(\eta \equiv 1\) on \([\varepsilon ,T]\). By assumption,

and thus it is straightforward to check that the function g defined by

lies in \(C^{\frac{2k+2+\alpha }{4},2k+2+\alpha }\left( \left[ 0,T\right] \times {\mathbb {S}}^1;{\mathbb {R}}^2\right) \). Moreover g satisfies a parabolic problem of the following form: for all \(t\in (0,T)\), \(x\in {\mathbb {S}}^1\):

The lower order terms in the motion equation are given by

The problem is linear in the components of g and, in its highest order term, has exactly the same structure as the linear system (3.2), with time dependent coefficients in the motion equation. The coefficients and the right hand side fulfil the regularity requirements of [51, Theorem 4.9] in the case \(l=2(k+1)+2+\alpha \). As \(\eta ^{(j)}(0)=0\) for all \(j\in {\mathbb {N}}\), the initial value 0 satisfies the compatibility conditions of order \(2(k+1)+2\) with respect to the given right hand side. Thus [51, Theorem 4.9] yields a unique solution g to (3.15) with the regularity

This completes the induction as \(g=\varphi \) on \([\varepsilon ,T]\). \(\square \)
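A cut-off \(\eta \) with the properties used above can be written down explicitly. The following Python sketch uses the standard smooth step built from \(s(t)=e^{-1/t}\) for \(t>0\) and \(s(t)=0\) otherwise. The particular choice (vanishing on \((-\infty ,\tfrac{3}{4}\varepsilon ]\), equal to 1 on \([\varepsilon ,T]\), vanishing on \([T+1,\infty )\), hence with compact support in \((\tfrac{\varepsilon }{2},\infty )\)) is only one possibility, and all names are hypothetical. Since \(\eta \) vanishes identically near \(t=0\), all its derivatives vanish there, which is what the compatibility argument uses.

```python
import math

def _s(t):
    # Smooth on R and identically zero for t <= 0.
    return math.exp(-1.0 / t) if t > 0 else 0.0

def smooth_step(x):
    # C-infinity function equal to 0 for x <= 0 and to 1 for x >= 1;
    # the denominator never vanishes since x > 0 or 1 - x > 0.
    return _s(x) / (_s(x) + _s(1.0 - x))

def cutoff(t, eps, T):
    # Hypothetical eta: 0 on (-inf, 3*eps/4], 1 on [eps, T], 0 on [T + 1, inf).
    rise = smooth_step((t - 0.75 * eps) / (0.25 * eps))
    fall = smooth_step(T + 1.0 - t)
    return rise * fall

print([cutoff(t, 0.1, 2.0) for t in (0.0, 0.05, 0.1, 1.0, 2.0, 3.0)])
```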

3.3 Short Time Existence and Uniqueness

We conclude this section by proving the local (in time) existence and uniqueness result for the elastic flow.

As before, we give the proof of this theorem in the case of closed curves and then we explain how to adapt it in all the other situations.

We recall that a solution of the elastic flow is said to be unique if it is unique up to reparametrizations.

Theorem 3.18

(Existence and uniqueness) Let \({\mathcal {N}}_0\) be an admissible initial network. Then there exists a positive time T such that within the time interval [0, T] the elastic flow of networks admits a unique solution \({\mathcal {N}}(t)\).

Proof

We write a proof for the case of the elastic flow of closed curves.

Existence. Let \(\gamma _0\) be an admissible initial closed curve of class \(C^{4+\alpha }([0,1];{\mathbb {R}}^2)\). Then \(\gamma _0\) is also an admissible initial parametrization for the special flow. By Theorem 3.14 there exists a solution of the special flow, that is also a solution of the elastic flow.

Uniqueness. Consider a solution \(\gamma _t\) of the elastic flow. Using Proposition 2.16, we can reparametrize \(\gamma _t\) into a solution of the special flow. Hence uniqueness follows from Theorem 3.14. \(\square \)

We now explain how to prove existence of solutions to the elastic flow of networks. Unlike the case of closed curves, an admissible initial network \({\mathcal {N}}_0\) admits a parametrization \(\gamma =(\gamma ^1, \ldots ,\gamma ^N)\) of class \(C^{4+\alpha }([0,1];{\mathbb {R}}^2)\) that, in general, is not an admissible initial parametrization in the sense of Definition 2.11. However, it is always possible to reparametrize each curve \(\gamma ^i\) by a map \(\psi ^i:[0,1]\rightarrow [0,1]\) in such a way that \(\varphi =(\varphi ^1, \ldots ,\varphi ^N)\) with \(\varphi ^i:=\gamma ^i\circ \psi ^i\) is an admissible initial parametrization for the special flow. Then, by a suitable modification of Theorem 3.14, there exists a solution to the special flow, which is also a solution of the elastic flow.

Thus all the difficulty lies in proving the existence of the reparametrizations \(\psi ^i\).
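Whether a reparametrization does its job at an endpoint is a local question: the derivatives of \(\varphi =\gamma \circ \psi \) at \(y\) up to order four depend only on those of \(\gamma \) and \(\psi \) at \(y\). The following Python sketch checks this exactly, with rational arithmetic, for polynomial curves at \(y=0\) in the Navier case treated in the list below. It assumes \(\partial _x\psi (0)=1\), \(\partial _x^2\psi (0)=a(0)=-\langle \partial _x\gamma ,\partial _x^2\gamma \rangle /\vert \partial _x\gamma \vert ^2\), \(\partial _x^3\psi (0)=0\) and \(\partial _x^4\psi (0)=-\langle \partial _x\gamma ,\partial _x^4\gamma +6a\partial _x^3\gamma +3a^2\partial _x^2\gamma \rangle /\vert \partial _x\gamma \vert ^2\) (everything evaluated at 0), and verifies that \(\partial _x\varphi (0)=\partial _x\gamma (0)\) and that the tangential components of \(\partial _x^2\varphi (0)\) and \(\partial _x^4\varphi (0)\) vanish; the latter is what makes \({\overline{T}}(0)\) vanish. The helper names are hypothetical, and only the jet of \(\psi \) at the endpoint is built, not a global \(\psi \) with \(\psi (1)=1\) and \(\partial _x\psi \ne 0\).

```python
import math
from fractions import Fraction

# A polynomial is a list of exact coefficients [c0, c1, c2, ...] in the variable x.

def padd(p, q):
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]

def pmul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def pcompose(p, q):
    # p(q(x)) by Horner's scheme, computed exactly
    out = [Fraction(0)]
    for c in reversed(p):
        out = padd(pmul(out, q), [c])
    return out

def jet(curve, k):
    # k-th derivative at x = 0 of a planar polynomial curve: k! times the x^k coefficient
    return tuple(Fraction(math.factorial(k)) * (c[k] if k < len(c) else 0) for c in curve)

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def navier_psi(curve):
    # Hypothetical helper: degree-4 Taylor polynomial at 0 of psi with the Navier jet;
    # it does NOT enforce psi(1) = 1 or psi' != 0 on [0, 1].
    g1, g2, g3, g4 = (jet(curve, k) for k in range(1, 5))
    speed2 = dot(g1, g1)                      # |d_x gamma(0)|^2
    a = -dot(g1, g2) / speed2                 # d_x^2 psi(0) = a(0)
    G = tuple(g4[i] + 6 * a * g3[i] + 3 * a * a * g2[i] for i in range(2))
    psi4 = -dot(g1, G) / speed2               # d_x^4 psi(0) = -b(0)/|d_x gamma(0)|
    # psi(0) = 0, psi'(0) = 1, psi''(0) = a, psi'''(0) = 0, psi''''(0) = psi4
    return [Fraction(0), Fraction(1), a / 2, Fraction(0), psi4 / 24]

def reparametrize(curve, psi):
    # phi = gamma o psi, componentwise
    return [pcompose(c, psi) for c in curve]

# Usage: gamma(x) = (x + x^2, x^3) has zero curvature at x = 0.
gamma = [[0, 1, 1], [0, 0, 0, 1]]
phi = reparametrize(gamma, navier_psi(gamma))
tau = jet(gamma, 1)
print(dot(jet(phi, 2), tau), dot(jet(phi, 4), tau))  # prints: 0 0
```

In this example \(\partial _x^2\gamma (0)\) is parallel to \(\partial _x\gamma (0)\), so the curvature vanishes at 0 and the sketch also gives \(\partial _x^2\varphi (0)=0\), as required by the Navier condition.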

In all cases we look for \(\psi ^i:[0,1]\rightarrow [0,1]\) with \(\psi ^i(0)=0\), \(\psi ^i(1)=1\) and \(\partial _x\psi ^i(x)\ne 0\) for every \(x\in [0,1]\). We now list the further conditions that \(\psi ^i\) has to fulfill at \(y=0\) or \(y=1\) in each of the possible situations. It will then be clear that such reparametrizations \(\psi ^i\) exist.

-

If \(\gamma (y)\) is an endpoint of order one with Navier boundary conditions (namely \(\gamma (y)=P\), \(\varvec{\kappa }(y)=0\)), then \(\psi (y)\) needs to satisfy the following conditions:

$$\begin{aligned} {\left\{ \begin{array}{ll} \partial _x\psi (y)=1\\ \partial _x^2\psi (y)=-\left\langle \frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert }, \frac{\partial _x^2 \gamma (y)}{\vert \partial _x\gamma (y)\vert }\right\rangle =:a(y)\\ \partial _x^3\psi (y)=0\\ \partial _x^4\psi (y)=-\frac{1}{\vert \partial _x\gamma (y)\vert }\left\langle \frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert }, \partial _x^4 \gamma (y) +6a(y)\partial _x^3 \gamma (y) +3a^2(y)\partial _x^2 \gamma (y) \right\rangle =:-\frac{1}{\vert \partial _x\gamma (y)\vert }b(y). \end{array}\right. } \end{aligned}$$

Indeed, with these choices we have \(\varphi (y)=\gamma (\psi (y))=\gamma (y)=P\) and

$$\begin{aligned} \partial _x^2\varphi (y)&=\partial _x^2 \gamma (\psi (y))(\partial _x\psi (y))^2 +\partial _x\gamma (\psi (y))\partial _x^2\psi (y)\\&=\partial _x^2 \gamma (y)+\partial _x\gamma (y)\left( -\left\langle \frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert }, \frac{\partial _x^2 \gamma (y)}{\vert \partial _x\gamma (y)\vert }\right\rangle \right) =\vert \partial _x\gamma (y)\vert ^2\varvec{\kappa }(y)=0. \end{aligned}$$

Moreover \({\overline{T}}(y)=0\). Indeed

$$\begin{aligned} {\overline{T}}(y)&=-2\left\langle \frac{\partial _x^4\varphi (y)}{\vert \partial _x\varphi (y)\vert ^4}, \frac{\partial _x\varphi (y)}{\vert \partial _x\varphi (y)\vert } \right\rangle \\&=-2\left\langle \frac{\partial _x^4 \gamma (y)+6\partial _x^3 \gamma (y)a(y) +3\partial _x^2 \gamma (y)a^2(y)+\partial _x\gamma (y)\partial _x^4\psi (y)}{\vert \partial _x\gamma (y)\vert ^4}, \frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert } \right\rangle \\&=-2\frac{1}{\vert \partial _x\gamma (y)\vert ^4}b(y) +2\frac{1}{\vert \partial _x\gamma (y)\vert ^5} \left\langle b(y)\partial _x\gamma (y),\frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert } \right\rangle \\&=-2\frac{1}{\vert \partial _x\gamma (y)\vert ^4}b(y) +2\frac{1}{\vert \partial _x\gamma (y)\vert ^5}b(y) \left\langle \frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert },\frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert } \right\rangle \vert \partial _x\gamma (y)\vert =0. \end{aligned}$$

-

If \(\gamma (y)\) is an endpoint of order one where clamped boundary conditions are imposed (\(\gamma (y)=P\), \(\frac{\partial _x\gamma (y)}{\vert \partial _x\gamma (y)\vert }=\tau ^*\) with \(\tau ^*\) a unit vector), we require \(\psi (y)\) to fulfill