Abstract

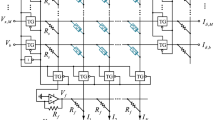

Memristor-based computing-in-memory chips have shown the potential to accelerate deep neural networks with high energy efficiency. Because of the memristor's inherent filament-based conduction mechanism, read and write noise is hard to eliminate. In addition, the precision of large-scale memristor arrays is still limited. When memristor noise is large, existing training methods for reducing the accuracy loss of memristor-based computing-in-memory chips face challenges. Hence, we propose an array-level boosting method with spatial extended allocation to reduce the accuracy loss induced by limited precision and large noise. To optimize the spatial allocation number of each layer in the neural network, a greedy spatial extended allocation algorithm is also proposed. Image processing and classification tasks are demonstrated on fabricated 32 × 128 memristor arrays to validate the performance of the proposed method. The chip-in-loop results show that the recovered accuracy of ResNet-34 on CIFAR-10 with the array-level boosting method is 92.3%, which is close to the software-based accuracy of 93.2%.
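The abstract does not spell out how the spatial extension works, so the following is only a rough intuition and not the authors' method. It assumes that the same weights are mapped onto several arrays whose outputs are averaged, and that each array adds independent Gaussian noise to every stored weight. Under those assumptions, averaging `copies` arrays should shrink the output error by roughly the square root of `copies`. All function names, the noise level, and the matrix sizes are hypothetical.

```python
import random
import statistics


def noisy_mvm(weights, x, noise_std, rng):
    """One simulated array read: every stored weight gets Gaussian noise (assumed model)."""
    return [
        sum((w + rng.gauss(0.0, noise_std)) * xi for w, xi in zip(row, x))
        for row in weights
    ]


def replicated_mvm(weights, x, noise_std, copies, rng):
    """Average the outputs of `copies` independent noisy arrays holding the same weights."""
    outputs = [noisy_mvm(weights, x, noise_std, rng) for _ in range(copies)]
    return [sum(column) / copies for column in zip(*outputs)]


def output_error_std(weights, x, noise_std, copies, trials, rng):
    """Standard deviation of the output error relative to the noise-free product."""
    ideal = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    errors = []
    for _ in range(trials):
        out = replicated_mvm(weights, x, noise_std, copies, rng)
        errors.extend(o - i for o, i in zip(out, ideal))
    return statistics.pstdev(errors)


rng = random.Random(0)  # fixed seed so the comparison is repeatable
weights = [[rng.uniform(-1, 1) for _ in range(32)] for _ in range(8)]
x = [rng.uniform(0, 1) for _ in range(32)]

err_1 = output_error_std(weights, x, noise_std=0.1, copies=1, trials=200, rng=rng)
err_4 = output_error_std(weights, x, noise_std=0.1, copies=4, trials=200, rng=rng)
print(f"error std with 1 array: {err_1:.4f}, with 4 arrays: {err_4:.4f}")
```

In this toy model, four copies give about half the error of one, at four times the array cost. That trade-off is one plausible reason to choose the number of copies per layer rather than replicating every layer equally.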

Acknowledgements

This work was supported in part by the National Key R&D Program of China (Grant No. 2019YFB2205103), the National Natural Science Foundation of China (Grant Nos. 92064001, 61851404, 61874169), the Beijing Municipal Science and Technology Project (Grant No. Z191100007519008), and the Beijing Innovation Center for Future Chips (ICFC).

Cite this article

Zhang, W., Gao, B., Yao, P. et al. Array-level boosting method with spatial extended allocation to improve the accuracy of memristor based computing-in-memory chips. Sci. China Inf. Sci. 64, 160406 (2021). https://doi.org/10.1007/s11432-020-3198-9

DOI: https://doi.org/10.1007/s11432-020-3198-9