Abstract

Policy iteration is a widely used technique to solve the Hamilton Jacobi Bellman (HJB) equation, which arises from nonlinear optimal feedback control theory. Its convergence analysis has attracted much attention in the unconstrained case. Here we analyze the case with control constraints both for the HJB equations which arise in deterministic and in stochastic control cases. The linear equations in each iteration step are solved by an implicit upwind scheme. Numerical examples are conducted to solve the HJB equation with control constraints and comparisons are shown with the unconstrained cases.

Similar content being viewed by others

1 Introduction

Stabilizability is one of the major objectives in the area of optimal control theory. Since the open loop control depends only on the time and the initial state, so if the initial state is changed, unfortunately control needs to be recomputed again. In many physical situation, we are particularly interested in seeking a control law which depends on the state and which can deal additional external perturbation or model errors. When the dynamical systems is linear with unconstrained control and corresponding cost functional or performance index is quadratic, then the associated closed loop or feedback control law which minimizes the cost functional is obtained by the so called Riccati equation. Now when the dynamics is nonlinear, one popular approach is to linearize the dynamics and obtain the Riccati based controller to apply for the original nonlinear system, see e.g. [34].

If the optimal feedback cannot be obtained by LQR theory, then it can be approached by the verification theorem and the value function, which in turn is a solution of the Hamilton Jacobi Bellman (HJB) equation associated to the optimal control problem, see e.g. [21]. But in most of the situations, it is very difficult to obtain the exact value function and thus one has to resort to iterative techniques. One possible approach utilizes the so called value iteration. Here we are interested in more efficient technique, known as policy iteration. Discrete counterpart of policy iteration is also known as Howards’ algorithm [27], compare also [10]. Policy iteration can be interpreted as a Newton method applied to the HJB equation. Hence, using the policy iteration HJB equation reduces to a sequence of linearized HJB equation, which for historical reasons are called ’generalized HJB equations’. The policy iteration requires as initialization a stabilizing control. If such a control is not available, then one can use discounted path following policy iteration, see e.g. [28]. The focus of the present paper is the analysis of the policy iteration in the presence of control constraints. To the authors’ knowledge, such an analysis is not available in the literature.

Let us next mentions very selectively, some of the literature on the numerical solution of the control-HJB equations. Specific comments for the constraint case, are given further below. Finite difference methods and vanishing viscosity method are developed in the work of Crandall and Lions [17]. Other notable techniques are finite difference methods [16], semi-Lagrangian schemes [4, 19], the finite element method [26], filtered schemes [11], domain decomposition methods [14], and level set methods [37]. For an overview we refer to [20]. Policy iteration algorithms are developed in [8, 9, 33, 38]. If the dynamics are given by a partial differential equation (PDE), then the corresponding HJB equations become infinite dimensional. Applying grid based scheme to convert PDE dynamics to ODE dynamics leads to a high dimensional HJB equation. This phenomenon is known as the curse of dimensionality. In the literature there are different techniques to tackle this situation. Related recent works include, polynomial approximation [28], deep neural technique [32], tensor calculus [18], Taylor series expansions [13] and graph-tree structures [5].

Iteration in policy space for second order HJB PDEs arising in stochastic control is discussed in [41]. Tensor calculus technique is used in [40] where the associated HJB equation becomes linear after using some exponential transformation and scaling factor. For an overview for numerical approximations to stochastic HJB equations, we refer to [31]. To solve the continuous time stochastic control problem by the Markov chain approximation method (MCAM) approach, the diffusion operator is approximated by a finite dimensional Markov decision process, and further solved by the policy iteration algorithm. For the connection between the finite difference method and the MCAM approach for the second order HJB equation, see [12]. Let us next recall contributions for the case with control constraints. Regularity of the value function in the presence of control constraints has been discussed in [24, 25] for linear dynamics and in [15] for nonlinear systems. In [35] non-quadratic penalty functionals were introduced to approximately include control constraints. Later in [1] such functionals were discussed in the context of policy iteration for HJB equations.

Convergence of the policy iteration without constraints has been investigated in earlier work, see in particular [38, 41]. In the literature, there appears to be hardly any result on handling constraints on the control in the context of policy iteration, except for the penalty approach [35], which is an approximation procedure. In our work, the control constraints are realized exactly by means of a projection operator. This approach has been used earlier only for numerical purposes in the context of the value iteration, see [23] and [30]. Here we consider a policy iteration algorithm to tackle this situation where constraints can be taken as any closed convex set containing the origin. In our work for the first time as far as we know the convergence of the policy iteration with control constraints for HJB equations is established.

For numerical experiments we use an implicit upwind scheme as proposed in [2].

The rest of the paper is organized as follows. Section 2 provides a convergence of the policy iteration for the first order HJB equation in the presence of control constraints. Section 3 establishes corresponding convergence result for the second order HJB equation with control constraints. Numerical tests are presented in Sect. 4. Finally in Sect. 5 concluding remarks are given.

2 Nonlinear \({\mathcal {H}}_2\) feedback control problem subject to deterministic system

2.1 First order Hamilton–Jacobi–Bellman equation

We consider the following infinite horizon optimal control problem

subject to the nonlinear deterministic dynamical constraint

where \(y(t)=(y_1(t),\ldots ,y_d(t))^t\in {\mathbb {R}}^d\) is the state vector, and \(u(\cdot )\in {\mathcal {U}}\) is the control input with \({\mathcal {U}}=\{u(t):\, {\mathbb {R}}_+\rightarrow U\subset {\mathbb {R}}^m\}\). Further \(\ell (y)>0\), for \(y\ne 0\), is the state running cost, and \(\Vert u\Vert _R^2=u^tRu\), represents the control cost, with \(R\in {\mathbb {R}}^{m\times m},\,R>0\) a positive definite matrix. Throughout we assume that the dynamics f and g, as well as \(\ell\) are Lipschitz continuous on \({\mathbb {R}}^d\), and that \(f(0)=0\) and \(\ell (0)=0\). We also require that g is globally bounded on \(R^d\). This set-up relates to our aim of asymptotic stabilization to the origin by means of the control u. We shall concentrate on the case where the initial conditions are chosen from a domain \(\Omega \subset {\mathbb {R}}^d\) containing the origin in its interior.

The specificity of this work relates to controls which need to obey constraints \(u(t)\in U\), where U is a closed convex set containing 0 in \({\mathbb {R}}^m\). As a special case we mention bilateral point-wise constraints of the form

where \(\alpha =(\alpha _1,\ldots ,\alpha _m)\in {\mathbb {R}}^m\), \(\beta =(\beta _1,\ldots ,\beta _m)\in {\mathbb {R}}^m\), \(\alpha \le 0,\) \(\beta \ge 0\), and the inequalities act coordinate-wise.

The optimal value function associated to (2.1)–(2.2) is given by

where \(x\in \Omega\). Here and throughout the paper we assume that for every \(x\in \Omega\) a solution to (2.1) exists. Thus, implicitly we also assume that the existence of a control \(u\in L^2(0,\infty ;{\mathbb {R}}^m)\) such that (2.2) admits a solution \(y\in W^{1,2}(0,\infty ;{\mathbb {R}}^d)\). If required by the context, we shall indicate the dependence of y on \(x\in \Omega\) or \(u \in {\mathcal {U}}\), by writing \(y(\cdot ;x)\), respectively \(y(\cdot ;u)\).

In case \(V\in C^1({\mathbb {R}}^d)\) it satisfies the Hamilton–Jacobi–Bellman (HJB) equation

with \(\nabla V(x)=(\partial _{{x} _{1}}V,\ldots ,\partial _{{x} _{d}}V)^t\). Otherwise, sufficient conditions which guarantee that V is the unique viscosity solution to (2.4) are well-investigated, see for instance [6, Chapter III].

In the unconstrained case, with \(U={\mathbb {R}}^m\) the value function is always differentiable (see [6, pg. 80]). If \(\ell =x^tQx\), with Q a positive definite matrix, and linear control dynamics of the form \(f(y)+g(y)u=Ay+Bu\), the value function is differentiable provided that U is nonempty polyhedral (finite horizon) or closed convex set with \(0\in int\quad U\) (infinite horizon), see [24, 25]. Sufficient conditions for (local) differentiability of the value function associated to finite horizon optimal control problems with nonlinear dynamics and control constraints have been obtained in [15]. For our analysis, we assume that

Assumption 1

The value function satisfies \(V\in C^1({\mathbb {R}}^d)\). Moreover it is radially unbounded, i.e. \(\lim _{\Vert x\Vert \rightarrow \infty }V(x) =\infty\).

With Assumption 1 holding, the verification theorem, see eg. [22, Chapter 1] implies that an optimal control in feedback form is given as the minimizer in (2.4) and thus

where \({\mathcal {P}}_{U}\) is the orthogonal projection in the R-weighted inner product on \({\mathbb {R}}^m\) onto U, i.e.

Alternatively \(u^*\) can be expressed as

where \({\mathcal {P}}_{{\bar{U}}}\) is the orthogonal projection in \({\mathbb {R}}^m\), with Euclidean inner product, onto \({\bar{U}}=R^{\frac{1}{2}}U\). For the particular case of (2.3) we have

where the \(\max\) and \(\min\) operations operate coordinate-wise. It is common practice to refer to the open loop control as in (2.1) and to the closed loop control as in (2.5) by the same letter.

For the unconstrained case the corresponding control law reduces to \(u^*(x)=-\frac{1}{2}R^{-1}g(x)^t\nabla V(x)\). Using the optimal control law \(u(x)={\mathcal {P}}_{U}\Big (-\frac{1}{2}R^{-1}g(x)^t\nabla V(x)\Big )\) in (2.4), (where we now drop the superscript \(^*\)) we obtain the equivalent form of the HJB equation as

We shall require the notion of generalized Hamilton–Jacobi–Bellman (GHJB) equations (see e.g. [7, 38]), given as follows:

Here f, g and u are considered as functions of \(x\in {\mathbb {R}}^d\).

Remark 1

Concerning the existence of a solution in the unconstrained case to the Zubov type equation (2.7), we refer to [34, Lemma 2.1, Theorem 1.1] where it is shown in the analytic case that if \((\frac{\partial f}{\partial y}(0),g(0))\) is a stabilizable pair, then (2.8) admits a unique locally positive definite solution V(x), which is locally analytic at the origin.

For solving (2.6) we analyse the following iterative scheme, which is referred to as policy iteration or Howards’ algorithm, see e.g. [4, 7, 10, 38], where the case without control constraints is treated.

Remark 2

The fact that the policy iteration can be interpreted as a Newton method in the unconstrained case is pointed out for instance in [28] and [29].

Let us point out, however, that the structural properties which would allow to call upon the well known rate of convergence proof associated to Newton’s method appear to be inapplicable.

We require the notion of admissible controls, a concept introduced in [7, 33].

Definition 1

(Admissible Controls). A measurable function \(u:{\mathbb {R}}^d\rightarrow U\subset {\mathbb {R}}^m\) is called admissible with respect to \(\Omega\), denoted by \(u \in {\mathcal {A}}(\Omega )\), if

-

(i)

u is continuous on \({\mathbb {R}}^m\),

-

(ii)

\(u(0)=0\),

-

(iii)

u stabilizes (2.2) on \(\Omega\), i.e. \(\lim _{t\rightarrow \infty }y(t;u)=0, \quad \forall x\in \Omega\).

-

(iv)

\(\int \limits _0^\infty \Big (\ell (y(t;u))+\Vert u(y(t;u))\Vert _R^2\Big )\, dt < \infty , \quad \forall x\in \Omega\).

Here y(t; u) denotes the solution to (2.2), where \(x\in \Omega\), and with control in feedback form \(u(t) = u(y(t;u))\). As in (2.1) the value of the cost in (iv) associated to the closed loop control is denoted by \({\mathcal {J}}(x,u) = \int \limits _0^\infty (\ell (y(t;u))+\Vert u(y(t;u))\Vert _R^2)\, dt\). In general we cannot guarantee that the controlled trajectories \(t\rightarrow y(t;u(t))\) remain in \(\Omega\) for all t. For this reason we demand continuity and differentiability properties of u and V on all of \({\mathbb {R}}^d\). Under additional assumptions we could introduce a set \({\tilde{\Omega }}\) with \(\Omega \subsetneq {\tilde{\Omega }} \subsetneq {\mathbb {R}}^d\) with the property that \(\{y(t,u) : t\in [0,\infty ), u \in {\mathcal {A}}(\Omega ) \} \subset {\tilde{\Omega }}\) and demanding the regularity properties of u and V on \({\tilde{\Omega }}\) only. We shall not pursue such a set-up here.

We are now prepared the present the algorithm.

Note that (2.8) can equivalently be expressed as \(GHJB(V^{(i)},\nabla V^{(i)};u^{(i)})=0,\, V^{(i)}(0)=0\).

Lemma 1

Assume that \(u(\cdot )\) is an admissible feedback control with respect to \(\Omega\). If there exists a function \(V(\cdot ;u)\in C^1({\mathbb {R}}^d)\) satisfying

then \(V(x;u)={\mathcal {J}}(x,u)\) for all \(x\in \Omega\). Moreover, the optimal control law \(u^*(x)\) is admissible on \({\mathbb {R}}^d\), the optimal value function V(x) satisfies \(V(x)={\mathcal {J}}(x,u^*)\), and \(0<V(x)\le V(x;u)\).

Proof

The proof takes advantage, in part, from the verification of an analogous result in the unconstrained, see eg. [38], where a finite horizon problem is treated. Let \(x\in \Omega\) be arbitrary and fixed, and choose any \(T>0\). Then we have

Since \(\lim _{T\rightarrow \infty }y(T;u)=0\) by (iii), and due to V(0;u)=0, we can take the limit \(T\rightarrow \infty\) in this equation to obtain

where \(y=y(t;u)\) on the right hand side of the above equation. Adding both sides of \({\mathcal {J}}(x,u)=\int _{0}^{\infty } (\ell (y)+\left\Vert u\right\Vert ^2_R)\; dt\) to the respective sides of (2.12), we obtain

and from (2.10) we conclude that \(V(x;u)={\mathcal {J}}(x,u)\) for all \(x\in \Omega\).

Let us next consider the optimal feedback law \(u^*(x)\). We need to show that it is admissible in the sense of Definition 1. By Assumption 1 and (2.5) it is continuous on \({\mathbb {R}}^d\). Moreover \(V(0)=0\), \(V(x)> 0\) for all \(0\ne x\in \Omega\), thus \(\nabla V(0) = 0\), and consequently \(u^*(0)=0\). Here we also use that \(0\in U\). Thus (i) and (ii) of Definition 1 are satisfied for \(u^*\) and (iv) follows from our general assumption that (2.1) has a solution for every \(x\in \Omega\). To verify (iii), note that from (2.11), (2.5), and (2.6) we have for every \(T>0\)

Thus \(T \rightarrow V(y(T;u^*))\) is strictly monotonically decreasing, (unless \(y(T;u^*)=0\) for some T). Thus \(\lim _{T\rightarrow \infty } V(y(T;u^*))= \epsilon\) for some \(\epsilon \ge 0\). If \(\lim _{T\rightarrow \infty } y(T;u^*) \ne 0\), then there exists \(\epsilon >0\) such that \(\epsilon \le V(y(T;u^*)) \le V(x)\) for all \(T\ge 0\). Let \(S=\{z \in {\mathbb {R}}^d: \epsilon \le V(z) \le V(x)\}\). Due to continuity of V the set S is closed. The radial unboundedness assumption on V further implies that S is bounded and thus it is compact. Let us set

Then by (2.6) we have

By compactness of S we have \(\max _{z\in S} \dot{V}(z)=:\zeta <0\). Note that \(\{T: y(T;u^*)\}\subset S\). Hence by (2.13) we find

which is impossible. Hence \(\lim _{T\rightarrow \infty } y(T;u^*) =0\) and \(u^* \in {\mathcal {A}}(\Omega )\).

Now we can apply the arguments from the first part of the proof with \(u=u^*\) and obtain \(V(x)={\mathcal {J}}(x,u^*) \le {\mathcal {J}}(x,u)=V(x;u)\) for every \(u\in {\mathcal {A}}(\Omega )\). This concludes the proof. \(\square\)

2.2 Convergence of policy iteration

The previous lemma establishes the fact that the value \({\mathcal {J}}(x,u)\), for a given admissible control u, and \(x\in \Omega\) can be obtained as the evaluation of the solution of the GHJB equation (2.10). In the following lemma we commence the analysis of the convergence of Algorithm 1.

Proposition 1

If \(u^{(0)} \in {\mathcal {A}}(\Omega )\), then \(u^{(i)}\in {\mathcal {A}}(\Omega )\) for all i. Moreover we have \(V(x)\le V^{(i+1)}(x)\le V^{(i)}(x)\). Further, \(\{V^{(i)}(x)\}\) converges from above pointwise to some \({\bar{V}}(x) \ge V(x)\) in \(\Omega\).

Proof

We proceed by induction. Given \(u^{(0)} \in {\mathcal {A}}(\Omega )\), we assume that \(u^{(i)}\in {\mathcal {A}}(\Omega )\), and establish that \(u^{(i+1)}\in {\mathcal {A}}(\Omega )\). We shall frequently refer to Lemma 1 with \(V^{(i)}:=V(\cdot ;u^i)\) and \(V^{(i)}\) as in (2.8). In view of (2.9) \(u^{(i+1)}\) is continuous since g is continuous and \(V^{(i)}\in C^1(\Omega )\). Using Lemma 1 we obtain that \(V^{(i)}\) is positive definite. Hence it attains its minimum at the origin, \(\nabla V^{(i)}(0)=0\) and consequently \(u^{(i+1)}(0)=0\). Thus (i) and (ii) in the definition of admissibility of \(u^{(i+1)}\) are established.

Next we take the time-derivative of \(t\rightarrow V^{(i)}(y(u^{(i+1)})(t)\) where \(y(u^{(i+1)})\) is the trajectory corresponding to

Let us recall that \({\nabla V^{(i)}(y)}^tf(y)=-{\nabla V^{(i)}(y)}^tg(y)u^{(i)}(y)-\ell (y)-\left\Vert u^{(i)}(y)\right\Vert ^2_{R}\), for \(y\in {\mathbb {R}}^d\) and set \(y^{(i+1)}= y(u^{(i+1)})\). Then we find

Throughout the following computation we do not indicate the dependence on t on the right hand side of the equality. Next we need to rearrange the terms on the right hand side of the last expression. For this purpose it will be convenient to introduce \(z=\frac{1}{2}R^{-1} g(y^{(i+1)})^t{\nabla V^{(i)}(y^{(i+1)})}\) and observe that \(u^{(i+1)}={\mathcal {P}}_{U}(-z)\). We can express the above equality as

Since \(u^{(i)}(y^{(i+1)})\in U\) we obtain \((z+ {\mathcal {P}}_{U}(-z))^tR({\mathcal {P}}_{U}(-z)-u^{(i)}(y^{(i+1)})) \le 0\), and thus

Hence \(t\rightarrow V^{(i)}(y^{(i+1)}(t))\) is strictly monotonically decreasing. As mentioned above, \(V^{i}\) is positive definite. With the arguments as in the last part of the proof of Lemma 1 it follows that \(\lim _{t\rightarrow \infty } y^{(i+1)}(t)=\lim _{t\rightarrow \infty }y(t;u^{(i+1)})=0\). Finally (2.15) implies that \(0\le \int _{0}^{\infty }\Big (\ell (y(t;u^{(i+1)}))+\Vert u^{(i+1)}(y^{(i+1)})(t)\Vert _R^2\Big )\, dt\le V^{(i)}(x)\). Since \(x\in \Omega\) was chosen arbitrarily it follows that \(u^{(i+1)}\) defined in (2.9) is admissible. Lemma 1 further implies that \(V^{(i+1)}(x) \ge V(x)\) on \(\Omega\).

Since for each \(x\in \Omega\) the trajectory \(y^{(i+1)}\) corresponding to \(u^{(i+1)}\) and satisfying

is asymptotically stable, the difference between \(V^{(i+1)}(x)\) and \(V^{(i)}(x)\) can be obtained as

where f and g are evaluated at \(y^{(i+1)}\). Utilizing the generalized HJB equation (2.8), we get \({\nabla V^{(i)}(y^{(i+1)})}^t(f+gu^{(i+1)})={\nabla V^{(i)}(y^{(i+1)})}^tg(u^{(i+1)}-u^{(i)})-(\ell +\left\Vert u^{(i)}\right\Vert ^2_R)\) and \({\nabla V^{(i+1)}(y^{(i+1)})}^t(f+gu^{(i+1)})=-(\ell +\left\Vert u^{(i+1)}\right\Vert ^2_R)\). This leads to

The last two terms in the above integrand appeared in (2.14) and were estimated in the subsequent steps. We can reuse this estimate and obtain

Hence, \(\{V^{(i)}\}\) is a monotonically decreasing sequence which is bounded below by the optimal value function V, see Lemma 1. Since \(\{V^{(i)}\}\) is a monotonically decreasing sequence and bounded below by V, it converges pointwise to some \({\bar{V}} \ge V\). \(\square\)

To show convergence of \(u^{(i)}\) to \({\bar{u}} : = {\mathcal {P}}_{U}(-\frac{1}{2}R^{-1}g(x)^t\nabla {\bar{V}}(x))\), additional assumptions are needed. This is considered in the following proposition. In the literature, for the unconstrained case, one can find the statement that, based on Dini’s theorem, the monotonically convergent sequence \(\{V^{(i)}\}\) converges uniformly to \({\bar{V}}\), if \(\Omega\) is compact. This, however, only holds true, once it is argued that \({\bar{V}}\) is continuous. For the following it will be useful to recall that \(C^m({\bar{\Omega }})\), \(m\in {\mathbb {N}}_0\), consists of all functions \(\phi \in C^m(\Omega )\) such that \(D^{\alpha }\phi\) is bounded and uniformly continuous on \(\Omega\) for all multi-index \(\alpha\) with \(0\le \alpha \le m\), see e.g. [3, pg. 10].

Proposition 2

If \(\Omega\) is bounded, and further \(\{V^{(i)}\}\subset C^1(\Omega )\) satisfy (2.8), \({\bar{V}}\in C^1(\Omega )\cap C({\bar{\Omega }})\), and \(\{\nabla V^{(i)}\}\) is equicontinuous in \(\Omega\), then \(\{\nabla V^{(i)}\}\) converges pointwise to \(\nabla {\bar{V}}\) in \(\Omega\), and \({\bar{V}}\) satisfies the HJB equation (2.6) for all \(x\in \Omega\) with \({\bar{V}}(x)=V(x)\) for all \(x\in \Omega\).

Proof

Let \(x\in \Omega\) and \(\epsilon >0\) be arbitrary. Denote by \(e_k\) the k-th unit vectors, \(k=1,\ldots ,d,\) and choose \(\delta _1>0\) such that \(S_{x}=\{x+h:|h| \le \delta _1\}\;\subset \Omega\). By continuity of \(\nabla {\bar{V}}\) and equicontinuity of \(\nabla V^{(i)}\) in \(\Omega\), there exists \(\delta \in (0,\delta _1)\) such

with for all h with \(|h|\le \delta\), \(k=\{1,\ldots ,d\}\), \(i=1,2,\ldots\).

By assumption \(\Omega\) is bounded and thus \({\bar{\Omega }}\) is compact. Hence by Dini’s theorem \(V^{(i)}\) converges to \({\bar{V}}\) uniformly on \({\bar{\Omega }}\). Here we use the assumption that \({\bar{V}}\in C({\bar{\Omega }})\). We can now choose \({\bar{i}}>0\) such that

We have

We estimate \(I_1\) and \(I_3\) by using (2.17) as

and

We estimate \(I_2\) by using (2.18)

and combining with the estimates for \(I_1\) and \(I_3\), we obtain

Since \(x\in \Omega\) was arbitrary this implies that

It then follows from (2.9) that

and by (2.8)

For uniqueness of value function \({\bar{V}}=V\), we refer to [22, pg. 86] and [6, Chapter III]. \(\square\)

While the assumptions of Proposition 2 describe a sufficient conditions for pointwise convergence of \(\nabla V^{(i)}\), it appears to be a challenging open issue to check them in practice.

Remark 3

To relax the \(C^1\) smoothness requirement in the deterministic case, the theory of viscosity solution has to be used. It is an interesting question, to analyse policy iteration in this context even without control constraints.

The present analysis uses the convexity assumption on U in an essential manner. The case of constraints expressed by nonconvex U is another open issue.

3 Nonlinear \({\mathcal {H}}_2\) control subject to stochastic system

3.1 Second order Hamilton–Jacobi–Bellman equation

Here we consider the stochastic infinite horizon optimal control problem

subject to the nonlinear stochastic dynamical constraint

where \(y(t)\in {\mathbb {R}}^d\) is the state vector, \(W(t)\in {\mathbb {R}}^k\) is a standard multi-dimensional separable Wiener process defined on a complete probability space, and the control input \(u(\cdot )\in {\mathcal {U}}\) is an adapted process with respect to a natural filter. The functions \(f,\,g,\) and \(g_1\) are assumed to be Lipschitz continuous on \({\mathbb {R}}^d\), and satisfy \(f(0)=0\), \(g(0)=0\) and \(g_1(0)=0\). For details about existence and uniqueness for (3.2), we refer to e.g. [36, Chapter 2]. As in the previous section \(\Omega\) denotes a domain in \({\mathbb {R}}^d\) containing the origin.

The value function \(V(x)=\underset{u(\cdot )\in U}{\inf }{\mathcal {J}}(x,u(\cdot ))\) is assumed to be \(C^2\)- smooth over \({\mathbb {R}}^d\). Then the HJB equation corresponding to (3.1) and (3.2) is of the form

From the verification theorem [22, Theorem 3.1], the explicit minimizer \(u^*\) of (3.3) is given by

where the projection \({\mathcal {P}}_{U}\) is defined as in (2.5). The infinitesimal differential generator of the stochastic system (3.2) for the optimal pair (u, V) (dropping the superscript *) is denoted by

Using the optimal control law (3.4) in (3.3), we obtain an equivalent form of the HJB equation

For the unconstrained control, the above HJB equation becomes

The associated generalized second order GHJB equation is of the form

We next define the admissible feedback controls with respect to stochastic set up.

Definition 2

(Admissible Controls). A control u is defined to be admissible with respect to (3.1), denoted by \(u \in {\mathcal {A}}(\Omega )\) if

-

(i)

u is continuous on \({\mathbb {R}}^m\),

-

(ii)

\(u(0)=0\),

-

(iii)

u stabilizes (3.2) on \(\Omega\) stochastically, i.e. \(P(\lim _{t\rightarrow \infty }y(t;u)=0)=1, \quad \forall \ x\in \Omega\) i.e. when \({\mathbb {E}}(\lim _{t\rightarrow \infty }y(t;u))=0\).

-

(iv)

\({\mathbb {E}}\int \limits _0^\infty \Big (\ell (y(t;u))+\Vert u(y(t;u))\Vert _R^2\Big )\, dt < \infty , \quad \forall \; x\in \Omega\).

In Algorithm 2, the policy iteration for the second order HJB equation is documented, see [41].

Lemma 2

Assume that \(u(\cdot )\) is an admissible feedback control in \(\Omega\). If there exist a function \(V(\cdot ;u)\in C^2({\mathbb {R}}^d)\) satisfying

then V(x; u) is the value function of the system (3.2) and \(V(x;u)=J(x,u)\) \(\forall x\in \Omega\). Moreover, the optimal control law \(u^*(x)\) is admissible and the optimal value function \(V(x;u^*)\), if it exists and is radial unbounded, satisfies \(V(x;u^*)={\mathcal {J}}(x,u^*)\), and \(0<V(x;u^*)\le V(x;u)\).

Proof

By Itô’s formula [36, Theorem 6.4] for any \(T>0\)

Taking the expectation with limit as \(T\rightarrow \infty\) on both sides, the above equation becomes

where \({\mathbb {E}}\int _{0}^{t}\nabla V(y;u)^tg_1(y)\;dW=0\) for each time \(t\ge 0\), with \({\mathbb {E}}(\lim _{T\rightarrow \infty }V(y(T);u))=0\). Adding \({\mathcal {J}}(x,u)={\mathbb {E}}\int _{0}^{\infty } (\ell (y)+\left\Vert u(y)\right\Vert ^2_R)\; dt\) to (3.11), we obtain

Hence \(V(x;u)={\mathcal {J}}(x,u)\) for all \(x\in \Omega\).

Concerning the admissibility of \(u^*\), properties (i), (ii) and (iv) in Definition 2, can be shown similarly as in Lemma 1. Since the stochastic Lyapunov functional V is radially unbounded by assumption, and its differential generator \({\mathcal {L}}_{u^*}V\) satisfies \({\mathcal {L}}_{u^*}V(x)=-(\ell (x)+\left\Vert u^*\right\Vert ^2_R)\) as well as

one can follow the standard argument given in Theorem [36, Theorem 2.3-2.4] to obtain \(P(\lim _{t\rightarrow \infty }y(t;u^*)=0)=1, \quad \forall \ x\in \Omega\) and hence \(u^*\in {\mathcal {A}}(\Omega )\). \(\square\)

3.2 Convergence of policy iteration

Here we turn to the convergence analysis of the iterates \(V^{(i)}\).

Proposition 3

If \(u^{(0)} \in {\mathcal {A}}(\Omega )\), then \(u^{(i)}\in {\mathcal {A}}(\Omega )\) for all i. Further we have \(V(x)\le V^{(i+1)}(x)\le V^{(i)}(x)\), where V satisfies the HJB equation (3.5). Moreover \(\{V^{(i)}\}\) converges pointwise to some \({\bar{V}}\ge V\) in \(\Omega\).

Proof

First we show that if \(u^{(i)}\in {\mathcal {A}}(\Omega )\), then \(u^{(i+1)}\in {\mathcal {A}}(\Omega )\). As g is continuous and \(V^{(i)}\in C^1(\Omega )\), we have \(u^{(i+1)}\) is continuous by (3.9). Since \(V^{(i)}\) is positive definite, it attains a minimum at the origin and hence \(\nabla V^{(i)}(0)=0\) and consequently \(u^{(i+1)}(0)=0\). The infinitesimal generator \({\mathcal {L}}_uV(y)\) evaluated along the trajectories generated by \(u^{(i+1)}\) becomes

where \({\nabla V^{(i)}}^tf+\frac{1}{2}Tr[g_1^t\frac{\partial ^2 V^{(i)}}{\partial y^2}g_1]=-{\nabla V^{(i)}}^tgu^{(i)}-\ell (y)-\left\Vert u^{(i)}\right\Vert ^2_{R}\).

Now we can calculate \({\mathcal {L}}_uV^{(i)}(y;u^{(i+1)})\) as in Proposition 1 to show that \({\mathcal {L}}_uV^{(i)}(y;u^{(i+1)})<0\). By the stochastic Lyapunov theorem [36, Theorem 2.4], it follows that \(P(\lim _{t\rightarrow \infty }y(t;u^{(i+1)})=0)=1\), i.e. \({\mathbb {E}}(\lim _{t\rightarrow \infty }y(t;u^{i+1}))=0\). Since \({\mathcal {L}}_uV^{(i)}(y;u^{(i+1)})<0\), we find

and thus \(u^{(i+1)}\) is admissible.

Using that \(dy= \Big (f(y)+g(y)u^{(i)}\Big )dt+g_1(y)\quad dW\) is asymptotically stable for all i, by Itô’s formula along the trajectory

the difference \(V^{(i+1)}(x)-V^{(i)}(x)\) can be obtained as

Using the generalized HJB equation (3.8) for two consecutive iterations (i) and \((i+1)\), we have \(\frac{1}{2}Tr[g_1^t\frac{\partial ^2 V^{(i)}}{\partial y^2}g_1]=-{\nabla V^{(i)}}^t(f+gu^{(i)})-(\ell +\left\Vert u^{(i)}\right\Vert ^2_R)\) and \({\nabla V^{(i+1)}}^t(f+gu^{(i+1)})+\frac{1}{2}Tr[g_1^t\frac{\partial ^2 V^{(i+1)}}{\partial y^2}g_1]=-(\ell +\left\Vert u^{(i+1)}\right\Vert ^2_R)\) respectively. Applying \(u^{(i+1)}(y)={\mathcal {P}}\Big (-\frac{1}{2}R^{-1}g^t\nabla V^{(i)}(y))\Big )\), we obtain finally

where \(A-B\) can be calculated as in Proposition 1 to obtain \(V^{(i+1)}(x)\le V^{(i)}(x)\). Further, \(V(x)\le V^{(i+1)}(x)\). Therefore, \(\{V^{(i)}\}\) converges pointwise to some \({\bar{V}}\). \(\square\)

Now, in addition if \(\Omega\) is compact and \({\bar{V}}\) is continuous, then by Dini’s theorem \(\{V^{(i)}\}\) converges uniformly to \({\bar{V}}\).

Proposition 4

Let \(\Omega\) be a bounded domain. If \(\{V^{(i)}\}\in C^2(\Omega )\) satisfy (3.8), \({\bar{V}}\in C^2(\Omega )\cap C^1({\bar{\Omega }})\), and \(\{\nabla ^{m}V^{(i)}\}\) is equicontinuous, then \(\{\nabla ^{m}V^{(i)}\}\) converges pointwise to \(\nabla ^{m}{\bar{V}}\) in \(\Omega\), for \(m=1\), 2, and \({\bar{V}}\) satisfies the HJB equation (3.5) with \({\bar{V}}=V\).

Proof

From Proposition 2, it follows that \(\{\nabla V^{(i)}\}\) converges pointwise to \(\nabla {\bar{V}}\) in \(\Omega\) and \({\bar{u}}(x)={\mathcal {P}}_U\Big (-\frac{1}{2}R^{-1}g(x)^t\nabla {\bar{V}}(x)\Big )\). Pointwise convergence of \(\{\nabla ^{2}V^{(i)}\}\) to \(\nabla ^{2}{\bar{V}}\) can be argued as for the first derivatives which was done in the proof of Proposition 2. We can now pass to the limit \(i \rightarrow \infty\) in (3.8) to obtain that \({\bar{V}}\) satisfies the HJB equation (3.5). Concerning the uniqueness of the value function \({\bar{V}}=V\), we refer to e.g. [22, pg. 247]. \(\square\)

4 Numerical examples

Here we conduct numerical experiments to demonstrate the feasibility of the policy iteration in the presence of constraints and to compare the solutions between constrained and unconstrained formulations. To describe a setup which is independent of a stabilizing feedback we also introduce a discount factor \(\lambda >0\). Unless specified otherwise we choose \(\lambda =0.05\), and we also give results with \(\lambda =0\).

4.1 Test 1: One dimensional linear equation

Consider the following minimization problem

subject to the following deterministic dynamics with control constraint

and

subject to the following stochastic system with control constraint

where \(\lambda > 0\) is the discount factor. We solve the GHJB equation (3.8) combining an implicit method and with an upwind scheme over \(\Omega =(-2,2)\) as follows

where the superscript n stands for the iteration loop, the subscript i stands for the mesh point numbering in the state space (\(V_i\approx V(x_i)\) for \(i=1,\ldots , I \quad (\text {total number of grid points})\)), dt is the time step, \(\Delta x=x_{i+1}-x_{i}\) is the distance between equi-spaced grid points, and \(D^2V^n_i=(V^n_{i+1}-2V^n_i+V^n_{i-1})/\Delta x^2\) is the approximation of the second order derivative of the value function. To solve the first order HJB (2.8), we take \(g_1=0\) in (4.3).

For the upwind scheme, we take forward difference \(\nabla V_{i,F}=\frac{V_{i+1}-V_i}{\Delta x}\) whenever the drift of the state variable \(S_{i,F}=f(x_i)+g(x_i)u_{i,F}>0\), and backward difference \(\nabla V_{i,B}=\frac{V_{i}-V_{i-1}}{\Delta x}\) if the drift \(S_{i,B}=f(x_i)+g(x_i)u_{i,B}<0\), and \(D{\bar{V}}_i=-\frac{2R}{g(x_i)}{\bar{u}}_i\) with \({\bar{u}}_i=-f(x_i)/g(x_i)\) for \(S_{i,F}\le 0\le S_{i,B}\). Hence,

where \(\mathbb {1}\) is the characteristic function. The updated control policy for \((n+1)\) iteration becomes

Note that since we solve the HJB backward in time, it is necessary to construct the upwind scheme as above which is in a reverse compared to the form an upwind scheme for dynamics forward in time. For more details about upwind schemes for HJB, see [2] including its appendix.

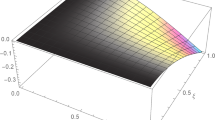

We choose, \(R=0.1\), \(dt=2\), \(I=400\) and two different initial conditions \(x_0=-1.8\) and \(x_0=1\). Figure 1(i) depicts the value functions in the unconstrained and the constrained case. As expected they are convex. Outside \([-1,1]\) the two value functions differ significantly. In the constrained case it tends to infinity at \(\pm 2\) indicating that for such initial conditions the constrained control cannot stabilize anymore. Figure 1(ii), shows the evolution of the states under the effect of the control as shown in Fig. 1(iv). In the transient phase, the decay rate for the constrained control system is slower than in the unconstrained case, which is the expected behavior. The temporal behavior of the running cost of \(\left\Vert y(t)\right\Vert\) is documented in Fig. 1(iii). It is clear that the uncontrolled solution diverges whereas \(\left\Vert y(t)\right\Vert ^2\) tends to zero for the cases with control, both for constrained and unconstrained controls. The pointwise error for the HJB equation (2.6) is documented in Fig. 1(v). Similar behavior is achieved for the stochastic dynamics. It is observed from Fig. 2(i) that even with the small intensity of noise (\(g_1=0.005\)), we can see the Brownian motion of the state cost for the controlled system. Figure 2(ii) plots that error for the value function of the increasing iteration count.

We have also solved this problem with \(\lambda = 0\). For this purpose we initialized the algorithm with the solution of the discounted problem with \(\lambda =0.05\). If the dt was further reduced, then the algorithm converged. Moreover, the value functionals with \(\lambda =0.05\) and \(\lambda =0\) are visually indistinguishable.

The next example focuses on the exclusion of discount factor once we have proper initialization, which is obtained through solving HJB equation with a discount factor.

4.2 Test 2: One dimensional nonlinear equation

We consider the following infinite horizon problem

subject to the following dynamics with control constraint

Here \(\Omega =(-2,2)\), \(dt=0.001\), \(R=0.1\), \(I=400\). To obtain a proper initialization \((u^{(0)},V^{(0)})\), we solve (4.3) with discount factor \(\lambda =0.05\). Now we can solve (2.8) with the HJB initialization using the aforementioned upwind scheme.

Behaviors of the value function, state, control and state cost both for the constrained and unconstrained control systems are documented in Fig. 3(i)–(iv) with a observation of faster decay rate for trajectories in the unconstrained cases.

4.3 Test 3: Three dimensional linear system

Consider the minimization problem

subject to the following 3D linear control system

with \(\sigma =10\), \(\rho =1.1\), and \(\beta =8/3\). System (4.6)-(4.8) arises from linearization of the Lorenz system at (0,0,0). For \(\rho >1\), the equilibrium solution (0, 0, 0) is unstable [39, Chapter 1].

Test 3: Deterministic case, 3D Linear, \(R=0.01\), time step \(dt=10\), initial condition \([x_0,y_0,z_0]=[1,1,1]\). i Control, ii State, iii Running cost \((\left\Vert x(t)\right\Vert ^2+\left\Vert y(t)\right\Vert ^2+\left\Vert z(t)\right\Vert ^2+\left\Vert u_1(t)\right\Vert ^2_{R})+\left\Vert u_2(t)\right\Vert ^2_{R})\), iv) State cost \(\left\Vert x(t)\right\Vert ^2+\left\Vert y(t)\right\Vert ^2+\left\Vert z(t)\right\Vert ^2\)

Figure 4(i) depicts the unconstrained and the constrained controls, where one component of the control constraint is active up to \(t=0.5\), and the other one up to \(t=1\). The first two components of the state of the uncontrolled solution diverge, while the third one converges, see the dotted curves in Fig. 4(ii). For the unconstrained HJB control the state tends to zero with a faster decay rate than for the constrained one. See again Fig. 4(ii). Figure 4(iii)–(iv) document the running and state costs, respectively.

We next turn to the stochastic version of problem (4.5)-(4.8) with noise of intensity .05 added to the second and third components.

Test 3: Stochastic case, 3D linear system, \(R=0.01\), time step \(dt=10\), initial condition \([x_0,y_0,z_0]=[1,1,1]\). i state, ii running cost \((\left\Vert x(t)\right\Vert ^2+\left\Vert y(t)\right\Vert ^2+\left\Vert z(t)\right\Vert ^2+\left\Vert u_1(t)\right\Vert ^2_{R}+\left\Vert u_2(t)\right\Vert ^2_{R})\)

For the stochastic system the constrained and unconstrained states tend to zero with some oscillations, see Fig. 5.

4.4 Test 4: Lorenz system

For the third test, we choose the Lorenz system which appears in weather prediction, for example. This is a nonlinear three dimensional system, with control appearing in the second equation,

where the three parameters \(\sigma >1\), \(\rho >0\) and \(\beta >0\) have physical interpretation. For more details see e.g. [39]. When \(u=0\) in (4.10), we obtain the original uncontrolled Lorenz system. The Lorenz system has 3 steady state solution namely \(C0=(0,0,0)\), \(C^+=\Big (\sqrt{\beta (\rho -1)}, \sqrt{\beta (\rho -1)},\rho -1\Big )\) and \(C^-=\Big (-\sqrt{\beta (\rho -1)}, -\sqrt{\beta (\rho -1)},\rho -1\Big )\). For \(\rho <1\), all steady state solutions are stable. For \(\rho >1\), C0 is always unstable and \(C^\pm\) are stable only for \(\sigma >\beta +1\) and \(1<\rho <\rho ^*=\frac{\sigma (\sigma +\beta +3)}{(\sigma -\beta -1)}\). At \(\rho =\rho ^*\), \(C^\pm\) becomes unstable. Here, we take \(\sigma =10\), \(\beta =8/3\) and \(\rho =2\) so that \(C0=(0,0,0)\) is an unstable equilibrium. We solve the HJB equation over \(\Omega =(-2,2)^3\) with \(dt=0.1\), and \(R=0.01.\)

From Fig. 6(i), it can be observed that the unconstrained HJB control quickly tends to zero whereas the constrained control is active up to \(t\sim 3\) and then converges to zero. Figure 6(ii) shows that in absence of control, the state does not tend to the origin but rather to a stable equilibrium. With HJB-constrained or unconstrained control synthesis, the controlled state tends to the origin, with a faster decay rate for the unconstrained control compared to the constrained one.

Similar numerical results were also obtained in the stochastic case.

Remark 4

Although we have not seen any oscillations in control around the neighborhood of the equilibrium solution by numerically solving the HJB equation, in general one may proceed as follows to overcome the oscillations in control, if they arise in the case of non-unique solutions of u

-

1.

Increase the diagonal value in the control tuning matrix R, so that the control effect can be reduced and so oscillation.

-

2.

Another possibility is to reduce the constrained set so that we can observe up to what level constraint can be handled or to avoid kink in the value function, keeping other parameters fixed.

-

3.

Adding a small viscous term to the HJB equation may be useful in some cases to reduce oscillation.

-

4.

Reducing the artificial time step and refining the grid points so that we can solve the HJB equation if the algorithm does not converge.

5 Concluding remarks

We investigated convergence of the policy iteration technique for the stationary HJB equations which arise from the deterministic and stochastic control of dynamical systems in the presence of control constraints. The control constraints are realized as hard constraints by a projection operator rather than by approximation by means of a penalty technique, for example. Numerical examples illustrate the feasibility of the approach and provide a comparison of the behavior of the closed loop controls in the presence of control constraints and without them. The algorithmic realization is based on an upwind scheme.

References

Abu-Khalaf, M., Lewis, F.L.: Nearly optimal control laws for nonlinear systems with saturating actuators using a neural network HJB approach. Autom. J. IFAC 41(5), 779–791 (2005)

Achdou, Y., Han, J., Lasry, J.-M., Lions, P.-L., Moll, B.: Income and wealth distribution in macroeconomics: a continuous-time approach, National Bureau of Economic Research (2017)

Adams, R.A., Fournier, J.J.F.: Sobolev Spaces. Elsevier/Academic Press, Amsterdam (2003)

Alla, A., Falcone, M., Kalise, D.: An efficient policy iteration algorithm for dynamic programming equations. SIAM J. Sci. Comput. 37(1), 181–200 (2015)

Alla, A., Falcone, M., Saluzzi, L.: An efficient DP algorithm on a tree-structure for finite horizon optimal control problems. SIAM J. Sci. Comput. 41, 2384–2406 (2019)

Bardi, M., Capuzzo-Dolcetta, I.: Optimal Control and Viscosity Solutions of Hamilton–Jacobi–Bellman Equations. Birkhäuser, Boston (1997)

Beard, R.W., Saridis, G.N., Wen, J.T.: Galerkin approximation of the generalized Hamilton–Jacobi–Bellman equation. Automatica 33(12), 2159–2177 (1997)

Beard, R.W., Saridis, G.N., Wen, J.T.: Approximate solutions to the time-invariant Hamilton–Jacobi–Bellman equation. J. Optim. Theory Appl. 96(3), 589–626 (1998)

Beard, R.W., Mclain, T.W.: Successive Galerkin approximation algorithms for nonlinear optimal and robust control. Int. J. Control 71(5), 717–743 (1998)

Bokanowski, O., Maroso, S., Zidani, H.: Some convergence results for Howard’s algorithm. SIAM J. Numer. Anal. 47(4), 3001–3026 (2009)

Bokanowski, O., Falcone, M., Sahu, S.: An efficient filtered scheme for some first order time-dependent Hamilton–Jacobi equations. SIAM J. Sci. Comput. 38(1), 171–195 (2016)

Bonnans, J.F., Zidani, H.: Consistency of generalized finite difference schemes for the stochastic HJB equation. SIAM J. Numer. Anal. 41(3), 1008–1021 (2003)

Breiten, T., Kunisch, K., Pfeiffer, L.: Taylor expansions of the value function associated with a bilinear optimal control problem. In Press, Ann. I. H. Poincare-AN (2019)

Cacace, S., Cristiani, E., Falcone, M., Picarelli, A.: A patchy dynamic programming scheme for a class of Hamilton–Jacobi–Bellman equations. SIAM J. Sci. Comput. 34(5), 2625–2649 (2012)

Cannarsa, P., Frankowska, H.: Local regularity of the value function in optimal control. Syst. Control Lett. 62(9), 791–794 (2013)

Capuzzo-Dolcetta, I., Ishii, H.: Approximate solutions of the Bellman equation of deterministic control theory. Appl. Math. Optim. 11(2), 161–181 (1984)

Crandall, M.G., Lions, P.L.: Two approximations of solutions of Hamilton–Jacobi equations. Math. Comput. 43(167), 1–19 (1984)

Dolgov, S., Kalise, D., Kunisch, K.: Tensor decompositions for high-dimensional Hamilton–Jacobi–Bellman equations. arXiv:1908.01533 (2019)

Falcone, M., Ferretti, R.: Semi-Lagrangian Approximation Schemes for Linear and Hamilton-Jacobi Equations. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2014)

Falcone, M., Ferretti, R.: Numerical methods for Hamilton-Jacobi type equations. Handb. Numer. Anal., Elsevier/North-Holland, Amsterdam,17, 603–626 (2016)

Fleming, W.H., Rishel, R.W.: Deterministic and Stochastic Optimal Control. Springer, Berlin (2012)

Fleming, W.H., Soner, H.M.: Controlled Markov Processes and Viscosity Solutions. Springer, Philadelphia (2006)

Garcke, J., Kröner, A.: Suboptimal feedback control of PDEs by solving HJB equations on adaptive sparse grids. J. Sci. Comput. 43, 1–28 (2017)

Goebel, R.: Convex optimal control problems with smooth Hamiltonians. SIAM J. Control Optim. 43(5), 1787–1811 (2005)

Goebel, R., Subbotin, M.: Continuous time linear quadratic regulator with control constraints via convex duality. IEEE Trans. Autom. Control 52(5), 886–892 (2007)

Gonzalez, R., Rofman, E.: On deterministic control problems: an approximation procedure for the optimal cost. I. The stationary problem. SIAM J. Control Optim. 23(2), 242–266 (1985)

Howard, R.: Dynamic Programming and Markov Processes. MIT Press, Cambridge (1960)

Kalise, D., Kunisch, K.: Polynomial approximation of high-dimensional Hamilton–Jacobi–Bellman equations and applications to feedback control of semilinear parabolic PDEs. SIAM J. Sci. Comput. 40(2), 629–652 (2018)

Kravaris, C., Mousavere, D.: Convergence properties of the Newton–Kantorovich iteration for the Hamilton–Jacobi–Bellman equation. In: Proceedings of the 44th IEEE Conference on Decision and Control, and the European Control Conference 2005 Seville, Spain, December 12–15 (2005)

Kröner, A., Kunisch, K., Zidani, H.: Optimal feedback control of undamped wave equations by solving a HJB equation, ESAIM: COCV. EDP Sci. 21(2), 442–464 (2014)

Kushner, H.J., Dupuis, P.: Numerical Methods for Stochastic Control Problems in Continuous Time. Springer, New York, xii+475 (2001)

Kunisch, K., Walter, D.: Semiglobal optimal feedback stabilization of autonomous systems via deep neural network approximation. arXiv:2002.08625v1

Leake, R.J., Liu, R.-W.: Construction of suboptimal control sequences. SIAM J. Control 5(1), 54–63 (1967)

Lukes, D.L.: Optimal regulation of nonlinear dynamical systems. SIAM J. Control 7(1), 75–100 (1969)

Lyshevski, S.E.: Optimal control of nonlinear continuous-time systems: design of bounded controllers via generalized nonquadratic functionals. In: Proceedings of the 1998 American Control Conference. ACC (IEEE Cat. No. 98CH36207) 1, 205–209 (1998)

Mao, X.: Stochastic Differential Equations and Applications. Elsevier, Amsterdam (2007)

Osher, S., Fedkiw, R.: Level set methods and dynamic implicit surfaces. Springer-Verlag, New York, Applied Mathematical Sciences (2003)

Saridis, G.N., Lee, C.S.G.: An approximation theory of optimal control for trainable manipulators. IEEE Trans. Syst. Man Cybern. 9(3), 152–159 (1979)

Sparrow, C.: The Lorenz Equations: Bifurcations, Chaos, and Strange Attractors. Springer, New York (1982)

Stefansson, E., Leong, Y.P.: Sequential alternating least squares for solving high dimensional linear Hamilton–Jacobi–Bellman Equations. In: Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). https://doi.org/10.1109/IROS.2016.7759553 (2016)

Wang, F.Y., Saridis, G.N.: On successive approximation of optimal control of stochastic dynamic systems, Modeling uncertainty, Internat. Ser. Oper. Res. Management Sci., Kluwer Acad. Publ., Boston, MA 46, 333–358 (2002)

Acknowledgements

The authors gratefully acknowledge support by the ERC advanced Grant 668998 (OCLOC) under the EU’s H2020 research program.

Funding

Open access funding provided by University of Graz.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kundu, S., Kunisch, K. Policy iteration for Hamilton–Jacobi–Bellman equations with control constraints. Comput Optim Appl 87, 785–809 (2024). https://doi.org/10.1007/s10589-021-00278-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-021-00278-3

Keywords

- Optimal feedback control

- \({\mathcal {H}}_2\) control synthesis

- Hamilton–Jacobi–Bellman equations

- Policy iteration

- Upwind scheme