Abstract

In this paper, we study the asymptotic properties of regularized least squares with indefinite kernels in reproducing kernel Kreĭn spaces (RKKS). By introducing a bounded hyper-sphere constraint to this non-convex regularized risk minimization problem, we theoretically demonstrate that the problem has a globally optimal solution with a closed form on the sphere, which makes approximation analysis feasible in RKKS. Regarding the original regularizer induced by the indefinite inner product, we modify traditional error decomposition techniques, prove convergence results for the introduced hypothesis error based on matrix perturbation theory, and derive learning rates of this regularized regression problem in RKKS. Under some conditions, the derived learning rates in RKKS are the same as those in reproducing kernel Hilbert spaces (RKHS). To the best of our knowledge, this is the first work on approximation analysis of regularized learning algorithms in RKKS.

1 Introduction

Kernel methods (Schölkopf and Smola 2003; Suykens et al. 2002; Liu et al. 2020) have demonstrated success in statistical learning, such as classification (Zhu and Hastie 2002; Shang et al. 2019), regression (Shi et al. 2019; Farooq and Steinwart 2019), and clustering (Dhillon et al. 2004; Terada and Yamamoto 2019; Liu et al. 2020). The key ingredient of kernel methods is a kernel function that is positive definite (PD) and can be associated with the inner product of two vectors in a reproducing kernel Hilbert space (RKHS). Nevertheless, in real-world applications, the kernels in use might be indefinite (real, symmetric, but not positive definite) (Ying et al. 2009; Loosli et al. 2016; Oglic and Gärtner 2019) due to intrinsic and extrinsic factors. Here, intrinsic means that indefinite kernels often arise from specific domain metrics, such as the tanh kernel (Smola et al. 2001), the TL1 kernel (Huang et al. 2018), the log kernel (Boughorbel et al. 2005), and the hyperbolic kernel (Cho et al. 2019). Meanwhile, extrinsic indicates that some positive definite kernels degenerate to indefinite ones in some cases. An intuitive example is that a linear combination of PD kernels with negative coefficients (Ong et al. 2005) is an indefinite kernel. Polynomial kernels on the unit sphere are not always PD (Pennington et al. 2015). In manifold learning, the Gaussian kernel with some geodesic distances can become indefinite. In neural networks, the sigmoid kernel is indefinite for most values of its hyper-parameters (Ong et al. 2004). We refer to a survey (Schleif and Tino 2015) for details.

Efforts on indefinite kernels are often based on a reproducing kernel Kreĭn space (RKKS) (Ong et al. 2004; Loosli et al. 2016; Alabdulmohsin et al. 2016; Saha and Palaniappan 2020), which is endowed with an indefinite inner product. The (reproducing) indefinite kernel associated with RKKS can be decomposed as the difference between two PD kernels, a.k.a. positive decomposition (Bognár 1974). The related optimization problem is often non-convex due to the non-positive definiteness of the indefinite kernel. Since the indefinite inner product in RKKS does not define a norm, most previous works on RKKS (Ong et al. 2004; Loosli et al. 2016; Saha and Palaniappan 2020) focus on stabilization instead of the risk minimization used in RKHS. Here stabilization aims at finding a stationary point (more precisely, a saddle point) instead of a minimum. Interestingly, stabilization in RKKS and minimization in RKHS can be linked together in a projection view (Ando 2009). In this sense, the indefinite inner product in RKKS can still serve as a valid regularization mechanism in a projection view (Loosli et al. 2016). It is worth noting that, recently, Oglic and Gärtner (2018, 2019) directly consider empirical risk minimization in RKKS restricted to a hyper-sphere, which is demonstrated to generalize well.

In learning theory, the asymptotic behavior of these regularized indefinite kernel learning algorithms in RKKS has not been fully investigated from an approximation theory view. Current literature (Wu et al. 2006; Steinwart et al. 2009; Lin et al. 2017; Jun et al. 2019) on approximation analysis often focuses on regularized methods in RKHS, but these results cannot be directly applied to RKKS for the following two reasons. First, approximation analysis in RKHS often requires a (globally) optimal solution yielded by the learning algorithm, whereas most indefinite kernel based methods via stabilization in RKKS seek saddle points instead of a minimum. In this case, traditional concentration estimates could be invalid in RKKS. Second, in RKKS, the regularizer endowed by the indefinite inner product might be negative, and thus fails to quantify the complexity of a hypothesis. The classical error decomposition technique (Cucker and Zhou 2007; Lin et al. 2017) might therefore be infeasible in our RKKS setting.

To overcome these two essential problems, in this paper, we study learning rates of least squares regularized regression in RKKS. Motivated by Oglic and Gärtner (2018), we focus on a typical empirical risk minimization problem in RKKS, i.e., indefinite kernel ridge regression in a hyper-sphere region endowed by the indefinite inner product. For this purpose, we provide a detailed error analysis and then derive learning rates. To be specific, on the algorithmic side, we demonstrate that the solution to the considered kernel ridge regression model in RKKS with a spherical constraint is attained on the hyper-sphere. Consequently, albeit non-convex, this model admits a global minimum with a closed form, as demonstrated by Oglic and Gärtner (2018).

We start the analysis from the regularized algorithm that has an analytical solution and take a first step toward understanding the learning behavior in RKKS. In theory, we modify the traditional error decomposition approach, so that the excess error can be bounded by the sample error, the regularization error, and an additional hypothesis error. We provide estimates for the introduced hypothesis error based on matrix perturbation theory for non-Hermitian and non-diagonalizable matrices and then derive convergence rates of the model. Our analysis is able to bridge the gap between the least squares regularized regression problem in RKHS and in RKKS. Under some conditions, the derived learning rates in RKKS are the same as those in RKHS (the best case). To the best of our knowledge, this is the first work to study learning rates of regularized risk minimization in RKKS.

The rest of the paper is organized as follows. In Sect. 2, we briefly introduce the basic concepts of Kreĭn spaces and RKKS. Section 3 presents the problem setting and main results under some fair assumptions. In Sect. 4, we present the least squares regularized regression model in RKKS and give a globally optimal solution to aid the proof. In Sect. 5, we give the framework of convergence analysis for the modified error decomposition technique, detail the estimates for the introduced hypothesis error, and derive the learning rates. In Sect. 6, we report numerical experiments to demonstrate our theoretical results and the conclusion is drawn in Sect. 7.

2 Preliminaries

In this section, we briefly introduce the definitions and basic properties of Kreĭn spaces and the reproducing kernel Kreĭn space (RKKS) that we shall need later. Detailed expositions can be found in the book by Bognár (1974).

We begin with a vector space \({{\mathcal {H}}_{\mathcal{K}}}\) defined on the scalar field \({\mathbb {R}}\).

Definition 1

(Inner product space) An inner product space is a vector space \({{\mathcal {H}}_{\mathcal{K}}}\) defined on the scalar field \({\mathbb {R}}\) together with a bilinear form \(\langle \cdot , \cdot \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\), called the inner product, that satisfies the following conditions:

- i) symmetry: \(\forall f,g \in {{\mathcal {H}}_{\mathcal{K}}}\), we have \(\langle f, g \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} = \langle g,f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\).

- ii) linearity: \(\forall f,g,h \in {{\mathcal {H}}_{\mathcal{K}}}\) and two scalars \(a,b \in {\mathbb {R}}\), we have \(\langle af+bg, h \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} = a\langle f, h \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} + b\langle g, h \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}.\)

- iii) non-degeneracy: for \(f \in {{\mathcal {H}}_{\mathcal{K}}}\), if \(\langle f, g \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} = 0\) for all \(g \in {{\mathcal {H}}_{\mathcal{K}}}\), then \(f=0\).

If \(\langle f, f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} > 0\) holds for any \(f \in {{\mathcal {H}}_{\mathcal{K}}}\) with \(f \ne 0\), then the inner product on \({{\mathcal {H}}_{\mathcal{K}}}\) is called positive. If there exist \(f,g \in {{\mathcal {H}}_{\mathcal{K}}}\) such that \(\langle f, f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} > 0\) and \(\langle g, g \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} < 0\), then the inner product is called indefinite, and \({{\mathcal {H}}_{\mathcal{K}}}\) is an indefinite inner product space. Recall that Hilbert spaces satisfy the above conditions and admit a positive inner product. Having reviewed the indefinite inner product, we are ready to introduce the definition of a Kreĭn space.

Definition 2

(Kreĭn space, Bognár 1974) The vector space \({{\mathcal {H}}_{\mathcal{K}}}\) with the inner product \(\langle \cdot , \cdot \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) is a Kreĭn space if there exist two Hilbert spaces \({\mathcal {H}}_{+}\) and \({\mathcal {H}}_{-}\) such that

- i) the vector space \({{\mathcal {H}}_{\mathcal{K}}}\) admits a direct orthogonal sum decomposition \({{\mathcal {H}}_{\mathcal{K}}} = {\mathcal {H}}_{+} \oplus {\mathcal {H}}_{-}\).

- ii) all \(f \in {{\mathcal {H}}_{\mathcal{K}}}\) can be decomposed into \(f=f_{+}+f_{-}\), where \(f_{+} \in {\mathcal {H}}_{+}\) and \(f_{-} \in {\mathcal {H}}_{-}\), respectively.

- iii) \(\forall f,g \in {{\mathcal {H}}_{\mathcal{K}}}\), \(\langle f,g\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}=\langle f_{+},g_{+}\rangle _{{\mathcal {H}}_{+}} - \langle f_{-},g_{-}\rangle _{{\mathcal {H}}_{-}}.\)

From the definition, the decomposition \({{\mathcal {H}}_{\mathcal{K}}} = {\mathcal {H}}_{+} \oplus {\mathcal {H}}_{-}\) is not necessarily unique. For a fixed decomposition, the inner product \(\langle f, g \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) is given accordingly (Loosli et al. 2016; Oglic and Gärtner 2018). Kreĭn spaces are indefinite inner product spaces endowed with a Hilbertian topology. The key difference with Hilbert spaces is that the inner products might be negative for Kreĭn spaces, i.e., there exists \(f \in {{\mathcal {H}}_{\mathcal{K}}}\) such that \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}} < 0\). If \({\mathcal {H}}_{+}\) and \({\mathcal {H}}_{-}\) are two RKHSs, the Kreĭn space \({{\mathcal {H}}_{\mathcal{K}}}\) is a RKKS associated with a unique indefinite reproducing kernel k such that the reproducing property holds, i.e., \(\forall f \in {{\mathcal {H}}_{\mathcal{K}}},~f(x) = \langle f,k(x,\cdot ) \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\).
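To make the sign structure of Definition 2 concrete, the following minimal sketch uses a toy finite-dimensional Kreĭn space. The choice of \({\mathbb {R}}^3\), the split into a two-dimensional \({\mathcal {H}}_{+}\) and a one-dimensional \({\mathcal {H}}_{-}\), and the names `J` and `krein_inner` are assumptions made purely for illustration.

```python
import numpy as np

# Hypothetical toy Krein space: R^3 = H_+ (+) H_-, where H_+ is spanned by
# the first two coordinates and H_- by the last one (illustrative assumption).
J = np.diag([1.0, 1.0, -1.0])  # +1 on H_+, -1 on H_-


def krein_inner(f, g):
    """Indefinite inner product <f_+, g_+> - <f_-, g_->."""
    return float(f @ J @ g)


f = np.array([1.0, 0.0, 0.0])  # lies in H_+
g = np.array([0.0, 0.0, 2.0])  # lies in H_-
h = np.array([1.0, 0.0, 1.0])  # mixes both parts

print(krein_inner(f, f))  # 1.0  (positive)
print(krein_inner(g, g))  # -4.0 (negative)
print(krein_inner(h, h))  # 0.0  (h is nonzero, yet its self-product vanishes)
```

The last line illustrates why \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) cannot serve as a squared norm: a nonzero element may have zero or negative self-product.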

Proposition 1

(Positive decomposition, Bognár 1974) An indefinite kernel k associated with a RKKS admits a positive decomposition \(k = k_{+} - k_{-}\), with two positive definite kernels \(k_{+}\) and \(k_{-}\).

Typical examples include a wide range of commonly used indefinite kernels, such as a linear combination of PD kernels (with negative coefficients) (Ong et al. 2005; Oglic and Gärtner 2018), and conditionally PD kernels (Schaback 1999; Wendland 2004). It is important to note that not every indefinite kernel function admits such a positive decomposition as a difference between two positive definite kernels. Nevertheless, the decomposition can always be carried out on finite discrete spaces, e.g., by eigenvalue decomposition of indefinite kernel matrices. In fact, whether a given indefinite kernel can be associated with RKKS remains a long-standing open question. For example, the hyperbolic kernel (Cho et al. 2019) is based on the hyperboloid model (Sala et al. 2018), in which the distance between two points is defined as the length of the geodesic path on the hyperboloid that connects the two points. Although the hyperboloid space stems from a finite-dimensional Kreĭn space, it is unclear whether the derived hyperbolic kernel is associated with RKKS or not. Besides, the existence of a positive decomposition for the TL1 kernel (Huang et al. 2018), defined by the truncated \(\ell _1\) distance, is also unknown. Our results in this paper are based on RKKS, and can be applied to these kernels if they can be associated with RKKS.
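The finite-sample version of the positive decomposition mentioned above can be sketched as follows. The example kernel (a difference of two Gaussian kernels, i.e., a linear combination of PD kernels with a negative coefficient), the bandwidths, the random data, and the helper names `gaussian_gram` and `positive_decomposition` are all illustrative assumptions, not part of the analysis.

```python
import numpy as np


def gaussian_gram(X, bandwidth):
    """Gram matrix of the Gaussian kernel exp(-||x - z||^2 / (2 * bandwidth^2))."""
    sq_norms = np.sum(X**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def positive_decomposition(K):
    """Split a symmetric matrix as K = K_plus - K_minus with both parts PSD."""
    sigma, V = np.linalg.eigh(0.5 * (K + K.T))
    K_plus = (V * np.maximum(sigma, 0.0)) @ V.T    # keeps positive eigenvalues
    K_minus = (V * np.maximum(-sigma, 0.0)) @ V.T  # flips negative eigenvalues
    return K_plus, K_minus, sigma


rng = np.random.default_rng(0)
X = rng.normal(size=(40, 3))

# Difference of two PD kernels: the diagonal is zero, so the trace vanishes
# and the (nonzero) matrix must have eigenvalues of both signs.
K = gaussian_gram(X, 1.0) - gaussian_gram(X, 0.5)
K_plus, K_minus, sigma = positive_decomposition(K)

print("indefinite:", sigma.min() < 0 < sigma.max())
print("reconstruction error:", np.abs(K - (K_plus - K_minus)).max())
```

This only decomposes the kernel matrix on a given sample; it says nothing about whether the underlying kernel function admits a positive decomposition on all of \(X\).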

Definition 3

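(Associated RKHS of RKKS (Ong et al. 2004)) Let \({{{\mathcal {H}}_{\mathcal{K}}}}\) be a RKKS with the direct orthogonal sum decomposition into two RKHSs \({{\mathcal {H}}_{+}}\) and \({{\mathcal {H}}_{-}}\). Then the associated RKHS \({{\mathcal {H}}_{{\bar{\mathcal{K}}}}}\) endowed by \({{{\mathcal {H}}_{\mathcal{K}}}}\) is defined with the positive inner product

\[ \langle f,g\rangle _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}} :=\langle f_{+},g_{+}\rangle _{{\mathcal {H}}_{+}} + \langle f_{-},g_{-}\rangle _{{\mathcal {H}}_{-}}, \quad \forall f,g \in {{\mathcal {H}}_{\mathcal{K}}}. \]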

Note that \({{\mathcal {H}}_{{\bar{\mathcal{K}}}}}\) is the smallest Hilbert space majorizing the RKKS \({{\mathcal {H}}_{\mathcal{K}}}\) with \(| \langle f,f\rangle _{{{{\mathcal {H}}_{\mathcal{K}}}}} | \le \Vert f\Vert _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}^2 = \Vert f_{+}\Vert _{{\mathcal {H}}_{+}}^2 + \Vert f_{-}\Vert _{{\mathcal {H}}_{-}}^2\). Denote by C(X) the space of continuous functions on X with the norm \(\Vert \cdot \Vert _{\infty }\), and suppose that \(\kappa :=\sqrt{2}\sup _{\varvec{x} \in X} \sqrt{k_{+}(\varvec{x}, \varvec{x}) + k_{-}(\varvec{x}, \varvec{x})} < \infty\). The reproducing property in RKKS indicates that \(\forall f \in {{\mathcal {H}}_{\mathcal{K}}}\), we have

Definition 4

(The empirical covariance operator in RKKS (Pȩkalska and Haasdonk 2009)) Let k be an indefinite kernel associated with a RKKS \({{{\mathcal {H}}_{\mathcal{K}}}}\), \(\psi : X \rightarrow {{\mathcal {H}}_{\mathcal{K}}}\) be a mapping of the data in \({{{\mathcal {H}}_{\mathcal{K}}}}\) and \(\varvec{\varPsi }= [\psi (\varvec{x}_1), \psi (\varvec{x}_2), \ldots , \psi (\varvec{x}_m)]\) be a sequence of images of the training data in \({{{\mathcal {H}}_{\mathcal{K}}}}\), then its empirical non-centered covariance operator \(T: {{{\mathcal {H}}_{\mathcal{K}}}} \rightarrow {{{\mathcal {H}}_{\mathcal{K}}}}\) is defined by

which is not positive definite in the Hilbert sense, but it is in the Kreĭn sense satisfying \(\langle \zeta , T \zeta \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} \ge 0\) for \(\zeta \ne 0\).

The operator T actually depends on the sample set and can be linked to an empirical kernel (Guo and Shi 2019). In our paper, we choose the mapping \(\psi (\varvec{x}) : = k(\varvec{x}, \cdot )\) to obtain the empirical covariance operator T. Since \(\langle f, T f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) is nonnegative, we use it as a regularizer to aid our proof.

3 Problem setting and main results

In this section, we introduce our problem setting and present our results under some fair assumptions.

3.1 Problem setting

Let X be a compact metric space and \(Y \subseteq {\mathbb {R}}\). We assume that a sample set \(\varvec{z} = \{ (\varvec{x}_i, y_i) \}_{i=1}^m \in Z^m\) is drawn from a non-degenerate Borel probability measure \(\rho\) on \(X \times Y\). In the context of statistical learning theory, the target function of \(\rho\) is defined by \(f_{\rho }(\varvec{x}) = \int _Y y \mathrm {d} \rho (y|\varvec{x}), \varvec{x} \in X\), where \(\rho (\cdot |\varvec{x})\) is the conditional distribution of \(\rho\) at \(\varvec{x} \in X\). The indefinite kernel function \(k: X \times X \rightarrow {\mathbb {R}}\) is associated with the RKKS \({{\mathcal {H}}_{\mathcal{K}}}\). The associated indefinite kernel matrix on the sample set is given by \(\varvec{K} = [k(\varvec{x}_i, \varvec{x}_j)]_{i,j=1}^m\). The goal of a supervised learning task in RKKS endowed by k is to find a hypothesis \(f: X \rightarrow Y\) such that \(f(\varvec{x})\) is a good approximation of the label \(y \in Y\) corresponding to a new instance \(\varvec{x} \in X\).

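Motivated by Oglic and Gärtner (2018), we consider the least squares regularized regression problem in a bounded region induced by the original regularization mechanism of RKKS

\[ f_{\varvec{z},\lambda } :={{\,\mathrm{argmin}\,}}_{f \in {\mathcal {B}} (r)} \left\{ \frac{1}{m} \sum _{i=1}^{m} \big (f(\varvec{x}_i) - y_i \big )^2 + \lambda \langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}} \right\} , \tag{3} \]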

where \({\mathcal {B}} (r) :=\left\{ f \in {\text {span}}\{ k(\varvec{x}_1,\cdot ), k(\varvec{x}_2,\cdot ), \ldots , k(\varvec{x}_m,\cdot ) \}: \frac{1}{m} \sum _{i=1}^{m} \left( f(\varvec{x}_i) \right) ^2 \le r^2 \right\}\) is the set of functions spanned by the training data \(\{ \varvec{x}_i \}_{i=1}^m\) in \({{\mathcal {H}}_{\mathcal{K}}}\) and contained in a bounded hyper-sphere. This setting is also used in Ong et al. (2004). Here we employ the original regularization mechanism of RKKS, which aims to understand the learning behavior in RKKS and avoids the inconsistency caused by regularizers spanned by different spaces. Our result can in fact be applied to other settings with different regularizers. Following Oglic and Gärtner (2018), we consider a risk minimization problem in a hyper-sphere instead of stabilization. The hyper-spherical constraint in RKKS prevents the objective value in problem (3) from becoming unbounded, which would yield a meaningless solution. In practice, the radius r can be chosen by cross validation or hyper-parameter optimization (Oglic and Gärtner 2018); such a radius is also naturally needed and common in classical approximation analysis in RKHS (Wu et al. 2006; Cucker and Zhou 2007; Steinwart et al. 2009). This risk minimization problem still preserves the specifics of learning in RKKS, i.e., there exist points \(f \in {\mathcal {B}} (r)\) such that \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}} < 0\), and it generalizes well when compared to stabilization, as indicated by Oglic and Gärtner (2018). One main reason why we consider the risk minimization problem is that stabilization in RKKS does not necessarily have a unique saddle point, which makes it infeasible for approximation analysis to define the concentration of empirical hypotheses around a target hypothesis. Conversely, the studied risk minimization in a hyper-sphere, problem (3), leads to a (globally) optimal solution. This nice result motivates us to take a first step toward understanding the learning behavior in RKKS.

3.2 Main results

In this section, we state and discuss our main results. To illustrate our analysis, we need the following notations and assumptions.

In the least squares regression problem, the expected (quadratic) risk is defined as \({\mathcal {E}}(f) = \int _Z (f(\varvec{x}) - y)^2 \mathrm {d} \rho\). The empirical risk functional is defined on the sample \(\varvec{z}\), i.e., \({\mathcal {E}}_{\varvec{z}}(f) = \frac{1}{m} \sum _{i=1}^{m} \big (f( \varvec{x}_i) - y_i \big )^2\). To measure the estimation quality of \(f_{\varvec{z}, \lambda }\), one natural way in approximation theory is the excess risk: \({\mathcal {E}}(f_{\varvec{z}, \lambda }) - {\mathcal {E}}(f_{\rho })\).

Assumption 1

(Existence and boundedness of \(f_{\rho }\)) We assume that the target function \(f_{\rho } \in {{\mathcal {H}}_{\mathcal{K}}}\) exists and is bounded, i.e., there exists a constant \(M^* \ge 1\) such that

\[ |f_{\rho }(\varvec{x})| \le M^*, \quad \varvec{x} \in X. \]

Remark

This is a standard assumption in approximation analysis (Cucker and Zhou 2007; Lin et al. 2017; Rudi and Rosasco 2017). Here we remark that the existence of \(f_{\rho }\) implies that a bounded hyper-sphere region is needed, e.g., the radius r used in problem (3). In fact, the existence of \(f_{\rho }\) is not ensured if we consider a potentially infinite dimensional RKKS \({{\mathcal {H}}_{\mathcal{K}}}\), possibly universal (Steinwart and Andreas 2008). Instead, in approximation analysis, the infinite dimensional RKKS is substituted by a finite one, i.e., \({\mathcal {H}}_{{\mathcal {K}}}^r = \{ f \in {{\mathcal {H}}_{\mathcal{K}}}: \Vert f \Vert \le r \}\) with r fixed a priori, where the norm \(\Vert f \Vert\) is defined in some associated Hilbert space, e.g., \({{\mathcal {H}}_{{\bar{\mathcal{K}}}}}\), or through the non-negative inner product \(\langle f, T f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) given by the empirical covariance operator. In this case, a minimizer of the risk \({\mathcal {E}}\) always exists, but r is fixed a priori and \({\mathcal {H}}_{{\mathcal {K}}}^r\) cannot be universal. As a result, assuming the existence of \(f_{\rho }\) implies that \(f_{\rho }\) belongs to a ball of radius \(r_{\rho , {{\mathcal {H}}_{\mathcal{K}}}}\). This is why the spherical constraint is indeed taken into account in approximation analysis.

For a tighter bound, we need the following projection operator.

Definition 5

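(Projection operator (Chen et al. 2004)) For \(B > 0\), the projection operator \(\pi :=\pi _{B}\) is defined on the space of measurable functions \(f: X \rightarrow {\mathbb {R}}\) as

\[ \pi _B(t) = {\left\{ \begin{array}{ll} B, &{} \text {if } t > B, \\ t, &{} \text {if } -B \le t \le B, \\ -B, &{} \text {if } t < -B, \end{array}\right. } \]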

and then the projection of f is denoted as \(\pi _B(f)(\varvec{x}) = \pi _B(f(\varvec{x}))\).

The projection operator is beneficial to the \(\Vert \cdot \Vert _{\infty }\)-bounds for sharp estimation. Besides, we consider the standard output assumption \(|y| \le M\), and then we have \({\mathcal {E}}_{\varvec{z}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) \le {\mathcal {E}}_{\varvec{z}}\big (f_{\varvec{z},\lambda }\big )\). So it is more accurate to estimate \(f_{\rho }\) by \(\pi _{M^*}(f_{\varvec{z},\lambda })\) instead of \(f_{\varvec{z},\lambda }\). Therefore, our approximation analysis attempts to bound the error \(\Vert \pi _{M^*}(f_{\varvec{z},\lambda }) - f_{\rho } \Vert ^2_{L_{\rho _X}^{p^*}}\) in the space \({L_{\rho _X}^{p^*}}\) with some \(p^*>0\), where \(L^{p^*}_{\rho _{{X}}}\) is a weighted \(L^{p^*}\)-space with the norm \(\Vert f\Vert _{L^{p^*}_{\rho _{{X}}}} = \Big ( \int _{{X}} |f(\varvec{x})|^{p^*} \mathrm {d} \rho _{X}(\varvec{x}) \Big )^{1/{p^*}}\). Specifically, in our analysis, the excess error is exactly the distance in \(L_{\rho _X}^{2}\) due to the strong convexity of the squared loss.
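The effect of the projection on the empirical risk can be checked numerically. The sketch below assumes the usual truncation reading of \(\pi _B\) (values clipped to \([-B, B]\)) and takes \(B = M\); the synthetic labels, the noisy stand-in predictions, and the names `project` and `empirical_risk` are hypothetical.

```python
import numpy as np


def project(values, B):
    """Truncate predictions to the interval [-B, B]."""
    return np.clip(values, -B, B)


def empirical_risk(predictions, y):
    """Mean squared error (1/m) * sum_i (f(x_i) - y_i)^2."""
    return float(np.mean((predictions - y) ** 2))


rng = np.random.default_rng(0)
M = 1.0
y = rng.uniform(-M, M, size=200)                   # labels with |y| <= M
predictions = y + rng.normal(scale=2.0, size=200)  # hypothetical values f(x_i)

risk_raw = empirical_risk(predictions, y)
risk_projected = empirical_risk(project(predictions, M), y)
print(risk_raw, risk_projected)
```

Since every label lies in \([-M, M]\), clipping a prediction to that interval can only move it closer to its label, so the projected risk is never larger than the raw one.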

To derive the learning rates, we need to consider the approximation ability of \({{\mathcal {H}}_{\mathcal{K}}}\) with respect to its capacity and \(f_{\rho }\) in \(L_{\rho _X}^{2}\). Since the original regularizer \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) in RKKS fails to quantify the complexity of a hypothesis, here we use the empirical regularizer \(\langle f, T f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) in Definition 4 as an alternative. Note that other RKHS regularizers, such as \(\langle f,f \rangle _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}\) in Definition 3, are also acceptable, but \(\langle f, Tf \rangle _{{{{\mathcal {H}}_{\mathcal{K}}}}}\) results in elegant and concise theoretical results. Accordingly, the approximation ability of \({{\mathcal {H}}_{\mathcal{K}}}\) can be characterised by the regularization error.

Assumption 2

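(Regularity condition) The regularization error of \({{\mathcal {H}}_{\mathcal{K}}}\) is defined as

\[ D(\lambda ) = \inf _{f \in {{\mathcal {H}}_{\mathcal{K}}}} \Big \{ {\mathcal {E}}(f) - {\mathcal {E}}(f_{\rho }) + \lambda \langle f, T f \rangle _{{{\mathcal {H}}_{\mathcal{K}}}} \Big \}. \]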

The target function \(f_{\rho }\) can be approximated by \({{\mathcal {H}}_{\mathcal{K}}}\) with exponent \(0 < \beta \le 1\) if there exists a constant \(C_0\) such that

\[ D(\lambda ) \le C_0 \lambda ^{\beta }, \quad \forall \lambda > 0. \tag{5} \]

Remark

This is a natural assumption and approximation theory requires it, e.g., Wu et al. (2006); Wang and Zhou (2011); Steinwart and Andreas (2008). Note that \(\beta =1\) is the best choice as we expect, which is equivalent to \(f_{\rho } \in {{\mathcal {H}}_{\mathcal{K}}}\) when \({{\mathcal {H}}_{\mathcal{K}}}\) is dense.

Furthermore, to quantitatively understand how the complexity of \({{\mathcal {H}}_{\mathcal{K}}}\) affects the learning ability of algorithm (3), we need the capacity (roughly speaking the “size”) of \({{\mathcal {H}}_{\mathcal{K}}}\) measured by covering numbers.

Definition 6

(Covering numbers (Cucker and Zhou 2007; Shi et al. 2019)) For a subset Q of C(X) and \(\epsilon > 0\), the covering number \({\mathscr {N}}(Q, \epsilon )\) is the minimal integer \(l \in {\mathbb {N}}\) such that there exist l disks with radius \(\epsilon\) covering Q.

In this paper, the covering numbers of balls are defined by

as subsets of \(L^{\infty }(X)\). Note that R in Eq. (6) and r in problem (3) satisfy \(R=Cr\) for some positive constant C, as the definition of the non-negative inner product leads to a hyper-sphere with a different radius. Hence using R or r makes no difference in our analysis, and we directly use R for convenience.

Assumption 3

(Capacity) We assume that there exist \(s>0\) and \(C_s>0\) such that

Remark

This is a standard assumption to measure the capacity of \({{\mathcal {H}}_{\mathcal{K}}}\), following that of RKHS (Cucker and Zhou 2007; Wang and Zhou 2011; Shi et al. 2019). When X is bounded in \({\mathbb {R}}^d\) and \(k \in C^{\tau }(X \times X)\), Eq. (7) always holds true with \(s=\frac{2d}{\tau }\). In particular, if \(k \in C^{\infty }(X \times X)\), Eq. (7) is still valid for an arbitrarily small \(s>0\).

Note that the capacity of a RKHS can also be measured by the eigenvalue decay of the PSD kernel matrix, which has been fully studied, e.g., Steinwart and Andreas (2008), Bach (2013). A small RKHS indicates a fast eigenvalue decay, which leads to a promising prediction performance. In other words, functions in the RKHS are potentially smoother than necessary, which corresponds to an arbitrarily small s in Assumption 3. Nevertheless, the eigenvalue decay of the indefinite kernel matrix has not been studied before due to the extra negative eigenvalues. By virtue of the eigenvalue decomposition \(\varvec{K} = \varvec{K}_{+} - \varvec{K}_{-}\) with two PSD matrices \(\varvec{K}_{\pm }\), we can easily make the assumption for \(\varvec{K}\) based on the eigenvalue decay of \(\varvec{K}_{\pm }\).

Assumption 4

(Eigenvalue assumption for indefinite kernel matrices) Suppose that the indefinite kernel matrix \(\varvec{K} = \varvec{V} \varvec{\varSigma }\varvec{V}^{\!\top }\) has p positive eigenvalues, q negative eigenvalues, and \(m-p-q\) zero eigenvalues, i.e., \(\varvec{\varSigma }= \varvec{\varSigma }_{+} + \varvec{\varSigma }_{-}\), where \(\varvec{\varSigma }_{+} = {{\,\mathrm{diag}\,}}( \sigma _1, \sigma _2, \ldots , \sigma _{p}, 0,\ldots , 0)\), \(\varvec{\varSigma }_{-} = {{\,\mathrm{diag}\,}}(0,\ldots ,0, \sigma _{m-q+1}, \ldots , \sigma _{m})\) with the decreasing order \(\sigma _1 \ge \cdots \ge \sigma _p> 0 > \sigma _{m-q+1} \ge \cdots \ge \sigma _{m}\) and \(\sigma _{p+1} = \sigma _{p+2} = \cdots = \sigma _{m-q} = 0\). Here we assume that its largest (positive) eigenvalue satisfies \(\sigma _1 \ge c_1 m^{\eta _1}\) with \(c_1>0\), \(\eta _1 > 0\) and its smallest (negative) eigenvalue satisfies \(\sigma _m \le c_m m^{\eta _2}\) with \(c_m<0\), \(\eta _2 > 0\). We denote \(\eta :=\min \{ \eta _1, \eta _2 \}\).

Remark

Our assumption only requires a lower bound on the largest (positive) eigenvalue and an upper bound on the smallest (negative) eigenvalue, which is weaker than the common decay assumptions on a PSD kernel matrix, e.g., polynomial/exponential decay. In particular, if we take these common eigenvalue decays for \(\varvec{K}_{\pm }\), then our assumption on \(\sigma _1\) and \(\sigma _m\) is naturally satisfied. To be specific, Bach (2013) considers three eigenvalue decays of a PSD kernel matrix, including i) the exponential decay \(\sigma _i \propto m e^{-ci}\) with \(c > 0\), ii) the polynomial decay \(\sigma _i \propto m i^{-2t}\) with \(t \ge 1\), and iii) the slowest decay with \(\sigma _i \propto m /i\). Hence, under any of these three eigenvalue decays of \(\varvec{K}_{\pm }\), our assumption \(\sigma _1 \ge c_1 m^{\eta _1}\) and \(\sigma _m \le c_m m^{\eta _2}\) always holds. Moreover, although the number of positive/negative eigenvalues depends on the sample set, our theoretical results are independent of the unknown p and q.
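The quantities appearing in Assumption 4 can be inspected numerically for a given kernel matrix. The following sketch is only illustrative: the difference-of-Gaussians kernel, the bandwidths, the random data, the tolerance used to call an eigenvalue zero, and the helper names `gaussian_gram` and `eigen_signature` are all assumptions. It reports p, q, and the ratios \(\sigma _1/m\) and \(\sigma _m/m\) for growing m.

```python
import numpy as np


def gaussian_gram(X, bandwidth):
    """Gram matrix of the Gaussian kernel exp(-||x - z||^2 / (2 * bandwidth^2))."""
    sq_norms = np.sum(X**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def eigen_signature(K, tol=1e-10):
    """Return (p, q, n_zero, sigma) with sigma sorted in decreasing order."""
    sigma = np.linalg.eigvalsh(0.5 * (K + K.T))[::-1]
    threshold = tol * np.abs(sigma).max()  # relative cutoff for "zero"
    p = int(np.sum(sigma > threshold))     # number of positive eigenvalues
    q = int(np.sum(sigma < -threshold))    # number of negative eigenvalues
    return p, q, len(sigma) - p - q, sigma


rng = np.random.default_rng(0)
for m in (50, 100, 200):
    X = rng.normal(size=(m, 3))
    # Hypothetical indefinite kernel: difference of two Gaussian kernels.
    K = gaussian_gram(X, 1.0) - gaussian_gram(X, 0.5)
    p, q, n_zero, sigma = eigen_signature(K)
    print(m, p, q, n_zero, sigma[0] / m, sigma[-1] / m)
```

If the printed ratios stay bounded away from zero as m grows, this is consistent with exponents close to 1 on this toy problem; it is an empirical check on one sample, not a verification of the assumption.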

Formally, our main result about least squares regularized regression in RKKS is stated as follows.

Theorem 1

Suppose that \(|f_{\rho }(x)|\le M^*\) with \(M^* \ge 1\) in Assumption 1, \(\rho\) satisfies the condition in Eq. (5) with \(0 < \beta \le 1\) in Assumption 2, and the indefinite kernel matrix \(\varvec{K}\) satisfies the eigenvalue assumption in Assumption 4 with \(\eta = \min \{ \eta _1, \eta _2 \} > 0\). Assume that Assumption 3 holds for some \(s>0\), and take \(\lambda :=m^{-\gamma }\) with \(0 < \gamma \le 1\). Let

Then for \(0<\delta <1\) with confidence \(1-\delta\), when \(\gamma + \eta > 1\), we have

where \(\widetilde{C}\) is a constant independent of m or \(\delta\) and the power index \(\varTheta\) is

where \(\eta\) is further restricted by \(\max \{0, 1-2/s\}< \eta < 1\) for a positive \(\varTheta\), i.e., a valid learning rate.

We hence directly have the following corollary that corresponds to learning rates in RKHS.

Corollary 1

(Link to learning rates in RKHS) If \(\eta :=\min \{ \eta _1, \eta _2 \} \ge 1\) in Assumption 4, the power index \(\varTheta\) in Eq. (9) can be simplified as

which is actually the learning rate for least squares regularized regression in RKHS, independent of \(\eta\).

Remark

We provide learning rates in RKKS in Theorem 1 and also demonstrate the relation of the derived learning rates between RKKS and RKHS in Corollary 1. We make the following remarks.

- i):

-

In Theorem 1, our results choose \(\lambda :=m^{-\gamma }\) and the radius R (or r) is implicit in Eq. (9). The estimation for R depends on a bound for \(\lambda \langle f_{\varvec{z},\lambda } ,Tf_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\), see Lemma 3 for details. Note that s can be arbitrarily small when the kernel k is \(C^{\infty }(X \times X)\). In this case, \(\varTheta\) in Eq. (9) can be arbitrarily close to \(\min ({\gamma \beta }, \gamma + \eta -1)\).

- ii):

-

Corollary 1 derives the learning rates in RKHS, which recovers the result of Wang and Zhou (2011) for least squares in RKHS. That is, when \(\beta =1\) and s is small enough, the derived learning rate in Corollary 1 can be arbitrarily close to 1, and hence is optimal (Wang and Zhou 2011).

- iii):

-

Based on Theorem 1 and Corollary 1, we find that if \(\eta :=\min \{ \eta _1, \eta _2 \} \ge 1\), our analysis for RKKS is the same as that in RKHS. This is the best case. However, if \(\eta < 1\), the derived learning rate in RKKS demonstrated by Eq. (9) is not faster than that in RKHS. This is reasonable since the spanning space of RKKS is larger than that of RKHS.

The proof of Theorem 1 is fairly technical and lengthy, and we briefly sketch some main ideas in the next section.

Furthermore, if problem (3) uses a nonnegative regularizer, such as \(\Vert f\Vert _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}^2\) in Definition 3 or \(\langle f,Tf\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) in Definition 4, the analysis is simplified. To be specific, denote by \(\overline{f_{\varvec{z},\lambda }} :={{\,\mathrm{argmin}\,}}_{f \in {\mathcal {B}} (r)} \left\{ \frac{1}{m} \sum _{i=1}^{m} \big (f(\varvec{x}_i) - y_i \big )^2 + \lambda \Vert f\Vert _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}^2 \right\}\) the estimator considered by Oglic and Gärtner (2018). Its learning rate is given by the following corollary.

Corollary 2

Under the same assumptions as Theorem 1 (without the eigenvalue assumption), by defining the regularization error as

satisfying \(D'(\lambda ) \le C'_0\lambda ^{\beta '}\) with a constant \(C'_0\) and \(\beta ' \in (0,1]\), we have

where \(\widetilde{C}'\) is a constant independent of m or \(\delta\) and the power index \(\varTheta '\) is defined as in Eq. (10) with \(\beta\) replaced by \(\beta '\).

Remark

In fact, Corollary 2 gives the convergence rates of the model in Oglic and Gärtner (2018).

Note that the learning rates are affected by the choice of regularizer, as indicated by the regularization error in Assumption 2. In Table 1 we summarize the learning rates of problem (3) with different non-negative regularizers. Although the Hilbert space norms generated by different decompositions of the Kreĭn space are topologically equivalent (Langer 1962), the derived learning rates are not guaranteed to be the same because of their respective spanning/solving spaces. Besides, Oglic and Gärtner (2019) demonstrate that stabilization of the support vector machine (SVM) in RKKS can be transformed into a risk minimization problem with a PSD kernel matrix by taking the absolute value of the negative eigenvalues of the original indefinite one. This means that stabilization of SVM in RKKS can achieve the same convergence behavior as risk minimization with a PSD kernel matrix in RKHS, e.g., Steinwart and Scovel (2007). Accordingly, the considered problem (3), i.e., risk minimization in RKKS with the original regularizer induced by the indefinite inner product, is general. The results in Theorem 1 cover the worst case, and can be improved to the same learning rates as other settings, e.g., least squares in RKHS, or minimization in RKKS with non-negative regularizers.
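The spectrum transformation mentioned above (taking the absolute value of the negative eigenvalues) can be illustrated in a few lines. This is only a minimal sketch on synthetic data: the random inputs, the difference-of-Gaussians toy kernel, and the names `K_flip`, `min_eig_original` and `min_eig_flipped` are assumptions made for illustration and are not taken from Oglic and Gärtner (2019).

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 3))  # synthetic inputs (assumption)
sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)

# Difference of two Gaussian kernels: zero diagonal, hence zero trace,
# so the nonzero symmetric matrix K must have eigenvalues of both signs.
K = np.exp(-sq_dist / 1.0) - np.exp(-sq_dist / 0.1)

# Eigendecomposition K = V diag(sigma) V^T, then flip negative eigenvalues.
sigma, V = np.linalg.eigh(K)
K_flip = (V * np.abs(sigma)) @ V.T

min_eig_original = sigma.min()
min_eig_flipped = np.linalg.eigvalsh(K_flip).min()
print(min_eig_original, min_eig_flipped)
```

The flipped matrix shares the eigenvectors of the original one, and its smallest eigenvalue is nonnegative up to rounding error, so it can be used wherever a PSD kernel matrix is required.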

4 Solution to regularized least-squares in RKKS

In this section, we study the optimization problem (3) and obtain a globally optimal solution to aid our analysis.

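By virtue of \(f=\sum _{i=1}^m \alpha _i k(\varvec{x}_i,\cdot )\), problem (3) can be formulated as

\[ \min _{\varvec{\alpha } \in {\mathbb {R}}^m} \frac{1}{m} \Vert \varvec{K} \varvec{\alpha }- \varvec{y} \Vert _2^2 + \lambda \varvec{\alpha }^{\!\top } \varvec{K} \varvec{\alpha } \quad \text{s.t.} \quad \varvec{\alpha }^{\!\top } \varvec{K}^2 \varvec{\alpha }\le mr^2 , \tag{11} \]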

where the output is \(\varvec{y}=[y_1, y_2, \ldots , y_m]^{\!\top }\). The above regularized risk minimization problem is in essence non-convex because \(\varvec{K}\) is not positive definite. More precisely, problem (11) is non-convex when \(\frac{1}{m} \varvec{K}^2 + \lambda \varvec{K}\) is indefinite. This condition always holds in practice due to \(m \gg \lambda\). Following Oglic and Gärtner (2018), we do not strictly distinguish between these two conditions in this paper, because approximation analysis considers the case \(m \rightarrow \infty\) and \(\lambda \rightarrow 0\), so the latter condition holds whenever m is large enough. Even if \(\frac{1}{m} \varvec{K}^2 + \lambda \varvec{K}\) is PSD, our analysis for problem (11) is still applicable and reduces to a special case (i.e., using an RKHS regularizer), whose learning rates are given by Corollary 2.

To obtain a global minimum of problem (11), we need the following proposition.

Proposition 2

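Problem (11) is equivalent to

\[ \min _{\varvec{\alpha } \in {\mathbb {R}}^m} \frac{1}{m} \Vert \varvec{K} \varvec{\alpha }- \varvec{y} \Vert _2^2 + \lambda \varvec{\alpha }^{\!\top } \varvec{K} \varvec{\alpha } \quad \text{s.t.} \quad \varvec{\alpha }^{\!\top } \varvec{K}^2 \varvec{\alpha }= mr^2 . \tag{12} \]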

Proof

Denote the objective function in problem (11) as \(F(\varvec{\alpha }) = \frac{1}{m} \Vert \varvec{K} \varvec{\alpha }- \varvec{y} \Vert _2^2 + \lambda \varvec{\alpha }^{\!\top } \varvec{K} \varvec{\alpha }\). We aim to prove that the solution \(\varvec{\alpha }^* :={{\,\mathrm{argmin}\,}}_{\varvec{\alpha }} F(\varvec{\alpha })\) of the corresponding unconstrained optimization problem is unbounded. Since \(\frac{1}{m} \varvec{K}^2 + \lambda \varvec{K}\) is not positive definite, there exists an initial solution \(\varvec{\alpha }_0\) such that

By constructing a solving sequence \(\{ \varvec{\alpha }_i \}_{i=0}^{\infty }\) admitting \(\varvec{\alpha }_{i+1} :=c \varvec{\alpha }_{i}\) with \(c > 1\), we have

which indicates that, after the t-th iteration, \({F}(\varvec{\alpha }_t)< c^t {F} (\varvec{\alpha }_0) < 0\) and \(\Vert \varvec{\alpha }_t\Vert _2 = c^t \Vert \varvec{\alpha }_0 \Vert _2\) with \(c>1\). Therefore, the minimum \(F(\varvec{\alpha }^*)\) is unbounded and tends to negative infinity. In this case, \(\Vert \varvec{\alpha }^* \Vert _2\) also approaches infinity, which yields a meaningless solution. Based on the above analysis, after introducing the constraint \(\varvec{\alpha }^{\!\top } \varvec{K}^2 \varvec{\alpha }\le mr^2\) into problem \(\min _{\varvec{\alpha }} F(\varvec{\alpha })\), the solution is attained on the hyper-sphere, i.e., \(\varvec{\alpha }^{\!\top } \varvec{K}^2 \varvec{\alpha }= mr^2\), which concludes the proof. \(\square\)
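The scaling argument in the proof can be checked numerically. The following is a minimal sketch on a toy problem, not the setting of the experiments: the spectrum, the random orthogonal basis, the value of \(\lambda\) and the scaling factors are all assumptions chosen so that \(\frac{1}{m} \varvec{K}^2 + \lambda \varvec{K}\) has a direction of negative curvature.

```python
import numpy as np

rng = np.random.default_rng(0)
m, lam = 5, 1.0

# Toy indefinite kernel matrix K = V diag(sigma) V^T (hypothetical spectrum).
V, _ = np.linalg.qr(rng.standard_normal((m, m)))
sigma = np.array([2.0, 1.0, 0.5, -0.1, -0.3])
K = (V * sigma) @ V.T
y = rng.standard_normal(m)


def F(alpha):
    """Unconstrained objective (1/m)||K alpha - y||^2 + lam * alpha^T K alpha."""
    resid = K @ alpha - y
    return resid @ resid / m + lam * alpha @ K @ alpha


# Eigenvector for sigma = -0.3: curvature is 0.09 / 5 - 0.3 < 0.
v = V[:, -1]
curvature = v @ (K @ K / m + lam * K) @ v

# Scaling alpha along v drives the objective towards minus infinity.
values = [F(c * v) for c in (10.0, 100.0, 1000.0)]
print(curvature, values)
```

Along such a direction the objective decreases without bound while \(\Vert \varvec{\alpha }\Vert _2\) grows, which is why the bounded hyper-sphere constraint is needed for a meaningful minimizer.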

As demonstrated by Proposition 2, the inequality constraint in problem (11) can be transformed into an equality constraint, which also applies to problem (3). Then, albeit non-convex, problem (12) can be formulated as a constrained eigenvalue problem (Gander et al. 1988; Oglic and Gärtner 2018), yielding an optimal solution in closed form. Accordingly, the optimal solution \(\varvec{\alpha }_{\varvec{z}, \lambda }\) is given by

where the notation \((\cdot )^{\dag }\) denotes the pseudo-inverse, \(\varvec{I}\) is the identity matrix, and \({\mu }\) is the smallest real eigenvalue of the following non-Hermitian matrix

where \(\varvec{K}^{\dag }\) is the pseudo-inverse of \(\varvec{K}\), i.e. \(\varvec{K}^{\dag } = \varvec{V} {{\,\mathrm{diag}\,}}\big ( \varvec{\varSigma }_1, \varvec{0}_{m-p-q}, \varvec{\varSigma }_2 \big ) \varvec{V}^{\!\top }\) with two invertible diagonal matrices

It is clear that we cannot directly calculate \(\mu\). However, \(\mu\) is very important in our analysis and thus we attempt to estimate it based on matrix perturbation theory (Stewart and Sun 1990). We will detail this in Sect. 5.
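As a complementary illustration, the sphere-constrained problem (12) can also be solved numerically by diagonalizing \(\varvec{K}\) and locating a Lagrange multiplier by bisection on a secular equation. This swaps in a standard technique for quadratic minimization on a sphere and is not the constrained eigenvalue derivation used in the paper; no exact correspondence between the multiplier `nu` below and \(\mu\) is claimed. The names `solve_on_sphere` and `objective`, the toy spectrum and all parameter values are assumptions, and the sketch assumes the generic case in which \(\varvec{y}\) has a nonzero component along the eigenvector attaining the smallest value of \(1/m + \lambda /\sigma _i\).

```python
import numpy as np


def solve_on_sphere(K, y, lam, r, tol=1e-10):
    """Minimise (1/m)||K a - y||^2 + lam * a^T K a  s.t.  a^T K^2 a = m r^2."""
    m = len(y)
    sigma, V = np.linalg.eigh(K)
    keep = np.abs(sigma) > tol * np.abs(sigma).max()  # nonzero spectrum only
    sigma, V = sigma[keep], V[:, keep]
    c = V.T @ y                 # coordinates of y in the eigenbasis
    a = 1.0 / m + lam / sigma   # diagonal quadratic form in w = V^T K alpha
    target = m * r ** 2

    def phi(nu):
        """Squared norm of the stationary point w(nu) = c / (m (a - nu))."""
        return np.sum((c / (m * (a - nu))) ** 2)

    # Global optimality requires nu < min(a); phi increases on that interval.
    hi = a.min()
    lo = hi - np.linalg.norm(c) / (m ** 1.5 * r)  # ensures phi(lo) <= target
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid <= lo or mid >= hi:
            break
        if phi(mid) > target:
            hi = mid
        else:
            lo = mid
    nu = lo
    w = c / (m * (a - nu))
    return V @ (w / sigma), nu


def objective(K, y, lam, alpha):
    resid = K @ alpha - y
    return resid @ resid / len(y) + lam * alpha @ K @ alpha


# Toy indefinite kernel matrix with a prescribed spectrum (hypothetical data).
rng = np.random.default_rng(1)
m, lam, r = 8, 0.5, 1.0
Q, _ = np.linalg.qr(rng.standard_normal((m, m)))
spectrum = np.array([3.0, 2.0, 1.0, 0.5, 0.2, -0.1, -0.4, -1.0])
K = (Q * spectrum) @ Q.T
K = (K + K.T) / 2
y = rng.standard_normal(m)

alpha, nu = solve_on_sphere(K, y, lam, r)
print(alpha @ K @ K @ alpha, m * r ** 2)  # both sides of the sphere constraint
```

The starting lower bound of the bisection interval has width \(\Vert \varvec{y} \Vert _2 / (m\sqrt{m}r)\) when \(\varvec{K}\) has full rank, the same quantity that appears in \({\widetilde{\mu }}\) for the nonnegative regularization scheme discussed next.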

Besides, to aid our analysis, we introduce another nonnegative regularization scheme in RKKS to problem (3)

where the empirical covariance operator T is defined in RKKS but nonnegative, see Definition 4. Based on the above regularized risk minimization problem and Eq. (2), the regularizer can be represented as

Accordingly, problem (16) can be formulated as

with \(\widetilde{\varvec{\alpha }_{\varvec{z}, \lambda }} = -\frac{1}{m {\widetilde{\mu }}} \varvec{K}^{\dag } \varvec{y}\), and \({\widetilde{\mu }}\) is the smallest real eigenvalue of the matrix

By Sylvester’s determinant identity, we can directly calculate the largest and smallest real eigenvalues of \(\widetilde{\varvec{G}}\) as \(\frac{\Vert \varvec{y} \Vert _2}{\sqrt{m}mr}\) and \(-\frac{\Vert \varvec{y} \Vert _2}{\sqrt{m}mr}\), respectively, so that \({\widetilde{\mu }} = -\frac{\Vert \varvec{y} \Vert _2}{m\sqrt{m}r} < 0\). Note that the regularizer in problem (16) can also be chosen as another RKHS regularizer, such as \(\langle f,f \rangle _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}\) in Definition 3. However, the empirical kernel regularizer \(\langle f, Tf \rangle _{{{{\mathcal {H}}_{\mathcal{K}}}}}\) yields elegant and concise theoretical results, e.g., \({\widetilde{\mu }}\) can be computed directly.

5 Framework of proofs

In this section, we establish the framework of proofs for Theorem 1. By the modified error decomposition technique in Sect. 5.1, the total error can be decomposed into the regularization error, the sample error, and an additional hypothesis error. We detail the estimates for the hypothesis error in Sect. 5.2. These two points are the main novel elements of the proof. We briefly introduce estimates for the sample error in Sect. 5.3 and derive the learning rates in Sect. 5.4.

5.1 Error decomposition

In order to estimate the error \(\Vert \pi _{M^*}(f_{\varvec{z},\lambda }) - f_{\rho } \Vert\) in the \(L_{\rho _X}^{2}\) space, i.e., to bound \(\Vert \pi _{B}(f_{\varvec{z},\lambda }) - f_{\rho } \Vert\) for any \(B \ge M^*\), we need to estimate the excess error \({\mathcal {E}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) - {\mathcal {E}}(f_{\rho })\), which can be done via an error decomposition technique (Cucker and Zhou 2007). However, since \(\langle f_{\varvec{z},\lambda },f_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) might be negative, traditional techniques are invalid. Our modified error decomposition, which introduces an additional hypothesis error, is given by the following proposition.

Proposition 3

Let \(f_\lambda = {{\,\mathrm{argmin}\,}}_{f \in {{\mathcal {H}}_{\mathcal{K}}}} \Big \{ {\mathcal {E}}(f) - {\mathcal {E}}(f_{\rho }) + \lambda \langle f,Tf\rangle _{{{\mathcal {H}}_{\mathcal{K}}}} \Big \}\), then \({\mathcal {E}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) - {\mathcal {E}}(f_{\rho })\) can be bounded by

where \(D(\lambda )\) is the regularization error defined by Eq. (4). The sample error \(S(\varvec{z}, \lambda )\) is given by

The introduced hypothesis error \(P(\varvec{z}, \lambda )\) is defined by

where \(f_{\varvec{z}, \lambda }\) and \(\widetilde{f_{\varvec{z}, \lambda }}\) are optimal solutions of problem (3) and problem (16), respectively.

Proof

We write \({\mathcal {E}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) - {\mathcal {E}}(f_{\rho }) +\lambda \langle f_{\varvec{z},\lambda } ,Tf_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) as

where we use \({\mathcal {E}}_{\varvec{z}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) \le {\mathcal {E}}_{\varvec{z}}\big (f_{\varvec{z},\lambda }\big )\) in the first inequality, and the second inequality holds by the condition that \(\widetilde{f_{\varvec{z},\lambda }}\) is a global minimizer of problem (16). \(\square\)
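The first inequality in the proof rests on the fact that truncating predictions to \([-B, B]\) cannot increase the empirical squared error when the outputs are bounded by B. The sketch below checks this on synthetic data, assuming that \(\pi _B\) is the usual truncation operator; the data, the noise level and the names `empirical_risk`, `risk_raw` and `risk_clipped` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
B = 1.0
y = rng.uniform(-B, B, size=200)               # outputs bounded by B
f_vals = y + rng.normal(scale=1.5, size=200)   # unbounded predictions f(x_i)


def empirical_risk(pred, target):
    """Empirical least-squares risk (1/m) sum (pred_i - target_i)^2."""
    return float(np.mean((pred - target) ** 2))


risk_raw = empirical_risk(f_vals, y)
risk_clipped = empirical_risk(np.clip(f_vals, -B, B), y)  # pi_B applied pointwise
print(risk_raw, risk_clipped)
```

The inequality holds pointwise: if \(|y_i| \le B\), then moving \(f(\varvec{x}_i)\) to the nearest point of \([-B, B]\) can only bring it closer to \(y_i\).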

It can be seen that the additional hypothesis error stems in essence from the difference between \(\langle f_{\varvec{z},\lambda }, f_{\varvec{z},\lambda }\rangle _{{\mathcal {H}}_{\mathcal {K}}}\)-regularization and \(\langle f_{\varvec{z},\lambda } ,Tf_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\)-regularization. Hence, we estimate the introduced hypothesis error in the next subsection.

5.2 Bound hypothesis error

Since \(\widetilde{f_{\varvec{z}, \lambda }}\) is an optimal solution of problem (16), obviously, we have \(P(\varvec{z}, \lambda ) \ge 0\). To bound the hypothesis error, we need to estimate the objective function value difference of the two learning problems (3) and (17) by the following proposition.

Proposition 4

Suppose that the spectrum of the indefinite kernel matrix \(\varvec{K}\) satisfies Assumption 4, and denote the condition numbers of the two invertible matrices \(\varvec{\varSigma }_1\), \(\varvec{\varSigma }_2\) in Eq. (15) by \(C_1, C_2 < \infty\). When \(\eta + \gamma > 1\) with \(\eta = \min \{ \eta _1, \eta _2 \}\), the hypothesis error defined in Eq. (19) satisfies, with probability 1,

where \(\widetilde{C_1} :=2Mr + 2M^2 \big ( \frac{-c_m}{C_2} + \frac{M^2}{r^2} + \frac{C_{1}}{c_1} \big )\) and the power index is \(\varTheta _1 = \min \big \{ 1, \gamma +\eta -1 \big \}\).

Proof

The proof can be found in Sect. 5.2.3. \(\square\)

Remark

The assumption that the condition numbers of the invertible matrices are finite is mild, as demonstrated by Gao et al. (2015).

Next, we give the proof of Proposition 4. For better presentation, we divide the proof into three parts. In Sect. 5.2.1, we decompose the hypothesis error \(P(\varvec{z}, \lambda )\) into the sum of two terms that depend on \({\mu }\), i.e., the smallest real eigenvalue of a non-Hermitian matrix \(\varvec{G}\) in Eq. (14). We then estimate \({\mu }\) in Sect. 5.2.2, and bound \(P_2(\varvec{z}, \lambda )\) and \(P(\varvec{z},\lambda )\) in Sect. 5.2.3.

5.2.1 Decomposition of hypothesis error

The hypothesis error \(P(\varvec{z}, \lambda )\) can be decomposed into the sum of two parts that depend on \(\mu\) and \({\tilde{\mu }}\) by the following proposition.

Proposition 5

Given the hypothesis error \(P(\varvec{z}, \lambda )\) defined in Eq. (19), it can be decomposed as

where \(P_1(\varvec{z}, \lambda )\) depends on \({\widetilde{\mu }} := -\frac{\Vert \varvec{y} \Vert _2}{m\sqrt{m}r}\) and \(P_2(\varvec{z}, \lambda )\) depends on \({\mu }\), i.e., the smallest real eigenvalue of a non-Hermitian matrix \(\varvec{G}\) in Eq. (14).

Proof

According to the definition of the hypothesis error \(P(\varvec{z}, \lambda )\), we have

where \(f_{\varvec{z}, \lambda }\) and \(\widetilde{f_{\varvec{z}, \lambda }}\) are optimal solutions of problem (3) and problem (16), respectively. Therefore, both of them are attained on the hyper-sphere. Besides, the regularizer term \(\varvec{\alpha }^{\!\top } \varvec{K}^2 \varvec{\alpha } = mr^2\) takes the same value for both solutions and thus cancels out in \(P(\varvec{z}, \lambda )\). Based on this, \(P(\varvec{z}, \lambda )\) can be further represented as

\(\square\)

5.2.2 Estimate \({\mu }\)

To bound \(P(\varvec{z}, \lambda )\), we need to bound \(P_1(\varvec{z}, \lambda )\) and \(P_2(\varvec{z}, \lambda )\) respectively. The estimation for \(P_1(\varvec{z}, \lambda )\) is simple (we will illustrate it in the next subsection). However, \(P_2(\varvec{z}, \lambda )\) involves \({\mu }\), i.e., the smallest real eigenvalue of a non-Hermitian matrix \(\varvec{G}\), which makes the estimation for \(P_2(\varvec{z}, \lambda )\) difficult. For this reason, we present an estimate for \(\mu\) based on matrix perturbation theory (Stewart and Sun 1990).

Typically, there are three classical and well-known perturbation bounds for matrix eigenvalues, including the Bauer–Fike theorem and the Hoffman-Wielandt theorem for diagonalizable matrices (Hoffman and Wielandt 2003), and Weyl’s theorem for Hermitian matrices (Stewart and Sun 1990). However, \(\varvec{G}\) is neither Hermitian nor diagonalizable. To aid our proof, we need the following lemma.

Lemma 1

(Henrici theorem (Chu 1986)) Let \(\varvec{A}\) be an \(m \times m\) matrix with Schur decomposition \(\varvec{Q}^{{H}} \varvec{A} \varvec{Q} = \varvec{D} + \varvec{U}\), where \(\varvec{Q}\) is unitary, \(\varvec{D}\) is a diagonal matrix and \(\varvec{U}\) is a strict upper triangular matrix, with \((\cdot )^{{H}}\) denoting the Hermitian transpose. For each eigenvalue \({\tilde{\sigma }}\) of \(\varvec{A} + {\widetilde{\varDelta }}\), there exists an eigenvalue \(\sigma (\varvec{A})\) of \(\varvec{A}\) such that

where \(b \le m\) is the smallest integer satisfying \(\varvec{U}^b=0\), i.e., the nilpotent index of \(\varvec{U}\).
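To see why a bound involving the nilpotent index b is useful here, it helps to compare a symmetric matrix with a defective one under a perturbation of the same size. The sketch below uses hypothetical 2×2 matrices that are unrelated to \(\varvec{G}\): in the symmetric case the eigenvalues move by at most the spectral norm of the perturbation (Weyl), whereas for a Jordan block with \(\varvec{D} = \varvec{0}\) and \(b = 2\) they move by the square root of the perturbation size.

```python
import numpy as np

eps = 1e-6
E = np.array([[0.0, 0.0], [eps, 0.0]])  # perturbation with spectral norm eps

# Symmetric case: eigenvalues move by at most ||E_sym||_2 = eps / 2 (Weyl).
A_sym = np.array([[1.0, 0.0], [0.0, -1.0]])
E_sym = (E + E.T) / 2
shift_sym = np.max(
    np.abs(np.linalg.eigvalsh(A_sym + E_sym) - np.linalg.eigvalsh(A_sym))
)

# Defective case: A = D + U with D = 0 and U nilpotent of index b = 2.
U = np.array([[0.0, 1.0], [0.0, 0.0]])
shift_jordan = np.max(np.abs(np.linalg.eigvals(U + E)))  # eigenvalues +-sqrt(eps)

print(shift_sym, shift_jordan)
```

For the Jordan block the eigenvalues of the perturbed matrix are \(\pm \sqrt{\epsilon }\), so a perturbation of size \(10^{-6}\) shifts the spectrum by \(10^{-3}\), which is far beyond what bounds for Hermitian matrices would allow.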

Based on the above lemma, \(\mu\) admits the following representation.

Proposition 6

Under the assumption of Proposition 4, as the smallest real eigenvalue of a non-Hermitian matrix \(\varvec{G}\) in Eq. (14), \({\mu }\) admits the following expression

with \(\widetilde{c_a} \in [-1, 0) \bigcup (0,1]\), \(\widetilde{c_b} \in [-1,1]\), \(\widetilde{c_d} \in [0,1]\), and \({\widetilde{\mu }} := -\frac{\Vert \varvec{y} \Vert _2}{m\sqrt{m}r} < 0\).

Proof

The non-Hermitian matrix \(\varvec{G}\) in Eq. (14) can be reformulated as

As a result, \(\varvec{G}\) can be represented as a sum of a block upper triangular matrix \(\varvec{G}_1\) with a non-Hermitian perturbation \(\varvec{G}_2\).

To estimate \(\varvec{G}_1\), by Lemma 1, from the definition of Schur decomposition on \(\varvec{G}_1\), it can be easily verified that \(\varvec{D}\) and \(\varvec{U}\) are

Accordingly, \(\varvec{U}\) is a nilpotent matrix with \(\varvec{U}^2=0\), and thus we have \(b=2\). According to Lemma 1, there exists an eigenvalue of \(\varvec{G}_1\), denoted by \(\sigma (\varvec{G}_1)\), such that

where \(\varsigma\) is given by

Then we consider the following three cases based on the sign of \(\sigma (\varvec{G}_1)\).

Case 1

\(\sigma (\varvec{G}_1) = 0\)

The inequality in Eq. (21) can be formulated as

Case 2

\(\sigma (\varvec{G}_1) > 0\)

Without loss of generality, we assume that \(\sigma (\varvec{G}_1)\) is \(\lambda / \sigma _l\) with \(l \in \{ 1,2,\ldots ,p \}\). According to the definition of condition number \(C_1\), we have

Then, the inequality in Eq. (21) can be formulated as

Case 3

\(\sigma (\varvec{G}_1) < 0\)

Likewise, we assume that \(\sigma (\varvec{G}_1)\) is \(\lambda / \sigma _l\) with \(l \in \{ m-q+1, m-q+2,\ldots ,m \}\). According to the definition of condition number \(C_2\), we have

Then, the inequality in Eq. (21) can be formulated as

Combining Eqs. (22), (23) and (24), we have

which can be further written as

Therefore, we have \(\lim _{m \rightarrow \infty } {\mu } = 0\), and its convergence rate is \({\mathcal {O}}(1/m)\) due to \(\gamma + \eta > 1\). Finally, \({\mu }\) can be represented in Eq. (20) with \(\widetilde{c_a} \ne 0\), which concludes the proof. \(\square\)

5.2.3 Proofs of Proposition 4

Given the expression of \(\mu\) with the convergence rate \({\mathcal {O}}(1/m)\) in Proposition 6, we are ready to present the estimates for \(P_2(\varvec{z}, \lambda )\) and \(P(\varvec{z}, \lambda )\) as demonstrated by Proposition 4.

Proof of Proposition 4

We cast the proof in two steps: we first prove the consistency, i.e., \(\lim _{m \rightarrow \infty } P(\varvec{z}, \lambda ) = 0\), and then derive its convergence rate.

Step 1: Consistency of \(P(\varvec{z}, \lambda )\)

Based on the decomposition of the hypothesis error \(P(\varvec{z}, \lambda )\) in Proposition 5, due to \(P(\varvec{z}, \lambda ) \ge 0\) for any \(m \in {\mathbb {N}}\), we have \(\lim _{m \rightarrow \infty } \big (P_1(\varvec{z}, \lambda ) + P_2(\varvec{z}, \lambda )\big ) \ge 0\) if the limits \(\lim _{m \rightarrow \infty } P_1(\varvec{z}, \lambda )\) and \(\lim _{m \rightarrow \infty } P_2(\varvec{z}, \lambda )\) exist. Next we analyse \(P_1(\varvec{z}, \lambda )\) and \(P_2(\varvec{z}, \lambda )\), respectively.

According to the expression of \(P_1(\varvec{z}, \lambda )\), it can be bounded by

where \(\varvec{v}_i\) is the i-th column of the orthogonal matrix \(\varvec{V}\) from the eigenvalue decomposition \(\varvec{K}=\varvec{V} \varvec{\varSigma }\varvec{V}^{\!\top }\). The inequality in the above equation holds by \(\varvec{y}^{\!\top } \varvec{\varXi }\varvec{y} = \varvec{y}^{\!\top } (\varvec{I} - \sum _{i=p+1}^{m-q} \varvec{v}_i \varvec{v}_i^{\!\top }) \varvec{y} \le \varvec{y}^{\!\top } \varvec{y}\).

According to the expression of \(P_2(\varvec{z}, \lambda )\), it can be rewritten as

Since the function \(h(\sigma _i) = \frac{-1}{\frac{\lambda }{\sigma _i}-{\mu }}\) is an increasing function of \(\sigma _i\), \(P_2(\varvec{z}, \lambda )\) can be bounded by

By Proposition 6, plugging Eq. (20) into the above inequality, when \(\eta + \gamma > 1\), we have

which holds by \(\Vert \varvec{y} \Vert _2 = {\mathcal {O}}(\sqrt{m})\) and \(\widetilde{c_a} \ne 0\). According to the squeeze theorem, we conclude that the limit \(\lim _{m \rightarrow \infty }P_2(\varvec{z}, \lambda )\) exists. Because of \(P(\varvec{z}, \lambda ) \ge 0\), we have

which indicates that \(1-\frac{1}{\widetilde{c_a}} \ge 0\), i.e., \(\widetilde{c_a} \ge 1\). Accordingly, the coefficient in Eq. (20) \(\widetilde{c_a} \in [-1, 0) \bigcup (0,1]\) can be further improved to \(\widetilde{c_a} =1\). In this case, it is obvious that \(\lim _{m \rightarrow \infty } \Big (P_1(\varvec{z}, \lambda ) + P_2(\varvec{z}, \lambda )\Big ) = 0\) implies the consistency for \(P(\varvec{z}, \lambda )\).

Step 2: Convergence rate of \(P(\varvec{z}, \lambda )\)

Based on the consistency of \(P(\varvec{z}, \lambda )\), we derive its convergence rate as follows. For notational simplicity, we denote \(\widetilde{c_e} :=\left[ \frac{C_2}{c_m} + \widetilde{c_d} \left( \frac{C_1}{c_1} - \frac{C_2}{c_m} \right) \right]\). Accordingly, by virtue of Eqs. (25), (26) and Proposition 6 for \(\mu\), we have

where \(\widetilde{C_1} :=2Mr + 2M^2 \big ( \frac{-c_m}{C_2} + \frac{M^2}{r^2} + \frac{C_1}{c_1} \big )\) and the power index is \(\varTheta _1 = \min \big \{ 1, \gamma +\eta -1 \big \}\). Finally, we conclude the proof for Proposition 4. \(\square\)

5.3 Estimate sample error

The sample error can be decomposed into \(S(\varvec{z}, \lambda ) = S_1(\varvec{z}, \lambda ) + S_2(\varvec{z}, \lambda )\) with

Note that \(S_1(\varvec{z}, \lambda )\) involves the samples \(\varvec{z}\). Thus a uniform concentration inequality for a family of functions containing \(f_{\varvec{z}, \lambda }\) is needed to estimate \(S_1(\varvec{z}, \lambda )\). Since we have \(f_{\varvec{z}, \lambda } \in {\mathcal {B}}_R\) defined by Eq. (6), we shall bound \(S_1\) by the following proposition with a properly chosen R. Considering that the estimates for \(S_1(\varvec{z}, \lambda )\) and \(S_2(\varvec{z}, \lambda )\) have been extensively investigated in Wu et al. (2006), Cucker and Zhou (2007), Shi et al. (2014), we directly present the corresponding results in Appendix 1 under the existence of \(f_{\rho }\) in Assumption 1, and the regularity condition on \(\rho\) in Assumption 3.

5.4 Derive learning rates

Combining the bounds in Propositions 3 and 4 with the estimates for the sample error, the excess error \({\mathcal {E}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) - {\mathcal {E}}(f_{\rho })\) can be estimated. As mentioned above, in practice the radius r or R in Eq. (6) is determined by cross validation in our experiments. In our theoretical analysis, it is estimated by bounding \(\lambda \langle f_{\varvec{z},\lambda } ,Tf_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\). This is done by the iteration technique (Wu et al. 2006) to improve learning rates. Under Assumptions 1–4, the proof of the learning rates in Theorem 1 can be found in Appendix 2.

6 Numerical experiments

In this section, we validate our theoretical results by numerical experiments in the following three aspects.

6.1 Eigenvalue assumption

Here we verify that our eigenvalue decay assumption in Assumption 4 is justified on four indefinite kernels, including

- the spherical polynomial (SP) kernel (Pennington et al. 2015): \(k_p(\varvec{x}, \varvec{x}') = (1+ \langle \varvec{x}, \varvec{x}' \rangle )^p\) with \(p=10\) on the unit sphere is shift-invariant but indefinite.

- the TL1 kernel (Huang et al. 2018): \(k_{\tau '}(\varvec{x},\varvec{x}')= \max \{\tau '-\Vert \varvec{x}-\varvec{x}' \Vert _1,0\}\) with \(\tau ' = 0.7d\) as suggested.

- the Delta-Gauss kernel (Oglic and Gärtner 2018): It is formulated as the difference of two Gaussian kernels, i.e., \(k\left( \varvec{x}, \varvec{x}' \right) =\exp \left( -\left\| \varvec{x} - \varvec{x}' \right\| ^{2}/ \tau _{1} \right) -\exp \left( -\left\| \varvec{x} - \varvec{x}' \right\| ^{2}/ \tau _{2} \right)\) with \(\tau _1 = 1\) and \(\tau _2=0.1\).

- the log kernel (Boughorbel et al. 2005): \(k(\varvec{x},\varvec{x}') = - \log (1+\Vert \varvec{x} - \varvec{x}'\Vert )\).

Here the Delta-Gauss kernel (Oglic and Gärtner 2018) and the log kernel (Boughorbel et al. 2005) are associated with RKKS, while the SP and TL1 kernels have not been proved to be reproducing kernels in RKKS. Verifying that a kernel admits the decomposition is still an open problem (Liu et al. 2020). The Delta-Gauss kernel is defined as the difference of two Gaussian kernels, and thus \(\sigma _1\) and \(\sigma _m\) follow exponential decay at the same rate, i.e., \(\eta _1 = \eta _2\). The log kernel (Boughorbel et al. 2005) is a conditionally positive definite kernel of order one associated with RKKS. According to Theorem 8.5 in Wendland (2004), the kernel matrix induced by this kernel has only one negative eigenvalue. Further, this negative eigenvalue satisfies \(\sigma _m = -\sum _{i=1}^{m-1} \sigma _i\) because \(k(\varvec{0})=\frac{1}{m}\mathrm {tr}(\varvec{K})= \frac{1}{m}\sum _{i=1}^m \sigma _i = 0\), which implies \(\eta _2 > \eta _1\).

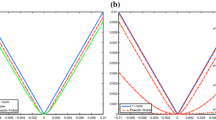

Figure 1 shows the eigenvalue distributions of the above four indefinite kernels on the monks3 dataset. It can be seen that our eigenvalue assumption, \(\sigma _1 \ge c_1 m^{\eta _1}\) (\(c_1>0\), \(\eta _1 > 0\)) and \(\sigma _m \le c_m m^{\eta _2}\) (\(c_m<0\), \(\eta _2>0\)) in Assumption 4, is reasonable. Specifically, our experiments on the log kernel verify that it has only one negative eigenvalue satisfying \(\sigma _m = -\sum _{i=1}^{m-1} \sigma _i\). Note that although the SP and TL1 kernels have not been proved to be reproducing kernels in RKKS, our eigenvalue assumption still covers them, which demonstrates the feasibility of our assumption.
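The spectral properties discussed above can be reproduced on synthetic data with a short sketch. It does not use the monks3 dataset; the uniformly sampled inputs, the sample size and the variable names are assumptions made for illustration, while the kernel definitions and the parameters \(\tau _1 = 1\), \(\tau _2 = 0.1\) follow the list in this section.

```python
import numpy as np

rng = np.random.default_rng(0)
m, d = 40, 4
X = rng.uniform(size=(m, d))  # synthetic stand-in for a real dataset
diff = X[:, None, :] - X[None, :, :]
dist = np.sqrt((diff ** 2).sum(-1))  # pairwise Euclidean distances

# Delta-Gauss kernel with tau_1 = 1 and tau_2 = 0.1, and the log kernel.
K_delta = np.exp(-dist ** 2 / 1.0) - np.exp(-dist ** 2 / 0.1)
K_log = -np.log1p(dist)

eig_delta = np.linalg.eigvalsh(K_delta)  # ascending order
eig_log = np.linalg.eigvalsh(K_log)

n_neg_log = int(np.sum(eig_log < -1e-10))
print(eig_delta[0], eig_delta[-1])            # eigenvalues of both signs
print(n_neg_log, eig_log[0], eig_log[1:].sum())
```

Both kernel matrices have a zero diagonal, so their eigenvalues sum to zero. For the log kernel this means the single negative eigenvalue balances the sum of all the others.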

6.2 Empirical validations of derived learning rates

Here we verify the derived convergence rates on the monks3 dataset under different indefinite kernels. In our experiment, we choose \(\lambda :=1/m\) and two indefinite kernels, the Delta-Gauss kernel and the log kernel, to study to what degree they affect the learning rates. Since the selected two kernels are \(C^{\infty }(X \times X)\), s can be arbitrarily small. In this case, by Theorem 1 and Corollary 2, the learning rate of problem (3) with the RKKS regularizer \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) or the RKHS regularizer \(\Vert f\Vert _{{\mathcal {H}}_{{\bar{\mathcal{K}}}}}^2\) is close to \(\min \{ \beta , \eta \}\). Here the two parameters \(\beta\) and \(\eta\) indicate the approximation ability for \(f_{\rho }\) and the size of the RKKS induced by different indefinite kernels, and thus they influence the expected risk rate. Figure 2a shows that the observed learning rate associated with the Delta-Gauss kernel is \({\mathcal {O}}(1/\sqrt{m})\), while the excess risk associated with the log kernel converges at \({\mathcal {O}}(m^{-1/3})\) in Fig. 2b. Hence, Fig. 2 shows that the excess risk of problem (3) with the Delta-Gauss kernel converges faster than that with the log kernel. This is reasonable and consistent with Theorem 1, i.e., different \({{\mathcal {H}}_{\mathcal{K}}}\) spanned by various indefinite kernels lead to different convergence rates due to their different approximation ability for \(f_{\rho }\).
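An observed rate such as \({\mathcal {O}}(m^{-1/2})\) is typically read off as the slope of the excess risk against the sample size on a log-log scale. The following sketch shows this estimate on synthetic risk values that decay exactly like \(m^{-1/2}\); the sample sizes, the constant 2.0 and the variable names are assumptions, and the numbers are not the experimental results reported in Fig. 2.

```python
import numpy as np

ms = np.array([100, 200, 400, 800, 1600, 3200], dtype=float)
risk = 2.0 * ms ** (-0.5)  # synthetic excess risks decaying like m^{-1/2}

# Least-squares line fit of log(risk) against log(m); the slope is -rate.
slope, intercept = np.polyfit(np.log(ms), np.log(risk), deg=1)
rate = -slope
print(rate)
```

With measured excess risks in place of the synthetic values, the fitted `rate` can be compared with the theoretical exponent \(\min \{ \beta , \eta \}\).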

The above experiments validate the rationality of our eigenvalue assumption and the consistency with theoretical results.

7 Conclusion

In this paper, we provide approximation analysis of the least squares problem associated with the \(\langle f,f\rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\) regularization scheme in RKKS. For this non-convex problem with the bounded hyper-sphere constraint, we can obtain an attainable optimal solution, which makes it possible to conduct approximation analysis in RKKS. Accordingly, we start the analysis from a learning problem that has an analytical solution, and thus take a first step toward understanding the learning behavior in RKKS. Our analysis and experimental validation bridge the gap between the regularized risk minimization problem in RKHS and RKKS.

References

Adachi, S., & Nakatsukasa, Y. (2017). Eigenvalue-based algorithm and analysis for nonconvex QCQP with one constraint. Mathematical Programming, 1, 1–38.

Alabdulmohsin, I., Cisse, M., Gao, X., & Zhang, X. (2016). Large margin classification with indefinite similarities. Machine Learning, 103(2), 215–237.

Ando, T. (2009). Projections in Krein spaces. Linear Algebra and Its Applications, 431(12), 2346–2358.

Bach, F. (2013). Sharp analysis of low-rank kernel matrix approximations. In Proceedings of conference on learning theory (pp. 185–209).

Bognár, J. (1974). Indefinite inner product spaces. Berlin: Springer.

Boughorbel, S., Tarel, J. P., & Boujemaa, N. (2005). Conditionally positive definite kernels for SVM based image recognition. In Proceedings of IEEE international conference on multimedia and expo (pp. 113–116).

Chen, D., Wu, Q., Ying, Y., & Zhou, D. (2004). Support vector machine soft margin classifiers: Error analysis. Journal of Machine Learning Research, 5(3), 1143–1175.

Cho, H., DeMeo, B., Peng, J., & Berger, B. (2019). Large-margin classification in hyperbolic space. In Proceedings of international conference on artificial intelligence and statistics (pp. 1832–1840). PMLR.

Chu, K. W. E. (1986). Generalization of the Bauer-Fike theorem. Numerische Mathematik, 49(6), 685–691.

Cucker, F., & Zhou, D. (2007). Learning theory: An approximation theory viewpoint (Vol. 24). Cambridge University Press.

Dhillon, I. S., Guan, Y., & Kulis, B. (2004). Kernel k-means: Spectral clustering and normalized cuts. In Proceedings of ACM SIGKDD international conference on Knowledge discovery and data mining (pp. 551–556). ACM.

Farooq, M., & Steinwart, I. (2019). Learning rates for kernel-based expectile regression. Machine Learning, 108(2), 203–227.

Gander, W., Golub, G. H., & Matt, U. V. (1988). A constrained eigenvalue problem. Linear Algebra and Its Applications, 114–115, 815–839.

Gao, C., Ma, Z., Ren, Z., & Zhou, H. H. (2015). Minimax estimation in sparse canonical correlation analysis. Annals of Statistics, 43(5), 2168–2197.

Guo, Z. C., & Shi, L. (2019). Optimal rates for coefficient-based regularized regression. Applied and Computational Harmonic Analysis, 47(3), 662–701.

Hoffman, A. J., & Wielandt, H. W. (2003). The variation of the spectrum of a normal matrix. In Selected papers of Alan J Hoffman: With commentary (pp. 118–120). World Scientific.

Huang, X., Suykens, J. A. K., Wang, S., Hornegger, J., & Maier, A. (2018). Classification with truncated \(\ell _1\) distance kernel. IEEE Transactions on Neural Networks and Learning Systems, 29(5), 2025–2030.

Jun, K. S., Cutkosky, A., & Orabona, F. (2019). Kernel truncated randomized ridge regression: Optimal rates and low noise acceleration. In Proceedings of advances in neural information processing systems (pp. 15358–15367).

Langer, H. (1962). Zur Spektraltheorie J-selbstadjungierter Operatoren. Mathematische Annalen, 146(1), 60–85.

Lin, S. B., Guo, X., & Zhou, D. X. (2017). Distributed learning with regularized least squares. Journal of Machine Learning Research, 18(1), 3202–3232.

Liu, F., Huang, X., Chen, Y., & Suykens, J. A. K. (2021). Fast learning in reproducing kernel Kreĭn spaces via generalized measures. In Proceedings of the international conference on artificial intelligence and statistics (pp. 388–396).

Liu, F., Huang, X., Gong, C., Yang, J., & Li, L. (2020). Learning data-adaptive non-parametric kernels. Journal of Machine Learning Research, 21(208), 1–39.

Liu, X., Zhu, E., & Liu, J. (2020). SimpleMKKM: Simple multiple kernel k-means. arXiv preprint arXiv:2005.04975.

Loosli, G., Canu, S., & Ong, C. S. (2016). Learning SVM in Kreĭn spaces. IEEE Transactions on Pattern Analysis and Machine Intelligence, 38(6), 1204–1216.

Oglic, D., & Gärtner, T. (2018). Learning in reproducing kernel Kreĭn spaces. In Proceedings of the international conference on machine learning (pp. 3859–3867).

Oglic, D., & Gärtner, T. (2019). Scalable learning in reproducing kernel Kreĭn spaces. In Proceedings of international conference on machine learning (pp. 4912–4921).

Ong, C. S., Mary, X., & Smola, A. J. (2004). Learning with non-positive kernels. In Proceedings of the international conference on machine learning (pp. 81–89).

Ong, C. S., Smola, A. J., & Williamson, R. C. (2005). Learning the kernel with hyperkernels. Journal of Machine Learning Research, 6, 1043–1071.

Pȩkalska, E., & Haasdonk, B. (2009). Kernel discriminant analysis for positive definite and indefinite kernels. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(6), 1017–1032.

Pennington, J., Yu, F. X. X., & Kumar, S. (2015). Spherical random features for polynomial kernels. In Proceedings of advances in neural information processing systems (pp. 1846–1854).

Rudi, A., & Rosasco, L. (2017). Generalization properties of learning with random features. In Proceedings of advances in neural information processing systems (pp. 3215–3225).

Saha, A., & Palaniappan, B. (2020). Learning with operator-valued kernels in reproducing kernel Kreĭn spaces. In Proceedings of advances in neural information processing systems (pp. 1–11).

Sala, F., De Sa, C., Gu, A., & Re, C. (2018). Representation tradeoffs for hyperbolic embeddings. In Proceedings of international conference on machine learning (pp. 4460–4469).

Schaback, R. (1999). Native Hilbert spaces for radial basis functions. I. In New developments in approximation theory (pp. 255–282). Springer.

Schleif, F. M., & Tino, P. (2015). Indefinite proximity learning: A review. Neural Computation, 27(10), 2039–2096.

Schölkopf, B., & Smola, A. J. (2003). Learning with kernels: Support vector machines, regularization, optimization, and beyond. MIT Press.

Shang, R., Meng, Y., Liu, C., Jiao, L., Esfahani, A. M. G., & Stolkin, R. (2019). Unsupervised feature selection based on kernel fisher discriminant analysis and regression learning. Machine Learning, 108(4), 659–686.

Shi, L., Huang, X., Feng, Y., & Suykens, J. A. K. (2019). Sparse kernel regression with coefficient-based \(\ell _q\)-regularization. Journal of Machine Learning Research, 20(161), 1–44.

Shi, L., Huang, X., Tian, Z., & Suykens, J. A. K. (2014). Quantile regression with \(\ell _1\)-regularization and Gaussian kernels. Advances in Computational Mathematics, 40(2), 517–551.

Smola, A. J., Ovari, Z. L., & Williamson, R. C. (2001). Regularization with dot-product kernels. In Proceedings of advances in neural information processing systems (pp. 308–314).

Steinwart, I., & Christmann, A. (2008). Support vector machines. Springer.

Steinwart, I., Hush, D. R., & Scovel, C. (2009). Optimal rates for regularized least squares regression. In Proceedings of conference on learning theory (pp. 1–10).

Steinwart, I., & Scovel, C. (2007). Fast rates for support vector machines using Gaussian kernels. Annals of Statistics, 35(2), 575–607.

Stewart, G. W., & Sun, J. (1990). Matrix perturbation theory. Harcourt Brace Jovanovich.

Suykens, J. A. K., Van Gestel, T., De Brabanter, J., De Moor, B., & Vandewalle, J. (2002). Least squares support vector machines. World Scientific.

Terada, Y., & Yamamoto, M. (2019). Kernel normalized cut: A theoretical revisit. In Proceedings of international conference on machine learning (pp. 6206–6214).

Wang, C., & Zhou, D. X. (2011). Optimal learning rates for least squares regularized regression with unbounded sampling. Journal of Complexity, 27(1), 55–67.

Wendland, H. (2004). Scattered data approximation (Vol. 17). Cambridge University Press.

Wu, Q., Ying, Y., & Zhou, D. (2006). Learning rates of least-square regularized regression. Foundations of Computational Mathematics, 6(2), 171–192.

Xia, Y., Wang, S., & Sheu, R. L. (2016). S-lemma with equality and its applications. Mathematical Programming, 156(1–2), 513–547.

Ying, Y., Campbell, C., & Girolami, M. (2009). Analysis of SVM with indefinite kernels. In Proceedings of advances in neural information processing systems (pp. 2205–2213).

Zhu, J., & Hastie, T. (2002). Kernel logistic regression and the import vector machine. Journal of Computational and Graphical Statistics, 14(1), 185–205.

Acknowledgements

The research leading to these results has received funding from the European Research Council under the European Union’s Horizon 2020 research and innovation program/ERC Advanced Grant E-DUALITY (787960). This paper reflects only the authors’ views and the Union is not liable for any use that may be made of the contained information. This work was supported in part by Research Council KU Leuven: Optimization frameworks for deep kernel machines C14/18/068; Flemish Government: FWO projects: GOA4917N (Deep Restricted Kernel Machines: Methods and Foundations), PhD/Postdoc grant. This research received funding from the Flemish Government (AI Research Program). This work was supported in part by Ford KU Leuven Research Alliance Project KUL0076 (Stability analysis and performance improvement of deep reinforcement learning algorithms), EU H2020 ICT-48 Network TAILOR (Foundations of Trustworthy AI - Integrating Reasoning, Learning and Optimization), Leuven.AI Institute; and in part by the National Natural Science Foundation of China (Grants Nos. 61876107, 61977046, U1803261) and NSFC/RGC Joint Research Scheme (Nos. 1201101029 and N_CityU102/20), in part by the National Key Research and Development Project (No. 2018AAA0100702), in part by National Key R&D Program of China (No. 2019YFB1311503), and in part by Shanghai Science and Technology Research Program (20JC1412700 and 19JC1420101).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Editor: Thomas Gärtner.

Appendices

Appendix 1: Proof for the sample error

The asymptotic behaviors of \(S_1(\varvec{z}, \lambda )\) and \(S_2(\varvec{z}, \lambda )\) are usually illustrated by the convergence of the empirical mean \(\frac{1}{m}\sum _{i=1}^m\xi _{i}\) to its expectation \({\mathbb {E}}\xi\), where \(\left\{ \xi _{i}\right\} _{i=1}^m\) are independent random variables on \((Z,\rho )\) defined as

For \(R\ge 1\), denote

Lemma 2

Let \(\xi\) be a symmetric real-valued function on \(X \times Y\) with mean \({\mathbb {E}}(\xi )\). Assume that \({\mathbb {E}}(\xi ) \ge 0\), \(|\xi - {\mathbb {E}}\xi |\le T\) almost surely, and \({\mathbb {E}}\xi ^2 \le c'_1 ({\mathbb {E}}\xi )^{\theta }\) for some \(0 \le \theta \le 1\), \(c'_1 \ge 0\), and \(T \ge 0\). Then for every \(\epsilon >0\) there holds

Now we can bound \(S_2(\varvec{z}, \lambda )\) by the following proposition.

Proposition 7

Suppose that \(|f_{\rho }(\varvec{x})|\le M^*\) with \(M^* \ge 1\). Then for any \(0< \delta < 1\), there exists a subset \(Z_1\) of \(Z^{m}\) with confidence at least \(1-\delta /2\) such that for any \({\varvec{z}} \in Z_1\)

Proof

From the definition of \(f_{\lambda }\) in Proposition 3, combining Eqs. (1) and (4), we have

which leads to \(\Vert f_{\lambda } \Vert _{\infty } \le \kappa \sqrt{\frac{D(\lambda )}{\lambda }}\). The first equality holds because the reproducing kernel \(k_{+} + k_{-}\) associated with \({{\mathcal {H}}_{\bar{\mathcal{K}}}}\) is the square root of the limiting kernel in Guo and Shi (2019) associated with the empirical covariance operator T. Since \(f_{\rho }(\varvec{x})\) takes values in \([-M^*,M^*]\), we get

For the least-squares loss, \({\mathbb {E}}(\xi ^2) \le 4 {\mathbb {E}}(\xi )\), so that \(c'_1=4\) and \(\theta = 1\). Applying Lemma 2, there exists a subset \(Z_1\) of \(Z^m\) with confidence at least \(1-\delta /2\) such that

Then, we obtain

which concludes the proof. \(\square\)

Next, we bound \(S_1(\varvec{z}, \lambda )\) with respect to the samples \(\varvec{z}\). This requires a uniform concentration inequality for a family of functions containing \(f_{\varvec{z}, \lambda }\). Since \(f_{\varvec{z}, \lambda } \in {\mathcal {B}}_R\), which is defined by Eq. (6), we bound \(S_1\) by the following proposition with a properly chosen R.

Proposition 8

Suppose that \(|f_{\rho }(\varvec{x})|\le M^*\) with \(M^* \ge 1\) in Assumption 1, and that \(\rho\) satisfies the regularity condition in Assumption 3. Then for any \(0< \delta < 1\), \(R \ge 1\), and \(B > 0\), there exists a subset \(Z_2\) of \(Z^{m}\) with confidence at least \(1-\delta /2\) such that for any \(\varvec{z} \in {\mathscr {W}}(R) \cap Z_2\),

Proof

Consider the function set \({\mathcal {F}}_R\) with \(R>0\) defined by

We can easily see that each function \(g \in {\mathcal {F}}_R\) satisfies \(\Vert g\Vert _{\infty } \le B+M^*\), and thus we have \(| g-{\mathbb {E}}g| \le B+M^*\).

So using \({\mathscr {N}}({\mathcal {F}}_R,\epsilon ) \le {\mathscr {N}}({\mathcal {B}}_1,\epsilon )\) and applying Lemma 2 to the function set \({\mathcal {F}}_R\) with the covering number condition in Eq. (7) in Assumption 3, we have

with \({\mathbb {E}}g = {\mathcal {E}}\big (\pi _B(f)\big ) - {\mathcal {E}}(f_{\rho })\). Hence there exists a subset \(Z_2\) of \(Z^{m}\) with confidence at least \(1-\delta /2\) such that for any \(\varvec{z} \in Z_2 \cap {\mathscr {W}}(R)\)

where \(\epsilon ^*(m,R,\frac{\delta }{2})\) is the smallest positive number \(\epsilon\) satisfying

Using Lemma 7.2 in Cucker and Zhou (2007), we have

where we use \(M^*\ge 1\). For \(\varvec{z} \in {\mathscr {W}}(R) \cap Z_2\), we have

\(\square\)

Appendix 2: Proof for learning rates

Combining the bounds in Propositions 3, 4, 7, and 8 with Eq. (27), and assuming that Eq. (7) holds with \(s>0\) and Eq. (5) holds with \(0 < \beta \le 1\), we take \(\lambda =m^{-\gamma }\) with \(0< \gamma < 1\). The excess error \({\mathcal {E}}\big (\pi _B(f_{\varvec{z},\lambda })\big ) - {\mathcal {E}}(f_{\rho })\) can then be bounded by

where \(\widetilde{C_1}\) is given in Proposition 4. Two constants \(\widetilde{C_2}\) and \(\widetilde{C_3}\) are given by

Next, we determine a suitable \(R>0\) by bounding \(\lambda \langle f_{\varvec{z},\lambda } ,Tf_{\varvec{z},\lambda } \rangle _{{{\mathcal {H}}_{\mathcal{K}}}}\).

Lemma 3

Suppose that \(\rho\) satisfies the condition in Eq. (5) with \(0 < \beta \le 1\) in Assumption 2. For some \(s>0\) in Assumption 3, take \(\lambda =m^{-\gamma }\) with \(0 < \gamma \le 1\). Then for \(0< \epsilon < 1\) and \(0<\delta <1\) with confidence \(1-\delta\), we have

where \(\widetilde{C_{X}}\) is given by

and \(\theta _{\epsilon }\) is

Proof

According to Eq. (28), for any \(R \ge 1\) there exists a subset \(V_R\) of \(Z^m\) with measure at most \(\delta\) such that

where \(a_m = \sqrt{ \widetilde{C_3}} m^{\frac{\gamma }{2} - \frac{1}{2(1+s)}}\), and \(b_m\) is defined as

where the power index \(\zeta\) is

This shows that \({\mathscr {W}}(R) \subseteq {\mathscr {W}} \left( a_mR^{\frac{s}{2+2s}} + b_m \right) \cup V_R\). Defining a sequence \(\{ R^{(j)} \}_{j=0}^J\) by \(R^{(j)} = a_m (R^{(j-1)})^{s/(2+2s)} + b_m\) with \(J \in {\mathbb {N}}\), we have \(Z^m = {\mathscr {W}}(R^{(0)})\), which satisfies

Since each set \(V_{R^{(j)}}\) has measure at most \(\delta\), the set \({\mathscr {W}}(R^{(J)})\) has measure at least \(1-J \delta\).
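The behaviour of the recursion \(R^{(j)} = a_m (R^{(j-1)})^{s/(2+2s)} + b_m\) can be explored numerically. The following is a minimal sketch, not part of the original proof: the values of `a_m`, `b_m`, `s`, `r0`, and `J` are hypothetical and chosen only for illustration, and the unrolled upper bound is derived here from the elementary inequality \((x+y)^{t} \le x^{t} + y^{t}\) for \(x, y \ge 0\) and \(0< t < 1\), which may differ in form from the bound used by the authors.

```python
def geometric_sum(delta, j):
    """Return 1 + delta + ... + delta**(j - 1) for 0 < delta < 1."""
    return (1.0 - delta ** j) / (1.0 - delta)


def iterate_radius(a_m, b_m, s, r0, num_steps):
    """Return [R^(0), ..., R^(J)] for R^(j) = a_m * (R^(j-1))**delta + b_m."""
    delta = s / (2.0 + 2.0 * s)  # exponent of the recursion; always below 1/2
    radii = [r0]
    for _ in range(num_steps):
        radii.append(a_m * radii[-1] ** delta + b_m)
    return radii


def unrolled_bound(a_m, b_m, s, r0, num_steps):
    """Upper bound on R^(J), split into a term in r0 and a term in b_m.

    Obtained by applying (x + y)**delta <= x**delta + y**delta at each step.
    """
    delta = s / (2.0 + 2.0 * s)
    first = a_m ** geometric_sum(delta, num_steps) * r0 ** (delta ** num_steps)
    second = b_m + sum(
        a_m ** geometric_sum(delta, j) * b_m ** (delta ** j)
        for j in range(1, num_steps)
    )
    return first, second


# Hypothetical values, for illustration only (not taken from the paper).
a_m, b_m, s, r0, J = 0.8, 1.5, 2.0, 1.0e6, 6
radii = iterate_radius(a_m, b_m, s, r0, J)
first, second = unrolled_bound(a_m, b_m, s, r0, J)
for j, r in enumerate(radii):
    print(f"R^({j}) = {r:.4f}")
print(f"unrolled bound: {first:.4f} + {second:.4f} = {first + second:.4f}")
```

With these illustrative values the iterates drop quickly from the large starting radius, since the dependence on \(R^{(0)}\) enters only through the power \((s/(2+2s))^{J}\). Each step costs one exceptional set of measure at most \(\delta\), which is why the confidence degrades to \(1-J\delta\).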

With \(\varDelta := s/(2+2s) < 1/2\), the definition of the sequence \(\{ R^{(j)} \}_{j=0}^J\) implies that

The first term \(R^{(J)}_1\) can be bounded by

where J is chosen to be the smallest integer satisfying \(J \ge \frac{\log (1/ \epsilon ) }{ \log 2}\). Besides, \(R^{(J)}_2\) can be bounded by

with \(b_1:=\sqrt{\widetilde{C_2} \log \frac{2}{\delta } } + \sqrt{2\kappa \sqrt{C_0} \log \frac{2}{\delta }} + \sqrt{3C_0} + \sqrt{\widetilde{C_1}}\). When \(\zeta \le (\gamma (1+s)-1)(2+s)\), \(R^{(J)}_2\) can be bounded by \(\widetilde{C_3} b_1 J m^{(\gamma (1+s)-1)(2+s)}\). When \(\zeta > (\gamma (1+s)-1)(2+s)\), \(R^{(J)}_2\) can be bounded by \(\widetilde{C_3} b_1 J m^{\zeta }\). Based on the above discussion, we have

with \(\theta _{\epsilon }=\max \{ \zeta , (\gamma (1+s)-1)(2+s)+\epsilon \}\). So with confidence \(1-J\delta\), there holds

which follows by replacing \(\delta\) by \(\delta / J\) and noting that \(J \le 2 \log (2/ \epsilon )\). This concludes the proof. \(\square\)
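The two properties of \(J\) used in this proof can be checked by a short calculation. The sketch below assumes that \(\log\) denotes the natural logarithm and uses only \(0< \epsilon < 1\), \(0< \varDelta < 1/2\), and the choice of \(J\) as the smallest integer with \(J \ge \log (1/\epsilon ) / \log 2\).

```latex
\begin{align*}
J \ge \frac{\log (1/\epsilon)}{\log 2}
  &\;\Longrightarrow\; 2^{-J} \le \epsilon
   \;\Longrightarrow\; \varDelta^{J} < 2^{-J} \le \epsilon , \\
J < \frac{\log (1/\epsilon)}{\log 2} + 1
  &= \frac{\log (2/\epsilon)}{\log 2}
   \le 2 \log (2/\epsilon)
   \qquad \text{since } \frac{1}{\log 2} \approx 1.44 \le 2 .
\end{align*}
```

The first line shows that the exponent \(\varDelta ^{J}\) on the initial radius is at most \(\epsilon\), and the second gives the bound on \(J\) that is used when \(\delta\) is replaced by \(\delta / J\).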

Now, by Lemma 3 and Eq. (28), we are able to prove our main result in Theorem 1.

Proof

Take \(R\) to be the right-hand side of Eq. (29). By Lemma 3, there exists a subset \(V'_R\) of \(Z^m\) with measure at most \(\delta\) such that \(Z^m \setminus V'_R \subseteq {\mathscr {W}}(R)\). Therefore, there exists another subset \(V_R\) of \(Z^m\) with measure at most \(\delta\) such that for any \(\varvec{z} \in {\mathscr {W}}(R) \setminus V_R\), Eq. (28) can be formulated as

where \(\widetilde{C_4} = \widetilde{C_X} (4\widetilde{C_3})^{\frac{s}{1+s}}\). Accordingly, by setting the constant \(\widetilde{C}\) with

we have the following error bound

with confidence \(1-\delta\), where the power index \(\varTheta\) is

provided that \(\theta _{\epsilon } < 1 / s\). Combining Eqs. (30) and (31), when \(0< \eta < 1\), we have

where \(\epsilon\) is given by Eq. (8) and \(\eta\) needs to be further restricted by \(\max \{0, 1-2/s\}< \eta < 1\). These two restrictions ensure that \(\varTheta\) is positive for a valid learning rate. Specifically, when \(\eta \ge 1\), the power index \(\varTheta\) can be simplified as

which concludes the proof. \(\square\)

Cite this article

Liu, F., Shi, L., Huang, X. et al. Analysis of regularized least-squares in reproducing kernel Kreĭn spaces. Mach Learn 110, 1145–1173 (2021). https://doi.org/10.1007/s10994-021-05955-2
