Abstract

Gaussian processes (GPs) serve as flexible surrogates for complex surfaces, but buckle under the cubic cost of matrix decompositions with big training data sizes. Geospatial and machine learning communities suggest pseudo-inputs, or inducing points, as one strategy to obtain an approximation easing that computational burden. However, we show how placement of inducing points and their multitude can be thwarted by pathologies, especially in large-scale dynamic response surface modeling tasks. As remedy, we suggest porting the inducing point idea, which is usually applied globally, over to a more local context where selection is both easier and faster. In this way, our proposed methodology hybridizes global inducing point and data subset-based local GP approximation. A cascade of strategies for planning the selection of local inducing points is provided, and comparisons are drawn to related methodology with emphasis on computer surrogate modeling applications. We show that local inducing points extend their global and data subset component parts on the accuracy–computational efficiency frontier. Illustrative examples are provided on benchmark data and a large-scale real-simulation satellite drag interpolation problem.

Similar content being viewed by others

Notes

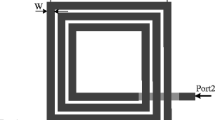

Ordinary IMSE was used, substituting inducing points in for design points, as described in Binois et al. (2019). Progress is blocky because individual inducing point additions do not substantial alter space-filling properties until most of a “new row” of sites are added in this 2d example.

Here, we are abusing notation a little to describe an inductive process \(n \rightarrow n+1\) and referring to n as the final local design size as opposed to introducing a new iterator.

For identical n, ALC bests NN (Gramacy and Apley 2015), motivating increased n for NN here.

A characterization attributed to Derek Bingham predating any published account, to our knowledge.

In laGP, the function providing \(\theta ^{(0)}\) in this way is darg.

That is, two per hyperthreaded core.

Original MATLAB: http://www.gaussianprocess.org/gpml/data/; plain text in our Git repo.

No timings provided; the worst method had RMSE 0.25.

Dividing out \(\nu \) removes dependence on \(\mathbf {Y}\)-values through \(\hat{\nu }\). Greedy build-up of \(\mathbf {x}_{n+1}\) over \(n=N_0, \dots ,N-1\) is near optimal due to a supermartingale property (Bect et al. 2019).

References

Anagnostopoulos, C., Gramacy, R.B.: Information–theoretic data discarding for dynamic trees on data streams. Entropy 15(12), 5510–5535 (2013)

Ankenman, B., Nelson, B.L., Staum, J.: Stochastic kriging for simulation metamodeling. Oper. Res. 58(2), 371–382 (2010)

Aune, E., Simpson, D.P., Eidsvik, J.: Parameter estimation in high dimensional Gaussian distributions. Stat. Comput. 24(2), 247–263 (2014)

Azzimonti, D., Bect, J., Chevalier, C., Ginsbourger, D.: Quantifying uncertainties on excursion sets under a gaussian random field prior. SIAM/ASA J. Uncertain. Quantif. 4(1), 850–874 (2016)

Banerjee, S., Gelfand, A.E., Finley, A.O., Sang, H.: Gaussian predictive process models for large spatial data sets. J. R. Stat. Soc. Ser. B Stat. Methodol. 70(4), 825–848 (2008)

Barnett, S.: Matrix Methods for Engineers and Scientists. McGraw-Hill, New York (1979)

Bauer, M., van der Wilk, M., Rasmussen, C.E.: Understanding probabilistic sparse Gaussian process approximations. In: Advances in Neural Information Processing Systems, vol. 29, pp. 1533–1541 (2016)

Bect, J., Bachoc, F., Ginsbourger, D., et al.: A supermartingale approach to Gaussian process based sequential design of experiments. Bernoulli 25(4A), 2883–2919 (2019)

Binois, M., Huang, J., Gramacy, R.B., Ludkovski, M.: Replication or exploration? Sequential design for stochastic simulation experiments. Technometrics 61(1), 7–23 (2019)

Burnaev, E., Panov, M.: Adaptive design of experiments based on gaussian processes. In: International Symposium on Statistical Learning and Data Sciences, pp. 116–125. Springer, Berlin (2015)

Busby, D.: Hierarchical adaptive experimental design for Gaussian process emulators. Reliab. Eng. Syst. Saf. 94(7), 1183–1193 (2009)

Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM J. Sci. Comput. 16(5), 1190–1208 (1995)

Carnell, R.: lhs: Latin Hypercube Samples. R package version 1.0.1 (2019)

Chen, J., Cao, N., Low, K.H., Ouyang, R., Tan, C.K.-Y., Jaillet, P.: Parallel Gaussian process regression with low-rank covariance matrix approximations. In: Proceedings of the Twenty-Ninth Conference on Uncertainty in Artificial Intelligence, UAI’13, pp. 152–161. AUAI Press, Arlington (2013)

Cohn, D.A.: Neural network exploration using optimal experiment design. In: Proceedings of the 6th International Conference on Neural Information Processing Systems, NIPS’93, pp. 679–686. Morgan Kaufmann Publishers Inc, San Francisco (1993)

Csató, L., Opper, M.: Sparse on-line Gaussian processes. Neural Comput. 14(3), 641–668 (2002)

Datta, A., Banerjee, S., Finley, A.O., Gelfand, A.E.: Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets. J. Am. Stat. Assoc. 111(514), 800–812 (2016)

Emery, X.: The kriging update equations and their application to the selection of neighboring data. Comput. Geosci. 13(3), 269–280 (2009)

Fernández, F.L., Martino, L., Elvira, V., Delgado, D., López-Santiago, J.: Adaptive quadrature schemes for Bayesian inference via active learning. IEEE Access 8, 208462–208483 (2020)

Gardner, J., Pleiss, G., Weinberger, K.Q., Bindel, D., Wilson, A.G.: Gpytorch: Blackbox matrix-matrix Gaussian process inference with GPU acceleration. In: Advances in Neural Information Processing Systems, pp. 7576–7586 (2018a)

Gardner, J., Pleiss, G., Wu, R., Weinberger, K., Wilson, A.: Product kernel interpolation for scalable Gaussian processes. In: Storkey, A., Perez-Cruz, F. (eds.) Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, Volume 84 of Proceedings of Machine Learning Research, pp. 1407–1416. PMLR (2018b)

Garton, N., Niemi, J., Carriquiry, A.: Knot selection in sparse Gaussian processes with a variational objective function. ASA Data Sci. J. Stat. Anal. Data Min. 13, 324–336 (2020)

Gauthier, B., Pronzato, L.: Spectral approximation of the IMSE criterion for optimal designs in kernel-based interpolation models. SIAM/ASA J. Uncertain. Quantif. 2(1), 805–825 (2014)

Gorodetsky, A., Marzouk, Y.: Mercer kernels and integrated variance experimental design: connections between Gaussian process regression and polynomial approximation. SIAM/ASA J. Uncertain. Quantif. 4(1), 796–828 (2016)

Gramacy, R.B.: laGP: large-scale spatial modeling via local approximate Gaussian processes in R. J. Stat. Softw. 72(1), 1–46 (2016)

Gramacy, R.B.: Surrogates: Gaussian Process Modeling, Design and Optimization for the Applied Sciences. Chapman Hall/CRC, Boca Raton. http://bobby.gramacy.com/surrogates/ (2020)

Gramacy, R.B., Apley, D.W.: Local Gaussian process approximation for large computer experiments. J. Comput. Graph. Stat. 24(2), 561–578 (2015)

Gramacy, R., Haaland, B.: Speeding up neighborhood search in local Gaussian process prediction. Technometrics 58(3), 294–303 (2016)

Gramacy, R.B., Lee, H.K.: Bayesian treed Gaussian process models with an application to computer modeling. J. Am. Stat. Assoc. 103(483), 1119–1130 (2008)

Gramacy, R.B., Lee, H.K.: Adaptive design and analysis of supercomputer experiments. Technometrics 51(2), 130–145 (2009)

Gramacy, R., Niemi, J., Weiss, R.: Massively parallel approximate Gaussian process regression. SIAM/ASA J. Uncertain. Quantif. 2(1), 564–584 (2014)

Harville, D.A.: Matrix Algebra From a Statistician’s Perspective. Springer, New York (2011)

Hensman, J., Fusi, N., Lawrence, N.D.: Gaussian processes for big data. In: UAI’13, pp. 282–290. AUAI Press, Arlington (2013)

Hoang, T.N., Hoang, Q.M., Low, B.K.H.: A unifying framework of anytime sparse gaussian process regression models with stochastic variational inference for big data. In: ICML, pp. 569–578 (2015)

Hoffman, M.D., Blei, D.M., Wang, C., Paisley, J.: Stochastic variational inference. J. Mach. Learn. Res. 14(1), 1303–1347 (2013)

Jankowiak, M., Gardner, J.: Neural likelihoods for multi-output Gaussian processes. arXiv preprint arXiv:1905.13697 (2019)

Johnson, M.E., Moore, L., Ylvisaker, D.: Minimax and maximin distance designs. J. Stat. Plan. Inference 26, 131–148 (1990)

Kanagawa, M., Hennig, P.: Convergence guarantees for adaptive Bayesian quadrature methods. In: Advances in Neural Information Processing Systems, pp. 6237–6248 (2019)

Katzfuss, M., Guinness, J.: A general framework for Vecchia approximations of Gaussian processes. Stat. Sci. 36(1), 124–141 (2021)

Katzfuss, M., Guinness, J., Lawrence, E.: Scaled Vecchia approximation for fast computer-model emulation. arXiv preprint arXiv:2005.00386 (2020)

Kaufman, C., Bingham, D., Habib, S., Heitmann, K., Frieman, J.: Efficient emulators of computer experiments using compactly supported correlation functions, with an application to cosmology. Ann. Appl. Stat. 5(4), 2470–2492 (2011)

Kim, H.M., Mallick, B.K., Holmes, C.C.: Analyzing nonstationary spatial data using piecewise Gaussian processes. J. Am. Stat. Assoc. 100(470), 653–668 (2005)

Leatherman, E.R., Santner, T.J., Dean, A.M.: Computer experiment designs for accurate prediction. Stat. Comput. 28(4), 739–751 (2018)

Lee, H., Gramacy, R., Linkletter, C., Gray, G.: Optimization subject to hidden constraints via statistical emulation. Pac. J. Optim. 7(3), 467–478 (2011)

Liu, H., Cai, J., Ong, Y.-S., Wang, Y.: Understanding and comparing scalable Gaussian process regression for big data. Knowl. Based Syst. 164, 324–335 (2019)

Mckay, D., Beckman, R., Conover, W.: A comparison of three methods for selecting vales of input variables in the analysis of output from a computer code. Technometrics 21, 239–245 (1979)

Mehta, P., Walker, A., Lawrence, E., Linares, R., Higdon, D., Koller, J.: Modeling satellite drag coefficients with response surfaces. Adv. Space Res. 54(8), 1590–1607 (2014)

Morris, M.D., Mitchell, T.J.: Exploratory designs for computational experiments. J. Stat. Plan. Inference 43, 381–402 (1995)

Neal, R.M.: Regression and classification using Gaussian process priors. Bayesian Stat. 6, 475–501 (1998)

Pleiss, G., Gardner, J., Weinberger, K., Wilson, A.G.: Constant-time predictive distributions for Gaussian processes. In Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning, vol. 80, pp. 4114–4123. PMLR, Stockholmsmãssan, Stockholm Sweden (2018)

Poggio, T., Girosi, F.: Networks for approximation and learning. In: Proceedings of the IEEE, vol. 78, pp. 1481 – 1497. Eq. 25 (1990)

Pratola, M.T., Harari, O., Bingham, D., Flowers, G.E.: Design and analysis of experiments on nonconvex regions. Technometrics 59(1), 36–47 (2017)

Quiñonero, J., Rasmussen, C.: A unifying view of sparse approximate Gaussian process regression. J. Mach. Learn. Res. 6, 1939–1959 (2005)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna (2020)

Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. Adaptive Computation and Machine Learning. MIT Press, Cambridge (2006)

Sacks, J., Welch, W.J., Mitchell, T.J., Wynn, H.P.: Design and analysis of computer experiments. Stat. Sci. 4, 409–423 (1989)

Santner, T., Williams, B., Notz, W.: The Design and Analysis Computer Experiments, 2nd edn. Springer, Berlin (2018)

Schürch, M., Azzimonti, D., Benavoli, A., Zaffalon, M.: Recursive estimation for sparse Gaussian process regression. Automatica 120, 109127 (2020)

Seeger, M., Williams, C., Lawrence, N.: Fast forward selection to speed up sparse Gaussian process regression. In: Proceedings—9th International Conference on Artificial Intelligence and Statistics (AISTATS 2003), p. 9 (2003)

Seo, S., Wallat, M., Graepel, T., Obermayer, K.: Gaussian process regression: active data selection and test point rejection. In: Mustererkennung 2000, pp. 27–34. Springer, Berlin (2000)

Smola, A.J., Bartlett, P.L.: Sparse greedy Gaussian process regression. In: Leen, T.K., Dietterich, T.G., Tresp, V. (eds.) Advances in Neural Information Processing Systems, vol. 13, pp. 619–625. MIT Press, Cambridge (2001)

Snelson, E., Ghahramani, Z.: Sparse Gaussian processes using pseudo-inputs. In: Advances in Neural Information Processing Systems, vol. 18, pp. 1257–1264 (2006)

Solin, A., Särkkä, S.: Hilbert space methods for reduced-rank Gaussian process regression. Stat. Comput. 30(2), 419–446 (2020)

Stein, M.L.: Interpolation of Spatial Data: Some Theory for Kriging, Springer Series in Statistics. Springer, New York (2012)

Stein, M., Chi, Z., Welty, L.: Approximating likelihoods for large spatial data sets. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 66(2), 275–296 (2004)

Sun, F., Gramacy, R., Haaland, B., Lawrence, E., Walker, A.: Emulating satellite drag from large simulation experiments. IAM/ASA J. Uncertain. Quantif. 7(2), 720–759 (2019)

Sung, C., Gramacy, R., Haaland, B.: Exploiting variance reduction potential in local Gaussian process search. Stat. Sin. 28, 577–600 (2018)

Svendsen, D.H., Martino, L., Camps-Valls, G.: Active emulation of computer codes with Gaussian processes—application to remote sensing. Pattern Recognit. 100, 107103 (2020)

Tan, L.S., Ong, V.M., Nott, D.J., Jasra, A.: Variational inference for sparse spectrum Gaussian process regression. Stat. Comput. 26(6), 1243–1261 (2016)

Titsias, M.: Variational learning of inducing variables in sparse Gaussian processes. In: Artificial Intelligence and Statistics, pp. 567–574 (2009)

Titsias, M.: Variational learning of inducing variables in sparse Gaussian processes. In: van Dyk, D., Welling, M. (eds.) Proceedings of the Twelth International Conference on Artificial Intelligence and Statistics, Volume 5 of Proceedings of Machine Learning Research, pp. 567–574. PMLR (2009b)

Ubaru, S., Chen, J., Saad, Y.: Fast estimation of tr(\(f(A)\)) via stochastic Lanczos quadrature. SIAM J. Matrix Anal. Appl. 38(4), 1075–1099 (2017)

Vapnik, V.: The Nature of Statistical Learning Theory. Springer, New York (2013)

Vecchia, A.: Estimation and model identification for continuous spatial processes. J. R. Stat. Soc. Ser. B (Methodol.) 50(2), 297–312 (1988)

Vijayakumar, S., Schaal, S.: Locally weighted projection regression: an \(o(n)\) algorithm for incremental real time learning in high dimensional space. In: Proceedings of the Seventeenth International Conference on Machine Learning (ICML 2000), vol. 1, pp. 288–293 (2000)

Wahba, G.: Spline Models for Observational Data. Society for Industrial and Applied Mathematics, Philadelphia (1990). Ch. 7

Wang, H., Li, J.: Adaptive Gaussian process approximation for Bayesian inference with expensive likelihood functions. Neural Comput. 30(11), 3072–3094 (2018)

Wang, K., Pleiss, G., Gardner, J., Tyree, S., Weinberger, K.Q., Wilson, A.G.: Exact Gaussian processes on a million data points. In: Advances in Neural Information Processing Systems, pp. 14622–14632 (2019)

Williams, C.K.I., Seeger, M.: Using the Nyström method to speed up kernel machines. In: Leen, T.K., Dietterich, T.G., Tresp, V. (eds.) Advances in Neural Information Processing Systems, vol. 13, pp. 682–688. MIT Press, Cambridge (2001)

Wilson, A., Nickisch, H.: Kernel interpolation for scalable structured Gaussian processes (KISS-GP). In: International Conference on Machine Learning, pp. 1775–1784 (2015)

Worley, B.A.: Deterministic uncertainty analysis. Tech. rep., Oak Ridge National Lab. (1987)

Zhang, B., Cole, D.A., Gramacy, R.B.: Distance-distributed design for Gaussian process surrogates. Technometrics 63(1), 40–52 (2021)

Acknowledgements

We would like to thank the journal editor and referees for their thorough review of this paper. They provided valuable insights and suggestions, helping improve the narrative and context of this work. DAC and RBG recognize support from National Science Foundation (NSF) Grant DMS-1821258.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A IMSE and ALC overview

As mentioned in Sect. 2.3, variance-based sequential design criteria are better aligned with the goal of generating accurate GP predictions than using the likelihood. We consider variations on integrated mean-squared error (IMSE) over a domain \(\mathcal {X}\), with smaller being better:

Choose \(\sigma ^2(\cdot ) \equiv \sigma _N^2(\cdot )/\nu \) from Eq. (2), and I may be used to optimize the N coordinates of \(\mathbf {X}_N\), or to choose the next (\(N+1^\mathrm {st}\)) one (\(\tilde{\mathbf {x}}_{N+1})\) in a sequential setting.Footnote 9 Closed-form expressions are available for rectangular \(\mathcal {X}\) and common kernels (e.g., Ankenman et al. 2010; Anagnostopoulos and Gramacy 2013; Burnaev and Panov 2015; Leatherman et al. 2018). Analytic derivatives \(\frac{\partial I}{\partial \tilde{\mathbf {x}}_{N+1}}\) facilitate numerical optimization (Binois et al. 2019; Gramacy 2020, Chapters 4 & 10). Approximations are common otherwise (Gramacy and Lee 2009; Gauthier and Pronzato 2014; Gorodetsky and Marzouk 2016; Pratola et al. 2017).

An analogue active learning heuristic from Cohn (1993), dubbed ALC, instead targets variance aggregated over a discrete reference set \(\mathcal {X}\), originally for neural network surrogates:

Seo et al. (2000) ported ALC to GPs taking \(\sigma ^2(\cdot ) = \sigma _N^2(\cdot )\) and \(\sigma ^2_{\mathrm {new}}(\cdot ) \equiv \sigma ^2_{N+1}(\cdot )\). If discrete and volume-based \(\mathcal {X}\) are similar, then \(\varDelta \sigma ^2 \approx c - I\), where c is constant on \(\mathbf {x}_{N+1}\). Discrete \(\varDelta \sigma ^2\) via ALC is advantageous in transductive learning settings (Vapnik 2013), where \(\mathcal {X}\) can be matched with a testing set. Otherwise, analytic I via IMSE may be preferred.

Against that backdrop, we propose employing ALC and IMSE to select inducing points \({\bar{\mathbf {X}}}_M\). To our knowledge, using such variance-based criteria is novel in the literature on the selection of inducing points. The criteria below are framed sequentially, for an \(M+1^\mathrm {st}\) point given M collected already. Although we prefer this greedy approach—optimizing d coordinates one-at-a-time rather than Md all at once in a surface with many equivalent locally optimal configurations due to label-switching—either criteria is easily re-purposed for an all-at-once application. Under the diagonal-corrected Nyström approximation (3) and assuming coded \(\mathcal {X} = [0,1]^d\),

where \(E=\int _{\tilde{x}\in \mathcal {X}}k(\tilde{\mathbf {x}},\tilde{\mathbf {x}})\mathrm{d}\tilde{\mathbf {x}}\) and \(\mathbf {W}_{M+1}\) is \((M+1)\times (M+1)\) via \( w({\bar{\mathbf {x}}}_i,{\bar{\mathbf {x}}}_j)=\int _{\tilde{\mathbf {x}}\in \mathcal {X}}k({\bar{\mathbf {x}}}_i,\tilde{\mathbf {x}})k({\bar{\mathbf {x}}}_j,\tilde{\mathbf {x}})\mathrm{d}\tilde{\mathbf {x}}\) for \(i,j\in \{1,\dots ,M+1\}\). This derivation is similar to the wIMSE calculations (10) and (11) following Binois et al. (2019).

To explore inducing point optimization, consider Herbie’s tooth (Lee et al. 2011) described in Sect. 2.3. Figure 9 shows variational lower-bound of the log-likelihood (left) and ALC/IMSE surfaces (right) for \({\bar{\mathbf {x}}}_{20}\) given a modestly sized training data set \((\mathbf {X}_N,\mathbf {Y}_N)\) of size \(N=200\). Similarities in the two surfaces are apparent. Many low/red areas coincide, but the optimizing locations (green dots), found via multi-start local optimization with identically fixed kernel hyperparameters, do not. Even after taking great care to humbly restrict searchers, e.g., from crossing \({\bar{\mathbf {X}}}_M\) locations, sometimes upward of 1000 evaluations were required to achieve convergence. Consequently, quadratic ALC/IMSE is faster.

1.1 A.1 Derivations of wIMSE and its gradient

For the predictive location \(\mathbf {x}^*\), assign weight \(k_\theta (\tilde{\mathbf {x}},\mathbf {x}^\star )\) and consider squared exponential kernel \(k_\theta (\cdot , \cdot )\) with isotropic lengthscale (1). The following is based on predictive variance (8) and expectation of the quadratic form of a random vector (Binois et al. 2019, Section 3.1).

where \(\mathbf {W}^\star _{m+1}=\prod _{k=1}^D \mathbf {W}^\star _{m+1,k}\) in D dimensions. The entry in the \(i^{\text {th}}\) row and \(j^{\text {th}}\) column of \(\mathbf {W}^\star _{m+1,k}\) is

where \(\iota ^{(i,j)}_{k}=\mathbf {x}^\star _{k}+{\bar{\mathbf {x}}}_{i,k}+{\bar{\mathbf {x}}}_{j,k}\). \({\bar{\mathbf {x}}}_{i,k},{\bar{\mathbf {x}}}_{j,k}\) are entries from the \(i^{\text {th}}\) and \(j^{\text {th}}\) rows and \(k^{\text {th}}\) column of \({\bar{\mathbf {X}}}_{m+1}\) (\(i, j \in \{1,\dots ,m+1\}\)) and \(\mathbf {x}^\star _k\) is the \(k^{\text {th}}\) coordinate of \(\mathbf {x}^\star \).

The gradient of weighted integrated mean-squared error with respect to the \(k^{\text {th}}\) dimension of \({\bar{\mathbf {x}}}_{m+1}\) is:

In the matrix \(\frac{\partial \mathbf {W}^\star _{m+1}}{d{\bar{\mathbf {x}}}_{m+1,k}}\), all entries are zero except the row/column that corresponds to the row of \({\bar{\mathbf {X}}}_{m+1}\) that contains \({\bar{\mathbf {x}}}_{m+1}\), which we place in the last \(m+1^{\text {st}}\) row. For the nonzero entries in \(\frac{\partial \mathbf {W}^\star _{m+1}}{\partial {\bar{\mathbf {x}}}_{m+1,k}}\), we re-express them as

where

Working with \(\mathbf {K}_{m+1}\) and \(\mathbf {Q}_{m+1}^{(n)}\) is cubic in m, yet even that is overkill. Thrifty evaluation of Eqs. (10–12) lies in construction of \(\mathbf {Q}_{m+1}^{(n)}\) which is equivalent to \(\mathbf {K}_{m+1} + \mathbf {k}^\top _{n,m+1}\varvec{\Gamma }_{n,m+1}\). Evaluating \(\mathbf {k}^\top _{n,m+1}\varvec{\Gamma }_{n,m+1}\) requires \(2n-1\) products for each of \((m+1)^2\) entries, incurring costs in \(\mathcal {O}(m^2n)\) flops. Assuming \(n\gg m\), this dominates the \(\mathcal {O}(m^3)\) cost of decomposition.

More time can be saved through partitioned inverse (Barnett 1979) sequential updates to \(\mathbf {K}^{-1}_{m+1}\) after the new \({\bar{\mathbf {x}}}_{m+1}\) is chosen, porting LAGPs frugal updates to the LIGP context. Writing \(\mathbf {K}_{m+1}\) as an m-submatrix with new \(m+1^\mathrm {st}\) column gives

using \(\rho = k_\theta ({\bar{\mathbf {x}}}_{m+1},{\bar{\mathbf {x}}}_{m+1}) -\mathbf {k}_m^\top ({\bar{\mathbf {x}}}_{m+1})\mathbf {K}_m^{-1}\mathbf {k}_m({\bar{\mathbf {x}}}_{m+1})\) and m-length column vector \(\varvec{\eta }=-\rho ^{-1}\mathbf {K}_m^{-1}\mathbf {k}_m({\bar{\mathbf {x}}}_{m+1})\). Updating \(\mathbf {K}_{m+1}^{-1}\) requires calculation of \(\rho \), \(\varvec{\eta }\), and \(\varvec{\eta } \varvec{\eta }^\top \), each of which is in \(\mathcal {O}(m^2)\). Thus, we reduce the computational complexity of \(\mathbf {K}_{m+1}^{-1}\) from \(\mathcal {O}\left( m^3\right) \) to \(\mathcal {O}\left( m^2\right) \). Similar partitioning provides sequential updates to \(\varOmega _n^{(m+1)}\), a diagonal matrix:

where \(\zeta =\mathbf {k}_{nm}\mathbf {K}_m^{-1}\mathbf {k}_m({\bar{\mathbf {x}}}_{m+1})-\mathbf {k}_n({\bar{\mathbf {x}}}_{m+1})\). Updates of \(\varOmega _n^{(m+1)}\) without partitioning, driven by matrix–vector product(s) \(\mathbf {k}_{n,m+1}\mathbf {K}_{m+1}^{-1} \mathbf {k}_{n,m+1}^\top \) involve \(m^2n\) flops. Using (15) reduces that to \(\mathcal {O}(mn)\).

Unlike in Eq. (14), \(\mathbf {Q}_{m}^{(n)}\) cannot be trivially augmented to construct \(\mathbf {Q}_{m+1}^{(n)}\) due to the presence of \(\varOmega _n^{(m)}\) which is also embedded in \(\mathbf {Q}_{m}^{(n)}\). Yet there are some time savings to be found in the partitioned inverse

with \(\mathbf {Q}_{m*}^{(n)}= \mathbf {K}_m + \mathbf {k}_{nm}^\top \varOmega _n^{(m+1)-1} \mathbf {k}_{nm}\) built via updated values of \(\varOmega _n^{(m+1)}\), \(\varvec{\gamma }({\bar{\mathbf {x}}}_{m+1}) = \mathbf {k}_m({\bar{\mathbf {x}}}_{m+1})+\mathbf {k}^\top _{nm}\varOmega _n^{-1(m+1)} \mathbf {k}_n({\bar{\mathbf {x}}}_{m+1})\), \(\psi ({\bar{\mathbf {x}}}_{m+1}) = k_\theta ({\bar{\mathbf {x}}}_{m+1},{\bar{\mathbf {x}}}_{m+1})+k_n({\bar{\mathbf {x}}}_{m+1})^\top \varOmega _n^{-1(m+1)}k_n({\bar{\mathbf {x}}}_{m+1})\), \(\upsilon = \psi ({\bar{\mathbf {x}}}_{m+1})-\varvec{\gamma }({\bar{\mathbf {x}}}_{m+1})^\top \mathbf {Q}_{m*}^{-1(n)}\varvec{\gamma }({\bar{\mathbf {x}}}_{m+1})\) and \(\xi = -\upsilon ^{-1}\mathbf {Q}_{m*}^{-1(n)}\varvec{\gamma }({\bar{\mathbf {x}}}_{m+1})\). Similar to \(\mathbf {Q}_{m}^{(n)}\), calculating \(\mathbf {Q}_{m*}^{(n)}\) requires in flops in \(\mathcal {O}(m^2n)\). Consequently, the entire scheme can be managed in \(\mathcal {O}(m^2n)\).

B Determining neighborhood size

Little attention is paid in the literature to the choosing the number of (global) inducing points (Seeger et al. 2003; Titsias 2009b; Azzimonti et al. 2016) relative to problem size (N, d), except on computational grounds—smaller M is better. The same is true for local neighborhood size n in LAGP. Although there is evidence that the laGP default of \(n=50\) is too small (Gramacy 2016), especially with larger input dimension d, cubically growing expense in n limits the efficacy of larger n in practice. With local inducing points this is mitigated through cubic-in-m proxies, allowing larger local neighborhoods, thus implying more latitude to explore/choose good (m, n) combinations.

Toward that end, we considered a coarse grid of (m, n) and predictive RMSEs on Herbie’s tooth (\(d=2\)) and borehole \((d=8)\) toy problems. Setup details are identical to descriptions in Sects. 3.2 and 5.2, respectively, and we used the qNorm (\(\varPhi ^{-1}\)) template throughout. An LHS testing set of size \(N'=1000\) was used to generate the response surfaces of RMSEs reported in Fig. 10. These are shown in log space for a more visually appealing color scheme, and were obtained after GP smoothing to remove any artifacts from random testing. Grid elements where \(m>n\) were omitted from the simulation on the grounds that there are no run-time benefits to those choices.

Observe that both surfaces are fairly flat across a wide swath of m, excepting quick ascent (decrease in accuracy) for smaller numbers of inducing points in the left panel. The situation is similar for n. Best settings are apparently input-dimension dependent. Numbers of inducing points as low as \(m=10\) seems sufficient in 2d (top panel), whereas \(m=80\) is needed in 8d. For borehole, it appears that larger neighborhoods n are better, perhaps because the response surface is very smooth and the likelihood prefers long lengthscales (Gramacy 2016). A setting like \(n=150\) seems to offer good results without being too large. The situation is different for Herbie’s tooth. Here, larger n has deleterious effects. Its non-stationary nature demands reactivity which is proffered by smaller local neighborhood. A setting of \(n=100\) looks good.

These are just two problems, and it is clearly not reasonable to grid-out (m, n) space for all future applications. But nevertheless, we have found that these rules of thumb port well to our empirical work in Sect. 5. Our satdrag example (\(d=8\)) and classic \(d=21\) benchmark work well with the settings found for borehole, for example. Some ideas for automating the choice of (m, n) are discussed in Sect. 6.

Rights and permissions

About this article

Cite this article

Cole, D.A., Christianson, R.B. & Gramacy, R.B. Locally induced Gaussian processes for large-scale simulation experiments. Stat Comput 31, 33 (2021). https://doi.org/10.1007/s11222-021-10007-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-021-10007-9