Abstract

In this paper, we explore the learning and teaching of a maritime simulation programme to understand its deep learning elements. We followed a mixed methods approach and collected student perception data from a maritime school within a UK university, using a reflection-based survey (n = 112) and three focus groups with eleven students. The findings point to the need to define clear learning outcomes, improve the learning content to enable exploration and second-chance learning, minimise theory–practice gaps by ensuring a skills–knowledge balance and in-depth scholarship building, facilitate tasks for learning preparation and learning extension, and reposition simulation components and their assessment schemes across the academic programme. Overall, the paper provides evidence of the importance of deep learning activities in maritime simulation and suggests guidelines for improving existing practice. Although the findings are derived from a maritime education programme, they can be considered and applied in other academic disciplines that use simulation in their teaching and learning.


Introduction

Unique designs and applications of simulation have demonstrated wide educational success in many academic disciplines and professions, for example, nursing (Wyatt, Archer, & Fallows, 2015), computing (Jamil & Isiaq, 2019), business (Yahaya et al., 2017), and education (Chini, Straub, & Thomas, 2016). As a pedagogy, simulation is generally creative and interactive and can deliver specialised content through multiple means; for example, it offers practical experience through conscious and repetitive practice, which can provide rich learning for students (Bryan et al., 2009; Kolb, 1984; Sawyer et al., 2011). Simulation can also link academic learning with professional activities; thus, many academic programmes use it as an alternative to industry placement (Kelly et al., 2014; Rochester et al., 2012).

Maritime simulation provides naval experiences through virtual representations of ships and their surrounding environments and geographic locations (Woolley, 2009). Its versatile and realistic functions expose learners to vessel operation and associated mistakes, port facilities, and naval architecture (Haun, 2014). Human error is the primary cause of maritime accidents (Allianz Global Corporate & Specialty, 2017); therefore, maritime simulation is an important requirement in the training and professional development of seafarers to ensure safe and efficient navigation and seamanship.

Simulation training is offered in maritime education at many universities across the world. However, the teaching and learning features, value, and impacts of this scheme are under-researched (Paine-Clemes, 2006; Pallis & Ng, 2011). One limitation of maritime simulation is its singular focus on common human errors and associated responses, rather than on the wider application of the learning it facilitates (Hanzu-Pazara, 2008). In addition, maritime simulation programmes are predominantly training- or repetitive-practice-based; thus, their academic rigour is not always gauged against common principles of higher education, such as self-regulated, inquiry-based, shared, and applied learning (Evans & Kozhevnikov, 2016; Morley & Jamil, 2021; Stern, 2016). Nevertheless, research-informed discussion of teaching and learning within maritime simulation environments is gradually expanding in the literature. Examples include students’ self‐efficacy and motivation (Renganayagalu et al., 2019), academic module standardisation (Park, 2016), approaches to managing crises and complex tasks (Baldauf et al., 2016; Hjelmervik et al., 2018), and the role of reflection (Sellberg et al., 2021) in maritime simulation programmes. This paper adds a fresh perspective to this discourse by discussing deep learning elements in such a technology-enabled, scenario-based teaching and learning practice.

Maritime simulation: a pedagogical overview

Presently, simulation exercises in higher education depend largely on technology. With the help of high-end machinery, modern simulators can create complex and creative learning activities. However, extensive use of technology in education is not always helpful for effective teaching and learning. For example, learners’ disparate levels of confidence, knowledge, and skill in using technology may lead to mixed learning outcomes in such an environment (Johnson et al., 2016). In addition, a lack of proper pedagogical design, and of its efficient use, in technology-enhanced learning may create challenges and dissatisfaction for students and teachers (Arinto, 2016). Therefore, a simulation environment needs to address both technological and pedagogical aspects to enable effective learning (Sellberg, 2018).

Maritime simulation is a technology-enabled virtual reality environment. The hardware and equipment, ship operation tools, and specially designed learning space used to create such an environment are collectively called a simulator. Maritime simulators use large electronic screens, computer graphics, and mathematical modelling to create artificial three-dimensional scenarios. The scenarios are visually convincing and contain photo-realistic seascapes, ship interiors, sea environments, coastal areas, and seaports. Maritime simulation is a common and often mandatory training requirement for maritime professionals, including ship officers, shipping companies’ superintendents, marine pilots, and port officials. These training programmes minimise gaps between theory and practice within an interactive professional learning environment. Students participate in collaborative role plays in the same way they would when operating real ships. Each simulation scenario has its own learning objectives and assessment criteria. Through physical and cognitive engagement, students deal with varied challenges and environmental conditions: they plan their voyage, prepare navigational equipment, and navigate the ship. The courses are expected to prepare them to manage stress, prioritise multiple tasks, and deal with emergencies and changing conditions in real situations. These courses are generally facilitated by instructors who have prior experience of navigating actual ships, and by technicians and technical instructors who operate the simulator. This paper explores pedagogical aspects of Bridge Simulation (BS), a common maritime simulation that creates a virtual environment of the Bridge, the command centre of a ship from which all sides of the sea and waterways can be viewed.

Generally, the pedagogical characteristics of maritime simulation are complex because maritime programmes combine cognitive and behavioural features of training (Cunningham, 2015; Mindykowski, 2013). Unlike regular academic courses in higher education, maritime programmes vary widely in duration, which makes their learning and teaching procedures unique. However, all these programmes must comply with the Standards of Training, Certification and Watchkeeping for Seafarers (STCW) regulations. STCW provides comprehensive guidelines on maritime training objectives, entry requirements for students, trainers’ qualifications, training methods, learning resources, assessment schemes, and certification (International Maritime Organization, 2020). Maritime simulation programmes, including Bridge Simulation, must meet these requirements as well.

To meet pedagogical and professional expectations in an academic programme, addressing the ethos, conventions, and goals of the respective academic discipline is essential (Rystedt & Sjoblom, 2012). This cultural alignment is vital in maritime simulation research, particularly for understanding the implications of simulation technology for pedagogical practice and the maritime profession. Historically, disciplines have differed in how they understand and apply pedagogies; thus, the ways they adopt technology, and the academic goals that technology serves, differ between disciplines. Shulman’s concept of ‘signature pedagogies’ elaborates this individuality of instructional conventions, particularly how academic programmes follow distinct teaching and learning approaches to prepare students for different professions (Shulman, 2005). Therefore, pedagogical insights into maritime simulation should include discussion of discipline-specific features and maritime professional skills. The key objective of this paper is to evaluate a maritime simulation programme to understand which of its disciplinary features are linked to elements of deep learning.

The importance of deep learning in maritime education

The term ‘deep learning’ refers to two separate domains of knowledge, one linked to technology and the other to learning. First, it denotes a subfield of machine learning, within artificial intelligence, that is based on multi-layered artificial neural networks (Goodfellow, Bengio, & Courville, 2016). Second, academics and education researchers use it to mean higher order cognitive and emotional competencies that can build a learner’s character as an effective thinker and doer in complex situations (Quinn et al., 2019). In this paper, we explore the second domain within the context of maritime and higher education.

Higher education and deep learning

Advance HE, the professional membership scheme provider in the UK, defines five competencies, namely critical thinking, problem solving, collaboration, communication, and learning to learn as key elements of deep learning (Advance HE, 2020). These skills broadly characterise the features of learning and teaching in higher education; thus, university academic programmes are expected to embed them explicitly and implicitly. Deep learning skills are often interconnected and reciprocal in their actions and impacts.

- Critical thinking is ‘a crucial skill to cope with uncertainty, complexity and change’ (Caena, 2019, p. 23). It is the cognitive ability to understand different aspects of an issue or action through careful consideration and analysis of information (McPeck, 2016). In higher education, critical thinking is considered an important predictor of students’ long-term and professional success (Nold, 2017). Research evidence (e.g., Hyytinen et al., 2019; Pnevmatikos et al., 2019) shows that carefully designed pedagogical activities can enhance students’ critical thinking skills in academic programmes.

- Problem solving is a special cognitive capacity and an essential graduate skill for learning, employment, and greater economic development (Greiff et al., 2013; Lowden et al., 2011). It provides strategies for resolving cross-disciplinary and real-world problems (OECD, 2004). In higher education curricula, other deep learning skills, such as critical thinking and collaboration, can help students develop problem-solving competence and achieve academic success (Arvanitakis & Hornsby, 2016; McPeck, 2016).

- Learning is a social phenomenon in which interaction and negotiation of information are vital (Vygotsky, 1978). Collaboration creates opportunities to tackle challenges and co-create ideas in a shared learning environment. It also promotes engagement, teamwork, and social skills, which are essential in professional practice (Scager et al., 2016). In higher education, pedagogical approaches and activities such as seminars and problem-based assignments can enable collaboration. To ensure effective collaboration, these activities should use challenging and interesting tasks that develop students’ shared ownership and understanding (Scager et al., 2016).

- Communication is another essential skill required in daily life and at all stages of education. There are three main types of communication: verbal (spoken and written), nonverbal (the use of signs and body language), and visual (such as illustration, typography, and animation). Effective communication includes using appropriate language, comprehension, questioning, active listening, and cultural awareness (Henderson & Mathew, 2016). In higher education, communication is considered an important graduate attribute for achieving academic excellence and employment (OECD, 2013). Research findings suggest including inquiry (Carter et al., 2016), discussion (Dallimore et al., 2008), and student-centred learning activities (Baporikar, 2018) to enhance students’ communication skills in higher education.

- Learning to learn is a long-term and transferable competence (Hoskins & Fredriksson, 2008; Taguma, Feron, & Hwee, 2018). It involves practical strategies and skills for personal development, for example, academic skills, time management, and exploring difficulties in learning processes as well as identifying potential solutions (European Council, 2018; Nisbet & Shucksmith, 2017). Learning to learn skills are highly valuable in higher education, particularly because students continue to use them in their future professions and work. In formal education, students’ capacity to learn how to learn can be enhanced by developing their critical thinking, problem solving, and learning management or metacognitive skills (Caena, 2019).

Scope of deep learning in maritime education and training

Traditionally, maritime curricula, particularly training programmes, are dominated by hands-on practical skills that are directly implementable in professional practice. Positioning this emphasis and its learning objectives within higher education settings is problematic, as higher education programmes have historically been designed to develop learners’ deep learning or cognitive capabilities, such as critical thinking and analytical skills (Manuel, 2017). Because of this difference, and the different roles of seafarers, some higher education institutions provide academic and purely vocational curricula separately (Francic, Zec, & Rudan, 2011). However, in recent years, maritime training and legislative authorities have highlighted the need to incorporate deep learning skills in maritime curricula. For example, in 2018, the International Association of Maritime Universities (IAMU) identified fifteen key competencies for future seafarers, namely technical competencies, technological awareness, adaptability and flexibility, computing and informatics skills, teamwork, communication skills, leadership, discipline, environmental and sustainability awareness, learning and self-development skills, complexity and critical thinking, language ability, professionalism and ethical behaviour, responsibility, and inter-personal and social skills (cited in Cicek et al., 2019). This list includes deep learning skills such as critical thinking, communication, teamwork, and learning to learn.

The ongoing Fourth Industrial Revolution, or Industry 4.0, is altering traditional industrial practices through remotely controlled and automated technology that uses machine-to-machine communication and artificial intelligence (Grzybowska & Lupicka, 2017; Lasi et al., 2014; World Economic Forum, 2016). This fast-changing role of technology is also transforming maritime equipment and operations, which require new knowledge and professional skillsets. As a result, seafarers now need to improve their capacity to adjust to changed work environments and new operational procedures, for example with unmanned or remotely operated vessels. Incorporating deep learning skills in maritime education and training emerges as a helpful approach to preparing seafarers for more technology-oriented and complex work environments. Emerging concepts of applied and real-world learning show practical ways of accommodating deep learning skills in higher education and in professional training programmes leading to particular professions, including the maritime industry (Morley & Jamil, 2021).

The study

To address the lack of pedagogical discussion on maritime simulation in the higher education literature, and to explore the scope and feasibility of deep learning skills in maritime simulation sessions, we investigated the following three research questions by analysing students’ relevant experiences and perceptions.

i. How do the students’ learning experiences define features of maritime simulation pedagogy?

ii. To what extent do their perceptions indicate deep learning elements in the simulation practice?

iii. To what extent do the findings supply pedagogical directions for enhanced teaching and learning activities in maritime simulation?

Methodology

We followed a mixed methods approach and used the Bridge Simulation (BS) training courses of a reputable maritime school at a UK university as a case study. The department was using a Kongsberg Maritime Polaris Bridge Simulator for full mission simulation training (www.kongsberg.com). This setting comprises one Full Mission Simulator (FMS) and five multi-purpose navigational simulators. The simulator’s facilities include sophisticated configurations, such as a range of modern navigational equipment, propulsion systems, single and twin rudders, bow and stern thrusters, portfolios of ‘own-ship’ and target ship structures, and a database. Using BS, the school offers a range of operational and management level maritime courses, for example Navigation Aids, Equipment and Simulation Training (NAEST), Electronic Chart Display and Information Systems (ECDIS), Bridge Team Management (BTM), Bridge Resource Management (BRM), and Yacht Navigation and Radar. We investigated the general pedagogical approaches and procedures of these training programmes by exploring students’ learning experiences and perceptions. We obtained ethical approval from the parent university and followed standard ethical guidelines throughout the study.

Research participants

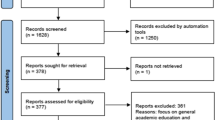

A total of 115 students participated in the study: 112 in the survey and 11 in the focus group sessions, with 8 taking part in both and 3 only in the focus groups. The students had a rich mixture of professional levels and experiences, namely (i) young cadets studying towards a professional degree required for working as OOWs (Officers of the Watch) in the shipping industry, (ii) experienced yacht officers aiming for professional progression, and (iii) experienced pilots of a renowned British port attending a short course as part of continuing professional development (CPD). We categorised them into three age groups, namely under 30, 31–40, and 40 and above, as a proxy for professional experience levels. This age-based categorisation was suggested by one of the researchers of this study, who has more than thirty years of maritime industry experience, including navigation, ship operation, and management of multinational crews. In his observation, participants aged under 30, 31–40, and 40 and above generally have entry-to-intermediate, mid-level, and senior-level professional experience, respectively. Most participants were male and under the age of thirty (see Fig. 1).

During the data collection period, the students were attending courses leading to Merchant Navy and Commercial Yacht certifications, in which BS courses were embedded as an integral academic component. The students’ diverse professional exposure and varying lengths of experience provided a rich experiential account of simulation-based learning and teaching.

Data collection methods

We collected research data using two methods: (i) a survey (n = 112) administered in ten BS sessions using a reflective self-report questionnaire, and (ii) three focus group sessions with 11 students. The survey data were rich, as they captured gender and age (and hence experience) differences and covered multiple themes, namely pedagogic procedure, learning content, student engagement, and motivation (Sue & Ritter, 2007). The focus groups provided detailed answers to the research questions along with useful examples and explanations (Bergold & Thomas, 2012). Broadly, the survey addressed Research Question 1 by allowing students to respond on different pedagogical components. The focus groups mainly addressed Research Question 2 by giving students opportunities to reflect on features of maritime simulation and its deep learning elements. Together, the survey and focus groups were expected to help identify pedagogical directions for enhanced maritime simulation practice, addressing Research Question 3.

The survey questionnaire contained eighteen items: two demographic questions (gender and age) and sixteen self-evaluation items divided equally among four pedagogical areas. The questions were informed by related learning theories, educational concepts, and empirical studies (see Table 1). Limiting the survey items to these four areas was intended to enable a focused investigation and obtain a baseline understanding of the qualities of simulation-based teaching and learning.

Apart from the demographic questions, all survey items used a five-point Likert scale: Strongly Disagree, Disagree, Neutral, Agree, and Strongly Agree (Likert, 1932).
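The paper does not state how the four items in each pedagogical area were combined into one score. One plausible reading, offered here only as an assumption, is that responses were coded 1 (Strongly Disagree) to 5 (Strongly Agree) and averaged per area, which would put each student’s area score on a quarter-point grid consistent with medians such as 3.25 and 4.25 in Table 3. The minimal Python sketch below illustrates that coding; `LIKERT`, `area_score`, and the responses are hypothetical and are not the study’s instrument or data.

```python
from statistics import median

# Assumed numeric coding of the five response options (not stated in the paper).
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}
ITEMS_PER_AREA = 4  # sixteen self-evaluation items / four pedagogical areas


def area_score(responses):
    """Mean of one student's four item codes for one pedagogical area (hypothetical helper)."""
    if len(responses) != ITEMS_PER_AREA:
        raise ValueError(f"expected {ITEMS_PER_AREA} responses, got {len(responses)}")
    return sum(LIKERT[r] for r in responses) / ITEMS_PER_AREA


# Hypothetical answers from three students to the four 'content' items.
content = [
    ["Agree", "Neutral", "Neutral", "Agree"],
    ["Neutral", "Disagree", "Agree", "Neutral"],
    ["Strongly Agree", "Agree", "Neutral", "Agree"],
]
scores = [area_score(r) for r in content]
print(scores, median(scores))  # [3.5, 3.0, 4.0] 3.5
```

Because every area score is a sum of four integers divided by four, it always falls on a multiple of 0.25; with an even number of respondents, a median can also land halfway between two such values.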

One of the authors of this paper, who was not a member of the maritime school where the study took place, facilitated the focus group sessions. This researcher had no prior teaching or academic support relationship with the participants, which helped avoid potential bias and allowed participants to share information freely. Each session lasted about forty-five minutes. The discussion topics centred on BS pedagogies, mainly the activities that enabled or hindered participants’ learning. The key questions were:

- What were the learning objectives of BS classes?
- What did the BS sessions want you to learn?
- What elements of the BS sessions did attract you the most and why?
- To what extent was the BS module relevant to your future profession?
- To what extent were the assessment schemes of BS modules reliable?

Although the discussion was mainly collaborative, with participants’ views largely complementing one another, the questions encouraged them to reflect critically and to support their views with examples.

Data processing and analysis procedure

We processed the survey data using the Statistical Package for the Social Sciences (SPSS, version 24). Because the data were ordinal and group sizes differed substantially, particularly by gender (male:female = 104:8), we used non-parametric methods: the Mann–Whitney U test for gender (two groups), the Kruskal–Wallis H test for age (three groups), and medians as the measure of central tendency. We also used Spearman’s rank correlation to assess the strength and statistical significance of the relationships between the four pedagogical elements: procedures, content, motivation, and engagement.
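The analysis itself was run in SPSS. As a minimal, standard-library Python sketch of what the two rank-based tests compute (test statistics only, no p-values), the code below gives tied scores their average rank, then computes U = R_x − n_x(n_x + 1)/2 for two groups and, for three or more groups, H = 12/(N(N + 1)) · Σ R_i²/n_i − 3(N + 1), divided by the usual tie correction. The function names and sample data are hypothetical; this is an illustration of the textbook statistics, not the authors’ procedure.

```python
from itertools import chain


def midranks(values):
    """Rank values 1..n; tied values share the average of the positions they occupy."""
    ordered = sorted(values)
    first, last = {}, {}
    for pos, v in enumerate(ordered, start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two independent samples; U_x + U_y == len(x) * len(y)."""
    ranks = midranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    return u_x, len(x) * len(y) - u_x


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H for k independent groups, with the usual correction for ties."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = midranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start : start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 * total / (n * (n + 1)) - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over each run of t tied values.
    # (Undefined if every response is identical.)
    tie_counts = {}
    for v in pooled:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    return h / correction


# Hypothetical area scores (not the study's data).
male = [4.25, 4.0, 4.5, 3.75, 4.25]
female = [4.0, 4.5, 4.25]
print(mann_whitney_u(male, female))
under_30, age_31_40, age_40_plus = [4.25, 4.0, 3.75], [4.5, 4.25], [4.0, 4.5, 4.75]
print(kruskal_wallis_h(under_30, age_31_40, age_40_plus))
```

In practice, SciPy’s `scipy.stats.mannwhitneyu` and `scipy.stats.kruskal` return these statistics together with p-values; statistical packages differ in which of the two U values they report, so the smaller U is often quoted for comparison.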

We audio recorded all the focus group sessions and transcribed them verbatim with the help of a professional transcriber. We analysed the data manually in three stages: (i) generating initial codes by identifying and separating individual features of the simulation pedagogy and its deep learning elements; (ii) grouping the codes into initial themes, namely teaching/learning procedure, assessment, decision-making provision, leadership skills, problem-solving activities, theory–practice gaps, challenging nature of the content, and intended learning outcomes; and (iii) positioning the themes in a narrative description. While analysing the findings, we cross-evaluated the survey and focus group results and compared them with established higher education concepts. This triangulation helped us achieve rich perspectives and practical conclusions (Johnson et al., 2007; Teddlie & Tashakkori, 2009).

Findings

The survey and focus group data shed light on students’ learning experiences in maritime simulation from two broad perspectives: maritime simulation as a pedagogical approach, and the presence of deep learning elements in its educational environment and procedures. Together, the findings provide insights into approaches that can address both higher education and professional requirements in the changing maritime industry.

Survey findings: Maritime simulation as a pedagogical approach

We first examined whether student perceptions differed significantly by gender or age group. The Mann–Whitney U test showed no significant difference between male and female students in how they perceived their engagement, motivation, content, and educational procedures in the Bridge Simulation (BS) sessions. Likewise, the Kruskal–Wallis H test showed no statistically significant differences between the age groups. These findings suggest that students of different genders and age groups had similar learning experiences in the BS sessions. The effect sizes were mostly small, and moderate in two cases (see Table 2), indicating only minor differences between the gender and age groups.
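The paper reports small and moderate effect sizes but does not say which measure was used. Two common choices for these tests, assumed here, are r = |Z|/√N for the Mann–Whitney U test and η²_H = (H − k + 1)/(N − k) for the Kruskal–Wallis H test; Cohen’s conventional benchmarks for r are about 0.1 (small), 0.3 (medium), and 0.5 (large). The sketch below uses the normal approximation to U without a tie correction, so it will slightly understate |Z| when many responses are tied. Function names and input values are hypothetical.

```python
import math


def mann_whitney_r(u, n1, n2):
    """r = |Z| / sqrt(N), with Z from the normal approximation to U (no tie correction)."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    return abs(z) / math.sqrt(n1 + n2)


def kruskal_eta_squared(h, k, n):
    """Eta-squared estimated from H for k groups and n observations: (H - k + 1) / (n - k)."""
    return (h - k + 1) / (n - k)


# Group sizes mirror the study's gender split (104 vs 8) and total sample (112),
# but the U and H values are invented for illustration.
print(round(mann_whitney_r(u=300, n1=104, n2=8), 3))
print(round(kruskal_eta_squared(h=4.0, k=3, n=112), 3))
```

With a 104:8 split, the standard error of U is large relative to the smaller group, which is one reason a non-significant test can still sit alongside a non-trivial effect size.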

Spearman’s rank correlation showed the strength of the monotonic relationships between students’ perceptions of engagement, motivation, content, and educational procedure (see Fig. 2). Following the interpretation proposed by Dancey and Reidy (2007), in which coefficients of +0.1 to +0.3 are weak, +0.4 to +0.6 moderate, and +0.7 to +0.9 strong, the results revealed mixed relationships among the four elements.
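Spearman’s rho is the Pearson correlation computed on ranks, so it captures monotonic rather than strictly linear association. The hypothetical sketch below computes it with average ranks for ties and maps the coefficient to the verbal bands from Dancey and Reidy (2007) quoted above. Treating values that fall between published bands (for example 0.35) as belonging to the lower band is our assumption, as are the function names and sample data.

```python
import math


def midranks(values):
    """Rank values 1..n; tied values share the average of the positions they occupy."""
    ordered = sorted(values)
    first, last = {}, {}
    for pos, v in enumerate(ordered, start=1):
        first.setdefault(v, pos)
        last[v] = pos
    return [(first[v] + last[v]) / 2 for v in values]


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two samples' midranks."""
    if len(x) != len(y):
        raise ValueError("samples must be paired")
    rx, ry = midranks(x), midranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def dancey_reidy_label(rho):
    """Verbal label for |rho|; in-between values fall to the lower band (assumption)."""
    r = abs(rho)
    if math.isclose(r, 1.0):
        return "perfect"
    if r >= 0.7:
        return "strong"
    if r >= 0.4:
        return "moderate"
    if r >= 0.1:
        return "weak"
    return "zero"


# Hypothetical paired area scores for five students (not the study's data).
engagement = [4.25, 4.5, 3.75, 4.0, 4.75]
motivation = [4.0, 4.5, 4.0, 3.75, 4.5]
rho = spearman_rho(engagement, motivation)
print(round(rho, 2), dancey_reidy_label(rho))
```

Ranking before correlating is what makes the coefficient appropriate for ordinal Likert-derived scores, where the distance between ‘Agree’ and ‘Strongly Agree’ need not equal that between ‘Neutral’ and ‘Agree’.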

The results in Fig. 2 indicate a moderate relationship between engagement, motivation, and educational procedures. This suggests that the educational procedure, that is, the teaching and learning activities in BS, may have had a modest influence on students’ engagement and motivation, as is generally expected in educational practice, although a correlation alone cannot establish the direction of this relationship.

The content of BS showed different levels of relationship with the other three elements. On the one hand, it showed a moderate relationship with students’ motivation, suggesting that it may have succeeded in creating curiosity and interest in learning. On the other hand, content had only a weak relationship with engagement and with the teaching/learning procedure, suggesting that it was less inspiring and that students were less satisfied using it. This interpretation is supported by the lower median score for content (3.25) compared with engagement (4.25), motivation (4.25), and learning procedures (4.25), as listed in Table 3.

Focus group findings: elements of deep learning in maritime simulation

The focus group data revealed general features of Bridge Simulation (BS), including its assessment-centredness and strong emphasis on problem-solving and decision-making skills. They also indicated the need to revamp traditional BS sessions around defined learning outcomes and to incorporate realistic approaches that minimise theory–practice gaps. In reporting the findings, we did not categorise student views by gender or age because the survey results showed no significant differences in these areas. Nor did we find conflicting student perceptions of simulation practice across the three focus group sessions; rather, the data were complementary, which helped us formulate the following narrative on teaching and learning in the maritime simulation environment. When quoting data, we use the following codenames to indicate the session number and type of participants:

- FG1: session one with young cadets studying towards a professional degree
- FG2: session two with experienced yacht officers
- FG3: session three with experienced pilots

The focus group data reveal various instances of deep learning. These include decision making, implementation and analysis of decisions, and reflection as critical thinking and problem-solving activities; using prior learning and learning from mistakes as learning to learn practices; and faculty–student teamwork and students’ use of technical communication to lead and manage simulation tasks as collaboration and communication activities (see the four discussion themes below).

Assessment is the heart of BS sessions, and problem-solving drives the learning

Students considered the simulation sessions to be mainly an assessment exercise through which they could test what they had already learnt.

It (simulation) was basically focused on what we had already learnt during other parts of our course… There was a brief introduction to the simulators and then sort of straight away we were there using the simulators … I felt as they were always monitoring what we did, the way we were complying with everything which then all built up to the final larger assessment (FG2).

We were informed that it was a continuous assessment throughout and on the first hour he (the lecturer) said how we were to be assessed. It was a publication we were given so we were aware of what we were being asked of … (FG1).

The assessment was formative in nature and students were expected to learn from their mistakes.

They (the lecturers) observe the first three days of teaching and then the last two days they examine you so the first three days you can make mistakes and they’ll tell you what’s going wrong (FG2).

… if they (the lecturers) felt that one person in the Bridge was weaker, they would let us know and take us aside privately and say you really made the wrong manoeuvre or you’re seeming very stressed, and then maybe give some private tuition (FG1).

The lecturers monitored simulation activities closely and engaged themselves as team members.

… they’re assessing your personality and how confident you are, how assertively you can make decisions. They were quite fair, and they’ve got quite a good way of assessment … they’re monitoring your actual decisions, how you interact on the Bridge (FG1).

Real-world decision making is the key educational goal

Students had opportunities to make divergent decisions based on situational demands. One student described the following exercise.

… a traffic separation scheme exercise was crossing the Dover straight, a busy area and we all three ships made different decisions. The vessel I was on, we decided to adjust our speed and stay ninety degrees to the TSS (Traffic Separation Scheme). It was something which personally I think I overlooked how to reduce speed and speed up could work and it meant that you could cross the TSS effectively. But I guess I overlooked that possibility before, and then I thought we’ve done the best job wherein that all those guys have gone the wrong way down the TSS … in reality, we learnt that adjusting your engines like that can also be problematic in a realistic environment with the engineers and it may also not be readily apparent, and sometimes an alteration of course might have been more effective. I think that was the first time when all three ships had made very different decisions to achieve one end goal and we were able to take apart each bit and formulate what we think was the best decision in the end (FG3).

They experienced changing roles and implemented decisions in various situations.

In brainstorming, people’s strengths and weaknesses were highlighted in a Bridge team, but there was always an Officer of the Watch (OOW), one or two Radar Operators and a Helmsman, so there was clear sort of delegation, and then the next exercise you’d switch it round and there’d be a different officer … (FG1).

In reality, there are times when the pilot may overlook something because there are so many things and multi-tasking on the Bridge and so many things to look after, and he can make errors too. I think the important thing I learnt was not to neglect your duty as an officer and that you have responsibilities of the ship under the Master (Captain) (FG3).

Students also had occasions to reflect on their activities and analyse the decisions they had taken in the simulation environment.

We had three different Bridges, and there were three different ways of doing what we did … you could just look through and go … all of them are correct but it’s all just different sort of ways around it … (FG1).

They had all the recordings up there and they would say why you did that. They would then answer and say well that’s good, did you think you could’ve done this better … (FG2).

The sessions also involved leadership-building activities.

On the first day, they gave us a sheet that said each of the exercise (simulation scenarios), who was the Officer of the Watch, who was the Radar (Officer), who was the Helmsman. … It was whoever it happened to fall on which actually gives the chance to show your leadership qualities (FG1).

… we were talking about Bridge team management which would come in handy in future when you’re trying to deal with other people, how would you manage, which is quite interesting (FG2).

Students experience theory–practice gaps and a lack of intended learning outcomes

Students found a blend of theoretical and practical elements in BS tasks, but the expected learning outcomes were not always clear to them.

It was a good combination of theoretical elements in the course so far like Rules of Road (Collision regulations at sea), application of the Rules of the Roads, not just spitting them out verbatim, we can apply them in traffic separation schemes (FG3).

Moreover, students saw knowledge acquisition as the ultimate goal of the sessions and felt that they did not always gain the relevant skills.

I think it’s just the knowledge because it’s not a course that’s meant to enhance your boat handling abilities, it’s just meant to show that you know what you’re doing (FG2).

The Bridge Simulation is very much focused on navigation, and we’re also studying the cargo aspect, stability, weather meteorology, so it is very much focused on safety of navigation. I think the exercises that we handled were of much greater difficulty than we’d expect to handle in real life. I think they just want us to know that we can call the Captain, and, if we were in the worst-case scenario, how we would deal with the situation (FG1).

Some students thought the simulation tasks were not challenging enough and that they therefore learned little from these activities.

This course was actually combining everything we’ve already learnt which is why there wasn’t too much learning involved (FG2).

There were some sorts of time constraints … there was a lot going on, but I thought it was again quite good to add a bit of pressure and just sort of realism to it … (FG1).

Students felt the need for carefully designed BS sessions

In the focus groups, students expressed the hope of using contemporary vessels and modern equipment. They also emphasised adopting advanced simulation content that can provide futuristic scenarios.

[In BS,] … we use so much visual information. A small field of vision in a simulator for the sort of work we do is always fairly close quarter (situations) …. You know it becomes a game because you’re just looking at electronics where we may use to get to where we’re going. But, once we get there, it’s still very visual. Being a pilot, a simulator for us has to be good visually (FG3).

I think the stuff that we’re doing at the minute is pretty old, old sort of Bridge Simulation stuff (FG2).

I’m pretty sure that a lot of ships now and yachts are operated from the controls. It’d be pretty handy to know how to use all this modern equipment… maybe even a couple of years in the future, what a futuristic Bridge will look like … (FG2).

Students suggested using diverse and challenging content, but they believed that unrealistic and extremely tough scenarios could destroy their confidence.

You’re using different sorts of ECDIS (Electronic Chart Display and Information System), and the assessment is different here. It would be quite nice to have it streamlined (FG2).

The basic principle of simulation is to give the people confidence. So, if you overload them with scenarios that are unrealistic, all you’re going to do is just destroy their confidence (FG3).

They also discussed the benefits of taking participants’ experience and learning expectations into account in BS activities.

I suppose many people come here who have only a couple of years’ experiences and may not have much or any experience on the Bridge at all. They’ll probably get a lot more out of it than what some of us would have been doing for ten, fifteen years (FG3).

Ours (profession) is very much driven by what we want to achieve, so once you become a class-one pilot, you pretty well have some set pieces you have got to do each time, but the rest of it is what you want to do (FG3).

Overall, students considered the positioning of simulation modules within the curriculum to be an important step towards enabling effective learning.

… within three years, we do sort of four-five months doing basic stuff. Then, we go to sea, we do nine months at college doing a little bit more advanced stuff, go to sea, and then for us we’ve just got this phase (BS training). We’ve got loads of short courses, we’ve got the Nexus simulator (maritime simulation software) course, we’ve got GMDSS (Global Maritime Distress and Safety System) radio communications course, lots of these sorts of courses, and then we’re just prepping for our final oral exams. By this stage, we’re not learning anything new, we’re revising and refreshing what we’re doing (FG1).

Timewise it was quite constrained, and I think as a class we all agree that maybe if we’d done something similar like this in the past, then we would’ve had a better platform to do this course (FG1).

Discussion

As set out in the research questions, our study aimed to explore three aspects of maritime simulation programmes: (i) student perceptions of their learning and teaching features, (ii) the extent of deep learning elements, and (iii) feasible approaches, if any, for improving existing practice. The survey and focus groups, individually and together, shed light on these areas and provide fresh insights into the use of maritime simulation as an academic approach to facilitating deep learning.

Firstly, the survey findings illustrate pedagogical features, namely teaching/learning procedure, content, motivation, and engagement, in maritime simulation practice. This, along with the findings from the focus groups, responds to Research Question 1. The survey results report students’ high satisfaction with teaching/learning procedure, motivation, and engagement. The moderate relationships among these areas also suggest that they reinforce one another in creating an active learning environment. However, the weak relationship of content with teaching/learning procedure and student engagement suggests that the content may lack enabling power. In the focus groups, students said they did not need any preparation to participate in the simulation sessions, which may partly explain this weakness. It is likely that either the content is not challenging enough for students to feel the need for extra preparation, or the lecturers do not provide structured preparatory activities that could make the sessions more engaging. Empirical studies suggest that preparation before actual sessions can enhance students’ access to and interest in learning content (Yumusak, 2020) and can facilitate participation and collaboration in the sessions themselves (Blaser, 2019).

In technology-enhanced learning environments, student engagement and motivation are critical areas that depend on teaching structure, strategies, and educational content (Bond et al., 2020; Dunn & Kennedy, 2019). Although the students reported continued engagement in simulation tasks, the focus group results suggest mixed perceptions of motivation and learning expectations. Students’ low satisfaction with the content, and its weak relationship with the other pedagogical components, namely engagement and procedure (see Fig. 2), point to several possible explanations: for example, the content may not have been sufficiently interesting and encouraging, or students may not have recognised its value as they did that of the teaching and learning procedure. Another possible explanation that emerged in the study is the assessment-centred nature of the simulation sessions, which may have discouraged students from exploration, risk-taking, and experimentation.

Secondly, the focus group results, together with the survey findings, reveal the state of deep learning elements in the simulation practice and answer Research Question 2. Critical thinking and problem-solving, in the form of decision making, implementation of decisions in changing situations and roles, analysis of decisions, and reflection-based activities, appear strongly in students’ comments. Students also report instances linked to learning-to-learn practices, namely tasks for testing prior learning and opportunities to learn from mistakes. Conversely, evidence of collaboration and communication practice is limited to co-learning activities through faculty–student teamwork and students’ use of technical communication for leading and managing simulation tasks.

Students report opportunities to use deep learning skills and the benefits of doing so. For example, they solve practical problems while operating ships and make technical and professional decisions in complex situations. The sessions give them occasions to exhibit leadership and management skills as team members, which improves their overall professional practice. However, the moderate and weak relationships between educational procedures, engagement, motivation, and content found in the survey indicate scope for approaches that calibrate and develop these components. In this regard, the focus group data suggest a few areas for improvement, such as defining learning objectives and incorporating challenging tasks and investigation opportunities. Prior research suggests that these actions have the potential to improve students’ deep learning skills (Archer-Kuhn et al., 2020; Warburton, 2003) and to ensure high satisfaction with learning (Chiang et al., 2020; Chotitham et al., 2014).

Generally, maritime simulation emphasises technical skills in ship operation and maritime equipment over specialised knowledge and deep learning in the field. Students’ post-session discussion and reflection, along with lecturer-led debrief sessions, appear to be ad hoc and spontaneous. The professional expertise and experience-driven advice shared by lecturers and peers are undeniably valuable in such profession-focused educational programmes. However, gaps between theory and practice seem to limit the in-depth scholarship building that students need for informed decision making in complex settings or when performing changed roles.

The survey and focus group results lead to the following guidelines and strategies on improving existing maritime simulation practices. The suggested approaches respond to Research Question 3.


The lack of defined learning outcomes in maritime simulation programmes is an important finding of this study. In formal education, transparent learning outcomes, also termed teaching and learning objectives, help ensure academic quality, reduce stress, and enhance learning and teaching satisfaction through achieving the desired goals. Higher education curricula are expected to contain multifaceted academic objectives, for example, students’ gains in disciplinary knowledge and reasoning; the development of personal attributes, such as critical thinking and values; and the enhancement of collaboration and communication skills (Crawley, Edstrom, & Stanko, 2013). Clear learning outcomes can help achieve these targets and direct pedagogical procedures towards a shared and supportive educational environment, as Havnes and Proitz suggest:

Learning outcomes clearly direct teaching and students’ learning activities, opening the way for feedback and dialogue between and among teachers and students. Moreover, LOs (learning outcomes) can support internal dialogue and enhance self-assessment (Havnes & Proitz, 2016, p. 219).

However, maritime education and training for seafarers is highly profession-focused and skills-oriented; thus, the learning objectives for traditional maritime simulation sessions need to combine skills- and knowledge-related outcomes. It is vital that the learning outcomes comply with and complement the professional standards set by the relevant regulatory and certification bodies, such as the International Maritime Organization (IMO), the International Organization for Standardization (ISO), and maritime administrations (for example, the Maritime and Coastguard Agency and the Merchant Navy Training Board in the UK). Hence, policy-level recognition of the scope and value of deep learning alongside practical skills building in maritime simulation is essential to allow education and training providers to incorporate such objectives into their academic programmes.


The study shows the importance of incorporating opportunities for exploration, risk-taking, and experimentation to enable deep learning in maritime simulation. It appears beneficial to modify the very nature of traditional simulation pedagogy and create space for learning-centred activities. Research in technology-enhanced learning shows the strengths of evidence-, case-, or scenario-based discussion (Forstronen et al., 2020); research- or inquiry-based tasks (Lamsa et al., 2018); second-chance activities that allow students to make mistakes and learn from them (Atanasyan et al., 2020); and collaborative scholarship building by students (Nel, 2017), all of which could be incorporated into maritime simulation to enhance student learning.


The study indicates gaps between simulation activities and real-world practice. Empirical evidence suggests that exploratory tasks linked to target learning and practice points (Tempelman & Pilot, 2011) and systematic reflection supported by prior evidence and theoretical underpinnings (Veine et al., 2020) are effective tools for combining theory and practice, leading to enhanced communication, criticality, and collaboration.


The findings demonstrate a lack of challenging tasks in simulation programmes, which could be addressed by adding pre-simulation study and post-simulation learning extension through a flipped model of instruction. Flipped approaches can enhance interaction between students and lecturers and allow students to become inventive enquirers and take greater responsibility for their learning (Bergmann & Waddell, 2012; Guo, 2019; Herreid & Schiller, 2013). This extended pedagogy can also create independent or collaborative study opportunities based on appropriate simulation scenarios. However, student experiences and needs must also be considered when planning maritime simulation schemes, including their pre- and post-simulation activities.


The study reports students’ lack of preparation for attending simulation sessions. Essential prior knowledge, awareness of the learning environment, and planned learning strategies facilitate effective learning (Biwer et al., 2020; Lee et al., 2019; Tacgın, 2020). Therefore, carefully designed preparatory tasks for maritime simulation activities seem likely to benefit both students and faculty members. A coordinated and connected programme design, and the repositioning of the simulation component within the overall curriculum, can be another effective approach (Fung, 2017). This can help students gain essential prior knowledge and study skills, prepare useful queries and strategies to apply in simulation exercises, and, ultimately, attend the sessions as informed and active participants.

Conclusion

Pedagogical aspects of maritime simulation are an emerging field in the higher education literature. Maritime simulation, and maritime education as a whole, is generally considered repetitive practice-based training; thus, its pedagogical value as a higher education programme has tended to be overlooked. This study was an opportunity to explore teaching and learning within such a technology-aided environment in relation to four pillars of higher education: content, motivation, engagement, and teaching/learning procedure. The findings provide insights into the existing qualities of deep learning in Bridge Simulation (BS), a common form of maritime simulation. Based on the nature of the pedagogical practice and its deep learning elements, the study provides recommendations in the areas of content and curriculum design. These include articulating clearer learning goals and outcomes for the sessions, expanding the academic goals from assessment to learning, placing simulation modules rationally within the curriculum to ensure that students have the necessary prior knowledge, including more challenging tasks, and creating futuristic simulators that are technologically and educationally cutting-edge.

The findings of this study are derived from one maritime institute situated at a university in the UK and may not represent the academic ethos and practices of other institutions and their maritime simulation exercises. In addition, the evaluation of the teaching and learning of the programme was based on student perceptions only. The findings would likely be more inclusive, and thus more complete, if the perceptions of the associated faculty members and learning technologists had also been gathered and analysed. Another limitation is that the study treats the simulation programme as an isolated academic scheme; it did not explore aspects of knowledge and skill transfer between non-simulation and simulation-based academic programmes. Nevertheless, the findings of this empirical study provide useful baseline evidence on the importance of deep learning elements in maritime simulation and offer practical guidelines for improving the activities in line with the principles of contemporary higher education practice. They also suggest strategies for enhancing the educational attainment and deep learning competences of seafarers, which the International Maritime Organization has explicitly highlighted. Maritime simulation still requires continued and in-depth exploration to understand its pedagogical qualities more fully. For example, the cognitive, behavioural, and emotional facets of student engagement, one of the pedagogical elements discussed in this paper, could be studied to understand how they facilitate or hinder deep learning within a simulation environment. It is also important to investigate whether traditional simulation exercises can meet the learning objectives of the fast-changing maritime industry, for example, in relation to unmanned and remotely operated ships. This study points to these needs and calls for further investigation and discussion of the unique teaching and learning features of maritime simulation.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Advance HE. (2020). Deep learning. Retrieved on 20 April 2020 from https://www.advance-he.ac.uk/knowledge-hub/deep-learning

Allianz Global Corporate and Specialty. (2017). Safety and Shipping Review 2017. Retrieved on 30 April 2020 from https://www.agcs.allianz.com/content/dam/onemarketing/agcs/agcs/reports/AGCS-Safety-Shipping-Review-2017.pdf

Archer-Kuhn, B., Wiedeman, D., & Chalifoux, J. (2020). Student engagement and deep learning in higher education: Reflections on inquiry-based learning on our group study program course in the UK. Journal of Higher Education Outreach and Engagement, 24(2), 107–122.

Arinto, P. B. (2016). Issues and challenges in open and distance e-learning: Perspectives from the Philippines. International Review of Research in Open and Distributed Learning, 17(2), 162–180.

Arvanitakis, J., & Hornsby, D. J. (2016). Are universities redundant? In J. Arvanitakis & D. J. Hornsby (Eds.), Universities, the citizen scholar and the future of higher education (pp. 7–20). Palgrave Macmillan.

Atanasyan, A., Kobelt, D., Goppold, M., Cichon, T., & Schluse, M. (2020). The FeDiNAR Project: Using augmented reality to turn mistakes into learning opportunities. In V. Geroimenko (Ed.), Augmented Reality in education. Springer series on cultural computing. Cham: Springer.

Baird, J. A., Andrich, D., Hopfenbeck, T. N., & Stobart, G. (2017). Assessment and learning: Fields apart? Assessment in Education: Principles, Policy & Practice, 24(3), 317–350. https://doi.org/10.1080/0969594X.2017.1319337

Baldauf, M., Schroder-Hinrichs, J. U., Kataria, A., Benedict, K., & Tuschling, G. (2016). Multidimensional simulation in team training for safety and security in maritime transportation. Journal of Transportation Safety & Security, 8(3), 197–213. https://doi.org/10.1080/19439962.2014.996932

Ball, D. L., & Bass, H. (2000). Interweaving content and pedagogy in teaching and learning to teach: Knowing and using mathematics. In J. Boaler (Ed.), Multiple perspectives on the teaching and learning of mathematics (pp. 83–104). London: Ablex Publishing.

Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84, 191–215. https://doi.org/10.1037/0033-295X.84.2.191

Baporikar, N. (2018). Improving communication by linking student centred pedagogy and management curriculum development. In N. P. Ololube (Ed.), Encyclopedia of Institutional Leadership, Policy and Management (pp. 369–386). Pearl Publications.

Bergmann, J., & Waddell, D. (2012). Point/counterpoint-to flip or not to flip? Learning and Leading with Technology, 39(8), 6.

Bergold, J., & Thomas, S. (2012). Participatory research methods: A methodological approach in motion. Historical Social Research/Historische Sozialforschung, 4, 191–222.

Biwer, F., oude Egbrink, M. G., Aalten, P., & de Bruin, A. B. (2020). Fostering effective learning strategies in higher education: A mixed-methods study. Journal of Applied Research in Memory and Cognition, 9(2), 186–203. https://doi.org/10.1016/j.jarmac.2020.03.004

Blaser, M. (2019). Combining pre-class preparation with collaborative in-class activities to improve student engagement and success in general chemistry. In Active learning in general chemistry: Whole-class solutions (pp. 21–33). London: American Chemical Society.

Bond, M., Buntins, K., Bedenlier, S., Zawacki-Richter, O., & Kerres, M. (2020). Mapping research in student engagement and educational technology in higher education: A systematic evidence map. International Journal of Educational Technology in Higher Education, 17(1), 2. https://doi.org/10.1186/s41239-019-0176-8

Bryan, R. L., Kreuter, M. W., & Brownson, R. C. (2009). Integrating adult learning principles into training for public health practice. Health Promotion Practice, 10(4), 557–563.

Caena, F. (2019). Developing a European Framework for the Personal, Social & Learning to Learn Key Competence (LifEComp) (No. JRC117987). Joint Research Centre (Seville site). Retrieved on 25 September 2020 from https://www.sel-gipes.com/uploads/1/2/3/3/12332890/2019_-_ue_-_developing_a_european_framework_for_the_personal_social_and_learning_to_learn_key_comepence.pdf

Carter, D. F., Ro, H. K., Alcott, B., & Lattuca, L. R. (2016). Co-curricular connections: The role of undergraduate research experiences in promoting engineering students’ communication, teamwork, and leadership skills. Research in Higher Education, 57(3), 363–393.

Chiang, C., Wells, P. K., Fieger, P., & Sharma, D. S. (2020). An investigation into student satisfaction, approaches to learning and the learning context in Auditing. Accounting & Finance, 61, 913–936. https://doi.org/10.1111/acfi.12598

Chini, J. J., Straub, C. L., & Thomas, K. H. (2016). Learning from avatars: Learning assistants practice physics pedagogy in a classroom simulator. Physical Review Physics Education Research, 12(1), 1–15.

Chotitham, S., Wongwanich, S., & Wiratchai, N. (2014). Deep learning and its effects on achievement. Procedia-Social and Behavioral Sciences, 116, 3313–3316. https://doi.org/10.1016/j.sbspro.2014.01.754

Cicek, K., Akyuz, E., & Celik, M. (2019). Future Skills Requirements Analysis in Maritime Industry. Procedia Computer Science, 158, 270–274.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Lawrence Erlbaum Associates.

Crawley, E. F., Edstrom, K., & Stanko, T. (2013, June). Educating Engineers for Research-based Innovation–Creating the learning outcomes framework. In Proceedings of the 9th International CDIO Conference (pp. 9–13).

Cunningham, S. B. (2015). The relevance of maritime education and training at the secondary level. World Maritime University Dissertations. 499. Retrieved on 27 November 2020 from https://commons.wmu.se/cgi/viewcontent.cgi?article=1498&context=all_dissertations

Dallimore, E. J., Hertenstein, J. H., & Platt, M. B. (2008). Using discussion pedagogy to enhance oral and written communication skills. College Teaching, 56(3), 163–172.

Dancey, C. P., & Reidy, J. (2007). Statistics without maths for psychology. Pearson Education.

Dunn, T. J., & Kennedy, M. (2019). Technology enhanced learning in higher education; motivations, engagement and academic achievement. Computers & Education, 137, 104–113. https://doi.org/10.1016/j.compedu.2019.04.004

European Council (2018). Council Recommendation of 22 May 2018 on Key Competences for LifeLong Learning. 2018/C 189/01–13. Brussels: European Council. Retrieved on 5 May 2020 from https://eur-lex.europa.eu/legalcontent/EN/TXT/PDF/?uri=CELEX:32018H0604(01)&rid=7

Evans, C., & Kozhevnikov, M. (Eds.). (2016). Styles of practice in higher education: Exploring approaches to teaching and learning. Routledge.

Forstronen, A., Johnsgaard, T., Brattebo, G., & Reime, M. H. (2020). Developing facilitator competence in scenario-based medical simulation: Presentation and evaluation of a train the trainer course in Bergen Norway. Nurse Education in Practice, 47, 102840.

Francic, V., Zec, D., & Rudan, I. (2011, January). Analysis and trends of MET system in Croatia: Challenges for the 21st century. In The 12th Annual General Assembly International Association of Maritime University, Green Ships Eco Shipping Clean Seas, Gdynia, Poland.

Fung, D. (2017). A connected curriculum for higher education. UCL Press.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

Greiff, S., Holt, D. V., & Funke, J. (2013). Perspectives on problem solving in educational assessment: Analytical, interactive, and collaborative problem solving. Journal of Problem Solving, 5(2), 71–91.

Grzybowska, K., & Lupicka, A. (2017). Key competencies for Industry 4.0. Economics & Management Innovations, 1(1), 250–253.

Guo, J. (2019). The use of an extended flipped classroom model in improving students’ learning in an undergraduate course. Journal of Computing in Higher Education, 31(2), 362–390.

Hansen, T. I., & Gissel, S. T. (2017). Quality of learning materials. IARTEM e-Journal, 9(1), 122–141. https://doi.org/10.21344/iartem.v9i1.601

Hanzu-Pazara, R., Barsan, E., Arsenie, P., Chiotoroiu, L., & Raicu, G. (2008). Reducing of maritime accidents caused by human factors using simulators in training process. Journal of Maritime Research, 5(1), 3–18.

Hartikainen, S., Rintala, H., Pylvas, L., & Nokelainen, P. (2019). The concept of active learning and the measurement of learning outcomes: A review of research in engineering higher education. Education Sciences, 9(4), 1–19. https://doi.org/10.3390/educsci9040276

Haun, E. (2014, June 23). Grants Offered for Maritime History Projects. Retrieved on 15 February 2020 from www.marinelink.com/news/maritimeprojectshistory371659.aspx

Havnes, A., & Proitz, T. S. (2016). Why use learning outcomes in higher education? Exploring the grounds for academic resistance and reclaiming the value of unexpected learning. Educational Assessment, Evaluation and Accountability, 28(3), 205–223.

Henderson, K., & Mathew Byrne, J. (2016). Developing communication and interviewing skills. In K. Davies & R. Jones (Eds.), Skills for social work practice (pp. 1–22). Palgrave Macmillan.

Herreid, C. F., & Schiller, N. A. (2013). Case studies and the flipped classroom. Journal of College Science Teaching, 42(5), 62–66.

Hjelmervik, K., Nazir, S., & Myhrvold, A. (2018). Simulator training for maritime complex tasks: an experimental study. WMU Journal of Maritime Affairs, 17(1), 17–30. https://doi.org/10.1007/s13437-017-0133-0

Hoskins, B., & Fredriksson, U. (2008). Learning to learn: What is it and can it be measured? European Commission JRC. Retrieved on 2 May 2020 from http://www.diva-portal.org/smash/get/diva2:128429/FULLTEXT01.pdf

Hyytinen, H., Toom, A., & Shavelson, R. J. (2019). Enhancing scientific thinking through the development of critical thinking in higher education. In M. Murtonen & K. Balloo (Eds.), Redefining scientific thinking for higher education (pp. 59–78). Palgrave Macmillan.

International Maritime Organisation (2020). STCW Convention. Retrieved on 20 February 2020 from http://www.imo.org/en/OurWork/HumanElement/TrainingCertification/Pages/STCW-Convention.aspx

Jamil, M. G., & Isiaq, S. O. (2019). Teaching technology with technology: Approaches to bridging learning and teaching gaps in simulation-based programming education. International Journal of Educational Technology in Higher Education, 16(1), 1–21. https://doi.org/10.1186/s41239-019-0159-9

Johnson, A. M., Jacovina, M. E., Russell, D. G., & Soto, C. M. (2016). Challenges and solutions when using technologies in the classroom. In S. A. Crossley & D. S. McNamara (Eds.), Adaptive educational technologies for literacy instruction (pp. 13–30). New York: Routledge.

Johnson, R., Onwuegbuzie, A., & Turner, L. (2007). Toward a definition of mixed methods research. Journal of Mixed Methods Research, 1(2), 112–133.

Jones, B. F. (1987). Strategic teaching and learning: Cognitive instruction in the content areas. Alexandria, VA: Association for Supervision and Curriculum Development.

Kelly, M. A., Forber, J., Conlon, L., Roche, M., & Stasa, H. (2014). Empowering the registered nurses of tomorrow: Students’ perspectives of a simulation experience for recognising and managing a deteriorating patient. Nurse Education Today, 34(5), 724–729.

Kim, O. (2018). Teacher decisions on lesson sequence and their impact on opportunities for students to learn. In L. Fan, L. Trouche, C. Qi, S. Rezat, & J. Visnovska (Eds.), Research on mathematics textbooks and teachers’ resources. ICME-13 Monographs. Cham: Springer. https://doi.org/10.1007/978-3-319-73253-4_15

Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Prentice-Hall.

Lamsa, J., Hamalainen, R., Koskinen, P., & Viiri, J. (2018). Visualising the temporal aspects of collaborative inquiry-based learning processes in technology-enhanced physics learning. International Journal of Science Education, 40(14), 1697–1717.

Lasi, H., Fettke, P., Kemper, H. G., Feld, T., & Hoffmann, M. (2014). Industry 4.0. Business & Information Systems Engineering, 6(4), 239–242.

Lawson, M. A., & Lawson, H. A. (2013). New conceptual frameworks for student engagement research, policy, and practice. Review of Educational Research, 83(3), 432–479. https://doi.org/10.3102/0034654313480891

Lee, J. Y., Donkers, J., Jarodzka, H., & Van Merrienboer, J. J. (2019). How prior knowledge affects problem-solving performance in a medical simulation game: Using game-logs and eye-tracking. Computers in Human Behavior, 99, 268–277. https://doi.org/10.1016/j.chb.2019.05.035

Lei, H., Cui, Y., & Zhou, W. (2018). Relationships between student engagement and academic achievement: A meta-analysis. Social Behavior and Personality, 46(3), 517–528. https://doi.org/10.2224/sbp.7054

Likert, R. (1932). A technique for the measurement of attitudes. Archives of Psychology, 140(1), 44–53.

Lowden, K., Hall, S., Elliot, D., & Lewin, J. (2011). Employers’ perceptions of the employability skills of the new graduates. Edge/The SCRE Centre, University of Glasgow. Retrieved on 10 October 2020 from https://www.educationandemployers.org/wp-content/uploads/2014/06/employability_skills_as_pdf_-_final_online_version.pdf

Manuel, M. E. (2017). Vocational and academic approaches to maritime education and training (MET): Trends, challenges and opportunities. WMU Journal of Maritime Affairs, 16(3), 473–483.

McPeck, J. E. (2016). Critical thinking and education. Routledge.

Mindykowski, J. (2013). Gdynia Maritime University experience in view of 21st century challenges. Government Gazette, 5, 20–21.

Morley, D. A., & Jamil, M. G. (2021). Applied Pedagogies for Higher Education: Real World Learning and Innovation across the Curriculum. Cham: Palgrave Macmillan. https://doi.org/10.1007/978-3-030-46951-1

Muijs, D., & Reynolds, D. (2017). Effective teaching: Evidence and practice. Sage.

Nel, L. (2017). Students as collaborators in creating meaningful learning experiences in technology-enhanced classrooms: An engaged scholarship approach. British Journal of Educational Technology, 48(5), 1131–1142.

Nisbet, J., & Shucksmith, J. (2017). Learning strategies. Routledge.

Nold, H. (2017). Using critical thinking teaching methods to increase student success: an action research project. International Journal of Teaching and Learning in Higher Education, 29(1), 17–32.

Organisation for Economic Co-operation and Development, OECD (2004). Problem solving for tomorrow’s world: first measures of cross-curricular competencies from PISA 2003. Retrieved on 2 May 2020 from http://www.oecd.org/education/school/programmeforinternationalstudentassessmentpisa/34009000.pdf

Organisation for Economic Co-operation and Development, OECD (2013). OECD skills outlook 2013: First results from the survey of adult skills. OECD Publishing. https://doi.org/10.1787/9789264204256-en

Paine-Clemes, B. (2006). What is quality in a maritime education? IAMU Journal, 4(2), 23–30.

Pallis, A. A., & Ng, A. K. (2011). Pursuing maritime education: an empirical study of students’ profiles, motivations and expectations. Maritime Policy & Management, 38(4), 369–393.

Park, Y. S. (2016). A study on the standardization of education modules for ARPA/radar simulation. Journal of the Korean Society of Marine Environment & Safety, 22(6), 631–638.

Perrott, E. (2014). Effective teaching: A practical guide to improving your teaching. Routledge.

Pnevmatikos, D., Christodoulou, P., & Georgiadou, T. (2019). Promoting critical thinking in higher education through the values and knowledge education (VaKE) method. Studies in Higher Education, 44(5), 892–901.

Quinn, J., McEachen, J., Fullan, M., Gardner, M., & Drummy, M. (2019). Dive into deep learning: Tools for engagement. Corwin Press.

Renganayagalu, S. K., Mallam, S., Nazir, S., Ernstsen, J., & Haavardtun, P. (2019). Impact of simulation fidelity on student self-efficacy and perceived skill development in maritime training. The International Journal on Marine Navigation and Safety of Sea Transportation, 13(3), 663–669. https://doi.org/10.12716/1001.13.03.25

Rochester, S., Kelly, M., Disler, R., White, H., Forber, J., & Matiuk, S. (2012). Providing simulation experiences for large cohorts of 1st year nursing students: Evaluating quality and impact. Collegian, 19(3), 117–124.

Rystedt, H., & Sjoblom, B. (2012). Realism, authenticity, and learning in healthcare simulations: Rules of relevance and irrelevance as interactive achievements. Instructional Science, 40(5), 785–798.

Sawyer, T., Sierocka-Castaneda, A., Chan, D., Berg, B., Lustik, M., & Thompson, M. (2011). Deliberate practice using simulation improves neonatal resuscitation performance. Simulation in Healthcare, 6(6), 327–336.

Scager, K., Boonstra, J., Peeters, T., Vulperhorst, J., & Wiegant, F. (2016). Collaborative learning in higher education: Evoking positive interdependence. CBE Life Sciences Education, 15(4), 1–9.

Sellberg, C. (2018). From briefing, through scenario, to debriefing: the maritime instructor’s work during simulator-based training. Cognition, Technology & Work, 20(1), 49–62.

Sellberg, C., Lindwall, O., & Rystedt, H. (2021). The demonstration of reflection-in-action in maritime training. Reflective Practice. https://doi.org/10.1080/14623943.2021.1879771

Shulman, L. S. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52–59.

Stern, N. (2016). Building on success and learning from experience: An independent review of the Research Excellence Framework. Department for Business, Energy & Industrial Strategy, London, UK. Retrieved on 15 January 2020 from https://www.gov.uk/government/publications/research-excellence-framework-review

Sue, V. M., & Ritter, L. A. (2007). Conducting online surveys. Sage.

Tacgın, Z. (2020). The perceived effectiveness regarding Immersive Virtual Reality learning environments changes by the prior knowledge of learners. Education and Information Technologies, 25(4), 2791–2809. https://doi.org/10.1007/s10639-019-10088-0

Taguma, M., Feron, E., & Hwee, M. (2018). Future of Education and Skills 2030: Curriculum Analysis. Organisation for Economic Co-operation and Development (OECD). Retrieved on 5 May 2020 from http://www.oecd.org/education/2030/Education-and-AI-preparing-for-the-future-AI-Attitudes-and-Values.pdf

Teddlie, C., & Tashakkori, A. (2009). Foundations of Mixed Methods Research. Sage Publications.

Tempelman, E., & Pilot, A. (2011). Strengthening the link between theory and practice in teaching design engineering: An empirical study on a new approach. International Journal of Technology and Design Education, 21(3), 261–275.

Veine, S., Anderson, M. K., Andersen, N. H., Espenes, T. C., Soyland, T. B., Wallin, P., & Reams, J. (2020). Reflection as a core student learning activity in higher education: Insights from nearly two decades of academic development. International Journal for Academic Development, 25(2), 147–161.

Vygotsky, L. (1978). Mind in Society. Harvard University Press.

Warburton, K. (2003). Deep learning and education for sustainability. International Journal of Sustainability in Higher Education, 4(1), 44–56. https://doi.org/10.1108/14676370310455332

Williams, K. C., & Williams, C. C. (2011). Five key ingredients for improving student motivation. Research in Higher Education Journal, 12, 1.

Woolley, M. (2009). Time for the Navy to Get into the Game! US Naval Institute Proceedings, 135(4), 34–39.

World Economic Forum. (2016, January). The future of jobs: Employment, skills and workforce strategy for the fourth industrial revolution. In Global challenge insight report. Geneva: World Economic Forum. Retrieved on 18 March 2020 from http://www3.weforum.org/docs/WEF_Future_of_Jobs.pdf

Wyatt, A., Archer, F., & Fallows, B. (2015). Use of simulators in teaching and learning: Paramedics’ evaluation of a patient simulator? Australasian Journal of Paramedicine, 5(2). https://doi.org/10.33151/ajp.5.2.412

Yahaya, C. K. H. C. K., Mustapha, J. C., Jaffar, J., Talip, B. A., & Hassan, M. M. (2017). Operations and supply chain mini simulator development as a teaching aid to enhance student’s learning experience. 7th Annual Conference on Industrial Engineering and Operations Management, IEOM 2017 (pp. 358–367). IEOM Society.

Yumusak, G. (2020). Preparation before Class or Homework after Class? Flipped Teaching Practice in Higher Education. International Journal of Progressive Education, 16(2), 297–307.

Zepke, N. (2015). Student engagement research: Thinking beyond the mainstream. Higher Education Research & Development, 34(6), 1311–1323. https://doi.org/10.1080/07294360.2015.1024635

Acknowledgements

The authors would like to thank Professor Tansy Jessop, Pro Vice-Chancellor for Education, University of Bristol (former Head, Solent Learning and Teaching Institute, Solent University) for her great support and inspiration.

Funding

Solent Learning and Teaching Institute, Solent University, UK.

Author information

Contributions

The first author identified the knowledge gap, designed the data collection tools, and led the literature review and data analysis activities. The second author led the data collection work and helped analyse and report the findings. Both authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jamil, M.G., Bhuiyan, Z. Deep learning elements in maritime simulation programmes: a pedagogical exploration of learner experiences. Int J Educ Technol High Educ 18, 18 (2021). https://doi.org/10.1186/s41239-021-00255-0

DOI: https://doi.org/10.1186/s41239-021-00255-0