Abstract

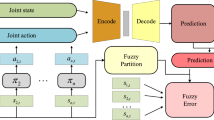

Fuzzy Q-learning extends Q-learning to continuous state spaces and has been applied to a wide range of problems such as robot control. In a multi-agent system, however, the non-stationary environment makes it difficult for the joint policy to converge. To give agents more suitable rewards in a multi-agent environment, a multi-agent reward-iteration fuzzy Q-learning (RIFQ) method is proposed for multi-agent cooperative tasks. A proposed state divider based on fuzzy logic partitions the state space into three channels. Each agent's reward is reshaped iteratively according to its state, and an update sequence is constructed by calculating the relations among the states of different agents. The value functions are then updated top-down. By replacing the reward given by the environment with the reshaped reward, agents avoid the most unreasonable punishments and receive rewards selectively. RIFQ provides a feasible reward relationship among agents, which makes multi-agent training more stable. Simulation experiments show that RIFQ is not limited by the number of agents and converges faster than the baselines.
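For readers unfamiliar with the base technique, the following is a minimal sketch of generic single-agent fuzzy Q-learning over a one-dimensional continuous state. It is not the RIFQ algorithm: the abstract does not specify the state divider, the reshaping rule, or the update order. The sketch assumes triangular membership functions centered at user-chosen points (the `centers` parameter is hypothetical). Each fuzzy rule keeps its own q-values and picks a discrete action epsilon-greedily. The global Q-value is the firing-strength-weighted sum of the chosen rule q-values, and the temporal-difference error is distributed back to the rules in proportion to their firing strengths. In a reward-reshaping scheme like the one described, the `reward` argument of `update` is where a reshaped reward would be passed instead of the raw environment reward.

```python
import bisect
import random


class FuzzyQLearner:
    """Generic fuzzy Q-learning over a 1-D continuous state (illustrative sketch)."""

    def __init__(self, centers, n_actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        if len(centers) < 2 or any(b <= a for a, b in zip(centers, centers[1:])):
            raise ValueError("need at least two strictly increasing MF centers")
        self.centers = [float(c) for c in centers]  # peaks of triangular MFs
        self.n_actions = n_actions
        self.q = [[0.0] * n_actions for _ in self.centers]  # q[rule][action]
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def firing_strengths(self, x):
        """Normalized triangular memberships; at most two adjacent rules fire."""
        c = self.centers
        x = min(max(float(x), c[0]), c[-1])  # clip state to the covered range
        i = min(bisect.bisect_right(c, x) - 1, len(c) - 2)
        w = (x - c[i]) / (c[i + 1] - c[i])
        phi = [0.0] * len(c)
        phi[i], phi[i + 1] = 1.0 - w, w
        return phi

    def act(self, x):
        """Choose one action per rule (epsilon-greedy) and return the global Q-value."""
        phi = self.firing_strengths(x)
        chosen = []
        for row in self.q:
            if self.rng.random() < self.epsilon:
                chosen.append(self.rng.randrange(self.n_actions))
            else:
                chosen.append(max(range(self.n_actions), key=row.__getitem__))
        q_value = sum(p * row[a] for p, row, a in zip(phi, self.q, chosen))
        return chosen, phi, q_value

    def value(self, x):
        """State value: firing-strength-weighted sum of each rule's best q-value."""
        phi = self.firing_strengths(x)
        return sum(p * max(row) for p, row in zip(phi, self.q))

    def update(self, phi, chosen, q_value, reward, x_next):
        """TD update distributed to rules in proportion to their firing strengths."""
        td = reward + self.gamma * self.value(x_next) - q_value
        for i, (p, a) in enumerate(zip(phi, chosen)):
            self.q[i][a] += self.alpha * td * p
        return td
```

For example, with `centers=[0.0, 0.5, 1.0]`, the state `0.25` fires the first two rules with strength 0.5 each, so only those rules' q-values change after an update.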

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 62076202 and 61976178.

Ethics declarations

Conflicts of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

About this article

Cite this article

Leng, L., Li, J., Zhu, J. et al. Multi-Agent Reward-Iteration Fuzzy Q-Learning. Int. J. Fuzzy Syst. 23, 1669–1679 (2021). https://doi.org/10.1007/s40815-021-01063-4

DOI: https://doi.org/10.1007/s40815-021-01063-4