Abstract

We present a general existence result for a type of equilibrium in normal-form games, which extends the concept of Nash equilibrium. We consider nonzero-sum normal-form games with an arbitrary number of players and arbitrary action spaces. We impose merely one condition: the payoff function of each player is bounded. We allow players to use finitely additive probability measures as mixed strategies. Since we do not assume any measurability conditions, for a given strategy profile the expected payoff is generally not uniquely defined, and integration theory only provides an upper bound, the upper integral, and a lower bound, the lower integral. A strategy profile is called a legitimate equilibrium if each player evaluates this profile by the upper integral, and each player evaluates all his possible deviations by the lower integral. We show that a legitimate equilibrium always exists. Our equilibrium concept and existence result are motivated by Vasquez (2017), who defines a conceptually related equilibrium notion, and shows its existence under the conditions of finitely many players, separable metric action spaces and bounded Borel measurable payoff functions. Our proof borrows several ideas from Vasquez (2017), but is more direct as it does not make use of countably additive representations of finitely additive measures by Yosida and Hewitt (1952).

1 Introduction

The model and main result. The main goal of the current paper is to present a general existence result for a type of equilibrium in normal-form games, with an arbitrary number of players and arbitrary action spaces. The only condition we impose on the game is that the payoff function of each player is bounded. We allow players to use finitely additive probability measures as mixed strategies. This new equilibrium concept is a novel generalisation of the concept of Nash equilibrium.

Since we do not impose any measurability assumptions, the payoff function of a player is not necessarily integrable. That is, a strategy profile does not always induce a unique expected payoff. In that case, the upper integral, i.e. the infimum of the integrals of simple functions that lie above the payoff function, differs from the lower integral, i.e. the supremum of the integrals of simple functions that lie below it. So, based on integration theory, the upper integral can be interpreted as the best possible expected payoff, and the lower integral as the worst possible expected payoff.

We call a strategy profile a legitimate equilibrium if each player evaluates this strategy profile by the upper integral, and each player evaluates all his possible deviations by the lower integral. Our equilibrium concept is motivated by the concept of optimistic equilibrium in Vasquez (2017). The concept of legitimate equilibrium has a few conceptual and technical advantages. First, the definition is straightforward and has an easy interpretation. Second, it allows us to eliminate technical restrictions on the action spaces and payoff functions, and to treat the case of infinitely many players. Third, we only need to approximate the integral of the payoff functions at the strategy profiles under consideration. This is in stark contrast with optimistic equilibrium in Vasquez (2017), which is defined through several abstract steps and makes use of small perturbations of each strategy profile. Admittedly, both concepts have one drawback: a strategy profile is not necessarily evaluated in the same way when it is a candidate equilibrium and when it arises by a deviation of a player.

Our main result is that a legitimate equilibrium always exists, in any normal-form game with bounded payoff functions. Moreover, the set of legitimate equilibria is a compact subset of the set of strategy profiles, with respect to the topology of pointwise convergence. The proof uses the Kakutani-Fan-Glicksberg fixed point theorem. Our proof borrows several ideas from Vasquez (2017), but is more direct as it does not make use of countably additive representations of finitely additive measures (comment 4.5 in Yosida and Hewitt (1952)).

Related literature. Finite additivity, instead of countable additivity, for probability measures has been argued for on several grounds. For example, in decision theory conceptual arguments were given by de Finetti (1975), Savage (1972), and Dubins et al. (2014). For a comparison between finitely additive and countably additive measures, see Bingham (2010).

In game theory, countable additivity is the usual assumption on probability measures. There is a stream of literature extending the class of games with equilibria, for example, Dasgupta and Maskin (1986a, b), Reny (1999), Simon and Zame (1990), and Bich and Laraki (2017). Usually, the issue is how to circumvent the problems caused by the discontinuity of the payoff functions. In the countably additive setup, as in these papers, this requires some assumptions on the sets of actions and on the payoff functions. Reny (1999) shows existence of pure strategy Nash equilibria while Dasgupta and Maskin (1986a) show existence of mixed strategy Nash equilibria. The equilibrium concept of Simon and Zame (1990) can possibly modify the payoff functions, and their equilibrium is a mixed strategy Nash equilibrium with respect to the new payoff functions. Bich and Laraki (2017) show the relation between the results of Reny (1999) and Simon and Zame (1990), among other things.

Even though countable additivity is the usual assumption in game theory, equilibria in finitely additive strategies have also gained recognition. Marinacci (1997) proves the existence of Nash equilibrium in nonzero-sum normal-form games, when the payoff functions are integrable. In this case, the lower and upper integrals coincide, and hence our result can be seen as a generalization of the existence result in Marinacci (1997). In a closely related work, Harris et al. (2005) give different types of characterizations of the utility functions that Marinacci (1997) considers. In a different vein, Capraro and Scarsini (2013) consider some nonzero-sum games where the upper and lower integrals of utility functions do not coincide. They calculate expected payoffs through convex combinations of different orders of integration, and prove the existence of Nash equilibrium when the game has countable action spaces and can be defined through an algebraic operator. They extend their result to uncountable action spaces by adding further restrictions on the payoff functions. Generally speaking, the existence of finitely additive Nash equilibrium in normal-form games seems to require fairly restrictive assumptions on the payoff functions, and sometimes also on the action spaces.

There are various results on the existence of the value and optimal strategies in zero-sum games, see for instance Yanovskaya (1970), Heath and Sudderth (1972), Kindler (1983), Maitra and Sudderth (1993), Schervish and Seidenfeld (1996), Maitra and Sudderth (1998), and Flesch et al. (2017). For an extensive overview we refer to Flesch et al. (2017).

What all the above mentioned papers have in common is that either each strategy profile induces a unique expected payoff or each strategy profile is assigned a certain expected payoff according to some rule. Then, Nash equilibrium can be defined in the usual way by requiring that each player’s strategy is a best response to the strategies of his opponents. In this sense, our definition of legitimate equilibrium and the notion of optimistic equilibrium in Vasquez (2017) conceptually separate themselves from the literature and take a somewhat new direction. Indeed, as mentioned earlier, both concepts assign to a strategy profile a possibly different payoff when it is a candidate equilibrium and when it arises by a deviation of a player. We discuss later in Sect. 5.1 whether our proof and existence result could be extended to a Nash equilibrium, that is when each strategy profile is assigned the same expected payoff, irrespective of it being considered a candidate equilibrium or not.

Legitimate equilibrium uses the upper integral and the lower integral when the payoff function is not integrable. The use of the upper and lower integrals is of course not a new idea, see for example Lehrer (2009), who uses the upper integral for the definition of a new integral for capacities, and Stinchcombe (2005), where the upper and lower integrals appear in the context of set-valued integrals. Stinchcombe (2005) also considers equilibria in finitely additive strategies, however he only considers games with finitely many players.

The best-response equilibrium in Milchtaich (2020) is a different finitely additive equilibrium, both technically and conceptually. It does not exist in all games, however it has nice properties. Theorem 2 in Milchtaich (2020) shows that, in games with bounded payoff functions, every best-response equilibrium is a legitimate equilibrium but not the other way around. Section 6 of Milchtaich (2020) contains a more extensive comparison of the two solution concepts.

Structure of the paper. In the next section we discuss some technical preliminaries on finitely additive probability measures. We present the model and the main result in Sect. 3. We provide the proof of the main result in Sect. 4. In Sect. 5 we discuss some properties of legitimate equilibrium, and demonstrate the difficulties of improving upon this existence result. Finally, in Sect. 6, we conclude.

2 Preliminaries

In this section we provide a brief summary of probability charges. For further reading, we refer to Rao and Rao (1983) and Dunford and Schwartz (1964).

Charges. Take a nonempty set X endowed with an algebra \({\mathcal {F}}(X)\). A finitely additive probability measure, or simply charge, on \((X, {\mathcal {F}}(X))\) is a mapping \(\mu :{\mathcal {F}}(X) \rightarrow [0,1]\) such that \(\mu (X) = 1\) and for all disjoint sets \(E, F \in {\mathcal {F}}(X)\) it holds that \(\mu (E \cup F) = \mu (E) + \mu (F)\). When X is countable and \({\mathcal {F}}(X)\) is the algebra \(2^X\), a charge \(\mu \) is called diffuse if \(\mu (x)=0\) for each \(x\in X\). Footnote 1 It follows from the axiom of choice that diffuse charges exist (see, for example, Rao and Rao (1983), p. 38). Footnote 2

Product charge. Let I be a nonempty set, and for each \(i \in I\) let \(X_i\) be a nonempty set endowed with an algebra \({\mathcal {F}}(X_i)\), and let \(\mu _i\) be a charge on \((X_i, {\mathcal {F}}(X_i))\). Let \(X=\times _{i \in I} X_i\). A rectangle of X is a set of the form \(Y=\times _{i \in I} Y_i\), where \(Y_i \in {\mathcal {F}}(X_i)\) for all \(i \in I\) and moreover \(Y_i=X_i\) for all but finitely many \(i \in I\). Let \({\mathcal {F}}(X)\) be the smallest algebra on X containing the rectangles of X, which is identical to the collection of all finite unions of rectangles of X. It is known that there is a unique charge \(\mu \) on \((X,{\mathcal {F}}(X))\), called the product charge, that assigns probability \(\Pi _{i \in I} \mu _i(Y_i)\) to each rectangle \(\times _{i \in I} Y_i\) of X (see, for example, Dunford and Schwartz (1964), p. 184).

Integration with respect to a charge. We call a function \(s: X \rightarrow \mathbb {R}\) an \({\mathcal {F}}(X)\)-measurable simple function if s is of the form \(s = \sum _{m=1}^k c_m {\mathbb {I}}_{B_m}\), where \(c_1, \ldots , c_k \in \mathbb {R}\), the sets \(B_1, \ldots , B_k\) are rectangles of X and form a partition of X, and \({\mathbb {I}}_{B_m}\) is the characteristic function of the set \(B_m\). With respect to a charge \(\mu \) on \((X, {\mathcal {F}}(X))\), the integral of s is defined by \(s(\mu )=\int _{x \in X} s(x) \; \mu (d x) = \sum _{m=1}^k c_m \cdot \mu (B_m)\).

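Consider a bounded function \(u: X \rightarrow \mathbb {R}\). The upper integral of u with respect to \(\mu \) is defined as

\[\overline{\int }_{x \in X} u(x) \; \mu (d x) = \inf \{ s(\mu ) : s \text { is an } {\mathcal {F}}(X)\text {-measurable simple function and } s \ge u \},\]

and the lower integral of u with respect to \(\mu \) as

\[\underline{\int }_{x \in X} u(x) \; \mu (d x) = \sup \{ s(\mu ) : s \text { is an } {\mathcal {F}}(X)\text {-measurable simple function and } s \le u \}.\]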
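To make the two definitions concrete, the following minimal sketch computes the upper and lower integrals in a deliberately simple special case that is not taken from the paper: X is a finite set and the algebra is generated by a finite partition of X. Under this assumption the measurable simple functions are exactly the functions that are constant on each cell of the partition, so the infimum and supremum are attained by taking the maximum and minimum of u on each cell. The function name `upper_lower_integral` and the example data are hypothetical.

```python
from fractions import Fraction
from typing import Callable, Dict, FrozenSet, Tuple

Cell = FrozenSet[int]


def upper_lower_integral(
    u: Callable[[int], int], charge: Dict[Cell, Fraction]
) -> Tuple[Fraction, Fraction]:
    """Upper and lower integral of u when the algebra is generated by the
    finite partition given by the keys of `charge` (an assumption of this sketch).

    The smallest measurable simple function above u equals max(u) on each cell,
    and the largest one below u equals min(u) on each cell.
    """
    if sum(charge.values(), Fraction(0)) != 1:
        raise ValueError("the cell probabilities must sum to 1")
    upper = sum((p * max(u(x) for x in cell) for cell, p in charge.items()), Fraction(0))
    lower = sum((p * min(u(x) for x in cell) for cell, p in charge.items()), Fraction(0))
    return upper, lower


# Hypothetical example: X = {0, 1, 2, 3}, cells {0, 1} and {2, 3}.
charge = {frozenset({0, 1}): Fraction(1, 3), frozenset({2, 3}): Fraction(2, 3)}


def u(x: int) -> int:
    # u is not constant on the cells, so it is not measurable for this algebra.
    return x


upper, lower = upper_lower_integral(u, charge)
print(upper, lower)  # the two values differ because u is not measurable
```

In this toy case the gap between the two values is exactly the expected oscillation of u within the cells; when u is constant on every cell the two integrals coincide.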

3 The model and the main result

A game has an arbitrary nonempty set I of players. Each player \(i \in I\) is given an arbitrary nonempty action space \(A_i\), endowed with an algebra \({\mathcal {F}}(A_i)\). Let \(A=\Pi _{i \in I} A_i\). Each player \(i \in I\) is given an arbitrary bounded payoff function \(u_i: A \rightarrow \mathbb {R}\).

A strategy for player \(i \in I\) is a charge \(\sigma _i\) on \((A_i, {\mathcal {F}}(A_i))\). We denote the set of strategies for player i by \(\Sigma _i\). A strategy profile is a collection of strategies \(\sigma =(\sigma _i)_{i \in I}\), where \(\sigma _i\) is a strategy for each player \(i \in I\). We denote the set of strategy profiles by \(\Sigma \). Let \(\sigma _{-i}\) denote the partial strategy profile \((\sigma _j)_{j \in I \setminus \{i\}}\) of the opponents of player i, and \(\Sigma _{-i}\) denote the set of such partial strategy profiles.

As described in Sect. 2, every strategy profile \(\sigma \) generates a unique charge on \((A, {\mathcal {F}}(A))\), which with a small abuse of notation we also denote by \(\sigma \). For a player \(i \in I\) the upper integral of his payoff function is denoted by \({\overline{u}}_i\), and the lower integral of his payoff function is denoted by \({\underline{u}}_i\).

Definition 1

A strategy profile \(\sigma \) is called a legitimate equilibrium if for each player \(i \in I\) and each strategy \(\tau _i \in \Sigma _i\)

\[{\overline{u}}_i(\sigma ) \ge {\underline{u}}_i(\tau _i, \sigma _{-i}).\]

Intuitively, at a legitimate equilibrium profile \(\sigma \), each player’s best possible expected payoff should be greater than or equal to his worst possible expected payoff if he deviates.

The concepts of legitimate equilibrium and Nash equilibrium coincide in those games where the lower integral and the upper integral of the payoff functions always coincide, that is \({\underline{u}}_i(\sigma ) = {\overline{u}}_i(\sigma )\) for every strategy profile \(\sigma \) and every player i. In such games, a Nash equilibrium is known to exist due to Marinacci (1997). As a special case, this class of games encompasses games with finitely many players and actions.

Our main result is the following.

Theorem 1

Every game with bounded payoff functions has a legitimate equilibrium. Moreover, the set of legitimate equilibria is a compact subset of the set of strategy profiles.

Note that we have no restriction on the number of players and the action spaces. The proof is based on the Kakutani-Fan-Glicksberg fixed point theorem.

The game in the following example does not admit a Nash equilibrium in countably additive strategies. However, it has a legitimate equilibrium.

Example 1

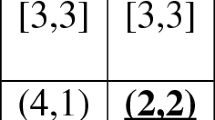

The following game is a version of Wald’s game (Wald 1945). The action sets are \(A_1 = A_2 = {\mathbb {N}}\), endowed with the algebra \(2^{\mathbb {N}}\). Player 1’s payoff for \((a_1,a_2)\in {\mathbb {N}}\times {\mathbb {N}}\) is \(u_1 (a_1,a_2) = 1\) if \(a_1 \ge a_2\) and \(u_1 (a_1,a_2) = 0\) if \(a_1 < a_2\). Player 2’s payoff is \(u_2(a_1,a_2)=1-u_1(a_1,a_2)\) for all \((a_1,a_2) \in {\mathbb {N}}\times {\mathbb {N}}\). The payoffs given by \(u=(u_1,u_2)\) are represented in the following matrix, where player 1 is the row player and player 2 is the column player.

| u | 1 | 2 | 3 | \(\ldots \) |
|---|---|---|---|---|
| 1 | 1,0 | 0,1 | 0,1 | \(\ldots \) |
| 2 | 1,0 | 1,0 | 0,1 | \(\ldots \) |
| 3 | 1,0 | 1,0 | 1,0 | \(\ldots \) |
| \(\vdots \) | \(\vdots \) | \(\vdots \) | \(\vdots \) | \(\ddots \) |

1. This game has no Nash equilibrium in countably additive strategies. Indeed, take any strategy profile \(\sigma =(\sigma _1,\sigma _2)\). Since the sum of the expected payoffs is 1, we can assume without loss of generality that \(u_1(\sigma )\le 1/2\). However, against the strategy \(\sigma _2\), player 1 can obtain an expected payoff arbitrarily close to 1 by choosing a large action \(a_1\in {\mathbb {N}}\). Hence, \(\sigma \) cannot be a Nash equilibrium.

2. There is a legitimate equilibrium in this game. A strategy \(\sigma _i\) for player \(i\in \{1,2\}\) is called diffuse if \(\sigma _i(n)=0\) for every \(n\in {\mathbb {N}}\). Indeed, each strategy profile \(\sigma =(\sigma _1,\sigma _2)\) in which at least one of the strategies is diffuse, is a legitimate equilibrium.

We show that \(\sigma \) is a legitimate equilibrium if \(\sigma _1\) is diffuse; the proof is similar when \(\sigma _2\) is diffuse. Because the payoff functions only take values 0 and 1, by the definition of legitimate equilibrium, it suffices to prove that \({\overline{u}}_1(\sigma )=1\) and \({\underline{u}}_2(\sigma _1,\sigma '_2)=0\) for every strategy \(\sigma '_2\) for player 2.

2.1 We show first that \({\overline{u}}_1(\sigma )=1\).

We summarise, informally, step 2.1. We use the definition of an upper integral to show that \({\overline{u}}_1(\sigma ) \ge 1\). This means that we consider simple functions that are above the payoff function \(u_1\). We show that for any such simple function s, the expected payoff \(s(\sigma ) \ge 1\). This implies that \({\overline{u}}_1(\sigma )\ge 1\) as well.

Now we present the formal definition of an appropriate simple function. As before, \({\mathcal {F}}({\mathbb {N}}\times {\mathbb {N}})\) is the collection of all finite unions of rectangles of \({\mathbb {N}}\times {\mathbb {N}}\). Take any \({\mathcal {F}}({\mathbb {N}}\times {\mathbb {N}})\)-measurable simple function \(s: {\mathbb {N}}\times {\mathbb {N}}\rightarrow \mathbb {R}\) such that \(s\ge u_1\). Assume that s is of the form \(s = \sum _{m=1}^k c_m {\mathbb {I}}_{B_m}\), where \(c_1, \ldots , c_k \in \mathbb {R}\) and the sets \(B_1, \ldots , B_k\) are rectangles of \({\mathbb {N}}\times {\mathbb {N}}\) and form a partition of \({\mathbb {N}}\times {\mathbb {N}}\).

We show that \(s(\sigma ) \ge 1\). To calculate the integral of s with respect to \(\sigma \), we need to consider the probabilities of the sets \((B_m)_{m=1}^k\). First, let us consider (certain) sets with probability 0. Let \(B_l=B_l^1 \times B_l^2 \subseteq {\mathbb {N}}\times {\mathbb {N}}\) where \(B_l^1\) is finite. Since \(\sigma _1\) is diffuse, \(\sigma _1(n)=0\) for every \(n\in {\mathbb {N}}\). Using finite additivity of charges, since \(B_l^1\) is finite, the probability \(\sigma _1(B_l^1)=\sum _{n \in B_l^1} \sigma _1(n)=0\). Therefore \(\sigma (B_l)=\sigma _1(B_l^1) \cdot \sigma _2(B_l^2)=0\) as well. Second, let us consider the sets with positive probability. Let M be the set of m for which \(\sigma (B_m)>0\). Note that \(\sum _{m\in M}\sigma (B_m)=1\), since \(B_1, \ldots , B_k\) form a finite partition of \({\mathbb {N}}\times {\mathbb {N}}\). Let \(m\in M\). The set \(B_m\) is of the form \(B_m=B^1_m\times B^2_m\). It follows from earlier arguments that because \(\sigma _1\) is diffuse and \(\sigma (B_m)>0\), the set \(B^1_m\subseteq {\mathbb {N}}\) is infinite. Consequently, there is \((x^1_m,x^2_m)\in B_m\) such that \(x^1_m\ge x^2_m\). Since \(s \ge u_1\), this implies that \(c_m\ge u_1(x^1_m,x^2_m)=1\). Therefore,

\[s(\sigma ) = \sum _{m=1}^k c_m \cdot \sigma (B_m) = \sum _{m\in M} c_m \cdot \sigma (B_m) \ge \sum _{m\in M} \sigma (B_m) = 1.\]

It follows that \({\overline{u}}_1(\sigma )= 1\).

2.2 We show that \({\underline{u}}_2(\sigma _1,\sigma '_2)=0\) for every strategy \(\sigma '_2\) for player 2. Let \(\sigma '_2\) be any strategy of player 2. Take any \({\mathcal {F}}({\mathbb {N}}\times {\mathbb {N}})\)-measurable simple function \(s: {\mathbb {N}}\times {\mathbb {N}}\rightarrow \mathbb {R}\) such that \(s\le u_2\). Similarly to step 2.1, one can verify that

\[s(\sigma _1,\sigma '_2) \le 0.\]

It follows that \({\underline{u}}_2(\sigma _1,\sigma '_2)=0\).\(\Diamond \)
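Part 1 of Example 1 can be illustrated numerically. The sketch below fixes one particular countably additive strategy for player 2, a geometric distribution chosen purely for illustration and not taken from the paper, and computes player 1's expected payoff from each pure action \(a_1\). Against this strategy the payoff is the probability that \(a_2 \le a_1\), which approaches 1 as \(a_1\) grows but never reaches it, so player 1 has no best response. The function names are hypothetical.

```python
from fractions import Fraction


def sigma2(k: int) -> Fraction:
    """Hypothetical countably additive strategy for player 2: P(a_2 = k) = 2^(-k)."""
    return Fraction(1, 2 ** k)


def payoff_player1(a1: int) -> Fraction:
    """Expected payoff of the pure action a1 against sigma2.

    Player 1 receives 1 exactly when a1 >= a2, so the payoff is P(a_2 <= a1).
    """
    return sum((sigma2(k) for k in range(1, a1 + 1)), Fraction(0))


for a1 in (1, 5, 10, 20):
    # The payoff increases with a1 and stays strictly below 1.
    print(a1, float(payoff_player1(a1)))
```

The same computation with the roles reversed shows that player 2 can push his own payoff towards 1 by choosing a large \(a_2\) against any countably additive strategy of player 1.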

4 Proof of the existence result

In this section we prove Theorem 1. The proof is based on the Kakutani-Fan-Glicksberg fixed point theorem, stated below (cf. Corollary 17.55 in Aliprantis and Border (2005)).

Theorem 2

(Kakutani-Fan-Glicksberg) Let K be a nonempty compact convex subset of a locally convex Hausdorff topological vector space, and let the correspondence \(\phi : K \rightrightarrows K\) have closed graph and nonempty convex values. Then the set of fixed points of \(\phi \) is nonempty and compact.

We endow \(\Sigma \) with the topology of pointwise convergence. That is, we see \(\Sigma \) as a subset of \(C\;:=\;\times _{i \in I} \times _{A_i' \in {\mathcal {F}}(A_i)} \mathbb {R}\), where C is endowed with the product topology and \(\Sigma \) is given its relative topology. By Tychonoff’s theorem, \(C_{[0,1]}:=\times _{i \in I} \times _{A_i' \in {\mathcal {F}}(A_i)} [0,1]\) is a compact subset of C. As \(\Sigma \) is a closed subset of \(C_{[0,1]}\), the set \(\Sigma \) is compact. This way \(\Sigma \) is a nonempty compact convex subset of the locally convex Hausdorff topological vector space C.

A mapping \(f: \Sigma \rightarrow \mathbb {R}\) is called upper semicontinuous if for every net \((\sigma ^{\alpha })_{\alpha \in D}\) in \(\Sigma \), where D is a directed set, converging to some \(\sigma \in \Sigma \), we have \(\limsup _{\alpha }f(\sigma ^\alpha )\le f(\sigma )\). Similarly, a mapping \(f: \Sigma \rightarrow \mathbb {R}\) is called lower semicontinuous if for every net \((\sigma ^{\alpha })_{\alpha \in D}\) in \(\Sigma \), where D is a directed set, converging to some \(\sigma \in \Sigma \), we have \(\liminf _{\alpha }f(\sigma ^\alpha )\ge f(\sigma )\).

Lemma 1

For every player \(i \in I\), the mapping \(\sigma \rightarrow {\overline{u}}_i(\sigma )\) from \(\Sigma \) to \(\mathbb {R}\) is upper semicontinuous, and the mapping \(\sigma \rightarrow {\underline{u}}_i(\sigma )\) from \(\Sigma \) to \(\mathbb {R}\) is lower semicontinuous.

Proof

We only prove that the mapping \(\sigma \rightarrow {\overline{u}}_i(\sigma )\) from \(\Sigma \) to \(\mathbb {R}\) is upper semicontinuous. The proof of the second part is similar.

Take a net \((\sigma ^{\alpha })_{\alpha \in D}\) in \(\Sigma \), where D is a directed set, converging to some \(\sigma \in \Sigma \).

First we show that \(\lim _{\alpha }s(\sigma ^\alpha )\;=\;s(\sigma )\) for every \({\mathcal {F}}(A)\)-measurable simple function s. Take an \({\mathcal {F}}(A)\)-measurable simple function s of the form \(s = \sum _{m=1}^k c_m {\mathbb {I}}_{B_m}\). Since each \(B_m\) is a rectangle of A, the net \((\sigma ^{\alpha }(B_m))_{\alpha \in D}\) of probabilities converges to \(\sigma (B_m)\). Therefore \(\lim _{\alpha }s(\sigma ^\alpha )\;=\;s(\sigma )\).

Let \(\varepsilon >0\). By the definition of \({\overline{u}}_i (\sigma )\), there is an \({\mathcal {F}}(A)\)-measurable simple function s such that \(s \ge u_i\) and

\[s(\sigma ) \le {\overline{u}}_i (\sigma ) + \varepsilon .\]

Since s is an \({\mathcal {F}}(A)\)-measurable simple function, we have by the argument above that \(\lim _{\alpha }s(\sigma ^\alpha )\;=\;s(\sigma )\). Because \(s \ge u_i\), we also have \(s(\sigma ^\alpha )\ge {\overline{u}}_i (\sigma ^\alpha )\) for each \(\alpha \in D\). Hence

\[\limsup _{\alpha } {\overline{u}}_i (\sigma ^\alpha ) \le \lim _{\alpha } s(\sigma ^\alpha ) = s(\sigma ) \le {\overline{u}}_i (\sigma ) + \varepsilon .\]

As \(\varepsilon >0\) was arbitrary the proof is complete. \(\square \)

Now we prove Theorem 1 in a number of steps. We will define a correspondence from the set of strategy profiles \(\Sigma \) to the power set of \(\Sigma \) such that this correspondence has a fixed point, by the Kakutani-Fan-Glicksberg theorem, and each fixed point is a legitimate equilibrium. To define this correspondence we need a number of auxiliary steps. Some of these steps are fairly similar to steps taken by Vasquez (2017).

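Step 1. Consider a player i and let \(\gamma _i\) be a strategy for player i. For each strategy profile \(\sigma \), we define the set

\[BR^{\gamma _i}_i (\sigma ) = \{ \tau \in \Sigma : {\overline{u}}_i (\tau _i, \sigma _{-i}) \ge {\underline{u}}_i (\gamma _i, \sigma _{-i}) \}.\]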

Note that \(BR^{\gamma _i}_i\) is a subset of \(\Sigma \) and not of \(\Sigma _i\). It is not essential for the proof to define \(BR^{\gamma _i}_i\) as a set of strategy profiles, however it makes the exposition somewhat simpler. Intuitively, \(BR^{\gamma _i}_i\) consists of all strategy profiles \(\tau \) such that \(\tau _i\) with the upper integral is a better reply to \(\sigma _{-i}\) than \(\gamma _i\) with the lower integral. If a strategy profile \(\tau \) belongs to \(BR^{\gamma _i}_i\), then \((\tau _i, \tau '_{-i})\) also belongs to \(BR^{\gamma _i}_i\) for every \(\tau '_{-i} \in \Sigma _{-i}\).

We show that for each strategy profile \(\sigma \), the set \(BR^{\gamma _i}_i (\sigma )\) is nonempty and convex.

Proof of step 1.

Take a strategy profile \(\sigma \). Since \((\gamma _i, \sigma _{-i}) \in BR^{\gamma _i}_i (\sigma )\), the set \(BR^{\gamma _i}_i (\sigma )\) is nonempty.

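We show that \(BR^{\gamma _i}_i (\sigma )\) is convex. As a first step, we argue that \({\overline{u}}_i\) is linear in the strategy of player i. Take two strategy profiles \(\tau , \mu \in \Sigma \) such that \(\tau _{-i}=\mu _{-i}\) and \(\lambda \in (0,1)\). Here \(\lambda \tau + (1-\lambda )\mu \) is the strategy profile \((\lambda \tau _i + (1-\lambda )\mu _i, \tau _{-i})\). We prove that

\[{\overline{u}}_i(\lambda \tau + (1-\lambda )\mu ) = \lambda {\overline{u}}_i(\tau ) + (1-\lambda ) {\overline{u}}_i(\mu ). \qquad (1)\]

Let \(S_i\) denote the set of \({\mathcal {F}}(A)\)-measurable simple functions s satisfying \(s \ge u_i\). Clearly, for every \(s\in S_i\) we have

\[s(\lambda \tau + (1-\lambda )\mu ) = \lambda s(\tau ) + (1-\lambda ) s(\mu ) \ge \lambda {\overline{u}}_i(\tau ) + (1-\lambda ) {\overline{u}}_i(\mu ).\]

Hence,

\[{\overline{u}}_i(\lambda \tau + (1-\lambda )\mu ) \ge \lambda {\overline{u}}_i(\tau ) + (1-\lambda ) {\overline{u}}_i(\mu ).\]

Let \(\varepsilon >0\), and let \(s',s''\in S_i\) such that

\[s'(\tau ) \le {\overline{u}}_i(\tau ) + \varepsilon \quad \text {and} \quad s''(\mu ) \le {\overline{u}}_i(\mu ) + \varepsilon .\]

Define \(s'''=\min \{s',s''\}\). Clearly, \(s'''\in S_i\). Thus

\[{\overline{u}}_i(\lambda \tau + (1-\lambda )\mu ) \le s'''(\lambda \tau + (1-\lambda )\mu ) = \lambda s'''(\tau ) + (1-\lambda ) s'''(\mu ) \le \lambda s'(\tau ) + (1-\lambda ) s''(\mu ) \le \lambda {\overline{u}}_i(\tau ) + (1-\lambda ) {\overline{u}}_i(\mu ) + \varepsilon .\]

As \(\varepsilon >0\) was arbitrary, we conclude

\[{\overline{u}}_i(\lambda \tau + (1-\lambda )\mu ) \le \lambda {\overline{u}}_i(\tau ) + (1-\lambda ) {\overline{u}}_i(\mu ).\]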

Hence, (1) holds, which shows that \({\overline{u}}_i\) is linear in the strategy of player i.

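Take two strategy profiles \(\tau , \mu \in BR^{\gamma _i}_i (\sigma )\) and \(\lambda \in (0,1)\). Since \({\overline{u}}_i\) is linear in the strategy of player i,

\[{\overline{u}}_i(\lambda \tau _i + (1-\lambda )\mu _i, \sigma _{-i}) = \lambda {\overline{u}}_i(\tau _i, \sigma _{-i}) + (1-\lambda ) {\overline{u}}_i(\mu _i, \sigma _{-i}) \ge {\underline{u}}_i(\gamma _i, \sigma _{-i}).\]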

As a consequence, \(BR^{\gamma _i}_i (\sigma )\) is convex.

Step 2. Consider a player i and let \(\gamma _i\) be a strategy for player i. We prove that the correspondence \(\sigma \rightrightarrows BR_i^{\gamma _i}(\sigma )\) from \(\Sigma \) to \(2^\Sigma \) has a closed graph.

Proof of step 2. With a directed set D, take two nets \((\sigma ^{\alpha })_{\alpha \in D}\) and \((\tau ^{\alpha })_{\alpha \in D}\) in \(\Sigma \) converging to some \(\sigma \in \Sigma \) and \(\tau \in \Sigma \), respectively. Assume that for every \(\alpha \in D\), we have \(\tau ^\alpha \in BR^{\gamma _i}_i (\sigma ^\alpha )\). We show that \(\tau \in BR^{\gamma _i}_i (\sigma )\). Then, the proof of step 2 will be complete (cf. also Theorems 17.16 and 17.10 in Aliprantis and Border (2005)).

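For every \(\alpha \in D\), as \(\tau ^\alpha \in BR^{\gamma _i}_i (\sigma ^\alpha )\), we have \({\overline{u}}_i (\tau _i^\alpha , \sigma ^\alpha _{-i}) \ge {\underline{u}}_i (\gamma _i, \sigma ^\alpha _{-i})\). By Lemma 1, taking limits yields

\[{\overline{u}}_i (\tau _i, \sigma _{-i}) \ge \limsup _{\alpha } {\overline{u}}_i (\tau _i^\alpha , \sigma ^\alpha _{-i}) \ge \liminf _{\alpha } {\underline{u}}_i (\gamma _i, \sigma ^\alpha _{-i}) \ge {\underline{u}}_i (\gamma _i, \sigma _{-i}).\]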

Thus, \(\tau \in BR^{\gamma _i}_i (\sigma )\) as desired.

Step 3. Consider a player i. For each strategy profile \(\sigma \), we define the set

\[BR_i (\sigma ) = \bigcap _{\gamma _i \in \Sigma _i} BR^{\gamma _i}_i (\sigma ).\]

Intuitively, \(BR_i\) consists of all strategy profiles \(\tau \) such that \(\tau _i\) with the upper integral is a better reply to \(\sigma _{-i}\) than any other strategy of player i with the lower integral. We prove that for each strategy profile \(\sigma \), the set \(BR_i (\sigma )\) is nonempty and convex.

Proof of step 3. Take \(\sigma \in \Sigma \). Convexity of \(BR_i(\sigma )\) directly follows from Step 1, where we showed the convexity of \(BR_i^{\gamma _i}(\sigma )\) for each \(\gamma _i \in \Sigma _i\).

Now we show that \(BR_i(\sigma )\) is nonempty. By Step 2, each set \(BR_i^{\gamma _i}(\sigma )\) is a closed subset of the compact set \(\Sigma \). Hence, by the finite intersection property (cf. Theorem 2.31 in Aliprantis and Border (2005)), it is sufficient to check for finitely many strategies \(\gamma _i^1, \ldots , \gamma _i^k \in \Sigma _i\) that \(\cap _{j=1}^k BR_i^{\gamma _i^j}(\sigma )\) is not empty. Choose \(m \in \{1, \ldots , k\}\) such that \({\underline{u}}_i(\gamma ^m_i, \sigma _{-i})\ge {\underline{u}}_i(\gamma ^j_i, \sigma _{-i})\) for all \(j \in \{1, \ldots , k\}\). Since \({\overline{u}}_i(\gamma ^m_i, \sigma _{-i})\ge {\underline{u}}_i(\gamma ^m_i, \sigma _{-i})\), the strategy profile \((\gamma ^m_i,\sigma _{-i})\) belongs to \(\cap _{j=1}^k BR_i^{\gamma _i^j}(\sigma )\). So \(BR_i(\sigma )\) is nonempty.
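The finite step of this argument can be sketched in a few lines. The sketch assumes that the lower and upper integrals of finitely many candidate strategies against a fixed \(\sigma _{-i}\) are already available as two hypothetical lists, `lower` and `upper`, with `upper[j] >= lower[j]` for every j; it does not compute any integrals itself. It selects the candidate with the largest lower value and checks that its upper value dominates every lower value, which is exactly the membership condition for the finite intersection.

```python
from typing import Sequence


def common_reply_index(lower: Sequence[float], upper: Sequence[float]) -> int:
    """Index m of a candidate lying in every set BR^{gamma^j} for j = 0..k-1.

    lower[j] and upper[j] stand for the lower and upper integral of candidate j
    against a fixed sigma_{-i} (hypothetical inputs for this sketch).
    """
    if not lower or len(lower) != len(upper):
        raise ValueError("need two nonempty lists of equal length")
    if any(up < lo for lo, up in zip(lower, upper)):
        raise ValueError("each upper value must be at least the matching lower value")
    # Choose the candidate with the largest lower integral.
    m = max(range(len(lower)), key=lambda j: lower[j])
    # upper[m] >= lower[m] >= lower[j] for all j, so candidate m beats every gamma^j.
    assert all(upper[m] >= lower[j] for j in range(len(lower)))
    return m


# Hypothetical values for three candidate strategies.
lower = [0.2, 0.5, 0.1]
upper = [0.9, 0.6, 0.3]
print(common_reply_index(lower, upper))
```

Note that the chosen candidate need not have the largest upper value; only the ordering of the lower values matters for the argument.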

Step 4. For each strategy profile \(\sigma \), we define the set

\[BR (\sigma ) = \bigcap _{i \in I} BR_i (\sigma ).\]

Intuitively, \(BR(\sigma )\) consists of all strategy profiles \(\tau \) such that, for any player i, the strategy \(\tau _i\) with the upper integral is a better reply to \(\sigma _{-i}\) than any other strategy of player i with the lower integral. We prove that for each strategy profile \(\sigma \), the set \(BR (\sigma )\) is nonempty and convex.

Proof of step 4. Take \(\sigma \in \Sigma \). Convexity of \(BR(\sigma )\) directly follows from Step 3, where we showed the convexity of \(BR_i(\sigma )\) for each \(i\in I\).

Now we show that \(BR(\sigma )\) is nonempty. By Step 3, \(BR_i(\sigma )\) is nonempty for each player \(i\in I\). Choose a strategy profile \(\tau ^i \in BR_i(\sigma )\) for each player \(i \in I\). As usual, \(\tau ^i_i\) denotes the strategy of player i in the strategy profile \(\tau ^i\). Construct a new strategy profile \(\tau \) such that \(\tau _i=\tau ^i_i\) for each player \(i \in I\). Then \(\tau \in BR_i (\sigma )\) for all \(i \in I\). This implies that \(\tau \in \cap _{i \in I} BR_i(\sigma )\), and hence \(BR(\sigma )\) is nonempty.

Step 5. We argue that the correspondence \(\phi :\sigma \rightrightarrows BR(\sigma )\) from \(\Sigma \) to \(2^\Sigma \) has a fixed point. Moreover, any fixed point of \(\phi \) is a legitimate equilibrium.

Proof of step 5. The graph of the correspondence \(\phi \) is the intersection of the graphs of the correspondences \(\sigma \rightrightarrows BR_i^{\gamma _i}(\sigma )\) over all players \(i\in I\) and strategies \(\gamma _i\) of player i. Hence, by Step 2, the correspondence \(\phi \) has a closed graph. Moreover, by Step 4, \(\phi \) has nonempty and convex values. Due to Theorem 2 the correspondence \(\phi \) has a fixed point. It is clear that any fixed point of \(\phi \) is a legitimate equilibrium.

5 Properties of the concept of legitimate equilibrium

In this section we discuss some properties of legitimate equilibrium. As we previously mentioned, the concept of legitimate equilibrium coincides with the concept of Nash equilibrium in those games where the lower integral and the upper integral of the payoff functions always coincide, in particular, in games with finitely many players and actions.

As Theorem 1 shows, a legitimate equilibrium exists under the sole condition that the payoff functions are bounded. Such a general existence result has its consequences. Out of the three consequences we point out below, the most prominent one, which seems difficult to overcome, is discussed in the next subsection.

5.1 Unique evaluation of strategy profiles

According to the concept of legitimate equilibrium, a strategy profile is not necessarily evaluated in the same way when it is a candidate equilibrium and when it arises by a deviation of a player. In this subsection we examine whether the current proof could be generalised to obtain a Nash equilibrium. That is, we would like to assign one specific payoff to each strategy profile regardless of whether it is a candidate equilibrium or it arises as a deviation. Footnote 3

One natural attempt would be to take, for each player \(i\in I\), a selector \(f_i\) of the correspondence \(\sigma \rightrightarrows [{\underline{u}}_i(\sigma ),{\overline{u}}_i(\sigma )]\) from \(\Sigma \) to \(\mathbb {R}\), try to replace both \({\overline{u}}_i\) and \({\underline{u}}_i\) by \(f_i\) in the proof, and thus find a strategy profile \(\sigma ^*\) such that \(f_i(\sigma ^*)\ge f_i(\sigma '_i,\sigma ^*_{-i})\) for every player i and every strategy \(\sigma '_i\) of player i. So, this strategy profile \(\sigma ^*\) would not only be a legitimate equilibrium, but even a Nash equilibrium with respect to the payoffs given by \(f=(f_i)_{i\in I}\). Taking a selector and defining an equilibrium based on it has also been considered by Simon and Zame (1990) and by Stinchcombe (2005).

Since we try to replace both \({\overline{u}}_i\) and \({\underline{u}}_i\) by \(f_i\) in the proof, our line of proof would only work if the selector \(f_i\), for each player i, satisfies those properties of both \({\underline{u}}_i\) and \({\overline{u}}_i\) that we used in the proof of Sect. 4. To be precise, in that proof we made use of the following properties of \({\underline{u}}_i\) and \({\overline{u}}_i\) for each player i: (1) \({\underline{u}}_i(\sigma ) \le {\overline{u}}_i(\sigma )\) for every strategy profile \(\sigma \), (2) the mapping \(\sigma \rightarrow {\underline{u}}_i(\sigma )\) is lower semicontinuous (cf. Lemma 1), (3) the mapping \(\sigma \rightarrow {\overline{u}}_i(\sigma )\) is upper semicontinuous (cf. Lemma 1) and it is linear in player i’s strategy \(\sigma _i\) (cf. Step 1 in Sect. 4). Even though the mapping \(\sigma \rightarrow {\underline{u}}_i(\sigma )\) is also linear in player i’s strategy \(\sigma _i\), this was not needed in the proof.

So, for each player i, the selector \(f_i\) should be continuous and in addition linear in player i’s strategy. However, in general, such a selector does not exist. In fact, there might not even be a selector that is only required to be continuous. We illustrate it by showing that there is no continuous selector for player 1 in the game of Example 1. Let \(\sigma =(\sigma _1,\sigma _2)\) be a strategy profile in which both strategies are diffuse charges, that is \(\sigma _1(n)=\sigma _2(n)=0\) for every \(n\in {\mathbb {N}}\), and both strategies are 0–1 valued, that is they only assign to each set probability 0 or 1 (such strategies correspond to ultrafilters on the action sets).

Consider any open neighborhood U of \(\sigma \) in \(\Sigma \). Then, there is a finite collection \(\{B_1,\ldots ,B_k\}\) of rectangles of \(A=A_1\times A_2={\mathbb {N}}\times {\mathbb {N}}\) and positive numbers \(\varepsilon _1,\ldots ,\varepsilon _k\) such that the set

\[W=\{\tau \in \Sigma : |\tau (B_m)-\sigma (B_m)|<\varepsilon _m \text { for all } m=1,\ldots ,k\}\]

is a subset of U. By adding more constraints (splitting the sets \(B_1,\ldots ,B_k\) if necessary and adding more sets), we can even assume that there is a finite partition \(P_1\) of \(A_1\) and a finite partition \(P_2\) of \(A_2\) such that \(\{B_1,\ldots ,B_k\}\) is the same as \(\{X\times Y: X\in P_1, Y\in P_2\}\).

Let X be the unique element of \(P_1\) for which \(\sigma _1(X)=1\), and let Y be the unique element of \(P_2\) for which \(\sigma _2(Y)=1\). Since \(\sigma _1\) and \(\sigma _2\) are diffuse, X and Y are both infinite. This implies that there are \((x^1,y^1),(x^2,y^2)\in X\times Y\) such that \(x^1\ge y^1\) and \(x^2< y^2\).

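So we define two strategy profiles \(\mu =\delta (x^1,y^1)\) and \(\nu =\delta (x^2,y^2)\), where \(\delta \) is the Dirac measure. We have \(\mu (X\times Y)=\nu (X\times Y)=1\), and \(\mu (B_m)=\nu (B_m)=\sigma (B_m)\) for all \(m=1,\ldots ,k\). Hence \(\mu ,\nu \in W\). As \({\underline{u}}_1(\mu )=1\) and \({\overline{u}}_1(\nu )=0\), any selector \(f_1\) satisfies \(f_1(\mu )=1\) and \(f_1(\nu )=0\). Since U was an arbitrary neighborhood of \(\sigma \), every neighborhood of \(\sigma \) contains profiles on which \(f_1\) takes the value 1 and profiles on which it takes the value 0, so \(f_1\) cannot be continuous at \(\sigma \). In conclusion, there is no continuous selector \(f_1\) for player 1 of the correspondence \(\sigma \rightrightarrows [{\underline{u}}_1(\sigma ),{\overline{u}}_1(\sigma )]\) from \(\Sigma \) to \(\mathbb {R}\).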
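The choice of the two witness action pairs can be made concrete with a small computation. The following Python sketch is only an illustration under stated assumptions: it takes player 1's payoff in Wald's game to be 1 when \(x\ge y\) and 0 otherwise (as the values used in the argument suggest), takes \({\mathbb {N}}\) to start at 1, represents the infinite sets X and Y by membership predicates, and uses the hypothetical helper names `u1` and `find_witnesses`.

```python
from itertools import count


def u1(x, y):
    """Player 1's payoff in Wald's game (assumed form): 1 if x >= y, else 0."""
    return 1 if x >= y else 0


def find_witnesses(in_X, in_Y):
    """Return ((x1, y1), (x2, y2)) in X x Y with x1 >= y1 and x2 < y2.

    in_X and in_Y are membership predicates of infinite subsets of N.
    Each search terminates because both sets are infinite.
    """
    y1 = next(n for n in count(1) if in_Y(n))
    x1 = next(n for n in count(y1) if in_X(n))      # first element of X with x1 >= y1
    x2 = next(n for n in count(1) if in_X(n))
    y2 = next(n for n in count(x2 + 1) if in_Y(n))  # first element of Y with y2 > x2
    return (x1, y1), (x2, y2)


# Illustrative cells: X = numbers congruent to 1 mod 3, Y = multiples of 5.
(x1, y1), (x2, y2) = find_witnesses(lambda n: n % 3 == 1, lambda n: n % 5 == 0)
print((x1, y1), u1(x1, y1))  # payoff 1 for player 1 at the first pair
print((x2, y2), u1(x2, y2))  # payoff 0 for player 1 at the second pair
```

Both pairs lie in the same cell \(X\times Y\), so the corresponding Dirac profiles cannot be separated by the finitely many rectangle constraints, although player 1's payoffs differ by 1.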

5.2 Dominated strategies

We say that an action \(a_i\) of player i is c-dominated by another action \(a_i'\), where \(c>0\), if for all action profiles \(a_{-i}\) of the other players, \(u_i(a'_i, a_{-i}) \ge u_i(a_i, a_{-i})+c\).

In a legitimate equilibrium it can happen that a player places a positive probability on a set of c-dominated actions for some \(c>0\). Indeed, consider the following example.

We make slight changes to Wald’s game (Example 1). Assume that, in addition to choosing an integer, player 1 also chooses one of two colors, green or red. Whichever color player 1 chooses, the payoff of player 2 is according to Wald’s game. If player 1 chooses green, then the payoff for player 1 is also according to Wald’s game. However, if player 1 chooses red, then player 1 receives the payoff of Wald’s game minus \(\tfrac{1}{2}\). Notice that for any integer n the action \((\text {red},n)\) is \(\tfrac{1}{2}\)-dominated by \((\text {green},n)\). A strategy profile \(\sigma =(\sigma _1,\sigma _2)\) where player 1 plays a diffuse charge \(\sigma _1\) placing probability 1 on the color red and player 2 plays a diffuse charge \(\sigma _2\) is a legitimate equilibrium in this game. Indeed, player 2 has no incentive to deviate as \({\overline{u}}_2(\sigma )=1\), whereas for any deviation \(\sigma '_1\) of player 1 we have \({\underline{u}}_1(\sigma '_1,\sigma _2)\le 0<\tfrac{1}{2}={\overline{u}}_1(\sigma )\). Thus, in this legitimate equilibrium player 1 places probability 1 on a set of \(\tfrac{1}{2}\)-dominated actions.
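The dominance claim in this example can be checked mechanically on a finite truncation of the action sets. The sketch below is an illustration only: it assumes that player 1's payoff in Wald's game is 1 when \(x\ge y\) and 0 otherwise, restricts player 2 to finitely many integers so that the check terminates, and uses the hypothetical names `u1_wald`, `u1_colored` and `is_c_dominated`.

```python
def u1_wald(x, y):
    """Player 1's payoff in Wald's game (assumed form): 1 if x >= y, else 0."""
    return 1.0 if x >= y else 0.0


def u1_colored(action, y):
    """Player 1's payoff in the modified game: red costs an extra 1/2."""
    color, n = action
    base = u1_wald(n, y)
    return base if color == "green" else base - 0.5


def is_c_dominated(a, a_prime, c, opponent_actions, payoff):
    """True if a_prime beats a by at least c against every listed opponent action."""
    return all(payoff(a_prime, y) >= payoff(a, y) + c for y in opponent_actions)


# Finite truncation of player 2's action set (an assumption made for computability).
opponent_actions = range(1, 51)
print(all(
    is_c_dominated(("red", n), ("green", n), 0.5, opponent_actions, u1_colored)
    for n in range(1, 21)
))
```

Since the payoff difference between \((\text {green},n)\) and \((\text {red},n)\) is exactly \(\tfrac{1}{2}\) for every action of player 2, the finite check reflects the pointwise argument and does not depend on the size of the truncation.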

In this example it is a crucial feature that strategy profiles are evaluated differently when they are considered as a candidate equilibrium than when they arise from a deviation. Therefore, this discussion is strongly related to Sect. 5.1, and raises once again the question of whether the goal in Sect. 5.1 can be achieved.

The best-response equilibrium in Milchtaich (2020) does not exist in all games. However, when it exists, it excludes c-dominated actions.

5.3 Constant-sum payoffs

In a legitimate equilibrium, each player evaluates the equilibrium strategy profile through the upper integral. As a consequence, in games in which the payoffs for each action profile sum up to the same constant c, it can happen that the expected payoffs of a legitimate equilibrium do not sum up to c but to something higher. For instance, in Wald’s game (Example 1) the payoffs always add up to 1, but both players playing a diffuse charge is a legitimate equilibrium, with a total expected payoff of 2. We remark that losing the constant-sum feature is not uncommon in games with finitely additive strategies (see, for example, Flesch et al. 2017; Vasquez 2017), because of the difficulties, discussed in the Introduction, in defining an expected payoff for each strategy profile.
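The gap between the constant 1 and the total of 2 can be illustrated numerically. The sketch below rests on several assumptions that are not spelled out in this section: both players use 0–1 valued diffuse charges, so that for any finite rectangle partition the whole mass sits on one cell \(X\times Y\) with X and Y infinite; on that cell the best upper simple function is the supremum of the payoff and the best lower one is the infimum; and the cell is truncated to a finite window for computability. The names `u1`, `u2` and `cell_bounds` are hypothetical.

```python
def u1(x, y):
    """Player 1's payoff in Wald's game (assumed form): 1 if x >= y, else 0."""
    return 1 if x >= y else 0


def u2(x, y):
    """Player 2's payoff: the payoffs sum to 1 at every action profile."""
    return 1 - u1(x, y)


def cell_bounds(payoff, in_X, in_Y, window):
    """Return (inf, sup) of payoff over the part of X x Y inside [1, window]^2."""
    values = [
        payoff(x, y)
        for x in range(1, window + 1) if in_X(x)
        for y in range(1, window + 1) if in_Y(y)
    ]
    return min(values), max(values)


# Illustrative charge-one cell: X = even numbers, Y = multiples of 3.
in_X = lambda n: n % 2 == 0
in_Y = lambda n: n % 3 == 0
low1, up1 = cell_bounds(u1, in_X, in_Y, window=30)
low2, up2 = cell_bounds(u2, in_X, in_Y, window=30)
print(up1 + up2)    # sum of the upper evaluations on the cell
print(low1 + low2)  # sum of the lower evaluations on the cell
```

Each player's upper evaluation on the cell is 1 and each lower evaluation is 0, although the two payoffs sum to 1 at every single action profile, which mirrors the total expected payoff of 2 in the legitimate equilibrium.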

6 Conclusions

Under rather general conditions we prove the existence of a legitimate equilibrium in finitely additive strategies. Namely, a legitimate equilibrium exists in any normal-form game with an arbitrary number of players and arbitrary action spaces, provided that the payoff functions are bounded. The proof uses the Kakutani-Fan-Glicksberg fixed point theorem. It seems difficult to find a refinement of legitimate equilibrium for which the existence can be guaranteed while using a similar line of proof.

Notes

1. The definition of a diffuse charge can be generalized to sets that are uncountably infinite. However, in the general case the definition is more involved.

2. Note that diffuse charges are crucial for the existence of legitimate equilibria (see Example 1). These charges are not countably additive, which means that, in comparison to a game-theoretic model with countably additive measures on infinite action spaces, our model includes more mixed strategies.

3. We remark that, as Example 6.1 in Flesch et al. (2017) demonstrates, under an unfortunate payoff assignment to strategy profiles, a Nash equilibrium does not have to exist.

References

Aliprantis CD, Border KC (2005) Infinite Dimensional Analysis. Springer, Berlin

Bingham NH (2010) Finite additivity versus countable additivity. Electron J History Probabil Stat 6(1)

Bich P, Laraki R (2017) On the Existence of Approximate Equilibria and Sharing Rule Solutions in Discontinuous Games. Theor Econ 12:79–108

Capraro V, Scarsini M (2013) Existence of equilibria in countable games: an algebraic approach. Games Econ Behav 79:163–180

Dasgupta P, Maskin E (1986) The existence of equilibrium in discontinuous economic games, Part I (Theory). Rev Econ Stud 53(1):1–26

Dasgupta P, Maskin E (1986) The existence of equilibrium in discontinuous economic games, Part II (Applications). Rev Econ Stud 53(1):27–41

Dubins LE, Savage LJ (2014) How to gamble if you must: inequalities for stochastic processes. Edited and updated by Sudderth WD, Gilat D. Dover Publications, New York

Dunford N, Schwartz JT (1964) Linear Operators, Part I: General Theory. Interscience Publishers, New York

de Finetti B (1975) The theory of probability (2 volumes). J. Wiley and Sons, Chichester

Flesch J, Vermeulen D, Zseleva A (2017) Zero-sum games with charges. Games Econ Behav 102:666–686

Harris JH, Stinchcombe MB, Zame WR (2005) Nearly compact and continuous normal form games: characterizations and equilibrium existence. Games Econ Behav 50:208–224

Heath D, Sudderth W (1972) On a Theorem of de Finetti, oddsmaking and game theory. Ann Math Stat 43:2072–2077

Kindler J (1983) A general solution concept for two-person zero sum games. J Optim Theory Appl 40:105–119

Lehrer E (2009) A new integral for capacities. Econ Theory 39:157–176

Łoś J, Marczewski E (1949) Extensions of measure. Fundamenta Mathematicae 36:267–276

Maitra A, Sudderth W (1993) Finitely additive and measurable stochastic games. Int J Game Theory 22:201–223

Maitra A, Sudderth W (1998) Finitely additive stochastic games with Borel measurable payoffs. Int J Game Theory 27:257–267

Marinacci M (1997) Finitely additive and epsilon Nash equilibria. Int J Game Theory 26(3):315–333

Milchtaich I (2020) Best-Response Equilibrium: An Equilibrium in Finitely Additive Mixed Strategies. Research Institute for Econometrics and Economic Theory, Discussion paper No, pp 2–20

Rao KPSB, Rao B (1983) Theory of charges: a study of finitely additive measures. Academic Press, New York

Reny P (1999) On the existence of pure and mixed strategy Nash equilibria in discontinuous games. Econometrica 67(5):1029–1056

Savage LJ (1972) The foundations of statistics. Dover Publications, New York

Schervish MJ, Seidenfeld T (1996) A fair minimax theorem for two-person (zero-sum) games involving finitely additive strategies. In: Berry DA, Chaloner KM, Geweke JK (eds) Bayesian Analysis in Statistics and Econometrics. Wiley, New York, pp 557–568

Simon L, Zame W (1990) Discontinuous games and endogenous sharing rules. Econometrica 58(4):861–872

Stinchcombe MB (2005) Nash equilibrium and generalized integration for infinite normal form games. Games Econ Behav 50:332–365

Vasquez MA (2017) Essays in Mathematical Economics. PhD thesis, UC Berkeley

Wald A (1945) Generalization of a theorem by v. Neumann concerning zero sum two person games. Ann Math 46(2):281–286

Yanovskaya EB (1970) The solution of infinite zero-sum two-person games with finitely additive strategies. Theory Probabil Appl 15(1):153–158

Yosida K, Hewitt E (1952) Finitely additive measures. Trans Am Math Soc 72(1):46–66

Acknowledgements

We would like to thank Philippe Bich, Igal Milchtaich and Xavier Venel for their helpful comments and discussion. We thank the anonymous referee and associate editor for their helpful comments.

Support from the Basic Research Program of the National Research University Higher School of Economics is gratefully acknowledged.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Flesch, J., Vermeulen, D. & Zseleva, A. Legitimate equilibrium. Int J Game Theory 50, 787–800 (2021). https://doi.org/10.1007/s00182-021-00768-y
