Abstract

Brownian multiplicative chaos measures, introduced in Jego (Ann Probab 48:1597–1643, 2020), Aïdékon et al. (Ann Probab 48(4):1785–1825, 2020) and Bass et al. (Ann Probab 22:566–625, 1994), are random Borel measures that can be formally defined by exponentiating \(\gamma \) times the square root of the local times of planar Brownian motion. So far, only the subcritical measures, where the parameter \(\gamma \) is less than 2, had been studied. This article considers the critical case \(\gamma =2\), using three different approximation procedures which all lead to the same universal measure. On the one hand, we exponentiate the square root of the local times of small circles and show convergence in the Seneta–Heyde normalisation as well as in the derivative martingale normalisation. On the other hand, we construct the critical measure as a limit of subcritical measures. This is the first example of a non-Gaussian critical multiplicative chaos. We are inspired by methods coming from critical Gaussian multiplicative chaos, but there are essential differences, the main one being the lack of Gaussianity, which prevents the use of Kahane’s inequality and hence of a priori controls. Instead, we prove a continuity lemma which makes it possible to use tools from stochastic calculus as an effective substitute.


1 Introduction

Thick points of planar Brownian motion/random walk are points that have been visited unusually often by the trajectory. The study of these points has a long history going back to the famous conjecture of Erdős and Taylor [23] on the leading order of the number of times a planar simple random walk visits the most visited site during the first n steps. Since then, the understanding of these thick points has considerably improved. On the random walk side, Dembo et al. [17] settled the Erdős–Taylor conjecture and computed the number of thick points at the level of exponents, for random walks having symmetric increments with finite moments of all orders. Bass and Rosen [13] and Rosen [43], and more recently [28], streamlined the proof and extended these results to a wide class of planar random walks. On the Brownian motion side, Bass et al. [7] constructed random measures supported on the set of thick points. Their results concern only a partial range \(\{a \in (0,1/2)\}\) of the thickness parameter a (Footnote 1). Aïdékon et al. [2] and Jego [26] simultaneously extended the results of [7] by building these random measures for the whole subcritical range \(\{a \in (0,2)\}\). Jego [27] gave an axiomatic characterisation of these measures and showed that they describe the scaling limit of thick points of planar simple random walk for any fixed \(a < 2\). All the aforementioned works are subcritical results. The aim of this paper is to extend the theory to the critical point \(a=2\) by constructing a random measure supported by the thickest points of a planar Brownian trajectory. This enables us to formulate a precise conjecture on the convergence in distribution of the supremum of local times of planar random walk.

Our construction is inspired by Gaussian multiplicative chaos theory (GMC), i.e. the study of random measures formally defined as the exponential of \(\gamma \) times a log-correlated Gaussian field in dimension d, such as the two-dimensional Gaussian free field (GFF), where \(\gamma \ge 0\) is a parameter. Since such a field is not defined pointwise but is rather a random generalised function, making sense of such a measure requires some nontrivial work. The theory was introduced by Kahane [32] and has expanded significantly in recent years. By now it is relatively well understood, at least in the subcritical case where \(\gamma <\sqrt{2d}\) [9, 22, 45,46,47] and even in the critical case \(\gamma = \sqrt{2d}\) [3, 4, 18, 19, 29, 30, 39]. In this article, the log-correlated field we have in mind is the square root of the local time process of a planar Brownian motion, appropriately stopped. The main interest of our construction from the GMC point of view is that this field is non-Gaussian, so that our results give the first example of a critical chaos for a truly non-Gaussian field (Footnote 2).

1.1 Main results

Let \({\mathbb {P}}_x\) be the law under which \((B_t)_{t \ge 0}\) is a planar Brownian motion starting from \(x \in {\mathbb {R}}^2\). Let \(D \subset {\mathbb {R}}^2\) be an open bounded simply connected domain, \(x_0 \in D\) be a starting point and \(\tau \) be the first exit time of D:

\[ \tau := \inf \{ t \ge 0 : B_t \notin D \}. \]

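For all \(x \in {\mathbb {R}}^2, t>0, \varepsilon >0,\) define the local time \(L_{x,\varepsilon }(t)\) of \(\left( \left| B_s - x \right| , s \ge 0 \right) \) at \(\varepsilon \) up to time t (here \(\left| \cdot \right| \) stands for the Euclidean norm):

\[ L_{x,\varepsilon }(t) := \lim _{r \rightarrow 0} \frac{1}{2r} \int _0^t {\mathbf {1}}_{\left\{ \varepsilon - r \le \left| B_s - x \right| \le \varepsilon + r \right\} } \mathrm {d}s. \]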

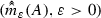
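The local time \(L_{x,\varepsilon }(t)\) is the occupation density of \(|B_s - x|\) at level \(\varepsilon \): up to the limit \(r \rightarrow 0\), it is the time spent by B in the thin annulus \(\{\varepsilon - r \le |y - x| \le \varepsilon + r\}\), divided by 2r. The Python sketch below estimates \(L_{x,\varepsilon }(\tau )\) in exactly this way, with a crude Euler discretisation of planar Brownian motion. It is only an illustration under assumptions that are not in the text: D is taken to be the unit disc, the starting point is \(x_0 = 0\), and the function name and the parameters r, dt and seed are hypothetical choices. It also applies the convention, recalled in the next paragraph, that the local time vanishes when the circle \(\partial D(x,\varepsilon )\) is not contained in D.

```python
import math
import random


def local_time_on_circle(x, eps, r=0.02, dt=1e-4, domain_radius=1.0, seed=0):
    """Crude estimate of L_{x,eps}(tau) for planar Brownian motion started at 0
    and stopped when it leaves the disc of radius `domain_radius` (assumed to be D).

    The local time of |B - x| at level eps is approximated by the time spent in
    the annulus {eps - r <= |y - x| <= eps + r}, divided by 2r.
    """
    # Convention: the local time is 0 if the circle of radius eps around x
    # is not entirely contained in the (open) disc D.
    if math.hypot(x[0], x[1]) + eps >= domain_radius:
        return 0.0
    rng = random.Random(seed)
    step_sd = math.sqrt(dt)  # each coordinate moves by an N(0, dt) increment
    b1 = b2 = 0.0
    occupation = 0.0
    while b1 * b1 + b2 * b2 < domain_radius ** 2:  # stop at the exit time tau
        if eps - r <= math.hypot(b1 - x[0], b2 - x[1]) <= eps + r:
            occupation += dt
        b1 += rng.gauss(0.0, step_sd)
        b2 += rng.gauss(0.0, step_sd)
    return occupation / (2.0 * r)


if __name__ == "__main__":
    # Local time on the circle of radius 0.3 centred at the starting point.
    print(local_time_on_circle((0.0, 0.0), 0.3))
```

Decreasing r and dt together improves the approximation at the cost of run time; the output is random and only meant to make the definition concrete.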

Jego [26, Proposition 1.1] shows that we can make sense of the local times \(L_{x,\varepsilon }(\tau )\) simultaneously for all x and \(\varepsilon \) with the convention that \(L_{x,\varepsilon }(\tau ) = 0\) if the circle \(\partial D(x,\varepsilon )\) is not entirely included in D. We can thus define for any thickness parameter \(\gamma \in (0,2]\) and any Borel set A,

We recall:

Theorem A

(Theorem 1.1 of [26]) Let \(\gamma \in (0,2)\). The sequence of random measures \(m_\varepsilon ^\gamma \) converges as \(\varepsilon \rightarrow 0\) in probability for the topology of weak convergence on D towards a Borel measure \(m^\gamma \) called Brownian multiplicative chaos.

See [2] for a different construction of the subcritical Brownian multiplicative chaos, as well as [7] for partial results. See also [27] for more properties of these measures.

Our first result towards extending the theory to the critical point \(\gamma =2\) is the fact that the subcritical normalisation yields a vanishing measure in the critical case:

Proposition 1.1

\(m_\varepsilon ^{\gamma =2} (D)\) converges in \({\mathbb {P}}_{x_0}\)-probability to zero.

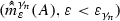

To obtain a non-trivial object we thus need to renormalise the measure slightly differently. Firstly, we consider the Seneta–Heyde normalisation: for every Borel set A, define

Secondly, we consider the derivative martingale normalisation, which formally corresponds to (minus) the derivative of \(m_\varepsilon ^\gamma \) with respect to \(\gamma \) evaluated at \(\gamma = 2\): for every Borel set A, define

Theorem 1.1

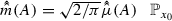

The sequences of random positive measures \((m_\varepsilon )_{\varepsilon > 0}\) and random signed measures \((\mu _\varepsilon )_{\varepsilon > 0}\) converge in \({\mathbb {P}}_{x_0}\)-probability for the topology of weak convergence towards random Borel measures m and \(\mu \). Moreover, the limiting measures satisfy:

1. \(m = \sqrt{\frac{2}{\pi }} \mu \) \({\mathbb {P}}_{x_0}\)-a.s. In particular, \(\mu \) is a random positive measure.
2. Nondegeneracy: \(\mu (D) \in (0,\infty )\) \({\mathbb {P}}_{x_0}\)-a.s.
3. First moment: \({\mathbb {E}}_{x_0} \left[ \mu (D) \right] = \infty \).
4. Nonatomicity: \({\mathbb {P}}_{x_0}\)-a.s. simultaneously for all \(x \in D\), \(\mu (\{x\}) = 0\).

Our next main result is the construction of critical Brownian multiplicative chaos as a limit of subcritical measures. Before stating such a result, we need to ensure that we can make sense of the subcritical measures simultaneously for all \(\gamma \in (0,2)\).

Proposition 1.2

Let \({\mathcal {M}}\) be the set of finite Borel measures on \({\mathbb {R}}^2\). The process \(\gamma \in (0,2) \mapsto m^\gamma \in {\mathcal {M}}\) of subcritical Brownian multiplicative chaos measures possesses a modification such that for every continuous nonnegative function f, \(\gamma \in (0,2) \mapsto \int f dm^\gamma \in {\mathbb {R}}\) is lower semi-continuous.

Theorem 1.2

Let \( \gamma \in (0,2) \mapsto m^\gamma \) be the process of subcritical Brownian multiplicative chaos measures from Proposition 1.2. Then, \((2-\gamma )^{-1} m^\gamma \) converges towards \(2 \mu \) as \(\gamma \rightarrow 2^{-}\) in probability for the topology of weak convergence of measures.

Remark 1.1

In Proposition 1.2, we do not obtain continuity of the process in \(\gamma \). The main difficulty is that, in order to use Kolmogorov’s continuity theorem, one has to consider moments of order larger than 1. When \(\gamma \ge \sqrt{2}\), the second moment blows up and we have to deal with non-integer moments, which are difficult to estimate without Kahane’s convexity inequalities; this tool, however, is restricted to the Gaussian setting. To bypass this difficulty, we apply Kolmogorov’s criterion to versions of the measures that are restricted to specific ‘good’ events allowing us to make \(L^2\)-computations. The drawback is that this yields only lower semi-continuity of the process rather than continuity. See “Appendix B”.

We mention that the construction of the critical measure as a limit of subcritical measures is only partially known in the GMC realm. Such a result was first proved in the specific case of the two-dimensional GFF [4], exploiting on the one hand the construction of Liouville measures as multiplicative cascades [3] and on the other hand the strategy of Madaule [37], who proves a result analogous to Theorem 1.2 in the case of multiplicative cascades/branching random walk. It was then extended to a wide class of log-correlated Gaussian fields in dimension two by comparing them to the GFF [30]. In other dimensions, a natural reference log-correlated Gaussian field is lacking and the result is so far unknown. We believe that the approach we use in this paper to prove Theorem 1.2 can be adapted in order to show that critical GMC measures can be built from their subcritical versions in any dimension (Footnote 3).

Theorem 1.2 can be seen as exchanging the limit in \(\varepsilon \) and the derivative with respect to \(\gamma \). Surprisingly, a factor of 2 pops up when one exchanges the two:

This factor of 2 is present as well in the context of GMC [4, 30] and cascades [37].
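The description of \(\mu _\varepsilon \) as “(minus) the derivative of \(m_\varepsilon ^\gamma \) with respect to \(\gamma \)” can be made concrete by a one-line computation. The sketch below assumes, as an illustration not spelled out in this section, that the density of \(m_\varepsilon ^\gamma \) has the form \(c_\varepsilon \, \varepsilon ^{\gamma ^2/2} e^{\gamma h_\varepsilon (x)}\), with \(h_\varepsilon (x) = \sqrt{L_{x,\varepsilon }(\tau )/\varepsilon }\) and a prefactor \(c_\varepsilon \) that does not depend on \(\gamma \); here \(\varepsilon <1\), so that \(\log \varepsilon = -|\log \varepsilon |\).

```latex
-\frac{\partial}{\partial \gamma} \Big( \varepsilon^{\gamma^2/2} e^{\gamma h_\varepsilon(x)} \Big)
  = -\big( \gamma \log \varepsilon + h_\varepsilon(x) \big) \varepsilon^{\gamma^2/2} e^{\gamma h_\varepsilon(x)}
  = \big( \gamma |\log \varepsilon| - h_\varepsilon(x) \big) \varepsilon^{\gamma^2/2} e^{\gamma h_\varepsilon(x)},
\qquad \text{which at } \gamma = 2 \text{ equals } \big( 2|\log \varepsilon| - h_\varepsilon(x) \big) \varepsilon^{2} e^{2 h_\varepsilon(x)}.
```

Under this assumption the weight \(2|\log \varepsilon | - h_\varepsilon (x)\) is negative at points where \(h_\varepsilon (x) > 2|\log \varepsilon |\), which is consistent with \(\mu _\varepsilon \) being a signed measure in Theorem 1.1.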

Theorem 1.2 is important because it hints at the universal nature of the measure \(\mu \), in the following sense. First, recall that the article [27] gives an axiomatic characterisation of the subcritical measures \(m^\gamma \) implying their universality in the sense that different approximations yield the same limiting measures. Thus, Theorem 1.2 can be seen as showing a form of universality for \(\mu \) as well. Furthermore, the subcritical measures \(m^\gamma \) are known to be conformally covariant [2, 26] and Theorem 1.2 allows us to extend this conformal covariance to the critical measures.

Corollary 1.1

Let \(\phi : D \rightarrow D'\) be a conformal map between two bounded simply connected domains. Let \(x_0 \in D\) and denote by \(\mu ^D\) and \(\mu ^{D'}\) the critical Brownian multiplicative chaos measures built in Theorem 1.1 for the domains \((D,x_0)\) and \((D', \phi (x_0))\) respectively. Then we have

Proof

Let \(\gamma \in (0,2)\) and denote by \(m^{\gamma ,D}\) and \(m^{\gamma ,D'}\) the subcritical measures built in Theorem A for the domains \((D,x_0)\) and \((D',\phi (x_0))\) respectively. By [26, Corollary 1.4 (iv)], it is known that

By Theorem 1.2, we obtain the desired result by dividing both sides of the above equality by \(2(2-\gamma )\) and then by letting \(\gamma \rightarrow 2\).

Let us note that in [26] the conformal covariance (1.5) of the subcritical measures is stated between domains that are assumed to have a boundary composed of a finite number of analytic curves. This extra assumption was made to match the framework of [2], but we emphasise that it is unnecessary in our context. Proposition 6.2 of [26] only requires the domain to be bounded and simply connected. This proposition characterises the law of \(m^{\gamma ,D}\) together with the Brownian motion from which it has been built. The conformal covariance then follows from this proposition as explained in Section 5 of [2]. \(\square \)

Note that we could not hope to directly apply the approach used in the subcritical case to prove conformal covariance at criticality. Indeed, in the subcritical regime, this approach relies on a characterisation of the law of the pair formed by the measure together with the Brownian motion from which it has been built. This characterisation is in turn based on \(L^1\) computations that are infinite at criticality (Theorem 1.1, point 3).

1.2 Conjecture on the supremum of local times of random walk

In recent years, much effort has been put into the study of the supremum of log-correlated fields, the ultimate goal being the convergence in distribution of the properly centred supremum. In many examples, the limiting law is a Gumbel distribution randomly shifted by the log of the total mass of an associated critical chaos. This has been established, for example, in the following instances: branching random walk [6], local times of random walk on regular trees [1], cover time of binary trees [15, 21], discrete GFF [8], log-correlated Gaussian field [20, 36]. See [5, 48] and [11, Section 2] for more references. By analogy with these results, it is natural to make the following conjecture, which we state for random walk since this is the more natural setting.

For \(x \in {\mathbb {Z}}^2\) and \(N \ge 1\), let \(\ell _x^N\) be the total number of times a planar simple random walk starting from the origin has visited the vertex x before exiting the square \([-N,N]^2\). Define a random Borel measure \(\mu _N\) on \({\mathbb {R}}^2 \times {\mathbb {R}}\) by setting for all Borel sets \(A \subset {\mathbb {R}}^2\) and \(T \subset {\mathbb {R}}\),

Conjecture 1

There exist constants \(c_1, c_2>0\) such that \((\mu _N, N \ge 1)\) converges in distribution for the topology of vague convergence on \({\mathbb {R}}^2 \times ({\mathbb {R}}\cup \{+ \infty \})\) towards the Poisson point process

where \(\mu \) is the critical Brownian multiplicative chaos in the domain \([-1,1]^2\) with the origin as a starting point. In particular, for all \(t \in {\mathbb {R}}\),

The leading order term \(2 \pi ^{-1/2} \log N\) was conjectured by Erdős and Taylor [23] and proved in [17]. See also [13, 28, 43]. We expect \(-\pi ^{-1/2} \log \log N\) to be the second order term since, with this choice of constant, the expectation of \(\mu _N({\mathbb {R}}^2 \times (0,\infty ))\) blows up like \(\log N\). Indeed, in analogy with the case of the 2D discrete GFF (see [11]), this should be the correct way of scaling the point measure to get a nondegenerate limit.
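The centring \(2\pi ^{-1/2}\log N - \pi ^{-1/2}\log \log N\) can be set next to a quick simulation. The Python sketch below (an illustration only; the function names and the values of N are hypothetical) runs a planar simple random walk from the origin until it exits \([-N,N]^2\), records the visit counts \(\ell _x^N\), and prints \(\sqrt{\max _x \ell _x^N}\) next to the conjectured centring. Since the conjecture only predicts agreement up to a random shift of order one, nothing quantitative should be read from a few runs at small N.

```python
import math
import random
from collections import Counter


def srw_visit_counts(N, rng):
    """Visit counts ell_x^N of a planar simple random walk started at the
    origin, up to its first exit from the square [-N, N]^2."""
    steps = ((1, 0), (-1, 0), (0, 1), (0, -1))
    x = y = 0
    visits = Counter()
    while abs(x) <= N and abs(y) <= N:
        visits[(x, y)] += 1  # count the current site, then move
        dx, dy = rng.choice(steps)
        x, y = x + dx, y + dy
    return visits


def conjectured_centring(N):
    """2 pi^{-1/2} log N - pi^{-1/2} log log N (meaningful for N > e)."""
    return (2.0 * math.log(N) - math.log(math.log(N))) / math.sqrt(math.pi)


if __name__ == "__main__":
    rng = random.Random(2024)
    for N in (25, 50, 100):
        counts = srw_visit_counts(N, rng)
        top = math.sqrt(max(counts.values()))
        print(f"N={N}: sqrt(max visits)={top:.3f}, centring={conjectured_centring(N):.3f}")
```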

Let us compare this conjecture with the case of the 2D discrete GFF \((\phi _N(x))_{x \in {\mathbb {Z}}^2}\), that is the centred Gaussian vector whose covariance is given by \({\mathbb {E}}[\phi _N(x) \phi _N(y)] = {\mathbb {E}}_{x} \left[ \ell _y^N \right] \). Bramson et al. [8] (see [12] for the link with Liouville measure) showed that for all \(t \in {\mathbb {R}}\),

where \(c_1, c_2>0\) are some constants and \(\mu ^L\) is the Liouville measure in \([-1,1]^2\). Despite strong links between local times and half of the GFF squared (see the lecture notes [44] for an overview of the topic), Conjecture 1 would show that the supremum of the former is slightly smaller than the supremum of the latter, highlighting subtle differences between the two fields (see [27, Corollary 1.1] and [26, Corollary 1.1] for results in this direction).

Let us mention that [28] shows results analogous to Conjecture 1 in dimensions three and higher and that [27] establishes the subcritical analogue of Conjecture 1 in dimension two. A first step towards solving Conjecture 1 might be to give a characterisation of the law of critical Brownian multiplicative chaos analogous to the subcritical characterisation of [27]. Since the first moment blows up, fixing the normalisation of the measure is one of the main challenges in this regard.

1.3 Proof outline

We now explain the main ideas and difficulties of the proof of Theorems 1.1 and 1.2.

We start by recalling that, as noticed in [26], if the domain D is a disc \(D = D(x,\eta )\) centred at x, then the local times \(L_{x,r}(\tau ), r >0,\) exhibit the following Markovian structure: for all \(\eta ' \in (0,\eta )\) and all \(z \in D(x,\eta ) \backslash D(x,\eta ')\), under \({\mathbb {P}}_z\) and conditioned on \(L_{x,\eta '}(\tau )\),

with \((X_s,s \ge 0)\) being a zero-dimensional Bessel process starting from \(\sqrt{ L_{x,\eta '}(\tau ) / {\eta '}}\). This is an easy consequence of the rotational invariance of Brownian motion and of the second Ray–Knight isomorphism for local times of one-dimensional Brownian motion. In order to exploit this relation, we will very often stop the Brownian trajectory at the first exit time \(\tau _{x,R}\) of the disc D(x, R), R being the diameter of the domain D.
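Relation (1.6) turns questions about the radial profile of the local times into questions about a zero-dimensional Bessel process X. One convenient way to simulate X, used in the Python sketch below, is through its square \(Z = X^2\), a zero-dimensional squared Bessel process solving \(\mathrm {d}Z_t = 2\sqrt{Z_t}\,\mathrm {d}W_t\); Z is a nonnegative martingale that is absorbed at 0. The Euler scheme with truncation at 0, the function name and the step size are illustrative choices made here, not the method of the paper.

```python
import math
import random


def zero_dim_bessel_path(x0, t_max, dt, rng):
    """Euler sketch of a zero-dimensional Bessel process X started at x0 >= 0.

    Simulates Z = X^2, solution of dZ = 2 sqrt(Z) dW (squared Bessel process of
    dimension 0), truncated at 0, and returns X = sqrt(Z) on the grid i * dt.
    Once Z reaches 0 it stays there (absorption).
    """
    z = x0 * x0
    n_steps = int(round(t_max / dt))
    step_sd = math.sqrt(dt)
    path = [x0]
    for _ in range(n_steps):
        if z > 0.0:
            z = max(z + 2.0 * math.sqrt(z) * rng.gauss(0.0, step_sd), 0.0)
        path.append(math.sqrt(z))
    return path


if __name__ == "__main__":
    rng = random.Random(0)
    paths = [zero_dim_bessel_path(1.0, 1.0, 0.01, rng) for _ in range(1000)]
    absorbed = sum(p[-1] == 0.0 for p in paths) / len(paths)
    print(f"fraction absorbed by time 1: {absorbed:.3f} (exact: {math.exp(-0.5):.3f})")
```

Started from \(X_0 = x\), the process is absorbed at 0 by time t with probability \(e^{-x^2/(2t)}\) (about 0.61 when x = t = 1), so many simulated paths end at 0; in the local-time picture, this corresponds to small circles that the Brownian path never reaches.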

What makes the critical case so special is that the approximating measures are no longer normalised by the first moment (otherwise we would get a vanishing measure, as shown in Proposition 1.1). We thus need to introduce good events before we can even perform \(L^1\)-computations. Defining the right events and showing that, with high probability, they do not change the measures is one of the crucial steps of this paper, which we now explain. We first describe the most natural events to consider and then explain why we will actually consider different events.

Naive definition of good events. In analogy with the case of log-correlated Gaussian fields, it is natural to consider the following events to make the measures bounded in \(L^1\): let \(\beta >0\) be large and for all \(x \in D\) and \(\varepsilon >0\), define

Here, we stop the Brownian path at time \(\tau _{x,R}\) to be able to use (1.6). One would expect \({\mathbb {P}}_{x_0} \left( \bigcap _{x \in D} \bigcap _{\varepsilon >0} G_\varepsilon (x) \right) \rightarrow 1\) as \(\beta \rightarrow \infty \) since, by analogy with the Gaussian case (see [39, Corollary 2.4] for instance), the following should hold true:

Because of the lack of self-similarity and Gaussianity of our model, showing (1.7) turns out to be far from easy (see the introduction of Sect. 4 for more about this). We thus take a detour to justify that the introduction of the events \(G_\varepsilon (x)\) is harmless. We first control the supremum of the more regular local times of small annuli, which allows us to introduce good events associated with these local times. Crucially, these good events will be enough to make the measures bounded in \(L^1\). Using repulsion estimates associated with the zero-dimensional Bessel process X, we will finally be able to transfer the restrictions on the local times of annuli (requiring for all \(k \ge 0\), \(\min _{[k,k+1]} X \le 2k + 2 \log (k) + \beta /2\)) to restrictions on the local times of circles (requiring for all \(s \ge 0\), \(X_s \le 2s + \beta \)). This is the content of Sect. 4.
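To make the two kinds of restrictions concrete, here is a minimal Python sketch that checks them on a path of X sampled on a grid of mesh dt: the circle-type restriction \(X_s \le 2s + \beta \) at every grid time, and the annulus-type restriction \(\min _{[k,k+1]} X \le 2k + 2\log (k) + \beta /2\). The function names and the toy path are hypothetical. The sketch skips k = 0 because \(\log 0\) is undefined (an assumption about how that case should be read), and it assumes 1/dt is an integer so that integer times fall on the grid. It only checks the two conditions; it does not reproduce the repulsion estimates that relate them.

```python
import math


def satisfies_circle_barrier(path, dt, beta):
    """True if X_s <= 2 s + beta at every grid time s = i * dt."""
    return all(x <= 2.0 * i * dt + beta for i, x in enumerate(path))


def satisfies_annulus_barrier(path, dt, beta):
    """True if min_{[k, k+1]} X <= 2k + 2 log k + beta / 2 for every k >= 1
    such that [k, k+1] is covered by the grid (k = 0 is skipped: log 0)."""
    steps_per_unit = int(round(1.0 / dt))
    k = 1
    while (k + 1) * steps_per_unit < len(path):
        window = path[k * steps_per_unit:(k + 1) * steps_per_unit + 1]
        if min(window) > 2.0 * k + 2.0 * math.log(k) + beta / 2.0:
            return False
        k += 1
    return True


if __name__ == "__main__":
    dt = 0.01
    # Hypothetical toy path: the straight line X_s = 1.5 s on [0, 5].
    path = [1.5 * i * dt for i in range(501)]
    print(satisfies_circle_barrier(path, dt, beta=1.0))   # True
    print(satisfies_annulus_barrier(path, dt, beta=1.0))  # True
```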

Other repulsion estimates with a similar flavour will tell us that, once we restrict ourselves to the events \(G_\varepsilon (x)\), we will be able to restrict further the measures to the good events

for some large \(M>0\). This is the content of Lemma 2.2. This second layer of good events will make the measures bounded in \(L^2\) (Proposition 2.2). We will conclude the proof by showing that the measures restricted to the second layer of good events converge in \(L^2\) (Proposition 2.3).

Actual definition of good events. We now explain why we actually define different good events. This paper extensively uses the relation (1.6) between local times and the zero-dimensional Bessel process. When making \(L^1\)-computations, we will bound from above the local times \(L_{x,\varepsilon }(\tau )\) by \(L_{x,\varepsilon }(\tau _{x,R})\) and use (1.6) directly. Difficulties arise when we start to make \(L^2\)-computations since we need to consider local times at two different centres. We will resolve this issue with the following reasoning. Consider a Brownian excursion from \(\partial D(x,1)\) to \(\partial D(x,2)\) and condition on the initial and final points of the excursion (this will be important to keep track of the number of excursions). Because of this conditioning, rotational symmetry is broken and the law of the local times \((L_{x,\delta }(\tau _{x,2}), \delta \le 1)\) is no longer given by a zero-dimensional Bessel process. But if we condition further on the fact that the excursion went deep inside D(x, 1), then it will have forgotten its starting position and the law of \((L_{x,\delta }(\tau _{x,2}), \delta \le 1)\) will be very close to the one given in (1.6). This is the content of the continuity lemma (Lemma 3.3), which is a much more precise version of [26, Lemma 5.1] giving a quantitative estimate of the error in the aforementioned approximation. Importantly, this approximation cannot hold if we look at the local times \(L_{x,\delta }(\tau _{x,2})\) for all radii \(\delta \le 1\). Instead, we must restrict ourselves to dyadic radii \(\delta \in \{e^{-n}, n \ge 0\}\) so that the Brownian path has enough space to forget its initial position. See Remark 3.1. This is one reason why we cannot define the good events \(G_\varepsilon (x)\) and \(G_\varepsilon '(x)\) using this continuum of radii. Another reason is that it would prevent us from decoupling the two-point estimates needed in the proof of Proposition 2.3 [see especially (5.7)].

Moreover, we will not define the good events using only local times at dyadic radii either. Indeed, doing so would require us to estimate probabilities associated with the zero-dimensional Bessel process evaluated at discrete times. These probabilities are much harder to estimate than their continuous-time counterparts and our approach cannot afford to lose too much on these estimates (especially in the identification of the different limiting measures). We will resolve this using the following surprising trick: we will consider a field \((h_{x,\delta }, x \in D, \delta \in (0,1])\) that interpolates the local times \(\sqrt{\frac{1}{\delta } L_{x,\delta }(\tau _{x,R})}\) between dyadic radii by zero-dimensional Bessel bridges that have a very small range of dependence (see Lemma 2.1). In this way, the one-point estimates will be the same as if we considered local times at all radii, but we will be able to decouple things to make the two-point computations. We believe this new idea will be useful in subsequent studies.

Paper outline. The rest of the paper is organised as follows. Section 2 proves Theorems 1.1 and 1.2 subject to the intermediate results Proposition 2.1, Lemma 2.2 and Propositions 2.2 and 2.3. Section 3 collects preliminary results that will be used throughout the paper. In particular, it states and proves the continuity lemma and contains results on Bessel processes and barrier estimates associated with 1D Brownian motion. Section 4 proves Proposition 2.1 and Lemma 2.2, showing that we can safely add the two layers of good events. Section 5 is dedicated to the \(L^2\) estimates needed to prove Propositions 2.2 and 2.3. “Appendix A” justifies the existence of the field \((h_{x,\delta }, x \in D, \delta \in (0,1])\) interpolating local times with zero-dimensional Bessel bridges. Finally, “Appendix B” sketches the proof of Proposition 1.2.

We end this introduction with some notation that will be used throughout the paper. We will denote:

Notation 1.1

For \(x > 0\) and \(d \ge 0\), \(\mathbb {P}^d_x\) and \(\mathbb {E}_x^d\) the law and the expectation under which \((X_t)_{t \ge 0}\) is a d-dimensional Bessel process starting from x at time 0. \(\mathbb {P}_x\) and \(\mathbb {E}_x\) will denote the law and the expectation of 1D Brownian motion starting at x. Note that under \(\mathbb {P}_x\), the process X takes negative and positive values, whereas the process stays nonnegative under \(\mathbb {P}_x^1\).

Notation 1.2

For \(x \in D\), \(k_x\) the smallest nonnegative integer such that \(e^{-k_x} \le |x-x_0|\);

Notation 1.3

R the diameter of the domain D and for \(x \in D\) and \(r >0\), \(\tau _{x,r}\) the first hitting time of \(\partial D(x,r)\);

Notation 1.4

For \(a_\varepsilon \in {\mathbb {R}}, b_\varepsilon>0, \varepsilon > 0\), we will denote \(a_\varepsilon \lesssim b_\varepsilon \) (resp. \(a_\varepsilon = O(b_\varepsilon )\), resp. \(a_\varepsilon = o(b_\varepsilon )\)) if there exists some constant \(C>0\) such that for all \(\varepsilon >0\), \(a_\varepsilon \le C b_\varepsilon \) (resp. \(|a_\varepsilon | \le C b_\varepsilon \), resp. \(a_\varepsilon /b_\varepsilon \rightarrow 0\) as \(\varepsilon \rightarrow 0\)). Sometimes we will emphasise the dependency on some parameter \(\eta \) by writing for instance \(a_\varepsilon = o_\eta (b_\varepsilon )\);

Notation 1.5

For \(x \in {\mathbb {R}}\), \((x)_+ = \max (x,0)\).

In this paper, C, c, etc. will denote generic constants that may vary from line to line.

2 High level proof of Theorems 1.1 and 1.2

To ease notation, we will prove the convergences stated in Theorem 1.1 along the radii \(\varepsilon \in \{e^{-k}, k \ge 0\}\). The proof extends naturally to all radii \(\varepsilon \in (0,1]\). In particular, in what follows we will write \(\sup _{\varepsilon >0}\), \(\limsup _{\varepsilon >0}\), etc. but we actually mean \(\sup _{\varepsilon \in \{e^{-k}, k \ge 0\} }\), \(\limsup _{\varepsilon \in \{e^{-k}, k \ge 0\} }\), etc.

We start off by defining the field \((h_{x,\delta }, x \in D, \delta \in (0,1])\) mentioned in Sect. 1.3. Recall Notation 1.3. We will also denote for any \(x=(x_1,x_2) \in {\mathbb {R}}^2\), \(\left\lfloor x \right\rfloor = (\left\lfloor x_1 \right\rfloor , \left\lfloor x_2 \right\rfloor )\).

Lemma 2.1

By enlarging the probability space we are working on if necessary, we can construct a random field \((h_{x,\delta }, x \in D, \delta \in (0,1])\) such that

-

for all \(x \in D\) and \(n \ge 0\), conditionally on \(\{ L_{x,\delta }(\tau _{x,R}), \delta = e^{-n}, e^{-n-1} \},\) \((h_{x,e^{-t}}, t \in [n,n+1])\) has the law of a zero-dimensional Bessel bridge from \(\sqrt{e^n L_{x,e^{-n}}(\tau _{x,R})}\) to \(\sqrt{e^{n+1} L_{x,e^{-n-1}}(\tau _{x,R})}\) that is independent of \((B_t, t \ge 0)\) and \((h_{y,\delta }, y \in D, \delta \notin [e^{-n-1}, e^{-n}])\);

-

for all \(n_0 \ge 0\) and \(x, y \in D\), conditionally on \(\{L_{z,\delta }(\tau _{z,R}), z=x,y, \delta =e^{-n}, n \ge n_0\}\), \((h_{x,\delta }, \delta \le e^{-n_0})\) and \((h_{y,\delta }, \delta \le e^{-n_0})\) are independent as soon as \(|x-y| \ge 2 e^{-n_0}\);

-

for all \(n \ge 0\) and \(z \in e^{-n-10} {\mathbb {Z}}^2 \cap D\), \((h_{x,\delta }, x \in D, \left\lfloor e^{n+10} x \right\rfloor = e^{n+10}z, e^{-n-1} \le \delta \le e^{-n} )\) is continuous.

See “Appendix A” for a proof of the existence of such a process. Note that by (1.6), for all \(n_0 \ge 0\) and for all \(x \in D\), conditionally on \(L_{x,e^{-n_0}}(\tau _{x,R})\), \((h_{x,e^{-s-n_0}}, s \ge 0)\) has the law of a zero-dimensional Bessel process starting from \(\sqrt{e^{n_0} L_{x,e^{-n_0}}(\tau _{x,R})}\).

We now introduce the good events that we will work with: let \(\beta , M >0\) be large and define for all \(x \in D\) and \(\varepsilon \le |x-x_0|\), \(\varepsilon = e^{-k}\),

and

By convention, the above good events impose no constraint if \(|x-x_0| < \varepsilon \). Let us mention that if \(\varepsilon = e^{-k - t_0}\) for some \(k \ge 0\) and \(t_0 \in (0,1)\), one would need to consider the process

instead of \(s \mapsto h_{x,e^{-s}}\) to define the good events when \(\varepsilon \notin \{e^{-k}, k \ge 0\}\). Again, in what follows we will restrict ourselves to \(\varepsilon \in \{e^{-k}, k \ge 0\}\) to ease notation.

We now consider modified versions of the measures \(m_\varepsilon ^\gamma , \gamma \in (0,2)\), and \(m_\varepsilon \) defined respectively in (1.2) and (1.3):

and

We also consider modified versions of the measure \(\mu _\varepsilon \) defined in (1.4): for every Borel set A, set

and we decompose further

We emphasise that in (2.3) the local times are stopped at time \(\tau \) or \(\tau _{x,R}\) depending on whether the local time is in the exponential or not.

A first step towards the proof of Theorem 1.1 consists in showing that these changes of measures are harmless:

Proposition 2.1

Let A be a Borel set. The following three limits hold in \({\mathbb {P}}_{x_0}\)-probability:

Once the good events \(G_\varepsilon (x)\) are introduced, we can perform \(L^1\) computations. Next, we will show:

Lemma 2.2

Let A be a Borel set and fix \(\beta >0\). We have

The second layer of good events makes the sequences  ,  and  bounded in \(L^2\). Here  goes to zero very rapidly as \(\gamma \rightarrow 2\). We recall that a sequence \((\nu _n, n \ge 1)\) of random Borel measures on D is tight for the topology of weak convergence on D if, and only if, the sequence \((\nu _n(D), n \ge 1)\) of real-valued random variables is tight (see [10, Exercise 3.8] for instance).

Proposition 2.2

Fix \(\beta >0\) and \(M > 0\). We have

In particular,  and  are tight for the topology of weak convergence on D. Moreover, any subsequential limit  of  satisfies: \({\mathbb {P}}_{x_0}\)-a.s. simultaneously for all \(x \in D\),  .

Finally, we will show:

Proposition 2.3

Fix \(\beta >0\) and \(M>0\) and let A be a Borel set. Let \((\gamma _n, n \ge 1) \in [1,2)^{\mathbb {N}}\) be a sequence converging to 2.

1.  ,  and for all \(n \ge 1\),  are Cauchy sequences in \(L^2\). Let  ,  and  , be the limiting random variables.
2.  -a.s.
3.  converges in \(L^2\) towards  as \(n \rightarrow \infty \).

We now have all the ingredients to prove Theorems 1.1 and 1.2.

Proof of Theorems 1.1 and 1.2

Let A be a Borel set. Let \(\beta >0\). For all \(M>0\), we have

By Proposition 2.3, the second right-hand side term vanishes, whereas by Lemma 2.2 the first right-hand side term goes to zero as \(M \rightarrow \infty \). The left-hand side term being independent of M, it has to vanish. In other words, \(({\hat{\mu }}_\varepsilon (A), \varepsilon >0)\) converges in \(L^1\) towards some \({\hat{\mu }}(A, \beta )\) (we keep track of the dependence on \(\beta \) here). Let \({\hat{\mu }}(A, \infty )\) be the almost sure limit of the nondecreasing sequence \({\hat{\mu }}(A,\beta )\) as \(\beta \rightarrow \infty \). We now have for any small \(\rho >0\) and large \(\beta >0\),

The second right-hand side term vanishes since \(({\hat{\mu }}_\varepsilon (A,\beta ), \varepsilon >0)\) converges (in \(L^1\)) towards \({\hat{\mu }}(A,\beta )\). The third term goes to zero as \(\beta \rightarrow \infty \) since \(({\hat{\mu }}(A,\beta ), \beta >0)\) converges (almost surely) to \({\hat{\mu }}(A,\infty )\). The first term goes to zero as \(\beta \rightarrow \infty \) by Proposition 2.1. We have thus obtained the convergence in \({\mathbb {P}}_{x_0}\)-probability of \((\mu _\varepsilon (A),\varepsilon >0)\).

Let \((\gamma _n, n \ge 1) \in [1,2)^{\mathbb {N}}\) be a sequence converging to 2. By mimicking the above lines, Proposition 2.1, Lemma 2.2 and Proposition 2.3 imply that

in \({\mathbb {P}}_{x_0}\)-probability. By [26], we already know that \((m_\varepsilon ^{\gamma _n}(A), \varepsilon >0)\) converges to \(m^{\gamma _n}(A)\) in probability. We have thus obtained the convergence in probability of \((m_\varepsilon (A), \varepsilon>0), (\mu _\varepsilon (A), \varepsilon >0)\) and \(((2-\gamma _n)^{-1} m^{\gamma _n}(A), n \ge 1)\) and the limits satisfy

Obtaining the convergence of the measures and the identification of the limiting measures as stated in Theorems 1.1 and 1.2 is now routine.

The only points that remain to be checked are Points 2–4 of Theorem 1.1. Point 4 follows from the fact that any subsequential limits  of

of  are non-atomic (see Proposition 2.2) and that

is as small as desired (in probability, by tuning the parameters \(\beta \) and M) by Proposition 2.1 and Lemma 2.2. We now turn to Point 3. Since \(({\hat{m}}_\varepsilon (D), \varepsilon >0)\) converges in \(L^1\) towards \({\hat{m}}(D)\), \({\mathbb {E}}_{x_0} \left[ {\hat{m}}(D) \right] = \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}_{x_0} \left[ {\hat{m}}_\varepsilon (D) \right] \). Now, by monotonicity, \({\mathbb {E}}_{x_0} \left[ m(D) \right] \ge \lim _{\beta \rightarrow \infty } \lim _{\varepsilon \rightarrow 0} {\mathbb {E}}_{x_0} \left[ {\hat{m}}_\varepsilon (D) \right] \) which is infinite by (4.3).

Finally, let us prove Point 2 of Theorem 1.1. The fact that \(\mu (D)\) is finite \({\mathbb {P}}_{x_0}\)-a.s. follows directly from Proposition 2.1 and Lemma 4.2. We now want to show that it is positive \({\mathbb {P}}_{x_0}\)-a.s. By Point 3 of Theorem 1.1, we already know that it is positive with a positive probability. We are going to bootstrap this to obtain a probability equal to 1. Let \(p \ge 1\) and consider the sequence of stopping times defined by \(\sigma _0^{(2)} = 0\) and for all \(i \ge 1\),

and \(x_i := B_{\sigma _i^{(2)}}\). For \(i \ge 0\), let \(\mu _i\) be the critical Brownian multiplicative chaos in the domain \((D(x_i,2^{-p}),x_i)\) between the times \(\sigma _{i}^{(2)}\) and \(\sigma _{i+1}^{(1)}\). Let \(I:= \left\lfloor \mathrm {d}(x_0, \partial D) 2^p/10 \right\rfloor \). Since \(\mu \le \sum _{i=0}^I \mu _i\), we have

By the Markov property and translation invariance, the probability on the right hand side is equal to

By the scaling property of critical Brownian multiplicative chaos coming from Corollary 1.1, the probability \({\mathbb {P}}_{x_0} \left( \mu _0(D(x_0,2^{-p})) = 0 \right) \) does not depend on p. Moreover, thanks to Theorem 1.1, Point 3, it is strictly less than one. Since \(I \rightarrow \infty \) as \(p \rightarrow \infty \), letting \(p \rightarrow \infty \) we deduce that \({\mathbb {P}}_{x_0} \left( \mu (D) = 0 \right) = 0\), which concludes the proof. \(\square \)

Proposition 1.1 now follows:

Proof of Proposition 1.1

Recall that \(m_\varepsilon ^{\gamma =2}(D) = m_\varepsilon (D) / \sqrt{|\log \varepsilon |}\). By Theorem 1.1, \((m_\varepsilon (D), \varepsilon >0)\) converges in \({\mathbb {P}}_{x_0}\)-probability towards a nondegenerate random variable. Hence \((m_\varepsilon ^{\gamma =2}(D), \varepsilon >0)\) converges in \({\mathbb {P}}_{x_0}\)-probability to zero as desired. \(\square \)

The remainder of the paper is devoted to the proofs of the above intermediate statements.

3 Preliminaries

3.1 Local times as exponential random variables

In this short section we recall some results of [26] that allow us to approximate local times of circles by exponential random variables. We start by recalling the behaviour of the Green function.

Lemma 3.1

([26], Lemma 2.1) For all \(x \in {\mathbb {C}}\), \(r> \varepsilon >0\) and \(y \in \partial D(x, \varepsilon )\), we have:

In the following lemma, we denote by \({{\,\mathrm{CR}\,}}(x,D)\) the conformal radius of D seen from x and by \(G_D\) the Green function of D with Dirichlet boundary conditions normalised so that \(G_D(x,y) \sim - \log |x-y|\) as \(x \rightarrow y\). Recall also Notation 1.3.

Lemma 3.2

Let \(\eta >0\), \(x \in D\) and \(\varepsilon >0\) such that the disc \(D(x,\varepsilon )\) is included in D and is at distance at least \(\eta \) from \(\partial D\). Let \(y \in \partial D(x,\varepsilon )\). Then \(L_{x,\varepsilon }(\tau )\) under \({\mathbb {P}}_y\) stochastically dominates and is stochastically dominated by exponential variables with mean

In particular,

Moreover, if \(x_0 \notin D(x,\varepsilon )\),

Proof

(3.3) is part of [26, Lemma 2.2]. The claim about the stochastic dominations is a consequence of [26, Section 2] as explained at the beginning of the proof of [26, Proposition 3.1]. (3.2) is then an easy computation with exponential variables. \(\square \)

3.2 Continuity lemma

We now state a refinement of Lemma 5.1 of [26]. We indeed need a quantitative estimate on the error that we make when we forget about the exit point of the excursion.

Lemma 3.3

Let \(k,k',n \ge 0\) with \(k' \ge k+1\) and \(n \ge k' - k\). Denote \(\eta = e^{-k}\), \(\eta ' = e^{-k'}\) and for all \(i=1 \dots k' - k\), \(r_i = \eta e^{-i}\). Consider \(0< r_n< \dots< r_{k'-k+1} < r_{k'-k} = \eta '\) and for \(i = 1 \dots n\), \(T_i \in {\mathcal {B}}([0,\infty ))\). For any \(y \in \partial D(0,\eta /e)\), we have

with \(p(u) \le \frac{1}{c} \exp \left( - c |\log u|^{1/2} \right) \) for some universal constant \(c >0\).

Remark 3.1

It is crucial that we consider dyadic radii \(r \in \{\eta e^{-i}, i =1 \dots k' -k\}\) between \(\eta '\) and \(\eta /e\): there is no hope of obtaining such a result if we looked at the local times \(L_{0,r}(\tau _{0,\eta })\) for all \(r \le \eta /e\). Indeed, if we condition the Brownian motion to spend very little time in the disc \(D(0,\eta /e)\) before hitting \(\partial D(0,\eta )\) (which is a function of \(L_{0,r}(\tau _{0,\eta }), r \le \eta /e\)), then \(B_{\tau _{0,\eta }}\) will favour points on \(\partial D(0,\eta )\) close to the starting position y, even if we further condition the trajectory to visit \(D(0,\eta ')\) before exiting \(D(0,\eta )\).

Proof of Lemma 3.3

The proof is inspired by that of [26, Lemma 5.1]. In this proof, we write \(u = \pm v\) when we mean \(-v \le u \le v\). To ease notation, we denote \(\tau _\eta := \tau _{0,\eta }, \tau _{\eta '} := \tau _{0,\eta '}\) and, for all \(i=1 \dots n\), \(L_{r_i} := L_{0,r_i}(\tau _{0,\eta })\). Take \(C \in {\mathcal {B}}\left( \partial D(0,\eta ) \right) \). We denote by \(\mathrm {Leb}(C)\) the Lebesgue measure of C on \(\partial D(0,\eta )\). It is enough to show that

Moreover, establishing (3.5) can be reduced to showing that

Indeed, applying (3.6) to \(T_i = [0,\infty )\) for all i gives

which, combined with (3.6), leads to (3.5) with slightly different constants. Finally, after reformulating (3.6), we see that to finish the proof we only need to prove that

The skew-product decomposition of Brownian motion (see [33], Corollary 16.7 for instance) tells us that we can write

where \((w_t, t \ge 0)\) is a one-dimensional Brownian motion independent of the radial part \((\left| B_t \right| , t \ge 0)\) and \((\sigma _t, t \ge 0)\) is a time-change that is adapted to the filtration generated by \((\left| B_t \right| , t \ge 0)\):

In particular, under \({\mathbb {P}}_y\), we have the following equality in law

where \(\theta _0\) is the argument of y, \({\mathcal {N}}\) is a standard normal random variable independent of the radial part \((\left| B_t \right| , t \ge 0)\) and

We now investigate the distribution of \(e^{i \theta _0 + it {\mathcal {N}}}\), for some \(t>0\), in more detail. More precisely, we want to quantify the fact that, if t is large, this distribution should be close to the uniform distribution on the unit circle. Using the probability density function of \({\mathcal {N}}\) and then the Poisson summation formula, we find that the probability density function \(f_t(\theta )\) of \(e^{i \theta _0 + it {\mathcal {N}}}\) at a given angle \(\theta \) is given by

In particular, we can control the error in the approximation mentioned above by: for all \(\theta \in [0,2 \pi ]\),

for some universal constant \(C_1>0\).
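The Poisson summation step can be checked numerically. The sketch below (our own illustration, not code from the paper) computes the density of the angle \(\theta _0 + t{\mathcal {N}}\) reduced modulo \(2\pi \) in two ways: by summing the Gaussian density over all translates \(\theta + 2\pi k\), and through the Fourier series \(\frac{1}{2\pi }\big (1 + 2\sum _{n \ge 1} e^{-n^2 t^2/2} \cos (n(\theta -\theta _0))\big )\) produced by Poisson summation. The function names and the truncation levels `k_max`, `n_max` are arbitrary choices. The Fourier form gives \(|f_t(\theta ) - \frac{1}{2\pi }| \le \frac{1}{\pi }\sum _{n \ge 1} e^{-n^2t^2/2}\), which for \(t \ge 1\) is at most \(0.4\, e^{-t^2/2}\); this is one admissible form of a bound with constant \(C_1\), though we do not claim it is the exact display of the text.

```python
import math

TWO_PI = 2.0 * math.pi


def density_by_images(theta, theta0, t, k_max=60):
    """Density at angle theta of (theta0 + t*N) mod 2*pi, N standard normal,
    obtained by summing the Gaussian density over all translates by 2*pi*k."""
    total = 0.0
    for k in range(-k_max, k_max + 1):
        x = theta - theta0 + TWO_PI * k
        total += math.exp(-x * x / (2.0 * t * t))
    return total / (t * math.sqrt(TWO_PI))


def density_by_fourier(theta, theta0, t, n_max=60):
    """Same density after Poisson summation:
    (1 / 2pi) * (1 + 2 * sum_{n >= 1} exp(-n^2 t^2 / 2) * cos(n (theta - theta0)))."""
    total = 1.0
    for n in range(1, n_max + 1):
        total += 2.0 * math.exp(-n * n * t * t / 2.0) * math.cos(n * (theta - theta0))
    return total / TWO_PI


def sup_deviation_from_uniform(theta0, t, grid=720):
    """Largest |f_t(theta) - 1/(2 pi)| over a uniform grid of angles."""
    return max(
        abs(density_by_fourier(TWO_PI * j / grid, theta0, t) - 1.0 / TWO_PI)
        for j in range(grid)
    )


if __name__ == "__main__":
    for t in (0.5, 1.0, 2.0, 3.0):
        dev = sup_deviation_from_uniform(theta0=0.3, t=t)
        print(f"t = {t}: sup deviation {dev:.3e}, exp(-t^2/2) = {math.exp(-t * t / 2):.3e}")
```

The deviation from the uniform density decays like \(e^{-t^2/2}\), which is why large values of t in the skew-product representation make the exit angle almost uniform.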

We now come back to the objective (3.7). Using the identity (3.8), and because the local times \(L_{r_i}\) are measurable with respect to the radial part of Brownian motion, we have by the triangle inequality

where

To conclude the proof, we want to show that

By conditioning on the trajectory up to \(\tau _{\eta '}\), it is enough to show that for any \(T_i' \in {\mathcal {B}}([0,\infty )), i = 1 \dots n\), for any \(z \in \partial D(0,\eta ')\),

In the following, we fix such \(T_i'\) and such a z.

Consider the sequence of stopping times defined by: \(\sigma _0^{(2)} :=0\) and for all \(i = 1 \dots k' - k\),

We only keep track of the portions of trajectories during the intervals \(\left[ \sigma _i^{(1)}, \sigma _i^{(2)} \right] \) by bounding from below \(\varsigma '\) by

Notice that, by the Markov property, conditioning on \(\{ \forall i =1 \dots n, L_{r_i} \in T_i' \}\) affects the variables \(\sigma _i^{(2)} - \sigma _i^{(1)}\) only through \(\left| B_{\sigma _i^{(2)}} \right| \). Since

is convex, we deduce by Jensen’s inequality that

By the Markov property and Brownian scaling, we have obtained

where \(\sigma _* := \inf \{ t > 0 : |B_t| \in \{ 1, e^{-1} \} \}\). Now, one can show (see [16, Section 14] for instance) that there exists a universal constant \(c>0\) such that for all \(s \ge 1\),

Since \(\min _{r=1,e^{-1}} {\mathbb {P}}_{e^{-1/2}} \left( |B_{\sigma _*}| = r \right) \ge c\) for some universal constant \(c>0\), we also have

From this, we deduce that

and therefore, by Cauchy–Schwarz, we obtain that

Recalling that \(k'-k = \log (\eta /\eta ')\), this shows (3.9), which finishes the proof of Lemma 3.3. \(\square \)
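For concreteness, the two exit probabilities entering the constant c in the proof above can be computed exactly, so that \(c = 1/2\) works there. This is a standard optional-stopping computation added for illustration: \(\log |\cdot |\) is harmonic away from the origin and \(\log |B_{t \wedge \sigma _*}|\) stays in \([-1,0]\), so, starting from a point of modulus \(e^{-1/2}\),

$$\begin{aligned} -\frac{1}{2} = \log e^{-1/2} = {\mathbb {E}}_{e^{-1/2}} \left[ \log |B_{\sigma _*}| \right] = 0 \cdot {\mathbb {P}}_{e^{-1/2}} \left( |B_{\sigma _*}| = 1 \right) - {\mathbb {P}}_{e^{-1/2}} \left( |B_{\sigma _*}| = e^{-1} \right) , \end{aligned}$$

hence \({\mathbb {P}}_{e^{-1/2}} \left( |B_{\sigma _*}| = e^{-1} \right) = {\mathbb {P}}_{e^{-1/2}} \left( |B_{\sigma _*}| = 1 \right) = 1/2\).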

3.3 Bessel process

The purpose of this section is to collect properties of Bessel processes that will be needed in this paper. Recall Notation 1.1.

We start off by recalling the following result that can be found for instance in the lecture notes [34], Proposition 2.2.

Lemma A

For each \(x, t >0\) and \(d \ge 0\), the measures \(\mathbb {P}_x\) and \(\mathbb {P}^d_x\), considered as measures on paths \(\{X_s, s \le t\}\) and restricted to the event \(\{ \forall s \le t, X_s >0\}\), are mutually absolutely continuous with Radon–Nikodym derivative

where \(a = (d-1)/2\).
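For orientation, here is the form of this derivative produced by a standard Girsanov computation. This is our own sketch rather than a quotation of [34]; it assumes that under \(\mathbb {P}_x\) the process X is a one-dimensional Brownian motion started at x, that under \(\mathbb {P}^d_x\) it solves \(\mathrm {d}X_s = \mathrm {d}W_s + \frac{a}{X_s}\mathrm {d}s\) with \(a = (d-1)/2\), and it is to be read on the event \(\{ \forall s \le t, X_s >0\}\) (formally, after localisation away from 0). Itô's formula for \(\log X\) turns the stochastic integral into a boundary term:

$$\begin{aligned} \frac{\mathrm {d}\mathbb {P}^d_x}{\mathrm {d}\mathbb {P}_x}&= \exp \left( a \int _0^t \frac{\mathrm {d}X_s}{X_s} - \frac{a^2}{2} \int _0^t \frac{\mathrm {d}s}{X_s^2} \right) , \qquad \int _0^t \frac{\mathrm {d}X_s}{X_s} = \log \frac{X_t}{x} + \frac{1}{2} \int _0^t \frac{\mathrm {d}s}{X_s^2}, \\ \frac{\mathrm {d}\mathbb {P}^d_x}{\mathrm {d}\mathbb {P}_x}&= \left( \frac{X_t}{x} \right) ^a \exp \left( - \frac{a(a-1)}{2} \int _0^t \frac{\mathrm {d}s}{X_s^2} \right) . \end{aligned}$$

In particular, \(d=0\) (\(a=-1/2\)) gives the factor \((x/X_t)^{1/2} \exp \left( -\frac{3}{8} \int _0^t X_s^{-2} \mathrm {d}s \right) \), while \(d=3\) (\(a=1\)) gives simply \(X_t/x\), the familiar h-transform of Brownian motion killed at 0.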

We now state a consequence of Lemma A and Girsanov’s theorem that will allow us to transfer computations on the zero-dimensional Bessel process over to one-dimensional Brownian motion and the three-dimensional Bessel process. Let us mention that since 0 is absorbing for the zero-dimensional Bessel process X, we will very often write \( \mathbf {1}_{ \left\{ X_t >0 \right\} } \) instead of \( \mathbf {1}_{ \left\{ \forall s \le t, X_s >0 \right\} } \) for this specific process.

Lemma 3.4

Let \(\gamma \in (0,2]\), \(t>0\), \(r>0\) and let \(f: {\mathcal {C}}([0,t],[0,\infty )) \rightarrow [0,\infty )\) be a nonnegative measurable function. Then

In particular,

Moreover,

and

Finally,

Proof of Lemma 3.4

By Lemma A, the left hand side of (3.10) is equal to

Girsanov’s theorem concludes the proof of (3.10). (3.11) follows directly from (3.10). Now, by (3.10), the left hand side of (3.12) is equal to

By Lemma A, this is in turn equal to the right hand side of (3.12). (3.13) is an easy consequence of (3.12) and we now turn to the proof of (3.14). We use (3.12) and add the stronger constraint \(\{ \forall s \le t, 2s -X_s+\beta > r/2 +s\}\) in order to obtain a lower bound. On this event, we can bound

Moreover, we simply bound

which overall shows that

Since \(X_t\) under \(\mathbb {P}^3_{\beta -r}(\cdot \vert \forall s \le t, 2s -X_s+\beta > r/2 +s)\) is stochastically dominated by \(X_t\) under \(\mathbb {P}^3_{\beta -r}\), we can further bound

Lemma 3.5, Point 2, shows that \(\mathbb {E}^3_{\beta -r} \left[ \frac{\sqrt{t}}{X_t} \right] \rightarrow \sqrt{2/\pi }\) as \(t \rightarrow \infty \). Therefore

To see that the above probability remains bounded away from zero as \(\beta \rightarrow \infty \), we can for instance notice that a three-dimensional Bessel process which starts at \(\beta -r\) is stochastically dominated by the sum of three independent one-dimensional Bessel processes \(X^{(i)}\), \(i=1,2,3\), starting at the origin, plus \(\beta -r\) (this follows from the triangle inequality and the bound \(\sqrt{a^2 + b^2 + c^2} \le |a| + |b| + |c|\)). Therefore

This concludes the proof of (3.14). \(\square \)

We now collect some properties of the three-dimensional Bessel process.

Lemma 3.5

Let \(K >0\).

1. Uniformly over \(r \in [0,K]\),

$$\begin{aligned} \mathbb {P}_r^3 \left( \forall t \ge 0, X_t \ge \frac{\sqrt{t}}{M \log (2 + t)^2} \right) \rightarrow 1 \end{aligned}$$

as \(M \rightarrow \infty \).

2. \(\mathbb {E}^3_r \left[ \frac{1}{X_t} \right] = \sqrt{\frac{2}{\pi t}} + o \left( \frac{1}{\sqrt{t}} \right) \) as \(t \rightarrow \infty \), where the error is uniform over \(r \in [0,K]\).

3. For any \(q \in (0,3)\), \(\sup _{t \ge 1} \sup _{r >0} \mathbb {E}^3_r \left[ \frac{t^{q/2}}{X_t^q} \right] \) is finite.

4. For any \(q \in (0,1)\), \(\sup _{t \ge 1} \sup _{K \ge 0} \sup _{r \in [0,K]} \mathbb {E}_r^3 \left[ \left( 1 - \frac{X_t - K}{2t} \right) _+^{-q} \right] \) is finite.

Proof of Lemma 3.5

Points 1–2 are part of [39, Lemma 2.9]. To verify Point 3, notice that \(X_t\) under \(\mathbb {P}^3_0\) is stochastically dominated by \(X_t\) under \(\mathbb {P}^3_r\) for any \(r>0\). By scaling, we deduce that

The density of \(X_1\) under \(\mathbb {P}_0^3\) is explicit (see [34, Proposition 2.5] for instance) and is given by

$$\begin{aligned} y \in (0,\infty ) \mapsto \sqrt{\frac{2}{\pi }}\, y^2 e^{-y^2/2}. \end{aligned}$$

We can therefore directly check that \(\mathbb {E}^3_0 \left[ X_1^{-q} \right] \) is finite as soon as \(q < 3\). This concludes the proof of Point 3. Point 4 follows from a similar direct computation. \(\square \)
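The finiteness criterion \(q<3\) and the constant \(\sqrt{2/\pi }\) of Lemma 3.5, Point 2, can both be read off from the density \(\sqrt{2/\pi }\, y^2 e^{-y^2/2}\) of \(X_1\) under \(\mathbb {P}^3_0\) (the law of the Euclidean norm of a standard Gaussian vector of \({\mathbb {R}}^3\)). A direct computation gives \(\mathbb {E}^3_0[X_1^{-q}] = 2^{1-q/2}\Gamma ((3-q)/2)/\sqrt{\pi }\) for \(q<3\). The following Python sketch, with hypothetical helper names, compares this closed form with a midpoint-rule quadrature:

```python
import math


def chi3_density(y):
    """Density at y of X_1 under P^3_0, i.e. of |N| for N standard Gaussian in R^3."""
    if y <= 0.0:
        return 0.0
    return math.sqrt(2.0 / math.pi) * y * y * math.exp(-y * y / 2.0)


def inverse_moment_closed_form(q):
    """E^3_0[X_1^{-q}] = 2^(1 - q/2) * Gamma((3 - q)/2) / sqrt(pi); infinite for q >= 3."""
    if q >= 3.0:
        return math.inf
    return 2.0 ** (1.0 - q / 2.0) * math.gamma((3.0 - q) / 2.0) / math.sqrt(math.pi)


def inverse_moment_numeric(q, upper=12.0, steps=100_000):
    """Midpoint-rule approximation of int_0^upper y^(-q) * chi3_density(y) dy, for q < 3.
    The Gaussian tail beyond `upper` is negligible; accuracy degrades as q approaches 3."""
    h = upper / steps
    total = 0.0
    for k in range(steps):
        y = (k + 0.5) * h
        total += y ** (-q) * chi3_density(y)
    return total * h


if __name__ == "__main__":
    for q in (0.5, 1.0, 1.5, 2.0, 2.5):
        print(q, inverse_moment_closed_form(q), inverse_moment_numeric(q))
```

By Brownian scaling, \(X_t\) has the law of \(\sqrt{t}X_1\) under \(\mathbb {P}^3_0\), so \(\mathbb {E}^3_0[t^{q/2}X_t^{-q}]\) does not depend on t; for \(q=1\) it equals \(\sqrt{2/\pi }\), consistent with Point 2 at \(r=0\).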

We conclude this section on Bessel processes with estimates that will be used repeatedly in the paper.

Lemma 3.6

There exists a universal constant \(C>0\) such that the following estimates hold true. For all \(K \ge 1, r \in [0,K]\) and \(t \ge 1\),

and

Moreover, for all \(K \ge 1, r \in [0,K]\), \(\gamma \in (1,2)\) and \(t \ge \exp (1/(2-\gamma ))\),

Proof of Lemma 3.6

By (3.13), the left hand side of (3.16) is at most

The expectation with respect to the three-dimensional Bessel process is bounded uniformly in \(r \in [0,K], K>0, t\ge 1\) by Lemma 3.5, Point 4. This concludes the proof of (3.16). Now, by (3.13) and then by the Cauchy–Schwarz inequality, the left hand side of (3.15) is at most

Lemma 3.5, Points 3 and 4, then concludes the proof of (3.15). We now turn to the proof of (3.17). By (3.11), the left hand side of (3.17) is at most

By Hölder’s inequality and an analogue of Lemma 3.5, Point 4, for Brownian motion rather than the 3D Bessel process, we see that the last expectation above is at most

by recalling that \(t \ge \exp (1/(2-\gamma ))\). On the other hand (see [42, Proposition 6.8.1] for instance),

Since

it implies that

Putting things together yields (3.17). This concludes the proof. \(\square \)

3.4 Barrier estimates for 1D Brownian motion

The purpose of this section is to prove the following lemma.

Lemma 3.7

There exists \(C >0\) such that the following claims hold true. For all \(K, H \ge 1\) and all integer \(n \ge 1\),

and

Moreover, for all \(K, H \ge 1\), \(\gamma \in [1,2)\) and all integer \(n \ge (2-\gamma )^{-4}\),

and

We start off with the following intermediate result.

Lemma 3.8

Let \(c >0\). There exists \(C>0\) such that the following estimates hold. For all \(n \ge 1\) and \(K \ge 1\),

Moreover, for all \(\gamma \in [1,2)\), for all \(n \ge (2-\gamma )^{-4}\) and \(K \ge 1\),

Proof

We start by proving (3.22). If \(K > n^{1/4}\), then the result is clear by bounding the probability by one. In the rest of the proof we thus assume that \(K \le n^{1/4}\). Let us denote \(K_n = c \log (1+n) + K\). By the reflection principle,

For all \(x \in [-n^{1/4},K_n]\), we can bound

implying that

Another similar consequence of the reflection principle is that

Therefore

and

By equation (25) of [8], the last right hand side term is at most \(C K^2 / \sqrt{n}\). The second right hand side term being at most

we deduce that

which concludes the proof of (3.22).
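In its simplest form, the reflection principle used in this proof gives the one-sided barrier estimate \({\mathbb {P}}_0(\max _{s \le n} W_s \le K) = {\mathbb {P}}(|W_n| \le K) \le K\sqrt{2/(\pi n)}\) for a standard Brownian motion W, the prototype of the \(n^{-1/2}\) decay in (3.22) (the curved barrier treated there is not covered by this simple computation). The sketch below, an illustration with hypothetical function names, checks the identity by integrating the reflected density \(\varphi _n(y) - \varphi _n(2K-y)\) of \(W_n\) on the event \(\{\max _{s \le n} W_s \le K\}\):

```python
import math


def prob_max_below(K, n):
    """P_0(max_{s <= n} W_s <= K) for standard Brownian motion W and K >= 0.
    Reflection principle: this equals P(|W_n| <= K) = erf(K / sqrt(2 n))."""
    return math.erf(K / math.sqrt(2.0 * n))


def prob_max_below_by_reflection(K, n, steps=100_000):
    """Same probability, integrating the density of W_n on {max <= K},
    phi_n(y) - phi_n(2K - y), over y <= K (midpoint rule, Gaussian tail cut off)."""
    sigma = math.sqrt(n)
    lower = K - 12.0 * sigma
    h = (K - lower) / steps
    total = 0.0
    for i in range(steps):
        y = lower + (i + 0.5) * h
        total += math.exp(-y * y / (2.0 * n)) - math.exp(-((2.0 * K - y) ** 2) / (2.0 * n))
    return total * h / (sigma * math.sqrt(2.0 * math.pi))


def ballot_bound(K, n):
    """K * sqrt(2 / (pi n)): the density of |W_n| never exceeds sqrt(2 / (pi n))."""
    return K * math.sqrt(2.0 / (math.pi * n))


if __name__ == "__main__":
    for K, n in ((1.0, 100.0), (2.0, 50.0), (3.0, 1000.0)):
        print(K, n, prob_max_below(K, n), prob_max_below_by_reflection(K, n), ballot_bound(K, n))
```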

We now turn to the proof of (3.23). Since \(n \ge (2-\gamma )^{-4}\),

Hence

by Girsanov’s theorem. Now, by equation (25) of [8], we conclude that

This finishes the proof of (3.23). \(\square \)

Proof of Lemma 3.7

We start by proving (3.18). By Lemma 3.8, there exists some universal constant \(C_1 >0\) such that for all \(t \ge 1\),

We thus aim to take care of the minima in (3.18). Let \(n \ge 1\) and define

Let \(0 \le t_0 < 1\). Set \(\tau := \inf \{ s >t_0: X_s \ge 3 \log (1+s) + 2K \}\). We are going to decompose the above probability according to the value of \(\tau \). Let \(k \ge 1\). Notice that on the event \(\{ k + t_0 \le \tau < k+1 + t_0, \min _{[k-1+t_0,k+t_0]} X \le 2 \log k + K \}\), we have \(\max _{u,v \in [k-1+t_0,\tau ]} |X_u-X_v| \ge \log (k+1) + K\). If \(k=0\), on the event \(\{t_0 \le \tau < 1+t_0\}\), we simply have \(\max _{u \in [0,\tau ]} |X_u-X_0| \ge K\) when X starts at 0. Hence

By applying the strong Markov property at the stopping time \(\tau \), and by denoting by \({\tilde{X}}\) a Brownian motion independent of \(\tau \), we see that the last probability written above is equal to

Moreover, by (3.24),

We have thus proven that

This recursive relation allows us to conclude the proof of (3.18). We detail the arguments. Define

and assume that K is large enough so that we can define

We clearly have \(p_0 \le 1 \le C_K K^2 / \sqrt{1+0}\). Let \(n \ge 1\) and assume now that for all \(k \le n-1\), \(p_k \le C_K K^2 / \sqrt{k+1}\). By (3.25), we have

This concludes the proof by induction of the fact that \(p_n \le C_K K^2 / \sqrt{n+1}\) for all \(n \ge 1\). Since \(C_K\) does not grow with K, this concludes the proof of (3.18).

We now turn to the proof of (3.19). We are first going to show that

By considering the stopping time

and by following almost the same arguments as above, one can show that the probability in (3.26) is at most

thanks to the estimates on \(p_n\). This shows (3.26). Now, it implies that

If H is larger than \(6 \log (n+1)\), then the probability on the right hand side vanishes and we directly obtain (3.19). Let us now assume that \(H \le 6 \log (n+1)\), denote \(k_0 := \left\lfloor e^{\frac{H}{6}} - 1 \right\rfloor \le n\) and consider the stopping time \(\tau = \inf \{ s>0: X_s > K + H \}\). By the Markov property, the last probability written above is at most

by Lemma 3.8. Now, using the explicit density of \(\tau \) (which is a consequence of the reflection principle), we see that

Hence,

The behaviour of the above sum is given by

By recalling that \(k_0 = \left\lfloor e^{\frac{H}{6}} - 1 \right\rfloor \le n\), we have therefore obtained that

This concludes the proof of (3.19).
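The explicit density of the hitting time used in the proof of (3.19) also comes from the reflection principle. Assuming, for this illustration only, that X is a standard Brownian motion started at 0 and writing \(a = K+H\) for the level, one has \({\mathbb {P}}_0(\tau \le s) = 2{\mathbb {P}}_0(X_s > a) = \mathrm {erfc}(a/\sqrt{2s})\), and differentiating gives the density \(\frac{a}{\sqrt{2\pi s^3}} e^{-a^2/(2s)}\). The sketch below (hypothetical helper names) checks this derivative numerically, together with the tail \({\mathbb {P}}_0(\tau > s) \sim a\sqrt{2/(\pi s)}\) as \(s \rightarrow \infty \):

```python
import math


def hitting_time_cdf(a, s):
    """P_0(tau_a <= s) for tau_a = inf{u > 0 : W_u > a}, a > 0.
    Reflection principle: 2 P(W_s > a) = erfc(a / sqrt(2 s))."""
    return math.erfc(a / math.sqrt(2.0 * s))


def hitting_time_survival(a, s):
    """P_0(tau_a > s) = erf(a / sqrt(2 s)), computed directly to avoid cancellation."""
    return math.erf(a / math.sqrt(2.0 * s))


def hitting_time_density(a, s):
    """Density of tau_a at s > 0: a / sqrt(2 pi s^3) * exp(-a^2 / (2 s))."""
    return a / math.sqrt(2.0 * math.pi * s ** 3) * math.exp(-a * a / (2.0 * s))


if __name__ == "__main__":
    a = 2.0
    for s in (1.0, 10.0, 100.0):
        print(s, hitting_time_density(a, s), hitting_time_survival(a, s))
```

The slow \(s^{-1/2}\) tail of \(\tau \) is what makes the sum over k in the argument above behave like a power of \(k_0\).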

We now turn to the proof of (3.20). This time we define for \(n \ge 1\),

By considering for \(0 \le t_0 < 1\), the stopping time

we can show, using reasoning very similar to the one above, that

Take \(n \ge (2-\gamma )^{-4}\). By (3.23), the first right hand side term above is at most \(CK^2 (2-\gamma )\). Moreover, for all \(k \in [n/2,n]\),

Therefore,

which shows that \(q_n \lesssim K^2 (2-\gamma )\) as soon as K is large enough. This finishes the proof of (3.20). (3.21) follows from (3.20) in the same way that (3.19) follows from (3.18). This concludes the proof. \(\square \)

4 Adding good events: proof of Proposition 2.1 and Lemma 2.2

The purpose of this section is to prove Proposition 2.1 and Lemma 2.2. We start by discussing Proposition 2.1. As mentioned in Section 1.3, it is natural to expect the introduction of the good events \(G_\varepsilon (x)\) to be harmless. Indeed, in analogy with the case of log-correlated Gaussian fields (see [39, Corollary 2.4] for instance), the following should hold true:

which would imply (forgetting about the Bessel bridges) that \( {\mathbb {P}}_{x_0} \left( \bigcap _{x \in D} \bigcap _{\varepsilon >0} G_\varepsilon (x) \right) \rightarrow 1 \) as \(\beta \rightarrow \infty \). We have not been able to prove such a statement, for the following two main reasons.

(1) For a fixed radius \(\varepsilon \), we would like to be able to compare

$$\begin{aligned} \sup _{x \in D} \sqrt{\frac{1}{\varepsilon } L_{x,\varepsilon }(\tau _{x,R})} \quad \mathrm {and} \quad \sup _{x \in \varepsilon {\mathbb {Z}}^2 \cap D} \sqrt{\frac{1}{\varepsilon } L_{x,\varepsilon }(\tau _{x,R})}, \end{aligned}\tag{4.2}$$

the latter supremum being a supremum over a finite number of elements. To do so, we would need to control precisely how the local times vary with respect to the centre of the circle. Obtaining sufficiently precise estimates turns out to be difficult (the estimates of Section C of [26] leading to the continuity of the local time process \((x,\varepsilon ) \mapsto L_{x,\varepsilon }(\tau )\) are too rough). We resolve this problem by first considering local times of annuli rather than circles. Indeed, comparing local times of annuli is much easier since, if an annulus is included in another one, then the local time of the former is not larger than the local time of the latter.

(2) Assuming that we are able to make the comparison (4.2), the next step would be to bound from above

$$\begin{aligned} {\mathbb {P}}_{x_0} \left( \sup _{x \in \varepsilon {\mathbb {Z}}^2 \cap D} \sqrt{\frac{1}{\varepsilon } L_{x,\varepsilon }(\tau _{x,R})} \ge 2 \log \frac{1}{\varepsilon } \right) . \end{aligned}$$

If the bound is good enough, the Borel–Cantelli lemma would allow us to conclude the proof of (4.1), at least along dyadic radii \(\varepsilon \). Estimating this probability accurately is again challenging (a union bound is not good enough, for instance). In the case of log-correlated Gaussian fields, the estimation of such probabilities relies heavily on the Gaussianity of the process. For instance, in [18], Kahane’s convexity inequalities allow the authors to import computations from cascades (Theorem 1.6 of [25]). We resolve this problem by asking the local times to stay below \(2 \log \frac{1}{\varepsilon } + 2 \log \log \frac{1}{\varepsilon }\) instead of \(2\log \frac{1}{\varepsilon }\). Indeed, we can then carry out very naive computations, using union bounds for instance. Importantly, this restriction is enough to make the variables that we consider bounded in \(L^1\). We can then make \(L^1\) computations and use repulsion estimates to get rid of the extra \(2 \log \log \frac{1}{\varepsilon }\) term.

4.1 Supremum of local times of annuli

Lemma 4.1

For \(x \in D\) and \(\varepsilon >0\), let

be the amount of time the Brownian trajectory has spent in the annulus \(D(x,e \varepsilon ) \backslash D(x,\varepsilon )\) before hitting \(\partial D(x,R)\). Then,

Proof of Lemma 4.1

For \(x \in D\) and \(\varepsilon >0\), define

and notice that if \(|x-y| \le \varepsilon /|\log \varepsilon |\), then \(\ell _{x,\varepsilon }(\tau _{x,R}) \le \ell _{y,\varepsilon }\) \({\mathbb {P}}_{x_0}\)-a.s. Hence

\({\mathbb {P}}_{x_0}\)-a.s. By the Borel–Cantelli lemma, to conclude the proof it is now enough to show that

After a union bound, we want to estimate

for a given \(\varepsilon \in \{e^{-n}, n \ge 1\}\) and \(x \in \frac{\varepsilon }{|\log \varepsilon |} {\mathbb {Z}}^2 \cap D\) such that \(|x-x_0| \ge e \varepsilon + \varepsilon /|\log \varepsilon |\). Let \(z \in \partial D(x,e\varepsilon + \varepsilon /|\log \varepsilon |)\). By (1.6), starting from z and conditioned on

where \(X_s\) is a zero-dimensional Bessel process starting at \(\sqrt{\ell }\). By bounding

(if \(\varepsilon \) is small enough) and

we deduce that

Since \((X_s, s \ge 0)\) under \(\mathbb {P}_{\sqrt{\ell }}^0\) is stochastically dominated by \((X_s, s \ge 0)\) under \(\mathbb {P}_{\sqrt{\ell }}\) (the zero-dimensional Bessel process has a negative drift), we obtain that

We used the reflection principle in the last inequality. Recalling that, under \({\mathbb {P}}_z\), \(\ell \) is an exponential variable with mean equal to \(2 |\log \varepsilon | + O(1)\) [see (3.1)], we see that

Moreover, by denoting \(A := \frac{2 |\log \varepsilon | + 2 \log |\log \varepsilon | - 3}{\sqrt{{\mathbb {E}}_{z} \left[ \ell \right] }}\) and \(\lambda = \frac{{\mathbb {E}}_{z} \left[ \ell \right] }{2(1+3/|\log \varepsilon |)}\), we have

Wrapping things up, we have proven that

and summing over \(x \in \frac{\varepsilon }{|\log \varepsilon |} {\mathbb {Z}}^2 \cap D\), \(|x-x_0| \ge e \varepsilon + \varepsilon /|\log \varepsilon |\),

This is summable over \(\varepsilon \in \{ e^{-n}, n \ge 1 \}\) as required. It concludes the proof. \(\square \)

4.2 First layer of good events: proof of Proposition 2.1

We now have all the ingredients to prove Proposition 2.1. During the course of the proof, we will obtain intermediate results that we gather in the following lemma. Recall the definition (2.10) of \(\varepsilon _\gamma \).

Lemma 4.2

Firstly,

Secondly, we have for \(\beta >0\) fixed,

and

Proof of Proposition 2.1 and Lemma 4.2

Let \(\beta '>0\) be large. In light of Lemma 4.1 we introduce for all \(\varepsilon = e^{-k} >0\) and \(x \in D\) at distance at least \(e \varepsilon \) from \(x_0\), the good event

and set

Lemma 4.1 asserts that \({\mathbb {P}}_{x_0} \left( H \right) \rightarrow 1\) as \(\beta ' \rightarrow \infty \).

Seneta–Heyde norming. We are first going to show that, for a fixed \(\beta ' >0\),

First of all, if \(|x-x_0| < 1/|\log \varepsilon |\), then we simply bound

by (3.2). Take now \(x \in D\) at distance at least \(1/|\log \varepsilon |\) from \(x_0\). We again bound \(L_{x,\varepsilon }(\tau )\) by \(L_{x,\varepsilon }(\tau _{x,R})\) to be able to use the link (1.6) between local times and zero-dimensional Bessel process:

Denote \(r_x := \sqrt{e^{k_x} L_{x,e^{-k_x}}(\tau _{x,R})}\). (1.6) tells us that, conditionally on \(r_x\), the process

is a zero-dimensional Bessel process starting at \(r_x\). The event \(H_\varepsilon (x)\) requires

for all \(s= 1 \dots k-k_x\). Hence

Now, with (3.10), we have

We now bound

By (3.18), the first right hand side term is at most \(C (k_x)^2 k^{-1/2}\). The second right hand side term decays much faster and we have obtained

where we have used (3.2) in the last inequality (or more precisely, the stochastic domination stated in Lemma 3.2 in order to also handle \(\sqrt{r_x}\)). To wrap things up, we have proven that

which concludes the proof of (4.7). Very few arguments need to be changed in order to show (4.4). The only difference is that, compared to the event \(H_\varepsilon (x)\), the event \(G_\varepsilon (x)\) ensures (in particular) that the Bessel process X stays below \(s \mapsto 2s + \beta + 2k_x \) at every integer s. Since this is more restrictive than asking \(\min _{[s,s+1]} X\) to be not larger than \(2s + 2 \log s + \beta + 4k_x\), we can conclude using the reasoning above.

We now turn to the proof of (2.4). Fix \(\beta ' >0\). We are going to show that

goes to zero as \(\beta \rightarrow \infty \). Let \(\eta _0 >0\) be small. By (4.7),

Fix now \(\eta _0>0\). In what follows, the constants underlying the bounds may depend on \(\eta _0\). Recall the definition of \(h_{x,\delta }\) constructed in Lemma 2.1. By reasoning very similar to the above and using (3.19), one can show that

goes to zero as \(\beta \rightarrow \infty \). We are thus left to control

for \(x \in D\) at distance at least \(\eta _0\) from \(x_0\). Denote \(r_x = \sqrt{ e^{k_x} L_{x,e^{-k_x}}(\tau _{x,R})}\). By (1.6) and then by (3.11), this is equal to

by (3.19). This concludes the proof of (4.8). We now have for any small \(\rho >0\),

By letting \(\beta \rightarrow \infty \) and then \(\beta ' \rightarrow \infty \), we see that

as desired in (2.4).

To show (4.3), take \(r>0\) small enough so that \(\{x \in D: D(x,r) \subset D\}\) has positive Lebesgue measure and notice that

where \(h^r\) is defined in the same way as h except that we consider local times up to time \(\tau _{x,r}\) rather than \(\tau _{x,R}\). Using (1.6), we see that (4.3) is a direct consequence of (3.14) and Fatou’s lemma.

Subcritical measures. We have finished the part of the proof concerning the Seneta–Heyde normalisation and we now turn to the justification of (2.6) and (4.6). This is very similar to what we have just done. The only difference is that, after using the link (1.6) between local times and zero-dimensional Bessel process and the relation (3.10) to transfer computations to 1D Brownian motion, we have

We conclude as before by using (3.20) and (3.21) (note here that \(k -k_x \ge (2-\gamma )^{-4}\) since \(\varepsilon _\gamma \) has been chosen small enough) instead of (3.18) and (3.19).

Derivative martingale. We finish with the justification of (2.5) and (4.5). Recall that in the modified measure \({\hat{\mu }}_\varepsilon \), the Brownian motion is stopped either at time \(\tau \) or at time \(\tau _{x,R}\) depending on whether the local time \(L_{x,\varepsilon }\) is in the exponential or not. Part of (2.5) amounts to saying that, in the limit, this modification does not change the measure with high probability. We thus start by proving that

Let \(x \in D\). By applying the strong Markov property at the first exit time \(\tau \) of D, the integrand in (4.9) is at most equal to

We decompose this expectation into two parts, the first one integrating on the event that \(\sqrt{\frac{1}{\varepsilon }L_{x,\varepsilon }(\tau )} < |\log \varepsilon |/2\) and the second one integrating on the complementary event. The first part decays quickly to zero and we explain how to deal with the second part. Recall that, starting from any point of \(\partial D(x,\varepsilon )\), \(L_{x,\varepsilon }(\tau _{x,R})\) is a random variable with mean \(2 \varepsilon \log (R/\varepsilon )\) (see Lemma 3.1). By the Cauchy–Schwarz inequality, then by bounding \(\sqrt{a+b} - \sqrt{a} \le C b/\sqrt{a}\) for \(a>2b>1\), and using (3.3), we thus obtain that on the event that \(\sqrt{\frac{1}{\varepsilon }L_{x,\varepsilon }(\tau )} \ge |\log \varepsilon |/2\),

The integrand in (4.9) is therefore at most

by (3.2) and (3.3). This concludes the proof of (4.9).

Now, let \(\beta >0\). For any small \(\rho >0\) and large \(\beta '>0\), we have

(4.9) and (4.7) tell us that the second and third right hand side terms, respectively, vanish. When \(\beta '>0\) and \(\rho >0\) are fixed, one can show, using a method very similar to the one used for the Seneta–Heyde normalisation, that the last right hand side term goes to zero as \(\beta \rightarrow \infty \). Hence

The left hand side term is independent of \(\beta '\) whereas the right hand side term goes to zero as \(\beta ' \rightarrow \infty \). Therefore, for any small \(\rho >0\),

as desired in (2.5). The proof of (4.5) is very similar to that of (4.4); we omit the details. This concludes the proof.

4.3 Second layer of good events: proof of Lemma 2.2

Proof of Lemma 2.2

We start by proving (2.8). Let \(\eta _0 >0\). By Lemma 4.2, it is enough to show that

goes to zero as \(\varepsilon \rightarrow 0\) and then \(M \rightarrow \infty \). The constants underlying the following estimates may depend on \(\eta _0\). We start off by bounding \(L_{x,\varepsilon }(\tau )\) by \(L_{x,\varepsilon }(\tau _{x,R})\) in the exponential above. By letting \(t = k - k_x\), \(\beta _x = \beta + 2k_x\) and \(r = \sqrt{e^{k_x} L_{x,e^{-k_x}}(\tau _{x,R})}\) and by using (1.6), we are left to estimate

By (3.13) and then by Cauchy–Schwarz inequality, this is at most

which goes to zero as \(M \rightarrow \infty \) uniformly in t by Lemma 3.5, Points 1 and 4. We have thus proven that the contribution of points at distance at least \(\eta _0\) from \(x_0\) to the integral (4.10) goes to zero as \(\varepsilon \rightarrow 0\) and then \(M \rightarrow \infty \). This concludes the proof of (2.8).

The proof of (2.7) is very similar: the presence of an extra \(\sqrt{|\log \varepsilon |}\) in the normalisation, as well as the absence of the derivative term \((-X_t + 2t + \beta )\), makes an extra multiplicative term \(\sqrt{t}/X_t\) appear in the expectation with respect to the 3D Bessel process. We conclude as before using the Cauchy–Schwarz inequality and Lemma 3.5, Point 3.
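In the simplest case of a 3D Bessel process started at 0 (an assumption made only for this illustration; the statements actually used are those of Lemma 3.5), the extra factor \(\sqrt{t}/X_t\) is harmless for a transparent reason: \(X_t\) has the law of \(\sqrt{t}\,|G|\) with G a standard three-dimensional Gaussian vector, so \(\mathbb {E}[\sqrt{t}/X_t] = \sqrt{2/\pi }\) and \(\mathbb {E}[t/X_t^2] = 1\) for every \(t>0\). By Anderson's inequality, starting from any point \(x \ge 0\) can only decrease these expectations. The Python sketch below checks the first identity by Monte Carlo; the helper names are hypothetical.

```python
import math
import random


def bessel3_from_zero(t, rng):
    """Sample X_t for a 3D Bessel process started at 0.

    Such a process is the Euclidean norm of a 3D Brownian motion,
    so X_t has the law of sqrt(t) * |G| with G a standard 3D Gaussian.
    """
    g = [rng.gauss(0.0, 1.0) for _ in range(3)]
    return math.sqrt(t) * math.sqrt(sum(v * v for v in g))


def mc_sqrt_t_over_x(t, n_samples, seed=0):
    """Monte Carlo estimate of E[sqrt(t) / X_t] (hypothetical helper name)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += math.sqrt(t) / bessel3_from_zero(t, rng)
    return total / n_samples


EXACT = math.sqrt(2.0 / math.pi)  # does not depend on t (Brownian scaling)

for t in (1.0, 100.0, 10000.0):
    # The estimate stays near sqrt(2/pi) ~ 0.798 for every t.
    print(t, round(mc_sqrt_t_over_x(t, 20000), 3), round(EXACT, 3))
```

The ratio \(\sqrt{t}/X_t\) is scale invariant, which is why its expectation does not grow with t.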

We finish with the proof of (2.9). With the same notation as above, it is again enough to estimate

By (3.11), this is at most

where we obtained the above estimate by decomposing the expectation according to whether \(X_t \le -\gamma t/2\) or not. By Girsanov’s theorem and then by Lemma A, the above probability with respect to the one-dimensional Brownian motion is equal to

By decomposing the above expectation according to whether \(X_t \ge (2-\gamma ) t / 4\) or not, we see that it is at most, up to a multiplicative constant,

Now, by Lemma 3.5, Point 1, and because \(X_t\) under \(\mathbb {P}_{\beta _x-r}^3 \left( \cdot \left| \exists s \le t, X_s \le \frac{\sqrt{s}}{M \log (2+s)^2} \right. \right) \) is stochastically dominated by \(X_t\) under \(\mathbb {P}^3_{\beta _x-r}\), we see that the probability in (4.11) is at most, up to a multiplicative constant,

By a procedure similar to the one above, we can reintroduce \(\frac{\beta _x-r}{X_t}\) in the expectation above in place of \(\frac{\beta _x-r}{(2-\gamma ) t}\) and reverse the computations, using Lemma A and then Girsanov’s theorem, to obtain that

by (3.20). Wrapping things up, we have obtained that the probability in (4.11) is at most

as desired. This concludes the proof. \(\square \)

5 \(L^2\)-estimates

5.1 Uniform integrability: proof of Proposition 2.2

This section is devoted to the proof of Proposition 2.2. We first state the following result for ease of reference.

Lemma 5.1

Let I be a finite set of indices, \((r_i, i \in I) \in [0,\infty )^I\) and let \((X^{(i)}, i \in I) \sim \otimes _{i \in I} \mathbb {P}^0_{r_i}\) be independent zero-dimensional Bessel processes starting at \(r_i\). Define the process \((X_s, s \ge 0)\) as follows: for all \(n \ge 0\), let \(X_n = \sqrt{ \sum _{i \in I} (X_n^{(i)})^2 }\) and conditionally on \((X_n^{(i)}, n \ge 1, i \in I)\), let \((X_s, s \in (n,n+1)), n \ge 0,\) be independent zero-dimensional Bessel bridges between \(X_n\) and \(X_{n+1}\). Then \(X \sim \mathbb {P}^0_r\) with \(r = \sqrt{\sum _{i \in I} r_i^2}\).

Proof

This is a direct consequence of the fact that the sum of independent zero-dimensional squared Bessel processes is again distributed as a zero-dimensional squared Bessel process. \(\square \)
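The additivity invoked in this proof can be checked on one-dimensional marginals. For a zero-dimensional squared Bessel process Z started at x, the standard Laplace transform is \(\mathbb {E}[e^{-\lambda Z_t}] = \exp (-\lambda x/(1+2\lambda t))\), which is multiplicative in x; equivalently, \(Z_t\) can be sampled exactly as \(2t\) times a Gamma variable whose shape is Poisson with mean \(x/(2t)\) (and \(Z_t=0\) when the shape is 0). The Python sketch below, with hypothetical helper names and covering only fixed-time marginals rather than whole paths, compares the empirical Laplace transform of the sum of two independent samples with the closed form for the summed starting point.

```python
import math
import random


def besq0_laplace(lam, x, t):
    """E[exp(-lam * Z_t)] for a zero-dimensional squared Bessel process from x."""
    return math.exp(-lam * x / (1.0 + 2.0 * lam * t))


def poisson(mean, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    limit = math.exp(-mean)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def sample_besq0(x, t, rng):
    """Exact sample of Z_t: 2t * Gamma(N, 1) with N ~ Poisson(x / (2t)), 0 if N = 0."""
    n = poisson(x / (2.0 * t), rng)
    return 0.0 if n == 0 else 2.0 * t * rng.gammavariate(n, 1.0)


x1, x2, t, lam = 0.7, 1.3, 1.0, 1.0

# Closed form: the Laplace transforms multiply, matching one process started at x1 + x2.
product = besq0_laplace(lam, x1, t) * besq0_laplace(lam, x2, t)
combined = besq0_laplace(lam, x1 + x2, t)

# Monte Carlo: sum of two independent exact samples.
rng = random.Random(0)
n = 20000
empirical = sum(
    math.exp(-lam * (sample_besq0(x1, t, rng) + sample_besq0(x2, t, rng)))
    for _ in range(n)
) / n
print(product, combined, empirical)
```

Taking the square root of the summed process gives the zero-dimensional Bessel process started at \(\sqrt{x_1+x_2}\), which is the content of Lemma 5.1 at fixed integer times.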

Proof of Proposition 2.2

The constants underlying this proof may depend on \(\beta \) and M. We start by proving (2.12). We will then see that very few arguments need to be modified to obtain (2.11) and (2.13). Let \(\varepsilon '\) be the unique real number in \(\{e^{-n}, n \ge 1\}\) such that

We are first going to control the contribution of points \(x, y \in D\) at distance at least 1/M from \(x_0\) such that \(|x-y| \le \varepsilon '\). Let x and y be such points. On \(G_\varepsilon '(y)\),

We thus have

using (3.2) in the last inequality. This shows that

We now focus on the remaining contribution. Let \(x,y \in D\) at distance at least 1/M from \(x_0\) be such that \(|x-y| \ge \varepsilon '\). Without loss of generality, assume that the diameter of D is at most 1, so that we can define \(\alpha = e^{-k_\alpha }, \eta = e^{-k_\eta } \in \{ e^{-n} , n \ge 1 + \left\lfloor \log M \right\rfloor \}\) to be the unique real numbers satisfying

Notice that \(D(x,\alpha ) \cap D(y,\alpha ) = \varnothing \) (as soon as M is at least 2/e), that \(\eta \ge \varepsilon \) because \(|x-y| \ge \varepsilon '\), that \(k_\eta \ge 1 + \log M \ge k_x\) and that \(\eta < \alpha /e\). Define

Importantly, the event \(G_{\eta , \varepsilon }(x)\) is contained in \(G_\varepsilon (x)\) and depends only on what happens inside the disc \(D(x,\alpha /e)\). We similarly define \(G_{\eta ,\varepsilon }(y)\). We can bound  by


In broad terms, our strategy now is to condition on \(L_{x,\eta }(\tau _{x,R})\) and \(L_{y,\eta }(\tau _{y,R})\) and integrate everything else. Let \(N_x\) be the number of excursions from \(\partial D(x,\alpha /e)\) to \(\partial D(x,\alpha )\) before hitting \(\partial D(x,R)\). For \(i=1 \dots N_x\) and \(\delta \le \alpha /e\), let \(L_{x,\delta }^i\) be the local time of \(\partial D(x,\delta )\) accumulated during the i-th excursion. We also write \(r_{x,\eta }^i := \sqrt{\frac{1}{\eta } L_{x,\eta }^i}\) and \(r_{x,\eta } := \sqrt{\frac{1}{\eta } L_{x,\eta }(\tau _{x,R})}\). Let \(I_x\) be the subset of \(\{1, \dots , N_x\}\) corresponding to the above excursions that hit \(\partial D(x,\eta )\). Define similar notation with x replaced by y, and let \({\mathcal {F}}_{x,y}\) be the sigma algebra generated by \(N_x, N_y, I_x, I_y\) and the successive initial and final positions of the above-mentioned excursions (around both x and y).
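Since x is at distance at least 1/M from \(x_0\) and \(\alpha \le 1/M\), the trajectory can only accumulate local time on \(\partial D(x,\eta )\) during the above excursions, and the excursions outside \(I_x\) contribute nothing. Under this reading of the excursion decomposition, the bookkeeping below spells out why the radii combine in the form \(r = \sqrt{\sum _i r_i^2}\) of Lemma 5.1; the same identity holds with x replaced by y.

```latex
r_{x,\eta}^2 \;=\; \frac{1}{\eta} L_{x,\eta}(\tau_{x,R})
\;=\; \frac{1}{\eta} \sum_{i=1}^{N_x} L_{x,\eta}^i
\;=\; \sum_{i \in I_x} \big( r_{x,\eta}^i \big)^2 ,
\qquad \text{since } L_{x,\eta}^i = 0 \text{ for } i \notin I_x .
```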

Conditionally on the initial and final positions of the above excursions,

are independent. Moreover, for all \(i=1 \dots N_x\), conditioned on \(\{ i \in I_x \}\), \(\left( L_{x,e^{-n}}^i, n \ge k_\alpha + 1 \right) \) is close to being independent of the initial and final positions of the given excursion: this is the content of the continuity Lemma 3.3. The Bessel bridges that we use to interpolate the local times between dyadic radii smaller than \(\alpha \) around x and y do not create any further dependence since \(D(x,\alpha ) \cap D(y,\alpha ) = \varnothing \). Hence, recalling (1.6) and Lemma 5.1, we see that by paying a multiplicative price \(\left( 1 + p \left( \frac{\eta }{\alpha } \right) \right) ^{|I_x|+|I_y|}\) and conditionally on \({\mathcal {F}}_{x,y}\), we can approximate the joint law of \((h_{x,\eta e^{-s}}, s \ge 0)\) and \((h_{y,\eta e^{-s}}, s \ge 0)\) by \(\mathbb {P}^0_{r_{x,\eta }} \otimes \mathbb {P}^0_{r_{y,\eta }}\). Letting \(t = \log \frac{\eta }{\varepsilon } = k-k_\eta \) and \(\beta ' := \beta + 2k_\eta \), we deduce that

Now, by (3.16),

We have a similar estimate for the expectation around the point y and we further bound

To wrap things up, we have proven that

By the continuity Lemma 3.3 and recalling (5.2), there exists \(c_* >0\) such that

If we take N to be equal to \(\exp \left( c_* \left( \log |\log |x-y|| \right) ^2 /2 \right) \), we thus have

and (5.5) together with (3.2) yield

We now explain how to bound  . The number \(|I_x|\) is at most the number of excursions from \(\partial D(x,\alpha /e)\) to \(\partial D(x,\eta )\) before hitting \(\partial D(x,R)\), and the probability for a Brownian trajectory starting at \(\partial D(x,\alpha /e)\) to hit \(\partial D(x,\eta )\) before hitting \(\partial D(x,R)\) is given by \(\frac{\log (eR/\alpha )}{\log (R/\eta )}\).


By the strong Markov property, we then obtain that for all \(M>0\),

Using (5.5), the Cauchy–Schwarz inequality and (3.2), we deduce that

This concludes the proof of (2.12).
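The mechanism behind the control of \(|I_x|\) can be illustrated numerically. Since \(\log |z-x|\) is harmonic away from x, a planar Brownian motion started on \(\partial D(x,r_0)\) hits \(\partial D(x,\eta )\) before \(\partial D(x,R)\) with probability \(q = \log (R/r_0)/\log (R/\eta )\), here with \(r_0 = \alpha /e\). Each return to \(\partial D(x,\alpha /e)\) gives a fresh trial by the strong Markov property, so a count K with \(\mathbb {P}(K \ge n) \le q^n\) satisfies \(\mathbb {E}[m^K] \le (1-q)/(1-mq)\) whenever \(m \ge 1\) and \(mq<1\). The Python sketch below evaluates these two formulas; the radii and helper names are hypothetical, and the precise bound displayed in the proof is not reproduced here.

```python
import math


def hit_prob(r0, r_in, r_out):
    """P(planar BM from |z| = r0 hits |z| = r_in before |z| = r_out).

    log|z| is harmonic in the punctured plane, hence the ratio of logarithms.
    """
    if not 0.0 < r_in < r0 < r_out:
        raise ValueError("need 0 < r_in < r0 < r_out")
    return math.log(r_out / r0) / math.log(r_out / r_in)


def geometric_mgf(q, m):
    """E[m**K] for P(K = n) = (1 - q) * q**n, finite iff m * q < 1."""
    if m * q >= 1.0:
        return math.inf
    return (1.0 - q) / (1.0 - m * q)


def geometric_mgf_series(q, m, n_terms=500):
    """Same quantity by direct summation, as a cross-check (requires m * q < 1)."""
    return sum((1.0 - q) * (q * m) ** n for n in range(n_terms))


# Hypothetical radii: alpha = e^-5 (so alpha/e = e^-6), eta = e^-12, R = e^2.
q = hit_prob(math.exp(-6), math.exp(-12), math.exp(2))
print(q)  # log-ratio 8/14
print(geometric_mgf(q, 1.5), geometric_mgf_series(q, 1.5))  # both approximately 3.0
```

The exponential moment blows up once \(mq \ge 1\), which is why the multiplicative price per excursion must be close to 1 for such a moment to remain finite.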

Let  be any subsequential limit of  . The claim about the non-atomicity of  follows from the following energy estimate, which is a consequence of what we did before:

For the proof of (2.11), resp. (2.13), we proceed in exactly the same way as before. The only difference is that, instead of (5.4), we need to bound from above

resp.

This is done in (3.15), resp. (3.17), and we conclude the proof of (2.11), resp. (2.13), along the same lines as above. \(\square \)

5.2 Cauchy sequence in \(L^2\): proof of Proposition 2.3

This section is devoted to the proof of Proposition 2.3.

Proof of Proposition 2.3

Let A be a Borel set of \({\mathbb {R}}^2\). Let \(\eta = e^{-k_\eta } \in \{e^{-n}, n \ge 1\}\) be small and consider

If \((x,y) \in (A \times A)_\eta \), the two sequences of circles \((\partial D(x,e^{-n}), n \ge 1)\) and \((\partial D(y,e^{-n}), n \ge 1)\) do not interact with each other inside \(D(x,\eta )\) and \(D(y,\eta )\). We can write

Thanks to (2.12) and because the Lebesgue measure of \((A \times A) \backslash (A \times A)_\eta \) goes to zero as \(\eta \rightarrow 0\), we know that the first right hand side term goes to zero as \(\eta \rightarrow 0\). We are going to show that, for a fixed \(\eta \), the second right hand side term vanishes. (2.12) provides the upper bound required to apply the dominated convergence theorem, and we are left to show the pointwise convergence

for a fixed \((x,y) \in (A \times A)_\eta \). Let \(\eta ' = e^{-k_{\eta '}} \in \{e^{-n}, n \ge 0\}\) be much smaller than \(\eta \). Let \(N_y\) (resp. \(N_y'\)) be the number of excursions from \(\partial D(y, \eta /e)\) to \(\partial D(y,\eta )\) before hitting \(\partial D\) (resp. before hitting \(\partial D(y,R)\)). For \(i=1 \dots N_y'\) and \(\delta \le \eta /e\), we denote by \(L_{y,\delta }^i\) the local time of \(\partial D(y,\delta )\) accumulated during the i-th such excursion. Denote by I (resp. \(I'\)) the subset of \(\{1, \dots , N_y\}\) (resp. \(\{1, \dots , N_y'\}\)) corresponding to the excursions that visited \(\partial D(y,\eta ')\). First of all, one can show that there exists \(N \ge 1\) depending on \(\eta \) such that

This is a direct consequence of the bound (5.6). Let \({\mathcal {F}}_{x,y}\) be the sigma-algebra generated by \((L_{x,e^{-n}}(\tau ), L_{x,e^{-n}}(\tau _{x,R}), n \ge 0)\), \((L_{y,e^{-n}}(\tau ), L_{y,e^{-n}}(\tau _{y,R}), n = 0 \dots k_\eta -1)\), \(N_y, N_y', I, I'\), \((L_{y, e^{-n}}^i, i \notin I', n = k_\eta \dots k_{\eta '})\), as well as the starting and exit points of the excursions from \(\partial D(y, \eta /e)\) to \(\partial D(y,\eta )\) before hitting \(\partial D(y,R)\). Define \(r_{y,\eta /e}\) and \(r_{y,\eta /e}'\) by \((r_{y,\eta /e})^2 = (e/\eta ) L_{y,\eta /e}(\tau )\) and \((r_{y,\eta /e}')^2 = (e/\eta ) \left( L_{y,\eta /e}(\tau _{y,R}) - L_{y,\eta /e}(\tau ) \right) \), and let \(t = \log (\eta /(e\varepsilon ))\), \(\beta ' = \beta - 2\log (\eta /e)\), \(t_0 = \log (e/\eta )\), \(t_1 = \log (e \eta '/\eta )\). With reasoning similar to that in the proof of Proposition 2.2, Lemma 3.3, (1.6) and Lemma 5.1 imply that  is equal to


where

Now, by (3.12), we have

which converges as \(\varepsilon \rightarrow 0\) (and hence \(t \rightarrow \infty \)) towards

This shows that

is at most zero. The only quantity depending on \(\eta '\) in the above expression is \(p(\eta '/\eta )\) which goes to zero as \(\eta ' \rightarrow 0\). By letting \(\eta ' \rightarrow 0\), we thus obtain

This concludes the proof of the fact that  is Cauchy in \(L^2\).

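The passage from second moments to \(L^2\) statements rests on two elementary expansions, written here for generic square-integrable random variables \(Y_\varepsilon \), \(Z_\varepsilon \) and Y (hypothetical shorthands, for instance for the masses that two families of approximating measures give to A). If \(\mathbb {E}[Y_\varepsilon Y_{\varepsilon '}]\) converges to a common limit \(\ell \) as \(\varepsilon , \varepsilon ' \rightarrow 0\), the first line shows that \((Y_\varepsilon )\) is Cauchy in \(L^2\). If \(Y_\varepsilon \rightarrow Y\) in \(L^2\), the second line shows that \(Z_\varepsilon \rightarrow Y\) in \(L^2\) as soon as a combination of moments taken at the same \(\varepsilon \) tends to zero, which is consistent with the remark that no mixed moments with \(\varepsilon ' \ne \varepsilon \) are needed to identify the limit.

```latex
\begin{aligned}
\mathbb{E}\big[(Y_\varepsilon - Y_{\varepsilon'})^2\big]
 &= \mathbb{E}[Y_\varepsilon^2] - 2\,\mathbb{E}[Y_\varepsilon Y_{\varepsilon'}] + \mathbb{E}[Y_{\varepsilon'}^2]
 \longrightarrow \ell - 2\ell + \ell = 0, \\
\mathbb{E}\big[(Z_\varepsilon - Y)^2\big]
 &\le 2\big(\mathbb{E}[Z_\varepsilon^2] - 2\,\mathbb{E}[Z_\varepsilon Y_\varepsilon] + \mathbb{E}[Y_\varepsilon^2]\big)
 + 2\,\mathbb{E}\big[(Y_\varepsilon - Y)^2\big].
\end{aligned}
```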

We move on to the proof of the convergence of  together with the identification of the limit with  . Since we already know that  converges in \(L^2\) towards  , it is enough to show that

In particular, we do not need to consider “mixed moments” with \(\varepsilon ' \ne \varepsilon \). As before, we bound

As before, we only need to deal with the last two right hand side terms and, thanks to (2.11) and (2.12), we only need to show the following two pointwise convergences:

where \((x,y) \in (A \times A)_\eta \) is fixed. In both cases, we employ the same technique as before by decomposing the Brownian trajectory according to what happens close to the point y and (5.9) follows from the fact that

converges to the same limit as

Let us justify this last claim. After using (3.12), we see that we only need to show that

Take \(t_2>t_1 -t_0\) large. We can bound

The difference between the expectation on the left hand side of (5.10) and

is thus at most

Let \(q_1 \in (1,3), q_2 \in (1,2)\) and \(q_3 > 1\) be such that \(1/q_1 + 1/q_2 + 1/q_3 = 1\). By Hölder’s inequality, we can bound the above expression by

The first two expectations are bounded by a universal constant by Lemma 3.5, Points 3 and 4. The last term, containing the two probabilities, goes to zero as \(t_2 \rightarrow \infty \). Similarly, we can replace

and

We have shown that the left hand side term of (5.10) is equal to \(o_{t_2 \rightarrow \infty }(1)\) plus

By conditioning up to time \(t_2\) and then using Lemma 3.5, Point 2, we see that the above expectation converges as \(t \rightarrow \infty \) to

With a similar reasoning as above, one can show that the expectation on the right hand side of (5.10) converges as \(t \rightarrow \infty \) to \(o_{t_2 \rightarrow \infty }(1)\) plus

We have thus shown that the left and right hand sides of (5.10) differ by at most some \(o_{t_2 \rightarrow \infty }(1)\). Since they do not depend on \(t_2\), we obtain the claim (5.10) by letting \(t_2 \rightarrow \infty \). This concludes the proof of the fact that  converges in \(L^2\) towards  .
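The Hölder step in the proof of (5.10) needs three exponents with \(1/q_1+1/q_2+1/q_3=1\), \(q_1 \in (1,3)\), \(q_2 \in (1,2)\) and \(q_3>1\). Such a \(q_3\) exists only if \(1/q_1+1/q_2<1\), which rules out some pairs (for instance \(q_1=q_2=3/2\)). When the third factor is the indicator of an event E, its \(L^{q_3}\)-norm equals \(\mathbb {P}(E)^{1/q_3}\), which is how probabilities can appear as factors in such a bound. The Python sketch below, with hypothetical helper names and sample data, computes \(q_3\) and checks the three-factor Hölder inequality for the empirical measure of random samples.

```python
import random


def third_exponent(q1, q2):
    """Return q3 such that 1/q1 + 1/q2 + 1/q3 = 1 (requires 1/q1 + 1/q2 < 1)."""
    s = 1.0 / q1 + 1.0 / q2
    if s >= 1.0:
        raise ValueError("no admissible q3: need 1/q1 + 1/q2 < 1")
    return 1.0 / (1.0 - s)


def lq_norm(values, q):
    """(E|V|^q)^(1/q) under the uniform probability on `values`."""
    return (sum(abs(v) ** q for v in values) / len(values)) ** (1.0 / q)


def holder_slack(f, g, h, q1, q2, q3):
    """||f||_q1 ||g||_q2 ||h||_q3 - E|f g h|; Hölder says this is >= 0."""
    lhs = sum(abs(a * b * c) for a, b, c in zip(f, g, h)) / len(f)
    return lq_norm(f, q1) * lq_norm(g, q2) * lq_norm(h, q3) - lhs


q1, q2 = 2.9, 1.9
q3 = third_exponent(q1, q2)  # roughly 7.76

rng = random.Random(0)
n = 10000
f = [rng.expovariate(1.0) for _ in range(n)]
g = [rng.gauss(0.0, 1.0) for _ in range(n)]
h = [1.0 if rng.random() < 0.05 else 0.0 for _ in range(n)]  # indicator of a rare event
print(q3, holder_slack(f, g, h, q1, q2, q3))
```

Since the empirical measure is a genuine probability measure, the slack is nonnegative up to rounding for any data.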

The fact that for all \(\gamma \in (1,2)\),  is a Cauchy sequence in \(L^2\) follows along lines that are very similar to the proof of the fact that