Abstract

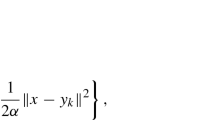

In this paper, we focus on the problem of minimizing the sum of nonconvex smooth component functions and a nonsmooth weakly convex function composed with a linear operator. One specific application is logistic regression with weakly convex regularizers, which promote sparsity more effectively than standard convex regularizers. By combining the Moreau envelope, with a decreasing sequence of smoothing parameters, and the incremental aggregated gradient method, we propose a variable smoothing incremental aggregated gradient (VS-IAG) algorithm. We also prove that VS-IAG finds an \(\epsilon \)-approximate solution with complexity \({\mathcal {O}}(\epsilon ^{-3})\).
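To make the two ingredients concrete: for a \(\rho\)-weakly convex \(g\) and \(0<\mu<1/\rho\), the Moreau envelope \(\operatorname{env}_{\mu} g(y)=\min_z \{ g(z)+\tfrac{1}{2\mu}\|z-y\|^2 \}\) is continuously differentiable with \(\nabla \operatorname{env}_{\mu} g(y)=(y-\operatorname{prox}_{\mu g}(y))/\mu\), so the smoothed term \(\operatorname{env}_{\mu} g(Ax)\) has gradient \(A^{\top}(Ax-\operatorname{prox}_{\mu g}(Ax))/\mu\). The Python/NumPy code below is a minimal, illustrative sketch of a variable smoothing step combined with a cyclic incremental aggregated gradient, not the authors' exact algorithm. It assumes logistic losses with labels in \(\{-1,+1\}\), the minimax concave penalty (MCP, which is \(1/\gamma\)-weakly convex) as \(g\), a cyclic refresh order, the smoothing schedule \(\mu_k=\mu_0 (k+1)^{-1/3}\) and the step size \(c\,\mu_k\). These schedules and all function names (`mcp_prox`, `vs_iag_sketch`, etc.) are hypothetical choices made for illustration, not parameters taken from the paper.

```python
import numpy as np


def mcp(t, lam, gamma):
    """Minimax concave penalty (MCP), applied entrywise; it is (1/gamma)-weakly convex."""
    a = np.abs(t)
    return np.where(a <= gamma * lam, lam * a - t**2 / (2.0 * gamma), 0.5 * gamma * lam**2)


def mcp_prox(v, mu, lam, gamma):
    """Entrywise prox of mu * MCP (firm thresholding); well defined when mu < gamma."""
    a = np.abs(v)
    shrunk = np.sign(v) * (a - mu * lam) / (1.0 - mu / gamma)
    return np.where(a <= mu * lam, 0.0, np.where(a <= gamma * lam, shrunk, v))


def logistic_grad(d_i, b_i, x):
    """Gradient of f_i(x) = log(1 + exp(-b_i * <d_i, x>)) with label b_i in {-1, +1}."""
    z = -b_i * (d_i @ x)
    sig = 0.5 * (1.0 + np.tanh(0.5 * z))  # sigmoid(z), written to avoid overflow
    return -b_i * sig * d_i


def objective(D, b, A, x, lam, gamma):
    """F(x) = (1/n) * sum_i f_i(x) + g(Ax), with g the sum of entrywise MCP terms."""
    loss = np.mean(np.logaddexp(0.0, -b * (D @ x)))
    return loss + np.sum(mcp(A @ x, lam, gamma))


def vs_iag_sketch(D, b, A, lam, gamma, mu0=0.5, c=0.1, n_iter=4000):
    """Variable smoothing + cyclic incremental aggregated gradient (illustrative only)."""
    assert mu0 < gamma, "the prox of mu*g needs mu < 1/rho = gamma"
    n, d = D.shape
    x = np.zeros(d)
    # Table of stored component gradients and their running sum.
    grads = np.array([logistic_grad(D[i], b[i], x) for i in range(n)])
    agg = grads.sum(axis=0)
    for k in range(n_iter):
        i = k % n  # cyclic choice of the single component refreshed this iteration
        g_new = logistic_grad(D[i], b[i], x)
        agg += g_new - grads[i]
        grads[i] = g_new
        mu = mu0 / (k + 1) ** (1.0 / 3.0)  # decreasing smoothing parameter (assumed schedule)
        y = A @ x
        # Gradient of env_mu g at Ax, pulled back through the linear operator A.
        env_grad = A.T @ (y - mcp_prox(y, mu, lam, gamma)) / mu
        x = x - c * mu * (agg / n + env_grad)  # step size proportional to mu (assumed)
    return x
```

Each iteration evaluates only one component gradient but moves along the average of all stored (possibly stale) gradients, which is the defining feature of incremental aggregated gradient methods; the smoothed regularizer term is evaluated at the current iterate. Taking `A = np.eye(d)` gives plain sparse logistic regression, for example `vs_iag_sketch(D, b, np.eye(D.shape[1]), lam=0.05, gamma=3.0)`.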

Cite this article

Liu, Y., Xia, F. Variable smoothing incremental aggregated gradient method for nonsmooth nonconvex regularized optimization. Optim Lett 15, 2147–2164 (2021). https://doi.org/10.1007/s11590-021-01723-2

DOI: https://doi.org/10.1007/s11590-021-01723-2