Abstract

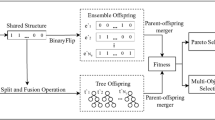

Ensemble learning methods have long attracted attention for their strong performance on supervised classification problems. Nevertheless, deficiencies such as insufficient diversity among the base classifiers and the presence of redundant classifiers remain among the main challenges in this kind of learning. In recent years, the density peak criterion, which selects cluster centers at local density peaks, has been used to improve clustering methods. Inspired by this idea, this paper proposes a new method for building parallel ensembles based on the density peak criterion. The criterion yields diverse training sets, which in turn produce diverse classifiers. In the proposed method, a decomposition-based evolutionary multi-objective optimization process creates a set of diverse, (near-)optimal training datasets that improve the performance of non-sequential ensemble learning methods. To this end, density peak is used as the first objective function and accuracy as the second. To show the superiority of the proposed method, it is compared with state-of-the-art methods on 19 datasets. For a more rigorous comparison, non-parametric statistical tests are applied, and the results demonstrate that the proposed method significantly outperforms the other methods.
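
The density peak criterion mentioned above is usually computed, following the clustering method of Rodriguez and Laio, from two quantities per sample: the local density rho_i (how many other samples lie closer than a cutoff distance d_c) and the separation delta_i (the distance to the nearest sample of strictly higher density). Cluster centers are the samples for which both are large, for example when ranked by gamma_i = rho_i * delta_i. The Python sketch below computes these quantities with a simple cutoff kernel. It only illustrates the criterion itself: how the proposed method turns it into an objective for a candidate training set is not described in this abstract, and the function names and the value of d_c are assumptions of the example.

```python
import math
from typing import List, Sequence, Tuple


def density_peaks(points: List[Sequence[float]], d_c: float) -> Tuple[List[int], List[float]]:
    """Compute the local density rho_i and the separation delta_i of every point.

    rho_i   = number of other points closer than the cutoff distance d_c
    delta_i = distance to the nearest point with strictly higher density;
              a point with no denser neighbour gets its largest distance instead
              (ties at the top density are not broken, a simplification).
    """
    n = len(points)
    # Full pairwise Euclidean distance matrix (O(n^2), acceptable for a sketch).
    dist = [[math.dist(p, q) for q in points] for p in points]
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < d_c) for i in range(n)]
    delta = []
    for i in range(n):
        denser = [dist[i][j] for j in range(n) if rho[j] > rho[i]]
        delta.append(min(denser) if denser else max(dist[i]))
    return rho, delta


def top_centers(rho: List[int], delta: List[float], k: int) -> List[int]:
    """Indices of the k points with the largest gamma_i = rho_i * delta_i."""
    gamma = [r * d for r, d in zip(rho, delta)]
    return sorted(range(len(gamma)), key=lambda i: gamma[i], reverse=True)[:k]


if __name__ == "__main__":
    # Two toy groups: a unit square with its middle point, and a small triangle far away.
    pts = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (10, 10), (10, 11), (11, 10)]
    rho, delta = density_peaks(pts, d_c=1.2)
    print(rho)                           # [3, 3, 3, 3, 4, 2, 1, 1]
    print(top_centers(rho, delta, k=2))  # [4, 5]
```

With d_c = 1.2, the middle point (0.5, 0.5) of the first group and the point (10, 10) of the second group obtain the largest gamma and are returned as the two centers, one per group.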

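The abstract states that the training datasets are evolved with a decomposition-based multi-objective evolutionary algorithm. A well-known algorithm of this kind is MOEA/D (Zhang and Li, 2007), and the sketch below assumes it. MOEA/D splits the two-objective problem into scalar subproblems, one per weight vector, and a new solution replaces the current solution of a neighbouring subproblem whenever it does not worsen that subproblem's Tchebycheff value g(x | lambda, z*) = max_i lambda_i * |f_i(x) - z*_i|. The Python code shows only these decomposition steps for two objectives that are both minimised. Encoding the two objectives as (1 - accuracy, 1 - normalised density-peak score) is an assumption of this example, not a detail taken from the paper, and all helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical objective vector: (1 - accuracy, 1 - density-peak score), both minimised.
Objectives = Tuple[float, float]


def uniform_weights(n_sub: int) -> List[Tuple[float, float]]:
    """Evenly spread weight vectors (w, 1 - w), one per scalar subproblem."""
    return [(i / (n_sub - 1), 1 - i / (n_sub - 1)) for i in range(n_sub)]


def neighbourhoods(weights: List[Tuple[float, float]], t: int) -> List[List[int]]:
    """For each subproblem, the indices of the t closest weight vectors (itself included)."""
    return [
        sorted(range(len(weights)), key=lambda j: math.dist(w, weights[j]))[:t]
        for w in weights
    ]


def tchebycheff(f: Sequence[float], weight: Sequence[float], z_star: Sequence[float]) -> float:
    """Tchebycheff scalarisation g(x | lambda, z*) = max_i lambda_i * |f_i(x) - z*_i|."""
    return max(w * abs(fi - zi) for fi, w, zi in zip(f, weight, z_star))


def update(pop_f: List[Objectives], child_f: Objectives, neighbours: List[int],
           weights: List[Tuple[float, float]], z_star: List[float]) -> None:
    """One MOEA/D update step: refresh the ideal point z*, then let the child replace
    every neighbouring solution it does not make worse on that subproblem."""
    for i, fi in enumerate(child_f):
        z_star[i] = min(z_star[i], fi)
    for j in neighbours:
        if tchebycheff(child_f, weights[j], z_star) <= tchebycheff(pop_f[j], weights[j], z_star):
            pop_f[j] = child_f


if __name__ == "__main__":
    weights = uniform_weights(5)
    hoods = neighbourhoods(weights, t=3)
    pop_f = [(0.5, 0.5)] * 5   # objective vectors of the current candidate training sets
    z_star = [0.1, 0.1]        # best value seen so far for each objective
    update(pop_f, (0.2, 0.2), hoods[2], weights, z_star)
    print(pop_f)  # subproblems 1, 2 and 3 now hold (0.2, 0.2)
```

Restricting replacement to a small neighbourhood of t subproblems keeps a single good child from overwriting the whole population, which helps preserve solutions spread along the trade-off between the two objectives.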


Cite this article

Roshan, S., Asadi, S. Development of ensemble learning classification with density peak decomposition-based evolutionary multi-objective optimization. Int. J. Mach. Learn. & Cyber. 12, 1737–1751 (2021). https://doi.org/10.1007/s13042-020-01271-8
