Abstract

We propose a simple model for distributed query processing based on the concept of a distributed array. Such an array has fields of some data type whose values can be stored on different machines. It offers operations to manipulate all fields in parallel within the distributed algebra. The arrays considered are one-dimensional and just serve to model a partitioned and distributed data set. Distributed arrays rest on a given set of data types and operations called the basic algebra implemented by some piece of software called the basic engine. It provides a complete environment for query processing on a single machine. We assume this environment is extensible by types and operations. Operations on distributed arrays are implemented by one basic engine called the master which controls a set of basic engines called the workers. It maps operations on distributed arrays to the respective operations on their fields executed by workers. The distributed algebra is completely generic: any type or operation added in the extensible basic engine will be immediately available for distributed query processing. To demonstrate the use of the distributed algebra as a language for distributed query processing, we describe a fairly complex algorithm for distributed density-based similarity clustering. The algorithm is a novel contribution by itself. Its complete implementation is shown in terms of the distributed algebra and the basic algebra. As a basic engine the Secondo system is used, a rich environment for extensible query processing, providing useful tools such as main memory M-trees, graphs, or a DBScan implementation.

Similar content being viewed by others

1 Introduction

Big data management has been a core topic in research and development for the last fifteen years. Its popularity was probably started by the introduction of the MapReduce paradigm [10] which allowed a simple formulation of data processing tasks by a programmer which are then executed in a highly scalable and fault tolerant way on a large set of machines. Massive data sets arise through the global scale of the internet with applications and global businesses such as Google, Amazon, Facebook. Other factors are the ubiquity of personal devices collecting and creating all kinds of data, but also the ever growing detail of scientific experiments and data collection, for example, in physics or astronomy, or the study of the human brain or the genome.

Dealing with massive data sets requires to match the size of the problem with a scalable amount of resources; therefore distributed and parallel processing is essential. Following MapReduce and its open source version Hadoop, many frameworks have been developed, for example, Hadoop-based approaches such as HadoopDB, Hive, Pig; Apache Spark and Flink; graph processing frameworks such as Pregel or GraphX.

All of these systems provide some model of the data that can be manipulated and a language for describing distributed processing. For example, MapReduce/Hadoop processes key-value pairs; Apache Spark offers resilient distributed data sets in main memory; Pregel manipulates nodes and edges of a graph in a node-centric view. Processing is described in terms of map and reduce functions in Hadoop; in an SQL-like style in Hive; by a set of operations on tables in Pig; by a set of operations embedded in a programming language environment in Spark; or by functions processing messages between nodes in Pregel.

In this paper, we consider the problem of transforming an extensible query processing system on a single machine (called the basic engine) into a scalable parallel query processing system on a cluster of computers. All the capabilities of the basic engine should automatically be available for parallel and distributed query processing, including extensions to the local system added in the future.

We assume the basic engine implements an algebra for query processing called the basic algebra. The basic algebra offers some data types and operations. The basic engine allows one to create and delete databases and within databases to create and delete objects of data types of the basic algebra. It allows one to evaluate terms (expressions, queries) of the basic algebra over database objects and constants and to return the resulting values to the user or store them in a database object.

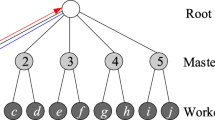

The idea to turn this into a scalable distributed system is to introduce an additional algebra for distributed query processing into the basic engine, the distributed algebra. The distributed system will then consist of one basic engine called the master controlling many basic engines called the workers. The master will execute commands provided by a user or application. These commands will use data types and operations of the distributed algebra. The types will represent data distributed over workers and the operations be implemented by commands and queries sent to the workers.

The fundamental conceptual model and data structure to represent distributed data is a distributed array. A distributed array has fields of some data type of the basic algebra; these fields are stored on different computers and assigned to workers on these computers. Queries are described as mappings from distributed arrays to distributed arrays. The mapping of fields is described by terms of the basic algebra that can be executed by the basic engines of the workers. Further, the distributed algebra allows one to distribute data from the master to the workers, creating distributed arrays, as well as collect distributed array fields from the workers to the master.

These ideas have been implemented in the extensible DBMS Secondo which takes the role of the basic engine. The Secondo kernel is structured into algebra modules each providing some data types and operations; all algebras together form the basic algebra. Secondo provides query processing over the implemented algebras as described above for the basic engine. Currently there is a large set of algebras providing basic data types (e.g., integer, string, bool, ...), relations and tuples, spatial data types, spatio-temporal types, various index structures including B-trees, R-trees, M-trees; data structures in main memory for relations, indexes, graphs; and many others. The distributed algebra described in this paper has been implemented in Secondo.

In the main part of this paper we design the data types and operations of the distributed algebra and formally define their semantics.

To illustrate distributed query processing based on this model, we describe an algorithm for distributed density-based similarity clustering. That is, we show the “source code” to implement the algorithm in terms of the distributed algebra and the basic algebra.

The contributions of the paper are as follows:

-

A generic algebra for distributed query processing is presented.

-

Data types and operations of the algebra are designed and their semantics are formally defined.

-

The implementation of the distributed algebra is explained.

-

A novel algorithm for distributed density-based similarity clustering is presented and its complete implementation in terms of the distributed algebra is shown.

-

An experimental evaluation of the framework shows excellent load balancing and good speedup.

The rest of the paper is structured as follows. Related work is described in Sect. 2. In Sect. 3, Secondo as a generic extensible DBMS is introduced, providing a basic engine and algebra. In Sect. 4, the distributed algebra is defined. Sect. 5 describes the implementation of this algebra in Secondo. In Sect. 6 we show the algorithm for distributed clustering and its implementation. A brief experimental evaluation of the framework is given in Sect. 7. Finally, Sect. 8 concludes the paper.

2 Related work

Our algebra for generic distributed query processing in Secondo has related work in the areas of distributed systems, distributed databases, and data analytics. In the application section of this paper, we present an algorithm for the density-based similarity clustering (see Sect. 6). The most related work in these areas is discussed in this section.

2.1 Distributed system coordination

Developing a distributed software system is a complex task. Distributed algorithms have to be coordinated on several nodes of a cluster. Apache ZooKeeper [29], HashiCorp Consul [8] and etcd [16] are software components used to coordinate distributed systems. These systems cover topics such as service discovery and configuration management. Even these components are used in many software projects; some distributed computing engines have also implemented their own specialized resource management components (such as YARN—Yet Another Resource Negotiator [54], which is part of Hadoop).

In our distributed array implementation, we send the information to coordinate the system directly from the master node to the worker nodes. The worker nodes are manually managed in the current version of our implementation. Topics such as high availability or replication will be part of a further version.

2.2 Distributed file systems and distributed databases

2.2.1 General remarks

In this section we discuss systems for distributed analytical data processing.

A major distinction between those systems and the distributed algebra of this paper is genericity. The systems to be discussed all have some data model that is manipulated by operations, for example, tables or key-value pairs. In contrast, distributed algebra does not have any fixed data model. What is predetermined is the model of a distributed array which is just a simple abstraction of a partitioned (and distributed) data set. Furthermore, it is fixed that sets of tuples are used for data exchange. The field types of distributed arrays are absolutely generic.

This makes it possible to plug in a basic engine providing types and operations, if you want, a “legacy system”, with all its data structures and capabilities. The clear separation between the algebra describing distributed query processing and the basic algebra (or engine) defining local data and query processing by workers is unique for our approach.

Our implementation of the distributed algebra so far uses Secondo as a basic engine; we are currently working on embedding other systems, PostgreSQL in particular.

Many systems provide some level of extensibility such as user defined data types and functions. However, embedding a complete basic engine is a quite different matter: we simply inherit everything there is, without doing extra work for every part, as in the case of extensibility. The basic engine Secondo is a rich environment developed over many years. Beyond standard relational query processing it has specialized “algebra modules” for spatial and spatio-temporal data, index structures such as R-trees, TB-trees, M-trees, symbolic trajectories, image and raster data, map matching algorithms, DBScan, and so forth. There are persistent as well as main memory data structures, allowing distributed in-memory processing.

Moreover, the scope of extensibility within the basic engine Secondo is much higher than in other systems. Whereas in most systems user defined types can be added at the level of attribute types in tables, the architecture of Secondo is designed around extensibility. A DBMS data model is implemented completely in terms of algebra modules. Hence one can add not only atomic data types but also any kind of representation structure such as an index, a graph, or a column-oriented relation representation, for example.

In the following, we refer to Distributed Algebra as DA and to Distributed Algebra with Secondo as a basic engine as DA/Secondo, respectively.

2.2.2 MapReduce and distributed file systems

In 2004, the publication of the MapReduce paper [10] proposed a new technique for the distributed handling of computational tasks. Using MapReduce, calculations are performed in two phases: (1) a map phase and (2) a reduce phase. These tasks are executed on a cluster of nodes in a distributed and fault-tolerant manner. Map and reduce steps are formulated directly in a programming language.

The Google File System (GFS) [21] and its open-source counterpart Hadoop File System (HDFS) [45] are distributed file systems. These file systems represent the backbone of the MapReduce frameworks; they are used for the input and output of large datasets. Stored files are split up into fixed-sized chunks and distributed across a cluster of nodes. To deal with failing nodes, the chunks can be replicated. Due to the architecture of the file systems, data are stored in an append-only manner.

To exploit node level data locality, the MapReduce Master Node tries to schedule jobs in a way that the chunks of the input data are stored on the node that processes the data [21, p. 5]. If the chunks are stored on another node, the data need to be transferred over a network, which is slow and time-consuming. HDFS addresses data locality only on chunks and not based on the value of the stored data, which can lead to performance problems [14].

In our distributed array implementation, data are directly assigned to the nodes in a way that data locality is exploited, and the amount of transferred data is minimized. The output of a query can be directly partitioned and transferred to the worker nodes that are responsible for the next processing step (see the discussion of the \(\underline{\smash { dfmatrix }}\) data type in Sect. 4). In addition, Secondo uses a type system. Before a query is executed, the query is checked for type errors. Therefore, in our distributed array implementation, the data are stored typed. On a distributed file system, data are stored as raw bytes. Type and structure information need to be implemented in the application that reads and writes the data.

2.2.3 Distributed databases and frameworks for analytic processing

HBase [27] (the open-source counterpart of BigTable [6]) is a distributed column-oriented database built on top of HDFS. HBase is optimized to handle large tables of data. Tables consist of rows of multiple column families (a set of key-value pairs). Internally, the data are stored in String Sorted Tables (SSTables) [6, 41], which are handled by HDFS. HBase provides simple operations to access the data (e.g., get and scan) and does not support complex operations such as joins; MapReduce jobs can be used to process the data. The DA can of course operate on data that is stored across a cluster of systems in a distributed manner. In addition, DA/Secondo offers a wide range of operators (such as joins), which can be used to process the data.

Key-Value Stores such as Amazon Dynamo [11] or RocksDB [49] provide a simple data model consisting of a key and a value. Systems such as HBase, BigTable, or Apache Cassandra [32] provide a slightly more complex data model. A key can have multiple values; internally, the data are stored as key-value pairs on disk. The values in these implementations are limited to scalar data types such as int, byte, or string.

Apache Hive [50] is a warehousing solution that is built on top of Apache Hadoop, which provides an SQL-like query language to process in HDFS stored data. Hive contains only a limited set of operations.

Apache Pig [18] provides a query language (called Pig Latin [40]) for processing large amounts of data. With Pig Latin, users no longer need to write their own MapReduce programs; they write queries which are directly translated into MapReduce jobs. Pig Latin focuses primarily on analytical workloads.

Pig Latin provides an interesting data model built from atomic types and tuples, bags and maps. Tuples may have flexible schemas and may be nested. A program is expressed as a sequence of assignments to variables, applying one operation in each step. Operations such as FILTER, FOREACH ... GENERATE, or COGROUP, JOIN, ORDER, can be applied to distributed data sets.

Comparing to DA, we find a fixed, not a generic data model. Operations on distributed data sets are tied to this model and perform implicit redistribution. In DA, we can nest operations (i.e., write sequences of operations) and we have a strict separation between distributed and local computation. Comparing to DA/Secondo, of course, the set of available data structures and operations is much more limited. For example, we do not have any indexes or index-based join algorithms.

Pigeon [13] is a spatial extension to Pig which supports spatial data types and operations. Pig was not extensible by atomic data types; any other type than number or string needed to be represented as bytearray. Hence the Pigeon extension represents spatial data types as Well-Known Text or Well-Known Binary exchange formats within Pig. Spatial functions need to convert from and to this format when working on the data.

Remarkable for a spatial extension is that there are no facilities for spatial indexing or spatial join. In the examples in [13], spatial join is expressed as cross product and filtering (CROSS and FILTER operators of PigLatin), a very inefficient evaluation. This is simply due to the fact that extensions by index structures or spatial join operators are not possible in the Pig framework.

In contrast, spatial data types, spatial indexing and spatial join are supported in DA/Secondo as demonstrated later in this paper.

The publication of the MapReduce paper created the foundation for many new applications and ideas to process distributed data. A widely used framework to process data in a scalable and distributed manner is Apache Spark [57]. In Spark, data are stored in resilient distributed datasets (RDDs) [56] in a distributed way across a cluster of nodes. In contrast to earlier work, RDDs can reside in memory in a fault-tolerant way. Hence Spark supports in particular distributed in-memory processing.

Spark defines an interesting set of operations on RDDs that may be compared to those of DA. For example, there is an operation (called transformation) \(map(f: T =>U\)), parameterized by a function mapping values of type T into those of type U. Applied to an RDD[T], i.e., an RDD with partitions of type U, it returns an RDD[U].

One can see that RDDs are generic and parameterized by types, hence they are fairly similar to the distributed arrays of this paper. Also the map transformation corresponds to our dmap operator, introduced later.

Differences are that RDDs and operations on them are not formalized, especially the way fields of RDDs are mapped to workers and are remapped by operations is determined only by the implementation. In DA, field indices do play a role, are controlled by a programmer and are part of the formalization. It is unclear whether in Spark the number of fields of an RDD can be chosen independently from the number of workers, as in DA. This is relevant for load balancing as shown later in the paper.

Another difference is that only some of the operations are generic; others assume types for key-value pairs or sequences. For example, groupByKey, join or cogroup transformations assume key-value pairs. In contrast, DA has only generic operations and all the transformation operations of RDDs can be expressed in DA/Secondo by combining DA operations with basic engine operations. How data are repartitioned is precisely defined in the DA.

Dryad [30] is a distributed execution engine that is developed at Microsoft. DryadLINQ [55] provides an interface for Dryad which can be consumed by Microsoft programming languages such as C#. In contrast to Secondo and our algebra implementation, the goal of Dryad is to provide a distributed environment for the parallel execution of user-provided programs such as Hadoop. The goal of our implementation is to provide an extensible environment with a broad range of predefined operators that can be used to progress data and which can also be enhanced with new operators by the user.

Another popular framework to process large amounts of data these days is Apache Flink [5]. This software system, originating from the Stratosphere Platform [1], is designed to handle batch and stream processing jobs. Processing batches (historical data or static data sets) is treated as a special form of stream processing. Data batches are processed in a time-agnostic fashion and handled as a bounded data stream. Like our system, Flink performs type checking and can be extended by user-defined operators and data types. However, Secondo ships with a larger amount of operators and data types. For example, it can handle spatial and spatio-temporal data out of the box.

Parallel Secondo [33] and Distributed Secondo [38] are two already existing approaches to execute queries in Secondo [24] in a distributed and parallel manner. Both approaches are integrating an existing software component into Secondo to achieve the distributed query execution. Parallel Secondo uses Apache Hadoop (the open source counterpart of the MapReduce framework) to distribute tasks over several Secondo installations on a cluster of nodes. Distributed Secondo uses Apache Cassandra as a distributed key-value store for the distributed storage of data, service discovery, and job scheduling. Both implementations use an additional component (Hadoop or Cassandra) to parallelize Secondo. The algebra for distributed arrays works without further components and provides the parallelization directly in Secondo.

2.3 Array databases and data frames

Array databases such as Rasdaman (raster data manager) [3], SciDB [47], or SciQL [58] focus on the processing of data cubes (multi-dimensional arrays). In addition to specialized databases, there are raster data extensions for relational database management systems such as PostGIS Raster [44] or Oracle GeoRaster [42]. Array databases are used to process data like maps (two dimensional) or satellite image time series (three dimensional).

Our distributed array implementation works with one-dimensional arrays. The array is just used to structure the data for the workers, representing a partitioned distributed data set. Array databases use the dimensions of the array to represent the location of the data in the n-dimensional space, which is a different concept. Secondo works with index structures (such as the R-Tree [26]) for efficient data access. In addition, in array databases, the values of the array cells are restricted to primitive or common SQL types like integers or strings. In our model and implementation, the data types of the fields can be any type provided by the basic engine, hence an arbitrary type available in Secondo.

Libraries for processing array structured data (also called data frames), such as Pandas [36] or NumPy [39], are widely used in scientific computing these days. Such libraries are used to apply operations such as filters, calculations, or mutations on array structured data. SciHadoop [4] is using Hadoop to process data arrays in a distributed and parallel way. SciHive [19] is a system that uses Hive to process array structured data. AFrame [46] is another implementation of a data frame library which is built on top of Apache AsterixDB [2]. The goal of the implementation is to process the data frames in a distributed manner and hide the complexity of the distributed system from the user. These libraries and systems are intended for direct integration into the source code. These libraries simplify the handling of arrays, bring along data types and functions, and some also allow the distributed and parallel processing of arrays. Our system instead works with a query language to describe operator trees. Further, Secondo is an extensible database system that can be extended with new operators and data types by a user.

In [17] a Query Processing Framework for Large-Scale Scientific Data Analysis is proposed. Using the described framework, large amounts of data can be processed by using an SQL-like query language. This framework enhances the Apache MRQL [37] language in such a way that array data can be efficiently processed. MRQL uses components, such as Hadoop, Flink or Spark, for the execution of the queries. In contrast to our distributed array implementation, the paper focuses on the implementation of matrix operations to speed up algorithms to process the data arrays.

2.4 Clustering

In Sect. 6, we present an algorithm for distributed density-based similarity clustering. The main purpose of the section in the context of this paper is to serve as an illustration of distributed algebra as a language for formulating and implementing distributed algorithms. Nevertheless, the algorithm is a novel contribution by itself.

Density-based clustering, a problem introduced in [15], is a well established technology that has numerous applications in data mining and many other fields. The basic idea is to group together objects that have enough similar objects in their neighborhood. For an efficient implementation, a method is needed to retrieve objects close to a given object. The DBScan algorithm [15] was originally formulated for Euclidean spaces and supported by an R-tree index. But it can also be used with any metric distance function (see for example [31]) and then be supported by an M-tree [7].

Here we only discuss algorithms for distributed density-based clustering. There are two main classes of approaches. The first can be characterized as (non-recursive) divide-and-conquer, consisting of the three steps:

-

1.

Partition the data set.

-

2.

Solve the problem independently for each partition.

-

3.

Merge the local solutions into a global solution.

It is obvious that a spatial or similarity (distance-based) partitioning is needed for the problem at hand. Algorithms falling in this category are [9, 28, 43, 53]. They differ in the partitioning strategy, the way neighbors from adjacent partitions are retrieved, and how local clusters are merged into global clusters. In [53] a global R-tree is introduced that can retrieve nodes across partition (computer) boundaries. The other algorithms [9, 28, 43] include in the partitioning overlap areas at the boundaries so that neighbors from adjacent partitions can be retrieved locally. [28] improves on [53] by determining cluster merge candidate pairs in a distributed manner rather than on the master. [9] strives to improve partitioning by placing partition boundaries in sparse areas of the data set. [43] introduces a very efficient merge technique based on a union-find structure.

These algorithms are all restricted to handle objects in vector spaces. Except for [53] they all have a problem with higher-dimensional vector spaces because in d dimensions \(2^d\) boundary areas need to be considered.

A second approach is developed in [34]. This is based on the idea of creating a k-nearest-neighbor graph by a randomized algorithm [12]. This is modified to create edges between nodes if their distance is less than Eps, the distance parameter of density-based clustering. On the resulting graph, finding clusters corresponds to computing connecting components.

This algorithm is formulated for a node-centric distributed framework for graph algorithms as given by Pregel [35] or GraphX [52]. In contrast to all algorithms of the first class, it can handle arbitrary symmetric distance (similarity) functions. However, the randomized construction of the kNN graph does not yield an exact result; therefore the result of clustering is also an approximation.

The algorithm of this paper, called SDC (Secondo Distributed Clustering), follows the first strategy but implements all steps in a purely distance-based manner. That is, we introduce a novel technique for balanced distance-based partitioning that does not rely on Euclidean space. The computation of overlap with adjacent partitions is based on a new distance-based criterion (Theorem 1). All search operations in partitioning or local DBScan use M-trees.

Another novel aspect is that merging clusters globally is viewed and efficiently implemented as computing connected components on a graph of merge tasks. Repeated binary merging of components is avoided.

Compared to algorithms of the first class, SDC is the only algorithm working with arbitrary metric similarity functions. Compared to [34] it provides an exact instead of an approximate solution.

3 A basic engine: Secondo

As described in the introduction, the concept of the Distributed Algebra rests on the availability of a basic engine, providing data types and operations for query processing. In principle, any localFootnote 1 database system should be suitable. If it is extensible, the distributed system will profit from its extensibility.

The basic engine can be used in two ways: (i) it can provide query processing, and (ii) it can serve as an environment for implementing the Distributed Algebra. In our implementation, Secondo is used for both purposes.

3.1 Requirements for basic engines

The capabilities required from a basic engine to provide query processing are the following:

-

1.

Create and delete, open and close a database (where a database is a set of objects given by name, type, and value);

-

2.

create an object in a database as the result of a query and delete an object;

-

3.

offer a data type for relations and queries over it;

-

4.

write a relation resulting from a queryFootnote 2 efficiently into a binary file or distribute it into several files;

-

5.

read a relation efficiently from one or several binary files into query processing.

The capabilities (1) through (3) are obviously fulfilled by any relational DBMS. Capabilities (4) and (5) are required for data exchange and might require slight extensions, depending on the given local DBMS. In Sect. 4 we show how these capabilities are motivated by operations of the Distributed Algebra.

3.2 Secondo

In this section we provide a brief introduction to Secondo as a basic engine. It also shows an environment that permits a relatively easy implementation of the Distributed Algebra.

Secondo is a DBMS prototype developed at University of Hagen, Germany, with a focus on extensible architecture and support of spatial and spatio-temporal (moving object) data. The architecture is shown in Fig. 1.

There are three major components: the graphical user interface, the optimizer and the kernel, written in Java, Prolog, and C++, respectively. The kernel uses BerkeleyDB as a storage manager and is extensible by so-called algebra modules. Each algebra module provides some types (type constructors in general, i.e., parameterized types) and operations. The query processor evaluates expressions over the types of the available algebras. Note that the kernel does not have a fixed data model. Moreover, everything including relations, tuples, and index structures is implemented within algebra modules.

The data model of the kernel and its interface between system frame and algebra modules is based on the idea of second-order signature [22]. Here a first signature provides a type system, a second signature is defined over the types of the first signature. This is explained in more detail in Sect. 4.3.

To implement a type constructor, one needs to provide a (usually persistent) data structure and import and export functions for values of the type. To implement an operator, one needs to implement a type mapping function and a value mapping function, as the objects manipulated by operators are (type, value) pairs.

A database is a pair (T, O) where T is a set of named types and O is a set of named objects. There are seven basic commands to manipulate such a generic database:

Here a type expression is a term of the first signature built over the type constructors of available algebras. A value expression is a term of the second signature built by applying operations of the available algebras to constants and database objects.

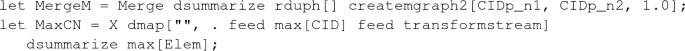

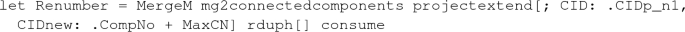

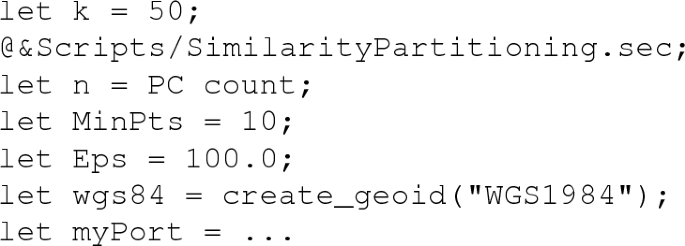

The most important commands are let and query. let creates a new database object whose type and value result from evaluating a value expression. query evaluates an expression and returns a result to the user. Note that operations may have side effects such as updating a relation or writing a file. Some example commands are:

The first examples illustrate the basic mechanisms and that query just evaluates an arbitrary expression. The last two examples show that expressions can in particular be query plans as they might be created by a query optimizer. In fact, the Secondo optimizer creates such plans. Generally, query plans use pipelining or streaming to pass tuples between operators; here the feed operator creates a stream of tuples from a relation; the consume operator creates a relation from a stream of tuples. The exactmatch operator takes a B-tree and a relation and returns the tuples fulfilling the exact-match query by the third argument. Operators applied to types representing collections of data are usually written in postfix notation. Operator syntax is decided by the implementor. Note that the query processing operators used in the examples and in the main algorithm of this paper can be looked up in the Appendix.

Obviously Secondo fulfills the requirements (1) through (3) stated for basic engines. It has been extended by operators for writing streams of tuples into (many) files and for reading a stream from files to fulfill (4) and (5).

4 The distributed algebra

The Distributed Algebra (technically in Secondo the Distributed2Algebra) provides operations that allow one Secondo system to control a set of Secondo servers running on the same or remote computers. It acts as a client to these servers. One can start and stop the servers, provided Secondo monitor processes are already running on the involved computers. One can send commands and queries in parallel and receive results from the servers.

The Secondo system controlling the servers is called the master and the servers are called the workers.

This algebra actually provides two levels for interaction with the servers. The lower level provides operations

-

to start, check and stop servers

-

to send sets of commands in parallel and see the responses from all servers

-

to execute queries on all servers

-

to distribute objects and files

Normally a user does not need to use operations of the lower level.

The upper level is implemented using operations of the lower level. It essentially provides an abstraction called distributed array. A distributed array has slots of some type X which are distributed over a given set of workers. Slots may be of any Secondo type, including relations and indexes, for example. Each worker may store one or more slots.

Query processing is formulated by applying Secondo queries in parallel to all slots of distributed arrays which results in new distributed arrays. To be precise, all workers work in parallel, but each worker processes its assigned slots sequentially.

Data can be distributed in various ways from the master into a distributed array. They can also be collected from a distributed array to be available on the master.

In the following, we describe the upper level of the Distributed Algebra in terms of its data types and operations. We first provide an informal overview. In Sect. 4.3 the semantics of types and operations is defined formally and the use of operations is illustrated by examples.

4.1 Types

The algebra provides two types of distributed arrays called

-

\(\underline{\smash { darray }}\)(X) - distributed array - and

-

\(\underline{\smash { dfarray }}\)(Y) - distributed file array.

There exist also variants of these types called \(\underline{\smash { pdarray }}\) and \(\underline{\smash { pdfarray }}\), respectively, where only some of the fields are defined (p for partial).

Here X may be any Secondo typeFootnote 3 and the respective values are stored in databases on the workers. In contrast, Y must be a relation type and the values are stored in binary files on the respective workers. In query processing, such binary files are transferred between workers, or between master and workers. Hence the main use of \(\underline{\smash { darray }}\) is for the persistent distributed database; the main use of \(\underline{\smash { dfarray }}\) and \(\underline{\smash { dfmatrix }}\) (explained below) is for intermediate results and shuffling of data between workers.

Figure 2 illustrates both types of distributed arrays. Often slots are assigned in a cyclic manner to servers as shown, but there exist operations creating a different assignment. The implementation of a \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\) stores explicitly how slots are mapped to servers. The type information of a \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\) is the type of the slots, the value contains the number of slots, the set of workers, and the assignment of slots to workers.

A distributed array can be constructed by partitioning data on the master into partitions \(P_1, ..., P_m\) and then moving partitions \(P_i\) into slots \(S_i\). This is illustrated in Fig. 3.

A third type offered is

-

\(\underline{\smash { dfmatrix }}\)(Y) - distributed file matrix

Slots Y of the matrix must be relation-valued, as for \(\underline{\smash { dfarray }}\). This type supports redistributing data which are partitioned in a certain way on workers already. It is illustrated in Fig. 4.

The matrix arises when all servers partition their data in parallel. In the next step, each partition, that is, each column of the matrix, is moved into one slot of a distributed file array as shown in Fig. 5.

4.2 Operations

The following classes of operations are available:

-

Distributing data to the workers

-

Distributed processing by the workers

-

Applying a function (Secondo query) to each field of a distributed array

-

Applying a function to each pair of corresponding fields of two distributed arrays (supporting join)

-

Redistributing data between workers

-

Adaptive processing of partitioned data

-

-

Collecting data from the workers

4.2.1 Distributing data to the workers

The following operations come in a d-variant and a df-variant (prefix). The d-variant creates a \(\underline{\smash { darray }}\), the df-variant a \(\underline{\smash { dfarray }}\).

- ddistribute2, dfdistribute2:

-

Distribute a stream of tuples on the master into a distributed array. Parameters are an integer attribute, the number of slots and a Workers relation. A tuple is inserted into the slot corresponding to its attribute value modulo the number of slots. See Fig. 3.

- ddistribute3, dfdistribute3:

-

Distribute a stream of tuples into a distributed array. Parameters are an integer i, a Boolean b, and the Workers. Tuples are distributed round robin into i slots, if b is true. Otherwise slots are filled sequentially, each to capacity i, using as many slots as are needed.

- ddistribute4, dfdistribute4:

-

Distribute a stream of tuples into a distributed array. Here a function instead of an attribute decides where to put the tuple.

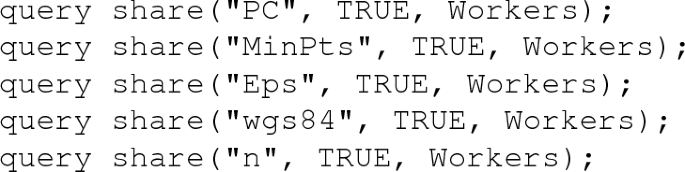

- share:

-

An object of the master database whose name is given as a string argument is distributed to all worker databases.

- dlet:

-

Executes a let command on each worker associated with its argument array; it further executes the same command on the master. This is needed so that the master can do type checking on the query expressions to be executed by workers in following dmap operations.

- dcommand:

-

Executes an arbitrary command on each worker associated with its argument array.

4.2.2 Distributed processing by the workers

Operations:

- dmap:

-

Evaluates a Secondo query on each field of a distributed array of type \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\). Returns a \(\underline{\smash { dfarray }}\) if the result is a tuple stream, otherwise a \(\underline{\smash { darray }}\). In a parameter query, one refers to the field argument by “.” or $1.

Sometimes it is useful to access the field number within a parameter query. For this purpose, all variants of dmap operators provide an extra argument within parameter functions. For dmap, one can refer to the field number by “..” or by $2.

- dmap2:

-

Binary variant of the previous operation mainly for processing joins. Always two fields with the same index are arguments to the query. One refers to field arguments by “.” and “..”, respectively, the field number is the next argument, $3.

- dmap3, ..., dmap8:

-

Variants of dmap for up to 8 argument arrays. One can refer to fields by “.”, “..”, or by $1, ..., $8.

- pdmap, ..., pdmap8:

-

Variants of dmap which take as an additional first argument a stream of slot numbers and evaluate parameter queries only on those slot numbers. They return a partial \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\) (\(\underline{\smash { pdarray }}\) or \(\underline{\smash { pdfarray }}\)) where unevaluated fields are undefined.

- dproduct:

-

Arguments are two \(\underline{\smash { darray }}\)s or \(\underline{\smash { dfarray }}\)s with relation fields. Each field of the first argument is combined with the union of all fields of the second argument. Can be used to evaluate a Cartesian product or a generic join with an arbitrary condition. No specific partitioning is needed for a join. But the operation is expensive, as all fields of the second argument are moved to the worker storing the field of the first argument.

- partition, partitionF:

-

Partitions the fields of a \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\) by a function (similar to ddistribute4 on the master). Result is a \(\underline{\smash { dfmatrix }}\). An integer parameter decides whether the matrix will have the same number of slots as the argument array or a different one. Variant partitionF allows one to manipulate the input relation of a field, e.g., by filtering tuples or by adding attributes, before the distribution function is applied. See Fig. 4.

- collect2, collectB:

-

Collect the columns of a \(\underline{\smash { dfmatrix }}\) into a \(\underline{\smash { dfarray }}\). See Fig. 5. The variant collectB assigns slots to workers in a balanced way, that is, the sum of slot sizes per worker is similar. Some workers may have more slots than others. This helps to balance the work load for skewed partition sizes.

- areduce:

-

Applies a function (Secondo query) to all tuples of a partition (column) of a \(\underline{\smash { dfmatrix }}\). Here it is not predetermined which worker will read the column and evaluate it. Instead, when the number of slots s is larger than the number of workers m, then each worker i gets assigned slot i, for \(i = 0, ..., m-1\). From then on, the next worker which finishes its job will process the next slot. This is very useful to compensate for speed differences of machines or size differences in assigned jobs.

- areduce2:

-

Binary variant of areduce, mainly for processing joins.

4.2.3 Collecting data from the workers

Operations:

- dsummarize:

-

Collects all tuples (or values) from a \(\underline{\smash { darray }}\) or \(\underline{\smash { dfarray }}\) into a tuple stream (or value stream) on the master. Works also for \(\underline{\smash { pdarray }}\) and \(\underline{\smash { pdfarray }}\).

- getValue:

-

Converts a distributed array into a local array. Recommended only for atomic field values; may otherwise be expensive.

- getValueP:

-

Variant of getValue applicable to \(\underline{\smash { pdarray }}\) or \(\underline{\smash { pdfarray }}\). Provides a parameter to replace undefined values in order to return a complete local array on the master.

- tie:

-

Applies aggregation to a local array, e.g., to determine the sum of field values. (An operation not of the Distributed2Algebra but of the ArrayAlgebra in Secondo).

4.3 Formal definition of the distributed algebra

In this section, we formally define the syntax and semantics of the Distributed Algebra. We also illustrate the use of operations by examples.

Formally, a system of types and operations is a (many-sorted) algebra. It consists of a signature which provides sorts and operators, defining for each operator the argument sorts and the result sort. A signature defines a set of terms. To define the semantics, one needs to assign carrier sets to the sorts and functions to the operators that are mappings on the respective carrier sets. The signature together with carrier sets and functions defines the algebra.

We assume that data types are built from some basic types and type constructors. The type system is itself described by a signature [22]. In this signature, the sorts are so-called kinds and the operators are type constructors. The terms of the signature are exactly the available types of the type system.

For example, consider the signature shown in Fig. 6.

It has kinds BASE and ARRAY and type constructors \(\underline{\smash { int }}\), \(\underline{\smash { real }}\), \(\underline{\smash { bool }}\), and \(\underline{\smash { array }}\). The types defined are the terms of the signature, namely, \(\underline{\smash { int }}\), \(\underline{\smash { real }}\), \(\underline{\smash { bool }}\), \(\underline{\smash { array }}\)(\(\underline{\smash { int }}\)), \(\underline{\smash { array }}\)(\(\underline{\smash { real }}\)), \(\underline{\smash { array }}\)(\(\underline{\smash { bool }}\)). Note that basic types are just type constructors without arguments.

4.3.1 Types

The Distributed Algebra has the type system shown in Fig. 7.

Here BASIC is a kind denoting the complete set of types available in the basic engine; REL is the set of relation types of that engine. In our implementation BASIC corresponds to the data types of Secondo. The type constructors build distributed array and matrix types in DARRAY and DMATRIX. Finally, we rely on a generic \(\underline{\smash { array }}\) data type of the basic engine used in data transfer to the master.

Semantics of types are their respective domains or carrier sets, in algebraic terminology, denoted \(A_t\) for a type t.

Let \(\alpha \) be a type of the basic engine, \(\alpha \in BASIC\), and let \( WR \) be the set of possible (non-empty) worker relations.

The carrier set of \(\underline{\smash { darray }}\) is:

Hence the value of a distributed array with fields of type \(\alpha \) consists of an integer n, defining the number of fields (slots) of the array, a set of workers W, a function f which assigns to each field a value of type \(\alpha \), and a mapping g describing how fields are assigned to workers.

The carrier set of type \(\underline{\smash { dfarray }}\) is defined in the same way; the only difference is that \(\alpha \) must be a relation type, \(\alpha \in REL\). This is because fields are stored as binary files and this representation is available only for relations.

Types \(\underline{\smash { pdarray }}\) and \(\underline{\smash { pdfarray }}\) are also defined similarly; here the difference is that f and g are partial functions.

Let \(\alpha \in REL\). The carrier set of \(\underline{\smash { dfmatrix }}\) is:

This describes a matrix with m rows and n columns where each row defines a partitioning of a set of tuples at one worker and each column a logical partition, as illustrated in Fig. 4.

The \(\underline{\smash { array }}\) type of the basic engine is defined as follows:

4.3.2 Operations for distributed processing by workers

Here we define the semantics of operators of Section 4.2.2. For each operator op, we show the signature and define a function \(f_\mathbf{op }\) from the carrier sets of the arguments to the carrier set of the result.

All operators taking \(\underline{\smash { darray }}\) arguments also take \(\underline{\smash { dfarray }}\) arguments.

All dmap, pdmap and areduce operators may return either \(\underline{\smash { darrays }}\) or \(\underline{\smash { dfarrays }}\). The result type depends on the resulting field type: If it is a \(\underline{\smash { stream }}\)(\(\underline{\smash { tuple }}\)((\(\alpha \)))) type, then the result is a \(\underline{\smash { dfarray }}\), otherwise a \(\underline{\smash { darray }}\). Hence in writing a query, the user can decide whether a \(\underline{\smash { darray }}\) or a \(\underline{\smash { dfarray }}\) is built by applying consume to a tuple stream for a field or not.

We omit these cases in the sequel, showing the definitions only for \(\underline{\smash { darray }}\), to keep the formalism simple and concise.

Here \((\alpha \rightarrow \beta )\) is the type of functions mapping from \(A_\alpha \) to \(A_\beta \).

To illustrate the use of operators, we introduce an example database with spatial data as provided by OpenStreetMap [48] and GeoFabrik [20]. We use example relations with the following schemas, originally on the master. Such data can be obtained for many regions of the world at different scales like continents, states, or administrative units.

Example 1

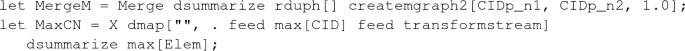

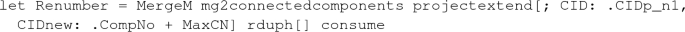

Assume we have created distributed arrays for these relations called BuildingsD, RoadsD, and WaterwaysD by commands shown in Sect. 4.3.3. Then we can apply dmap to retrieve all roads with speed limit 30:

The first argument to dmap is the distributed array, the second a string, and the third the function to be applied. In the function, the “.” refers to the argument. The string argument is omitted in the formal definition. In the implementation, it is used to name objects in the worker databases; the name has the form \(\texttt {<name>\_<slot\_number>}\), for example, RoadsD30_5. One can give an empty string in a query where the intermediate result on the workers is not needed any more; in this case a unique name for the object in the worker database is generated automatically.

The result is a distributed array RoadsD30 where each field contains a relation with the roads having speed limit 30.

Note that the two arrays must have the same size and that the mapping of slots to workers is determined by the first argument. In the implementation, slots of the second argument assigned to different workers than for the first argument are copied to the first argument worker for execution.

Example 2

Using dmap2, we can formulate a spatial join on the distributed tables RoadsD and WaterwaysD. It is necessary that both tables are spatially co-partitioned so that joins can only occur between tuples in a pair of slots with the same index. In Sect. 4.3.3 it is shown how to create partitions in this way.

“Count the number of intersections between roads and waterways.”

Here for each pair of slots an itSpatialJoin operator is applied to the respective pair of (tuple streams from) relations. It joins pairs of tuples whose bounding boxes overlap. In the following refinement step, the actual geometries are checked for intersection.Footnote 4 The notation {r} is a renaming operator, appending _r to each attribute in the tuple stream.

The additional argument myPort is a port number used in the implementation for data transfer between workers.

Further operations dmap3, ..., dmap8 are defined in an analogous manner. For all these operators, the mapping from slots to workers is taken from the first argument and slots from other arguments are copied to the respective workers.

Here a stream of integer values is modeled formally as a sequence of integers. Operator pdmap can be used if it is known that only certain slots can yield results in an evaluation; for an example use see [51]. The operators pdmap2, ..., pdmap8 are defined similarly; as for dmap operators, slots are copied to the first argument workers if necessary.

The dproduct operator is defined for two distributed relations, that is, \(\alpha _1, \alpha _2 \in REL\).

Here it is not required that the two arrays of relations have the same size (number of slots). Each relation in a slot of the first array is combined with the union of all relations of the second array. This is needed to support a general join operation for which no partitioning exists that would support joins on pairs of slots. In the implementation, all slots of the second array are copied to the respective worker for a slot of the first array. For this, again a port number argument is needed.

Example 3

“Find all pairs of roads with a similar name.”

Before applying the dproduct operator, we reduce to named roads, eliminate duplicates from spatial partitioning, and project to the relevant attributes. Then for all pairs of named roads, the edit distance of the names is determined by the ldistance operator and required to lie between 1 and 2. The symmjoin operator is a symmetric variant of a nested loop join. The filter condition after the symmjoin avoids reporting the same pair twice.

Here the union of all relations assigned to worker j is redistributed according to function h. See Fig. 4. The variant partitionF allows one to apply an additional mapping to the argument relations before repartitioning. It has the following signature:

The definition of the function is a slight extension to the one for \(f_\mathbf{partition} \) and is omitted.

This operator collects columns of a distributed matrix into a distributed file array, assigning slots round robin. The variant collectB assigns slots to workers, balancing slot sizes. For it the value of function g is not defined as it depends on the algorithm for balancing slot sizes which is not specified here. Together, partition and collect2 or collectB realize a repartitioning of a distributed relation. See Fig. 5.

Example 4

“Find all pairs of distinct roads with the same name.”

Assuming that roads are partitioned spatially, we need to repartition by names before executing the join.

Here after repartitioning, the self-join can be performed locally for each slot. Assuming the result is relatively small, it is collected on the master by dsummarize.

Semantically, areduce is the same as collect2 followed by a dmap. In collecting the columns from the different servers (workers), a function is applied. The reason to have a separate operator and, indeed, the \(\underline{\smash { dfmatrix }}\) type as an intermediate result, is the adaptive implementation of areduce. Since the data of a column of the \(\underline{\smash { dfmatrix }}\) need to be copied between computers anyway, it is possible to assign any free worker to do that at no extra cost. Similar as for collectB, the value of function g is not defined for areduce as the assignment of slots to workers cannot be predicted.

Example 5

The previous query written with areduce is:

Here within partitionF “.” refers to the relation and “..” refers to the tuple argument in the first and second argument function, respectively.

The binary variant areduce2 has signature:

The formal definition of semantics is similar to areduce and is omitted.

4.3.3 Operations for distributing data to the workers

The operators ddistribute2, ddistribute3, and ddistribute4 and their dfdistribute variants distribute data from a tuple stream on the master into the fields of a distributed array.Footnote 5 We define the first and second of these operators which distribute by an attribute value and randomlyFootnote 6, respectively. Operator ddistribute4 distributes by a function on the tuple which is similar to ddistribute2.

Let WR denote the set of possible worker relations (of a relation type). For a tuple type \(\underline{\smash { tuple }}(\alpha )\) let \( attr (\alpha , \beta )\) denote the name of an attribute of type \(\beta \). Such an attribute a represents a function \( attr _a\) on a tuple t so that \( attr _a(t)\) is a value of type \(\beta \).

In the following, we use the notation \(<s_1, ..., s_n | f(s_i)>\) to restrict a sequence to the elements \(s_i\) for which \(f(s_i) = true \). Functions \(f(s_i)\) are written in \(\lambda x. expr(x) \) notation.

Hence the attribute a determines the slot that the tuple is assigned to. Note that all ddistribute operators maintain the order of the input stream within the slots.

The operator distributes tuples of the input stream either round robin to the n slots of a distributed array, or by sequentially filling each slot except the last one with n elements, depending on parameter b.

Example 6

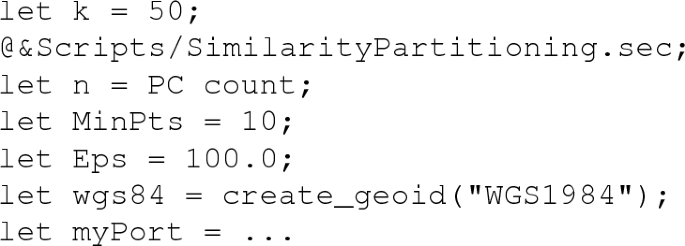

We distribute the Buildings relation round robin into 50 fields of a distributed array. A relation Workers is present in the database.

Example 7

We create a grid-based spatial partitioning of the relations Roads and Waterways.

The distribution is based on a regular grid as shown in Fig. 8. A spatial object is assigned to all cells intersecting its bounding box. The cellnumber operator returns a stream of integers, the numbers of grid cells intersecting the first argument, a rectangle. The extendstream operator makes a copy of the input tuple for each such value, extending it by an attribute Cell with this value. So we get a copy of each road tuple for each cell it intersects. The cell number is then used for distribution.

In some queries on the distributed Roads relation we want to avoid duplicate results. For this purpose, the tuple with the first cell number is designated as original. See Example 3.

The relation Waterways is distributed in the same way. So RoadsD and WaterwaysD are spatially co-partitioned, suitable for spatial join (Example 2).

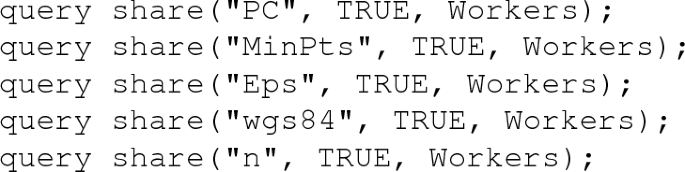

The following two operators serve to have the same objects available in the master and worker databases. Operator share copies an object from the master to the worker databases whereas dlet creates an object on master and workers by a query function.

The Boolean parameter specifies whether an object already present in the worker database should be overwritten. WR defines the set of worker databases.

The semantics for such operators can be defined as follows. These are operations affecting the master and the worker databases, denoted as M and \(D_1, ..., D_m\), respectively. A database is a set of named objects where each name n is associated with a value of some type, hence a named object has structure (n, (t, v)) where t is the type and v the value. A query is a function on a database returning such a pair. Technically, an object name is represented as a \(\underline{\smash { string }}\) and a query as a \(\underline{\smash { text }}\).

We define the mapping of databases denoted \(\delta \). Let (n, o) be an object in the master database.

Example 8

Operator share is in particular needed to make objects referred to in queries available to all workers.

“Determine the number of buildings in Eichlinghofen.” This is a suburb of Dortmund, Germany, given as a \(\underline{\smash { region }}\) value eichlinghofen on the master.

Note that a database object mentioned in a parameter function (query) of dmap must be present in the master database, because the function is type checked on the master. It must be present in the worker databases as well because these functions are sent to workers and type checked and evaluated there.

Whereas persistent database objects can be copied from master to worker databases, this is not possible for main memory objects used in query processing. Again, such objects must exist on the master and on the workers because type checking is done in both environments. This is exactly the reason to introduce the following dlet operator.

The dlet operator creates a new object by a query simultaneously on the master and in each worker database. The \(\underline{\smash { darray }}\) argument serves to specify the relevant set of workers. The operator returns a stream of tuples reporting success or failure of the operation for the master and each worker. Let n be the name and \(\mu \) the query argument.

An example for dlet is given in Sect. 6.5.

The dcommand operator lets an arbitrary command be executed by each worker. The command is given as a \(\underline{\smash { text }}\) argument. The \(\underline{\smash { darray }}\) argument defines the set of workers. The result stream is like the one for dlet.

Example 9

To configure for each worker how much space can be used for main memory data structures, the following command can be used:

4.3.4 Operations for collecting data from workers

The operator dsummarize can be used to make a distributed array available as a stream of tuples or values on the master whereas getValue transforms a distributed into a local array.

The operator is overloaded. For the two signatures, the semantics definitions are:

The operator getValueP allows one to transform a partial distributed array into a complete local array on the master.

Finally, the tie operator of the basic engine is useful to aggregate the fields of a local array.

Example 10

Let X be an array of integers. Then

computes their sum. Here “.” and “..” denote the two arguments of the parameter function which could also be written as

Further, examples 2 and 4 demonstrate the use of these operators.

4.4 Final remarks on the distributed algebra

Whereas the algebra has operations to distribute data from the master to the workers, this is not the only way to create a distributed database. For huge databases, this would not be feasible, the master being a bottleneck. Instead, it is possible to create a distributed array “bottom-up” by assembling data already present on the worker computers. They may have got there by file transfer or by use of a distributed file system such as HDFS [45]. One can then create a distributed array by a dmap operation that creates each slot value by reading from a file present on the worker computer. Further, it is possible to create relations (or any kind of object) in the worker databases, again controlled by dmap operations, and then to collect these relations into the slots of a distributed array created on top of them. This is provided by an operation called createDarray, omitted here for conciseness. Examples can be found in [23, 51].

Note that any algorithm that can be specified in the MapReduce framework can easily be transferred to Distributed Algebra, as map steps can be implemented by dmap, shuffling between map and reduce stage is provided by partition and collect or areduce operations, and reduce steps can again be implemented by dmap (or areduce) operations.

An important feature of the algebra design is that the number of slots of a distributed array may be chosen independently from the number of workers. This allows one to assign different numbers of slots to each worker and so to compensate for uneven partitioning or more generally to balance work load over workers, as it is done in operators collectB, areduce, and areduce2.

5 Implementation

5.1 Implementing an algebra in Secondo

To implement a new algebra, data types and operators working on it must be provided. For the data types, a C++ class describing the type’s structure and some functions for the interaction with Secondo must be provided. In the context of this article, the Secondo supporting functions are less important. They can be found e.g., in the Secondo Programmer’s Guide [25].

An operator implementation consists of several parts. The two most important ones are the type mapping and the value mapping. Other parts provide a description for the user or select different value mapping implementations for different argument types.

The main task of the type mapping is to check whether the operator can handle the provided argument types and to compute the resulting type. Optionally further arguments can be appended. This may be useful for default arguments or to transfer information that is available in the type mapping only to the value mapping part.

Within the value mapping, the operator’s functionality is implemented, in particular the result value is computed from the operator’s arguments.

5.2 Structure of the main types

All information about the subtypes, i.e. the types stored in the single slots, is handled by the Secondo framework and hence not part of the classes representing the distributed array types.

The array classes of the Distributed2Algebra consist of a label (string), a defined flag (bool), and connection information (vector). Furthermore, an additional vector holds the mapping from the slots to the workers. The label is used to name objects or files on the workers. An object corresponding to slot X of a distributed array labeled with myarray is stored as \(myarray\_X\). The defined flag is used in case of errors. The connection information corresponds to the schema of the worker relation that is used during the distribution of a tuple stream. In particular, each entry in this vector consists of the name of the server, the server’s port, an integer corresponding to the position of the entry within the worker relation, and the name of a configuration file. This information is collected in a vector of DArrayElement.

The partial distributed arrays (arrays of type \(\underline{\smash { pdarray }}\) or \(\underline{\smash { pdfarray }}\)) have an additional member of type \(\texttt {set<int>}\) storing the set of used slot numbers.

The structure of a \(\underline{\smash { dfmatrix }}\) is quite similar to the distributed array types. Only the mapping from the slots to the workers is omitted. Instead the number of slots is stored.

5.3 Class hierarchy of array classes

Figure 9 shows a simplified class diagram of the array classes provided by the Distributed2Algebra.

Note that the non-framed parts are not really classes but type definitions only, e.g., the definition of the \(\underline{\smash { darray }}\) type is just \(\texttt {typedef DArrayT<DARRAY> DArray;}\).

5.4 Worker connections

The connections the to workers are realized by a class ConnectionInfo. This class basically encapsulates a client interface to a Secondo server and provides thread-safe access to this server. Furthermore, this class supports command logging and contains some functions for convenience, e.g., a function to send a relation to the connected server.

Instances of this class are part of the Distributed2Algebra instance. If a connection is requested by some operation, an existing one is returned. If no connection is available for the specified worker, a connection is established and inserted into the set of existing connections. Connections will be held until closing is explicitly requested or the master is finished. This avoids the time consuming start of a new worker connection.

5.5 Distribution of data

All distribution variants follow the same principle. Firstly the incoming tuple stream is distributed to local files on the master according to the distribution function of the operator. Each file contains a relation in a binary representation. The number of created files corresponds to the number of slots of the resulting array. After that, these files are copied to the workers in parallel over the worker connections. If the result of the operation is a \(\underline{\smash { darray }}\), the binary file on the worker is imported into the database as a database object by sending a command to the worker. Finally, intermediate files are removed. In case of the failure of a worker, another worker is selected adapting the slot\(\rightarrow \)worker mapping of the resulting distributed array.

5.6 The dmap family

Each variant of the dmap Operator gets one or more distributed arrays, a name for the result’s label, a function, and a port number. The last argument is omitted for the simple dmap operator. As described above, the implementation of an operator consists of several parts where the type mapping and the value mapping are the most interesting ones. By setting a special flag of the operator (calling the SetUsesArgsInTypeMapping function), the type mapping is fed not only with the argument’s types but additionally with the part of the query that leads to this argument. Both parts are provided as a nested list. It is checked whether the types are correct. The query part is only exploited for the function argument. It is slightly modified and delivered in form of a text to the value mapping of the operator.

Within the value mapping it is checked whether the slot-worker-assignment is equal for each argument array. If not, the slot contents are transferred between the workers to ensure the existence of corresponding slots on a single worker. In this process, workers communicate directly with each other. The master acts as a coordinator only. For the communication, the port given as the last argument to the dmapX operator is used. Note, that copying the slot contents is available for distributed file arrays only but not for the \(\underline{\smash { darray }}\) type.

For each slot of the result, a Secondo command is created mainly consisting of the function determined by the type mapping applied to the current slot object(s). If the result type is a \(\underline{\smash { darray }}\), a let command is created, a query creating a relation within a binary file otherwise. This command is sent to the corresponding worker. Each slot is processed within a single thread to enable parallel processing. Synchronization of different slots on the same worker is realized within the ConnectionInfo class.

At the end, any intermediate files are deleted.

5.7 Redistribution

Redistribution of data is realized as a combination of the partition operator followed by collect2 or areduce.

The partition operator distributes each slot on a single worker to a set of files. The principle is very similar to the first part of the ddistribute variants, where the incoming tuple stream is distributed to local files on the master. Here, the tuples of all slots on this worker are collected into a common tuple stream and redistributed to local files on this worker according to the distribution function.

At the beginning of the collect2 operator, on each worker a lightweight server is started that is used for file transfer between the workers. After this phase, for each slot of the result \(\underline{\smash { darray }}\), a thread is created. This thread collects all files associated to this slot from all other workers. The contents of these files are put into a tuple stream, that is either collected into a relation or into a single file.

The areduce operator works as a combination of collect2 and dmap. The a in the operator name stands for adaptive, meaning that the number of slots processed by a worker depends on its speed. This is realized in the following way. Instead for each slot, for each worker a thread is created performing the collect2-dmap functionality. At the end of a thread, a callback function is used to signal this state. The worker that called the function is assigned to process the next unprocessed slot.

5.8 Fault tolerance

Inherent to parallel systems is the possibility of the failure of single parts. The Distributed2Algebra provides some basic mechanisms to handle missing workers. Of course this is possible only if the required data are stored not exclusively at those workers. Conditioned by the two array types, the system must be able to handle files and database objects, i.e., relations. In particular, a redundant storage and a distributed access are required.

In Secondo there are already two algebras implementing these features. The DBService algebra is able to store relations as well as any dependent indexes in a redundant way on several servers. For a redundant storage of files, the functions of the DFSAlgebra are used. If fault tolerance is switched on, created files and relations are stored at the desired worker and additionally given to the corresponding parts of these algebras for replicated storage. In the case of failure of a worker, the created command is sent to another worker and the slot-worker assignment is adapted. In the case the slot content is not available, a worker will get the input from the DFS and the DBService, respectively.

However, at the time of writing fault tolerance does not yet work in a robust way in Secondo and is still under development. It is also beyond the scope of this paper.

6 Application example: distributed density-based similarity clustering

In this section, we demonstrate how a fairly sophisticated distributed algorithm can be formulated in the framework of the Distributed Algebra. As an example, we consider the problem of density-based clustering as introduced by the classical DBScan algorithm [15]. Whereas the original DBScan algorithm was applied to points in the plane, we consider arbitrary objects together with a distance (similarity) function. Hence the algorithm we propose can be applied to points in the plane, using Euclidean distance as similarity function, but also to sets of images, twitter messages, or sets of trajectories of moving objects with their respective application-specific similarity functions.

6.1 Clustering

Let S be a set of objects with distance function d. The distance must be zero for two equal objects; it grows according to the dissimilarity between objects.

We recall the basic notions of density-based clustering. It uses two parameters MinPts and Eps. An object s from S is called a core object if there are at least MinPts elements of S within distance Eps from s, that is, \(|N_{Eps}(s)| \ge MinPts\) where \(N_{Eps}(s) = \{t \in S | d(s, t) \le Eps\}\). It is called a border object if it is not a core object but within distance Eps of a core object.

An object p is directly density-reachable from an object q if q is a core object and \(p \in N_{Eps}(q)\). It is density-reachable from q if there is a chain of objects \(p_1, p_2, ..., p_n\) where \(p_1 = q\), \(p_n = p\) and \(\forall 1 \le i < n:\) \(p_{i+1}\) is directly density-reachable from \(p_i\). Two objects p, r are density-connected, if there exists an object q such that both p and r are density-reachable from q. A cluster is a maximal set of objects that are pairwise density-connected. All objects not belonging to any cluster are classified as noise.

6.2 Overview of the algorithm

A rough description of the algorithm is as follows.

-

1.

Compute a small subset of S (say, a few hundred elements) as so-called partition centers.

-

2.

Assign each element of S to its closest partition center. In this way, S is decomposed into disjoint partitions. In addition, assign some elements of S not only to the closest partition center but also to partition centers a bit farther away than the closest one. The resulting subsets are not disjoint any more but overlap at the boundaries. Within each subset we can distinguish members of the partition and so-called neighbors.

-

3.

Use a single machine DBScan implementation to compute clusters within each partition. Due to the neighbors available within the subsets, all elements of S can be correctly classified as core, border, or noise objects.

-

4.

Merge clusters that extend across partition boundaries and assign border elements to clusters of a neighbor partition where appropriate.

In Step 2, the problem arises how to determine the neighbors of a partition. See Fig. 10a. Here u and v are partition centers; the blue objects are closest to u, the red objects are closest to v; the diagonal line represents equi-distance between u and v. When partition P(u) is processed in Step 3 by a DBScan algorithm, object s needs to be classified as a core or border object. To do this correctly, it is necessary to find object t within a circle of radius Eps around s. But t belongs to partition P(v). It is therefore necessary to include t as a neighbor into the set \(P'(u)\), the extension of partition P(u).

Hence we need to add elements of P(v) to \(P'(u)\) that can lie within distance Eps from some object of P(u). Theorem 1 says that such objects can lie only \(2 \cdot Eps\) further away from u than from their own partition center v. The proof is illustrated in Fig. 10b.

Theorem 1

Let \(s, t \in S\) and \(T \subset S\). Let \(u, v \in T\) be the elements of T with minimal distance to s and t, respectively. Then \(t \in N_{Eps}(s) \Rightarrow d(u, t) \le d(v, t) + 2 \cdot Eps\).

Proof

\(t \in N_{Eps}(s)\) implies \(s \in N_{Eps}(t)\). Let x be a location within \(N_{Eps}(t)\) with equal distance to u and v, that is, \(d(u, x) = d(v, x)\). Such locations must exist, because s is closer to u and t is closer to v. Then \(d(v, x) \le d(v, t) + Eps\). Further, \(d(u, t) \le d(u, x) + Eps = d(v, x) + Eps \le d(v, t) + Eps + Eps = d(v, t) + 2 \cdot Eps\). \(\square \)

Hence to set up the relevant set of neighbors for each partition, we can include an object t into all partitions whose centers are within distance \(d_t + 2 \cdot Eps\), where \(d_t\) is the distance to the partition center closest to t.

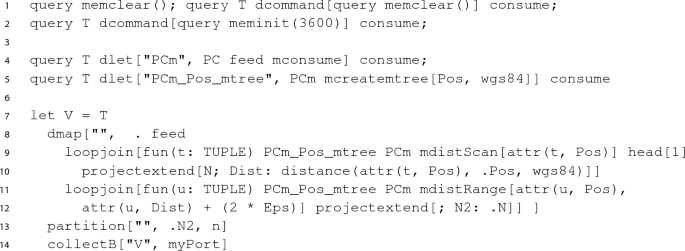

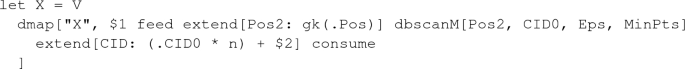

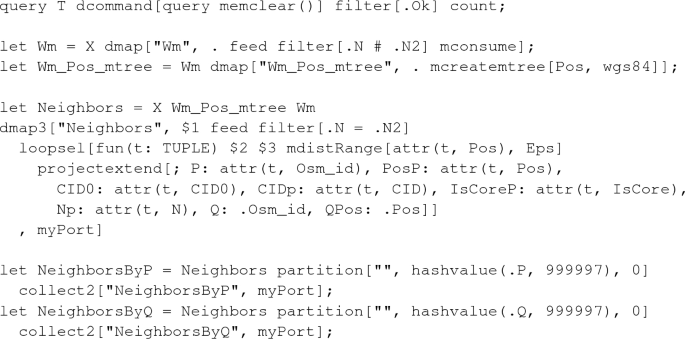

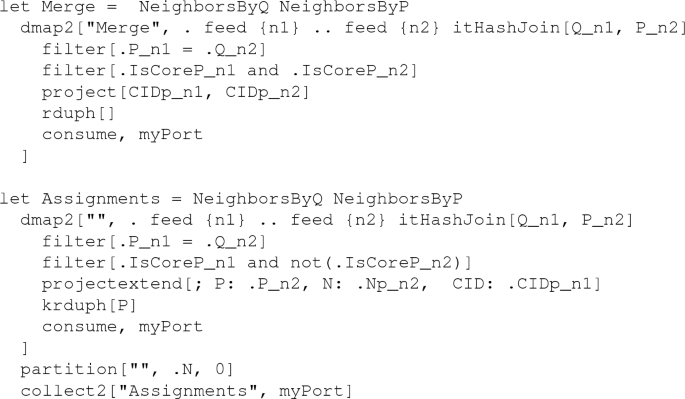

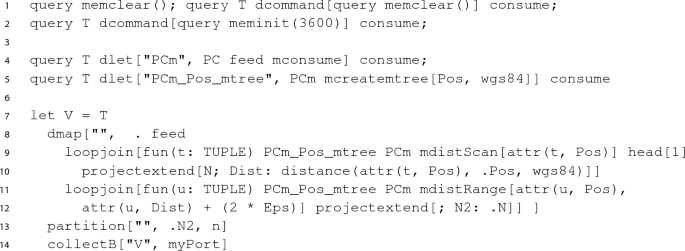

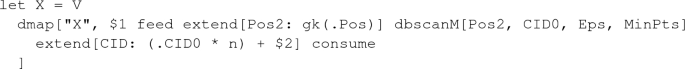

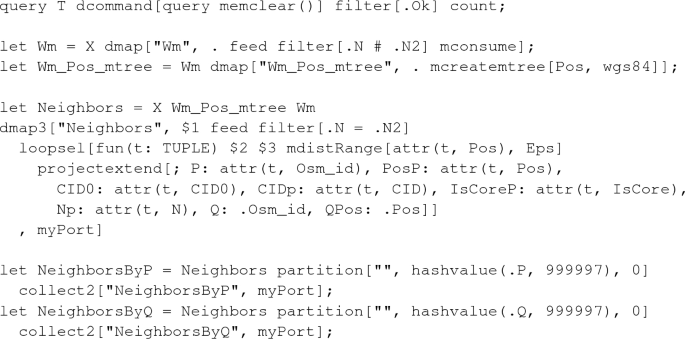

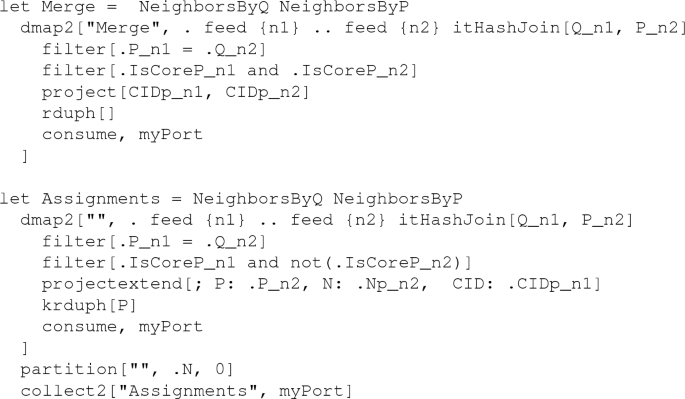

6.3 The algorithm

In more detail, the main algorithm consists of the following steps. Steps are marked as M if they are executed on the master, MW if they describe interaction between master and workers, and W if they are executed by the workers.

Initially, we assume the set S is present in the form of a distributed array T where elements of S have been assigned to fields in a random manner, but equally distributed (e.g., round robin).

As a result of the algorithm, all elements are assigned a cluster number or they are designated as noise.

-

1.

MW Collect a sample subset \(SS \subset S\) from array T to the master, to be used in the following step.

-

2.

M Based on SS, compute a subset \(PC \subset S\) as partition centers using algorithm SimilarityPartitioning (Sect. 6.6). Let \(PC = \{pc_1, ..., pc_n\}\). Subsequently, S will be partitioned in such a way that each object is assigned to its closest partition center.

-

3.

MW Share PC and some constant values with workers.

-

4.