Abstract

We present a connection between two dynamical systems arising in entirely different contexts: the Iteratively Reweighted Least Squares (IRLS) algorithm used in compressed sensing and sparse recovery to find a minimum \(\ell _1\)-norm solution in an affine space, and the dynamics of a slime mold (Physarum polycephalum) that finds the shortest path in a maze. We elucidate this connection by presenting a new dynamical system – Meta-Algorithm – and showing that the IRLS algorithms and the slime mold dynamics can both be obtained by specializing it to disjoint sets of variables. Subsequently, and building on work on slime mold dynamics for finding shortest paths, we prove convergence and obtain complexity bounds for the Meta-Algorithm that can be viewed as a “damped” version of the IRLS algorithm. A consequence of this latter result is a slime mold dynamics to solve the undirected transshipment problem that computes a \((1+\varepsilon )-\)approximate solution in time polynomial in the size of the input graph, maximum edge cost, and \(\frac{1}{\varepsilon }\) – a problem that was left open by the work of (Bonifaci V et al. [10] Physarum can compute shortest paths. Kyoto, Japan, pp. 233–240).

Similar content being viewed by others

Notes

We assume that the matrix A has rank n, i.e., all of its rows are linearly independent. Every linear system can be efficiently brought into this form by removing certain equations.

Note that if for some i and some \(k\in {\mathbb {N}}\) it happens that \(z_i^{(k)}=0\) then the transition to \(z^{(k+1)}\) is not well defined. In such a case the ith coordinate is ignored and \(z_i^{(k+1)}\) is set to 0. See Remark 1 for more details.

We remark that Laplacian solvers output only approximate solutions, hence one would need to prove an equivalent of our results where the q vector is computed only approximately. The details of such a proof are long and not very enlightening. The reader is referred to the paper of Daitch and Spielman [23], where such an analysis has been carried out (see also [6, 50]).

References

Adil, D., Kyng, R., Peng, R., Sachdeva, S.: Iterative refinement for \(\ell _p\)-norm regression. In: Proceedings of the Thirtieth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2019, San Diego, California, USA, January 6-9, 2019, pp. 1405–1424 (2019). https://doi.org/10.1137/1.9781611975482.86

Adil, D., Peng, R., Sachdeva, S.: Fast, provably convergent IRLS algorithm for p-norm linear regression. In: H.M. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché-Buc, E.B. Fox, R. Garnett (eds.) Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, 8-14 December 2019, Vancouver, BC, Canada, pp. 14166–14177 (2019). URL http://papers.nips.cc/paper/9565-fast-provably-convergent-irls-algorithm-for-p-norm-linear-regression

Afek, Y., Alon, N., Barad, O., Hornstein, E., Barkai, N., Bar-Joseph, Z.: A biological solution to a fundamental distributed computing problem. Science 331(6014), 183–185 (2011). https://doi.org/10.1126/science.1193210. URL http://science.sciencemag.org/content/331/6014/183

Ba, D.E., Babadi, B., Purdon, P.L., Brown, E.N.: Convergence and stability of iteratively re-weighted least squares algorithms. IEEE Trans. Signal Process. 62(1), 183–195 (2014). https://doi.org/10.1109/TSP.2013.2287685

Becchetti, L., Bonifaci, V., Dirnberger, M., Karrenbauer, A., Mehlhorn, K.: Physarum can compute shortest paths: Convergence proofs and complexity bounds. In: Automata, Languages, and Programming - 40th International Colloquium, ICALP 2013, Riga, Latvia, July 8-12, 2013, Proceedings, Part II, pp. 472–483 (2013)

Becchetti, L., Bonifaci, V., Dirnberger, M., Karrenbauer, A., Mehlhorn, K.: Physarum can compute shortest paths: Convergence proofs and complexity bounds. In: Full version (2014)

Beck, A.: On the convergence of alternating minimization for convex programming with applications to iteratively reweighted least squares and decomposition schemes. SIAM J. Opt. 25(1), 185–209 (2015)

Becker, R., Bonifaci, V., Karrenbauer, A., Kolev, P., Mehlhorn, K.: Two results on slime mold computations. Theor. Comput. Sci. 773, 79–106 (2019)

Bissantz, N., Dümbgen, L., Munk, A., Stratmann, B.: Convergence analysis of generalized iteratively reweighted least squares algorithms on convex function spaces. SIAM J. Opt. 19(4), 1828–1845 (2009). https://doi.org/10.1137/050639132

Bonifaci, V., Mehlhorn, K., Varma, G.: Physarum can compute shortest paths. In: Proceedings of the Twenty-Third Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2012, Kyoto, Japan, January 17-19, 2012, pp. 233–240 (2012)

Brimberg, J., Love, R.F.: Global convergence of a generalized iterative procedure for the minisum location problem with lp distances. Oper. Res. 41(6), 1153–1163 (1993). https://doi.org/10.1287/opre.41.6.1153

Bubeck, S., Cohen, M.B., Lee, Y.T., Li, Y.: An homotopy method for \(\ell _p\) regression provably beyond self-concordance and in input-sparsity time. In: Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2018, Los Angeles, CA, USA, June 25-29, 2018, pp. 1130–1137 (2018). https://doi.org/10.1145/3188745.3188776

Burrus, C.: Iterative reweighted least squares (2012). URL https://cnx.org/contents/krkDdys0@12/Iterative-Reweighted-Least-Squares

Burrus, C.S., Barreto, J.A., Selesnick, I.W.: Iterative reweighted least-squares design of FIR filters. IEEE Trans. Signal Process. 42(11), 2926–2936 (1994). https://doi.org/10.1109/78.330353

Candes, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theor. 52(2), 489–509 (2006). https://doi.org/10.1109/TIT.2005.862083

Candès, E., Tao, T.: Decoding by linear programming. Inf. Theor., IEEE Trans. 51(12), 4203–4215 (2005)

Cardelli, L., Csikász-Nagy, A.: The cell cycle switch computes approximate majority. Sci. Rep. 2, 656 (2012). https://doi.org/10.1038/srep00656

Chartrand, R., Yin, W.: Iteratively reweighted algorithms for compressive sensing. In: Acoustics, Speech and Signal Processing, 2008. ICASSP 2008. IEEE International Conference on, pp. 3869–3872 (2008)

Chastain, E., Livnat, A., Papadimitriou, C., Vazirani, U.: Algorithms, games, and evolution. Proceedings of the National Academy of Sciences 111(29), 10620–10623 (2014). https://doi.org/10.1073/pnas.1406556111. URL http://www.pnas.org/content/111/29/10620.abstract

Chazelle, B.: Natural algorithms and influence systems. Commun. ACM 55(12), 101–110 (2012). https://doi.org/10.1145/2380656.2380679

Chen, C., He, L., Li, H., Huang, J.: Fast iteratively reweighted least squares algorithms for analysis-based sparse reconstruction. Med. Image Analyt. 49, 141–152 (2018). https://doi.org/10.1016/j.media.2018.08.002

Cook, W., Cunningham, W., Pulleyblank, W., Schrijver, A.: Comb. opt. wiley, New York (1998)

Daitch, S.I., Spielman, D.A.: Faster approximate lossy generalized flow via interior point algorithms. In: C. Dwork (ed.) Proceedings of the 40th Annual ACM Symposium on Theory of Computing, Victoria, British Columbia, Canada, May 17-20, 2008, pp. 451–460. ACM (2008). https://doi.org/10.1145/1374376.1374441

Daubechies, I., DeVore, R., Fornasier, M., Güntürk, C.S.: Iteratively reweighted least squares minimization for sparse recovery. Commun. Pure Appl. Math. 63(1), 1–38 (2010)

Dong, H., Yang, L.: Iteratively reweighted least squares for robust regression via SVM and ELM. CoRR abs/1903.11202 (2019). URL http://arxiv.org/abs/1903.11202

Donoho, D.L., Elad, M.: Optimally sparse representation in general (nonorthogonal) dictionaries via \(\ell _1\) minimization. Proceedings of the National Academy of Sciences 100(5), 2197–2202 (2003). https://doi.org/10.1073/pnas.0437847100. URL http://www.pnas.org/content/100/5/2197.abstract

Donoho, D.L., Huo, X.: Uncertainty principles and ideal atomic decomposition. IEEE Trans. Inf. Theor. 47(7), 2845–2862 (2001). https://doi.org/10.1109/18.959265

Eiben, A.E., Smith, J.: From evolutionary computation to the evolution of things. Nature 521(7553), 476–482 (2015)

Ene, A., Vladu, A.: Improved convergence for \(\ell _1\) and \(\ell _\infty \) regression via iteratively reweighted least squares. In: Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA, pp. 1794–1801 (2019). URL http://proceedings.mlr.press/v97/ene19a.html

Even, S., Tarjan, R.E.: Network flow and testing graph connectivity. SIAM J. Comput. 4(4), 507–518 (1975). https://doi.org/10.1137/0204043

Facca, E., Cardin, F., Putti, M.: Towards a stationary monge-kantorovich dynamics: The physarum polycephalum experience. SIAM J. Appl. Math. 78(2), 651–676 (2018)

Facca, E., Karrenbauer, A., Kolev, P., Mehlhorn, K.: Convergence of the non-uniform directed physarum model. Theor. Comput. Sci. 816, 184–194 (2020). https://doi.org/10.1016/j.tcs.2020.01.034

Ford, L., Fulkerson, D.: Maximal flow through a network. Canad. J. Math. 8, 399–404 (1956)

Goldberg, A.V., Rao, S.: Beyond the flow decomposition barrier. J. ACM 45(5), 783–797 (1998). https://doi.org/10.1145/290179.290181

Gordon, D.M.: Ant Encounters: Interaction Networks and Colony Behavior. Primers in Complex Systems. Princeton University Press (2010). URL https://books.google.ch/books?id=MabwdXLZ9YMC

Gorodnitsky, I., Rao, B.: Sparse signal reconstruction from limited data using focuss: A re-weighted minimum norm algorithm. Trans. Signal Proc. 45(3), 600–616 (1997). https://doi.org/10.1109/78.558475

Green, P.: Iteratively reweighted least squares for maximum likelihood estimation, and some robust and resistant alternatives (with discussion). J. Royal Statist. Soc., Series B: Methodol. 46, 149–192 (1984)

Hopcroft, J.E., Karp, R.M.: An \(n^{5/2}\) algorithm for maximum matchings in bipartite graphs. SIAM J. comput. 2(4), 225–231 (1973)

Johannson, A., Zou, J.: A slime mold solver for linear programming problems. In: How the World Computes. Lecture Notes in Computer Science, vol. 7318, pp. 344–354. Springer, Berlin Heidelberg (2012)

Karam, L.J., McClellan, J.H.: Complex chebyshev approximation for fir filter design. IEEE Trans. Circuits Syst. II: Anal. Digit. Signal Process. 42(3), 207–216 (1995)

Karlovitz, L.: Construction of nearest points in the \(l^p\), \(p\) even, and \(l^\infty \) norms. i. J. Approx. Theor. 3(2), 123–127 (1970)

Karrenbauer, A., Kolev, P., Mehlhorn, K.: Convergence of the non-uniform physarum dynamics. Theor. Comput. Sci. 816, 260–269 (2020). https://doi.org/10.1016/j.tcs.2020.02.032

Lecun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015). https://doi.org/10.1038/nature14539

Lee, Y.T., Sidford, A.: Path finding methods for linear programming: Solving linear programs in \(O(\sqrt{rank})\) iterations and faster algorithms for maximum flow. In: 55th IEEE Annual Symposium on Foundations of Computer Science, FOCS 2014, Philadelphia, PA, USA, October 18-21, 2014, pp. 424–433 (2014). https://doi.org/10.1109/FOCS.2014.52

Miyaji, T., Ohnishi, I.: Physarum can solve the shortest path problem on riemannian surface mathematically rigourously. Int. J. Pure Appl. Matt. 47(3), 353–369 (2008)

Mukhoty, B., Gopakumar, G., Jain, P., Kar, P.: Globally-convergent iteratively reweighted least squares for robust regression problems. In: K. Chaudhuri, M. Sugiyama (eds.) Proceedings of Machine Learning Research, Proceedings of Machine Learning Research, vol. 89, pp. 313–322. PMLR (2019). URL http://proceedings.mlr.press/v89/mukhoty19a.html

Nakagaki, T., Yamada, H., Toth, A.: Maze-solving by an amoeboid organism. Nature 407(6803), 470 (2000)

Nesterov, Y., Nemirovskii, A.: Interior-point polynomial algorithms in convex programming, vol. 13. Society for Industrial and Applied Mathematics, (1994)

Olver, N., Végh, L.A.: A simpler and faster strongly polynomial algorithm for generalized flow maximization. In: Proceedings of the 49th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2017, Montreal, QC, Canada, June 19-23, 2017, pp. 100–111 (2017). https://doi.org/10.1145/3055399.3055439

Orecchia, L., Sachdeva, S., Vishnoi, N.K.: Approximating the exponential, the lanczos method and an õ(m)-time spectral algorithm for balanced separator. In: H.J. Karloff, T. Pitassi (eds.) Proceedings of the 44th Symposium on Theory of Computing Conference, STOC 2012, New York, NY, USA, May 19 - 22, 2012, pp. 1141–1160. ACM (2012). https://doi.org/10.1145/2213977.2214080

Osborne, M.R.: Finite Algorithms in Optimization and Data Analysis. John Wiley & Sons Inc, New York, NY, USA (1985)

Perko, L.: Differential equations and dynamical systems, 3rd edn. Springer Science & Business Media, Berlin (2000)

Rao, B.D., Kreutz-Delgado, K.: An affine scaling methodology for best basis selection. IEEE Trans. Signal Process. 47(1), 187–200 (1999). https://doi.org/10.1109/78.738251

Sherman, J.: Nearly maximum flows in nearly linear time. In: 54th Annual IEEE Symposium on Foundations of Computer Science, FOCS 2013, 26-29 October, 2013, Berkeley, CA, USA, pp. 263–269 (2013). https://doi.org/10.1109/FOCS.2013.36

Spielman, D.A.: Algorithms, graph theory, and the solution of laplacian linear equations. ICALP 2, 24–26 (2012)

Spielman, D.A., Teng, S.: Nearly-linear time algorithms for graph partitioning, graph sparsification, and solving linear systems. In: Proceedings of the 36th Annual ACM Symposium on Theory of Computing, Chicago, IL, USA, June 13-16, 2004, pp. 81–90 (2004). https://doi.org/10.1145/1007352.1007372

Stonick, V.L., Alexander, S.T.: A relationship between the recursive least squares update and homotopy continuation methods. IEEE Trans. Signal Process. 39(2), 530–532 (1991). https://doi.org/10.1109/78.80849

Straszak, D., Vishnoi, N.K.: On a natural dynamics for linear programming. In: ACM Innovations in Theoretical Computer Science (2016)

Straszak, D., Vishnoi, N.K.: IRLS and slime mold: equivalence and convergence. CoRR. arXiv:1601.02712 (2016)

Straszak, D., Vishnoi, N.K.: Natural algorithms for flow problems. In: Proceedings of the Twenty-Seventh Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2016, Arlington, VA, USA, January 10–12, 2016, pp. 1868–1883. https://doi.org/10.1137/1.9781611974331.ch131 (2016)

Teng, S.H.: The Laplacian paradigm: Emerging algorithms for massive graphs. In: TAMC, pp. 2–14 (2010)

Tero, A., Kobayashi, R., Nakagaki, T.: A mathematical model for adaptive transport network in path finding by true slime mold. J. Theor. Biol. 244(4), 553 (2007)

Valiant, L.G.: Evolvability. J. ACM 56(1), 3:1–3:21 (2009). https://doi.org/10.1145/1462153.1462156

Végh, L.A.: A strongly polynomial algorithm for a class of minimum-cost flow problems with separable convex objectives. SIAM J. Comput. 45(5), 1729–1761 (2016). https://doi.org/10.1137/140978296

Vishnoi, N.K.: \({L}x=b\). Foundat. Trends Theor. Comput. Sci. 8(1–2), 1–141 (2012)

Vishnoi, N.K.: The speed of evolution. In: Proceedings of the Twenty-sixth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA ’15, pp. 1590–1601. Society for Industrial and Applied Mathematics, Philadelphia, PA, USA (2015). URL http://dl.acm.org/citation.cfm?id=2722129.2722234

Wright, S.: Primal-Dual Interior-Point Methods. Society for Industrial and Applied Mathematics (1997)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Based on IRLS and Slime Mold: Equivalence and Convergence [59] by the same set of authors.

Appendices

A connection between physarum dynamics and IRLS

In this section we present a proof of Theorem 1. The proof has two parts that are established in the two subsequent subsections. While the first is rather trivial, the second takes some effort as it requires establishing several technical facts about the Physarum dynamics.

1.1 A.1 IRLS as the Meta-Algorithm for \(h=1\)

Proof of Theorem 1, Part 1

When \(h=1\), the Meta-Algorithm proceeds as follows:

where \(q^{(k)}=q(w^{(k)})\). Therefore, at each step, \(w^{(k)}=\left| y^{(k)} \right| \). In particular, the dynamics is guided only by the y variables and is given by \(y^{(k+1)} = q(|y^{(k)}|)\). This is exactly the same update rule as IRLS whose iterations correspond to \(z^{(k+1)}=q(|z^{(k)}|)\). \(\square \)

1.2 A.2 Physarum Dynamics as the limiting case (\(h \rightarrow 0\)) of the Meta-Algorithm

Fix an instance of the basis pursuit problem: \(A\in {\mathbb {R}}^{n\times m}\) and \(b\in {\mathbb {R}}^n\). Note that by Fact 1, the vector \(\varphi (t)\) defined in (4) can be equivalently characterized as \(q(\sigma (t))\). Let \(G(\sigma )=|q(\sigma )|-\sigma \) so that the Physarum dynamics can then be written compactly as

In this section, we use the \(\ell _2\)-norm to measure lengths of vectors and, hence, \(\left\Vert \sigma \right\Vert \) should be understood as \(\sqrt{\sum _{i=1}^{m} \sigma _i^2}\). We now state some technical lemmas whose proofs appear in A.3.

Lemma 7

(Properties of Physarum trajectories) Let \(T\in {\mathbb {R}}_{>0}\) and \(\sigma :[0,T) \rightarrow {\mathbb {R}}^{m}_{>0}\) be any solution to the Physarum dynamics (13).

-

1.

For every \(t\in [0,T)\) and for all \(i\in \{1,2,\ldots , m\}\), \(\sigma _i(t)\ge \sigma _i(0) e^{-t}.\)

-

2.

The solution stays in a bounded region, i.e., \(\sup _{t\in [0,T)} \left\Vert \sigma (t) \right\Vert < \infty \).

-

3.

The limit \(\lim _{t\rightarrow T^{-}} \sigma (t)\) exists and is a point in \({\mathbb {R}}^{m}_{>0}\).

The next lemma implies the existence of a global solution of Physarum trajectories for all valid starting points.

Lemma 8

(Existence of global solution) For every initial condition \(\sigma (0)\in {\mathbb {R}}^{m}_{>0}\) there exists a global solution \(\sigma : [0,+\infty ) \rightarrow {\mathbb {R}}^{m}_{>0}\) to the Physarum dynamics (13).

The final lemma, whose proof relies on the previous lemmas, implies the proof Theorem 1 part 2) b), trivially.

Lemma 9

(Error analysis) Given \(\sigma (0)\in {\mathbb {R}}^m_{>0}\) and \(T\in {\mathbb {R}}_{\ge 0}\), let \(\sigma : [0,T]\rightarrow {\mathbb {R}}^{m}_{>0}\) be the solution to (13) and \(\sigma _{\mathrm {min}}(0)=\min _{i} \sigma _i(0)\). Then, there exists a constant \(K>0\), which depends on \(\sigma (0)\) and T, such that for every step size \(0<h\le \frac{\sigma _{\mathrm {min}}(0)}{2}\cdot (eK)^{-T}\), it holds for the sequence of weights \(\left( w^{(k)}\right) _{k\in {\mathbb {N}}}\) produced by the Meta-Algorithm initialized at \(w^{(0)}=\sigma ^{(0)}\) with step size h that

Proof

Fix any solution \(\sigma :[0,T]\rightarrow {\mathbb {R}}^{m}_{>0}\) and let

Take F to be the closed \(\varepsilon \)-neighborhood of \(\{\sigma (t): t\in [0,T]\}\), i.e., the set of all points of distance at most \(\varepsilon \) from any point on the solution curve. Note that by Lemma 7, F is a compact subset of \({\mathbb {R}}^{m}_{>0}\). Let \(L_1,L_2>0\) be constants such that

Such an \(L_1\) exists because G is locally Lipschitz (see Lemma 10). To see why \(L_2\) exists one can use the fact that G is bounded on F (as a continuous function on a compact domain) together with the formula

We claim that for every \(h\in (0,1)\) and \(\ell \in {\mathbb {N}}\) such that \(h\cdot \ell \le T\) and \(hL_2e^{L_1T}\le \varepsilon \),

The above claim is enough to conclude the proof. Towards its proof, first define

for any \(h\in (0,1)\) and \(\ell \in {\mathbb {N}}\). Recall that

We start by applying the triangle inequality to extract an error term

Next, we analyze the error term:

Where in the last inequality we used the fact that \(w^{(\ell )} \in F\) (which will be justified later). To bound the distance \(\left\Vert \sigma (\tau ) - w^{(\ell )} \right\Vert \) for any \(\tau \in [\ell h, (\ell +1)h]\), note that

Altogether, we obtain the following recursive bound on \(d_h(\ell +1)\)

By expanding the above expression, one can show that

In particular, whenever \(h\cdot \ell \le T\), we obtain that \(d_h(\ell ) \le h L_2 e^{tL_1}.\) Note that the above derivation is correct under the assumption that all the points \(w^{(0)}, w^{(1)}, \ldots , w^{(\ell )}\) belong to F, however this is implied by the assumption that h is small: \(hL_2e^{L_1T}\le \varepsilon ,\) hence the upper bound on h in the statement. \(\square \)

1.3 A.3 Technical lemmas and their proofs

Lemma 10

(Local Lipschitzness) The function \(G:{\mathbb {R}}^{m}_{>0} \rightarrow {\mathbb {R}}^{m}\) is locally Lipschitz, i.e., for every compact subset F of \({\mathbb {R}}^{m}_{>0}\), the restriction \(G\mathord {\upharpoonright }_F\) is Lipschitz.

Proof

Fix any compact subset \(F \subseteq {\mathbb {R}}^{m}_{>0}\). Since \(G(\sigma ) = |q(\sigma )| - \sigma \) and the identity function is Lipschitz, it is enough to prove that \(\sigma \mapsto |q(\sigma )|\) is Lipschitz on F. It follows from Fact 2 that

and, hence, Cramer’s rule, applied to the linear system \((A\varSigma A^\top )\xi = b\) (with variables \(\xi \in {\mathbb {R}}^n\)), implies that \(q_i(\sigma )\) is a rational function of the form \(\frac{Q_i(\sigma )}{\det (A \varSigma A^\top )}\) where \(Q_i(\sigma )\) is a polynomial. Since \(\det (A\varSigma A^\top )\) is positive for \(\sigma \in {\mathbb {R}}^{m}_{>0}\), \(q_i(\sigma )\) is a continuously differentiable function on \({\mathbb {R}}^{m}_{>0}\). Further, since F is a compact set, the magnitude of the derivative of q is upper bounded by a finite quantity, i.e.,

for some \(C\in {\mathbb {R}}\). Now, for any \(x,y\in F\) and any \(i\in \{1,2,\ldots , m\}\):

and, thus, using the Cauchy-Schwarz inequality it follows that

Thus, the Lipschitz constant of q on F is at most \(\sqrt{m} \cdot C\). \(\square \)

Proof of Lemma 7

The first claim follows directly from Gronwall’s inequality (see Sect. 2.3 in [52]) since

For the second claim, it is enough to show that there exists a constant \(C_1>0\) such that

for all \(t\in [0,T]\) and \(i=1,2,\ldots , m\). Indeed, Gronwall’s inequality then implies that \(\sigma _i(t) \le \sigma _i(0) e^{C_1t},\) for all \(t\in [0,T]\). Towards the proof of (14), let \(y\in {\mathbb {R}}^{m}\) be any fixed solution to \(Ay=b\). From the first claim of Lemma 7, for every \(t\in [0,T]\) and \(i=1,2,\ldots , m\) we obtain

Hence, by Corollary 1, \(\frac{\left| q_i(\sigma (t)) \right| }{\sigma _i(t)}\) is upper bounded by a quantity \(C_1\) that depends on \(T, \sigma (0), A, b\) only; proving the second claim.

The last claim follows from the previous two and the continuity of G. Indeed, one can deduce that the solution curve \(\{\sigma (t): t\in [0,T]\}\) is contained in a compact set \(F\subseteq {\mathbb {R}}^{m}_{>0}\). Denote \(C_2:=\max \left\{ \left\Vert G(\sigma ) \right\Vert : \sigma \in F\right\} \). For any \(s,t\in [0,T]\)

The above readily implies that \(\lim _{t\rightarrow T^{-}} \sigma (t)\) exists. Since F is a compact set, the limit \(\sigma (T)\) belongs to F and thus \(\sigma (T)\in {\mathbb {R}}^{m}_{>0}\). \(\square \)

Proof of Lemma 8

By Lemma 10, the function \(G(\sigma )\) is locally Lipschitz and, hence, there is a maximal interval of existence [0, T) of a solution \(\sigma (t)\) to (13) where \(0<T\le +\infty \) (see Theorem 1, Sect. 2.4 in [52]). Suppose that \(x:[0,T) \rightarrow {\mathbb {R}}^{m}\) is a solution with \(T\in {\mathbb {R}}_{>0}.\) We show that it can be extended to a strictly larger interval \([0,T+\varepsilon )\) for some \(\varepsilon >0\). Let \(\sigma (T) := \lim _{t\rightarrow T^{-}} \sigma (t)\); the limit exists and \(\sigma (T)\in {\mathbb {R}}^{m}_{>0}\) by Lemma 7. Since \(G(\sigma )\) is locally Lipschitz, one can apply the Fundamental Existence-Uniqueness theorem (see Sect. 2.2 in [52]) to obtain a solution \(\tau : (T-\varepsilon , T+\varepsilon ) \rightarrow {\mathbb {R}}^{m}_{>0}\) with \(\tau (T)=\sigma (T)\) for some \(\varepsilon >0\). Because of uniqueness, \(\tau \) and \(\sigma \) agree on \((T-\varepsilon ,T]\) and, hence, can be combined to yield a solution on a larger interval: \([0,T+\varepsilon )\). This concludes the proof of the lemma. \(\square \)

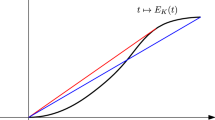

B Example of non-convergence of IRLS

In this section, we present an example instance of the basis pursuit problem for which IRLS fails to converge to the optimal solution.

Theorem 4

There exists an instance (A, b) of the basis pursuit problem (1) and a strictly positive point \(y^{(0)}\in {\mathbb {R}}^{m}_{>0}\) (with \(Ay^{(0)}=b\)) such that if IRLS is initialized at \(y^{(0)}\) (and \(\{y^{(k)}\}_{k\in {\mathbb {N}}}\) is the sequence produced by IRLS) then \(\left\Vert y^{(k)} \right\Vert _1 \) does not converge to the optimal value.

The proof is based on the simple observation that if IRLS reaches a point \(y^{(k)}\) with \(y^{(k)}_i=0\) for some \(k\in {\mathbb {N}}\), \(i\in \{1,2,\ldots ,{m}\}\) then \(y^{(l)}_i=0\) for all \(l>k\).

Consider an undirected graph \(G=(V,E)\) with \(V=\{u_0, u_1, ...,u_6,u_7\}\), \(E=\{e_1, e_2, \ldots , e_9\}\), and also let \(s=u_0\), \(t=u_7\). G is depicted in Fig. 3.

We define \(A\in {\mathbb {R}}^{8\times 9}\) to be the signed incidence matrix of G with edges directed according to increasing indices and let \(b:=(-1,0,0,0,0,0,0,1)^\top \). Then the following problem

is equivalent to the shortest \(s-t\) path problem in G. The linear system \(Ax=b\) is stated explicitly in Fig. 4. The unique optimal solution is the path \(s-u_4-u_3-t\), i.e., \(y^\star = (1,0,0,0,0,0,0,1,-1)^\top \) (note that we work with undirected graphs here). In particular, the edge \((u_3,u_4)\) is in the support of the optimal vector (it corresponds to the last coordinate of \(y^\star \)).

The linear system that encodes the shortest path problem in Fig. 3

Consider an initial solution \(y^{(0)}\) given below

Claim IRLS initialized at \(y^{(0)}\) produces in one step a point \(y^{(1)}\) with \(y^{(1)}_{9}=0\).

The above claim implies that IRLS initialized at \(y^{(0)}\) (which has full support) does not converge to the optimal solution, which has 1 in the last coordinate. Thus to prove Theorem 4 it suffices to show Claim B.

Proof of Claim B

IRLS chooses the next point \(y^{(1)}\in {\mathbb {R}}^9\) according to the rule:

which is the same as the unit electrical \(s-t\) flow in G corresponding to edge resistances \(\frac{1}{y_e}\). (This is due to the fact that electrical flows minimize energy, see [65].) In such an electrical flow the potentials of \(u_4\) and \(u_3\) are equal (the paths \(s-u_4\) and \(s-u_1-u_2-u_3\) have equal resistances), hence the flow through \((u_3,u_4)\) is zero. \(\square \)

C Remaining Proofs

Proof Of Fact 2

To prove the first claim, consider the strictly convex function \(f: {\mathbb {R}}^{m} \rightarrow {\mathbb {R}}\) given by \(f(x) := \sum _{i=1}^{m} \frac{x_i^2}{w_i}\). The optimality conditions for the convex program \(\min \{f(x): Ax=b\}\) are then given by

where \(\lambda \in {\mathbb {R}}^{n}\) are Lagrangian multipliers for the linear constraints \(Ax=b\). We claim that \(L=AWA^\top \) is a full rank matrix. Indeed, suppose that \(u\in {\mathbb {R}}^n\) is such that \(Lu=0\), then

hence \(u=0\), since A, and consequently \(W^{1/2}A\), have rank n. For this reason L is invertible and the optimizer is explicitly given by the expression \(WA^\top (AWA^\top )^{-1}b\).

The proof of the second claim starts with the formula \(q=WA^\top L^{-1}b\) established in the first claim. Thus, \( \sum _{i=1}^{m} \frac{q_i^2}{w_i} = q^\top W^{-1} q\) and, hence:

\(\square \)

Rights and permissions

About this article

Cite this article

Straszak, D., Vishnoi, N.K. Iteratively reweighted least squares and slime mold dynamics: connection and convergence. Math. Program. 194, 685–717 (2022). https://doi.org/10.1007/s10107-021-01644-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-021-01644-z