Abstract

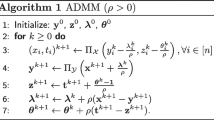

We propose a fast Newton algorithm for \(\ell _0\) regularized high-dimensional generalized linear models based on support detection and root finding. We refer to the proposed method as GSDAR. GSDAR is developed based on the KKT conditions for \(\ell _0\)-penalized maximum likelihood estimators and generates a sequence of solutions of the KKT system iteratively. We show that GSDAR can be equivalently formulated as a generalized Newton algorithm. Under a restricted invertibility condition on the likelihood function and a sparsity condition on the regression coefficient, we establish an explicit upper bound on the estimation errors of the solution sequence generated by GSDAR in supremum norm and show that it achieves the optimal order in finite iterations with high probability. Moreover, we show that the oracle estimator can be recovered with high probability if the target signal is above the detectable level. These results directly concern the solution sequence generated from the GSDAR algorithm, instead of a theoretically defined global solution. We conduct simulations and real data analysis to illustrate the effectiveness of the proposed method.

Similar content being viewed by others

References

Abramovich F, Benjamini Y, Donoho DL, Johnstone IM et al (2006) Adapting to unknown sparsity by controlling the false discovery rate. Ann Stat 34(2):584–653

Birgé L, Massart P (2001) Gaussian model selection. J Eur Math Soc 3(3):203–268

Bogdan M, Ghosh JK, Doerge R (2004) Modifying the schwarz bayesian information criterion to locate multiple interacting quantitative trait loci. Genetics 167(2):989–999

Bogdan M, Ghosh JK, Żak-Szatkowska M (2008) Selecting explanatory variables with the modified version of the Bayesian information criterion. Qual Reliab Eng Int 24(6):627–641

Breheny P, Huang J (2011) Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. Ann Appl Stat 5(1):232

Breheny P, Huang J (2015) Group descent algorithms for nonconvex penalized linear and logistic regression models with grouped predictors. Stat Comput 25(2):173–187

Chen J, Chen Z (2008) Extended bayesian information criteria for model selection with large model spaces. Biometrika 95(3):759–771

Chen J, Chen Z (2012) Extended bic for small-n-large-p sparse glm. Stat Sinica 22:555–574

Chen X, Nashed Z, Qi L (2000) Smoothing methods and semismooth methods for nondifferentiable operator equations. SIAM J Numer Anal 38(4):1200–1216

Chen X, Ge D, Wang Z, Ye Y (2014) Complexity of unconstrained l2-lp minimization. Math Program 143(1–2):371–383

Dolejsi E, Bodenstorfer B, Frommlet F (2014) Analyzing genome-wide association studies with an fdr controlling modification of the bayesian information criterion. PloS One 9(7):e103322

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am stat Assoc 96(456):1348–1360

Fan J, Lv J (2008) Sure independence screening for ultrahigh dimensional feature space. J R Stat Soc: Ser B (Stat Methodol) 70(5):849–911

Friedman J, Hastie T, Tibshirani R (2010) Regularization paths for generalized linear models via coordinate descent. J Stat Softw 33(1):1

Frommlet F, Nuel G (2016) An adaptive ridge procedure for l 0 regularization. PloS One 11(2):e0148620

Frommlet F, Ruhaltinger F, Twaróg P, Bogdan M (2012) Modified versions of Bayesian information criterion for genome-wide association studies. Comput Stat Data Anal 56(5):1038–1051

Frommlet F, Bogdan M, Ramsey D (2016) Phenotypes and genotypes. Springer, Berlin

Ge J, Li X, Jiang H, Liu H, Zhang T, Wang M, Zhao T (2019) Picasso: A sparse learning library for high dimensional data analysis in R and Python. J Mach Learn Res 20(44):1–5

Huang J, Jiao Y, Liu Y, Lu X (2018) A constructive approach to l 0 penalized regression. J Mach Learn Res 19(1):403–439

Huang J, Jiao Y, Jin B, Liu J, Lu X, Yang C (2021) A unified primal dual active set algorithm for nonconvex sparse recovery. Stat Sci (to appear). arXiv:1310.1147

Jiao Y, Jin B, Lu X (2017) Group sparse recovery via the \(\ell ^0(\ell ^2)\) penalty: theory and algorithm. IEEE Trans Signal Process 65(4):998–1012

Li X, Yang L, Ge J, Haupt J, Zhang T, Zhao T (2017) On quadratic convergence of DC proximal Newton algorithm in nonconvex sparse learning. In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R (eds) Advances in neural information processing systems, vol 30, Curran Associates, Inc

Loh P-L, Wainwright MJ (2015) Regularized m-estimators with nonconvexity: statistical and algorithmic theory for local optima. J Mach Learn Res 16:559–616

Louizos C, Welling M, Kingma DP (2018) Learning sparse neural networks through \(L_0\) regularization. In: International conference on learning representations, pp 1–13. URL https://openreview.net/forum?id=H1Y8hhg0b

Luo S, Chen Z (2014) Sequential lasso cum ebic for feature selection with ultra-high dimensional feature space. J Am Stat Assoc 109(507):1229–1240

Ma R, Miao J, Niu L, Zhang P (2019) Transformed \(\ell _1\) regularization for learning sparse deep neural networks. URL https://arxiv.org/abs/1901.01021

McCullagh P (2019) Generalized linear models. Routledge, Oxfordshire

Meier L, Van De Geer S, Bühlmann P (2008) The group lasso for logistic regression. J R Stat Soc: Ser B (Stat Methodol) 70(1):53–71

Natarajan BK (1995) Sparse approximate solutions to linear systems. SIAM J Comput 24(2):227–234

Nelder JA, Wedderburn RW (1972) Generalized linear models. J R Stat Soc: Ser A (General) 135(3):370–384

Nesterov Y (2013) Gradient methods for minimizing composite functions. Math Program 140(1):125–161

Park MY, Hastie T (2007) L1-regularization path algorithm for generalized linear models. J R Stat Soc: Ser B (Stat Methodol) 69(4):659–677

Qi L (1993) Convergence analysis of some algorithms for solving nonsmooth equations. Math Oper Res 18(1):227–244

Qi L, Sun J (1993) A nonsmooth version of Newton’s method. Math Program 58(1–3):353–367

Rockafellar RT, Wets RJ-B (2009) Var Anal. Springer Science & Business Media, Berlin

Scardapane S, Comminiello D, Hussain A, Uncini A (2017) Group sparse regularization for deep neural networks. Neurocomputing 241:81–89

Schwarz G et al (1978) Estimating the dimension of a model. Ann Stat 6(2):461–464

Shen J, Li P (2017) On the iteration complexity of support recovery via hard thresholding pursuit. In: Proceedings of the 34th international conference on machine learning-volume 70, pp 3115–3124

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc: Ser B (Methodological) 58(1):267–288

Van de Geer SA et al (2008) High-dimensional generalized linear models and the lasso. Ann Stat 36(2):614–645

Wang L, Kim Y, Li R (2013) Calibrating non-convex penalized regression in ultra-high dimension. Ann Stat 41(5):2505

Wang R, Xiu N, Zhou S (2019) Fast newton method for sparse logistic regression. arXiv preprint arXiv:1901.02768

Wang Z, Liu H, Zhang T (2014) Optimal computational and statistical rates of convergence for sparse nonconvex learning problems. Ann Stat 42(6):2164

Ye F, Zhang C-H (2010) Rate minimaxity of the lasso and dantzig selector for the lq loss in lr balls. J Mach Learn Res 11(Dec):3519–3540

Yuan X-T, Li P, Zhang T (2017) Gradient hard thresholding pursuit. J Mach Learn Res 18:166

Żak-Szatkowska M, Bogdan M (2011) Modified versions of the bayesian information criterion for sparse generalized linear models. Comput Stat Data Anal 55(11):2908–2924

Zhang C-H (2010) Nearly unbiased variable selection under minimax concave penalty. Ann Stat 38(2):894–942

Zhang C-H, Zhang T et al (2012) A general theory of concave regularization for high-dimensional sparse estimation problems. Stat Sci 27(4):576–593

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc: Ser B (Stat Methodol) 67(2):301–320

Acknowledgements

We wish to thank two anonymous reviewers for their constructive and helpful comments that led to significant improvements in the paper. The work of J. Huang is supported in part by the U.S. National Science Foundation grant DMS-1916199. The work of Y. Jiao is supported in part by the National Science Foundation of China grant 11871474 and by the research fund of KLATASDSMOE of China. The research of J. Liu is supported by Duke-NUS Graduate Medical School WBS: R-913-200-098-263 and MOE2016-T2-2-029 from Ministry of Eduction, Singapore. The work of Y. Liu is supported in part by the National Science Foundation of China grant 11971362. The work X. Lu is supported by National Science Foundation of China Grants 11471253 and 91630313.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

In the appendix, we prove Lemma 1, Proposition 1 and Theorems 1 and 2.

1.1 Proof of Lemma 1

Proof

Let \(L_\lambda (\varvec{\beta })={\mathcal {L}}(\varvec{\beta })+\lambda \Vert \varvec{\beta }\Vert _0\). Assume \(\widehat{\varvec{\beta }}\) is a global minimizer of \(L_\lambda (\varvec{\beta })\). Then by Theorem 10.1 in Rockafellar and Wets (2009), we have

where \(\partial \Vert \widehat{\varvec{\beta }}\Vert _{0}\) denotes the limiting subdifferential (see Definition 8.3 in Rockafellar and Wets (2009)) of \( \Vert \cdot \Vert _{0}\) at \(\widehat{\varvec{\beta }}\). Let \(\widehat{\mathbf{d}}= -\nabla {\mathcal {L}}(\widehat{\varvec{\beta }})\) and define \(G(\varvec{\beta }) = \frac{1}{2}\Vert \varvec{\beta }- (\widehat{\varvec{\beta }}+\widehat{\mathbf{d}})\Vert ^2 + \lambda \Vert \varvec{\beta }\Vert _0\). We recall that, from the definition of the limiting subdifferential of Definition 8.3 in Rockafellar and Wets (2009)), \(\partial \Vert \widehat{\varvec{\beta }}\Vert _{0}\) satisfies that \(\Vert \varvec{\beta }\Vert _0\ge \Vert \widehat{\varvec{\beta }}\Vert _0+\langle \partial \Vert \widehat{\varvec{\beta }}\Vert _{0}, \varvec{\beta }-\widehat{\varvec{\beta }}\rangle +o(\Vert \varvec{\beta }-\widehat{\varvec{\beta }}\Vert )\) for any \(\varvec{\beta }\in {\mathbb {R}}^p\). (7) is equivalent to

Moreover, \({\widetilde{\varvec{\beta }}}\) being the minimizer of \(G(\varvec{\beta })\) is equivalent to \(\mathbf{0} \in \partial G({\widetilde{\varvec{\beta }}})\). Obviously, \(\widehat{\varvec{\beta }}\) satisfies \(\mathbf{0} \in \partial G(\widehat{\varvec{\beta }})\). Thus we deduce that \(\widehat{\varvec{\beta }}\) is a KKT point of \( G(\varvec{\beta })\). Then \(\widehat{\varvec{\beta }}=H_{\lambda }(\widehat{\varvec{\beta }}+\widehat{\mathbf{d}})\) follows from the result that the KKT points of G coincide with its coordinate-wise minimizer (Huang et al. 2021). Conversely, suppose \(\widehat{\varvec{\beta }}\) and \(\widehat{\mathbf{d}}\) satisfy (2), then \(\widehat{\varvec{\beta }}\) is a local minimizer of \(L_{\lambda }(\varvec{\beta })\). To show \(\widehat{\varvec{\beta }}\) is a local minimizer of \(L_{\lambda }(\varvec{\beta })\), we can assume \(\text{ h }\) is small enough and \(\Vert \text{ h }\Vert _{\infty }<\sqrt{2\lambda }\). Then we will show \(L_{\lambda }(\widehat{\varvec{\beta }}+\text{ h})\ge L_{\lambda }(\widehat{\varvec{\beta }})\) in two cases respectively.

First, we denote

By the definition of \(H_{\lambda }(\cdot )\) and (2), we can conclude that \(|{\widehat{\beta }}_{i}|\ge \sqrt{2\lambda }\) when \(i \in {\widehat{A}}\) and \(\widehat{\varvec{\beta }}_{{\widehat{I}}}=0\). Thus it yields that \(\text{ supp }(\widehat{\varvec{\beta }})={\widehat{A}}\). Moreover, we also have \(\widehat{\mathbf{d}}_{{\widehat{A}}}=[-\nabla {\mathcal {L}}(\widehat{\varvec{\beta }})]_{{\widehat{A}}}=0\), which is equivalent to \(\widehat{\varvec{\beta }}_{{\widehat{A}}}\in \underset{\varvec{\beta }_{{\widehat{A}}}}{\text{ argmin }}~\widetilde{{\mathcal {L}}}(\varvec{\beta }_{{\widehat{A}}})\).

Case 1: \(\text{ h}_{{\widehat{I}}}\ne 0\).

Because \(|{\widehat{\beta }}_{i}|\ge \sqrt{2\lambda }\) for \(i\in {{\widehat{A}}}\) and \(\Vert \text{ h }\Vert _{\infty }<\sqrt{2\lambda }\), we have

Therefore, we get

Let \(m(\text{ h})=\sum _{i=1}^{n}[c(\text{ x}_{i}^{T}(\widehat{\varvec{\beta }}+\text{ h}))-c(\text{ x}_{i}^{T}\widehat{\varvec{\beta }})]-\text{ y}^{T}\mathbf{X}\text{ h }\), so \(m(\text{ h})\) is a continuous function about \(\text{ h }\). As \(\text{ h }\) is small enough and \(\Vert \text{ h }\Vert _{\infty }<\sqrt{2\lambda }\), then \(m(\text{ h})+\lambda >0\). Thus the last inequality holds.

Case 2: \(\text{ h}_{{\widehat{I}}}=0\).

As \(|{\widehat{\beta }}_{i}|\ge \sqrt{2\lambda }\) for \(i\in {{\widehat{A}}}\) and \(\Vert \text{ h}_{{\widehat{A}}}\Vert _{\infty }<\sqrt{2\lambda }\), then we have

and

As known that \(\widehat{\varvec{\beta }}_{{\widehat{A}}}\in \underset{\varvec{\beta }_{{\widehat{A}}}}{\text{ argmin }}~\widetilde{{\mathcal {L}}}(\varvec{\beta }_{{\widehat{A}}})\), so the last inequality holds. In summary, \(\widehat{\varvec{\beta }}\) is a local minimizer of \(L_{\lambda }(\widehat{\varvec{\beta }})\). \(\square \)

1.2 Proof of Proposition 1

Proof

Denote \(D^{k}=-\left( {\mathcal {H}}^{k}\right) ^{-1} F\left( \text{ w}^{k}\right) \). Then

can be recast as

Partition \(\text{ w}^{k}, D^{k}\) and \(F\left( \text{ w}^{k}\right) \) according to \(A^{k}\) and \(I^{k}\) such that

Substituting (10), (11) and \({\mathcal {H}}^{k}\) into (8), we have

It follows from (9) that

Substituting (15) into (12)–(14), we get (4) of Algorithm 1. This completes the proof. \(\square \)

1.3 Preparatory lemmas

The proofs of Theorems 1 and 2 are built on the following lemmas.

Lemma 2

Assume (C1) holds and \(\Vert \varvec{\beta }^{*}\Vert _{0}=K\le T\). Denote \(B^k = A^{k}\backslash A^{k-1}\). Then,

where \(\zeta =\frac{|B^k|}{|B^k|+|A^*\backslash A^{k-1}|}\).

Proof

Obviously, this lemma holds if \(A^{k}=A^{k-1}\) or \({\mathcal {L}}(\varvec{\beta }^k)\le {\mathcal {L}}(\varvec{\beta }^*)\). So we only prove the lemma by assuming \(A^{k}\ne A^{k-1}\) and \({\mathcal {L}}(\varvec{\beta }^k)>{\mathcal {L}}(\varvec{\beta }^*)\). The condition (C1) indicates

Hence,

From the definition of \(A^{k}\) and \(A^*\), it is known that \(B^k\) contains the first \(|B^k|\)-largest elements (in absolute value) of \(\nabla {\mathcal {L}}(\varvec{\beta }^k)\), and \(\text{ supp }(\nabla {\mathcal {L}}(\varvec{\beta }^k))\bigcap \text{ supp }(\varvec{\beta }^*)=A^{*}\backslash A^{k-1}\). Thus, we have

Therefore,

In summary,

\(\square \)

Lemma 3

Assume (C1) holds with \(0<U<\frac{1}{T}\), and \(K\le T\) in Algorithm 1. Then before Algorithm 1 terminates,

where \(\xi =1-\frac{2L(1-TU)}{T(1+K)}\in (0,1)\).

Proof

Let \(\Delta ^{k}=\varvec{\beta }^k-\nabla {\mathcal {L}}(\varvec{\beta }^{k})\). The condition of (C1) indicates

On the one hand, by the definition of \(\varvec{\beta }^{k+1}\) and \(\nabla {\mathcal {L}}(\varvec{\beta }^{k+1})\), we have

Further, we also have

and

where \(a \bigvee b=\max \{a,b\}\). By the definition of \(A^k\), \(A^{k+1}\) and \(\varvec{\beta }^{k+1}\), we know that

By the definition of \(A^{k+1}\), we can conclude that

Due to \(-\nabla _{A^{k+1}\backslash A^{k}}{\mathcal {L}}(\varvec{\beta }^{k+1})=\Delta _{A^{k+1}\backslash A^{k}}^{k+1}\) and \(U<\frac{1}{T}\), hence we can deduce that

By the definition of \(\varvec{\beta }^{k+1}\), we have

Moreover, \(\frac{|A^*\backslash A^{k-1}|}{|B^k|}\le K\). By Lemma 2, we have

Therefore, we have

where \(\xi =1-\frac{2L(1-TU)}{T(1+K)}\in (0,1)\). \(\square \)

Lemma 4

Assume \({\mathcal {L}}\) satisfies (C1) and

for all \(k\ge 0\). Then,

Proof

If \(\Vert \varvec{\beta }^k-\varvec{\beta }^*\Vert _{\infty }< \frac{2\Vert \nabla {\mathcal {L}}(\varvec{\beta }^*)\Vert _{\infty }}{L}\), then (16) holds, so we only consider the case that \(\Vert \varvec{\beta }^k-\varvec{\beta }^*\Vert _{\infty }\ge \frac{2\Vert \nabla {\mathcal {L}}(\varvec{\beta }^*)\Vert _{\infty }}{L}\). On the one hand, \({\mathcal {L}}\) satisfies (C1), then

Due to \(\Vert \varvec{\beta }^k-\varvec{\beta }^*\Vert _{\infty }\ge \frac{2\Vert \nabla {\mathcal {L}}(\varvec{\beta }^*)\Vert _{\infty }}{L}\), then

Further, we can get

which is univariate quadratic inequality about \(\Vert \varvec{\beta }^k-\varvec{\beta }^*\Vert _{\infty }\). Thus, by simple computation, we can get

On the other hand, because \({\mathcal {L}}\) satisfies (C1), then

Then, we can get

Hence, by (17), we have

\(\square \)

Lemma 5

(Proof of Corollary 2 in Loh and Wainwright (2015)). Assume \(x_{ij}^{,}s\) are sub-Gaussian and \(n > rsim \log (p)\), then there exists universal constants \((c_1,c_2,c_3)\) with \(0<c_i<\infty \), \(i=1,2,3\) such that

1.4 Proof of Theorem 1

Proof

By Lemma 3, we have

where

So the conditions of Lemma 4 are satisfied. Taking \(\varvec{\beta }^0 = \mathbf{0} \), we can get

By Lemma 5, then there exists universal constants \((c_1,c_2,c_3)\) defined in Lemma 5, with at least probability \(1-c_2\exp (-c_3\log (p))\), we have

Some algebra shows that

by taking \(k \ge {\mathcal {O}}(\log _{\frac{1}{\xi }} \frac{n}{\log (p)} )\) in (18). Then, the proof is complete. \(\square \)

1.5 Proof of Theorem 2

Proof

(18) and assumption (C2) and some algebra shows that that

if \(k>\log _{\frac{1}{\xi }} 9 (T+K)(1+\frac{U}{L})r^2.\) This implies that \(A^* \subseteq A^k\). \(\square \)

Rights and permissions

About this article

Cite this article

Huang, J., Jiao, Y., Kang, L. et al. GSDAR: a fast Newton algorithm for \(\ell _0\) regularized generalized linear models with statistical guarantee. Comput Stat 37, 507–533 (2022). https://doi.org/10.1007/s00180-021-01098-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-021-01098-z