Abstract

The restoration of drained afforested peatlands, through drain blocking and tree removal, is increasing in response to peatland restoration targets and policy incentives. In the short term, these intensive restoration operations may affect receiving watercourses and the biota that depend upon them. This study assessed the immediate effect of ‘forest-to-bog’ restoration by measuring stream and river water quality for a 15-month period pre- and post-restoration, in the Flow Country peatlands of northern Scotland. We found that the chemistry of streams draining restoration areas differed from that of control streams following restoration, with phosphate concentrations significantly higher (1.7–6.2 fold, mean 4.4) in restoration streams compared to the pre-restoration period. This led to a decrease in the pass rate (from 100 to 75%) for the target “good” quality threshold (based on EU Water Framework Directive guidelines) in rivers in this immediate post-restoration period, when compared to unaffected river baseline sites (which fell from 100 to 90% post-restoration). While overall increases in turbidity, dissolved organic carbon, iron, potassium and manganese were not significant post-restoration, they exhibited an exaggerated seasonal cycle, peaking in summer months in restoration streams. We attribute these relatively limited short-term impacts to the small percentages of catchment area felled in our study catchments (3–23%), and to the drain blocking and silt traps put in place as part of restoration management, which were likely effective in mitigating negative effects. Looking ahead, we suggest that future research should investigate longer-term water quality effects and compare different ways of potentially controlling nutrient release.

Introduction

Peatland restoration is a growing global practice that aims to improve ecosystem services, such as climate regulation, water provision and biodiversity conservation, from degraded peatland areas (Bonn et al. 2016). The value of such restoration is widely recognised, and many countries in Europe, North America and Asia have undertaken large-scale schemes to restore peatlands degraded by land management and industrial development (NPWS 2015; Andersen et al. 2016; Anderson et al. 2016; Chimner et al. 2017). However, restoration often involves interventions (e.g. ditch blocking, forestry removal) that cause both physical and biogeochemical disturbance to the peatland, potentially affecting local surface water quality (Nugent et al. 2003; Nieminen et al. 2017). Therefore, it is important to increase understanding of the impacts of peatland restoration on sensitive riverine ecosystems.

One such case, commonly found across Europe and North America, is the restoration of deep peat that was drained and afforested (Andersen et al. 2016; Anderson et al. 2016; Chimner et al. 2017). In the UK, such afforested areas represent at least nine percent (1900 km2) of the deep peatland area (here, > 50 cm deep; Hargreaves et al. 2003), with drainage and afforestation with non-native conifer trees occurring between the 1960s and the 1980s (Sloan et al. 2018). During afforestation, the lowering of the water table (i.e., by ploughing closely spaced drainage channels) provided conditions to promote the growth of non-native conifer trees, which then continued to lower the water table through evapotranspiration (Hökkä et al. 2008). As these trees grow and the canopy closes, specialist, native peatland vegetation is lost, and surface peat layers continue to degrade as they dry out further, resulting in continued carbon dioxide emissions from peat beneath the plantation (Hermans 2018) and, potentially, associated deterioration in water quality (Haapalehto et al. 2014; Anderson et al. 2016).

In formerly drained, afforested areas on deep peat, restoration management (termed forest-to-bog restoration) is carried out to reverse the loss of ecosystem services and in the longer-term, can effectively raise the water table, to levels similar to those of open, intact bog (Gaffney et al. 2018; Howson et al. 2021) thus supporting the gradual recovery of native blanket bog vegetation assemblages (Anderson and Peace 2017; Hancock et al. 2018), and enabling restoration areas to function as net carbon sinks (Hambley et al. 2019; Lees et al. 2019).

Forest-to-bog restoration commonly involves blocking drainage ditches so that water and sediment can be retained in the restoration areas, and felling/ removal of trees by harvesting (either the whole tree or just the main stem; Anderson et al. 2016; Hancock et al. 2018). In the short-term, these interventions can impact local surface water chemistry (Wilson et al. 2011; Finnegan et al. 2014). Previous studies have found increased ditch and stream concentrations of dissolved organic carbon (DOC), aluminium (Al), potassium (K) and phosphorus (P) within close proximity to restoration management (Muller et al. 2015), released through rewetting of peat (Fenner et al. 2011; Kaila et al. 2016) and decomposition of brash (i.e., tree tops and branches) and needles (Kaila et al. 2012; Asam et al. 2014a). Thus, the choice of harvesting method (selected depending on tree size, the timber quality/value, site accessibility to machinery and funding available e.g. government grants), can have differing impacts on surface water quality (Nieminen et al. 2017; Shah and Nisbet 2019). For example, in stem-only harvesting restoration sites (where brash remains on site) greater quantities of nutrients (P and N) may be released to watercourses (Asam et al. 2014b; O’Driscoll et al. 2014b).

The biogeochemical effects of restoration may be largest within the first year post-harvesting (Shah and Nisbet 2019), as rewetting of peat can be rapid (Gaffney et al. 2020b) and fresh brash residues begin to decompose (Kaila et al. 2012). This is of additional concern where restoration areas drain into streams or rivers where, for example, Atlantic salmon (Salmo salar) breed: a species of high economic and nature conservation importance in peatlands (Butler et al. 2009; Martin-Ortega et al. 2014), which may be negatively affected by these changes in water quality (Andersen et al. 2018).

With a growing international trend in forest-to-bog restoration (Anderson et al. 2016), understanding the impact of this increasingly widespread land-use change on water quality is both timely and critical. In Scotland, an estimated 11% of all Scottish afforested areas (1500 km2) may be targeted for potential forest-to-bog restoration in coming decades (Vanguelova et al. 2018). One of the most significant areas where forest-to-bog restoration is occurring is the Flow Country peatlands of Caithness and Sutherland (N. Scotland), Europe’s largest blanket bog habitat (of which 670 km2 was previously drained and afforested; Stroud et al. 1987), which is our study area here.

This study aimed to determine the short-term effects of forest-to-bog restoration on water quality in streams and receiving rivers in the year pre- and post-restoration. However, in practice the time between the start of sampling and restoration commencement was 16 months, and we thus continued sampling post-restoration for 15 months, for comparable periods pre- and post-restoration. Additionally, we assessed the possible effects of water quality changes on the wider riverine ecosystem by considering results with respect to national statutory water quality standards aimed at protecting ecological status. We hypothesised that water quality changes would be greater in streams than in rivers, where greater dilution is likely to occur. We further hypothesised that there would be measurable increases in concentrations of DOC, nutrients and metals within the duration of the study. Finally, we hypothesised that in the short-term, those changes would affect water quality as considered against the Scottish Government water quality thresholds set under the guidance of the EU Water Framework Directive (EU WFD).

Methodology

Site description

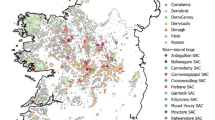

This study was conducted at the Forsinard Flows National Nature Reserve (NNR; 58.357, − 3.897; latitude/longitude) in northern Scotland. Forest-to-bog restoration has been carried out on the reserve by the nature conservation NGO, the Royal Society for the Protection of Birds (RSPB), since the 1990s in areas of former Sitka spruce (Picea sitchensis) and lodgepole pine (Pinus contorta) forestry plantations. Our study focussed on the Dyke Forest, an area where forest-to-bog restoration commenced in October 2014 (Fig. 1) at seven sites within the forest, each comprising several forestry compartments. At restoration sites, the main forestry drains were blocked with plastic piling dams (in three places), prior to the drain entering a watercourse (stream or river). As an additional measure to retain sediment, Hytex Terrastop® geotextile silt traps were also installed across drains, upstream of plastic piling dams. Trees were removed by one of three methods: (1) stem-only harvest, where brash (tree tops and branches) remained on site and was arranged in “mats” for machinery to drive on; (2) whole-tree harvest, where whole trees were harvested, or stem harvesting took place but with brash mats later removed; and (3) whole-tree mulching, where trees too small to yield value from the timber were mulched, without further treatment or removal of mulch material (across ~ 10–15% of the total restoration area).

Stream and river sampling map. Shaded plots show restoration sites (with forestry compartments demarked by black lines), commencing restoration during the study, within the River Dyke catchment. Plots in red show restoration sites where stream chemistry was monitored. Plots in blue are ‘other’ restoration sites (no streams were monitored here) but these restoration areas within the catchment of the River Dyke are part of the 3% of the catchment where restoration commenced during the study. Stream and river sampling points are shown by arrows with names in the attached circle. The first letter of each sampling point name refers to one of the five classes of sample points: B = bog stream control sites (BOG), F = forest streams control sites (FOR), R = restoration treatment stream sites (REST), BR = river baseline sites (BASE-R), RR = restoration treatment river sites (REST-R). Here, baseline refers to sampling points upstream of restoration. All points were sampled for the duration of the study i.e. pre- and post-restoration. Class refers to treatment of data for initial statistical analysis (PRC)

The River Dyke (2010 mean annual discharge 3.4 ± 0.1 m3s−1; Vinjili 2012) was the major river in the study area, which drains the Dyke Forest. It is 13 km long, with a catchment of ~ 57 km2, which encompasses open blanket bog (~ 65%), plantation forestry (~ 25%) and formerly afforested areas of blanket bog (~ 10%; which underwent forest-to-bog restoration in 2005–2006; Andersen et al. 2018). During this study, which took place between May 2013 and December 2015, 3% of the River Dyke catchment underwent forest-to-bog restoration commencing in October 2014. All harvesting/mulching activities were completed by March 2015 in restoration plots DK2, DK4 and DK5, while this was completed by August 2015 in restoration plot DK6 (Fig. 1).

The River Dyke joins the Halladale River (Fig. 1), which is an important river for Atlantic salmon (and thus, for economically valuable, recreational fishing; https://www.strathhalladale.com/). It is 29 km long, with a mostly peat covered catchment (~ 267 km2), of which, the River Dyke makes up about 20% (Lindsay et al. 1988; Vinjili 2012).
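As a quick arithmetic check on the catchment figures quoted above, the following sketch computes the restored area implied by 3% of the River Dyke catchment and the Dyke's share of the Halladale catchment. The variable names are illustrative only, and the inputs are the approximate areas given in the text, so the outputs are rough estimates rather than surveyed values.

```python
# Approximate catchment areas quoted in the text (km2).
DYKE_CATCHMENT_KM2 = 57.0
HALLADALE_CATCHMENT_KM2 = 267.0

# Fraction of the River Dyke catchment that began restoration during the study.
RESTORED_FRACTION_OF_DYKE = 0.03

# Area of the Dyke catchment that began restoration (km2).
restored_area_km2 = RESTORED_FRACTION_OF_DYKE * DYKE_CATCHMENT_KM2

# Share of the Halladale catchment made up by the Dyke catchment.
dyke_share_of_halladale = DYKE_CATCHMENT_KM2 / HALLADALE_CATCHMENT_KM2

# Share of the Halladale catchment that began restoration within the Dyke.
restored_share_of_halladale = restored_area_km2 / HALLADALE_CATCHMENT_KM2

print(f"Restored area in Dyke catchment: {restored_area_km2:.2f} km2")
print(f"Dyke share of Halladale catchment: {100 * dyke_share_of_halladale:.0f}%")
print(f"Restored share of Halladale catchment: {100 * restored_share_of_halladale:.2f}%")
```

The computed Dyke share is consistent with the "about 20%" quoted above, and the very small restored share at the Halladale scale illustrates why greater dilution would be expected in the rivers than in the streams.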

Annual precipitation (measured with a Davis Vantage Pro2Plus weather station, situated close to sampling point B1) during the study period was 848 mm, 1059 mm and 719 mm in the years 2013, 2014 and 2015 respectively, compared to the 1981–2010 long term average of 970.5 mm for Kinbrace weather station (~ 20 km distance; Met Office 2020). In general, summer months had lower precipitation than autumn and winter; this was most pronounced in 2013, when June and July were very dry (Fig. 2).
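To put the annual totals in context, the sketch below expresses each study year as a percentage departure from the 1981–2010 Kinbrace average. This is only an illustration: the on-site station and Kinbrace are about 20 km apart, so the comparison is indicative, and the function name `percent_anomaly` is our own.

```python
# Long-term (1981-2010) mean annual precipitation at Kinbrace (mm), from the text.
LONG_TERM_MEAN_MM = 970.5

# Annual precipitation measured on site during the study (mm), from the text.
annual_precip_mm = {2013: 848.0, 2014: 1059.0, 2015: 719.0}


def percent_anomaly(observed_mm, reference_mm):
    """Percentage departure of an observed total from a reference total."""
    return 100.0 * (observed_mm - reference_mm) / reference_mm


anomalies = {
    year: percent_anomaly(total, LONG_TERM_MEAN_MM)
    for year, total in annual_precip_mm.items()
}

for year, anomaly in sorted(anomalies.items()):
    print(f"{year}: {anomaly:+.1f}% relative to the long-term mean")
```

On these figures, 2013 and 2015 were drier than the long-term average and 2014 was wetter.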

Stream and river sampling

To measure the effect of forest-to-bog restoration on water quality, we sampled four streams that directly received drainage from the restoration sites, and the Dyke and Halladale rivers receiving these streams (Fig. 1). The stream sampling strategy was to sample an upstream and downstream (of restoration) sampling point on each stream, the upstream acting as a baseline site against which the effect of restoration could be measured in a downstream site. Four first- or second-order streams draining the restoration sites (DK2, DK4, DK5, DK6) were sampled. However, due to the layout of restoration areas and the stream network, only two of these streams had true upstream baseline sampling points (DK2 and DK4). The upstream sampling point on the DK6 stream was influenced by restoration from restoration site DK5. The DK5 stream did not have an upstream sampling point as the stream was sourced from the DK5 restoration area (Fig. 1).

Additionally, two open bog control streams and one afforested control stream were sampled from catchments unaffected by restoration. These streams only had a downstream sampling point, as we assumed that downstream changes in water chemistry, where there was no significant change in land use, would be small compared to changes due to land use (Muller et al. 2015). Although not consistent with the restoration streams, this measure reduced the sample numbers for time and cost purposes and also allowed completion of the sampling effort in one day in all seasons.

The Rivers Dyke and Halladale were sampled upstream (baseline site) and downstream of the confluence with the adjoining restoration-influenced streams (Fig. 1). In total, 20 points were sampled monthly from May 2013 to December 2015 (Fig. 1). As forest-to-bog restoration commenced in October 2014, the period May 2013–September 2014 was considered pre-restoration, while October 2014–December 2015 was post-restoration. Water samples were collected every month during the study, except during the main felling and mulching period (in our restoration stream catchments; October to December 2014), when all sites were sampled every two weeks to capture any immediate changes in water quality. Water samples were collected into clean HDPE bottles (Nalgene®) and kept in a coolbox until their return to the laboratory. Measurements of pH, electrical conductivity (EC) and temperature were made in the field, using a YSI 556 MPS multi-parameter meter.

As part of another study (Gaffney et al. 2020a), stream discharge was logged every 30 min from December 2013 to December 2015 at three sampling points, site R6 (restoration stream), site F3 (afforested control stream) and site B1 (open bog control stream). These data are presented alongside water chemistry results here to aid interpretation.

Sample preparation and analysis

Samples were refrigerated at 4 °C on return to the laboratory and then vacuum-filtered (normally within 24 h of collection; always within 36 h). Samples were filtered through pre-combusted 0.7 µm glass fibre filters (Fisherbrand, MF300), prior to analysis of dissolved organic carbon (DOC) and dissolved inorganic carbon (DIC) by high temperature catalytic combustion (using a Shimadzu TOC-L; Sugimura and Suzuki 1988). For analysis of nutrients (dissolved ammonium (NH4+), soluble reactive phosphate (PO43−) and total oxidised nitrogen (TON)) and elements (Ca, Mg, Na, K, Fe, Mn, Al, S, Cu and Zn), samples were vacuum-filtered through 0.45 µm cellulose acetate filters (Sartorius Stedim). Nutrient analysis was carried out immediately with a Seal AQ2 discrete analyser, using methods adapted from ISO international water quality standards (http://www.seal-analytical.com/). Filtered sample for macro- and trace-element analysis was first acidified (to 5% using trace metal grade concentrated nitric acid) and then analysed by inductively coupled plasma optical emission spectrophotometry (ICP-OES; Varian 720ES; Clesceri et al. 1998). Certified reference materials (CRMs; MERCK nitrate 200 mg L−1, Fluka PO43− and NH4+ 1000 mg L−1 and the multi-element environmental CRM MAURI-09 (river water) Lot #913, Environment Canada) were used to validate each method and analytical run, with recoveries of 81 to 104% determined.

Additionally, samples were analysed gravimetrically for concentrations of suspended particulate matter (SPM) and particulate organic carbon (POC). Known sample volumes were filtered through pre-weighed 0.7 µm glass fibre filters (Fisherbrand, MF300) under vacuum. Filters were then oven dried (105 °C, 12 h) and re-weighed to determine SPM (in mg L−1). Filters were then ashed in a muffle furnace (375 °C, 16 h) and re-weighed once cool, to determine loss on ignition, which was subsequently converted to POC (Ball 1964; Dawson et al. 2002). Turbidity (as a measure of fine suspended particulate matter) was also measured on raw samples using a turbidity meter (Lovibond, Turbicheck).
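The gravimetric steps above reduce to simple mass differences divided by the filtered volume. The sketch below shows that arithmetic under stated assumptions: the function names and example masses are hypothetical, and `carbon_fraction` is a placeholder parameter, because the specific loss-on-ignition to POC conversion used in the study (after Ball 1964 and Dawson et al. 2002) is not reproduced here.

```python
def spm_mg_per_l(filter_tare_mg, dried_mass_mg, volume_l):
    """Suspended particulate matter: dried residue mass per litre filtered."""
    if volume_l <= 0:
        raise ValueError("filtered volume must be positive")
    return (dried_mass_mg - filter_tare_mg) / volume_l


def loss_on_ignition_mg_per_l(dried_mass_mg, ashed_mass_mg, volume_l):
    """Mass lost between oven drying and ashing, per litre filtered."""
    if volume_l <= 0:
        raise ValueError("filtered volume must be positive")
    return (dried_mass_mg - ashed_mass_mg) / volume_l


def poc_mg_per_l(loi_mg_per_l, carbon_fraction):
    """Convert loss on ignition to POC using a user-supplied carbon fraction.

    carbon_fraction is a placeholder; it is NOT the conversion used in the study.
    """
    return loi_mg_per_l * carbon_fraction


# Hypothetical example: 0.5 L filtered through a 120.0 mg filter.
example_spm = spm_mg_per_l(filter_tare_mg=120.0, dried_mass_mg=124.5, volume_l=0.5)
example_loi = loss_on_ignition_mg_per_l(dried_mass_mg=124.5, ashed_mass_mg=121.5, volume_l=0.5)
print(f"SPM: {example_spm:.1f} mg/L, loss on ignition: {example_loi:.1f} mg/L")
```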

Finally, raw samples were titrated with 0.001 M hydrochloric acid (to an end point of pH 3.5; using a Jenway 3345 Ion Meter), to determine Gran alkalinity (Neal 2001). This was then used to calculate the acid neutralising capacity (ANC), defined as the sum of the strong base cations minus the sum of the strong acid anions, which can be estimated by measurements of Gran alkalinity, dissolved Al (from ICP-OES measurements) and DOC (Neal and Reynolds 1998).
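For readers unfamiliar with the charge-balance definition of ANC given above, the following sketch converts ion concentrations to microequivalents per litre and takes the difference between strong base cations and strong acid anions. It illustrates the definition only. The study itself estimated ANC from Gran alkalinity, dissolved Al and DOC, and that estimation formula is not reproduced here. The choice of ions, the function names and the input convention (mg of each ion per litre) are our assumptions.

```python
# (molar mass in g/mol, magnitude of ionic charge) for each ion.
BASE_CATIONS = {"Ca": (40.078, 2), "Mg": (24.305, 2), "Na": (22.990, 1), "K": (39.098, 1)}
ACID_ANIONS = {"Cl": (35.453, 1), "SO4": (96.06, 2), "NO3": (62.004, 1)}


def to_ueq_per_l(conc_mg_per_l, molar_mass, charge):
    """Convert a concentration in mg/L of an ion to microequivalents per litre."""
    return conc_mg_per_l / molar_mass * charge * 1000.0


def anc_charge_balance(sample_mg_per_l):
    """ANC (ueq/L) as the sum of base cations minus the sum of acid anions.

    sample_mg_per_l maps ion names to concentrations in mg of that ion per litre;
    ions missing from the sample are treated as zero.
    """
    cations = sum(
        to_ueq_per_l(sample_mg_per_l.get(ion, 0.0), mass, charge)
        for ion, (mass, charge) in BASE_CATIONS.items()
    )
    anions = sum(
        to_ueq_per_l(sample_mg_per_l.get(ion, 0.0), mass, charge)
        for ion, (mass, charge) in ACID_ANIONS.items()
    )
    return cations - anions


# A solution containing equal equivalents of Na+ and Cl- has an ANC of zero.
balanced_anc = anc_charge_balance({"Na": 22.990, "Cl": 35.453})
print(f"ANC of balanced NaCl solution: {balanced_anc:.1f} ueq/L")
```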

Statistical analyses

All statistical analyses were performed using RStudio (Version 0.98.501, R Core Team 2017). To consider the question of the effect of restoration, the dataset was broken into pre-restoration (May 2013 to September 2014) and post-restoration (restoration commencement to end of study; October 2014 to December 2015) periods.
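The split into pre- and post-restoration periods can be expressed as a simple date rule. The sketch below is one way to label samples, assuming that 1 October 2014 marks the boundary. The text gives only the month of restoration commencement, so the exact day is our assumption, as are the function and constant names.

```python
from datetime import date

# Study window and restoration start, taken from the months given in the text.
# The exact day of the restoration start is an assumption.
STUDY_START = date(2013, 5, 1)
STUDY_END = date(2015, 12, 31)
RESTORATION_START = date(2014, 10, 1)


def restoration_period(sample_date):
    """Label a sampling date as 'pre' or 'post' restoration."""
    if not STUDY_START <= sample_date <= STUDY_END:
        raise ValueError(f"{sample_date} is outside the study period")
    return "pre" if sample_date < RESTORATION_START else "post"


# Hypothetical sampling dates, for illustration only.
for sample_date in (date(2013, 6, 12), date(2014, 9, 30), date(2014, 10, 14)):
    print(sample_date.isoformat(), restoration_period(sample_date))
```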

All sample points were initially assigned to a watercourse class (bog stream control sites (BOG), forest stream control sites (FOR), restoration treatment stream sites (REST), river baseline sites (BASE-R) and restoration treatment river sites (REST-R); Fig. 1). To look at overall changes in water chemistry of watercourse classes over time, we used Principal Response Curves (PRC; package vegan, Oksanen et al. 2016). We used PRCs for a purely descriptive presentation, hence we did not account for spatial correlation among replicates here. PRCs are based on redundancy analysis, where the response variables (log10 transformed water chemistry) for the treatments (watercourse classes) can be compared to a reference, producing a graphical output: the response curve (van den Brink and Ter Braak 1998, 1999). In this case, the bog stream control sites (BOG) were the reference to which the other watercourse classes were compared. The PRC summarises the main trajectory of combined water quality measures (time by treatment interaction), in relation to the reference, and displays the strength of association of the different water chemistry (response) variables with the trajectory by giving each variable a score (van den Brink and Ter Braak 1999). Water chemistry variables which scored > 0.5 or < − 0.5 on the y-axis were identified as influencing the overall temporal trend (van den Brink and Ter Braak 1999).

The PRC analysis suggested that following restoration, the chemistry of restoration stream sites (REST) diverged from that of other classes (due to increased concentrations of the top scoring water chemistry variables), while other classes remained similar to the BOG reference sites. Therefore, further statistical analyses were performed to investigate which of these water chemistry variables in restoration stream sites were significantly affected by restoration, compared to corresponding measures from upstream control sites.

For this analysis, three streams were selected where there was both a sampling point upstream (FOR) and downstream (REST) of restoration (streams DK2, DK4 and DK6). The upstream measures were used as covariates in the model (sites F1, F2 and R4; Fig. 1, Table S1) to test the effect of restoration on the corresponding downstream sites, (sites R1, R2 and R5) i.e. we used a baseline covariate model (Brown and Prescott 2015). Thus, in effect, we modelled the effect of treatment on the downstream sites, after accounting for any effects that could be explained by corresponding measures taken upstream of restoration management. For this modelling, site R4 was used as the baseline site for any additional effects of restoration from DK6, even though it receives drainage from the DK5 restoration site, upstream of here (Fig. 1).

These models were performed on the top scoring parameters (> 0.5 or < − 0.5) from the PRC using generalised linear mixed models (function glmmPQL; package MASS, Venables and Ripley 2002). Restoration period (pre- and post-restoration), stream and their interaction were fixed factors, while sampling season was included as a random factor, with a first order autoregressive structure (to account for temporal autocorrelation). The best fit models were selected by visually checking normality, homoscedasticity and autocorrelation of residuals (Crawley 2007; Zuur et al. 2011). Wald chi-square tests (function Anova, package car, Fox and Weisberg 2011) were used to determine if the model fixed effects were significant, followed by post-hoc comparison using least squares means with Tukey adjustment, where appropriate (function lsmeans, package lsmeans, Lenth 2016).

There are mandatory water quality standards which apply solely to rivers in Scotland (i.e., those defined within the national monitoring plans—not small streams) based on EU Water Framework Directive (WFD) guidelines (The Scottish Government 2014). Under these standards, small changes in concentration may affect the “ecological status” of a river. Therefore, we also considered water quality standard pass rates for all river sites (BASE-R and REST-R) pre- and post-restoration. WFD guidance seeks to class key water chemistry variables at four ranged levels—classifying water quality from “high” to “poor” quality, depending on parameter concentrations (The Scottish Government 2014). For each of these class ranges, the percentage of time (pass rate) within each ranged level was calculated. For other parameters, which only have a single level or cut-off below which “good” quality is stated, the percentage pass or fail rate was calculated.
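The pass-rate calculation described above amounts to classifying each sample and counting. The sketch below shows one possible implementation for a parameter where lower values indicate better quality. The class limits are HYPOTHETICAL placeholders (only a "good" limit of < 9 µg P L−1 for phosphate is quoted in this paper), the sample values are made up, and the function names are our own.

```python
from collections import Counter

# HYPOTHETICAL upper limits (ug P/L), ordered best to worst. Only the "good"
# limit is quoted in the text; the others are placeholders for illustration.
PHOSPHATE_LIMITS = [("high", 4.0), ("good", 9.0), ("moderate", 20.0), ("poor", 50.0)]


def classify(value, class_limits):
    """Return the best class whose upper limit the value falls below."""
    for name, upper_limit in class_limits:
        if value < upper_limit:
            return name
    return "below poor"


def class_percentages(values, class_limits):
    """Percentage of samples falling within each ranged class."""
    counts = Counter(classify(v, class_limits) for v in values)
    names = [name for name, _ in class_limits] + ["below poor"]
    return {name: 100.0 * counts[name] / len(values) for name in names}


def pass_rate(values, class_limits, target):
    """Percentage of samples achieving the target class or better."""
    names = [name for name, _ in class_limits]
    accepted = set(names[: names.index(target) + 1])
    passed = sum(1 for v in values if classify(v, class_limits) in accepted)
    return 100.0 * passed / len(values)


def single_limit_pass_rate(values, limit):
    """Percentage of samples that do not exceed a single cut-off."""
    return 100.0 * sum(1 for v in values if v <= limit) / len(values)


# Made-up concentrations, for illustration only.
example_phosphate = [2.0, 3.5, 5.0, 8.0, 12.0, 30.0, 3.0, 6.0]
example_percentages = class_percentages(example_phosphate, PHOSPHATE_LIMITS)
example_good_pass = pass_rate(example_phosphate, PHOSPHATE_LIMITS, "good")
print(example_percentages)
print(f"'good' or better: {example_good_pass:.0f}%")
```

Note that the pass rate for a target class is cumulative: a sample of "high" quality also counts as passing the "good" target. For parameters where higher values indicate better quality (such as ANC), the comparison in `classify` would need to be reversed.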

Metals which appear in the WFD standards are given as bioavailable concentrations. This is the fraction of a metal readily available to biota (i.e., present as free ions in solution; UK TAG 2014a). Bioavailable concentrations of a metal are thus dependent on other parameters (i.e., DOC, pH, Ca; UK TAG 2014a) as these can affect the fraction present as free ions in any solution. Given this dependency, bioavailable concentrations can be estimated using these quantified parameters and the total dissolved concentration of metals of interest, using the UKTAG Metal Bioavailability Assessment Tool (UK TAG 2014b). Hence, bioavailable concentrations of Cu, Zn, Mn and Ni were calculated and percentage pass rates against the Scottish Government standards (The Scottish Government 2014) were determined and compared pre- and post-restoration.

Results

Effects of forest-to-bog restoration on water chemistry

The PRC analysis suggests that in the restoration treatment streams (REST), PO43−, TON, Mn, SPM, Al, POC, turbidity, Fe, DOC and NH4+ increased relative to the BOG streams after forest-to-bog restoration, from summer (2015) for the rest of the study period (Fig. 3). However, it is important to note that these parameters were also elevated in REST compared to the other watercourse classes prior to restoration during summer months. In restoration treatment river sites (REST-R), there was no apparent change post-restoration, and the temporal pattern between REST-R and river baseline sites (BASE-R), throughout the whole study was closely coupled. During the post-restoration summer period, where REST streams were affected by restoration, stream discharge was mostly low during sampling (Fig. 4).

Principal response curves for water chemistry between June 2013 and December 2015. Panel a represents overall deviation from the reference bog stream control sites (BOG), for the other watercourse classes (forest stream control sites (FOR), restoration treatment stream sites (REST), river baseline sites (BASE-R) and restoration treatment river sites (REST-R)). This is expressed as a canonical coefficient on the first principal component axis (PC1), in comparison with the reference BOG stream control sites—represented by the zero line. Panel b shows canonical coefficients for all the elements interpreted. A more positive coefficient shows a stronger relationship with the curve while a more negative coefficient suggests the opposite. The water chemistry parameters most strongly associated with the curves are therefore those with the highest scoring coefficients (shaded area)

Time series of mean concentrations ± standard errors of Fe (a, b), PO43− (c, d), DOC (f, g) and K (h, i) in bog stream control sites (BOG; n = 2), forest stream control sites (FOR; n = 3), restoration treatment stream sites (REST; n = 6), river baseline sites (BASE-R; n = 2) and restoration treatment river sites (REST-R; n = 7) from May 2013 to December 2015. Time series of stream discharge (e) during sampling, measured at bog stream control site B1, forest stream control site (F3) and restoration treatment stream site (R6). Time points are monthly intervals except for October to December 2014, when restoration first commenced and sampling was undertaken every two weeks (indicated by grey rectangle)

Modelled effects of restoration on water chemistry

The baseline covariate model (using three restoration streams (DK2, 4, 6) each with an upstream (baseline; sites F1, F2 and R4) and downstream (restoration; sites R1, R2 and R5) sampling point), found significant increases in PO43− concentrations following forest-to-bog restoration in downstream (restoration) sites, relative to their upstream (baseline) concentrations (χ2 = 13.52, p = 0.0002; Table S2). Despite showing high positive values on the PRC, no significant effects of restoration were found for TON, Mn, SPM, POC, Al, turbidity, Fe, DOC or NH4+ using baseline covariate models (Table S2).
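The reported Wald chi-square statistic of 13.52 can be related to its p-value with a short calculation. The sketch below assumes one degree of freedom, which is our assumption (the degrees of freedom are not stated in this section) and is consistent with the reported p = 0.0002. For one degree of freedom the upper-tail probability has a closed form using the complementary error function.

```python
import math


def chi_square_upper_tail_df1(statistic):
    """Upper-tail probability of a chi-square distribution with 1 degree of freedom.

    For 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)), where Z is standard normal.
    """
    if statistic < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(statistic / 2.0))


# Statistic reported in the text; 1 degree of freedom is assumed.
p_value = chi_square_upper_tail_df1(13.52)
print(f"p = {p_value:.4f}")
```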

In downstream treatment sites included in the model, mean PO43− concentrations increased by a factor of 4.7 following restoration, while in the upstream baseline sites the mean PO43− concentration increase post-restoration was a factor of 2.3 (Table 1). However, the baseline site for the DK6 stream (site R4) was really a restoration stream site (class = REST; 9% of upstream catchment undergoing restoration at DK5), and PO43− concentrations increased here post-restoration (5.4-fold). Including all sites in the restoration (REST) class, PO43− concentrations increased by a mean factor of 4.4 (range 1.7–6.2) post-restoration, while in the FOR class (forest stream control sites), PO43− concentrations decreased slightly post-restoration (mean factor of 0.8 (range 0.6–1.0) compared to pre-restoration concentrations; Table 1).

Temporal effects of restoration on water chemistry

Across the whole study (considering all sampling points in the classes assigned in Fig. 1), PO43−, DOC, Fe, K, Mn and turbidity reached maximum concentrations during summer months in all stream classes. However, in restoration stream sites (REST), seasonal increases for each of these parameters in summer 2015 (post-restoration) were notably larger in amplitude than in pre-restoration summers, relative to the BOG and FOR control sites (Figs. 4,5). In general, these post-restoration summer concentration peaks were associated with low stream discharge conditions (aside from November 2015, Figs. 4,5).

Time series of mean concentrations ± standard errors of Mn (a, b) and turbidity (c, d) in bog stream control sites (BOG; n = 2), forest stream control sites (FOR; n = 3), restoration treatment stream sites (REST; n = 6), river baseline sites (BASE-R; n = 2) and restoration treatment river sites (REST-R; n = 7) from May 2013 to December 2015. Time series of stream discharge (e) during sampling, measured at bog stream control site B1, forest stream control site (F3) and restoration treatment stream site (R6). Time points are monthly intervals except for October to December 2014, when restoration first commenced and sampling was undertaken every two weeks (indicated by grey rectangle)

The largest post-restoration concentration increase measured was for PO43−, where the mean post-restoration concentration in restoration stream sites increased 4.4-fold (90 µg P L−1), compared to the mean pre-restoration concentration (20 µg P L−1). Similarly, enhanced seasonal cycles were observed in REST streams following restoration for DOC (1.3 fold increase), Fe (1.5 fold increase), K (1.9 fold increase; Fig. 4), Mn (1.9 fold increase) and turbidity (1.6 fold increase; Fig. 5), compared to mean pre-restoration concentrations.
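The fold changes quoted above are ratios of post- to pre-restoration mean concentrations. The sketch below shows the calculation using the rounded phosphate means given in the text. The function names are illustrative. The rounded means give a ratio of 4.5 rather than the reported 4.4, presumably because the reported figure was derived from unrounded values.

```python
def fold_change(post_mean, pre_mean):
    """Ratio of the post-restoration mean to the pre-restoration mean."""
    if pre_mean <= 0:
        raise ValueError("pre-restoration mean must be positive")
    return post_mean / pre_mean


def percent_increase(fold):
    """Express a fold change as a percentage increase over the baseline."""
    return (fold - 1.0) * 100.0


# Rounded mean phosphate concentrations in REST streams (ug P/L), from the text.
rest_phosphate_fold = fold_change(post_mean=90.0, pre_mean=20.0)
print(f"Phosphate fold change from rounded means: {rest_phosphate_fold:.1f}")

# A 1.3-fold increase (as reported for DOC) corresponds to roughly a 30% rise.
print(f"DOC percentage increase: {percent_increase(1.3):.0f}%")
```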

Additional biweekly sampling in the first three months of restoration commencing (October–December 2014), showed no immediate effects of restoration on water quality (Figs. 4,5) in the first autumn into winter.

In the river sites (REST-R), the seasonal patterns were more subtle and there were no discernible differences in concentrations of PO43−, DOC, Fe, K, Mn or turbidity in sites downstream of treatments when compared to controls (BASE-R), following restoration (Figs. 4,5).

Effects of restoration on WFD standards pass rates

In the river sites, there were some small effects of restoration on pass rates of some WFD parameters, under the Scottish Government’s water quality standards (Fig. 6).

a, b Pass rates (%) for Scottish Government water quality standards (based on EU WFD guidelines) applied to river baseline (BASE-R) and river restoration treatment (REST-R) sites, pre- and post-restoration, for a Acid Neutralising Capacity (ANC) and b Phosphate (PO43−). To achieve “high”, “good”, “moderate” or “poor” quality status for any variable, the parameter must exceed (e.g., > 80) or fall below (e.g., < 4.1) the stated threshold. For ANC, higher values indicate higher water quality. For PO43−, lower values indicate higher water quality. c Pass rates for concentrations of bioavailable metals as calculated using the M-bat UK Government Tool (UK TAG 2014b). To pass, concentrations must not exceed the following bioavailable metal standards: Cu: 1 µg L−1, Mn: 123 µg L−1, Ni: 4 µg L−1, Zn: 10.9 µg L−1

The pass rate for the “high” quality standard for PO43− concentrations in REST-R sites fell from 64 to 46% post-restoration. Meanwhile, the pass rate changed little upstream of restoration areas in BASE-R sites (falling from 58 to 53% post-restoration). Post-restoration, there were also fewer occasions where the target “good” quality status (< 9 µg P L−1) was achieved in REST-R sites compared to BASE-R sites. In REST-R sites, the pass rate for achieving “good” quality status (i.e. achieving “good” or “high” quality) fell from 100% pre-restoration to 75% post-restoration, while in BASE-R sites the pass rate changed less, falling from 100% pre-restoration to 90% post-restoration. Therefore, post-restoration in REST-R sites, there were more samples achieving only “moderate” quality status (compared to BASE-R sites) and some (5% of samples) achieving “poor” quality status for PO43−.

Aside from two post-restoration REST-R samples failing to achieve even “poor” quality status for ANC, there were no other effects of restoration on pass rates for the Scottish Government’s water quality standards. Pass rates for temperature, dissolved oxygen, NH4+ and pH, were ≥ 78% when considering “high” quality threshold (data not shown).

Pass rates for bioavailable Cu, Mn and Ni were generally high in both river classes pre- and post-restoration, with no apparent effects of restoration. Manganese and Ni had an almost 100% pass rate during the entire study, while the pass rate for Zn was ≤ 50% in both BASE-R and REST-R sites (Fig. 6c).

Discussion

In this study, the chemistry of streams immediately downstream of restoration activity diverged from that of non-impacted watercourses in the first 15 months following restoration, which confirms our hypothesis. PRC analysis suggested this divergence was due to increased concentrations of some key chemical parameters (PO43−, Al, DOC, Fe, Mn, NH4+ and TON) and increased suspended particulates (e.g., turbidity, POC and SPM), which occurred from the first summer post-restoration (June–November), during both high and low discharge conditions. However, only PO43− was clearly highlighted as having been markedly different pre- and post-restoration. Nevertheless, we also noted that the areas planned for restoration already differed somewhat from control areas prior to restoration commencing (PRC).

Effects of forest-to-bog restoration on water chemistry

Restoration effects on stream chemistry were strongly seasonal, with increased concentrations of many parameters occurring from June 2015 until November 2015 (the first summer and autumn post-restoration), showing a strong influence of the annual temperature and decomposition cycle on stream chemistry along with stream discharge (Dinsmore et al. 2013; Muller et al. 2015). Within this period, some parameters (DOC, Fe, Mn) exhibited maximum concentrations during August (even though discharge was low), while others (PO43−, K) exhibited maximum concentrations during November sampling (under high discharge conditions).

Phosphate concentrations clearly scored highest in the PRC, showing this was a key parameter among overall water quality variation in the study. Furthermore, our univariate analyses, which controlled for upstream variation, confirmed that there was a significant 4.4-fold (range 1.7–6.2) increase in concentration at restoration-affected stream sites post-restoration. Significant increases in stream PO43− have also been found by others following forest felling, and have been directly related to the post-felling decomposition of tree biomass, i.e., the leftover needles and branches (Cummins and Farrell 2003a; Kaila et al. 2012; Asam et al. 2014a; Shah and Nisbet 2019; Table 2).
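
To illustrate what a fold increase of this kind means, the sketch below divides a post-restoration mean concentration by the pre-restoration mean for each site. It is a simplified illustration only: the univariate analyses in the study controlled for upstream variation, which this ratio does not, and the site names and concentrations are hypothetical.

```python
from statistics import mean


def fold_change(pre, post):
    """Ratio of the post-restoration mean to the pre-restoration mean."""
    pre_mean = mean(pre)
    if pre_mean <= 0:
        raise ValueError("pre-restoration mean must be positive")
    return mean(post) / pre_mean


# Hypothetical phosphate concentrations (µg P per litre): (pre, post) per site
sites = {
    "site_A": ([10.0, 20.0], [30.0, 60.0]),
    "site_B": ([5.0, 15.0], [40.0, 80.0]),
}

folds = {name: fold_change(pre, post) for name, (pre, post) in sites.items()}
mean_fold = mean(list(folds.values()))

print(folds)
print(mean_fold)
```

Reporting the range of per-site ratios alongside their mean, as the text does, shows how much the response varied between streams.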

In restoration stream sites, there was also a larger amplitude in seasonal stream PO43− concentrations post-restoration (increasing from June 2015; up to ~ 800 µg L−1). This is similar to that found in other studies regarding peatland felling impacts on stream water (Cummins and Farrell 2003a; Finnegan et al. 2014; Shah and Nisbet 2019). These trends have been found to persist for up to 4 years post-felling (Rodgers et al. 2010; Table 2), continuing to be clearly observable as seasonal peaks in summer months (Cummins and Farrell 2003a). In our study, peak PO43− concentrations were measured in November 2015, which had the highest discharge conditions sampled within the post-restoration summer-autumn period (when PO43− concentrations were elevated). Thus, within this period, PO43− released from restoration sites was flushed into streams during precipitation events, and the timing of peak concentrations was likely related to the occurrence of high discharge conditions (Rodgers et al. 2010).

Similarly, seasonally increased stream water K and Mn (~ twofold) occurred in our study following restoration. These are both known to be affected in streams impacted by conifer felling (Rosén et al. 1996; Cummins and Farrell 2003b; Table 2), with Mn released from needles (Asam et al. 2014a) and K from brash decomposition (Fahey et al. 1991; Palviainen et al. 2004a). Other work has found an even greater effect on K (~ fourfold increase) than was observed here, with a decline seen after 2 years (Cummins and Farrell 2003b). Muller and Tankéré-Muller (2012) also found a similar (~ twofold) increase in Mn occurring during the spring and summer, 1 year post-felling on a blanket peatland (Table 2).

There were also seasonal increases in DOC (31%) in restoration stream sites, following restoration. Increased DOC has been widely noted following conifer harvesting (Table 2) and has been attributed to both brash decomposition (Hyvonen et al. 2000; Palviainen et al. 2004b) and a rising water table, which then causes enhanced decomposition in rewetted peat (Nieminen et al. 2015). DOC changes can also be associated with shifts in Al and Fe, as these metals are commonly adsorbed to DOC molecules (McKnight and Bencala 1990; Krachler et al. 2010). In our study, seasonal increases in Fe were observed in streams following restoration, with a maximum occurring in August 2015, alongside peak DOC concentrations. Here, increased Fe may have been associated with changing redox conditions within the peat and water table movement (Muller et al. 2015), whereby a rise in the water table post-restoration (Gaffney et al. 2018) promoted Fe dissolution and thus contributed to increased Fe in streams. The occurrence of peak post-restoration concentrations of DOC and Fe during low discharge conditions in summer (August) suggests maximum release during summer decomposition of peat and brash. Therefore, if high precipitation and discharge events had occurred during the post-restoration summer, this would likely have increased peak DOC concentrations above what was measured here under low discharge conditions, resulting in high, event-based DOC fluxes (Gaffney et al. 2020a).

Another rapid effect of conifer felling on stream water quality is increased SPM and turbidity, for example during the first six months post-harvest (spring/summer; Finnegan et al. 2014; Table 2). We did not find a significant effect following restoration, as REST sites had high turbidity (Fig. 5), SPM and POC concentrations (Figure S1) in both the pre-restoration (2013) and post-restoration (2015) summers (giving these parameters a high PRC score relative to the BOG controls). However, in 2013 the concentration increases were associated with high discharge occurring as heavy rain fell after a summer drought in June/July 2013. In summer 2015 there was no drought; instead, rainfall was low but more consistent across the season, with a higher number of rain days (relative to the very dry summer in 2013), and discharge was generally low during sampling. The increases in turbidity, SPM and POC from July to October 2015 may therefore be linked to restoration activity, through physical disturbance and erosion. In our study, maximum SPM concentrations (~ 100 mg L−1) were lower than those recorded by others (481 mg L−1 during high flow events; Finnegan et al. 2014). Although our study did not specifically target high flow events, the maximum concentrations measured could suggest that the use of silt traps in addition to drain blocking in our restoration sites may have helped prevent a significant increase in SPM in streams affected by restoration. However, the effects of clear felling (stem harvesting) on increased suspended solids have been known to last > 10 years (Palviainen et al. 2014); therefore, low concentrations or declines observed in shorter-term studies (i.e., less than one year) may only be temporary.

Interestingly, there were no clear increases in nitrogen (N) species post-restoration, although such increases are commonly reported following conifer felling (Asam et al. 2014b; Palviainen et al. 2014; Table 2). From a decomposition perspective, P is known to be released faster than N from tree litter (Moore et al. 2011), which may explain why, in the first year following restoration at least, we did not observe clear increases in N.

In general, the effects of forest-to-bog restoration were restricted to streams directly receiving drainage from restoration sites, as found by Muller et al. (2015). Here, restoration streams (REST) flowed directly into main rivers (REST-R), but streams were small in comparison to the rivers Dyke or Halladale. Restoration effects thus became markedly diluted and rendered undetectable at river sites, even immediately downstream of the stream confluence. This was also found by Rodgers et al. (2010), where the main study river (Srahrevagh River, W. Ireland) was not impacted following conifer felling on blanket bog sites.

Effect of catchment area undergoing restoration

Felling a smaller proportion of a catchment is known to have less impact on stream water quality than felling a larger area (Nieminen 2004), with one study from a mixture of peat and mineral soils suggesting that 30% of a catchment was a threshold below which significant effects could not be measured (Palviainen et al. 2014). In our study, the percentage of any restoration stream catchment felled ranged from 3% (DK4 stream), to 8% (DK2 stream), 9% (DK5 stream) and 23% (DK5 + DK6 stream, at sample point R5), while the total area undergoing restoration between October 2014 and December 2015, in the River Dyke catchment, was 174 ha (just 3% of the Dyke catchment area).
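
The figures above can be checked with simple arithmetic. The sketch below compares each reported percentage with the 30% threshold suggested by Palviainen et al. (2014) and back-calculates the approximate River Dyke catchment area implied by 174 ha being 3% of it. The function name is hypothetical, and the implied area is only approximate because the 3% figure is rounded.

```python
# Percentage of each restoration stream catchment felled, as quoted in the text
percent_felled = {"DK4": 3.0, "DK2": 8.0, "DK5": 9.0, "DK5 + DK6": 23.0}

# Threshold suggested for mixed peat and mineral soils (Palviainen et al. 2014)
THRESHOLD_PERCENT = 30.0


def implied_catchment_area(felled_ha, percent_of_catchment):
    """Back-calculate a total catchment area (ha) from a felled area and its share."""
    if percent_of_catchment <= 0:
        raise ValueError("percentage must be positive")
    return felled_ha * 100.0 / percent_of_catchment


# True where the felled share is below the suggested threshold
below_threshold = {
    name: pct < THRESHOLD_PERCENT for name, pct in percent_felled.items()
}

# 174 ha restored, reported as about 3% of the River Dyke catchment
dyke_area_ha = implied_catchment_area(174.0, 3.0)

print(below_threshold)
print(dyke_area_ha)
```

All four stream catchments fall below the 30% threshold, which is consistent with the limited effects reported here.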

It could be hypothesised that the largest post-restoration changes in water chemistry might occur where the largest percentage of a catchment area was felled (i.e., DK5 + DK6 stream). However, of the impacted water chemistry variables, only K increased to levels that were higher (> twofold higher than pre-restoration concentrations) in this catchment than in other restoration stream sites. Very similar peak concentrations of PO43− (reaching ~ 600 µg P L−1) and Mn (reaching ~ 1000 µg L−1) occurred in the DK2 stream (site 2), DK5 stream (site 18) and DK5 + DK6 stream (site 10), where quite different proportions of the stream catchments were felled. We suspect this may be due to certain site-specific factors. For example, the DK5 + DK6 stream had a larger unplanted area between the restoration sites and the stream itself—which could have acted as a buffer zone, attenuating water chemistry prior to the stream (Väänänen et al. 2008).

Links between restoration, water chemistry and biodiversity

Another important consideration for forest-to-bog restoration here is the Atlantic salmon spawning season, which normally occurs in October or November in the Rivers Dyke and Halladale, and the subsequent early life cycle stages in the spring months, when young salmon can be especially sensitive to water quality (Soulsby et al. 2001). More frequent monitoring (every two weeks) from October to December 2014, when restoration commenced, showed no changes in water quality during this initial restoration period, which is critical for salmonid egg survival (Malcolm et al. 2003). This lack of change in water quality may again have been (at least partly) due to the use of sediment traps in addition to blocking the main forestry drains, to felling occurring only on small proportions of the catchment at any one time (3–23%), and to the fact that most effects of restoration in this study occurred as an enhanced seasonal cycle (peaking in summer months).

In agreement with our findings, another study also found no significant effects on water chemistry and sediment deposition in the hyporheic waters of known salmonid spawning sites of the River Dyke (Andersen et al. 2018). However, as the felled area increases, observed effects may become significant, or other effects may arise; therefore, water quality monitoring should continue in the medium to long term where large restoration or felling projects occur.

Limitations of study design

This study measured the short-term effects of forest-to-bog restoration on water quality in streams and receiving rivers, but there were some limitations to the sampling design. Firstly, not all restoration streams had an upstream and downstream (of restoration) sampling site, due to the layout of restoration sites and stream catchments. This was compensated for by the inclusion of independent afforested and open bog control catchments. However, these streams only had one sampling point each, to keep sample numbers manageable. Here, we made the assumption that downstream changes in water quality would be small in comparison to those due to restoration (Muller et al. 2015). Secondly, for the most part, sampling was restricted to monthly intervals, which captured a range of discharge conditions, but by not specifically targeting high discharge (storm event) conditions, peak nutrient concentrations may not have been recorded (Rodgers et al. 2010). This is particularly true in the post-restoration (2015) summer into autumn, when monthly sampling showed nutrient concentrations were elevated in restoration streams. The November 2015 sampling captured this somewhat, but other high discharge events during the early summer were not sampled. Finally, this study was short-term, measuring only for 15 months post-restoration. Although the greatest water quality effects of restoration are likely to occur in the first year post-restoration, they may last up to four years post-restoration (Rodgers et al. 2010; Shah and Nisbet 2019); thus, we were not able to quantify the longer-term duration of effects on water quality in this study.

Potential effects of restoration on statutory water quality standards

We monitored certain water quality parameters considered nationally and internationally to be relevant to ecological and/or human health, as listed under the EU Water Framework Directive (WFD; European Commission 2000), but the only parameter for which we observed significant concentration increases following restoration was PO43− (in restoration streams).

WFD standards apply solely to rivers; therefore, the pass rate assessment presented here regarding Scottish standards (based on WFD criteria) for PO43− only considered river classes. Achieving “good” quality status within the WFD normally implies that a system is subject to minimal anthropogenic impact (European Commission 2016). This status was achieved during 75% of the post-restoration period (for PO43−) in restoration river sites (REST-R), compared to 90% of the time during the post-restoration period in baseline river sites (BASE-R). The key period when a “good” status was not achieved in REST-R (i.e., 25% of the time during the post-restoration period) was during the summer of 2015. For PO43−, the main risk posed to ecosystems is through eutrophication—and the promotion of algal blooms, which can reduce light penetration and (upon decay) consume oxygen. However, the risk is often of greater concern in static water bodies (i.e., small lochs where there is a longer water residence time) or in coastal waters, rather than in rivers (Smith et al. 1999).
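
The comparison between river classes can be expressed in percentage points. The sketch below uses the “good” status pass rates for PO43− reported in the text and subtracts the change seen at baseline sites from the change seen at restoration sites. This is descriptive arithmetic only, not a statistical test, and the variable and function names are hypothetical.

```python
# Pass rates (%) for "good" PO4 status as (pre, post), taken from the text
good_pass_rates = {
    "REST-R": (100.0, 75.0),
    "BASE-R": (100.0, 90.0),
}


def change_in_points(pre, post):
    """Change in pass rate, in percentage points (negative means a decline)."""
    return post - pre


changes = {
    site: change_in_points(pre, post)
    for site, (pre, post) in good_pass_rates.items()
}

# Decline at restoration river sites beyond that seen at baseline river sites
excess_decline = changes["REST-R"] - changes["BASE-R"]

print(changes)
print(excess_decline)
```

Subtracting the baseline change separates the decline associated with restoration from the background decline that occurred in unaffected rivers over the same period.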

For other parameters included in the WFD (i.e., temperature, DO, ANC, NH4+, pH and bioavailable metals), no effects of restoration in rivers were observed, suggesting that the restoration practices used were effective at protecting water quality and ecological status.

Targeted mitigation for phosphate

To minimise PO43− inputs to surface waters, additional management interventions could be used alongside felling small percentages of catchments (< 23%). The removal of brash and needles, which are a major source of PO43− after harvesting, may reduce the leaching of PO43− into watercourses (Palviainen et al. 2004b), although there are limited uses for brash and needles once removed.

Others have demonstrated that diverting runoff water from upstream felled areas through a buffer zone sown with grasses (Holcus lanatus and Agrostis capillaris) reduced SPM and phosphate in runoff by 18% and 33%, respectively (O’Driscoll et al. 2014a), and that these buffers were effective in retaining PO43− during high flow events (Asam et al. 2012). However, in our study site, which is managed for nature conservation, H. lanatus and A. capillaris are not suitable species to use. Our study site is very close to Natura 2000 designated blanket bog and hence alternative solutions, including the removal of brash and needles and timing restoration slowly and carefully, e.g., phased felling (Shah and Nisbet 2019), may need to be explored. In other restoration sites (outside designated areas), the use of such species or other native grasses (e.g., Deschampsia flexuosa, which tends to colonise restoration areas; Hancock et al. 2018) could be tested as an effective means of removing PO43− in runoff water (O’Driscoll et al. 2011), thus reducing leaching to streams. Further, the grazing of grasses by deer may then facilitate the spread of nutrients across the wider landscape (Bokdam 2001), and should be investigated for the potential to assist restoration (Hancock et al. 2018).

Conclusion

Forest-to-bog restoration (by drain blocking combined with conifer removal) resulted in significant increases in phosphate (mean 4.4-fold, range 1.7–6.2-fold) in streams draining from restoration sites, but not in rivers. However, post-restoration, phosphate failed to reach the target “good” status in receiving rivers more often than at the river baseline monitoring sites (pass rates of 75% versus 90%, a difference of 15 percentage points) under the WFD-based Scottish Government thresholds for water quality. Seasonally increased turbidity, DOC, Fe, K and Mn concentrations in restoration streams were observed as part of an enhanced seasonal cycle, peaking in summer months.

We attribute these limited effects to the fact that only small percentages of each catchment studied were felled (3–23%), and to the use of drain blocking and silt traps. For future restoration, we recommend following these same measures. We further suggest that harvesting brash and needles, to reduce leaching of phosphate, would also be beneficial (although we were not able to test this hypothesis here).

Compared to the long-term benefits of forest-to-bog restoration to ecosystem services, the overall short-term impacts on water quality are relatively minor. However, future studies should seek to monitor the longer-term water quality effects and compare different ways of potentially controlling nutrient release.

Data availability

Research data are not publicly shared; please contact the corresponding author for enquiries.

Code availability

Code used for data analysis is not publicly shared; please contact the corresponding author for enquiries.

References

Anderson R, Peace A (2017) Ten-year results of a comparison of methods for restoring afforested blanket bogs. Mires Peat 19:1–23 (Article 06)

Andersen R, Farrell C, Graf M, Muller F, Calvar E, Frankard P, Caporn S, Anderson P (2016) An overview of the progress and challenges of peatland restoration in Western Europe. Restor Ecol 25:271–282

Anderson R, Vasander H, Geddes N, Laine A, Tolvanen A, O’Sullivan A, Aapala K (2016) Afforested and forestry-drained peatland restoration. In: Bonn A (ed) Peatland restoration and ecosystem services: science, policy and practice. Cambridge University Press, Cambridge, pp 213–233

Andersen R, Taylor R, Cowie NR, Svoboda D, Youngson A (2018) Assessing the effects of forest-to-bog restoration in the hyporheic zone at known Atlantic salmon (Salmo salar) spawning sites. Mires Peat 23:1–11

Asam ZZ, Kaila A, Nieminen M, Sarkkola S, O’Driscoll C, O’Connor M, Sana A, Rodgers M, Xiao L (2012) Assessment of phosphorus retention efficiency of blanket peat buffer areas using a laboratory flume approach. Ecol Eng 49:160–169

Asam ZZ, Nieminen M, Kaila A, Laiho R, Sarkkola S, O’Connor M, O’Driscoll C, Sana A, Rodgers M, Zhan X, Xiao L (2014a) Nutrient and heavy metals in decaying harvest residue needles on drained blanket peat forests. Eur J For Res 133:969–982

Asam ZZ, Nieminen M, O’Driscoll C, O’Connor M, Sarkkola S, Kaila A, Sana A, Rodgers M, Zhan X, Xiao L (2014b) Export of phosphorus and nitrogen from lodgepole pine (Pinus contorta) brash windrows on harvested blanket peat forests. Ecol Eng 64:161–170

Ball DF (1964) Loss-on-ignition as an estimate of organic matter and organic carbon in non-calcareous soils. J Soil Sci 15:84–92

Bonn A, Allott T, Evans M, Joosten H, Stoneman R (2016) Peatland restoration and ecosystem services: science, policy, and practice. Cambridge University Press, Cambridge

Brown H, Prescott R (2015) Applied mixed models in medicine, 3rd edn. Wiley, Chichester

Chimner RA, Cooper DJ, Wurster FC, Rochefort L (2017) An overview of peatland restoration in North America: where are we after 25 years? Restor Ecol 25:283–292

Clesceri L, Greenberg A, Eaton A (1998) Standard methods for the examination of water and wastewater, 20th edn. American Association of Public Health, Baltimore, Maryland

Crawley M (2007) The R book. Wiley, Chichester

Cummins T, Farrell EP (2003a) Biogeochemical impacts of clearfelling and reforestation on blanket peatland streams I. phosphorus. For Ecol Manag 180:545–555

Cummins T, Farrell EP (2003b) Biogeochemical impacts of clearfelling and reforestation on blanket-peatland streams II. Major ions and dissolved organic carbon. For Ecol Manag 180:557–570

Dawson JJC, Billett MF, Neal C, Hill S (2002) A comparison of particulate, dissolved and gaseous carbon in two contrasting upland streams in the UK. J Hydrol 257:226–246

Dinsmore KJ, Billett MF, Dyson KE (2013) Temperature and precipitation drive temporal variability in aquatic carbon and GHG concentrations and fluxes in a peatland catchment. Glob Chang Biol 19:2133–2148

European Commission (2000) Directive 2000/60/EC of the European Parliament and of the Council of 23 October 2000 establishing a framework for Community action in the field of water policy

European Commission (2016) Introduction to the new EU water framework directive. http://ec.europa.eu/environment/water/water-framework/info/intro_en.htm

Fahey TJ, Stevens PA, Hornung M, Rowland P (1991) Decomposition and nutrient release from logging residue following conventional harvest of sitka spruce in North Wales. Forestry 64:289–301

Fenner N, Williams R, Toberman H, Hughes S, Reynolds B, Freeman C (2011) Decomposition ‘hotspots’ in a rewetted peatland: implications for water quality and carbon cycling. Hydrobiologia 674:51–66

Finnegan J, Regan JT, O’Connor M, Wilson P, Healy MG (2014) Implications of applied best management practice for peatland forest harvesting. Ecol Eng 63:12–26

Fox J, Weisberg S (2011) Nonlinear regression and nonlinear least squares in R. An R Companion to Appl. Regres. 1–20

Gaffney PPJ, Hancock MH, Taggart MA, Andersen R (2018) Measuring restoration progress using pore- and surface-water chemistry across a chronosequence of formerly afforested blanket bogs. J Environ Manag 219:239–251

Gaffney PPJ, Hancock MH, Taggart MA, Andersen R (2020a) Restoration of afforested peatland: immediate effects on aquatic carbon loss. Sci Total Environ 742:140594

Gaffney PPJ, Jutras S, Hugron S, Marcoux O, Raymond S, Rochefort L (2020b) Ecohydrological change following rewetting of a deep-drained northern raised bog. Ecohydrology. https://doi.org/10.1002/eco.2210

Haapalehto T, Kotiaho JS, Matilainen R, Tahvanainen T (2014) The effects of long-term drainage and subsequent restoration on water table level and pore water chemistry in boreal peatlands. J Hydrol 519:1493–1505

Hambley G, Andersen R, Levy P, Saunders M, Cowie NR, Teh YA, Hill TC (2019) Net ecosystem exchange from two formerly afforested peatlands undergoing restoration in the Flow Country of northern Scotland. Mires Peat 23:1–14

Hancock MH, Klein D, Andersen R, Cowie NR (2018) Vegetation response to restoration management of a blanket bog damaged by drainage and afforestation. Appl Veg Sci. https://doi.org/10.1111/avsc.12367

Hermans R (2018) Impact of forest-to-bog restoration on greenhouse gas fluxes. University of Stirling, Scotland

Hökkä H, Repola J, Laine J (2008) Quantifying the interrelationship between tree stand growth rate and water table level in drained peatland sites within Central Finland. Can J For Res 38:1775–1783

Howson T, Chapman PJ, Shah N, Anderson R, Holden J (2021) A comparison of porewater chemistry between intact, afforested and restored raised and blanket bogs. Sci Total Environ 766:144496

Hyvonen R, Olsson BA, Lundkvist H, Staaf H (2000) Decomposition and nutrient release from Picea abies (L.) Karst and Pinus sylvestris L-logging residues. For Ecol Manag 126:97–112

Kaila A, Asam ZZ, Sarkkola S, Xiao L, Laurén A, Vasander H, Nieminen M (2012) Decomposition of harvest residue needles on peatlands drained for forestry: implications for nutrient and heavy metal dynamics. For Ecol Manag 277:141–149

Kaila A, Asam Z, Koskinen M, Uusitalo R, Smolander A (2016) Impact of re-wetting of forestry-drained peatlands on water quality—a laboratory approach assessing the release of P, N, Fe, and dissolved organic carbon. Water Air Soil Pollut. https://doi.org/10.1007/s11270-016-2994-9

Krachler R, Krachler RF, von der Kammer F, Suphandag A, Jirsa F, Ayromlou S, Hofmann T, Keppler BK (2010) Relevance of peat-draining rivers for the riverine input of dissolved iron into the ocean. Sci Total Environ 408:2402–2408

Lees KJ, Quaife T, Artz RRE, Khomik M, Sottocornola M, Kiely G, Hambley G, Hill T, Saunders M, Cowie NR, Ritson J, Clark JM (2019) A model of gross primary productivity based on satellite data suggests formerly afforested peatlands undergoing restoration regain full photosynthesis capacity after five to ten years. J Environ Manag 246:594–604

Lenth R (2016) Least-squares means: the R package lsmeans. J Stat Softw 69:1–33

Lindsay RA, Charman DJ, Everingham F, O’Reilly RM, Palmer MA, Rowell TA, Stroud DA (1988) The flow country—the peatlands of Caithness and Sutherland, Ratcliffe DA, Oswald PH (eds), JNCC Report. Nature Conservancy Council, Peterborough

Löfgren S, Ring E, von Brömssen C, Sørensen R, Högbom L (2009) Short-term effects of clear-cutting on the water chemistry of two boreal streams in Northern Sweden: a paired catchment study. Ambio 38:347–356

Malcolm IA, Youngson AF, Soulsby C (2003) Survival of salmonid eggs in a degraded gravel-bed stream: effects of groundwater-surface water interactions. River Res Appl 19:303–316

McKnight DM, Bencala KE (1990) The chemistry of iron, aluminum, and dissolved organic material in three acidic, metal-enriched, mountain streams, as controlled by watershed and in-stream processes. Water Resour Res 26:3087–3100

Met Office (2020) Kinbrace (Highland) UK climate averages. https://www.metoffice.gov.uk/research/climate/maps-and-data/uk-climate-averages/gfm5qbgxz

Moore TR, Trofymow JA, Prescott CE, Titus BD (2011) Nature and nurture in the dynamics of C, N and P during litter decomposition in Canadian forests. Plant Soil 339:163–175

Muller FLL, Chang KC, Lee CL, Chapman SJ (2015) Effects of temperature, rainfall and conifer felling practices on the surface water chemistry of northern peatlands. Biogeochemistry 126:343–362

Muller FLL, Tankéré-Muller SPC (2012) Seasonal variations in surface water chemistry at disturbed and pristine peatland sites in the Flow Country of northern Scotland. Sci Total Environ 435–436:351–362

Neal C (2001) Alkalinity measurements within natural waters: towards a standardised approach. Sci Total Environ 265:99–113

Neal C, Reynolds B (1998) The impact of conifer harvesting and replanting on upland water quality. Environment Agency, Bristol

Nieminen M (2004) Export of dissolved organic carbon, nitrogen and phosphorus following clear-cutting of three Norway spruce forests growing on drained peatlands in Southern Finland. Silva Fenn 38:123–132

Nieminen M, Koskinen M, Sarkkola S, Laurén A, Kaila A, Kiikkilä O, Nieminen TM, Ukonmaanaho L (2015) Dissolved organic carbon export from harvested peatland forests with differing site characteristics. Water Air Soil Pollut. https://doi.org/10.1007/s11270-015-2444-0

Nieminen M, Palviainen M, Sarkkola S, Laurén A, Marttila H, Finér L (2017) A synthesis of the impacts of ditch network maintenance on the quantity and quality of runoff from drained boreal peatland forests. Ambio. https://doi.org/10.1007/s13280-017-0966-y

NPWS (2015) Final national peatlands strategy. National Parks and Wildlife Service, Dublin

Nugent C, Kanali C, Owende PMO, Nieuwenhuis M, Ward S (2003) Characteristic site disturbance due to harvesting and extraction machinery traffic on sensitive forest sites with peat soils. For Ecol Manag 180:85–98

O’Driscoll C, Rodgers M, O’Connor M, Asam ZUZ, De Eyto E, Poole R, Xiao L (2011) A potential solution to mitigate phosphorus release following clearfelling in peatland forest catchments. Water Air Soil Pollut 221:1–11

O’Driscoll C, O’Connor M, Asam ZZ, De Eyto E, Rodgers M, Xiao L (2014a) Creation and functioning of a buffer zone in a blanket peat forested catchment. Ecol Eng 62:83–92

O’Driscoll C, O’Connor M, Asam ZZ, De Eyto E, Poole R, Rodgers M, Zhan X, Nieminen M, Xiao L (2014b) Whole-tree harvesting and grass seeding as potential mitigation methods for phosphorus export in peatland catchments. For Ecol Manag 319:176–185

Oksanen J, Blanchet FG, Friendly L, Kindt R, Legendre PDM, Minchin P, O’Hara R, Simpson G, Solymos P, Henry M, Stevens H, Szoecs E, Wagner H (2016) Vegan: community ecology package. R package version 2.4-1. https://cran.r-project.org/package=vegan

Palviainen M, Finér L, Kurka AM, Mannerkoski H, Piirainen S, Starr M (2004a) Release of potassium, calcium, iron and aluminium from Norway spruce, Scots pine and silver birch logging residues. Plant Soil 259:123–136

Palviainen M, Finér L, Kurka AM, Mannerkoski H, Piirainen S, Starr M (2004b) Decomposition and nutrient release from logging residues after clear-cutting of mixed boreal forest. Plant Soil 263:53–67

Palviainen M, Finér L, Laurén A, Launiainen S, Piirainen S, Mattsson T, Starr M (2014) Nitrogen, phosphorus, carbon, and suspended solids loads from forest clear-cutting and site preparation: long-term paired catchment studies from eastern Finland. Ambio 43:218–233

R Core Team (2017) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.r-project.org/

Rodgers M, O’Connor M, Healy MG, O’Driscoll C, Asam ZUZ, Nieminen M, Poole R, Muller M, Xiao L (2010) Phosphorus release from forest harvesting on an upland blanket peat catchment. For Ecol Manag 260:2241–2248

Rosén K, Aronson JA, Eriksson HM (1996) Effects of clear-cutting on streamwater quality in forest catchments in central Sweden. For Ecol Manag 83:237–244

Shah N, Nisbet T (2019) The effects of forest clearance for peatland restoration on water quality. Sci Total Environ 693:133617

Smith VH, Tilman GD, Nekola JC (1999) Eutrophication: impacts of excess nutrient inputs on freshwater, marine, and terrestrial ecosystems. Environ Pollut 100:179–196

Soulsby C, Malcolm I, Youngson AF (2001) Hydrochemistry of the hyporheic zone in salmon spawning gravels: a preliminary assessment in a degraded agricultural stream. Regul Rivers Res Manag 17:651–665

Stroud DA, Reed TM, Pienkowski MW, Lindsay RA (1987) Birds, bogs and forestry. The peatlands of Caithness and Sutherland. Nature Conservancy Council

Sugimura Y, Suzuki Y (1988) A high-temperature catalytic oxidation method for the determination of non-volatile dissolved organic carbon in seawater by direct injection of a liquid sample. Mar Chem 24:105–131

The Scottish Government (2014) Environmental Protection: The Scotland River Basin District (Standards) Directions 2014

UK TAG (2014a) River & Lake Assessment Method Specific Pollutants (Metals) Metal Bioavailability Assessment Tool (M-BAT)

UK TAG (2014b) Metal bioavailability assessment tool. http://www.wfduk.org/

Väänänen R, Nieminen M, Vuollekoski M, Nousiainen H, Sallantaus T, Tuittila ES, Ilvesniemi H (2008) Retention of phosphorus in peatland buffer zones at six forested catchments in southern Finland. Silva Fenn 42:211–231

van den Brink PJ, Ter Braak CJF (1998) Multivariate analysis of stress in experimental ecosystems by principal responses curves and similarity analysis. Aquat Ecol 32:163–178

van den Brink PJ, Ter Braak CJF (1999) Principal response curves: analysis of time-dependent multivariate responses of biological community to stress. Environ Toxicol Chem 18:138–148

Vanguelova E, Chapman S, Perks M, Yamulki S, Randle T, Ashwood F, Morison J (2018) Afforestation and restocking on peaty soils—new evidence assessment. ClimateXChange, Edinburgh

Venables W, Ripley B (2002) Modern applied statistics with S, 4th edn. Springer, New York

Vinjili S (2012) Landuse change and organic carbon exports from a peat catchment of the Halladale River in the Flow Country of Sutherland and Caithness, Scotland. PhD Thesis, University of St Andrews

Wilson L, Wilson J, Holden J, Johnstone I, Armstrong A, Morris M (2011) Ditch blocking, water chemistry and organic carbon flux: evidence that blanket bog restoration reduces erosion and fluvial carbon loss. Sci Total Environ 409:2010–2018

Zuur A, Ieno E, Walker N, Saveliev A, Smith G (2011) Mixed effects models and extensions in ecology with R. In Gail M, Krickeberg K, Samet J, Tsiatis A, Wong W (eds), Statistics for biology and health. Springer, New York

Acknowledgements

We gratefully acknowledge Neil Cowie, Daniela Klein, Norrie Russell, Trevor Smith (RSPB), Graeme Findlay (Forestry Commission Scotland), Fountains Forestry, Donald MacLennan (Brook Forestry), Malcolm Morrison and Andrew Mackay for guidance and land access, and Rebecca McKenzie for fieldwork assistance.

Funding

This work was supported by a PhD studentship to P. Gaffney from the Royal Society for the Protection of Birds (RSPB) and University of the Highlands and Islands, Scotland. Further support to P. Gaffney was provided by small grants from the International Peatland Society (IPS) and by the Marine Alliance for Science and Technology for Scotland (MASTS; SG106).

Author information

Contributions

All authors were involved in study design. Fieldwork was carried out by PG under guidance of RA and MH. Laboratory work was carried out by PG under guidance of MT. Data analysis was carried out by PG under guidance of RA and MH. PG produced the first draft of this manuscript, with contributions and edits from all co-authors.

Ethics declarations

Conflicts of interest

The authors declare no conflicts of interest or competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Responsible Editor: Adam Langley

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gaffney, P.P.J., Hancock, M.H., Taggart, M.A. et al. Catchment water quality in the year preceding and immediately following restoration of a drained afforested blanket bog. Biogeochemistry 153, 243–262 (2021). https://doi.org/10.1007/s10533-021-00782-y
