Abstract

We obtain an asymptotic formula for \(n\times n\) Toeplitz determinants as \(n\rightarrow \infty \), for non-negative symbols with any fixed number of Fisher–Hartwig singularities, which is uniform with respect to the location of the singularities. As an application, we prove a conjecture by Fyodorov and Keating (Philos Trans R Soc A 372: 20120503, 2014) regarding moments of averages of the characteristic polynomial of the Circular Unitary Ensemble. In addition, we obtain an asymptotic formula regarding the momentum of impenetrable bosons in one dimension with periodic boundary conditions.


1 Introduction

In this paper, we consider the asymptotics as \(n\rightarrow \infty \) of Toeplitz determinants

where the symbol f is of the form

with \(\arg z \in [0,2\pi )\) in the term \(\left( \frac{ z}{e^{\pi i}}\right) ^{\beta }\) in the definition of \(\omega _{\alpha ,\beta }\), and where the symbol satisfies the following conditions:

(a) V(z) is real-valued for \(|z|=1\) and is analytic on an open set containing \(|z|=1\),

(b) \(z_j=e^{it_j}\), where \(0\le t_1<t_2<\dots<t_m<2\pi \),

(c) \(\alpha _j\ge 0 \) and \(\mathrm{Re \,}\beta _j = 0\) for \(j=1,2,\ldots ,m\).

Under these conditions f is a non-negative symbol, and we obtain large n asymptotics of \(D_n(f)\) (up to a bounded multiplicative term) which are uniform in the parameters \(z_j\).
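For orientation, the objects above can be written out in the standard convention. The following display is a sketch, assuming that the Fourier coefficients of f are normalised in the same way as the coefficients \(V_k\) used below and that \(\omega \) is the product of the factors \(\omega _{\alpha _j,\beta _j}\); the precise form of (1) should be checked against the original display.

$$\begin{aligned} D_n(f)&=\det \left( f_{j-k}\right) _{j,k=0}^{n-1},&f_j&=\int _0^{2\pi }f\left( e^{i\theta }\right) e^{-ij\theta }\frac{d\theta }{2\pi },\\ f(z)&=e^{V(z)}\omega (z),&\omega (z)&=\prod _{j=1}^m\omega _{\alpha _j,\beta _j}\left( \frac{z}{z_j}\right) ,\qquad \omega _{\alpha ,\beta }(z)=|z-1|^{2\alpha }\left( \frac{z}{e^{\pi i}}\right) ^{\beta }. \end{aligned}$$

With this reading, \(\left( \frac{z}{e^{\pi i}}\right) ^{\beta }=e^{i\beta (\arg z-\pi )}\) is positive whenever \(\mathrm{Re \,}\beta =0\), which is consistent with f being non-negative under conditions (a)–(c).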

When \((\alpha _j,\beta _j)\ne (0,0)\) for all j, one says the Toeplitz determinant possesses a Fisher–Hartwig (FH) singularity at each point \(z_j=e^{it_j}\), and that the singularity at \(z_j\) is of root-type if \(\beta _j=0\) and of jump-type if \(\alpha _j=0\).

The large n asymptotics of Toeplitz determinants were first studied by Szegő in 1915 [61]. They have been intensively studied over the last 70 years, and owe their relevance to applications to physical models. The most prominent such application is the question of spontaneous magnetization of the Ising model on the lattice \({\mathbb {Z}}^2\) (see e.g. [7, 9, 24, 52]), but we also mention questions surrounding the momentum of impenetrable bosons in 1 dimension, which we return to in Sect. 3.

In addition to physical models, a considerable effort has been invested in understanding statistical similarities between the asymptotics of the Riemann zeta function along the critical line \(\mathrm{Re \,}z=1/2\) and the statistics of the characteristic polynomial of the Circular Unitary Ensemble (CUE) over arcs of the unit circle. Toeplitz determinants appear in this context, and we return to this topic in Sect. 2.

We now turn to known results for the asymptotics of \(D_n(f)\). The simplest case is the special one where \(\omega (z)\equiv 1\) (i.e. \(\alpha _j,\beta _j=0\) for all j), in which case

as \(n\rightarrow \infty \), where \(V_k=\int _0^{2\pi }V\left( e^{i\theta }\right) e^{-ik\theta }\frac{d\theta }{2\pi } \). This is known as the strong Szegő limit theorem (see [8, 9, 11, 37, 40, 43, 60, 62]), and holds for V satisfying condition (a), but also more generally for any V such that \(\sum _{k=-\infty }^\infty |k||V_k|^2\) converges.
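In its usual form, which we take to be the statement intended here, the strong Szegő limit theorem reads

$$\begin{aligned} D_n\left( e^{V}\right) =\exp \left( nV_0+\sum _{k=1}^\infty kV_kV_{-k}\right) (1+o(1)). \end{aligned}$$

For real-valued V one has \(V_{-k}=\overline{V_k}\), so the sum in the exponent equals \(\sum _{k=1}^\infty k|V_k|^2\), which explains the summability condition.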

It was conjectured by Lenard [50] and Fisher and Hartwig [28], and proven in subsequent steps by Widom [67] (relying also on work by Lenard [50]) and Basor [4, 5], that if f is a symbol of the form (1) satisfying (a)–(c), then

as \(n\rightarrow \infty \), where E is independent of n and given by

\(V_+(z)=\sum _{j=1}^\infty V_{ j}z^{ j}\), and G(z) is the Barnes’ G-function (see e.g. [56]). We mention here that although our focus is on non-negative symbols, the analogue of (2), (3) in the case of complex symbols f is interesting and exhibits behaviour with additional subtleties, see [8,9,10, 23, 25, 27], or [24] for a review.
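Leaving the constant E aside, the n-dependence in (2) has the standard Fisher–Hartwig form. As a sketch, assuming the usual normalisation,

$$\begin{aligned} D_n(f)=E\,e^{nV_0}\,n^{\sum _{j=1}^m\left( \alpha _j^2-\beta _j^2\right) }(1+o(1)). \end{aligned}$$

Since \(\mathrm{Re \,}\beta _j=0\), we have \(-\beta _j^2=|\beta _j|^2\ge 0\), so both root-type and jump-type singularities increase the power of n. When all \(\alpha _j,\beta _j\) vanish, the power of n disappears and the formula is consistent with the strong Szegő limit theorem.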

By the proof in [25], it is clear that the asymptotics (2) hold uniformly for \(e^{it_j}\) and \(e^{it_k}\) bounded away from each other. It is also clear that the asymptotics (2) are discontinuous if any two points \(e^{it_j}, e^{it_k}\) merge and that the asymptotic formula cannot be correct in this situation. In [42] and [64], for \(\alpha =1/2\) and \(\beta =0\), a part of the transition was considered, corresponding to the box \(|e^{it_j}-1|<C/n\) for all \(j=1,\ldots ,m\) and some fixed, large constant C. More recently, in [16], the authors considered the situation where \(m=2\) and obtained the full asymptotics of \(D_n(f)\), uniformly for \(0\le t_1<t_2<2\pi \). It is easily seen that the results of [16] may be presented in the following manner:

uniformly as \(n\rightarrow \infty \), where \({\widehat{F}}_n\) is an explicit function in \(t_1,t_2\) which is uniformly bounded as \(n\rightarrow \infty \). We mention that \({\widehat{F}}_n \) has an interesting and intricate representation involving a solution to the Painlevé V equation when \(|t_1-t_2|={\mathcal {O}}(1/n)\)—for the details we refer the reader to [16] (and additionally to [14] for certain simplifications that occur in the specific case where \(\alpha \) is integer-valued and \(\beta =0\)).

We also refer the reader to work on a different but related problem, namely the transition between smooth symbols and those with one singularity, see [15] and [68], and the transition between a single singularity and two singularities, see [45].

In this paper, we obtain asymptotics for \(D_n(f)\) as \(n\rightarrow \infty \), uniformly in the parameters \(t_1,\ldots ,t_m\). Our main result is the following.

Theorem 1.1

Assume that f is of the form (1), satisfying (a)–(c). Then as \(n\rightarrow \infty \),

where the error term is uniform for \(0\le t_1<t_2<\dots<t_m<2\pi \).
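As a numerical illustration of the uniformity in Theorem 1.1, the following sketch treats the simplest non-trivial case: two root-type singularities with \(\alpha _1=\alpha _2=1\), \(\beta _j=0\) and \(V=0\), for which the symbol is a trigonometric polynomial and the Toeplitz matrix is known exactly. It assumes that the leading terms of the theorem are \(nV_0+\sum _j(\alpha _j^2-\beta _j^2)\log n-2\sum _{j<k}(\alpha _j\alpha _k-\beta _j\beta _k)\log \left( \sin \frac{|t_j-t_k|}{2}+\frac{1}{n}\right) \). This form is our reading of the statement, inferred from Proposition 4.1, and should be checked against the displayed formula. All function names are illustrative.

```python
import cmath
import math


def laurent_mul(p, q):
    """Multiply two Laurent polynomials stored as {power: coefficient}."""
    out = {}
    for i, a in p.items():
        for j, b in q.items():
            out[i + j] = out.get(i + j, 0) + a * b
    return out


def symbol_coeffs(ts):
    """Fourier coefficients of prod_j |z - e^{i t_j}|^2 on |z| = 1.

    Uses |z - a|^2 = 2 - conj(a) z - a / z, valid for |z| = |a| = 1.
    """
    coeffs = {0: 1.0 + 0j}
    for t in ts:
        a = cmath.exp(1j * t)
        coeffs = laurent_mul(coeffs, {0: 2.0, 1: -a.conjugate(), -1: -a})
    return coeffs


def toeplitz_det(coeffs, n):
    """det (f_{j-k})_{j,k=0}^{n-1} by Gaussian elimination with pivoting."""
    a = [[coeffs.get(j - k, 0j) for k in range(n)] for j in range(n)]
    det = 1.0 + 0j
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        if abs(a[p][c]) == 0:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            det = -det
        det *= a[c][c]
        for r in range(c + 1, n):
            m = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= m * a[c][k]
    return det.real


def leading_terms(ts, n):
    """Assumed leading terms of Theorem 1.1 for alpha_j = 1, beta_j = 0, V = 0."""
    total = len(ts) * math.log(n)
    for j in range(len(ts)):
        for k in range(j + 1, len(ts)):
            total -= 2 * math.log(math.sin(abs(ts[j] - ts[k]) / 2) + 1 / n)
    return total


if __name__ == "__main__":
    # The printed difference should stay bounded as the gap shrinks with n.
    for n in (10, 20, 40):
        for gap in (0.0, 0.5 / n, 5.0 / n, 1.0, math.pi):
            ts = [0.0, gap]
            d = toeplitz_det(symbol_coeffs(ts), n)
            print(n, round(gap, 4), round(math.log(d) - leading_terms(ts, n), 3))
```

For coinciding points the symbol is \(|z-1|^4\) and the determinant equals \((n+1)(n+2)^2(n+3)/12\), which gives an exact check of the merged regime; for \(t_2-t_1=\pi \) and even n the matrix decouples into two tridiagonal blocks and the determinant is \((n/2+1)^2\).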

Remark 1.2

For simplicity of notation we take \(\alpha _j\ge 0\), but it is straightforward to see that the proof may be applied to negative \(\alpha _j\) as well, provided any combination of merging singularities has a total sum of \(\alpha _j\)’s strictly greater than \(-1/2\). For example if there are three singularities and \(t_2\rightarrow t_1\) while \(t_3\) remains bounded away from \(t_1\) as \(n\rightarrow \infty \), one would require \(\alpha _1,\alpha _2, \alpha _3>-1/2\) and \(\alpha _1+\alpha _2>-1/2\).

2 The Characteristic Polynomial of the CUE

Let \(Z_1,Z_2,\ldots ,Z_n\) be random variables, distributed as the eigenvalues of the \(n\times n\) Circular Unitary Ensemble of random matrices, with the following joint probability density function on the unit circle in the complex plane:

Let the characteristic polynomial be denoted by

It has long been believed that the statistical properties of the Riemann zeta function on the critical line \(s=1/2+it,\) \(t\in {\mathbb {R}}\), and the statistics of large random matrices are related—it was Dyson who first spotted this possible connection. More recently, possible connections between the behaviour of the characteristic polynomial \(P_n(e^{i\theta })\) over the unit circle and the behaviour of the Riemann zeta function along the critical line have been studied intensively (see e.g. [18,19,20, 34, 35, 38, 39, 44, 53] and references therein). In this context the authors of [34, 35] were interested in both extreme values and average values of \(P_n(e^{it})\) over the unit circle, namely the random variables

In addition, their work sparked interest in connections between the characteristic polynomial of the CUE and Gaussian Multiplicative Chaos, see [55, 66] for results on such connections.

In [34] it was conjectured that \(\log Y_n-\log n+\frac{3}{4}\log \log n\) converges in distribution to a random variable, and subsequently the asymptotics of \(Y_n\) have been studied in [1, 13, 57]. In these works, the terms \(\log n\) and \(\frac{3}{4}\log \log n\) were confirmed. The full conjecture, however, remains open.

In [35], Fyodorov and Keating conjectured that for \(m=2, 3,\dots \),

as \(n\rightarrow \infty \), where \({\mathbb {E}}\) denotes the expectation with respect to (5), and

As a corollary to Theorem 1.1, we will prove (6).

To make the connection between Toeplitz determinants and the moments of \(X_n(\alpha )\), we recall the well-known representation of Toeplitz determinants in terms of multiple integrals

from which it follows that

where we denote

Using (8) and (4), Claeys and Krasovsky were able to prove (6) for \(m=2\). They were furthermore able to prove that \({\mathbb {E}}\left[ X_n(\alpha =1/\sqrt{2})^2\right] ={\hat{c}} n\log n(1+o(1))\) as \(n\rightarrow \infty \) for an explicit constant \({\hat{c}}\). Additionally, for \(2\alpha ^2>1\), they were able to determine explicitly the \({\mathcal {O}}(1)\) term in (6) in terms of the Painlevé V equation.

For integer \(m\ge 2\), the conjecture was proven recently by Bailey and Keating [3] for integer \(\alpha =1,2,3,\dots \), by representing \({\mathbb {E}}\left[ X_n(\alpha )^m\right] \) in terms of integrals of Schur polynomials. A second proof was given by Assiotis and Keating in [2], where a representation for the constant \({\mathcal {O}}(1)\) term was given in terms of a certain volume of continuous Gelfand–Tsetlin patterns with constraints.

Relying on Theorem 1.1 and (2), we prove the conjecture (6) for all parameter sets, and more precisely we have the following corollary.

Corollary 2.1

As \(n\rightarrow \infty \), the asymptotics of \(\log {\mathbb {E}}\left[ X_n(\alpha )^m\right] \) are given by (6), both for \(0<m\alpha ^2<1\) and for \(m\alpha ^2>1\). Additionally, if \(m\alpha ^2=1\), then

as \(n\rightarrow \infty \).

2.1 Proof of Corollary 2.1

2.1.1 \(0<m\alpha ^2<1\)

Denote

for \(\epsilon >0\). If \(0<m\alpha ^2<1\), the integral is well defined for \(\epsilon =0\) and \(I_0(\alpha )\) is a Selberg integral [33, 59]:

Thus Corollary 2.1 follows (for \(0<m\alpha ^2<1\)) by combining (2), (8) and Theorem 1.1 as follows. Fix \(\delta >0\). We will show that there is an integer N such that for \(n>N\),

thus proving the corollary. Given a measurable subset \(R\subset [0,2\pi )^m\), we denote

We note that \(I_{\epsilon }(\alpha ,R)<I_0(\alpha ,R)\) for any \(\epsilon >0\) for any \(R\subset [0,2\pi )^m\). For \(\eta >0\), divide the integration regime \([0,2\pi )^m\) into two regions \(R_1(\eta )\) and \(R_2(\eta )\), where \(R_1(\eta )\) is the region where \(\sin \frac{|t_i-t_j|}{2}>\eta \) for all \(i\ne j\), and \(R_2(\eta )\) is the complement of \(R_1(\eta )\).
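The Selberg-type evaluation of \(I_0(\alpha )\) used above can be sanity-checked numerically in the simplest case \(m=2\). The sketch below assumes the normalisation \((2\pi )^{-m}\int \prod _{i<j}|e^{it_i}-e^{it_j}|^{-2\alpha ^2}dt\), for which the circular (Dyson–Morris) form of the Selberg integral gives \(\Gamma (1-m\alpha ^2)/\Gamma (1-\alpha ^2)^m\) when \(0<m\alpha ^2<1\); the constant in \(I_0(\alpha )\) as defined in this paper may differ by a factor depending on how the integrand is normalised. For \(m=2\), rotation invariance reduces the double integral to a single one. The function names are illustrative.

```python
import math


def selberg_circle(m, alpha):
    """Gamma(1 - m a^2) / Gamma(1 - a^2)^m, valid for 0 < m a^2 < 1."""
    g = alpha * alpha
    if not 0 < m * g < 1:
        raise ValueError("need 0 < m * alpha^2 < 1")
    return math.gamma(1 - m * g) / math.gamma(1 - g) ** m


def pair_integral(alpha, steps=200_000):
    """Midpoint rule for (1/2pi) int_0^{2pi} |1 - e^{it}|^{-2 alpha^2} dt.

    On [0, 2pi] one has |1 - e^{it}| = 2 sin(t/2). The endpoint
    singularities are integrable, so the rule converges, although slowly.
    """
    g = alpha * alpha
    h = 2 * math.pi / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * h
        total += (2 * math.sin(t / 2)) ** (-2 * g)
    return total * h / (2 * math.pi)


if __name__ == "__main__":
    a = math.sqrt(0.2)  # m * alpha^2 = 0.4 < 1
    print(pair_integral(a), selberg_circle(2, a))
```

As \(m\alpha ^2\) approaches 1 the factor \(\Gamma (1-m\alpha ^2)\) blows up, which is the threshold separating the regimes of Corollary 2.1.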

It follows by Theorem 1.1 that

as \(n\rightarrow \infty \), uniformly for \(0<\eta <\eta _0\). In particular, since \(I_0(\alpha ,R_2(\eta ))\rightarrow 0\) as \(\eta \rightarrow 0\), it follows that there exists \(\eta _0>0\) and \(N_0\in {\mathbb {N}}\) such that

for \(n>N_0\) and \(\eta <\eta _0\), which gives the desired bound for the integral over \(R_2(\eta )\). We now evaluate the integral over \(R_1(\eta )\). By (2), it follows that

where the o(1) tends to zero uniformly over \(R_1(\eta )\) for any fixed \(\eta \) as \(n\rightarrow \infty \). Thus we may move the error term outside the integral, and since \(R_2(\eta )\) is the complement of \(R_1(\eta )\), we have

By (11) we obtain

where the o(1) tends to zero for any fixed \(\eta \) as \(n\rightarrow \infty \). Again we use the fact that \(I_0(\alpha ,R_2(\eta ))\rightarrow 0\) as \(\eta \rightarrow 0\), from which it follows that we may pick \(\eta <\eta _0\) such that the second term on the right hand side of (16) is less than \(\delta n^{m\alpha ^2} /4\).

Thus we may fix \(\eta <\eta _0\) such that

as \(n\rightarrow \infty \), and combined with (14), this proves (12).

2.1.2 \(m\alpha ^2>1\)

We now study the asymptotics of \(I_{\epsilon }(\alpha )\) for \(m\alpha ^2>1\). A lower bound for \(I_{\epsilon }(\alpha )\) is easily obtained by integrating over the box \(|t_i-t_j|<\epsilon \) for all \(i\ne j\): it is easily seen that there is a constant \(c>0\) such that

for \(0<\epsilon <\epsilon _0\).

To prove Corollary 2.1, we need to obtain a corresponding upper bound for \(I_\epsilon (\alpha )\). We choose to work with the following integral instead:

Lemma 2.2

There is a constant \(c>0\) such that for all sufficiently small \(\epsilon >0\),

Proof

Denote \(U_j=[0,2\pi (j-1)/(m+1))\cup [2\pi j/(m+1),2\pi )\) for \(j=1,2,\ldots ,m+1\). Since there are m points \(t_1,\ldots ,t_m\) and \(m+1\) sets \(U_j\), it follows that there is always a j such that \(\{t_1,\ldots ,t_m\}\subset U_j\), thus

It follows that

Furthermore, for \(0\le x \le \pi -\pi /(m+1)\), one has

and it follows that

The lemma follows easily from (18). \(\quad \square \)
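The pigeonhole step at the start of the proof is easy to make concrete. The following sketch (with an illustrative function name) splits \([0,2\pi )\) into \(m+1\) arcs of equal length and returns an index j whose arc contains none of the m points, so that all points lie in \(U_j\).

```python
import math


def empty_arc_index(ts):
    """Return j in 1..m+1 such that no t lies in [2pi(j-1)/(m+1), 2pi j/(m+1)).

    Equivalently, every t lies in U_j. Such a j exists by the pigeonhole
    principle, since m points can occupy at most m of the m+1 arcs.
    """
    m = len(ts)
    width = 2 * math.pi / (m + 1)
    # min(..., m) guards against rounding when t is extremely close to 2pi.
    occupied = {min(int((t % (2 * math.pi)) // width), m) + 1 for t in ts}
    for j in range(1, m + 2):
        if j not in occupied:
            return j
    raise AssertionError("unreachable: m points cannot fill m + 1 arcs")


if __name__ == "__main__":
    print(empty_arc_index([0.1, 0.2, 3.0]))
```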

We now take the change of variables \(s_j=t_{j+1}-t_j\) for \(j=1,\ldots ,m-1\) and find that

with integration taken over \( s_1,\ldots , s_{m-1}\ge 0\) such that \( \sum _{j=1}^m s_j< 1-t_1\), from which it follows that

where

If \(2\alpha ^2>1\), then \(I_\epsilon ^{(2)}(\alpha )\) is straightforward to evaluate—one simply notes that

for any \(i=j,\ldots ,k\), and thus

Separating out the variables, it follows that

as \(\epsilon \rightarrow 0\), for \(2\alpha ^2>1\). However, if \(\frac{1}{m}<\alpha ^2<\frac{1}{2}\), this approach fails to yield (22), and in fact yields a worse error term (see footnote 1). To achieve the optimal error term (22) also in the case \(\frac{1}{m}<\alpha ^2<\frac{1}{2}\), we need to consider ordered integrals.

Since the integral (20) is taken over all possible orderings, it follows that

where the integral is taken over

Let

Let \(S(\ell )=V(\ell ){\setminus } \cup _{j=1}^{\ell -1}V(j)\). Then \(S(1),\ldots , S(m-1)\) are disjoint, and

By (21) it follows that

It is easily seen that \(|S(\ell )|=m-\ell \), and it follows that

By (23), it follows that

If \(m=2\) and \(m\alpha ^2>1\), then the right hand side is of order \(\epsilon ^{-2\alpha ^2+1}\), and we are done. We assume that \(m>2\), and integrate in \(s_{\sigma (m-1)}\) on the right hand side of (24). The power of \(s_{\sigma (m-1)}\) is \(-2\alpha ^2\), which could very well be equal to \(-1\), so we need to take this into account. Clearly

for any fixed x as \(\epsilon \rightarrow 0\). Since

it follows that

where integration on the right hand side is taken over \(0<s_{\sigma (m-2)}<\dots<s_{\sigma (1)}<1\). We will next integrate out \(s_{\sigma (m-2)}\), then \(s_{\sigma (m-3)}\), etc. To do this, we introduce the following notation for \(v=1,2,\ldots ,m-2\):

with integration taken over \(0<s_{\sigma (m-v-1)}<\dots<s_{\sigma (1)}<1\), and where we interpret \(\sum _{j=1}^0j=\sum _{j=v+1}^vj=0\). We observe that the error term on the right hand side of (25) is equal to \(J_\epsilon (1)\). It is easily verified that

as \(\epsilon \rightarrow 0\), for \(v=1,2,\ldots ,m-3\). Iterating, we obtain

as \(\epsilon \rightarrow 0\), where we interpret \(\sum _{j=1}^0j=\sum _{j=m-1}^{m-2}j=0\). Since \(m\alpha ^2>1\), it follows that the power of \(s+\epsilon \) is smaller than \(-1\), namely:

for any \(r=0,1,2,\ldots ,m-2\). If \(x<-1\), then

as \(\epsilon \rightarrow 0\), and thus it follows that

as \(\epsilon \rightarrow 0\). Thus by (19) and (24), \(I_\epsilon (\alpha )={\mathcal {O}}\left( \epsilon ^{(m-1)(1-m\alpha ^2)}\right) \), as \(\epsilon \rightarrow 0\), which combined with the lower bound (17) and Theorem 1.1 proves Corollary 2.1.
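The bookkeeping behind this final step can be made explicit. Assuming, as the argument suggests, that (8) and Theorem 1.1 make \({\mathbb {E}}\left[ X_n(\alpha )^m\right] \) comparable to \(n^{m\alpha ^2}I_{1/n}(\alpha )\), the matching upper and lower bounds of order \(\epsilon ^{(m-1)(1-m\alpha ^2)}\) with \(\epsilon =1/n\) give

$$\begin{aligned} \log {\mathbb {E}}\left[ X_n(\alpha )^m\right] =m\alpha ^2\log n+(m-1)(m\alpha ^2-1)\log n+{\mathcal {O}}(1)=\left( m^2\alpha ^2-m+1\right) \log n+{\mathcal {O}}(1). \end{aligned}$$

For example, for \(m=2\) and \(\alpha =1\) the exponent is \(4-2+1=3\): the two-point integrand is of order \(n^4\) on a region of width \(1/n\) around the diagonal.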

2.1.3 \(m\alpha ^2=1\)

We start by finding a lower bound for \(I_\epsilon (\alpha )\) when \(m\alpha ^2=1\). Let

and let \(B_\epsilon (j)=D_\epsilon (j){\setminus } D_\epsilon (j-1)\). On \(B_\epsilon (j)\), the integrand in (10) satisfies

for \(j=1,2,\ldots ,1/\epsilon \), assuming for ease of notation that \(1/\epsilon \) is an integer. Combined with the fact that \(B_\epsilon (j)\) are disjoint for \(j=1,2,\dots \) and the fact that \(m\alpha ^2=1\), it follows that

Since

for j sufficiently large, say \(j>j_0\), it follows that

for some constant \({\hat{c}}_0\). Thus we have a lower bound for \(I_\epsilon (\alpha )\) and we look to obtain a corresponding upper bound.

We observe that the upper bounds (19) and (24) hold also for \(m\alpha ^2=1\). If \(m=2\) and \(m\alpha ^2=1\), then the right hand side of (24) is of order \(\log \epsilon ^{-1}\) as \(\epsilon \rightarrow 0\). We assume that \(m>2\) and integrate in the variable \(s_{\sigma (m-1)}\). We have \(2\alpha ^2=2/m<1\), and it follows that

as \(\epsilon \rightarrow 0\), and thus

as \(\epsilon \rightarrow 0\) where integration on the right hand side is taken over \(0<s_{\sigma (m-2)}<\dots<s_{\sigma (1)}<1\). We redefine \(J_\epsilon (v)\) as follows

with integration taken over \(0<s_{\sigma (m-v-1)}<\dots<s_{\sigma (1)}<1\). Since \(m\alpha ^2=1\), we note that the power of \(s_{\sigma (m-v-1)}\) is greater than \(-1\) for \(v=1,2,\ldots ,m-3\), namely:

and it follows that \(J_\epsilon (v)={\mathcal {O}}\left( J_\epsilon (v+1)\right) \) as \(\epsilon \rightarrow 0\) for such v. It follows that

as \(\epsilon \rightarrow 0\). Since \(m\alpha ^2=1\), it follows that the right hand side is simply \({\mathcal {O}}\left( \log \epsilon ^{-1}\right) \). Thus, combined with (26) and Theorem 1.1, we have proven Corollary 2.1 for \(m\alpha ^2=1\).

3 Statistics of Impenetrable Bosons in 1 Dimension

Consider

It was proven by Girardeau [36] that it has the following properties:

- \(\psi \) is the ground-state solution to the general time-independent Schrödinger equation in one dimension with n particles.

- \(\psi \) is symmetric with respect to interchange of \(x_i\) and \(x_j\) for \(i\ne j\) (Bose–Einstein statistics).

- \(\psi \) is translationally invariant with period L.

- \(\psi \) vanishes when \(x_i=x_j\) for \(i\ne j\) (mutual impenetrability of particles).

In fact Girardeau only proved the above for odd n, but as noted by Lieb and Liniger [51] (footnote 6), it is equally valid for even n. When the system is in the ground state, the wave function \(\psi \) gives rise to a probability distribution for both the position and momentum. The position of the particles on [0, L) has joint probability density function \(\psi (x_1,\ldots ,x_n)^2\). Following in the footsteps of Girardeau, we take as our starting point that the wave function for the momentum is given by the Fourier transform of the wave function of the position:

Thus the probability of the j’th particle having momentum \(2\pi {\mathcal {M}}_j/L\) for each \(j=1,\ldots , n\) is given by

It is easily verified that \(\phi ({\mathcal {M}}_1,\ldots ,{\mathcal {M}}_n)\) is independent of L, and that

Thus \(|\phi |^2\) may simply be viewed as a probability distribution on \({\mathbb {Z}}^n\), which is the viewpoint we will take in Corollary 3.1 below, where we fix \(L=2\pi \) without loss of generality.

Since the particles are indistinguishable from one another, it is preferable to characterize the distribution as a point process, which we do as follows. Let \(N_{{\mathcal {M}}}(n)\) denote the number of particles with momentum \(2\pi {\mathcal {M}}/L\). Then if \({\mathcal {M}}_1,\ldots , {\mathcal {M}}_k\) are distinct, it follows from (27) by a straightforward calculation that

where

The above is only valid for distinct particles; for moments of \(N_{\mathcal {M}}\) we have

where \(N_{{\mathcal {M}}}=N_{{\mathcal {M}}}(n)\). Then the expected number of particles with 0 momentum is given by \(\pi _{1,n}(0)\). In 1963, Schultz [58] proved that \(\pi _{1,n}(0)={\mathcal {O}} (n^{-\pi /4})\) as \(n \rightarrow \infty \), which shows that there is no Bose–Einstein condensation according to the Penrose-Onsager criterion (the criterion states that if the proportion of the particles expected to have 0 momentum tends to 0 as \(n\rightarrow \infty \), then there is no Bose–Einstein condensation). The upper bound obtained by Schultz was not optimal. In 1964 Lenard [49] was able to improve on this, and obtained that \({\mathbb {E}}(N_0(n))={\mathcal {O}}(n^{1/2})\) as \(n\rightarrow \infty \). Lenard’s approach was to make a connection to Toeplitz determinants with Fisher–Hartwig singularities by observing that if we denote the k particle reduced density matrix by

then \( \rho _{k,n}\) is a Toeplitz determinant with 2k FH singularities. This observation relies on the multiple integral formula (7). By (29) and (31) it is easily verified that

Thus, to obtain the asymptotics of \(\pi _{k,n}\) one must obtain those of \(\rho _{k,n}\). The asymptotics of \( \rho _{k,n}(x_1,\ldots , x_k, y_1,\ldots , y_k)\) were studied in the limit \(n\rightarrow \infty \) with \(L=n\) and for fixed \(x_j, y_j\) independent of n in [42, 64]. This is equivalent to studying Toeplitz determinants with 2k FH singularities with \(\alpha _j=1/2\) for \(j=1,\ldots , 2k\), in the double scaling limit where the singularities are all at a distance \({\mathcal {O}}(1/n)\) from each other. This gave rise to some of the first connections to Painlevé V in the study of Toeplitz determinants. To obtain more detailed asymptotics for \(\pi _{k,n}\), however, uniform asymptotics of \(\rho _{k,n}\) are required. As mentioned in the introduction, Claeys and Krasovsky [16] obtained uniform asymptotics for \(\rho _{1,n}\), and they relied on (4) to prove that

as \(n\rightarrow \infty \) (see formula (1.53) of [16]).

We are interested in not just the expectation of \(N_0(n)/\sqrt{n}\), but also the variance and higher moments. By combining (2)–(3) with Theorem 1.1, we obtain the following.

Corollary 3.1

Fix \(L=2\pi \) and let \({\mathcal {M}}_1,\ldots , {\mathcal {M}}_n \in {\mathbb {Z}}\) be random variables with the probability distribution

Then, as \(n\rightarrow \infty \),

where

Proof

To study higher moments of \(N_0=N_0(n)\), we have by (30) that

for any fixed k.

We note that \(\pi _{k,n}(0,\ldots ,0)\) is independent of L, and so we set \(L=2\pi \). Thus, by (31)–(32),

where we recall the notation (9). Thus \(\pi _{k,n}(0,\ldots ,0)\) is evaluated by combining (2)–(3) and Theorem 1.1 as \(n\rightarrow \infty \) by using similar types of arguments as in Sect. 2.1.1, and we obtain

Thus the corollary follows from (33). \(\quad \square \)

4 Method of Proof of Theorem 1.1

Denote \(\psi _0(z)=\chi _0=1/\sqrt{D_1(f)}\) and define the polynomials \(\psi _j\) for \(j=1,2,\dots \) by

where the leading coefficient \(\chi _j\) is given by

By the representation (7), it follows that \(D_j(f)>0\) and we fix \(\chi _j>0\). It is easily seen that \(\psi _j\) are orthonormal on the unit circle:

for \(j,k=0,1,2,\dots \). By (34) and the definition \(\chi _0=1/\sqrt{D_1(f)}\),

In order to obtain asymptotics for \(\log D_n(f)\) as \(n\rightarrow \infty \), we will obtain the asymptotics of \(\log \chi _N\) as \(N\rightarrow \infty \) in Proposition 4.1 below, and take the sum of these contributions. The asymptotics of \(\log \chi _N\) will depend on the locations of the singularities, and our strategy to systematize the different asymptotic behaviour that occurs for different constellations of singularities is to classify singularities that are close together as being in the same cluster, a notion which we will formalize shortly. Very roughly speaking, our goal is to prove that if all the singularities in a cluster are of distance o(1/N) apart then they behave as a single point, and if all the clusters are sufficiently well separated by a distance at least U/N for some sufficiently large U, then for our purposes the interaction between the clusters is small. This is the content of Proposition 4.1 (b) below. For configurations which do not fit into this setting, i.e. where there exist two singularities such that \(N\left| e^{it_j}-e^{it_{j+1}}\right| \) is neither small nor large, we still divide the points into clusters, but the division into clusters is somewhat more arbitrary in this situation and we obtain less detailed asymptotics. This is the content of Proposition 4.1 (a) below, which covers all possible configurations.

We now formalize the notion of the clusters. Given \(U>\epsilon >0\), we say that the parameters \(t_1,\ldots , t_m\) satisfy condition \((\epsilon ,U,n)\) if \(t_1,\ldots ,t_m\in {\mathcal {S}}_t\), where

and for each \(1\le j<k\le m\), either \(t_k-t_j< \epsilon /n\) or \(t_k-t_j\ge U/n\). The assumption that \(t_m<2\pi -\pi /m\) can be made without loss of generality when studying Toeplitz determinants, since the Toeplitz determinant is rotationally invariant (i.e. \(D_n(f(e^{i\theta }))=D_n(f(e^{i(\theta +x)}))\) for all \(x\in [0,2\pi )\)).

If \(t_1,\ldots ,t_m\) satisfy condition \((\epsilon ,U,n)\), the points \(\{t_1,\ldots ,t_m\}\) partition naturally into clusters \(\mathbf{Cl} _1(\epsilon ,U,n),\ldots , \mathbf{Cl} _r(\epsilon ,U,n)\), where \(r=r(\epsilon ,U,n)\), satisfying the following conditions.

- The radius of each cluster is less than \(\epsilon /n\). Namely, \(\epsilon _n<\epsilon \), where

$$\begin{aligned} \epsilon _n=n\max _{j=1,\ldots ,r}\, \max _{x,y\in \mathbf{Cl}_j(\epsilon ,U,n)} |x-y|. \end{aligned}$$

- The distance between any two clusters is greater than U/n. Namely, \({\widehat{u}}_n>U\), where

$$\begin{aligned} {\widehat{u}}_n=n\min _{1\le j<k\le r} \, \min _{\begin{array}{c} x\in \mathbf{Cl}_j\\ y\in \mathbf{Cl}_k \end{array}} |x-y|. \end{aligned} \tag{38}$$

If there is only one singularity in each cluster we take \(\epsilon _n=0\). If all the singularities are in a single cluster, we take \({\widehat{u}}_n={\hat{k}} n\) for some sufficiently small constant \({\hat{k}}>0\) (the meaning of "sufficiently small" will be determined in the Riemann-Hilbert analysis of Sect. 6).
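The definitions above translate directly into a small routine. The following sketch checks condition \((\epsilon ,U,n)\) for a list of points, forms the clusters, and returns \(\epsilon _n\) and \({\widehat{u}}_n\). The function name and return convention are illustrative. Two choices are ours rather than the paper's: a chain of small gaps whose total length reaches \(\epsilon /n\) is treated as a failure, and for a single cluster \({\widehat{u}}_n\) is returned as None instead of the conventional value \({\hat{k}}n\).

```python
def clusters(ts, eps, U, n):
    """Partition points into clusters under condition (eps, U, n).

    Returns (cluster_list, eps_n, u_hat_n), or None if the condition fails,
    i.e. if some pair of points has a gap in [eps/n, U/n).
    """
    if not ts:
        raise ValueError("need at least one point")
    ts = sorted(ts)
    for j in range(len(ts)):
        for k in range(j + 1, len(ts)):
            if eps / n <= ts[k] - ts[j] < U / n:
                return None
    # Consecutive points closer than eps/n belong to the same cluster.
    groups = [[ts[0]]]
    for t in ts[1:]:
        if t - groups[-1][-1] < eps / n:
            groups[-1].append(t)
        else:
            groups.append([t])
    # Our choice: reject chains whose total extent is not below eps/n.
    if any(g[-1] - g[0] >= eps / n for g in groups):
        return None
    eps_n = n * max(g[-1] - g[0] for g in groups)
    if len(groups) == 1:
        return groups, eps_n, None
    u_hat_n = n * min(b[0] - a[-1] for a, b in zip(groups, groups[1:]))
    return groups, eps_n, u_hat_n


if __name__ == "__main__":
    print(clusters([0.0, 0.001, 1.0], eps=1.0, U=10.0, n=100))
    print(clusters([0.0, 0.05], eps=1.0, U=10.0, n=100))  # gap is in between
```

Note that \(\epsilon _n=0\) automatically when every cluster is a single point, in agreement with the convention stated above.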

In Sects. 5–6, we prove the following proposition.

Proposition 4.1

(a) As \(n\rightarrow \infty \),

$$\begin{aligned} \log \chi _n=-V_0/2 +{\mathcal {O}}(1/n), \end{aligned}$$

uniformly for \(t_1,\ldots ,t_m\in {\mathcal {S}}_t\).

(b) There exist \(U_1>U_0>0\), \(C>0\) and \(n_0>0\), such that if the parameters \(t_1,\ldots ,t_m\) satisfy condition \((U_0,U_1,n)\) and \(n>n_0\), then

$$\begin{aligned} \left| \log \chi _n+V_0/2 +H_n(\alpha _j,\beta _j,t_j)_{j=1}^m\right| <C\left( \frac{1}{n{\widehat{u}}_n}+\frac{\epsilon _n}{n}\right) , \end{aligned}$$

where

$$\begin{aligned} H_n(\alpha _j,\beta _j,t_j)_{j=1}^m= \frac{1}{2n}\sum _{j=1}^m \left( \alpha _j^2 - \beta _j^2\right) +\frac{1}{n}\sum _{1\le j<k\le m}(\alpha _j\alpha _k-\beta _j\beta _k)\mathbb {1}_{U_0/n}(|t_k-t_j|),\end{aligned}$$

and

$$\begin{aligned} \mathbb {1}_{U_0/n}(x)={\left\{ \begin{array}{ll} 1&{}0<x<U_0/n,\\ 0&{}U_0/n<x. \end{array}\right. } \end{aligned}$$
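Proposition 4.1 (b) can be checked by hand in one exactly solvable case. For the symbol \(f(z)=|z-1|^2\) (a single singularity with \(\alpha =1\), \(\beta =0\), \(V=0\)) the Toeplitz matrix is tridiagonal and \(D_n(f)=n+1\). The sketch below assumes the standard relation \(\chi _n^2=D_n(f)/D_{n+1}(f)\), which is consistent with \(\chi _0=1/\sqrt{D_1(f)}\), and evaluates \(H_n\) as defined above; the function names are illustrative. In this case \(\log \chi _n+H_n\) should be of order \(1/n^2\), in line with the bound in (b) when all singularities form a single cluster.

```python
import math


def H_n(alphas, betas, ts, n, U0):
    """H_n from Proposition 4.1 (b); betas are purely imaginary numbers."""
    m = len(ts)
    # (b * b).real is -|b|^2 for purely imaginary b, so -beta^2 is >= 0.
    single = sum(a * a - (b * b).real for a, b in zip(alphas, betas)) / (2 * n)
    pair = 0.0
    for j in range(m):
        for k in range(j + 1, m):
            if 0 < abs(ts[k] - ts[j]) < U0 / n:
                pair += alphas[j] * alphas[k] - (betas[j] * betas[k]).real
    return single + pair / n


def log_chi_exact(n):
    """log chi_n for f = |z - 1|^2, assuming chi_n^2 = D_n / D_{n+1}, D_n = n + 1."""
    return 0.5 * math.log((n + 1) / (n + 2))


if __name__ == "__main__":
    for n in (10, 100, 1000):
        residual = log_chi_exact(n) + H_n([1.0], [0j], [0.0], n, U0=1.0)
        print(n, n * n * residual)  # tends to 3/4
```

Expanding the logarithm gives \(\log \chi _n=-\frac{1}{2n}+\frac{3}{4n^2}+{\mathcal {O}}(n^{-3})\), so the rescaled residual printed above approaches 3/4.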

Using Proposition 4.1, we now compute the asymptotics of \(D_n(f)\) as \(n\rightarrow \infty \), for a specific configuration \(0\le t_1<t_2<\dots<t_m<2\pi -\pi /m\), but with error terms which are uniform over all configurations. Let \(n_0\) be a fixed positive integer such that the asymptotics of Proposition 4.1 are valid for \(n\ge n_0\). Then \(D_{n_0}(f)\) is a continuous function in terms of \(t_j\) on the compact set \(t_1,\ldots ,t_m\in [0,2\pi ]\), and is thus uniformly bounded as \(t_j\) vary. Thus by (36)

as \(n\rightarrow \infty \), uniformly over \({\mathcal {S}}_t\). Denote \({\mathbb {N}}_0={\mathbb {N}} {\setminus } \{0,1,2,\ldots ,n_0\}\), and let

with complement \(J^c_t={\mathbb {N}}_0{\setminus } J_t\). Then

Written differently,

and it follows that

uniformly for \(t_{j+1}-t_j>0\). Since \(U_0\) and \(U_1\) are fixed, it follows that the right hand side is uniformly bounded, and by Proposition 4.1 (a) and the fact that \(H_N={\mathcal {O}}(1/N)\),

uniformly for \(t_1,\ldots ,t_m\in [0,2\pi -\pi /m)\).

Suppose that \(t_1,\ldots ,t_m\) satisfy condition \((U_0,U_1,N)\) for N in an interval \(N_1,N_1+1,\ldots ,N_2\). By Proposition 4.1 (b),

Since \({\widehat{u}}_N=N(t_i-t_{i-1})\) for some \(i\in \{2,3,\ldots ,m\}\) (where i is fixed for \(N\in [N_1,N_2]\)), it follows that \(\frac{{\widehat{u}}_N}{N}=\frac{{\widehat{u}}_{N_1}}{N_1}\), and as a consequence (bearing in mind that \({\widehat{u}}_{N_1}>U_1\)) we have \({\widehat{u}}_N>\frac{NU_1}{N_1}\). Thus,

Similarly, \(\epsilon _N/N=\epsilon _{N_2}/N_2\), and

Since \(J_t^c\) is composed of at most \(m-1\) disjoint intervals, it follows that \(J_t\) is composed of at most m disjoint intervals, and it follows by (41)–(43) that

Since \(U_0, U_1,n_0\) are just arbitrary constants, the right hand side is bounded uniformly over \({\mathcal {S}}_t\). Thus, by (39), (40), (44), it follows that

uniformly over \({\mathcal {S}}_t\). Since

as \(n\rightarrow \infty \), with the implicit constant depending only on \(U_0\) which is fixed,

as \(n\rightarrow \infty \), uniformly over \({\mathcal {S}}_t\). For such \(t_1,\ldots ,t_m\),

with uniform error terms, which yields Theorem 1.1 for \(t_1,\ldots ,t_m\in {\mathcal {S}}_t\), and the full theorem follows from the aforementioned rotational invariance of the Toeplitz determinant.
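The mechanism by which Proposition 4.1 (b) produces the logarithmic terms can be seen from a heuristic computation that ignores all error terms and assumes that (36) takes the telescoping form \(\log D_n(f)=-2\sum _{N=0}^{n-1}\log \chi _N\). For a single pair \(j<k\) at distance \(\Delta =t_k-t_j\), the indicator \(\mathbb {1}_{U_0/N}(\Delta )\) equals 1 precisely when \(N<U_0/\Delta \), so that

$$\begin{aligned} 2\sum _{N=1}^{n}\frac{1}{N}\,\mathbb {1}_{U_0/N}(\Delta )=2\log \min \left( n,\frac{U_0}{\Delta }\right) +{\mathcal {O}}(1)=-2\log \left( \Delta +\frac{1}{n}\right) +{\mathcal {O}}(1), \end{aligned}$$

with the \({\mathcal {O}}(1)\) terms depending only on \(U_0\). Multiplying by \(\alpha _j\alpha _k-\beta _j\beta _k\) gives the interaction between the two singularities, while the diagonal part of \(2H_N\) contributes \(\sum _{j=1}^m(\alpha _j^2-\beta _j^2)\sum _{N=1}^n\frac{1}{N}=\sum _{j=1}^m(\alpha _j^2-\beta _j^2)\log n+{\mathcal {O}}(1)\). When \(\Delta \) is of order \(1/n\) or smaller, the two contributions combine to \(\left( (\alpha _j+\alpha _k)^2-(\beta _j+\beta _k)^2\right) \log n\), as expected for a single merged singularity.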

4.1 Structure of the proof of Proposition 4.1

We will prove the following proposition, which holds if and only if Proposition 4.1 (a) holds.

Proposition 4.2

Given \(u>0\), there exists \({\widetilde{U}}>u\), \({\widetilde{C}}>0\), and \({\widetilde{N}}_0>0\) such that if the parameters \(t_1,\ldots ,t_m\) satisfy condition \((u,{\widetilde{U}},n)\) and \(n>{\widetilde{N}}_0\), then

We now show that Proposition 4.2 implies Proposition 4.1 (a). It will be useful to make the dependence of the implicit constants in Proposition 4.2 more explicit, so we denote \({\widetilde{U}}={\widetilde{U}}(u)\), \({\widetilde{C}}={\widetilde{C}}(u)\), and \({\widetilde{N}}_0={\widetilde{N}}_0(u)\). Let \(U_0,U_1\) be two constants for which Proposition 4.1 (b) holds. For \(j=1,2,\ldots ,m-1\), we define \(U_{j+1}={\widetilde{U}} (U_j)\) (meaning that given \(u=U_j\), Proposition 4.2 holds with \({\widetilde{U}}={\widetilde{U}}(U_j)\), which we define to be equal to \(U_{j+1}\)). For each \(N=1,2,\ldots ,n\), we have a sequence of conditions

Then the configuration \(t_1,\ldots ,t_m\) will satisfy one of the conditions in the sequence (46), because otherwise, for each \(k=1,2,\ldots ,m\), one would have a corresponding \(j=j(k)=2,3,\ldots ,m\) such that \(U_{k-1}/N\le t_j-t_{j-1}< U_k/N\), meaning that there are at least \(m+1\) distinct points in \(\{t_j\}_{j=1}^m\), which is a contradiction. Thus, if we denote the maximum of the implicit constants \({\widetilde{C}}(U_j)\) and \({\widetilde{N}}_0(U_j)\) over \(j=1,\ldots , m-1\) by \({\widetilde{C}}_{\text {max}}\) and \({\widetilde{N}}_{0, \text {max}}\) we obtain

for any \(n\ge {\widetilde{N}}_{0, \text {max}}\), which proves Proposition 4.1 (a).

We will prove Proposition 4.1 (b) and Proposition 4.2 by applying the Deift–Zhou steepest descent analysis [26] to a Riemann–Hilbert (RH) problem associated to the orthogonal polynomials \(\psi _j\). Under the Deift–Zhou steepest descent framework, there are several standard ingredients, including the opening of the lens, and the construction of a main parametrix and local parametrices. Among these ingredients, the opening of the lens and the construction of the local parametrices are the most involved. Each local parametrix contains a cluster \(\mathbf{Cl}_j(u,{\widetilde{U}},n)\), and we map a model RH problem to a shrinking disc containing \(\mathbf{Cl}_j(u,{\widetilde{U}},n)\). We construct and analyze the model RH problem in the next section, Sect. 5, and use these results in Sect. 6 to prove Propositions 4.1(b) and 4.2.

5 Model RH Problem

In this section we introduce and analyze a model Riemann–Hilbert problem, which will be an important tool in the analysis of the asymptotics of the leading coefficient \(\chi _N\) defined in (34). While specialists in the field will be well aware of the significance of the model problem in the analysis of Riemann–Hilbert problems, we have not yet demonstrated its utility, and the reader may find it illuminating to first glance at Sect. 6 to see how we rely on the model problem to prove Propositions 4.1(b) and 4.2. A good reference for Riemann–Hilbert problems in random matrix theory is the book by Deift [21]; the author also recommends two sets of lecture notes by Deift [22] and Kuijlaars [48].

We pose a Riemann–Hilbert problem for \(\Phi =\Phi \left( \zeta ;(w_j,\alpha _j,\beta _j)_{j=1}^{\mu }\right) \) with parameters

-

\(\mu =1,2,3,\dots \),

-

\(-u/2\le w_\mu<w_{\mu -1}<\dots<w_2<w_1\le u/2\), where \(w_1\ge 0\),

-

\(\alpha _j\ge 0\) and \(\mathrm{Re \,}\beta _j=0\), with \((\alpha _j,\beta _j)\ne (0,0)\) for \(j=1,2,\ldots ,\mu \),

where \(u>0\) is some fixed constant.

The model RH problem will later be used to construct a local parametrix at each cluster of points, where \(\mu \) will be the number of points in the cluster. In particular, this means that the ordering of the \(\alpha _j,\beta _j\) here does not necessarily correspond to that in the definition of the Toeplitz determinant; we again refer to Sect. 6 for details on the manner in which the model RH problem is utilized.

RH problem for \(\Phi \)

-

(a)

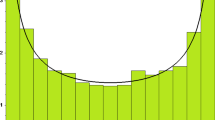

\(\Phi \) is analytic on \({\mathbb {C}}{\setminus } \Gamma _\Phi \), where \(\Gamma _\Phi \) is described by Fig. 1 for \(\mu =4\), and is in general given by

$$\begin{aligned} \begin{aligned} \Gamma _\Phi&=\cup _{j=0}^5 \Gamma _j ,&\Gamma _0&=[-iu,iu],\\ \Gamma _1&=iu+e^{\pi i/4}{\mathbb {R}}_+,&\Gamma _2&=iu+e^{3\pi i /4}{\mathbb {R}}_+,&\Gamma _3&=-iu+e^{5\pi i/4}{\mathbb {R}}_+\\ \Gamma _4&=-iu+e^{7\pi i /4}{\mathbb {R}}_+,&\Gamma _5&=\cup _{j=1}^\mu \{z:\mathrm{Im \,}z=w_j\}, \end{aligned} \end{aligned}$$where \(iu+e^{\pi i j/4}{\mathbb {R}}_+=\{z:\arg (z-iu)=\pi j/4\}\), with the orientation of \(\Gamma _5\) taken to the right, and the orientation of \(\Gamma _j\) taken upwards for \(j=0,\ldots , 4\). On each line segment of the contour, we denote the left hand and right hand side by the + and - side respectively, where left and right are with respect to the orientation of the curve.

-

(b)

\(\Phi \) has continuous boundary values \(\Phi _+(\zeta )\) and \(\Phi _-(\zeta )\) as \(\zeta \in \Gamma _\Phi {\setminus }\{iw_1,\ldots , iw_\mu ,iu,-iu\}\) is approached nontangentially from the \(+\) and − side respectively. Furthermore, \(\Phi _+\) and \(\Phi _-\) are related by the following jumps on \(\Gamma _\Phi \):

$$\begin{aligned} \begin{aligned} \Phi _+(\zeta )&=\Phi _-(\zeta )e^{\pi i (\alpha _j+\beta _j)}&\text {for }\mathrm{Im \,}\zeta =w_j\text { and }\mathrm{Re \,}\zeta >0,\\ \Phi _+(\zeta )&=\Phi _-(\zeta )e^{\pi i (\alpha _j-\beta _j)}&\text {for }\mathrm{Im \,}\zeta =w_j\text { and }\mathrm{Re \,}\zeta <0,\\ \Phi _+(\zeta )&=\Phi _-(\zeta )\begin{pmatrix} 1&{}1\\ 0&{}1 \end{pmatrix}&\text {for }\zeta \in \Gamma _1,\Gamma _4,\\ \Phi _+(\zeta )&=\Phi _-(\zeta )\begin{pmatrix} 1&{}0\\ -1&{}1 \end{pmatrix}&\text {for }\zeta \in \Gamma _2,\Gamma _3,\\ \Phi _+(\zeta )&=\Phi _-(\zeta )J_0&\text {for }\zeta \in \Gamma _0{\setminus } \{-iu,iu,iw_1,\ldots ,iw_\mu \},\\ \end{aligned} \end{aligned}$$where \(J_0=\begin{pmatrix} 0&{}1\\ -1&{}1 \end{pmatrix}\).

-

(c)

As \(\zeta \rightarrow \infty \),

$$\begin{aligned} \begin{aligned} \Phi (\zeta )&=\left( I+\frac{\Phi _1}{\zeta }+{\mathcal {O}}(\zeta ^{-2})\right) e^{-\frac{\zeta }{2} \sigma _3}\prod _{j=1}^\mu (\zeta -iw_j)^{-\beta _j\sigma _3}\\&\quad \exp \left[ \pi i(\beta _j-\alpha _j)\chi _{w_j}(\zeta )\sigma _3\right] , \\ \chi _w(\zeta )&={\left\{ \begin{array}{ll} 0&{}\text {for }\mathrm{Im \,}\zeta >w,\\ 1&{}\text {for }\mathrm{Im \,}\zeta <w,\\ \end{array}\right. } \end{aligned} \end{aligned}$$where the branches are chosen such that \(\arg (\zeta -iw_j)\in [0,2\pi )\) for \(j=1,\ldots ,\mu \), and where \(\Phi _1=\Phi _1\left( \mu ;(w_j,\alpha _j,\beta _j)_{j=1}^\mu \right) \) is independent of \(\zeta \).

-

(d)

\(\Phi (\zeta )\) is bounded as \(\zeta \rightarrow \pm iu\). As \(\zeta \rightarrow iw_j\) for \(j=1,\ldots , \mu \) in the sector \(\arg (\zeta -iw_j)\in (\pi /2,\pi )\),

$$\begin{aligned} \Phi (\zeta )={\left\{ \begin{array}{ll}F_j(\zeta )(\zeta -iw_j)^{\alpha _j\sigma _3}\begin{pmatrix} 1&{}g(\alpha _j,\beta _j)\\ 0&{}1 \end{pmatrix} &{}\text {for }2\alpha _j\notin {\mathbb {N}}=\{0,1,2,\dots \},\\ F_j(\zeta )(\zeta -iw_j)^{\alpha _j\sigma _3}\begin{pmatrix} 1&{}g(\alpha _j,\beta _j)\log (\zeta -iw_j)\\ 0&{}1 \end{pmatrix}&\text {for }2\alpha _j\in {\mathbb {N}}, \end{array}\right. } \end{aligned}$$for some function \(F_j\) which is analytic on a neighbourhood of \(iw_j\), and

$$\begin{aligned} g(\alpha ,\beta )={\left\{ \begin{array}{ll}-\frac{e^{2\pi i \beta }-e^{-2\pi i \alpha }}{2i\sin 2\pi \alpha }&{}\text {for }2\alpha \notin {\mathbb {N}},\\ \frac{i}{2\pi }\left( (-1)^{2\alpha }e^{2\pi i \beta }-1\right) &{}\text {for }2\alpha \in {\mathbb {N}}. \end{array}\right. } \end{aligned}$$(47)Furthermore, as \(\zeta {\rightarrow } iw_j\), from any sector, \(\Phi (\zeta ) = {\mathcal {O}}\left( |\zeta -iw_j|^{-\alpha _j}|\log (\zeta {-}iw_j)|\right) \).
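The two branches of \(g(\alpha ,\beta )\) in (47) can be evaluated as in the following minimal sketch. The function name `fh_g` and the tolerance used to decide whether \(2\alpha \) is an integer are illustrative assumptions, not part of the paper.

```python
import cmath
import math


def fh_g(alpha, beta, tol=1e-12):
    """Evaluate g(alpha, beta) from (47).

    alpha : real, alpha >= 0
    beta  : complex with zero real part (a real 0.0 is also accepted)
    tol   : assumed tolerance for testing whether 2*alpha is an integer
    """
    two_alpha = 2 * alpha
    k = round(two_alpha)
    if abs(two_alpha - k) < tol:
        # Branch for 2*alpha in {0, 1, 2, ...}
        return 1j / (2 * math.pi) * ((-1) ** k * cmath.exp(2j * math.pi * beta) - 1)
    # Generic branch for 2*alpha not an integer
    numerator = cmath.exp(2j * math.pi * beta) - cmath.exp(-2j * math.pi * alpha)
    return -numerator / (2j * math.sin(2 * math.pi * alpha))


g_trivial = fh_g(0.0, 0.0)      # no singularity: g vanishes
g_half = fh_g(0.5, 0.0)         # integer branch with 2*alpha = 1
g_quarter = fh_g(0.25, 0.0)     # generic branch
```

For \(\alpha =\beta =0\) the integer branch gives \(g=0\), so the logarithmic term in condition (d) disappears, as one would expect in the absence of a singularity.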

Remark 5.1

The RH problem for \(\Phi \) gives rise to an RH problem for the determinant of \(\Phi \) as follows. Since the jump matrices of the RH problem for \(\Phi \) have determinant 1, it follows that \(\det \Phi \) extends to a meromorphic function in the complex plane. Since \(\det \Phi (\zeta )\rightarrow 1\) as \(\zeta \rightarrow \infty \) and as \(\zeta \rightarrow iw_j\) for \(j=1,\ldots , \mu \), we obtain \(\det \Phi (\zeta )=1\) for all \(\zeta \in {\mathbb {C}}\) by Liouville’s theorem, and in particular \(\Phi \) is invertible. Furthermore, if a solution exists to the RH problem for \(\Phi \), then it is unique, because if \({\widetilde{\Phi }}\) were a second solution, then \({\widetilde{\Phi }}(\zeta )\Phi (\zeta )^{-1}\) would extend to a meromorphic function in the complex plane by condition (b) for the RH problem for \(\Phi \), and by conditions (c) and (d) and Liouville’s theorem it would in fact be the identity. It is not clear a priori that a solution exists; this is the content of Proposition 5.3 below.

Remark 5.2

We use the standard notation

Note that \(\pm iu\) are not special points, and therefore the values of u are not significant, aside from the fact that \(|w_j|<u\) for \(j=1,\ldots , \mu \). We present the RH problem in this manner for notational convenience and to make it clear that the local behaviour at each singularity can be presented in the same form, also for the top and bottom singularity.

We also note that g was chosen such that the local behaviour of \(\Phi \) at the point \(iw_j\) is consistent with the jumps.

5.0.1 The case of a single singularity \(\mu =1\)

When there is only one singularity \(\mu =1\) and \(w_1,u=0\), the RH problem for \(\Phi \) (and equivalent versions of it) has been studied by many authors. It was first solved by Kuijlaars and Vanlessen in [47, 65] for \(\beta =0\) in terms of Bessel functions, and brought to the setting of determinants by Krasovsky in [46] (the topic in [46] was Hankel determinants, see also [12]). For \(\alpha =0\) it was solved by Its and Krasovsky in [41], and a solution for general \(\alpha ,\beta \) was found in terms of confluent hypergeometric functions by Deift, Its, Krasovsky in [23, 25] and Moreno in [54].

Claeys, Its and Krasovsky [15] brought the above solution to the form which we will refer to. In [15] the RH problem is denoted by M, which we will denote by \(M_{\text {CIK}}\), and by comparison of RH problems it follows that

when one takes \(u=0\). The solution to \(M_{\text {CIK}}\) may also be found in [16], Section 4, where we find the following formula

where \(\Gamma \) is Euler’s \(\Gamma \) function.

5.0.2 The case of multiple singularities \(\mu >1\)

In the case of 2 singularities \(\mu =2\), an equivalent version of the RH problem for \(\Phi \) was proven to have a unique solution by Claeys and Krasovsky [16], and to be connected to the Painlevé V equation. See also [30] for a reference on Riemann–Hilbert problems connected to the Painlevé equations. The proof of a unique solution by [16] generalizes easily to our situation of \(\mu =1,2,3,\dots \) singularities, and we have included a proof of the following proposition in the Appendix for the reader’s convenience.

Proposition 5.3

Let \(\alpha _j\ge 0\) and \(\mathrm{Re \,}\beta _j=0\) for \(j=1,2,\dots ,\mu \). There exists a unique solution to the Riemann–Hilbert problem for \(\Phi \).

5.1 Continuity of \(\Phi \) for varying \(w_j\)’s

The main result of Sect. 5 is the following.

Lemma 5.4

Let \(\alpha _j\ge 0\) and \(\mathrm{Re \,}\beta _j=0\) for \(j=1,2,\ldots , \mu \). Then the following two statements hold.

-

(a)

Given \(u>0\),

$$\begin{aligned} \Phi (\zeta )e^{\frac{\zeta }{2} \sigma _3}\prod _{j=1}^\mu (\zeta -iw_j)^{\beta _j\sigma _3}\exp \left[ \pi i(-\beta _j+\alpha _j)\chi _{w_j}(\zeta )\sigma _3\right] =I+{\mathcal {O}} \left( \frac{1}{\zeta }\right) , \end{aligned}$$(49)as \(\zeta \rightarrow \infty \), uniformly for \(-u/2\le w_\mu<\dots <w_1\le u/2\).

-

(b)

As \(w_1-w_\mu \rightarrow 0\),

$$\begin{aligned} \Phi _1\left( \mu ;(w_j,\alpha _j,\beta _j)_{j=1}^\mu \right)= & {} \begin{pmatrix} {\mathcal {A}}^2-{\mathcal {B}}^2&{}-e^{-\pi i ({\mathcal {A}}+{\mathcal {B}})}\frac{\Gamma (1+{\mathcal {A}}-{\mathcal {B}})}{\Gamma ({\mathcal {A}}+{\mathcal {B}})}\\ e^{\pi i ({\mathcal {A}}+{\mathcal {B}})}\frac{\Gamma (1+{\mathcal {A}}+{\mathcal {B}})}{\Gamma ({\mathcal {A}}-{\mathcal {B}})} &{}{\mathcal {B}}^2-{\mathcal {A}}^2 \end{pmatrix}\nonumber \\&\quad +{\mathcal {O}}(w_1-w_\mu ), \end{aligned}$$(50)where \({\mathcal {A}}=\sum _{j=1}^\mu \alpha _j\) and \({\mathcal {B}}=\sum _{j=1}^\mu \beta _j\).
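As a consistency check on (50), which is not part of the original argument, one may note the following. Since \(\det \Phi \equiv 1\) by Remark 5.1 and each explicit factor in condition (c) of the RH problem for \(\Phi \) has determinant 1, taking determinants in condition (c) forces \(\mathrm{tr}\, \Phi _1=0\), in agreement with the diagonal entries of (50). The off-diagonal entries of the leading term can likewise be combined using \(\Gamma (1+x)=x\Gamma (x)\):

```latex
\begin{aligned}
1=\det \Phi(\zeta)
 &= \det\left(I+\frac{\Phi_1}{\zeta}+\mathcal{O}(\zeta^{-2})\right)
  = 1+\frac{\operatorname{tr}\Phi_1}{\zeta}+\mathcal{O}(\zeta^{-2})
 \quad\Longrightarrow\quad \operatorname{tr}\Phi_1=0,\\
\left(-e^{-\pi i(\mathcal{A}+\mathcal{B})}
 \frac{\Gamma(1+\mathcal{A}-\mathcal{B})}{\Gamma(\mathcal{A}+\mathcal{B})}\right)
\left(e^{\pi i(\mathcal{A}+\mathcal{B})}
 \frac{\Gamma(1+\mathcal{A}+\mathcal{B})}{\Gamma(\mathcal{A}-\mathcal{B})}\right)
 &= -(\mathcal{A}-\mathcal{B})(\mathcal{A}+\mathcal{B})
  = \mathcal{B}^2-\mathcal{A}^2 .
\end{aligned}
```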

The first step in the proof of Lemma 5.4 is to transform the RH problem for \(\Phi \) to an RH problem for \({\widehat{\Phi }}\) which is analytic except on the imaginary axis \(\mathrm{Re \,}z=0\), and in particular the jump contour is independent of the locations of the singularities \(w_j\) (though the jumps themselves will vary with the location of the singularities).

5.2 Transformation of RH problem

Let

where I-VI are regions in the complex plane given in Fig. 2.

Let \({\widehat{\Phi }}\) be defined in terms of \(\Phi \) as follows.

where \(\chi _{w_j}\) was defined in condition (c) of the RH problem for \(\Phi \).

Then \({\widehat{\Phi }}\) solves the following RH problem.

RH problem for \({\widehat{\Phi }}\)

-

(a)

\({\widehat{\Phi }}\) is analytic on \({\mathbb {C}}{\setminus } (-i\infty ,i\infty )\), with the orientation of \((-i\infty ,i\infty )\) upwards.

-

(b)

Let \(w_0=+\infty \) and \(w_{\mu +1}=-\infty \). On \((iw_{j+1},iw_j)\),

$$\begin{aligned} {\widehat{\Phi }}_+={\widehat{\Phi }}_-J_j, \end{aligned}$$for \(j=0,1,\ldots ,\mu \), where \(J_0\) was defined in condition (b) of the RH problem for \(\Phi \), and

$$\begin{aligned} J_j=\begin{pmatrix} 0&{}\quad \exp \left[ -2\pi i \sum _{\nu =1}^j \alpha _\nu \right] \\ -\exp \left[ 2\pi i \sum _{\nu =1}^j \alpha _\nu \right] &{}\quad \exp \left[ 2\pi i \sum _{\nu =1}^j \beta _\nu \right] \end{pmatrix} \end{aligned}$$for \(j=1,2,\ldots , \mu \).

-

(c)

The behaviour of \({\widehat{\Phi }}(\zeta )\) as \(\zeta \rightarrow \infty \) is inherited from condition (c) of the RH problem for \(\Phi \).

-

(d)

As \(\zeta \rightarrow iw_j\) for \(j=1,\ldots , \mu \) in the sector \(\arg \zeta \in (\pi /2,3\pi /2)\),

$$\begin{aligned} {\widehat{\Phi }}(\zeta )=F_j(\zeta )(\zeta -iw_j)^{\alpha _j\sigma _3}\begin{pmatrix} 1&{}\quad g(\alpha _j,\beta _j)\\ 0&{}1 \end{pmatrix} \prod _{\nu =1}^{j-1} \exp \left[ \pi i(\alpha _j-\beta _j)\sigma _3\right] , \end{aligned}$$for \(2\alpha _j\notin {\mathbb {N}}=\{0,1,2,\dots \}\), while for \(2\alpha _j\in {\mathbb {N}}\),

$$\begin{aligned} {\widehat{\Phi }}(\zeta )=F_j(\zeta )(\zeta -iw_j)^{\alpha _j\sigma _3}\begin{pmatrix} 1&{}\quad g(\alpha _j,\beta _j)\log (\zeta -iw_j)\\ 0&{}1 \end{pmatrix}\prod _{\nu =1}^{j-1} \exp \left[ \pi i(\alpha _j-\beta _j)\sigma _3\right] , \end{aligned}$$for some function \(F_j\) analytic in a neighbourhood of \(iw_j\), and where \(g(\alpha ,\beta )\) was defined in condition (d) of the RH problem for \(\Phi \).
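The jump matrices \(J_j\) in condition (b) of the RH problem for \({\widehat{\Phi }}\) all have determinant 1, and \(J_j\) reduces to \(J_0\) when the sums are empty. The sketch below checks this numerically; the helper names `jump_matrix` and `det2` and the sample parameter values are illustrative assumptions.

```python
import cmath
import math


def jump_matrix(idx, alphas, betas):
    """J_idx from condition (b) of the RH problem for Phi-hat.

    alphas, betas : lists holding alpha_1..alpha_mu and beta_1..beta_mu
    idx           : integer in 0..mu; idx = 0 gives J_0 (empty sums)
    """
    a_sum = sum(alphas[:idx])
    b_sum = sum(betas[:idx])
    return [
        [0, cmath.exp(-2j * math.pi * a_sum)],
        [-cmath.exp(2j * math.pi * a_sum), cmath.exp(2j * math.pi * b_sum)],
    ]


def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


# Hypothetical sample parameters: alpha_j >= 0 and Re(beta_j) = 0.
alphas = [0.3, 1.2, 0.0]
betas = [0.1j, -0.4j, 0.2j]
determinants = [det2(jump_matrix(i, alphas, betas)) for i in range(4)]
J0 = jump_matrix(0, alphas, betas)
```

The determinant is 1 because the product of the two off-diagonal entries is \(-1\) regardless of the value of \(\sum \alpha _\nu \), while the \((1,1)\) entry vanishes.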

5.3 Steepest descent analysis of \({\widehat{\Phi }}\)

We will consider the asymptotics of \({\widehat{\Phi }}(\zeta )\) as \(\epsilon \rightarrow 0\), with the goal of proving Lemma 5.4, and on the way we will prove that \({\widehat{\Phi }}\) is continuous with respect to the parameters \(w_1,\ldots ,w_\mu \) (we describe what we mean by continuity below equation (58)).

Partition \(\{w_1,\ldots ,w_\mu \}\) into \(\tau \) disjoint sets

such that

where \({\mathcal {W}}_1,\ldots ,{\mathcal {W}}_\tau \) are distinct fixed points.

We order the points so that

Denote

for \(j=1,2,\ldots , \tau \).

We plan to approximate the RH problem associated with the \(w_j\)’s by the RH problem associated with the \({\mathcal {W}}_j\)’s, and so for increased clarity we label them as different functions.

Let the RH problem associated with \(w_1,\ldots ,w_{\mu }\) be denoted by

and the RH problem associated with \({\mathcal {W}}_1,\ldots , {\mathcal {W}}_\tau \) by

We aim to analyze the RH problem for \(\Psi \) in terms of the RH problem for N. We note that N has the same jumps as \(\Psi \) except on neighbourhoods containing \({\mathcal {W}}_1,\ldots ,{\mathcal {W}}_\tau ,\) and that \(\Psi (\zeta )N(\zeta )^{-1}\rightarrow I\) as \(\zeta \rightarrow \infty \) by condition (c) for the RH problem for \(\Phi \) and the definition of \({\widehat{\Phi }}\), and thus N will be used as a main parametrix.

We will additionally need to show that there exists a local parametrix \(Q(\zeta )\) on fixed neighbourhoods \(U_{{\mathcal {W}}_j}\) of \(i{\mathcal {W}}_j\), such that \(\Psi (\zeta )Q(\zeta )^{-1}\) is analytic on \(U_{{\mathcal {W}}_j}\), and

as \(\epsilon \rightarrow 0\), uniformly for \(\zeta \in \partial U_{{\mathcal {W}}_j}\). Although we only need existence of such a local parametrix, we prove the existence by construction, and we do this in the next subsection, Sect. 5.3.1. By standard theory of small norm problems, see e.g. [21], we will obtain that N approximates \(\Psi \) well outside of the neighbourhoods \(\cup _{j=1}^\tau U_{{\mathcal {W}}_j}\), and more specifically that

uniformly for \(\zeta \) bounded away from \({\mathcal {W}}_1,\ldots , {\mathcal {W}}_\tau \), as \(\epsilon \rightarrow 0\), which is what we referred to as continuity of \({\widehat{\Phi }}\) with respect to \(w_1,\ldots ,w_\mu \) in the first sentence of the present section.

We will subsequently rely on (58) in Sect. 5.4 to prove Lemma 5.4.

5.3.1 Local parametrix

We construct a local parametrix on a neighbourhood of the point \(i{\mathcal {W}}_j\) which will contain the points \(iw_1^{(j)},\ldots ,iw_{M_j}^{(j)}\), for \(j=1,\ldots , \tau \), and we are inspired here by a similar construction in [16] in the special case of two singularities. Thus, letting \(U_{{\mathcal {W}}_j}\) be a fixed open disc centered at \(i{\mathcal {W}}_j\) with a fixed radius \(R>0\), we aim to construct an explicit function \(Q(\zeta )\) on \(\cup _{j=1}^\tau U_{{\mathcal {W}}_j} \) with the same jumps as \(\Psi \) on each of these discs such that \(\Psi (\zeta )Q(\zeta )^{-1}\) is analytic on \(\cup _{j=1}^\tau U_{{\mathcal {W}}_j} \), satisfying (57) uniformly on \(\partial U_{{\mathcal {W}}_j}\).

Throughout Sect. 5.3.1, j will be fixed, and to reduce the number of superscripts, we denote throughout the section

for \(\nu =1,2,\ldots ,M_j\).

We first take a transformation \(\Psi \rightarrow \Psi _j\), where \(\Psi _j\) is analytic for all

and similarly a transformation \(N\rightarrow N_j\) such that \(N_j\) is analytic for all

On \(U_{{\mathcal {W}}_j}\), we define

where

for \(j=2,3,\ldots ,\tau \), where \(J_0\) was defined in condition (b) of the RH problem for \(\Phi \), and

Denote

On \((iy_{\nu +1},i y_\nu )\), \(\Psi _j\) has the jumps

for \(\nu =1,\ldots ,M_{j}-1\), where the orientation of the contour is taken upwards and \(J_0\) was defined in condition (b) of the RH problem for \(\Phi \), and on \(\arg (\zeta -iy_{M_j})=3\pi /2\),

We search for a local parametrix \(Q_j\) such that \(Q_j\) has the same jumps as \(\Psi _j\) on \(U_{{\mathcal {W}}_j}\) and such that \(Q_jN_j^{-1}=I+{\mathcal {O}}(\epsilon )\) as \(\epsilon \rightarrow 0\), uniformly on the boundary \(\partial U_{{\mathcal {W}}_j}\) for \(j=1,2,\ldots , \tau \). By defining Q on \(\cup _{j=1}^{\tau }U_{{\mathcal {W}}_j}\) by

where \(J_L^{(j)}\) and \(J_R^{(j)}\) were defined in (62), we thus obtain the function Q we were aiming for, satisfying the conditions that \(\Psi (\zeta )Q(\zeta )^{-1}\) is analytic on \(\cup _{j=1}^\tau U_{{\mathcal {W}}_j} \), and that \(Q(\zeta )N(\zeta )^{-1}= I+{\mathcal {O}}(\epsilon )\) as \(\epsilon \rightarrow 0\) uniformly on \(\cup _{j=1}^\tau \partial U_{{\mathcal {W}}_j} \).

The approach depends on whether or not \(2{\mathcal {A}}_{M_j}\in {\mathbb {N}}\).

Local parametrix for \(2{\mathcal {A}}_{M_j}\notin {\mathbb {N}}\)

Assume that \(2{\mathcal {A}}_{M_j}\notin {\mathbb {N}}\), and define

where g was defined in (47), \(y_\nu \) were defined in (59), \(E_j\) is an analytic function given below in (70), \(\arg (\zeta -iy_\nu )\in (-\pi /2,3\pi /2)\), and

and we recall that \({\mathcal {A}}_\nu \) and \({\mathcal {B}}_\nu \) were defined in (63).

We first consider the jumps of \(Q_j\) on \( U_{{\mathcal {W}}_j}\), and aim to show that they are the same as the jumps of \(\Psi _j\). If

for \(a<b\) and \(h\in L^{2}([ia,ib])\), then F is analytic on \({\mathbb {C}}{\setminus } [ia,ib]\). It follows that \({\widehat{Q}}_j\) is analytic on \(U_{{\mathcal {W}}_j}{\setminus } [iy_{M_j},iy_1]\), and it is easily verified by comparison with (65) that \(Q_{j,-}^{-1}Q_{j,+}=\Psi _{j,-}^{-1}\Psi _{j,+}\) for \(\zeta \) with \(\arg \left( \zeta -iy_{M_j}\right) =3\pi /2\). If in addition \(h(\lambda )\) extends to an analytic function on an open set containing (ia, ib), then

for all \(\zeta \in (ia,ib)\), with upward orientation, so

for \(\zeta \in (iy_{\nu +1},iy_{\nu })\), \(\nu =1,2,\ldots , M_j-1\), and it follows that

By comparison with (64) and the definition of \(c_\nu \), it follows that \(Q_{j,-}^{-1}Q_{j,+}=\Psi _{j,-}^{-1}\Psi _{j,+}\) on \((iy_{\nu +1},iy_{\nu })\), \(\nu =1,2,\ldots , M_j-1\). Since the jumps match, and neither \(\Psi _j\) nor \(Q_j\) have essential singularities, it follows that \(\Psi _jQ_j^{-1}\) is meromorphic on \( U_{{\mathcal {W}}_j}\). To prove, in addition, that \(\Psi _jQ_j^{-1}\) is analytic on \( U_{{\mathcal {W}}_j}\), it thus remains to prove boundedness on \( U_{{\mathcal {W}}_j}\) as functions of \(\zeta \). Since \(E_j\) and \({\widehat{Q}}_j\) are bounded on \( U_{{\mathcal {W}}_j}\), it follows by the definition of \(Q_j\) that

is bounded on \( U_{{\mathcal {W}}_j}\) as a function of \(\zeta \). By the definition of \(\Psi \) in (55) and condition (d) for the RH problem for \({\widehat{\Phi }}\),

is bounded on \( U_{{\mathcal {W}}_j}\) as a function of \(\zeta \).

Since \(\Psi _j(\zeta )Q_j(\zeta )^{-1}\) is meromorphic on \(U_{{\mathcal {W}}_j}\) and by the boundedness of (68) and (69) it follows that \(\Psi _j(\zeta )Q_j(\zeta )^{-1}\) is analytic on \( U_{{\mathcal {W}}_j}\).
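The jump computation above rests on the Sokhotski–Plemelj formula for Cauchy-type integrals over a segment of the imaginary axis. The following sketch checks it numerically under the assumption that F has the standard normalisation \(F(\zeta )=\frac{1}{2\pi i}\int _{ia}^{ib}\frac{h(\lambda )}{\lambda -\zeta }d\lambda \) (the paper's own display is not reproduced here), in which case \(F_+-F_-=h\) on \((ia,ib)\) with the + side to the left of the upward orientation. The function name, the test function h and the quadrature parameters are illustrative choices.

```python
import math


def cauchy_segment(h, a, b, zeta, steps=100_000):
    """Midpoint-rule approximation of
    (1 / (2 pi i)) * integral of h(lam) / (lam - zeta) d lam
    along lam = i*y, with y running from a to b."""
    dy = (b - a) / steps
    total = 0j
    for k in range(steps):
        lam = 1j * (a + (k + 0.5) * dy)
        total += h(lam) / (lam - zeta) * (1j * dy)  # d lam = i dy
    return total / (2j * math.pi)


def h(lam):
    # Hypothetical analytic test density.
    return 1 + lam


point = 0.2j   # a point on the open segment (ia, ib) = (-i, i)
delta = 1e-3   # small horizontal offset from the contour
f_plus = cauchy_segment(h, -1.0, 1.0, point - delta)   # left of the upward contour
f_minus = cauchy_segment(h, -1.0, 1.0, point + delta)  # right of the upward contour
jump = f_plus - f_minus
```

The difference `jump` agrees with `h(point)` up to an error of order `delta`, which is the behaviour used when matching the jumps of \(Q_j\) with those of \(\Psi _j\).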

We define \(E_j\) by

We recall that \({\mathcal {A}}_{M_j}={\mathcal {A}}^{(j)}\). Thus, by the definition of \(N_j\) and condition (d) for the RH problem for \(\Phi \), it follows that \(N_j(\zeta )=\Phi \left( \zeta ;\left( {\mathcal {W}}_i,{\mathcal {A}}^{(i)},{\mathcal {B}}^{(i)}\right) _{i=1}^\tau \right) \) for \(\arg (\zeta )\in (\pi /2,\pi )\), and one verifies that the singularity of \(N_j\) cancels with that of \((\zeta -i{\mathcal {W}}_j)^{-{\mathcal {A}}_{M_j}\sigma _3}\). It is easily seen that \(E_j\) has no jumps on \(U_{{\mathcal {W}}_j}\), and thus it is analytic. For \(\zeta \in \partial U_{{\mathcal {W}}_j}\), define

We first note that \(E_j\) are analytic functions on \(U_{{\mathcal {W}}_j}\), and since they are independent of \(\epsilon \), they are uniformly bounded on \(\partial U_{{\mathcal {W}}_j}\). Since

as \(\epsilon \rightarrow 0\), uniformly for \(\zeta \in \partial U_{{\mathcal {W}}_j}\), it follows by (67) and the boundedness of \(E_j\) on \(\partial U_{{\mathcal {W}}_j}\), that

as \(\epsilon \rightarrow 0\), uniformly for \(\zeta \in \partial U_{{\mathcal {W}}_j}\).

Local parametrix for \(2{\mathcal {A}}_{M_j}\in {\mathbb {N}}\)

Assume that \(2{\mathcal {A}}_{M_j}\in {\mathbb {N}}\), and define

where g was defined in (47), \(y_\nu \) were defined in (59), \(E_j\) is an analytic function given below in (72), the argument \(\arg \left( \zeta -iy_\nu \right) \in (-\pi /2,3\pi /2)\), and

and we recall that \({\mathcal {A}}_\nu \) and \({\mathcal {B}}_\nu \) were defined in (63). As in the case for \(2{\mathcal {A}}_{M_j}\notin {\mathbb {N}}\), it is easily seen that \({\widehat{Q}}_j\) is analytic on \(U_{{\mathcal {W}}_j}{\setminus }\left[ iy_{M_j},iy_1\right] \), and it follows that \(Q_{j,-}^{-1}Q_{j,+}=\Psi _{j,-}^{-1}\Psi _{j,+}\) on \(\arg (\zeta -iy_{M_j})=3\pi /2\). On \( (iy_{\nu +1},iy_{\nu })\), \(\nu =1,2,\ldots , M_j-1\),

and it follows that

By comparison with (64) and the definition of \(d_\nu \), \(e_\nu \), it follows that \(Q_{j,-}^{-1}Q_{j,+}=\Psi _{j,-}^{-1}\Psi _{j,+}\) on \((iy_{\nu +1},iy_{\nu })\), \(\nu =1,2,\ldots , M_j-1\). Thus \(\Psi _jQ_j^{-1}\) is meromorphic on \(U_{{\mathcal {W}}_j}\), and in a similar manner to the case \(2{\mathcal {A}}_{M_j}\notin {\mathbb {N}}\), one verifies that \(\Psi _jQ_j^{-1}\) is also bounded, and thus analytic on \(U_{{\mathcal {W}}_j}\).

We define \(E_j\) by

and in a similar manner to the case \(2{\mathcal {A}}_{M_j}\notin {\mathbb {N}}\), it follows that \(E_j\) is analytic on \(U_{{\mathcal {W}}_j}\), and that

as \(\epsilon \rightarrow 0\), uniformly for \(\zeta \in \partial U_{{\mathcal {W}}_j}\).

5.3.2 Small norm matrix

Recall Q defined by (66), and \(\Psi \) and N defined in (55) and (56) respectively. Let \( {\widehat{R}}\) be given by

In Sect. 5.3.1 we proved that \(\Psi Q^{-1}\) is analytic on \(U_{{\mathcal {W}}_j}\) and that Q satisfies (57), from which it follows that \({\widehat{R}}\) satisfies the following RH problem.

RH problem for \({\widehat{R}}\)

-

(a)

\({\widehat{R}}\) is analytic on \({\mathbb {C}}{\setminus } \cup _{j=1}^\tau \partial U_{{\mathcal {W}}_j}\), with the orientation of \(\partial U_{{\mathcal {W}}_j}\) taken in a clockwise direction.

-

(b)

As \(\epsilon \rightarrow 0\),

$$\begin{aligned} {\widehat{R}}_+(\zeta )={\widehat{R}}_-(\zeta )(I+{\mathcal {O}}(\epsilon )), \end{aligned}$$uniformly on \(\cup _{j=1}^\tau \partial U_{{\mathcal {W}}_j}\).

-

(c)

\({\widehat{R}}(\zeta )=I+{\mathcal {O}}(\zeta ^{-1})\) as \(\zeta \rightarrow \infty \).

By standard small norm analysis, see e.g. [21],

as \(\epsilon \rightarrow 0\) uniformly for the parameters

for fixed \({\mathcal {W}}_1,\ldots ,{\mathcal {W}}_\tau \), and uniformly for \(\zeta \in {\mathbb {C}}{\setminus } \cup _{j=1}^\tau \partial U_{{\mathcal {W}}_j}\), with the implicit constant depending only on u, and the parameters \(\alpha _j,\beta _j\).
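For orientation, the small norm argument invoked here typically runs as follows. This is a sketch of the standard reasoning (see e.g. [21]) and not a reproduction of omitted details; the symbols \(\Sigma \), \(\Delta \), \(C_-\) and \(C_\Delta \) are our own notation. Write \(\Sigma =\cup _{j=1}^\tau \partial U_{{\mathcal {W}}_j}\) and let the jump of \({\widehat{R}}\) on \(\Sigma \) be \(I+\Delta \) with \(\Vert \Delta \Vert _{L^\infty (\Sigma )}={\mathcal {O}}(\epsilon )\), and let \(C_-\) denote the minus boundary value of the Cauchy operator on \(\Sigma \).

```latex
\begin{aligned}
\widehat{R}(\zeta) &= I+\frac{1}{2\pi i}\int_{\Sigma}
   \frac{\widehat{R}_-(s)\,\Delta(s)}{s-\zeta}\,ds,
   \qquad \zeta\in\mathbb{C}\setminus\Sigma,\\
\widehat{R}_- - I &= (1-C_\Delta)^{-1}C_-[\Delta],
   \qquad C_\Delta h := C_-[h\Delta],\\
\|C_\Delta\|_{L^2(\Sigma)\to L^2(\Sigma)}
   &\le \|C_-\|_{L^2(\Sigma)\to L^2(\Sigma)}\,\|\Delta\|_{L^\infty(\Sigma)}
   =\mathcal{O}(\epsilon).
\end{aligned}
```

For \(\epsilon \) sufficiently small, \(1-C_\Delta \) is invertible by a Neumann series, so \(\Vert {\widehat{R}}_- -I\Vert _{L^2(\Sigma )}={\mathcal {O}}(\epsilon )\), and inserting this into the first line gives \({\widehat{R}}(\zeta )=I+{\mathcal {O}}(\epsilon )\) for \(\zeta \) at a fixed distance from \(\Sigma \). Points close to \(\Sigma \) are usually handled by a slight deformation of the contour.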

5.4 Proof of Lemma 5.4

We prove (a) of Lemma 5.4 by contradiction. Denote the left hand side of (49) by \(F_{\Phi }(\zeta ;w_1,\ldots ,w_\mu )\). Assume that there is a sequence of points \(-u/2\le w_\mu (k)<\dots <w_1(k)\le u/2\) for \(k=1,2,\dots \) and corresponding \(\zeta _k\) such that \(\zeta _k\rightarrow \infty \) as \(k\rightarrow \infty \), satisfying

as \(k\rightarrow \infty \). Then there would be a subsequence \(k_i\) such that \(w_j(k_i)\rightarrow w_j\) for \(j=1,2,\ldots ,\mu \), for some points

We denote \(\{w_1,\ldots ,w_\mu \}=\{{\mathcal {W}}_1,\ldots ,{\mathcal {W}}_\tau \}\), where the points \({\mathcal {W}}_\tau<\dots < {\mathcal {W}}_1\) are distinct. Let

By (74), it follows that

as \(k_i\rightarrow \infty \). By condition (c) for the RH problem for \( \Phi \left( \zeta ;({\mathcal {W}}_j,{\mathcal {A}}_j,{\mathcal {B}}_j)_{j=1}^\tau \right) \), and the definition of \({\widehat{\Phi }}\) in (51), it follows that

where \(r(\zeta )\) is given by

where the branch cuts of r are a subset of \([-iu/2,iu/2]\) and \(r(\zeta )\rightarrow I\) as \(\zeta \rightarrow \infty \). By condition (c) of the RH problem for \(\Phi \left( \zeta _{k_i};({\mathcal {W}}_j,{\mathcal {A}}_j,{\mathcal {B}}_j)_{j=1}^{\tau }\right) \), and the fact that \(r(\zeta )=I+{\mathcal {O}}(1/\zeta )\) uniformly in \(k_i\) as \(\zeta \rightarrow \infty \), it follows that

as \(k_i \rightarrow \infty \). Thus the left hand side of (75) is bounded as \(k_i\rightarrow \infty \), which is a contradiction, concluding the proof of Lemma 5.4 (a).

To prove (b), we note that if \(|w_1|,\ldots ,|w_\mu |<\epsilon \) and \(\epsilon \rightarrow 0\), then similarly to (76) we have

as \(\zeta \rightarrow \infty \) and \(\epsilon \rightarrow 0\), where \(r_0(\zeta )\) is given by

where the branch cuts of \(r_0\) are a subset of \([-iu/2,iu/2]\) and \(r_0(\zeta )\rightarrow I\) as \(\zeta \rightarrow \infty \). Then part (b) of the lemma follows by (48) and noting that \(r_0(\zeta )=I+{\mathcal {O}} \left( \frac{\epsilon }{|\zeta |}\right) \) as \(\zeta \rightarrow \infty \) and \(\epsilon \rightarrow 0\).

6 Asymptotics of the Orthogonal Polynomials

Define \(Y=Y(z)\) in terms of the orthogonal polynomials \(\psi _n\) satisfying (35):

where we recall that \(\chi _n\) is the leading coefficient of \(\psi _n\), with the integration taken in counter-clockwise direction on the unit circle \({\mathcal {C}}\), and where \({\overline{\psi }}_{n-1}(z)=\overline{\psi _{n-1}({\overline{z}})}\). The function Y uniquely solves the following Riemann–Hilbert problem:

-

(a)

\(Y:{\mathbb {C}} {\setminus } {\mathcal {C}} \rightarrow {\mathbb {C}}^{2\times 2}\) is analytic;

-

(b)

\(Y_+(z)=Y_-(z)\begin{pmatrix} 1&{}f(z)z^{-n}\\ 0&{}1 \end{pmatrix}\) for \(|z|=1\), \(\arg z\ne t_1,t_2,\ldots ,t_m\);

-

(c)

\(Y(z)=(I+\mathcal {O}(1/z))\begin{pmatrix}z^n&{}0\\ 0&{}z^{-n}\end{pmatrix}\) as \(z\rightarrow \infty \).

That Y defined in (77) solves the RH problem for Y is easily verified, and is a result due to Baik, Deift, Johansson [6], who were inspired by a similar observation by Fokas, Its, Kitaev [29] concerning orthogonal polynomials on the real line. See e.g. [21] for an introduction to analysis of RH problems in random matrix theory, and also two sets of lecture notes [22, 48]. Similarly to the RH problem for \(\Phi \), standard theory dictates that the determinant of Y is 1, and that (77) is the unique solution to the RH problem for Y.

It is immediate from (77) that

We rely on the Deift–Zhou [26] steepest descent analysis for RH problems to obtain the asymptotics of Y(0) as \(n\rightarrow \infty \), which will provide the asymptotics of \(\chi _{n-1}\) necessary for the proof of Proposition 4.1 (b) and Proposition 4.2.
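To get a feel for the quantities involved, the following sketch evaluates small Toeplitz determinants directly. It assumes the usual convention \(D_n(f)=\det (f_{j-k})_{j,k=0}^{n-1}\), with \(f_k\) the k-th Fourier coefficient of f and \(D_0=1\), together with the classical identity \(\chi _n^2=D_n(f)/D_{n+1}(f)\) for the leading coefficient of the orthonormal polynomial; we take these to match (34) and (35), although those displays are not reproduced here. The test symbol \(f(z)=|z-1|^2\) has a single root-type singularity with \(\alpha =1\), \(\beta =0\) at \(z=1\), Fourier coefficients \(f_0=2\), \(f_{\pm 1}=-1\), and satisfies \(D_n=n+1\) exactly. The function names are illustrative.

```python
def toeplitz_det(coeffs, n):
    """Determinant of the n x n Toeplitz matrix (f_{j-k}) via Gaussian
    elimination with partial pivoting.

    coeffs : dict mapping an integer k to the Fourier coefficient f_k
             (missing keys are treated as zero)
    """
    a = [[complex(coeffs.get(j - k, 0.0)) for k in range(n)] for j in range(n)]
    det = 1.0 + 0j
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) == 0:
            return 0j
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
    return det


def chi_squared(coeffs, n):
    """chi_n^2 = D_n / D_{n+1} (assumed normalisation, see the lead-in)."""
    return toeplitz_det(coeffs, n) / toeplitz_det(coeffs, n + 1)


# f(z) = |z - 1|^2 = 2 - z - 1/z on the unit circle.
root_symbol = {0: 2.0, 1: -1.0, -1: -1.0}
dets = [toeplitz_det(root_symbol, n) for n in range(1, 9)]
chi5 = chi_squared(root_symbol, 5)
```

In this example \(D_n=n+1\) grows linearly, in line with the \(n^{\alpha ^2}\) growth expected for a single root-type singularity with \(\alpha =1\), and \(\chi _n^2=(n+1)/(n+2)\rightarrow 1\).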

The Szegő function \({\mathcal {D}}(z)=\exp \frac{1}{2\pi i }\int _{{\mathcal {C}}} \frac{\log f(s)}{s-z}ds\) plays an important role. Define

analytic on \({\mathbb {C}}{\setminus } \{z:\arg z=t_j\}\). In [23], it was noted that for \(|z|<1\), we have \({\mathcal {D}}(z)={\mathcal {D}}_{\mathrm{in}}(z)\) and for \(|z|>1\) we have \({\mathcal {D}}(z)={\mathcal {D}}_{\mathrm{out}}(z)\).
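The Szegő function can be checked numerically on a simple symbol. The sketch below uses \(f(z)=|z-1|^2=(1-z)(1-1/z)\) on the unit circle, for which a residue computation gives \({\mathcal {D}}(z)=1-z\) for \(|z|<1\) and \({\mathcal {D}}(z)=z/(z-1)\) for \(|z|>1\). The function name `szego` and the midpoint quadrature are illustrative choices and are not taken from the paper.

```python
import cmath
import math


def szego(log_f, z, steps=20_000):
    """Approximate D(z) = exp( (1/(2 pi i)) * contour integral of
    log f(s) / (s - z) ds ) over the unit circle, for |z| != 1.

    With s = exp(i theta) one has ds = i s d theta, so the exponent equals
    (1/(2 pi)) * integral over theta of log f(s) * s / (s - z).
    The midpoint rule avoids sampling exactly at theta = 0, where log f
    has an integrable logarithmic singularity for the test symbol below.
    """
    dtheta = 2 * math.pi / steps
    total = 0j
    for k in range(steps):
        s = cmath.exp(1j * (k + 0.5) * dtheta)
        total += log_f(s) * s / (s - z) * dtheta
    return cmath.exp(total / (2 * math.pi))


def log_f(s):
    # log of the test symbol f(s) = |s - 1|^2 on the unit circle
    return math.log(abs(s - 1) ** 2)


d_inside = szego(log_f, 0.3)   # expected close to 1 - 0.3 = 0.7
d_outside = szego(log_f, 2.0)  # expected close to 2 / (2 - 1) = 2
```

The ratio of the inside and outside formulas is \((1-z)(1-1/z)\), which equals f on the unit circle, so the two branches of \({\mathcal {D}}\) factorise the symbol across the circle in this example.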

Furthermore,

for \(z\in {\mathcal {C}}{\setminus } \left( \cup _{j=1}^me^{it_j}\right) \), and we extend the definition of f by letting f be defined by (80) on \({\mathbb {C}}{\setminus } \left( \{0\}\cup \{z:\arg z=t_j\}\right) \). It follows that on \(\{z:\arg z=t_j\}\),

with the orientation taken away from 0 and toward \(\infty \).

6.1 Transformation of the RH problem for Y, and opening of the lens

Define

As \(z\rightarrow \infty \), we have that \(T(z)\rightarrow I\), and on the unit circle in the complex plane \({\mathcal {C}}\),

We observe that \(z^n\) and \(z^{-n}\) oscillate on the unit circle, and our goal in this section is, roughly speaking, to define a function S in terms of T, with a modified jump contour, such that the jumps of S have no oscillations on the unit circle. This procedure is known as the opening of the lens. The shape of the lens is depicted in Fig. 3, and outside the lens we let \(S=T\), while inside each region of the lens \(T^{-1}S\) will be analytic. Thus Fig. 3 also depicts the jump contour of S, and the jumps of S will be close to the identity on the edges of the lenses as \(n\rightarrow \infty \). The requirement that \(T^{-1}S\) is analytic on each region of the lens is the reason why the shape of the lens cannot contain any of the singularities, and we do not open the lens between singularities in the same cluster.

We now formalize the above discussion regarding the opening of the lens, and introduce some notation. Given u, let \({\widehat{U}}>0\) be such that the asymptotics of Lemma 5.4 (a) hold for \(|\zeta |>{\widehat{U}}/3\), for any \(\mu =1,2,\ldots ,m\). Recall the notation from Sect. 4, namely that \(t_1,\ldots ,t_m\) satisfy condition \((u,{\widehat{U}},n)\) if \(t_1,\ldots ,t_m\) partition into clusters where the radius of each cluster is less than u/n, while the distance between any two clusters is greater than \({\widehat{U}}/n\). We denote the clusters by \(\mathbf{Cl} _j= \mathbf{Cl} _j(u,{\widehat{U}},n)\). Denote the number of points in each set \(\mathbf{Cl} _j\) by \(\mu _j\) for \(j=1,2,\ldots ,r\), and let

We denote the elements of \(\mathbf{Cl} _j=\{t^{(j)}_1,\ldots ,t^{(j)}_{\mu _j}\}\) for \(j=1,2,\ldots ,r\), and denote the parameters associated with \(t^{(j)}_i\) by \(\alpha _i^{(j)},\beta _i^{(j)}\), and order the points so that \(t^{(j)}_1>\dots >t^{(j)}_{\mu _j}\). In this way we have a natural partition

We let

so that \(\{e^{it}:t\in \mathbf{Cl} _j\}\subset \Lambda _j\) and so that \(e^{it_1^{(j)}}, e^{it_{\mu _j}^{(j)}}\) are not the endpoints of the arc \(\Lambda _j\), and define \(\Lambda =\cup _j \Lambda _j\). We open a lens around each arc comprising \( {\mathcal {C}} {\setminus } \Lambda \), where \({\mathcal {C}}\) is the unit circle, as in Fig. 3.
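The partition into clusters can be made concrete with a small sketch. Since the precise definition of condition \((u,{\widehat{U}},n)\) is given in Sect. 4 and not reproduced here, the code makes several assumptions that should be read as such: the radius of a cluster is measured as the largest distance from the cluster average, the distance between clusters is the gap between their nearest points, \({\widehat{U}}\ge 2u\) so that the partition is unambiguous, and the periodicity of the circle is ignored. The function name `find_clusters` is hypothetical.

```python
def find_clusters(ts, u, U_hat, n):
    """Split the points ts into clusters separated by gaps larger than
    U_hat / n, and return the clusters if each has radius below u / n.
    Returns None if the radius requirement fails (assumed reading of
    condition (u, U_hat, n); wrap-around on the circle is ignored)."""
    ts = sorted(ts)
    groups = [[ts[0]]]
    for t in ts[1:]:
        if t - groups[-1][-1] > U_hat / n:
            groups.append([t])       # large gap: start a new cluster
        else:
            groups[-1].append(t)     # small gap: same cluster
    for group in groups:
        centre = sum(group) / len(group)  # cluster average
        if max(abs(t - centre) for t in group) >= u / n:
            return None
    return groups


# Hypothetical configurations with n = 100, u = 1, U_hat = 10.
good = find_clusters([0.0, 0.001, 1.0], u=1.0, U_hat=10.0, n=100)
bad = find_clusters([0.0, 0.05, 1.0], u=1.0, U_hat=10.0, n=100)
```

In the first configuration the points 0.0 and 0.001 merge into one cluster; in the second, the gap 0.05 is too large for a single cluster of radius below u/n and too small to separate two clusters, so the condition fails for this choice of \(u,{\widehat{U}}\).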

In the lenses, f is given by (80), and we define

By the jumps of T in (83) and using the factorisation

it is easily verified that S uniquely solves the following RH problem.

RH problem for S

-

(a)

S is analytic on \({\mathbb {C}}{\setminus } \Sigma _S\), where \(\Sigma _S\) is the union of the unit circle and the contours of the lenses.

-

(b)

S has the following jumps on \(\Sigma _S\):

$$\begin{aligned} \begin{aligned} S_+(z)&=S_-\begin{pmatrix} 1&{}0 \\ z^{-n}f(z)^{-1}&{}1 \end{pmatrix}&\text {on the edge of the lenses, |z|>1,}\\ S_+(z)&=S_-\begin{pmatrix} 1&{}0 \\ z^{n}f(z)^{-1}&{}1 \end{pmatrix}&\text {on the edge of the lenses, |z|<1,}\\ S_+(z)&=S_-(z)\begin{pmatrix} 0&{}f(z)\\ -f(z)^{-1}&{}0 \end{pmatrix}&\text {for } z\in {\mathcal {C}}{\setminus } \Lambda ,\\ S_+(z)&=S_-(z) \begin{pmatrix} z^n&{}f(z)\\ 0&{}z^{-n} \end{pmatrix}&\text {for }z\in \Lambda . \end{aligned} \end{aligned}$$

-

(c)

As \(z\rightarrow \infty \),

$$\begin{aligned} S(z)=I+{\mathcal {O}}(1/z). \end{aligned}$$

-

(d)

As \(z\rightarrow e^{it_j}\), \(j=1,\ldots ,m\), in the region outside the lens,

$$\begin{aligned} S(z)={\mathcal {O}}(|\log |z-e^{it_j}||). \end{aligned}$$
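The factorisation behind the jumps of S can be verified numerically. The displayed factorisation is not reproduced in this text, so the sketch below assumes the standard form that is consistent with condition (b): the jump \(\begin{pmatrix} z^n&f\\ 0&z^{-n}\end{pmatrix}\) on \(\Lambda \) is the product of the lens jump for \(|z|>1\), the jump on \({\mathcal {C}}{\setminus } \Lambda \), and the lens jump for \(|z|<1\). The helper names and the sample values of z, f and n are illustrative.

```python
import cmath


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(2)) for c in range(2)]
            for r in range(2)]


def lens_factors(z, f, n):
    """The three factors appearing in condition (b) of the RH problem for S."""
    outer = [[1, 0], [z ** (-n) / f, 1]]   # lens edge with |z| > 1
    middle = [[0, f], [-1 / f, 0]]         # unit circle away from Lambda
    inner = [[1, 0], [z ** n / f, 1]]      # lens edge with |z| < 1
    return outer, middle, inner


# Hypothetical sample point on the unit circle with a positive symbol value.
z = cmath.exp(0.7j)
f = 1.9
n = 5
outer, middle, inner = lens_factors(z, f, n)
product = matmul(matmul(outer, middle), inner)
target = [[z ** n, f], [0, z ** (-n)]]
```

The oscillatory entries \(z^{\pm n}\) move into the triangular factors, which decay exponentially once their contours are pushed off the unit circle, while the middle factor is independent of n.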

6.2 Main parametrix

Recall \({\mathcal {D}}_{\mathrm{in}}\) and \({\mathcal {D}}_{\mathrm{out}}\) from (79), and define M by

We observe that M is analytic on \({\mathbb {C}} {\setminus } {\mathcal {C}}\), that \(M(z)\rightarrow I \) as \(z\rightarrow \infty \), and that M has the same jumps as S on the unit circle, namely:

Thus \(S M^{-1}\) solves an RH problem with jumps that converge pointwise to I as \(n\rightarrow \infty \) except on the shrinking contour \(\Lambda \), and \(S M^{-1}(z)\rightarrow I\) as \(z\rightarrow \infty \), so we take M to be our main parametrix, and will prove in Lemma 6.1 below that M(z) approximates S(z) for z bounded away from the clusters.

6.3 Local parametrix

We define open sets \({\mathcal {U}}_1,\ldots ,{\mathcal {U}}_r\) containing each cluster \(\mathbf{Cl} _1,\ldots ,\mathbf{Cl} _r\) respectively by

where we recall \({\widehat{t}}_j\) from (84) is the average of all the points in a cluster, and \({\widehat{u}}_n\) from (38) is defined so that \({\widehat{u}}_n/n\) is the minimal distance between any two clusters, which implies that if \(t_1,\ldots , t_m\) satisfy condition \((u,{\widehat{U}},n)\) then \({\widehat{u}}_n>{\widehat{U}}\). In the case where there is only a single cluster, we recall that \({\widehat{u}}_n={\hat{k}} n\) for some sufficiently small \({\hat{k}}\), and we require that \({\hat{k}}\) is small enough so that V(z) is analytic on \({\mathcal {U}}_1\). The asymptotics we take in this section will be valid in the limit \({\widehat{u}}_n\rightarrow \infty \), which should be interpreted as the clusters separating, and our goal is to construct local parametrices on \({\mathcal {U}}_j\): namely to find functions \(P_j\) on \({\mathcal {U}}_j\) with the same jumps as S with \(S(z)P_j(z)^{-1}\) analytic on \({\mathcal {U}}_j\), such that \(P_j(z)M(z)^{-1}\rightarrow I\) for \(z\in \partial {\mathcal {U}}_j\) as \({\widehat{u}}_n\rightarrow \infty \).

Let

for \(z\in {\mathcal {U}}_j\).

Recall the notation (85). We define

for \(\nu =1,\ldots ,\mu _j\). Then \(\zeta _j\) is a conformal map on \({\mathcal {U}}_j\) mapping \(\Lambda _j\) to \([-iu,iu]\). For \(z\in \partial {\mathcal {U}}_j\),

Recall the model RH problem \(\Phi \) from Sect. 5. On \({\mathcal {U}}_j\), we define

where, recalling the definition of f below (80),

and where

recalling that \(\chi _w(\zeta )\) was defined in condition (c) of the RH problem for \(\Phi \), and the branches of \(\zeta _j(z)-iw_\nu ^{(j)}\) are such that \(\arg \left( \zeta _j(z)-iw_\nu ^{(j)}\right) \in (0,2\pi )\), and M was defined in (87). By the jumps of f in (81), the definition of M, and the definition of W, it follows that \(E_j\) has no jumps on \({\mathcal {U}}_j\). By the definitions of \({\mathcal {D}}_{\mathrm{in}}\) and \({\mathcal {D}}_{\mathrm{out}}\) in (79), of f in (80), and of M in (87), it is easily seen that \(M(z)W(z)^{-1}\) is bounded on \(\overline{{\mathcal {U}}_j}\). Thus \(E_j\) is analytic on \({\mathcal {U}}_j\), and uniformly bounded on \(\partial {\mathcal {U}}_j\) as \(n\rightarrow \infty \).

By the jumps of f in (81) and condition (b) for the RH problem for \(\Phi \), it follows that \(P_j\) and S have the same jumps on \({\mathcal {U}}_j\), and thus \(SP_j^{-1}\) is meromorphic. By condition (d) for the RH problem for \(\Phi \), the definition of W, and condition (d) for the RH problem for S, the singularities of \(SP_j^{-1}\) at the points \(e^{it_j}\) are removable, and thus \(SP_j^{-1}\) is analytic on \({\mathcal {U}}_j\). By condition (c) for the RH problem for \(\Phi \), and the boundedness of \(E_j(z)\) on \(\partial {\mathcal {U}}_j\), given \(u>0\), there exists \({\widehat{U}}>u\) such that

as \(n\rightarrow \infty \), uniformly for \(z\in \partial {\mathcal {U}}_j\), and by (49) the error term is also uniform for \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widehat{U}},n)\), which completes the construction of the local parametrix.

6.4 Small norm matrix

Let R be given by

R satisfies the following RH problem.

RH problem for R

-

(a)

R is analytic on \({\mathbb {C}}{\setminus }\Sigma _R\), where \(\Sigma _R\) is the union of the edges of the lenses and \( \cup _{j=1}^r\partial {\mathcal {U}}_j\).

-

(b)

On \(\Sigma _R\),

$$\begin{aligned} R_+(z)=R_-(z)(I+\Delta (z)), \end{aligned}$$ with the orientation taken clockwise, and by (92) and condition (b) for the RH problem for S we have that given \(u>0\), there exists \({\widehat{U}}>u\), such that

$$\begin{aligned} \Delta (z)={\left\{ \begin{array}{ll} {\mathcal {O}}({\widehat{u}}_n^{-1}) &{} \text { for }z\in \partial {\mathcal {U}}_j\text { and }j=1,\ldots ,r,\\ {\mathcal {O}}(|z|^n)&{}\text { for }z\text { on the edge of the lenses and }|z|<1, \\ {\mathcal {O}}(|z|^{-n})&{}\text { for }z\text { on the edge of the lenses and }|z|>1,\end{array}\right. } \end{aligned}$$ (94) as \(n\rightarrow \infty \), uniformly for \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widehat{U}}, n)\).

-

(c)

\(R(z)=I+{\mathcal {O}}(z^{-1})\) as \(z\rightarrow \infty \).

Lemma 6.1

Let \(\alpha _j\ge 0\) and \(\mathrm{Re \,}\beta _j=0\) for \(j=1,\ldots ,m\). Then given u, there exists \({\widetilde{U}}>u\) such that \(R(z)=I+{\mathcal {O}}({\widehat{u}}_n^{-1})\), as \(n\rightarrow \infty \), uniformly for \(z\in {\mathbb {C}}{\setminus } \Sigma _R\) and \(t_1,\ldots , t_m\) satisfying condition \((u,{\widetilde{U}}, n)\).

Proof

Small-norm analysis of RH problems with fixed contours is standard material, see e.g. [21], but for RH problems with shrinking contours the theory is less developed. In the following, we follow [17], where a slightly more detailed description may be found for a similar problem.

It is easily verified that

Consider

and assume this maximum is achieved at a point \(z_{\max }\in {\mathbb {C}}\cup \{\infty \}\) (or that \(R_+\) or \(R_-\) achieves this supremum at \(z_{\max }\)). We piecewise analytically continue \(R_-\) and \(\Delta \) to strips of width of order \(2c{\widehat{u}}_n/n\) containing \(\Sigma _R\), for some fixed but sufficiently small \(c>0\). On these strips the bounds on \(\Delta \) from (94) still hold. Furthermore, on these strips R is equal to either \(R_-\) or \(R_-(I+\Delta )\); in either case it follows by (94) that

for n sufficiently large, for all z in the strips. By deforming the contour of integration \(\Sigma _R\), but keeping it in the strips, we may assume that \(z_{\max }\) is of distance greater than \(c{\widehat{u}}_n/n\) from \(\Sigma _R\). Crucially, (96) still holds on this deformed contour, and combined with (95), it follows that

where we now assume that \(z_{\max }\) is of distance greater than \(c{\widehat{u}}_n/n\) from \(\Sigma _R\). Thus

By the fact that \(z_{\max }\) is of at least distance \(c{\widehat{u}}_n/n\) from \(\Sigma _R\) for some \(c>0\), by (94), and by the fact that \(\partial {\mathcal {U}}_j\) is of length of order \({\widehat{u}}_n/n\), it follows that given \(u>0\), there exists \({\widehat{U}}>u\) such that

as \(n\rightarrow \infty \), uniformly for \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widehat{U}}, n)\). Let \(\Sigma _{\text {Edge}}^{\text {out}}\) denote the edges of the lenses in the exterior of the unit disc in the complex plane. Then

as \(n\rightarrow \infty \) in the same limit. We pick \({\widetilde{U}}>{\widehat{U}}\) such that the right-hand sides of (98) and (99) are less than 1/4 for all \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widetilde{U}}, n)\) (if \(t_1,\ldots ,t_m\) satisfy condition \((u,{\widetilde{U}}, n)\) then \({\widehat{u}}_n>{\widetilde{U}}\), so this is indeed possible). Then

for all \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widetilde{U}}, n)\). Now consider

and assume this supremum is achieved at a point \(z_{\max ,2}\in {\mathbb {C}}\cup \{\infty \}\). By deforming the contour of integration, we may assume that \(z_{\max ,2}\) is of distance greater than \(c{\widehat{u}}_n/n\) from \(\Sigma _R\), for some constant \(c>0\). Thus, by (100), (96), (95), it follows that

The lemma follows upon integration, by similar arguments to (98) and (99). \(\quad \square \)
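As a sketch of the counting behind the bound on \(\partial {\mathcal {U}}_j\) in the proof above, suppose (as assumptions made only for illustration, with hypothetical constants \(C_1,C_2>0\) that do not appear elsewhere in the text) that \(\partial {\mathcal {U}}_j\) has length at most \(C_1{\widehat{u}}_n/n\), that \(|\Delta (s)|\le C_2{\widehat{u}}_n^{-1}\) for \(s\in \partial {\mathcal {U}}_j\) as in (94), and that z is of distance at least \(c{\widehat{u}}_n/n\) from \(\Sigma _R\). Then, for any fixed matrix norm,

$$\begin{aligned} \left| \int _{\partial {\mathcal {U}}_j}\frac{\Delta (s)}{s-z}\frac{ds}{2\pi i}\right| \le \frac{1}{2\pi }\cdot \frac{C_1{\widehat{u}}_n}{n}\cdot \frac{C_2{\widehat{u}}_n^{-1}}{c\,{\widehat{u}}_n/n}=\frac{C_1C_2}{2\pi c}\,{\widehat{u}}_n^{-1}. \end{aligned}$$

The factor \({\widehat{u}}_n/n\) coming from the length of the shrinking contour cancels against the factor \(n/{\widehat{u}}_n\) coming from the distance to the contour, so only the decay of \(\Delta \) survives. This displays the scalar mechanism only; the proof additionally has to control the factor \(R_-\), which is the purpose of the supremum argument.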

Lemma 6.2

Let \(\alpha _j\ge 0\) and \(\mathrm{Re \,}\beta _j=0\). Then given u, there exists \({\widetilde{U}}\) such that the following two statements hold.

-

(a)

As \(n \rightarrow \infty \),

$$\begin{aligned} R(0)=I+{\mathcal {O}}(1/n), \end{aligned}$$ uniformly for \(t_1,\ldots , t_m\) satisfying condition \((u,{\widetilde{U}},n)\).

-

(b)

As \(n\rightarrow \infty \),

$$\begin{aligned} R(0)=I+\sum _{j=1}^r\int _{\partial {\mathcal {U}}_j}\frac{\Delta _1(s)}{s}\frac{ds}{2\pi i }+{\mathcal {O}}\left( \frac{1}{n{\widehat{u}}_n}\right) , \end{aligned}$$ uniformly for \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widetilde{U}},n)\), where

$$\begin{aligned} \Delta _{1,22}(z)=\frac{\Phi _{1,11}\left( \mu _j;\left( w_\nu ^{(j)},\alpha _{\nu }^{(j)},\beta _\nu ^{(j)}\right) _{\nu =1}^{\mu _j}\right) }{\zeta _j(z)}, \end{aligned}$$ for \(z\in \partial {\mathcal {U}}_j\), with \(\Phi _1\) as in condition (c) for the RH problem for \(\Phi \).

Part (a) of Lemma 6.2 will be relied on to prove Proposition 4.2, while part (b) of Lemma 6.2 will be relied on to prove Proposition 4.1 (b).

Proof

We choose \({\widetilde{U}}\) as in Lemma 6.1, and evaluate (95) as \(n\rightarrow \infty \). The integration contour \(\Sigma _R\) partitions naturally into two parts, \(\cup _{j=1}^r \partial {\mathcal {U}}_j\) and the edges of the lenses \(\Sigma _{\mathrm{edge}}\). Denote the edges of the lenses on the inside of the unit disc by \(\Sigma _{\mathrm{Edge }}^{\mathrm{in}}\). Then by Lemma 6.1 and (94),

for some constants \(C_i>0\), \(i=1,\ldots ,4\), and sufficiently large \({\widehat{u}}_n\), for \(j,k\in \{1,2\}\). A similar statement can be made for the edges of the lenses outside the unit disc. We note that the length of the contour \(\cup _{j=1}^r \partial {\mathcal {U}}_j\) is of order \({\widehat{u}}_n/n\) as \(n\rightarrow \infty \), and so since \(\Delta (s)={\mathcal {O}}(1/{\widehat{u}}_n)\) for \(s\in \cup _{j=1}^r \partial {\mathcal {U}}_j\), it follows by Lemma 6.1 and (94), that

as \(n\rightarrow \infty \). Part (a) of the lemma follows from (101)–(102). By the definition of R in (93) and condition (c) of the RH problem for \(\Phi \), we have that \(\Delta (z)=\Delta _1(z)+{\mathcal {O}}({\widehat{u}}_n^{-2})\) as \(n\rightarrow \infty \), where

for \(z\in \partial {\mathcal {U}}_j\). By (101) it follows that as \(n\rightarrow \infty \),

where the orientation of the integral is clockwise. Since

for some analytic function \(g_{E,j}\), it follows by (103) that

and thus we have proven part (b) of the lemma. \(\quad \square \)
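The orders \(1/n\) and \(1/(n{\widehat{u}}_n)\) in Lemma 6.2 can be read off from a length-times-size count on \(\partial {\mathcal {U}}_j\). As a sketch, assume (for illustration only, with hypothetical constants \(C_1,C_2,C_3>0\)) that \(\partial {\mathcal {U}}_j\) has length at most \(C_1{\widehat{u}}_n/n\), that \(|s|\ge 1/2\) for \(s\in \partial {\mathcal {U}}_j\), that \(|\Delta (s)|\le C_2{\widehat{u}}_n^{-1}\), and that \(|\Delta (s)-\Delta _1(s)|\le C_3{\widehat{u}}_n^{-2}\) on \(\partial {\mathcal {U}}_j\). Then

$$\begin{aligned} \left| \int _{\partial {\mathcal {U}}_j}\frac{\Delta (s)}{s}\frac{ds}{2\pi i}\right|&\le \frac{1}{2\pi }\cdot \frac{C_1{\widehat{u}}_n}{n}\cdot 2C_2{\widehat{u}}_n^{-1}=\frac{C_1C_2}{\pi n},\\ \left| \int _{\partial {\mathcal {U}}_j}\frac{\Delta (s)-\Delta _1(s)}{s}\frac{ds}{2\pi i}\right|&\le \frac{1}{2\pi }\cdot \frac{C_1{\widehat{u}}_n}{n}\cdot 2C_3{\widehat{u}}_n^{-2}=\frac{C_1C_3}{\pi n{\widehat{u}}_n}. \end{aligned}$$

In contrast to the estimate at a point close to the contour, the point 0 stays at distance of order 1 from \(\partial {\mathcal {U}}_j\), so the shrinking length of the contour is not compensated and contributes the extra factor \(1/n\).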

6.5 Proof of Proposition 4.1

By (78), and the definition of T, S, R in (82), (86), (93), it follows that

By (87),

From Lemma 6.2 it follows that, given \(u>0\), there exists \({\widetilde{U}}>u\) such that \(R(0)=I+{\mathcal {O}}(n^{-1})\) uniformly for all \(t_1,\ldots , t_m\) satisfying condition \((u,{\widetilde{U}}, n)\) as \(n\rightarrow \infty \). Thus, substituting these asymptotics and (106) into (105), we obtain

in the same limit, which proves Proposition 4.2. This in turn proves Proposition 4.1(a) by the discussion following Proposition 4.2.

Substituting (106) and the asymptotics of Lemma 6.2(b) into (105), we obtain that given \(u>0\) there exists \({\widetilde{U}}>u\) such that

as \(n\rightarrow \infty \), uniformly for \(t_1,\ldots ,t_m\) satisfying condition \((u,{\widetilde{U}},n)\), with the integral taken with clockwise orientation. By (50),

as \(w_{\mu _j}^{(j)}-w_1^{(j)}\rightarrow 0\). By the definition of \(\zeta _j\) in (89) and the fact that the orientation of the integral is clockwise, it follows that

Substituting (107) and (108) into (109) proves Proposition 4.1 (b).

Notes

As an example where the approach fails to provide optimal error terms, consider \(m=3\) and \(\alpha ^2=2/5\). Then we obtain

$$\begin{aligned} I_\epsilon ^{(\alpha )}\le \int _0^1ds_1\int _0^1ds_2(s_1+\epsilon )^{-4/5}(s_2+\epsilon )^{-8/5}={\mathcal {O}}\left( \epsilon ^{-3/5}\right) , \end{aligned}$$ as \(\epsilon \rightarrow 0\). However, the optimal bound we are looking to obtain is of order \(\epsilon ^{(m-1)(1-m\alpha ^2)}=\epsilon ^{-2/5}\).
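The two orders in this example can be checked directly, since the double integral factorizes into two elementary integrals:

$$\begin{aligned} \int _0^1(s_1+\epsilon )^{-4/5}ds_1&=5\left[ (1+\epsilon )^{1/5}-\epsilon ^{1/5}\right] \rightarrow 5,\\ \int _0^1(s_2+\epsilon )^{-8/5}ds_2&=\frac{5}{3}\left[ \epsilon ^{-3/5}-(1+\epsilon )^{-3/5}\right] \sim \frac{5}{3}\,\epsilon ^{-3/5}, \end{aligned}$$

as \(\epsilon \rightarrow 0\), so the product is asymptotic to \(\frac{25}{3}\epsilon ^{-3/5}\). For the target exponent, \((m-1)(1-m\alpha ^2)=2\left( 1-\frac{6}{5}\right) =-\frac{2}{5}\), so the bound obtained in this way is larger than the optimal one by a factor of order \(\epsilon ^{-1/5}\).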

References

Arguin, L.P., Belius, D., Bourgade, P.: Maximum of the characteristic polynomial of random unitary matrices. Commun. Math. Phys. 349, 703–751 (2017)

Assiotis, T., Keating, J.P.: Moments of moments of characteristic polynomials of random unitary matrices and lattice point counts, Random Matrices Theory Appl., preprint on arXiv:1905.06072

Bailey, E.C., Keating, J.P.: On the moments of the moments of the characteristic polynomials of random unitary matrices, To appear in Commun. Math. Phys. https://doi.org/10.1007/s00220-019-03503-7

Basor, E.: Asymptotic formulas for Toeplitz determinants. Trans. Am. Math. Soc. 239, 33–65 (1978)

Basor, E.: A localization theorem for Toeplitz determinants. Indiana Univ. Math. J. 28, (1979)

Baik, J., Deift, P., Johansson, K.: On the distribution of the length of the longest increasing subsequence of random permutations. J. Am. Math. Soc. 12, 1119–1178 (1999)

Böttcher, A.: The Onsager formula, the Fisher–Hartwig conjecture, and their influence on research into Toeplitz operators. J. Stat. Phys. 78, 575–584 (1995)

Böttcher, A., Silbermann, B.: Analysis of Toeplitz Operators, 2nd edn. Springer, Berlin (2006)

Böttcher, A., Silbermann, B.: Introduction to Large Truncated Toeplitz Matrices. Springer, Berlin (1999)

Böttcher, A., Silbermann, B.: Toeplitz operators and determinants generated by symbols with one Fisher–Hartwig singularity. Math. Nachr. 127, 95–123 (1986)

Böttcher, A., Widom, H.: Szegő via Jacobi. Lin. Alg. Appl. 419, 656–667 (2006)

Charlier, C.: Asymptotics of Hankel determinants with a one-cut regular potential and Fisher–Hartwig singularities. IMRN 24, 7515–7576 (2019)

Chhaibi, R., Madaule, T., Najnudel, J.: On the maximum of the C\(\beta \)E field. Duke Math. J. 167, 2243–2345 (2018)

Claeys, T., Fahs, B.: Random Matrices with Merging Singularities and the Painlevé V Equation. SIGMA 12, 031, 44 (2016)

Claeys, T., Its, A., Krasovsky, I.: Emergence of a singularity for Toeplitz determinants and Painlevé V. Duke Math. J. 160, 207–262 (2011)

Claeys, T., Krasovsky, I.: Toeplitz determinants with merging singularities. Duke Math. J. 164, (2015)

Claeys, T., Fahs, B., Lambert, G., Webb, C.: How much can the eigenvalues of a random matrix fluctuate? Preprint arXiv:1906.01561 (To appear in Duke Math J.)