Abstract

We consider a branching random walk on \(\mathbb {Z}\) started by n particles at the origin, where each particle disperses according to a mean-zero random walk with bounded support and reproduces with mean number of offspring \(1+\theta /n\). For \(t\ge 0\), we study \(M_{nt}\), the rightmost position reached by the branching random walk up to generation [nt]. Under certain moment assumptions on the branching law, we prove that \(M_{nt}/\sqrt{n}\) converges weakly to the rightmost support point of the local time of the limiting super-Brownian motion. The convergence result establishes a sharp exponential decay of the tail distribution of \(M_{nt}\). We also confirm that when \(\theta >0\), the support of the branching random walk grows at a linear speed identical to that of the limiting super-Brownian motion, which was studied by Pinsky (Ann Probab 23(4):1748–1754, 1995). The rightmost position over all generations, \(M:=\sup _t M_{nt}\), is also shown to converge weakly, after rescaling by \(\sqrt{n}\), to that of the limiting super-Brownian motion, whose tail is found to decay like a Gumbel distribution when \(\theta <0\).


1 Introduction and main results

The study of extreme values of branching particle systems has attracted a considerable amount of attention during the last few decades. Early works on the tail behavior of branching Brownian motion trace back to Sawyer and Fleischman [14] and Lalley and Sellke [24]. During the same time period, the strong law of large numbers for the maxima of branching random walk was studied by Hammersley [15], Kingman [20], Biggins [5] and Bramson [8].

The tail behavior of the maximal displacement of branching random walk has been derived only recently. We classify these results into three cases according to the mean number of offspring, which we denote by \(\mu \). In the supercritical case (\(\mu >1\)), the asymptotic tail distribution of the position of the rightmost particle was derived by Aidekon [2], who proved that the suitably centered maximal displacement converges weakly to a random shift of the Gumbel distribution (see also [1, 4, 9, 10, 17]).

The subcritical case (\(\mu <1\)) was studied in [29]. It was proved in [29] that the tail distribution of the position of the rightmost particle decays exponentially. Moreover, the exact rate of decay was derived.

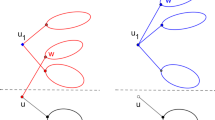

The critical case \(\mu =1\) was studied by Lalley and Shao in [25]. Let \(R_{n}\) be the rightmost position at generation n of a branching random walk started by one particle at the origin. It was proved in [25] that, when the jump distribution has mean 0 and under some moment assumptions, the distribution of \(R_{n}/\sqrt{n}\) conditional on the branching process surviving for n generations converges weakly to a distribution G given by

where \(\{\widetilde{X}_{t}\}_{t \ge 0}\) is a super-Brownian motion, and \(P_{\delta _0}\) stands for the probability distribution of \((\widetilde{X}_t)\) with \(\widetilde{X}_0=\delta _0\), the Dirac measure at the origin.

In this paper, we consider the near-critical case, namely, when the mean number of offspring is \(\mu =1+\theta /n\) for some \(\theta \in \mathbb {R}\). This is a regime where phase transitions occur and interesting phenomena arise. Moreover, unlike [25], we consider the rightmost point of the support of the local time process rather than that of the process itself. The local time process plays a critical role in other studies as well, for example, in the study of the susceptible-infected-recovered (SIR) epidemic model [23, 26].

Specifically, let \(X_k(x)\) denote the number of particles at site x at generation k. Recall that the local time process of a spatial particle system X is given by

Suppose that the branching random walk starts with n particles at the origin and the mean number of offspring is \(1+\theta /n\) for some \(\theta \in \mathbb {R}\). It is well known that if each particle carries mass 1/n, and if we rescale time by 1/n and space by \(1/\sqrt{n}\), then as \(n\rightarrow \infty \), the measure-valued process converges weakly to a super-Brownian motion with drift \(\theta \); see, e.g., [30]. The rescaled process \(\big (n^{-3/2}L_{[ nt]}(\sqrt{n}x)\big )_{t\ge 0,\, x\in \mathbb {R}}\) also converges weakly to the local time density of the super-Brownian motion; see [22, 26].

Note that the maximum of the support of \(L_{nt}\) equals \(M_{nt}\), the rightmost position reached by the branching random walk up to generation [nt]. The weak convergence of the branching random walk to super-Brownian motion, however, does not imply the weak convergence of \(M_{nt}/\sqrt{n}\), because \(M_{nt}/\sqrt{n}\) is not a continuous function of measures with respect to the topology of weak convergence (see, for example, the discussion after Theorem 3 in [25]). Our first main result, Theorem 1.1, confirms that \(M_{nt}/\sqrt{n}\) converges weakly to \(\widetilde{M}_t\), the rightmost support point of the limiting super-Brownian motion up to time t.

[25] also studied the tail distribution of the rightmost position over all generations, namely,

It was proved in [25] that in the critical case and under some moment assumptions,

Here \(\alpha \) is a constant that depends on the standard deviations of the jump distribution and the offspring distribution. The asymptotics (1.2) implies that for a critical branching random walk started with n particles at the origin, the tail distribution of \(M/\sqrt{n}\), that is \(P\big (M\ge \sqrt{n}x\big )\), decays at rate \(O(1/x^2)\) for large values of n (see Corollary 2 in [25]). We will show in Corollary 1.5(i) that the corresponding tail distribution of \(M_{nt}/\sqrt{n}\) decays at rate \(\exp (- c(t) x^2)\). The difference between these two decay rates implies that the heavy-tail behavior of M in the critical case is due to particles that survive for more than O(n) generations.

In the supercritical case, precise estimates on the tail distribution of the radius of the support of a super-Brownian motion were established by Pinsky in [31] and [32]. Let \(B_{r}(0)\) be the ball of radius r centered at the origin. It was proved in [32] (see equation (6) therein) that for a super-Brownian motion \(\widetilde{X}=\{\widetilde{X}^{}_{t}\}_{t\ge 0}\) with drift \(\theta >0\), diffusion coefficient \(\sigma _R^2\) and branching coefficient \(\sigma ^2\), one has

where \(P^\theta _{\delta _{0}}\) stands for the probability distribution of \(\widetilde{X}^{}\) with drift \(\theta \), \(\widetilde{X}^{}_0=\delta _0\), and

is the local time process associated with \(\widetilde{X}.\) As to \(u_{r}{(\cdot )},\) for any \(r>0\), \(u_{r}({t,x})\) is the minimal positive solution to the following nonlinear PDE:

The existence of a positive solution to (1.3) was derived in Theorem 1 of [31], along with some sharp bounds on the minimal positive solution. The uniqueness of positive solutions to (1.3) can be established by an argument similar to the proof of Proposition 4.4 in this paper.

One important implication of Theorem 1 of [31] concerns the growth rate of the support of \(\widetilde{X}\). It was proved in Theorem 1 of [32] that the support grows linearly at large times, with asymptotic speed \(1/{\bar{\gamma }}\), where \({\bar{\gamma }} := (2\theta \sigma _R ^{2})^{-1/2}\). Specifically, one has

and

where \(\zeta _{\widetilde{X}}\) is the extinction time of \(\widetilde{X}\). The above convergence in probability was strengthened to almost sure convergence in [21].

The aforementioned growth rate result leads to the second aim of this paper, namely, to derive the growth rate of the support of near-critical branching random walks. As mentioned earlier, results on the limiting measure-valued processes are in most cases not precise enough for the study of discrete particle systems. The motivation for this work comes from the study of population and epidemic models, where sharp bounds on the local time are key elements in the proofs of phase transitions. For example, in [23], a phase transition for spatial measure-valued susceptible-infected-recovered (SIR) epidemic models was established, and a key ingredient in the proof is the growth rate of the support of the local time (see the discussion in Section 1.2 of [23]).

Before we state our main results, we define more carefully the branching random walk that we study.

The model For any fixed \(n\in \mathbb {N}\) and a constant \(\eta \in \mathbb {R}\), \(P^{\eta }_{n}\) stands for the probability distribution of a discrete-time branching random walk \(X^{}=(X^{}_k(x))_{k\ge 0, x\in \mathbb {Z}}\) initiated by n particles at the origin and with the following properties. In each generation, particles first jump (independently of each other) according to a distribution with finite range, \(F_{RW}= \{a_{k}\}_{k\in [-R,R]}\), which has mean 0 and variance \(\sigma _R^{2}\), and then each particle branches independently according to an offspring distribution \(F^{\eta }_{B}=\{p^{\eta }_{i}\}_{i\ge 0}\), which has \(p^{\eta }_{0}>0\), expectation \(1+\eta \), variance \(\sigma ^{2}(\eta )\) and third moment \(\gamma (\eta )\). The quantities \(\sigma (\eta )\) and \(\gamma (\eta )\) satisfy, for some \(\delta >0\),

We remark that (1.4) is the only assumption that we make on \(F^{\eta }_{B}\) for different values of \(\eta \).

Notation We often use the abbreviated notation \(P_{n}= P_{n}^{0}\), \(F_{B}= F^{0}_{B}\), \(P^\eta = P_{1}^{\eta }, E^\eta = E_{1}^{\eta }\), etc.

Observe that under this model, particles jump first and then reproduce, just as in [25]. As explained in Remark 3 of [25], this ordering does not change the limiting tail behavior of the maximal displacement; see also the Taylor expansion (2.3) of the function Q below.
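A minimal Monte Carlo sketch of the model may help fix ideas. Purely for illustration, it assumes steps uniform on \(\{-1,0,1\}\) (so \(R=1\)) and Binomial\((2,p)\) offspring with \(2p=1+\theta /n\) (so \(p_0=(1-p)^2>0\)); neither choice comes from the paper, and the function names are hypothetical. Each particle jumps first and is then replaced by its offspring at the new site, and the sketch records the rightmost site occupied in generations \(0,\dots ,[nt]\), i.e. the rightmost point of the support of the local time.

```python
import random
from collections import Counter


def offspring_binomial2(p: float, rng: random.Random) -> int:
    """Hypothetical offspring law Binomial(2, p): mean 2p, p_0 = (1 - p)**2."""
    return int(rng.random() < p) + int(rng.random() < p)


def rightmost_up_to(n: int, theta: float, t: float, seed: int = 0) -> int:
    """Rightmost site occupied in generations 0, ..., [nt] (sketch of M_[nt]).

    Assumed for illustration: steps uniform on {-1, 0, 1} (R = 1) and
    Binomial(2, p) offspring with 2p = 1 + theta/n, which needs |theta| < n.
    """
    rng = random.Random(seed)
    p = (1.0 + theta / n) / 2.0
    generation = Counter({0: n})  # generation 0: n particles at the origin
    rightmost = 0
    for _ in range(int(n * t)):
        nxt = Counter()
        for site, count in generation.items():
            for _ in range(count):
                new_site = site + rng.choice((-1, 0, 1))  # jump first ...
                k = offspring_binomial2(p, rng)  # ... then branch at new_site
                if k:
                    nxt[new_site] += k
        if not nxt:  # extinct: no later generation can reach new sites
            break
        generation = nxt
        rightmost = max(rightmost, max(generation))
    return rightmost
```

For example, `rightmost_up_to(400, 1.0, 1.0, seed=3) / 400 ** 0.5` gives one sample of \(M_{nt}/\sqrt{n}\); repeating over many seeds gives an empirical tail that can be set against Theorem 1.1.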

We study the tail behavior of the maximal displacement of X up to generation [nt] for \(t\ge 0\), that is,

Define \((u_{k}^{\eta }(y))\) to be the function obtained by linear interpolation in y from the values

Let \(\theta \in \mathbb {R}\) and \(n\in \mathbb {N}\). Our first main result establishes the weak convergence of \(M_{nt}/\sqrt{n}\) under \(P^{\theta /n}_{n}\), to the rightmost point in the support of the local time \(\widetilde{L}_t\) of the limiting super-Brownian motion.

Theorem 1.1

For every \(t\ge 0\) and \(x\ge 0\),

Remark 1.2

The convergence in Theorem 1.1 is new even when \(\theta =0\), i.e., in the critical case. In Corollary 2 of [25], the tail behavior of the maximal displacement over all generations was derived for the critical case. However, such results are very different from the tail behavior of \(M_{nt}\), which describes the propagation of the support of branching random walks. In terms of the proof, Theorem 1.1 requires different methods, both for deriving the tightness of the sequence \(\left( u^{ \theta /n}_{[ n_{} t]}(\sqrt{n} x): t\ge 0, \, x>0\right) _{n\ge 1}\) (Sect. 3) and for analyzing the limiting functional, which satisfies a singular parabolic PDE (Sect. 4).

Remark 1.3

When \(\theta \ne 0\) and \(F^{\theta /n}_{B}\) is either Poisson or Binomial with mean \(1+\theta /n\), the convergence of \(u_{n\cdot }^{\theta /n}(\sqrt{n}\cdot )\) follows from the convergence in the critical case together with the convergence of the likelihood ratio between the near-critical and critical systems. We refer to Section 3.3 of [26] for a similar argument on the convergence of the likelihood ratio between SIR epidemics and critical branching random walks. In general, such an argument fails because the likelihood ratio may not exist, let alone converge.

Remark 1.4

The finite range assumption on the underlying random walk facilitates controlling the overshoot of the random walk in the analysis of a discrete Feynman–Kac formula; see, for example, (3.5), (3.8), (3.12) and (4.3). By using finite moment results for the overshoot distribution (Lemma 10 in [25] and Exercise 6, p. 232 of [35]), one can relax this assumption to a finite 5th moment (the exponential decay in Lemma 3.3(ii) then becomes polynomial decay, but the main theorems remain true). See Remark 3.6 for an example of how to modify the argument under a finite 5th moment assumption.

We now describe a corollary to Theorem 1.1. Consider the following Fisher–Kolmogorov–Petrovskii–Piscounov (FKPP) equation

The existence and uniqueness of positive solutions to (1.6) will be proved in Sect. 4.

We will also need the following nonlinear ODE. By Proposition 2 in [31], for each \(\rho \in (0,\sqrt{2})\), there exists a unique positive increasing solution \(f=f_\rho \in \mathcal C^2([0,\infty ))\) to the equation

Moreover, one has

We will show in Corollary 1.5 that a traveling-wave sub-solution of (1.6) can be obtained from \(f_{\rho }\). See Section 1 of [16] for a discussion about the FKPP equation and traveling-wave solutions.

In the following corollary, we derive some exponential bounds on \(u_{n \cdot }^{\theta /n}(\sqrt{n}\cdot )\).

Corollary 1.5

For all \( x>0, t>0\), we have

where the following bounds on \(\phi \) hold:

(i) when \(\theta \ge 0\), for every \(\varepsilon >0\), there exist \(c_{\varepsilon }>0\) and \(M_{\varepsilon }>0\) such that for all \( x>M_{\varepsilon }\),

$$\begin{aligned} \phi (t,x) \le \frac{1}{\sigma ^2}\Big (\theta +\frac{12\sigma _R^{2}}{x^2}\Big )\exp \Big ( -\Big (\frac{x^{2}}{2\sigma _R^{2}(1+\varepsilon )t }- \theta t -c_{\varepsilon }\Big )_{+}\Big ), \quad \text {for all } t\ge 0. \end{aligned}$$

(ii) when \(\theta > 0\), for each \(\rho \in (0,\sqrt{2})\), we have

$$\begin{aligned} \phi (t,x) \ge \frac{2\theta }{\sigma ^2} f_{\rho }\left( \left( \rho \theta t - \frac{\sqrt{\theta }}{\sigma _R}x\right) _+\right) , \quad \text {for all } x>0, \ t\ge 0. \end{aligned}$$
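Reading the two bounds of Corollary 1.5 along rays \(x=vt\) indicates where the front of \(\phi \) sits when \(\theta >0\). The following is only a heuristic consequence of the displayed bounds (it is not a proof of Theorem 1.7), with \({\bar{\gamma }}=(2\theta \sigma _R^{2})^{-1/2}\) as in the introduction:

$$\begin{aligned} \text {(i)}:&\quad \frac{(vt)^{2}}{2\sigma _R^{2}(1+\varepsilon )t}-\theta t-c_{\varepsilon } =\Big (\frac{v^{2}}{2\sigma _R^{2}(1+\varepsilon )}-\theta \Big )t-c_{\varepsilon }\rightarrow \infty \quad \text {if } v>\sigma _R\sqrt{2\theta (1+\varepsilon )},\\ \text {(ii)}:&\quad \rho \theta t-\frac{\sqrt{\theta }}{\sigma _R}\,vt =\sqrt{\theta }\Big (\rho \sqrt{\theta }-\frac{v}{\sigma _R}\Big )t\rightarrow \infty \quad \text {if } v<\rho \sqrt{\theta }\,\sigma _R. \end{aligned}$$

Hence \(\phi (t,vt)\rightarrow 0\) as \(t\rightarrow \infty \) when \(v>\sigma _R\sqrt{2\theta (1+\varepsilon )}\) (the prefactor in (i) stays bounded), while \(\phi (t,vt)\) stays bounded away from 0 when \(v<\rho \sqrt{\theta }\,\sigma _R\), because \(f_\rho \) is positive and increasing. Letting \(\varepsilon \downarrow 0\) and \(\rho \uparrow \sqrt{2}\), both thresholds tend to \(\sigma _R\sqrt{2\theta }=1/{\bar{\gamma }}\).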

Remark 1.6

The convergence in (1.8) cannot be strengthened to uniform convergence in (t, x) over any infinite domain of the form \(\{(t,x): t\ge t_0,\ x\ge x_0\}\). This is due to the discontinuity of \(u_{n\cdot }^{\theta /n}(\sqrt{n}\cdot )\) near the boundary of the support of the branching random walk; see the next theorem for the precise statement.

The next main result establishes the large time growth rate of the support of \(X^{\theta /n}\):

Theorem 1.7

Let \({\bar{\gamma }} = (2\theta \sigma _R^{2})^{-1/2}\) for \(\theta >0\).

(i) For any \(\gamma <{\bar{\gamma }}\), there exists \(N(\gamma )>0\) such that for all \(n>N(\gamma )\),

$$\begin{aligned} P^{\theta /n}_{n}\big (L_{n\gamma t}((\sqrt{n} t,\infty )) =0 \text { for all } t \text { large enough}\big ) =1; \end{aligned}$$

(ii) For any \(\gamma >{\bar{\gamma }}\), there exists \(N(\gamma )>0\) such that for all \(n>N(\gamma )\),

$$\begin{aligned} P^{\theta /n}_{n} \big (X^{}_{n\gamma t }((\sqrt{n} t,\infty )) >0 \text { for all } t \text { large enough} \, | \, X \text { survives} \big ) =1. \end{aligned}$$

Remark 1.8

In [32], the author established the linear growth of the support of supercritical super-Brownian motions by observing that bounds on \((\phi (t,x))\) of the type given in Corollary 1.5 imply that

where \(\zeta _{\widetilde{X}}\) is the extinction time of \(\widetilde{X}\). It follows from Corollary 1.5 that for any \(\varepsilon >0\), there exists \(T_0>0\) such that for any \(t>T_0\), we can find \(N_0=N_0(t)\) such that for all \(n\ge N_0\),

This result, however, is not enough to establish the linear growth of the support of the branching random walk, because we would need (1.10) to hold for all t large enough with \(N_0\) independent of t. Such uniform convergence seems difficult to prove, given the discontinuity of the limit in (1.9) as a function of \(\gamma \). We prove the linear growth result by a different argument.

Finally, analogous to the critical case in [25], we derive the tail distribution of the maximal displacement over all generations, namely, \(M=\sup _k M_k\).

Theorem 1.9

When \(\theta \ne 0\), for every \(x_0> 0\), uniformly over \(x\in [x_0,\infty ),\) we have

where

is the unique solution to

Moreover, we have

where \(\theta ^+=\max (\theta ,0).\)

Remark 1.10

When \(\theta =0\), the convergence (1.11) and the ODE (1.13) also hold; see Corollary 2 and Proposition 23 (and its proof) in [25]. The tail behavior of M, however, depends crucially on whether \(\theta =0\) or not. When \(\theta =0,\) by Corollary 2 in [25], the tail distribution of \(M/\sqrt{n}\) decays at rate \(1/x^2\). In fact, by solving (1.13) with \(\theta =0\) along the same lines as in the proof of Theorem 1.9, one gets that

A theorem of Dynkin (see, e.g., Theorem 8.6 in [12]) links (1.13) with \(\theta =0\) to the support of super-Brownian motion. In contrast, in the sub-near-critical case (or in the super-near-critical case conditioned on extinction), the tail distribution of \(M/\sqrt{n}\) is similar to a Gumbel distribution.

Remark 1.11

In [25], the convergence (1.11) was established by first proving the convergence of a complicated object \(\lim _{x\rightarrow \infty } w_\infty (x + y/w_\infty (x))/w_\infty (x)\), where \(w_\infty (x)=P_1(M>x)\) for any x; see equation (23) therein for the precise statement. In this paper, we prove the convergence of \(P^{\theta /n}_n(M\ge \sqrt{n}x)\) directly.

Organization of the paper: The rest of this paper is organized as follows. In Sect. 2, we establish a discrete Feynman–Kac formula for the tail distribution of the maximal displacement, which will be used in Sect. 3 to establish the tightness of \((nw_{n\cdot }^{\theta /n}(\sqrt{n}\cdot ))\), where \(w_{k}^{\theta /n}(x)=P_{1}^{\theta /n} (M_{k}\ge x)\) for each k and x. In Sect. 4, we identify the limit as the unique solution to a nonlinear parabolic PDE with an infinite boundary condition, based on which we establish Theorem 1.1 and Corollary 1.5. In Sect. 5 we prove Theorem 1.7, and in Sect. 6 we prove Theorem 1.9.

2 A discrete Feynman–Kac formula

Recall that \(F^{\theta /n}_{B}\) stands for an offspring distribution with mean \(1+\theta /n\). Denote by \(f^{\theta /n}\) the probability generating function of \(F^{\theta /n}_{B}\). Define

$$\begin{aligned} Q^{\theta /n}(s) := 1-f^{\theta /n}(1-s), \quad s\in [0,1]. \end{aligned}$$

The function \(Q^{\theta /n}\) is increasing and concave with \(Q^{\theta /n}(0)=0, (Q^{\theta /n})'(0)=1+\theta /n\) and \(Q^{\theta /n}(1) = 1-p_0^{\theta /n} < 1\). We also define

The derivation of the Feynman–Kac formula uses ideas from Section 2.2 in [25]. The following lemma (see Lemma 4.1 in [29]) gives a convolution equation for \({w}_k^{\theta /n}(\cdot )\) based on \(Q^{\theta /n}(\cdot )\) and the random walk distribution \(F_{RW} = {\{a_y\}_{y\in \mathbb {Z}}}\). It is obtained by conditioning on the first generation, in which a single particle first jumps according to the step distribution \( \{a_{k}\}\) and then reproduces according to \(F_B^{{\theta /n}}\), which results in i.i.d. subtrees.
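The listed properties of \(Q^{\theta /n}\) can be checked numerically. The sketch assumes the standard definition \(Q(s)=1-f(1-s)\), which is the choice consistent with \(Q(0)=0\), \(Q'(0)=1+\theta /n\) and \(Q(1)=1-p_0\), together with a hypothetical Binomial\((2,p)\) offspring law with \(2p=1+\theta /n\); the function names are ours. Probabilistically, \(Q(s)\) is the probability that at least one offspring is "marked" when each offspring is marked independently with probability s.

```python
def pgf(pmf, s):
    """Probability generating function f(s) = sum_i p_i s^i."""
    return sum(p_i * s**i for i, p_i in enumerate(pmf))


def Q(pmf, s):
    """Q(s) = 1 - f(1 - s): P(at least one offspring marked), marks i.i.d. w.p. s."""
    return 1.0 - pgf(pmf, 1.0 - s)


def check_Q(pmf, h=1e-6, grid=200):
    """Numerically check Q(0) = 0, Q'(0) = mean, Q(1) = 1 - p_0, monotonicity, concavity."""
    values = [Q(pmf, k / grid) for k in range(grid + 1)]
    return {
        "mean": sum(i * p_i for i, p_i in enumerate(pmf)),
        "Q(0)": Q(pmf, 0.0),
        "Q'(0)": (Q(pmf, h) - Q(pmf, 0.0)) / h,  # forward difference
        "Q(1)": Q(pmf, 1.0),
        "increasing": all(a <= b for a, b in zip(values, values[1:])),
        # concavity: second differences on the grid are non-positive
        "concave": all(values[k - 1] - 2 * values[k] + values[k + 1] <= 1e-12
                       for k in range(1, grid)),
    }


p = (1.0 + 1.0 / 100) / 2.0  # theta = 1, n = 100, so the mean is 2p = 1.01
pmf = [(1 - p) ** 2, 2 * p * (1 - p), p ** 2]
report = check_Q(pmf)
```

With these parameters the forward-difference slope should be close to the mean 1.01, and `report["Q(1)"]` equals \(1-p_0\) up to rounding.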

Lemma 2.1

For all \( k\ge 1\),

Recall that \(F^{\theta /n}_{B}\) has a bounded third moment, hence by the Taylor expansion of \(Q^{\theta /n}(\cdot )\) at \(s=0\) we have

where

Define

and

Lemma 2.1 can be rewritten in the following form, which is more convenient for our purposes.

Lemma 2.2

For all integers \( x\ge 1\) and \( k \ge 1\),

We will also need the following result on the boundedness and monotonicity of \(H^{\theta /n}\) (see Lemma 4.3 in [29]):

We denote by \(\{ {\mathcal {W}}_{k}\}_{k \ge 0}\) a random walk on \(\mathbb {Z}\) with the following law:

in other words, \(\{{\mathcal {W}}_{k}\}_{k \ge 0}\) is a reflection of W, the random walk associated with our branching system. We use \(P_{x}\) and \(E_{x}\) to denote the probability measure and expectation of \(\{{\mathcal {W}}_{k}\}_{k \ge 0}\) with \({\mathcal {W}}_{0}=x\), and omit the subscript when \(x=0\) (and when there is no confusion). Moreover, to improve readability, we often abbreviate the notation \(E_{[ \sqrt{n}x ]}\) to \(E_{ \sqrt{n}x}\), \({\mathcal {W}}_{[nt]}\) to \({\mathcal {W}}_{ nt }\), \(w_{[nt]}^{\theta /n_{}}\) to \(w_{nt}^{\theta /n_{}}\), etc. We also denote by \(\mathcal {F}^{{\mathcal {W}}}=(\mathcal {F}^{{\mathcal {W}}}_{k})_{k\ge 0}\) the natural filtration of \(\{{\mathcal {W}}_{k}\}_{k \ge 0}\).

For any \( 0 \le x \le y<\infty \), define the stopping times

and

Further define for each \(m\ge 0\) and \(0\le k \le m\),

where we use the convention that for any \(k\le 0,\) \(\sum _{j=1}^{k}=0\) and \(\prod _{j=1}^{k}=1\). In particular, \({Y^{(n)}_0}=w^{\theta /n}_{m}({\mathcal {W}}_0)\).

As in Lemma 4.4 of [29], the process \(Y^{}=\{Y^{(n)}_{k}\}_{0\le k\le {m}}\) is a martingale. To recall why this holds, define

Note that \(Y^{(n),2}_{k+1}\) is \(\mathcal F^{{\mathcal {W}}}_k\)-measurable, so Y is a martingale if and only if

which is verified by using Lemma 2.2 and considering the cases \({\bar{\tau }}<k\), \({\bar{\tau }}=k\) and \({\bar{\tau }} > k\) separately.

Lemma 2.3

If \({\mathcal {W}}_0=x\ge 0\), then \(Y^{}\) is a martingale with respect to \(\mathcal F^{{\mathcal {W}}}\).

The following lemma gives a discrete Feynman–Kac formula.

Lemma 2.4

For any \(m \in \mathbb {N}\), \(0\le k\le m\) and \( 0 \le y\le x< z\le \infty \), we have

(i)

$$\begin{aligned} \begin{aligned} w^{\theta /n}_{m}(x) =&E_{x}\Big (\Big (1+\frac{\theta }{n}\Big )^{{\bar{\tau }}_{y,z}\wedge (m-k)} w_{m-(m-k)\wedge {\bar{\tau }}_{y,z}}^{\theta /n}({\mathcal {W}}_{{\bar{\tau }}_{y,z}\wedge (m-k)}) \\&\qquad \times \prod _{j=1}^{{\bar{\tau }}_{y,z}\wedge (m-k)}\big [1-H^{\theta /n}\big ( w_{m-j}^{\theta /n}({\mathcal {W}}_{j})\big )\big ] \Big ). \end{aligned} \end{aligned}$$

(ii)

$$\begin{aligned} \begin{aligned} w^{\theta /n}_{m}(x) =&E_{x}\Big (\Big (1+\frac{\theta }{n}\Big )^{{\bar{\tau }}_{y,z}\wedge m} w_{m- {\bar{\tau }}_{y,z}\wedge m}^{\theta /n}({\mathcal {W}}_{{\bar{\tau }}_{y,z}\wedge m}) \\&\qquad \times \prod _{j=1}^{{\bar{\tau }}_{y,z}\wedge m}\big [1-H^{\theta /n}\big ( w_{m-j}^{\theta /n}({\mathcal {W}}_{j})\big )\big ] \Big ). \end{aligned} \end{aligned}$$

Proof

Note that \({\bar{\tau }}_{y,z} \le {\bar{\tau }}\) for every \( 0 \le y< z\le \infty \). The conclusions follow by taking the stopping times \({\bar{\tau }}_{y,z}\wedge (m-k)\) and \({\bar{\tau }}_{y,z}\wedge m\) and applying the optional stopping theorem to the martingale \(\{Y^{(n)}_{k}\}\). \(\square \)

3 Tightness

In this section, we establish the tightness of the sequence of functions \(\left( n w^{\theta /n}_{[ n_{} t]}(\sqrt{n} x)\right) _{n\ge 1}\). Because \(n w^{\theta /n}_{[ n_{} t]}(0)=n\rightarrow \infty \), the sequence cannot be tight when (t, x) ranges over the whole domain \(\{(t,x): t\ge 0,x\ge 0\}\). Special treatment is needed to deal with this singularity.

We start with some exponential bounds on the distribution of the maximum of W.

Lemma 3.1

Let \(Y_{1},Y_{2},...\) be i.i.d. non-degenerate random variables with mean 0 and \(P(|Y_{1}|\ge R) =0\) for some R. Let \(S_{n}=Y_{1}+...+Y_{n}\).

(i) There exist constants \(C_{3.1},\beta _{3.1}>0\) such that

$$\begin{aligned} P\Big (\max _{i=0,...,n} |S_{i}|\ge s \sqrt{n} \Big ) \le C_{3.1}e^{-\beta _{3.1}s^{2}}, \quad \text {for all } n \ge 1, \ s>0. \end{aligned}$$

(ii) There exist constants \(C_{3.1}',\beta '_{3.1}>0\) such that

$$\begin{aligned} P\Big ( \max _{i=0,...,n} S_{i} \le s\sqrt{n}\Big ) \le C'_{3.1}e^{-\beta '_{3.1}/s^{2}}, \quad \text {for all } n\ge 1, \ s>0. \end{aligned}$$

Proof

(i) is a special case of Corollary A.2.7 in [27].

(ii) The result follows from equation (2.51) in Proposition 2.4.5 of [27]; see also Exercise 2.7 therein. \(\square \)
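Part (i) of Lemma 3.1 can be illustrated by simulation. The sketch below uses a simple \(\pm 1\) random walk as a hypothetical stand-in for a mean-zero walk with bounded steps and estimates \(P(\max _{i\le n}|S_i|\ge s\sqrt{n})\) for several values of s from the same sample paths, so the estimates are automatically non-increasing in s. It only illustrates the Gaussian-type decay in s; it does not estimate the constants \(C_{3.1}\) and \(\beta _{3.1}\), and the function name is ours.

```python
import random


def max_abs_tail(n, s_values, trials=2000, seed=0):
    """Monte Carlo estimate of P(max_{i<=n} |S_i| >= s*sqrt(n)) for each s.

    S is a simple +/-1 random walk (a hypothetical stand-in for a mean-zero
    walk with bounded steps); the same paths are reused for every s.
    """
    rng = random.Random(seed)
    maxima = []
    for _ in range(trials):
        pos, running_max = 0, 0
        for _ in range(n):
            pos += 1 if rng.random() < 0.5 else -1
            running_max = max(running_max, abs(pos))
        maxima.append(running_max)
    root_n = n ** 0.5
    return [sum(m >= s * root_n for m in maxima) / trials for s in s_values]
```

Plotting the logarithm of each estimate against \(s^2\) should give roughly a line with negative slope, in line with (i); estimates for large s are noisy because the events are rare.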

In the following lemma, we compute the probability that \(X^{}\) (under \(P_{n}^{\theta /n}\)) dies out as \(n\rightarrow \infty \).

Lemma 3.2

Assume that \(F^{\theta /n}_{B}\) satisfies (1.4). When \(\theta >0\), we have

Proof

Recall that \(f^{\theta / n}\) is the probability generating function of \(F^{\theta / n}_{B}\). Let \(q_{n}\) be the smallest non-negative root of the equation \(f^{\theta /n}(q)=q\). By Theorem 2 in Chapter I.A.5 of [3], \( P^{\theta / n}_{1}\big (X \text { dies out }\big ) = q_n. \) By (1.4), for \(q\in (0,1)\),

It follows that

and

\(\square \)
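The extinction probability in the proof above can be computed numerically. The sketch below assumes, for illustration only, Binomial\((2,p)\) offspring with \(2p=1+\theta /n\), for which the smallest root of \(f(q)=q\) is \(((1-p)/p)^2\) when \(p>1/2\). It computes \(q_n\) as the limit of the iterates \(f\circ \cdots \circ f(0)\) (the probabilities of extinction by generation k) and compares \(q_n^n\), the probability that all n ancestral lines die out, with \(e^{-2\theta /\sigma ^2}\). That comparison value comes from the standard near-critical approximation \(1-q_n\approx 2\theta /(n\sigma ^2)\), which is our assumption here, not a transcription of the elided display; the function names are ours.

```python
import math


def extinction_probability(pgf, tol=1e-14, max_iter=10**7):
    """Smallest root of pgf(q) = q, computed as lim_k f^(k)(0).

    f^(k)(0) is the probability that a single ancestor's line is extinct by
    generation k; these iterates increase to the extinction probability.
    """
    q = 0.0
    for _ in range(max_iter):
        q_next = pgf(q)
        if abs(q_next - q) < tol:
            return q_next
        q = q_next
    raise RuntimeError("fixed-point iteration did not converge")


def binomial2_pgf(p):
    """pgf of the hypothetical Binomial(2, p) offspring law: (1 - p + p s)^2."""
    return lambda s: (1.0 - p + p * s) ** 2


theta, n = 1.0, 1000
p = (1.0 + theta / n) / 2.0  # mean 2p = 1 + theta/n
sigma2 = 2.0 * p * (1.0 - p)  # offspring variance
q_n = extinction_probability(binomial2_pgf(p))
all_lines_die = q_n ** n  # n independent ancestors
```

With these parameters both `all_lines_die` and `math.exp(-2 * theta / sigma2)` should be close to \(e^{-4}\).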

Before stating our next lemma, we recall the duality principle, which states that a supercritical branching process conditioned on extinction has the same distribution as its dual subcritical process; see, for example, Theorem 3 in Chapter I.D.12 in [3]. Specifically, let \(\overline{Z}= \{\overline{Z}_{n}\}_{n\ge 0}\) be a Galton–Watson process with \(\overline{Z}_0=1\) and an offspring distribution \(\overline{F}_{B} = \{\overline{p}_i\}_{i\ge 0}\) that has mean \(\mu >1\) and \(\overline{p}_0>0\). Define

$$\begin{aligned} B := \{\overline{Z}_n = 0 \text { for some } n\ge 1\} \end{aligned}$$

to be the event of extinction, and let \(q=P(B)\in (0,1)\). Then the duality principle says that the process \(\{\overline{Z}_{n}\}_{n\ge 0}\) conditioned on the event B has the same distribution as a subcritical Galton–Watson branching process \(\{ \widetilde{Z}_{n}\}_{n\ge 0}\) with \(\widetilde{Z}^{}_{0}=1\) and offspring generating function

$$\begin{aligned} \widetilde{f}(s) = \frac{\overline{f}(qs)}{q}, \quad s\in [0,1], \end{aligned}$$

where \(\overline{f}^{}\) denotes the probability generating function of \(\overline{F}_{B}\).
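To make the duality principle concrete, here is a small numerical check using the standard form of the dual offspring generating function, \(s\mapsto \overline{f}(qs)/q\), as in the duality principle cited above. For the hypothetical choice \(\overline{F}_B=\) Binomial\((2,p)\) with \(p>1/2\), one has \(q=((1-p)/p)^2\), and a short computation shows that the dual law is Binomial\((2,1-p)\), whose mean \(2(1-p)\) is less than 1. The helper names are ours.

```python
def binomial2_pgf(p):
    """pgf of a Binomial(2, p) offspring law: (1 - p + p s)^2."""
    return lambda s: (1.0 - p + p * s) ** 2


def dual_pgf(pgf, q):
    """Offspring pgf of the dual (subcritical) process: s -> f(q s) / q."""
    return lambda s: pgf(q * s) / q


def derivative(g, s, h=1e-6):
    """Central finite-difference approximation of g'(s)."""
    return (g(s + h) - g(s - h)) / (2 * h)


p = 0.6  # hypothetical supercritical law: mean 2p = 1.2
q = ((1 - p) / p) ** 2  # extinction probability of Binomial(2, p), p > 1/2
f_bar = binomial2_pgf(p)
f_tilde = dual_pgf(f_bar, q)
dual_mean = derivative(f_tilde, 1.0)  # equals f_bar'(q) = 2(1 - p) here
```

Since \(\widetilde{f}(1)=\overline{f}(q)/q=1\), the dual is a genuine offspring law, and `dual_mean` should be close to 0.8.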

In the following lemma, we derive an exponential bound on \(\sup _{n\ge 1}n_{}w^{\theta /n_{}}_{nt}(\sqrt{n} x)\).

Lemma 3.3

(i) For any \(\delta >0,\)

$$\begin{aligned} \sup _{x \ge \delta } \sup _{n\ge 1}nw_{nt}^{\theta /n_{}}(\sqrt{n}x) < \infty ; \end{aligned}$$

(ii) there exists \(\beta >0\) such that

$$\begin{aligned} \lim _{x \rightarrow \infty } \sup _{n\ge 1} e^{\beta x^2/t}\cdot nw_{nt}^{\theta /n_{}}(\sqrt{n}x) =0, \quad \text {for all } t>0. \end{aligned}$$

Proof

(i) We shall only prove the result when \(\theta \ge 0\). Recall that \(M= \sup _{n\ge 0} M_{n}\) stands for the maximal displacement over all generations. From Theorem 1 in [25], which applies to critical branching random walks, we have

Therefore, if we denote by B the event of extinction of the branching random walk X, then by the duality principle we get

Moreover, by (3.2), there exist positive constants \(c_1\) and \(c_2\) independent of n and x such that

and we get (i).

(ii) By Lemma 2.4(ii) and (2.7), we have

Because \(w^{\theta /n}_0(y) = 0\) for \(y\ge 1\), we have for all \(x >0\) and \(n\ge 1\),

Recall that the steps of \({\mathcal {W}}\) are bounded by R in absolute value. From the monotonicity of \(w^{\theta /n}_{nt}(x)\) in x and part (i), we get that there exists a positive constant C such that

where we used Lemma 3.1(i) in the last inequality. The conclusion follows. \(\square \)

The following lemmas are key ingredients in proving the tightness of \((n w^{\theta /n}_{nt}(\sqrt{n} x))\).

Lemma 3.4

For any \(T>0\), there exists \(C(T)>0\) such that for all \(x, y \in \mathbb {Z}/\sqrt{n}\) with \( |x-y| \le 1\) and \( t\in [0,T],\)

Proof

We only need to consider the case \(y>x\), because otherwise the left-hand side equals 0. We also only treat the case \(\theta \ge 0\); the case \(\theta <0\) can be proved similarly.

Note that

For \(I_{1}(n,y-x,t)\), we have

Because \(e^{x}\) is Lipschitz on bounded intervals, we get

For the term \(I_{2}(n,y-x)\), using Lemma 3.1(ii) we get

and the result follows. \(\square \)

Lemma 3.5

For any \(0<t_0<T<\infty \) and \(x_{0}>0\), there exist \(N_0>0\) and \(C=C(t_0,T,x_0)>0\) such that for all \(n \ge N_0\) and \(t\in [t_0,T]\) we have

Proof

Let \(x_{0}>0\), \(x_{0}< x< y <\infty \) and \(t\ge t_0\). It follows from Lemma 2.4(ii) with \(m=nt\) and (2.7) that

where in the second inequality we used the monotonicity of \(w^{\theta /n}_{nt}(x)\) in x.

We first handle \(I_{1}(n,x,y,t)\). By Lemma 3.4, for all \(0\le y-x\le 1\),

which, by Lemma 3.3(i), is bounded by \(C(y-x)/n\).

Next we bound \(I_{2}(n,x,y,t)\). Using the monotonicity of \(w^{\theta /n}_{nt}(x)\) in x again we have

where in the last inequality we used Lemma 3.1(ii) and the Optional Stopping Theorem. The conclusion follows from Lemma 3.3(i). \(\square \)

Remark 3.6

The bound (3.8) is one place where the argument must be modified if the random walk is only assumed to have a finite 5th moment. Specifically, when \(F_{RW}\) has unbounded support but a finite 5th moment, we can estimate the term \(I_{2}(n,x,y,t)\) as follows. By the triangle inequality, it suffices to prove the conclusion of the lemma for \(y=x+1/\sqrt{n}\), that is, to show that \(n I_{2}(n,x,y,t)=O(1/\sqrt{n})\). We have

where in the last inequality we used the following estimates.

(i) \(P\big (\tau _{1} > {\bar{\tau }}_{-k}\big ) = O(1/k)\) as \(k\rightarrow \infty \). To see this, note that by the Optional Stopping Theorem,

$$\begin{aligned} E({\mathcal {W}}_{{\bar{\tau }}_{1,-k}} \mathbb {1}_{\{\tau _{1} < {\bar{\tau }}_{-k}\}} ) = - E({\mathcal {W}}_{{\bar{\tau }}_{1,-k}} \mathbb {1}_{\{\tau _{1}> {\bar{\tau }}_{-k}\}} ) \ge k P\big (\tau _{1} > {\bar{\tau }}_{-k}\big ). \end{aligned}$$

Moreover, by Exercise 6, p. 232 of [35], \(E({\mathcal {W}}_{\tau _1})<\infty \) if \(F_{RW}\) has finite variance; hence \(P\big (\tau _{1} > {\bar{\tau }}_{-k}\big ) = O(1/k)\).

(ii) \(P\big ({\mathcal {W}}_{{\bar{\tau }}_{-k}} \le - \alpha k\big ) = O(1/k^{3})\) as \(k\rightarrow \infty \). This follows from Lemma 10 (and its proof) in [25], which shows that if \(F_{RW}\) has a finite 5th moment, then the limiting overshoot distribution has a finite 3rd moment.
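The \(O(1/k)\) rate in (i) can be seen in the simplest case. For a simple \(\pm 1\) random walk started at 0, reading \(\tau _1\) as the first time the walk reaches \([1,\infty )\) and \({\bar{\tau }}_{-k}\) as the first time it reaches \((-\infty ,-k]\) (our reading, since the definitions are elided above), the gambler's ruin formula gives \(P(\tau _1>{\bar{\tau }}_{-k})=1/(k+1)\) exactly. The sketch checks this by simulation; because the simple walk has no overshoot, it illustrates only the \(1/k\) rate, not the role of the moment assumptions. The function name is ours.

```python
import random


def prob_hit_minus_k_first(k, trials=20000, seed=0):
    """Monte Carlo estimate of P(simple +/-1 walk from 0 hits -k before +1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        pos = 0
        while -k < pos < 1:  # run until the walk exits the interval (-k, 1)
            pos += 1 if rng.random() < 0.5 else -1
        hits += pos == -k
    return hits / trials
```

For example, `prob_hit_minus_k_first(9)` should be close to 1/10.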

Lemma 3.7

For any \(0<t_0<T<\infty \) and \(x_{0}>0\), there exist \(\delta >0\), \(N_0>0\) and \(C(t_0,T,x_0)>0\) such that for all \(n>N_0\), \(t\in [t_0,T]\), \(nt \le m \le n (t+\delta )\) and \( x_{0}< x<\infty \), we have

Proof

From monotonicity it is enough to prove the lemma when \(m =[ n(t+\delta )] \). Let \( x_{0}< x<\infty \) and let \(\xi >0\) be a small number to be chosen later. From the bound (2.7) and Lemma 2.4(i) with \(y=x-\xi \), \(z=\infty \) and \(k=[nt]\) we get

Note that \( m-[nt] \le \delta n +1\). Using the monotonicity of \(w^{\theta /n}_{k}(x)\) in x we get that

By Lemma 3.5 and Lemma 3.3(i), if \(\xi \) and \(\delta \) are small enough then

As to \(J_{2}(m,n,x)\), using the monotonicity of \(w^{\theta /n}_{k}(x)\) in k and x, the finite range of \({\mathcal {W}}\) and noting that \(m-[nt] \le \delta n+1\), we have

By Lemma 3.1(i), there exist \(C_2>0\) and \( \beta >0\) such that for all \(x\ge x_0\),

By choosing \(\delta \) to be small enough and using Lemma 3.3(i) we get

The conclusion follows. \(\square \)

Corollary 3.8

For any \(\varepsilon >0\), \(0<t_0<T<\infty \) and \(x_{0}>0\), there exist \(\delta >0\) and \(N_0>0\) such that for all \(n>N_0\), \(t_0\le s, t\le T\) with \(|s-t|\le \delta \) and \( x, y \in [x_0,\infty )\) satisfying \(|x-y|\le \delta \), we have

Proposition 3.9

For any sequence \(\{n_{i}\}\) of positive integers increasing to infinity, there exists a subsequence \(\{n_{i_j}\}\) along which the functions \(\left( n_{i_j}w^{\theta /n_{i_j}}_{[n_{i_j} t]}\left( \sqrt{n_{i_j}} x \right) \right) _{t>0, x>0}\) converge. The convergence is uniform over any compact region inside \(\{(t,x): t>0, x>0\}\). Moreover, any subsequential limiting function \(\phi (t,x)\) is increasing in t, decreasing and Lipschitz in x with

Proof

The conclusions follow from Lemmas 3.3, 3.5, Corollary 3.8, Arzelà-Ascoli Theorem and a standard diagonal argument. \(\square \)

4 Scaling limit

In this section, we prove that the limiting function \(\phi (\cdot )\) from Proposition 3.9 satisfies a nonlinear PDE with an infinite boundary condition at \(x=0\). Then we prove uniqueness of solutions to the PDE.

The main difficulty with the analysis of the limiting function is that it satisfies a nonlinear parabolic PDE with singularity at the origin; see Corollary 4.2 and Lemma 4.3. In Proposition 4.1 and Lemma 4.3, we develop probabilistic arguments to characterize the limiting function. We further use PDE methods to prove uniqueness of the solution to Eq. (1.6) (Proposition 4.4) and to link the solution with the tail distribution of the support of super-Brownian motion (Theorem 1.1).

For any continuous process \(\{Y_{t}\}_{t\ge 0}\), define

The following proposition gives a Feynman–Kac representation of \(\phi (t,x)\).

Proposition 4.1

Let \((\phi (t,x))_{t>0, x>0}\) be any sub-sequential limiting function from Proposition 3.9. Then for any \(x_0>0\) and for all \(x> x_0, \, t>0,\) we have

where under \(P_{x}\), \(\{B_{t}\}_{t\ge 0}\) is a standard Brownian motion starting at \(x/\sigma _R\).

Proof

For any \(x>x_0\) and \(t>0\), by Lemma 2.4(ii), we have

Using (2.6) and the expansion \(\log (1-x) = -x+ O(x^{2})\) as \(x\rightarrow 0\), we get

By Lemma 3.3(i), the error term

Moreover, by Donsker’s invariance principle (see, e.g., Theorem 8.2 in [7]), under \(P_{\sqrt{n} x}\), \(\left( {{\mathcal {W}}_{nt}}/(\sqrt{n}\sigma _R)\right) _{t\ge 0}\) converges to a standard Brownian motion \((B_{t})_{t\ge 0}\) starting at \(x/\sigma _R\) as \(n \rightarrow \infty \). In addition, \(n^{-1}{\bar{\tau }}_{\sqrt{n}x_0}\) converges weakly to \({\bar{\tau }}^{\sigma _R B}_{ x_0}\); see, for example, Theorem 1 in [19] and Theorem 3 in [28]. Using the Skorokhod embedding theorem, the strong Markov property and the fact that a Brownian motion started at 0 almost surely takes both positive and negative values in every interval \([0,\delta ]\), \(\delta >0\), one can strengthen these two convergences to joint convergence. Therefore, by our assumption that \(nw_{[nt] }^{\theta /n}(\sqrt{n}x)\) converges to \(\phi (t,x)\), we get

Furthermore, from (1.4) and (2.4) we get \( \widetilde{\sigma }^2(\theta /n) \rightarrow \sigma ^2\). By the same reasoning as for (4.4) we get

Finally, we have

Plugging the above limits into (4.3) and using the bounded convergence theorem, we get the conclusion. \(\square \)
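The first-passage part of the convergence used in this proof can be checked numerically. The sketch below is ours, not the authors': it assumes that \({\bar{\tau }}_{y}\) denotes the first time the walk is at or below level y (definition (4.1) is not reproduced here) and that \(\sigma _R^2\) is the variance of one step, as in the Donsker scaling above. It computes exactly, by dynamic programming, the probability that a walk started \(\sqrt{n}(x-x_0)\) lattice units above the barrier reaches it within \([nt]\) steps, and compares this with the Brownian value \(\mathrm{erfc}\big((x-x_0)/(\sigma _R\sqrt{2t})\big)\) given by the reflection principle. The step law and parameter values are illustrative.

```python
import math

def hit_prob_within(steps, start, n_steps):
    """Exact probability that a walk started at integer `start` > 0, with step
    law `steps` (dict: increment -> probability), reaches {<= 0} within
    `n_steps` steps."""
    dist = {start: 1.0}   # sub-probability law of the position before absorption
    absorbed = 0.0
    for _ in range(n_steps):
        new = {}
        for pos, p in dist.items():
            for z, q in steps.items():
                y = pos + z
                if y <= 0:
                    absorbed += p * q          # the walk has crossed the barrier
                else:
                    new[y] = new.get(y, 0.0) + p * q
        dist = new
    return absorbed

def brownian_hit_prob(gap, sigma_r, t):
    """Reflection principle: probability that a Brownian motion with variance
    sigma_r**2 per unit time falls by `gap` before time t."""
    return math.erfc(gap / (sigma_r * math.sqrt(2.0 * t)))

if __name__ == "__main__":
    steps = {-1: 0.5, 1: 0.5}          # simple random walk, so sigma_R = 1 here
    n, x, x0, t = 400, 2.0, 1.0, 1.0
    start = round(math.sqrt(n) * (x - x0))   # barrier at sqrt(n)*x0, start at sqrt(n)*x
    p_walk = hit_prob_within(steps, start, int(n * t))
    p_bm = brownian_hit_prob(x - x0, 1.0, t)
    print(f"walk: {p_walk:.4f}  Brownian limit: {p_bm:.4f}")
```

Increasing n with x, x0 and t fixed should bring the two numbers closer, which is the one-dimensional content of the weak convergence of \(n^{-1}{\bar{\tau }}_{\sqrt{n}x_0}\).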

Corollary 4.2

Suppose that \((\phi (t,x))_{t>0, x>0}\) is a sub-sequential limiting function from Proposition 3.9. Then it satisfies the following PDE:

Proof

This follows from Kac’s theorem; see, for example, Section 2.6 in [18] or Theorem 4.1 in Section 3.4 of [33]. \(\square \)

In the following lemma, we derive the initial and boundary conditions of \(\phi \) from Proposition 3.9.

Lemma 4.3

(i) For any \(x>0\),

$$\begin{aligned} \lim _{t\downarrow 0} \phi (t,x) = 0. \end{aligned}$$

(ii) For any \(t>0\),

$$\begin{aligned} \lim _{x\downarrow 0} \phi (t,x) = \infty . \end{aligned}$$

(iii) For any \(T>0\),

$$\begin{aligned} \lim _{x\rightarrow \infty } \phi (t,x) = 0, \quad \text {uniformly on } [0,T]. \end{aligned}$$

Proof

(i) It is sufficient to show that for any \(x>0\) and \(\varepsilon >0\), there exists \(t_0>0\) such that

This follows from the bounds (3.4) and (3.5).

(ii) We need to prove that for any \(t>0\),

Note that for any \(x\le t\) we have

where we used Lemma 3.1(ii) in the last inequality. To bound the probability in the last term, note that by the weak convergence of X to \(\widetilde{X}\) we have

which goes to 1 as \(x\rightarrow 0\). Hence

The conclusion follows.

(iii) Part (iii) follows from Lemma 3.3(ii) and the monotonicity of \(\phi \) in t. \(\square \)

Next we prove uniqueness of positive solutions to (4.5) subject to the initial and boundary conditions of Lemma 4.3; this is the problem stated in (1.6).

Proposition 4.4

There exists at most one positive solution \(\phi \) to equation (1.6).

Proof

Without loss of generality, we set \(\sigma _R= \sigma =1\) in (1.6). Define

which satisfies

Suppose that (4.8) has two positive solutions \(u_1\) and \(u_2\) such that \(u_1(t_0,x_0)\ne u_2(t_0,x_0)\) for some \(x_0>1\) and \(t_0\in (0,T)\). Without loss of generality, suppose \(u_1(t_0,x_0)< u_2(t_0,x_0)\). Then by continuity, for \(c>1\) that is close enough to 1 we have

Define

Then \(v_2\) satisfies

Let

We have

By (4.9), \(\delta =\delta (c):= - \inf _{t,x} f(t,x;c) > 0.\) By (4.8) and (4.10), there exists \(M>0\) such that

and therefore

It follows that the infimum of \(f(\cdot ) = f(\cdot ;c)\) must be attained at some point \((t^*,x^*)=(t^*(c),x^*(c))\in (0,T]\times (1,M)\), and we have

However, by (4.8) and (4.10) we have

When \(\theta \ge 0,\) the last term is negative because \(0< u_1(t^*,x^*) < v_2(t^*,x^*)\). This contradicts (4.13), which proves the claim in this case.

Consider now the case when \(\theta <0\). Take any sequence \((c_n)\) such that (4.9) holds for all \(c_n\) and \(c_n\downarrow 1\). Note that by continuity we can choose M independent of \(c_n\) such that for all n large enough,

Consider the sequence of points \((t^*(c_n),x^*(c_n))\in (0,T]\times (1,M)\), and let \((t^o,x^o)\) be one of its limit points. Note that

It follows that \((t^o,x^o)\) also lies in \((0,T]\times (1,M)\), and by (4.14), when n is large enough,

and we again obtain a contradiction with (4.13). \(\square \)

We are now ready to prove Theorem 1.1.

Proof of Theorem 1.1

By Proposition 3.9, Corollary 4.2, Lemma 4.3 and Proposition 4.4, every subsequence of \((nw^{\theta /n}_{n t}(\sqrt{n}x))_{t>0,x>0}\) has a further subsequence converging to the same limit, so the whole sequence converges to \((\phi (t,x))_{t>0,x>0}\), the unique positive solution to (1.6). Recall that \(w^{\theta /n}_{\cdot }(\cdot )\) refers to the process started from a single particle, while \(u^{\theta /n}_{\cdot }(\cdot )\) refers to the process started from n particles. We have

Therefore,

To finish the proof of Theorem 1.1, we now analyze the exit probability of the limiting super-Brownian motion \(\widetilde{X}\) with drift \(\theta \), diffusion coefficient \(\sigma _R^{2}\) and branching coefficient \(\sigma ^2\).

For any \(r>0\), choose a sequence of functions \(\{\psi _{r,m}\}_{m =1}^{\infty } \subset C^{\infty }(\mathbb {R})\) satisfying the following:

and

Let \(v_{r,m}\) be the solution to

with the initial condition \(v_{r,m}(0,x) \equiv 0\). By the same argument as for the convergence (5) in [32], \(v_{r,m}\) is increasing in m, and we can define

Moreover, by repeating the argument for equation (6) in [32], with \(\delta _{x}\) as the initial measure, we have

We now analyze \(v_r(t,x)\). By (4.16), (4.17), (4.18) and the monotone convergence theorem, \(v_r\) is a weak solution to

We want to strengthen the conclusion to be that \(v_r\) is a classical solution to (4.19). To see this, recall that \(u_{r}\) is the minimal positive solution to (1.3). By Theorem A and Proposition A in [32], we have

and \(u_{r}\) satisfies the following: for every \(\varepsilon >0\), there exist \(c_{\varepsilon }>0\) and \(M_{\varepsilon }>0\) such that for all \( r>M_{\varepsilon }\), \(t\ge 0 \) and \( x\in [0,r),\)

Since \(v_r(t,x) \le u_r(t,x)\), the bound (4.21) and the regularity theory for weak solutions of parabolic PDEs (see Section 7.1.3 of [13]) show that \(v_r\) is a positive classical solution to (4.19).

It follows that \(\eta (t,x):=v_r(t,r-x), \ t>0, x>0\) solves (1.6). Since, by Proposition 4.4, the positive solution to (1.6) is unique, we have

Taking \(r=x\) and using (4.18) we get the desired conclusion. \(\square \)
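The step from one to n initial particles in this proof can be made explicit as follows. This is a sketch under our reading of the notation, since the corresponding displays are not shown here: we take \(w^{\theta /n}_{[nt]}(y)\) and \(u^{\theta /n}_{[nt]}(y)\) to be the probabilities that the branching random walk started from one, respectively n, particles at the origin reaches level y by generation \([nt]\). Independence of the n descendant families gives the first equality, and the expansion \(\log (1-x)=-x+O(x^2)\) as \(x\rightarrow 0\), together with \(nw^{\theta /n}_{[nt]}(\sqrt{n}x)\rightarrow \phi (t,x)\), gives the limit:

$$\begin{aligned} u^{\theta /n}_{[nt]}(\sqrt{n}x) &= 1-\big (1-w^{\theta /n}_{[nt]}(\sqrt{n}x)\big )^{n} = 1-\exp \Big (n\log \big (1-w^{\theta /n}_{[nt]}(\sqrt{n}x)\big )\Big ) \\ &= 1-\exp \Big (-nw^{\theta /n}_{[nt]}(\sqrt{n}x)+O\big (n\,(w^{\theta /n}_{[nt]}(\sqrt{n}x))^{2}\big )\Big ) \longrightarrow 1-e^{-\phi (t,x)}, \end{aligned}$$

where the error term vanishes because \(n\,(w^{\theta /n}_{[nt]}(\sqrt{n}x))^{2} = (nw^{\theta /n}_{[nt]}(\sqrt{n}x))^{2}/n\rightarrow 0\).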

Next, we prove Corollary 1.5.

Proof of Corollary 1.5

First note that the convergence in (1.8) has been derived in the proof of Theorem 1.1.

Next we prove Part (i). By Theorem 1.1 and (4.20) we have

The bound in (i) then follows from (4.21).

To prove Part (ii), note that \(w(t,x):= 2\theta /\sigma ^2 \cdot f_{\rho }((\rho \theta t - \sqrt{\theta } x/\sigma _R )_{+})\) satisfies

Note that \(\lim _{x \downarrow 0} w(t,x) <\infty \) for any \(t>0\).

We want to show that \(w(t,x) \le \phi (t,x)\) for all \(x>0\) and \(t\ge 0\). To do so, without loss of generality, we set \(\sigma _R= \sigma =1\) and define

The function \(h(\cdot ,\cdot )\) satisfies

Note that \((\phi +w)\ge 0\). By the same argument as in the proof of Proposition 4.4, we get that \(h(t,x)\ge 0\).

The conclusion follows. \(\square \)

5 Proof of Theorem 1.7

We first prove Part (i).

Proof of Theorem 1.7 (i)

Recall that \(\{L_k^{x}\}_{k \ge 0}\) stands for the local time process of X. By Corollary A.2.7 in [27], there exists \(C>0\) such that for all \(v>0\) and \(k\in \mathbb {N}\),

It follows that for every \(v>\sqrt{2\theta \sigma _{R}^{2}}\), there exists \(N_{0}=N_{0}(v,\sigma _{R},\theta )>0\) such that for all \( n>N_{0},\)

Because \((L_\cdot (\cdot ))\) is an integer-valued process, by the Borel-Cantelli lemma, we get that

Note that for all \(t>0\),

The conclusion follows. \(\square \)

Next we prove Part (ii). The proof uses ideas from the proof of Theorem 2.1 in [21]. Recall that \(X_k(x)\) is the number of particles at site x at generation k. For any \(\gamma \in \mathbb {R}\), define

and

Then \(\{W^{\gamma }_{k}\}_{k\ge 0}\) is a martingale; see Chapter VI.4 of [3] or Theorem 1 in [20]. Because \(\{W^{\gamma }_{k}\}_{k\ge 0}\) is nonnegative, the limit \(W^{\gamma } := \lim _{k\rightarrow \infty }W_{k}^{\gamma }\) exists almost surely, and by Fatou’s lemma, \(E_1^{\theta /n}(W^{\gamma })\le 1\). The following lemma characterizes when \(E_1^{\theta /n}(W^{\gamma })=1\) and provides the key ingredient in the proof of Part (ii) of Theorem 1.7.
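The definitions preceding this paragraph are not reproduced here. Assuming the standard additive-martingale normalization \(W^{\gamma }_k = m(\gamma )^{-k}\sum _x X_k(x)e^{\gamma x}\) with \(m(\gamma ) = (1+\theta /n)\sum _z a_z e^{\gamma z}\) (our notation, where \((a_z)\) is the step law), the identity \(E_1^{\theta /n}W^{\gamma }_k = 1\) reduces to the first-moment formula \(E_1^{\theta /n}X_k(x) = (1+\theta /n)^k a^{*k}(x)\). The sketch below computes the expected occupation numbers by repeated convolution and checks this normalization; the function names, step law and parameter values are hypothetical and only illustrate the computation.

```python
import math

def expected_counts(steps, mu, k):
    """E_1 X_k(x) for all x: mu**k times the k-fold convolution of the step law."""
    counts = {0: 1.0}                       # one particle at the origin at generation 0
    for _ in range(k):
        new = {}
        for x, c in counts.items():
            for z, q in steps.items():
                # each particle has mu offspring on average, each displaced by z w.p. q
                new[x + z] = new.get(x + z, 0.0) + mu * c * q
        counts = new
    return counts

def m(steps, mu, gamma):
    """Assumed normalizing function m(gamma) = mu * sum_z a_z e^{gamma z}."""
    return mu * sum(q * math.exp(gamma * z) for z, q in steps.items())

def expected_w(steps, mu, gamma, k):
    """E_1 W_k^gamma under the assumed normalization."""
    counts = expected_counts(steps, mu, k)
    total = sum(c * math.exp(gamma * x) for x, c in counts.items())
    return total / m(steps, mu, gamma) ** k

if __name__ == "__main__":
    n, theta, beta = 100, 1.5, 1.0
    steps = {-1: 2 / 3, 2: 1 / 3}           # illustrative mean-zero step law
    # equals 1 up to floating-point rounding
    print(expected_w(steps, 1 + theta / n, beta / math.sqrt(n), 25))
```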

Lemma 5.1

If \(|\beta | < \sqrt{{2\theta }/{\sigma ^2_R}}\), then for all sufficiently large n,

Proof

The proof is based on Lemma 5 in [6] (see also Theorem 3.2 in [34]). Given our assumptions on the step distribution and the branching law, Lemma 5 in [6] shows that it suffices to verify that if \(|\beta | < \sqrt{{2\theta }/{\sigma ^2_R}}\), then for all sufficiently large n,

Note that

By the Taylor expansion and using the fact that \(\sum _{z \in \mathbb {Z}} z a_z =0\) we get

and

It follows that

From (5.2), (5.4) and (5.5) we get

and we verify (5.3). \(\square \)
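The display (5.3) is not reproduced above. A standard form of Biggins' criterion in [6] asks that \(\gamma m'(\gamma )/m(\gamma ) < \log m(\gamma )\), here with \(m(\gamma ) = (1+\theta /n)\sum _z a_z e^{\gamma z}\). Assuming this form and taking \(\sigma _R^2\) to be the variance of one step, the Taylor expansions above with \(\gamma =\beta /\sqrt{n}\) give \(n\big (\log m(\gamma ) - \gamma m'(\gamma )/m(\gamma )\big ) \rightarrow \theta - \beta ^2\sigma _R^2/2\), which is positive exactly when \(|\beta | < \sqrt{2\theta /\sigma _R^2}\). The sketch evaluates this scaled gap for an illustrative step law; it illustrates the expansion and is not a reproduction of (5.3).

```python
import math

def scaled_gap(steps, theta, n, beta):
    """n * (log m(g) - g m'(g)/m(g)) at g = beta/sqrt(n), with the assumed
    m(g) = (1 + theta/n) * sum_z a_z e^{g z}."""
    g = beta / math.sqrt(n)
    lap = sum(q * math.exp(g * z) for z, q in steps.items())
    dlap = sum(q * z * math.exp(g * z) for z, q in steps.items())
    log_m = math.log1p(theta / n) + math.log(lap)
    # m'(g)/m(g) = dlap/lap: the factor 1 + theta/n cancels
    return n * (log_m - g * dlap / lap)

def limit_gap(steps, theta, beta):
    """Second-order Taylor prediction theta - beta**2 * sigma_R**2 / 2."""
    var = sum(q * z * z for z, q in steps.items())   # mean-zero steps assumed
    return theta - beta ** 2 * var / 2

if __name__ == "__main__":
    steps = {-1: 2 / 3, 2: 1 / 3}   # mean 0, variance 2: threshold sqrt(2*theta/2) = 1 for theta = 1
    for beta in (0.5, 0.9, 1.1):
        print(beta, scaled_gap(steps, 1.0, 10**6, beta), limit_gap(steps, 1.0, beta))
```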

We are now ready to prove Theorem 1.7 (ii).

Proof of Theorem 1.7 (ii)

Because the branching random walk is spatially homogeneous, the probability that the martingale limit \(W^\gamma \) is positive does not depend on the starting position. Hence, by standard Galton–Watson arguments similar to the proof of Lemma 2.2 in [34], for any \(\gamma \in \mathbb {R}\), \(P_1^{\theta /n}(W^{\gamma } > 0) \) is either 0 or equal to the survival probability of X. Therefore, by Lemma 5.1, if \(|\beta | < \sqrt{2\theta /\sigma ^2_R}\), then for all sufficiently large n,

Define

and

Now pick any \(0<\beta < \sqrt{2\theta /\sigma ^2_R}\), and let \(\varepsilon \in (0,\beta )\) be an arbitrarily small number. Note that

It follows from the Taylor expansion in the proof of Lemma 5.1 that for all n large enough,

Because \(\widetilde{W}_t^{-(\beta -\varepsilon )/\sqrt{n}}\) converges almost surely, the last term converges to 0 as \(t\rightarrow \infty \), and we obtain that

It follows from (5.7) and (5.6) that

Because \(\beta \) can be arbitrarily close to \(\sqrt{2\theta /\sigma ^2_R}\) and \(\varepsilon \) can be arbitrarily small, we get the desired conclusion. \(\square \)
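Parts (i) and (ii) identify \(\sqrt{2\theta \sigma _R^2}\) as the speed of the support on the diffusive scale. As a heuristic cross-check, not part of the proof, one can compare with the classical first-order speed of a fixed supercritical branching random walk from [5, 15, 20], \(v = \inf _{\gamma >0}\gamma ^{-1}\log m(\gamma )\) with \(m(\gamma ) = \mu \sum _z a_z e^{\gamma z}\). With \(\mu = 1+\theta /n\), one expects \(\sqrt{n}\,v_n \rightarrow \sqrt{2\theta \sigma _R^2}\). The sketch minimizes \(\gamma ^{-1}\log m(\gamma )\) by ternary search, which is valid because for \(\mu >1\) its derivative has the sign of \(\gamma \psi '(\gamma )-\psi (\gamma )\), \(\psi = \log m\), an increasing function. The step laws are illustrative, and \(\sigma _R^2\) is taken to be the step variance.

```python
import math

def speed(steps, mu, lo=1e-8, hi=20.0, iters=200):
    """inf over gamma > 0 of log(m(gamma))/gamma, m(gamma) = mu * sum_z a_z e^{gamma z}.
    Ternary search; assumes mu > 1 so the objective is unimodal on (lo, hi)."""
    def f(g):
        lap = sum(q * math.exp(g * z) for z, q in steps.items())
        return (math.log(mu) + math.log(lap)) / g
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) < f(m2):
            hi = m2          # the minimizer lies left of m2
        else:
            lo = m1          # the minimizer lies right of m1
    return f((lo + hi) / 2)

def rescaled_speed(steps, theta, n):
    """sqrt(n) times the speed of the walk with mean offspring 1 + theta/n."""
    return math.sqrt(n) * speed(steps, 1 + theta / n)

if __name__ == "__main__":
    theta, n = 1.0, 10**4
    for steps in ({-1: 0.5, 1: 0.5}, {-1: 2 / 3, 2: 1 / 3}):
        var = sum(q * z * z for z, q in steps.items())
        print(rescaled_speed(steps, theta, n), math.sqrt(2 * theta * var))
```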

6 Proof of Theorem 1.9

In this section, we study M, the maximum displacement throughout the whole process. Denote

In the following lemma, we show that for any \(\theta \in \mathbb {R}\) and \(x_0>0\), the sequence of functions \((n w_\infty ^{\theta /n}(\sqrt{n} x))_{x\ge x_0}\) converges uniformly as \(n\rightarrow \infty .\) Recall that the convergence of \(nw^{\theta /n}_{n t}(\sqrt{n}x)\) to \(\phi (t,x)\) was established in the proof of Theorem 1.1.

Lemma 6.1

For any \(\theta \in \mathbb {R}\) and \(x_0>0,\) \(\big (n w_\infty ^{\theta /n}(\sqrt{n} x)\big )_{x\ge x_0}\) uniformly converges to \(\big (\psi (x):=\lim _{t\rightarrow \infty } \phi (t,x)\big )_{x\ge x_0}.\)

Proof

By duality between the supercritical and subcritical branching processes (see the discussion before Lemma 3.3), it suffices to show the convergence when \(\theta \le 0.\) For any \(t>0\), we have

It follows that \(n w_\infty ^{\theta /n}(\sqrt{n} x)\) converges pointwise to \(\psi (x)\), and by Theorem 1.1 and Proposition 3.9, the limiting function \(\psi (\cdot )\) is finite and continuous. Moreover, by Dini’s theorem, the convergence \(\phi (t,x)\rightarrow \psi (x)\) is uniform over any compact interval inside \((0,\infty )\). By (6.1) again, \(n w_\infty ^{\theta /n}(\sqrt{n} x)\) converges to \(\psi (x)\) uniformly over any compact interval. Finally, since \(\theta \le 0\), we have \( n w_{\infty }^{\theta /n}(\sqrt{n} x)\le n w_{\infty }(\sqrt{n} x)\), which, by Theorem 1 in [25], is \( O(1/x^2)\), so the uniform convergence over \([x_0,\infty )\) follows. \(\square \)

In the following proposition, we describe the limiting function \(\psi (\cdot ).\)

For any \(\theta > 0\), define

By duality between the supercritical and subcritical branching processes, \((n\widetilde{w_\infty ^{\theta /n}}(\sqrt{n} x))\) converges to \((\widetilde{\psi }(x))\), which, by (3.2), satisfies the following relationship with \((\psi (x))\):

Recall that \({\bar{\tau }}^{Y}_{x}\) was defined in (4.1) for any continuous process \(\{Y_{t}\}_{t\ge 0}\) and \(x\in \mathbb {R}\). We further define for \(x<y \le \infty \),

Proposition 6.2

The limiting function \(\psi (\cdot )\) in Lemma 6.1 satisfies that

Proof

The fact that \(\psi (\cdot )\) satisfies the boundary conditions in (6.3) follows from (6.1), (6.2), Lemma 4.3 and Theorem 1 in [25]. It remains to show that \(\psi (\cdot )\) satisfies the ODE in (6.3). By (6.2) again, it suffices to show the case when \(\theta \le 0\).

We only sketch the proof, since it uses techniques similar to those used to prove the convergence of \((n w_{nt}^{\theta /n}(\sqrt{n} x))\). A simple modification of the proof of Lemma 4.5 in [29] yields the following discrete Feynman–Kac formula for \(w_\infty ^{\theta /n}(x)\): for all \(0<x_0< {x}<y\le \infty \),

It follows by a similar argument to the proofs of Proposition 4.1 and Corollary 4.2 that the limiting function \(\psi (\cdot )\) satisfies that for all \(0<x_0<y\le \infty \),

Based on this expression, we want to show that \(\psi (\cdot )\) satisfies

By Theorem 3.1 in Chapter 6 of [11], we only need to verify that \(\psi (\cdot )\) is Lipschitz. To do so, we take \(y=\infty \) in (6.4), which yields

By the strong Markov property, for any \(\delta \ge 0\) and \(x> x_0,\)

It follows that \(\psi (x)\) is decreasing in x, and we have

Therefore, \(\psi (\cdot )\) is Lipschitz and we complete the proof. \(\square \)

In the rest of this section we assume that \(\theta > 0\).

We are interested in the asymptotic behavior of \(\psi (x)\) as \(x\rightarrow \infty \). By (6.2), it suffices to study the asymptotic behavior of \(\widetilde{\psi }(x)\). For notational ease, denote

Then \(\widetilde{\psi }(\cdot )\) satisfies

In the following lemma, we establish uniqueness of solutions to (6.6). Note that uniqueness does not follow from Theorem 3.1 in Chapter 6 of [11], which applies to bounded domains with prescribed boundary values.

Lemma 6.3

For any \(a> 0\) and \( b >0\), there exists at most one solution to (6.6).

Proof

The proof is similar to that of Proposition 4.4. Suppose that there exist two solutions \(\widetilde{\psi }_i,\ i = 1, 2,\) to (6.6), and suppose that \(\widetilde{\psi }_1(x_0) < \widetilde{\psi }_2(x_0)\) for some \(x_0>0\). Take \(c>1\) close enough to 1 so that

Define \(\eta (x) = \widetilde{\psi }_2(c x + c-1)\) for all \(x>0\), which satisfies that

Let \(f(x) = \widetilde{\psi }_1(x) - \eta (x)\) for \(x>0\). Then \(f(\cdot )\) must attain its minimum at some point \(x^*\in (0,\infty )\), and we have

which is a contradiction. \(\square \)

We are now ready to prove Theorem 1.9.

Proof of Theorem 1.9

The convergence (1.11) and that \(\psi (\cdot )\) satisfies (1.13) follow from Lemma 6.1, Proposition 6.2 and Lemma 6.3. It remains to show (1.12) and (1.14). By (6.2), it suffices to study \(\widetilde{\psi }(\cdot ),\) which satisfies Eq. (6.6).

Note that (6.6) is a second-order autonomous ODE, whose positive solutions are given implicitly by

where \(C_1\) and \(C_2\) are constants. Letting \(x\rightarrow \infty \) and noting that \(\lim _{x \rightarrow \infty } \widetilde{\psi }(x) = 0\), we conclude that \(C_1=0.\) With \(C_1=0\), the left-hand side of (6.8) can be integrated explicitly, and we obtain

where for any \(s> 1\),

Letting \(x\rightarrow 0 +\) and noting that \(\lim _{x \rightarrow 0+} \widetilde{\psi }(x) = \infty \), we see that \(C_2=0\) and so

Therefore,

and so

Substituting the values of a and b from (6.5) yields

References

Addario-Berry, L., Reed, B.: Minima in branching random walks. Ann. Probab. 37(3), 1044–1079 (2009)

Aïdékon, E.: Convergence in law of the minimum of a branching random walk. Ann. Probab. 41(3A), 1362–1426 (2013)

Athreya, K.B., Ney, P.E.: Branching Processes. Springer, New York (1972)

Bachmann, M.: Limit theorems for the minimal position in a branching random walk with independent log concave displacements. Adv. Appl. Probab. 32(1), 159–176 (2000)

Biggins, J.D.: The first- and last-birth problems for a multitype age-dependent branching process. Adv. Appl. Probab. 8(3), 446–459 (1976)

Biggins, J.D.: Martingale convergence in the branching random walk. J. Appl. Probab. 14(1), 25–37 (1977)

Billingsley, P.: Convergence of Probability Measures, 2nd edn. Wiley, New York (1999)

Bramson, M.: Minimal displacement of branching random walk. Z. Wahrsch. Verw. Gebiete 45(2), 89–108 (1978)

Bramson, M., Ding, J., Zeitouni, O.: Convergence in law of the maximum of nonlattice branching random walk. Ann. Inst. Henri Poincaré Probab. Stat. 52(4), 1897–1924 (2016)

Bramson, M., Zeitouni, O.: Tightness for a family of recursion equations. Ann. Probab. 37(2), 615–653 (2009)

Dynkin, E.B.: Diffusions, Superdiffusions and Partial Differential Equations. American Mathematical Society Colloquium Publications, vol. 50. American Mathematical Society, Providence, RI (2002)

Etheridge, A.M.: An Introduction to Superprocesses. University Lecture Series, vol. 20. American Mathematical Society, Providence, RI (2000)

Evans, L.C.: Partial Differential Equations, 2nd edn. Graduate Studies in Mathematics, vol. 19. American Mathematical Society, Providence, RI (2010)

Fleischman, J., Sawyer, S.: Maximum geographic range of a mutant allele considered as a subtype of a Brownian branching random field. PNAS 76(2), 872–875 (1979)

Hammersley, J.M.: Postulates for subadditive processes. Ann. Probab. 2(4), 652–680 (1974)

Harris, J.W., Harris, S.C., Kyprianou, A.E.: Further probabilistic analysis of the Fisher–Kolmogorov–Petrovskii–Piscounov equation: one sided travelling-waves. Ann. Inst. Henri Poincaré Probab. Stat. 42(1), 125–145 (2006)

Hu, Y., Shi, Z.: Minimal position and critical martingale convergence in branching random walks, and directed polymers on disordered trees. Ann. Probab. 37(2), 742–789 (2009)

Itô, K., McKean, H.P.: Diffusion Processes and their Sample Paths. Second printing, corrected. Die Grundlehren der mathematischen Wissenschaften, Band 125. Springer, Berlin (1974)

Kennedy, D.P.: Estimates of the rates of convergence in limit theorems for the first passage times of random walks. Ann. Math. Stat. 43(6), 2090–2094 (1972)

Kingman, J.F.C.: The first birth problem for an age-dependent branching process. Ann. Probab. 3(5), 790–801 (1975)

Kyprianou, A.E.: Asymptotic radial speed of the support of supercritical branching Brownian motion and super-Brownian motion in \({ \mathbb{R}}^d\). Markov Process. Relat. Fields 11(1), 145–156 (2005)

Lalley, S.P.: Spatial epidemics: critical behavior in one dimension. Probab. Theory Relat. Fields 144(3–4), 429–469 (2009)

Lalley, S.P., Perkins, E.A., Zheng, X.: A phase transition for measure-valued SIR epidemic processes. Ann. Probab. 42(1), 237–310 (2014)

Lalley, S.P., Sellke, T.: A conditional limit theorem for the frontier of a branching Brownian motion. Ann. Probab. 15(3), 1052–1061 (1987)

Lalley, S.P., Shao, Y.: On the maximal displacement of critical branching random walk. Probab. Theory Relat. Fields 162(1–2), 71–96 (2015)

Lalley, S.P., Zheng, X.: Spatial epidemics and local times for critical branching random walks in dimensions 2 and 3. Probab. Theory Relat. Fields 148(3–4), 527–566 (2010)

Lawler, G.F., Limic, V.: Random Walk: A Modern Introduction. Cambridge University Press, Cambridge (2010)

Mirakhmedov, S.A.: Estimates of speed of convergence in limit theorems for first passage times. Math. Notes Acad. Sci. USSR 23(3), 263–268 (1978)

Neuman, E., Zheng, X.: On the maximal displacement of subcritical branching random walks. Probab. Theory Relat. Fields 167(3–4), 1137–1164 (2017)

Perkins, E.: Dawson–Watanabe superprocesses and measure-valued diffusions. In: Lectures on probability theory and statistics (Saint-Flour, 1999), volume 1781 of Lecture Notes in Math., pp. 125–324. Springer, Berlin (2002)

Pinsky, R.G.: K-p-p-type asymptotics for nonlinear diffusion in a large ball with infinite boundary data and on \(\mathbb{R}^d\) with infinite initial data outside a large ball. Commun. Partial Differ. Equ. 20(7–8), 1369–1393 (1995)

Pinsky, R.G.: On the large time growth rate of the support of supercritical super-Brownian motion. Ann. Probab. 23(4), 1748–1754 (1995)

Pinsky, R.G.: Positive Harmonic Functions and Diffusion. Cambridge University Press, Cambridge (1995)

Shi, Z.: Branching random walks, volume 2151 of Lecture Notes in Mathematics. Springer, Cham (2015). Lecture notes from the 42nd Probability Summer School held in Saint Flour, 2012, École d’Été de Probabilités de Saint-Flour [Saint-Flour Probability Summer School]

Spitzer, F.: Principles of Random Walk. Graduate Texts in Mathematics, vol. 34. Springer, New York (1976)

Acknowledgements

We are very grateful to an anonymous referee for careful reading of the manuscript, and for a number of useful comments and suggestions that significantly improved this paper.


Ethics declarations

Availability of data and material

Not applicable.

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Code availability

Not applicable.

Additional information


Research partially supported by GRF 606010 and 607013 of the HKSAR and the HKUST IAS Postdoctoral Fellowship.

Eyal Neuman would like to thank HKUST where part of the research was carried out.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Neuman, E., Zheng, X. On the maximal displacement of near-critical branching random walks. Probab. Theory Relat. Fields 180, 199–232 (2021). https://doi.org/10.1007/s00440-021-01042-8


DOI: https://doi.org/10.1007/s00440-021-01042-8