Abstract

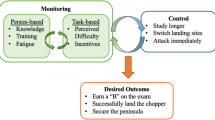

Cognitive and metacognitive processes during learning depend on accurate monitoring. This investigation examines the influence of immediate item-level knowledge-of-correct-response feedback on cognitive monitoring accuracy. In an optional end-of-course computer-based review lesson, participants (n = 68) were randomly assigned to receive either immediate item-by-item feedback (IF) or no immediate feedback (NF). Item-by-item monitoring consisted of confidence self-reports. Two days later, participants completed a retention test, on which the two groups did not differ significantly (IF = NF). Monitoring accuracy during the review lesson was low and, contrary to expectations, was significantly lower with immediate feedback (IF < NF, Cohen’s d = .62). Descriptive data show that (1) monitoring accuracy can be attributed to cues beyond actual item difficulty; (2) a hard-easy effect was observed, in which confidence judgements were related to item difficulty by a non-monotonic function; (3) response confidence was predicted by the Coh-Metrix dimension Word Concreteness in both the IF and NF treatments; and (4) significant autocorrelations (hysteresis) in confidence measures were observed for NF but not for IF. It seems likely that monitoring is based on multiple and sometimes competing cues, the salience of each relating to some degree to content difficulty, but that the stability of individual response styles plays a substantive role in monitoring. This investigation shows the need for new applications of technology that monitor multiple measures on the fly, in order to better understand self-regulated learning (SRL) processes and to support all learners.
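The abstract refers to several item-level indices: monitoring accuracy, an effect size for the IF versus NF difference, the hard-easy pattern in confidence, and autocorrelation (hysteresis) of confidence ratings. The sketch below shows one common way to compute each. It assumes confidence is recorded on a 0 to 1 scale and correctness as 0/1 per item; the function names are hypothetical, and the particular indices chosen here (calibration bias, mean squared absolute accuracy, pooled-SD Cohen's d, lag-1 autocorrelation) are illustrative choices, not necessarily the computations used in the article.

```python
import math
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    var_a, var_b = stdev(group_a) ** 2, stdev(group_b) ** 2
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (mean(group_a) - mean(group_b)) / pooled_sd


def bias(confidence, correct):
    """Calibration bias: mean confidence minus proportion correct.
    Positive values indicate overconfidence, negative values underconfidence."""
    return mean(confidence) - mean(correct)


def absolute_accuracy(confidence, correct):
    """Mean squared deviation between item confidence and 0/1 correctness.
    0 means perfect monitoring; larger values mean poorer monitoring."""
    return mean((c - k) ** 2 for c, k in zip(confidence, correct))


def item_bias(confidence_matrix, correct_matrix):
    """Per-item (proportion correct, bias) pairs.
    Rows are learners, columns are items (hypothetical data layout)."""
    pairs = []
    for j in range(len(confidence_matrix[0])):
        conf_j = [row[j] for row in confidence_matrix]
        corr_j = [row[j] for row in correct_matrix]
        pairs.append((mean(corr_j), mean(conf_j) - mean(corr_j)))
    return pairs


def lag1_autocorrelation(series):
    """Lag-1 autocorrelation of one learner's confidence sequence.
    A positive value means each rating tends to resemble the previous one,
    which is one simple way to index hysteresis in responding."""
    m = mean(series)
    num = sum((series[t] - m) * (series[t - 1] - m) for t in range(1, len(series)))
    den = sum((x - m) ** 2 for x in series)
    return num / den
```

For example, `bias([0.9, 0.8, 0.9, 0.6, 0.7], [1, 0, 1, 0, 1])` is about 0.18 (overconfidence). Under a hard-easy effect, `item_bias` would tend to return positive bias for items with a low proportion correct and negative bias for items with a high proportion correct.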




Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest. This research was conducted without external funding.

Ethical approval

All participation was voluntary and anonymous, and the study was conducted under an approved institutional IRB protocol.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Clariana, R.B., Park, E. Item-level monitoring, response style stability, and the hard-easy effect. Education Tech Research Dev 69, 693–710 (2021). https://doi.org/10.1007/s11423-021-09981-8


DOI: https://doi.org/10.1007/s11423-021-09981-8