Abstract

Population protocols (Angluin et al. in PODC, 2004) are a model of distributed computation in which indistinguishable, finite-state agents interact in pairs to decide if their initial configuration, i.e., the initial number of agents in each state, satisfies a given property. In a seminal paper Angluin et al. classified population protocols according to their communication mechanism, and conducted an exhaustive study of the expressive power of each class, that is, of the properties they can decide (Angluin et al. in Distrib Comput 20(4):279–304, 2007). In this paper we study the correctness problem for population protocols, i.e., whether a given protocol decides a given property. A previous paper (Esparza et al. in Acta Inform 54(2):191–215, 2017) has shown that the problem is decidable for the main population protocol model, but at least as hard as the reachability problem for Petri nets, which has recently been proved to have non-elementary complexity. Motivated by this result, we study the computational complexity of the correctness problem for all other classes introduced by Angluin et al., some of which are less powerful than the main model. Our main results show that for the class of observation models the complexity of the problem is much lower, ranging from \(\varPi _2^p\) to PSPACE.

1 Introduction

Population protocols are a theoretical model for the study of ad hoc networks of tiny computing devices without any infrastructure [5, 6]. The model postulates a “soup” of indistinguishable, finite-state agents that behave identically. Agents repeatedly interact in pairs, changing their states according to a joint transition function. A global fairness condition ensures that every global configuration that is reachable infinitely often is also reached infinitely often. The purpose of a population protocol is to allow agents to collectively compute some information about their initial configuration, defined as the function that assigns to each local state the number of agents that initially occupy it. For example, assume that initially each agent picks a boolean value by choosing, say, \(q_0\) or \(q_1\) as its initial state. The (many) majority protocols described in the literature allow the agents to eventually reach a stable consensus on the value chosen by a majority of the agents. More formally, let \(x_0\) and \(x_1\) denote the initial numbers of agents in states \(q_0\) and \(q_1\); majority protocols compute the predicate \(\varphi (x_0, x_1) :\mathbb {N}\times \mathbb {N}\rightarrow \{0, 1\}\) given by \(\varphi (x_0, x_1) = (x_1 \ge x_0)\). Throughout the paper, we use the term “predicate” as an abbreviation for “function from \(\mathbb {N}^k\) to \(\{0,1\}\) for some k”.

The expressive power of population protocols (that is, which predicates they can compute), and their efficiency (how fast they can compute them) have been both extensively studied (see e.g. [2,3,4, 28]). In a seminal paper [7], Angluin et al. showed that population protocols can compute exactly the predicates definable in Presburger arithmetic. In the same publication, they observed that while the two-way communication discipline of the standard population protocol model is adequate for natural computing applications, where agents represent molecules or cells that communicate by means of physical encounters, it is less so when agents represent electronic devices, where communication usually takes place by asynchronous message-passing, and information flows only from the sender to the receiver. For this reason, they also conducted a thorough investigation of the expressive power of the population protocol model when two-way communication is replaced by one-way communication. They classified one-way communication models into transmission models, where the sender is allowed to change its state as a result of sending a message, and observation models, where it is not. Intuitively, in observation models the receiver observes the state of the sender, who may not even be aware that it is being observed. Further, they distinguished between immediate delivery models, where a send event and its corresponding receive event occur simultaneously, delayed delivery models, where delivery may take time, but receivers are always willing to receive any message, and queued delivery models, where delivery may take time, and receivers may choose to postpone incoming messages until they have sent a message themselves. This results in five one-way models: immediate and delayed observation, immediate and delayed transmission, and queued transmission. Angluin et al. showed that no one-way model is more expressive than the two-way model, and some of them are strictly less expressive. 
In fact, they characterized the expressive power of each model in terms of natural classes of Presburger predicates.

In this paper we investigate the correctness problem for population protocols, that is, the problem of deciding if a given protocol computes a given Presburger predicate. For each possible input, deciding if the protocol reaches a consensus only requires inspecting the finite transition system generated by that input, and can be done automatically using a model checker. This approach has been followed in [19, 21, 45, 49], but it only proves the correctness of a protocol for a finite number of (typically small) inputs. The question whether the protocol reaches the right consensus for all inputs remained open until 2015, when Esparza et al. showed that the problem is decidable [32]. However, in the same paper they proved that the correctness problem is at least as hard as the reachability problem for Petri nets. This problem, which was known to be EXPSPACE-hard since the 1970s [42], has recently been shown to be TOWER-hard [24]; in particular, it is non-elementary, that is, it cannot be solved in k-EXPTIME for any fixed \(k \ge 0\). Motivated by this high complexity of the two-way model, we examine the complexity of the problem for the one-way models studied in [7]. We show that, very satisfactorily, for observation models the complexity decreases dramatically. In our two main positive results, we prove that correctness is \(\varPi _2^p\)-complete for the delayed observation model, and PSPACE-complete for the immediate observation model, when predicates are specified as quantifier-free formulas of Presburger arithmetic. Surprisingly, we show that this is also the complexity of checking that the protocol is correct for one single given input. So, loosely speaking, in observation models checking correctness for one input and for all infinitely many possible inputs has the same complexity.

In the second part of the paper we present negative results on the transmission models: In all of them, correctness is at least as hard as the reachability problem for Petri nets, and thus TOWER-hard. Further, for the delayed delivery and queued delivery models the single input case is already TOWER-hard, while for the immediate transmission model the single-input problem is PSPACE-complete. On the positive side, we show that the decidability proof of [32] can be easily extended to the immediate and delayed transmission models, but not to the queued transmission model. In fact, for the queued transmission model we leave the decidability of the correctness problem as an open question. However, we also show that this question is less relevant for queued models than for the others. Indeed, in this model the fairness condition of [7] bears no immediate relation to the probabilistic interpretation of population protocols used in the literature in order to study their efficiency. Table 1 summarizes the results and shows their places in the paper.

The paper is organized as follows. Section 2 recalls the protocol models introduced in [7]. Section 3 presents our lower bounds for observation models. Sections 4, 5 and 6, the most involved part of the paper, prove the results leading to the upper bounds for observation models. Section 7 contains the decidability and TOWER-hardness results for transmission-based models. Section 8 gives a brief overview of the most closely related models and approaches that we are aware of.

Previous versions of some of the results of this paper were published in [33] and [34].

2 Protocol models

After some preliminaries (Sect. 2.1), we recall the definitions of the models introduced by Angluin et al. in [7] (Sects. 2.2 to 2.4), formalize the correctness problem (Sect. 2.5), and rephrase it in two different ways as a reachability problem (Sect. 2.6).

2.1 Multisets and populations

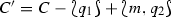

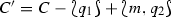

A multiset on a finite set \(E\) is a mapping \(C :E \rightarrow \mathbb {N}\), i.e. \(C(e)\) denotes the number of occurrences of an element \(e \in E\) in \(C\). Operations on \(\mathbb {N}\) are extended to multisets by defining them componentwise on each element of \(E\). We define in this way the sum \(C_1 + C_2\), comparison \(C_1 \le C_2\), or maximum \(\max \{C_1, C_2\}\) of two multisets \(C_1, C_2\). Subtraction, denoted \(C_1 - C_2\), is allowed only if \(C_1 \ge C_2\). We let \(|C|{\mathop {=}\limits ^{\text {def}}}\sum _{e\in E} C(e)\) denote the total number of occurrences of elements in C, also called the size of C. We sometimes write multisets using set-like notation. For example, both  and

and  denote the multiset C such that \(C(a) = 1\), \(C(b) = 2\) and \(C(e) = 0\) for every \(e \in E \setminus \{a, b\}\). Sometimes we use yet another representation, by assuming a total order \(e_1 \prec e_2 \prec \cdots \prec e_n\) on E, and representing a multiset C by the vector \((C(e_1), \ldots , C(e_n))\in \mathbb {N}^n\).

denote the multiset C such that \(C(a) = 1\), \(C(b) = 2\) and \(C(e) = 0\) for every \(e \in E \setminus \{a, b\}\). Sometimes we use yet another representation, by assuming a total order \(e_1 \prec e_2 \prec \cdots \prec e_n\) on E, and representing a multiset C by the vector \((C(e_1), \ldots , C(e_n))\in \mathbb {N}^n\).
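The multiset operations above can be sketched in a few lines of Python. This is only an illustration: the helper names (`msum`, `leq`, `mmax`, `msub`, `size`, `as_vector`) are hypothetical, and representing multisets by `collections.Counter` is an assumption of the sketch, not part of the formal development.

```python
from collections import Counter

def msum(c1, c2):
    """Componentwise sum C1 + C2."""
    return Counter({e: c1[e] + c2[e] for e in set(c1) | set(c2)})

def leq(c1, c2):
    """Componentwise comparison C1 <= C2 (missing elements count as 0)."""
    return all(c1[e] <= c2[e] for e in c1)

def mmax(c1, c2):
    """Componentwise maximum max{C1, C2}."""
    return Counter({e: max(c1[e], c2[e]) for e in set(c1) | set(c2)})

def msub(c1, c2):
    """Subtraction C1 - C2, allowed only if C1 >= C2."""
    if not leq(c2, c1):
        raise ValueError("subtraction requires C1 >= C2")
    # Drop elements whose count becomes 0 so that equal multisets compare equal.
    return Counter({e: c1[e] - c2[e] for e in c1 if c1[e] - c2[e] > 0})

def size(c):
    """|C|: total number of occurrences of elements in C."""
    return sum(c.values())

def as_vector(c, order):
    """Vector representation with respect to a total order e_1 < ... < e_n."""
    return tuple(c[e] for e in order)

# The multiset with C(a) = 1 and C(b) = 2, written in two equivalent ways.
C = Counter({"a": 1, "b": 2})
C_listed = Counter(["a", "b", "b"])
```

With the order a, b, c on E, `as_vector(C, ["a", "b", "c"])` yields the vector representation of C used later in the text.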

A population P is a multiset on a finite set E with at least two elements, i.e. \(|P| \ge 2\). The set of all populations on E is denoted \(\text {Pop}(E)\).

2.2 A unified model

We recall the unified framework for protocols introduced by Angluin et al. in [7], which allows us to give a generic definition of the predicate computed by a protocol.

Definition 2.1

A generalized protocol is a quintuple \(\mathscr {P}= (\textit{Conf}, \varSigma , Step , I, O)\) where

- \(\textit{Conf}\) is a countable set of configurations.
- \(\varSigma \) is a finite alphabet of input symbols. The elements of \(\text {Pop}(\varSigma )\) are called inputs.
- \( Step \subseteq \textit{Conf}\times \textit{Conf}\) is a reflexive step relation, capturing when a first configuration can reach another one in one step.
- \(I :\text {Pop}(\varSigma ) \rightarrow \textit{Conf}\) is an input function that assigns to every input an initial configuration.
- \(O :\textit{Conf}\rightarrow \{0,1\}\) is a partial output function that assigns an output to each configuration on which it is defined.

We write \(C \xrightarrow {} C'\) and \(C \xrightarrow {*} C'\) to denote \((C, C') \in Step \) and \((C, C') \in Step ^*\), respectively. We say \(C'\) is reachable from C if \(C \xrightarrow {*} C'\). An execution of \(\mathscr {P}\) is a (finite or infinite) sequence of configurations \(C_0, C_1, \ldots \) such that \(C_j \xrightarrow {} C_{j+1}\) for every \(j \ge 0\). Observe that, since we assume that the step relation is reflexive, all maximal executions (i.e., all executions that cannot be extended) are infinite.

An execution \(C_0, C_1, \ldots \) is fair if for every \(C \in \textit{Conf}\) the following property holds: If there exist infinitely many indices \(i \ge 0\) such that \(C_i \xrightarrow {*} C\), then there exist infinitely many indices \(j \ge 0\) such that \(C_j = C\). In words, in fair sequences every configuration which can be reached infinitely often is reached infinitely often.

A fair execution \(C_0, C_1, \ldots \) converges to \(b \in \{0,1\}\) if there exists an index \(m \ge 0\) such that for all \(j \ge m\) the output function is defined on \(C_j\) and \(O(C_j) =b\). A protocol outputs \(b \in \{0,1\}\) for input \(a \in \text {Pop}(\varSigma )\) if every fair execution starting at I(a) converges to b. A protocol computes a predicate \(\varphi :\text {Pop}(\varSigma ) \rightarrow \{0,1\}\) if it outputs \(\varphi (a)\) for every input \(a \in \text {Pop}(\varSigma )\).

The correctness problem for a class of protocols consists of deciding for a given protocol \(\mathscr {P}\) in the class, and a given predicate \(\varphi :\text {Pop}(\varSigma ) \rightarrow \{0,1\}\), where \(\varSigma \) is the alphabet of \(\mathscr {P}\), whether \(\mathscr {P}\) computes \(\varphi \). The goal of this paper is to determine the decidability and complexity of the correctness problem for the classes of protocols introduced by Angluin et al. in [7].

In the rest of the section we formally define the six protocol classes studied by Angluin et al., and summarize the results of [7] that characterize the predicates they can compute. Angluin et al. distinguish between models in which agents interact directly with each other, with zero-delay, and models in which agents interact through messages with possibly non-zero transit time. We describe them in Sects. 2.3 and 2.4, respectively.

2.3 Immediate delivery models

In immediate interaction models, a configuration only needs to specify the current state of each agent. In delayed models, the configuration must also specify which messages are in transit. Angluin et al. study three immediate delivery models.

Standard Population Protocols (PP). Population protocols describe the evolution of a population of finite-state agents. Agents are indistinguishable, and interaction is two-way. When two agents meet, they exchange full information about their current states, and update their states in reaction to this information.

Definition 2.2

A standard population protocol is a quintuple \(\mathscr {P}=(Q,\delta ,\varSigma , \iota , o)\) where Q is a finite set of states, \(\delta :Q^2\rightarrow Q^2\) is the transition function, \(\varSigma \) is a finite set of input symbols, \(\iota :\varSigma \rightarrow Q\) is the initial state mapping, and \(o :Q\rightarrow \{0,1\}\) is the state output mapping.

Observe that \(\delta \) is a total function, and so we assume that every pair of agents can interact, although the result of the interaction can be that the agents do not change their states. Every standard population protocol determines a protocol in the sense of Definition 2.1 as follows, where \(C, C' \in \text {Pop}(Q)\), \(D \in \text {Pop}(\varSigma )\), and \(b \in \{0,1\}\):

-

the configurations are the populations over Q, that is, \(\textit{Conf}= \text {Pop}(Q)\);

-

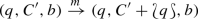

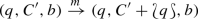

\((C,C') \in Step \) if there exist states \(q_1,q_2,q_3,q_4 \in Q\) such that \(\delta (q_1,q_2)= (q_3,q_4)\),

, and

, and  . The inequality cannot be omitted because some of the states can coincide.

. The inequality cannot be omitted because some of the states can coincide. -

\(I(D)=\sum _{\sigma \in \varSigma } D (\sigma )\iota (\sigma )\); in other words, if the input D contains k copies of \(\sigma \in \varSigma \), then the configuration I(D) places k agents in the state \(\iota (\sigma )\);

-

\(O(C)=b\) if \(o(q)=b\) for all \(q \in Q\) such that \(C(q)>0\); in other words, \(O(C)=b\) if in the configuration C all agents are in states with output b. We often call a configuration C satisfying this property a b-consensus.
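The following Python sketch illustrates the step relation and the output function of a standard population protocol. It assumes the usual reading of a step (two agents in states \(q_1, q_2\) are replaced by agents in states \(q_3, q_4\)), represents configurations as count vectors over a fixed order of states, and adds the configuration itself as a successor to reflect the reflexivity required by Definition 2.1. The names `pp_successors`, `output`, and the two-state toy protocol are hypothetical and used only for illustration.

```python
from itertools import product

def pp_successors(config, states, delta):
    """All C' with (C, C') in Step.

    config: tuple of agent counts, indexed like `states`
    delta:  dict (q1, q2) -> (q3, q4), assumed total on states x states
    """
    idx = {q: i for i, q in enumerate(states)}
    succ = {config}  # assumption of this sketch: Step is reflexive
    for q1, q2 in product(states, repeat=2):
        need = [0] * len(states)
        need[idx[q1]] += 1
        need[idx[q2]] += 1
        # The inequality check: if q1 == q2, two agents in that state are needed.
        if any(config[i] < need[i] for i in range(len(states))):
            continue
        q3, q4 = delta[(q1, q2)]
        new = list(config)
        new[idx[q1]] -= 1
        new[idx[q2]] -= 1
        new[idx[q3]] += 1
        new[idx[q4]] += 1
        succ.add(tuple(new))
    return succ

def output(config, states, o):
    """O(C): the common output of all populated states, or None if undefined."""
    outs = {o[q] for q, n in zip(states, config) if n > 0}
    return outs.pop() if len(outs) == 1 else None

# Hypothetical toy protocol: an agent in N moves to Y after meeting an agent in Y.
STATES = ["Y", "N"]
DELTA = {(p, q): (p, q) for p, q in product(STATES, repeat=2)}
DELTA[("Y", "N")] = ("Y", "Y")
OUT = {"Y": 1, "N": 0}
```

In this toy protocol the configuration with one agent in Y and two agents in N has exactly one successor other than itself, and it is not a consensus because both outputs are present.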

The two other models with immediate delivery are one-way. They are defined as subclasses of the standard population protocol model.

Immediate Transmission Protocols (IT). In these protocols, at each step an agent (the sender) sends its state to another agent (the receiver). Communication is immediate, that is, sending and receiving happen in one atomic step. The new state of the receiver depends on both its old state and the old state of the sender, but the new state of the sender depends only on its own old state, and not on the old state of the receiver. Formally:

Definition 2.3

A standard population protocol \(\mathscr {P}=(Q,\delta , \varSigma , \iota , o)\) is an immediate transmission protocol if there exist two functions \(\delta _1: Q\rightarrow {}Q,\ \delta _2 :Q^2 \rightarrow Q\) satisfying \(\delta (q_1,q_2)=(\delta _1(q_1), \delta _2(q_1,q_2))\) for every \(q_1,q_2\in Q\).

Immediate Observation Protocols (IO). In these protocols, the state of a first agent can be observed by a second agent, which updates its state using this information. Unlike in the immediate transmission model, the first agent does not update its state (intuitively, it may not even know that it has been observed). Formally:

Definition 2.4

A standard population protocol \(\mathscr {P}=(Q,\delta ,\varSigma , \iota , o)\) is an immediate observation protocol if there exists a function \(\delta _2 :Q^2 \rightarrow Q\) satisfying \(\delta (q_1,q_2)=(q_1, \delta _2(q_1,q_2))\) for every \(q_1,q_2\in Q\).

Notation. We sometimes write \(q_1,q_2 \rightarrow q_3,q_4\) for \(\delta (q_1,q_2)=(q_3, q_4)\). In the case of IO protocols we sometimes write \(q_2 \xrightarrow {q_1} q_4\) for \(\delta (q_1,q_2)=(q_1, q_4)\), and say that the agent moves from \(q_2\) to \(q_4\) by observing \(q_1\).
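Definitions 2.3 and 2.4 are syntactic conditions on \(\delta \), so they can be checked directly. The sketch below assumes that \(\delta \) is given as a total dictionary over pairs of states; the function names and the three toy transition functions are hypothetical. It uses the observation that suitable \(\delta _1, \delta _2\) exist iff the first component of \(\delta (q_1,q_2)\) does not depend on \(q_2\).

```python
from itertools import product

def is_immediate_transmission(states, delta):
    """True iff delta(q1, q2) = (delta1(q1), delta2(q1, q2)) for some delta1, delta2.

    delta2 can always be read off the second component, so it suffices that
    the sender's new state is independent of the receiver's old state q2.
    """
    for q1 in states:
        sender_results = {delta[(q1, q2)][0] for q2 in states}
        if len(sender_results) != 1:
            return False
    return True

def is_immediate_observation(states, delta):
    """True iff the observed agent never changes state: delta(q1, q2) = (q1, ...)."""
    return all(delta[(q1, q2)][0] == q1 for q1, q2 in product(states, repeat=2))

STATES = ["Y", "N"]

# Hypothetical examples.
# EPIDEMIC: an agent in N moves to Y by observing Y (written N --Y--> Y).
EPIDEMIC = {(p, q): (p, q) for p, q in product(STATES, repeat=2)}
EPIDEMIC[("Y", "N")] = ("Y", "Y")
# RESET: the sender always moves to N, the receiver keeps its state.
RESET = {(p, q): ("N", q) for p, q in product(STATES, repeat=2)}
# SWAP: both agents exchange states, which needs two-way communication.
SWAP = {(p, q): (q, p) for p, q in product(STATES, repeat=2)}
```

Every IO protocol passes the IT check as well, since the identity is a valid choice for \(\delta _1\).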

2.4 Delayed delivery models

In delayed delivery models agents communicate by sending and receiving messages. The set of messages that can be sent (and received) is finite. Messages are sent to and received from one single pool of messages; in particular, the sender does not choose the recipient of the message. The pool can contain an unbounded number of copies of a message. Agents update their state after sending or receiving a message. Angluin et al. define the following three delayed delivery models.

Queued Transmission Protocols (QT). The set of messages an agent is willing to receive depends on its current state. In particular, in some states the agent may not be willing to receive any message.

Definition 2.5

A queued transmission protocol is a septuple \(\mathscr {P}=(Q,M,\delta _s,\delta _r,\varSigma , \iota , o)\) where Q is a finite set of states, M is a finite set of messages, \(\delta _s :Q\rightharpoonup M\times Q\) is the partial send function, \(\delta _r :Q\times M \rightharpoonup Q\) is the partial receive function, \(\varSigma \) is a finite set of input symbols, \(\iota :\varSigma \rightarrow Q\) is the initial state mapping, and \(o :Q\rightarrow \{0,1\}\) is the state output mapping.

Every queued transmission protocol determines a protocol in the sense of Definition 2.1 as follows, where \(C, C' \in \text {Pop}(Q \cup M)\), \(D \in \text {Pop}(\varSigma )\), and \(b \in \{0,1\}\):

- the configurations are the populations over \(Q\cup M\), that is, \(\textit{Conf}= \text {Pop}(Q\cup M)\);
- \((C, C') \in Step \) if there exist states \(q_1,q_2\) and a message m such that
  - \(\delta _s(q_1)= (m,q_2)\), \(C \ge \{\!\{q_1\}\!\}\), and \(C' = C - \{\!\{q_1\}\!\} + \{\!\{q_2, m\}\!\}\); or
  - \(\delta _r(q_1,m)= q_2\), \(C \ge \{\!\{q_1, m\}\!\}\), and \(C' = C - \{\!\{q_1, m\}\!\} + \{\!\{q_2\}\!\}\).
- \(I(D)=\sum _{\sigma \in \varSigma } D(\sigma )\iota (\sigma )\); notice that since \(\iota \) does not map symbols of \(\varSigma \) to M, the configuration I(D) has no messages;
- \(O(C)=b\) if \(o(q)=b\) for all \(q \in Q\) such that \(C(q)>0\).
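A minimal Python sketch of the step relation of a queued transmission protocol follows. It assumes that sending adds a message to the pool and receiving removes one, that Q and M are disjoint sets of strings, and that the step relation is reflexive as required by Definition 2.1. The names `freeze`, `qt_successors`, and the toy send and receive functions are hypothetical.

```python
from collections import Counter

def freeze(c):
    """Hashable, canonical form of a configuration over Q ∪ M."""
    return tuple(sorted((e, n) for e, n in c.items() if n > 0))

def qt_successors(config, send, recv):
    """All C' with (C, C') in Step.

    config: Counter over states and messages (assumed disjoint)
    send:   dict q -> (m, q2), the partial send function
    recv:   dict (q, m) -> q2, the partial receive function
    """
    succ = {freeze(config)}  # assumption of this sketch: Step is reflexive
    # Send step: an agent in q1 moves to q2 and adds m to the pool.
    for q1, (m, q2) in send.items():
        if config[q1] >= 1:
            new = Counter(config)
            new[q1] -= 1
            new[q2] += 1
            new[m] += 1
            succ.add(freeze(new))
    # Receive step: an agent in q1 consumes one copy of m and moves to q2.
    for (q1, m), q2 in recv.items():
        if config[q1] >= 1 and config[m] >= 1:
            new = Counter(config)
            new[q1] -= 1
            new[m] -= 1
            new[q2] += 1
            succ.add(freeze(new))
    return succ

# Hypothetical toy protocol: q sends m and stays in q; q moves to r on receiving m.
SEND = {"q": ("m", "q")}
RECV = {("q", "m"): "r"}
```

Because a send step increases the size of the configuration, repeatedly applying `qt_successors` from a fixed configuration need not terminate, in contrast with the immediate delivery models.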

Delayed Transmission Protocols (DT). DT protocols are the subclass of QT protocols in which, loosely speaking, agents can never refuse to receive a message. This is modeled by requiring the receive transition function to be total.

Definition 2.6

A queued transmission protocol \(\mathscr {P}\) is a delayed transmission protocol if its receive function \(\delta _r\) is a total function.

Delayed Observation Protocols (DO). Intuitively, DO protocols are the subclass of DT protocols in which “sender” and “receiver” actually mean “observee” and “observer”. This is modeled by forbidding the sender to change its state when it sends a message (since the observee may not even know it is being observed).

Definition 2.7

Let \(\mathscr {P}=(Q,M,\delta _s,\delta _r,\varSigma , \iota , o)\) be a queued transmission protocol. \(\mathscr {P}\) is a delayed observation protocol if \(\delta _r\) is a total function and for every \(q \in Q\) the send function \(\delta _s\) satisfies \(\delta _s(q)=(m,q)\) for some \(m\in M\).

Notation. We write \(q_1 \xrightarrow {m +} q_2\) when \(\delta _s(q_1)=(m, q_2)\), and \(q_1 \xrightarrow {m-} q_2\) when \(\delta _r(q_1,m)= q_2\), denoting that the message m is added to or removed from the pool of messages. In the case of DO protocols, we sometimes write simply \(q_1 \xrightarrow {m +}\).

The following fact follows immediately from the definitions, but is very important.

Fact. In immediate delivery protocols (PP, IT, IO), if \(C \xrightarrow {*} C'\) then \(|C|=|C'|\). Indeed, in these models configurations are elements of \(\text {Pop}(Q)\), and so the size of a configuration is the total number of agents, which does not change when transitions occur. In particular, for every configuration C the number of configurations reachable from C is finite.

In delayed delivery protocols (QT, DT, DO), configurations are elements of \(\text {Pop}(Q \cup M)\), and so the size of a configuration is the number of agents plus the number of messages sent but not yet received. Since transitions can increase or decrease the number of messages, the number of configurations reachable from a given configuration can be infinite.

Table 2 summarizes the different transition functions and restrictions of the models.

2.5 Expressive power and correctness problem

Let \(\varSigma = \{\sigma _1, \ldots , \sigma _n\}\) be a finite alphabet. We introduce the class of predicates \(\varphi :\text {Pop}(\varSigma ) \rightarrow \{0,1\}\) definable in Presburger arithmetic, the first-order theory of addition.

A population \(P \in \text {Pop}(\varSigma )\) is completely characterized by the number \(k_i\) of occurrences of each input symbol \(\sigma _i\) in P, and so we can identify P with the vector \((k_1, \ldots , k_n)\). A predicate \(\varphi :\text {Pop}(\varSigma ) \rightarrow \{0,1\}\) is a threshold predicate if there are coefficients \(a_1, \ldots , a_n, b \in \mathbb {Z}\) such that \(\varphi (k_1, \ldots , k_n) = 1\) iff \(\sum _{i=1}^n a_i \cdot k_i < b\). The class of Presburger predicates is the closure of the threshold predicates under boolean operations and existential quantification. By the well-known result that Presburger arithmetic has a quantifier elimination procedure, a predicate is Presburger iff it is a boolean combination of threshold and modulo predicates, defined as the predicates of the form \(\sum _{i=1}^n a_i \cdot k_i \equiv _c b\) (see e.g. [22]). Abusing language, we call a boolean combination of threshold and modulo terms a quantifier-free Presburger predicate.
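Threshold and modulo predicates, and their boolean combinations, are straightforward to evaluate on a concrete input vector \((k_1, \ldots , k_n)\). The sketch below is a hedged illustration with hypothetical helper names; it encodes the majority predicate \(x_1 \ge x_0\) from the introduction as the negation of the threshold predicate \(-x_0 + x_1 < 0\).

```python
def threshold(coeffs, b):
    """Predicate: sum_i a_i * k_i < b."""
    return lambda k: sum(a * x for a, x in zip(coeffs, k)) < b

def modulo(coeffs, c, b):
    """Predicate: sum_i a_i * k_i is congruent to b modulo c."""
    return lambda k: (sum(a * x for a, x in zip(coeffs, k)) - b) % c == 0

def p_not(p):
    return lambda k: not p(k)

def p_and(p, q):
    return lambda k: p(k) and q(k)

def p_or(p, q):
    return lambda k: p(k) or q(k)

# Majority over inputs (x0, x1): x1 >= x0, i.e. not (-x0 + x1 < 0).
majority = p_not(threshold((-1, 1), 0))

# Parity of the population size: x0 + x1 is even.
even_size = modulo((1, 1), 2, 0)

# A boolean combination of a threshold and a modulo predicate.
majority_and_even = p_and(majority, even_size)
```

Evaluating such a predicate on one input is cheap; the difficulty of the correctness problem lies in quantifying over all infinitely many inputs.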

In [7], Angluin et al. characterize the predicates computable by the six models of protocols we have introduced. Remarkably, all the classes compute only Presburger predicates. More precisely:

- DO computes the boolean combinations of predicates of the form \(x \ge 1\), where x is an input symbol. This is the class of predicates that depend only on the presence or absence of each input symbol.
- IO computes the boolean combinations of predicates of the form \(x \ge c\), where x is an input symbol and \(c \in \mathbb {N}\).
- IT and DT compute the Presburger predicates that are similar to a boolean combination of modulo predicates for sufficiently large inputs; for the exact definition of similarity we refer the reader to [7].
- PP and QT compute exactly the Presburger predicates.

The results of [7] are important in order to define the correctness problem. The inputs to the problem are a protocol and a predicate. The protocol is represented by giving its sets of states, transitions, etc. However, we still need a finite representation for Presburger predicates. There are three possible candidates: full Presburger arithmetic, quantifier-free Presburger arithmetic, and semilinear sets. Semilinear sets are difficult to parse by humans, and no paper on population protocols uses them to describe predicates. Full Presburger arithmetic is very succinct, but its satisfiability problem lies between 2-NEXP and 2-EXPSPACE [10, 35, 37]. Since the satisfiability problem can be easily reduced to the correctness problem, choosing full Presburger arithmetic would “mask” the complexity of the correctness problem in the size of the protocol for several protocol classes. This leaves quantifier-free Presburger arithmetic, which also has several advantages of its own. First, standard predicates studied in the literature (like majority, threshold, or remainder predicates) are naturally expressed without quantifiers. Second, there is a synthesis algorithm for population protocols that takes a quantifier-free Presburger predicate as input and outputs a population protocol (not necessarily efficient or succinct) that computes it [5, 6]; a recent, more involved algorithm even outputs a protocol with polynomially many states in the size of the predicate [13]. Third, the satisfiability problem for quantifier-free Presburger predicates is “only” NP-complete, and, as we shall see, the complexity in the size of the protocol will always be higher for all protocol classes.

Taking these considerations into account, we formally define the correctness problem as follows:

Correctness problem

Given: A protocol \(\mathscr {P}\) over an alphabet \(\varSigma \), belonging to one of the six classes PP, DO, IO, DT, IT, QT; a quantifier-free Presburger predicate \(\varphi \) over \(\varSigma \).

Decide: Does \(\mathscr {P}\) compute the predicate represented by \(\varphi \)?

We also study the correctness problem over a single input. We refer to it as the single-instance correctness problem and define it in the following way:

Single-instance correctness problem

Given: A protocol \(\mathscr {P}\) over an alphabet \(\varSigma \) and with initial state mapping \(\iota \), belonging to one of the six classes PP, DO, IO, DT, IT, QT; an input \(D \in \text {Pop}(\varSigma )\), and a boolean b.

Decide: Do all fair executions of \(\mathscr {P}\) starting at I(D) converge to b?

2.6 Correctness as a reachability problem

In the coming sections we will obtain matching upper and lower bounds on the complexity of the correctness problem for different protocol classes. The upper bounds are obtained by reducing the correctness problem to two different reachability problems. The reductions require the protocols to be well behaved. We first define well-behaved protocols, and then present the two reductions.

Well-behaved protocols. Let \(\mathscr {P}\) be a generalized protocol. A configuration C of \(\mathscr {P}\) is a bottom configuration if \(C \xrightarrow {*} C'\) implies \(C' \xrightarrow {*} C\) for every configuration \(C'\). In other words, C is a bottom configuration if it belongs to a bottom strongly connected component (SCC) of the configuration graph of the protocol.

Definition 2.8

A generalized protocol is well-behaved if every fair execution contains a bottom configuration.
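When the set of configurations reachable from a given configuration is finite, as in the immediate delivery models, bottom configurations can be identified by plain graph search. The sketch below works on an abstract successor function and a hypothetical three-node configuration graph; `reachable` and `is_bottom` are illustrative names, and the quadratic check is chosen for clarity rather than efficiency.

```python
def reachable(start, successors):
    """post*({start}): all configurations reachable from `start`.

    Terminates only if the reachable set is finite (an assumption of this sketch).
    """
    seen, stack = {start}, [start]
    while stack:
        c = stack.pop()
        for d in successors(c):
            if d not in seen:
                seen.add(d)
                stack.append(d)
    return seen

def is_bottom(c, successors):
    """C is a bottom configuration iff every C' reachable from C can reach C again."""
    return all(c in reachable(d, successors) for d in reachable(c, successors))

# Hypothetical configuration graph: A can move to B, and B and C form a cycle.
GRAPH = {"A": {"A", "B"}, "B": {"B", "C"}, "C": {"C", "B"}}

def graph_successors(c):
    return GRAPH[c]
```

In this toy graph, B and C form a bottom SCC, while A does not belong to any bottom SCC because it cannot be reached again from B.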

We show that all our protocols are well behaved, with the exception of queued-transmission protocols. Essentially, this is the reason why the decidability of the correctness problem for QT is still open.

Lemma 2.9

Standard population protocols (PP) and delayed-transmission protocols (DT) are well behaved.

Proof

In standard population protocols, if \(C \xrightarrow {*} C'\) then \(|C|=|C'|\). It follows that for every configuration \(C\in \textit{Conf}\) the set of configurations reachable from C is finite. So every fair execution eventually visits a bottom configuration.

In delayed-transmission protocols, the size of a configuration is equal to the number of agents plus the number of messages in transit. So there is no bound on the size of the configurations reachable from a given configuration C, and in particular the set of configurations reachable from C can be infinite. However, since agents can always receive any message, for every configuration C there is at least one reachable configuration Z without any message in transit. Since the number of such configurations with a given number of agents is finite, for every fair execution \(\pi = C_0, C_1, \ldots \) there is a configuration Z without messages in transit such that \(C_i=Z\) for infinitely many i. By fairness, every configuration \(C'\) reachable from Z also appears infinitely often in \(\pi \), and so every configuration \(C'\) reachable from Z satisfies \(C' \xrightarrow {*} Z\). So Z is a bottom configuration. \(\square \)

Since IT and IO are subclasses of PP and DO is a subclass of DT, the proof is valid for IT, IO, and DO as well. The following example shows that queued-transmission protocols are not necessarily well-behaved.

Example 2.10

Consider a queued-transmission protocol in which an agent in state q can send a message m, staying in q. Assume further that no agent can ever receive a message m (because, for example, there are no receiving transitions for it). Then any execution in which the agent in state q sends the message m infinitely often and never receives any messages is fair: Indeed, after k steps the system can only reach configurations with at least k messages, and so no configuration is reachable from infinitely many configurations in the execution. Since this fair execution does not visit any bottom configuration, the protocol is not well-behaved. Moreover, if q is the only state of the protocol, there are no bottom configurations at all.

Characterizing correctness of well-behaved protocols. We start with a useful lemma valid for arbitrary protocols.

Lemma 2.11

([7]) Every finite execution of a generalized protocol can be extended to a fair execution.

Proof

Let \(\textit{Conf}\) be the set of configurations of the protocol, and let \(\pi \) be a finite execution. Fix an infinite sequence \(\rho = C_0, C_1, \ldots \) of configurations such that every configuration of \(\textit{Conf}\) appears infinitely often in \(\rho \). Define the infinite execution \(\pi _0 \, \pi _1 \, \pi _2 \ldots \) and the infinite subsequence \(C_{i_0}, C_{i_1}, C_{i_2} \ldots \) of \(\rho \) inductively as follows. For \(j=0\), let \(\pi _0 := \pi \) and \(C_{i_0} := C_0\). For every \(j \ge 0\), let \(C_{i_{j+1}}\) be the first configuration of \(\rho \) after \(C_{i_j}\) that is reachable from the last configuration of \(\pi _0 \, \ldots \, \pi _j\), and let \(\pi _{j+1}\) be any execution such that \(\pi _0 \, \ldots \, \pi _j \,\pi _{j+1}\) leads to \(C_{i_{j+1}}\). Such a configuration always exists, because the last configuration of \(\pi _0 \, \ldots \, \pi _j\) is reachable from itself and appears infinitely often in \(\rho \). It is easy to see that \(\pi _0 \, \pi _1 \, \pi _2 \ldots \) is fair. \(\square \)

Now we introduce some notations. Let \(\mathscr {P}=(\textit{Conf}, \varSigma , Step , I, O)\) be a generalized protocol, and let \(\varphi \) be a predicate.

-

The sets of predecessors and successors of a set \(\mathscr {M}\) of configurations of \(\mathscr {P}\) are defined as follows:

$$\begin{aligned} \begin{array}{rcl} pre ^*(\mathscr {M}) &{} {\mathop {=}\limits ^{\text {def}}}&{} \{ C' \in \textit{Conf}\mid \exists C \in \mathscr {M} \, . \, C' \xrightarrow {*} C \} \\ post ^*(\mathscr {M}) &{} {\mathop {=}\limits ^{\text {def}}}&{} \{ C \in \textit{Conf}\mid \exists C' \in \mathscr {M} \, . \, C' \xrightarrow {*} C \} \end{array} \end{aligned}$$ -

For every \(b \in \left\{ 0,1\right\} \), we define \(Con_{b} {\mathop {=}\limits ^{\text {def}}}O^{-1}(b)\), the set of configurations with output b. We call \(Con_{b}\) the set of b-consensus configurations.

-

For every \(b \in \{0, 1\}\), we let \(St_{b}\) denote the set of configurations C such that every configuration reachable from C (including C itself) has output b. \(St_{}\) stands for stable output. It follows easily from the definitions of \( pre ^*\) and \( post ^*\) that

$$\begin{aligned} St_{b} = \overline{ pre ^*\left( \overline{Con_{b}}\right) } \ , \end{aligned}$$where \(\overline{\mathscr {M}} {\mathop {=}\limits ^{\text {def}}}\textit{Conf}\setminus \mathscr {M}\) for every set of configurations \(\mathscr {M} \subseteq \textit{Conf}\). Indeed, the equation states that a configuration belongs to \(St_{b}\) iff it cannot reach any configuration with output \(1-b\), or with no output.

-

For every \(b \in \{0, 1\}\), we define \(I_b {\mathop {=}\limits ^{\text {def}}}\{I(D) \mid D \in \text {Pop}(\varSigma ) \wedge \varphi (D) = b \}\). In other words, \(I_b\) is the set of initial configurations for which \(\mathscr {P}\) should output b in order to compute \(\varphi \).
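To make the notation concrete, the following Python sketch computes \( pre ^*\), \( post ^*\) and \(St_{b}\) by plain graph search. It is only an illustration under the assumption that \(\textit{Conf}\) is finite and given explicitly as a successor map; the function names and the toy graph are ours and are not part of the formal development.

```python
from collections import deque

def reach(start, edges):
    """All nodes reachable from the set `start` (inclusive) along `edges`."""
    seen, queue = set(start), deque(start)
    while queue:
        c = queue.popleft()
        for d in edges.get(c, ()):
            if d not in seen:
                seen.add(d)
                queue.append(d)
    return seen

def post_star(M, step):
    """post*(M): configurations reachable from some configuration of M."""
    return reach(M, step)

def pre_star(M, step):
    """pre*(M): configurations that can reach some configuration of M."""
    rev = {}
    for c, succs in step.items():
        for d in succs:
            rev.setdefault(d, set()).add(c)
    return reach(M, rev)

def stable(b, conf, step, output):
    """St_b as the complement of pre*(complement of Con_b)."""
    con_b = {c for c in conf if output.get(c) == b}
    return conf - pre_star(conf - con_b, step)

# Hypothetical toy configuration graph (not taken from the paper).
conf = {"A", "B", "C", "D"}
step = {"A": {"B", "D"}, "B": {"C"}, "C": {"C"}, "D": {"D"}}
output = {"A": 0, "B": 1, "C": 1, "D": 0}
```

In this toy graph, `stable(1, conf, step, output)` contains B and C, since neither can reach a configuration whose output differs from 1, while A is excluded because it has output 0 itself.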

Proposition 2.12

Let \(\mathscr {P}=(\textit{Conf}, \varSigma , Step , I, O)\) be a well-behaved generalized protocol and let \(\varphi \) be a predicate. \(\mathscr {P}\) computes \(\varphi \) iff

$$\begin{aligned} post ^*(I_b) \subseteq pre ^*(St_{b}) \end{aligned}$$

holds for every \(b \in \{0,1\}\).

Proof

Assume that \( post ^*(I_b) \subseteq pre ^*(St_{b})\) holds for \(b \in \{0,1\}\). Let \(\pi =C_0, C_1, \ldots \) be a fair execution with \(C_0 \in I_b\) for some \(b \in \{0,1\}\). We show that \(\pi \) converges to b. Protocol \(\mathscr {P}\) is well-behaved, so \(\pi \) contains a bottom configuration C of a bottom SCC \(B \subseteq \mathscr {B}\). By assumption, we know that \(St_{b}\) is reachable from C, so there exists \(C' \in St_{b}\) such that \(C \xrightarrow {*} C'\). This entails \(C' \in B\). Since for all \(D \in St_{b}\), if \(D \xrightarrow {*} D'\) then \(D' \in St_{b}\), we obtain that \(B \subseteq St_{b}\). Every configuration of \(St_{b}\) is a b-consensus so \(\pi \) converges to b.

Assume that \(\mathscr {P}\) computes \(\varphi \), i.e. that every fair execution starting in \(I_b\) converges to b for \(b \in \left\{ 0,1\right\} \). Let us show that \( post ^*(I_b) \subseteq pre ^*(St_{b})\) holds. Consider \(C \in post ^*(I_b)\). There exists \(C_0 \in I_b\) such that \(C_0 \xrightarrow {*} C\) and, by Lemma 2.11, this finite execution can be extended to a fair infinite execution \(\pi \). Since \(\mathscr {P}\) is well-behaved, the execution contains a bottom configuration \(C'\) of a bottom SCC \(B \subseteq \mathscr {B}\). If \(B \subseteq St_{b}\) then \(C \in pre ^*(St_{b})\) and our proof is done. Suppose this is not the case, i.e. \(B \cap \overline{St_{b}} \ne \emptyset \). This means that there is a configuration \(\hat{C} \notin Con_{b}\) that is in B. It is thus reachable from any configuration of \(\pi \) and so by fairness it is reached infinitely often. Thus \(\pi \) does not converge to b, contradicting the correctness assumption. \(\square \)

A second characterization. Proposition 2.12 is useful when it is possible to compute adequate finite representations of the sets \( post ^*(I_b)\) and \( pre ^*(St_{b})\). We will later see that this is the case for IO and DO protocols. Unfortunately, such finite representations have not yet been found for PP or for transmission protocols. For this reason, our results for these classes will be based on a second characterization.

Let \(\mathscr {P}=(\textit{Conf}, \varSigma , Step , I, O)\) be a well-behaved generalized protocol, and let \(\mathscr {B}\) denote the set of bottom configurations of \(\mathscr {P}\). Further, for every \(b \in \{0,1\}\), let \(\mathscr {B}_b\) denote the set of configurations \(C \in \mathscr {B}\) such that every configuration \(C'\) reachable from C satisfies \(O(C')=b\). Equivalently, \(\mathscr {B}_b {\mathop {=}\limits ^{\text {def}}}\mathscr {B}\cap St_{b}\).

Observe that every fair execution of a well-behaved protocol eventually gets trapped in a bottom strongly-connected component of the configuration graph and, by fairness, visits all its configurations infinitely often. Further, if any configuration of the SCC belongs to \(\mathscr {B}_b\), then all of them belong to \(\mathscr {B}_b\). This occurs independently of whether the SCC contains finitely or infinitely many configurations.

Proposition 2.13

Let \(\mathscr {P}\) be a well-behaved generalized protocol and let \(\varphi \) be a predicate. \(\mathscr {P}\) computes \(\varphi \) iff for every \(b \in \{0,1\}\) the set \(\mathscr {B}\setminus \mathscr {B}_b\) is not reachable from \(I_b\).

Proof

Assume that \(\mathscr {B}\setminus \mathscr {B}_b\) is reachable from \(\varphi ^{-1}(b)\) for some \(b \in \{0,1\}\). Then there exists an input \(a \in \text {Pop}(\varSigma )\) and an execution \(C_0, C_1, \ldots , C_i\) such that \(\varphi (a)=b\), \(I(a) = C_0\), and \(C_i \in \mathscr {B}\setminus \mathscr {B}_b\). By Lemma 2.11 the execution can be extended to a fair execution \(C_0, C_1, \ldots \). Since \(C_{i+k} \xrightarrow {*} C_i\) for every \(k \ge 0\), the execution visits \(C_i\) and all its successors infinitely often. Since \(C_i \notin \mathscr {B}_b\), the execution does not converge to b. So \(\mathscr {P}\) does not compute \(\varphi \).

Assume that \(\mathscr {P}\) does not compute \(\varphi \). Then there exists an input \(a \in \text {Pop}(\varSigma )\), a boolean \(b \in \{0,1\}\), and a fair execution \(\pi =C_0, C_1, \ldots \) such that \(\varphi (a)=b\) and \(I(a) = C_0\), but \(\pi \) does not converge to b. Since \(\mathscr {P}\) is well-behaved, \(\pi \) contains a configuration \(C_i \in \mathscr {B}\). Since \(\pi \) does not converge to b, there is \(j > i\) such that \(O(C_j)\) is undefined, or defined but different from b. Since \(C_j\) belongs to the same SCC as \(C_i\), we have \(C_i \notin \mathscr {B}_b\). \(\square \)
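For a finite configuration graph, the criterion of Proposition 2.13 can be checked directly. The sketch below is a brute-force illustration, not an algorithm used in the paper: it assumes an explicit finite successor map, and the names `reachable` and `computes` as well as the toy data in the usage note are hypothetical.

```python
def reachable(c, step):
    """Set of configurations reachable from c (including c)."""
    seen, stack = {c}, [c]
    while stack:
        x = stack.pop()
        for y in step.get(x, ()):
            if y not in seen:
                seen.add(y)
                stack.append(y)
    return seen

def computes(conf, step, output, initial):
    """Check that, for b in {0, 1}, no bottom configuration outside B_b
    is reachable from initial[b] (the set I_b)."""
    reach = {c: reachable(c, step) for c in conf}
    # C is bottom iff every configuration reachable from C can reach C again.
    bottom = {c for c in conf if all(c in reach[d] for d in reach[c])}
    for b in (0, 1):
        bottom_b = {c for c in bottom
                    if all(output.get(d) == b for d in reach[c])}
        bad = bottom - bottom_b
        if any(reach[c0] & bad for c0 in initial[b]):
            return False
    return True
```

For instance, with successors A to B and D, B to C, and self-loops on C and D, where B and C have output 1 and A and D have output 0, the call with \(I_1 = \{B\}\) and \(I_0 = \{D\}\) succeeds, whereas taking \(I_1 = \{A\}\) fails because the bottom configuration D, which has output 0, is reachable from A.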

3 Lower bounds for observation models

We prove that the correctness problem is PSPACE-hard for IO protocols and \(\varPi _2^p\)-hard for DO protocols, and that these results also hold for the single-instance problem.

3.1 Correctness of IO protocols is PSPACE-hard

We prove that the single-instance correctness and correctness problems for IO protocols are PSPACE-hard by reduction from the acceptance problem for bounded-tape Turing machines. We show that the standard simulation of bounded-tape Turing machines by 1-safe Petri nets, as described for example in [20, 29], can be modified to produce an IO protocol. This can be done for IO protocols but not for DO protocols: the simulation of the Turing machine relies on the fact that a transition will only occur in an IO protocol if an agent observes another agent in a certain state at the present moment.

We fix a deterministic Turing machine M with set of control states Q, alphabet \(\varSigma \) containing the empty symbol, and partial transition function \(\delta :Q\times \varSigma \rightarrow Q\times \varSigma \times D\) (\(D=\{-1,+1\}\)). Let K denote an upper bound on the number of tape cells visited by the computation of M on empty tape. We assume that K is provided with M in unary encoding.

The implementation of M is the IO protocol \(\mathscr {P}_M\) described below. Strictly speaking, \(\mathscr {P}_M\) is not a complete protocol, only two sets of states and transitions. The rest of the protocol, which is slightly different for the single-instance correctness and the correctness problems, is described in the proofs.

States of \(\mathscr {P}_M\). The protocol \(\mathscr {P}_M\) contains two sets of cell states and head states modeling the state of the tape cells and the head, respectively. The cell states are:

-

\(\textit{off}[\sigma ,n]\) for each \(\sigma \in \varSigma \) and \(1\le n\le K\). An agent in \(\textit{off}[\sigma ,n]\) denotes that cell n contains symbol \(\sigma \), and the cell is “off”, i.e., the head is not on it.

-

\(\textit{on}[\sigma ,n]\) for each \(\sigma \in \varSigma \) and \(1\le n\le K\), with analogous intended meaning.

The head states are:

-

\(\textit{at}[q,n]\) for each \(q\in Q\) and \(1\le n\le K\). An agent in \(\textit{at}[q,n]\) denotes that the head is in control state q and at cell n.

-

\(\textit{move}[q, \sigma , n, d]\) for each \(q\in Q\), \(\sigma \in \varSigma \), \(1\le n\le K\) and every \(d\in D\) such that \(1\le n+d\le K\). An agent in \(\textit{move}[q, \sigma , n, d]\) denotes that the head is in control state q, has left cell n after writing symbol \(\sigma \) on it, and is currently moving in the direction given by d.

Finally, the protocol also contains two special states observer and success. Intuitively, \(\mathscr {P}_M\) uses them to detect that M has accepted.

Transitions of \(\mathscr {P}_M\). Intuitively, the implementation of M contains a set of cell transitions, in which a cell observes the head and changes its state, and a set of head transitions, in which the head observes a cell. Each of these sets contains transitions of two types. The set of cell transitions contains:

-

Type 1a: A transition \(\textit{off}[\sigma ,n] \xrightarrow {\textit{at}[q,n]} \textit{on}[\sigma ,n]\) for every state \(q \in Q\), symbol \(\sigma \in \varSigma \), and cell \(1 \le n \le K\).

The n-th cell, currently off, observes that the head is on it, and switches itself on.

-

Type 1b: A transition \(\textit{on}[\sigma ,n] \xrightarrow {\textit{move}[q, \sigma ', n, d]} \textit{off}[\sigma ',n]\) for every \(q \in Q\), \(\sigma , \sigma ' \in \varSigma \), \(d \in D\), and \(1 \le n \le K\) such that \(1 \le n+d \le K\).

The n-th cell, currently on, observes that the head has left after writing \(\sigma '\), and switches itself off (accepting the character the head intended to write).

The set of head transitions contains:

-

Type 2a: A transition

$$\begin{aligned} \textit{at}[q,n] \xrightarrow {\textit{on}[\sigma ,n]} \textit{move}[\delta _Q(q,\sigma ), \delta _\varSigma (q,\sigma ), n, \delta _D(q,\sigma )] \end{aligned}$$for every \(q \in Q\), \(\sigma \in \varSigma \), and \(1 \le n \le K\) such that \(1\le n+\delta _D(q,\sigma )\le K\).

The head, currently on cell n, observes that the cell is on, writes the new symbol on it, and leaves.

-

Type 2b: A transition \(\textit{move}[q, \sigma , n, d] \xrightarrow {\textit{off}[\sigma ,n]}\textit{at}[q,n+d]\) for every \(q \in Q\), \(\sigma \in \varSigma \), \(d \in D\), and \(1 \le n \le K\) such that \(1 \le n+d \le K\).

The head, currently moving, observes that the old cell has turned off, and places itself on the new cell.

Figure 1 graphically represents some of the states and transitions of \(\mathscr {P}_M\); the double arcs indicate the states being observed. We define the configuration of \(\mathscr {P}_M\) that corresponds to a given configuration of the Turing machine.

Definition 3.1

Given a configuration c of M with control state q, tape content \(\sigma _1\sigma _2\cdots \sigma _K\), and head on cell \(n \le K\), let \(C_c\) be the configuration that puts one agent in \(\textit{off}[\sigma _i,i]\) for each \(1\le i\le K\), one agent in \(\textit{at}[q,n]\), and no agents elsewhere.

Theorem 3.2 below formalizes the relation between the Turing machine M and its implementation \(\mathscr {P}_M\).

Theorem 3.2

For every two configurations \(c, c'\) of M that write at most K cells: \(c \xrightarrow {} c'\) iff \(C_c \xrightarrow {t_1t_2t_3t_4} C_{c'}\) in \(\mathscr {P}_M\) for some transitions \(t_1, t_2, t_3, t_4\) of types 1a, 2a, 1b, 2b, respectively.

Proof

By Lemma A.3, for every c there is either zero or one possible sequence \(t_1,t_2,t_3,t_4\) starting in \(C_c\). It is easy to see from the definition of the \(\textit{move}[\cdot , \cdot , \cdot , \cdot ]\) states that if such a sequence exists, it results in \(C_{c'}\) for a configuration \(c'\) such that \(c\xrightarrow {}c'\). If such a sequence does not exist, the failure must occur when trying to populate a \(\textit{move}[\cdot , \cdot , \cdot , \cdot ]\) state. In that case the configuration c must be blocked, either because the transition function is undefined or because the head would go out of bounds. \(\square \)
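The four-step simulation of Theorem 3.2 can be replayed on a small example. The following Python sketch generates the transitions of types 1a, 2a, 1b and 2b and fires them on multisets of agents. It is a minimal illustration under our own encoding assumptions: states are tuples, an IO transition is a triple (source, observed, target), and the one-rule machine in the usage note is hypothetical.

```python
from collections import Counter
from itertools import product

def build_transitions(Q, Sigma, delta, K):
    """IO transitions of P_M as triples (source, observed, target)."""
    T, cells, D = [], range(1, K + 1), (-1, 1)
    for q, s, n in product(Q, Sigma, cells):
        # Type 1a: cell n switches on when it observes the head on it.
        T.append((("off", s, n), ("at", q, n), ("on", s, n)))
        # Type 2a: the head observes the switched-on cell and starts moving.
        if (q, s) in delta:
            q2, s2, d = delta[(q, s)]
            if 1 <= n + d <= K:
                T.append((("at", q, n), ("on", s, n), ("move", q2, s2, n, d)))
    for q, s, s2, n, d in product(Q, Sigma, Sigma, cells, D):
        # Type 1b: the cell observes the leaving head and stores s2.
        if 1 <= n + d <= K:
            T.append((("on", s, n), ("move", q, s2, n, d), ("off", s2, n)))
    for q, s, n, d in product(Q, Sigma, cells, D):
        # Type 2b: the head observes the switched-off cell and arrives at n+d.
        if 1 <= n + d <= K:
            T.append((("move", q, s, n, d), ("off", s, n), ("at", q, n + d)))
    return T

def enabled(conf, T):
    """Transitions whose source and observed states are both populated."""
    return [(src, obs, tgt) for src, obs, tgt in T
            if conf[src] >= 1 and conf[obs] >= 1
            and (src != obs or conf[src] >= 2)]

def fire(conf, t):
    """Move one agent from the source state to the target state."""
    src, _, tgt = t
    new = Counter(conf)
    new[src] -= 1
    new[tgt] += 1
    return +new  # unary plus drops states with zero agents

def encode(q, tape, n):
    """C_c of Definition 3.1: one off-agent per cell plus one head agent."""
    conf = Counter({("off", s, i): 1 for i, s in enumerate(tape, start=1)})
    conf[("at", q, n)] += 1
    return conf
```

As a usage example, take \(K=2\), the alphabet `{"_", "a"}` with `"_"` playing the role of the empty symbol, and the single rule `delta = {("q0", "_"): ("q1", "a", 1)}`. Starting from `encode("q0", "__", 1)`, exactly one transition is enabled at each of the first four steps, and firing them leads to `encode("q1", "a_", 2)`.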

Now we can finally prove the PSPACE lower bound.

Theorem 3.3

The single-instance correctness and correctness problems for IO protocols are PSPACE-hard.

Proof

By reduction from the following problem: Given a polynomially space-bounded deterministic Turing machine M with two distinguished states \(q_{acc}, q_{rej}\), such that the computation of M on empty tape ends when the head enters for the first time \(q_{acc}\) or \(q_{rej}\) (and one of the two occurs), decide whether M accepts, i.e., whether the computation on empty tape reaches \(q_{acc}\). The problem is known to be PSPACE-hard.

Single-instance correctness. We construct a protocol \(\mathscr {P}\) and an input \(D_0\) such that M accepts on empty tape iff all fair executions of \(\mathscr {P}\) starting at the configuration \(I(D_0)\) converge to 1.

Definition of \(\mathscr {P}\). Let \(\mathscr {P}_M\) be the IO protocol implementation of M. We add two states to \(\mathscr {P}_M\), called observer and success. We also add transitions allowing an agent in state observer to move to success by observing any agent in a state of the form \(\textit{at}[q_{acc},i]\), as well as transitions allowing an agent in success to “attract” agents in all other states to success:

-

(i)

\(\textit{observer} \xrightarrow {\textit{at}[q_{acc},i]} \textit{success}\) for every \(1 \le i \le K\), and

-

(ii)

\(q \xrightarrow {\textit{success}} \textit{success}\) for every \(q \ne \textit{success}\).

Further, we set the output function to 1 for the state success, and to 0 for all other states. Finally, we choose the alphabet of input symbols of \(\mathscr {P}\) as \(\{1,2, \ldots , K+2\}\), and define the input function as follows: \(\iota (i) = \textit{off}[\sigma ,i]\), where \(\sigma \) is the empty symbol, for every \(1 \le i \le K\); \(\iota (K+1) =\textit{at}[q_0,0]\); and \(\iota (K+2) =\textit{observer}\).

Definition of \(D_0\). We choose \(D_0\) as the input satisfying \(D_0(i)=1\) for every input symbol of \(\mathscr {P}\). It follows that \(I(D_0)\) is the configuration of \(\mathscr {P}\) corresponding to the initial configuration of M on empty tape. By Theorem 3.2, the fair executions of \(\mathscr {P}\) from \(I(D_0)\) simulate the execution of M on empty tape.

Correctness of the reduction. If M accepts, then, since \(\mathscr {P}\) simulates the execution of M on empty tape, every fair execution of \(\mathscr {P}\) starting at \(I(D_0)\) eventually puts an agent in a state of the form \(\textit{at}[q_{acc},i]\). This agent stays there until the agent in state observer eventually moves to success (transitions of (i)), after which all agents are eventually attracted to success (transitions of (ii)). So all fair computations of \(\mathscr {P}\) starting at \(I(D_0)\) converge to 1. If M rejects, then no computation of \(\mathscr {P}\) starting at \(I(D_0)\) (fair or not) ever puts an agent in success. Since all other states have output 0, all fair computations of \(\mathscr {P}\) starting at \(I(D_0)\) converge to 0.

Correctness. Notice that the hardness proof for single-instance correctness establishes PSPACE-hardness already for restricted instances \((\mathscr {P}, D)\) satisfying \(D(q) \in \{0, 1\}\) for every state q. Call this restricted variant the 0/1-single-instance correctness problem for IO. We claim that the 0/1-single-instance correctness problem for IO is polynomial-time reducible to the correctness problem for IO. By PSPACE-hardness of the 0/1-single-instance correctness problem for IO, the claim entails PSPACE-hardness for the latter.

Let us now show the claim. Given an IO protocol \(\mathscr {P}\) and some configuration D for the 0/1-single-instance correctness problem, we provide a polynomial-time construction of an IO protocol \(\mathscr {P}'\) such that \(\mathscr {P}'\) computes the constant predicate \(\varphi (\mathbf {x}) = 0\) if and only if every fair run of \(\mathscr {P}\) starting in D stabilizes to 0. It is well known that, given two protocols \(\mathscr {P}_1\) and \(\mathscr {P}_2\) with \(n_1\) and \(n_2\) states and computing two predicates \(\varphi _1\) and \(\varphi _2\), it is possible to construct a third protocol computing \(\varphi _1 \wedge \varphi _2\), often called the synchronous product, whose states are pairs of states of \(\mathscr {P}_1\) and \(\mathscr {P}_2\), and which therefore has \(O(n_1 \cdot n_2)\) states (see e.g. [6]). We define \(\mathscr {P}'\) as the synchronous product of \(\mathscr {P}\) with a protocol \(\mathscr {P}_D\) that computes whether the input is equal to D. The output function of \(\mathscr {P}'\) maps the product state \((q_1, q_2)\) to 1 if and only if both \(q_1\) and \(q_2\) map to output 1 in their respective protocols. Thus, a fair run of \(\mathscr {P}'\) stabilizes to 1 if and only if the input configuration equals D and \(\mathscr {P}\) stabilizes to 1 for input D, which is precisely the case if \((\mathscr {P}, D)\) is a positive instance for the 0/1-single-instance problem.

It remains to show that \(\mathscr {P}_D\) is polynomial-time constructible. Such a protocol is well-known, but we repeat the definition. Let \(D = (d_1, \ldots , d_m)\) with \(d_i \in \{0, 1\}\), and let \(i_1 \le i_2 \le \ldots \le i_k\) be the maximal sequence of indices satisfying \(d_{i_j} = 1\) for every j. Since every population has at least two agents, we have \(k \ge 2\). We first construct an IO protocol \(\mathscr {P}_\psi \) that computes the predicate \(\psi = d_{i_1} \ge 1 \wedge d_{i_2} \ge 1 \wedge \ldots \wedge d_{i_k} \ge 1\), using \(m + k - 1\) states: The states of \(\mathscr {P}_\psi \) are \(Q_\mathscr {P}\uplus \{2, \ldots , k\}\) where \(Q_\mathscr {P}\) is the set of states of \(\mathscr {P}\). The input mapping of \(\mathscr {P}_\psi \) is identical to the input mapping of \(\mathscr {P}\). Let \(q_{i_j}\) denote the state that corresponds to the entry \(d_{i_j}\) in D. The transitions of \(\mathscr {P}_\psi \) are given by

All states except k shall map to output 0. It is readily seen that \(\mathscr {P}_\psi \) computes \(\psi \). Further notice that the predicate \(\mathbf {x} = D\) is equivalent to \(\psi \wedge |\mathbf {x}| \le k\). Moreover, it is well-known that the right conjunct \(|\mathbf {x}| \le k\) is computable with k states in an immediate observation protocol (see e.g. [6]), and thus we can define \(\mathscr {P}_D\) as the synchronous product of the protocol \(\mathscr {P}_\psi \) with the protocol that computes \(|\mathbf {x}| \le k\), using poly(k) states. This completes the proof. \(\square \)
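The equivalence between \(\mathbf {x} = D\) and \(\psi \wedge |\mathbf {x}| \le k\) used above can be sanity-checked by brute force. The Python sketch below does so for one hypothetical 0/1 vector D and all inputs with entries below 4; the function name and the chosen D are ours, and the check illustrates the predicate only, not the protocol \(\mathscr {P}_D\) itself.

```python
from itertools import product

def equals_D_via_psi(x, D):
    """Evaluate psi(x) and |x| <= k, where k is the number of ones in D."""
    k = sum(D)
    psi = all(x[i] >= 1 for i, d in enumerate(D) if d == 1)
    return psi and sum(x) <= k

# Hypothetical 0/1 input with k = 3 entries equal to 1.
D = (1, 0, 1, 1)
ok = all(equals_D_via_psi(x, D) == (x == D)
         for x in product(range(4), repeat=len(D)))
```

The reason the check succeeds is the counting argument behind the construction: if each of the k distinguished entries is at least 1 and the total is at most k, then each of them is exactly 1 and all other entries are 0.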

3.2 Correctness of DO protocols is \(\varPi _2^p\)-hard

We show that the single-instance correctness and the correctness problems are \(\varPi _2^p\)-hard for DO protocols, where \(\varPi _2^p= \text {co}\textsf {NP}^{\text {co}\textsf {NP}}\) is one of the two classes at the second level of the polynomial hierarchy [48]. Consider the natural complete problem for \(\varSigma _2^p\): Given a boolean circuit \(\varGamma \) with inputs \(\mathbf{x} =(x_1, \ldots , x_n)\) and \(\mathbf{y} = (y_1, \ldots , y_m)\), is there a valuation of \(\mathbf{x} \) such that for every valuation of \(\mathbf{y} \) the circuit outputs 1? We call the inputs of \(\mathbf{x} \) and \(\mathbf{y} \) existential and universal, respectively. Given \(\varGamma \) with inputs \(\mathbf{x} \) and \(\mathbf{y} \), we construct in polynomial time a DO protocol \(\mathscr {P}_\varGamma \) with input symbols \(\{x_1, \ldots , x_n\}\) that computes the false predicate, i.e., the predicate answering 0 for all inputs, iff \(\varGamma \) does not satisfy the property above. This shows that the correctness problem for DO protocols is \(\varPi _2^p\)-hard. A little modification of the proof shows that single-instance correctness is also \(\varPi _2^p\)-hard.
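For small circuits, the quantified property at the source of the reduction can be decided by exhaustive search. The sketch below is only meant to fix intuition: it models a circuit as a Python function of two bit tuples, which is our assumption and not the encoding used in the construction, and the two example circuits are hypothetical.

```python
from itertools import product

def exists_forall(gamma, n, m):
    """Is there x in {0,1}^n such that gamma(x, y) = 1 for every y in {0,1}^m?"""
    bits = (0, 1)
    return any(all(gamma(x, y) for y in product(bits, repeat=m))
               for x in product(bits, repeat=n))

# Hypothetical example circuits with one existential and one universal input.
def gamma_or(x, y):
    return x[0] | y[0]

def gamma_eq(x, y):
    return int(x[0] == y[0])
```

Here `exists_forall(gamma_or, 1, 1)` holds (choose \(x_1 = 1\)), while `exists_forall(gamma_eq, 1, 1)` does not. Under the reduction, the protocol built from a circuit computes the false predicate exactly when this check fails.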

The section is divided into several parts. We first introduce basic notations about boolean circuits. Then we sketch a construction that, given a boolean circuit \(\varGamma \), returns a circuit evaluation protocol \(\widehat{\mathscr {P}}_\varGamma \) that nondeterministically chooses values for the input nodes, and simulates an execution of \(\varGamma \) on these inputs. In a third step we add some states and transitions to \(\widehat{\mathscr {P}}_\varGamma \) to produce the final DO protocol \(\mathscr {P}_\varGamma \). The fourth and final step proves the correctness of the reduction.

Boolean circuits. A boolean circuit \(\varGamma \) is a directed acyclic graph. The nodes of \(\varGamma \) are either input nodes, which have no incoming edges, or gates, which have at least one incoming edge. A gate with k incoming edges is labeled by a boolean operation of arity k. We assume that k is bounded by some constant. This assumption is innocuous since it is well known that every boolean function can be implemented using a combination of gates of constant arity. The nodes with outgoing edges leading to a gate g are called the arguments of g. There is a distinguished output gate \(g_o\) without outgoing edges. We assume that every node is connected to the output gate by at least one path.

A circuit configuration assigns to each input node a boolean value, 0 or 1, and to each gate a value, 0, 1, or \(\square \), where \(\square \) denotes that the value has not yet been computed and so it is still unknown. A configuration is initial if it assigns \(\square \) to all gates. The step relation between circuit configurations is defined as usual: a gate can change its value to the result of applying the boolean operation to the arguments; if at least one of the arguments has value \(\square \), then by definition the result of the boolean operation is also \(\square \).
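The step relation on circuit configurations can be sketched as follows. In this illustrative Python fragment the unknown value \(\square \) is represented by `None`, gates are given as a dictionary from gate names to an operation and a list of argument names, and steps are applied until no gate changes. These representation choices and all names are assumptions made for the example.

```python
UNKNOWN = None  # plays the role of the "not yet computed" value

def gate_value(op, args):
    """Result of a gate: unknown as soon as one argument is unknown."""
    if any(a is UNKNOWN for a in args):
        return UNKNOWN
    return op(*args)

def evaluate(inputs, gates):
    """Start from the initial configuration (all gates unknown) and apply
    steps until a fixpoint is reached; the circuit is assumed acyclic."""
    values = dict(inputs)
    values.update({g: UNKNOWN for g in gates})
    changed = True
    while changed:
        changed = False
        for g, (op, arg_names) in gates.items():
            new = gate_value(op, [values[a] for a in arg_names])
            if new != values[g]:
                values[g] = new
                changed = True
    return values

# Hypothetical circuit: g_o = NOT (x AND y).
gates = {
    "g_o": (lambda a: 1 - a, ["g1"]),
    "g1": (lambda a, b: a & b, ["x", "y"]),
}
result = evaluate({"x": 1, "y": 0}, gates)
```

In the example, the output gate stays unknown during the first pass because its argument `g1` has not been computed yet, and takes value 1 once `g1` has been set to 0.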

The protocol \(\widehat{\mathscr {P}}_\varGamma \). Given a circuit \(\varGamma \) with output node \(g_o\), we define the circuit evaluation protocol \(\widehat{\mathscr {P}}_\varGamma =(Q, M, \delta _s, \delta _r, \varSigma , \iota , o)\). As mentioned above, \(\widehat{\mathscr {P}}_\varGamma \) nondeterministically chooses input values for \(\varGamma \), and simulates an execution on them.

States. The set Q of states contains all tuples \((n, v_n, \arg , v_o)\), where:

-

n is a node of \(\varGamma \) (either an input node or a gate);

-

\(v_n \in \{0,1,\square \}\) represents the current opinion of the agent about the value of n;

-

\(\arg \in \{0,1,\square \}^k\), where k is the number of arguments of n, represents the current opinion of the agent about the values of the arguments of n (if n is an input node then \(\arg \) is the empty tuple);

-

\(v_o \in \{0, 1, \square \}\) represents the current opinion of the agent about the value of the output gate \(g_o\).

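Alphabet, input and output functions. The set \(\varSigma \) of input symbols is the set of nodes of \(\varGamma \). The initial state mapping \(\iota \) maps each node n to the state \(\iota (n) := (n, \square , (\square , \ldots , \square ), \square )\), i.e., to the state with node n, and with all values still unknown. The output function is defined by

$$\begin{aligned} o(n, v_n, \arg , v_o) {\mathop {=}\limits ^{\text {def}}}{\left\{ \begin{array}{ll} 1 &{} \text {if } v_o = 1, \\ 0 &{} \text {otherwise.} \end{array}\right. } \end{aligned}$$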

Intuitively, agents have opinion 1 if they think the circuit outputs 1, and 0 if they think the circuit outputs 0 or has not yet produced an output.

Messages. The set M of messages contains all pairs (n, v), where n is a node, and \(v \in \{0,1,\square \}\) is a value.

Transitions. An agent in state \((n, v_n, \arg , v_o)\) can

-

Send the message \((n, v_n)\), i.e., an agent can send its node and its current opinion on the value of the node.

-

Receive a message \((m, v_m)\), after which the agent updates its state as follows:

-

(1)

If n is an input node and \(v_n = \square \), then if \(m=n\) the agent moves to state \((n, 0, \arg , v_o)\), i.e., updates its value to 0, and if \(m = g_o\) it moves to state \((n, 1, \arg , v_o)\), i.e., updates its value to 1. Intuitively, this is an artificial but simple way of ensuring that each input node nondeterministically chooses a value, 0, or 1, depending on whether it first receives a message from itself, or from the output node (see Footnote 2).

-

(2)

If n is a gate and m is an argument of n, then the agent moves to \((n, v_n', \arg ', v_o)\), where \(\arg '\) is the result of updating the value of m in \(\arg \) to \(v_m\), and \(v_n'\) is the result of applying the boolean operation of the gate to \(\arg '\).

-

(3)

If n is any node, \(m = g_o\), and \(v_m \ne \square \), then the agent moves to \((n, v_n, \arg , v_m)\), i.e., it updates its opinion of the output of the circuit to \(v_m\).

Notice that if an agent is initially in state \(\iota (n)\), then it remains forever in states having n as node. So it makes sense to speak of the node of an agent.
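The receive rules (1) to (3) can be summarized as a single update function. The Python sketch below is a hedged reading of these rules, not the formal definition of \(\delta _r\): it assumes that all applicable rules are applied in the order (1), (2), (3) when several match, that rule (3) changes only the opinion \(v_o\), and that \(\square \) is represented by `None`. The function and parameter names are ours.

```python
U = None  # stands for the unknown value

def receive(state, msg, inputs, gates, g_o):
    """Update of an agent in `state` that receives `msg`.

    inputs: set of input node names
    gates:  dict gate name -> (boolean operation, list of argument names)
    g_o:    name of the output gate
    """
    n, v_n, arg, v_o = state
    m, v_m = msg
    # (1) An undecided input agent picks 0 on its own message, 1 on g_o's.
    if n in inputs and v_n is U:
        if m == n:
            v_n = 0
        elif m == g_o:
            v_n = 1
    # (2) A gate agent records the argument's value and re-evaluates itself.
    if n in gates:
        op, arg_names = gates[n]
        if m in arg_names:
            arg = tuple(v_m if a == m else old
                        for a, old in zip(arg_names, arg))
            v_n = U if any(a is U for a in arg) else op(*arg)
    # (3) Any agent adopts a known value announced by the output gate.
    if m == g_o and v_m is not U:
        v_o = v_m
    return (n, v_n, arg, v_o)
```

For a hypothetical circuit with inputs x and y and a single AND gate `g_o`, an agent for x in its initial state that receives the message `("x", None)` moves to value 0, and an agent for `g_o` that successively receives `("x", 1)` and `("y", 1)` ends with value 1. The node component never changes, in line with the remark above.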

Let us examine the behaviour of \(\widehat{\mathscr {P}}_\varGamma \) from the initial configuration \(C_0\) that puts exactly one agent in state \(\iota (n)\) for every node n. The executions of \(\widehat{\mathscr {P}}_\varGamma \) from \(C_0\) exactly simulate the executions of the circuit. Indeed, the transitions of (1) ensure that each input agent (i.e., every agent whose node is an input node) eventually chooses a value, 0 or 1. The transitions of (2) simulate the computations of the gates. Finally, the transitions of (3) ensure that every node eventually updates its opinion of the value of \(g_o\) to the value computed by \(\varGamma \) for the chosen input. The following lemma, proved in the “Appendix B”, formalizes this.

Lemma 3.4

Let \(\varGamma \) be a circuit and let \(\widehat{\mathscr {P}}_\varGamma \) be its evaluation protocol. Let \(C_0\) be the initial configuration that puts exactly one agent in state \(\iota (n)\) for every node n. A fair execution starting at \(C_0\) eventually reaches a configuration C where each input agent is in a state with value 0 or 1, and these values do not change afterwards. The tail of the execution starting at C converges to a stable consensus equal to the output of \(\varGamma \) on these assigned inputs.

Observe, however, that \(\widehat{\mathscr {P}}_\varGamma \) also has initial configurations whose executions may not simulate any execution of \(\varGamma \). For example, this is the case of an initial configuration that puts two agents in state \(\iota (n)\) for some node n, and the executions in which one of these agents chooses input 0 for n, and the other input 1. It is also the case of an initial configuration that puts zero agents in state \(\iota (n)\) for some node n. Observe further that \(\widehat{\mathscr {P}}_\varGamma \) can only select values for the inputs, and simulate an execution of \(\varGamma \). We need a protocol that selects values for the existential inputs, and can then repeatedly simulate the circuit for different values of the universal inputs. These two problems are solved by appropriately extending \(\widehat{\mathscr {P}}_\varGamma \) with new states and transitions.

The protocol \(\mathscr {P}_\varGamma \). We add a new state and some transitions to \(\widehat{\mathscr {P}}_\varGamma \) in order to obtain the final protocol \(\mathscr {P}_\varGamma \).

-

Add a new failure state \(\bot \) with \(o(\bot )=0\) to the set of states Q, and a new message \(m_\bot \) to the set of messages M.

-

Add the following send and receive transitions:

-

(4)

An agent in state \(\bot \) can send the message \(m_\bot \).

-

(5)

An agent in state \(\bot \) that receives any message (including \(m_\bot \)) stays in state \(\bot \); an agent (in any state, including \(\bot \)) that receives \(m_\bot \) moves to state \(\bot \).

(In particular, if some agent ever reaches state \(\bot \), then all agents eventually reach state \(\bot \) and stay there, and so the protocol converges to 0.)

-

(6)

If an agent in state \((n, v_n, \arg , v_o)\), where n is an existential input node and \(v_n \ne \square \), receives a message \((n, v_n')\) such that \(v_n \ne v_n' \ne \square \), then the agent moves to state \(\bot \).

(Intuitively, if an agent discovers that another agent has chosen a different value for the same existential input, then the agent moves to \(\bot \), and so, by the observation above, the protocol converges to 0.)

-

(7)

If an agent in state \((n, v_n, \arg , v_o)\), where n is a universal input node and \(v_n \ne \square \), receives a message \((g_o, 1)\), then the agent moves to state \((n,1-v_n, \arg , v_o)\).

(Intuitively, this allows the protocol to flip the values of any universal inputs whenever the output gate takes value 1.)

Proof of the reduction. We claim that \(\mathscr {P}_\varGamma \) does not compute the false predicate (i.e., the predicate that answers 0 for every input) iff \(\exists \mathbf {x} \forall \mathbf {y} \varGamma (\mathbf {x}, \mathbf {y}) = 1\), that is, if there is a valuation of the existential inputs of \(\varGamma \) such that, for every valuation of the universal inputs, \(\varGamma \) returns 1. Let us sketch the proof of the claim. We consider two cases:

\(\exists \mathbf {x} \forall \mathbf {y} \varGamma (\mathbf {x}, \mathbf {y}) = 1\) is true. Let \(C_0\) be the initial configuration that puts exactly one agent in state \(\iota (n)\) for every node n. We show that not every fair execution from \(C_0\) converges to 0, and so that \(\mathscr {P}_\varGamma \) does not compute the 0 predicate.

Let \(\mathbf {x}_0\) be a valuation of \(\mathbf {x}\) such that \(\forall \mathbf {y} \varGamma (\mathbf {x}_0, \mathbf {y}) = 1\). The execution proceeds as follows: first, the agents for the inputs of \(\mathbf {x}\) receive messages, sent either by themselves or by the output node, that make them choose the values of \(\mathbf {x}_0\). An inspection of the transitions of \(\mathscr {P}_\varGamma \) shows that these values cannot change anymore. Let C be the configuration reached after the agents have received the messages. Since \(\varGamma (\mathbf {x}_0, \mathbf {y}) = 1\) holds for every \(\mathbf {y}\), by Lemma 3.4 every configuration \(C'\) reachable from C can reach a consensus of 1. Indeed, it suffices to first let the agents receive all messages of \(C'\) (which does not change the values of the existential inputs), then let the agents for \(\mathbf {y}\) that still have value \(\square \) pick a boolean value (nondeterministically), and then let all agents simulate the circuit. Since \(\varGamma (\mathbf {x}_0, \mathbf {y}) = 1\) holds for every \(\mathbf {y}\), after the simulation the node for \(g_o\) has value 1. Finally, we let all agents move to states satisfying \(v_o=1\).

\(\exists \mathbf {x} \forall \mathbf {y} \varGamma (\mathbf {x}, \mathbf {y}) = 1\) is false. This case requires a finer analysis. We have to show that \(\mathscr {P}_\varGamma \) computes the false predicate, i.e., that every fair execution from every initial configuration converges to 0. By fairness, it suffices to show that for every initial configuration \(C_0\) and for every configuration C reachable from \(C_0\), it is possible to reach from C a stable consensus of 0.

Thanks to the \(\bot \) state, which is introduced for this purpose, configurations C in which two agents for the same existential input node choose inconsistent values eventually reach the configuration with all agents in state \(\bot \), which is a stable consensus of 0. Thanks to the assumption that every node is connected to the output gate by at least one path, configurations C in which there are no agents for some node cannot reach any configuration in which some agent populates a state with \(v_o=1\), and so C itself is a stable consensus of 0. So, loosely speaking, configurations in which the agents pick more than one value, or can pick no value at all, for some existential input eventually reach a stable consensus of 0.

Consider the case in which, for every node n, the configuration C has at least one agent in a state with node n. By fairness, C eventually reaches a configuration \(C'\) at which each agent for an existential input has chosen a boolean value, and we can assume that all agents for the same input choose the same value. This fixes a valuation \(\mathbf {x}_0\) of the existential inputs. Recall that this valuation cannot change any more, since the protocol has no transitions for that. By assumption, there is \(\mathbf {y}_0\) such that \(\varGamma (\mathbf {x}_0, \mathbf {y}_0) = 0\). We sketch how to reach a stable consensus of 0 from \(C'\). First, let the agents consume all messages of \(C'\), and let \(C''\) be the resulting configuration. If \(C''\) cannot reach any configuration with circuit output 1, then the configuration reached after informing each agent about the value of \(g_o\) is a stable consensus of 0, and we are done. Otherwise, starting from such a configuration with output 1, let the agents send and receive the appropriate messages so that all agents for \(\mathbf {y}\) choose the values of \(\mathbf {y}_0\). After that, let the agent for \(g_o\) consume all remaining messages, if any, and let the protocol simulate \(\varGamma \) on \(\mathbf {x}_0, \mathbf {y}_0\). Notice that the simulation can be carried out even if there are multiple agents for the same gate g. Indeed, in this case, for every argument \(g'\) of g, we let at least one of the agents corresponding to \(g'\) send the message with the correct value for \(g'\) to all the agents for g. Since \(\varGamma (\mathbf {x}_0, \mathbf {y}_0) = 0\) by assumption, the agents for \(g_o\) eventually update their value to 0, and eventually all agents change their opinion about the output of the circuit to 0. Let \(C'''\) be the configuration so reached. We claim that \(C'''\) is a stable consensus of 0.
Indeed, the state of a gate cannot change without a change in the argument values or the output gate \(g_o\). Therefore it is enough to prove that the input values cannot change. Since no transition can change \(\mathbf {x}_0\), this can only happen by changing the values \(\mathbf {y}_0\) of the universal inputs. But these values can only change by the transitions of (7), which require the agent to receive a message \((g_o, 1)\). This is not possible because the current value of \(g_o\) is 0, and the claim is proved.

This concludes the reduction to the correctness problem for DO protocols. We can easily transform it into a reduction to the single-instance correctness problem. Indeed, it suffices to observe that the executions of the circuit \(\varGamma \) correspond to the fair executions of \(\mathscr {P}_\varGamma \) from the unique initial configuration \(C_0\) with exactly one agent in state \(\iota (n)\) for every node n. So \(\mathscr {P}_\varGamma \) computes 0 from \(C_0\) iff \(\forall \mathbf {x} \exists \mathbf {y} \; \varGamma (\mathbf {x}, \mathbf {y}) = 0\), that is, iff \(\exists \mathbf {x} \forall \mathbf {y} \; \varGamma (\mathbf {x}, \mathbf {y}) = 1\) does not hold, and we are done. So we have:

Theorem 3.5

The single-instance correctness and correctness problems for DO protocols are \(\varPi _2^p\)-hard.

4 Reachability in observation models: the pruning and shortening theorems

In the next three sections we prove that the correctness problem is PSPACE-complete for IO protocols and \(\varPi _2^p\)-complete for DO protocols. These are the most involved results of this paper. They can only be obtained after a detailed study of the reachability problem of IO and DO protocols, which we believe to be of independent interest. The roadmap for the three sections is as follows.

Section 4. Section 4.1 introduces message-free delayed-observation protocols (MFDO), an auxiliary model very close to DO protocols, but technically more convenient. As its name indicates, agents of MFDO protocols do not communicate by messages. Instead, they directly observe the current or past states of other agents. As a consequence, a configuration of an MFDO protocol is completely determined by the states of its agents, which has technical advantages. At the same time, MFDO and DO protocols are very close, in the following sense. We call a configuration of a DO protocol a zero-message configuration if all messages sent by the agents have already been received. Given a DO protocol \(\mathscr {P}\) we can construct an MFDO protocol \(\widehat{\mathscr {P}}\), with the same set of states, such that for any two zero-message configurations \(Z, Z'\) of \(\mathscr {P}\), we have \(Z \xrightarrow {*} Z'\) in \(\mathscr {P}\) iff \(Z \xrightarrow {*} Z'\) in \(\widehat{\mathscr {P}}\). (Observe that, since \(\mathscr {P}\) and \(\widehat{\mathscr {P}}\) have the same set of states, a zero-message configuration of \(\mathscr {P}\) is also a configuration of \(\widehat{\mathscr {P}}\).) So, any question about the reachability relation between zero-message configurations of \(\mathscr {P}\) can be “transferred” to \(\widehat{\mathscr {P}}\), and answered there.

The rest of the section is devoted to the Pruning and Shortening Theorems. Say that a configuration C is coverable from \(C'\) if there exists a configuration \(C''\) such that \(C' \xrightarrow {*} C'' \ge C\). The Pruning Theorems state that if a configuration C of a protocol with n states is coverable from \(C'\), then it is also coverable from a “small” configuration \(D \le C'\), where small means \(|D| \le |C|+ f(n)\) for a low-degree polynomial f. The Shortening Theorem states that every execution \(C \xrightarrow {*} C'\) can be “shortened” to an execution \(C \xrightarrow {\xi } C'\), where \(\xi =t_1^{k_1} t_2^{k_2} \ldots t_m^{k_m}\) and \(m \le f(n)\) for some low-degree polynomial f that depends only on n, not on C or \(C'\). Intuitively, if we assume that the \(k_i\) occurrences of \(t_i\) are executed synchronously in one step, then the execution only takes m steps.
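To make the notation of this paragraph concrete, here is a minimal Python sketch. It assumes configurations are represented as `collections.Counter` multisets over state names and transitions as arbitrary hashable labels; the helper names `covers`, `size`, and `blocks` are ours and purely illustrative. The sketch only checks the covering order \(C'' \ge C\) and computes the number m of blocks of a given transition sequence; it does not construct the small configuration D or the shortened execution whose existence the theorems assert.

```python
from collections import Counter
from itertools import groupby

def covers(big: Counter, small: Counter) -> bool:
    """True iff big >= small componentwise, i.e. big covers small."""
    return all(big[q] >= n for q, n in small.items())

def size(config: Counter) -> int:
    """|C|: the total number of agents of a configuration."""
    return sum(config.values())

def blocks(sequence):
    """Group a transition sequence into maximal blocks t_1^{k_1} ... t_m^{k_m}.

    Returns a list of (transition, multiplicity) pairs; its length is m.
    """
    return [(t, len(list(group))) for t, group in groupby(sequence)]

# A sequence t1 t1 t1 t2 t2 t1 has m = 3 blocks.
xi = ["t1", "t1", "t1", "t2", "t2", "t1"]
print(blocks(xi))
```

In this representation, the Shortening Theorem says that some execution between the same two configurations has a block list of length at most f(n), however long the original sequence is.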

Section 5. This section applies the Pruning and Shortening Theorems to the reachability problem between counting sets of configurations. Intuitively, a counting set of configurations is a union of cubes, and a cube is the set of all configurations C lying between a lower bound configuration L and an upper bound configuration U with possibly infinite components. Observe that counting sets may be infinite, but always have a finite representation. The reachability problem for counting sets asks, given two counting sets \(\mathscr {C}\) and \(\mathscr {C}'\), whether some configuration of \(\mathscr {C}'\) is reachable from some configuration of \(\mathscr {C}\). The section proves two very powerful Closure Theorems for IO and DO. The Closure Theorems state that for every counting set \(\mathscr {C}\), the set \( post ^*(\mathscr {C})\) of all configurations reachable from \(\mathscr {C}\) is also a counting set; further, the same holds for the set \( pre ^*(\mathscr {C})\) of all configurations from which \(\mathscr {C}\) can be reached. So, loosely speaking, counting sets are closed under reachability. Furthermore, the section shows that if \(\mathscr {C}\) has a representation with “small” cubes, in a sense to be determined, then so do \( pre ^*(\mathscr {C})\) and \( post ^*(\mathscr {C})\).
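The intuitive description of cubes and counting sets can be sketched in Python as follows. This is only an illustration under our own assumptions: a cube is a pair of dictionaries `(lower, upper)`, a missing lower bound is read as 0, a missing upper bound is read as infinite (`math.inf`), and a counting set is a finite list of cubes. The function names are hypothetical and not taken from the paper.

```python
import math
from typing import Dict, Iterable, List, Tuple

Config = Dict[str, int]
# (L, U): lower and upper bound; components of U may be math.inf.
Cube = Tuple[Dict[str, int], Dict[str, float]]

def in_cube(config: Config, cube: Cube, states: Iterable[str]) -> bool:
    """True iff L(q) <= C(q) <= U(q) for every state q."""
    lower, upper = cube
    return all(
        lower.get(q, 0) <= config.get(q, 0) <= upper.get(q, math.inf)
        for q in states
    )

def in_counting_set(config: Config, cubes: List[Cube], states: Iterable[str]) -> bool:
    """A counting set is a finite union of cubes."""
    return any(in_cube(config, cube, states) for cube in cubes)

# "At least one agent in a, none in b": an infinite set with a finite representation.
states = ["a", "b"]
cube = ({"a": 1}, {"a": math.inf, "b": 0})
print(in_counting_set({"a": 5}, [cube], states))
```

The example cube contains infinitely many configurations, yet it is described by two finite vectors, which is the sense in which counting sets always have a finite representation.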

Section 6. This section applies the Pruning, Shortening, and Closure Theorems to prove the PSPACE and \(\varPi _2^p\) upper bounds for the correctness problems of IO and DO protocols, respectively. The section shows that this is also the complexity of the single-instance correctness problems.

Notation. Throughout these sections, the last three components of the tuples describing protocols (input symbol set \(\varSigma \), initial set mapping \(\iota \), and output mapping o) play no role. Therefore we represent a DO protocol by the simplified tuple \((Q,M,\delta _s,\delta _r)\), and an IO protocol as just a pair \((Q,\delta )\).

Section 4.2 proves the Pruning Theorems for IO and MFDO protocols. Section 4.3 proves the Shortening Theorem for MFDO protocols. Finally, making use of the tight connection between MFDO and DO protocols, Sect. 4.4 proves the Pruning and Shortening Theorems for DO protocols.

4.1 An auxiliary model: message-free delayed-observation protocols

Immediate observation and delayed observation protocols are similar. Essentially, in an immediate observation protocol an agent updates its state when it observes that another agent is currently in a certain state q, while in a delayed observation protocol the agent observes that another agent was in a certain state q, provided that agent emitted a message when it was in q. In a message-free delayed observation protocol we assume that sufficiently many such messages are always emitted by default; this allows us to dispense with the messages, and directly postulate that an agent can observe whether another agent went through a given state in the past. So the model is message-free, and, since agents can observe events that happened in the past, we call it “message-free delayed observation”.

Definition 4.1

A message-free delayed observation (MFDO) protocol is a pair \(\mathscr {P}= (Q, \delta )\), where Q is a set of states and \(\delta : Q^2 \rightarrow Q\) is a transition function. Considering \(\delta \) as a set of transitions, we write \(q \xrightarrow {o} q'\) for \(((q, o), q') \in \delta \). The set of finite executions of \(\mathscr {P}\) is the set of finite sequences of configurations defined inductively as follows. Every configuration \(C_0\) is a finite execution. A finite execution \(C_0, C_1, \ldots , C_i\) enables a transition \(q \xrightarrow {o} q'\) if \(C_i(q)\ge 1\) and there exists \(j\le i\) such that \(C_j(o)\ge 1\). (We say the agent of \(C_i\) at state q observes that there was an agent in state o at \(C_j\).) If \(C_0, C_1, \ldots , C_i\) enables \(q \xrightarrow {o} q'\), then \(C_0, C_1, \ldots , C_i, C_{i+1}\) is also a finite execution of \(\mathscr {P}\), where \(C_{i+1}\) is obtained from \(C_i\) by moving one agent from state q to state \(q'\). An infinite sequence of configurations is an execution of \(\mathscr {P}\) if all its finite prefixes are finite executions.
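The inductive definition of finite executions can be sketched in Python. The sketch assumes that configurations are `collections.Counter` multisets, that a transition \(q \xrightarrow {o} q'\) is encoded as a triple `(q, o, q2)`, and that firing it moves exactly one agent from q to \(q'\); the names `seen_states`, `enabled`, and `extend` are ours. The three-state protocol used in the example is a hypothetical illustration.

```python
from collections import Counter
from typing import List, Set, Tuple

Transition = Tuple[str, str, str]  # (q, o, q2) encodes q --o--> q2

def seen_states(execution: List[Counter]) -> Set[str]:
    """States populated in at least one configuration C_j of the execution."""
    return {q for config in execution for q, n in config.items() if n >= 1}

def enabled(execution: List[Counter], t: Transition) -> bool:
    """C_i(q) >= 1 and C_j(o) >= 1 for some j <= i."""
    q, o, _ = t
    return execution[-1].get(q, 0) >= 1 and o in seen_states(execution)

def extend(execution: List[Counter], t: Transition) -> List[Counter]:
    """Append the successor configuration obtained by firing t."""
    if not enabled(execution, t):
        raise ValueError(f"transition {t} is not enabled")
    q, _, q2 = t
    successor = Counter(execution[-1])
    successor[q] -= 1
    successor[q2] += 1
    return execution + [+successor]  # unary plus drops zero entries

# The second step observes state "a", which is populated only in C_0.
run = [Counter({"a": 1, "b": 1})]
run = extend(run, ("a", "b", "ab"))
run = extend(run, ("b", "a", "ab"))
print(run[-1])
```

The second step is the point of the example: no agent is in state a when the transition fires, but one was there earlier in the execution, so the observation is still possible.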

We assign to every DO protocol an MFDO protocol.

Definition 4.2

Let \(\mathscr {P}_\textit{DO}=(Q, M, \delta _s, \delta _r)\) be a DO protocol. The MFDO protocol corresponding to \(\mathscr {P}_\textit{DO}\) is \(\mathscr {P}_\textit{MFDO}=(Q,\delta )\), where \(\delta \) is the set of transitions \(q \xrightarrow {o} q'\) such that \(q' = \delta _r(q,m)\) for some message \(m\in M\), and o is a state satisfying \( \delta _s(o) = (m,o)\).

Notice that if Q has multiple states \(o_1, \ldots , o_k\) such that \(\delta _s(o_i)=(m,o_i)\) for every \(1 \le i \le k\), then \(\mathscr {P}_\textit{MFDO}\) contains a transition \(q \xrightarrow {o_i} q'\) for every \(1 \le i \le k\).

Example 4.3

Consider the DO protocol \(\mathscr {P}_\textit{DO}=(Q, M, \delta _s, \delta _r)\) where \(Q=M=\left\{ a, b, ab\right\} \) and \(\varSigma =\left\{ a,b\right\} \). The send transitions are given by \(\delta _s(q)=(q, q)\) for all \(q \in Q\), i.e., an agent in state q can send a message carrying the identity of q while remaining in q, denoted \(q \xrightarrow {q +} q\). The receive transitions are \(\delta _r(a,b)=ab\) and \(\delta _r(b,a)=ab\), denoted \(a \xrightarrow {b-} ab\) and \(b \xrightarrow {a-} ab\).

The corresponding MFDO protocol is \(\mathscr {P}_\textit{MFDO}=(Q, \delta )\), where \(\delta \) contains the transitions \(a \xrightarrow {b} ab\) and \(b \xrightarrow {a} ab\).
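The construction of Definition 4.2 can be sketched as a short Python function and checked on this example. The encoding is our own assumption: \(\delta _s\) is a dictionary from states to pairs (message, state), \(\delta _r\) is a dictionary from pairs (state, message) to states, and only the receive transitions listed explicitly in the example are included. The function name `mfdo_transitions` is hypothetical.

```python
from typing import Dict, Set, Tuple

def mfdo_transitions(
    delta_s: Dict[str, Tuple[str, str]],
    delta_r: Dict[Tuple[str, str], str],
) -> Set[Tuple[str, str, str]]:
    """Return all triples (q, o, q2), read q --o--> q2, such that
    q2 = delta_r(q, m) for some message m and delta_s(o) = (m, o)."""
    delta = set()
    for (q, m), q2 in delta_r.items():
        for o, (sent, target) in delta_s.items():
            if sent == m and target == o:
                delta.add((q, o, q2))
    return delta

# The DO protocol of the example: every state sends its own identity.
Q = ["a", "b", "ab"]
delta_s = {q: (q, q) for q in Q}
delta_r = {("a", "b"): "ab", ("b", "a"): "ab"}
print(sorted(mfdo_transitions(delta_s, delta_r)))
```

If several states send the same message m, the inner loop adds one MFDO transition per sending state, as in the remark following Definition 4.2.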

Notice that an agent of a DO protocol can “choose” not to send a message when it goes through a state, and thus not enable a future transition that consumes such a message. This does not happen in MFDO protocols. In particular, if a configuration C of an MFDO protocol enables a transition \(q \xrightarrow {o} q\), then the transition remains enabled forever, so that \(C^\omega \) is an execution. This is not the case for a transition \(q \xrightarrow {o-} q'\) of a DO protocol, because each occurrence of the transition consumes one message, and eventually there are no messages left.

Despite this difference, a DO protocol and its corresponding MFDO protocol are equivalent with respect to reachability questions in the following sense. Observe that a configuration of \(\mathscr {P}_\textit{DO}\) with zero messages is also a configuration of \(\mathscr {P}_\textit{MFDO}\). From now on, given a DO protocol, we denote by \(\mathscr {Z}\) the set of its zero-message configurations. For every \(Z \in \mathscr {Z}\), we overload the notation Z by also using it to denote the configuration of the corresponding MFDO protocol which is the restriction of Z to a multiset over Q. The following lemma shows that for any two configurations Z and \(Z'\) with zero messages, \(Z'\) is reachable from Z in \(\mathscr {P}_\textit{DO}\) iff it is reachable in \(\mathscr {P}_\textit{MFDO}\).

Lemma 4.4

Let \(\mathscr {P}_\textit{DO}=(Q, M, \delta _s, \delta _r)\) be a DO protocol, and let \(\mathscr {P}_\textit{MFDO}=(Q,\delta )\) be its corresponding MFDO protocol. Let \(Z,Z' \in \mathscr {Z}\) be two zero-message configurations. Then \(Z\xrightarrow {*}Z'\) in \(\mathscr {P}_\textit{DO}\) if and only if \(Z \xrightarrow {*} Z'\) in \(\mathscr {P}_\textit{MFDO}\).

Proof

DO to MFDO. Let \(Z \xrightarrow {\xi } Z'\) be an execution of \(\mathscr {P}_\textit{DO}\) with \(Z,Z' \in \mathscr {Z}\). Let \(\xi = t_1 t_2 \cdots t_n\), and let \(C_0, C_1,C_2,\ldots ,C_n\) be the configurations describing the number of agents in each state along \(\xi \). In particular, \(C_0=Z\) and \(C_n=Z'\). Define the sequence \(\tau \) as follows. For every transition \(t_i\):

- If \(t_i\) is a send transition (i.e., if \(t_i = q \xrightarrow {m+} q\) for some q and m), then delete \(t_i\).

Observe that, since the occurrence of \(t_i\) does not change the state of any agent, we have \(C_{i-1} = C_{i}\), and so in particular \(C_{i-1} \xrightarrow {\epsilon } C_{i}\) in \(\mathscr {P}_\textit{MFDO}\).

- If \(t_i\) is a receive transition, i.e., if \(t_i = q \xrightarrow {m-} q'\) for some q, \(q'\), and m, then replace it by the transition \(q \xrightarrow {o} q'\), where o is any state satisfying \(t_j = o \xrightarrow {m+} o\) for some index \(j \le i\).

Observe that the transition \(t_j\) must exist, because every message received has been sent. Further, since both \(t_i\) and \(q \xrightarrow {o} q'\) move an agent from q to \(q'\), we have \(C_{i-1} \xrightarrow {u_i} C_{i}\) in \(\mathscr {P}_\textit{MFDO}\) for \(u_i = q \xrightarrow {o} q'\).
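The two rewriting rules can be sketched in Python. The encoding is an assumption of ours: a send transition \(q \xrightarrow {m+} q\) is the tuple `("send", q, m)`, a receive transition \(q \xrightarrow {m-} q'\) is the tuple `("recv", q, m, q2)`, and the function name `do_to_mfdo_sequence` is hypothetical. Among the possible choices of o, the sketch picks the first state that sent m.

```python
from typing import Dict, List, Tuple

def do_to_mfdo_sequence(xi: List[tuple]) -> List[Tuple[str, str, str]]:
    """Translate a DO transition sequence into an MFDO one.

    Send transitions are deleted; each receive of message m is replaced by
    (q, o, q2), where o is a state that sent m earlier in the sequence.
    """
    first_sender: Dict[str, str] = {}  # message -> first state that sent it
    tau = []
    for t in xi:
        if t[0] == "send":
            _, q, m = t
            first_sender.setdefault(m, q)
        else:
            _, q, m, q2 = t
            if m not in first_sender:
                # Cannot happen when the sequence starts in a zero-message configuration.
                raise ValueError(f"message {m!r} received before being sent")
            tau.append((q, first_sender[m], q2))
    return tau

# A run of the protocol of Example 4.3 from the configuration with one a and one b.
xi = [("send", "a", "a"), ("send", "b", "b"),
      ("recv", "a", "b", "ab"), ("recv", "b", "a", "ab")]
print(do_to_mfdo_sequence(xi))
```

The translated sequence is never longer than the original one, since every send transition is dropped and every receive transition is replaced by exactly one MFDO transition.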