Abstract

We consider the Random Euclidean Assignment Problem in dimension \(d=1\), with linear cost function. In this version of the problem, in general, there is a large degeneracy of the ground state, i.e. there are many different optimal matchings (say, \(\sim \exp (S_N)\) at size N). We characterize all possible optimal matchings of a given instance of the problem, and we give a simple product formula for their number. Then, we study the probability distribution of \(S_N\) (the zero-temperature entropy of the model), in the uniform random ensemble. We find that, for large N, \(S_N \sim \frac{1}{2} N \log N + N s + {\mathcal {O}}\left( \log N \right) \), where s is a random variable whose distribution p(s) does not depend on N. We give expressions for the moments of p(s), both from a formulation as a Brownian process, and via singularity analysis of the generating functions associated to \(S_N\). The latter approach provides a combinatorial framework that allows to compute an asymptotic expansion to arbitrary order in 1/N for the mean and the variance of \(S_N\).

Similar content being viewed by others

1 Introduction

The Euclidean Assignment Problem (EAP) is a combinatorial optimization problem in which one has to pair N white points to N black points minimizing a total cost function that depends on the Euclidean distances among the points. The Euclidean Random Assignment Problem (ERAP) is the statistical system in which these 2N points are drawn from a given probability measure. In the latter version of the problem, one is interested in characterizing the statistical properties of the optimal solution, such as its average cost, average structural properties, etc...

The EAP problem has applications in Computer Science, where it has been used in computer graphics, image processing and machine learning [20], and it is also a discretised version of a problem in functional analysis, called optimal transport problem, where the N-tuples of points are replaced by probability measures. The optimal transport problem has recently seen a growing interest in pure mathematics, where it has found applications in measure theory and gradient flows [2].

To be more definite, the EAP problem is the optimization problem defined by

where

-

\(J=(\{w_i\},\{b_j\})\) is the instance of the problem, i.e., in the Euclidean version in dimension d, the datum of the positions of N white points \(\{w_i\}\) and N black points \(\{b_j\}\) in \({\mathbb {R}}^d\);

-

\(\pi \) is a bijection between the two sets of points, linking biunivocally each white point to a unique black point. In other words, is a perfect matching. We denote by \({\mathfrak {S}}_N\) the set of all possible bijections between sets of size N;

-

\(H_J(\pi )\) is the cost function

$$\begin{aligned} \begin{aligned} H_J(\pi ) = \sum _{i=1}^N c\left( \mathrm {dist}\left( w_i, b_{\pi (i)}\right) \right) \, \end{aligned} \end{aligned}$$(2)i.e. the sum of the costs of the links of \(\pi \), where a link is weighted using a link cost function \(c(x):{\mathbb {R}}^+\rightarrow {\mathbb {R}}\), depending only on the Euclidean distance among the two points.

In the EAP, J is considered as fixed, while in the ERAP, J is a random variable with a fixed probability distribution.

It is a longstanding project to understand the “phase diagram” of the model, in the plane (p, d), say, for definiteness, in the version of this problem where J is given by 2N i.i.d. points from the d-dimensional hypercube \([0,1]^d\), and the link cost function is given by \(c(x)=x^p\). Even the definition of phase diagram requires a clarification, as it is not obvious which observable should be adopted as order parameter. A first relevant quantity is the scaling in N of the cost of the optimal matching, a quantity that, as we will see in a moment, undergoes phase transitions that are connected to changes in the structural properties of the optimal matchings.

This phase diagram is, to a certain extent, still mysterious, although some progress has been made recently (see for example [1]), and all the information collected so far is coherent with the assumption that, for all (p, d), there exist exponents \(\gamma \) and \(\gamma '\) such that the optimal cost at size N scales as \(E_N \sim N^\gamma (\ln N)^{\gamma '}\), where \(\gamma =\gamma (p,d)\) is continuous and piece-wise smooth, and \(\gamma '=\gamma '(p,d)\) is zero everywhere except possibly on the lines of discontinuity of \(\gamma (p,d)\) (which are the critical lines). It is possible that one critical line is the half-line \(d=2\) and \(p \ge 1\), and two other critical lines reach a tricritical point \((p,d)=(1,2)\), starting from \(d=0\), and passing through \(p=\frac{1}{2}\) and \(p=1\) when \(d=1\). In particular, when \(d=1\), the analytical characterization of optimal solutions is quite tractable [4, 16], and the emerging landscape is as follows:

-

For \(p>1\), the optimal matching is orderedFootnote 1 independently on J, and this is due to the fact that the link cost function is increasing and convex. In this case, plenty of results have been obtained on the statistics of the average optimal cost [7,8,9, 11].

-

For \(0<p<1\), the optimal matching is non-crossing, meaning that pairs of matched points are either nested one inside the other, or disjoint (that is, matched pairs do not interlace) [16]. This property is imposed by the concavity of the link cost function. In this case, the optimal matching is not uniquely determined by the ordering of the points (although the viable candidates are typically reduced, roughly, from n! to \(\sqrt{n!}\)), and a comparatively smaller number of results has been found so far for the random version of the problem [3, 5, 10, 15]. Note that, remarkably, it is expected that, within this interval, there are two transitions: one at \(p=1\), with \(\gamma '(1,1)=0\), somewhat corresponding to the ordered / non-crossing structural transition, and one at \(p=\frac{1}{2}\), with \(\gamma '(\frac{1}{2},1)=1\), corresponding to a transition in which, in the cost of the optimal matching, the leading contribution comes from the few longest edges (of length \({\mathcal {O}}(1)\), when \(p>\frac{1}{2}\)) or from the many shortest edges (of length \({\mathcal {O}}(N^{-1})\), when \(p<\frac{1}{2}\)), and the logarithmic correction at \(p=\frac{1}{2}\) comes from the fact that, in this case, all length scales contribute to the leading part of the optimal cost [5].

-

For \(p<0\), due to the fact that an overall positive factor in c(x) is irrelevant in the determination of the optimal matching, it is questionable if one should consider the analytic continuation of the cost function \(c(x) = x^p\) (which has the counter-intuitive property that the preferred links are the longest ones), or of the cost function \(c(x) = p \, x^p\) (which has the property that the preferred links are the shortest ones, and that the limit \(p \rightarrow 0\) is well-defined, as it corresponds to \(c(x)=\log x\), but has the disadvantage of having average cost \(-\infty \) when \(p \le -d\)). In the first case, the optimal matching is cyclical, meaning that the permutation that describes the optimal matching has a single cycle, and some result on the average optimal cost where obtained in [7]. In the second case, in the pertinent range \(-d<p\le 0\), it seems that the qualitative features of the \(0< p <\frac{1}{2}\) regime are preserved.

We notice that in all the cases above, as well as in all the cases with \(d \ne 1\) and any p, the optimal matching is ‘effectively unique’, meaning that it is almost surely unique, and, even in presence of an instance showing degeneracy of ground states, almost surely an infinitesimal perturbation immediately lifts the degeneracy.

This generic non-degeneracy property does not hold only for the \(d=p=1\) case (note that \(p=1\) is the exponent at which the cost function changes concavity, that is, the critical point for the ordered / non-crossing structural transition). It is known [4] that in this case there are (almost surely) at least two distinct optimal matchings for each instance of the problem, the ordered matching and the Dyck matching [5] which coincide only in the rather atypical case (with probability \(N!/(2N-1)!!\)) in which, for all i, the i-th white and black points are consecutive along the segment.

These observations suggest some fundamental questions for the \((d,p)=(1,1)\) problem:

-

1.

is it possible to characterize all the optimal matchings of a fixed instance J of the EAP?

-

2.

how many optimal configurations are there for a fixed instance J of the EAP?

-

3.

for random J’s, what are the statistical properties of the number of optimal configurations?

The aim of this paper is to answer the three questions above.

Our interest is not purely combinatorial. In fact, the ERAP is a well-known and well-studied toy model of Euclidean spin glass [6, 17,18,19]. The characterization of the set \({\mathcal {Z}}_J \subseteq {\mathfrak {S}}_N\) of the optimal matchings of J is thus related to the computation of the zero-temperature partition function \(Z_J = |{\mathcal {Z}}_J|\) of the disordered model, and of its zero-temperature entropy \(S_{N}(J) =\log (Z_J)\).

In this manuscript we will focus on the statistical properties of the zero-temperature entropy, the thermodynamic potential that rules the physics of the model when the disorder is quenched, i.e. when the timescale of the dynamics of the disorder degrees of freedom of the model is much larger than that of the microscopic degrees of freedom. We leave to future investigations the study of the annealed and replicated partition functions \(Z_J\) and \(Z_J^k\), which are less fundamental from the point of view of statistical physics, but, as we will show elsewhere, have remarkable combinatorial and number-theoretical properties.

1.1 Summary of Results

In Sect. 2.1 we prove that, given a fixed instance J of the \((d,p) = (1,1)\) EAP problem, a matching \(\pi \) is optimal if and only if

where \(k_\mathrm{LB}(z)\) is a function of J that counts the difference of the numbers of white and black points on the left of z, and \(k_\pi (z)\) counts the number of links of \(\pi \) whose endpoints lie on opposite sides of z.

In Sect. 2.2, we show that \({\mathcal {Z}}_J\), the set of optimal matchings, depends on J only through the ordering of the points, while it is independent on their positions (provided that the ordering is not changed). Using a straightforward bijection between the ordering of bi-colored point configurations on the line and a class of lattice paths (the Dyck bridges), we provide a combinatorial recipe to construct the set \({\mathcal {Z}}_J\). As a corollary, we obtain a rather simple product formula for the cardinality \(Z_J := |{\mathcal {Z}}_J|\), that is, roughly speaking,

where the product runs over the descending steps of the Dyck bridge associated with the ordering of the points of configuration J, and the height of a step is, roughly speaking, the absolute value of its vertical position. This implies an analogous sum formula for the entropy \(S_N (J) = \log (Z_J)\) (we stress that the size of J equals 2N using a subscript).

Then, we study the statistics of \(S_N (J)\) when J is a random instance of the problem of fixed size N. Our techniques apply equally well, and with calculations that can be performed in parallel, to two interesting statistical ensembles of 2N points:

-

Dyck bridges: the case in which white and black points are just i.i.d. on the unit interval.

-

Dyck excursions: the restriction of the previous ensemble to the case in which, for all \(z \in [0,1]\), there are at least as many white points on the left of z than black points.

The fact that we can study these two ensembles in parallel is present also in our study for the distribution of the energy distribution of the “Dyck matching”, that we perform elsewhere [5, 10].

In Sect. 3.1, we highlight a connection between \(S_N\) (in the two ensembles) and the observable

over Brownian bridges (or excursions) \({\varvec{\sigma }}\). Simple scaling arguments imply that

is a random variable with a non-trivial limit distribution for large N.

We provide integral formulas for the integer moments of s, and we use these formulas to compute analytically its first two moments in the two ensembles.

In Sect. 3.2, we complement this analysis with a combinatorial framework at finite size N. We use this second approach to provide an effective strategy for the computation of finite-size corrections, that we illustrate by calculating the first and second moment, for both bridges and excursions.

In particular, we can establish that

where s is a random variable whose distribution depends on the ensemble (among bridges and excursions). For Dyck bridges we have

and for Dyck excursions we have

In Sect. 4 we provide numerical evidence that the distribution of the rescaled entropy s is non-Gaussian (but we leave unsolved the question whether the centered distribution for the excursions is an even function), and we confirm that the predicted values for the first two moments match with the simulated data.

With both our approaches it seems possible to access also higher-order moments, however we cannot prove at present that, for any finite moment, the evaluation can be performed in closed form, and that (as we conjecture) the result is in the form of a rational polynomial that only involves \(\gamma _E\) and (multiple) zeta functions. We will investigate these aspects in future works.

2 Optimal Matchings at \(p=1\)

In the following, we focus on the EAP with \(p=d=1\). We have N white points \(W=\{w_{i}\}_{i=1}^{N}\) and N black points \(B=\{b_{i}\}_{i=1}^{N}\) on a segment [0, L] (i.e., we have ‘closed’ boundary conditions, instead of ‘periodic’, as we would have if the points were located on a circle). We assume that the instance is generic (i.e., no two coordinates are the same), and we label the points so that the lists above are ordered (\(w_i<w_{i+1}\) and \(b_i<b_{i+1}\)).

2.1 Characterization of Optimal Matchings

We start by giving an alternate, integral representation of the cost function \(H_J(\pi )\). For a given matching \(\pi \), and every \(z \in {\mathbb {R}}\) which is not a point of W or B, define the function

In words, \(\kappa (z,x,y)\) is just the indicator function over the segment with extreme points x and y, and \(k_\pi (z)\) counts the number of links of \(\pi \) that have endpoints on opposite sides of z.

Furthermore, define the function

where \(\#(I)\) denotes the cardinality of the set I. As well as \(k_{\pi }(z)\), also \(k_\mathrm{LB}\) is defined for all \(z \in {\mathbb {R}} \setminus (W \cup B)\), and counts the excess of black or white points on the left of z.

Then, we have two simple observations

Proposition 1

At \(p=1\)

Moreover, at \(p=1\), for all \(\pi \) and all z,

This has the immediate corollary that, for all \(\pi \),

Now, call \(\pi _\mathrm{id}\) the identity permutations, that is the so-called ordered matching. By simple inspection, we have that \(H_J(\pi _\mathrm{id}) = H_J^\mathrm{LB}\). This implies that \(\pi _\mathrm{id}\) is optimal, and more generally

Corollary 1

\(\pi \in {\mathcal {Z}}_J\) iff the functions \(k_\mathrm{LB}\) and \(k_{\pi }\) coincide.

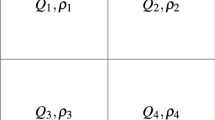

See Fig. 1 for the description of all optimal matchings at \(N=2\).

In the following we will provide a simple algorithm to construct the optimal matchings of a given instance. In order to do this, we shall now give another characterization of optimal matchings:

Definition 1

Let \(\pi \) be a matching. Let us call \(P=(p_1,\ldots ,p_{2N})\) the ordered list of the points in \(W \cup B\). For \(1\le i \le 2N\), we call \(P_i(\pi )\) the stack of \(\pi \) at i, that is, the set of points in \(\{p_1,\ldots ,p_i\}\) that are paired by \(\pi \) to points in \(\{p_{i+1},\ldots ,p_{2N}\}\) (see Fig. 2).

The stack of a matching at position i is given by all the points on the left side of point i (including i itself) that are matched to a point on the right side of point i. In the picture, \(w_{i}\) is the i-th white point from the right, and analogously \(b_{i}\) is the i-th black point from the right. At the locations specified by the dashed lines, we show the stack of the represented matching

Proposition 2

\(\pi \in {\mathcal {Z}}_J\) iff, for all \(1 \le i \le 2N\), the stack of \(\pi \) at i is either empty or monochromatic, i.e. if \(P_i(\pi ) \cap W = \varnothing \) or \(P_i(\pi ) \cap B = \varnothing \).

Proof

Suppose that for, some \(1 \le i \le 2N\), the stack of \(\pi \) at i is non-empty and non-monochromatic. Then there are \(p_w \in P_i(\pi ) \cap W\) and \(p_b \in P_i(\pi ) \cap B\) which are matched to \(q_b \in P_i^c(\pi ) \cap B\) and \(q_w \in P_i^c \cap W\), respectively. In the matching \(\pi '\) in which we swap these two pairs, we have \(k_{\pi '}(z) = k_{\pi }(z)-2\) for all \(\max (p_w,p_b)<z<\min (q_w,q_b)\), and \(k_{\pi '}(z) = k_{\pi }(z)\) elsewhere, thus, by Corollary 1, \(\pi \) cannot be optimal.

Viceversa, let \(\pi \) have only empty or monochromatic stacks. This means that, in a right neighbourhood of \(p_i\), the cardinality of the stack, which by definition coincides with \(k_\pi (x)\), is exactly given by \(k_\mathrm{LB}\). Furthermore, both these functions are constant on the intervals between the points (where they jump by \(\pm 1\)), so the two functions coincide everywhere on the domain. This means, by Corollary 1, that \(\pi \) must be optimal. \(\square \)

2.2 Enumeration of Optimal Matchings

We are now interested in enumerating the optimal matchings at \(p=1\) for a fixed configuration J of size N. First of all, we give an alternative representation of J (already adopted in [5]) that will be useful in the following. A configuration J can be encoded by sorting the 2N points in order of increasing coordinate (as in Definition 1 above), and defining

-

a vector of spacings \(\mathbf {s}(J) \in ({\mathbb {R}}^+)^{2N}\), given by \(\mathbf {s}(J)=(p_1,p_2-p_1,p_3-p_2,\ldots ,p_{2N}-p_{2N-1})\);

-

a vector of signs, \({\varvec{\sigma }}(J) \in \{-1,+1\}^{2N}\) such that if \(p_i\) is white (resp. black), \(\sigma _{i}=+1\) (resp. \(-1\)). Note that \(\sum _i \sigma _i = 0\).

It is easily seen that the criterium in Proposition 2 is stated only in terms of \({\varvec{\sigma }}(J)\). This makes clear that the set \({\mathcal {Z}}_J\) itself is fully determined by \({\varvec{\sigma }}(J)\). Thus, from this point onward, we understand that \({\mathcal {Z}}({\varvec{\sigma }})\), \(Z({\varvec{\sigma }})\) and \(S({\varvec{\sigma }})\) are synonims of the quantities \({\mathcal {Z}}_J\), \(Z_J\) and \(S_J\), for any J with \({\varvec{\sigma }}(J)={\varvec{\sigma }}\).

Binary vectors can be represented as lattice paths, i.e. paths in the plane, starting at the origin and composed by up-steps (or rises) \((+1,+1)\) and down-steps (or falls) \((+1,-1)\). So we have a bijection among zero-sum binary vectors \({\varvec{\sigma }}\) and lattice bridges, in which the i-th step of the path is \((i,\sigma _i)\). The bijection between color orderings, binary vectors and lattice paths is so elementary that in the following, with a slight abuse of notation, we will just identify the three objects.

We are interested in two classes of binary vectors / lattice paths:

-

Dyck bridges \({\mathcal {B}}_N\) of semi-lenght (size) N. These are lattice paths with an equal number of up- and down-steps. They are precisely in bijection with the color orderings of N white and N black points, i.e. with the color ordering of all the possible configurations J. There are \(B_N = |{\mathcal {B}}_N| = \left( {\begin{array}{c}2N\\ N\end{array}}\right) \) Dyck bridges of size N.

-

Dyck paths \({\mathcal {C}}_N\) of semi-length (size) N. These are lattice bridges that never reach negative ordinate (we will also call them excursions, in analogy with their continuum counterparts). They are in bijection with configurations J in the forementioned “Dyck excursions” ensemble. There are \(C_N = |{\mathcal {C}}_N| = \frac{1}{N+1} B_N\) Dyck paths of size N.

In the following, the generating function of the series \(B_N\) and \(C_N\) will turn useful. We have

Our notion of height will be associated to the steps of the path. We call \(h_{i}({\varvec{\sigma }})\) the height of the path at step i, that is, the height of the midpoint of the i-th step of the path, that in terms of the binary vector reads

The choice of the midpoint to compute the height is arbitrary, but has the advantage of being a symmetric definition with respect to reflections w.r.t. the x-axis, while taking values in an equispaced range of integers. We can then define \({\bar{h}}_i\) as the positive integers

Then we have

Lemma 1

Proof

The proof goes through the characterisation of the stacks of optimal configurations, given in Proposition 2. First of all, notice that the list of positions given by the condition \(h_i \sigma _i < 0\) is the one at which the stack at \(i-1\) decreases its size, because one point in the stack is paired to the i-th point. We shall call closing steps the elements of this set (and closing points the associated points in P), and opening steps those in the complementary set. Indeed, the cardinality of the stack at \(i-1\) is exactly \({\bar{h}}_i\), while the sign of \(h_i\) determines the colour of the points in the stack at \(i-1\) (which is also the colour of the stack at i, unless the latter is empty). So, there are exactly \({\bar{h}}_i\) choices for the pairing at i, while, if i is not in the list above, the choice is unique. As the cardinalities of the stacks are the same for all optimal configurations, the choice at i does not affect the number of possible choices at \(j>i\), and we end up with Eq. (18). \(\square \)

Notice that Eq. (18) is trivially equivalent to

The proof above has a stronger implication. Write each optimal matching \(\pi \in Z_j\) in the form \(\pi =((i_1,j_1),\ldots ,(i_N,j_N)\}\) where \(i_a\) and \(j_a\) are in the range \(\{1,\ldots ,2N\}\), and are the indices of the white and black points (altogether), ordered from left to right. Order the pairs canonically, by setting \(i_a<j_a\) for all \(a=1,\ldots ,N\), and the \(i_a\)’s in increasing order. Observe that, calling \(\varvec{i}(\pi )\) the list of the \(i_a\)’s in the resulting order, we have that all the optimal matchings have the same list \(\varvec{i}\) (and no non-optimal matching has this same list). Then, call \(\varvec{j}(\pi )\) the list of the \(j_a\)’s, in the order induced by the \(i_a\)’s. Finally, order the set \(Z_J\) according to the lexicographic order of the strings \(\varvec{j}(\pi )\).

Despite the fact that, as is apparent from Lemma 1, the typical values of \(Z_J\) are potentially at least exponential in N, we can construct the m-th of the \(Z_J\) solutions (in the order above) by a polynomial-time algorithm (which takes on average time \(\sim N \log N\) and space \(\sim \sqrt{N}\) if the suitable data structure is used). The algorithm goes as follows. First, rewrite m in the form \(m-1=a_1 + a_2 {\bar{h}}_1 + a_3 {\bar{h}}_1 {\bar{h}}_2 + \cdots + a_N {\bar{h}}_1 {\bar{h}}_2 \cdots {\bar{h}}_{N-1}\), with \(0 \le a_j < {\bar{h}}_j\). Then, say that \(i(j)=i\) if the j-th closing point is \(p_i\). Now, produce the N pairs of the m-th optimal matching by pairing the closing points, in order of increasing j, by pairing this point to the \(a_j\)-th of the stack in \(i(j)-1\), when this is sorted in increasing order.

3 Statistical Properties of \(S_N\)

We are now interested in the statistical properties of the entropy

when \({\varvec{\sigma }}(J)\) is a random variable induced by some probability measure on the space of configurations J of 2N points, and N tends to infinity. The subscript N reminds us that we are at size \(|W|=|B|=N\).

In matching problems, the typical choices for the configurational probability measure are factorized over a measure on the spacings and a measure on the color orderings, i.e.

(see [5] for more details and examples). As \(S_N(J) = S_N({\varvec{\sigma }}(J))\), we can again forget about the spacing degrees of freedom, and study the statistics of \(S_N({\varvec{\sigma }})\) induced by some measure \(\mu _\mathrm{color}({\varvec{\sigma }})\). In particular, we will study the cases in which \({\varvec{\sigma }}\) is uniformly drawn from the set of Dyck paths, or uniformly drawn from the set of Dyck bridges.

3.1 Integral Formulas for the Integer Moments of \(S_N\) via Wiener Processes

It is well known (see Donsker’s theorem [12]) that lattice paths such as Dyck paths and bridges converge, as \(N\rightarrow \infty \) and after a proper rescaling, to Brownian bridges and Brownian excursions. Brownian bridges are Wiener processes constrained to end at null height, while Brownian excursions are Wiener processes constrained to end at null height and to lie in the upper half-plane. The correct rescaling of the steps of the lattice paths that highlights this convergence is given by \((+1,\pm 1) \rightarrow \left( +\frac{1}{N}, \pm \frac{1}{\sqrt{N}} \right) \).

These scalings suggest to consider a rescaled version of the entropy

In the limit \(N\rightarrow \infty \), the rescaled entropy will converge to an integral operator over Wiener processes

where \({\varvec{\sigma }}(x)\) is a Brownian bridge/excursion.

The integer moments of \(s[{\varvec{\sigma }}]\) can be readily computed as correlation functions of the Brownian process:

where \(\varDelta _k \subset {\mathbb {R}}^k\) is the canonical symplex \(\{0 = t_0< t_1< t_2< \cdots< t_k < t_{k+1} = 1\}\), and \({\mathcal {D}}_\mathrm{B/E}[{\varvec{\sigma }}]\) is the standard measure on the Brownian process of choice among bridges and excursions. The last integral is the probability that the Brownian process we are interested in starts and ends at the origin and visits the points \((t_1,x_1),\dots ,(t_k, x_k )\), while subject to its constraints.

Let us consider Brownian bridges first. In this case, the probability that a Wiener process travels from \((t_i,x_i)\) to \((t_f,x_f)\) is given by \({\mathcal {N}}( x_f-x_i | 2(t_f-t_i))\) where \({\mathcal {N}}(x | \sigma ^2 )\) is the p.d.f. of a centered Gaussian distribution with variance \(\sigma ^2\). The factor 2 comes from Donsker’s theorem, and is due to the fact that the variance of the distribution of the steps in the lattice paths is exactly 2. Thus, for Brownian bridges

where \(x_0=0\), \(x_{k+1}=0\) and the factor \(\sqrt{4 \pi }\) is a normalization, so that

Brownian excursions can be treated analogously using the reflection principle. In this case, the conditional probability that a Wiener process travels from \((t_i,x_i)\) to \((t_f,x_f)\) without ever reaching negative heights, given that it already reached \((t_i ,x_i )\), is given by \({\mathcal {N}}( x_f-x_i | 2 (t_f - t_i) ) - {\mathcal {N}}( x_f+x_i | 2 ( t_f -t_i ) )\) for \(x_{i,f} > 0\), while for \(x_{i}=0\) and \(x_f = x\) (or viceversa) it equals \(\frac{|x|}{2 (t_f -t_i )} {\mathcal {N}}(x| 2 (t_f -t_i ))\). Moreover, now all \(x_i\)’s are constrained to be positive. Thus, for Brownian excursions

In both cases, the Gaussian integrations on the heights \(x_i\) can be explicitly performed. First of all, we replace

Then, we treat the contact terms. In the case of bridges, the contact terms can be rewritten (under integration) as

where the hyperbolic sine term is discarded due to the parity of the rest of the integrand in the variables \(x_a\). In the case of excursions, the contact term instead reads

In both cases, we can expand the hyperbolic function in power-series, so that the integrations in the \(x_a\) variables are now factorized and of the kind

(in the case of excursions, a factor 1/2 must be added to take into account the halved integration domain).

Using these manipulations, the first two moments for both bridges and excursions can be analytically computed. We detail the computations in Appendix A. The results are:

where \(\gamma _E\) is the Euler–Mascheroni constant, and

The approach presented in this Section is simple in spirit, and allows to connect our problem to the vast literature on Wiener processes. Moreover, it is suitable for performing Monte Carlo numerical integration to retrieve the moments of \(s({\varvec{\sigma }})\).

In this Section we worked directly in the continuum limit. In the next Section, we provide a combinatorial approach that allows to recover the values of the first two moments in a discrete setting, and to compute finite-size corrections in the limit \(N\rightarrow \infty \).

3.2 Combinatorial Properties of the Integer Moments of \(S_N\) at Finite N

In this section, we introduce a combinatorial method to compute the moments of \(S_N({\varvec{\sigma }})\) in the limit \(N\rightarrow \infty \). This new approach allows to retain informations on the finite-size corrections.

The underlying idea is to reproduce Eq. (24) in the discrete setting for the variable \(S_N({\varvec{\sigma }})\), and to study its large-N behaviour using methods from analytic combinatorics.

We start again from

In the following, the superscript/subscript \(\mathrm T=E,B\) will stand for Dyck paths (excursions, E) and Dyck bridges (B) respectively, \(T_N = C_N,B_N\) and \({\mathcal {T}}_N = {\mathcal {C}}_N, {\mathcal {B}}_N\); we will mantain the notation unified whenever possible.

The k-th integer moment equals

where \({\mathcal {M}}^\mathrm{(T)}_N(t_1, \dots , t_c;{\bar{h}}_1, \dots , {\bar{h}}_c)\) is the number of paths of type \(\mathrm T\) that has closing steps at horizontal positions \(t_1, \dots t_c\), and at heights \(h_1 = \pm ({\bar{h}}_1 - 1/2), \dots , h_c = \pm ({\bar{h}}_c - 1/2)\).

The last equation reproduces, as anticipated earlier, Eq. (24) in the discrete setting. Notice that here we must take into account the multiplicities \(\nu _a\), while in the continuous setting we could just set \(c=k\) and \(\nu _a=1\) for all a, as the contribution from the other terms is washed out in the continuum limit. This suggests that, in this more precise approach, we will verify explicitly that the leading contributions in the large-N limit comes from the \(c=k\) term of Eq. (35).

In order to study Eq. (35), we take the following route. As this equation depends on N only implicitly through the summation range, and explicitly through a normalization, we would like to introduce a generating function

that will decouple the summation range over the variables \(t_{i+1}-t_i\). By singularity analysis [14], the asymptotic expansion for \(N \rightarrow \infty \) of \(M^\mathrm{(T)}_{N,k}\) will be then retrieved by the singular expansion of \(M_k^\mathrm{(T)}(z)\) around its dominant singularity.

We start by giving an explicit form for \({\mathcal {M}}^\mathrm{(T)}_N\) for Dyck paths and Dyck bridges.

Proposition 3

In the case of Dyck bridges, we have

where

is the number of unconstrained paths that start at (x, y) and end at \((x+a,y+b)\).

In the case of Dyck paths, we have

where

is the number of paths that start at (x, y), end at \((x+a,y+b)\) and never fall below height \(y-d\), and \(\theta (x)=1\) for \(x\ge 0\) and zero otherwise,

Notice that, while in general the \(\theta \) factors are necessary for the definition of \(C_{a,b,d}\) in terms of \(B_{a,b}\), in our specific case they are all automatically satisfied, as \({\bar{h}}_a \ge 1\) for all \(1\le a \le c\).

Proof

Let us start by considering Dyck bridges. The idea is to decompose a path contributing to the count of \({\mathcal {M}}^\mathrm{(B)}_N (t_1,\ldots ,t_c;{\bar{h}}_1,\ldots ,{\bar{h}}_c)\) around its closing steps:

-

the first closing step starts at coordinate \(\left( t_1-1,\pm {\bar{h}}_1 \right) \). There are \(B_{t_1-1,{\bar{h}}_1} + B_{t_1-1,-{\bar{h}}_1} = 2B_{t_1-1,{\bar{h}}_1}\) different portions of path joining the origin to the starting point of the first closing step.

-

the a-th closing step happens \(t_a - t_{a-1}-1\) steps after the \((t-1)\)-th one, and, based on the relative sign of the heights of the two closing steps, their difference in height equals \({\bar{h}}_a - ({\bar{h}}_{a-1}-1)\) or \({\bar{h}}_a + ({\bar{h}}_{a-1}-1)\). Thus, there are \(B_{t_a - t_{a-1} -1, {\bar{h}}_a - ({\bar{h}}_{a-1}-1)} + B_{t_a - t_{a-1} -1, {\bar{h}}_a + ({\bar{h}}_{a-1}-1)}\) different portions of path connecting the two closing steps.

-

the last closing step happens \(2N-t_c\) steps before the end of the path and at height \(h_c = \pm \left( {\bar{h}}_c - \frac{1}{2} \right) \). Thus, there are \(B_{2N-t_c,{\bar{h}}_c-1}\) portions of path concluding the original path.

The product of the contribution of each subpath recovers Eq. (37).

The case of Dyck paths can be treated analogously, with a few crucial differences. In fact, each of the portions of path between the i-th and \((i+1)\)-th closing steps (which, for excursions, are just down-steps) has now the constraint that it must never fall below the horizontal axis, i.e. must never reach a height \(\left( {\bar{h}}_i -\frac{1}{2} \right) \) lower with respect to its starting step. Let us count these paths. A useful trick to this end is the discrete version of the reflection method, that we already used in Sect. 3.1. Call a the total number of steps, b the relative height of the final step with respect to the starting step, and c the maximum fall allowed with respect to the starting step. Moreover, call bad paths all paths that do not respect the last constraint. A bad path is characterized by reaching relative height \(-c-1\) at some point (say, the first time after s steps). By reflecting the portion of path composed of the first s steps, we obtain a bijection between bad paths and unconstrained paths that start at relative height \(-2(c+1)\), and reach relative height b after a steps. Thus, the total number of good paths \(C_{a,b,d}\) is given by subtraction as

This line of thought holds for all values of \(a,d > 0\) and \(b \ge -d\); if \(b < -d\) we just have \(C_{a,b,d} = 0\). Moreover, by properties of \(B_{a,b}\), \(C_{a,b,d}=0\) if \(a+b\) is not an even number.

Equation (39) can be easily established by decomposing a generic (marked) path around its closing steps, and by applying our result above. \(\square \)

The fact that we want to exploit now is that, while a given binomial factor \(B_{a,b}\) (and its constrained variant \(C_{a,b,d}\)) are not easy to handle exactly, their generating function in a have simple expressions, induced by analogously simple decompositions, that we collect in the following:

Proposition 4

where, as in (15),

Proof

To obtain Eq. (42), observe that a path going from (0, 0) to (a, b) with non-negative b can be uniquely decomposed as \(w = w_{0} u_{1} w_{1} u_{2} w_{2} \dots u_{h} w_{h}\) where \(u_{i}\) is the right-most up-step of w at height \(i-1/2\), and \(w_{i}\) is a (possibly empty) Dyck path, for all \(i=1,\dots ,h\), while \(w_{0}\) is a (possibly empty) Dyck bridge. Thus,

For negative b, the same reasoning holds with \(u_i\)’s replaced by down-steps, hence the absolute value on b in the result. Equation (42) then follows easily.

Equation (43) follows from \(C_{a,b,d} = \left( B_{a,b} - B_{a,b+2(d+1)} \right) \theta (b+d)\).

Equation (44) can be derived either as a special case of Eq. (43), or as a variation of (42) where \(w_0\) must be a Dyck path. The equivalence of these two decompositions is granted by the fact that \(C(z) = \left( 1-z C(z)^2 \right) B(z)\). \(\square \)

Let us introduce the symbol \(x=x(z)\) for the recurrent quantity

which, if used to parametrise the other relevant quantities, gives

Then, Eq. (36) reads

where

Proposition 5

Using x to denote x(z), we have that for bridges

and for excursions

Proof

First of all, we notice that

for some functions \(f_i\) that depend on the type of paths \(\mathrm T\) that we are studying. Thus, by performing the change of summation variables \(\{t_1,\ldots ,t_c,N\} \rightarrow \{\alpha _1, \ldots , \alpha _{c+1}\}\) such that

we have that

so that all summations are now untangled. Equations (51) and (52) can now be recovered by using the explicit form of the functions \(f_i\) for Dyck paths and Dyck bridges given in Proposition 3, and the analytical form for the generating functions given in Proposition 4. Again, notice that the \(\theta \) functions are all automatically satisfied as \({\bar{h}}_i \ge 1\) for all \(1 \le i \le c\). \(\square \)

At this point, we have obtained a quite explicit expression for \(M^\mathrm{(T)}_k(z)\). In the following sections, we will study the behaviour near the leading singularities of the quantities above, for the first two moments, i.e. \(k=1,2\). Higher-order moments require a more involved computational machinery that will be presented elsewhere.

3.2.1 Singularity Analysis for \(k=1\)

We start our analysis from the simplest case, \(k=1\), to illustrate how singularity analysis is applied in this context. We expect to recover Eq. (32). From now on, for simplicity, as the \({\bar{h}}\) indices are mute summation indices, we will call them simply h. We have

and

where \({{\,\mathrm{Li}\,}}_{s,r}(x) = \sum _{h\ge 1} h^{-s} \left( \log (h) \right) ^r x^h\) is the generalized polylogarithm function [13].

In both cases, the dominant singularity is at \(x(z)=1\), i.e. at \(z=1/4\). We have

and

where \({{\,\mathrm{L}\,}}(x) = \log \left( (1-x)^{-1} \right) \). Here and in the following, the rewriting of \({{\,\mathrm{Li}\,}}_{\alpha ,r}(x)\) (for \(-\alpha , r \in {\mathbb {N}}\)) in the form \(P({{\,\mathrm{L}\,}}(x))/(1-x)^{1-\alpha }\), with P(y) a polynomial of degree r, can be done either by matching the asymptotics of the coefficients in the two expressions (and appealing to the Transfer Theorem), or by using the explicit formulas in [14, Thm. VI.7]. In this paper we mostly adopt the first strategy. Passing to the variable z gives

Thus, the singular expansion of \(M^\mathrm{(T)}_1(z)\) is given by

and

The behaviour of \(T_N M^\mathrm{(T)}_{N,1} = [z^N] M_1 ^\mathrm{(T)} (z)\) for large N can be now estimated by using the so-called transfer theorem, that allows to jump back and forth between singular expansion of generating functions and the asymptotic expansion at large order of their coefficients (see [14], in particular Chapter VI for general informations, the table in Fig. VI.5 for the explicit formulas). The partinent result is also reported here in Appendix B, namely in Eq. (102). In practice, we can expand the approximate generating functions given in Eqs. (61) and (62) to get an asymptotic approximation for \(T_N M^\mathrm{(T)}_{N,1}\). Finally, using the asymptotic expansion for \(T_N\), i.e.

for \(N\rightarrow \infty \), we obtain an asymptotic expansion for the first moment of \(S_N\) (which agrees with what we already found in Eq. (32))

Notice that, although we have truncated our perturbative series at the first significant order, in principle the combinatorial method gives us access to finite-size corrections at arbitrary finite order.

3.2.2 Singularity Analysis for \(k=2\)

For \(k=2\), we compute Eq. (49) by studying separately terms at different values of c. Let us start from bridges. For \(c=1\), and thus \(\nu _1=2\), we have

while for \(c=2\), and thus \(\nu _1=\nu _2=1\), we have

The presence of the absolute value \(|h_2-h_1+1|\) forces us to consider separately the case \(h_1 > h_2\) and \(h_1 \le h_2\). In the first case we get

while in the second case we obtain

The combination of these two terms gives

In the case of excursions, the computations are completely analogous, and give

In order to compute the singular expansion of \({{\,\mathrm{Li}\,}}_{0,2}(x)\) and of \(\sum _{h\ge 1}( \log (h^2+h) \log (h!) x^h)\), one can again use the transfer theorem, obtaining

and

We see that, for both Dyck bridges and paths, the \(c=1\) term is subleading with respect to the \(c=2\) term, by a factor \(1-x\) which, after use of the Transfer Theorem, implies a factor \({\mathcal {O}}(N^{-1})\). It is easy to imagine (and in agreement with the discussion in Sect. 3.1) that this pattern will hold also for all subsequent moments, that is, the leading term for the k-th moment will be given by the \(c=k\) contribution alone, all other terms altogether giving a correction \({\mathcal {O}}(N^{-1})\).

After substituting x(z) with its expansion around \(z = 1/4\), and after having performed a series of tedious but trivial computations, we obtain

Finally, we recover the moments of \(s({\varvec{\sigma }})\)

Details for these computations can be found in Appendix B.

Top: distribution of s for the Dyck excursions (left) and Dyck bridges (right) ensembles at various sizes N. The shaded fillings are histograms, while the black profiles are kernel density estimates. Bottom: ratio between empirical and predicted values of the first two moments as a function of the size N, for both ensembles

4 Numerical Results

To check our analytical predictions, we performed an exact sampling of our configurations, and collected a statistics on the resulting entropies, from which we estimated the distribution of the rescaled entropy

For both bridges and excursions, and for values of \(N=10^3,10^4,10^5,10^6\), we sampled uniformly \(10^5\) random paths.

Figure 3 summarises our results. We clearly see that as N grows larger and larger, the prediction for the first two moments of s matches better and better the empirical value in both ensembles. The distribution of s is clearly non-Gaussian in the case of Dyck bridges. For Dyck excursions, a quick Kolmogorov test rules out the Gaussian hypothesis (in particular, more easily, the 4-th centered moment is only \(\sim 2.7\) times the 2-nd centered moment squared, instead of a factor 3 required for gaussianity). However, at the present statistical precision we cannot rule out the hypothesis that the centered distribution is symmetric, i.e. that all the centered odd moments vanish, although we have no theoretical argument for conjecturing this fact, neither from the probabilistic approach of Sect. 3.1, nor from the combinatorial approach of Sect. 3.2. It would be interesting to understand the reasons of this unexpected (to us) apparent symmetry in the distribution for Dyck excursions.

The code and the raw data used to produce Fig. 3 are available at https://github.com/vittorioerba/EntropyMatching.

Availability of data and material

Not applicable.

Notes

The ordered matching is the one in which the first white point from the left is matched with the first black point and so on, and is represented by the identity permutation \(\pi _\mathrm{ord}(i) = i\) if the points are sorted by increasing coordinate.

References

D’Achille, M.P.: Statistical properties of the Euclidean random assignment problem. Theses, Université Paris-Saclay (2020). https://tel.archives-ouvertes.fr/tel-03098672

Ambrosio, L., Gigli, N.: A User’s Guide to Optimal Transport. In: Ambrosio, L., Bressan, A., Helbing, D., Klar, A., Zuazua, E. (eds.) Modelling and Optimisation of Flows on Networks: Cetraro, Italy 2009, Editors: Benedetto Piccoli, Michel Rascle, Lecture Notes in Mathematics, pp. 1–155. Springer, Berlin, Heidelberg (2013). https://doi.org/10.1007/978-3-642-32160-3_1

Bobkov, S.G., Ledoux, M.: Transport Inequalities on Euclidean spaces with non-Euclidean metrics. J. Fourier Anal. Appl. 26(4), 60 (2020). https://doi.org/10.1007/s00041-020-09766-2

Boniolo, E., Caracciolo, S., Sportiello, A.: Correlation function for the Grid-Poisson Euclidean matching on a line and on a circle. J. Stat. Mech. 2014(11), P11023 (2014). https://doi.org/10.1088/1742-5468/2014/11/P11023

Caracciolo, S., D’Achille, M.P., Erba, V., Sportiello, A.: The Dyck bound in the concave 1-dimensional random assignment model. J. Phys. A 53(6), 064001 (2020). https://doi.org/10.1088/1751-8121/ab4a34

Caracciolo, S., D’Achille, M.P., Malatesta, E.M., Sicuro, G.: Finite size corrections in the random assignment problem. Phys. Rev. E 95, 052129 (2017). https://doi.org/10.1103/PhysRevE.95.052129

Caracciolo, S., D’Achille, M.P., Sicuro, G.: Random euclidean matching problems in one dimension. Phys. Rev. E 96, 042102 (2017). https://doi.org/10.1103/PhysRevE.96.042102

Caracciolo, S., D’Achille, M.P., Sicuro, G.: Anomalous scaling of the optimal cost in the one-dimensional random assignment problem. J. Statist. Phys. 174(4), 846–864 (2019). https://doi.org/10.1007/s10955-018-2212-9

Caracciolo, S., Di Gioacchino, A., Malatesta, E.M., Molinari, L.G.: Selberg integrals in 1D random Euclidean optimization problems. J. Stat. Mech. 2019, 063401 (2019). https://doi.org/10.1088/1742-5468/ab11d7

Caracciolo, S., Erba, V., Sportiello, A.: The p-Airy distribution. arXiv preprint arXiv:2010.14468 (2020)

Caracciolo, S., Sicuro, G.: On the one dimensional euclidean matching problem: exact solutions, correlation functions and universality. Phys. Rev. E 90, 042112 (2014). https://doi.org/10.1103/PhysRevE.90.042112

Donsker, M.D.: Justification and extension of Doob’s heuristic approach to the Kolmogorov-Smirnov theorems, pp. 277–281. The Institute of Mathematical Statistics (1952). https://doi.org/10.1214/aoms/1177729445

Fill, J.A., Flajolet, P., Kapur, N.: Singularity analysis, Hadamard products, and tree recurrences. J. Comput. Appl. Math. 174(2), 271–313 (2005). https://doi.org/10.1016/j.cam.2004.04.014

Flajolet, P., Sedgewick, R.: Analytic Combinatorics. Cambridge University Press, Cambridge; New York (2009)

Juillet, N.: On a solution to the Monge transport problem on the real line arising from the strictly concave case. SIAM J. Math. Anal. 52(5), 4783–4805 (2020). https://doi.org/10.1137/19M1277242

McCann, R.J.: Exact solutions to the transportation problem on the line. Proc. R. Soc. Lond. A 455(1984), 1341–1380 (1999). https://doi.org/10.1098/rspa.1999.0364

Mézard, M., Parisi, G.: Replicas and optimization. Le Journal de Physique - Lettres 46(17), 771–778 (1985). https://doi.org/10.1051/jphyslet:019850046017077100

Mézard, M., Parisi, G.: Mean-field equations for the matching and the travelling salesman problems. Europhys. Lett. 2(12), 913–918 (1986). https://doi.org/10.1209/0295-5075/2/12/005

Orland, H.: Mean-field theory for optimization problems. Le Journal de Physique - Lettres 46(17), 773–770 (1985). https://doi.org/10.1051/jphyslet:019850046017076300

Peyré, G., Cuturi, M.: Computational optimal transport: With applications to data science. Found. Trends Mach. Learn. 11(5–6), 355–607 (2019). https://doi.org/10.1561/2200000073

Funding

Open access funding provided by Universitá degli Studi di Milano within the CRUI-CARE Agreement. A. Sportiello is partially supported by the Agence Nationale de la Recherche, Grant Numbers ANR-18-CE40-0033 (ANR DIMERS) and ANR-15-CE40-0014 (ANR MetACOnc).

Author information

Authors and Affiliations

Contributions

All authors contributed equally to all phases of the research and writing process.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Code availability

Numerical code is available at https://github.com/vittorioerba/EntropyMatching.

Additional information

Communicated by Federico Ricci-Tersenghi.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

The First Two Moments of the Rescaled Entropy in the Integral Representation

In this Appendix we provide the computation for the first two moments of the rescaled entropy in the bridges ensemble, using the integral representation. The case of excursion can be treated analogously.

1.1 Useful Formulas

We start by providing some useful identities.

We start giving an explicit representation for the non-integer Gaussian moments:

In the following we shall also use the duplication formula for the Gamma function

and the expansion for the hyperbolic cosine

Finally, we recall the definition of the hypergeometric function

and the Euler identity

which implies that

1.2 First Moment

We wish to compute

First of all, we substitute \(\log x^2 \rightarrow x^{2k} \). We will later take the derivative in k and evaluate our expressions for \(k=0\) to obtain back the logarithmic contribution.

Thus, we start from

where \(\lambda = 4 t (1 - t)\) so that

Then,

where \(\psi _0 (z) = \frac{d}{d z} \log \varGamma (z) \) is the digamma function, and

Finally,

1.3 Second Moment

We wish to compute

Again, we substitute \(\log x^2 \rightarrow x^{2k_1}\) and \(\log y^2 \rightarrow y^{2k_2}\), and we will recover the correct logarithmic factors by taking derivatives in \(k_1\) and \(k_2\) later. We start by dealing with the contact term:

where the hyperbolic sine was discarded due to parity in x and y. With this substitution, the integrals in x and y are decoupled, and can be evaluated as

where

(we recall that \(t_0 =0\) and \(t_3 = 1\) by convention). We also notice that the following identity holds

as it can be explicitly verified by substituting the definitions.

By using the previous identity and Eq. (82), we can rewrite \(I_2 (t_1 ,t_2 ,k_1 ,k_2 )\) as

where in the last line we used the fact that \(\varGamma (-k) = k^{-1} + \dots \) as k goes to zero to highlight the linear dependence in \(k_1\) and \(k_2\) in the first term. Notice that

We can now take the derivative with respect to \(k_1\) and \(k_2\) and evaluate the expression in \(k_1 =k_2 =0\) to obtain \(I_2 (t_1 ,t_2 )\)

The second line is easily treated by noting that it is symmetric under \(t_1 \rightarrow 1- t_1\), giving

Thus, the variance of \(s[{\varvec{\sigma }}]\) is given by

Computations Needed in Sect. 3.2.2

In this Appendix, we present in greater detail the computations sketched in Sect. 3.2.2.

First of all, let us state the transfer theorem of singularity analysis in a slightly unprecise, but operatively correct form. See [14, Chapter VI] for the details.

Theorem 1

Let f(z), g(z) and h(z) be generating function with unit radius of convergence, and let \(f_n, g_n\) and \(h_n\) be their coefficients. Then

if and only if

In practice, one builds a standard scale of functions whose coefficient expansion is known, expands a generic generating function over the standard scale (assuming that the scale is rich enough to admit the generating function of interest in its linear span), and uses the transfer theorem to guarantee that an asymptotic equivalence at the level of the generating functions implies (and is implied) by an asymptotic equivalence of their coefficients. A standard scale adopted already in [14, Section VI.8], and which is rich enough for our purposes, is given by functions of the form

where \({{\,\mathrm{L}\,}}(x) = \log \left( (1-x)^{-1} \right) \). In this case, if \(\alpha \) is not a negative integer, the coefficients \(f_n\) satisfy

for \(n \rightarrow \infty \). See [14, Fig. VI.5] for the behaviour of subleading corrections.

1.1 Singular Behaviour of \({{\,\mathrm{Li}\,}}_{0,2}(x)\)

The coefficient of \({{\,\mathrm{Li}\,}}_{0,2}(x)\) equals \(\log ^2(h)\). It is easy to see, using [14, Fig. VI.5], that the singular expansion of \({{\,\mathrm{Li}\,}}_{0,2}(x)\) onto the standard scale must be made by the terms \((1-x)^{-1} L(x)^2, (1-x)^{-1} L(x), (1-x)^{-1}\) and higher-order terms. The coefficient of the expansion can be retrieved by basic linear algebra:

-

the coefficient of \((1-x)^{-1} L(x)^2\) must be 1 to reconstruct the \(\log (h)^2\) term;

-

the coefficient of \((1-x)^{-1} L(x)\) must be \(-2 \gamma _E\) to cancel the \(\log (h)\) term introduced by the first standard scale function;

-

the coefficient of \((1-x)^{-1}\) must be \(\zeta (2) + \gamma _E^2 = \pi ^2/6 + \gamma _E^2\) to cancel the constant terms introduced in the expansion by the previous standard scale functions.

The error term can be obtained by observing that all expansions used above are valid up to order \({\mathcal {O}}\left( h^{-1} \log h \right) \). Thus

1.2 Singular Behaviour of \(\sum _{h \ge 1} \left( \log (h^2+h) \log h! \right) x^{h}\)

The procedure is analogous to the computation for the \({{\,\mathrm{Li}\,}}_{0,2}(x)\). We just need to use Stirling’s approximation for \(\log h!\) to obtain the expansion of the coefficients in h. We have, for the coefficient of order k of this function,

so that the singular expansion must be made by the terms \((1-x)^{-2} L(x)^2, (1-x)^{-2} L(x), (1-x)^{-2}\), and higher-order ones. The coefficients can be found using the same strategy adopted for \({{\,\mathrm{Li}\,}}_{0,2}(x)\), obtaining

1.3 The Change of Variable x(z)

First of all, we rewrite the change of variable \(x(z) = C(z) -1\) in terms of the singular variables \(X = (1-x)^{-1}\) and \(Z = (1-4z)^{-1}\). We have that

so that

These relations are enough to convert singular expansions in X into singular expansions in Z at the leading algebraic order. The formulas of this subsection are useful, in principle, also at higher moments.

1.4 Explicit Expressions for \(M^\mathrm{(T)}_2(z)\)

We report here the explicit expression of \(M^\mathrm{(T)}_2(z)\) both as a function of x and as a function of z:

These expressions can be easily recalculated also with smaller error terms, repeating the procedure of the previous paragraphs with more terms in the Taylor expansions, and provide an evaluation of the variance of our quantities of interest, at the desired order in N.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Caracciolo, S., Erba, V. & Sportiello, A. The Number of Optimal Matchings for Euclidean Assignment on the Line. J Stat Phys 183, 3 (2021). https://doi.org/10.1007/s10955-021-02741-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-021-02741-1