Abstract

In this paper, we propose an explainable machine learning approach to model the claim frequency of a telematics car insurance dataset. We first use a data-driven method based on tree ensembles, namely the random forest, to build a claim frequency model. We then present a method to build a single tree that faithfully synthesizes the predictions of a tree ensemble model, such as a random forest or a gradient boosting model. This tree serves as a global explanation of the black-box predictions: thanks to this surrogate model, we can extract knowledge from a black-box tree ensemble. Finally, we apply this knowledge to improve a generalized linear model: integrating it into the model increases its predictive power.
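The pipeline summarized above (black-box ensemble, surrogate tree, enriched GLM) can be illustrated with the generic global-surrogate technique. This is a minimal sketch under stated assumptions, not the tree construction proposed in the paper: it fits one small tree to the predictions of a random forest, measures fidelity as the exposure-weighted \(R^2\) between the two sets of predictions, and reuses the surrogate's leaves as a categorical covariate in a Poisson GLM. It assumes scikit-learn and NumPy; the helpers `surrogate_pipeline` and `leaf_design`, the hyperparameters, and the exposure-weighted frequency formulation are hypothetical illustrative choices.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import PoissonRegressor
from sklearn.metrics import r2_score
from sklearn.tree import DecisionTreeRegressor


def leaf_design(surrogate, levels, X):
    """Hypothetical helper: one-hot matrix of the surrogate leaf containing each row of X."""
    leaf_ids = surrogate.apply(X)
    return (leaf_ids[:, None] == levels[None, :]).astype(float)


def surrogate_pipeline(X, claims, exposure, n_leaves=8, seed=0):
    """Hypothetical sketch: black-box forest -> global surrogate tree -> Poisson GLM."""
    freq = claims / exposure  # observed claim frequency per policy

    # 1) Black box: random forest on the observed frequency, weighted by exposure.
    forest = RandomForestRegressor(n_estimators=100, min_samples_leaf=50, random_state=seed)
    forest.fit(X, freq, sample_weight=exposure)
    black_box = forest.predict(X)

    # 2) Global surrogate: a single small tree trained to mimic the forest's predictions.
    surrogate = DecisionTreeRegressor(max_leaf_nodes=n_leaves, random_state=seed)
    surrogate.fit(X, black_box, sample_weight=exposure)
    fidelity = r2_score(black_box, surrogate.predict(X), sample_weight=exposure)

    # 3) Knowledge transfer: surrogate leaves become a categorical GLM covariate.
    levels = np.unique(surrogate.apply(X))
    Z = leaf_design(surrogate, levels, X)
    # A Poisson GLM on the frequency with exposure weights is equivalent (up to the
    # small ridge penalty) to a Poisson GLM on claim counts with a log(exposure) offset.
    glm = PoissonRegressor(alpha=1e-6, max_iter=1000)
    glm.fit(Z, freq, sample_weight=exposure)
    return forest, surrogate, glm, levels, fidelity
```

The fidelity score indicates how faithfully the surrogate reproduces the forest on the training data; `glm.predict(leaf_design(surrogate, levels, X_new))` then returns GLM frequency predictions for new policies.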

Acknowledgements

We thank the two anonymous reviewers for their careful reading of our manuscript and their insightful and relevant suggestions.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix

A Results on partitions

Proof

The proof is organized in two parts. First, we prove that the union of the elements of \(\tilde{{\mathcal {R}}}_g\) covers \({\mathbb {R}}^p\), i.e.,

- For each \(x \in {\mathbb {R}}^p\), there exist \(i \in \{1,\dots,I_1\}\) and \(k \in \{1,\dots,I_2\}\) such that \(x \in \tilde{R}_{1, i} \cap \tilde{R}_{2, k}\).

Subsequently, we prove that the elements of \(\tilde{{\mathcal {R}}}_g\) are pairwise disjoint, i.e.,

- If \(\tilde{R}_{g, 1}, \tilde{R}_{g, 2} \in \tilde{{\mathcal {R}}}_g\) and \(\tilde{R}_{g, 1} \ne \tilde{R}_{g, 2}\), then \(\tilde{R}_{g, 1} \cap \tilde{R}_{g, 2} = \emptyset \).

Let \(x \in {\mathbb {R}}^p\). Since \(\tilde{{\mathcal {R}}}_1\) is a partition of \({\mathbb {R}}^p\), there exists \(i \in \{1,\dots,I_1\}\) such that \(x \in \tilde{R}_{1, i}\). Likewise, since \(\tilde{{\mathcal {R}}}_2\) is a partition of \({\mathbb {R}}^p\), there exists \(k \in \{1,\dots,I_2\}\) such that \(x \in \tilde{R}_{2, k}\). Hence, \(x \in \tilde{R}_{1, i} \cap \tilde{R}_{2, k}\); in particular, \(\tilde{R}_{1, i} \cap \tilde{R}_{2, k} \ne \emptyset \). This proves the first point.

Now, suppose that \(\tilde{R}_{g, 1} \in \tilde{{\mathcal {R}}}_{g}\) and \(\tilde{R}_{g, 2} \in \tilde{{\mathcal {R}}}_{g}\). There exist \(i_1, i_2 \in \{1,\dots,I_1\}\) and \(k_1, k_2 \in \{1,\dots,I_2\}\) such that \( \tilde{R}_{g, 1} = \tilde{R}_{1, i_1} \cap \tilde{R}_{2, k_1} \) and \( \tilde{R}_{g, 2} = \tilde{R}_{1, i_2} \cap \tilde{R}_{2, k_2} \), where \(\tilde{R}_{1, i_1}, \tilde{R}_{1, i_2} \in \tilde{{\mathcal {R}}}_{1}\) and \(\tilde{R}_{2, k_1}, \tilde{R}_{2, k_2} \in \tilde{{\mathcal {R}}}_{2}\). Suppose that \(\tilde{R}_{g, 1} \cap \tilde{R}_{g, 2} \ne \emptyset \). Then there exists \(x \in {\mathbb {R}}^p\) such that

\[ x \in \tilde{R}_{g, 1} \cap \tilde{R}_{g, 2} = \left(\tilde{R}_{1, i_1} \cap \tilde{R}_{1, i_2}\right) \cap \left(\tilde{R}_{2, k_1} \cap \tilde{R}_{2, k_2}\right). \]

It means that \(x \in \tilde{R}_{1, i_1} \cap \tilde{R}_{1, i_2}\) and hence that \(\tilde{R}_{1, i_1} \cap \tilde{R}_{1, i_2} \ne \emptyset \). However, \(\tilde{{\mathcal {R}}}_1\) is a partition, so its elements are pairwise disjoint. Therefore, the only way that \(\tilde{R}_{1, i_1} \cap \tilde{R}_{1, i_2} \ne \emptyset \) is that \(\tilde{R}_{1, i_1} = \tilde{R}_{1, i_2}\). The same argument applied to \(\tilde{R}_{2, k_1} \cap \tilde{R}_{2, k_2}\) gives \(\tilde{R}_{2, k_1} = \tilde{R}_{2, k_2}\). It follows directly that \(\tilde{R}_{g, 1} = \tilde{R}_{g, 2}\). Hence, we have proved that if two elements of \(\tilde{{\mathcal {R}}}_g\) are not disjoint, they are equal; equivalently, two distinct elements of \(\tilde{{\mathcal {R}}}_g\) are disjoint. This concludes the proof. \(\square \)
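On a finite sample, the two properties established in this proof can be checked numerically. The sketch below is a simplification (the result above concerns partitions of \({\mathbb {R}}^p\)): each partition is represented by the index of the cell containing each point, every observed pair \((i, k)\) corresponds to one nonempty intersection \(\tilde{R}_{1, i} \cap \tilde{R}_{2, k}\), and empty intersections never appear. The helper names `intersect_partitions` and `is_partition` are hypothetical.

```python
from collections import defaultdict


def intersect_partitions(labels_1, labels_2):
    """Common refinement of two partitions of the same finite set of points.

    labels_1[j] (resp. labels_2[j]) is the index i (resp. k) of the cell of the
    first (resp. second) partition containing point j. Each returned cell is a
    nonempty intersection R_{1,i} ∩ R_{2,k}, keyed by the pair (i, k).
    """
    if len(labels_1) != len(labels_2):
        raise ValueError("both labelings must describe the same points")
    cells = defaultdict(set)
    for j, (i, k) in enumerate(zip(labels_1, labels_2)):
        cells[(i, k)].add(j)
    # Only pairs (i, k) observed on some point are created: empty intersections are dropped.
    return dict(cells)


def is_partition(cells, n_points):
    """Check the two properties of the proof: pairwise disjointness and covering."""
    seen = set()
    for cell in cells.values():
        # A cell must be nonempty and must not overlap any previously seen cell.
        if not cell or seen & cell:
            return False
        seen |= cell
    # Covering: every point 0..n_points-1 belongs to some cell.
    return seen == set(range(n_points))
```

For example, `intersect_partitions([0, 0, 1, 1, 2], [0, 1, 1, 1, 0])` yields the four cells `(0, 0): {0}`, `(0, 1): {1}`, `(1, 1): {2, 3}` and `(2, 0): {4}`, which together form a partition of the five points.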

B Exploratory data analysis

B.1 Exposure variable selection

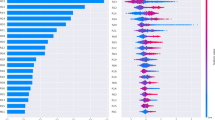

To visualize the relationships between the claim frequency and the explanatory variables, we plot the log claim frequency against each continuous policy predictor: License seniority, Age, and Age registration. These plots show immediately that there is no log-linear relationship between these variables and the target. Hence, we need to discretize the continuous predictors to capture the complex relationship between the predictors and the target. There is a clear trend for License seniority: the more experienced the driver, the lower the claim frequency. This trend is less obvious for Age and Age registration. This is why we choose to discretize our variables with the tree-based method described below.

Remark 14

The dataset contains very few observations. For the highest values of the continuous predictors, the claim frequency is sometimes zero because no claim was reported. Since the logarithm of zero is undefined, we remove these points from Fig. 3. Moreover, the Kwatt variable has too many zeros to be useful in a scatter plot; we therefore represent only its discretized version.
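The preprocessing described in this remark can be sketched as follows. We assume (this is not specified here) that the plotted frequency for each distinct value of a predictor is the total number of claims divided by the total exposure at that value. Values with no reported claim have a zero frequency and are dropped, since their logarithm is undefined. The function name `log_frequency_by_value` is hypothetical.

```python
import numpy as np


def log_frequency_by_value(x, claims, exposure):
    """Empirical log claim frequency for each distinct value of a predictor.

    Assumes frequency = total claims / total exposure per value; values with
    zero claims are removed because log(0) is undefined.
    """
    values, idx = np.unique(np.asarray(x), return_inverse=True)
    total_claims = np.bincount(idx, weights=np.asarray(claims, dtype=float))
    total_exposure = np.bincount(idx, weights=np.asarray(exposure, dtype=float))
    freq = total_claims / total_exposure
    keep = freq > 0  # drop points whose log frequency is undefined
    return values[keep], np.log(freq[keep])
```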

C Grid search


Cite this article

Maillart, A. Toward an explainable machine learning model for claim frequency: a use case in car insurance pricing with telematics data. Eur. Actuar. J. 11, 579–617 (2021). https://doi.org/10.1007/s13385-021-00270-5
