Abstract

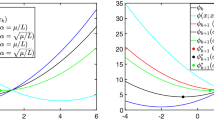

This paper investigates online algorithms for smooth time-varying optimization problems, focusing first on methods with constant step-size, momentum, and extrapolation-length. Assuming strong convexity, precise results for the tracking iterate error (the limit supremum of the norm of the difference between the optimal solution and the iterates) for online gradient descent are derived. The paper then considers a general first-order framework, where a universal lower bound on the tracking iterate error is established. Furthermore, a method using “long-steps” is proposed and shown to achieve the lower bound up to a fixed constant. This method is then compared with online gradient descent for specific examples. Finally, the paper analyzes the effect of regularization when the cost is not strongly convex. With regularization, it is possible to achieve a non-regret bound. The paper ends by testing the accelerated and regularized methods on synthetic time-varying least-squares and logistic regression problems, respectively.
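As a rough illustration of the tracking iterate error for online gradient descent with a constant step-size, the sketch below runs the method on a synthetic strongly convex quadratic whose minimizer moves a fixed distance at every step. Everything in it is an assumption made for illustration and is not taken from the paper: the function name `online_gradient_descent`, the diagonal quadratic cost, the drift model, and the parameters `mu`, `L`, and `sigma`. The bound it returns is the standard contraction estimate `sigma / (1 - rho)`, not the paper's precise result.

```python
import math
import random


def online_gradient_descent(mu=1.0, L=10.0, sigma=0.1, steps=500, dim=5, seed=0):
    """Track the minimizer of f_t(x) = 0.5 * sum_i eigs[i] * (x[i] - c_t[i])**2.

    Hypothetical setup: the Hessian eigenvalues lie in [mu, L] and the
    minimizer c_t moves by exactly sigma (in norm) between time steps.
    """
    rng = random.Random(seed)
    eigs = [mu + (L - mu) * i / (dim - 1) for i in range(dim)]
    alpha = 2.0 / (mu + L)  # constant step-size
    # Contraction factor of one gradient step on a mu-strongly convex, L-smooth cost.
    rho = max(abs(1.0 - alpha * mu), abs(1.0 - alpha * L))

    x = [0.0] * dim  # iterate
    c = [0.0] * dim  # current minimizer
    errors = []
    for _ in range(steps):
        # One gradient step on the current cost f_t.
        x = [xi - alpha * e * (xi - ci) for xi, e, ci in zip(x, eigs, c)]
        # The cost changes: shift the minimizer by sigma in a random direction.
        d = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        norm = math.sqrt(sum(di * di for di in d))
        c = [ci + sigma * di / norm for ci, di in zip(c, d)]
        # Distance between the iterate and the new minimizer.
        errors.append(math.dist(x, c))
    return errors, sigma / (1.0 - rho)


errors, bound = online_gradient_descent()
print(f"largest tracking error: {max(errors):.4f}, contraction bound: {bound:.4f}")
```

Under these assumptions each step satisfies `error_next <= rho * error + sigma`, so the error never exceeds `sigma / (1 - rho)` when the iterate starts at the minimizer. Random drift directions usually give errors well below that value, since the bound is only attained when the drift is adversarially aligned.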

Acknowledgements

All three authors gratefully acknowledge support from the NSF program “AMPS-Algorithms for Modern Power Systems” under Award # 1923298.

Additional information

Communicated by Jérôme Bolte.

About this article

Cite this article

Madden, L., Becker, S. & Dall’Anese, E. Bounds for the Tracking Error of First-Order Online Optimization Methods. J Optim Theory Appl 189, 437–457 (2021). https://doi.org/10.1007/s10957-021-01836-9

DOI: https://doi.org/10.1007/s10957-021-01836-9