Abstract

Given appropriate training, human observers typically demonstrate clear improvements in performance on perceptual tasks. However, the benefits of training frequently fail to generalize to other tasks, even those that appear similar to the trained task. A great deal of research has focused on the training task characteristics that influence the extent to which learning generalizes. However, less is known about what might predict the considerable individual variations in performance. As such, we conducted an individual differences study to identify basic cognitive abilities and/or dispositional traits that predict an individual’s ability to learn and/or generalize learning in tasks of perceptual learning. We first showed that the rate of learning and the asymptotic level of performance that is achieved in two different perceptual learning tasks (motion direction and odd-ball texture detection) are correlated across individuals, as is the degree of immediate generalization that is observed and the rate at which a generalization task is learned. This indicates that there are indeed consistent individual differences in perceptual learning abilities. We then showed that several basic cognitive abilities and dispositional traits are associated with an individual’s ability to learn (e.g., simple reaction time; sensitivity to punishment) and/or generalize learning (e.g., cognitive flexibility; openness to experience) in perceptual learning tasks. We suggest that the observed individual difference relationships may provide possible targets for future intervention studies meant to increase perceptual learning and generalization.

When human observers are given repeated training on a perceptual task, they typically demonstrate clear and sustained improvements in performance on the trained task itself (Dosher & Lu, 2017; Gibson & Gibson, 1955; Green, Banai, Lu, & Bavelier, 2018; Maniglia & Seitz, 2018; Sagi, 2011; Seitz, 2017; Watanabe & Sasaki, 2015). For example, if participants are repeatedly shown two intervals of moving dots and are asked whether the direction of motion in the two intervals was the same or was offset by 4°, participants show a clear increase in d' (sensitivity) over the course of training (e.g., Ball & Sekuler, 1982). Similarly, if participants are presented with a texture pattern composed of either all similarly oriented lines or the same basic pattern, but with one of the lines presented in a different orientation from the rest, participants typically show dramatic reductions in the minimum presentation duration that is required in order to differentiate these two types of patterns (Ahissar & Hochstein, 1997).

Although individuals usually do show improvements in performance on the very task on which they are trained, it is often the case that this training does not generalize to other tasks, even if they are highly similar to the trained task. For example, seemingly minor alterations to the size, direction, appearance, spatial or retinal location, or the orientation of stimuli can sometimes (but not always) result in performance dropping back to pretraining levels (Ahissar, 1999; Ahissar & Hochstein, 1993, 1997; Ball & Sekuler, 1982; Fahle & Morgan, 1996; Fiorentini & Berardi, 1981; Poggio, Fahle, & Edelman, 1991). This lack of generalization to even highly similar tasks, known as specificity of learning, is of clear theoretical interest, as it speaks to possible mechanisms underlying the perceptual learning process (Ahissar, Nahum, Nelken, & Hochstein, 2009; Lu & Dosher, 2009; Maniglia & Seitz, 2018; Shibata, Sagi, & Watanabe, 2014). It is also particularly problematic given the goal of applying perceptual learning to real-world situations (Deveau & Seitz, 2014). In order to have real-world value, it is typically necessary for the benefits of training to generalize across a variety of task parameters and situations. As such, researchers have attempted to disentangle the many factors that might contribute to whether learning on a perceptual task is more generalizable or specific.

Here, the vast majority of work has focused on factors inherent to training tasks that influence the degree of learning specificity that is observed. Such factors include training task difficulty (Ahissar, 1999; Ahissar & Hochstein, 1997; Liu & Weinshall, 2000), amount of stimulus variability (Deveau, Lovcik, & Seitz, 2014; Deveau & Seitz, 2014; Fulvio, Green, & Schrater, 2014; Wang, Zhang, Klein, Levi, & Yu, 2012; Xiao et al., 2008), duration of training (Jeter, Dosher, Liu, & Lu, 2010), training schedule (e.g., if sleep occurs between sessions; Karni, Tanne, Rubenstein, Askenasy, & Sagi, 1994), length of adaptive staircases (Hung & Seitz, 2014), the noise present in the training stimuli (DeLoss, Watanabe, & Andersen, 2015), the amount of top-down attentional control required (Byers & Serences, 2012), the type of response that is required (Green, Kattner, Siegel, Kersten, & Schrater, 2015), the concordance between training and transfer tasks (Snell, Kattner, Rokers, & Green, 2015), and the feedback given during training (Herzog & Fahle, 1997; Watanabe & Sasaki, 2015). Yet, while this previous work has elegantly demonstrated many ways in which perceptual training paradigms can be manipulated to push population-level learning outcomes toward greater or lesser specificity, considerably less work has examined the sizable variations in learning ability and generalization that exist across individuals (Baldassarre et al., 2012; Fahle & Henke-Fahle, 1996; Green et al., 2015; Schmidt & Bjork, 1992; Withagen & van Wermeskerken, 2009). 
Indeed, while the examination of individual-difference predictors (e.g., personality, motivation, cognitive abilities) of learning and generalization has been common in domains such as educational and IO psychology (Barrick & Mount, 1991; Blume, Ford, Baldwin, & Huang, 2010; Burke & Hutchins, 2007; Colquitt, LePine, & Noe, 2000; Grossman & Salas, 2011; Herold, Davis, Fedor, & Parsons, 2002; Holmes & Gathercole, 2014; Machin & Fogarty, 2003; Naquin & Holton III, 2002; Pugh & Bergin, 2006; Richardson & Abraham, 2009; Schultz, Alderton, & Hyneman, 2011; Titz & Karbach, 2014; Uttal, Miller, & Newcombe, 2013), the topic has largely been neglected in perceptual learning.

In fact, within the domain of perceptual learning, there is very little work on the even more fundamental question of whether the individual differences that have been observed to date in learning and/or generalization of learning represent meaningful patterns or idiosyncratic noise. It is certainly the case that before considering what individual-difference factors may influence “perceptual learning” or “perceptual generalization,” it is important to first demonstrate that such general abilities exist (otherwise questions about individual differences may be idiosyncratic to what individual perceptual learning task is considered). While the question of whether aspects of performance (e.g., initial ability, rate of learning, asymptotic level of performance) are correlated at the individual level across multiple perceptual learning tasks is a seemingly basic question, it has significant implications both for theory and for possible translational applications of perceptual learning. For instance, one recent theoretical framework has put forth that certain types of perceptual training (in particular, training with action video games) can result in enhancements in the general ability to learn new perceptual tasks (Bavelier, Bediou, & Green, 2018; Bejjanki et al., 2014). Such a framework requires that there is a general ability that underpins each individual’s ability to learn to perform new perceptual tasks, and that this ability can subsequently be enhanced through training. Another influential framework has argued that the behavioral results of perceptual learning studies can be used as a window into the potential underlying neural bases of the learning (Ahissar et al., 2009). For example, under this framework, highly stimulus-specific learning could be indicative of a low-level locus of learning. 
Therefore, if significant commonalities are seen across tasks (particularly tasks that often produce stimulus-specific learning), it would suggest that at least some portion of the learning is occurring in more stimulus and/or task general areas.

The only paper (to our knowledge) that has examined whether there is a common ability to learn to perform perceptual tasks is recent work by Yang et al. (2020), who had participants perform five visual perceptual learning tasks in addition to an auditory perceptual learning task and an N-back working memory learning task. While none of the individual pairwise correlations in the magnitude of learning between tasks was significant, a multivariate regression model fit across the entirety of the data set revealed a significant participant-level factor. This result is thus the strongest indication to date that individual differences in perceptual learning are not idiosyncratic noise, but do in fact represent (at least partially) a consistent difference in ability across participants. This group further found that a number of other individual difference measures, including those related to cognitive ability (e.g., IQ) and personality (e.g., neuroticism and agreeableness), were related to this general ability to perform perceptual learning tasks.

Although our research was conducted prior to the publication of Yang et al. (2020), and thus was not designed specifically in light of that work, our work both complements and extends their findings. Specifically, like Yang et al. (2020), we had participants complete multiple perceptual learning tasks (two, in our case—motion and texture discrimination) along with a battery of possible cognitive and personality/dispositional predictors. Our study, however, extended their work in a number of ways. In particular, we assessed both learning and generalization to new stimuli on the same task after learning (e.g., train on a motion task with dots moving in one direction; test generalization on the same motion task with dots moving in another direction, which has often been associated with specificity). To this end, our training tasks were chosen because they induce both significant individual differences in learning of the core training task and in the degree to which that learning generalizes to new stimuli. This, in turn, allowed us to assess not just whether there is a global ability to learn new perceptual tasks, but also whether there is an independent ability to transfer learning to new tasks. Furthermore, our methods and analytic approach allowed for a finer-grained measure of the perceptual learning curves and potential commonalities across tasks. Specifically, Yang et al.’s (2020) training tasks utilized adaptive staircases. While such adaptive staircases have a host of benefits, they are not ideal for measuring fine-grained details of learning because they necessarily confound time and task difficulty. Staircase methods also provide metrics of performance that are aggregations over many trials, thus “initial performance” is not truly an estimate of performance on Trial 1, but rather an estimate of performance on Block 1 which aggregates over hundreds of trials. 
Our training task design instead allowed for time-continuous (at the trial-by-trial level) estimates of participant performance.

Given our full design, we thus sought to assess (a) whether there are significant correlations across two different perceptual learning tasks in terms of initial ability, learning rate, and/or asymptotic performance; (b) whether there are significant correlations in the ability to generalize perceptual learning to new stimuli (above and beyond what could be explained by differences in initial learning); and, finally, (c) whether any individual difference-level traits (e.g., cognitive, personality) were related to these outcomes.

Method

Participants

A total of 35 University of Wisconsin-Madison undergraduate students (23 females, 12 males), ranging in age from 18 to 33 years (M = 20.5, SD = 2.9), were recruited via posted advertisements and received $60 for completing the study. Six participants were ultimately excluded either for not completing the tasks as instructed (two), or for having sufficiently poor performance that the key dependent measures could not be appropriately computed on at least one training task (four), leaving a total of 29 participants in the final analysis. This sample size was chosen based upon our primary research questions (i.e., whether there is a general ability that underlies perceptual learning and/or learning generalization across multiple perceptual learning tasks). As there is no “gold standard” for how strong correlations need to be in order to indicate this type of common underlying ability, we chose to be powered to detect measures sharing approximately 25% of variance.
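As a rough check on this target, sharing 25% of variance corresponds to a correlation of |r| = .5. The sketch below applies the standard Fisher z approximation for the sample size needed to detect a correlation of that size. The two-tailed α of .05 and, in particular, the 80% power level are assumptions made for illustration; they are not stated in the text above.

```python
import math
from statistics import NormalDist


def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate n needed to detect a correlation r (Fisher z method).

    alpha is two-tailed; power = 0.80 is an assumed convention,
    not a value taken from the study.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    fisher_z = math.atanh(r)  # Fisher z-transform of the target correlation
    return ((z_alpha + z_beta) / fisher_z) ** 2 + 3


shared_variance = 0.25
r_target = math.sqrt(shared_variance)  # 25% shared variance -> |r| = 0.5
n_required = n_for_correlation(r_target)
```

Under these assumptions, `n_required` comes out at approximately 29 participants.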

Study overview

The study took place over the course of four sessions (90 minutes each; always on separate days) that were scheduled within no more than 10 total days (see below and Supplemental Materials for a full description of the tasks and design). On the first day, participants completed several computerized tasks examining basic cognitive abilities as well as a number of trait/personality questionnaires. On the second day, they completed three blocks of training on one of two possible perceptual learning tasks. On the third day, they completed a fourth training block on the same perceptual training task from the day before, a generalization block on that task, and then three blocks of training on the second perceptual learning task. Finally, on the fourth day, they completed a fourth block of training on the second perceptual learning task as well as a generalization block on that task. They finished the study by completing a number of other questionnaires (see Supplemental Materials for full order details).

Apparatus

Across the four sessions of the study, participants completed both pen-and-paper questionnaires as well as computerized tasks. The computerized tasks were created and controlled using MATLAB and the Psychophysics Toolbox (PTB-3; Brainard, 1997; Kleiner, Brainard, & Pelli, 2007). All tasks were performed in a dimly lit testing room on a Dell OptiPlex 780 computer with a 23-inch flat-screen monitor with an unrestrained viewing distance of approximately 60 cm. All responses were made via manual button press on the keyboard, or with the computer mouse. Participants received instructions prior to each task and completed practice trials under the supervision of the experimenter, after which they completed the remaining trials on their own.

Stimuli and design

Perceptual learning/generalization tasks

Dot-motion task

In brief, on each trial of this task participants viewed a 100 ms presentation of 50 black dots presented within a gray circular aperture moving at 1 degree per second. The direction of the dots was drawn from a uniform distribution between 105 and 165 degrees (i.e., 135 degrees ± 30 degrees; see Green et al., 2015; Kattner, Cochrane, Cox, Gorman, & Green, 2017a; Kattner, Cochrane, & Green, 2017b; Snell et al., 2015, for use of this type of stimulus generation). Following a 500 ms mask consisting of randomly moving dots, participants were asked to indicate whether the dots were moving more vertically (up arrow key) or horizontally (down arrow key; note that piloting suggested that the “up/down” explanation of the task response, rather than the “clockwise/counterclockwise” explanation, produced better understanding and compliance with the task goals). After each response, participants received a feedback tone that informed them whether they were correct.
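The stimulus-generation rule can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: the function names are hypothetical, and the angle convention (90°/270° as the vertical axis, 0°/180° as the horizontal axis) is an assumption.

```python
import random


def sample_direction(center_deg=135.0, half_width_deg=30.0, rng=random):
    """Draw one motion direction uniformly from center +/- half_width."""
    return rng.uniform(center_deg - half_width_deg, center_deg + half_width_deg)


def correct_response(direction_deg):
    """Return 'vertical' or 'horizontal', whichever axis is closer.

    Assumes 90/270 deg is the vertical axis and 0/180 deg the horizontal
    axis; this convention is an assumption, not taken from the paper.
    """
    a = direction_deg % 180.0
    dist_to_horizontal = min(a, 180.0 - a)
    dist_to_vertical = abs(a - 90.0)
    return "vertical" if dist_to_vertical < dist_to_horizontal else "horizontal"
```

Because the rule depends only on distance to the nearest axis, the same function would apply to stimuli centered on a different oblique direction.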

Participants first completed five practice trials of the task and then completed three training blocks of 200 trials each within a single session. In the following session, participants completed one final training block and then completed a transfer block of 200 trials. The transfer block was identical to the training blocks with the exception that the direction of dot motion was now centered on 225 degrees, and no feedback was provided. The training and transfer tasks were counterbalanced across participants such that a subset of the sample received training on the 135° stimuli, as described above, and the remaining participants received training on the 225° stimuli.

Texture task

The texture task was adapted from Ahissar and Hochstein (1997). On each trial an orienting tone was played for 500 ms, followed by a variable delay of between 1,000 and 2,000 ms. After the delay, a 7 × 7 item matrix of black lines was presented in the center of the screen. On half of the trials, all 49 lines were presented at a 16° angle (“same” trials), whereas on the other half of trials one of the 49 lines was presented at a 36° angle (“different” trials). These lines appeared on the screen for a variable stimulus-to-mask stimulus-onset asynchrony (SOA; 16, 30, 90, 120, 300, or 500 ms), after which they were replaced with a mask that remained on the screen until the participant made a response (“same” or “different”). Participants received feedback displayed on the screen (“Correct” or “Incorrect”) following each trial.

Participants completed six practice trials at an SOA of 700 ms to familiarize themselves with the task, and then completed three training blocks of 210 trials (i.e., 15 repetitions of each combination of SOA and same/different) within a single session. In the following session, participants completed one final training block, and then completed a generalization block of 140 trials. The generalization block was identical to the training blocks, with the exception that the lines were now oriented at a 106° angle, with the odd lines oriented at a 126° angle, and no feedback was provided. The training and generalization tasks were counterbalanced across participants such that a subset of the sample received training on the 16° and 36° angle stimuli, as described above, and the remaining participants received training on the 106° and 126° angle stimuli.

Cognitive predictor battery

Given the relative paucity of work on individual-level predictors of perceptual learning and/or perceptual learning generalization, the tasks utilized in our cognitive predictor battery were chosen because they represent a variety of constructs (e.g., reaction time, working memory capacity, visual attention) that have frequently been implicated in individual learning or generalization differences in other domains (e.g., education or job-related performance). A detailed description of each task can be found in the Supplementary Materials.

To assess reaction time (RT), a factor that has often been associated with learning and learning generalization (Edwards, Ruva, O’Brien, Haley, & Lister, 2013; Green, Pouget, & Bavelier, 2010; Heppe, Kohler, Fleddermann, & Zentgraf, 2016; Ross et al., 2016; Schubert et al., 2015), we employed three tasks: a simple go task (press a button as soon as a stimulus appears), a simple discrimination task (an arrow appears pointing left or right, press the corresponding arrow key), and a three-alternative forced-choice (3AFC) discrimination task (one of three boxes lights up on the screen; press the corresponding button). For all three RT tasks, a recursive trial-by-trial outlier rejection procedure was performed in order to remove trials on which RT was excessively long (i.e., greater than three standard deviations from the mean), after which average RT across the remaining valid trials was used as the dependent measure.
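A minimal sketch of a recursive outlier-rejection procedure of this kind is given below. Several details are assumptions: only excessively long RTs are removed, the sample standard deviation is used, and all trials above the cutoff are dropped on each pass (the procedure could equally remove one trial at a time). The function names are hypothetical.

```python
from statistics import mean, stdev


def reject_slow_outliers(rts, n_sd=3.0):
    """Repeatedly drop RTs above mean + n_sd * SD until none remain."""
    kept = list(rts)
    while len(kept) > 2:
        cutoff = mean(kept) + n_sd * stdev(kept)
        trimmed = [rt for rt in kept if rt <= cutoff]
        if len(trimmed) == len(kept):
            break  # no trial exceeded the cutoff on this pass
        kept = trimmed
    return kept


def mean_valid_rt(rts):
    """Dependent measure: mean RT over the trials that survive rejection."""
    return mean(reject_slow_outliers(rts))
```

The mean and standard deviation are recomputed after each pass, so a single extreme trial cannot mask a second, less extreme one.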

Cognitive flexibility was assessed using a task-switching paradigm. Learning and learning generalization have previously been shown to relate to cognitive flexibility, particularly the ability to task-switch and/or multi-task (Glass, Maddox, & Love, 2013). In our task-switching measure, participants were asked to classify digits as higher/lower than 5 or odd/even, depending on instructions presented on the screen (Rogers & Monsell, 1995). Performance was measured by examining the average reaction time (for correct trials only) for switch and nonswitch trials separately. Additionally, switch costs were calculated as the difference in RT for nonswitch and switch trials (smaller switch costs/faster RTs = better cognitive flexibility).
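The switch-cost computation can be sketched as below. The trial format (a list of `(is_switch, is_correct, rt_ms)` tuples) is a hypothetical layout chosen for illustration, and the sign convention (switch minus nonswitch) is an assumption.

```python
from statistics import mean


def switch_cost(trials):
    """Compute mean RTs and switch cost from correct trials only.

    trials: list of (is_switch, is_correct, rt_ms) tuples
    (a hypothetical format, not the study's data structure).
    Returns (mean switch RT, mean nonswitch RT, switch cost).
    """
    switch_rts = [rt for is_switch, ok, rt in trials if ok and is_switch]
    stay_rts = [rt for is_switch, ok, rt in trials if ok and not is_switch]
    switch_mean, stay_mean = mean(switch_rts), mean(stay_rts)
    return switch_mean, stay_mean, switch_mean - stay_mean
```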

A filtering task, adapted from Ophir, Nass, and Wagner (2009), was used to measure selective attention. Like cognitive flexibility, selective attention has also been commonly observed to relate to learning abilities and, as such, is a frequent target for cognitive training (Bavelier et al., 2018; Edwards et al., 2013). In this task, participants determined whether a set of target lines changed orientation from one presentation to another while simultaneously ignoring distractor lines. Performance was measured by calculating a sensitivity score (hits − false alarms) as a function of distractor set size (2 or 10; less reduction in sensitivity with increasing distractors = better selective attention).
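As an illustration, the sensitivity score and its change across distractor set sizes might be computed as follows. This sketch assumes that "hits − false alarms" refers to the hit rate minus the false-alarm rate; the function names and count-based inputs are hypothetical.

```python
def sensitivity(hits, misses, false_alarms, correct_rejections):
    """Hit rate minus false-alarm rate for one distractor set size."""
    hit_rate = hits / (hits + misses)
    fa_rate = false_alarms / (false_alarms + correct_rejections)
    return hit_rate - fa_rate


def filtering_cost(sens_few_distractors, sens_many_distractors):
    """Drop in sensitivity from the small to the large distractor set.

    Smaller values indicate better selective attention.
    """
    return sens_few_distractors - sens_many_distractors
```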

Working memory abilities have been consistently tied to learning outcomes, at least partially by virtue of the links between working memory and fluid intelligence (Bergman Nutley & Söderqvist, 2017; Karbach & Unger, 2014). As such, we employed an operation span (OSPAN) task that was adapted from Turner and Engle (1989) to assess working memory. An OSPAN score was calculated in two ways: a “harsh” measure of the total number of letters correctly recalled in order, and a “lenient” measure of the total number of letters recalled, regardless of order. The final OSPAN score was the average of the harsh and lenient measures.
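The two scoring rules can be sketched as below. The exact scoring is an assumption: here the harsh score counts a letter only when it is recalled in its original serial position, the lenient score counts any presented letter that appears in the recall, and letters are assumed not to repeat within a set.

```python
def ospan_scores(presented_sets, recalled_sets):
    """Return (harsh, lenient, final) OSPAN scores.

    presented_sets / recalled_sets: lists of letter lists, one per set
    (a hypothetical data layout).
    """
    harsh = 0
    lenient = 0
    for presented, recalled in zip(presented_sets, recalled_sets):
        # Harsh: a letter counts only if recalled in its serial position.
        harsh += sum(p == r for p, r in zip(presented, recalled))
        # Lenient: a letter counts if it appears anywhere in the recall.
        lenient += len(set(presented) & set(recalled))
    return harsh, lenient, (harsh + lenient) / 2
```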

Fluid intelligence is likely the most widely noted individual difference level predictor of learning in many domains, including in education (where the construct in many ways originated; e.g., Binet & Simon, 1916; Ritchie & Tucker-Drob, 2018; Rohde & Thompson, 2007). As such, we utilized a subset of the Raven’s Advanced Progressive Matrices (RAPM) to measure this construct. Performance was measured by taking the total number of items out of 18 that were correctly answered.

The final cognitive predictor task, adapted from Kornell and Bjork (2008), was used to assess complex learning. In this task, called the painting task, participants learned associations between painting styles and artist names. They were then shown a new set of paintings and asked to identify the artist. As such, this task requires participants to generalize complex perceptual experience to new stimuli. Accuracy for correctly identifying the artist was used as an index of performance on the task.

Dispositional/lifestyle habits predictor battery

In addition to the battery of cognitive tasks, participants were asked to complete several measures designed to assess dispositional traits (e.g., personality), as well as lifestyle factors. The goal of the full dispositional/lifestyle habits predictor battery was to capture key traits that previous research has indicated are predictive of learning outcomes, including those related to personality, sensitivity to reward and punishment, motivation and persistence, and use of modern media (e.g., Barrick & Mount, 1991; Blume et al., 2010; Burke & Hutchins, 2007; Large et al., 2019; Richardson & Abraham, 2009; Schultz et al., 2011). Details for each measure can be found in the Supplementary Materials.

In total, we included five different measures of personality and related dispositional factors. To measure the five dimensions of the Big Five personality model, we employed the NEO-PI Big Five Questionnaire (Costa & McCrae, 1992). Approach and avoidant behavioral tendencies were assessed using the BIS/BAS measurement tool (Carver & White, 1994). The Sensitivity to Reward/Sensitivity to Punishment Questionnaire (SPSRQ; Torrubia, Avila, Moltó, & Caseras, 2001) was used to assess sensitivity to both reward and punishment. To measure individual differences in cognitive breadth, we used a global/local shape task adapted from Kimchi and Palmer (1982). Finally, we assessed trait positive (PA) and negative (NA) affect using the Positive and Negative Affect Schedule (PANAS; Watson, Clark, & Tellegen, 1988).

Additionally, we included two measures of motivation. We used a persistence scale, adapted from Ventura, Shute, and Zhao (2013), to measure the tendency to persevere even in the face of great difficulty. We also included a measure of grit, which is defined as “perseverance and passion for long-term goals” (Duckworth, Peterson, Matthews, & Kelly, 2007).

Lastly, we included a Media Multitasking Index (MMI) adapted from Ophir et al. (2009). Although we also assessed video game experience, none of the participants were experienced gamers, and thus data from this questionnaire were not analyzed further.

Results

Analytical methods for perceptual learning/generalization tasks

Performance on each of the perceptual learning tasks was fit as a hierarchical continuous-time evolving regression (motion = logistic; texture = Weibull; Kattner, Cochrane, Cox, et al., 2017a; Kattner, Cochrane, & Green, 2017b), via the R package brms (Bürkner, 2017; see additional details in Supplementary Materials). This allowed us to parameterize performance (i.e., as a starting value, an asymptotic value, and an amount of time to change) in the threshold of the appropriate psychometric function (note that lower threshold values indicate better performance on both tasks, and lower rate parameters indicate faster learning; i.e., fewer trials required to achieve learning). Decomposition of perceptual learning into these dimensions not only allows clarity in tests of individual differences across tasks, but also facilitates a more mechanistically grounded account of cross-participant variation (Ackerman & Cianciolo, 2000; Kattner, Cochrane, Cox, et al., 2017a). All bootstrapped models were fit with the R package TEfits (Cochrane, 2020; see additional details in Supplementary Materials).
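To make the three-parameter decomposition concrete, the sketch below shows one way a threshold could evolve from a starting value toward an asymptote over trials. The exponential form and the treatment of the rate parameter as a time constant in trials are assumptions for illustration; the parameterization used in the fitted brms and TEfits models may differ.

```python
import math


def threshold_at(trial, start, asymptote, rate):
    """Threshold after `trial` trials under an assumed exponential change.

    `rate` is treated as a time constant in trials (smaller = faster
    learning); this form is an assumption, not the fitted model.
    """
    return asymptote + (start - asymptote) * math.exp(-trial / rate)


# Hypothetical parameter values: a fast and a slow learner who share
# the same starting and asymptotic thresholds.
fast_learner = [threshold_at(t, start=20.0, asymptote=5.0, rate=100.0)
                for t in range(0, 800, 200)]
slow_learner = [threshold_at(t, start=20.0, asymptote=5.0, rate=400.0)
                for t in range(0, 800, 200)]
```

Both hypothetical learners begin and end at the same thresholds, but the one with the smaller rate parameter approaches the asymptote in fewer trials. This is the sense in which the three parameters can vary independently across participants.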

All correlations reported are Spearman rank correlations that represent relationships across/within the learning tasks. The significance threshold (given n of 29 and alpha of .05) is approximately ρ = .356 (corresponding to t = 2.05).

Did participants learn and generalize in the perceptual learning tasks?

Before examining detailed patterns of relationships between and within tasks, it is first critical to demonstrate that the tasks met the basic criteria laid out in the introduction: namely, that we observed learning on the tasks over the period of training (~800 trials) and that we observed some intermediate degree of generalization (see Fig. 1a). First, with respect to learning during initial training, asymptotic thresholds were reliably lower than starting thresholds in both the texture task (paired t test: Mdiff = −0.60, CI = [−0.69, −0.51], t(28) = −13.3, dCohen = −4.9) and the dot-motion task (paired t test: Mdiff = −18.7, CI = [−26.1, −11.3], t(28) = −5.2, dCohen = −1.9).

Fig. 1. (a) In both the dot-motion task (a.1) and the texture task (a.2), participants showed clear evidence of learning during training. We also saw an intermediate degree of learning generalization (whereby initial performance on the generalization task was better than initial performance on the training task, but worse than asymptotic performance on the training task). (b) This is plotted more explicitly in Panel b (left panel: values less than zero mean better initial performance on the generalization task than on the training task; right panel: values greater than zero mean that initial performance on the generalization task was worse than the asymptotic level of performance on the training task). (c) Finally, as seen in Panel c, we also noted that participants learned the generalization task more rapidly than they had learned the training task (values less than zero indicating faster learning on the generalization task), suggesting that previous learning can speed the learning of subsequent tasks that share facets (i.e., learn to learn).

Second, with respect to generalization, we observed reliable increases in thresholds from the end of training to the start of generalization (generalization cost; Wilcoxon signed-rank test Z = 5.78), as well as reliable decreases in thresholds from the start of training to the start of generalization (generalization benefit; Wilcoxon signed-rank test Z = −4.16; see Fig. 1b). Generalization learning happened in less time than did initial learning (i.e., smaller rate parameters; Wilcoxon signed-rank test Z = −2.13; see Fig. 1c). In sum, these results indicate a nuanced pattern of partial generalization, providing further justification for tests of individual differences in generalization.

Did performance (learning and generalization) correlate across the two perceptual learning tasks?

First, we confirmed that overall performance on the two perceptual learning tasks was related, with overall percent correct correlating at Spearman ρ = .404. This value exceeds the statistical significance threshold of ρ = .356 (given n = 29 and α = .05). To be conservative, however, below we use this overall percentage correct correlation value of 0.404 (which is associated with a two-tailed p value of .03) as our baseline in discussing relations with specific components of the learning curves across tasks. We then moved to examining correlations between specific aspects of the learning process (starting performance, rate, and asymptote).

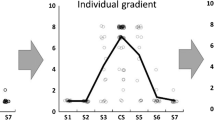

Starting-performance parameters were not correlated across learning tasks (ρ = −0.005; see Fig. 2a). In contrast, the correlation of rate of learning (ρ = 0.475; Fig. 2b), as well as asymptotic performance parameters (ρ = 0.488; Fig. 2c), exceeded the raw accuracy correlation value. Here, it is critical to note that the increase in variance explained is not necessarily additive, yet neither are the correlations simply reflections of collinearity (i.e., within each task, the rate and asymptote parameters share less than 30% of their variance; texture ρ = 0.501; dot-motion ρ = 0.33).

Fig. 2. Correlations between parameter estimates on dot-motion perceptual learning (x-axes) and texture perceptual learning (y-axes). The top row of plots shows parameter estimates during initial training, while the bottom row shows parameter estimates during subsequent generalization. The left column shows starting thresholds, the middle column shows rate of learning, and the right column shows asymptotic thresholds. Lines and shaded areas show a standard OLS best-fit line and 95% CIs. Rho is the Spearman rank-order correlation between tasks’ parameter estimates. While most parameters show associations, training starting thresholds and generalization asymptotic thresholds do not.

We then turned to examining relationships in generalization across tasks (see Fig. 2d–f). These were each bivariate partial rank correlations, partialling out the variance associated with the parameters during initial learning. Overall block-level generalization accuracies were correlated even more highly than training-task accuracies (ρ = 0.472), while generalization parameters were less correlated than parameters estimated from training data (start ρ = 0.37, rate ρ = 0.31, asymptote ρ = 0.07).
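The logic of a partial correlation can be illustrated with the first-order formula, which removes the influence of a single covariate z from the correlation between x and y. This is a simplified sketch: the analysis above may partial out more than one training parameter, and the inputs here are assumed to be rank (Spearman) correlations computed beforehand.

```python
import math


def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z.

    Inputs are pairwise correlations (rank correlations, for a partial
    rank correlation). Handles one covariate only; this is a
    simplification of the analysis described in the text.
    """
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
```

When the covariate is unrelated to both variables (`r_xz = r_yz = 0`), the partial correlation equals the raw correlation; when x and y are related only through z, it shrinks toward zero.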

Was generalization related to initial learning?

To test the individual-level relationships between training and generalization, geometric means were calculated across each of the texture and dot-motion task parameters. This reduces the noise in the estimate of individual-level variation in learning starting points, rates, and asymptotes. The following analyses therefore consider each of the above three training parameters and three generalization parameters (i.e., starting point, rate, and asymptotic value).
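A minimal sketch of the composite calculation follows, assuming one strictly positive parameter value per task for each participant; the function name and example values are hypothetical.

```python
import math


def geometric_mean_composite(texture_value, motion_value):
    """Composite of one parameter across the two tasks.

    Both inputs must be positive (e.g., thresholds or rate parameters).
    """
    return math.sqrt(texture_value * motion_value)


# Hypothetical asymptotic thresholds for a single participant.
composite_asymptote = geometric_mean_composite(0.2, 12.5)
```

One property of this choice is that the rank order of participants on the composite equals their rank order on the sum of the log-transformed task values, so neither task dominates simply because its thresholds are on a larger numerical scale.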

A mixed pattern of correlations was found between training parameters and generalization learning parameters. Starting parameters in generalization and training (ρ = 0.383) did not meet our threshold for a reliable correlation, with correlations between starting parameters and other parameters being smaller still. In contrast, correlations between training and generalization asymptote and rate parameters were reliable (rate ρ = 0.503; asymptote ρ = 0.585). Notably, an even higher correlation was observed between training asymptote and generalization rate (ρ = 0.676), possibly indicating a mechanism of generalization in which rate of learning is particularly enhanced by initial learning (Kattner, Cochrane, & Green, 2017b).

These findings provide further evidence that learning rate and asymptotic performance each reflect meaningful individual-level variation in generalization. There was also some evidence that the correlations were independent. Using bootstrapped robust regression to test the degree to which generalization rate was predicted by training asymptote and rate in a single model, only asymptote was found to be a reliable predictor (asymptote b = 3.00, CI = [0.39, 9.20], ΔR²oos = 0.121; rate b = 0.55, CI = [−0.83, 2.00], ΔR²oos = 0.023). In other words, training-task asymptotic performance was related to generalization learning rate even when controlling for training-task learning rate. The same applied to generalization asymptote, which was related only to training asymptote when the analogous model was fit (asymptote b = 0.17, CI = [0.06, 0.31], ΔR²oos = 0.250; rate b = 0.015, CI = [−0.034, 0.059], ΔR²oos = −0.058).
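The general logic of a bootstrapped robust regression with two predictors in a single model can be sketched as below. This is a minimal illustration under stated assumptions, not the estimator used in the study: it assumes a Huber M-estimator fit by iteratively reweighted least squares and a percentile bootstrap over participants, and it does not reproduce the out-of-sample ΔR² calculation. All names and the simulated data are hypothetical.

```python
import numpy as np

def huber_fit(X, y, k=1.345, n_iter=50, tol=1e-8):
    """Huber M-estimate of regression coefficients via IRLS."""
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    for _ in range(n_iter):
        resid = y - X @ beta
        # Robust residual scale: normalized median absolute deviation.
        scale = np.median(np.abs(resid - np.median(resid))) / 0.6745
        if scale < 1e-12:
            break
        u = np.abs(resid) / scale
        weights = np.where(u <= k, 1.0, k / np.maximum(u, 1e-12))
        sw = np.sqrt(weights)
        new_beta = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)[0]
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta

def bootstrap_robust_slopes(predictors, outcome, n_boot=1000, level=0.95, seed=0):
    """Point estimates and percentile-bootstrap CIs for [intercept, slopes]."""
    rng = np.random.default_rng(seed)
    y = np.asarray(outcome, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(predictors, dtype=float)])
    point = huber_fit(X, y)  # coefficients from the original, nonresampled data
    boots = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, len(y), size=len(y))  # resample participants
        boots[b] = huber_fit(X[idx], y[idx])
    alpha = (1.0 - level) / 2.0
    lower, upper = np.quantile(boots, [alpha, 1.0 - alpha], axis=0)
    return point, lower, upper

# Simulated (hypothetical) data: the outcome depends on the first predictor only.
rng = np.random.default_rng(1)
n = 80
train_asymptote = rng.normal(size=n)
train_rate = rng.normal(size=n)
gen_rate = 1.0 + 2.0 * train_asymptote + rng.normal(scale=0.5, size=n)
gen_rate[:4] += 10.0  # a few gross outliers

point, lower, upper = bootstrap_robust_slopes(
    np.column_stack([train_asymptote, train_rate]), gen_rate, n_boot=500
)
for name, b, lo, hi in zip(["intercept", "asymptote", "rate"], point, lower, upper):
    print(f"{name}: b = {b:.2f}, 95% CI = [{lo:.2f}, {hi:.2f}]")
```

Under this scheme a predictor would be treated as reliable when its bootstrapped interval excludes zero. Because both predictors enter the same design matrix, each slope is estimated while controlling for the other.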

Were variations in learning and generalization explained by individual differences?

Descriptive statistics of the individual difference predictors are reported in Table S2. As discussed above, the three learning parameters (initial performance, rate, asymptote) reported here are composite scores formed by calculating the geometric means between the dot-motion and the texture task parameters. The following are the coefficients of various predictors in bootstrapped bivariate robust regression models with training-task parameters as outcome variables (see Table 1; Fig. 3). Reported coefficient values are from models fit to the original nonresampled data. Lower values are better in all cases (i.e., lower thresholds or times to learn). Caution should be taken when interpreting the baseline (pretraining) effects, however, due to the lack of correlation between the two tasks’ starting thresholds. Finally, given that there were strong a priori expectations for the direction of the expected relationships in some cases (e.g., based upon previous theory or upon the results of Yang et al., 2020), while others were purely exploratory, for ease of interpretation we did not correct for multiple comparisons (which would have involved utilizing different alphas across the various cells in Tables 1 and 2; note that we do indicate for each cell whether the relationship falls outside the 99%, 95%, or 90% bootstrapped CI).

Bivariate relations between four predictors (rows) and three components of learning in initial training (columns). Scores on the painting learning task, BAS-FS, and sensitivity to punishment were each associated with multiple components of learning. Response time (RT) composite was associated only with variations in initial performance. While all statistics reported predict learning parameters, these parameters are on the x-axis for the sake of plotting convenience. Scatterplots show Yeo-Johnson transformed variables; b and CI indicate the overall RLM slope and bootstrapped 95% CI of the slope. Lines and shaded areas are standard OLS linear regression fits and 95% CI

First, scores on the RT tasks predicted pretraining (baseline) thresholds, such that lower RTs predicted lower initial thresholds. Additionally, lower Neuroticism, BIS, and Punishment Sensitivity scores, as well as higher BAS-FS scores, were each associated with lower initial thresholds. Next, higher scores on the Painting task and higher Global Bias, as well as lower Neuroticism, BAS-FS, BAS-RR, and Punishment Sensitivity scores, were each associated with faster rates of learning. Finally, superior asymptotic performance (i.e., lower threshold) was predicted by lower BAS-FS scores, as well as higher scores on both the OSPAN working memory task and the Painting category learning task.

We next considered predictors of learning in generalization, above and beyond initial learning (see Table 2; Fig. 4). We controlled for initial learning parameters by including the relevant parameter in our bootstrapped robust linear models (e.g., when predicting generalization rate using RT, training-task learning rate would be included in the model as a covariate). Lower initial generalization threshold, controlling for initial training threshold (i.e., generalization benefit), was predicted by lower BAS-FS scores and higher Persistence scores. Faster generalization learning rate, controlling for training learning rate, was predicted by smaller Task Switch Costs, lower Openness to Experience, and higher Punishment Sensitivity. Lower asymptotic threshold in generalization, when controlling for training asymptotic threshold, was predicted by higher Painting category learning scores and by lower Grit scores. Finally, lower generalization cost, or initial generalization threshold controlling for final training threshold, was predicted by higher Neuroticism and lower BAS-FS scores.

Bivariate relations between four predictors (rows) and three components of learning in generalization (columns; these parameters controlled for the variance of the corresponding initial learning components). Rate of generalization is related to task switch cost, openness to experience, and sensitivity to punishment. Asymptotic performance in generalization was related to scores on the painting learning task. While all statistics reported predict learning parameters, these parameters are on the x-axis for the sake of plotting convenience. Scatterplots show Yeo-Johnson transformed variables; b and CI indicate the overall RLM slope and bootstrapped 95% CI of the slope. Lines and shaded areas are standard OLS linear regression fits and 95% CI

Discussion

Although it is well documented that there are substantial inter-individual differences in learning and generalization on perceptual learning tasks, few studies have examined the individual characteristics that might predict which individuals show different amounts of learning and/or specificity on these tasks. Here, echoing results by Yang et al. (2020), we found that such individual differences are not simply idiosyncratic noise, but partially represent a shared ability to perform perceptual learning tasks. Interestingly, although in the work by Yang et al. (2020) none of the pairwise correlations between tasks were significant, in our case we observed reliable relations between tasks. This discrepancy may be partially attributed to differences in methodology between the work of Yang et al. (2020) and our own. In particular, while Yang and colleagues’ approach allowed for only a reasonably coarse estimate of learning (i.e., with performance aggregated across sizeable blocks of the tasks), our methods and analytic techniques allowed for a time-continuous estimate of performance. This is consistent with the fact that in our data the learning rate and asymptotic performance parameters were both more strongly correlated across tasks than an aggregated measure (i.e., overall accuracy). We note, however, that the “learning rate” parameter in the work of Yang and colleagues could also be viewed as a combination of rate and “learning magnitude” (i.e., their log-linear models conflated rate of change with the difference between initial and final performance), while in our case “learning rate” is best thought of as a true rate (i.e., a half-life: the time needed to get halfway between initial and final performance). Thus, these are not exactly apples-to-apples comparisons. These differences in methodology may also explain why we found essentially zero systematic patterns in terms of initial task performance (which, in our case, is very much the estimate of performance on Trial 1). Early performance in our data instead appeared to add noise to estimates of individual-level variation (i.e., over and above task-level variation). A more direct comparison of these techniques and the inferences they allow for could thus result in additional insight in the future.
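To make the half-life interpretation of the rate parameter concrete, the sketch below uses one exponential form that is consistent with that description. The exact functional form fit in the study is not restated in this section, so the equation, the function name, and the example values should be read as assumptions.

```python
def threshold_at(trials_elapsed, start, asymptote, half_life):
    """Threshold after a number of trials under exponential learning.

    half_life is the number of trials needed to close half of the gap
    between the starting and the asymptotic threshold.
    """
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    remaining_gap = (start - asymptote) * 2.0 ** (-trials_elapsed / half_life)
    return asymptote + remaining_gap

# Hypothetical learner: starts at 10, asymptotes at 2, half-life of 50 trials.
for t in (0, 50, 100, 150):
    print(t, threshold_at(t, start=10.0, asymptote=2.0, half_life=50.0))
```

With these values the threshold moves from 10 to 6, 4, and 3 after 50, 100, and 150 trials, halving the remaining distance to the asymptote each time. Under this form the rate parameter is separable from the magnitude of learning (start minus asymptote), which is the distinction drawn above with respect to log-linear models.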

Extending the previous work of Yang et al. (2020), we also found significant correlations across perceptual learning tasks in the extent to which learning generalized to new stimuli (after controlling for the ability to learn the primary task). This suggests that there is not only a shared capacity to learn that plays a role across perceptual learning tasks, but also that there is a somewhat independent ability to generalize experience to new situations. Generalization, however, was certainly not fully independent of initial task learning abilities. Instead, both generalization task learning rate and asymptote were associated with initial learning task asymptote, such that individuals with a lower asymptotic threshold following training showed significantly better learning rates as well as lower asymptotic thresholds on the generalization task. This suggests that the individuals who came to the best level of performance at the end of the learning task (when controlling for baseline ability) had best encoded the underlying principles of the task, and were thus better able to apply these principles to the generalization orientation (Harlow, 1949; Kemp, Goodman, & Tenenbaum, 2010). This is consistent with previous work that has demonstrated a relationship between amount of learning and generalization (e.g., Duncan & Underwood, 1952; Fiser & Lengyel, 2019). Interestingly, learning asymptote predicted generalization asymptote over and above learning rate, suggesting that it is the degree of learning that most strongly relates to the ability to generalize, rather than the speed at which an individual learns a given task.

Next, although this aspect of the study was exploratory in nature, previous research has demonstrated that cognitive factors, such as top-down attentional control (Byers & Serences, 2012) and matrix reasoning (Colquitt et al., 2000; Yang et al., 2020), are associated with increased learning in outside domains. Additionally, several studies have suggested that various facets of personality, such as conscientiousness (Barrick & Mount, 1991; Blume et al., 2010; Burke & Hutchins, 2007; Richardson & Abraham, 2009; Schultz et al., 2011), extraversion (Barrick & Mount, 1991), openness to experience (Barrick & Mount, 1991), and neuroticism (Blume et al., 2010; Yang et al., 2020), are associated with learning ability on a variety of tasks. As such, we anticipated that similar patterns would emerge in our study.

While the relationships between several of these cognitive factors and learning rate were clearly in the expected direction (e.g., fluid intelligence, reaction time), they did not reach significance. However, we note that we were powered only to detect the somewhat large effects we expected for the correlation between perceptual learning tasks. Thus, it is possible that these nulls reflect lack of power. We also found, consistent with various views in the field, that cognitive flexibility was particularly related to the ability to generalize (in particular in terms of learning rate on the generalization task). Finally, and consistent with the work by Yang et al. (2020), we found that neuroticism was negatively related to the speed of learning (i.e., higher neuroticism = slower learning).

Together, these results are broadly consistent, both with a number of theoretical viewpoints that posit a shared global underlying learning ability (e.g., “g” in the cognitive psychology literature; Spearman, 1904), as well as with theoretical approaches that suggest not only that such a shared ability exists in the perceptual domain, but is one that could then potentially be enhanced via training (i.e., “learning to learn”; Bavelier, Green, Pouget, & Schrater, 2012; Bejjanki et al., 2014). Although our work was purely in the visual domain, the presence of an auditory task in the work of Yang et al. (2020), as well as a pure cognitive learning task, strongly suggests that these findings are not completely localized in visual learning. However, it is still important to note that only around 25% of the variance—for instance, in learning rate—was shared across performance measures in the two perceptual learning tasks. This leaves a great deal of task-dependent variability remaining, as would be consistent with frameworks that suggest a somewhat low neural locus for learning in these tasks (as our population level behavior would most closely correspond to “partial specificity”; Ahissar et al., 2009). Here, we note further that given our sample size, it is possible that certain aspects of learning that were not seen to be significantly related in our data set could in fact be correlated, but again, at values that would indicate a very sizeable amount of task-dependent variability.

These results also have a number of potential translational implications. For example, there has recently been a surge of interest in using both simple perceptual tasks, as well as more complex tasks like video games (e.g., Bediou et al., 2018; Green & Bavelier, 2003; Powers, Brooks, Aldrich, Palladino, & Alfieri, 2013; Toril, Reales, & Ballesteros, 2014; Wang et al., 2016), to improve human performance and well-being. For instance, such tasks have been adapted as a rehabilitation tool for individuals recovering from strokes (e.g., Huxlin et al., 2009), as a treatment for individuals with amblyopia (e.g., Li, Ngo, Nguyen, & Levi, 2011), and as an intervention to help stave off age-related cognitive decline (e.g., Toril et al., 2014). Additionally, perceptual training tasks have been used to help train new laparoscopic surgeons (e.g., Ou, McGlone, Camm, & Khan, 2013; Schlickum, Hedman, Enochsson, Kjellin, & Felländer-Tsai, 2009) and fighter pilots (Gopher, Weil, & Bareket, 1994; McKinley, McIntire, & Funke, 2011). Our findings not only give hope that such training could be broadly beneficial (particularly if it improved the global learning ability), but also suggest the potentially important caveat that naturally occurring differences in learning and generalization ability could significantly influence the efficacy of these interventions. As such, identifying individual predictors of learning ability, and the propensity to generalize learning to a new task, would make it possible to tailor training regimens to individuals who fit a certain personality or cognitive profile, or identify ideal candidates for studies on the mechanics of learning and generalization.

While there were several limitations to this study, including the purely correlational nature of the measures and the fact that the study was powered primarily for large effects, these findings demonstrate, consistent with work by Yang et al. (2020), that the large variations in learning and generalization that are generally seen on tasks of perceptual learning may not only be influenced by the types of tasks that are used, but also by naturally occurring characteristics of the participant. More research is necessary in order to better understand how the factors identified here influence the specificity of learning (in particular, the personality factors for which there is limited theoretical work in the perceptual domain).

References

Ackerman, P. L., & Cianciolo, A. T. (2000). Cognitive, perceptual-speed, and psychomotor determinants of individual differences during skill acquisition. Journal of Experimental Psychology: Applied, 6(4), 259–290. https://doi.org/10.1037/1076-898X.6.4.259

Ahissar, M. (1999). Perceptual learning. Current Directions in Psychological Science, 8(4), 124–128. https://doi.org/10.1111/1467-8721.00029

Ahissar, M., & Hochstein, S. (1993). Attentional control of early perceptual learning. Proceedings of the National Academy of Sciences of the United States of America, 90(12), 5718–5722. https://doi.org/10.1073/pnas.90.12.5718

Ahissar, M., & Hochstein, S. (1997). Task difficulty and the specificity of perceptual learning. Nature, 387(6631), 401–406. https://doi.org/10.1038/387401a0

Ahissar, M., Nahum, M., Nelken, I., & Hochstein, S. (2009). Reverse hierarchies and sensory learning. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1515), 285–299. https://doi.org/10.1098/rstb.2008.0253

Baldassarre, A., Lewis, C. M., Committeri, G., Snyder, A. Z., Romani, G. L., & Corbetta, M. (2012). Individual variability in functional connectivity predicts performance of a perceptual task. Proceedings of the National Academy of Sciences of the United States of America, 109(9), 3516–3521. https://doi.org/10.1073/pnas.1113148109

Ball, K., & Sekuler, R. (1982). A specific and enduring improvement in visual motion discrimination. Science, 218(4573), 697–698. https://doi.org/10.1126/science.7134968

Barrick, M. R., & Mount, M. K. (1991). The Big Five personality dimensions and job performance: A meta-analysis. Personnel Psychology, 44(1), 1–26. https://doi.org/10.1111/j.1744-6570.1991.tb00688.x

Bavelier, D., Bediou, B., & Green, C. S. (2018). Expertise and generalization: Lessons from action video games. Current Opinion in Behavioral Sciences, 20, 169–173. https://doi.org/10.1016/j.cobeha.2018.01.012

Bavelier, D., Green, C. S., Pouget, A., & Schrater, P. (2012). Brain plasticity through the life span: Learning to learn and action video games. Annual Review of Neuroscience, 35, 391–416. https://doi.org/10.1146/annurev-neuro-060909-152832

Bediou, B., Adams, D. M., Mayer, R. E., Tipton, E., Green, C. S., & Bavelier, D. (2018). Meta-analysis of action video game impact on perceptual, attentional, and cognitive skills. Psychological Bulletin, 144(1), 77–110. https://doi.org/10.1037/bul0000130

Bejjanki, V. R., Zhang, R., Li, R., Pouget, A., Green, C. S., Lu, Z., & Bavelier, D. (2014). Action video game play facilitates the development of better perceptual templates. Proceedings of the National Academy of Sciences of the United States of America, 111(47), 16961–16966. https://doi.org/10.1073/pnas.1417056111

Bergman Nutley, S., & Söderqvist, S. (2017). How is working memory training likely to influence academic performance? Current evidence and methodological considerations. Frontiers in Psychology, 8, 69. https://doi.org/10.3389/fpsyg.2017.00069

Binet, A., & Simon, T. (1916). The development of intelligence in children (The Binet-Simon Scale). Williams & Wilkins.

Blume, B. D., Ford, J. K., Baldwin, T. T., & Huang, J. L. (2010). Transfer of training: A meta-analytic review. Journal of Management, 36(4), 1065–1105. https://doi.org/10.1177/0149206309352880

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10(4), 433–436. https://doi.org/10.1163/156856897X00357

Burke, L. A., & Hutchins, H. M. (2007). Training transfer: An integrative literature review. Human Resource Development Review, 6(3), 263–296. https://doi.org/10.1177/1534484307303035

Bürkner, P.-C. (2017). brms: An R package for Bayesian multilevel models using Stan. Journal of Statistical Software, 80(1), 1–28. https://doi.org/10.18637/jss.v080.i01

Byers, A., & Serences, J. T. (2012). Exploring the relationship between perceptual learning and top-down attentional control. Vision Research, 74, 30–39. https://doi.org/10.1016/j.visres.2012.07.008

Carver, C. S., & White, T. L. (1994). Behavioral inhibition, behavioral activation, and affective responses to impending reward and punishment: The BIS/BAS scales. Journal of Personality and Social Psychology, 67(2), 319–334.

Cochrane, A. (2020). TEfits: Nonlinear regression for time-evolving indices. Journal of Open Source Software, 5(52), 2535. https://doi.org/10.21105/joss.02535

Colquitt, J. A., LePine, J. A., & Noe, R. A. (2000). Toward an integrative theory of training motivation: A meta-analytic path analysis of 20 years of research. Journal of Applied Psychology, 85(5), 678–707. https://doi.org/10.1037/0021-9010.85.5.678

Costa, P. T., & McCrae, R. R. (1992). Normal personality assessment in clinical practice: The NEO Personality Inventory. Psychological Assessment, 4(1), 5–13.

DeLoss, D. J., Watanabe, T., & Andersen, G. J. (2015). Improving vision among older adults: Behavioral training to improve sight. Psychological Science, 26(4), 456–466. https://doi.org/10.1177/0956797614567510

Deveau, J., Lovcik, G., & Seitz, A. R. (2014). Broad-based visual benefits from training with an integrated perceptual-learning video game. Vision Research, 99, 134–140. https://doi.org/10.1016/j.visres.2013.12.015

Deveau, J., & Seitz, A. R. (2014). Applying perceptual learning to achieve practical changes in vision. Frontiers in Psychology, 5, Article 1166. https://doi.org/10.3389/fpsyg.2014.01166

Dosher, B., & Lu, Z. L. (2017). Visual perceptual learning and models. Annual Review of Vision Science, 3, 343–363. https://doi.org/10.1146/annurev-vision-102016-061249

Duckworth, A. L., Peterson, C., Matthews, M. D., & Kelly, D. R. (2007). Grit: Perseverance and passion for long-term goals. Journal of Personality and Social Psychology, 92(6), 1087–1101. https://doi.org/10.1037/0022-3514.92.6.1087

Duncan, C. P., & Underwood, B. J. (1952). Retention of transfer in motor learning after 24 hours and after 14 months as a function of degree of first-task learning and inter-task similarity (WADC Technical Report 52-224). Wright Air Development Center.

Edwards, J. D., Ruva, C. L., O’Brien, J. L., Haley, C. B., & Lister, J. J. (2013). An examination of mediators of the transfer of cognitive speed of processing training to everyday functional performance. Psychology and Aging, 28(2), 314–321. https://doi.org/10.1037/a0030474

Fahle, M., & Henke-Fahle, S. (1996). Interobserver variance in perceptual performance and learning. Investigative Ophthalmology and Visual Science, 37(5), 869–877.

Fahle, M., & Morgan, M. (1996). No transfer of perceptual learning between similar stimuli in the same retinal position. Current Biology, 6(3), 292–297. https://doi.org/10.1016/S0960-9822(02)00479-7

Fiorentini, A., & Berardi, N. (1981). Learning in grating waveform discrimination: Specificity for orientation and spatial frequency. Vision Research, 21(7), 1149–1158. https://doi.org/10.1016/0042-6989(81)90017-1

Fiser, J., & Lengyel, G. (2019). A common probabilistic framework for perceptual and statistical learning. Current Opinion in Neurobiology, 58, 218–228. https://doi.org/10.1016/j.conb.2019.09.007

Fulvio, J. M., Green, C. S., & Schrater, P. R. (2014). Task-specific response strategy selection on the basis of recent training experience. PLOS Computational Biology, 10, e1003425. https://doi.org/10.1371/journal.pcbi.1003425

Gibson, J. J., & Gibson, E. J. (1955). Perceptual learning: Differentiation or enrichment? Psychological Review, 62(1), 32–41. https://doi.org/10.1037/h0048826

Glass, B. D., Maddox, W. T., & Love, B. C. (2013). Real-time strategy game training: Emergence of a cognitive flexibility trait. PLOS ONE, 8(8), e70350. https://doi.org/10.1371/journal.pone.0070350

Gopher, D., Weil, M., & Bareket, T. (1994). Transfer of skill from a computer game trainer to flight. Human Factors, 36(3), 387–405. https://doi.org/10.1177/001872089403600301

Green, C. S., & Bavelier, D. (2003). Action video game modifies visual selective attention. Nature, 423(6939), 534–537. https://doi.org/10.1038/nature01647

Green, C. S., Pouget, A., & Bavelier, D. (2010). Improved probabilistic inference as a general learning mechanism with action video games. Current Biology, 20(17), 1573–1579. https://doi.org/10.1016/j.cub.2010.07.040

Green, C. S., Kattner, F., Siegel, M. H., Kersten, D., & Schrater, P. R. (2015). Differences in perceptual learning transfer as a function of training task. Journal of Vision, 15(10), Article 5. https://doi.org/10.1167/15.10.5

Green, C. S., Banai, K., Lu, Z. L., & Bavelier, D. (2018). Perceptual learning. In J. T. Serences (Ed.), Stevens’ handbook of experimental psychology and cognitive neuroscience (Vol. 2). Wiley.

Grossman, R., & Salas, E. (2011). The transfer of training: What really matters. International Journal of Training and Development, 15(2), 103–120. https://doi.org/10.1111/j.1468-2419.2011.00373.x

Harlow, H. F. (1949). The formation of learning sets. Psychological Review, 56(1), 51–65. https://doi.org/10.1037/h0062474

Heppe, H., Kohler, A., Fleddermann, M. T., & Zentgraf, K. (2016). The relationship between expertise in sports, visuospatial, and basic cognitive skills. Frontiers in Psychology, 7, 904. https://doi.org/10.3389/fpsyg.2016.00904

Herold, D. M., Davis, W., Fedor, D. B., & Parsons, C. K. (2002). Dispositional influences on transfer of learning in multistage training programs. Personnel Psychology, 55(4), 851–869. https://doi.org/10.1111/j.1744-6570.2002.tb00132.x

Herzog, M. H., & Fahle, M. (1997). The role of feedback in learning a vernier discrimination task. Vision Research, 37(15), 2133–2141. https://doi.org/10.1016/S0042-6989(97)00043-6

Holmes, J., & Gathercole, S. (2014). Taking working memory training from the laboratory into schools. Educational Psychology, 34(4), 440–450. https://doi.org/10.1080/01443410.2013.797338

Hung, S. C., & Seitz, A. R. (2014). Prolonged training at threshold promotes robust retinotopic specificity in perceptual learning. The Journal of Neuroscience, 34(25), 8423–8431. https://doi.org/10.1523/JNEUROSCI.0745-14.2014

Huxlin, K. R., Martin, T., Kelly, K., Riley, M., Friedman, D. I., Burgin, W. S., & Hayhoe, M. (2009). Perceptual relearning of complex visual motion after V1 damage in humans. Journal of Neuroscience, 29(13), 3981–3991. https://doi.org/10.1523/JNEUROSCI.4882-08.2009

Jeter, P. E., Dosher, B. A., Liu, S.-H., & Lu, Z.-L. (2010). Specificity of perceptual learning increases with increased training. Vision Research, 50(19), 1928–1940. https://doi.org/10.1016/j.visres.2010.06.016

Karbach, J., & Unger, K. (2014). Executive control training from middle childhood to adolescence. Frontiers in Psychology, 5, Article 390. https://doi.org/10.3389/fpsyg.2014.00390

Karni, A., Tanne, D., Rubenstein, B. S., Askenasy, J. J. M., & Sagi, D. (1994). Dependence on REM sleep of overnight improvement of a perceptual skill. Science, 265(29), 679–682. https://doi.org/10.1126/science.8036518

Kattner, F., Cochrane, A., Cox, C. R., Gorman, T. E., & Green, C. S. (2017a). Perceptual learning generalization from sequential perceptual training as a change in learning rate. Current Biology, 27(6), 840–846. https://doi.org/10.1016/j.cub.2017.01.046

Kattner, F., Cochrane, A., & Green, C. S. (2017b). Trial-dependent psychometric functions accounting for perceptual learning in 2-AFC discrimination tasks. Journal of Vision, 17(11), 3. https://doi.org/10.1167/17.11.3

Kemp, C., Goodman, N. D., & Tenenbaum, J. B. (2010). Learning to learn causal models. Cognitive Science, 34(7), 1185–1243. https://doi.org/10.1111/j.1551-6709.2010.01128.x

Kimchi, R., & Palmer, S. E. (1982). Form and texture in hierarchically constructed patterns. Journal of Experimental Psychology: Human Perception and Performance, 8(4), 521–535. https://doi.org/10.1037/0096-1523.8.4.521

Kleiner, M., Brainard, D., & Pelli, D. (2007). What’s new in Psychtoolbox-3? Perception, 36, (ECVP Abstract Supplement).

Kornell, N., & Bjork, R. A. (2008). Learning concepts and categories: Is spacing the “enemy of induction”? Psychological Science, 19(6), 585–592. https://doi.org/10.1111/j.1467-9280.2008.02127.x

Large, A., Bediou, B., Cekic, S., Hart, Y., Bavelier, D., & Green, C. S. (2019). Cognitive and behavioral correlates of achievement in a complex multi-player video game. Media and Communication, 7(4), 198–212. https://doi.org/10.17645/mac.v7i4.2314

Li, R. W., Ngo, C., Nguyen, J., & Levi, D. M. (2011). Video-game play induces plasticity in the visual system of adults with amblyopia. PLOS Biology, 9(8), Article e1001135. https://doi.org/10.1371/journal.pbio.1001135

Liu, Z., & Weinshall, D. (2000). Mechanisms of generalization in perceptual learning. Vision Research, 40(1), 97–109. https://doi.org/10.1016/s0042-6989(99)00140-6

Lu, Z. L., & Dosher, B. A. (2009). Mechanisms of perceptual learning. Learning & Perception, 1(1), 19–36. https://doi.org/10.1556/lp.1.2009.1.3

Machin, M. A., & Fogarty, G. J. (2003). Perceptions of training-related factors and personal variables as predictors of transfer implementation intentions. Journal of Business and Psychology, 18(1), 51–71. https://doi.org/10.1023/A:1025082920860

Maniglia, M., & Seitz, A. R. (2018). Towards a whole brain model of perceptual learning. Current Opinion in Behavioral Sciences, 20, 47–55. https://doi.org/10.1016/j.cobeha.2017.10.004

McKinley, R. A., McIntire, L. K., & Funke, M. (2011). Operator selection for unmanned aerial systems: Comparing video game players and pilots. Aviation, Space and Environmental Medicine, 82(6), 635–642. https://doi.org/10.3357/ASEM.2958.2011

Naquin, S. S., & Holton, E. F., III. (2002). The effects of personality, affectivity, and work commitment on motivation to improve work through learning. Human Resource Development Quarterly, 13(4), 357–376. https://doi.org/10.1002/hrdq.1038

Ophir, E., Nass, C., & Wagner, A. D. (2009). Cognitive control in media multitaskers. Proceedings of the National Academy of Sciences of the United States of America, 106(37), 15583–15587. https://doi.org/10.1073/pnas.0903620106

Ou, Y., McGlone, E. R., Camm, C. F., & Khan, O. (2013). Does playing video games improve laparoscopic skills? International Journal of Surgery, 11(5), 365–369. https://doi.org/10.1016/j.ijsu.2013.02.020

Poggio, T., Fahle, M., & Edelman, S. (1991). Fast perceptual learning in visual hyperacuity. Science, 256(5059), 1018–1021. https://doi.org/10.1126/science.1589770

Pugh, K. J., & Bergin, D. A. (2006). Motivational influences on transfer. Educational Psychologist, 41(3), 147–160. https://doi.org/10.1207/s15326985ep4103_2

Powers, K. L., Brooks, P. J., Aldrich, N. J., Palladino, M. A., & Alfieri, L. (2013). Effects of video-game play on information processing: A meta-analytic investigation. Psychonomic Bulletin & Review, 20(6), 1055–1079. https://doi.org/10.3758/s13423-013-0418-z

Richardson, M., & Abraham, M. (2009). Conscientiousness and achievement motivation predict performance. European Journal of Personality, 23, 589–605. https://doi.org/10.1002/per

Ritchie, S. J., & Tucker-Drob, E. M. (2018). How much does education improve intelligence? A meta-analysis. Psychological Science, 29(8), 1358–1369. https://doi.org/10.1177/0956797618774253

Rogers, R. D., & Monsell, S. (1995). Costs of a predictable switch between simple cognitive tasks. Journal of Experimental Psychology: General, 124(2), 207–231. https://doi.org/10.1037/0096-3445.124.2.207

Rohde, T. E., & Thompson, L. A. (2007). Predicting academic achievement with cognitive ability. Intelligence, 35(1), 83–92. https://doi.org/10.1016/j.intell.2006.05.004

Ross, L. A., Edwards, J. D., O’Connor, M. L., Ball, K. K., Wadley, V. G., & Vance, D. E. (2016). The transfer of cognitive speed of processing training to older adults’ driving mobility across 5 years. Journals of Gerontology: Series B, 71(1), 87–97. https://doi.org/10.1093/geronb/gbv022

Sagi, D. (2011). Perceptual learning in vision research. Vision Research, 51(13), 1552–1566. https://doi.org/10.1016/j.visres.2010.10.019

Schlickum, M. K., Hedman, L., Enochsson, L., Kjellin, A., & Felländer-Tsai, L. (2009). Systematic video game training in surgical novices improves performance in virtual reality endoscopic surgical simulators: A prospective randomized study. World Journal of Surgery, 33(11), 2360–2367. https://doi.org/10.1007/s00268-009-0151-y

Schmidt, R. A., & Bjork, R. A. (1992). New conceptualizations of practice: Common principles in three paradigms suggest new concepts for training. Psychological Science, 3(4), 207–217. https://doi.org/10.1111/j.1467-9280.1992.tb00029.x

Schubert, T., Finke, K., Redel, P., Kluckow, S., Müller, H., & Strobach, T. (2015). Video game experience and its influence on visual attention parameters: An investigation using the framework of the theory of visual attention (TVA). Acta Psychologica, 157, 200–214. https://doi.org/10.1016/j.actpsy.2015.03.005

Schultz, R., Alderton, D., & Hyneman, A. (2011). Individual differences and learning performance in computer-based training (No. NPRST-TN-11-4). https://apps.dtic.mil/dtic/tr/fulltext/u2/a539353.pdf. Accessed 5 Aug 2015

Seitz, A. R. (2017). Perceptual learning. Current Biology, 27(13), R631–R636. https://doi.org/10.1016/j.cub.2017.05.053

Shibata, K., Sagi, D., & Watanabe, T. (2014). Two-stage model in perceptual learning: Toward a unified theory. Annals of the New York Academy of Sciences, 1316, 18–28. https://doi.org/10.1111/nyas.12419

Snell, N., Kattner, F., Rokers, B., & Green, C. S. (2015). Orientation transfer in vernier and stereoacuity training. PLOS ONE, 10(12), Article e0145770. https://doi.org/10.1371/journal.pone.0145770

Spearman, C. (1904). “General intelligence,” objectively determined and measured. American Journal of Psychology, 15, 201–292.

Titz, C., & Karbach, J. (2014). Working memory and executive functions: Effects of training on academic achievement. Psychological Research, 78(6), 852–868. https://doi.org/10.1007/s00426-013-0537-1

Toril, P., Reales, J. M., & Ballesteros, S. (2014). Video game training enhances cognition of older adults: A meta-analytic study. Psychology and Aging, 29(3), 706–716. https://doi.org/10.1037/a0037507

Torrubia, R., Avila, C., Moltó, J., & Caseras, X. (2001). The Sensitivity to Punishment and Sensitivity to Reward Questionnaire (SPSRQ) as a measure of Gray’s anxiety and impulsivity dimensions. Personality and Individual Differences, 31(6), 837–862. https://doi.org/10.1016/S0191-8869(00)00183-5

Turner, M. L., & Engle, R. W. (1989). Is working memory capacity task dependent? Journal of Memory and Language, 28(2), 127–154. https://doi.org/10.1016/0749-596X(89)90040-5

Uttal, D. H., Miller, D. I., & Newcombe, N. S. (2013). Exploring and enhancing spatial thinking: Links to achievement in science, technology, engineering, and mathematics? Current Directions in Psychological Science, 22(5), 367–373. https://doi.org/10.1177/0963721413484756

Ventura, M., Shute, V., & Zhao, W. (2013). The relationship between video game use and a performance-based measure of persistence. Computers & Education, 60(1), 52–58. https://doi.org/10.1016/j.compedu.2012.07.003

Wang, P., Liu, H. H., Zhu, X. T., Meng, T., Li, H. J., & Zuo, X. N. (2016). Action video game training for healthy adults: A meta-analytic study. Frontiers in Psychology, 7, Article 907. https://doi.org/10.3389/fpsyg.2016.00907

Wang, R., Zhang, J. Y., Klein, S. A., Levi, D. M., & Yu, C. (2012). Task relevancy and demand modulate double-training enabled transfer of perceptual learning. Vision Research, 61, 33–38. https://doi.org/10.1016/j.visres.2011.07.019

Watanabe, T., & Sasaki, Y. (2015). Perceptual learning: Toward a comprehensive theory. Annual Review of Psychology, 66, 197–221. https://doi.org/10.1146/annurev-psych-010814-015214

Watson, D., Clark, L. A., & Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology, 54(6), 1063–1070. https://doi.org/10.1037/0022-3514.54.6.1063

Withagen, R., & van Wermeskerken, M. (2009). Individual differences in learning to perceive length by dynamic touch: Evidence for variation in perceptual learning capacities. Attention, Perception & Psychophysics, 71(1), 64–75. https://doi.org/10.3758/APP

Xiao, L. Q., Zhang, J. Y., Wang, R., Klein, S. A., Levi, D. M., & Yu, C. (2008). Complete transfer of perceptual learning across retinal locations enabled by double training. Current Biology, 18(24), 1922–1926. https://doi.org/10.1016/j.cub.2008.10.030

Yang, J., Yan, F. F., Chen, L., Xi, J., Fan, S., Zhang, P., ... Huang, C. B. (2020). General learning ability in perceptual learning. Proceedings of the National Academy of Sciences, 117(32), 19092–19100. https://doi.org/10.1073/pnas.2002903117

Funding

This research was supported by Office of Naval Research grant N00014-17-1-2049 to C.S.G.

Ethics declarations

Conflicts of interest

We have no conflicts of interest to disclose.

Additional information

Open practices statement

The data and materials for all experiments will be made freely available upon publication on an open-science platform. This experiment was not preregistered.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1 (DOCX 1640 kb)

About this article

Cite this article

Dale, G., Cochrane, A. & Green, C.S. Individual difference predictors of learning and generalization in perceptual learning. Atten Percept Psychophys 83, 2241–2255 (2021). https://doi.org/10.3758/s13414-021-02268-3
