Abstract

The present paper is devoted to clustering geometric graphs. While the standard spectral clustering is often not effective for geometric graphs, we present an effective generalization, which we call higher-order spectral clustering. It resembles in concept the classical spectral clustering method but uses for partitioning the eigenvector associated with a higher-order eigenvalue. We establish the weak consistency of this algorithm for a wide class of geometric graphs which we call Soft Geometric Block Model. A small adjustment of the algorithm provides strong consistency. We also show that our method is effective in numerical experiments even for graphs of modest size.

Similar content being viewed by others

1 Introduction

Graph clustering—the task of identifying groups of tightly connected nodes in a graph—is a widely studied unsupervised learning problem, with applications in computer science, statistics, biology, economy or social sciences [7].

In particular, spectral clustering is one of the key graph clustering methods [15]. In its most basic form, this algorithm consists in partitioning a graph into two communities using the eigenvector associated with the second smallest eigenvalue of the graph’s Laplacian matrix (the so-called Fiedler vector [6]). Spectral clustering is popular, as it is an efficient relaxation of the NP-hard problem of cutting the graph into two balanced clusters so that the weight between the two clusters is minimal [15].

In particular, spectral clustering is consistent in the Stochastic Block Model (SBM) for a large set of parameters [1, 11]. The SBM is a natural basic model with community structure. It is also the most studied one [1]. In this model each node is assigned to one cluster, and edges between node pairs are drawn independently and with probability depending only on the community assignment of the edge endpoints.

However, in many situations, nodes also have geometric attributes (a position in a metric space). Thus, the interaction between a pair of nodes depends not only on the community labelling, but also on the distance between the two nodes. We can model this by assigning to each node a position, chosen in a metric space. Then, the probability of an edge appearance between two nodes will depend both on the community labelling and on the positions of these nodes. Recent proposals of random geometric graphs with community structure include the Geometric Block Model (GBM) [8] and Euclidean random geometric graphs [2]. The nodes’ interactions in geometric models are no longer independent: two interacting nodes are likely to have many common neighbors. While this is more realistic (‘friends of my friends are my friends’), this also renders the theoretical study more challenging.

Albeit spectral clustering was shown to be consistent in some specific geometric graphs [13], the geometric structure can also heavily handicap a cut-based approach. Indeed, one could partition space into regions such that nodes between two different regions interact very sparsely. Thus, the Fiedler vector of a geometric graph might be associated only with a geometric configuration, and bear no information about the latent community labelling. Moreover, the common technique of regularization [18], which aims to penalize small size communities in order to bring back the vector associated with the community structure in the second position, will not work in geometric graphs as the regions of space can contain a balanced number of nodes. Nonetheless, this observation does not automatically render spectral clustering useless. Indeed, as we shall see, in some situations there is still one eigenvector associated with the community labelling. Thus, it is now necessary to distinguish the eigenvectors corresponding to a geometric cut—hence potentially useless for cluster recovery—from the one corresponding to the community labelling. In other words, to achieve a good performance with spectral clustering in such a setting, one needs to select carefully the correct eigenvector, which may no longer be associated with the second smallest eigenvalue.

Our working model of geometric graphs with clustering structure will be the Soft Geometric Block Model (SGBM). It is a block generalization of soft random geometric graphs and includes as particular cases the SBM and the GBM. Another important example is the Waxman Block Model (WBM) where the edge probabilities decrease exponentially with the distance. The SGBM is similar to the model of [2], but importantly we do not assume the knowledge of nodes’ positions.

In this paper, we propose a generalization of standard spectral clustering based on a higher-order eigenvector of the adjacency matrix. This eigenvector is selected using the average intra- and inter-community degrees, and is not necessarily the Fiedler vector. The goal of the present work is to show that this algorithm performs well both theoretically and practically on SGBM graphs.

Our specific contributions are as follows. We establish weak consistency of higher-order spectral clustering on the SGBM in the dense regime, where the average degrees are proportional to the number of nodes. With a simple additional step, we also establish strong consistency. One important ingredient of the proof is the characterization of the spectrum of the clustered geometric graphs, and can be of independent interest. In particular, it shows that the limiting spectral measure can be expressed in terms of the Fourier transform of the connectivity probability functions. Additionally, our numerical simulations show that our method is effective and efficient even for graphs of modest size. Besides, we also illustrate by a numerical example the unsuitability of the Fiedler vector for community recovery in some situations.

Let us describe the structure of the paper. We introduce in Section 2 the Soft Geometric Block Model and the main notations. The characterization of the limiting spectrum is given in Section 3. This characterization will be used in Section 4 to establish the consistency of higher-order spectral clustering in dense SGBM graphs. Finally, Section 5 shows numerical results and Section 6 concludes the paper with a number of interesting future research directions.

2 Model Definition and Notations

2.1 Notations

Let \(\mathbf {T}^d = \mathbb {R}^d / \mathbb {Z}^d\) be the flat unit torus in dimension d represented by \(\left[ -\frac{1}{2}, \frac{1}{2} \right] ^d\). The norm \(\ell _{\infty }\) in \(\mathbb {R}^d\) naturally induces a norm on \(\mathbf {T}^d\) such that for any vector \(x = (x_1, \ldots , x_d) \in \mathbf {T}^d\) we have \(\Vert x \Vert = \max \limits _{1 \le i \le d} |x_i|\).

For a measurable function \(F : \mathbf {T}^d \rightarrow \mathbb {R}\) and \(k \in \mathbb {Z}^d\), we denote \(\widehat{F}(k) = \int _{\mathbf {T}^d} F(x) e^{-2i\pi \langle k,x \rangle } \, dx\) the Fourier transform of F. The Fourier series of F is given by

For two integrable functions \(F, \, G: \mathbf {T}^d \rightarrow \mathbb {R}\), we define the convolution operation \(F * G (y) \ = \ \int _{\mathbf {T}^d} F(y-x) G(x) \, d x\) and \(F^{*m} = F * F * \dots * F\) (m times). We recall that \(\widehat{F * G}(k) = \widehat{F}(k) \widehat{G}(k)\).

2.2 Soft Geometric Block Model

A Soft Geometric Block Model (SGBM) is defined by a dimension d, a number of nodes n, a set of blocks K and a connectivity probability function \(F : \mathbf {T}^d \times K \times K \rightarrow [0, 1]\). The node set is taken as \(V = [n]\). The model is parametrized by a node labelling \(\sigma : V \rightarrow K\) and nodes’ positions \(X = (X_1, \dots , X_n) \in \left( \mathbf {T}^d \right) ^n\). We suppose that \(F(\cdot , \sigma , \sigma ') = F(\cdot , \sigma ', \sigma )\) and for any \(X \in \mathbf {T}^d\), F(X) depends only on the norm \(\Vert X\Vert \). The probability of appearance of an edge between nodes i and j is defined by \(F\left( X_i-X_j, \sigma _i, \sigma _j \right) \). Note that this probability depends only on the distance between \(X_i\) and \(X_j\) and the labels \(\sigma _i, \sigma _j\). Consequently, the model parameters specify the distribution

of the adjacency matrix \(A = (A_{ij})\) of a random graph.

Furthermore, for this work we assume that the model has only two equal size blocks, i. e., \(K = \{1, 2\}\), and \(\sum _{i = 1}^n 1(\sigma _i = 1) = \sum _{i = 1}^n 1(\sigma _i = 2) = \frac{n}{2}\). The labels are assigned randomly, that is, the set \(\{i \in [n] :\sigma _i = 1\}\) is chosen randomly over all the \(\frac{n}{2}\)-subsets of [n]. We assume that the entries of X and \(\sigma \) are independent and \(\forall i \in V\), \(X_i\) is uniformly distributed over \(\mathbf {T}^d\). Finally, suppose that for any \(x \in \mathbf {T}^d\)

where \(F_\mathrm{in}, F_\mathrm{out}: \mathbf {T}^d \rightarrow [0,1]\) are two measurable functions. We call these functions connectivity probability functions.

The average intra- and inter-community edge densities are denoted by \(\mu _\mathrm{in}\) and \(\mu _\mathrm{out}\), respectively. Their expressions are given by the first Fourier modes of \(F_\mathrm{in}\) and \(F_\mathrm{out}\):

These quantities will play an important role in the following, as they represent the intensities of interactions between nodes in the same community and nodes in different communities. In particular, the average inside community degree is \(\left( \frac{n}{2}-1 \right) \mu _\mathrm{in}\), and the average outside community degree is \(\frac{n}{2}\mu _\mathrm{out}\).

Example 1

An SGBM where \(F_\mathrm{in}(x) = p_\mathrm{in}\) and \(F_\mathrm{out}(x) = p_\mathrm{out}\) with \(p_\mathrm{in}, p_\mathrm{out}\) being constants is an instance of the Stochastic Block Model.

Example 2

An SGBM where \(F_\mathrm{in}(x) = 1(\Vert x\Vert \le r_\mathrm{in})\), \(F_\mathrm{out}(x) = 1(\Vert x\Vert \le r_\mathrm{out})\) with \(r_\mathrm{in}, r_\mathrm{out}\in \mathbb {R}_+\) is an instance of the Geometric Block Model introduced in [8].

Example 3

We call Waxman Block Model (WBM) an SGBM with \(F_\mathrm{in}(x) = \min ( 1, q_\mathrm{in}\mathrm{e}^{-s_\mathrm{in} || x || })\), \(F_\mathrm{out}(x) = \min (1, q_\mathrm{out}\mathrm{e}^{-s_\mathrm{out}|| x || } )\). This is a clustered version of the Waxman model [16], which is a particular case of soft geometric random graphs [12].

Formally, clustering or community recovery problem is the following problem: given the observation of the adjacency matrix A and the knowledge of \(F_\mathrm{in}, F_\mathrm{out}\), we want to recover the latent community labelling \(\sigma \). Given an estimator \(\widehat{\sigma }\) of \(\sigma \), we define the loss \(\ell \) as the ratio of misclassified nodes, up to a global permutation \(\pi \) of the labels: \( \ell \left( \sigma , \widehat{\sigma }\right) \ = \ \frac{1}{n} \min _{\pi \in \mathcal {S}_2} \sum _{i} 1\left( \sigma _i \not = \pi \circ \widehat{\sigma }_i \right) . \) Then, \(\widehat{\sigma }\) is said to be weakly consistent (or equivalently, achieves almost exact recovery) if

and strongly consistent (equivalently, achieves exact recovery) if

3 The Analysis of Limiting Spectrum

3.1 Limit of the Spectral Measure

Theorem 1

Consider an SGBM defined by (1)-(2). Assume that \(F_\mathrm{in}(0), F_\mathrm{out}(0)\) are equal to the Fourier series of \(F_\mathrm{in}(\cdot ),F_\mathrm{out}(\cdot )\) evaluated at 0. Let \(\lambda _1, \dots , \lambda _n\) be the eigenvalues of A, and

be the spectral measure of the matrix \(\frac{1}{n}A\). Then, for all Borel sets B with \(\mu \left( \partial B \right) = 0\) and \(0 \not \in \bar{B}\), a.s.,

where \(\mu \) is the following measure:

Remark 1

The limiting measure \(\mu \) is composed of two terms. The first term, \(\sum _{k \in \mathbb {Z}^d} \delta _{ \frac{\widehat{F}_\mathrm{in}(k) + \widehat{F}_\mathrm{out}(k)}{2}}\) corresponds to the spectrum of a random graph with no community structure, and where edges between two nodes at distance x are drawn with probability \(\frac{F_\mathrm{in}(x) + F_\mathrm{out}(x)}{2}\). In other words, it is the null-model of the considered SGBM. Hence, the eigenvectors associated with those eigenvalues bear no community information, but only geometric features.

On the contrary, the second term \(\sum _{k \in \mathbb {Z}^d} \delta _{ \frac{\widehat{F}_\mathrm{in}(k) - \widehat{F}_\mathrm{out}(k)}{2}}\) corresponds to the difference between intra- and inter-community edges. In particular, as we shall see later, the ideal eigenvector for clustering is associated with the eigenvalue \(\widetilde{\lambda }\) closest to \( \lambda _* = n \frac{\widehat{F}_\mathrm{in}(0) - \widehat{F}_\mathrm{out}(0)}{2} \). Other eigenvectors might mix some geometric and community features and hence are harder to analyze.

Last, the eigenvalue \( \widetilde{\lambda }\) is not necessarily the second largest eigenvalue, as the ordering of eigenvalues here depends on the Fourier coefficients \(\widehat{F}_\mathrm{in}(k)\) and \(\widehat{F}_\mathrm{out}(k)\), and is in general non trivial.

Remark 2

The assumptions on \(F_\mathrm{in}(0)\) and \(F_\mathrm{out}(0)\) are validated for a wide range of reasonable connectivity functions. For instance, by Dini’s criterion, all the functions that are differentiable at 0 satisfy these conditions. Another appropriate class consists of piecewise \(C^1\) functions that are continuous at 0 (this follows from the Dirichlet conditions).

Proof

The outline of the proof of Theorem 1 follows closely [4]. First, we show that \(\forall m \in \mathbb {N}\), \(\lim \limits _{n \rightarrow \infty } \, \mathbb {E}\, \mu _n \left( P_m \right) = \mu (P_m)\) where \(P_m(t) = t^m\). Second, we use Talagrand’s concentration inequality to prove that \(\mu _n(P_m)\) is not far from its mean, and conclude with Borel–Cantelli lemma.

(i) By Lemma 1 in the Appendix, in order to establish the desired convergence it is enough to show that \(\lim \limits _{n \rightarrow \infty } \mathbb {E}\mu _n \left( P_m \right) = \mu (P_m)\) for any \(m\in \mathbb {N}\). First,

By definition,

with \(\alpha = (i_1, \dots , i_m) \in [n]^m \) and \(i_{m+1} = i_1\). We denote \(\mathcal {A}^m_n\) the set of m-permutations of [n], that is \(\alpha \in \mathcal {A}^m_n\) iff \(\alpha \) is an m-tuple without repetition. We have,

where \(R_m = \sum \limits _{ \alpha \in [n]^m \backslash \mathcal {A}^m_n } \, \prod _{j=1}^m A_{i_j, i_{j+1} } \).

We first bound the quantity \(R_m\). Since \(| A_{ij}| \le 1\), we have

where we used \(\dfrac{n!}{(n-m)! } = n^m - n^{m-1} \sum _{i=0}^{m-1} i + o(n^{m-1}) \). Hence

Moreover,

where \(G(\alpha ) = \int _{(\mathbf {T}^d ) ^m } \prod _{j=1}^m F( x_{i_j} - x_{i_{j+1}}, \sigma _{i_j}, \sigma _{i_{j+1} } ) dx_{i_1} \dots dx_{i_m} \) for \(\alpha \in \mathcal {A}^m_n\).

Let us first show that the value of \(G(\alpha )\) depends only on the number of consecutive indices corresponding to the nodes from the same community. More precisely, let us define the set \(\mathcal S(\alpha ) = \{ j \in [m] : \sigma _{i_j} = \sigma _{i_{j+1} } \}\). Using Lemma 2 in the Appendix and the fact that the convolution is commutative, we have

We introduce the following equivalence relationship in \(\mathcal {A}^m_n\): \(\alpha \sim \alpha '\) if \(|\mathcal S(\alpha )| = |\mathcal S(\alpha ')|\). We notice than \(G(\cdot )\) is constant on each equivalence class, and equals to \(F_\mathrm{in}^{* p} * F_\mathrm{out}^{* (m-p)} (0)\) for any \(\alpha \in \mathcal {A}^m_n \) such that \(|\mathcal S(\alpha )| = p\).

Then, let us calculate the cardinal of each equivalence class with \(|\mathcal S(\alpha )| = p\). First of all, we choose the set \(\mathcal S(\alpha )\) which can be done in \(\left( {\begin{array}{c}m\\ p\end{array}}\right) \) ways if \(m-p\) is even and cannot be done if \(m-p\) is odd. The latter follows from the fact that p (the number of ‘non-changes’ in the consecutive community labels) has the same parity as m (the total number of indices) since \(i_{m+1} = i_1\). The set \(\mathcal S(\alpha )\) defines the community labels up to the flip of communities since \(\sigma _{i_j} = \sigma _{i_{j+1}}\) for any \(j \in \mathcal S(\alpha )\) and \(\sigma _{i_j} \ne \sigma _{i_{j+1}}\) for \(j \in [m] \backslash \mathcal S(\alpha )\).

Let \(N_1(\alpha )\) be the number of indices \(i_j\) with \(\sigma _{i_j} = 1\). Consider first the case \(\sigma _{i_1} = 1\) and note that \(N_1(\alpha )\) is totally defined by the set \(\mathcal S(\alpha )\). There are \(\frac{n}{2}\) possible choices for \(i_1\). Now we have two possibilities. If \(\sigma _{i_1} = \sigma _{i_2}\) then we have \(\frac{n}{2}-1\) possible choices for the index \(i_2\) (since \(\alpha \in \mathcal A_n^m\)). Otherwise, if \(\sigma _{i_1} \ne \sigma _{i_2}\) then the index \(i_2\) can be chosen in \(\frac{n}{2}\) ways. Resuming the above operation, we choose \(N_1(\alpha )\) indices from the first community, and it can be done in \(n/2(n/2 - 1)\ldots (n/2 - N_1(\alpha )) \) ways. The indices from the second community can be chosen in \(n/2(n/2 - 1) \ldots (n/2 - (m - N_1(\alpha )))\) ways. Thus in total the number of possible choices of indices is

The same reasoning applies if \(\sigma _{i_1} =2\). Hence, when n goes to infinity, the cardinal of each equivalence class is

This can be rewritten as

Hence,

Therefore, equations (3), (4) and (5) give us:

Finally, since \(F_\mathrm{in}, F_\mathrm{out}\) are equal to their Fourier series at 0, and using \( \widehat{F * G} (k) = \widehat{F}(k) \widehat{G}(k)\), we have

(ii) For each \(m \ge 1\), and n fixed, we define

where \(SGBM(F_\mathrm{in}, F_\mathrm{out})\) denotes the set of adjacency matrices of an SGBM random graph with connectivity functions \(F_\mathrm{in}, F_\mathrm{out}\). Note that \(Q_m(A) = n \mu _n(P_m)\).

Let \(A, \widetilde{A}\) be two adjacency matrices. We denote the Hamming distance by \({\mathrm{d}_\mathrm{Ham}}\left( A, \widetilde{A} \right) = \sum _{i=1}^n \sum _{j=1}^n 1( A_{ij} \not = \widetilde{A}_{ij})\). Using Lemma 5 in the Appendix, we show that the function \(Q_m\) is (m/n)–Lipschitz for the Hamming distance:

Let \(M_m\) be the median of \(Q_m\). Talagrand’s concentration inequality [14, Proposition 2.1] states that

which after integrating over all t gives

since \(\mathbb {E}X = \int _{0}^\infty \mathbb {P}(X>t) dt\) for any positive random variable X. The constant \(C_m\) is equal to \(8 m \int _0^\infty e^{-u^2} du\).

Moreover,

Let \(s > 0\). Since \(C_m / n^2\) goes to 0 when n goes to infinity, we can pick n large enough such that \(s > \frac{C_m}{n^2}\). Thus, using again inequality (8), we have

However, by (6), \(\lim \limits _{n \rightarrow \infty } \mathbb {E}\mu _n(P_m) = \mu (P_m)\). Hence \(\mu _n(P_m)\) converges in probability to \(\mu (P_m)\). Let \(s_n = \frac{1}{n^\kappa }\) with \(\kappa > 0\), and

Since \( \sum _{n \in \mathbb {N}} \epsilon _n < \infty \) when \(\kappa < 2\), an application of Borel–Cantelli lemma shows that the convergence holds in fact almost surely. This concludes the proof. \(\square \)

3.2 Conditions for the Isolation of the Ideal Eigenvalue

As noticed in Remark 1, the ideal eigenvector for clustering is associated with the eigenvalue of the adjacency matrix A closest to \(n \frac{\mu _\mathrm{in}- \mu _\mathrm{out}}{2}\) (recall that \(\mu _\mathrm{in}= \widehat{F}_\mathrm{in}(0)\) and \(\mu _\mathrm{out}= \widehat{F}_\mathrm{out}(0)\)). The following proposition shows that this ideal eigenvalue is isolated from other eigenvalues under certain conditions.

Proposition 1

Consider the adjacency matrix A of an SGBM defined by (1)-(2), and assume that:

with \(\mu _\mathrm{in}\ne \mu _\mathrm{out}\). Then, the eigenvalue of A closest to \(n \frac{\mu _\mathrm{in}- \mu _\mathrm{out}}{2}\) is of multiplicity one. Moreover, there exists \(\epsilon > 0\) such that for large enough n every other eigenvalue is at distance at least \(\epsilon n\).

Proof

Let \(\lambda _1, \dots , \lambda _n\) be the eigenvalues of A. Let \(i^* \in \mathrm{argmin}_{i \in [n]} \left| \frac{\lambda _i}{n} - \frac{\mu _\mathrm{in}- \mu _\mathrm{out}}{2} \right| \). We shall show that there exists \(\epsilon >0\) such that for large enough n, we have for all \(i \not = i^*\):

Due to condition (9), and the fact that

there is some fixed \(\epsilon _1 > 0\) such that

Similarly, condition (10) ensures the existence of \(\epsilon _2 >0\) such that

Denote \(\epsilon _3 = \frac{|\mu _\mathrm{in}- \mu _\mathrm{out}|}{4}\). Let \(\epsilon = \min \left( \epsilon _1, \epsilon _2, \epsilon _3\right) \), and consider the interval \(B = \left[ \frac{\mu _\mathrm{in}- \mu _\mathrm{out}}{2} - \epsilon , \frac{\mu _\mathrm{in}- \mu _\mathrm{out}}{2} + \epsilon \right] \). By Theorem 1, a.s.,

Therefore, for n large enough the only eigenvalue of A in the interval B is \(\lambda _{i^*}\). \(\square \)

The following proposition shows that conditions (9) and (10) of Proposition 1 are almost always verified for a GBM.

Proposition 2

Consider the d-dimensional GBM model, where \(F_\mathrm{in}, F_\mathrm{out}\) are 1-periodic, and defined on the flat torus \(\mathbf {T}^d\) by \(F_\mathrm{in}(x) = 1(\Vert x\Vert \le r_\mathrm{in})\) and \(F_\mathrm{out}(x) = 1(\Vert x\Vert \le r_\mathrm{out})\), with \(r_\mathrm{in}> r_\mathrm{out}> 0\). Denote by \(\mathcal B_+\) and \(\mathcal B_-\) the sets of parameters \(r_\mathrm{in}\) and \(r_\mathrm{out}\) defined by negation of conditions (9) and (10):

Then these sets of ‘bad’ parameters are of zero Lebesgue measure:

Hence for \(\mathcal B = \mathcal B^+ \cup \mathcal B^-\)

Proof

It is clear that

Thus, it is enough to show that \(\mathrm{Leb}(\mathcal B^+) = 0\) and \(\mathrm{Leb}(\mathcal B^-) = 0\). We shall establish the first equality, and the second equality can be proved similarly.

By Lemma 3 in the Appendix, the condition (9) for given functions \(F_\mathrm{in}\) and \(F_\mathrm{out}\) is as follows:

Notice that \(\lim _{k_j \rightarrow \infty } \mathrm{sinc}(2\pi r_\mathrm{in}k_j) = 0\) and \(\lim _{k_j \rightarrow \infty } \mathrm{sinc}(2\pi r_\mathrm{out}k_j) = 0\) while the right-hand side of the above equation is fixed. Therefore, this equation can hold only for k from a finite set \(\mathcal K\). Let us fix some \(k = (k_1, \ldots , k_d) \in \mathcal K\) and denote

Let us now fix \(r_\mathrm{in}\), and consider the condition defining \(\mathcal B_k^+\) as an equation on \(r_\mathrm{out}\). Define the functions

Then the mentioned equation takes the form

Consider the function \(h_k: {\mathbb {C}} \rightarrow {\mathbb {R}}\):

Clearly, this function coincides with \(f_k\) on \({\mathbb {R}}\). Moreover, it is holomorphic in \({\mathbb {C}}\), as \(\mathrm{sinc}(z)\) is holomorphic (it can be represented by the series \(\sum _{n = 0}^{\infty } \frac{(-1)^n}{(2n+1)!} z^{2n}\)), and the product of holomorphic functions is again holomorphic. But then the derivative \(h'_k(z)\) is also holomorphic, therefore, it has a countable number of zeros in \({\mathbb {C}}\). Clearly, \(h'_k \equiv f'_k\) on \({\mathbb {R}}\), which yields that \(f'_k\) has a countable number of zeros in \({\mathbb {R}}\).

Hence, \({\mathbb {R}}_+\) is divided into a countable number of intervals on which the function \(f_k(x)\) is strictly monotone. That is, \({\mathbb {R}}_+ = \sqcup _{i = 0}^{\infty } [a_i(k), b_i(k)]\) where \(f_{k, i} = f_{k} \bigr |_{[a_i(k), b_i(k)]}\) is strictly monotone. Then the function \(f_{k, i}^{-1}(x)\) is correctly defined and, since \(f_{k, i}\) is measurable and injective, \(f_{k, i}^{-1}\) is measurable as well. Consequently, there is a unique solution \(r_\mathrm{out}= f_{k, i}^{-1}(g_k(r_\mathrm{in}))\) of equation (11) for \(r_\mathrm{in}\in [\min f_{k,i}; \max f_{k,i}]\). If \(r_\mathrm{in}\not \in [\min f_{k,i}; \max f_{k,i}]\), there is no solution at all.

Therefore, \(\mathcal B^+_{k, i} = \left\{ \left( r_\mathrm{in}, f_{k, i}^{-1}(g_k(r_\mathrm{in})) \right) :r_\mathrm{in}\in [\min f_{k,i}; \max f_{k,i}] \right\} \) is the graph of some measurable function in \({\mathbb {R}}_+^2\). Since such a graph has a zero Lebesgue measure (see e.g., [17, Lemma 5.3]), we have:

Hence, we can conclude that

Carrying out similar argumentation for \(\mathcal B^-\) completes the proof. \(\square \)

4 Consistency of Higher-Order Spectral Clustering

In this section we show that spectral clustering based on the ideal eigenvector (see Algorithm 1) is weakly consistent for SGBM (Theorem 2). We then show that a simple extra step can in fact achieve strong consistency.

Remark 3

The worst case complexity of the eigenvalue factorization is \(O\left( n^3 \right) \) [5]. However, when the matrix is sufficiently sparse and the eigenvalues are well separated, the empirical complexity can be close to O(kn), where k is the number of required eigenvalues [5]. Moreover, since Algorithm 1 uses only the sign of eigenvector elements, a quite rough accuracy can be sufficient for classification purposes.

4.1 Weak Consistency of Higher-Order Spectral Clustering

Theorem 2

Let us consider the d-dimensional SGBM with connectivity probability functions \(F_{in}\) and \(F_{out}\) satisfying conditions (9)-(10). Then Algorithm 1 is weakly consistent. More precisely, Algorithm 1 misclassifies at most \(O(\log n)\) nodes.

Proof

Let us introduce some notations. Recall that \(\mu _\mathrm{in}= \widehat{F}_\mathrm{in}(0)\) and \(\mu _\mathrm{out}= \widehat{F}_\mathrm{out}(0)\). In the limiting spectrum, the ideal eigenvalue for clustering is

We consider the closest eigenvalue of A to \(\lambda _*\):

Also, let \(\widetilde{v}\) be the normalized eigenvector corresponding to \(\widetilde{\lambda }\). In this proof, the Euclidean norm \(\Vert \cdot \Vert _2\) is used.

The plan of the proof is as follows. We consider the vector

where we supposed without loss of generality that the n/2 first nodes are in Cluster 1, and the n/2 last nodes are in Cluster 2. The vector \(v_*\) gives the perfect recovery by the sign of its coordinates. We shall show that with high probability for some constant \(C > 0\)

We say that an event occurs with high probability (w. h. p.) if its probability goes to 1 as \(n\rightarrow \infty \). With the bounding (12), we shall then show that at most o(n) of entries of \(\widetilde{v}\) have a sign that differs from the sign of the respective entry in \(v_*\); hence \(\widetilde{v}\) retrieves almost exact recovery.

In order to establish inequality (12) we shall use the following theorem from [10].

Theorem 3

Let A be a real symmetric matrix. If \(\widetilde{\lambda }\) is the eigenvalue of A closest to \(\rho (v) = \frac{v^T A v}{v^T v}\), \(\delta \) is the separation of \(\rho \) from the next closest eigenvalue and \(\widetilde{v}\) is the eigenvector corresponding to \(\widetilde{\lambda }\), then

First we deal with \(\rho (v_*)\). Since \(v_*\) is normalized and real-valued (by the symmetry of A), we have

Denote \(u = Av_*\). Then, obviously,

It is clear that each entry \(A_{ij}\) with \(i \ne j\) is a Bernoulli random variable with the probability of success either \(\mu _\mathrm{in}\) or \(\mu _\mathrm{out}\). This can be illustrated by the element-wise expectation of the adjacency matrix:

Let us consider the first term in the right-hand side of (13) for \(i \le n/2\). Since \(A_{ij}\) are independent for fixed i, it is easy to see that \(Y_i := \sum _{j = 1}^{n/2}{A_{ij}} \sim \mathrm {Bin}(n/2-1, \mu _\mathrm{in})\) with the expectation \({\mathbb {E}}Y_i = (n/2-1)\mu _\mathrm{in}\). Then we can use the Chernoff bound to estimate a possible deviation from the mean. For any \(0< t < 1\)

Let us take \(t = \frac{2 \sqrt{\log n}}{\sqrt{(n/2 - 1)\mu _\mathrm{in}}}\). Then for large enough n,

Similarly,

and

Denote \(\gamma _n = \sqrt{2(\mu _\mathrm{in}+ \mu _\mathrm{out}) \log n}\) and notice that \(\gamma _n = \varTheta (\sqrt{\log n})\). By the union bound, we have for large enough n

By the same argumentation,

Now let us calculate \(\rho (v_*)\):

We already established that \(u_i \sim (\mu _\mathrm{in}- \mu _\mathrm{out}) \frac{\sqrt{n}}{2}\) for \(i \le \frac{n}{2}\) (which means that \(\lim \frac{2 u_i}{(\mu _{in} - \mu _{out})\sqrt{n}} = 1\) w.h.p.) and, therefore, that \(\frac{1}{\sqrt{n}} \sum _{i = 1}^{n/2} u_i \sim (\mu _\mathrm{in}- \mu _\mathrm{out}) \frac{n}{4}\). More precisely, by (16),

In the same way, by (17),

Finally,

Now let us denote \(w = Av_* - \rho (v_*) v_* = u - \rho (v_*) v_*\). As we already know, \(u_i \sim (\mu _\mathrm{in}- \mu _\mathrm{out}) \frac{\sqrt{n}}{2}\) and \((\rho (v_*) v_*)_i \sim (\mu _\mathrm{in}- \mu _\mathrm{out}) \frac{\sqrt{n}}{2}\) for \(i \le \frac{n}{2}\). Clearly, for \(i \le \frac{n}{2}\)

Then

A similar bound can be derived for the case \(i > n/2\). Taking into account that \(\rho (v_*)\) does not depend on i, using the union bound and equations (15) and (18), we get that

One can readily see that \(\Vert w\Vert _2 \le \sqrt{n \cdot \max _i w^2_i} = \sqrt{n} \max _i |w_i|\). Thus, we finally can bound the Euclidean norm of the vector w:

Now we can use Theorem 3. According to this result,

where \(\delta = \min _i |\lambda _i(A) - \rho (v_*)|\) over all \(\lambda _i \ne \widetilde{\lambda }\). Since we have assumed that (9) and (10) hold, by Proposition 1, \(\delta > \epsilon n\). Then, since \(v_*\) is normalized, a simple geometric consideration guarantees that

Let us denote the number of errors by

If we now remember that the vector \(v_*\) consists of \(\pm \frac{1}{\sqrt{n}}\), it is clear that for any i with \(\mathrm{sign}((v_*)_i) \ne \mathrm{sign}(\widetilde{v}_i)\)

The number of such coordinates is r. Therefore,

Then, by (19), the following chain of inequalities holds:

Hence, with high probability

Thus, the vector \(\widetilde{v}\) provides almost exact recovery. This completes the proof. \(\square \)

4.2 Strong Consistency of Higher-Order Spectral Clustering with Local Improvement

In order to derive a strong consistency result, we shall add an extra step to Algorithm 1. Given \(\widetilde{\sigma }\), the prediction of Algorithm 1, we classify each node to be in the community where it has the most neighbors, according to the labeling \(\widetilde{\sigma }\). We summarize this procedure in Algorithm 2, and Theorem 4 states the exact recovery result.

Remark 4

The local improvement step runs in \(O(n d_\mathrm{max})\) operations, where \(d_\mathrm{max}\) is the maximum degree of the graph. Albeit the local improvement step being convenient for the theoretical proof, we will see in Section 5 (Figure 3) that in practice Algorithm 1 already works well, often giving 100% accuracy even without local improvement.

Theorem 4

Let us consider the d-dimensional SGBM defined by (1)-(2), and connectivity probability functions \(F_\mathrm{in}\) and \(F_\mathrm{out}\) satisfying conditions (9)-(10). Then Algorithm 2 provides exact recovery for the given SGBM.

Proof

We need to prove that the almost exact recovery of Algorithm 1 (established in Theorem 2) can be transformed into exact recovery by the local improvement step. This step consists in counting neighbours in the obtained communities. For each node i we count the number of neighbours in both supposed communities determined by the sign of the vector \(\widetilde{v}\) coordinate:

According to Algorithm 2, if \(\widetilde{Z}_1(i) > \widetilde{Z}_2(i)\), we assign the label \(\widehat{\sigma }_i = 1\) to node i, otherwise we label it as \(\widehat{\sigma }_i = 2\). Suppose that some node i is still misclassified after this procedure and our prediction does not coincide with the true label: \(\widehat{\sigma }_i \ne \sigma _i\). Let us assume without loss of generality that \(\sigma _i = 1\) and, therefore, \(\widehat{\sigma }_i = 2\). Then, clearly, \(\widetilde{Z}_2(i) > \widetilde{Z}_1(i)\).

Let us denote by \(Z_1(i)\) and \(Z_2(i)\) degrees of node i in the communities defined by the true labels \(\sigma \):

Since \(\mathrm{sign}(\widetilde{v}_j)\) coincides with the true community partition for all but \(C\log n\) nodes (see the end of the proof of Theorem 2),

which implies that

Hence, taking into account that \(\widetilde{Z}_2(i) \, > \, \widetilde{Z}_1(i)\),

which means that the inter-cluster degree of node i is asymptotically not less than its intra-cluster degree (since \(Z_j(i) = \varTheta (n) \;\; w. h. p.\)). Intuitively, this should happen very seldom, and Lemma 4 in the Appendix gives an upper bound on the probability of this event. Thus, by Lemma 4, for large n,

Then each node is classified correctly with high probability and Theorem 4 is proved. \(\square \)

5 Numerical Results

5.1 Higher-Order Spectral Clustering on 1-Dimensional GBM

Let us consider a 1-dimensional GBM, defined in Example 2. We first emphasize two important points of the theoretical study: the ideal eigenvector for clustering is not necesarily the Fiedler vector, and some eigenvectors, including the Fiedler vector, could correspond to geometric configurations.

Figure 1 shows the accuracy (i.e., the ratio of correctly labeled nodes, up to a global permutation of the labels if needed, divided by the total number of nodes) of each eigenvector for a realization of a 1-dimensional GBM. We see that, although the Fiedler vector is not suitable for clustering, there is nonetheless one eigevector that stands out of the crowd.

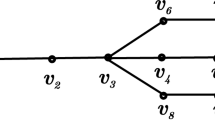

Then, in Figure 2 we draw the nodes of a GBM according to their respective position. We then show the clusters predicted by some eigenvectors. We see some geometric configurations (Figures 2a and 2c), while the eigenvector leading to the perfect accuracy corresponds to index 4 (Figure 2b).

Example of clustering done using the eigenvector associated to the k-th largest eigenvalue of the adjacency matrix of a 1-dimensional GBM (\(n = 150\), \(r_\mathrm{in}= 0.2\), \(r_\mathrm{out}= 0.05\)). For clarity edges are not shown, and nodes are positioned on a circle according to their true positions. The Fiedler vector (\(k = 2\)) is associated with a geometric cut, while the 4-th eigenvector corresponds to the true community labelling and leads to the perfect accuracy. The vector \(k = 8\) is associated with yet another geometric cut (Color figure online)

Figure 3 shows the evolution of the accuracy of Algorithms 1 and 2 when the number of nodes n increases. As expected, the accuracy increases with n. Moreover, we see no significant effect of using the local improvement of Algorithm 2. Thus, we conduct all the rest of numerical experiments with the basic Algorithm 1.

In Figure 4, we illustrate the statement of Proposition 2: for some specific values of the pair \((r_\mathrm{in}, r_\mathrm{out})\), the Conditions (9) and (10) do not hold, and Algorithm 1 is not guaranteed to work. We observe in Figure 4 that these pairs of bad values exactly correspond to the moments when the index of the ideal eigenvector jumps from one value to another.

Finally, we compare in Figure 5 the accuracy of Algorithm 1 with the motif counting algorithms presented in references [8] and [9]. Those algorithms consist in counting the number of common neighbors, and clustering accordingly. We call Motif Counting 1 (resp. Motif Counting 2) the algorithm of reference [8] (resp. of reference [9]). We thank the authors for providing us the code used in their papers. We observed that with present realizations the motif counting algorithms take much more time than HOSC takes. For example on a GBM with \(n = 3000\), \(r_\mathrm{in}= 0.08\) and \(r_\mathrm{out}= 0.04\), HOSC takes 8 seconds, while Motif Counting 1 takes 130 seconds and Motif Counting 2 takes 60 seconds on a laptop with 1.90GHz CPU and 15.5 GB memory.

5.2 Waxman Block Model

Let us now consider the Waxman Block Model introduced in Example 3. Recall that \(F_\mathrm{in}(x) = \min (1, q_\mathrm{in} e^{-s_\mathrm{in} x})\) and \(F_\mathrm{out}(x) = \min (1, q_\mathrm{out} e^{-s_\mathrm{out} x})\), where \(q_\mathrm{in}, q_\mathrm{out}, s_\mathrm{in}, s_\mathrm{out}\) are four positive real numbers. We have the following particular situations:

-

if \(s_\mathrm{out}= 0\), then \(F_\mathrm{out}(x) = q_\mathrm{out}\) and the inter-cluster interactions are independent of the nodes’ positions. If \(s_\mathrm{in} = 0\) as well, we recover the SBM;

-

if \(q_\mathrm{in}= e^{r_\mathrm{in}s_\mathrm{in} }\) and \(q_\mathrm{out}= e^{r_\mathrm{out}s_\mathrm{out}}\), then in the limit \(s_\mathrm{in}, s_\mathrm{out}\gg 1\) we recover the 1-dimensional GBM.

We show in Figure 6 the accuracy of Algorithm 1 on a WBM. In particular, we see that we do not need \(\mu _\mathrm{in}> \mu _\mathrm{out}\), and we can recover disassociative communities. However, there are obvious dips when \(q_\mathrm{in}\) is close to \(q_\mathrm{out}\) or \(s_\mathrm{in}\) is close to \(s_\mathrm{out}\). It is clear that if \(q_\mathrm{in}= q_\mathrm{out}\) on the left-hand side picture or \(s_\mathrm{in} = s_\mathrm{out}\) on the right-hand side picture, one cannot distinguish two communities in the graph. Thus, for small n, we observe some ranges around these ‘bad’ values where Algorithm 1 fails. As expected, the dips become narrower when n increases.

6 Conclusions and Future Research

In the present paper we devised an effective algorithm for clustering geometric graphs. This algorithm is close in concept to the classical spectral clustering method but it makes use of the eigenvector associated with a higher-order eigenvalue. It provides weak consistency for a wide class of graph models which we call the Soft Geometric Block Model, under some mild conditions on the Fourier transform of \(F_\mathrm{in}\) and \(F_\mathrm{out}\). A small adjustment of the algorithm leads to strong consistency. Moreover, our method was shown to be effective in numerical simulations even for graphs of modest size.

The problem stated in the current paper might be investigated further in several directions. One of them is a possible study on the SGBM with more than two clusters. The situation here is quite different from the SBM where the spectral clustering algorithm with few eigenvectors associated with the smallest non-zero eigenvalues provides good performance. In the SGBM even the choice of such eigenvectors is not trivial, since the ‘optimal’ eigenvalue for distinguishing two clusters is often not the smallest one.

Another natural direction of research is the investigation of the sparse regime, since all our theoretical results concern the case of degrees linear in n (this assumption is used for the analysis of the adjacency matrix spectrum and for finding the spectral gap around the ‘ideal’ eigenvalue \(\tilde{\lambda }\)). In sparser regimes, there are effective algorithms for some particular cases of the SGBM (e. g., for the GBM), but there is no established threshold when exact recovery is theoretically possible. Unfortunately, the method of the current paper without revision is not appropriate for this situation, and the technique will very likely be much more complicated.

Finally, considering weighted geometric graphs could be an interesting task for applications where clustering of weighted graphs is pertinent. For instance, the functions \(F_\mathrm{in}\) and \(F_\mathrm{out}\) can be considered as weights on the edges in a graph. We believe that most of the results of the paper may be easily transferred to this case.

References

Abbe, E.: Community detection and stochastic block models: recent developments. J. Mach. Learn. Res. 18(1), 6446–6531 (2017)

Abbe, E., Baccelli, F., Sankararaman, A.: Community detection on Euclidean random graphs. arXiv preprint arXiv:1706.09942v5 (2017)

Aheizer, N.I., Kemmer, N.: The Classical Moment Problem and Some Related Questions in Analysis. Oliver & Boyd, Edinburgh (1965)

Bordenave, C.: Eigenvalues of Euclidean random matrices. Random Struct. Algorithms 33(4), 515–532 (2008)

Demmel, J.W., Marques, O.A., Parlett, B.N., Vömel, C.: Performance and accuracy of Lapack’s symmetric tridiagonal eigensolvers. SIAM J. Sci. Comput. 30(3), 1508–1526 (2008)

Fiedler, M.: A property of eigenvectors of nonnegative symmetric matrices and its application to graph theory. Czechoslov. Math. J. 25(4), 619–633 (1975)

Fortunato, S.: Community detection in graphs. Phys. Rep. 486(3–5), 75–174 (2010)

Galhotra, S., Mazumdar, A., Pal, S., Saha, B.: The geometric block model. In: Thirty-Second AAAI Conference on Artificial Intelligence (2018)

Galhotra, S., Mazumdar, A., Pal, S., Saha, B.: Connectivity of random annulus graphs and the geometric block model. Approximation, Randomization, and Combinatorial Optimization. Algorithms and Techniques (APPROX/RANDOM 2019) 145, 53 (2019)

Kahan, W., Parlett, B., Jiang, E.: Residual bounds on approximate eigensystems of nonnormal matrices. SIAM J. Numer. Anal. 19(3), 470–484 (1982)

Lei, J., Rinaldo, A.: Consistency of spectral clustering in stochastic block models. Ann. Stat. 43(1), 215–237 (2015)

Penrose, M.D.: Connectivity of soft random geometric graphs. Ann. Appl. Probab. 26(2), 986–1028 (2016)

Spielman, D.A., Teng, S.H.: Spectral partitioning works: planar graphs and finite element meshes. Linear Algebra Appl. 421(2), 284–305 (2007)

Talagrand, M.: A new look at independence. Ann. Probab. 24(1), 1–34 (1996)

Von Luxburg, U.: A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007)

Waxman, B.M.: Routing of multipoint connections. IEEE J. Sel. Areas Commun. 6(9), 1617–1622 (1988)

Wheeden, R.L., Zygmund, A.: Measure and Integral: An Introduction to Real Analysis. Chapman & Hall, New York (1977)

Zhang, Y., Rohe, K.: Understanding regularized spectral clustering via graph conductance. In: Advances in Neural Information Processing Systems, pp. 10631–10640 (2018)

Acknowledgements

This work has been done within the project of Inria - Nokia Bell Labs “Distributed Learning and Control for Network Analysis”.

The authors are most grateful to Laura Cottatellucci, Mounia Hamidouche and Mindaugas Bloznelis for discussions that helped to stimulate this research.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Auxiliary Results

A Auxiliary Results

1.1 A.1 Hamburger Moment Problem for the Limiting Measure

Lemma 1

Assume that \(F: \mathbf {T}^d \rightarrow \mathbb R\) is such that F(0) is equal to the Fourier series of F(x) evaluated at 0 and \(0 \le F(x) \le 1\). Consider the measure \(\mu \) defined by the function F:

Then \(\mu \) is defined uniquely by the sequence of its moments \(\{M_n\}_{n = 1}^{\infty }\).

Proof

It is enough to show that Carleman’s condition holds true for \(\mu \) (see [3]):

As one can easily see,

From the bounds \(0 \le F(x) \le 1\) it follows that \(\widehat{F}(k) \le 1\). Then it is clear that \(\widehat{F}^n(k) \le \widehat{F}(k)\) for any \(n \in \mathbb N\). We can write

Here we used the assumption that F(0) equals its Fourrier series evaluated at 0. Then

Thus, the series in the right-hand side of (20) is divergent and Carleman’s condition is verified. \(\square \)

1.2 A.2 m-times Convolution

Lemma 2

Let \(m\in \mathbb {N}\) and \(F_1, \dots , F_m\) be integrable functions over \(\mathbf {T}^d\). Then,

with the notation \(z_{m+1} = z_1\).

Proof

With the change of variable \(u_i = z_i - z_{i+1}\) for \(i=1, \dots , m-1\), we have

We notice that

Hence,

Therefore,

which ends the proof. \(\square \)

1.3 A.3 Fourier Transform of the Square Wave

Lemma 3

Let \(0< r < \frac{1}{2}\). Let \(F : \mathbb {R}^d \rightarrow \mathbb {R}\) be 1-periodic such that \(F(x) = 1\left( \Vert x\Vert \le r \right) \) for \(x \in \mathbf {T}^d\). Then,

where \(k = (k_1, \ldots , k_d) \in \mathbb Z^d\) and

Proof

We shall use the set \([-1/2, 1/2]^d\) as a representation of \(\mathbf {T}^d\). Let us first notice that for \(x \in [-1/2, 1/2]^d\)

Then

Let us consider some \(1 \le j \le d\). If \(k_j = 0\), we have \(\int _{-1/2}^{1/2} 1(|x_j| \le r) d x_j = \int _{-r}^r d x = 2r\). Moreover, for \(k_j \not = 0\),

Hence,

as was needed. \(\square \)

1.4 A.4 Number of Neighbours in Different Clusters

Lemma 4

Let us consider the SGBM with connectivity probability functions \(F_\mathrm{in}\) and \(F_\mathrm{out}\) for which \(\mu _\mathrm{in}= \widehat{F}_\mathrm{in}(0) > \widehat{F}_\mathrm{out}(0) = \mu _\mathrm{out}\). Denote by \(Z_\mathrm{in}(i)\) (resp., \(Z_\mathrm{out}(i)\)) the ‘intra-cluster’ (resp., ‘inter-cluster’) degree of i:

Denote \(\mathcal {B}_i := \left\{ Z_\mathrm{in}(i) - Z_\mathrm{out}(i) \, < \, \sqrt{2(\mu _\mathrm{in}+ \mu _\mathrm{out}) n \log n} \right\} \). Then

Proof

Let us fix \(i \in [n]\). Clearly, \(Z_\mathrm{in}(i) \sim \mathrm {Bin}(\frac{n}{2} - 1, \mu _\mathrm{in})\) and \(Z_\mathrm{out}(i) \sim \mathrm {Bin}(\frac{n}{2}, \mu _\mathrm{out})\). We again use Chernoff inequality (14). By this bound, for \(t = \frac{2\sqrt{\log n}}{\sqrt{(n/2-1)\mu _\mathrm{in}}}\) and large enough n

By the same reason, \(Z_{out}\) is well concentrated around its mean \(\mu _\mathrm{out}\frac{n}{2}\):

Therefore, since \(\mu _\mathrm{in}> \mu _\mathrm{out}\),

By the union bound,

which proves the lemma. \(\square \)

1.5 A.5 Trace Operator is Lipschitz

Lemma 5

Let \(A, \widetilde{A} \in \{0,1\}^{n \times n}\) be two adjacency matrices, and \(m \ge 1\). Then,

Proof

Since A and \(\widetilde{A}\) are adjacency matrices of graphs without self-loops, we have \({\text {Tr}}A = 0 = {\text {Tr}}\widetilde{A}\). Hence \(\left| {\text {Tr}}A - {\text {Tr}}\widetilde{A} \right| =0 \le \frac{1}{n} {\mathrm{d}_\mathrm{Ham}}\left( A,\widetilde{A} \right) \), and the statement holds for \(m = 1\).

Let us now consider \(m \ge 2\). We have

with the notation \(i_{m+1} = i_1\). The quantity \(\prod _{j=1}^m A_{i_j i_{j+1} }\) is equal to one if \(A_{i_j i_{j+1} } = 1\) for all \(j=1,\dots , m\), and equals zero otherwise. Hence, the difference \(\left| \prod _{j=1}^m A_{i_j i_{j+1} } - \prod _{j=1}^m \widetilde{A}_{i_j, i_{j+1}} \right| \) is non-zero and is equal to one in two scenarii:

-

\(A_{i_j i_{j+1}}\) = 1 for all \(j = 1, \dots , m\), while there is a \(j'\) such that \(\widetilde{A}_{i_{j'} i_{j'+1}} = 0\),

-

there is a \(j'\) such that \(A_{i_{j'} i_{j'+1}} = 0\) and \(\widetilde{A}_{i_j i_{j+1}}\) = 1 for all \(j = 1, \dots , m\).

Thus,

But,

Similarly,

Therefore,

This leads to

where the last line holds since for all \(j = 1, \dots , m\) and \(m\ge 2\)

\(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Avrachenkov, K., Bobu, A. & Dreveton, M. Higher-Order Spectral Clustering for Geometric Graphs. J Fourier Anal Appl 27, 22 (2021). https://doi.org/10.1007/s00041-021-09825-2

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00041-021-09825-2