Abstract

We study diffusion-type equations supported on structures that are randomly varying in time. After settling the issue of well-posedness, we focus on the asymptotic behavior of solutions: our main result gives sufficient conditions for pathwise convergence in norm of the (random) propagator towards a (deterministic) steady state. We apply our findings in two environments with randomly evolving features: ensembles of difference operators on combinatorial graphs, or else of differential operators on metric graphs.

1 Introduction

Randomly switching dynamical systems stand in between deterministic evolution equations (where the dynamics of the system is prescribed and completely known a priori) and stochastic differential equations, where the dynamics is perturbed by the introduction of noise.

Such systems are described by a continuous component, which follows a (deterministic) evolution driven by an operator \(A_j\) selected from a class of operators \(\mathcal K= \{A_1, \dots , A_N\}\) by a discrete jump process.

These problems are related to a large (but somewhat disjoint) literature, which treats piecewise deterministic Markov processes [5, 11, 12, 29], switched dynamical systems [7], products of random matrices [22], and random walks in random environments [39, 40], with applications in biology [10], physics [9] or finance [38], for instance.

In the present paper, we study the asymptotic behavior of a class of random evolution problems that may be relevant in some applications. Our main results (Theorems 2.11 and 2.13 below) state that a system that switches randomly between parabolic evolution equations driven by contractive, compact analytic semigroups satisfying suitable additional conditions converges towards an orthogonal projector provided the process spends enough time in each state, and we are able to estimate the rate of convergence: we refer to Sect. 2 for the formulation of the theorems and to Sect. 3 for their proofs. As a motivation for our study, we provide in this section an example concerning the dynamics of the discrete heat equation on a system of randomly varying graphs. This example will be further analyzed in Sect. 4, which is devoted to the study of combinatorial graphs: there we discuss some further examples which relate our results to the existing literature. Finally, Sect. 5 is devoted to an application of our theory to a randomly switching evolution system on metric graphs. This section takes advantage of a novel formal definition of metric graphs [30] which can be exploited to verify the assumptions of our construction.

1.1 A Motivating Example

Let \(\mathsf {G}_1, \ldots , \mathsf {G}_N\) be a family of simple (i.e., with no loops or multiple edges) but not necessarily connected graphs on a fixed set of vertices \(\mathsf {V}\) with cardinality \(|\mathsf {V}|\). We consider the function space defined as the complex, finite-dimensional Hilbert space \(\mathbb C^\mathsf {V}\equiv \{u: \mathsf {V}\rightarrow \mathbb C\}\).

On every graph \(\mathsf {G}_k\) we introduce the graph Laplacian \(\mathcal L_k\) (for a formal definition, see Sect. 4), which (under our convention on the sign) is negative semi-definite and whose eigenvalue \(\lambda _1 =0\) has multiplicity equal to the number of connected components of \(\mathsf {G}_k\). The corresponding eigenspace is spanned by the indicator functions of the connected components. In particular, if \(\mathsf {G}_k\) is connected, then \(\ker \mathcal L_k = \langle \mathbb {1}\rangle \) is the space of constant functions on the vertices.

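It is known ([31, Ch. 4]) that the solution of the Cauchy problem

$$\begin{aligned} \left\{ \begin{array}{ll} u'(t) = \mathcal L_k u(t), & t \ge 0,\\ u(0) = f \in \mathbb C^\mathsf {V}, \end{array}\right. \end{aligned}$$

can be expressed in the form

$$\begin{aligned} u(t) = e^{t \mathcal L_k} f, \qquad t \ge 0, \end{aligned}$$

and in the limit \(t \rightarrow \infty \) it converges to the projection \({P_k}f\) of f onto the null space \(\ker \mathcal L_k\), which equals the average of f on each connected component of \(\mathsf {G}_k\).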
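The convergence to componentwise averages can be checked numerically. The following is a minimal sketch in plain Python, not taken from the paper: the helper names (`graph_laplacian`, `matmul`, `expm`), the five-vertex graph and the initial datum are all illustrative assumptions, and the matrix exponential is approximated by a simple scaling-and-squaring scheme that is only meant for small matrices.

```python
import math

def graph_laplacian(n, edges):
    """Laplacian with the sign convention L = adjacency - degree (negative semi-definite)."""
    L = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        L[i][j] += 1.0
        L[j][i] += 1.0
        L[i][i] -= 1.0
        L[j][j] -= 1.0
    return L

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def expm(A, t, terms=16):
    """Approximate e^{tA} by scaling and squaring with a truncated Taylor series."""
    n = len(A)
    norm = abs(t) * max(sum(abs(x) for x in row) for row in A)
    squarings = 0
    if norm > 0:
        squarings = max(0, math.ceil(math.log2(norm))) + 1
    h = t / 2 ** squarings  # after scaling, the norm of h*A is at most 1/2
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    result = [row[:] for row in term]
    for k in range(1, terms + 1):
        term = [[x * h / k for x in row] for row in matmul(term, A)]
        result = [[r + s for r, s in zip(rr, tr)] for rr, tr in zip(result, term)]
    for _ in range(squarings):
        result = matmul(result, result)
    return result

# Hypothetical graph on 5 vertices with connected components {0, 1, 2} and {3, 4}.
L = graph_laplacian(5, [(0, 1), (1, 2), (3, 4)])
f = [3.0, 0.0, 0.0, 1.0, 5.0]
S = expm(L, 30.0)
u = [sum(S[i][j] * f[j] for j in range(5)) for i in range(5)]
print([round(x, 6) for x in u])
```

For this choice of f the averages over the two components are 1 and 3, so the printed vector should be close to (1, 1, 1, 3, 3).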

Let us introduce a random mechanism of switching the graphs over time. Having fixed a probability space \((\Omega , \mathcal F, \mathbb P)\), we introduce a Markov chain \(\{X_k,\ k \ge 0\}\) with state space \(E = \{1, \dots , N\}\) (which defines the environment where the evolution takes place) and a sequence of increasing random times \(\{T_k,\ k \ge 0\}\), \(T_0 = 0\), such that the Cauchy problem is defined by the operator \(\mathcal L_{X_k}\) on the time interval \([T_k,T_{k+1})\):

$$\begin{aligned} \left\{ \begin{array}{ll} u'(t) = \mathcal L_{X_k} u(t), & t \in [T_k, T_{k+1}),\ k \ge 0,\\ u(0) = f \in \mathbb C^\mathsf {V}. \end{array}\right. \end{aligned}$$(1.1)

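We can associate with (1.1) the random propagator

$$\begin{aligned} S(t) := e^{(t - T_k) \mathcal L_{X_k}} e^{(T_k - T_{k-1}) \mathcal L_{X_{k-1}}} \cdots e^{(T_1 - T_0) \mathcal L_{X_0}}, \qquad t \in [T_k, T_{k+1}),\ k \ge 0, \end{aligned}$$

which maps each initial datum \(f\in \mathbb C^\mathsf {V}\) into the solution u(t) of (1.1) at time t. This settles the issue of well-posedness of (1.1). The main question we are going to address in this paper is however the following: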

(P) Does the random propagator \((S(t))_{t\ge 0}\) converge as \(t \rightarrow \infty \)? Towards which limit?

The asymptotic behavior of a random propagator \((S(t))_{t\ge 0}\) associated with problem (1.1) has not yet been studied in a general, possibly infinite-dimensional setting. Some results are known for finite-dimensional time-discrete dynamical systems, where the random propagator \((S(T_n))_{n\in \mathbb N}\) defined likewise is a product of random matrices (PRM for short): this theory dates back to the 1960s, see e.g. Furstenberg [20].

In Theorem 2.11 we show that under suitable assumptions on the random switching mechanism and on the involved operators, the random propagator converges towards the orthogonal projection on \(\bigcap _{j=1}^N \ker \mathcal L_j\); and in Theorem 2.13 we estimate the rate of convergence. In Sect. 2 we set the mathematical stage and then formulate both results; their proofs are given in Sect. 3, after collecting some necessary lemmata of probabilistic and operator theoretical nature. Our main results require an accurate analysis of the null spaces of the operators driving the relevant evolution equations (in our motivating example, the discrete Laplacians \({\mathcal L_k}\)): notice that even when all \(\ker \mathcal L_k\) have the same dimension, there is no reason why this should agree with the dimension of \(K:= \bigcap _{j=1}^N \ker \mathcal L_j\); describing the orthogonal projector onto K is therefore not an easy task. Coming back to our motivating example of graphs, we observe in Sect. 4 that K can be explicitly described in terms of the null space of a new operator A that is related to the Laplacians on the graphs \(\mathsf {G}_1,\ldots ,\mathsf {G}_N\) but acts on a different class of functions. The key point here is that in doing so we can relate the long time behavior of a Cauchy problem with random coefficients with that of an associated (deterministic) Cauchy problem supported on a different “union” structure. We are not going to elaborate on this functorial viewpoint, but content ourselves with discussing in Sect. 5 a different, more sophisticated setting where the same principle can be seen in action. While in our two main applications we focus for the sake of simplicity on heat equations, our abstract theory is by no means restricted to this scope: general reaction-diffusion equations, evolution equations driven by poly-harmonic operators, or even systems switching between these two classes could, for example, be studied as well, see Remark 2.14.
An easy application to a heat equation (on a fixed interval) with random boundary conditions is sketched in Example 2.1.

The case of combinatorial graphs is tightly related to the topic of random walks in random environments, see e.g. the classical surveys by Zeitouni [39, 40], which roughly speaking describe the behavior of a random walker who at each step finds herself moving in a new realization of a d-dimensional bond-percolation graph. We also mention the connection to the somewhat dual approach in [23], where Hussein and the third author develop a theory of evolution equations whose time domain is a (given!) tree-like metric graph: on each branch of the tree a different parabolic equation is considered. In comparison, in the present paper we restrict to the easiest possible case (the tree is simply \(\mathbb R_+\)), but its branches can have random length.

At the same time, if the evolution of \(\mathcal L(t)\) is, in fact, deterministic, then (1.1) is essentially a non-autonomous evolution equation; well-posedness theory of such problems is a classical topic of operator theory, while some criteria for exponential stability have been recently obtained in [2] in the context of diffusion on metric graphs: in comparison with ours, the conditions therein are much more restrictive in that each realization of the considered graph is assumed to be connected.

The convergence of piecewise deterministic Markov processes (or randomly switching systems) has been discussed in the literature, in particular concerning the ergodicity of the Markov processes [5, 11]. The results in [8] are concerned with the non-ergodicity of a switching system in the fast jump rate regime and open the path to similar results in [27].

2 Setting of the Problem and Main Results

In this section we introduce a general setting for abstract random evolution problems: we will subsequently show that our motivating problem (P) is but one special instance of a system that can be described in this way.

To begin with, we construct the random mechanism of switching by means of a semi-Markov process. These processes were introduced by Lévy [28] and Smith [36] in order to overcome the limitation induced by the exponential distribution of the jump-time intervals, and were further developed by Pyke [33, 34]. These models are widely used in the literature to model random evolution problems and, more generally, evolution in random media, see e.g. Korolyuk [26].

Let \((Z(t),\ t \ge 0)\) be a semi-Markov process taking values in a set E, which denotes a given set of indices, defined on a suitable probability space \((\Omega , \mathcal F, \mathbb P)\). By definition, this means that there exists a Markov renewal process \(\{(X_n, \tau _n): n\in \mathbb N\}\), where \(\{X_n\}\) is a Markov chain with values in E and \(\{\tau _n\}\) are the renewal times between jumps. The distribution function of \(\tau _n\) depends on the state of the Markov process \(X_n\) and, conditioned on \(X_n = x\), it is given by

If we denote by \(\theta _x\) the renewal time in the state x (i.e., the time spent in x before the next jump), then the distribution function of \(\theta _x\) is just \(\Phi _x(t)\).

If we introduce the counting process \(N(t) := \max \{n\,:\, T_n \le t\}\), then \(Z(t) = X_{N(t)}\). The joint distribution is given by the transition probability function q(x, y, t)

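By definition, for fixed t, \((x,y) \mapsto q(x,y,t)\) is a sub-Markovian transition function, i.e.,

$$\begin{aligned} q(x,y,t) \ge 0 \quad \hbox {and}\quad \sum _{y \in E} q(x,y,t) \le 1 \qquad \hbox {for all } x, y \in E. \end{aligned}$$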

The non-negative random variables \(\tau _n\) define the time intervals between jumps, while the Markov renewal times \(\{ T_n,\ n \in \mathbb N\}\) defined by

$$\begin{aligned} T_0 := 0, \qquad T_n := \sum _{k=1}^n \tau _k, \quad n \ge 1, \end{aligned}$$

are the regeneration times.

For simplicity, in the sequel we assume that the random variables \(\{\tau _n\}\) are independent and the distribution of \(\tau _{n+1}\) only depends on the state of the Markov chain \(X_n = x\). Therefore, the transition probability function can be represented in the form

$$\begin{aligned} q(x,y,t) = \pi (x,y)\, \Phi _x(t), \end{aligned}$$

where \(\big ( \pi (x,y) \big )\) is a Markov transition matrix and \(\Phi _x(t)\) are, for any \(x \in E\), the probability distribution functions of the renewal time in the state x.

Clearly, Markov chains and Markov processes with discrete state space are examples of semi-Markov processes (the first is associated with \(\tau _n \equiv 1\), the second with independent, exponentially distributed \(\tau _n\)). Our standing probabilistic assumptions are summarized in the following.

Assumption 2.1

\(Z = (Z(t))_{t \ge 0}\) is a semi-Markov process based on a Markov renewal process \(\{ (X_n,\tau _n):n\in \mathbb N\}\) over the state space \(E\times [0,\infty )\) such that

(1) the Markov transition matrix \(\big ( \pi (x,y) \big )\) defines an irreducible Markov chain with finite state space \(E = \{1, \dots , N\}\);

(2) the inter-arrival times \(\tau _n\) are either constant, or their distribution functions \(\Phi _x(t)\), for every \(x \in E\), have a finite continuous density function \(\phi _x(t) > 0\) for a.e. \(t > 0\); and

(3) the inter-arrival times \(\tau _n\) have finite expected value \(\mathbb E^x[\tau _n] = \mu _x > 0\).

The sequence of jump times associated to the process Z is \(T_0 = 0\), \(T_{n+1} = T_{n} + \tau _{n+1}\) for \(n \ge 0\).

Remark 2.2

Since the embedded Markov process X is irreducible, there exists a unique invariant distribution \(\rho = (\rho _1, \dots , \rho _N)\) for it.

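Moreover, this implies that the total time spent in any state by the semi-Markov process Z is infinite almost surely, and the fraction of time spent in \(x \in E = \{1, \dots , N\}\) satisfies

$$\begin{aligned} \lim _{t \rightarrow \infty } \frac{1}{t} \int _0^t \mathbb {1}_{\{Z(s) = x\}} \, \mathrm {d}s = \frac{\rho _x \mu _x}{\sum _{y \in E} \rho _y \mu _y} =: \Theta _x \qquad \mathbb P\hbox {-almost surely.} \end{aligned}$$(2.1)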
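The long-run fraction of time spent in each state can be explored by simulation. The sketch below is illustrative only: it assumes the standard renewal-theoretic limit \(\rho _x \mu _x / \sum _{y} \rho _y \mu _y\) for the occupation fraction, and the three-state transition matrix, the exponential sojourn times and the function name `occupation_fractions` are hypothetical choices that are compatible with Assumption 2.1 but do not come from the paper.

```python
import random

def occupation_fractions(pi, mean_sojourn, n_jumps, seed=0):
    """Simulate a semi-Markov process; return the fraction of time spent in each state."""
    rng = random.Random(seed)
    states = range(len(pi))
    time_in_state = [0.0] * len(pi)
    x = 0
    for _ in range(n_jumps):
        # Exponential sojourn time with mean mean_sojourn[x] (an illustrative choice).
        time_in_state[x] += rng.expovariate(1.0 / mean_sojourn[x])
        # Next state of the embedded Markov chain, drawn from row x of pi.
        x = rng.choices(states, weights=pi[x])[0]
    total = sum(time_in_state)
    return [s / total for s in time_in_state]

# Hypothetical irreducible chain on three states.
pi = [[0.0, 0.5, 0.5],
      [1.0, 0.0, 0.0],
      [1.0, 0.0, 0.0]]
rho = [0.5, 0.25, 0.25]   # invariant distribution of pi (rho = rho * pi, checked by hand)
mu = [1.0, 2.0, 4.0]      # mean sojourn times

weighted = [r * m for r, m in zip(rho, mu)]
predicted = [w / sum(weighted) for w in weighted]
empirical = occupation_fractions(pi, mu, n_jumps=200_000)
print(predicted)
print([round(e, 3) for e in empirical])
```

Here the predicted fractions are (0.25, 0.25, 0.5); the empirical values should agree with them up to sampling error.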

Once our random environment has been described, we can introduce the evolution problem.

We consider an ensemble \(\mathcal K= \{ A_1, \dots , A_{N} \}\) of linear operators on a normed space H; clearly, the cardinality of \(\mathcal K\) is the same as that of the state space E of the Markov chain.

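We can now introduce the abstract random Cauchy problem

$$\begin{aligned} \left\{ \begin{array}{ll} u'(t) = A(Z(t)) u(t), & t \ge 0,\\ u(0) = f \in H, \end{array}\right. \end{aligned}$$(2.2)

where \(A(Z(t)) = A_{X_n}\) for \(t \in [T_n, T_{n+1})\). The solution of (2.2) is a random process, where the stochasticity enters the picture through the semi-Markov process \((Z(t))_{t\ge 0}\). Notice that (1.1) is a special case of (2.2) on the finite-dimensional space \(H = \mathbb C^\mathsf {V}\simeq \mathbb C^d\), with \(d = |\mathsf {V}|\).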

In the literature, (deterministic) non-autonomous Cauchy problems of the form (2.2) are a classical topic with a well-developed theory, see e.g. [3, 15, 37]. In this paper, we shall use the following natural modification of the notion of solution.

Definition 2.3

Assume that there exists a finite partition \(0=T_0<T_1<\ldots <T_N=:T\) of [0, T] such that \(A(Z(t)) = A_{X_n}\) for all \(t\in [T_{n-1}, T_n)\), \(n=1,\ldots ,N\). We say that a càglàd function \(u:[0,T]\rightarrow H\) is a solution of (2.2) on [0, T] if

(1) \(u\in C^1((T_{n-1}, T_n);H)\) for all \(n=1,\ldots ,N\);

(2) \(u(t)\in D(A_{X_n})\) for all \(t\in (T_{n-1}, T_n)\) and \(n=1,\ldots ,N\);

(3) \(u'(t) = A_{X_n} u(t) \) for all \(t \in (T_{n-1}, T_n)\) and \(n=1,\ldots ,N\).

Sufficient conditions for well-posedness of (2.2) are given by the following.

Assumption 2.4

H is a separable, complex Hilbert space and for every \(j \in \{1, \dots , N\}\) the closed, densely defined operator \(A_j : D(A_j) \subset H \rightarrow H\) generates a strongly-continuous, analytic semigroup of contractions and it has no spectral values on \(i\mathbb R\), with the possible exception of 0.

Definition 2.5

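A solution u of (2.2) is a stochastic process \(\{u(t), t \ge 0\}\) which is required to be adapted to the filtration \(\{\mathcal F_t\}\) generated by the semi-Markov process Z:

$$\begin{aligned} \mathcal F_t := \sigma \big ( Z(s) : 0 \le s \le t \big ), \qquad t \ge 0, \end{aligned}$$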

and whose trajectories solve the identity \(u'(t) = A(Z(t))u(t)\) almost surely in the sense of Definition 2.3.

Existence and uniqueness of the solution in the sense of the previous definition are a consequence of the well-posedness of the Cauchy problem driven by the operator \(A_{X_n}\) on the time interval \((T_{n-1}, T_n)\).

Theorem 2.6

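Under the Assumptions 2.1 and 2.4, given \(f\in H\), (2.2) has a unique solution u, which can be expressed as \(u(t) = S(t)f\) in terms of the random propagator \((S(t))_{t\ge 0}\subset {\mathcal L}(H)\) defined by

$$\begin{aligned} S(t) := e^{(t - T_n) A_{X_n}} e^{(T_n - T_{n-1}) A_{X_{n-1}}} \cdots e^{(T_1 - T_0) A_{X_0}}, \qquad t \in [T_n, T_{n+1}),\ n \ge 0. \end{aligned}$$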

In particular, u is a continuous function (almost surely).

Proof

Let us fix a path of the semi-Markov process Z, which is identified by the sequences of states \(\{X_n\}\) and times \(\{T_n\}\). By standard arguments (see e.g. [18]) there exists a unique solution u(t) of the Cauchy problem in \([0,T_1)\) with leading operator \(A_{X_0}\): this solution has limit \(u(T_1) = \lim _{t \uparrow T_1} u(t)\). Next, we consider the Cauchy problem in \([T_1,T_2)\) with leading operator \(A_{X_1}\) and initial condition \(u(T_1)\). Again, there exists a unique solution, which has a limit at \(T_2\); notice that the resulting solution is continuous at \(T_1\). We can repeat this argument: since by assumption \(\lim T_n = +\infty \) almost surely, we obtain the claim. \(\square \)

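We notice the following equivalent expression of the random propagator \((S(t))_{t\ge 0}\) in terms of the inter-arrival times \(\{\tau _n\}\) and the counting process \((N(t))_{t \ge 0}\) introduced above:

$$\begin{aligned} S(t) = e^{(t - T_{N(t)}) A_{X_{N(t)}}} e^{\tau _{N(t)} A_{X_{N(t)-1}}} \cdots e^{\tau _1 A_{X_0}}, \qquad t \ge 0. \end{aligned}$$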

After establishing well-posedness of our abstract random Cauchy problem, we are interested in studying the long-time behavior of its solutions. To this purpose, we are going to impose the following.

Assumption 2.7

\(A_j\) has compact resolvent for every \(j \in \{1, \dots , N\}\).

It follows from the Assumptions 2.4 and 2.7 that each \(A_j\) has finite-dimensional null space, hence a fortiori

$$\begin{aligned} K := \bigcap _{j=1}^N \ker A_j \end{aligned}$$

is finite-dimensional, too. If \(k:=\dim K>0\), then we denote by \(\{e_1,\ldots ,e_k\}\) an orthonormal basis of K.

We shall throughout denote by \(P_K\) the orthogonal projector onto K and by \(P_j\) the projector onto \(\ker A_j\). In general, for a projector P, its complementary projector is \(P^\perp := I - P\). For the sake of consistency, we use the same notation also in the case \(K = \{0\}\).

Remark 2.8

In particular, it holds that \(A_j e_i=0\), for all \(j=1,\ldots , N\) and all \(i=1,\ldots ,k\). Since the range of \({P_K}\) is spanned by null vectors of \(A_j\) for each \(j=1,\ldots ,N\), \({P_K}\) commutes with each \(A_j\), each semigroup operator \(e^{t A_j}\), and each spectral projector \({P}_j\) onto \(\ker A_j\), \(j=1,\ldots ,N\), \(t\ge 0\).

Remark 2.9

Let A be an operator which satisfies our Assumptions 2.4 and 2.7. Notice that they require A to be dissipative and, thanks to Assumption 2.7, the spectrum of A is discrete. By [18, Cor. IV.3.12 and Cor. V.2.15] there exists a spectral decomposition \(H = H_0 \oplus H_d\) where \(H_0 = \ker (A)\) and \(H_d = H_0^\perp \) and the restriction of A to \(H_d\) generates an analytic contraction semigroup with strictly negative growth bound \(s_d(A) = \sup \{ \mathfrak {R}(\lambda ) \,:\, \lambda \in \sigma (A) \setminus \{0\}\} < 0\).

In order to examine the long time behavior of the solution, we introduce a notion of convergence in the almost sure sense.

Definition 2.10

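We say that a random propagator \((S(t))_{t\ge 0}\subset {\mathcal L}(H)\) converges in norm \(\mathbb P\)-almost surely towards a (deterministic) operator \(M\in \mathcal L(H)\) if

$$\begin{aligned} \mathbb P\Big ( \lim _{t \rightarrow \infty } \Vert S(t) - M\Vert _{\mathcal L(H)} = 0 \Big ) = 1. \end{aligned}$$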

The next theorem is our main result on the asymptotic behaviour of the random propagator \((S(t))_{t\ge 0}\) associated with the Cauchy problem (2.2).

Theorem 2.11

Under the Assumptions 2.1, 2.4, and 2.7 the random propagator \((S(t))_{t\ge 0}\) for the Cauchy problem (2.2) converges in norm \(\mathbb P\)-almost surely towards the orthogonal projector \({P_K}\) onto \(K := \bigcap _{j=1}^N \ker A_j\).
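A small numerical experiment illustrates the statement in the setting of the motivating example. The sketch below is not the authors' construction: it assumes two hypothetical graphs on four vertices (neither of them connected, while their union is a path), exponential sojourn times with mean 1 and a uniform transition matrix. For these graphs the intersection K of the two null spaces consists of the constant vectors, so the limit predicted by the theorem is the averaging matrix with all entries equal to 1/4.

```python
import math
import random

def laplacian(n, edges):
    """Graph Laplacian with the sign convention adjacency - degree."""
    L = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        L[i][j] += 1.0
        L[j][i] += 1.0
        L[i][i] -= 1.0
        L[j][j] -= 1.0
    return L

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def expm(A, t, terms=16):
    """Approximate e^{tA} by scaling and squaring with a truncated Taylor series."""
    n = len(A)
    norm = abs(t) * max(sum(abs(x) for x in row) for row in A)
    squarings = 0
    if norm > 0:
        squarings = max(0, math.ceil(math.log2(norm))) + 1
    h = t / 2 ** squarings
    term = [[float(i == j) for j in range(n)] for i in range(n)]
    result = [row[:] for row in term]
    for k in range(1, terms + 1):
        term = [[x * h / k for x in row] for row in matmul(term, A)]
        result = [[r + s for r, s in zip(rr, tr)] for rr, tr in zip(result, term)]
    for _ in range(squarings):
        result = matmul(result, result)
    return result

# Hypothetical ensemble: G_1 has edges {0,1} and {2,3}; G_2 has the single edge {1,2}.
generators = [laplacian(4, [(0, 1), (2, 3)]), laplacian(4, [(1, 2)])]
pi = [[0.5, 0.5], [0.5, 0.5]]   # irreducible embedded chain (illustrative)

rng = random.Random(1)
S = [[float(i == j) for j in range(4)] for i in range(4)]
x = 0
for _ in range(300):
    tau = rng.expovariate(1.0)                 # sojourn time in the current state
    S = matmul(expm(generators[x], tau), S)    # newest factor acts on the left
    x = rng.choices(range(2), weights=pi[x])[0]

P_K = [[0.25] * 4 for _ in range(4)]           # orthogonal projector onto the constants
distance = math.sqrt(sum((S[i][j] - P_K[i][j]) ** 2 for i in range(4) for j in range(4)))
print(distance)
```

The printed Frobenius distance between the propagator at the last jump time and the averaging projector should be negligibly small for this sample path.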

Let us finally discuss the asymptotic behavior of the random evolution problem (2.2) under an additional assumption that is inspired by a result from [2], where non-autonomous diffusion equations on a fixed network are studied. Our aim is to study when the solution converges exponentially, for all initial data f, towards the orthogonal projector of f onto the eigenspace with respect to the simple eigenvalue 0. Adapting the ideas of [2] to our general setting, we shall impose the following.

Assumption 2.12

There is one operator, say \(A_1\), whose null space \(\ker A_1\) is contained in the null space of all other operators \(A_2,\ldots ,A_N\). In this case, we have that \(K := \bigcap _{j=1}^N \ker A_j = \ker A_1\).

It turns out that under this additional assumption \((S(t))_{t\ge 0}\) converges in norm exponentially fast towards the orthogonal projector \(P_K\) onto K. We stress that this is again a probabilistic assertion, since the result depends on the path of the system—actually, on the number of visits to the state corresponding to \(A_1\).

Theorem 2.13

Under the assumptions of Theorem 2.11, let additionally the Assumption 2.12 hold. Then the almost sure convergence of the random propagator towards \(P_K\) is exponential with rate \(\alpha \), where

and \(s_d(A_1)\), introduced in Remark 2.9, is strictly negative thanks to the Assumption 2.12 and \(\Theta _1\) was introduced in (2.1).

We postpone the proofs of our main results to Sect. 3.

Remark 2.14

1) The Assumption 2.4 is in particular satisfied if each \(A_j\) is self-adjoint and negative semi-definite. In this case, moreover, \(s_d(A_j) = \lambda _{k_j+1}(A_j)\) is the largest non-zero eigenvalue, where \(k_j\) is the dimension of \(\ker (A_j)\) (we denote by \(\lambda _k\) the sequence of eigenvalues of \(A_j\), and w.l.o.g. we assume that they are ordered: \(\lambda _1 \ge \lambda _2 \ge \dots \); then \(\lambda _k = 0\) for all \(k \le k_j\)).

There are, however, further classes of operators satisfying it. If the semigroup generated by \(A_j\) is positive and irreducible, for example, it follows from the Kreĭn–Rutman Theorem that the generator’s spectral bound is a simple, isolated eigenvalue. A more general class of examples can be found invoking the theory of eventually positive semigroups, see [13, Thm. 8.3].

2) If we strengthen Assumption 2.12 by requiring that all operators in the ensemble \(\mathcal K\) satisfy \(\ker (A_j) = K\), then the statement of Theorem 2.13 becomes deterministic in the sense that the convergence towards the orthogonal projector \({P_K}\) always occurs.

2.1 Randomly Switching Heat Equations

The scope of our result is not restricted to graphs and networks. To illustrate this, we consider a toy model (a heat equation with initial data \(f\in L^2(0,1)\), under different boundary conditions) where the switching takes place at the level of operators, rather than underlying structures. Here we show that convergence to the projector onto the intersection of the null spaces holds. A more complex example, a thermostat model with switching in the boundary conditions, is given in [27]: in that case, non-ergodicity is possible under certain conditions on the parameters.

(1) We first consider two different realizations \(A_1\), \(A_2\) of the Laplacian acting on \(L^2(0,1)\): with Neumann and with Krein–von Neumann boundary conditions, which lead to the domains

$$\begin{aligned} D(A_1):= \Big \{u \in H^2(0,1): u'(0) = u'(1) = 0\Big \} \end{aligned}$$(2.5)

and

$$\begin{aligned} D(A_2):=\left\{ u \in H^2(0,1):u'(0) = u'(1) =u(1)-u(0)\right\} \end{aligned}$$(2.6)

respectively, see [35, Exa. 14.14]. Both operators satisfy the Assumption 2.4. Furthermore, the null space of the former realization is one-dimensional, as it consists of the constant functions; whereas a direct computation shows that the null space of the latter realization is 2-dimensional, as it consists of all affine functions on [0, 1]; hence the intersection K of both null spaces is spanned by the constant function \(\mathbb {1}\) on (0, 1). Both associated heat equations are well-posed, yet the latter is somewhat exotic in that the governing semigroup is not sub-Markovian. We are interested in the long-time behavior of this mixed system (2.2), with \(A(Z(t))\in \{A_1,A_2\}\): if the switching obeys the rule in the Assumption 2.1, the random propagator \((S(t))_{t\ge 0}\) converges in norm \(\mathbb P\)-almost surely towards the orthogonal projector onto the intersection of both null spaces, i.e., onto the space of constant functions on [0, 1]; hence the solution of the abstract random Cauchy problem (2.2) converges \(\mathbb P\)-almost surely towards the mean value of the initial data \(f\in L^2(0,1)\).

(2) On the other hand, if we aim at studying the switching between Dirichlet and Neumann boundary conditions, and thus introduce the realization \(A_3\) with domain

$$\begin{aligned} D(A_3):= \{u \in H^2(0,1): u(0) = u(1) = 0\}, \end{aligned}$$(2.7)

then one sees that the intersection space K is trivial, as \(\ker A_3=\{0\}\), hence the random propagator converges in norm \(\mathbb P\)-almost surely to 0 if the Assumption 2.1 is satisfied.

(3) Also observe that upon perturbing \(A_3\) we find the new operator

$$\begin{aligned} \begin{aligned} \tilde{A}_3 u&:= A_3 u+\pi ^2 u\\ D(\tilde{A}_3)&:=D(A_3), \end{aligned} \end{aligned}$$

whose null space is now one-dimensional, as it is spanned by \(\sin (\pi \cdot )\). Nevertheless, \(\ker A_1\cap \ker \tilde{A}_3=\{0\}\), hence again under the Assumption 2.1 the system switching between \(A_1,\tilde{A}_3\) converges towards 0.

(4) Finally, let us consider a switching between \(A_1\) and \(A_4\) defined as

$$\begin{aligned} \begin{aligned} A_4 u&:= \frac{d}{dx}\left( p\frac{du}{dx}\right) \\ D(A_4)&:=D(A_1), \end{aligned} \end{aligned}$$

where \(p\in W^{1,\infty }(0,1)\), \(p(x)>0\) for all \(x\in [0,1]\). Because \(\ker A_1\) and \(\ker A_4\) both agree with the space of constant functions, under the Assumption 2.1 the random propagator converges in norm \(\mathbb P\)-almost surely towards the orthogonal projector onto the space of the constant functions.

Moreover, as a consequence of Theorem 2.13 we can observe the exponential convergence of the random propagator for some (but not all) of these toy models. In particular, this holds whenever \(A_3\) belongs to the ensemble \(\mathcal K\): indeed, we have \(K=\{0\}\) and then the Assumption 2.12 is satisfied, since the first eigenvalue \(\lambda _1^{(3)}\) of \(A_3\) is strictly negative. The exponential convergence of \((S(t))_{t\ge 0}\) can also be shown for randomly switching systems where \(\mathcal K\subset \{A_1,A_2,A_4\}\). In all of these cases K agrees with the space of constant functions on (0, 1), hence it is one-dimensional and the Assumption 2.12 is still fulfilled, since the second eigenvalue satisfies \(\lambda _{2}^{(j)}<0\) for \(j=1,4\). On the other hand, we cannot apply Theorem 2.13 to prove the exponential convergence of the random propagator for those models which switch \(\tilde{A}_3\) with \(A_1\) and/or \(A_2\) and/or \(A_4\). In fact, in these cases the intersection space K is again trivial, but none of the operators has a strictly negative first eigenvalue.
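For orientation, the eigenvalues behind these claims can be written out for the constant-coefficient realizations. The following is a standard separation-of-variables computation rather than a statement taken from the text; \(A_2\) and \(A_4\) are left out because their spectra do not have an equally simple closed form (that of \(A_4\) depends on p).

$$\begin{aligned} \sigma (A_1)&= \{-k^2\pi ^2 : k = 0, 1, 2, \ldots \},&\hbox {eigenfunctions } \cos (k\pi \cdot ),\\ \sigma (A_3)&= \{-k^2\pi ^2 : k = 1, 2, \ldots \},&\hbox {eigenfunctions } \sin (k\pi \cdot ),\\ \sigma (\tilde{A}_3)&= \{(1-k^2)\pi ^2 : k = 1, 2, \ldots \} = \{0, -3\pi ^2, -8\pi ^2, \ldots \},&\hbox {eigenfunctions } \sin (k\pi \cdot ). \end{aligned}$$

In particular \(\lambda _1^{(3)} = -\pi ^2 < 0\) and \(\lambda _2^{(1)} = -\pi ^2 < 0\), whereas the first eigenvalue of both \(A_1\) and \(\tilde{A}_3\) is 0.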

3 Technical Lemmas and Proofs

3.1 A Monotonicity Lemma

Lemma 3.4 below provides the crucial tool to prove the assertion of Theorem 2.11. It shows how we can bound the norm of the random product of operators which generates the random propagator \((S(t))_{t\ge 0}\) with respect to the stopping times.

Let \(L\ge N\) and \((k_1, \dots , k_L)\) be a sequence of indices that covers the whole \(E = \{1, \dots , N\}\). Given an ensemble \(\mathcal K\) of operators satisfying the Assumption 2.4, let us consider the associated sequence of operators \((A_{k_1}, \dots , A_{k_L})\) taken from \(\mathcal K\). We shall denote \(P_j\) the projection on the kernel \(\ker A_j\) and \(P_K\) the projection on \(K = \cap _{j=1}^L \ker A_{k_j} = \cap _{i=1}^N \ker A_i\).

Remark 3.1

In the proof we will need some known results in functional analysis: if T is a compact operator on a reflexive Banach space X, then there exists x belonging to the unit sphere of X such that \(\Vert T\Vert =\Vert Tx\Vert \), i.e., the norm of T is attained: see e.g. [1, Cor. 1]. This is in particular true if \(T=T(t)\) for some \(t>0\), provided the semigroup generated by A is analytic (or even merely norm continuous) and A has compact resolvent, see [18, Thm. II.4.29]. Finally, we will need the well-known fact that the compact operators form a two-sided ideal in the space \(\mathcal L(H)\) of bounded linear operators on H.

The following results are necessary steps in order to prove the main result of this section.

Lemma 3.2

Let \((T(t))_{t\ge 0}\) be a contractive, analytic strongly continuous semigroup on a Hilbert space H whose generator A has compact resolvent and no eigenvalue on the imaginary axis, with the possible exception of 0. Then the following assertions hold:

(1) \(\Vert T(t')x\Vert <\Vert T(t)x\Vert \) for all \(x\not \in \ker A\) and all \(t'>t\ge 0\);

(2) \(\ker A=\{x\in H:\Vert T(t_0)x\Vert =\Vert x\Vert \}\) for some \(t_0>0\).

Proof

(1) Fix \(x\not \in \ker A\) and let \(0 \le t < t'\).

Let us first consider the case of injective A, so that \(P = 0\), where we denote by P the orthogonal projector onto \(\ker A\). Then \(x \not = 0\), and \(T(t)x \rightarrow 0\) as \(t \rightarrow \infty \) by the Jacobs–de Leeuw–Glicksberg theory, see [18, Thm. V.2.14 and Cor. V.2.15]. Due to analyticity of the semigroup, the mapping \(\varphi : (0,\infty ) \ni t \mapsto \Vert {T(t)x}\Vert ^2 \in \mathbb {R}\) is real analytic: indeed, for each \(x\in H\) the mapping \((0,\infty ) \ni t \mapsto T(t)x\in H\) is real analytic, hence it can locally be represented by an absolutely converging power series, say \(T(t)x=\sum _{k=0}^\infty t^k f_k \); but then, the Cauchy product of \(\sum _{k=0}^\infty t^k f_k \) with itself, given by \(\sum _{m=0}^\infty t^m \sum _{l=0}^m (f_l,f_{m-l})\), is absolutely converging towards \(\Vert {T(t)x}\Vert ^2=(T(t)x,T(t)x)\).

If \(\Vert T(t)x\Vert = \Vert T(t')x\Vert \), then \(\varphi \) is constant on the interval \([t,t']\): indeed, by contractivity of the semigroup

$$\begin{aligned} \Vert T(t')x\Vert \le \Vert T(s)x\Vert \le \Vert T(t)x\Vert \qquad \hbox {for all } s \in [t,t']. \end{aligned}$$

Due to the identity theorem for real analytic functions, \(\varphi \) is now constant on \((0,\infty )\), a contradiction, since \(\varphi (t) \rightarrow \Vert x\Vert ^2 \not = 0\) as \(t \searrow 0\), but \(\varphi (t) \rightarrow 0\) as \(t \rightarrow \infty \). This proves the assertion in the case \(P = 0\).

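Let us now consider the case of general P: observe that \(Px\ne x\), since \(x \not \in \ker A\). Applying the first step of the proof to the restriction of \((T(t))_{t\ge 0}\) to \(H\ominus \ker A\), we see that

$$\begin{aligned} \Vert T(t')P^\perp x\Vert < \Vert T(t)P^\perp x\Vert , \end{aligned}$$

hence by Pythagoras’ theorem

$$\begin{aligned} \Vert T(t')x\Vert ^2&= \Vert T(t')Px\Vert ^2 + \Vert T(t')P^\perp x\Vert ^2 < \Vert T(t')Px\Vert ^2 + \Vert T(t)P^\perp x\Vert ^2 \\&= \Vert T(t)Px\Vert ^2 + \Vert T(t)P^\perp x\Vert ^2 = \Vert T(t)x\Vert ^2, \end{aligned}$$

where the second to last identity holds because the fixed space of \((T(t))_{t\ge 0}\)

$$\begin{aligned} \mathrm{fix}(T(t))_{t\ge 0} := \{y \in H: T(t)y = y \hbox { for all } t \ge 0\} \end{aligned}$$

agrees with the null space of its generator A by [18, Cor. IV.3.8], hence \(T(t)y=y\) for all \(y\in \ker A\) and all \(t\ge 0\).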

(2) We see that

This concludes the proof, since as recalled before \(\ker A=\mathrm{fix}(T(t))_{t\ge 0}\). \(\square \)

The following is probably linear algebraic folklore, but we choose to give a proof since we could not find an appropriate reference.

Lemma 3.3

Let H be a Hilbert space and let \(P_1,\ldots ,P_m\) be finitely many orthogonal projectors on H; let \(P_K\) be the orthogonal projector onto \(\displaystyle K:=\bigcap _{i=1}^m {{\,\mathrm{rg}\,}}P_i\). If \(P_i\) is compact for at least one \(i=1,\ldots ,m\), then the operator \(P_{m} \dots P_{1} P_K^\perp \) has norm strictly less than 1:

$$\begin{aligned} \Vert P_{m} \dots P_{1} P_K^\perp \Vert < 1. \end{aligned}$$

Proof

It is obvious that \(\Vert P_{m} \dots P_{1} P_K^\perp \Vert \le 1\). We proceed by contradiction and assume that

$$\begin{aligned} \Vert P_{m} \dots P_{1} P_K^\perp \Vert = 1. \end{aligned}$$(3.2)

Since at least one \(P_i\) is compact, so is the whole product, hence it is norm-attaining, see Remark 3.1: there exists \(x \in H\) with \(\Vert x\Vert = 1\) such that \(\Vert P_{m} \dots P_{1} P_K^\perp x\Vert = \Vert x\Vert = 1\).

Notice that

$$\begin{aligned} 1 = \Vert P_{m} \dots P_{1} P_K^\perp x\Vert \le \Vert P_K^\perp x\Vert \le \Vert x\Vert = 1, \end{aligned}$$

hence \(\Vert P_K^\perp x\Vert = 1 = \Vert x\Vert \) and it follows that \(x = P_K^\perp x\). We then substitute in the previous equality and get

$$\begin{aligned} 1 = \Vert P_{m} \dots P_{1} x\Vert \le \Vert P_1 x\Vert \le \Vert x\Vert = 1, \end{aligned}$$

and the same reasoning implies \(\Vert P_1 x \Vert = 1\), and \(x = P_1 x\). Reiterating the same argument we obtain \(x = P_j x\) for any \(j=1, \dots , m\), therefore \(x \in K\); but we have \(x = P_K^\perp x\), which implies \(x=0\), a contradiction to \(\Vert x\Vert =1\). Therefore, (3.2) is false and the claim follows. \(\square \)
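The lemma can be illustrated with two planes in \(\mathbb R^3\). The sketch below is a hypothetical example, not part of the proof: \(P_1\) projects onto the xy-plane, \(P_2\) onto a plane through the x-axis tilted by an angle `theta`, so that K is the x-axis. In this example the product \(P_2 P_1 P_K^\perp \) has rank one, so its Frobenius norm coincides with its operator norm, which a short hand computation gives as \(|\cos \theta |\).

```python
import math

def projector(basis):
    """Orthogonal projector sum_k u_k u_k^T for an orthonormal list of vectors."""
    n = len(basis[0])
    return [[sum(u[i] * u[j] for u in basis) for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def frobenius(A):
    return math.sqrt(sum(x * x for row in A for x in row))

theta = math.pi / 3
e1, e2 = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
tilted = [0.0, math.cos(theta), math.sin(theta)]

P1 = projector([e1, e2])       # range: the xy-plane
P2 = projector([e1, tilted])   # range: the plane spanned by e1 and the tilted vector
PK = projector([e1])           # K = rg P1 ∩ rg P2 = span{e1}
PK_perp = [[float(i == j) - PK[i][j] for j in range(3)] for i in range(3)]

product = matmul(P2, matmul(P1, PK_perp))
norm_of_product = frobenius(product)   # equals the operator norm here (rank-one matrix)
print(norm_of_product, abs(math.cos(theta)))
```

As the angle tends to 0 the two planes merge and the norm tends to 1, which shows that the bound in the lemma cannot be made uniform without further information on the projectors.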

We now proceed to prove the main result of this section. Recall that the operators \(A_j\) are assumed to be dissipative and \(P_j\) is the projection on \(\ker A_j\).

Lemma 3.4

Under the Assumptions 2.4 and 2.7, let \(L\ge N\) and \((k_1, \dots , k_L)\) be a sequence of indices that covers the whole \(E = \{1, \dots , N\}\). For \(\eta > 0\) small enough there exists \(\delta > 0\) such that, for \(t_i \ge \delta > 0\), \(i=1, \dots , L\), we have

Proof

Recall that \(P_i\) is the orthogonal projection on \(\ker A_i\), \(K = \cap _{i=1}^N \ker (A_i)\), and the projection on K satisfies \(P_K P_i = P_K = P_i P_K\), \(P_K^\perp P_i^\perp = P_i^\perp = P_i^\perp P_K^\perp \). Recall that Young’s inequality for product implies that \(ab \le \frac{a^2}{2\varepsilon } + \frac{\varepsilon b^2}{2}\) for every \(\varepsilon > 0\); we further simplify by noticing that \(2\varepsilon > \varepsilon /2\), hence the first term on the right is bounded by \(\frac{a^2}{\varepsilon /2}\). In the sequel, we shall repeatedly use this estimate in the form: for all \(\alpha >0\), \((a + b)^2 \le (1+\alpha ) a^2 + (1 + \alpha ^{-1}) b^2\).
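For completeness, the estimate in its final form can be derived in one line. This is a standard argument, spelled out here for real numbers \(a, b \ge 0\) and \(\alpha > 0\):

$$\begin{aligned} 0 \le \Big ( \sqrt{\alpha }\, a - \frac{b}{\sqrt{\alpha }} \Big )^2 = \alpha a^2 - 2ab + \alpha ^{-1} b^2 \quad \Longrightarrow \quad (a+b)^2 = a^2 + 2ab + b^2 \le (1+\alpha ) a^2 + (1 + \alpha ^{-1}) b^2. \end{aligned}$$

Choosing \(\alpha \) small keeps the coefficient of \(a^2\) close to 1, at the price of a large coefficient in front of \(b^2\).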

We have

where we use the fact that \(e^{t_1A_{k_1}}P_{k_1}x = P_{k_1}x\) for any \(x \in H\), \(t_1 \ge 0\), and that \(\ker A_{k_1}\supset K\), so \(( \ker A_{k_1})^\perp \subset K^\perp \); the first estimate follows from Young’s inequality. Notice further that all semigroups involved are contraction operators, hence \(\Vert e^{t_L A_{k_L}} \dots e^{t_2A_{k_2}}\Vert ^2 \le 1\); finally, we have \(\Vert e^{t_1A_{k_1}}P_{k_1}^\perp x \Vert \le e^{t_1 s_d(A_{k_1})} \Vert P_{k_1}^\perp x \Vert \le e^{t_1 s_d(A_{k_1})} \Vert x \Vert \). We recall that \(s_d(A_j)\) is the growth bound of the operator \(A_j\) on \(( \ker A_{j})^\perp \), see Remark 2.9, and in our assumptions \(s_d(A_j) < 0\), so that these exponential factors decay as \(t_1\) grows. It follows that

We continue by splitting the first term in the right hand side

and by recursion, we finally obtain

The operator in the first term is bounded in norm by \(1-\varepsilon \) for some \(\varepsilon \in (0,1]\), thanks to Lemma 3.3; therefore, we obtain the estimate

The thesis follows by first taking \(\alpha \) small enough such that the first addendum is bounded by \(1-2\eta \), then taking \(\delta \) large enough such that the second addendum is bounded by \(\eta \).Footnote 1

\(\square \)

Notice that the previous lemma gives no a priori bound on the required \(\delta \), which may be arbitrarily large. However, in the case of a fixed, deterministic clock, the same result holds for every \(\delta > 0\), however small.

Lemma 3.5

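Under the Assumptions 2.4 and 2.7, let \(L\ge N\) and \((k_1, \dots , k_L)\) be a sequence of indices that covers the whole \(E = \{1, \dots , N\}\). Then for all \(\delta >0\)

$$\begin{aligned} \Vert P_K^\perp e^{\delta A_{k_L}} \cdots e^{\delta A_{k_1}}\Vert < 1. \end{aligned}$$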

Proof

Let us now prove the inequality by contradiction: because all semigroups as well as the projector \(P_K^\perp \) are contractive and hence certainly \(\Vert P_K^\perp e^{\delta A_{k_L}} \cdots e^{\delta A_{k_1}}\Vert \le 1\), it suffices to assume that

$$\begin{aligned} \Vert P_K^\perp e^{\delta A_{k_L}} \cdots e^{\delta A_{k_1}}\Vert = 1; \end{aligned}$$

since the product operator is a compact operator, as stated before, there would then exist some \(x\in H\), \(x\ne 0\), with \(\Vert P_K^\perp e^{\delta A_{k_L}} \cdots e^{\delta A_{k_1}}x\Vert =\Vert x\Vert \). Because

it follows that \(\Vert e^{\delta A_{k_1}}x\Vert =\Vert x\Vert \) and hence, by Lemma 3.2.(2), \(x\in \ker A_{k_1}\), i.e., \(e^{\delta A_{k_1}}x=x\). Proceeding recursively we see that \(x\in \bigcap _{i=1}^L \ker A_{k_i}\subset K\), whence \(e^{\delta A_{k_i}}x=x\) for all i and hence

a contradiction. \(\square \)

3.2 Proof of Theorem 2.11

We aim to apply Lemma 3.4, hence we start by fixing a sequence \(\xi = (\xi _0,\dots , \xi _{L-1})\) of states in E which covers E and is admissible for the sequence X, meaning that the probability that X passes through the successive elements of \(\xi \) in the given order is positive. Since X is irreducible, we can also require that the transition \(\xi _{L-1} \rightarrow \xi _0\) has positive probability.

The next step is to prove that, almost surely, the sequence \(\xi \) occurs infinitely many times in the path of X and that the waiting times are longer than \(\delta \). Then the theorem follows as a consequence of our construction.

In the sequel, the constant L is fixed and given by the length of the sequence \(\xi \).

It is known that an irreducible Markov chain \(\{X_n\}\) with finite state space is recurrent, i.e., every state is visited infinitely many times, with a finite mean waiting time between successive visits. In the sequel, we need to prove that any admissible cycle is recurrent as well.

An admissible cycle is a finite sequence of states of fixed length L which returns to the starting point with positive probability. Formally, we require that \(\xi = (\xi _0, \xi _1, \dots , \xi _{L-1})\) is an admissible cycle if

-

the Markov chain follows this cycle with a strictly positive probability:

$$\begin{aligned} p_{\xi _0, \xi _1} \cdots p_{\xi _{L-2}, \xi _{L-1}} p_{\xi _{L-1}, \xi _{0}} > 0; \end{aligned}$$

-

by a suitable rotation of the indices, it is always possible to let \(\xi _0 = X_0\).

Now, we can divide the path of X in blocks of length L: \(Y(0) = (X_0, \dots , X_{L-1})\), \(Y(1) = (X_L, \dots , X_{2L-1})\), ..., and consider the stochastic process Y taking values in a subset \(\Lambda \) of \(E^L\), where \(\Lambda = \{\theta = (\theta _0, \dots , \theta _{L-1}) \in E^L \,:\, \prod p_{\theta _i,\theta _{i+1}} > 0\}\).

Proof

In order to prove the Markov property of Y, we exploit the Markov property of the process X and we obtain

We shall denote \(\bar{p} = \big ( \bar{p}_{\theta ,\eta }\big )_{\theta ,\eta \in \Lambda }\) the transition matrix associated to the Markov chain Y. \(\square \)

Let d be the period of the Markov chain X (\(d=1\) if the chain is aperiodic). We notice first that d|L. If \(d > 1\), the state space E can be divided into d sub-classes \(E_0, \dots , E_{d-1}\), such that \(\cup E_i = E\), and \(E_i \cap E_j = \emptyset \) for \(i \not = j\). For an aperiodic irreducible chain, we can set \(E=E_0\). It holds that \(p^{n}(x,y) > 0\) only if \(x \in E_i\) and \(y \in E_{i+n}\) (where all the indices are taken modulo d) and \(p^{nd}(x,y) > 0\) for all sufficiently large n and for all x, y in the same class \(E_r\).

The Markov chain Y inherits an analogous division, i.e., \(\Lambda = C_0 \cup \dots \cup C_{d-1}\), where \(C_i = \{\theta = (\theta _0, \dots , \theta _{L-1}) \in \Lambda \mid \theta _0 \in E_i\}\). Assume that there exists n such that \(\bar{p}^n(\theta , \eta ) > 0\). Then \(\theta _0 \in E_i\), \(\theta _{L-1} \in E_{i+L-1} = E_{i-1}\) (since L is a multiple of d, hence \(i+L-1 \equiv i-1\) (mod d)), \(\eta _0 \in E_{i + nL} = E_i\). If \(d > 1\), the previous computation implies that Y is no longer irreducible; however, if one considers the restriction of Y to any of the classes \(C_i\), we have the following result.

Proposition 3.6

If X is a homogeneous, irreducible Markov chain of period d, then Y restricted to any of the classes \(C_i\) (\(i=0, \dots , d-1\)) is a homogeneous, irreducible, aperiodic Markov chain on the given class (if X is aperiodic, i.e. \(d=1\), then Y is irreducible and aperiodic on the whole \(\Lambda \)).

Proof

Let \(\theta \) and \(\eta \) be in the same class \(C_i\), which implies that \(\theta _{L-1} \in E_{i-1}\) and \(\eta _0 \in E_i\). Then there exists \(n_0\) large enough such that for \(n > n_0\), \(p^{nd + 1}(\theta _{L-1}, \eta _0) > 0\). Taking \(n > n_0\) in such a way that \(k = n d/L\) is an integer, we get that \(\bar{p}^{k}(\theta , \eta ) > 0\); since \(\theta \) and \(\eta \) are arbitrary, this implies that the class \(C_i\) is closed and irreducible. Moreover, since \((k + 1) L = (n + L/d) d\), it follows that also \(\bar{p}^{k+1}(\theta , \eta ) > 0\), which implies that Y is aperiodic on the class \(C_i\), and this concludes the proof. \(\square \)
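The block construction can be made concrete on a toy chain. The following is a minimal sketch, assuming a hypothetical 3-state chain of period \(d=2\) and block length \(L=2\) (chosen small for readability, so the blocks here do not cover E); the block transition formula in `p_bar` is the natural one for consecutive blocks and is an assumption, since it is not spelled out in the text above. It shows that Y never leaves a class \(C_i\) and that all transitions inside a class are positive, which gives irreducibility and aperiodicity on the class.

```python
from itertools import product

# Hypothetical 3-state chain of period d = 2: states 0 and 2 only jump to 1.
p = [[0.0, 1.0, 0.0],
     [0.5, 0.0, 0.5],
     [0.0, 1.0, 0.0]]
E_classes = [{0, 2}, {1}]    # cyclic classes E_0, E_1
L = 2                        # block length, a multiple of d

def path_prob(theta):
    """Product of the one-step probabilities along the states of theta."""
    prob = 1.0
    for a, b in zip(theta, theta[1:]):
        prob *= p[a][b]
    return prob

# Lambda: blocks of length L that X can follow with positive probability.
Lambda = [th for th in product(range(3), repeat=L) if path_prob(th) > 0]

def p_bar(theta, eta):
    """Assumed block transition: leave the last state of theta, then follow eta."""
    return p[theta[-1]][eta[0]] * path_prob(eta)

# C_i collects the blocks whose first state lies in E_i.
C = [[th for th in Lambda if th[0] in E_i] for E_i in E_classes]

for i, blocks in enumerate(C):
    for th in blocks:
        print(i, th, {eta: p_bar(th, eta) for eta in Lambda})
```

Because every entry of the restricted one-step matrix is positive in this example, no power of the matrix is needed to see irreducibility; in general one has to pass to a power, as in the proof above.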

Corollary 3.7

Assume that X is a homogeneous, irreducible Markov chain. Then any admissible state y for the Markov chain Y is recurrent.

Let \(\xi = (\xi _0,\dots , \xi _{L-1})\) be the admissible cycle fixed at the beginning of the proof. Recall the representation of S(t) given in (2.4), and take \(t = n L - 1\) for simplicity:

Let \(N(n) = \sum _{k=0}^n \mathbb {1}_{Y_k = \xi } \mathbb {1}_{\tau _{kL+1} \ge \delta } \dots \mathbb {1}_{\tau _{(k+1)L} \ge \delta }\) be the number of visits up to time n to the state \(\xi \) by the Markov chain Y introduced above, such that all the waiting times in the successive states are at least as long as \(\delta \). Since the state \(\xi \) is recurrent for the chain Y and the events \(\{\tau _{kL+1} \ge \delta \}\) are independent and have strictly positive probability, it follows that \(N(n) \rightarrow \infty \) as \(n \rightarrow \infty \), almost surely.
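The counting variable N(n) is easy to evaluate on a recorded path. The following is a minimal sketch, assuming the path of X and the waiting times are stored as two aligned, 0-based Python lists (so block k uses the entries kL, ..., (k+1)L-1 of both lists, which differs from the 1-based indexing of \(\tau \) in the text); the function name `count_good_blocks` and the sample data are hypothetical.

```python
def count_good_blocks(path, waits, xi, delta):
    """Count blocks of the path equal to xi whose waiting times are all >= delta."""
    L = len(xi)
    count = 0
    for k in range(len(path) // L):
        block = tuple(path[k * L:(k + 1) * L])
        block_waits = waits[k * L:(k + 1) * L]
        if block == tuple(xi) and all(t >= delta for t in block_waits):
            count += 1
    return count

# Hypothetical data: three blocks equal to xi, one of them with a short waiting time.
path = [0, 1, 0, 1, 0, 1]
waits = [1.0, 1.0, 0.1, 1.0, 2.0, 3.0]
good_blocks = count_good_blocks(path, waits, xi=(0, 1), delta=0.5)
print(good_blocks)
```

Each counted block contributes one contracting factor in the estimate that follows, while the remaining blocks only contribute factors of norm at most 1.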

Now, we notice that in the right hand side of (3.6) we have N(n) terms which can be bounded, thanks to Lemma 3.4, by \(1-\eta < 1\) and the remaining terms have norm bounded by 1, hence

By Remark 2.8 we can write the random propagator as

and the thesis

follows by (3.7).

3.3 Proof of Theorem 2.13

As done in the proof of Theorem 2.11, we can write the random propagator as

and show that \(\Vert P_K^\perp S(t)\Vert \rightarrow 0\) as \(t \rightarrow +\infty \) in order to obtain the thesis.

Denote \(u(t) := S(t)f\) for all initial data f; we can estimate the norm of the vector \(P_K^\perp u(t) \in H\) by

where the last equality holds due to Remark 2.8 and because \(\frac{d}{ds}\) and \(P_K^\perp \) commute. We split the above integral with respect to the various states of Z(t):

and since all the \(A_k\)’s are dissipative, we have the trivial estimate

Now, by Assumption 2.12, \(K = \ker (A_1)\) and, by Remark 2.9, \(A_1\) restricted to \(K^\perp \) has growth bound \(s_d(A_1)\) strictly negative, hence the above estimate becomes

By Gronwall’s Lemma we deduce that

The thesis now follows from Remark 2.2: indeed, the integral diverges to \(+\infty \) \(\mathbb P\)-almost surely, hence \(\Vert P_K^\perp S(t)\Vert \rightarrow 0\). Moreover, as stated in Remark 2.2, the fraction of time spent in a state by the semi-Markov process Z can be computed to be

where \(\Theta _1\) was introduced in Remark 2.2. It follows from (3.9) that the speed of convergence to 0 is at least equal to \((-2 s_d(A_1))\Theta _1\).

4 Combinatorial Graphs

A simple (finite, undirected) combinatorial graph \(\mathsf {G}= (\mathsf {V}, \mathsf {E})\) is a couple defined by a finite set \(\mathsf {V}\) of vertices \(\mathsf {v}\) and a subset \(\mathsf {E}\subset \mathsf {V}^{(2)}\) of unordered pairs \(\mathsf {e}:=\{\mathsf {v},\mathsf {w}\}\) of elements of \(\mathsf {V}\); such a pair \(\mathsf {e}\) is interpreted as the edge connecting the vertices \(\mathsf {v},\mathsf {w}\).

Given a simple graph \(\mathsf {G}= (\mathsf {V}, \mathsf {E})\), let us introduce a positive weight function on the set of vertices \(\mathsf {V}\)

which induces the scalar product

on the space \(\mathbb C^\mathsf {V}\) of complex valued functions \(f :\mathsf {V}\rightarrow \mathbb C\): we denote by \(\ell ^2_m(\mathsf {V})\) the Hilbert space \(\mathbb C^\mathsf {V}\) with respect to \((\cdot ,\cdot )_m\). In addition, let

be a positive weight function on the set of edges \(\mathsf {E}\). We call the 4-tuple \((\mathsf {V}, \mathsf {E}, m, \mu )\) a weighted combinatorial graph.

Remark 4.1

We stress that each weighted graph is a metric space with respect to the shortest path metric; while the metric does depend on the weights, any two weights define equivalent topologies, and in particular it does not depend on \(m,\mu \) whether \(\mathsf {G}\) is connected or not.

Let us recall the notion of discrete Laplacian (or Laplace–Beltrami matrix) \(\mathcal L_{m,\mu }\) on a weighted graph \(\mathsf {G}=(\mathsf {V},\mathsf {E},m,\mu )\), cf. [31, § 2.1.4]—or shortly: weighted Laplacian. For any vertex \(\mathsf {v}\in \mathsf {V}\), let \(\mathsf {E}_\mathsf {v}\) denote the set of all edges having \(\mathsf {v}\) as an endpoint. Then \(\mathcal L_{m,\mu } :\ell ^2_m(\mathsf {V}) \rightarrow \ell ^2_m(\mathsf {V})\) is defined by

\(\mathcal L_{m,\mu }\) reduces to the discrete, negative semi-definite Laplacian if \(\mu \equiv 1\) and \(m\equiv 1\); i.e., \(\mathcal L_{1,1}\) is minus the Laplacian matrix that is common in the literature [31, § 2.1.4]. Indeed, we stress that we have not adopted the usual sign convention of algebraic graph theory, as any such \(\mathcal L_{m,\mu }\) is self-adjoint and negative semi-definite. More generally, \(\mathcal L_{m,\mu }\) satisfies the Assumptions 2.4 and 2.7 and it can be shown that \(\mathcal L_{m,\mu }\) (and not \(-\mathcal L_{m,\mu }\)) generates a Markovian semigroup. The associated sesquilinear form \(q :\ell ^2_m(\mathsf {V}) \times \ell ^2_m(\mathsf {V}) \rightarrow \mathbb C\) is given by

and satisfies

accordingly, its Rayleigh quotient is

It follows from (4.1) that \(\lambda = 0\) is an eigenvalue of each weighted Laplacian \(\mathcal L_{m,\mu }\): the associated eigenfunctions are constant on each connected component of \(\mathsf {G}=(\mathsf {V},\mathsf {E},m,\mu )\). Therefore, it turns out that the null space of \(\mathcal L_{m,\mu }\) agrees with the null space of the unweighted Laplacian (on \((\mathsf {V},\mathsf {E})\)) associated with \(\mathsf {G}\).
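The properties just listed can be checked on a small weighted graph. The following is a minimal sketch, assuming the common form \((\mathcal L_{m,\mu }f)(\mathsf {v}) = \frac{1}{m(\mathsf {v})}\sum _{\mathsf {e}=\{\mathsf {v},\mathsf {w}\}} \mu (\mathsf {e})\,(f(\mathsf {w})-f(\mathsf {v}))\) for the weighted Laplacian (an assumption, chosen to match the sign convention stated above) and real-valued functions; the graph, the weights and the helper names are hypothetical.

```python
def weighted_laplacian(n, edges, m, mu):
    """Matrix of (Lf)(v) = (1/m[v]) * sum over edges {v, w} of mu(e) * (f(w) - f(v))."""
    Lmat = [[0.0] * n for _ in range(n)]
    for (v, w), weight in zip(edges, mu):
        Lmat[v][w] += weight / m[v]
        Lmat[w][v] += weight / m[w]
        Lmat[v][v] -= weight / m[v]
        Lmat[w][w] -= weight / m[w]
    return Lmat

def apply_matrix(Lmat, f):
    return [sum(row[j] * f[j] for j in range(len(f))) for row in Lmat]

def inner_m(f, g, m):
    """Weighted scalar product on real-valued functions on the vertex set."""
    return sum(m[v] * f[v] * g[v] for v in range(len(m)))

# Hypothetical graph with two connected components {0, 1, 2} and {3, 4}.
edges = [(0, 1), (1, 2), (3, 4)]
mu = [2.0, 1.0, 4.0]                # edge weights
m = [1.0, 2.0, 1.0, 0.5, 3.0]       # vertex weights
Lmat = weighted_laplacian(5, edges, m, mu)

indicator = [1.0, 1.0, 1.0, 0.0, 0.0]    # constant on one component
f = [1.0, -2.0, 0.5, 3.0, -1.0]
g = [0.0, 1.0, 2.0, -1.0, 1.0]

kernel_residual = max(abs(x) for x in apply_matrix(Lmat, indicator))
form_ff = inner_m(apply_matrix(Lmat, f), f, m)
symmetry_gap = abs(inner_m(apply_matrix(Lmat, f), g, m)
                   - inner_m(f, apply_matrix(Lmat, g), m))
print(kernel_residual, form_ff, symmetry_gap)
```

The indicator of a connected component is annihilated regardless of the weights, which is the reason why the null space only depends on the underlying unweighted graph.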

4.1 The General Model

Throughout this section we consider a finite collection \(\mathcal C\) of graphs.

Assumption 4.2

\(\mathcal C= \{\mathsf {G}_1, \ldots , \mathsf {G}_N \}\), where \(\mathsf {G}_1=(\mathsf {V},\mathsf {E}_1,m_1,\mu _1), \ldots , \mathsf {G}_N=(\mathsf {V},\mathsf {E}_N,m_N,\mu _N)\) are simple graphs with the same vertex set \(\mathsf {V}\) but possibly different edge sets \(\mathsf {E}_i\), vertex weights \(m_i\), and edge weights \(\mu _i\), \(i=1,\ldots ,N\).

The following seems to be natural but not quite standard: we prefer to note it explicitly.

Definition 4.3

(Union and intersection of weighted graphs) The union of \(\mathsf {G}_i=(\mathsf {V},\mathsf {E}_i,m_i,\mu _i)\), \(i=1,\ldots ,N\), is the weighted graph \(\mathsf {G}_\cup =(\mathsf {V},\mathsf {E},m,\mu )\) with set of vertices \(\mathsf {V}\), set of edges \(\mathsf {E}:= \bigcup _{i=1}^N \mathsf {E}_i\), vertex weights

and edge weights

Likewise, the intersection of \(\mathsf {G}_i=(\mathsf {V},\mathsf {E}_i,m_i,\mu _i)\), \(i=1,\ldots ,N\), is the weighted graph \(\mathsf {G}_\cap =(\mathsf {V},\mathsf {E},m,\mu )\) with set of vertices \(\mathsf {V}\), set of edges \(\mathsf {E}:= \bigcap _{i=1}^N \mathsf {E}_i\), vertex weights

and edge weights

here we set \(\mu _i(\mathsf {e}):= 0\) if \(\mathsf {e}\not \in \mathsf {E}_i\).

In this way, it is possible to study the behavior of the intersection of the null spaces of the Laplacian operators \(\mathcal L_{m_k,\mu _k}(\mathsf {G}_k)\) associated with the graphs in \(\mathcal C\). This result seems interesting on its own, since it explicitly connects the geometry of the graph with the algebraic property of the Laplacian operator.

Lemma 4.4

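Given \(\mathsf {G}_1,\ldots ,\mathsf {G}_N\) combinatorial graphs satisfying the Assumption 4.2, let \(\mathsf {G}\) be their weighted union graph (see Definition 4.3) and let \(\mathcal L_{m,\mu }(\mathsf {G})\) be the discrete Laplacian on \(\mathsf {G}\). Then

$$\begin{aligned} \ker \mathcal L_{m,\mu }(\mathsf {G}) = \bigcap _{i=1}^N \ker \mathcal L_{m_i,\mu _i}(\mathsf {G}_i). \end{aligned}$$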

Proof

It suffices to work with unweighted graphs; for simplicity, moreover, we only prove the case \(N=2\). In general, suppose that \(\mathsf {G}\), \(\mathsf {G}_1\) and \(\mathsf {G}_2\) are written as disjoint unions of connected components:

Any eigenfunction associated with the null eigenvalue of \(\mathcal L\) shall be constant on any connected component of \(\mathsf {G}\), hence \(\mathcal {B}_{\mathsf {G}}=\{ \mathbb {1}_{h}, \ h=1,\ldots ,l \}\), where

is a basis for \(\ker \mathcal {L}(\mathsf {G})\). Similarly, \(\mathcal {B}_1=\{\mathbb {1}_{1,j}, \ j=1,\ldots ,m\}\) and \(\mathcal {B}_2=\{\mathbb {1}_{2,k}, \ k=1,\ldots ,n\}\) are bases of \(\ker \mathcal {L}(\mathsf {G}_1)\) and \(\ker \mathcal {L}(\mathsf {G}_2)\), respectively. Hence, we only need to prove that for all \(h=1,\ldots ,l\), \(\mathbb {1}_h\) is in the intersection of the null spaces in \(\mathcal {C}\) and then extend the result to \(\ker \mathcal {L}(\mathsf {G})\) by linearity. In particular, denoting

then by construction the function \( \mathbb {1}_h\) will be

and

thus \(\mathbb {1}_h \in \ker \mathcal {L}(\mathsf {G}_1) \cap \ker \mathcal {L}(\mathsf {G}_2),\) in fact it can be written as linear combination of both bases \(\mathcal {B}_1\) and \(\mathcal {B}_2\) as

On the other hand, given \(f \in \ker \mathcal {L}(\mathsf {G}_1) \cap \ker \mathcal {L}(\mathsf {G}_2)\), we have

and

where \(\mathcal {B}_1\) and \(\mathcal {B}_2\) as above. Then, comparing the expressions (4.2) and (4.3), we get that

and f can also be expressed in terms of \(\mathcal {B}_\mathsf {G}\) as

thus \(f \in \ker \mathcal {L}(\mathsf {G}).\) \(\square \)
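The identity between the null spaces can be verified by exact linear algebra on a small example. The following is a minimal sketch, assuming two hypothetical unweighted graphs on five vertices; it compares the dimension of the common null space of the two Laplacians (computed from the rank of the stacked matrices) with the dimension of the null space of the union Laplacian and with the number of connected components of the union graph. The helper names are illustrative.

```python
from fractions import Fraction

def laplacian(n, edges):
    """Unweighted, negative semi-definite Laplacian with exact rational entries."""
    Lmat = [[Fraction(0)] * n for _ in range(n)]
    for v, w in edges:
        Lmat[v][w] += 1
        Lmat[w][v] += 1
        Lmat[v][v] -= 1
        Lmat[w][w] -= 1
    return Lmat

def rank(rows):
    """Exact rank by Gaussian elimination over the rationals."""
    rows = [list(r) for r in rows]
    rk = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(rk, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[rk], rows[pivot] = rows[pivot], rows[rk]
        for i in range(rk + 1, len(rows)):
            factor = rows[i][col] / rows[rk][col]
            if factor != 0:
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[rk])]
        rk += 1
    return rk

def count_components(n, edges):
    """Number of connected components, via a simple union-find."""
    parent = list(range(n))
    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v
    for v, w in edges:
        parent[find(v)] = find(w)
    return len({find(v) for v in range(n)})

n = 5
E1 = [(0, 1), (2, 3)]        # hypothetical edge set of G_1
E2 = [(1, 2)]                # hypothetical edge set of G_2
L1, L2 = laplacian(n, E1), laplacian(n, E2)

dim_intersection = n - rank(L1 + L2)             # stacked rows: common null space
dim_union_kernel = n - rank(laplacian(n, E1 + E2))
components_union = count_components(n, E1 + E2)
print(dim_intersection, dim_union_kernel, components_union)
```

Rational arithmetic is used so that the rank is computed without rounding issues; for larger graphs a floating-point rank routine would be the usual choice.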

As a corollary, we notice the following result, concerning the relation between the connectedness of the union graph \(\mathsf {G}\) (see Remark 4.1) and the dimension of the kernel of the (weighted or unweighted) Laplacian operator.

Corollary 4.5

Given \(\mathsf {G}_1,\ldots ,\mathsf {G}_N\) combinatorial graphs satisfying the Assumption 4.2, let \(\mathsf {G}\) be their weighted union graph. Then

Partially motivated by Corollary 4.5, with a slight abuse of notation we write in the following \(\mathcal L_i:=\mathcal L_{m_i,\mu _i}(\mathsf {G}_i)\), \(i=1,\ldots ,N\). Given a probability space \((\Omega ,\mathcal F,\mathbb P)\), let \((Z(t))_{t \ge 0}\) be a semi-Markov process on the state space \(E = \{1,\dots , N\}\) which satisfies Assumption 2.1. In this section, we shall consider the random Cauchy problem

where \(\mathcal L_{X_k}\) is the discrete Laplace operator associated with the currently selected graph \(\mathsf {G}_{X_k}\). The above equation is also known in the literature as the (random) discrete heat equation.

We can now state our main result in this section. We recall that the relevant operators \(\mathcal L_{m,\mu }\) satisfy the Assumptions 2.4 and 2.7, hence the following is a direct consequence of Theorem 2.11.

Theorem 4.6

Let \((Z(t))_{t\ge 0}\) be a semi-Markov process and \(\mathcal C\) be a family of graphs that satisfy the Assumptions 2.1 and 4.2, respectively. Then the random propagator \((S(t))_{t\ge 0}\) for the Cauchy problem (4.5) converges in norm \(\mathbb P\)-almost surely towards the orthogonal projector \({P_K}\) onto the space \(K = \displaystyle \bigcap _{i=1}^N \ker \mathcal L_i\).

The limiting operator can be identified with the orthogonal projector onto the constant functions, provided \(\mathsf {G}\) is connected.

Corollary 4.7

Under the assumptions of Theorem 4.6, \((S(t))_{t\ge 0}\) converges in norm \(\mathbb P\)-almost surely to \(P_0\) if and only if the union graph \(\mathsf {G}\) is connected.

Proof

Corollary 4.5 implies that \(P_0 = {P_K}\) if and only if the union graph \(\mathsf {G}\) is connected, while Theorem 4.6 implies the convergence of S(t) towards \({P_K}\), hence the sufficiency and necessity of the condition. \(\square \)
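The convergence in Corollary 4.7 can be observed in a small simulation. The following is a minimal sketch, assuming two hypothetical graphs on three vertices, each disconnected but with connected union, a deterministic alternation between them (an irreducible chain of period 2), and waiting times drawn uniformly from [0.5, 1.5] with a fixed seed; the truncated Taylor series in `expm` is an illustrative stand-in for a proper matrix exponential routine and is only adequate for matrices of small norm.

```python
import random

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def expm(A, terms=40):
    """Matrix exponential by a truncated Taylor series (small matrices only)."""
    result = identity(len(A))
    term = identity(len(A))
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]   # A^k / k!
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

# Hypothetical graphs on V = {0, 1, 2}: G_1 has the edge {0, 1}, G_2 the edge {1, 2}.
L1 = [[-1.0, 1.0, 0.0], [1.0, -1.0, 0.0], [0.0, 0.0, 0.0]]
L2 = [[0.0, 0.0, 0.0], [0.0, -1.0, 1.0], [0.0, 1.0, -1.0]]

rng = random.Random(0)
S = identity(3)
for step in range(200):
    generator = L1 if step % 2 == 0 else L2
    tau = rng.uniform(0.5, 1.5)                    # waiting time in the current state
    S = matmul(expm([[tau * x for x in row] for row in generator]), S)

P0 = [[1.0 / 3.0] * 3 for _ in range(3)]           # projector onto constant functions
error = max(abs(S[i][j] - P0[i][j]) for i in range(3) for j in range(3))
print(error)
```

Neither graph alone drives the system to the constants, since each Laplacian has a two-dimensional null space; it is the switching that produces the averaging over all three vertices.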

In the last part of this section we present two special cases of evolution on combinatorial graphs where we discuss the relation between our result and the existing literature.

4.2 Connected Graphs

In this section we assume that all the graphs in \(\mathcal C\) are connected. As we have already seen, this assumption is unnecessarily strong if we aim at solving (P).

However, we are going to show an interesting link between our problem and the analysis of the so-called left-convergent product sets [14]. For simplicity, in this section we assume that \(\tau _n = 1\) for every n, hence \(T_n = n\) and \(Z(t) = Z(\lfloor t \rfloor ) = X_{\lfloor t \rfloor }\).

A set \(\mathcal K=\left\{ M_1, \ldots , M_{N} \right\} \) of matrices is said to have the left-convergent product property, or simply to be an LCP set, if for every sequence \(\mathsf {j}= (j_n)_{ n \in \mathbb N}\) taking values in \(\{1,\ldots ,N \}\) the infinite left-product \(M_\mathsf {j}:= \displaystyle \prod _{k=0}^\infty M_{j_k}\) converges. Given two sequences \(\mathsf {j}\) and \(\mathsf {j}'\), define the metric

and call the topology induced by \(\mathsf {d}\) on \(\mathbb S= \left\{ \mathsf {j}= (j_n)_{n \in \mathbb N}, \ j_n \in \{1,\ldots ,N\} \right\} \) the sequence topology on \(\mathbb S\). It is known [17] that \(\mathcal K\) is an LCP set if \(\mathcal K\) is paracontracting, meaning that for some matrix norm

The issue of convergence of infinite products of matrices has been finally settled in a fundamental paper by Daubechies and Lagarias: in particular, see [14, Thm. 4.1 and Thm. 4.2] and also the erratum in [16].

Proposition 4.8

[14, Thm. 4.2] Let \(\mathcal K\) be a finite set of \(d \times d\) matrices. Then the following are equivalent.

-

(a)

\(\mathcal K\) is an LCP set whose limit function \(\mathsf {j}\mapsto M_\mathsf {j}\) is continuous with respect to the sequence topology on \(\mathbb S\).

-

(b)

All matrices \(M_i\) in \(\mathcal K\) have the same eigenspace \(E_1\) with respect to the eigenvalue 1, this eigenspace is simple for all \(M_i\), and there exists a vector space V such that \(\mathbb C^{d} = E_1 \oplus V\) and such that if \(P_V\) is the oblique projector onto V away from \(E_1\), then \(P_V \mathcal KP_V\) is an LCP set whose limit function is identically 0.

In particular, if \(E_1\) is a 1-dimensional subspace, then the limit function M is the projector onto this space.

Now we can state this result in the setting of combinatorial graphs. Under the assumption of connectedness of all graphs, Theorem 2.11 states that S(t) will converge to \(P_0\) (the projector on the subspace \(\langle \mathbb {1}\rangle \) of constant functions) no matter which sequence of graphs we follow in (4.5), thus it provides the same result as in the deterministic case treated in Proposition 4.8. We shall give in Lemma 4.9 an alternative proof to this result, which specializes to the notation of graph theory.

Lemma 4.9

Let \(\mathcal {C}=\{\mathsf {G}_1, \ldots , \mathsf {G}_N\}\) be a finite family of connected graphs and \(Z = (X_n, \tau _n=1)\) be an irreducible Markov chain. Then for any path of the process Z the limit

holds for the random evolution problem (4.5).

Proof

In our assumptions, 0 is a simple eigenvalue of each Laplacian matrix \(\mathcal L_k:=\mathcal L(\mathsf {G}_k)\) with associated eigenvector \(\mathbb {1}\).

The complementary operator \(P_0^\perp := I - P_0\) is again an orthogonal projector, with range \(\langle \mathbb {1} \rangle ^{\perp }\). Notice that \(P_0 S(t) = P_0\) because \({{\,\mathrm{rg}\,}}P_0 = \langle \mathbb {1}\rangle \) is contained in

for every \(1 \le k \le N\). Therefore,

and we can prove the assertion by showing that

First of all, by definition \(P_0^\perp \) is idempotent and commutes with the exponential matrix of every Laplace operator. Hence

We claim that

By the finiteness of \(\mathsf {E}\), we denote by

For all \(t>0,\) let \(k \in \mathbb {N}\) be such that \(k \le t < k+1\). By sub-multiplicativity of the matrix norm we have

If \(t \rightarrow +\infty \), then \(k \rightarrow +\infty \) and we finally get

which implies the thesis.

In order to complete the proof it remains to show that claim (4.8) holds. We have proved a more refined version of this claim in Lemma 3.4; however, in the current setting, the proof is straightforward. Let \(\mathcal L\) denote the Laplacian operator for a connected graph \(\mathsf {G}\). By a direct computation we have for all \(t>0\)

whence \(\Vert (I-{P_0}) e^{\delta \mathcal L} \Vert ^2 \le e^{2 \delta \lambda _{2}} <1\) since \(\lambda _{2} < 0\). \(\square \)

4.3 Randomly Switching Combinatorial Graphs with Non-zero Second Eigenvalue

The goal here is to apply our exponential convergence criteria to combinatorial graphs. Consider the random evolution problem (4.5); we are going to show the exponential convergence of the random propagator \((S(t))_{t\ge 0}\) provided that the following assumption holds:

Assumption 4.10

There exists one combinatorial graph in \(\mathcal C\), say \(\mathsf {G}_1\), such that each connected component of \(\mathsf {G}_j\), \(j \ne 1\), is contained in one of the connected components of \(\mathsf {G}_1\).

As shown in the proof of Lemma 4.4, a consequence of the assumption above is that \(\ker \mathcal L_1 \subseteq \ker \mathcal L_j,\) \(j \ne 1,\) therefore the Assumption 2.12 is satisfied and we can directly apply Theorem 2.13.

Corollary 4.11

Let \((Z(t))_{t\ge 0}\) be a semi-Markov process and \(\mathcal C\) be a family of graphs that satisfy the Assumptions 2.1 and 4.2, respectively. Let additionally the Assumption 4.10 hold.

Then the random propagator \((S(t))_{t \ge 0}\) converges in norm \(\mathbb P\)-almost surely exponentially fast towards the orthogonal projector \(P_K\) with an exponential rate no lower than

that is the average of the values \(s_d(\mathcal L_j)\) (introduced in Remark 2.9) with respect to the fraction of time \(\Theta _j\) spent by the process Z(t) in the various states.Footnote 2

Proof

The assertion follows from Theorem 2.13. Notice that the exponential rate can be computed by

which converges, as \(t \rightarrow \infty \), to (compare Remark 2.2)

This concludes the proof. \(\square \)

Remark 4.12

Assume that \(\mathsf {G}_1\) is connected (then the Assumption 4.10 is verified). It follows that the union graph \(\mathsf {G}\) is connected, too, hence the intersection space K is one-dimensional and \(P_K = P_0\) is the projection onto the space of constant functions on \(\mathsf {V}\). Moreover \(s_d(\mathcal L_1)=\lambda _{2}(\mathcal L_1) <0\). Adapting the proof of [19, Cor. 3.2] (where the convention is adopted that \(\mathcal L\) is positive semi-definite) we see that each of the discrete Laplacians \(\mathcal L_k\) on the weighted combinatorial graph \(\mathsf {G}_k\) has second largest eigenvalue \(\lambda _2(\mathcal L_k) := \lambda _2(\mathsf {G}_k)\in [\lambda _2(\mathsf {G}_\cup ),\lambda _2(\mathsf {G}_\cap )]\), where \(\mathsf {G}_\cup ,\mathsf {G}_\cap \) are the union and intersection graph introduced in Definition 4.3, respectively: therefore we conclude that the convergence to equilibrium for the randomly switching problem is not faster (resp., not slower) than in the case of the heat equation on \(\mathsf {G}_\cup \) (resp., on \(\mathsf {G}_\cap \); observe that \(\mathsf {G}_\cap \) may however be disconnected, and hence \(\lambda _2(\mathsf {G}_\cap )\) may vanish, even if all \(\mathsf {G}_k\) are connected).

Estimates on the rate of convergence to equilibrium of the random propagator are readily available: it is well-known that, for a generic unweighted connected graph \(\mathsf {G}\), \(-|\mathsf {V}|\le \lambda _2(\mathsf {G})\le -2(1-\cos \frac{\pi }{|\mathsf {V}|})\), where the second inequality is an equality if and only if \(\mathsf {G}\) is a path graph, see [19, 3.10 and 4.3]. It follows that \(\lambda _2(\mathcal L_k)\in [-|\mathsf {V}|,-2(1-\cos \frac{\pi }{|\mathsf {V}|})]\) if in particular \(\mathsf {G}_\cap \) is connected; this gives an estimate on the convergence rate in Corollary 4.11.
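The two extreme values in the bound above can be exhibited with explicit eigenvectors. The following is a minimal sketch, assuming unweighted graphs on \(n=6\) vertices and the sign convention of this section (negative semi-definite Laplacian); it checks that \(-2(1-\cos \frac{\pi }{n})\) is an eigenvalue of the path graph and that \(-n\) is an eigenvalue of the complete graph. It only verifies these eigenpairs and does not prove that they are the second largest eigenvalues; the helper names are illustrative.

```python
import math

def laplacian(n, edges):
    """Unweighted, negative semi-definite Laplacian as a list of rows."""
    Lmat = [[0.0] * n for _ in range(n)]
    for v, w in edges:
        Lmat[v][w] += 1.0
        Lmat[w][v] += 1.0
        Lmat[v][v] -= 1.0
        Lmat[w][w] -= 1.0
    return Lmat

def eigen_residual(Lmat, vec, lam):
    """Largest entry of |L vec - lam * vec|."""
    image = [sum(row[j] * vec[j] for j in range(len(vec))) for row in Lmat]
    return max(abs(a - lam * b) for a, b in zip(image, vec))

n = 6
path_edges = [(j, j + 1) for j in range(n - 1)]
complete_edges = [(i, j) for i in range(n) for j in range(i + 1, n)]

# Path graph: cosine profile with half a period across the path.
lam_path = -2.0 * (1.0 - math.cos(math.pi / n))
vec_path = [math.cos(math.pi * (j + 0.5) / n) for j in range(n)]
residual_path = eigen_residual(laplacian(n, path_edges), vec_path, lam_path)

# Complete graph: any vector orthogonal to the constants is an eigenvector for -n.
lam_complete = -float(n)
vec_complete = [1.0, -1.0] + [0.0] * (n - 2)
residual_complete = eigen_residual(laplacian(n, complete_edges), vec_complete, lam_complete)

print(lam_path, residual_path, lam_complete, residual_complete)
```

For \(n=6\) the path value is roughly \(-0.27\) while the complete graph value is \(-6\), which illustrates how strongly the guaranteed convergence rate depends on the connectivity of the graphs involved.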

5 Metric Graphs

In this section we discuss the application of Theorem 2.11 to finite metric graphs. Roughly speaking, metric graphs are usual graphs (as known from discrete mathematics) whose edges are identified with real intervals (in this case, finitely many intervals of finite length); loops and multiple edges between vertices are allowed. While this casual explanation is usually sufficient [6, 31], for our purposes we will need a more formal definition. We are going to follow the approach and formalism in [30].

Let \(\mathsf {E}\) be a finite set. Given some \((\ell _\mathsf {e})_{\mathsf {e}\in \mathsf {E}}\subset (0,\infty )\), we consider the disjoint union of intervals

we adopt the usual notation \((x,\mathsf {e})\) for the element of \(\mathcal E\) with \(x\in [0,\ell _\mathsf {e}]\) and \(\mathsf {e}\in \mathsf {E}\).

We can define on \(\mathcal E\) a (generalized) metric by setting

Consider the set

of endpoints of \(\mathcal E\). Given any equivalence relation \(\equiv \) on \(\mathcal V\), we extend it to an equivalence relation on \(\mathcal E\) as follows: two distinct elements \((x_1,\mathsf {e}_1) \not = (x_2,\mathsf {e}_2)\in \mathcal E\) belong to the same equivalence class in \(\mathcal E\) if and only if they belong to the set of vertices \(\mathcal V\) and they are equivalent with respect to the relation \(\equiv \) on \(\mathcal V\), \((x_1,\mathsf {e}_1)\equiv (x_2,\mathsf {e}_2)\): we denote this equivalence relation on \(\mathcal E\) again by \(\equiv \) and we call \(\mathcal {G}:={\mathcal {E}}/{\equiv }\) a metric graph, with \(\mathsf {E}\) its set of edges and \(\mathsf {V}:={\mathcal {V}}/{\equiv }\) its set of vertices. So, a vertex \(\mathsf {v}\in \mathsf {V}\) is by definition an equivalence class consisting of boundary elements from \(\mathcal E\), like \((0,\mathsf {e})\) or \((\ell _\mathsf {f},\mathsf {f})\). Beyond our formalism, the equivalence relation on \(\mathcal V\) can be understood as follows: two elements of \(\mathcal V\) belong to the same equivalence class and can hence be identified if they are endpoints of two adjacent edges corresponding to the same vertex of the underlying combinatorial graph: see [30, Rem. 1.7] for more details, which will however not be necessary in the present context.

Two edges \(\mathsf {e},\mathsf {f}\in \mathsf {E}\) are said to be adjacent if one endpoint of \(\mathsf {e}\) and one endpoint of \(\mathsf {f}\) lie in the same equivalence class \(\mathsf {v}\in \mathsf {V}\) (i.e., if \(\mathsf {e},\mathsf {f}\) share an endpoint, up to identification by \(\equiv \)); in this case we write \(\mathsf {e}\sim \mathsf {f}\). Also, two vertices \(\mathsf {v},\mathsf {w}\in \mathsf {V}\) are said to be adjacent if there exists some (not necessarily unique) \(\mathsf {e}\in \mathsf {E}\) such that \(\{\mathsf {x},\mathsf {y}\}=\{(0, \mathsf {e}), (\ell _\mathsf {e}, \mathsf {e})\}\) for representatives \(\mathsf {x}\) of \(\mathsf {v}\) and \(\mathsf {y}\) of \(\mathsf {w}\) (i.e., if there is an edge whose endpoints are \(\mathsf {v},\mathsf {w}\), up to identification by \(\equiv \)); in this case we write \(\mathsf {v}\sim \mathsf {w}\).

Let us stress that by definition a metric graph is uniquely determined by a family \((\ell _\mathsf {e})_{\mathsf {e}\in \mathsf {E}}\) and an equivalence relation on \(\mathcal V\); however, its metric structure is independent of the orientation of the edges.
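Since a metric graph is determined by the edge lengths and the identification of endpoints, it can be stored with very little data. The following is a minimal sketch, assuming edges are labelled by strings, endpoints are encoded as pairs `(x, e)` with `x` equal to `0.0` or to the length of `e`, and the equivalence relation is given as a list of glued endpoint sets (endpoints not listed form singleton classes); the function name `vertices_and_components` and the sample graphs are hypothetical. It counts the vertices and the pieces of the graph whose points can be joined by a path.

```python
def vertices_and_components(lengths, glued):
    """Return (number of vertices, number of connected pieces) of a metric graph.

    lengths: dict mapping an edge label to its positive length
    glued:   list of sets of endpoints (x, e) identified with each other
    """
    endpoints = [(0.0, e) for e in lengths] + [(lengths[e], e) for e in lengths]
    parent = {pt: pt for pt in endpoints}

    def find(pt):
        while parent[pt] != pt:
            parent[pt] = parent[parent[pt]]
            pt = parent[pt]
        return pt

    def union(a, b):
        parent[find(a)] = find(b)

    # Step 1: identify endpoints according to the equivalence relation.
    for cls in glued:
        members = list(cls)
        for pt in members[1:]:
            union(members[0], pt)
    n_vertices = len({find(pt) for pt in endpoints})

    # Step 2: each edge links its two endpoints, regardless of orientation.
    for e, ell in lengths.items():
        union((0.0, e), (ell, e))
    n_components = len({find(pt) for pt in endpoints})
    return n_vertices, n_components

lengths = {"a": 1.0, "b": 2.0, "c": 0.5}
two_pieces = vertices_and_components(lengths, [{(1.0, "a"), (0.0, "b")}])
one_piece = vertices_and_components(
    lengths, [{(1.0, "a"), (0.0, "b")}, {(2.0, "b"), (0.0, "c")}])
print(two_pieces, one_piece)
```

Swapping the roles of the two endpoints of an edge changes neither count, in line with the independence of the orientation noted above.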

As a quotient of metric spaces, any metric graph is a metric space in its own right with respect to the canonical quotient metric defined by

where the infimum is taken over all \(k\in \mathbb N\) and all pairs of k-tuples \((\xi _1,\ldots , \xi _k)\) and \((\theta _1,\ldots , \theta _k)\) with \(\xi =\xi _1\), \(\theta =\theta _k\), and \(\theta _i\sim \xi _{i+1}\) for all \(i=1,\ldots ,k-1\), [4, Def. 3.1.12], where \(\sim \) denotes the adjacency relation introduced before. We call \(d_{\mathcal G}\) the path metric of \(\mathcal G\). A metric graph is said to be connected if the path metric does not attain the value \(\infty \); in other words, if any two points of \(\mathcal G\) can be linked by a path. Along with this metric structure there is a natural measure induced by the Lebesgue measure on each interval; accordingly, we can introduce the spaces

as well as

Again, these definitions do not depend on the orientation of the metric graph; but the notation

does.

On the graph \(\mathcal G\) we aim to introduce a differential operator acting as the second derivative on the functions \(f_j(x)\) on every edge \(\mathsf {e}_j\); and possibly more general operators of the form

for some elliptic coefficient \(p\ge p_0>0\) of class \(W^{1,\infty }\), \(p_0\in \mathbb R\). While it is natural to require that \(f_\mathsf {e}\in H^2(0,\ell _\mathsf {e})\) for every edge \(\mathsf {e}\), taking \(\bigoplus _{\mathsf {e}\in \mathsf {E}} H^2(0,\ell _\mathsf {e})\) as domain only defines an operator acting on functions on \(L^2(\mathcal E)\): this is not sufficient in order to define a self-adjoint operator and suitable boundary conditions shall thus be imposed in order for \(A_{\max }\) to satisfy the Assumption 2.4.

Each realization of the elliptic operator A we are interested in is equipped with natural vertex conditions: for each element u in its domain

-

\(u\in C(\mathcal G)\), and in particular u is continuous across vertices;

-

u satisfies the Kirchhoff condition at each vertex, namely

$$\begin{aligned} \forall \, \mathsf {v}\in \mathsf {V}:\qquad \sum _{\begin{array}{c} \mathsf {e}\in \mathsf {E}\\ (0,\mathsf {e})\in \mathsf {v} \end{array}} p_{\mathsf {e}}(\mathsf {v}) u'_\mathsf {e}(\mathsf {v})= \sum _{\begin{array}{c} \mathsf {f}\in \mathsf {E}\\ (\ell _{\mathsf {f}},\mathsf {f})\in \mathsf {v} \end{array}} p_{\mathsf {f}}(\mathsf {v}) u'_\mathsf {f}(\mathsf {v}), \end{aligned}$$ (Kc)

i.e., the weighted sum of the inflows equals the weighted sum of the outflows.

(Observe that in any vertex with degree 1 the latter becomes a Neumann boundary condition; and that the case \(p \equiv 1\) defines the usual Laplacian \(\Delta \) with natural vertex conditions on the metric graph \(\mathcal G\).)

We can now define the operator A with natural vertex conditions on \(\mathcal {G}\), i.e.

Let us summarize the main results we need in our construction for the operator A with natural vertex conditions. They are part of a general, well-established theory, see e.g. [25, Thm. 2.5 and Cor. 3.3] for edgewise constant coefficients \(p_\mathsf {e}\) and [31, Thm. 6.67] for the general case.

Proposition 5.1

The operator A with natural vertex conditions on \(H = L^2(\mathcal G)\) is densely defined, closed, self-adjoint, and negative semi-definite; it has compact resolvent.

Thus, Assumptions 2.4 and 2.7 are satisfied and A generates a contractive strongly continuous semigroup, denoted by \((e^{t A})_{t \ge 0}\). Hence the abstract Cauchy problem

$$\begin{aligned} {\left\{ \begin{array}{ll} u'(t) = A u(t), &{} t \ge 0,\\ u(0) = f \end{array}\right. } \end{aligned}$$

is well-posed: for every \(f \in L^2(\mathcal G)\) there exists a unique mild solution given by

$$\begin{aligned} u(t) = e^{tA} f, \qquad t \ge 0. \end{aligned}$$

Moreover, continuous dependence on the initial data holds. Because A is self-adjoint, the semigroup is analytic; hence, for all \(f\in L^2(\mathcal G)\), the solution u is of class \(C^1((0,\infty );L^2(\mathcal G))\cap C((0,\infty );D(A))\).

By Proposition 5.1, the spectrum of A consists of non-positive eigenvalues of finite multiplicity and the spectral bound satisfies \(s(A) = 0 \in \sigma (A)\). Determining the complete spectrum is in general an open problem: it is fully known only in a few cases, and otherwise only upper and lower bounds on the eigenvalues are available. In this work, we emphasize the following property of \(\sigma (A)\), see [25, Theorem 4.3].

Proposition 5.2

Let \(\mathcal {G} \) be a finite metric graph and denote by \(\mathcal G^{(1)},\ldots ,\mathcal G^{(l)}\) its connected components. Then, the multiplicity of 0 as eigenvalue of the operator A with natural vertex conditions is l. In particular, the piecewise constant functions \(\left\{ \mathbb {1}_h\right\} _{h=1}^{l}\), where

$$\begin{aligned} \mathbb {1}_h(x) := {\left\{ \begin{array}{ll} 1, &{} x\in \mathcal G^{(h)},\\ 0, &{} \text {otherwise,} \end{array}\right. } \end{aligned}$$

for all \(h=1,\ldots ,l\), form a basis of \(\ker A\).
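Proposition 5.2 reduces the multiplicity of the eigenvalue 0 to a purely combinatorial count. The following is a minimal sketch of that count, under an assumed encoding that is not taken from the text: vertices are labelled 0, 1, 2, ..., each edge is given as a pair (initial vertex, terminal vertex), and the hypothetical helper `count_components` uses a small union-find structure. Edge lengths and the coefficient p play no role here.

```python
def count_components(num_vertices, edges):
    """Number of connected components of a graph with vertices 0..num_vertices-1.

    `edges` is a list of (initial_vertex, terminal_vertex) pairs (assumed encoding).
    By Proposition 5.2 this number equals the multiplicity of the eigenvalue 0.
    """
    parent = list(range(num_vertices))

    def find(v):
        # Follow parent pointers to the representative, halving the path on the way.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for a, b in edges:
        # An edge puts its two end vertices in the same component.
        parent[find(a)] = find(b)

    return len({find(v) for v in range(num_vertices)})


# A path 0 - 1 - 2 (one component) versus two disjoint edges 0 - 1 and 2 - 3.
kernel_dim_path = count_components(3, [(0, 1), (1, 2)])
kernel_dim_split = count_components(4, [(0, 1), (2, 3)])
```

In the first case the kernel is spanned by the constant function; in the second it is two-dimensional, spanned by the indicator functions of the two edges.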

5.1 A Motivating Example

Let us study on the interval [0, 2] the heat equation

$$\begin{aligned} {\left\{ \begin{array}{ll} \dfrac{\partial u}{\partial t}(t,x) = \dfrac{\partial ^2 u}{\partial x^2}(t,x), &{} t> 0,\ x\in (0,2),\\ u(0,x) = u_0(x), &{} x\in (0,2), \end{array}\right. } \end{aligned}$$

where \(u_0 \in L^2(0,2)\). We analyze two well-known boundary value problems: in the first, we impose Neumann conditions at \(x=0\) and \(x=2\); in the second, we keep these boundary conditions and add a Neumann condition at the midpoint \(x=1\).

Model A describes the evolution of the heat equation on [0, 2] with Neumann boundary conditions at 0 and 2. Formally, however, we consider [0, 2] as the graph \(\mathcal G_1\) with \(\mathsf {V}= \{0,1,2\}\) and edges \(\mathsf {e}_1 = [0,1]\) and \(\mathsf {e}_2=[1,2]\).

The evolution is thus described by the Laplace operator \(\Delta _1\) given by

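The spectrum of \(\Delta _1\) clearly agrees with that of the Laplacian with Neumann conditions on [0, 2], i.e.,

$$\begin{aligned} \sigma (\Delta _1) = \left\{ -\frac{k^2\pi ^2}{4} :\ k=0,1,2,\ldots \right\} , \end{aligned}$$

with associated eigenfunctions, normalized in \(L^2(0,2)\),

$$\begin{aligned} \varphi _0(x) = \frac{1}{\sqrt{2}}, \qquad \varphi _k(x) = \cos \left( \frac{k\pi x}{2}\right) , \quad k\ge 1. \end{aligned}$$

In this way, for every initial condition \(u_0 \in L^2(0,2)\), we can explicitly write the solution in terms of the spectral representation

$$\begin{aligned} u(t,x) = \sum _{k=0}^{\infty } e^{-\frac{k^2\pi ^2}{4}t}\, \langle u_0,\varphi _k\rangle \, \varphi _k(x), \end{aligned}$$

where \(\langle \cdot ,\cdot \rangle \) denotes the inner product of \(L^2(0,2)\), and, as expected, the limit distribution for long times agrees with the average of \(u_0\) computed on the interval [0, 2]:

$$\begin{aligned} \lim _{t\rightarrow \infty } u(t,x) = \langle u_0,\varphi _0\rangle \, \varphi _0(x) = \frac{1}{2}\int _0^2 u_0(y)\,\mathrm {d}y. \end{aligned}$$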

Model B describes the evolution of the heat equation on [0, 2] with Neumann boundary conditions at 0, at 2, and at 1. Formally, we consider [0, 2] as the graph \(\mathcal G_2\) with \(\mathsf {V}= \{0,1, 1', 2\}\) and edges \(\mathsf {e}_1 = [0,1]\) and \(\mathsf {e}_2=[1',2]\).

The Laplace operator associated with \(\mathcal G_2\) is \(\Delta _2\) with domain

Here the dynamics is somewhat different from the previous one: the Neumann condition placed at \(x=1\) acts like an insulating “wall” through which no heat exchange is allowed. The spectrum in this case is

$$\begin{aligned} \sigma (\Delta _2) = \left\{ -k^2\pi ^2 :\ k=0,1,2,\ldots \right\} , \end{aligned}$$

where every eigenvalue now has multiplicity two.

For every initial condition \(g = (g_1, g_2) \in L^2(\mathcal {G})\) the solution u(t) converges, as \(t \rightarrow \infty \), to the vector-valued function whose two coordinates are the mean values of \(g_1\) and \(g_2\), respectively.

Starting from these two models, we now introduce the following scenario: suppose that we study heat diffusion along the interval [0, 2] with Neumann boundary conditions, but at each renewal time \(T_n\) we may add or remove a third Neumann condition at \(x=1\). The choice between three and two constraints is determined by a suitable random process. This means that the system switches between Model A and Model B, and the stochastic evolution problem is of the form (2.2).

We shall see that the asymptotic behavior of our system is given by \(\mathbb P\)-almost sure convergence in norm towards the orthogonal projector \(P_0\) onto the constant functions.
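The two long-time limits described above can be compared numerically. The sketch below is only an illustration under stated assumptions: functions on [0, 2] are replaced by their values at n midpoints of a uniform grid, the hypothetical helpers `project_model_a` and `project_model_b` implement the limits of Model A (global mean) and Model B (mean on each half), and the initial datum \(u_0(x)=x\) is an arbitrary choice. It does not simulate the random switching itself; it only checks that composing the two limit projections yields the global mean, which is consistent with convergence towards \(P_0\).

```python
def mean(values):
    """Arithmetic mean of a non-empty list of floats."""
    return sum(values) / len(values)


def project_model_a(samples):
    """Limit of Model A: every sample is replaced by the mean over [0, 2]."""
    m = mean(samples)
    return [m] * len(samples)


def project_model_b(samples):
    """Limit of Model B: separate means over [0, 1] and over [1, 2]."""
    half = len(samples) // 2
    left, right = samples[:half], samples[half:]
    return [mean(left)] * half + [mean(right)] * (len(samples) - half)


n = 1000                                        # even, so x = 1 is a grid boundary
xs = [(i + 0.5) * 2.0 / n for i in range(n)]    # midpoints of the grid cells
u0 = list(xs)                                   # assumed initial datum u0(x) = x

limit_a = project_model_a(u0)                   # constant, close to 1.0
limit_b = project_model_b(u0)                   # close to 0.5 on [0,1], 1.5 on [1,2]
limit_switched = project_model_a(limit_b)       # Model B limit followed by Model A
```

For this datum the Model B limit is not constant, but applying the Model A projection afterwards recovers the global mean: the constants form the common part of the two null spaces.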

5.2 The General Model

Like in Sect. 4, we are going to introduce ensembles of metric graphs.

Assumption 5.3

\(\mathcal C= \{\mathcal G_1, \ldots , \mathcal G_N\}\), where \(\mathcal G_1, \ldots , \mathcal G_N\) are metric graphs with the same edge set \(\mathcal E\) (i.e., defined upon the same finite set \(\mathsf {E}\) and the same vector \((\ell _\mathsf {e})_{\mathsf {e}\in \mathsf {E}}\)) but possibly different sets of vertices \(\mathcal V_1:=\mathcal V(\mathcal G_1), \ldots , \mathcal V_N:=\mathcal V(\mathcal G_N)\) (i.e., the equivalence relations \(\equiv _1,\ldots , \equiv _N\) may be different).

Once again, we introduce a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) and a semi-Markov process \((Z(t))_{t\ge 0}\) satisfying Assumption 2.1.

At this point, we can associate with each graph \(\mathcal G_i\) in \(\mathcal {C}\) an operator \(A_i\) with natural vertex conditions and elliptic coefficient \(p_i \in W^{1,\infty }\) as in (5.2), which we denote by

we emphasize that the different vertex sets induce different operator domains, even though all operators satisfy the same class of vertex conditions: for example, “cutting through a vertex”, hence producing two vertices of lower degree out of a vertex of larger degree, induces a new operator with relaxed continuity conditions (and two new Kirchhoff conditions).

By Proposition 5.1, all these operators satisfy Assumptions 2.4 and 2.7. We can now state our main problem, i.e., the continuous random evolution on metric graphs

We recall that S(t) is the random propagator associated with problem (5.5) such that \(u(t) = S(t)f\). Our aim is again to link the convergence of S(t) towards the orthogonal projector \(P_0\) to the connectedness of the union of the graphs in \(\mathcal C\). The key point here is to define the concept of union graph in the metric setting: this follows immediately from the above formalism, see [30].

Definition 5.4

(Union and intersection of metric graphs)

Let \(\mathcal {G}_1,\ldots ,\mathcal {G}_N\) be metric graphs defined on the same \(\mathcal E\), i.e., \(\mathcal G_i={\mathcal E}/{\equiv _i}\), \(i=1,\ldots ,N\). Denote by \(\equiv _\cup \) and by \(\equiv _\cap \) the equivalence relations obtained by taking the reflexive, symmetric, and transitive closure of \(\bigcup _{i=1}^N \equiv _i \ \subset \mathcal V\times \mathcal V\) and \(\bigcap _{i=1}^N \equiv _i \ \subset \mathcal V\times \mathcal V\), respectively. Then, we call union and intersection metric graph the metric graphs

respectively.
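The closure appearing in Definition 5.4 can be computed with a union-find structure. The following is a minimal sketch under an assumed encoding that is not part of the text: the endpoint `(j, 0)` stands for the initial point of the j-th edge and `(j, 1)` for its terminal point, each graph is described by its vertex classes containing more than one endpoint, and `join_vertex_classes` is a hypothetical helper name.

```python
def join_vertex_classes(endpoints, graphs):
    """Vertex classes of the union graph.

    `endpoints` lists all edge endpoints (j, 0) and (j, 1); `graphs` is a list
    whose i-th entry is the collection of vertex classes (sets of endpoints) of
    the i-th graph. Singleton classes may be omitted. The result is the partition
    induced by the smallest equivalence relation containing all given ones.
    """
    parent = {p: p for p in endpoints}

    def find(p):
        while parent[p] != p:
            parent[p] = parent[parent[p]]  # path halving
            p = parent[p]
        return p

    for vertex_classes in graphs:
        for vertex in vertex_classes:
            members = list(vertex)
            for q in members[1:]:
                # Endpoints glued in some graph stay glued in the union graph.
                parent[find(q)] = find(members[0])

    classes = {}
    for p in endpoints:
        classes.setdefault(find(p), set()).add(p)
    return sorted(sorted(c) for c in classes.values())


# Two edges; the first graph glues the end of edge 0 to the start of edge 1,
# the second graph glues the start of edge 0 to the end of edge 1.
endpoints = [(0, 0), (0, 1), (1, 0), (1, 1)]
g1 = [{(0, 1), (1, 0)}]
g2 = [{(0, 0), (1, 1)}]
union_vertices = join_vertex_classes(endpoints, [g1, g2])
```

In this toy example each graph is a path, while the union graph has two vertices of degree two, that is, it is a cycle made of the two edges.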

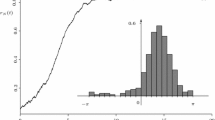

Fig. 3 shows some examples of union graphs.

Remark 5.5

We observe that for fixed \(\equiv _1,\equiv _2\), the union metric graph \(\mathcal {G}_1 \cup \mathcal {G}_2\) does depend on the orientations of the edges in \(\mathcal E\) (as do \(\mathcal {G}_1\) and \(\mathcal {G}_2\)); this is in sharp contrast to the case of combinatorial graphs.

For instance, we can take the same graphs \(\mathcal {G}_1\) and \(\mathcal {G}_2\) in the third example in Fig. 3 and just reverse the orientation of one edge as shown in Fig. 4.

Our main result in this section is the following lemma, which relates the null spaces of the elliptic operators with natural vertex conditions to the connectedness of the union graph.

Lemma 5.6

Let \(\mathcal {G}_1,\ldots ,\mathcal {G}_N\) be metric graphs satisfying Assumption 5.3 and let \(\mathcal G\) be their union graph (see Definition 5.4). Let \(A_i\) be the elliptic operators with natural vertex conditions associated with \(\mathcal G_i\) and coefficients \(p_i \in W^{1,\infty }(\mathcal G_i)\). Then

$$\begin{aligned} \bigcap _{i=1}^N \ker A_i = \langle \mathbb {1} \rangle \quad \text {if and only if}\quad \mathcal G \text { is connected.} \end{aligned}$$

Notice that this lemma is remarkably similar to Corollary 4.5 (which deals with combinatorial graphs), and their proofs are similar as well.

Proof

We give the proof for \(N=2\); the result then extends to arbitrary N by induction. In general, both \(\mathcal {G}_1\) and \(\mathcal {G}_2\) have a certain number of disjoint connected components:

and

Since connectedness is a purely topological property, the connected components remain the same for every choice of orientation.

Now assume that \(\mathcal {G}\) is connected: we need to show that \( \ker A_1 \cap \ker A_2 \subseteq \langle \mathbb {1} \rangle \). Take \(f \in \ker A_1 \cap \ker A_2\); by Proposition 5.2, f is constant on each connected component of both \(\mathcal {G}_1\) and \(\mathcal {G}_2\). Take \(\xi = (x,\mathsf {e}_h)\) and \(\theta = (y,\mathsf {e}_l)\) in \(\mathcal {G}\): we are going to show that

$$\begin{aligned} f(\xi )=f(\theta ). \end{aligned}$$

(We can assume that \(h \ne l\), otherwise the assertion is trivial.) By connectedness of \(\mathcal {G}\), there exists a chain of adjacent (in \(\mathcal G_1\) and/or in \(\mathcal G_2\)) edges \(\Gamma _{\xi \theta }=\{\mathsf {e}_h,\mathsf {e}_{i_1},\ldots ,\mathsf {e}_{i_M},\mathsf {e}_l\}\) linking \(\xi \) and \(\theta \):

Thus, taking into account that f is constant on the connected components of both graphs, we deduce that f is constant along \(\Gamma _{\xi \theta }\) and in particular \(f(\xi )=f(\theta )\). Because \(\xi , \theta \) are arbitrary, we conclude that f is constant.

In order to prove the opposite implication, we show that if \(\mathcal {G}\) is disconnected, then there exists a non-constant function \(f \in \ker A_1 \cap \ker A_2\). Take two connected components \(\mathcal {G}^{(A)}\) and \(\mathcal {G}^{(B)}\) of \(\mathcal G\). Each of them contains a certain number of connected components of \(\mathcal {G}_1\) and of \(\mathcal {G}_2\). In particular, we set

and

Due to the fact that \(\mathcal {G}\) is disconnected, it follows that

in fact there exist no connected components, either of \(\mathcal {G}_1\) or \(\mathcal {G}_2\), shared by \(\mathcal {G}^{(A)}\) and \(\mathcal {G}^{(B)}\): this is true regardless of the chosen orientation of the edges of \(\mathcal G\). Hence, the indicator functions on each connected component of \(\mathcal G_1\) (resp. \(\mathcal G_2\)) form a basis for the respective kernel

Since every graph has the same set of edges (with possibly different connections), and every edge in \(\mathcal G^{(A)}\) belongs to one and only one component \(\mathcal G_1^{(j)}\) of \(\mathcal G_1\) (and also to one and only one component \(\mathcal G_2^{(k)}\) of \(\mathcal G_2\)), we can write

and similarly for \(\mathcal G^{(B)}\):

At this point, we only need to take any function of the form

and from (5.7) and (5.8) one gets that f can be written as a linear combination of elements of the bases of both \(\ker A_1\) and \(\ker A_2\):

and

Thus, the proof is complete. \(\square \)

We can finally state the following characterization of the asymptotic behavior of the solutions to (5.5) in terms of the connectedness of the union graph. The proof is, at this point, a direct consequence of Theorem 2.11 and Lemma 5.6.

Theorem 5.7

Let \((Z(t))_{t\ge 0}\) be a semi-Markov process and \(\mathcal C\) be a family of graphs that satisfy the Assumptions 2.1 and 5.3, respectively. Then the random propagator \((S(t))_{t\ge 0}\) for the Cauchy problem (5.5) converges in norm \(\mathbb P\)-almost surely towards the orthogonal projector \(P_0\) onto the constants if and only if the union graph \(\mathcal G\) is connected.
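The criterion in Theorem 5.7 is combinatorial and can be checked directly on the data of the ensemble. The sketch below is one possible way to do so, under an assumed encoding that is not taken from the text: edges are numbered 0, ..., `num_edges - 1`, each graph is given by its vertex classes as sets of endpoints `(edge, end)`, and the hypothetical function `union_graph_is_connected` runs a breadth-first search over edges, treating two edges as adjacent when they share a vertex in at least one graph of the ensemble (the same kind of chain used in the proof of Lemma 5.6).

```python
from collections import deque


def union_graph_is_connected(num_edges, graphs):
    """Check connectedness of the union graph (assumes num_edges >= 1).

    `graphs` is a list of graphs; each graph is a list of vertex classes,
    i.e. sets of endpoints (edge_index, end) with end in {0, 1}.
    Singleton vertex classes may be omitted.
    """
    neighbours = {j: set() for j in range(num_edges)}
    for vertex_classes in graphs:
        for vertex in vertex_classes:
            edges_at_vertex = {edge for edge, _end in vertex}
            for j in edges_at_vertex:
                # Edges meeting at a vertex of some graph are adjacent in the union.
                neighbours[j] |= edges_at_vertex

    seen = {0}
    queue = deque([0])
    while queue:
        j = queue.popleft()
        for k in neighbours[j] - seen:
            seen.add(k)
            queue.append(k)
    return len(seen) == num_edges


# The two models of Sect. 5.1: Model A glues the end of edge 0 to the start of
# edge 1, Model B glues nothing.
model_a = [{(0, 1), (1, 0)}]
model_b = []
switching_a_and_b = union_graph_is_connected(2, [model_a, model_b])
only_b = union_graph_is_connected(2, [model_b])
```

With both models in the ensemble the union graph is the interval [0, 2], which is connected; with Model B alone it consists of two disjoint edges.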

5.3 Randomly Switching Metric Graphs with Non-zero Second Eigenvalue

As in the combinatorial setting, we now apply the exponential convergence results in the framework of metric graphs. We shall work under the following additional assumption.

Assumption 5.8

There exists one metric graph in \(\mathcal C\), say \(\mathcal G_1\), such that each connected component of \(\mathcal G_j\), \(j\ne 1\), is contained in one of the connected components of \(\mathcal G_1\).

By a similar argument as in the proof of Lemma 5.6, this implies that the null space \(\ker A_1\) is contained in the null spaces of all \(A_j\), \(j\ne 1\), so that Assumption 2.12 is satisfied. The application of Theorem 2.13 then reads as follows.
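Assumption 5.8 is a refinement condition on connected components and can be tested edge by edge. The following is a minimal sketch, assuming a hypothetical encoding in which each graph is described by a list of component labels, one per edge (edges with equal labels lie in the same connected component of that graph); the function name `satisfies_assumption_5_8` is likewise an illustrative choice.

```python
def satisfies_assumption_5_8(reference_labels, other_labels_list):
    """Check that every component of each other graph lies in one component
    of the reference graph.

    `reference_labels[j]` is the component label of edge j in the reference
    graph; each entry of `other_labels_list` is the analogous list for
    another graph of the ensemble (assumed encoding).
    """
    for labels in other_labels_list:
        image = {}
        for ref, lab in zip(reference_labels, labels):
            # All edges of one component must map to a single reference component.
            if image.setdefault(lab, ref) != ref:
                return False
    return True


# Sect. 5.1: in Model A both edges lie in one component, in Model B each edge
# is its own component.
model_a_labels = [0, 0]
model_b_labels = [0, 1]
a_as_reference = satisfies_assumption_5_8(model_a_labels, [model_b_labels])
b_as_reference = satisfies_assumption_5_8(model_b_labels, [model_a_labels])
```

In the motivating example the assumption holds with Model A as the distinguished graph, but not with Model B in that role.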

Corollary 5.9