Abstract

The recent availability of affordable and lightweight tracking sensors allows researchers to collect large and complex movement data sets. To explore and analyse these data, analysts need applications that can handle the data while providing an environment in which they can focus on investigating the movement in the context of the geographic environment in which it occurred. We present an extensible, open-source framework for the collaborative analysis of geospatial–temporal movement data, with a use case in collective behaviour analysis. The framework, TEAMwISE, supports the concurrent use of several program instances, allowing users to take different perspectives on the same data in collocated or remote set-ups. The implementation can be deployed in a variety of immersive environments, for example, on a tiled display wall and on mobile VR devices.

1 Introduction

Animal behaviour as a concept refers to everything animals do, including movement, daily activities, interaction in groups, and underlying mental processes (Seeley and Sherman 2019). After a long period of animal behaviour research that was mainly grounded in direct observation and subsequent interpretation of the behaviour, researchers now have advanced technology at their disposal that allows them to collect large amounts of data in an unsupervised fashion. This data deluge comes, however, with the drawback that the data need to be processed and prepared for human analysis. Hence, a major challenge for computer science is to design, develop, and realise methods that facilitate the analysis of these growing data sets with both automated and human-guided approaches, providing an opportunity for interdisciplinary research that involves biologists as well as computer scientists.

Fig. 1 TEAMwISE on a tiled display wall, showing several synchronised view perspectives on a flock of storks: overview (lower right), focus bird (upper right), and use of thermals in the flock, with altitude analysis charts and data windows (left). The twelve storks were fitted with GPS tracking devices that provide one GPS fix per second. Trajectory colours can be chosen freely; in this case, an individual colour was used for each bird. The stork with the light blue trajectory started soaring earlier than the others and thus gives the rest of the flock an indication of how the thermal moves.

Animal behaviour research investigates a combination of spatial and temporal information for a variety of data: animal movement, often with only position and acceleration samples over time, sometimes complemented by further measured or derived details such as heading, direction, and, for birds, wing flapping; static environmental features such as mountains and rivers; slowly changing features such as land cover; dynamic features such as weather conditions and temperature; and sometimes physiological parameters such as heart rate. Directly measured data can be combined with information from a variety of sources such as online databases. This is particularly important for additional environmental information that cannot easily be measured with, and retrieved from, the small sensor tags attached to the animals; here, large repositories such as the USGS Earth Explorer (USGS 2020) and the ESA Climate Change Initiative’s open data portal (ESA CCI 2020) can provide valuable data on the environmental context. The analysis of the data, in particular the identification of behavioural impact factors, is difficult: there are many potential factors whose impact and interrelations cannot always be clearly defined, and data resolution and confidence can be low, for example, due to uncertainty introduced by the collection and transmission methods. In addition, incorporating a large amount of information into a visual analysis can lead to perceptual and cognitive overload, and interacting with several tools for different types of data and representations can be tedious. The interpolation or aggregation that is required because of limited sampling and long time periods might further reduce the information to a point where interesting trends and outliers in the original data can no longer be detected.
Thus, research on effective and expressive interactive visualisations is required, that is, on visualisations that support researchers in investigating the data easily but accurately and that show representations as close to the underlying data as possible.

The relationship between animals and their environment is complex, and animal activity often depends on the specific features of an area. Conversely, animal behaviour can also heavily affect the environment; for example, pollination by bees is important for ecosystem preservation and diversity. Current research in animal behaviour is therefore also concerned with identifying the features and stimuli that inform animals’ decisions, trigger actions, influence behaviour, and facilitate orientation and navigation. Many analyses, such as those of habitat and corridor configuration, foraging quantity and quality, and migration paths, involve environmental feature data. Thus, to analyse animal behaviour properly, environmental features have to be taken into account, and it needs to be investigated which features can be collected and how they can be provided and integrated into approaches and tools for animal behaviour researchers.

There have already been large-scale attempts to build foundations for such research on several aspects. For example, the work of Demšar et al. (2015) provides an overview of the analysis and visualisation of trajectory data developed in the European COST action ‘MOVE–Knowledge discovery from moving objects’, summarising movement studied in the context of four ecological themes: three concerned with the analysis of spatio-temporal data and one devoted to the link between movement data and environmental data. Wikelski and Kays developed the concept of Movebank, a large online repository for animal movement data (Wikelski and Kays 2020).

Substantial progress has been made in the development of analysis and visualisation methods and solutions in recent years, for example, regarding the classification and visualisation of trajectories, the classification of behaviour (Demšar et al. 2015), and geographic information systems (GIS). Many of those solutions have a particular focus on (visual) analytics, statistics or computational tasks, rather than on an unbiased explorative approach. Thus, there is a need to further integrate visual, analytical, and user-centred methods in a methodological framework (Aigner et al. 2008; MacEachren and Kraak 2001). Some promising methods in this direction have been proposed, for example, event-based analytics (Aigner et al. 2008) or analysis by enrichment (Spretke et al. 2011).

We present TEAMwISE (Tool for Exploration and Analysis of Movement data within Immersive Synchronised Environments), an extensible framework for the exploration of movement data across devices in an immersive fashion. TEAMwISE supports collaborative data analysis, either collocated in an immersive or semi-immersive environment, such as a tiled 2D/3D display wall (TDW; Sommer et al. 2019), or via shared mobile virtual environments. It combines a visualisation of trajectories in their geographic context with movement analysis and supports the synchronised interactive visualisation of multiple views. Our framework has been developed in close collaboration with researchers from the Max Planck Institute for Ornithology and the Department of Collective Behaviour at the University of Konstanz, who provided valuable insight into their research and feedback on our designs and implementation prototypes.

The remainder of this paper is organised as follows: First, we describe research questions in Sect. 2 and related work on animal movement analysis relevant to the development of our framework in Sect. 3. Section 4 describes the goals and concept of the framework, followed by an application case in Sect. 5, an overview of implementation aspects in Sect. 6, and a short discussion and outlook in Sect. 7.

2 Research questions

Animal behaviour can be investigated either in an artificial laboratory setting or in a natural environment, using direct observation or the analysis of collected data. In the latter case, biologists often tag animals in the wild with sensors that collect data on movement as well as on environmental and physiological parameters over a longer period of time. Often, GPS sensors are used that provide a good estimate of the movement over time. The data under analysis are usually a combination of directly measured data, obtained from the sensors the animals are tagged with; known data, such as age and species; derived data, such as environmental conditions like wind speed calculated from dead reckoning (Weinzierl et al. 2016); and additional data obtained from other sources, e.g. large-scale weather conditions.
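To make the notion of derived data more concrete, the following Python sketch derives wind from GPS fixes of a circling (thermal soaring) bird. It is a deliberately simplified stand-in and not the method of Weinzierl et al. (2016): it assumes the fixes are already projected into a local metric frame (east/north in metres), cover whole circles, and were flown at roughly constant airspeed, so that the air velocity averages out and the mean ground velocity approximates the wind vector. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Fix = Tuple[float, float, float]  # (time in s, east in m, north in m)


def ground_velocities(fixes: Sequence[Fix]) -> List[Tuple[float, float]]:
    """Finite-difference ground velocity between consecutive fixes."""
    velocities = []
    for (t0, x0, y0), (t1, x1, y1) in zip(fixes, fixes[1:]):
        dt = t1 - t0
        velocities.append(((x1 - x0) / dt, (y1 - y0) / dt))
    return velocities


def estimate_wind(fixes: Sequence[Fix]) -> Tuple[float, float, float]:
    """Return (wind_east, wind_north, mean_airspeed) in m/s.

    Ground velocity = air velocity + wind. Over whole circles flown at
    constant airspeed the air velocity averages to roughly zero, so the
    mean ground velocity estimates the wind vector (simplifying assumption).
    """
    velocities = ground_velocities(fixes)
    n = len(velocities)
    wind_e = sum(v[0] for v in velocities) / n
    wind_n = sum(v[1] for v in velocities) / n
    airspeed = sum(math.hypot(ve - wind_e, vn - wind_n) for ve, vn in velocities) / n
    return wind_e, wind_n, airspeed


# Synthetic thermal: 10 m/s airspeed, 20 s per circle, wind (3, -1) m/s.
fixes: List[Fix] = [(0.0, 0.0, 0.0)]
x = y = 0.0
for k in range(40):  # two full circles at one fix per second
    theta = 2 * math.pi * k / 20
    x += 10 * math.cos(theta) + 3
    y += 10 * math.sin(theta) - 1
    fixes.append((k + 1.0, x, y))
print(estimate_wind(fixes))  # approximately (3.0, -1.0, 10.0)
```

Real tracks rarely contain perfect circles, so in practice one would select circling segments first and expect the estimate to carry the additional uncertainty that derived data introduce.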

We aim to support the analysis and interpretation of such data, for which usually no or only short-term observation of the animals in the wild has been performed. To identify the most important research questions and further analysis requirements, we conducted interviews with ten animal behaviour researchers at the University of Konstanz and the Max Planck Institute of Animal Behavior and also performed a literature search. The researchers were selected from several research groups that investigate different animals and aspects of behaviour, in order to cover a wide range of requirements and research questions. They were invited via personal communication to semi-structured interviews of about 45 minutes in either their offices or ours. All but one were experienced group leaders or postdoctoral researchers, which allowed them to give us insight into a variety of past and present research projects. Interview questions targeted, among other topics, the analysis workflows in projects, tasks and general research questions, the analysis methods, visualisations, and tools used, as well as data collection and formats. From these interviews and further discussions, we extracted a list of research questions, as well as the methods and tools employed to answer them. In addition, the results informed our framework design regarding the requirements for interfaces, analysis, and visualisation.

The main research questions that the scientists try to answer include:

- What are the behavioural patterns of individual animals and animal groups?
- What are the main influencing factors on which animals base their decisions?
- How can behaviour and its influencing factors be derived from movement data, and which patterns occur in movement data?
- How do animals communicate and interact with other animals and the environment?
- Are there specific roles for animals in a group, and how are they assigned and distributed?
- What are specific or recurring events during the lifetime of an animal or a specific time span, for example, reproduction or feeding, and how can they be classified?

These research questions aim at a characterisation of the behaviour either on an individual level, e.g. the utilisation of the home range, or within a group, e.g. courtship behaviour, but also at the influencing factors and stimuli from the environment as well as the evolutionary context. Also, the plasticity of the behaviour over time, due to changes in the environmental conditions, e.g. based on human impact, is of interest. The resulting information can be exploited for a variety of applications, such as control of disease spread, early warning of natural disasters (Mai et al. 2018), or better understanding of the impact of climate change (Buchholz et al. 2019; Hamilton et al. 2019).

There are a variety of models for animal behaviour that reduce the complex nature of living organisms to several focus aspects in order to analyse behaviour effectively and efficiently; see, e.g., Bod’ová et al. (2018), Schank et al. (2011), Rands (2011), Sumpter et al. (2012), Sumpter (2006), and Reynolds (1987). They are specified at different levels of detail, generality, and precision, range from rules of thumb to computational simulation models, might describe local or global aspects, and include different types such as physical, game-theoretic, mathematical, and agent-based models. The model used in a specific analysis depends on the type of animal, the available data, and the questions to be answered, e.g. on motion or on individual or social behaviour at a certain level. A software framework that aims to support such analyses should be flexible enough to cover analysis use cases across different models instead of focusing on a single one. Our framework supports the analysis of behaviour, and thus contributes to modelling, for example, by detecting environmental and motion features or group interactions that trigger and determine decisions and behavioural patterns. The system thereby supports a cyclic workflow that feeds into model generation, e.g. based on insight gained about such decisions, which in turn can inform further visualisations or analysis methods that are integrated either through the programmatic interfaces, e.g. via R, or as an extension. Model parameters such as physiological or environmental data can be associated with animals, animal groups, or geographic locations and subsequently mapped onto visual representations and used as input for analysis methods.
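As a rough illustration of associating model parameters with animals, groups, or locations and then mapping them onto a visual variable, the sketch below uses a hypothetical `AttributeStore` keyed by entity kind and identifier, together with a linear two-colour scale. It is not the TEAMwISE data model; the class, the attribute names, and the default blue-to-green ramp are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

RGB = Tuple[int, int, int]
EntityKey = Tuple[str, str]  # (entity kind, entity id), e.g. ("animal", "stork-1")


@dataclass
class AttributeStore:
    """Hypothetical store for parameters attached to animals, groups, or locations."""
    values: Dict[EntityKey, Dict[str, float]] = field(default_factory=dict)

    def set(self, kind: str, entity: str, name: str, value: float) -> None:
        self.values.setdefault((kind, entity), {})[name] = value

    def column(self, kind: str, name: str) -> Dict[str, float]:
        """All values of one attribute for one kind of entity."""
        return {entity: attrs[name]
                for (k, entity), attrs in self.values.items()
                if k == kind and name in attrs}


def linear_colour(value: float, lo: float, hi: float,
                  c_lo: RGB = (0, 0, 255), c_hi: RGB = (0, 255, 0)) -> RGB:
    """Map value in [lo, hi] linearly onto the colour ramp c_lo -> c_hi."""
    t = 0.0 if hi == lo else (value - lo) / (hi - lo)
    t = min(1.0, max(0.0, t))  # clamp values outside the range
    r, g, b = (round(a + (z - a) * t) for a, z in zip(c_lo, c_hi))
    return (r, g, b)


store = AttributeStore()
store.set("animal", "stork-1", "heart_rate", 120.0)
store.set("animal", "stork-2", "heart_rate", 180.0)
store.set("animal", "stork-3", "heart_rate", 150.0)
store.set("location", "tree-7", "visits", 4.0)
rates = store.column("animal", "heart_rate")
lo, hi = min(rates.values()), max(rates.values())
colours = {animal: linear_colour(v, lo, hi) for animal, v in rates.items()}
print(colours)
```

Keeping the entity kind in the key lets the same mapping code colour animals, groups, or locations, which mirrors the flexibility the paragraph above asks for.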

Regarding further analysis requirements, we obtained valuable information on the researchers’ preferences. One major finding is that environmental features are important factors in the analysis and interpretation of movement data. Another is that the researchers consider support for collaborative analysis desirable, as it is currently not well supported by their tools.

Several research questions for computer scientists naturally arise when methods, approaches, and environments have to be developed that support the domain experts in answering their questions. Some of the most important ones coincide with fundamental research questions in immersive analytics research, tailored towards the specific use case of animal behaviour research:

- How can abstract/spatial data and 2D/3D representations be combined to clearly depict the environment, allowing intuitive interpretation of analysis results in this context? This question covers the analysis of 3D movement in a natural environment that strongly affects the animals’ decisions, although the impact factors are not fully explored, in combination with data that are traditionally represented with 2D charts.
- What are natural human–computer interaction patterns for biologists in immersive environments? The biologists’ operations within the analysis workflow, which are based on their own mental model of the data and of animal behaviour, as well as the differing settings for analysis, e.g. collaborative analysis with large displays, should be smoothly supported by interaction metaphors suited to the respective environment.
- What are the requirements for efficient algorithms and environment design for human-centred/human-in-the-loop immersive analytics? Given the goal of creating an integrated framework for analysis, the different algorithmic steps performed within the workflows need to be easily accessible via an interface within the immersive environment, to avoid switching between environments and tools.
- What are suitable methodologies for collaborative immersive data analytics of animal movement data? While collaborative analysis is common and important for discussing aspects and interpretations of animal behaviour, most analysis tools lack specific support for it. Immersive environments can support collaboration, e.g. through shared environments.
- How can the proposed methodologies and environments be evaluated for their efficiency in practice? As immersive analytics adds further facets to the design space of analysis solutions, such as the environment characteristics, and aims at supporting full workflows in an application domain, evaluation is even more difficult than in classical data visualisation research.

3 Related work

Movement analysis and, in particular, the analysis and visualisation of trajectory data (Andrienko and Andrienko 2011; Li et al. 2017; McArdle et al. 2014; Tominski et al. 2012; Wang and Yuan 2014) have been a research topic for a long time in a variety of contexts, including algorithmic treatment (Zheng 2015) as well as applications (Andrienko et al. 2013; Klein et al. 2019b). A particular focus has been on the development and implementation of respective methods in the field of visual analytics; see Andrienko et al. (2013), Boyandin et al. (2011), and Spretke et al. (2011) or for reviews Aigner et al. (2008), Andrienko et al. (2007), and Demšar et al. (2015).

Methods developed for the visual representation of spatio-temporal data often either abstract the data to highlight certain characteristics or use only two of the three axes of a 3D visualisation for spatial dimensions. The space-time cube (STC) (Hägerstrand 1970) uses two axes for the spatial dimensions and the third for the temporal dimension (Gonçalves et al. 2013; McArdle et al. 2014). The visual expressiveness of the STC is limited, and its applicability depends heavily on the data domain and the research task (Demšar et al. 2015; Kjellin et al. 2008; Lee et al. 2014).
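For comparison with a fully spatial 3D view, here is a minimal sketch of the space-time cube mapping: planar positions go to the two horizontal axes, which share one scale so the map is not distorted, and time goes to the vertical axis. The normalisation to a cube of edge length `size` and the function name are assumptions; the point of the sketch is that no axis is left for altitude in this layout.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, t): planar position and time


def to_space_time_cube(points: Sequence[Point], size: float = 1.0) -> List[Point]:
    """Map (x, y, t) samples into a cube of edge length `size`.

    x and y share one scale so the map keeps its aspect ratio; time gets
    the vertical axis, so there is no axis left for altitude.
    """
    xs, ys, ts = zip(*points)
    x0, y0, t0 = min(xs), min(ys), min(ts)
    spatial_span = max(max(xs) - x0, max(ys) - y0) or 1.0  # avoid division by zero
    time_span = (max(ts) - t0) or 1.0
    return [((x - x0) / spatial_span * size,
             (y - y0) / spatial_span * size,
             (t - t0) / time_span * size) for x, y, t in points]


print(to_space_time_cube([(0, 0, 0), (10, 5, 100), (20, 0, 200)]))
# [(0.0, 0.0, 0.0), (0.5, 0.25, 0.5), (1.0, 0.0, 1.0)]
```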

However, for 3D spatial data sets, a visualisation in three spatial dimensions can enable a more precise analysis of spatial relationships and increase realism, in particular when using stereoscopic 3D (S3D) technologies (Greffard et al. 2011; McIntire et al. 2012; Sommer et al. 2014).

In movement ecology, abstraction or aggregation might not always yield appropriate results, since the observed individuals are then implicitly assumed to be homogeneous. Thus, a main challenge is to provide an environment that enables the exploration of individual behaviour within a broader data set while preserving the semantic context of the data. This includes the fact that the object of investigation is a living being whose decisions and observed movement may have been influenced by its environment; we refer to this as semantic preservation. Some existing approaches try to address this explicitly (Lee et al. 2014; Slingsby and van Loon 2016; Tominski et al. 2012; Tracey et al. 2014). One aspect of semantic preservation is the animation of the data, as it reflects movement. This has been incorporated into an application by Andrienko et al. (2000), and its importance has been highlighted by Kjellin et al. (2008). To ensure semantic preservation, the environmental context needs to be integrated into the visualisation, for example, by satellite maps or terrain models. Several approaches to incorporate satellite maps have been proposed; see Andres et al. (2009), Konzack et al. (2019), Li et al. (2017), McArdle et al. (2014), and Slingsby and van Loon (2016). MacEachren and Kraak (2001) also identify the need for a closer integration of reality into visual approaches as a challenge in geovisualisation. Yang et al. (2018) investigate the use of maps and globes in VR. An early example of a 3D visualisation that maps trajectory data onto topographic maps is MAMVis, a visualisation for the analysis of marine mammal behaviour (Fedak et al. 1996). While some work on the calculation of derived data exists, in particular for wind estimation (Weinzierl et al. 2016), such derived data add further uncertainty that needs to be taken into account.

The principles of giving the user control over time and the opportunity to see the data from different perspectives have been investigated by Andrienko et al. (2000). Additionally, Li et al. (2017) present an algorithm that computes interesting viewpoints for further analysis, but their visualisation again focuses on the aggregation of the underlying data. Aigner et al. (2008) partially adapt a temporal classification by Frank (1998), which distinguishes ordered time domains from domains with multiple perspectives. They note that most of the available applications for the visualisation of time-oriented data focus on ordered time domains. Accordingly, MacEachren and Kraak (2001) state the need for applications that support dynamically linked views.

Zhang et al. (2016) investigate the possibilities of bringing the visual exploration of geo-spatial data into immersive environments by using a spherical visualisation in VR. However, they did not incorporate methods for time control or animation. The recently emerging field of immersive analytics (Chandler et al. 2015; Dwyer et al. 2018) investigates how immersive environments can best support analysts in their tasks by maximising mental involvement and hence efficiency, in particular in collaborative work scenarios. An early prototype of our framework was used in a comparison study of the potential of different immersive environments for collective behaviour analysis (Nim et al. 2017). Lee et al. (2014) propose an approach for spatio-temporal data exploration by allowing the analyst to control the projected image via hand gestures. Arsenault et al. (2004) present a system that allows the user to navigate through space and time in spatio-temporal data, including animation, but their system is designed to be used on a desktop computer as a single user.

Fig. 2 Trajectories show the three-dimensional movement over a user-adjustable time window, embedded into a scenery that represents the environment with satellite imagery and terrain information. In addition to the direct mapping of attributes onto objects in the 3D view, 2D charts can be employed, and information panes can be used for text representation. The 2D charts (middle right) are created using D3 and shown on top of the 3D view. Here, the altitude of the selected bird over time is shown in comparison with the flock average. The current position in time is shown by the yellow dot.

4 Goals and concept

Over more than two years of development, we defined and refined goals and a corresponding concept for TEAMwISE in close exchange with our collaborators. Starting from design studies that evaluated the potential of different hardware and software environments (Nim et al. 2017), and from interviews conducted with the domain experts as described in Sect. 2, we developed prototypes that were used for the analysis of several real-world data sets (Klein et al. 2019b) to further explore options for data representation and interaction. Small workshops and discussion rounds targeted important aspects of specific analysis tasks, for which we first created experimental prototypes before merging promising features into the TEAMwISE code. The feedback from our domain experts, collected regularly during development, allowed us to select and prioritise the most helpful features for a versatile framework, e.g. regarding data access and import, movement visualisation and animation, interaction operations, and analysis methods. Several goals arise naturally from the research questions stated in Sect. 2. A main goal is to provide a framework that enables analysts to observe the same movement situation from multiple perspectives, both in a single-user and in a collaborative setting. In discussions with our expert collaborators, we concluded that different perspectives on the same animal, and similar or differing perspectives on multiple animals, can provide not only an overview and details of movement, but also support the analysis of interactions among animals and between animals and their environment. The perspectives may vary in the viewpoint (e.g. a static view of a bird flock and views of an individual, see Fig. 1) or in the point in time (with a temporal gap between perspectives). Additionally, we want to present relevant aspects of the movement context (e.g. environmental information) and support collaborative analysis, which is often an important part of the analysis workflow.

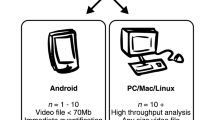

A further aspect to consider is that the framework might be used both in a laboratory or office environment for detailed analysis and in the field for a quick overview, for example, on a tablet. To allow easy access from a multitude of device types, we use a web-based solution that can be accessed via a web browser and also allows a combination of devices at the same time, e.g. a tablet and a monitor wall. See Sect. 6 for implementation details.

The main pillars of our framework are the visual representation, the synchronisation between program instances (across devices), and the underlying analytics. In addition, we provide built-in support to access the major animal movement data repository Movebank.

4.1 Visual representation

There are several types of visual data representations commonly used in animal behaviour research, including standard 2D charts, network visualisations, projections, and trajectory plots, which are often overlaid on 2D maps and 3D geographic visualisations and animated over time. TEAMwISE supports such visualisations either natively, e.g. through the map and globe visualisation, or through tools that can create them and are integrated into the GUI, e.g. R scripts that compute projections or plots. In the geographic visualisation, users can view and analyse the movement in the environmental context in which it happened, either as static trajectories or as an animation. We focus on semantic preservation by providing a visualisation that includes relevant features of the real-world environment. Time is represented as a continuous stream, and the three-dimensional visualisation space can be used for the spatial visualisation. Especially for the investigation of bird movement data, incorporating the altitude in addition to longitude and latitude may be crucial. We visualise the movement trajectory as a user-adjustable 3D polyline with additional data mappings onto visual cues such as colour; see Fig. 2. The visualised context information includes satellite imagery and terrain information, which covers the geographic context as well as vegetation, land use, and features that animals might use for navigation or movement, for example, rivers and roads. While a 3D visualisation seems a natural choice for 3D movement, it does not fully answer the related research question of how abstract/spatial data and 2D/3D representations can be combined. In particular, when a multitude of additional attributes needs to be visualised, or an overview of changes over time is important, the animated 3D visualisation can become crowded and might no longer be sufficient.
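Placing GPS fixes on a virtual globe typically requires converting longitude, latitude, and altitude into Earth-centred Cartesian coordinates. The sketch below applies the standard WGS84 geodetic-to-ECEF conversion to produce 3D polyline vertices. Whether TEAMwISE or its rendering library performs exactly this step is not stated here; the `altitude_scale` exaggeration parameter and the function names are hypothetical, and altitudes are assumed to be heights above the WGS84 ellipsoid.

```python
import math
from typing import List, Sequence, Tuple

WGS84_A = 6378137.0                 # semi-major axis in metres
WGS84_F = 1 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lon_deg: float, lat_deg: float, alt_m: float) -> Tuple[float, float, float]:
    """Convert WGS84 longitude/latitude (degrees) and ellipsoidal height (m) to ECEF metres."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    sin_lat, cos_lat = math.sin(lat), math.cos(lat)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * sin_lat ** 2)  # prime vertical radius of curvature
    x = (n + alt_m) * cos_lat * math.cos(lon)
    y = (n + alt_m) * cos_lat * math.sin(lon)
    z = (n * (1 - WGS84_E2) + alt_m) * sin_lat
    return x, y, z


def trajectory_polyline(fixes: Sequence[Tuple[float, float, float]],
                        altitude_scale: float = 1.0) -> List[Tuple[float, float, float]]:
    """Turn (lon, lat, alt) GPS fixes into 3D polyline vertices.

    altitude_scale is a hypothetical vertical exaggeration factor.
    """
    return [geodetic_to_ecef(lon, lat, alt * altitude_scale) for lon, lat, alt in fixes]


vertices = trajectory_polyline([(9.17, 47.66, 400.0), (9.18, 47.66, 450.0)])
```

A vertical exaggeration of this kind can make altitude differences within a flock visible at overview scale, at the cost of distorting true proportions.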
Because the 3D view alone may not always suffice, we also support 2D charts based on D3 (D3js 2019) or R to provide sufficient flexibility; see Fig. 2. To cater for the dynamic nature of networks, e.g. those based on pairwise relations in a flock, network visualisations can be integrated directly into the 3D view in addition to 2D diagrams, as shown in Fig. 7. In addition, the 3D globe view can be switched to a 2D map view, e.g. when altitude and terrain information is not required, as in the analysis of land animal movement in Figs. 4 and 5.
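One simple way to obtain such a dynamic network from pairwise relations is to link each animal to its nearest neighbour at every time step and to weight each edge by how often it occurs. The sketch below assumes synchronised positions per time step in a local metric frame; the function names are hypothetical, and nearest-neighbour links are only one of many possible relation definitions.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Set, Tuple

Position = Tuple[float, float, float]
Edge = Tuple[str, str]  # (animal, its nearest neighbour)


def nearest_neighbour_edges(positions: Dict[str, Position]) -> Set[Edge]:
    """Directed edges a -> b where b is a's nearest neighbour at one time step."""
    edges = set()
    for a, pa in positions.items():
        others = [b for b in positions if b != a]
        if others:
            nearest = min(others, key=lambda b: math.dist(pa, positions[b]))
            edges.add((a, nearest))
    return edges


def nearest_neighbour_network(frames: Iterable[Dict[str, Position]]) -> Counter:
    """Edge weights = number of time steps in which the relation holds."""
    weights: Counter = Counter()
    for positions in frames:
        weights.update(nearest_neighbour_edges(positions))
    return weights


frames = [
    {"A": (0, 0, 0), "B": (1, 0, 0), "C": (5, 0, 0)},
    {"A": (0, 0, 0), "B": (4, 0, 0), "C": (5, 0, 0)},
]
print(nearest_neighbour_network(frames))
```

Computing the edges per frame, rather than only the aggregated weights, keeps the network time-resolved, so it could be redrawn in the 3D view as the animation advances.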

Within the map and globe visualisation, the user can move the virtual camera (viewpoint) freely in the environment, attach its position to an individual, or select a fixed perspective on an individual or a group. For ease of use, the system also provides several predefined perspectives via menu entries; see Fig. 3 (left). Time is represented as a timeline at the bottom of a view, with a movable slider handle showing the current point in time; see Fig. 2. The analyst can animate the data at a customisable speed or explore different time points by freely moving the handle to any point in time. The analyst can also set the time span for which the trajectory of an individual is shown in the visualisation. Results from the automated analysis can be mapped to visual variables such as colour, size, and shape; see Fig. 4. If data from drone or plane flights are available, the visualisation can be further enriched with point clouds, for example, to incorporate vegetation structure. Furthermore, depending on the animal under investigation, the standard 3D animal model can simply be replaced with a fitting one, including animated models.
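The timeline behaviour described above can be sketched as three small functions: linear interpolation of an animal's position at the slider time, extraction of the visible trail for a user-chosen time span, and a clock step scaled by the playback speed. Linear interpolation between fixes, the `(t, x, y, z)` sample layout, and all function names are assumptions, not the TEAMwISE implementation.

```python
from bisect import bisect_right
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (t, x, y, z), sorted by t
Vec3 = Tuple[float, float, float]


def position_at(samples: Sequence[Sample], t: float) -> Optional[Vec3]:
    """Linearly interpolated position at time t, or None outside the track."""
    if not samples or t < samples[0][0] or t > samples[-1][0]:
        return None
    i = bisect_right([s[0] for s in samples], t)
    if i == len(samples):  # t equals the last timestamp
        return tuple(samples[-1][1:])
    (t0, *p0), (t1, *p1) = samples[i - 1], samples[i]
    a = (t - t0) / (t1 - t0)
    return tuple(u + (v - u) * a for u, v in zip(p0, p1))


def visible_trail(samples: Sequence[Sample], t: float, window: float) -> List[Vec3]:
    """Fixes within [t - window, t], ending with the interpolated current position."""
    trail = [tuple(s[1:]) for s in samples if t - window <= s[0] <= t]
    head = position_at(samples, t)
    if head is not None and (not trail or trail[-1] != head):
        trail.append(head)
    return trail


def advance(t: float, wall_dt: float, speed: float, t_end: float) -> float:
    """Advance the data clock by wall-clock seconds times the playback speed."""
    return min(t + wall_dt * speed, t_end)


track = [(0.0, 0.0, 0.0, 0.0), (10.0, 10.0, 0.0, 0.0), (20.0, 10.0, 10.0, 5.0)]
t = advance(0.0, wall_dt=0.5, speed=30.0, t_end=20.0)  # 0.5 s of playback at 30x
print(t, position_at(track, 5.0), visible_trail(track, t, window=10.0))
```

Ending the trail with the interpolated head keeps the drawn polyline attached to the animal model even when the slider sits between two GPS fixes.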

4.2 Web-based synchronisation

Our framework facilitates collaboration by supporting synchronised instances and shared immersive environments, thus addressing one of the main research questions in Sect. 2. Using the Node.js runtime environment (Nodejs 2019), TEAMwISE provides a web-server-based solution to which the user can connect either from the local host or from a remote client machine on a specified port. Multiple server instances can be started concurrently on different ports.

After starting a TEAMwISE server on the command line, one can start several TEAMwISE instances on this server, either on the same device or on multiple devices that connect to the server host via HTTP. These instances can be either independent, e.g. to have a ‘private’ view in a collaborative setting, or synchronised, e.g. to perform an analysis on multiple views or to present it to collaborators on several devices. Synchronisation of instances is achieved via a WebSocket server, which shares feature settings across these instances. Two types of instances can be chosen for synchronisation: controller and spectator. Any change to one of the synchronised features in a controller instance is directly propagated to every registered instance connected to the same server, whereas spectators cannot change features. Synchronisation covers several dimensions that can be configured by the analyst. As a base case, one can synchronise the data set under investigation and the current point in time of the animation, as well as the corresponding user operations of pausing or changing the animation speed. Additionally, one may decide to synchronise the camera position (in order to show interesting perspectives to others) or the marking or annotation of individuals.
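The controller/spectator rules can be illustrated with an in-memory stand-in for the WebSocket relay. In this sketch (hypothetical names, no networking), each instance declares which features it synchronises; a change from a controller is propagated only if the controller synchronises that feature, and only to instances that follow it, while changes from spectators are rejected.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set


@dataclass
class Instance:
    name: str
    role: str         # "controller" or "spectator"
    synced: Set[str]  # features this instance synchronises, e.g. {"time"}
    state: Dict[str, Any] = field(default_factory=dict)


class SyncHub:
    """Hypothetical in-memory stand-in for the WebSocket relay (no networking)."""

    def __init__(self) -> None:
        self.instances: List[Instance] = []

    def register(self, instance: Instance) -> None:
        self.instances.append(instance)

    def publish(self, sender: Instance, feature: str, value: Any) -> int:
        """Apply a change made in `sender`; return how many other instances were updated."""
        if sender.role != "controller":
            raise PermissionError(f"{sender.name} is a spectator and cannot change features")
        sender.state[feature] = value
        if feature not in sender.synced:  # unsynchronised feature: local ('private') change only
            return 0
        updated = 0
        for other in self.instances:
            if other is not sender and feature in other.synced:
                other.state[feature] = value
                updated += 1
        return updated


hub = SyncHub()
wall = Instance("wall", "controller", {"dataset", "time", "camera"})
tablet = Instance("tablet", "controller", {"dataset", "time"})
remote = Instance("remote", "spectator", {"dataset", "time", "camera"})
for inst in (wall, tablet, remote):
    hub.register(inst)
print(hub.publish(wall, "time", 120.0))              # 2: tablet and remote follow the time
print(hub.publish(wall, "camera", "focus:stork-3"))  # 1: only remote follows the camera
print(hub.publish(tablet, "annotations", ["stork-5"]))  # 0: the tablet keeps annotations private
```

Treating each feature as an independently synchronisable dimension is what allows, for instance, a shared clock with private cameras.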

Distributed collaboration is possible, for example, by starting spectator views for remote controller views, allowing participants to discuss their interpretations of the data. For a single analyst, multi-screen and multi-perspective arrangements with different viewpoints and time points can be customised depending on the user’s task, preferences, and data set specifics. This enables the same analyst to view the animation from several perspectives, that is, through different virtual cameras. Collocated collaborative analysis is easily possible on large display walls and immersive multi-screen environments; see Fig. 1. Multiple screens can, for example, be designated for a common controller view, with additional personal controller or spectator views on further screens, such that multiple researchers can observe different animals or different perspectives in a time-synchronised fashion. Thanks to the web-based architecture, one can join a session from several environments, such as a TDW, a regular desktop set-up, a smartphone or tablet (natural touch interaction is supported), or mobile VR via a smartphone in a cardboard viewer.

Interface and visualisation for the Recurse (Bracis et al. 2018) clustering algorithm. Each cluster is drawn with its centre point and a circular visitation area around it, defined by the radius used as the algorithm’s parameter. Clusters are coloured according to their revisitation count, from blue (low) to green (high). The visitation statistics for every cluster are given in an info box on the right, including the revisitation count and the range of visit durations. Red dots mark predefined locations, here trees.
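The visitation statistics described in the caption above can be approximated with a simple entry counter: a visit starts whenever the track moves from outside to inside a circle of the given radius around a location and ends at the first fix outside it. This is a simplified sketch inspired by the recurse approach of Bracis et al. (2018), not its implementation, which may handle crossing times and units differently; the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Fix = Tuple[float, float, float]  # (t, x, y) in a local metric frame, sorted by t


def visits(track: Sequence[Fix], centre: Tuple[float, float],
           radius: float) -> List[Tuple[float, float]]:
    """(entry time, exit time) of every visit to the circle around `centre`.

    A visit starts at the first fix inside the circle and ends at the first
    fix outside it (or at the last fix if the track ends inside).
    """
    result, entered = [], None
    for t, x, y in track:
        inside = math.dist((x, y), centre) <= radius
        if inside and entered is None:
            entered = t
        elif not inside and entered is not None:
            result.append((entered, t))
            entered = None
    if entered is not None:
        result.append((entered, track[-1][0]))
    return result


def visit_stats(track: Sequence[Fix], centre: Tuple[float, float],
                radius: float) -> Dict[str, object]:
    """Visit count and range of visit durations, as shown in an info box."""
    durations = [exit_t - entry_t for entry_t, exit_t in visits(track, centre, radius)]
    return {"visits": len(durations),
            "duration_range": (min(durations), max(durations)) if durations else None}


track = [(0, 0, 0), (1, 5, 0), (2, 0, 0.5), (3, 0, 0), (4, 9, 9), (5, 0, 1)]
print(visit_stats(track, centre=(0, 0), radius=1.0))
# {'visits': 3, 'duration_range': (0, 2)}
```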

4.3 Analysis

Correct interpretation of animal behaviour may require the application of several analysis methods, ranging from simple calculations to complex approaches. The TEAMwISE framework provides several basic analysis methods and corresponding visualisations, for example, on distances between entities, altitude changes, and profiles. For clustering, one of the most prevalent approaches in movement analysis, we implemented an interface that makes it easy to add methods, including their UI elements and result visualisation; see Figs. 4 and 5. In addition, we implemented several example analyses, such as leader–follower classification (Nagy et al. 2010), wind speed estimation (Weinzierl et al. 2016), and nearest neighbour relations, to test and showcase the potential of the framework. These existing methods can be complemented by extensions: the modular structure of our framework allows different algorithms to be integrated via an extension mechanism, so that the user can parameterise and run them from the GUI. The resulting information is stored internally for further use during the session.
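An extension mechanism of this kind can be sketched as a registry that maps a method name to a function plus default parameters, which a GUI could expose for the user to adjust. The sketch is hypothetical (REGISTRY, register, run_analysis, and SESSION_RESULTS are not TEAMwISE's extension interface) and registers a simple nearest neighbour relation as an example, assuming the positions of all animals at a single time step, in metres.

```python
import math
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple

# animal id -> (x, y, z) in metres at one time step (an assumed representation)
Positions = Dict[str, Tuple[float, float, float]]


@dataclass
class AnalysisMethod:
    name: str
    defaults: Dict[str, float]  # parameters a GUI could expose as input fields
    run: Callable[..., Dict[str, Any]]


REGISTRY: Dict[str, AnalysisMethod] = {}
SESSION_RESULTS: Dict[str, Dict[str, Any]] = {}  # results kept for later use in the session


def register(name: str, **defaults: float) -> Callable:
    """Decorator adding an analysis function to the hypothetical registry."""
    def wrap(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
        REGISTRY[name] = AnalysisMethod(name, dict(defaults), fn)
        return fn
    return wrap


@register("nearest-neighbour", max_distance=math.inf)
def nearest_neighbour(positions: Positions, max_distance: float) -> Dict[str, Any]:
    """For each animal, the closest other animal within max_distance (None if there is none)."""
    result: Dict[str, Any] = {}
    for a, pa in positions.items():
        best, best_d = None, math.inf
        for b, pb in positions.items():
            if b == a:
                continue
            d = math.dist(pa, pb)
            if d <= max_distance and d < best_d:
                best, best_d = b, d
        result[a] = best
    return result


def run_analysis(name: str, positions: Positions, **overrides: float) -> Dict[str, Any]:
    """Merge user-chosen parameters over the defaults, run the method, store the result."""
    method = REGISTRY[name]
    params = {**method.defaults, **overrides}
    result = method.run(positions, **params)
    SESSION_RESULTS[name] = result
    return result
```

Separating the parameter defaults from the function lets a generic GUI build input fields for any registered method without method-specific UI code.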

As a large number of methods are available in R packages, with which the biologists are also partially familiar, we decided to provide an interface to R by implementing a corresponding extension. The interface allows analyses to be run on the data in TEAMwISE using R scripts and packages, with the additional benefit that calculations on an R server may be significantly faster than browser-based calculations. Scripts can specify their input and output in a comment header in YAML format, and the user can select subsets of animals and specify the time window for the analysis via the GUI; see Fig. 6. The results of the script execution are available for further use in TEAMwISE, for example, for subsequent analysis or for mapping onto visual variables. Even graphics such as plots can be created and then displayed within the TEAMwISE GUI; see Fig. 7. The extension is implemented using the OpenCPU framework (Ooms 2013), which provides a JavaScript client library and an HTTP API to integrate R functionality.
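The exact header syntax is not given here, so the following sketch assumes a hypothetical convention: the YAML block sits between two '# ---' comment lines at the top of the R script and lists input and output names. A real implementation would pass the extracted text to a full YAML parser; parse_simple_yaml only handles the key/list subset used in this example so that it stays dependency-free, and compute_speed in the sample script is a made-up R function.

```python
from typing import Dict, List


def extract_header(script: str) -> str:
    """Return the YAML text between the first two '# ---' lines, without the '#' prefixes."""
    lines: List[str] = []
    inside = False
    for line in script.splitlines():
        stripped = line.strip()
        if stripped == "# ---":
            if inside:
                return "\n".join(lines)
            inside = True
        elif inside:
            if not stripped.startswith("#"):
                raise ValueError("header ends without a closing '# ---' line")
            text = stripped[1:]
            lines.append(text[1:] if text.startswith(" ") else text)
    raise ValueError("no complete '# ---' header found")


def parse_simple_yaml(text: str) -> Dict[str, List[str]]:
    """Parse only 'key:' lines followed by '- item' lines; a stand-in for a full YAML parser."""
    spec: Dict[str, List[str]] = {}
    key = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("- ") and key is not None:
            spec[key].append(line[2:].strip())
        elif line.endswith(":"):
            key = line[:-1].strip()
            spec[key] = []
        else:
            raise ValueError(f"unsupported YAML construct: {raw!r}")
    return spec


# Hypothetical R script with an input/output header.
EXAMPLE_SCRIPT = """\
# ---
# input:
#   - animals
#   - time_window
# output:
#   - speed
# ---
speed <- compute_speed(animals, time_window)
"""

if __name__ == "__main__":
    print(parse_simple_yaml(extract_header(EXAMPLE_SCRIPT)))
    # {'input': ['animals', 'time_window'], 'output': ['speed']}
```

Because the header is an R comment, the script remains valid R and can still be run outside TEAMwISE.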

4.4 Data import

To allow researchers to easily access their existing data, we provide two ways to import data for analysis. Users can load data either from local files, for example, new or confidential data from their own projects, or from the online repository Movebank (Max Planck Society 2019), the major data repository for animal movement. For the latter, we implemented access to Movebank via our R interface (see Sect. 4.3), which employs the move package (Kranstauber et al. 2013) to access the repository. Users can thus enter their Movebank credentials in TEAMwISE to retrieve and directly import all data sets they have access rights to. For local files, TEAMwISE can parse two of the most common formats, Keyhole Markup Language (.kml) and Comma-Separated Values (.csv).
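For the CSV route, a reader might look like the sketch below. It assumes the column names of typical Movebank CSV exports (timestamp, location-long, location-lat, individual-local-identifier) and UTC timestamps of the form 2015-04-02 10:00:00.000; other files would need a different column mapping, and KML parsing is not shown. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, List, TextIO, Tuple

# Column names as in typical Movebank CSV exports; other files may need a different mapping.
ID_COL = "individual-local-identifier"
TIME_COL = "timestamp"
LON_COL, LAT_COL = "location-long", "location-lat"
TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"  # e.g. 2015-04-02 10:00:00.000, assumed UTC

Fix = Tuple[datetime, float, float]  # (time, longitude, latitude)


def read_tracks(f: TextIO) -> Dict[str, List[Fix]]:
    """Group GPS fixes by individual and sort each track by time."""
    tracks: Dict[str, List[Fix]] = defaultdict(list)
    for row in csv.DictReader(f):
        if not row[LON_COL] or not row[LAT_COL]:
            continue  # rows without a position (e.g. failed fixes) are skipped
        t = datetime.strptime(row[TIME_COL], TIME_FORMAT).replace(tzinfo=timezone.utc)
        tracks[row[ID_COL]].append((t, float(row[LON_COL]), float(row[LAT_COL])))
    for fixes in tracks.values():
        fixes.sort(key=lambda fix: fix[0])  # the animation needs temporal order
    return dict(tracks)


if __name__ == "__main__":
    sample = io.StringIO(  # made-up rows for illustration
        "timestamp,location-long,location-lat,individual-local-identifier\n"
        "2015-04-02 10:00:01.000,8.99,47.76,bird-1\n"
        "2015-04-02 10:00:00.000,8.98,47.75,bird-1\n"
        "2015-04-02 10:00:00.000,,,bird-2\n"
    )
    for animal, fixes in read_tracks(sample).items():
        print(animal, len(fixes), fixes[0][1:])
```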

5 Application case

To exemplify the use of the system, we present part of a recent study that we conducted together with our biologist collaborators, in which we investigated how storks find their way back after winter migration. The underlying data reflect the 2015 homing flight of a one-year-old stork called Sierit back to Radolfzell, Germany (the end of the trajectory in Fig. 9, close to Lake Constance). On her way back home, Sierit makes several wrong decisions, which analysts can easily spot in the visualisation. Their aim is to investigate the decision points to understand why these mistakes may have happened and how the overall navigation process works.

Last part of Sierit’s route (blue line), seen from the west; the arrow shows her position at the same point in time as in Fig. 8. Lake Constance can be seen in the upper left of the image, close to the end of the blue trajectory line

Here, we show a situation that arose near the end of the homing flight. Sierit was close to Lake Constance, but instead of heading directly towards it, she kept flying straight to the south. Moving to the point in time when this decision was taken, one can see that from her position the lake was not visible but hidden by a mountain range to the left; see Fig. 8. Lake Zürich, however, was visible to the south, leading to a misinterpretation and misnavigation; see Fig. 9. In that year, Sierit finally reached Lake Constance after significant detours. In the following years, Sierit learned from past experience and improved her navigation until she took a direct route. TEAMwISE supports this analysis by providing an overview and comparison of the routes; see Fig. 10.

Comparison of Sierit’s return routes from her first three winter migrations, seen from the south. The first year’s route (red line) shows many detours, the second year’s route (blue line) a clear improvement, and in the third year (green line) Sierit flies straight back to Lake Constance
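The improvement visible in this figure could also be summarised numerically. One common measure, not described as part of TEAMwISE, is the straightness index: the great-circle distance between the first and last fix divided by the distance actually travelled. A minimal sketch, assuming each route is a list of (longitude, latitude) fixes in degrees and a spherical Earth:

```python
import math
from typing import Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical Earth is assumed


def haversine(p: Tuple[float, float], q: Tuple[float, float]) -> float:
    """Great-circle distance in metres between two (lon, lat) points given in degrees."""
    lon1, lat1, lon2, lat2 = map(math.radians, (*p, *q))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def straightness(route: Sequence[Tuple[float, float]]) -> float:
    """Beeline distance / travelled distance: 1.0 for a straight route, smaller with detours."""
    if len(route) < 2:
        raise ValueError("need at least two fixes")
    travelled = sum(haversine(a, b) for a, b in zip(route, route[1:]))
    if travelled == 0:
        raise ValueError("route has zero length")
    return haversine(route[0], route[-1]) / travelled
```

Applied to the three yearly return routes, one would expect the index to move towards 1 as the detours disappear.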

TEAMwISE facilitates the analysis of such situations and helps to identify navigation patterns and landmarks, thereby greatly supporting the biologists’ workflow. Figure 1 shows multiple perspectives on a tiled S3D display wall. These perspectives can be distributed freely across the monitors and switched between 2D and S3D mode. Using S3D, the user can better perceive a stork’s movement direction within the flock, e.g. upwards towards the sky. The landscape below the storks is visible from several perspectives, supporting semantic preservation. Different perspectives also support the analysis of the field of view of individual storks, illustrating a potential orientation towards leading or neighbouring storks in the flock.

6 Implementation

We implemented TEAMwISE based on the Cesium framework (CesiumJS 2020), employ R (R Core Team 2013) and Python (Python Core Team 2018) scripts mainly for automated analysis, and use D3 (D3js 2019) for abstract data visualisation. Cesium is a JavaScript library for geographical globe and map visualisations that allows us to create map-based visualisations including animated trajectory representations, and also to freely design and extend visualisations, data representations, and interactions. As Cesium provides web-based interactive visualisations and features a VR mode, it allows us to provide a single implementation that can be used in diverse set-ups and environments, such as in the field in a normal browser set-up, in mobile VR, and in immersive environments (Dwyer et al. 2018; Sommer et al. 2019). Furthermore, using Node.js (Nodejs 2019), TEAMwISE provides a web server-based solution that allows the user to connect either from the local host or from a remote client machine. We use one web server to host the content and a separate WebSocket server to manage the synchronisation features, both running in the Node.js runtime. TEAMwISE comprises an implementation core that provides basic functionality, e.g. data import and movement data representation, as well as a mechanism for loading extensions. Further functionality is provided by existing extensions, such as the R interface extension, which is implemented using the OpenCPU framework (Ooms 2013). Developers can create an extension to add features by using the extension interface, which is described in detail in the documentation. For further pre-processing and analysis, we implemented methods in Python and JavaScript.
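TEAMwISE uses OpenCPU's JavaScript client in the browser; to keep all examples in one language, the sketch below calls the same OpenCPU HTTP API from Python. It builds a POST request to /library/{package}/R/{function}/json with JSON-encoded arguments, which OpenCPU maps onto the R function's arguments, and asks for the result as JSON. The server address and the helper names are assumptions.

```python
import json
import urllib.request
from typing import Any, Dict


def opencpu_request(base_url: str, package: str, function: str, args: Dict[str, Any]) -> urllib.request.Request:
    """POST request that calls package::function on an OpenCPU server with JSON arguments.

    The '/json' suffix asks OpenCPU to return the function's value as JSON directly.
    """
    url = f"{base_url.rstrip('/')}/library/{package}/R/{function}/json"
    return urllib.request.Request(
        url,
        data=json.dumps(args).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_r(base_url: str, package: str, function: str, args: Dict[str, Any], timeout: float = 30.0) -> Any:
    """Send the request and decode the JSON result (requires a reachable OpenCPU server)."""
    req = opencpu_request(base_url, package, function, args)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For instance, with an OpenCPU server assumed at http://localhost:5656/ocpu, call_r(base, "stats", "median", {"x": [3, 1, 2]}) would evaluate median() on the server and return the decoded JSON result.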

7 Conclusion and outlook

This paper presents TEAMwISE, a web-based framework for animal behaviour analysis in immersive environments, and demonstrates its use in an application case. TEAMwISE allows the user to map movement data onto a three-dimensional visualisation of the environmental context and to animate the animals’ movement. The user can follow the movement or freely navigate in the 3D environment to investigate the movement from different perspectives. TEAMwISE provides several analysis tools that help to analyse and interpret the data, and users can easily integrate further analysis methods via the extension interface. In addition, it supports the synchronised interactive visualisation of multiple views. Due to its web-based architecture, TEAMwISE can be used on a variety of devices, such as tiled display walls, tablets, or mobile phones, also in combination. Our ongoing developments include integrated support for preprocessing such as data segmentation, more natural interaction with the TDW environment, and the integration of further data sources, for example, on land use and vegetation, to provide additional information relevant for the analysis. We will also evaluate synchronisation concepts and representations for collaborative work. Future research includes the treatment and representation of uncertainty in the data, e.g. to indicate potential deviation from the trajectory between GPS fixes or sensor inaccuracies. The data sets used for the use-case evaluations are available from Movebank (Max Planck Society 2019), and up-to-date information on the TEAMwISE framework can be found on the project homepage (TEAMwISE Team 2020).

References

Aigner W, Miksch S, Müller W, Schumann H, Tominski C (2008) Visual methods for analyzing time-oriented data. IEEE Trans Vis Comput Graph 14(1):47–60. https://doi.org/10.1109/TVCG.2007.70415

Andres JM, Davis M, Fujiwara K, Anderson JC, Fang T, Nedbal M (2009) A geospatially enabled, PC-based, software to fuse and interactively visualize large 4D/5D data sets. In: OCEANS 2009, pp 1–9. https://doi.org/10.23919/OCEANS.2009.5422372

Andrienko G, Andrienko N, Wrobel S (2007) Visual analytics tools for analysis of movement data. SIGKDD Explor Newsl 9(2):38–46. https://doi.org/10.1145/1345448.1345455

Andrienko G, Andrienko N, Bak P, Keim D, Wrobel S (2013) Visual analytics of movement. Springer, Berlin

Andrienko N, Andrienko G (2011) Spatial generalization and aggregation of massive movement data. IEEE Trans Vis Comput Graph 17(2):205–219. https://doi.org/10.1109/TVCG.2010.44

Andrienko N, Andrienko G, Gatalsky P (2000) Supporting visual exploration of object movement. In: Proc. working conference on advanced visual interfaces, ACM, AVI ’00, pp 217–220. https://doi.org/10.1145/345513.345319

Arsenault R, Ware C, Plumlee M, Martin S, Whitcomb LL, Wiley D, Gross T, Bilgili A (2004) A system for visualizing time varying oceanographic 3D data. In: Oceans ’04 MTS/IEEE Techno-Ocean ’04 (IEEE Cat. No.04CH37600), vol 2, pp 743–747

Bod’ová K, Mitchell GJ, Harpaz R, Schneidman E, Tkačik G (2018) Probabilistic models of individual and collective animal behavior. PLOS ONE 13(3):1–30. https://doi.org/10.1371/journal.pone.0193049

Boyandin I, Bertini E, Bak P, Lalanne D (2011) Flowstrates: an approach for visual exploration of temporal origin-destination data. Comput Graph Forum 30(3):971–980. https://doi.org/10.1111/j.1467-8659.2011.01946.x

Bracis C, Bildstein KL, Mueller T (2018) Revisitation analysis uncovers spatio-temporal patterns in animal movement data. Ecography. https://doi.org/10.1111/ecog.03618

Buchholz R, Banusiewicz JD, Burgess S, Crocker-Buta S, Eveland L, Fuller L (2019) Behavioural research priorities for the study of animal response to climate change. Anim Behav 150:127–137. https://doi.org/10.1016/j.anbehav.2019.02.005

CesiumJS (2020) CesiumJS API reference. http://cesiumjs.org/refdoc.html. Last accessed 11 Dec 2019

Chandler T, Cordeil M, Czauderna T, Dwyer T, Glowacki J, Goncu C, Klapperstueck M, Klein K, Marriott K, Schreiber F, Wilson E (2015) Immersive analytics. IEEE Big Data Vis Anal 2015:73–80

D3js (2019) D3.js API reference. https://github.com/d3/d3/blob/master/API.md. Last accessed 30 Jun 2019

Demšar U, Buchin K, Cagnacci F, Safi K, Speckmann B, Van de Weghe N, Weiskopf D, Weibel R (2015) Analysis and visualisation of movement: an interdisciplinary review. Mov Ecol 3(1):5

Dwyer T, Marriott K, Isenberg T, Klein K, Riche N, Schreiber F, Stuerzlinger W, Thomas BH (2018) Immersive analytics: an introduction. In: Marriott K, Schreiber F, Dwyer T, Klein K, Riche NH, Itoh T, Stuerzlinger W, Thomas BH (eds) Immersive analytics. Springer, Berlin, pp 1–23. https://doi.org/10.1007/978-3-030-01388-2_1

ESA CCI (2020) ESA climate change initiative open data portal. http://cci.esa.int/data. Last accessed 12 Jul 2020

Fedak MA, Lovell P, McConnell BJ (1996) Mamvis: a marine mammal behaviour visualization system. The J Vis Comput Anim 7(3):141–147. https://doi.org/10.1002/(SICI)1099-1778(199607)7:3<141::AID-VIS147>3.0.CO;2-N

Frank AU (1998) Different types of “times” in GIS. In: Spatial and temporal reasoning in geographic information systems, pp 40–62

Gonçalves T, Afonso AP, Martins B, Gonçalves D (2013) ST-TrajVis: Interacting with trajectory data. In: Proc. 27th international BCS human computer interaction conference, British Computer Society, BCS-HCI ’13, pp 48:1–48:6

Greffard N, Picarougne F, Kuntz P (2011) Visual community detection: an evaluation of 2D, 3D perspective and 3D stereoscopic displays. In: International symposium on graph drawing, Springer, pp 215–225

Hägerstrand T (1970) What about people in regional science? Pap Reg Sci Assoc 24(1):6–21. https://doi.org/10.1007/BF01936872

Hamilton CD, Vacquié-Garcia J, Kovacs KM, Ims RA, Kohler J, Lydersen C (2019) Contrasting changes in space use induced by climate change in two arctic marine mammal species. Biol Lett 15(3):20180834. https://doi.org/10.1098/rsbl.2018.0834

Kjellin A, Pettersson LW, Seipel S, Lind M (2008) Evaluating 2D and 3D visualizations of spatiotemporal information. ACM Trans Appl Percept 7(3):19:1-19:23. https://doi.org/10.1145/1773965.1773970

Klein K, Aichem M, Sommer B, Erk S, Zhang Y, Schreiber F (2019a) TEAMwISE: synchronised immersive environments for exploration and analysis of movement data. In: Proc. 12th international symposium on visual information communication and interaction, ACM, VINCI’2019. https://doi.org/10.1145/3356422.3356450

Klein K, Sommer B, Nim HT, Flack A, Safi K, Nagy M, Feyer SP, Zhang Y, Rehberg K, Gluschkow A, Quetting M, Fiedler W, Wikelski M, Schreiber F (2019b) Fly with the flock: immersive solutions for animal movement visualization and analytics. J R Soc Interface 16(153):e794. https://doi.org/10.1098/rsif.2018.0794

Konzack M, Gijsbers P, Timmers F, van Loon E, Westenberg MA, Buchin K (2019) Visual exploration of migration patterns in gull data. Inf Vis 18(1):138–152. https://doi.org/10.1177/1473871617751245

Kranstauber B, Smolla M, Scharf AK (2013) Move: visualizing and analyzing animal track data. R Package Vers 1(360):r365

Lee JG, Lee KC, Shin DH (2014) A new approach to exploring spatiotemporal space in the context of social network services. In: Proc. 6th international conference on social computing and social media - volume 8531, Springer, New York, Inc., pp 221–228. https://doi.org/10.1007/978-3-319-07632-4_21

Li J, Xiao Z, Kong J (2017) A viewpoint based approach to the visual exploration of trajectory. J Vis Lang Comput 41(C):41–53. https://doi.org/10.1016/j.jvlc.2017.04.001

MacEachren AM, Kraak MJ (2001) Research challenges in geovisualization. Cartogr Geogr Inf Sci 28(1):3–12

Mai PM, Wikelski M, Scocco P, Catorci A, Keim D, Pohlmeier W, Fechteler G (2018) Monitoring pre-seismic activity changes in a domestic animal collective in Central Italy. In: EGU general assembly conference abstracts, p 19348

Max Planck Society (2019) Movebank data repository. http://www.movebank.org. Last accessed 12 Sep 2019

McArdle G, Demšar U, van der Spek S, McLoone S (2014) Classifying pedestrian movement behaviour from GPS trajectories using visualization and clustering. Ann GIS 20(2):85–98. https://doi.org/10.1080/19475683.2014.904560

McIntire JP, Havig PR, Geiselman EE (2012) What is 3D good for? A review of human performance on stereoscopic 3D displays. In: Head- and helmet-mounted displays XVII, international society for optics and photonics (SPIE), vol 8383, pp 83830X–1–83830X–13. https://doi.org/10.1117/12.920017

Nagy M, Ákos Z, Biro D, Vicsek T (2010) Hierarchical group dynamics in pigeon flocks. Nature 464:890–893. https://doi.org/10.1038/nature08891

Nim HT, Sommer B, Klein K, Flack A, Safi K, Nagy M, Fiedler W, Wikelski M, Schreiber F (2017) Design considerations for immersive analytics of bird movements obtained by miniaturised GPS sensors. In: Eurographics workshop on visual computing for biology and medicine, The Eurographics Association, pp 27–31. https://doi.org/10.2312/vcbm.20171234

Nodejs (2019) Node.js API reference. https://nodejs.org/en/docs/. Last accessed 12 Nov 2019

Ooms J (2013) OpenCPU: producing and reproducing results. http://www.opencpu.org/

Python Core Team (2018) Python: a dynamic, open source programming language. Python Software Foundation. https://www.python.org/

R Core Team (2013) R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna. http://www.R-project.org/

Rands SA (2011) Approximating optimal behavioural strategies down to rules-of-thumb: energy reserve changes in pairs of social foragers. PLOS ONE 6(7):1–8. https://doi.org/10.1371/journal.pone.0022104

Reynolds CW (1987) Flocks, herds and schools: a distributed behavioral model. SIGGRAPH Comput Graph 21(4):25–34. https://doi.org/10.1145/37402.37406

Schank J, Joshi S, May C, Tran JT, Bish R (2011) A multi-modeling approach to the study of animal behavior. In: Braha D, Bar-Yam Y, Minai AA (eds) Unifying themes in complex systems. Springer, Berlin, pp 304–312

Seeley TD, Sherman PW (2019) Animal behaviour. Encyclopaedia Britannica, Inc. https://www.britannica.com/science/animal-behavior. Last accessed 20 Jan 2020

Slingsby A, van Loon E (2016) Exploratory visual analysis for animal movement ecology. Comput Graph Forum 35(3):471–480. https://doi.org/10.1111/cgf.12923

Sommer B, Bender C, Hoppe T, Gamroth C, Jelonek L (2014) Stereoscopic cell visualization: from mesoscopic to molecular scale. J Electron Imaging 23(1):1–11

Sommer B, Diehl A, Aichem M, Meschenmoser P, Rehberg K, Weber D, Zhang Y, Klein K, Keim D, Schreiber F (2019) Tiled stereoscopic 3D display wall—concept, applications and evaluation. In: IS&T electronic imaging—stereoscopic displays and applications XX, pp 641–1–641–15

Spretke D, Bak P, Janetzko H, Kranstauber B, Mansmann F, Davidson S (2011) Exploration through enrichment: a visual analytics approach for animal movement. In: Proc. 19th ACM SIGSPATIAL international conference on advances in geographic information systems, ACM, GIS ’11, pp 421–424. https://doi.org/10.1145/2093973.2094038

Sumpter DJT (2006) The principles of collective animal behaviour. Philos Trans R Soc London Ser B Biol Sci 361(1465):5–22. https://doi.org/10.1098/rstb.2005.1733

Sumpter DJT, Mann RP, Perna A (2012) The modelling cycle for collective animal behaviour. Interface Focus 2(6):764–773. https://doi.org/10.1098/rsfs.2012.0031

TEAMwISE Team (2020) TEAMwISE Homepage. University of Konstanz, Life Science Informatics. http://team-wise.org/

Tominski C, Schumann H, Andrienko G, Andrienko N (2012) Stacking-based visualization of trajectory attribute data. IEEE Trans Vis Comput Graph 18(12):2565–2574

Tracey JA, Sheppard J, Zhu J, Wei F, Swaisgood RR, Fisher RN (2014) Movement-based estimation and visualization of space use in 3d for wildlife ecology and conservation. PLOS ONE 9(7):1–15. https://doi.org/10.1371/journal.pone.0101205

USGS (2020) USGS earth explorer. https://earthexplorer.usgs.gov/. Last accessed 18 Mar 2020

Wang Z, Yuan X (2014) Urban trajectory timeline visualization. In: 2014 International conference on big data and smart computing (BIGCOMP), pp 13–18. https://doi.org/10.1109/BIGCOMP.2014.6741397

Weinzierl R, Bohrer G, Kranstauber B, Fiedler W, Wikelski M, Flack A (2016) Wind estimation based on thermal soaring of birds. Ecol Evolut 6(24):8706–8718

Wikelski M, Kays R (2020) Movebank: archive, analysis and sharing of animal movement data. Hosted by the Max Planck Institute for Animal Behavior. http://www.movebank.org. Last accessed 07 Jan 2021

Yang Y, Jenny B, Dwyer T, Marriott K, Chen H, Cordeil M (2018) Maps and globes in virtual reality. Comput Graph Forum 37(3):427–438. https://doi.org/10.1111/cgf.13431

Zhang MJ, Li J, Zhang K (2016) An immersive approach to the visual exploration of geospatial network datasets. In: Proc. 15th ACM SIGGRAPH conference on virtual-reality continuum and its applications in industry—volume 1, ACM, VRCAI ’16, pp 381–390. https://doi.org/10.1145/3013971.3013983

Zheng Y (2015) Trajectory data mining: an overview. ACM Trans Intell Syst Technol 6(3):29:1-29:41. https://doi.org/10.1145/2743025

Acknowledgements

We would like to thank Martin Wikelski, Mate Nagy, Andrea Flack, Wolfgang Fiedler, Michael Quetting and Kamran Safi for sharing their expertise and giving valuable feedback. Supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—Project-ID 251654672—TRR 161 and Germany’s Excellence Strategy—EXC 2117—422037984. This is an extended version of the VINCI 2019 paper ‘TEAMwISE: Synchronised Immersive Environments for Exploration and Analysis of Movement Data’ (Klein et al. 2019a).

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Klein, K., Aichem, M., Zhang, Y. et al. TEAMwISE: synchronised immersive environments for exploration and analysis of animal behaviour. J Vis 24, 845–859 (2021). https://doi.org/10.1007/s12650-021-00746-2

DOI: https://doi.org/10.1007/s12650-021-00746-2