Abstract

Recent research conducted at numerous universities has found evidence of instructor-gender differences in student evaluations of teaching (SET). This paper examines whether such gender effects exist in “instructor overall” ratings within a database of SET that includes almost 600,000 observations from the past 11 years for the Faculty of Arts and Sciences (FAS) at a large research university in the northeastern United States. First, using multivariate OLS regression analysis, we tested 32 hypotheses of gender differences within discipline-rank combinations. Of the 32, only two hypothesis tests showed statistically significant gender differences in the instructor overall rating; one discipline-rank combination had higher average scores for male instructors, and one discipline-rank combination had higher average scores for female instructors. Second, using quasi-experimental data from calculus courses, we found that mean instructor overall scores of female instructors were different from those of male instructors only for Teaching Assistants (TAs) and Teaching Fellows (TFs), with higher scores for female TAs and TFs. Overall, we find no evidence of systematic gender differences in our analysis.

Notes

The instructor overall rating asks students to “Evaluate your instructor overall,” with a scale of 1 = unsatisfactory, 2 = fair, 3 = good, 4 = very good, 5 = excellent.

For a summary table of recent studies examining gender bias in teaching evaluations, please see Table 4 in Appendix 1.

Due to confidentiality concerns, the data used for the observational and quasi-experimental analyses are not publicly available. However, aggregated data and code are available upon request.

Non-ladder/other instructors include instructors on term appointments who are not on a tenure track. This category also includes visiting faculty, staff teaching appointments, TA/TFs acting as instructors, etc. Non-FAS instructors are instructors who have a teaching appointment (either permanent or temporary) at the institution, but not within the Faculty of Arts and Sciences.

For a definition of each independent variable included in our model, please see Table 5 in Appendix 2.

In Table 6 in Appendix 3, we report all of the interactive model’s estimated coefficients. We also ran a version of the model with age and age-squared interacted with gender. The results of that model are similar to those of our final model and are available upon request.

See Table 6 in Appendix 3 for the OLS model.

An equivalent approach is to apply the Bonferroni correction. Assuming a 5% family-wise error rate threshold, this gives an individual test significance level of 5%/32 ≈ 0.16%, at which the differences between the average scores of male and female instructors are non-significant for all disciplines and rank groups.
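The arithmetic behind this correction can be checked directly. The short Python sketch below uses only the two numbers given in the note (32 tests and a 5% family-wise threshold). The variable names are illustrative, and the expected-rejections line assumes, purely for illustration, that every null hypothesis is true.

```python
# Bonferroni arithmetic for the 32 discipline-rank hypothesis tests.
n_tests = 32            # number of hypotheses, from the text
family_alpha = 0.05     # family-wise error rate threshold, from the text

# Per-test significance level under the Bonferroni correction.
per_test_alpha = family_alpha / n_tests

# Illustrative assumption: if all 32 null hypotheses were true and each test
# were run at the uncorrected 5% level, this many rejections would be
# expected by chance alone.
expected_chance_rejections = n_tests * family_alpha

print(f"per-test level: {per_test_alpha:.3%}")
print(f"expected chance rejections at 5%: {expected_chance_rejections:.1f}")
```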

We excluded from consideration Postdoctoral Fellows, Instructors with appointments in the Division of Continuing Education, Temporary Student instructors, and Visiting Professors.

In the latter case, we used instructor-invariant course fixed effects specific to a course offering and the semester in which the course was taught (e.g., fall semester Multivariable Calculus). The fixed effects allowed us to account for possible unobserved course and semester heterogeneity.
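To illustrate what such course-semester fixed effects do, the sketch below uses a tiny made-up data set (not the study's data) in which one course offering is rated lower across the board. Subtracting each cell's mean from the scores and from the gender dummy before regressing is the standard within transformation, which gives the same gender coefficient as including one intercept per course-semester cell. All names and numbers are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows: (course-semester cell, female dummy, SET score).
rows = [
    ("calc1-fall", 1, 4.5), ("calc1-fall", 0, 4.3), ("calc1-fall", 0, 4.3),
    ("multi-fall", 1, 3.7), ("multi-fall", 1, 3.7), ("multi-fall", 0, 3.5),
]

def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """OLS slope of y on a single regressor x (model with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

female = [f for _, f, _ in rows]
score = [s for _, _, s in rows]

# Pooled OLS ignores the cells, so cell-level rating differences leak
# into the gender coefficient.
pooled = slope(female, score)

# Within transformation: subtract each cell's mean from both variables.
by_cell = defaultdict(list)
for cell, f, s in rows:
    by_cell[cell].append((f, s))
cell_means = {c: (mean([f for f, _ in v]), mean([s for _, s in v]))
              for c, v in by_cell.items()}
female_w = [f - cell_means[c][0] for c, f, _ in rows]
score_w = [s - cell_means[c][1] for c, _, s in rows]
within = slope(female_w, score_w)

print(f"pooled gender coefficient: {pooled:+.3f}")
print(f"within (fixed-effects) gender coefficient: {within:+.3f}")
```

In this toy data the female instructors score 0.2 points higher inside each cell, yet the pooled coefficient is negative because more of the female observations fall in the lower-rated offering. The within estimate recovers the 0.2 difference.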

We would like to emphasize that in all cases, representative age is equal for males and females.

Results for Professors followed the same pattern, with the expected female SET score being higher than for a male Professor of the same age. The confidence intervals, however, are extremely wide (because there was only one female Professor in the sample) and are not displayed in Fig. 4. The difference for Professors was not statistically significant.

There was also a difference in sign for the interaction of gender with Professor (as we mention in the previous footnote, there is only one female professor in the sample).

References

Adams, M. J., & Umbach, P. (2012). Nonresponse and online student evaluations of teaching: Understanding the influence of salience, fatigue, and academic environments. Research in Higher Education, 53, 576–591.

Algozzine, B. B. (2004). Student Evaluations of College Teaching: A practice in search of principles. College Teaching, 52(4), 134–141.

Angrist, J. D., & Pischke, J.-S. (2010). The credibility revolution in empirical econometrics: How better research design is taking the con out of econometrics. Journal of Economic Perspectives, 24(2), 3–30.

Barre, E. (2018). Research on student ratings continues to evolve. We should, too. Reflections on teaching and learning: The CTE blog. Rice Center for Teaching Excellence.

Bettinger, E., Fox, L., Loeb, S., & Taylor, E. S. (2017). Virtual classrooms: How online college courses affect student success. American Economic Review, 107(9), 2855–2875.

Boring, A. (2017). Gender biases in student evaluations of teaching. Journal of Public Economics, 145, 27–41.

Boring, A., Ottoboni, K., & Stark, P. (2016). Student evaluations of teaching (mostly) do not measure teaching effectiveness. ScienceOpen Research. https://doi.org/10.14293/S2199-1006.1.SOR-EDU.AETBZC.v1.

Centra, J. A., & Gaubatz, N. B. (2000). Is there gender bias in student evaluations of teaching? The Journal of Higher Education, 71(1), 17–33.

Davies, M., Hirschberg, J., Lye, J., & Johnston, C. (2010a). A systematic analysis of quality of teaching surveys. Assessment & Evaluation in Higher Education, 35(1), 83–96.

Davies, M., Hirschberg, J., Lye, J., Johnston, C., & McDonald, I. (2010b). Systematic influences on teaching evaluations: The case for caution. Assessment & Evaluation in Higher Education, 35(1), 83–96.

Esarey, J., & Valdes, N. (2020). Unbiased, reliable, and valid student evaluations can still be unfair. Assessment & Evaluation in Higher Education, 45(8), 1106–1120.

Feldman, K. A. (1993). College students’ views of male and female college teachers: Part II: Evidence from students’ evaluations of their classroom teachers. Research in Higher Education, 34(2), 151–211.

Key, E., & Ardoin, P. (n.d.). Gender bias in teaching evaluations: What can be done? (Working Paper).

Loeher, L.L.-M. (2006). Guide to evaluation of instruction. Regents of the University of California.

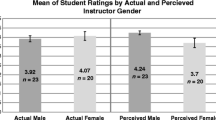

MacNell, L., Driscoll, A., & Hunt, A. (2015). What’s in a name: Exposing gender bias in student ratings of teaching. Innovative Higher Education, 40, 291–303.

Marsh, H. W. (2007). Students’ evaluations of university teaching: a multidimensional perspective. In R. Perry & J. Smart (Eds.), The scholarship of teaching and learning in higher education: an evidence-based perspective (pp. 319–384). Springer.

Marsh, H. W., & Roche, L. A. (1997). Making students’ evaluations of teaching effectiveness effective: The critical issues of validity, bias, and utility. American Psychologist, 52(11), 1187–1197.

Martin, L. L. (2016). Gender teaching evaluations and professional success in political science. PS: Political Science and Politics, 49(2), 313–319.

Mengel, F., Sauermann, J., & Zölitz, U. (2019). Gender bias in teaching evaluations. Journal of the European Economic Association, 17(2), 535–566.

Miles, P., & House, D. (2015). The tail wagging the dog; An overdue examination of student teaching evaluations. International Journal of Higher Education, 4(2), 116–126.

Mitchell, K., & Martin, J. (2018). Gender bias in student evaluations. American Political Science Association, 51, 648–652.

Theall, M., & Franklin, J. (2001). Looking for bias in all the wrong places: A search for truth or a witch hunt in student ratings of instruction. New Directions for Institutional Research, 2001(109), 45.

Zipser, N., & Mincieli, L. (2018). Administrative and structural changes in student evaluations of teaching and their effects on overall instructor scores. Assessment & Evaluation in Higher Education, 43(6), 995–1008.

Appendices

Appendix 1

See Table 4.

Appendix 2

See Table 5.

Appendix 3

See Table 6.

Appendix 4

Regression Models for Calculus Instructors’ SET Scores

We test for gender effects using the following ordinary least squares (OLS) multivariate models:

where index i enumerates instructors, c is the course offering, and time is indexed by t.

Here, SET_ict is the average score that instructor i received for course c in semester t, and female is a dummy variable that takes the value 1 if the instructor is female and 0 otherwise. The control variable rank represents a set of four dummy variables indicating whether the instructor is a Lecturer/Preceptor, Professor, Senior non-ladder instructor, or Teaching Assistant/Teaching Fellow. For example, if an instructor is a preceptor in a given semester, then the rank variable for this instructor is (1, 0, 0, 0), while a teaching post-doc is represented by (0, 0, 0, 0). Note that faculty rank may change over time, so rank is, in general, time-dependent. Finally, age_it is the instructor’s age in years at the beginning of a given semester. Model (1) is equivalent to a t-test for a difference between mean SET scores in the two gender groups; model (2) controls for instructor rank and age; and model (3) adds interactions between faculty rank and gender and between year-term and gender. Here, year-term takes the values 1, 2, …, 22, which correspond to Fall 2006, Spring 2007, …, Spring 2017, respectively. As an alternative to the OLS models (1), (2) and (3), we consider the following variations:

where α_ct are the instructor-invariant fixed effects that satisfy the following constraints:

in each of cases (4), (5) and (6).
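Written out from the verbal description in this appendix, models (1) to (3) could take the form sketched below. This is an illustrative rendering rather than the authors' exact specification: the coefficient symbols $\beta$, $\boldsymbol{\gamma}$ and $\boldsymbol{\delta}$, the error term $\varepsilon_{ict}$, and the precise way year-term enters model (3) are assumptions.

```latex
\begin{align}
\mathrm{SET}_{ict} &= \beta_0 + \beta_1\,\mathrm{female}_i + \varepsilon_{ict} \tag{1}\\
\mathrm{SET}_{ict} &= \beta_0 + \beta_1\,\mathrm{female}_i
  + \boldsymbol{\gamma}^{\top}\mathbf{rank}_{it}
  + \beta_2\,\mathrm{age}_{it} + \varepsilon_{ict} \tag{2}\\
\mathrm{SET}_{ict} &= \beta_0 + \beta_1\,\mathrm{female}_i
  + \boldsymbol{\gamma}^{\top}\mathbf{rank}_{it}
  + \beta_2\,\mathrm{age}_{it}
  + \boldsymbol{\delta}^{\top}\bigl(\mathbf{rank}_{it}\times\mathrm{female}_i\bigr)
  + \beta_3\,\bigl(\text{year-term}_t\times\mathrm{female}_i\bigr)
  + \varepsilon_{ict} \tag{3}
\end{align}
```

Under the same assumptions, models (4) to (6) would replace the common intercept $\beta_0$ with the course-semester fixed effect $\alpha_{ct}$. The identifying constraints on $\alpha_{ct}$ are left unspecified in this sketch.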

Appendix 5

See Table 7.

Zipser, N., Mincieli, L. & Kurochkin, D. Are There Gender Differences in Quantitative Student Evaluations of Instructors?. Res High Educ 62, 976–997 (2021). https://doi.org/10.1007/s11162-021-09628-w

DOI: https://doi.org/10.1007/s11162-021-09628-w