Abstract

With advanced digitalisation, we can observe a massive increase in user-generated content on the web that conveys people's opinions on different subjects. Sentiment analysis is the computational study of people's feelings and opinions about an entity. The field of sentiment analysis has been the topic of extensive research in the past decades. In this paper, we present the results of a tertiary study, which aims to investigate the current state of the research in this field by synthesizing the results of published secondary studies (i.e., systematic literature reviews and systematic mapping studies) on sentiment analysis. This tertiary study follows the guidelines of systematic literature reviews (SLR) and covers only secondary studies. The outcome of this tertiary study provides a comprehensive overview of the key topics and the different approaches for a variety of tasks in sentiment analysis. Different features, algorithms, and datasets used in sentiment analysis models are mapped. Challenges and open problems are identified, which can help to pinpoint areas that require further research effort in sentiment analysis. In addition to the tertiary study, we also identified 112 recent deep learning-based sentiment analysis papers and categorized them based on the applied deep learning algorithms. According to this analysis, LSTM and CNN are the most used deep learning algorithms for sentiment analysis.

1 Introduction

Sentiment analysis or opinion mining is the computational study of people's opinions, sentiments, emotions, and attitudes towards entities such as products, services, issues, events, topics, and their attributes (Liu 2015). As such, sentiment analysis can allow tracking the mood of the public about a particular entity to create actionable knowledge. Also, this type of knowledge can be used to understand, explain, and predict social phenomena (Pozzi et al. 2017). For the business domain, sentiment analysis plays a vital role in enabling businesses to improve strategy and gain insight into customers' feedback about their products. In today's customer-oriented business culture, understanding the customer is increasingly important (Chagas et al. 2018).

The explosive growth of discussion platforms, product review websites, e-commerce, and social media facilitates a continuous stream of thoughts and opinions. This growth makes it challenging for companies to get a better understanding of customers' aggregate opinions and attitudes towards products. The explosion of internet-generated content coupled with techniques like sentiment analysis provides opportunities for marketers to gain intelligence on consumers' attitudes towards their products (Rambocas and Pacheco 2018). Extracting sentiments from product reviews helps marketers to reach out to customers who need extra care, which improves customer satisfaction and sales and ultimately benefits businesses (Vyas and Uma 2019).

Sentiment analysis is a multidisciplinary field, including psychology, sociology, natural language processing, and machine learning. Recently, exponentially growing amounts of data and computing power have enabled more advanced forms of analytics. Machine learning has therefore become a dominant tool for sentiment analysis. There is an abundance of scientific literature available on sentiment analysis, and there are also several secondary studies conducted on the topic.

A secondary study can be considered as a review of primary studies that empirically analyze one or more research questions (Nurdiani et al. 2016). The use of secondary studies (i.e., systematic reviews) in software engineering was suggested in 2004, and the term “Evidence-based Software Engineering” (EBSE) was coined by Kitchenham et al. (2004). Nowadays, secondary studies are widely used as a well-established tool in software engineering research (Budgen et al. 2018). The following two kinds of secondary studies can be conducted within the scope of EBSE:

- Systematic Literature Review (SLR): An SLR study aims to identify relevant primary studies, extract the required information regarding the research questions (RQs), and synthesize the information to respond to these RQs. It follows a well-defined methodology and assesses the literature in an unbiased and repeatable way (Kitchenham and Charters 2007).

- Systematic Mapping Study (SMS): An SMS study presents an overview of a particular research area by categorizing and mapping the studies based on several dimensions (i.e., facets) (Petersen et al. 2008).

SLR and SMS studies differ from traditional review papers (a.k.a. survey articles) because their authors systematically search electronic databases and follow a well-defined protocol to identify the articles. There are also several differences between SLR and SMS studies (Catal and Mishra 2013; Kitchenham et al. 2010b). For instance, while the RQs of SLR studies are very specific, the RQs of SMS studies are general. The search process of an SLR is driven by the research questions, but the search process of an SMS is based on the research topic. For an SLR, all relevant papers must be retrieved, and quality assessments of the identified articles must be performed; the requirements for an SMS are less stringent.

When there is a sufficient number of secondary studies on a research topic, a tertiary study can be performed (Kitchenham et al. 2010a; Nurdiani et al. 2016). A tertiary study synthesizes data from secondary studies and provides a comprehensive review of research in a research area (Rios et al. 2018). Tertiary studies are used to summarize the existing secondary studies and can be considered a special form of review that treats secondary studies as its primary studies (Raatikainen et al. 2019).

Although sentiment analysis has been the topic of some SLR studies, a tertiary study characterizing these systematic reviews has not been performed yet. As such, the aim of our study is to identify and characterize systematic reviews in sentiment analysis and present a consolidated view of the published literature to better understand the limitations and challenges of sentiment analysis. We follow the research methodology guidelines suggested for the tertiary studies (Kitchenham et al. 2010a).

The objective of this study is thus to better understand the sentiment analysis research area by synthesizing results of these secondary studies, namely SLR and SMS, and providing a thorough overview of the topic. The methodology that we followed applies a systematic literature review to a sample of systematic reviews, and therefore, this type of tertiary study is valuable to determine the potential research areas for further research.

As part of this tertiary study, different models, tasks, features, datasets, and approaches in sentiment analysis have been mapped, and challenges and open problems in this field have been identified. Although tertiary studies have been performed for other topics in several fields such as software engineering and software testing (Raatikainen et al. 2019; Nurdiani et al. 2016; Verner et al. 2014; Cruzes and Dybå 2011; Cadavid et al. 2020), this is the first study that performs a tertiary study on sentiment analysis.

The main contributions of this article are three-fold:

- We present the results of the first tertiary study in the literature on sentiment analysis.

- We systematically identify systematic review studies of sentiment analysis and present a consolidated view of these studies.

- We support our study with recent survey papers that review deep learning-based sentiment analysis papers and explain the popular lexicons in this field.

The rest of the paper is organized as follows: Sect. 2 provides the background and related work. Section 3 explains the methodology, which was followed in this study. Section 4 presents the results in detail. Section 5 provides the discussion, and Sect. 6 explains the conclusions.

2 Background and related work

Sentiment analysis and opinion mining are often used interchangeably. Some researchers indicate a subtle difference between sentiments and opinions, namely that opinions are more concrete thoughts, whereas sentiments are feelings (Pozzi et al. 2017). However, sentiment and opinion are related constructs, and both sentiment and opinion are included when referring to either one. This research adopts sentiment analysis as a general term for both opinion mining and sentiment analysis.

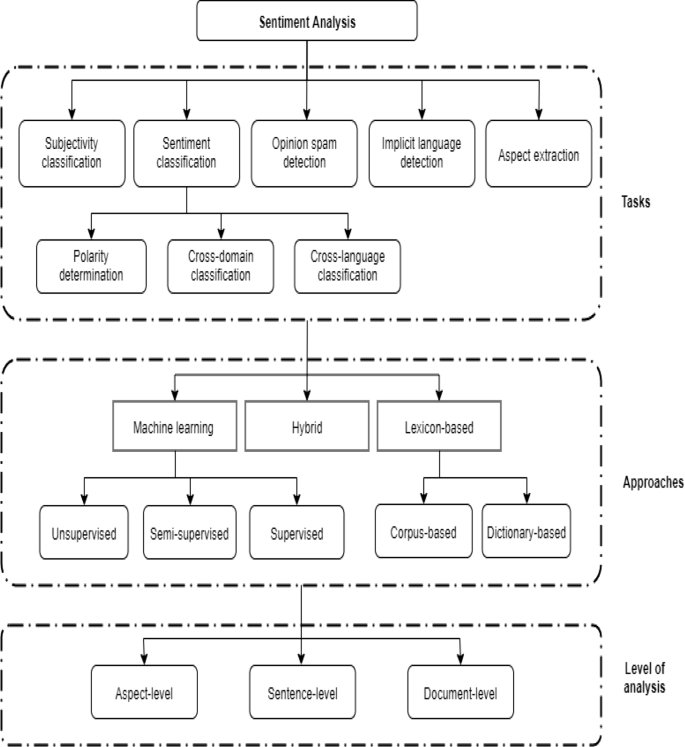

Sentiment analysis is a broad concept that consists of many different tasks, approaches, and types of analysis, which are explained in this section. In addition, an overview of sentiment analysis is represented in Fig. 1, which is adapted from (Hemmatian and Sohrabi 2017; Kumar and Jaiswal 2020; Mite-Baidal et al. 2018; Pozzi et al. 2017; Ravi and Ravi 2015). Cambria et al. (2017) stated that a holistic approach to sentiment analysis is required, and only categorization or classification is not sufficient. They presented the problem as a three-layer structure that includes 15 Natural Language Processing (NLP) problems as follows:

- Syntactics layer: Microtext normalization, sentence boundary disambiguation, POS tagging, text chunking, and lemmatization

- Semantics layer: Word sense disambiguation, concept extraction, named entity recognition, anaphora resolution, and subjectivity detection

- Pragmatics layer: Personality recognition, sarcasm detection, metaphor understanding, aspect extraction, and polarity detection

Cambria (2016) states that approaches for sentiment analysis and affective computing can be divided into the following three categories: knowledge-based techniques, statistical approaches (e.g., machine learning and deep learning approaches), and hybrid techniques that combine the knowledge-based and statistical techniques.

Sentiment analysis models can adopt different pre-processing methods and apply a variety of feature selection methods. While pre-processing means transforming the text into normalized tokens (e.g., removing article words and applying the stemming or lemmatization techniques), feature selection means determining what features will be used as inputs. In the following subsections, related tasks, approaches, and levels of analysis are presented in detail.
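The pre-processing step described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the stop list `ARTICLES` contains articles only, and `naive_stem` is a hypothetical, deliberately crude suffix stripper standing in for a real stemmer or lemmatizer; neither is taken from the reviewed studies.

```python
import re

# Assumption: a tiny stop list with articles only; practical systems use larger lists.
ARTICLES = {"a", "an", "the"}

def naive_stem(token):
    """Strip a few common English suffixes (a stand-in for a real stemmer)."""
    for suffix in ("ing", "ed", "s"):
        # Keep at least three characters so short words are left untouched.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Lowercase, tokenize, drop articles, and stem the remaining tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [naive_stem(t) for t in tokens if t not in ARTICLES]

print(preprocess("The battery lasted longer than the reviews claimed"))
```

The resulting normalized tokens are the raw material from which features (e.g., word counts or embeddings) are subsequently selected.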

2.1 Tasks

2.1.1 Sentiment classification

One of the most widely known and researched tasks in sentiment analysis is sentiment classification. Polarity determination is a subtask of sentiment classification and is often improperly used when referring to sentiment analysis. However, it is merely a subtask aimed at identifying sentiment polarity in each text document. Traditionally, polarity is classified as either positive or negative (Wang et al. 2014). Some studies include a third class called neutral. Cross-domain and cross-language classification are subtasks of sentiment classification that aim to transfer knowledge from a data-rich source domain to a target domain where data and labels are limited. The cross-domain analysis predicts the sentiment of a target domain, with a model (partly) trained on a more data-rich source domain. A popular method is to extract domain invariant features whose distribution in the source domain is close to that of the target domain (Peng et al. 2018). The model can be extended with target domain-specific information. The cross-language analysis is practiced in a similar way by training a model on a source language dataset and testing it on a different language where data is limited, for example by translating the target language to the source language before processing (Can et al. 2018). Xia et al. (2015) stated that opinion-level context is beneficial to solve polarity ambiguity of sentiment words and applied the Bayesian model. Word polarity ambiguity is one of the challenges that need to be addressed for sentiment analysis. Vechtomova (2017) showed that the information retrieval-based model is an alternative to machine learning-based approaches for word polarity disambiguation.

2.1.2 Subjectivity classification

Subjectivity classification is the task of determining whether subjectivity is present in a text (Kasmuri and Basiron 2017). Its goal is to filter out unwanted objective data objects before further processing (Kamal 2013). It is often considered the first step in sentiment analysis. Subjectivity classification detects subjective clues, i.e., words that carry emotion or subjective notions such as ‘expensive’, ‘easy’, and ‘better’ (Kasmuri and Basiron 2017). These clues are used to classify text objects as subjective or objective.
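A clue-based subjectivity filter can be sketched in a few lines of Python. The clue set below contains only the three example words mentioned in the text, and the function names `is_subjective` and `keep_subjective` are hypothetical; actual subjectivity classifiers rely on much larger clue lexicons or on trained models.

```python
import re

# Clue words from the examples in the text; a real clue lexicon is far larger.
SUBJECTIVE_CLUES = {"expensive", "easy", "better"}

def is_subjective(sentence, clues=SUBJECTIVE_CLUES):
    """Label a sentence subjective if it contains at least one clue word."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return any(token in clues for token in tokens)

def keep_subjective(sentences):
    """Drop objective sentences before further sentiment processing."""
    return [s for s in sentences if is_subjective(s)]

reviews = ["The phone costs 300 euros.", "The phone is too expensive."]
print(keep_subjective(reviews))
```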

2.1.3 Opinion spam detection

The growing popularity of e-commerce and review websites has made opinion spam detection a prominent issue in sentiment analysis. Opinion spam, also referred to as false or fake reviews, consists of intelligently written comments that either promote or discredit a product. Opinion spam detection relies on three types of features that relate to a fake review: review content, metadata of the review, and real-life knowledge about the product (Ravi and Ravi 2015). Review content is often analyzed with machine learning techniques to uncover deception. Metadata includes the star rating, IP address, geo-location, user-id, etc.; however, in many cases, it is not accessible for analysis. The third feature type is real-life knowledge. For instance, if a product has a good reputation and an inferior product is suddenly rated superior to it during some period, the reviews from that period might be suspect.

2.1.4 Implicit language detection

Implicit language refers to humor, sarcasm, and irony. There is vagueness and ambiguity in this form of speech, which is sometimes hard to detect even for humans. However, an implicit meaning can completely flip the polarity of a sentence. Implicit language detection often aims at understanding facts related to an event. For example, in the phrase “I love pain”, pain is a factual word with a negative polarity load. The contradiction between the factual word ‘pain’ and the subjective word ‘love’ can indicate sarcasm, irony, or humor. More traditional methods for implicit language detection include exploring clues such as emoticons, expressions for laughter, and heavy punctuation mark usage (Filatova 2012).
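The traditional surface clues mentioned above (emoticons, laughter expressions, and heavy punctuation) can be detected with simple patterns. The following Python sketch is an assumption-laden illustration: the three regular expressions are hypothetical and intentionally narrow, they will miss many variants and can produce false positives, and the presence of a clue only suggests, rather than establishes, implicit language.

```python
import re

# Hypothetical, intentionally narrow patterns for the three clue types.
EMOTICON = re.compile(r"[:;=]-?[()DPp]")                      # e.g. :) ;-) =D
LAUGHTER = re.compile(r"\b(?:(?:ha){2,}|lol)\b", re.IGNORECASE)  # haha, hahaha, lol
HEAVY_PUNCT = re.compile(r"[!?]{2,}")                         # !!, ?!, !!!

def implicit_language_clues(text):
    """Report which surface clues occur in the text."""
    return {
        "emoticon": bool(EMOTICON.search(text)),
        "laughter": bool(LAUGHTER.search(text)),
        "heavy_punctuation": bool(HEAVY_PUNCT.search(text)),
    }

print(implicit_language_clues("Great, another Monday!!! haha :)"))
```

Such clue indicators are typically used as input features for a classifier rather than as a decision rule on their own.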

2.1.5 Aspect extraction

Aspect extraction refers to retrieving the target entity and aspects of the target entity in the document. The target entity can be a product, person, event, organization, etc. (Akshi Kumar and Sebastian 2012). People's opinions on various parts of a product need to be identified for fine-grained sentiment analysis (Ravi and Ravi 2015). Aspect extraction is especially important in sentiment analysis of social media and blogs that often do not have predefined topics.

Multiple methods exist for aspect extraction. The first and most traditional method is frequency-based analysis. This method finds frequently used nouns or compound nouns (POS tags), which are likely to be aspects. A rule of thumb that is often used is that if the (compound) noun occurs in at least 1% of the sentences, it is considered an aspect. This straightforward method turns out to be quite powerful (Schouten and Frasincar 2016). However, there are some drawbacks to this method (e.g., not all nouns are referring to aspects).
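The frequency-based rule of thumb can be sketched as follows. The sketch assumes that sentences have already been POS-tagged and are given as lists of `(token, tag)` pairs with Penn Treebank-style noun tags (`NN`, `NNS`, ...); the function name `frequent_aspects` and the parameter `min_share` are hypothetical, and compound nouns are ignored for brevity.

```python
from collections import Counter

def frequent_aspects(tagged_sentences, min_share=0.01):
    """Return nouns that occur in at least `min_share` of the sentences."""
    sentence_counts = Counter()
    for sentence in tagged_sentences:
        # Count each noun at most once per sentence.
        nouns = {tok.lower() for tok, pos in sentence if pos.startswith("NN")}
        sentence_counts.update(nouns)
    total = len(tagged_sentences)
    return {noun for noun, count in sentence_counts.items()
            if count / total >= min_share}

sentences = [
    [("The", "DT"), ("battery", "NN"), ("dies", "VBZ")],
    [("Great", "JJ"), ("battery", "NN")],
    [("The", "DT"), ("screen", "NN"), ("cracked", "VBD")],
    [("It", "PRP"), ("works", "VBZ")],
]
# A high threshold is used here only because the toy corpus is tiny.
print(frequent_aspects(sentences, min_share=0.5))
```

The drawback noted above is visible in this sketch: every sufficiently frequent noun is returned, whether or not it actually denotes an aspect.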

Syntax-based methods find aspects by means of the syntactic relations in which they occur. A simple example is identifying aspects that are preceded by a modifying adjective that is a sentiment word. This method allows low-frequency aspects to be identified. The drawback of this method is that many relations need to be found for complete coverage, which requires knowledge of sentiment words. Extra aspects can be found if more sentiment words that serve as adjectives can be identified. Qiu et al. (2009) propose a syntax-based algorithm that works in both directions: it identifies sentiment words for known aspects and aspects for known sentiment words.
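The bidirectional idea can be illustrated with a strongly simplified Python sketch. It is not the algorithm of Qiu et al. (2009): it uses a single hypothetical rule (an adjective directly preceding a noun), assumes pre-tagged input as `(token, tag)` pairs, and treats any adjective that modifies a known aspect as a sentiment word, which overgenerates in practice. The function name `propagate` is likewise an assumption.

```python
def propagate(tagged_sentences, seed_sentiment):
    """Alternately grow the aspect set and the sentiment-word set."""
    sentiment = set(seed_sentiment)
    aspects = set()
    changed = True
    while changed:  # Repeat until a full pass adds nothing new.
        changed = False
        for sentence in tagged_sentences:
            for (w1, p1), (w2, p2) in zip(sentence, sentence[1:]):
                if p1 == "JJ" and p2.startswith("NN"):
                    adj, noun = w1.lower(), w2.lower()
                    if adj in sentiment and noun not in aspects:
                        aspects.add(noun)      # known sentiment word -> new aspect
                        changed = True
                    elif noun in aspects and adj not in sentiment:
                        sentiment.add(adj)     # known aspect -> new sentiment word
                        changed = True
    return aspects, sentiment

sentences = [
    [("great", "JJ"), ("screen", "NN")],
    [("sharp", "JJ"), ("screen", "NN")],
    [("sharp", "JJ"), ("camera", "NN")],
    [("old", "JJ"), ("charger", "NN")],
]
print(propagate(sentences, {"great"}))
```

Starting from the single seed word "great", the sketch reaches the low-frequency aspect "camera" via the newly learned sentiment word "sharp", while "charger" is never reached because "old" is not connected to any known aspect.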

2.2 Approaches

2.2.1 Machine learning-based approaches

Machine learning approaches for sentiment analysis tasks can be divided into three categories: unsupervised learning, semi-supervised learning, and supervised learning.

The unsupervised learning methods group unlabelled data into clusters that are similar to each other. For example, the algorithm can consider data as similar based on common words or word pairs in the document (Li and Liu 2014).

Semi-supervised learning uses both labeled and unlabelled data in the training process (da Silva et al. 2016a, b). A set of unlabelled data is complemented with some (often limited) examples of labeled data to build a classifier. This technique can yield decent accuracy and requires less human effort compared to supervised learning. In cross-domain and cross-language classification, domain- or language-invariant features can be extracted with the help of unlabelled data, while the classifier is fine-tuned with labeled target data (Peng et al. 2018). Semi-supervised learning is especially popular for Twitter sentiment analysis, where large sets of unlabelled data are available (da Silva et al. 2016a, b). Hussain and Cambria (2018) compared the computational complexity of several semi-supervised learning methods and presented a new semi-supervised model based on biased SVM (bSVM) and biased Regularized Least Squares (bRLS). Wu et al. (2019) developed a semi-supervised Dimensional Sentiment Analysis (DSA) model using the variational autoencoder algorithm. DSA calculates the sentiment score of texts based on several dimensions, such as dominance, valence, and arousal. Xu and Tan (2019) proposed the target-oriented semi-supervised sequential generative model (TSSGM) for target-oriented aspect-based sentiment analysis and showed that this approach outperforms two semi-supervised learning methods. Han et al. (2019) developed a semi-supervised model using dynamic thresholding and multiple classifiers for sentiment analysis. They evaluated their model on the Large Movie Review dataset and showed that it provides higher performance than the other models. Duan et al. (2020) proposed the Generative Emotion Model with Categorized Words (GEM-CW) for stock message sentiment classification and demonstrated that this model is effective. Gupta et al. (2018) investigated semi-supervised approaches for low-resource sentiment classification and showed that their proposed methods improve model performance over supervised learning models.

The most widely known machine learning method is supervised learning. This approach trains a model with labeled source data. The trained model can subsequently make predictions for new unlabelled input data. In most cases, supervised learning outperforms unsupervised and semi-supervised learning approaches, but the dependency on labeled training data can require a great deal of human effort and is therefore sometimes inefficient (Hemmatian and Sohrabi 2017).

Machine learning methods are increasingly popular for aspect extraction. The most commonly used approach for aspect extraction is topic modeling, an unsupervised method that assumes any document contains a certain number of hidden topics (Hemmatian and Sohrabi 2017). The Latent Dirichlet Allocation (LDA) algorithm, which has many different variations, is a popular topic modeling algorithm (Nguyen and Shirai 2015) that allows observations to be explained by unsupervised grouping of similar data. LDA outputs some topics of a text document and attributes each word in the document to one of the identified topics. The drawback of machine learning methods is that they require lots of labeled data.

2.2.2 Deep learning-based approaches

Deep learning is a sub-branch of machine learning that uses deep neural networks. Recently, deep learning algorithms have been widely applied for sentiment analysis. In this section, first, we discuss the articles that present an overview of papers that applied deep learning for sentiment analysis. These articles are neither SLR nor SMS papers. Instead, they are either traditional review (a.k.a., survey) articles or comparative assessment papers that explain the existing deep learning-based approaches in addition to the experimental analysis. Later, we also present some of the deep learning-based models used in sentiment analysis papers.

In Table 1, we present the survey papers that analyzed deep learning-based sentiment analysis papers. In this table, we also show the number of papers investigated in these survey papers.

Dang et al. (2020) presented a summary of 32 deep learning-based sentiment analysis papers and analyzed the performance of Deep Neural Networks (DNN), Convolutional Neural Networks (CNN), and Recurrent Neural Networks (RNN) on eight datasets. They selected these deep learning algorithms because they are the most widely used according to their analysis of the 32 papers. They used both word embedding and term frequency-inverse document frequency (TF-IDF) to prepare inputs for classification algorithms and reported that the RNN-based model using word embedding achieved the best performance among the algorithms. However, the processing time of the RNN-based model is ten times longer than that of the CNN-based one. In addition, they reported that the following deep learning algorithms were used in the 32 deep learning-based sentiment analysis papers: CNN, Long Short-Term Memory (LSTM) (tree-LSTM, discourse-LSTM, coattention-LSTM, bi-LSTM), Gated Recurrent Units (GRU), RNN, Coattention-MemNet, Latent Rating Neural Network (LRNN), Simple Recurrent Networks (SRN), and Recurrent Neural Tensor Network (RNTN).
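For readers unfamiliar with TF-IDF, the following Python sketch shows one common variant: term frequency normalized by document length, multiplied by the logarithm of the inverse document frequency. This particular weighting scheme, the function name `tf_idf`, and the toy documents are assumptions for illustration; the exact variant used by Dang et al. (2020) is not specified here.

```python
import math
from collections import Counter

def tf_idf(documents):
    """documents: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(documents)
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights

docs = [["good", "movie"], ["bad", "movie"]]
for vector in tf_idf(docs):
    print(vector)
```

In this toy corpus, "movie" occurs in every document and therefore receives a weight of zero, whereas the discriminative words "good" and "bad" receive positive weights.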

Yadav and Vishwakarma (2019) reviewed 130 research papers that apply deep learning techniques in sentiment analysis. They identified the following deep learning methods used for sentiment analysis: CNN, Recursive Neural Network (Rec NN), RNN (LSTM and GRU), Deep Belief Networks (DBN), Attention-based Network, Bi-RNN, and Capsule Network. They reported that LSTM provides better results, and that the use of deep learning approaches for sentiment analysis is promising. However, they stated that these approaches require a huge amount of data, and that there is a lack of training datasets.

Zhang et al. (2018) published a survey article on the application of deep learning methods for sentiment analysis. They explained several papers that address one of the following levels: document level, sentence level, and the aspect level sentiment classification. The applied algorithms per analysis level are listed as follows:

- Document-level sentiment classification: Artificial Neural Networks (ANN), Stacked Denoising Autoencoder (SDA), Denoising Autoencoder, CNN, LSTM, GRU, Memory Network, and GRU-based Encoder

- Sentence-level sentiment classification: CNN, RNN, Semi-supervised Recursive Autoencoders Network (RAE), Recursive Neural Network, Recursive Neural Tensor Network, Dynamic CNN, LSTM, CNN-LSTM, Bi-LSTM, and Recurrent Random Walk Network

- Aspect-level sentiment classification: Adaptive Recursive Neural Network, LSTM, Bi-LSTM, Attention-based LSTM, Memory Network, Interactive Attention Network, Recurrent Attention Network, and Dyadic Memory Network

Rojas‐Barahona (2016) presented an overview of deep learning approaches used for sentiment analysis and divided the techniques into the following categories:

- Non-Recursive Neural Networks: RNN (variant: Bi-RNN), LSTM (variant: Bi-LSTM), and CNN (variants: CNN-Multichannel, CNN-non-static, Dynamic CNN)

- Recursive Neural Networks: Recursive Autoencoders and Constituency Tree Recursive Neural Networks

- Combination of Non-Recursive and Recursive Methods: Tree-Long Short-Term Memory (Tree-LSTM) and Deep Recursive Neural Networks (Deep RsNN)

For the movie reviews dataset, Rojas‐Barahona (2016) showed that the Dynamic CNN model provides the best performance. For the Sentiment TreeBank dataset, the Constituency Tree‐LSTM, which is a Recursive Neural Network, outperforms all the other algorithms.

Habimana et al. (2020a) reviewed papers that applied deep learning algorithms for sentiment analysis and also performed several experiments with the specified algorithms on different datasets. They reported that dynamic sentiment analysis, sentiment analysis for heterogeneous information, and language structure are the main challenges for the sentiment analysis research field. They categorized the techniques used in the papers based on several analysis levels that are listed as follows:

- Document-level Sentiment Analysis: CNN-based models, RNN with attention-based models, RNN with the user and product attention-based models, Adversarial Network Models, and Hybrid Models

- Sentence-Level Sentiment Classification: Unsupervised Pre-Trained Networks (UPN), CNN, Recurrent Neural Networks, Deep Reinforcement Learning (DRL), RNN, RNN with cognition attention-based models

- Aspect-based Sentiment Analysis: Attention-based models with aspect information, attention-based models with the aspect context, RNN with attention memory model, RNN with commonsense knowledge model, CNN-based model, and Hybrid model

Do et al. (2019) presented an overview of over 40 deep learning approaches used for aspect-based sentiment analysis. They categorized papers based on the following categories: CNN, RNN, Recursive Neural Network, and Hybrid methods. Also, they presented the advantages, disadvantages, and implications for aspect-based sentiment analysis (ABSA). They concluded that deep learning and ABSA are still in the early stages, and there are four main challenges in this field, namely domain adaptation, multi-lingual application, technical requirements (labeled data and computational resources and time), and linguistic complications.

Minaee et al. (2020) reviewed more than 150 deep learning-based text classification studies and presented their strengths and contributions. 22 of these studies proposed approaches for sentiment analysis. They provided more than 40 popular text classification datasets and showed the performance of some deep learning models on popular datasets. Since they did not only focus on sentiment analysis problems, they explained other kinds of models used for other tasks such as news categorization, topic analysis, question answering (QA), and natural language inference. They explained the following deep learning models in their paper: Feed-forward neural networks, RNN-based models, CNN-based models, Capsule Neural Networks, Models with attention mechanism, Memory augmented networks, Transformers, Graph Neural Networks, Siamese Neural Networks, Hybrid models, Autoencoders, Adversarial training, and Reinforcement learning. The challenges reported in this study are new datasets for multi-lingual text classification, interpretable deep learning models, and memory-efficient models. They concluded that the use of deep learning in text classification improves the performance of the models.

Some of the highly cited deep learning-based sentiment analysis papers are shown in Table 2.

Kim (2014) performed several experiments with the CNN algorithm for sentence classification and showed that even with little parameter tuning, the CNN model that includes only one convolutional layer provides better performance than the state-of-the-art models of sentiment analysis.

Wang et al. (2016) developed an attention-based LSTM approach that can learn aspect embeddings. These aspect embeddings are used to compute the attention weights. Their models provided state-of-the-art performance on the SemEval 2014 dataset. Similarly, Pergola et al. (2019) proposed a topic-dependent attention model for sentiment classification and showed that the use of a recurrent unit and multi-task learning provides better representations for accurate sentiment analysis.

Chen et al. (2017) developed the Recurrent Attention on Memory (RAM) model and showed that their model outperforms other state-of-the-art techniques on four datasets, namely SemEval 2014 (two datasets), Twitter dataset, and Chinese news comment dataset. Multiple attentions were combined with a Recurrent Neural Network in this study.

Ma et al. (2018) incorporated a hierarchical attention mechanism to the LSTM network and also extended the LSTM cell to incorporate commonsense knowledge. They demonstrated that the combination of this new LSTM model called Sentic LSTM and the attention architecture outperforms the other models for targeted aspect-based sentiment analysis.

Chen et al. (2016) developed a hierarchical LSTM model that incorporates user and product information via different levels of attention. They showed that their model achieves significant improvements over models without user and product information on IMDB, Yelp2013, and Yelp2014 datasets.

Wehrmann et al. (2017) proposed a language-agnostic sentiment analysis model based on the CNN algorithm, and the model does not require any translation. They demonstrated that their model outperforms other models on a dataset, including tweets from four languages, namely English, German, Spanish, and Portuguese. The dataset consists of 1.6 million annotated tweets (i.e., positive, negative, and neutral) from 13 European languages.

Ebrahimi et al. (2017) presented the challenges of building a sentiment analysis platform and focused on the 2016 US presidential election. They reported that they reached the best accuracy using the CNN algorithm, and the content-related challenges were hashtags, links, and sarcasm.

Poria et al. (2018) investigated three deep learning-based architectures for multimodal sentiment analysis and created a baseline based on state-of-the-art models.

Xu et al. (2019) developed an improved word representation approach, used the weighted word vectors as input into the Bi-LSTM model, obtained the comment text representation, and applied the feedforward neural network classifier to predict the comment sentiment tendency.

Majumder et al. (2019) proposed a GRU-based Neural Network that can be trained on sarcasm or sentiment datasets. They demonstrated that multitask learning-based approaches provide better performance than standalone classifiers developed on sarcasm and sentiment datasets.

After investigating the above-mentioned survey and highly cited articles, we searched Google Scholar using our search criteria (i.e., “deep learning” and “sentiment analysis”) to reach the recent state-of-the-art deep learning-based studies published in 2020. We retrieved 112 deep learning-based sentiment analysis papers published in 2020 and extracted the applied deep learning algorithms from these papers. In the Appendix (Table 16), we present these recent deep learning-based sentiment analysis papers. In Table 3, we show the distribution of applied deep learning algorithms used in these 112 recent papers.

According to this table, the most applied algorithm is LSTM (i.e., 35.53%), and the second most used algorithm is CNN (i.e., 33.33%). The other widely used algorithms are GRU (i.e., 8.77%) and RNN (i.e., 7.89%). However, other well-known deep learning algorithms such as DNN, Recursive Neural Network (ReNN), Capsule Network (CapN), Generative Adversarial Network (GAN), Deep Q-Network, and Autoencoder have not been preferred much and were used in only a few studies. Most of the hybrid approaches also combined the CNN and LSTM algorithms and were therefore counted under these categories. As this analysis indicates, most of the recent deep learning-based studies followed the supervised machine learning approach.

2.2.3 Lexicon-based approaches

The traditional approach for sentiment analysis is the lexicon-based approach (Hemmatian and Sohrabi 2017). Lexicon-based methods scan through the documents for words that express positive or negative feelings to humans. Negative words would be ‘bad’, ‘ugly’, or ‘scary’, while positive words are, for example, ‘good’ or ‘beautiful’. The values of these words are documented in a lexicon. Words with high positive or negative values are mostly adjectives and adverbs. Sentiment analysis has been shown to be extremely dependent on the domain of interest (Vinodhini 2012). For example, analyzing movie reviews can yield very different results compared to analyzing Twitter data due to the different forms of language used. Therefore, the lexicon used for sentiment analysis needs to be adjusted according to the domain of interest. This can be a time-consuming process. However, lexicon-based methods do not require training data, which is a big advantage (Shayaa et al. 2018).
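A lexicon-based scorer can be sketched in Python as follows. The lexicon is a hypothetical toy example: the words are the ones mentioned in the text, but the numeric values are invented for illustration, and the sketch deliberately ignores negation, intensifiers, and domain adaptation.

```python
import re

# Hypothetical toy lexicon: words from the text, values invented for illustration.
LEXICON = {"good": 1.0, "beautiful": 1.5, "bad": -1.0, "ugly": -1.5, "scary": -1.0}

def lexicon_score(text, lexicon=LEXICON):
    """Sum the lexicon values of all words in the text (unknown words count 0)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(lexicon.get(token, 0.0) for token in tokens)

def lexicon_polarity(text, lexicon=LEXICON):
    """Map the summed score to a polarity label."""
    score = lexicon_score(text, lexicon)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(lexicon_polarity("A good and beautiful film"))
print(lexicon_polarity("An ugly, scary set"))
```

No training data is involved; adapting the method to another domain amounts to replacing or adjusting the lexicon entries.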

There are two main approaches to creating sentiment lexicons: dictionary-based and corpus-based. The dictionary-based approach starts with a small set of sentiment words, and iteratively expands the lexicon with synonyms and antonyms from existing dictionaries. In most cases, the dictionary-based approach works best for general purposes. Corpus-based lexicons can be tailored to specific domains. The approach starts with a list of general-purpose sentiment words and discovers other sentiment words from a domain corpus based on co-occurring word patterns (Mite-Baidal et al. 2018).
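The dictionary-based expansion described above can be sketched as follows. The thesaurus entries and the name `expand_lexicon` are hypothetical; a real system would query an existing dictionary resource instead of the toy mappings assumed here.

```python
# Hypothetical toy thesaurus (assumed for illustration only).
SYNONYMS = {
    "good": ["fine", "nice"],
    "nice": ["pleasant"],
    "bad": ["poor", "awful"],
}
ANTONYMS = {
    "good": ["bad"],
    "nice": ["nasty"],
}


def expand_lexicon(seeds, synonyms=SYNONYMS, antonyms=ANTONYMS, max_rounds=10):
    """Iteratively grow a {word: +1/-1} lexicon from seed words.

    Synonyms inherit the polarity of the known word; antonyms get the
    opposite polarity. Expansion stops when no new word is found.
    """
    lexicon = dict(seeds)
    frontier = list(seeds)
    for _ in range(max_rounds):
        next_frontier = []
        for word in frontier:
            sign = lexicon[word]
            related = [(s, sign) for s in synonyms.get(word, [])]
            related += [(a, -sign) for a in antonyms.get(word, [])]
            for other, other_sign in related:
                if other not in lexicon:  # keep the first polarity assigned
                    lexicon[other] = other_sign
                    next_frontier.append(other)
        if not next_frontier:
            break
        frontier = next_frontier
    return lexicon
```

Starting from the single seed `{"good": 1}`, the sketch reaches words such as "pleasant" (positive) and "awful" (negative) within a few rounds.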

2.2.4 Hybrid approaches

There are different hybrid approaches in the literature. Some of them aim to extend machine learning models with lexicon-based knowledge (Behera et al. 2016). The goal is to combine both methods to yield optimal results using an effective feature set of both lexicon and machine learning-based techniques (Munir Ahmad et al. 2017). This way, the deficiencies and limitations of both approaches can be overcome.

Recently, researchers focused on the integration of symbolic and subsymbolic Artificial Intelligence (AI) for sentiment analysis (Cambria et al. 2020). Machine learning (including deep learning) is considered to be a bottom-up approach and applies subsymbolic AI. This is extremely useful for exploring a huge amount of data and discovering interesting patterns in the data. Although this type of bottom-up approach works quite well for image classification tasks, it is not very effective for natural language processing tasks. For effective communication, we learn many issues such as cultural awareness and commonsense in a top-down manner instead of a bottom-up manner (Cambria et al. 2020). Therefore, these researchers applied subsymbolic AI (i.e., deep learning) to recognize patterns in text and represented them in a knowledge base using symbolic AI (i.e., logic and semantic networks). They built a new commonsense knowledge base called SenticNet for the sentiment analysis problem and concluded that coupling symbolic AI and subsymbolic AI is crucial for moving from natural language processing to natural language understanding.

Minaee et al. (2019) developed an ensemble model using the LSTM and CNN algorithms and demonstrated that this ensemble model provides better performance than the individual models.

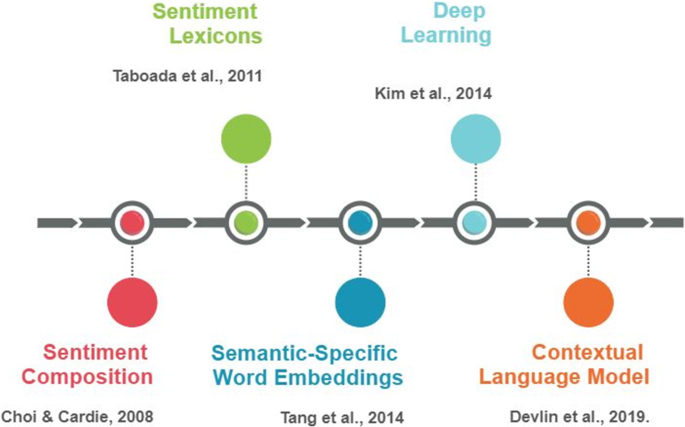

2.2.5 Milestones of sentiment analysis research

Recently, Poria et al. (2020) investigated the challenges and new research directions in sentiment analysis research. Also, they presented the key milestones of sentiment analysis for the last two decades. We adapted their timeline figure for the last decade. In Fig. 2, we present the most promising works of sentiment analysis research. For a more detailed illustration of milestones, we refer the readers to the article of Poria et al. (2020).

2.3 Levels of analysis

Sentiment analysis can be implemented at the following three levels: document, sentence, and aspect level. We elaborate on these in the next paragraphs.

2.3.1 Document-level

Document-level analysis considers the whole text document as a unit of analysis (Wang et al. 2014). It is a simplified task that presumes that the entire document originates from a single opinion holder. Document-level analysis comes with some issues, namely that there could be multiple and mixed opinions in a document expressed in many different ways, sometimes with implicit language (Akshi Kumar and Sebastian 2012). Typically, documents are analyzed on a sentence or aspect level before determining the polarity of the entire text document.

2.3.2 Sentence-level

Sentence-level analysis considers specific sentences in a text and is especially used for subjectivity classification. Text documents typically consist of sentences that either contain opinion or not. Subjectivity classification analyses individual sentences in a document to detect whether the sentence contains facts or emotions and opinions. The main goal of subjectivity classification is to exclude sentences that do not contain sentiment or opinion (Akshi Kumar and Sebastian 2012). This analysis often includes subjectivity classification as a step to either include or exclude sentences for analysis.

2.3.3 Aspect-level

Aspect-level analysis is a challenging topic in sentiment analysis. It refers to analyzing sentiments about specific entities and their aspects in a text document, not merely the overall sentiment of the document (Tun Thura Thet et al. 2010). It is also known as entity-level or feature-level analysis. Even though the general sentiment of a document may be classified as positive or negative, the opinion holder can have a divergent opinion about specific aspects of an entity (Akshi Kumar and Sebastian 2012). In order to measure aspect-level opinion, aspects of the entity need to be identified. Valdivia et al. (2017) stated that aspect-based sentiment analysis is beneficial to the business manager because customer opinions are extracted in a transparent way. They also reported that detecting ironic expressions in TripAdvisor reviews is still an open problem, and that the labeling of reviews should not rely only on user ratings because some users write positive sentences alongside negative ratings and vice versa. Poria et al. (2016) proposed a new algorithm called Sentic LDA (Latent Dirichlet Allocation), which improves the LDA algorithm with semantic similarity for aspect-based sentiment analysis. They concluded that this algorithm helps researchers move from syntactic analysis to semantic analysis in aspect-based sentiment analysis by using common-sense computing (Cambria et al. 2009) and that it improves the clustering process (Poria et al. 2016).

2.4 Popular lexicons

Several survey articles discussed the popular lexicons used in sentiment analysis. Dang et al. (2020) reported the following popular sentiment analysis lexicons in their article: Sentiment 140, Tweets Airline, Tweets Semeval, IMDB Movie Reviews (1), IMDB Movie Reviews (2), Cornell Movie Reviews, Book Reviews, and Music Reviews datasets. Habimana et al. (2020a) explained the following popular lexicons in their survey article: IMDB, IMDB2, SST-5, SST-2, Amazon, SemEval 2014-D1, SemEval 2014-D2, SemEval 2017, STS, STS-Gold, Yelp, HR (Chinese), MR, Sanders, Deutsche Bahn (German), ASTD (Arabic), YouTube, CMU-MOSI, and CMU-MOSEI. Do et al. (2019) reported the following datasets widely used in sentiment analysis papers: Customer review data, SemEval 2014, SemEval 2015, SemEval 2016, ICWSM 2010 JDPA Sentiment Corpus, Darmstadt Service Review Corpus, FiQA ABSA, and target-dependent Twitter sentiment classification dataset. Minaee et al. (2020) explained the following datasets used for sentiment analysis: Yelp, IMDB, Movie Review, SST, MPQA, Amazon, and aspect-based sentiment analysis datasets (SemEval 2014 Task-4, Twitter, and SentiHood). Researchers planning a new study are advised to consult these articles, which provide links and further details for each lexicon.

2.5 Advantages, disadvantages, and performance of the models

Several studies have been performed to compare the performance of existing models for sentiment analysis. Each model has its own advantages and weaknesses. For aspect-based sentiment analysis, Do et al. (2019) divided models into the following three categories: CNN, RNN, and Recursive Neural Networks. The advantages of CNN-based models are fast computation and the ability to extract local patterns and represent non-linear dynamics. The disadvantage of CNN-based models is the high demand for data. The advantages of RNN-based models are that they do not require a huge amount of data, they have a distributed hidden state that stores previous computations, and they require fewer parameters. The disadvantages are that they cannot capture long-term dependencies, and they select the last hidden state to represent the sentence. The advantages of Recursive Neural Networks are their simple architectures and their ability to learn tree structures. The disadvantages are that they require parsers that might be slow, and they are still at early stages. It was reported that RNN-based models provide better performance than CNN-based models, and more research is required for Recursive Neural Networks.

Yadav and Vishwakarma (2019) reported that deep learning-based models are gaining popularity for different sentiment analysis tasks. They stated that CNN followed by LSTM (an RNN algorithm) provides the highest accuracy for document-level sentiment classification, that researchers focused on RNN algorithms (particularly LSTM) for sentence-level and aspect-level sentiment classification, and that RNN models are the best-performing ones for multi-domain sentiment classification. They also discussed the merits and demerits of CNN, Recursive Neural Network (RecNN), RNN, LSTM, GRU, and DBN models.

The advantage of DBN is the ability to learn the dimension of vocabulary using different layers. The disadvantages of DBN are that they are computationally expensive and unable to remember the previous task.

The advantage of GRU is that it is computationally less expensive, it has a less complex structure, and it can capture interdependencies between sentences. The disadvantage of GRU is that it does not have a memory unit, and its performance is lower than the LSTM model on larger datasets.

The advantages of LSTM models are that they perform better than CNN, can extract sequential information, and can forget or remember things selectively. The disadvantages are that they are considerably slower, each output should be reconciled to a sentence, and they are computationally expensive.

The advantage of RNN models is that they provide better performance than CNN models, have fewer parameters, and capture long-distance dependency features. The disadvantage of RNN models is that they cannot process long sequences.

The advantage of CNN models is that they are less expensive in terms of computational complexity and faster compared to RNN, LSTM, and GRU algorithms. Also, they can discover relevant features from different parts of the word. The disadvantage of CNN models is that they cannot preserve long-term dependencies and ignore this type of long-distance feature.

The advantage of RecNN is that they are good at learning hierarchical structure and therefore, they provide better performance for NLP tasks. The disadvantage of RecNN models is that their efficiency is dramatically affected in the case of informal data that do not have grammatical rules and training can be difficult because structure changes for every sample.

Despite the excellent performance of deep learning models, there are some drawbacks. The following drawbacks are discussed by Yadav and Vishwakarma (2019):

- A huge amount of data is required to train the models, and finding these large datasets is not easy in many cases
- They work like a black box; it is hard to understand how they predict the sentiment of the text
- The performance of the models is affected by the hyperparameters, and the selection of these hyperparameters is very challenging
- Training time is very long, and most of the time they require GPU support and large RAM

Yadav and Vishwakarma (2019) performed experiments to compare the execution time and accuracy of several deep learning algorithms. They reported that the LSTM algorithm and its variations such as Bi-LSTM and GRU require long training and execution time compared to other deep learning models. However, these LSTM-based algorithms achieve better performance. Therefore, there is a trade-off between time and accuracy when selecting the deep learning model.

3 Methodology

In this section, the methodology of our tertiary study is presented. This study can be considered as a systematic review study that targets secondary studies on sentiment analysis, which is a widely researched topic. There are several reviews and mapping studies available on sentiment analysis in the literature. In this section, we focus on synthesizing the results of these secondary studies. Hence, we conduct a tertiary study. The study design is based on the systematic literature review (SLR) protocol suggested by Kitchenham and Charters (2007) and the format followed by the tertiary study papers of Curcio et al. (2019); Raatikainen et al. (2019). This study reviews two types of secondary studies:

- SLR: These studies are performed to aggregate results related to specific research questions.
- SMS: These studies aim to find and classify primary studies in a specific research topic. This method is more explorative compared to the SLR and is used to identify available literature prior to undertaking an SLR.

Both are considered secondary studies as they review primary studies. A pragmatic comparison between SLR and SMS is discussed by Kitchenham et al. (2011). Three main phases for conducting this research are planning, conducting, and reporting the review (Kitchenham 2004). Planning refers to identifying the need for the review and developing the review protocol. The goal of this tertiary study is to gather a broad overview of the current state of the art in sentiment analysis and to identify open problems and challenges in the field.

3.1 Research questions

The following research questions have been defined for this study:

- RQ1 What are the adopted features (input/output) in sentiment analysis?
- RQ2 What are the adopted approaches in sentiment analysis?
- RQ3 What domains have been addressed in the adopted data sets?
- RQ4 What are the challenges and open problems with respect to sentiment analysis?

3.2 Search process

This section provides insight into the process of determining secondary studies to include. Not all databases are equally relevant to this research topic. Databases that are used to identify secondary studies are adopted from the search strategy of secondary studies on sentiment analysis (Genc-Nayebi and Abran 2017; Hemmatian and Sohrabi 2017; Kumar and Jaiswal 2020; Sharma and Dutta 2018). The following databases are included in this study: IEEE, Science Direct, ACM, Springer, Wiley, and Scopus. To find the relevant literature, databases are searched for the title, abstract, and keywords based on the following query:

(“sentiment analysis” OR “sentiment classification” OR “opinion mining”) AND (“SLR” OR “systematic literature review” OR “systematic mapping” OR “mapping study”)

This query results in 43 hits. As stated before, this study only considers systematic literature reviews and systematic mapping studies since they are considered of higher quality and more in-depth compared to survey articles. Inclusion and exclusion criteria are formulated, as shown in Table 4.

All secondary studies are analyzed and classified according to the inclusion and exclusion criteria in Table 4. After this process, 16 secondary studies are selected.

3.3 Quality assessment

The confidence placed in the secondary studies depends on the quality assessment of the articles. For a tertiary study, the quality assessment is especially important (Goulão et al. 2016). The DARE criteria proposed by the York University Centre for Reviews and Dissemination (CRD) and adopted in this study are often used in the context of software engineering (Goulão et al. 2016; Rios et al. 2018; Curcio et al. 2019; Kitchenham et al. 2010a). The criteria are based on four questions (CQs), as shown in Table 5. For each selected article, the criteria are scored on a three-point scale, as described in Table 6, adopted from Kitchenham et al. (2010a, b).

The scoring procedure is Yes = 1, Partial = 0.5, and No = 0. The assessment is conducted by the researchers. The results of the quality assessment are shown in Table 7. Two studies are excluded based on the results, leaving 14 studies for analysis.
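The scoring procedure can be illustrated with a short Python sketch that sums the three-point scores over the four criteria questions. The function names and the `threshold` parameter are assumptions made for illustration; the cut-off actually used to exclude studies is not stated here, so it is left as an explicit argument.

```python
# Scoring scale stated in the text: Yes = 1, Partial = 0.5, No = 0.
SCALE = {"Yes": 1.0, "Partial": 0.5, "No": 0.0}


def quality_score(answers):
    """Sum the scores of the answers to the four criteria questions."""
    if len(answers) != 4:
        raise ValueError("expected one answer per criterion (four in total)")
    return sum(SCALE[answer] for answer in answers)


def passes(answers, threshold):
    """Return True if the total score reaches the threshold.

    The threshold is a hypothetical parameter; the text does not state
    the cut-off that was used to exclude studies.
    """
    return quality_score(answers) >= threshold
```

For example, a study answering Yes, Partial, No, Yes would obtain a total score of 2.5 out of a maximum of 4.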

3.4 Additional data

In order to provide an overview of the selected secondary studies, Table 8 shows the following data extracted from the articles: Research focus, number of primary studies included in the review, year of publication, paper type (conference/journal/book chapter), and source. In addition, an overview of the research questions of the secondary studies is provided, as shown in Table 9. The reference numbers in Table 8 are used throughout the rest of this paper.

4 Results

This section addresses the results of the research questions derived from 14 secondary studies. For each research question, tables with aggregate results and in-depth descriptions and interpretations are presented. The selected secondary studies discuss specific sentiment analysis tasks. It is important to note that different tasks in sentiment analysis require different features and approaches. Therefore, a brief overview of each paper is presented. Note that in-depth analysis and synthesis of the articles are presented later in this section.

1. Genc-Nayebi and Abran (2017) identify mobile app store opinion mining techniques. Their paper is mainly focused on statistical data mining techniques based on manual classification and correlation analysis. Some machine learning algorithms are discussed in the context of cross-domain analysis and app aspect-extraction. Some interesting challenges in sentiment analysis are proposed.
2. Al-Moslmi et al. (2017) review cross-domain sentiment analysis. Specific algorithms for cross-domain sentiment analysis are described.
3. Qazi et al. (2017) research opinion types and sentiment analysis. Opinion types are classified into the following three categories: regular, comparative, and suggestive. Several supervised machine learning techniques are used. Sentiment classification algorithms are mapped.
4. Ahmed Ibrahim and Salim (2013) perform sentiment analysis of Arabic tweets. Their study is focused on mapping features and techniques used for general sentiment analysis.
5. Shayaa et al. (2018) research the big data approach to sentiment analysis. A solid overview of machine learning methods and challenges is presented.
6. A. Kumar and Sharma (2017) research sentiment analysis for government intelligence. Techniques and datasets are mapped.
7. M. Ahmad et al. (2018) focus their research on SVM classification. SVM is the most used machine learning technique in sentiment classification.
8. A. Kumar and Jaiswal (2020) discuss soft computing techniques for sentiment analysis on Twitter. Soft computing techniques include machine learning techniques. Deep learning (CNN in particular) is mentioned as upcoming in recent articles. KPIs are described thoroughly.
9. A. Kumar and Garg (2019) research context-based sentiment analysis. They stress the importance of subjectivity in sentiment analysis and show that deep learning offers opportunities for context-based sentiment analysis.
10. Kasmuri and Basiron (2017) research subjectivity analysis. Its purpose is to determine whether the text is subjective or objective with objective clues. Subjectivity analysis is a classification problem, and thus, machine learning algorithms are widely used.
11. Madhala et al. (2018) research customer emotion analysis. They review articles that classify emotions into 4 to 51 different classes.
12. Mite-Baidal et al. (2018) research sentiment analysis in the education domain. E-learning is upcoming, and due to its online nature, lots of review data is generated on MOOC forums and social media.
13. Salah et al. (2019) research social media sentiment analysis. Twitter data is mainly used because of its high dimensionality (e.g., retweets, location, number of user followers) and structure.
14. De Oliveira Lima et al. (2018) research opinion mining of hotel reviews specifically aimed at sustainability practices aspects. Limited information on used features is available.

The following sections dive into the different models that are used in sentiment analysis, including adopted features, approaches, and datasets.

4.1 RQ1 “What are the adopted features in sentiment analysis?”

Table 10 depicts the common input and output features that articles present for the sentiment analysis approach. Checkmarks indicate that the features are explicitly discussed in the referred article. Traditional approaches commonly use the Bag-Of-Words (BOW) method. BOW counts the words, referred to as n-grams, in the text and creates a sparse vector with 1s for present words and 0s for absent words. These vectors are used as input to machine learning models. N-grams are sets of words that occur next to each other and are combined into one feature. This way, the order of words is maintained when the text is vectorized. Part-Of-Speech (POS) tags provide feature tags for similar words with a different part of speech in the context. The term frequency-inverse document frequency (TF-IDF) method highlights words or word pairs that often occur in one document but are low in frequency in the entire text corpus. Negation is an important feature to include in lexicon-based approaches. Negation means contradicting or denying something, which can flip the polarity of an opinion or sentiment.
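The input features described above can be made concrete with a small pure-Python sketch covering n-grams, a binary bag-of-words vector, and one common TF-IDF variant. The function names are hypothetical, and the weighting formula tf · log(N/df) is an assumption chosen for simplicity; libraries typically add smoothing and normalization.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as space-joined strings) in token order."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def binary_bow(tokens, vocabulary):
    """Binary bag-of-words vector: 1 if the vocabulary term occurs, else 0."""
    present = set(tokens)
    return [1 if term in present else 0 for term in vocabulary]


def tf_idf(documents):
    """Compute tf * log(N / df) for every term of every tokenized document.

    This is one common TF-IDF variant; libraries often add smoothing.
    """
    n_docs = len(documents)
    doc_freq = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights
```

In this sketch, a term that appears in every document receives a weight of zero, while a term confined to a single document is weighted up. The bigram "not good" also shows how n-grams keep a negation attached to the word it modifies.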

Word embeddings are often used as feature learning techniques in deep learning models. Word embeddings are dense vectors with real numbers for a word or sentence in the text based on the context of the word in the text corpus. This approach, although considered promising, is only discussed to a limited extent in the selected articles.
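To illustrate what a dense representation looks like, the sketch below averages toy word vectors into a sentence vector and compares vectors by cosine similarity. The three-dimensional vectors are invented for illustration only; real embeddings are learned from a text corpus and usually have hundreds of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings (real ones are learned from a corpus).
TOY_EMBEDDINGS = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.0],
    "bad": [-0.9, 0.1, 0.0],
}


def sentence_vector(tokens, embeddings=TOY_EMBEDDINGS):
    """Average the vectors of the known tokens (zero vector if none)."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    dim = len(next(iter(embeddings.values())))
    if not vectors:
        return [0.0] * dim
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0
```

With these assumed vectors, "good" is closer to "great" than to "bad", which is the kind of contextual similarity that sparse bag-of-words vectors cannot express.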

Output variables differ per sentiment analysis task. Output classes of the identified secondary studies are polarity, subjectivity, emotions classes, or spam identification. Polarity indicates the extent to which the input is considered positive or negative in sentiment. In most cases, the output is classified in a binary way, either positive or negative. Some models include a neutral class as well. Multiple classes of polarity are shown to drastically reduce performance (Al-Moslmi et al. 2017) and are, therefore, not frequently used. One study (Madhala et al. 2018) focuses specifically on emotion classification, with up to 51 different classes of emotions. Some studies (Ahmed Ibrahim and Salim 2013; Kasmuri and Basiron 2017) include subjectivity analysis as part of sentiment analysis. Finally, spam detection is an important task in sentiment analysis, referring to extracting illegitimate means for a review. Examples of spam are untruthful opinions, reviews on the brand instead of on the product, and non-reviews like advertisements and random questions or text (Jindal and Liu 2008).

A clear pattern exists in the use of input and output features. Traditional machine learning models commonly use unigrams and n-grams as input. Variable features are TF-IDF values and POS-tags. Not every feature extraction method is as effective in differing domains. Combinations of input features are often made to reach better performance. Word embeddings are upcoming input features. The most recent articles (Kumar and Garg 2019; Kumar and Jaiswal 2020) explicitly discuss them. Text classification with word embeddings as input is considered a promising technique that is often combined with deep learning methods like recurrent neural networks. The output shows a similar pattern with common and variable features. The common feature is polarity, and variable output features include emotions, subjectivity, and spam type.

4.2 RQ2 “What are the adopted approaches in sentiment analysis?”

Different tasks in sentiment analysis require different approaches. Therefore, it is important to note which task requires which approach. Table 11 shows the categories that are used throughout different sentiment analysis tasks.

Table 12 depicts the commonly used approaches for sentiment analysis per selected paper. Machine learning algorithms, including deep learning (DL), unsupervised learning, and ensemble learning, are widely used for sentiment analysis tasks, as well as lexicon-based and hybrid methods. Checkmarks indicate that approaches are explicitly discussed in the referred article. Results are divided into five categories with specific subcategories. Each category, with its corresponding subcategories, is described as follows:

4.2.1 Deep learning

Deep learning models are complex architectures with multiple layers of neural networks that progressively extract high-level features from the input. CNN uses convolutional filters to recognize patterns in data. CNN is widely used in image recognition and, to a lesser extent, in the field of NLP. RNN is designed for recognizing sequential patterns. RNN is especially powerful in cases where context is critical. For this reason, RNN is very promising in sentiment analysis. LSTM networks are a special kind of RNN that is capable of learning long-term context and dependencies. LSTM is especially powerful in NLP, where long-term dependencies are often important. The discussed deep learning algorithms are considered promising techniques that are able to boost the performance of NLP tasks (Socher et al. 2013).

4.2.2 Traditional machine learning

Traditional ML algorithms are still widely used in all kinds of sentiment analysis tasks, including sentiment classification. While deep learning is a promising field, in many cases, traditional ML performs sufficiently well or even better for a specific task compared to deep learning methods, usually on smaller datasets. The traditional supervised machine learning algorithms are Support Vector Machines (SVM), Naive Bayes (NB), Neural Networks (NN), Logistic Regression (LogR), Maximum Entropy (ME), k-Nearest Neighbor (kNN), Random Forest (RF), and Decision Trees (DT).
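As one example of a traditional supervised classifier, the following sketch implements a multinomial Naive Bayes model with add-one smoothing in pure Python. The class name and interface are assumptions made for illustration, not the implementation used in any of the reviewed studies.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, documents, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for tokens, label in zip(documents, labels):
            self.word_counts[label].update(tokens)
        self.vocabulary = {t for doc in documents for t in doc}
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_log_prob = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocabulary)
            log_prob = math.log(n_docs / total_docs)  # class prior
            for token in tokens:
                if token in self.vocabulary:  # ignore unseen words
                    log_prob += math.log((counts[token] + 1) / denom)
            if log_prob > best_log_prob:
                best_label, best_log_prob = label, log_prob
        return best_label
```

Trained on a handful of labeled, tokenized reviews, the model assigns a new review to the class under which its words are most probable.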

4.2.3 Lexicon-based

Lexicon-based learning is a traditional approach to sentiment analysis. Lexicon-based methods scan through the documents for words that express positive or negative feelings to humans. Words are defined in a lexicon beforehand, so no learning data is required for this approach.

4.2.4 Hybrid models

In the context of sentiment classification, hybrid models combine the lexicon-based approach with machine learning techniques (Behera et al. 2016) to create a lexicon-enhanced classifier. Lexicons are used for defining domain-related features that are used as input for a machine learning classifier.
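A lexicon-enhanced feature vector of this kind can be sketched as follows. The word sets and the function name `hybrid_features` are hypothetical; the point is only that lexicon-derived counts are appended to ordinary bag-of-words features before a classifier is trained.

```python
# Hypothetical toy lexicon (assumed for illustration).
POSITIVE = {"good", "beautiful", "great"}
NEGATIVE = {"bad", "ugly", "scary"}


def hybrid_features(tokens, vocabulary):
    """Concatenate bag-of-words counts with two lexicon-derived counts."""
    bow = [tokens.count(term) for term in vocabulary]
    n_pos = sum(1 for t in tokens if t in POSITIVE)
    n_neg = sum(1 for t in tokens if t in NEGATIVE)
    return bow + [n_pos, n_neg]
```

The resulting vector could be passed to any machine learning classifier, so domain knowledge encoded in the lexicon becomes available to the learned model.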

4.2.5 Ensemble classification

The ensemble classification approach adopts multiple learning algorithms to obtain better performance (Behera et al. 2016). The three main types of ensemble classification methods are bagging (bootstrap aggregating), boosting, and stacking. The bagging method independently learns homogeneous algorithms with data points randomly picked from the training set, following a deterministic averaging process. Boosting learns homogeneous algorithms in a sequential and adaptive way before following an averaging process. Stacking learns heterogeneous classifiers in parallel and combines them to predict an output. An overview of ensemble classifiers is shown in Table 13.
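Bagging can be sketched in a few lines of Python. In this sketch, the `train` argument is a placeholder for any base learner, and majority voting is used as the classification counterpart of the averaging step; both are assumptions for illustration rather than a specific method from the reviewed studies.

```python
import random
from collections import Counter


def majority_vote(predictions):
    """Return the most frequent label among the base predictions."""
    return Counter(predictions).most_common(1)[0][0]


def bagging_fit(train, data, n_models=5, seed=0):
    """Train n_models base learners, each on a bootstrap sample of data.

    `train` is any function mapping a list of (features, label) pairs
    to a predictor function; it is a placeholder for a real learner.
    """
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        sample = [rng.choice(data) for _ in data]  # sample with replacement
        models.append(train(sample))
    return models


def bagging_predict(models, features):
    """Combine the base learners by majority vote."""
    return majority_vote([model(features) for model in models])
```

Boosting would differ by training the learners sequentially on reweighted data, and stacking by feeding the outputs of different learner types into a further model.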

Support Vector Machines (SVM) is the dominant algorithm in the field of sentiment classification. All selected papers include SVM for classification purposes, and in most cases, this technique yields the best performance. Naive Bayes is the second most used algorithm and is praised for its high performance despite the simplicity of the technique. Besides these two dominant algorithms, methods like NN, LogR, ME, kNN, RF, and DT are used throughout different tasks of sentiment analysis. A popular unsupervised approach for aspect extraction is LDA. Hybrid approaches to sentiment classification have been effective by using domain-specific knowledge to create extra features that enhance the performance of the model. Ensemble and hybrid methods often improve the performance and reliability of predictions.

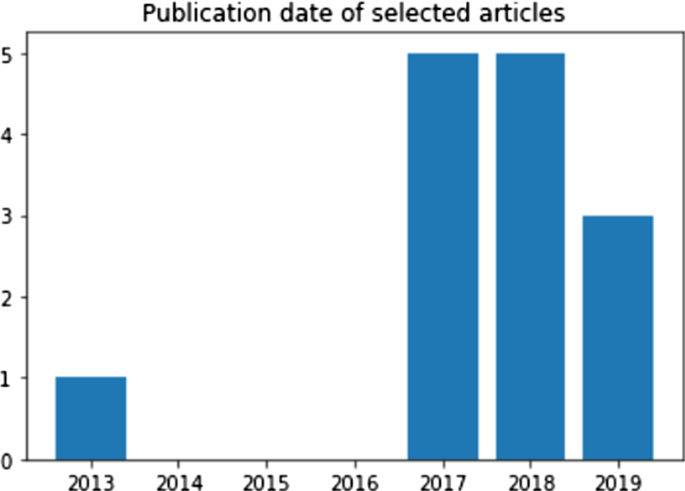

Deep learning algorithms are rising techniques in sentiment analysis. Especially, RNNs and the more complex RNN architecture, LSTM, are increasing in popularity. Even though deep learning is promising for increasing the performance of NLP and sentiment analysis models (Al-Moslmi et al. 2017; Kumar and Garg 2019; Kumar and Jaiswal 2020; Socher et al. 2013), the selected papers only discuss deep learning to a limited extent. The papers that discuss deep learning algorithms are recent papers published in 2018 and 2019, which stresses that sentiment analysis is a timely research subject and that the state-of-the-art is evolving rapidly. Figure 3 shows the year-wise distribution of selected articles. Except for one study from 2013, all selected studies are published in 2017, 2018, and 2019.

4.3 RQ3 “What domains have been addressed in the adopted data sets?”

Datasets for sentiment analysis are typically user-generated textual content. The text differs a lot depending on the domain and platform that the content is derived from. For example, social media data is usually very subjective and full of informal speech, whereas news article websites are mostly objective and formally written. Twitter data is limited to a certain number of characters and contains hashtags and references, whereas product review websites take a specific product into account and describe this in-depth. ML models trained on a specific domain provide poor performance when tested on a dataset from a different domain. Different domains have different language use and, therefore, require different methods for analysis. Table 14 depicts the domains of the adopted datasets per study. Checkmarks indicate that datasets from the domain are explicitly mentioned in the referred article.

Social media data is the most widely used source of data. This data is usually easy to obtain through APIs. Especially, tweets are popular because they are relatively similar in format (e.g., a limited number of characters). Twitter has an API where tweets can be scraped on specific subjects, time range, hashtags, etc. Tweets contain worldwide real-time information on entities. Furthermore, scraped tweets contain information about the location, number of retweets, number of likes, and much more. Some reviewed articles focus specifically on Twitter data (Ahmed Ibrahim and Salim 2013; Kumar and Jaiswal 2020). Other social media platforms like Facebook and Tumblr are also used for sentiment analysis.

Reviews of products, hotels, and movies are also commonly used for text classification models. Reviews are usually combined with a star rating (i.e., label), which makes them suitable for machine learning models. Star ratings indicate polarity. This way, no labor-intensive manual labeling process or predefined lexicon is required.
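Deriving labels from star ratings can be sketched as a simple mapping. The cut-off values below are assumptions for illustration; studies differ in how they treat middle ratings, and some discard them entirely.

```python
def rating_to_polarity(stars, negative_max=2, positive_min=4):
    """Map a 1-5 star rating to a polarity label.

    The cut-offs are assumptions for illustration; studies differ in
    how they treat middle ratings.
    """
    if not 1 <= stars <= 5:
        raise ValueError("expected a rating between 1 and 5")
    if stars <= negative_max:
        return "negative"
    if stars >= positive_min:
        return "positive"
    return "neutral"
```

Applying such a mapping to a collection of reviews yields a labeled training set without manual annotation.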

4.4 RQ4 “What are the challenges and open problems with respect to sentiment analysis?”

All of the 14 selected papers include challenges and open problems in sentiment analysis. Table 15 shows the challenges that are explicitly described in the papers. These challenges are categorized and sorted by the number of selected papers that explicitly mention the challenge.

Domain dependency is a well-known challenge in sentiment analysis; most models depend on the domain in which they were built. Linguistic dependency is the second most stated and well-known challenge, and it originates from the same deeper problem. Specific text corpora per domain or language need to be available for the optimal performance of the ML model. Some studies investigate multi-lingual or multi-domain models.

Most papers use English text corpora. Spanish and Chinese are the second most used languages in sentiment analysis. Limited literature is available in other languages. Some studies attempted to create a multi-language model (Al-Moslmi et al. 2017), but this is still a challenging task (Kumar and Garg 2019; Qazi et al. 2017). Multi-lingual systems are an interesting topic for further research.

Deep learning is a promising but complex technique that can retain syntactic structures and word order. It still poses some challenges and is not widely researched in the selected articles. Opinion spam or fake review detection is a prominent issue in sentiment analysis: the internet has become an integral part of life, and false information spreads just as fast as accurate information on the web (Vosoughi et al. 2018). Another major challenge is multi-class classification. In general, more output classes in a classifier reduce the performance (Al-Moslmi et al. 2017). Multiple polarity classes and multiple classes of emotions (Madhala et al. 2018) have been shown to dramatically reduce the performance of the model.

Further challenges are incomplete information, implicit language, typos, slang, and all other kinds of inconsistencies in language use. Combining text with corresponding pictures, audio, and video is also challenging.

5 Discussion

The goal of this study is to present an overview of the current application of machine learning models and corresponding challenges in sentiment analysis. This is done by critically analyzing the selected secondary studies and extracting the relevant data for the predefined research questions. This tertiary study follows the guidelines proposed by Kitchenham and Charters (2007) for conducting systematic literature reviews. The study initially selected 16 secondary studies. After the quality assessment, 14 secondary papers remained for data extraction. The research methodology is transparent and designed in such a way that it can be reproduced by other researchers. Like any secondary study, this tertiary study has some limitations.

The SLRs included in this study each have a specific research focus within sentiment analysis. Even though the methodology of the 14 secondary studies is similar, the documentation of techniques and methods differs considerably. In addition, some SLR papers are more comprehensive than others. This made the data extraction process harder and prone to mistakes. Another limitation concerns the selection process. The criteria for inclusion are restricted to SLR and SMS papers. Some other studies chose to include non-systematic literature reviews as well to complement results, but we did not include traditional survey papers because they do not systematically synthesize the papers in a field.

The first threat to validity is related to the inclusion criteria for methods in research questions. Checkmarks in the tables of RQ2, RQ3, and RQ4 are placed when something is explicitly mentioned in the referred paper. The included secondary studies have their specific research focus with different sentiment analysis tasks and corresponding machine learning approaches. For instance, Kasmuri and Basiron (2017) discuss subjectivity classification, which typically uses different approaches compared to other sentiment analysis tasks. This variation in research focus influences the checkmarks placed in the tables.

Another threat related to inclusion criteria is that some secondary studies include more papers than others. For example, Kumar and Sharma (2017) included 194 primary studies, whereas Mite-Baidal et al. (2018) included only eight. Papers that include more primary articles are likely to mention a wider range of techniques and challenges, and thus receive more checkmarks in the tables than papers that include fewer primary articles.

Lastly, this tertiary study only considers the selected secondary papers and does not consult the primary papers selected by the secondary papers. If any mistakes are made in the documentation of results in the secondary articles, these mistakes will be reflected in this study as well.

6 Conclusion and future work

This paper presents the results of a tertiary study on sentiment analysis methods, in which we aimed to highlight the adopted features (input/output), the adopted approaches, the adopted data sets, and the challenges with respect to sentiment analysis. The answers to the research questions were derived from an in-depth analysis of the secondary studies.

Various input and output features could be identified. Interestingly, some features appeared to be described in all the secondary studies, while other features were more specific to a selected set of secondary studies. The results further indicate that sentiment analysis has been applied in various domains, among which social media is the most popular. Also, the study showed that different domains require the use of different techniques.

There also seems to be a trend towards using more complex deep learning techniques, since they can detect more complex patterns in text and perform particularly well with larger datasets. In some use cases, such as advertising, the slight improvements in performance that can be obtained through deep learning can have a great impact. However, it should be noted that traditional machine learning models are less computationally expensive and perform sufficiently well for sentiment analysis tasks. They are widely praised for their performance and efficiency.

This study showed that the most prominent challenges in sentiment analysis are domain and language dependency. Specific text corpora are required for differing languages and domains of interest. Attempts at cross-domain and multi-lingual sentiment analysis models have been made, but this challenging task should be explored further. Other prominent challenges are opinion spam detection and the application of deep learning for sentiment analysis tasks. Overall, the study shows that sentiment analysis is a timely and important research topic. Conducting a tertiary study provided additional value that could not be derived from any single secondary study.

Deep learning-based survey papers have mainly discussed the following future directions and challenges: new datasets are required for more challenging tasks, common sense knowledge must be modeled, interpretable deep learning-based models must be developed, and memory-efficient models are required (Minaee et al. 2020). Domain adaptation techniques are needed, multi-lingual applications should be addressed, technical requirements such as the need for a huge amount of labeled data must be considered, and linguistic complications must be investigated (Do et al. 2019). Popular deep learning techniques such as deep reinforcement learning and generative adversarial networks can be evaluated to solve some challenging tasks, the advantages of the BERT algorithm can be considered, language structures (e.g., slang) can be investigated in detail, dynamic sentiment analysis can be studied, and sentiment analysis for heterogeneous data can be implemented (Habimana et al. 2020a). Dependency trees in recursive neural networks can be investigated, domain adaptation can be analyzed in detail, and linguistic-subjective phenomena (e.g., irony and sarcasm) can be studied (Rojas-Barahona 2016). Different applications of sentiment analysis (e.g., medical domain and security screening of employees) can be implemented, and transfer learning approaches can be analyzed for sentiment classification (Yadav and Vishwakarma 2019). Comparative studies should be extended with new approaches and new datasets, and hybrid approaches must be developed to reduce computational cost and improve performance (Dang et al. 2020).

References

Abid F, Li C, Alam M (2020) Multi-source social media data sentiment analysis using bidirectional recurrent convolutional neural networks. Comput Commun 157:102–115

Ahmad M, Aftab S, Ali I, Hameed N (2017) Hybrid tools and techniques for sentiment analysis: a review 8(4):7

Ahmad M, Aftab S, Bashir MS, Hameed N (2018) Sentiment analysis using SVM: a systematic literature review. Int J Adv Comput Sci Appl 9(2):182–188

Ahmed Ibrahim M, Salim N (2013) Opinion analysis for Twitter and Arabic tweets: a systematic literature review. J Theor Appl Inf Technol 56(3):338–348

Alam M, Abid F, Guangpei C, Yunrong LV (2020) Social media sentiment analysis through parallel dilated convolutional neural network for smart city applications. Comput Commun 154:129–137

Alarifi A, Tolba A, Al-Makhadmeh Z, Said W (2020) A big data approach to sentiment analysis using greedy feature selection with cat swarm optimization-based long short-term memory neural networks. J Supercomput 76(6):4414–4429

Alexandridis G, Michalakis K, Aliprantis J, Polydoras P, Tsantilas P, Caridakis G (2020) A deep learning approach to aspect-based sentiment prediction. In: IFIP International conference on artificial intelligence applications and innovations. Springer, Cham, pp 397–408

Al-Moslmi T, Omar N, Abdullah S, Albared M (2017) Approaches to cross-domain sentiment analysis: a systematic literature review. IEEE Access 5:16173–16192

Almotairi M (2009) A framework for successful CRM implementation. In: European and Mediterranean conference on information systems. pp 1–14

Aslam A, Qamar U, Saqib P, Ayesha R, Qadeer A (2020) A novel framework for sentiment analysis using deep learning. In: 2020 22nd International conference on advanced communication technology (ICACT). IEEE, pp 525–529

Basiri ME, Abdar M, Cifci MA, Nemati S, Acharya UR (2020) A novel method for sentiment classification of drug reviews using fusion of deep and machine learning techniques. Knowl-Based Syst 198:1–19

Becker JU, Greve G, Albers S (2009) The impact of technological and organizational implementation of CRM on customer acquisition, maintenance, and retention. Int J Res Mark 26(3):207–215

Behera RN, Manan R, Dash S (2016) Ensemble based hybrid machine learning approach for sentiment classification-a review. Int J Comput Appl 146(6):31–36

Beseiso M, Elmousalami H (2020) Subword attentive model for Arabic sentiment analysis: a deep learning approach. ACM Trans Asian Low-Resour Lang Inf Process (TALLIP) 19(2):1–17

Blum A, Mitchell T (1998) Combining labeled and unlabeled data with co-training. In: Proceedings of the eleventh annual conference on computational learning theory (COLT '98). pp 92–100

Bondielli A, Marcelloni F (2019) A survey on fake news and rumour detection techniques. Inf Sci 497:38–55

Budgen D, Brereton P, Drummond S, Williams N (2018) Reporting systematic reviews: some lessons from a tertiary study. Inf Softw Technol 95:62–74

Cadavid H, Andrikopoulos V, Avgeriou P (2020) Architecting systems of systems: a tertiary study. Inf Softw Technol 118(106202):1–18

Cai Y, Huang Q, Lin Z, Xu J, Chen Z, Li Q (2020) Recurrent neural network with pooling operation and attention mechanism for sentiment analysis: a multi-task learning approach. Knowl-Based Syst 203(105856):1–12

Cambria E (2016) Affective computing and sentiment analysis. IEEE Intell Syst 31(2):102–107

Cambria E, Hussain A, Havasi C, Eckl C (2009) Common sense computing: from the society of mind to digital intuition and beyond. In: European workshop on biometrics and identity management. Springer, Berlin, Heidelberg, pp 252–259

Cambria E, Poria S, Gelbukh A, Thelwall M (2017) Sentiment analysis is a big suitcase. IEEE Intell Syst 32(6):74–80

Cambria E, Li Y, Xing FZ, Poria S, Kwok K (2020) SenticNet 6: ensemble application of symbolic and subsymbolic AI for sentiment analysis. In: Proceedings of the 29th ACM International conference on information & knowledge management. pp 105–114

Can EF, Ezen-Can A, Can F (2018) Multi-lingual sentiment analysis: an RNN-based framework for limited data. In: Proceedings of ACM SIGIR 2018 workshop on learning from limited or noisy data, Ann Arbor

Catal C, Mishra D (2013) Test case prioritization: a systematic mapping study. Softw Qual J 21(3):445–478

Chandra Y, Jana A (2020) Sentiment analysis using machine learning and deep learning. In: 2020 7th International conference on computing for sustainable global development (INDIACom). IEEE, pp 1–4

Chapelle O, Zien A (2005) Semi-supervised classification by low density separation. In: AISTATS vol 2005, pp 57–64

Che S, Li X (2020) HCI with DEEP learning for sentiment analysis of corporate social responsibility report. Curr Psychol. https://doi.org/10.1007/s12144-020-00789-y

Chen IJ, Popovich K (2003) Understanding customer relationship management (CRM). Bus Process Manag J 9(5):672–688. https://doi.org/10.1108/14637150310496758

Chen L, Chen G, Wang F (2015) Recommender systems based on user reviews: the state of the art. User Model User-Adap Inter 25(2):99–154. https://doi.org/10.1007/s11257-015-9155-5

Chen H, Sun M, Tu C, Lin Y, Liu Z (2016) Neural sentiment classification with user and product attention. In: Proceedings of the 2016 conference on empirical methods in natural language processing. pp 1650–1659

Chen P, Sun Z, Bing L, Yang W (2017) Recurrent attention network on memory for aspect sentiment analysis. In: Proceedings of the 2017 conference on empirical methods in natural language processing. pp 452–461

Chen H, Liu J, Lv Y, Li MH, Liu M, Zheng Q (2018) Semi-supervised clue fusion for spammer detection in Sina Weibo. Inf Fusion 44:22–32. https://doi.org/10.1016/j.inffus.2017.11.002

Cheng Y, Yao L, Xiang G, Zhang G, Tang T, Zhong L (2020) Text sentiment orientation analysis based on multi-channel CNN and bidirectional GRU with attention mechanism. IEEE Access 8:134964–134975

Choi Y, Cardie C (2008) Learning with compositional semantics as structural inference for subsentential sentiment analysis. In: Proceedings of the 2008 conference on empirical methods in natural language processing. pp 793–801

Colón-Ruiz C, Segura-Bedmar I (2020) Comparing deep learning architectures for sentiment analysis on drug reviews. J Biomed Inform 110(103539):1–11