Abstract

We study coagulation equations under non-equilibrium conditions which are induced by the addition of a source term for small cluster sizes. We consider both discrete and continuous coagulation equations, and allow for a large class of coagulation rate kernels, with the main restriction being boundedness from above and below by certain weight functions. The weight functions depend on two power law parameters, and the assumptions cover, in particular, the commonly used free molecular and diffusion limited aggregation coagulation kernels. Our main result shows that the two weight function parameters already determine whether there exists a stationary solution under the presence of a source term. In particular, we find that the diffusive kernel allows for the existence of stationary solutions while there cannot be any such solutions for the free molecular kernel. The argument to prove the non-existence of solutions relies on a novel power law lower bound, valid in the appropriate parameter regime, for the decay of stationary solutions with a constant flux. We obtain optimal lower and upper estimates of the solutions for large cluster sizes, and prove that the solutions of the discrete model behave asymptotically as solutions of the continuous model.


1 Introduction

Atmospheric cluster formation processes [22], where certain species of the gas molecules (called monomers) can stick together and eventually produce macroscopic particles, are an important component in cloud formation and radiation scattering. These cluster formation processes are modelled with the so-called General Dynamic Equation (GDE) [22]. Under atmospheric conditions, the particle clusters are often aggregates of various molecular species, formed by collisions of several different monomer types, cf. [36, 44] for more details and examples. Accordingly, in the GDE one needs to label clusters not only by the total number of monomers in them but also by counting each monomer type. This results in multicomponent labels for the concentration vector, with nonlinear interactions between the components. Another feature of the GDE, which has been largely absent from previous mathematical work on coagulation equations, is the presence of an external monomer source term. Such sources are nevertheless important for atmospheric phenomena; for more details about their chemical and physical origin and relevance we refer for instance to [16, 27].

In this work, we focus on the effect the addition of a source term has on solutions of standard one-component coagulation equations. This is by no means to imply that multicomponent coagulation equations would not have interesting new mathematical features but these will be the focus of a separate work. Here, we consider only one species of monomers, and we are interested in the distribution of the concentration of clusters formed out of these monomers. Let \(n_\alpha \ge 0\) denote the concentration of clusters with \(\alpha \in {{\mathbb {N}}}\) monomers.

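Considering the regime in which the precise spatial structure and loss of particles by deposition are not important, the GDE yields the following nonlinear evolution equation for the concentrations \(n_\alpha \):

\[ \partial _t n_\alpha = \frac{1}{2}\sum _{\beta <\alpha } K_{\alpha -\beta ,\beta }\, n_{\alpha -\beta }\, n_\beta - n_\alpha \sum _{\beta >0} K_{\alpha ,\beta }\, n_\beta + \sum _{\beta >0} \Gamma _{\alpha +\beta ,\alpha }\, n_{\alpha +\beta } - \frac{1}{2}\sum _{\beta <\alpha } \Gamma _{\alpha ,\beta }\, n_\alpha + s_\alpha . \tag{1.1} \]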

The coefficients \(K_{\alpha ,\beta }\) describe the coagulation rate joining two clusters of sizes \(\alpha \) and \(\beta \) into a cluster of size \(\alpha +\beta \), as dictated by mass conservation. Analogously, the coefficients \(\Gamma _{\alpha ,\beta }\) describe the fragmentation rate of clusters of size \(\alpha \) into two clusters which have sizes \(\beta \) and \(\alpha -\beta \). We denote with \(s_{\alpha }\) the (external) source of clusters of size \(\alpha \). In applications, typically only monomers or small clusters are being produced, so we make the assumption that the function \(\alpha \mapsto s_\alpha \) has a bounded, non-empty support. In what follows, we make one further simplification and consider only cases where also fragmentation can be ignored, \(\Gamma _{\alpha ,\beta }=0\); the reasoning behind this choice is discussed later in Section 1.1. An overview of the currently available mathematical results for coagulation-fragmentation models can be found in [9, 30].

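Therefore, we are led to study the evolution equation

\[ \partial _t n_\alpha = \frac{1}{2}\sum _{\beta <\alpha } K_{\alpha -\beta ,\beta }\, n_{\alpha -\beta }\, n_\beta - n_\alpha \sum _{\beta >0} K_{\alpha ,\beta }\, n_\beta + s_\alpha . \tag{1.2} \]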

In this paper, we are concerned with the existence or nonexistence of steady state solutions to (1.2) for general coagulation rate kernels K, including in particular the physically relevant kernels discussed in Section 1.1. The source is here assumed to be localized on the “left boundary” of the system, that is, at small cluster sizes. Such source terms often lead to nontrivial stationary solutions towards which the time-dependent solutions evolve as time increases. These stationary solutions are nonequilibrium steady states since they involve a steady flux of matter from the source into the system. The characterization of nonequilibrium stationary states exhibiting transport phenomena is one of the central problems in statistical mechanics.

The main result of this paper gives a contribution in this direction. More precisely, we address the question of existence of such stationary solutions to (1.2). We prove that for a large class of kernels—including in particular the diffusion limited aggregation kernel given in (1.9)—stationary solutions to (1.2) yielding a constant flux of monomers towards clusters with large sizes exist. On the contrary, for a different class of kernels—including the free molecular coagulation kernel with the form (1.7)—such a class of stationary solutions does not exist.

In the case of collision kernels for which stationary nonequilibrium solutions to (1.2) exist, we can even compute the rate of formation of macroscopic particles, which we identify here with infinitely large particles, from an analysis of the properties of these stationary solutions, cf. Section 2.1. We find that in this case the main mechanism of transport of monomers to large clusters corresponds to coagulation between clusters with comparable sizes, cf. Lemma 6.1, Section 6.

The non-existence of such stationary solutions under a monomer source for a general class of coagulation kernels yielding coagulation for arbitrary cluster sizes is one of the novelties of our work. It has been pointed out in Remark 8.1 of [12] that for kernels \(K_{\alpha ,\beta }\) which vanish if \(\alpha >1\) or \(\beta >1\), and sources \(s_{\alpha }\) which are different from zero for \(\alpha {\geqq }2\), stationary solutions of (1.2) cannot exist. Although the example in [12] refers to the continuous counterpart of (1.2) (cf. (1.3)), the argument works similarly for discrete kernels. The example of non-existence of stationary solutions in [12] relies on the fact that coagulation does not take place for sufficiently large particles and therefore cannot compensate for the addition of particles due to the source term \(s_{\alpha }\). In the class of kernels considered in this paper coagulation takes place for all particle sizes and therefore the non-existence of steady states must be due to a different reason. At first glance this result might appear counterintuitive, since this non-existence result includes kernels for which the dynamics seems to be well-posed. Hence, one needs to explain what will happen at large times to the monomers injected into the system. Our results suggest that for such kernels the aggregation of monomers with large clusters is so fast that it cannot be compensated by the constant addition of monomers described by the injection term \(s_\alpha \). Then the cluster concentration \(n_{\alpha }\) would converge to zero as \(t\rightarrow \infty \) for bounded \(\alpha \), even though \(n_{\alpha }=0\) is not a stationary solution to (1.2) if \((s_{\beta })\ne 0\).

We remark that our non-existence result of stationary solutions includes in particular the so called free molecular kernel (cf. (1.6) below) derived from kinetic theory which is commonly used for microscopic computations involving aerosols (cf. for instance [36]).

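In this paper we consider, in addition to the stationary solutions of (1.2), the stationary solutions of the continuous counterpart of (1.2),

\[ \partial _t f(x,t) = \frac{1}{2}\int _0^x K(x-y,y)\, f(x-y,t)\, f(y,t)\, \text {d}y - \int _0^\infty K(x,y)\, f(x,t)\, f(y,t)\, \text {d}y + \eta (x) . \tag{1.3} \]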

In fact, we will allow f and \(\eta \) in this equation to be positive measures. This will make it possible to study the continuous and discrete equations simultaneously, using Dirac \(\delta \)-functions to connect \(f(\xi )\) and \(n_\alpha \) via the formula \(f(\xi ) d\xi = \sum _{\alpha =1}^\infty n_\alpha \delta (\xi - \alpha )d\xi \).

In most of the mathematical studies of the coagulation equation to date, it has been assumed that the injection terms \(s_{\alpha }\) and \(\eta \left( x\right) \) are absent. In the case of homogeneous kernels, that is, kernels satisfying

\[ K(rx,ry) = r^{\gamma } K(x,y) \tag{1.4} \]

for any \(r>0\), the long time asymptotics of the solutions of (1.3) with \(\eta \left( x\right) =0\) might be expected to be self-similar for a large class of initial data. This has been rigorously proved in [32] for the particular choices of kernels \(K( x,y) =1\) and \(K( x,y) =x+y\). In the case of discrete problems, the distribution of clusters \( n_{\alpha }\) has also been proved to behave in self-similar form for large times and for a large class of initial data if the kernel is constant, \( K_{\alpha ,\beta }=1\), or additive, \(K_{\alpha ,\beta }=\alpha +\beta \) [32]. For these kernels it is possible to find explicit representation formulas for the solutions of (1.2), (1.3) using Laplace transforms.

For general homogeneous kernels construction of explicit self-similar solutions is no longer possible. However, the existence of self-similar solutions of (1.3) with \(\eta =0\) has been proved for certain classes of homogeneous kernels K(x, y) using fixed point methods. These solutions might have a finite monomer density (that is, \(\int _{0}^{\infty }xf\left( x,t\right) \text {d}x<\infty \)) as in [18, 21], or infinite monomer density (that is, \(\int _{0}^{\infty }xf \left( x,t\right) \text {d}x=\infty \)) as in [3, 4, 34, 35]. Similar strategies can be applied to other kinetic equations [25, 26, 33].

Problems like (1.2), (1.3) with nonzero injection terms \(s_{\alpha }\), \( \eta \left( x\right) \) have been much less studied both in the physical and mathematical literature. In [10] it has been observed using a combination of asymptotic analysis arguments and numerical simulations that solutions of (1.2), (1.3) with a finite monomer density behave in self-similar form for long times and for a class of homogeneous coagulation kernels, even considering source terms which depend on time following a power law \(t^{\omega }\). Coagulation equations with sources have also been considered in [31] using Renormalization Group methods and leading to predictions of analogous self-similar behaviour. For what concerns the rigorous mathematical literature, in [12], the existence of stationary solutions has been obtained in the case of bounded kernels. Well-posedness of the time-dependent problem for a class of homogeneous coagulation kernels with homogeneity \(\gamma \in [0,2]\) has been proven in [17]. For the constant kernel, the stability of the corresponding solutions has been proven using Laplace transform methods (cf. [12]). Convergence to equilibrium for a class of coagulation equations containing also growth terms as well as sources has been studied in [23, 24]. Analogous stability results for coagulation equations with the form of (1.1) but containing an additional removal term on the right-hand side with the form \(-r_{\alpha }n_{\alpha },\ r_{\alpha }>0\) have been obtained in [28].

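In this paper we study the stationary solutions of (1.2), (1.3) for coagulation kernels satisfying

\[ c_1 w(x,y) \le K(x,y) \le c_2 w(x,y) , \qquad w(x,y) := x^{\gamma +\lambda } y^{-\lambda } + y^{\gamma +\lambda } x^{-\lambda } , \tag{1.5} \]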

for some \(c_1,c_2>0\) and for all x, y. The weight function w depends on two real parameters: the homogeneity parameter \(\gamma \) and the “off-diagonal rate” parameter \(\lambda \). The parameter \(\gamma \) yields the behaviour of kernel K under the scaling of the particle size while the parameter \(\lambda \) measures how relevant the coagulation events between particles of different sizes are. However, let us stress that we do not assume the kernel K itself to be homogeneous, even though the weight functions are.

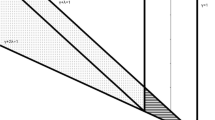

The main result of this paper is the following: given \(\eta \) compactly supported there exists at least one nontrivial stationary solution to the problem (1.3) if and only if \(|\gamma +2\lambda |<1\). In particular, if \(|\gamma +2\lambda |\ge 1\) no such stationary solutions can exist. Note that the parameters \(\gamma \) and \(\lambda \) are arbitrary real numbers and they may be negative or greater than one here. Therefore, these results do not depend on having global well-posedness of mass-preserving solutions for the time-dependent problem (1.3). In particular, our theorems cover ranges of parameters for which the solutions to the time-dependent problem (1.3) can exhibit gelation in finite or zero time. A detailed description of the current state of the art concerning well-posedness and gelation results can be found in [1]. At first glance, the fact that the existence of stationary solutions of (1.3) does not depend on whether the time-dependent problem has solutions might appear surprising. However, the reason for this becomes clearer if we notice that the homogeneity of the kernel is one of the main factors determining the well-posedness of the time-dependent problem (1.3). On the other hand, the homogeneity of the kernel K is not an essential property of the stationary solution problem, as can be seen (cf. [11]) by noticing that if f is a stationary solution of (1.3), then \(x^{\theta }f\left( x\right) \) is a stationary solution of (1.3) with kernel \(\frac{K\left( x,y\right) }{\left( xy\right) ^{\theta }}\) and the same source \(\eta \). This new kernel satisfies (1.5) with \(\gamma \) and \(\lambda \) replaced by \(\gamma -2\theta \) and \(\lambda +\theta \), respectively. In particular, we can in this way obtain kernels with arbitrary homogeneity that have essentially the same steady states, up to multiplication by a power law.
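
The shift of the exponents under this transformation can be checked directly. The following short verification is a sketch; the subscripted notation \(w_{\gamma ,\lambda }(x,y)=x^{\gamma +\lambda }y^{-\lambda }+y^{\gamma +\lambda }x^{-\lambda }\) and the names \(g\), \({\tilde{K}}\), \(\gamma '\), \(\lambda '\) are introduced here only for this purpose. Writing \(g(x)=x^{\theta }f(x)\) and \({\tilde{K}}(x,y)=K(x,y)/(xy)^{\theta }\), every product appearing in the coagulation operator is unchanged, and the weight keeps its form with shifted parameters:

\[ {\tilde{K}}(x,y)\, g(x)\, g(y) = \frac{K(x,y)}{(xy)^{\theta }}\, x^{\theta } f(x)\, y^{\theta } f(y) = K(x,y)\, f(x)\, f(y) , \]

\[ \frac{w_{\gamma ,\lambda }(x,y)}{(xy)^{\theta }} = x^{\gamma +\lambda -\theta }\, y^{-(\lambda +\theta )} + y^{\gamma +\lambda -\theta }\, x^{-(\lambda +\theta )} = w_{\gamma ',\lambda '}(x,y) , \qquad \gamma ' = \gamma -2\theta ,\quad \lambda ' = \lambda +\theta , \]

\[ \gamma ' + 2\lambda ' = (\gamma -2\theta ) + 2(\lambda +\theta ) = \gamma + 2\lambda . \]

In particular, the quantity \(\gamma +2\lambda \) that decides existence is invariant under the transformation, which is consistent with the criterion \(|\gamma +2\lambda |<1\) not depending on the homogeneity alone.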

We also prove in this paper the analog of these existence and non-existence results for the discrete coagulation equation (1.2). Moreover, we derive upper and lower estimates, as well as regularity results, for the stationary solutions to (1.2), (1.3) for the range of parameters for which these solutions exist, that is \(|\gamma +2\lambda |<1\). Finally, we also describe the asymptotics for large cluster sizes of these stationary solutions.

1.1 On the Choice of Coagulation and Fragmentation Rate Functions

Although we do not keep track of any spatial structure, the coagulation rates \(K_{\alpha ,\beta }\) do depend on the specific mechanism which is responsible for the aggregation of the clusters. These coefficients need to be computed for example using kinetic theory and the result will depend on what is assumed about the particle sizes and the processes yielding the motion of the clusters.

For instance, in the case of electrically neutral particles with a size much smaller than the mean free path between two collisions between clusters, the coagulation kernel is (cf. [22])

where \(V( \alpha ) \) and \(m( \alpha ) \) are respectively the volume and the mass of the cluster characterized by the composition \( \alpha \). We denote as \(k_{B}\) the Boltzmann constant, as T the absolute temperature, and if \(m_1\) is the mass of one monomer, we have above \(m(\alpha )= m_1 \alpha \). In the derivation, one also assumes a spherical shape of the clusters. If the particles are distributed inside the sphere with a uniform mass density \(\rho \), assumed to be independent of the cluster size, we also have \(V(\alpha )=\frac{m_1}{\rho } \alpha \). Changing the time-scale we can set all the physical constants to one. Finally, it is possible to define a continuum function K(x, y) by setting \(\alpha =x\), \(\beta =y\) in the above formula. We call this function the free molecular coagulation kernel, given explicitly by

\[ K(x,y) = \left( x^{\frac{1}{3}} + y^{\frac{1}{3}}\right) ^{2} \left( x^{-1} + y^{-1}\right) ^{\frac{1}{2}} . \tag{1.7} \]

It is now straightforward to check that with the parameter choice \(\gamma =\frac{1}{6}\), \(\lambda =\frac{1}{2}\) there are \(c_1,c_2>0\) such that (1.5) holds for all \(x,y>0\). Since here \(\gamma + 2 \lambda = \frac{7}{6}>1\), the free molecular kernel belongs to the second category which has no stationary state.

Another often encountered example is diffusion limited aggregation which was studied already in the original work by Smoluchowski [40]. Suppose that there is a background of non-aggregating neutral particles producing cluster paths resembling Brownian motion between their collisions. Then one arrives at the coagulation kernel

where \(\mu >0\) is the viscosity of the gas in which the clusters move.

As before, we then set \(V(\alpha )=\frac{m_1}{\rho } \alpha \) and define a continuum function K(x, y) by setting \(\alpha =x\), \(\beta =y\) on the right hand side of (1.8). The constants may then be collected together and after rescaling time one may use the following kernel function

\[ K(x,y) = \left( x^{-\frac{1}{3}} + y^{-\frac{1}{3}}\right) \left( x^{\frac{1}{3}} + y^{\frac{1}{3}}\right) , \tag{1.9} \]

which we call here diffusive coagulation kernel or Brownian kernel. In this case, for the parameter choice \(\gamma =0\), \(\lambda =\frac{1}{3}\) there are \(c_1,c_2>0\) such that for all \(x,y>0\) (1.5) holds. Since here we have that \(0<\gamma + 2 \lambda = \frac{2}{3}<1\), the diffusive kernel belongs to the first category which will have some stationary solutions.
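
The two parameter choices can be sanity-checked numerically. The sketch below assumes the rescaled closed forms \(K(x,y)=(x^{1/3}+y^{1/3})^{2}(x^{-1}+y^{-1})^{1/2}\) for the free molecular kernel and \(K(x,y)=(x^{-1/3}+y^{-1/3})(x^{1/3}+y^{1/3})\) for the Brownian kernel, together with the weight \(w(x,y)=x^{\gamma +\lambda }y^{-\lambda }+y^{\gamma +\lambda }x^{-\lambda }\). The function names, the grid range and the grid resolution are illustrative choices. A bounded ratio on a finite grid is only evidence for (1.5), not a proof.

```python
import numpy as np

def weight(x, y, gamma, lam):
    """Weight w(x, y) = x^(gamma+lam) y^(-lam) + y^(gamma+lam) x^(-lam)."""
    return x**(gamma + lam) * y**(-lam) + y**(gamma + lam) * x**(-lam)

def free_molecular(x, y):
    # Assumed rescaled form: (x^{1/3} + y^{1/3})^2 (1/x + 1/y)^{1/2}
    return (x**(1/3) + y**(1/3))**2 * np.sqrt(1/x + 1/y)

def brownian(x, y):
    # Assumed rescaled form: (x^{-1/3} + y^{-1/3}) (x^{1/3} + y^{1/3})
    return (x**(-1/3) + y**(-1/3)) * (x**(1/3) + y**(1/3))

def ratio_range(kernel, gamma, lam, lo=1e-6, hi=1e6, n=200):
    """Minimum and maximum of K/w on a log-spaced grid of cluster sizes."""
    s = np.logspace(np.log10(lo), np.log10(hi), n)
    x, y = np.meshgrid(s, s)
    r = kernel(x, y) / weight(x, y, gamma, lam)
    return r.min(), r.max()

# Candidate constants (c_1, c_2) observed on the grid for each kernel.
fm_range = ratio_range(free_molecular, 1/6, 1/2)
br_range = ratio_range(brownian, 0.0, 1/3)
print("free molecular: K/w in", fm_range)
print("Brownian:       K/w in", br_range)
```

For the Brownian kernel the ratio can also be computed by hand: with \(t=(x/y)^{1/3}\) one has \(K/w = 1+2/(t+t^{-1})\), which lies in \((1,2]\).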

Several other coagulation kernels can be found in the physical and chemical literature. For instance, the derivation of the free molecular kernel (1.6) and the Brownian kernel (1.8) is discussed in [22]. The derivation of a coagulation kernel describing the aggregation between charged and neutral particles can be found in [41]. Applications of these three kernels to specific problems in chemistry can be found for instance in [36].

Concerning the fragmentation coefficients \(\Gamma _{\alpha ,\beta }\), it is commonly assumed in the physics and chemistry literature that these coefficients are related to the coagulation coefficients by means of the following detailed balance condition (cf. for instance [36])

where \(\Delta G_{\text {ref},\alpha }\) is the Gibbs energy of formation of the cluster \(\alpha \) and \(P_{\text {ref}}\) is the reference pressure at which these energies of formation are calculated. Since we assume the coagulation kernel to be symmetric, \( K_{\alpha ,\beta }=K_{\beta ,\alpha }\), the fragmentation coefficients then satisfy a symmetry requirement \(\Gamma _{\alpha +\beta ,\alpha }=\Gamma _{\alpha +\beta ,\beta }\) for all \(\alpha ,\beta \in {\mathbb {N}}^{d}\).

In the processes of particle aggregation, usually the formation of larger particles is energetically favourable, which means that

Under this assumption, it follows from (1.10) that

and then we might expect to approximate the solutions of (1.1) by means of the solutions of (1.2). The description of the precise conditions on the Gibbs free energy \(\Delta G_{\text {ref},\alpha }\) which would allow to make this approximation rigorous is an interesting mathematical problem that we do not address in the present paper. Therefore, we restrict our analysis here to the coagulation equations (1.2) and (1.3).

1.2 Notations and Plan of the Paper

Let I be any interval such that \(I \subset {{\mathbb {R}}}_+=[0,\infty )\). We reserve the notation \({{\mathbb {R}}}_*\) for the case \(I=(0,\infty )\). We will denote by \(C_{c}(I )\) the space of compactly supported continuous functions on I and by \(C_b(I)\) the space of functions that are continuous and bounded on I. Unless mentioned otherwise, we endow both spaces with the standard supremum norm. Then \(C_b(I)\) is a Banach space and \(C_{c}(I )\) is its subspace. We denote the completion of \(C_{c}(I)\) in \(C_b(I)\) by \(C_0(I)\) which naturally results in a Banach space. For example, then \(C_0({{\mathbb {R}}}_+)\) is the space of continuous functions vanishing at infinity and \(C_{0}(I )=C_{c}(I )=C_b(I)\) if I is a finite, closed interval.

Moreover, we denote by \( {\mathcal {M}}_{+}(I) \) the space of nonnegative Radon measures on I. Since I is locally compact, \( {\mathcal {M}}_{+}(I) \) can be identified with the space of positive linear functionals on \(C_{c}(I )\) via the Riesz–Markov–Kakutani theorem. For a measure \(\mu \in {\mathcal {M}}_{+}(I) \), we denote its total variation norm by \(\Vert \mu \Vert \) and recall that since the measure is positive, we have \(\Vert \mu \Vert =\mu (I)\). Unless I is a closed finite interval, not all of these measures need to be bounded. The collection of bounded, positive measures is denoted by \({\mathcal {M}}_{+,b}(I):=\{\mu \in {\mathcal {M}}_{+}(I) \,|\,\mu (I)<\infty \}\) and we denote the collection of bounded complex Radon measures by \({\mathcal {M}}_{b}(I)\). We recall that the total variation indeed defines a norm in \({\mathcal {M}}_{b}(I)\), and this space is a Banach space which can be identified with the dual space \(C_{0}(I )^*=C_c(I)^*\). In addition, \({\mathcal {M}}_{+,b}(I)\) is a norm-closed subset of \({\mathcal {M}}_{b}(I)\). Alternatively, we can endow both \({\mathcal {M}}_{b}(I)\) and \({\mathcal {M}}_{+,b}(I)\) with the \(*\)–weak topology which is generated by the functionals \(\langle \varphi ,\mu \rangle =\int _I \varphi (x) \mu (d x)\) with \(\varphi \in C_c(I)\).

We will use \(\eta (x) \text {d}x\) and \(\eta (\text {d}x)\) interchangeably to denote elements of these measure spaces. The notation \(\eta ( \text {d}x) \) will be preferred when performing integrations or when we want to emphasize that the measure might not be absolutely continuous with respect to the Lebesgue measure.

For the sake of notational simplicity, in some of the proofs we will resort to a generic constant C which may change from line to line.

The paper is structured as follows: in Section 2 we discuss the types of solutions considered here and we state the main results. In Section 3 we prove the existence of steady states for the coagulation equation with source in the continuum case (1.3) assuming \(|\gamma +2\lambda |<1\). We prove the complementary nonexistence of stationary solutions to (1.3) for \(|\gamma +2\lambda |{\geqq }1\) in Section 4. The analogous existence and nonexistence results for the discrete model (1.2) are collected into Section 5. In Section 6 we derive several further estimates for the solutions of both continuous and discrete models, including also estimates for moments of the solutions. These estimates imply in particular that the only relevant collisions are those between particles of comparable sizes. Finally, in Section 7 we prove that the stationary solutions of the discrete model (1.2) behave as the solutions of the continuous model (1.3) for large cluster sizes.

2 Setting and Main Results

2.1 Different Types of Stationary Solutions for Coagulation Equations

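The stationary solutions to the discrete equation (1.2) satisfy

\[ 0 = \frac{1}{2}\sum _{\beta <\alpha } K_{\alpha -\beta ,\beta }\, n_{\alpha -\beta }\, n_\beta - n_\alpha \sum _{\beta >0} K_{\alpha ,\beta }\, n_\beta + s_\alpha , \tag{2.1} \]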

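where \(\alpha \in {\mathbb {N}}\) and \(s_\alpha \) is supported on a finite set of integers. Analogously, in the continuous case, the stationary solutions to (1.3) satisfy

\[ 0 = \frac{1}{2}\int _0^x K(x-y,y)\, f(x-y)\, f(y)\, \text {d}y - \int _0^\infty K(x,y)\, f(x)\, f(y)\, \text {d}y + \eta (x) , \tag{2.2} \]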

where the source term \(\eta \left( x\right) \) is compactly supported in \([1, \infty )\). Although we write the equation using a notation where f and \(\eta \) are given as functions, the equation can be extended in a natural manner to allow for measures. The details of the construction are discussed in Section 3 and the explicit weak formulation may be found in (2.15).

We remark that equation (2.1) can be written as

\[ J_\alpha (n) - J_{\alpha -1}(n) = \alpha s_\alpha , \qquad \alpha {\geqq }1 , \tag{2.3} \]

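where we define \(J_0(n)=0\) and, for \(\alpha {\geqq }1\), we set

\[ J_\alpha (n) = \sum _{\beta =1}^{\alpha } \sum _{\gamma =\alpha -\beta +1}^{\infty } K_{\beta ,\gamma }\, \beta \, n_\beta \, n_\gamma . \]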

Notice that we will use the notations \( K_{\beta ,\gamma }\) and \( K(\beta ,\gamma )\) interchangeably. On the other hand, for sufficiently regular functions f equation (2.2) can similarly be written as

\[ \partial _x J(x;f) = x\, \eta (x) , \tag{2.4} \]

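where

\[ J(x;f) = \int _0^x \int _{x-y}^{\infty } K(y,z)\, y\, f(y)\, f(z)\, \text {d}z\, \text {d}y . \tag{2.5} \]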

This implies that the fluxes \(J_\alpha (n)\) and J(x; f) are constant for \(\alpha \) and x sufficiently large due to the fact that s is supported in a finite set and \(\eta \) is compactly supported, and we prove in Lemma 2.8 that this property continues to hold even when f is a measure. If \(s_\alpha \) or \(\eta (x)\) decay sufficiently fast for large values of \(\alpha \) or x then \(J_\alpha (n)\) or J(x; f) converges to a positive constant as \(\alpha \) or x tend to infinity.
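
The flux formulation rests on an algebraic identity that can be tested numerically. The sketch below assumes the standard mass-flux expression \(J_\alpha (n)=\sum _{\beta =1}^{\alpha }\sum _{\gamma {\geqq }\alpha -\beta +1}K_{\beta ,\gamma }\,\beta \, n_\beta \, n_\gamma \) and checks, for an arbitrary concentration vector supported on finitely many sizes, that the mass gained by sizes up to \(\alpha \) through coagulation equals \(-J_\alpha (n)\). The truncation size `N`, the random test data and the Brownian-type kernel on integer sizes are illustrative choices.

```python
import numpy as np

def coag_operator(n, K):
    """Q_a(n) = 1/2 sum_{b<a} K[a-b,b] n[a-b] n[b] - n[a] sum_b K[a,b] n[b].

    n[0] is unused padding, so n[a] is the concentration of size a.
    n is supported on sizes 1..N, hence every sum is finite.
    """
    N = len(n) - 1
    Q = np.zeros(N + 1)
    for a in range(1, N + 1):
        gain = 0.5 * sum(K[a - b, b] * n[a - b] * n[b] for b in range(1, a))
        loss = n[a] * sum(K[a, b] * n[b] for b in range(1, N + 1))
        Q[a] = gain - loss
    return Q

def flux(n, K, a):
    """J_a(n) = sum_{b=1}^{a} sum_{c=a-b+1}^{N} K[b,c] * b * n[b] * n[c]."""
    N = len(n) - 1
    return sum(K[b, c] * b * n[b] * n[c]
               for b in range(1, a + 1)
               for c in range(a - b + 1, N + 1))

rng = np.random.default_rng(0)
N = 12
sizes = np.arange(1, N + 1, dtype=float)
col, row = sizes[:, None], sizes[None, :]
K = np.zeros((N + 1, N + 1))
K[1:, 1:] = (col**(-1/3) + row**(-1/3)) * (col**(1/3) + row**(1/3))
n = np.concatenate(([0.0], rng.random(N)))

Q = coag_operator(n, K)
mass_rate = np.cumsum(np.arange(N + 1) * Q)      # sum_{b<=a} b * Q_b
fluxes = np.array([flux(n, K, a) for a in range(N + 1)])
max_defect = np.max(np.abs(mass_rate + fluxes))  # identity: mass_rate == -fluxes
print("largest deviation from the flux identity:", max_defect)
```

For a stationary solution, multiplying the stationary equation by \(\alpha \) and summing over sizes up to \(\alpha \) therefore gives \(J_\alpha (n)=\sum _{\beta \le \alpha }\beta s_\beta \), which is constant once \(\alpha \) exceeds the support of the source.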

Given that other concepts of stationary solutions are found in the physics literature, we will call the solutions of (2.1) and (2.2) stationary injection solutions. In this paper we will be mainly concerned with these solutions. The physical meaning of these solutions, when they exist, is that it is possible to transport monomers towards large clusters at the same rate at which the monomers are added into the system.

For comparison, let us also discuss briefly other concepts of stationary solutions and the relation with the stationary injection solutions. One case often considered in the physics literature are constant flux solutions (cf. [42]). These are solutions of (2.2) with \(\eta \equiv 0\) satisfying

\[ J(x;f) = J_0 \quad \text {for } x>0 , \tag{2.6} \]

where \(J_0 \in {{\mathbb {R}}}_+\) and J(x; f) is defined in (2.5). Explicit stationary solutions for coagulation equations have been obtained and discussed in [13, 14, 15, 38, 39]. In these papers the collision kernel K under consideration is not homogeneous. In the case of homogeneous kernels K there is an explicit method to obtain power law solutions of (2.2) by means of some transformations of the domain of integration that were introduced by Zakharov in order to study the solutions of the Weak Turbulence kinetic equations (cf. [45, 46]). Zakharov’s method has been applied to coagulation equations in [7].

Alternatively, we can obtain power law solutions of (2.6) using the homogeneity \(\gamma \) of the kernel (cf. (1.4)). Indeed, suppose that \(f\left( x\right) =c_{s}\left( x\right) ^{-\alpha }\) for some \(c_{s}\) positive and \(\alpha \in {{\mathbb {R}}}\). Using the homogeneity of the kernel K we obtain

under the assumption that

Using (2.6), we then obtain \(\alpha = (3+\gamma )/2\) and \(c_{s}=\sqrt{\frac{J_{0}}{G\left( \alpha \right) }}\). Therefore, (2.7) holds if and only if \(|\gamma +2\lambda | <1\). Notice that (2.7) yields a necessary and sufficient condition to have a power law solution of (2.6). However, one should not assume that all solutions of (2.6) are given by a power law; indeed, we have preliminary evidence that there exist smooth homogeneous kernels satisfying (1.5) for which there are non-power-law solutions to (2.6).
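
The scaling step and the role of the condition \(|\gamma +2\lambda |<1\) can be made explicit for the model kernel \(K(x,y)=x^{\gamma +\lambda }y^{-\lambda }+y^{\gamma +\lambda }x^{-\lambda }\). The following is a sketch under that assumption, not the general argument; the flux is taken in the standard form \(J(x;f)=\int _0^x\int _{x-y}^{\infty }K(y,z)\,y\,f(y)\,f(z)\,\text {d}z\,\text {d}y\), and \(G(\alpha )\) is taken to be the value of the resulting double integral at \(x=1\). Substituting \(f(x)=c_s x^{-\alpha }\) and rescaling \((y,z)\mapsto (xy,xz)\) gives

\[ J(x;f) = c_s^{2}\int _0^x\int _{x-y}^{\infty }K(y,z)\,y^{1-\alpha }z^{-\alpha }\,\text {d}z\,\text {d}y = c_s^{2}\,x^{3+\gamma -2\alpha }\int _0^1\int _{1-y}^{\infty }K(y,z)\,y^{1-\alpha }z^{-\alpha }\,\text {d}z\,\text {d}y , \]

since the kernel contributes \(x^{\gamma }\), the two powers contribute \(x^{1-2\alpha }\), and the area element contributes \(x^{2}\). The flux is independent of \(x\) exactly when \(3+\gamma -2\alpha =0\). With \(\alpha =(3+\gamma )/2\), the remaining integral is finite if and only if the integrand is integrable as \(y\rightarrow 0\) and as \(z\rightarrow \infty \):

\[ y\rightarrow 0:\quad 1-\alpha -\lambda>-1 \ \text { and } \ 1-\alpha +\gamma +\lambda >-1 ; \qquad z\rightarrow \infty :\quad \gamma +\lambda -\alpha<-1 \ \text { and } \ -\lambda -\alpha <-1 . \]

Inserting \(\alpha =(3+\gamma )/2\), the first inequality in each pair reads \(\gamma +2\lambda <1\) and the second reads \(\gamma +2\lambda >-1\). The corner \(y\rightarrow 1\), \(z\rightarrow 0\) leads to the same two inequalities, so for the model kernel the power law has a finite constant flux precisely when \(|\gamma +2\lambda |<1\).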

Finally, let us mention one more type of solutions associated with the discrete coagulation equation (2.1) that have some physical interest. This is the boundary value problem in which the concentration of monomers is given and the coagulation equation (2.1) is satisfied for clusters containing two or more monomers (\(\alpha {\geqq }2\)). The problem then becomes

where \(c_1 >0\) is given.

Notice that if we can solve the injection problem (2.1) for some source \(s=s_1\delta _{\alpha ,1} \) with \(s_1 >0\), then we can solve the boundary value problem (2.8) for any \(c_1>0\). Indeed, let us denote by \(N_\alpha (s_1)\), \(\alpha \in {{\mathbb {N}}}\), the solution to (2.1) with source \(s=s_1\delta _{\alpha ,1} \). Then equation (2.1) for \(\alpha =1\) reduces to

This implies that \(0<N_1(s_1) <\infty \). Then the solution to (2.8) is given by

Moreover, if we can solve (2.1) for some \(s_1 > 0\), then we can solve (2.1) for arbitrary values of \(s_1\) due to the fact that if n is a solution of (2.1) with source s, then for any \(\Lambda >0\), \(\sqrt{\Lambda }n\) solves (2.1) with source \(\Lambda s\).
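
This scaling property follows from the fact that the coagulation terms are quadratic in n while the source enters linearly. A minimal numerical illustration is sketched below, under the assumption that the stationary equation has the usual gain-minus-loss-plus-source form and that all sums are truncated to sizes \(1,\dots ,N\). The helper `residual`, the weight-type kernel with \(\gamma =0\), \(\lambda =\frac{1}{3}\) and the test data are hypothetical choices for illustration. The check verifies that the residual of \((\sqrt{\Lambda }n,\Lambda s)\) equals \(\Lambda \) times the residual of \((n,s)\) for an arbitrary vector n, so a zero residual is preserved.

```python
import numpy as np

def residual(n, s, K):
    """Truncated stationary right-hand side: gain - loss + source.

    Arrays are zero-based: index a-1 holds the cluster size a.
    """
    N = len(n)
    r = np.empty(N)
    for a in range(1, N + 1):
        gain = 0.5 * sum(K[a - b - 1, b - 1] * n[a - b - 1] * n[b - 1]
                         for b in range(1, a))
        loss = n[a - 1] * np.dot(K[a - 1], n)
        r[a - 1] = gain - loss + s[a - 1]
    return r

rng = np.random.default_rng(1)
N, Lam = 10, 7.0
sizes = np.arange(1, N + 1, dtype=float)
col, row = sizes[:, None], sizes[None, :]
K = col**(1/3) * row**(-1/3) + row**(1/3) * col**(-1/3)  # symmetric test kernel
n = rng.random(N)        # arbitrary concentrations, not a stationary solution
s = np.zeros(N)
s[0] = 1.0               # monomer source only

lhs = residual(np.sqrt(Lam) * n, Lam * s, K)
rhs = Lam * residual(n, s, K)
scaling_defect = np.max(np.abs(lhs - rhs))
print("largest deviation from the scaling identity:", scaling_defect)
```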

In this paper we will consider the problems (2.1) and (2.2) in Sections 2 to 6. In Section 7 we prove that a rescaled version of the solutions to (2.1) and (2.2) behaves for large cluster sizes as a solution to (2.6). We will not discuss solutions to (2.8) in this paper.

In this paper we will study the solutions of (2.1), (2.2) for kernels \(K\left( x,y\right) \) which behave for large clusters as \(x^{\gamma +\lambda } y^{-\lambda }+y^{\gamma +\lambda }x^{-\lambda }\) for suitable coefficients \(\gamma ,\lambda \in {\mathbb {R}}\) in the case of the equation (2.2) as well as their discrete counterpart in the case of (2.1). (See next Subsection for the precise assumptions on the kernels, in particular (2.11), (2.12).) The main result that we prove in this paper is that the equations (2.1), (2.2) with nonvanishing source terms \(s_{\alpha },\ \eta \), respectively, have a solution if \(\left| \gamma +2\lambda \right| <1\) and they do not have solutions at all if \(\left| \gamma +2\lambda \right| {\geqq }1.\) The heuristic idea behind this result is easy to grasp. We will describe it in the case of the equation (2.2), since the main ideas are similar for (2.1).

The equation (2.2) can be reformulated as (2.4), (2.5). Since \(\eta \) is compactly supported we obtain that \(J\left( x;f\right) \) is a constant \(J_{0}>0\) for x sufficiently large. The homogeneity of the kernel \(K\left( x,y\right) =x^{\gamma +\lambda }y^{-\lambda }+y^{\gamma +\lambda }x^{-\lambda }\) suggests that the solutions of the equation \(J\left( x;f\right) =J_{0}\) should behave as \(f\left( x\right) \approx Cx^{-\frac{\gamma +3}{2}}\) for large \(x\), with \(C>0\). Actually this statement holds in a suitable sense that will be made precise later. However, this asymptotic behaviour for \(f\left( x\right) \) cannot take place if \(\left| \gamma +2\lambda \right| {\geqq }1\) because the integral in (2.5) would be divergent. Therefore, solutions to (2.2) can only exist for \(\left| \gamma +2\lambda \right| <1\).

2.2 Definition of Solution and Main Results

We restrict our analysis to the kernels satisfying (1.5), or at least one of the inequalities there. To account for all the relevant cases, let us summarize the assumptions on the kernel slightly differently here. We always assume that

and for all x, y,

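We also only consider kernels for which one may find \(\gamma ,\lambda \in {{\mathbb {R}}}\) such that at least one of the following holds: there is \(c_1>0\) such that for all \((x,y)\in {\mathbb {R}}_*^{2}\),

\[ K(x,y) \ge c_1 \left( x^{\gamma +\lambda } y^{-\lambda } + y^{\gamma +\lambda } x^{-\lambda }\right) , \tag{2.11} \]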

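and/or there is \(c_2>0\) such that for all \((x,y)\in {\mathbb {R}}_*^{2}\)

\[ K(x,y) \le c_2 \left( x^{\gamma +\lambda } y^{-\lambda } + y^{\gamma +\lambda } x^{-\lambda }\right) . \tag{2.12} \]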

The class of kernels satisfying all of the above assumptions includes many of the most commonly encountered coagulation kernels. It includes in particular the Smoluchowski (or Brownian) kernel (cf. (1.9)) and the free molecular kernel (cf. (1.7)).

The source rate is assumed to be given by \(\eta \in {\mathcal {M}}_{+}\left( {\mathbb {R}} _*\right) \) and to satisfy

Note that then we always have \(\eta \left( {\mathbb {R}} _*\right) <\infty \), that is, the measure \(\eta \) is bounded.

We study the existence of stationary injection solutions to equation (1.3) in the following precise sense:

Definition 2.1

Assume that \(K:{{{\mathbb {R}}}}_*^{2}\rightarrow {{\mathbb {R}} }_{+}\) is a continuous function satisfying (2.10) and the upper bound (2.12). Assume further that \(\eta \in {\mathcal {M}}_{+}\left( {\mathbb {R}}_*\right) \) satisfies (2.13). We will say that \(f\in {\mathcal {M}}_{+}\left( {\mathbb {R}}_*\right) ,\) satisfying \(f\left( \left( 0,1\right) \right) =0\) and

\[ \int _{{{\mathbb {R}}}_*} x^{\gamma +\lambda } f(\text {d}x) + \int _{{{\mathbb {R}}}_*} x^{-\lambda } f(\text {d}x) < \infty , \tag{2.14} \]

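is a stationary injection solution of (1.3) if the following identity holds for any test function \(\varphi \in C_{c}({{{\mathbb {R}}}}_*)\):

\[ \frac{1}{2}\int _{{{\mathbb {R}}}_*}\int _{{{\mathbb {R}}}_*} K(x,y) \left[ \varphi (x+y) - \varphi (x) - \varphi (y)\right] f(\text {d}x)\, f(\text {d}y) + \int _{{{\mathbb {R}}}_*} \varphi (x)\, \eta (\text {d}x) = 0 . \tag{2.15} \]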

Remark 2.2

Definition 2.1, or a discrete version of it, will be used throughout most of the paper (cf. Sections 2 to 6). In Section 7, we will use a more general notion of a stationary injection solution to (1.3), considering source terms \(\eta \) which satisfy \( {\mathrm{supp}} \eta \subset [a, b]\) for some given constants a and b such that \(0<a<b\). Then we require that \(f\in {\mathcal {M}}_{+}\left( {\mathbb {R}}_*\right) \) and \(f((0, a))=0\), in addition to (2.14). Note that for such measures we have \(\int _{{{\mathbb {R}}}_*} f(\text {d}x) = \int _{[a,\infty )} f(\text {d}x)\). The generalized case is straightforwardly reduced to the above setup by rescaling space via the change of variables \(x'=x/a\).

The condition \(f\left( \left( 0,1\right) \right) =0\) is a natural requirement for stationary solutions of the coagulation equation, given that \(\eta \left( \left( 0,1\right) \right) =0\). As we show next, the second integrability condition (2.14) is the minimal one needed to have well defined integrals in the coagulation operator.

First, note that all the integrals appearing in (2.15) are well defined for any \(\varphi \in C_{c}\left( {\mathbb {R}}_*\right) \) with \({\mathrm{supp}} \varphi \subset ( 0,L]\), because we can then restrict the domain of integration to the set \(\left\{ \left( x,y\right) \in \left[ 1,L\right] \times \left[ 1,\infty \right) \right\} \) in the term containing \(\varphi \left( x\right) \), and to the set \(\left\{ \left( x,y\right) \in \left[ 1,L\right] ^{2}\right\} \) in the term containing \(\varphi \left( x+y\right) \). In addition, (2.12) implies that \(K\left( x,y\right) {\leqq }{\tilde{C}}_{L}[y^{\gamma + \lambda }+y^{-\lambda }]\) for \(\left( x,y\right) \in \left[ 1,L\right] \times \left[ 1,\infty \right) \). Therefore,

where \(C_{L}\) depends on \(\varphi \), \(\gamma \), and \(\lambda \). The assumption (2.14) in Definition 2.1 then implies that all the integrals appearing in (2.15) are convergent.

We now state the main results of this paper.

Theorem 2.3

Assume that K satisfies (2.9)–(2.12) and \(| \gamma +2\lambda | <1.\) Let \(\eta \ne 0 \) satisfy (2.13). Then, there exists a stationary injection solution \(f\in {\mathcal {M}}_{+}\left( {\mathbb {R}}_*\right) \), \(f\ne 0\), to (1.3) in the sense of Definition 2.1.

Theorem 2.4

Suppose that \(K\left( x,y\right) \) satisfies (2.9)–(2.12) as well as \(| \gamma +2\lambda | {\geqq }1.\) Let us assume also that \(\eta \ne 0\) satisfies (2.13). Then, there is no solution of (1.3) in the sense of Definition 2.1.

Remark 2.5

Notice that the free molecular kernel defined in (1.7) satisfies (2.10)–(2.12) with \(\gamma =\frac{1}{6},\ \lambda =\frac{1}{2}\). Since then \(\gamma +2\lambda =\frac{7}{6}>1\), the hypotheses of Theorem 2.4 hold, which implies that there are no solutions of (1.3) in the sense of Definition 2.1 for the kernel (1.7) and any \(\eta \ne 0\) satisfying (2.13). On the other hand, for the Brownian kernel defined in (1.9), with \(\gamma = 0 \) and \(\lambda = \frac{1}{3}\), we have \(\gamma +2\lambda =\frac{2}{3}<1\), so the assumptions of Theorem 2.3 hold and nontrivial stationary injection solutions in the sense of Definition 2.1 exist for each \(\eta \ne 0\) satisfying (2.13).
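
As a quick sanity check of the dichotomy between Theorems 2.3 and 2.4, the following sketch evaluates the criterion \(|\gamma +2\lambda |<1\) for the two kernels discussed in Remark 2.5. The function name `admits_stationary_solution` and the dictionary of kernel labels are illustrative assumptions, not notation from the paper; exact rational arithmetic is used so that the boundary case \(|\gamma +2\lambda |=1\) is not affected by rounding.

```python
from fractions import Fraction


def admits_stationary_solution(gamma: Fraction, lam: Fraction) -> bool:
    """Return True when |gamma + 2*lam| < 1 (the hypothesis of Theorem 2.3)."""
    return abs(gamma + 2 * lam) < 1


# Exponents quoted in Remark 2.5; the dictionary keys are illustrative labels.
KERNEL_EXPONENTS = {
    "free molecular": (Fraction(1, 6), Fraction(1, 2)),
    "Brownian": (Fraction(0), Fraction(1, 3)),
}

for name, (gamma, lam) in KERNEL_EXPONENTS.items():
    exists = admits_stationary_solution(gamma, lam)
    verdict = "exists" if exists else "does not exist"
    print(f"{name}: gamma + 2*lambda = {gamma + 2 * lam}, stationary solution {verdict}")
```

The check only encodes the parameter criterion; it assumes that the kernel in question indeed satisfies the two-sided bounds with the stated exponents.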

Remark 2.6

We observe that if \(\eta =0\), there is a trivial stationary solution to (1.3) given by \(f=0\). On the other hand, if \(\eta \ne 0\), then \(f=0\) cannot be a solution.

Remark 2.7

Assumption (2.13) is motivated by specific problems in chemistry [36] which have a source of monomers \(s_{\alpha }=s\delta _{\alpha ,1}\) only. However, in all the results of this paper this assumption could be replaced by the more general condition

and in the discrete case, the analogous condition (5.2) could be replaced by \(\sum _{\alpha =1}^{\infty }\alpha s_{\alpha }<\infty \). Indeed, it is easily seen that the only property of the source term \(\eta \) that is used in the arguments of the proofs, both in the existence and non-existence results, is that:

for some \(L_{\eta }\) sufficiently large, or an analogous condition in the discrete case, which follows immediately from (2.16). Moreover, it seems feasible to extend the support of \(\eta \) to all positive real numbers \({\mathbb {R}}_{*}\), by assuming suitable smallness conditions for f and \(\eta \) near the origin (for instance in the form of a bounded moment) in order to avoid fluxes of volume of particles coming from \(x=0\). This would raise issues different from the main ones considered in this paper, and we therefore do not pursue this case further here.

The flux of mass from small to large particles at the stationary state is computed in the next lemma for the above measure-valued solutions. In comparison to (2.5), one then needs to refine the definition by using a right-closed interval for the first integration and an open interval for the second integration, as stated in (2.18) below.

Lemma 2.8

Suppose that the assumptions of Theorem 2.3 hold. Let f be a stationary injection solution in the sense of Definition 2.1. Then f satisfies for any \(R>0\)

Remark 2.9

Note that if \(R{\geqq }L_\eta \), the right-hand side of (2.18) is always equal to \(J=\int _{[1,L_\eta ]} x \eta (\text {d}x)>0\). Therefore, the flux is constant in regions involving only large cluster sizes.
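
To illustrate Remark 2.9, the sketch below evaluates the injected mass \(\int _{(0,R]}x\,\eta (\text {d}x)\) for a source made of finitely many point masses, assuming (as the remark suggests) that this quantity is the right-hand side of (2.18). The source data `SOURCE` and the helper `injected_mass_flux` are hypothetical and chosen only for illustration.

```python
from typing import Sequence, Tuple

# Hypothetical discrete source: pairs (cluster size x_i, injection rate eta_i).
SOURCE: Sequence[Tuple[float, float]] = ((1.0, 2.0), (2.0, 0.5), (3.5, 0.25))


def injected_mass_flux(source: Sequence[Tuple[float, float]], R: float) -> float:
    """Integral of x eta(dx) over the right-closed interval (0, R] for point masses."""
    return sum(x * rate for x, rate in source if x <= R)


L_ETA = max(x for x, _ in SOURCE)        # upper end of the support of eta
J = injected_mass_flux(SOURCE, L_ETA)    # total mass injection rate

for R in (0.5, 1.0, 2.0, 3.5, 10.0, 1e6):
    print(f"R = {R:>9}: flux = {injected_mass_flux(SOURCE, R)}")
```

For every \(R{\geqq }L_\eta \) the printed value coincides with `J`, which is the constant-flux property used in the later sections.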

Proof

If \(R<1\), both sides of (2.18) are zero, and the equality holds. Consider then some \(R\ge 1\) and for all \(\varepsilon \) with \(0<\varepsilon <R\) choose some \(\chi _\varepsilon \in C_c^\infty ({{\mathbb {R}}}_*)\) such that \(0\le \chi _\varepsilon \le 1\), \(\chi _\varepsilon (x) = 1\), for \(1\le x {\leqq }R\), and \(\chi _\varepsilon (x) = 0\), for \(x {\geqq }R+\varepsilon \). Then for each \(\varepsilon \) we may define \(\varphi (x) = x \chi _\varepsilon (x) \) and thus obtain a valid test function \(\varphi \in C_{c}({{{\mathbb {R}}}}_*)\). Since then (2.15) holds, we find that for all \(\varepsilon \)

The first term can be rewritten as follows:

We readily see that the terms involving \(\chi _\varepsilon \) on the right hand side tend to zero as \(\varepsilon \) tends to zero due to the fact that for Radon measures \(\mu \) the integrals \(\int _{[a-\varepsilon , a)}d\mu \) and \(\int _{(a,a+\varepsilon ]} d\mu \) converge to 0 as \(\varepsilon \) tends to zero. Then we obtain from (2.19) that

Rearranging the terms we obtain

which implies (2.18) using a symmetrization argument. \(\square \)

The following Lemma will be used several times throughout the paper to convert bounds for certain “running averages” into uniform bounds of integrals. The function \(\varphi \) below is included mainly for later convenience.

Lemma 2.10

Suppose \(a>0\) and \(b \in (0,1)\), and assume that \(R\in (0,\infty ]\) is such that \(R\ge a\). Consider some \(f \in \mathcal {M_+}({{\mathbb {R}}}_*)\) and \(\varphi \in C({{\mathbb {R}}}_*)\), with \(\varphi \ge 0\).

1. Suppose \(R<\infty \), and assume that there is \(g \in L^1([a,R])\) such that \(g\ge 0\) and

$$\begin{aligned} \frac{1}{z}\int _{[bz,z]} \varphi (x) f(\text {d}x) {\leqq }g(z)\,, \quad \text {for } z \in [a,R] \,. \end{aligned}$$(2.20)

Then

$$\begin{aligned} \int _{[a,R]} \varphi (x) f(\text {d}x) {\leqq }\frac{\int _{[a,R]}g(z)\text {d}z}{\vert \ln b\,\vert } + R g(R)\,. \end{aligned}$$(2.21)

2. Consider some \(r\in (0,1)\), and assume that \(a/r\le R<\infty \). Suppose that (2.20) holds for \(g(z)=c_0 z^q\), with \(q\in {{\mathbb {R}}}\) and \(c_0\ge 0\). Then there is a constant \(C>0\), which depends only on r, b and q, such that

$$\begin{aligned} \int _{[a,R]} \varphi (x) f(\text {d}x) {\leqq }C c_0 \int _{[a,R]} z^q \text {d}z \,. \end{aligned}$$(2.22)

3. If \(R=\infty \) and there is \(g \in L^1([a,\infty ))\) such that \(g\ge 0\) and

$$\begin{aligned} \frac{1}{z}\int _{[bz,z]} \varphi (x) f(\text {d}x) {\leqq }g(z)\,, \quad \text {for } z \ge a \,, \end{aligned}$$(2.23)

then

$$\begin{aligned} \int _{[a,\infty )} \varphi (x) f(\text {d}x) {\leqq }\frac{\int _{[a,\infty )}g(z)\text {d}z}{\vert \ln b\,\vert }\,. \end{aligned}$$(2.24)
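
Item 1 of Lemma 2.10 can be checked by hand in a simple case. The sketch below takes, purely as an assumed example, \(f(\text {d}x)=x^{-2}\text {d}x\) and \(\varphi \equiv 1\), for which the running average in (2.20) equals \(g(z)=(1/b-1)z^{-2}\) exactly, and compares both sides of (2.21) in closed form. The function name `lemma_2_10_item1_sides` is hypothetical.

```python
import math
from typing import Tuple


def lemma_2_10_item1_sides(a: float, R: float, b: float) -> Tuple[float, float]:
    """Both sides of (2.21) for f(dx) = x**-2 dx, phi = 1, g(z) = (1/b - 1)/z**2."""
    if not (0 < a <= R and 0 < b < 1):
        raise ValueError("need 0 < a <= R and 0 < b < 1")
    lhs = 1.0 / a - 1.0 / R                              # integral of x**-2 over [a, R]
    g_integral = (1.0 / b - 1.0) * (1.0 / a - 1.0 / R)   # integral of g over [a, R]
    boundary = (1.0 / b - 1.0) / R                       # the term R * g(R)
    rhs = g_integral / abs(math.log(b)) + boundary
    return lhs, rhs


for a, R, b in [(1.0, 10.0, 0.5), (1.0, 1.5, 0.5), (2.0, 200.0, 0.9), (3.0, 3.0, 0.1)]:
    lhs, rhs = lemma_2_10_item1_sides(a, R, b)
    print(f"a={a}, R={R}, b={b}: lhs={lhs:.6f} <= rhs={rhs:.6f}")
```

In this example the inequality reduces to \(1/b-1{\geqq }\vert \ln b\,\vert \), which holds for all \(b\in (0,1)\), so the bound is close to sharp when b is near 1.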

Proof

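We first prove the general case in item 1. Assume thus that \(R<\infty \) and that \(g\ge 0\) is such that (2.20) holds. We recall that then \(0<a\le R\). If \(a\ge b R\), we can estimate

$$\begin{aligned} \int _{[a,R]} \varphi (x) f(\text {d}x) {\leqq }\int _{[bR,R]} \varphi (x) f(\text {d}x) {\leqq }R g(R)\,, \end{aligned}$$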

using the assumption (2.20) with \(z=R\). Thus (2.21) holds in this case since \(g\ge 0\).

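Otherwise, we have \(0<a<b R\). By assumption, the constant \(C_1 := \int _{[a,R]} g(z) \text {d}z{\geqq }0\) is finite. Integrating (2.20) over z from a to R, we obtain

$$\begin{aligned} \int _{[a,R]} \frac{1}{z}\left( \int _{[bz,z]} \varphi (x) f(\text {d}x) \right) \text {d}z {\leqq }C_1\,. \end{aligned}$$

The iterated integral satisfies the assumptions of Fubini’s theorem, and thus it can be written as an integral over the set

$$\begin{aligned} \left\{ (z,x) \,:\, a {\leqq }z {\leqq }R\,,\ bz {\leqq }x {\leqq }z \right\} \,. \end{aligned}$$

Therefore, after using Fubini’s theorem to obtain an integral where z-integration comes first, and restricting to \(x\in [a,bR]\), we find that

$$\begin{aligned} C_1 {\geqq }\int _{[a,bR]} \varphi (x) \left( \int _{[x,x/b]} \frac{\text {d}z}{z} \right) f(\text {d}x)\,. \end{aligned}$$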

The integral over z yields \(\ln (x/(bx))=\vert \ln b\,\vert \), and thus \(\int _{[a,bR]} \varphi (x) f(\text {d}x ) {\leqq }\ C_1/ \vert \ln b\,\vert \). To get an estimate for the integral over [bR, R], we use (2.20) for \(z=R\). Hence, (2.21) follows also in this case which completes the proof of the first item.

For item 2, let us assume that \(0<r<1\), \(a\le r R<\infty \), and that (2.20) holds for \(g(z)=c_0 z^q\), with \(q\in {{\mathbb {R}}}\) and \(c_0\ge 0\). Since then \(g\in L^1([a,R])\) and \(g\ge 0\), we can conclude from the first item that (2.21) holds. Thus we only need to find a suitable bound for the second term therein, namely \(R g(R)=c_0 R^{q+1}\). By changing the integration variable from z to \(y=z/R\), we find

$$\begin{aligned} \int _{[a,R]} z^q \text {d}z {\geqq }\int _{[rR,R]} z^q \text {d}z = R^{q+1}\int _{[r,1]} y^q \text {d}y\,. \end{aligned}$$

Here, \(C':=\int _{[r,1]} y^q \text {d}y\) satisfies \(0<C'<\infty \) for any choice of \(q\in {{\mathbb {R}}}\). Therefore, we can now conclude that (2.22) holds for \(C=|\ln b\,|^{-1} + 1/C'\) which depends only on q, b, and r.

For item 3, let us suppose that \(R=\infty \) and \(g \in L^1([a,\infty ))\) is such that \(g\ge 0\) and (2.23) holds. Then for all integers \(n\ge a\) we necessarily have \(\inf _{x\ge n} (x g(x))=0\), since otherwise g is not integrable. Therefore, there is \(R_n\rightarrow \infty \) such that \(\lim _{n\rightarrow \infty } R_n g(R_n)=0\). We apply item 1 with \(R=R_n\), and taking \(n\rightarrow \infty \) proves that (2.24) holds. This completes the proof of the Lemma. \(\square \)
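
The constant in item 2 of Lemma 2.10 is explicit, \(C=|\ln b\,|^{-1}+1/C'\) with \(C'=\int _{[r,1]}y^q\text {d}y\), and can be evaluated directly. The following sketch computes it and checks numerically the key step of the proof, \(R\,g(R){\leqq }(c_0/C')\int _{[a,R]}z^q\text {d}z\) whenever \(a\le rR\). The helper names are hypothetical, and the small relative tolerance is an assumption made only to absorb floating-point rounding in the equality case \(a=rR\).

```python
import math


def power_integral(lo: float, hi: float, q: float) -> float:
    """Integral of z**q over [lo, hi] for 0 < lo <= hi; q = -1 is handled separately."""
    if q == -1.0:
        return math.log(hi / lo)
    return (hi ** (q + 1) - lo ** (q + 1)) / (q + 1)


def item2_constant(r: float, b: float, q: float) -> float:
    """C = 1/|ln b| + 1/C', where C' is the integral of y**q over [r, 1]."""
    c_prime = power_integral(r, 1.0, q)
    return 1.0 / abs(math.log(b)) + 1.0 / c_prime


def boundary_term_is_controlled(a: float, R: float, r: float, q: float, c0: float = 1.0) -> bool:
    """Check R*g(R) <= (c0/C') * integral of z**q over [a, R], assuming a <= r*R."""
    c_prime = power_integral(r, 1.0, q)
    bound = c0 / c_prime * power_integral(a, R, q)
    return c0 * R ** (q + 1) <= bound * (1.0 + 1e-12)


print(item2_constant(r=0.5, b=0.5, q=0.0))
print(all(boundary_term_is_controlled(1.0, 10.0, 0.5, q) for q in (-3.0, -1.0, 0.0, 1.5)))
```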

3 Existence Results: Continuous Model

Our first goal is to prove the existence of a stationary injection solution (cf. Theorem 2.3) under the assumption \(|\gamma + 2\lambda |<1\). This will be accomplished in three steps: We first prove in Proposition 3.6 existence and uniqueness of time-dependent solutions for a particular class of compactly supported continuous kernels. Considering these solutions at large times allows us to prove in Proposition 3.10 existence of stationary injection solutions for this class of kernels using a fixed point argument. We then extend the existence result to general unbounded kernels supported in \({ {\mathbb {R}}}_*^{2}\) and satisfying (2.10)–(2.12) with \(| \gamma +2\lambda | <1\).

Compactly supported continuous kernels are automatically bounded from above but, for the first two results, we will also assume that the kernel has a uniform lower bound on the support of the source. To pass to the limit including the more general kernel functions, it will be necessary to control the dependence of the solutions on both of the bounds and on the size of support of the kernel. To fix the notations, let us first choose an upper bound \(L_\eta \) for the support of the source, that is, a constant satisfying (2.13). In the first two Propositions, we will consider kernel functions which are continuous, non-negative, have a compact support, and for which we may find \(R_{*}{\geqq }L_{\eta }\) and \(a_1\), \(a_2\) such that \(0<a_{1}<a_{2}\) and \( K(x,y)\in [a_{1},a_{2}],\) for \((x,y)\in [1,2R_{*}]^{2}\). This allows us to prove first that the time-evolution is well-defined, Proposition 3.6, and then in Proposition 3.10 the existence of stationary injection solutions for this class of kernels using a fixed point argument. The proofs include sufficient control of the dependence of the solutions on the cut-off parameters to remove the restrictions and obtain the result in Theorem 2.3.

In fact, we not only regularize the kernel but also introduce a cut-off for the coagulation gain term. This will guarantee that the equation is well-posed and has solutions whose support never extends beyond the interval \([1,2R_{*}]\). To this end, let us choose \(\zeta _{R_{*}}\in C\left( {\mathbb {R}}_*\right) \) such that \(0\le \zeta _{R_{*}}\le 1\), \(\zeta _{R_{*}}\left( x\right) =1\) for \(0{\leqq }x{\leqq }R_{*}\), and \(\zeta _{R_{*}}\left( x\right) =0\) for \(x{\geqq }2R_{*}\). We then regularize the time evolution equation (1.3) as

As we show later, this will result in a well-posedness theory such that any solution of (3.1) has the following property: \(f\left( \cdot ,t\right) \) is supported on the interval \(\left[ 1,2R_{*}\right] \) for each \(t{\geqq }0\). Let us also point out that since we are interested in solutions f such that \(f\left( \left( 0,1\right) ,t\right) =0\), the above integral \(\int _{\left( 0,x \right] }\left( \cdot \cdot \cdot \right) \) can be replaced by \(\int _{\left[ 1,x-1\right] }\left( \cdot \cdot \cdot \right) \) if \(x {\geqq }1\).

Assumption 3.1

Consider a fixed source term \(\eta \in {\mathcal {M}}_{+}\left( {{{\mathbb {R}}}}_*\right) \) and assume that \(L_\eta \ge 1\) satisfies (2.13). Suppose \(R_{*}\), \(a_1\), \(a_2\), and T are constants for which \(R_{*}>L_\eta \), \(0<a_{1}<a_{2}\), and \(T>0\). Suppose \(K:{ {\mathbb {R}}}_*^{2}\rightarrow {{\mathbb {R}}}_{+}\) is a continuous, non-negative, symmetric function such that \(K(x,y)\le a_2\) for all x, y, and we also have \(K(x,y)\in [a_{1},a_{2}]\) for \((x,y)\in [1,2R_{*}]^{2}\), and \(K(x,y)=0\), if \(x{\geqq }4R_{*}\) or \(y{\geqq }4R_{*}\). Moreover, we assume that there is given a function \(\zeta _{R_{*}}\) such that \(\zeta _{R_{*}}\in C\left( {\mathbb {R}}_*\right) \), \(0\le \zeta _{R_{*}}\le 1\), \(\zeta _{R_{*}}\left( x\right) =1\) for \(0{\leqq }x{\leqq }R_{*}\), and \(\zeta _{R_{*}}\left( x\right) =0\) for \(x{\geqq }2R_{*}\).
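
Assumption 3.1 only requires the cut-off \(\zeta _{R_{*}}\) to be continuous with values in [0, 1], equal to 1 up to \(R_{*}\) and equal to 0 from \(2R_{*}\) on. One admissible choice, picked here purely for illustration and not prescribed by the paper, is the piecewise-linear interpolation sketched below; the function name `zeta_cutoff` is hypothetical.

```python
def zeta_cutoff(x: float, r_star: float) -> float:
    """Piecewise-linear cut-off: 1 on (0, R*], 0 on [2R*, inf), linear in between."""
    if r_star <= 0:
        raise ValueError("r_star must be positive")
    if x <= r_star:
        return 1.0
    if x >= 2.0 * r_star:
        return 0.0
    # Linear interpolation between the values 1 at R* and 0 at 2R*.
    return (2.0 * r_star - x) / r_star


R_STAR = 3.0  # illustrative value of R_*
for x in (0.5, 3.0, 3.75, 4.5, 6.0, 10.0):
    print(f"zeta({x}) = {zeta_cutoff(x, R_STAR)}")
```

Any other continuous profile with the same boundary behaviour would serve equally well in the arguments that follow.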

We will now study measure-valued solutions of the regularized problem (3.1) in an integrated form. To this end, we use a fairly strong notion of continuous differentiability although uniqueness of the regularized problem might hold in a larger class. However, since we cannot prove uniqueness after the regularization has been removed, it is not a central issue here.

Definition 3.2

Suppose Y is a normed space, \(S\subset Y\), and \(T>0\). We use the notation \(C^1([0,T],S;Y)\) for the collection of maps \(f:\left[ 0,T\right] \rightarrow S\) such that f is continuous and there is \({\dot{f}}\in C([0,T],Y)\) for which the Fréchet derivative of f at any \(t\in \left( 0,T\right) \) is given by \({\dot{f}}(t)\).

We also drop the normed space Y from the notation if it is obvious from the context, in particular, if \(S={\mathcal {M}}_{+,b}(I)\) and \(Y=C_0(I)^*\) or \(Y=S\).

Clearly, if \(f\in C^1([0,T],S;Y)\), the function \({\dot{f}}\) above is unique and it can be found by requiring that for all \(t\in \left( 0,T\right) \)

and then taking the left and right limits to obtain the values \({\dot{f}}(0)\) and \({\dot{f}}(T)\). What is sometimes relaxed in similar notations is the existence of the left and right limits.

Definition 3.3

Suppose that Assumption 3.1 holds. Consider some initial data \(f_{0}\in {\mathcal {M}}_{+}({{\mathbb {R}}}_*)\) for which \(f_{0}\left( \left( 0,1\right) \cup \left( 2R_{*},\infty \right) \right) =0\). Then \(f_0\in {\mathcal {M}}_{+,b}({{\mathbb {R}}}_*)\).

We will say that \(f\in {C^{1}(\left[ 0,T \right] ,{\mathcal {M}}_{+,b}({{\mathbb {R}}}_*))}\) satisfying \(f\left( \cdot ,0\right) =f_{0}\left( \cdot \right) \) is a time-dependent solution of (3.1) if the following identity holds for any test function \(\varphi \in C^{1}(\left[ 0,T\right] ,C_{c}\left( {{\mathbb {R}}}_*\right) )\) and all \(0<t<T\),

Remark 3.4

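Note that for any such solution f, automatically by continuity and compactness of [0, T] one has

$$\begin{aligned} \sup _{t\in [0,T]}\int _{{{\mathbb {R}}}_*}f\left( \text {d}x,t\right) <\infty \,, \end{aligned}$$(3.3)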

since \(\Vert f\Vert =f({{\mathbb {R}}}_*)\). Let us also point out that whenever \(\varphi \in C^{1}(\left[ 0,T\right] ,C_{c}\left( {{\mathbb {R}}}_*\right) )\) and \(f\in {C^{1}(\left[ 0,T\right] ,{\mathcal {M}}_{+,b}({{\mathbb {R}}}_*))}\), the map \(t\mapsto \int _{{{{\mathbb {R}}}_*}}\varphi \left( x,t\right) f\left( \text {d}x,t\right) \) indeed belongs to \(C^{1}(\left[ 0,T\right] ,{\mathbb {R}}_{*})\). Thus the derivative on the left hand side of (3.2) is defined in the usual sense and, in fact, it is equal to \(\int _{{{{\mathbb {R}}}_*}}\varphi \left( x,t\right) {\dot{f}}\left( \text {d}x,t\right) \). In addition, there is sufficient regularity that after integrating (3.2) over the interval \(\left[ 0,t \right] \) we obtain

We can also define weak stationary solutions of (3.1). It is straightforward to check that if \(F(\text {d}x)\) is a stationary solution, then \(f(\text {d}x,t)=F(\text {d}x)\) is a solution to (3.4) with initial condition \(f_0(\text {d}x)=F(\text {d}x)\).

Definition 3.5

Suppose that Assumption 3.1 holds. We will say that \(f\in {{\mathcal {M}}_{+}({{\mathbb {R}}}_*)},\) satisfying \(f((0,1) \cup (2R_*,\infty ))=0\) is a stationary injection solution of (3.1) if the following identity holds for any test function \(\varphi \in C_{c}\left( {{\mathbb {R}}} _*\right) \):

Proposition 3.6

Suppose that Assumption 3.1 holds. Then, for any initial condition \(f_{0}\) satisfying \(f_{0}\in {\mathcal {M}}_{+}({{\mathbb {R}}}_*)\), \( f_{0}\left( \left( 0,1\right) \cup \left( 2R_{*},\infty \right) \right) =0\) there exists a unique time-dependent solution \(f\in {C^{1}(\left[ 0,T\right] ,{\mathcal {M}}_{+,b}({{\mathbb {R}}}_*))}\) to (3.1) which solves it in the classical sense. Moreover, we have

and the estimate

holds for \(C = \int _{{{\mathbb {R}}}_*}\eta (\text {d}x)\ge 0\), which is independent of \(f_{0}\), t, and T.

Remark 3.7

We remark that the lower estimate \(K(x,y){\geqq }a_{1}>0\) will not be used in the proof of Proposition 3.6. However, this assumption will be used later in the proof of the existence of stationary injection solutions.

Proof

In this proof we skip some standard computations which may be found in [43, Section 5]. We define \({\mathcal {X}}_{R_{*}}=\left\{ f\in {\mathcal {M}}_{+}({{\mathbb {R}}} _*):f\left( \left( 0,1\right) \cup \left( 2R_{*},\infty \right) \right) =0\right\} \). Since \([1,2R_{*}]\) is compact, for any \(f\in {\mathcal {X}}_{R_{*}}\) we have \(f({{\mathbb {R}}}_*)<\infty \), and thus \({\mathcal {X}}_{R_{*}}\subset {\mathcal {M}}_{+,b}({\mathbb {R}}_*)\). For \(f\in {\mathcal {M}}_{+,b}({\mathbb {R}}_*)\), we clearly have \(f\in {\mathcal {X}}_{R_{*}}\) if and only if \(\int \varphi (x) f(d x)=0\) for all \(\varphi \in C_0({{\mathbb {R}}}_*)\) whose support lies in \(\left( 0,1\right) \cup \left( 2R_{*},\infty \right) \). Therefore, \({\mathcal {X}}_{R_{*}}\) is a closed subset both in the \(*-\)weak and norm topology of \(C_0({{\mathbb {R}}}_*)^*={\mathcal {M}}_{b}({{\mathbb {R}}}_*)\).

For the rest of this proof, we endow \({\mathcal {X}}_{R_{*}}\) with the norm topology which makes it into a complete metric space. We look for solutions f in the subset \(X:=C( \left[ 0,T\right] , {\mathcal {X}}_{R_{*}})\) of the Banach space \(C\left( \left[ 0,T\right] , {\mathcal {M}}_{b}({{\mathbb {R}}}_*)\right) \). The space X is endowed with the norm

$$\begin{aligned} \Vert f\Vert _{T}=\sup _{0{\leqq }t{\leqq }T}\Vert f(\cdot ,t)\Vert \,. \end{aligned}$$

By the uniform limit theorem, X is then also a complete metric space.

We now reformulate (3.1) as the following integral equation acting on \({\mathcal {X}}_{R_{*}}\): we define for \(0\le t\le T\), \(x\in {{\mathbb {R}}}_*\), and \(f\in X\) first a function

and using this we obtain a measure, written for convenience using the function notation,

Notice that the definition (3.8) indeed is pointwise well defined and yields a function \((x,s)\mapsto a\left[ f\right] \left( x,s\right) \) which is continuous and non-negative for any \(f\in X\). Moreover, we claim that if \(f\in X\), then (3.9) defines a measure in \({\mathcal {M}}_{+}({{\mathbb {R}}} _*)\) for each \(t\in [0,T]\), and we have in addition \({\mathcal {T}}\left[ f\right] \in X\). The only non-obvious term is the term on the right-hand side containing \(\int _{0}^{x}K\left( x-y,y\right) f\left( x-y,s\right) f\left( y,s\right) \text {d}y\). We first explain how this term defines a continuous linear functional on \(C_c\left( {{\mathbb {R}}}_*\right) \). Define \(g(x,s)=\frac{\zeta _{R_{*}}(x) }{2}e^{-\int _{s}^{t}a\left[ f\right] \left( x,\xi \right) d\xi }\) which is a jointly continuous function with \(g(x,s)=0\) if \(x\ge 2 R_{*}\). Given \(\varphi \in C_{c}\left( {{\mathbb {R}}}_*\right) \) we then set

Here the right-hand side of (3.10) is well defined since \( f\left( \cdot ,s\right) \in {\mathcal {X}}_{R_{*}}\) for each \(s\in \left[ 0,t\right] .\) Moreover, this operator defines a continuous linear functional from \(C_c\left( {{\mathbb {R}}}_*\right) \) to \({\mathbb {R}}\), and thus is associated with a unique positive Radon measure. Finally, if \(\varphi (x)=0\) for \(1\le x\le 2 R_{*}\), then \(g(x+y,s) \varphi \left( x+y\right) =0\) for \(x+y\ge 1\), which implies that the right hand side of (3.10) is zero because \(f(\cdot ,s)\) is supported on \([1,2R_{*}]\). Therefore, the measure belongs to \({\mathcal {X}}_{R_{*}}\) for all t. Continuity in t follows straightforwardly.

The operator \({\mathcal {T}}\left[ \cdot \right] \) defined in (3.9) is thus a mapping from \(C([0,T],{\mathcal {X}}_{R_{*}})\) to \(C([0,T],{\mathcal {X}} _{R_{*}})\) for each \(T>0.\) We now claim that it is a contractive mapping from the complete metric space

to itself if T is sufficiently small. This follows by means of standard computations using the assumption \(K\left( x,y\right) {\leqq }a_{2}\), as well as the inequality \(\left| e^{-x_{1}}-e^{-x_{2}}\right| {\leqq }\left| x_{1}-x_{2}\right| \) valid for \(x_{1}{\geqq }0,\ x_{2}{\geqq }0.\)

Therefore, there exists a unique solution of \(f={\mathcal {T}}\left[ f\right] \) in \({X}_{T}\) assuming that T is sufficiently small. Notice that \(f{\geqq }0\) by construction.

In order to show that the obtained solution can be extended to arbitrarily long times we first notice that if \(f={\mathcal {T}}\left[ f\right] \), then \(f\in C^{1}(\left[ 0,T\right] ,{\mathcal {X}}_{R_{*}})\) and the definition in (3.9) implies that f satisfies (3.1). Integrating this equation with respect to the x variable, we obtain the following estimates:

whence (3.7) follows. We can then extend the solution to arbitrarily long times \(T>0\) using standard arguments. After this, the uniqueness of the solution in \(C^{1}(\left[ 0,T\right] ,{\mathcal {M}}_{+,b}({{\mathbb {R}}}_*))\) follows by a standard Grönwall estimate. \(\square \)

Remark 3.8

Notice that using the inequality \(K(x,y){\geqq }a_{1}>0\) we can strengthen (3.11) into the estimate

Inspecting the sign of the right hand side this implies an estimate stronger than (3.7), namely,

We now prove that solutions obtained in Proposition 3.6 are weak solutions in the sense of Definition 3.3.

Proposition 3.9

Suppose that the assumptions in Proposition 3.6 hold. Then, the solution f obtained is a weak solution of (3.1) in the sense of Definition 3.3.

Proof

Multiplying (3.1) by a continuous test function \(\varphi \in C^{1}\left( \left[ 0,T\right] ,C\left( {{\mathbb {R}}}_*\right) \right) \) with \(T>0\) we obtain, using the action of the convolution on a test function in (3.10),

As mentioned earlier, the left-hand side can be rewritten as

Therefore, f satisfies (3.2) in Definition 3.3. \(\square \)

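We will use in the following the dynamical system notation \(S\left( t\right) \) for the map

$$\begin{aligned} S\left( t\right) f_{0}=f\left( \cdot ,t\right) \,, \end{aligned}$$

where f is the solution of (3.1) obtained in Proposition 3.6. Note that by uniqueness \(S\left( t\right) \) has the following semigroup property:

$$\begin{aligned} S\left( t_{1}+t_{2}\right) f=S\left( t_{1}\right) S\left( t_{2}\right) f\,,\quad t_{1},t_{2}{\geqq }0\,. \end{aligned}$$

The operators \(S\left( t\right) \) define a mapping

$$\begin{aligned} S\left( t\right) :{\mathcal {X}}_{R_{*}}\rightarrow {\mathcal {X}}_{R_{*}}\,,\quad t{\geqq }0\,, \end{aligned}$$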

where \({\mathcal {X}}_{R_{*}}=\left\{ f\in {\mathcal {M}}_{+}({{\mathbb {R}}} _*):f\left( \left( 0,1\right) \cup \left( 2R_{*},\infty \right) \right) =0\right\} \), as before.

We can now prove the following result:

Proposition 3.10

Under the assumptions of Proposition 3.6, there exists a stationary injection solution \({\hat{f}} \in {\mathcal {M}}_{+}({{\mathbb {R}}}_*)\) to (3.1) as defined in Definition 3.5.

Proof

We provide below a proof of the statement but skip over some standard technical computations. Further details about these technical estimates can be found in [43, Section 5].

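We first construct an invariant region for the evolution equation (3.1). Let \(f_0 \in {\mathcal {X}}_{R_{*}} \) and set \(f(t)=S(t)f_0\). In particular, f satisfies (3.2). Let us then choose a time-independent test function such that \(\varphi (x)=1\) when \(1\le x{\leqq }2R_{*}\). Similarly to (3.11) and using the fact that \(f(\cdot ,t)\) has support in \([1,2 R_*]\), the lower bound for K implies an estimate

$$\begin{aligned} \frac{\text {d}}{\text {d}t}\int _{[1,2R_{*}]}f\left( \text {d}x,t\right) {\leqq }c_{0}-\frac{a_{1}}{2}\left( \int _{[1,2R_{*}]}f\left( \text {d}x,t\right) \right) ^{2}\,, \end{aligned}$$

where \(c_{0}=\int _{{{\mathbb {R}}}_*}\eta \left( \text {d}x\right) \). As in Remark 3.8, inspecting the sign of the right hand side we then find that if we choose any \(M{\geqq }\sqrt{\frac{2c_{0}}{a_{1}}}\), then the following set is invariant under the time-evolution:

$$\begin{aligned} {\mathcal {U}}_{M}=\left\{ f\in {\mathcal {X}}_{R_{*}}\,:\,\int _{[1,2R_{*}]}f\left( \text {d}x\right) {\leqq }M\right\} \,. \end{aligned}$$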

Moreover, \({\mathcal {U}}_{M}\) is compact in the \(*-\)weak topology due to Banach-Alaoglu’s Theorem (cf. [5]), since it is an intersection of a \(*-\)weak closed set \({\mathcal {X}}_{R_{*}}\) and the closed ball \(\Vert f\Vert \le M\).
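
The invariance of the region \(\Vert f\Vert \le M\) can be visualized on a scalar comparison problem. The sketch below assumes that the total number of clusters \(m(t)=\int f(\text {d}x,t)\) obeys the differential inequality \(m'\le c_0-\frac{a_1}{2}m^2\), which is the form suggested by the threshold \(\sqrt{2c_0/a_1}\), and integrates the corresponding equality with the explicit Euler method. The numerical values of `C0` and `A1`, the step size, and the function name are illustrative assumptions.

```python
import math
from typing import List


def evolve_total_number(m0: float, c0: float, a1: float, t_final: float, dt: float = 0.01) -> List[float]:
    """Explicit Euler for the comparison ODE m' = c0 - (a1/2) * m**2."""
    steps = int(round(t_final / dt))
    trajectory = [m0]
    m = m0
    for _ in range(steps):
        m = m + dt * (c0 - 0.5 * a1 * m * m)
        trajectory.append(m)
    return trajectory


C0, A1 = 1.0, 0.5                   # illustrative values of c_0 and a_1
M_MIN = math.sqrt(2.0 * C0 / A1)    # smallest admissible M; equals 2.0 here

for m0 in (0.0, 1.0, M_MIN):
    path = evolve_total_number(m0, C0, A1, t_final=20.0)
    print(f"m0 = {m0:.2f}: max = {max(path):.6f}, final = {path[-1]:.6f}")
```

Trajectories that start below the threshold never cross it and relax towards it, which is the scalar counterpart of the invariance of \({\mathcal {U}}_{M}\).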

Consider the operator \(S(t):{\mathcal {X}}_{R_{*}}\rightarrow {\mathcal {X}} _{R_{*}}\) defined in (3.13). We now endow \({\mathcal {X}}_{R_{*}}\) with the \(*-\)weak topology and prove that S(t) is continuous. Due to Proposition 3.9 we have that \(f(\cdot ,t)=S(t)f_{0}\) satisfies (3.4) for any test function \( \varphi \in C^{1}\left( \left[ 0,T\right] ,C_c\left( {{\mathbb {R}}}_* \right) \right) \), \(0{\leqq }t{\leqq }T\) with \(T>0\) arbitrary. Let \(f_{0},{\hat{f}} _{0}\in {\mathcal {X}}_{R_{*}}\). We write \(f(\cdot ,t)=S(t)f_{0}\) and \({\hat{f}}(\cdot ,t)=S(t){\hat{f}}_{0}.\) Using (3.4) and subtracting the corresponding equations for f and \({\hat{f}}\), we obtain

where

For the derivation of (3.17), we have used symmetry properties under the transformation \(x\leftrightarrow y\): clearly, \(K\left( x,y\right) \left[ \varphi \left( x+y,s\right) \chi _{\left\{ x+y{\leqq }R_{*}\right\} }\left( x,y\right) -\varphi \left( x,s\right) \right. \left. -\varphi \left( y,s\right) \right] \) is then symmetric and \(\left[ f\left( \text {d}x,s\right) {\hat{f}}\left( dy,s\right) -f\left( dy,s\right) {\hat{f}}\left( \text {d}x,s\right) \right] \) is antisymmetric, and hence their product integrates to zero.

Consider then an arbitrary \(\psi \in C_c\left( {{\mathbb {R}}}_*\right) \). We claim that there is a test function \(\varphi \in C^{1}\left( \left[ 0,t\right] ,C_{c}\left( { {\mathbb {R}}}_*\right) \right) \) such that

Given such a function \(\varphi \), since f and \({\hat{f}}\) have no support on (0, 1), equation (3.17) implies

Therefore, if such a function \(\varphi \) exists for any \( \psi \in C_c\left( {{\mathbb {R}}}_*\right) \), we would find that the estimate at time t, \(\left| \int _{{{\mathbb {R}}}_*}\psi \left( x\right) (f\left( \text {d}x,t\right) -{\hat{f}}\left( \text {d}x,t\right) )\right| \), will become arbitrarily small if the estimate at time 0, \(\left| \int _{[1, {2R_{*}}]}\varphi \left( x,0\right) (f_{0}\left( \text {d}x\right) -{\hat{f}}_{0}\left( \text {d}x\right) )\right| \), is made sufficiently small. In particular, this property can be used to prove that for every \(f(t)=S(t)f_0\) in a \(*-\)weak open set U one can find a \(*-\)weak open neighbourhood V of \(f_0\) such that for any \({\hat{f}}_{0}\in V\) one has \(S(t){\hat{f}}_{0} \in U\). Hence, the \(*-\)weak continuity of \( S\left( t\right) \) would then follow.

In order to conclude the proof of the continuity of S(t) in the \(*-\)weak topology it only remains to prove the existence of \(\varphi \in C^{1}\left( \left[ 0,t\right] ,C_{c}\left( {{\mathbb {R}}}_*\right) \right) \) satisfying (3.18) for a fixed \( \psi \in C_c\left( {{\mathbb {R}}}_*\right) \). First, let us choose \(a\in (0,1)\) and \(b\ge 4R_{*}\) so that the support of \(\psi \) is contained in \(I_0:=[a,b]\). We now construct \(\varphi \) as a solution to an evolution equation in the Banach space \(Y:= \left\{ h\in C({{\mathbb {R}}}_*)\left| \, h(x)=0\text { if }x\le a\text { or }x\ge b\right. \right\} \) which is a closed subspace of \(C_0({{\mathbb {R}}}_*)\).

More precisely, we now look for solutions \(\varphi \in {\tilde{X}}:= C([0,t],Y)\), endowed with the weighted norm \(\Vert \varphi \Vert _M := \sup _{x\in {{\mathbb {R}}}_*,\, s\in [0,t]}|\varphi (x,s)| \mathrm{e}^{M (s-t)}\). The parameter \(M>0\) is chosen sufficiently large, as explained later.

Clearly, \(\psi \in Y\). To regularize the small values of x, we choose a function \(\phi _a\in C({{\mathbb {R}}}_*)\) such that \(0\le \phi _a\le 1\), \(\phi _a(x)=0\) if \(x\le a\), and \(\phi _a(x)=1\) if \(x\ge 1\), and then define

Now, if \(\varphi \in {\tilde{X}}\), we have \(\tilde{{\mathcal {L}}}[\varphi ](x,s)=0\) both if \(x\le a\) (due to the factor \(\phi _a\)) and if \(x\ge b\), since \(K(x,y)=0\) if \(x\ge b \ge 4 R_*\). In addition, the assumptions guarantee that \(x\mapsto {\mathcal {L}}[\varphi ](x,s)\) is continuous, so we find that \( \tilde{{\mathcal {L}}}[\varphi ](x,s)\in Y\) for any fixed s.

We look for solutions \(\varphi \) as fixed points satisfying \(\varphi ={\mathcal {A}}[\varphi ]\), where

A straightforward computation, using the uniform bounds of total variation norms of f and \({\hat{f}}\), shows that \({\mathcal {A}}\) is a map from \({\tilde{X}}\) to itself. In addition, since

we find that \({\mathcal {A}}\) is also a contraction if we fix M so that \(M> \frac{3a_2}{2} \left( \Vert f\Vert _t+\Vert {\hat{f}}\Vert _t \right) \). Thus by the Banach fixed point theorem, there is a unique \(\varphi \in {\tilde{X}}\) such that \(\varphi ={\mathcal {A}}[\varphi ]\). This choice satisfies (3.18), at least for \(x\ge 1\), and hence completes the proof of continuity of S(t).

We next prove that also \(t\mapsto S\left( t\right) f_{0}\) is continuous in the \(*-\)weak topology. Let \(t_{1},t_{2}\in \left[ 0,T \right] \) with \(t_{1}<t_{2}.\) Let \(\varphi \in C_{c}\left( {{\mathbb {R}}} _*\right) .\) Using (3.4) we obtain:

Thus using the bound \(\left\| f\right\| _{T}<\infty \) we obtain

where the constant C does not depend on \(t_1\), \(t_2\) or \(\varphi \). Therefore, the mapping \(t\mapsto S\left( t\right) f_{0}\) is continuous in the \(*-\)weak topology.

We can now conclude the proof of Proposition 3.10. As proven above, for any fixed t, the operator \(S(t):{\mathcal {U}}_{M}\rightarrow {\mathcal {U}}_{M}\) is continuous and \({\mathcal {U}}_{M}\) is convex and compact when endowed with the \(*-\)weak topology. Using the Schauder fixed point theorem, for all \( \delta >0\), there exists a fixed point \({\hat{f}}_{\delta }\) of \(S(\delta )\) in \({\mathcal {U}}_{M}\). In addition, \({\mathcal {U}}_{M}\) is metrizable and hence sequentially compact. As shown in [18, Theorem 1.2], these properties imply that there is \({\hat{f}}\) such that \(S(t){\hat{f}} = {\hat{f}}\) for all t. Thus \({\hat{f}}\) is a stationary injection solution to (3.1). \(\square \)

We now prove Theorem 2.3.

Proof of Theorem 2.3 (existence)

Given a kernel K(x, y) satisfying (2.11), (2.12), it can be rewritten as

where

with \(p=\max \left\{ \lambda ,-\left( \gamma +\lambda \right) \right\} \) and the constants \(C_1>0\), \(C_2<\infty \) independent of x. Notice that the dependence of the function \(\Phi \) on x is due to the fact that we are not assuming the kernel K(x, y) to be a homogeneous function.

By definition of p, we have \(\gamma + 2 p =|\gamma +2\lambda |\ge 0\), and thus always \(p {\geqq }-\frac{\gamma }{2}\). On the other hand, by assumption, \(|\gamma +2\lambda |<1\), and thus also \(p<\frac{1-\gamma }{2}\). Conversely, we observe that kernels of the form (3.21) satisfying (3.22) with \(p {\geqq }-\frac{\gamma }{2}\) also satisfy (2.11), (2.12).
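
The elementary identity \(\gamma +2p=|\gamma +2\lambda |\) for \(p=\max \{\lambda ,-(\gamma +\lambda )\}\), together with the two resulting bounds on p, can be confirmed on a grid of rational parameters. The sketch below is only such a check; the function name `exponent_p` and the choice of grid are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product


def exponent_p(gamma: Fraction, lam: Fraction) -> Fraction:
    """p = max{lambda, -(gamma + lambda)}, as in the proof of Theorem 2.3."""
    return max(lam, -(gamma + lam))


# Grid of rational test values in [-2, 2] with step 1/6 (an arbitrary choice).
grid = [Fraction(k, 6) for k in range(-12, 13)]

for gamma, lam in product(grid, grid):
    p = exponent_p(gamma, lam)
    assert gamma + 2 * p == abs(gamma + 2 * lam)   # identity gamma + 2p = |gamma + 2*lambda|
    assert p >= -gamma / 2                         # hence p >= -gamma/2 always
    if abs(gamma + 2 * lam) < 1:
        assert p < (1 - gamma) / 2                 # and p < (1 - gamma)/2 in the existence regime

print("identity verified on", len(grid) ** 2, "parameter pairs")
```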

We use two levels of truncations. First, given \(\varepsilon \) with \(0<\varepsilon <1\) we define

where \(\Phi _\varepsilon \) is smooth, non-negative, and bounded by \(\frac{A}{\varepsilon ^{\sigma }}\) everywhere, and satisfies

Here A is a large constant independent of \(\varepsilon \); we take \(A=1\) when \(\Phi \) is unbounded, and assume it sufficiently large in a way that will be seen in the proof if \(\Phi \) is bounded. Concerning \(\sigma \), we take \(\sigma =0\) if \(p{\leqq }0\) for any \(\gamma \), \(\sigma >0\) arbitrarily small if \(p>0\) and \(\gamma {\leqq }0\), and \(0< \sigma <\frac{p}{\gamma }\) if \(p>0\) and \(\gamma >0\). We then have

The second level of truncation is to define

where \(\omega _{R_{*}}\in C^{\infty }_{0}({{\mathbb {R}}}^2_{+})\), \(0\le \omega _{R_*}\le 1\), and

Notice that, if \(\gamma {\leqq }0\) the truncation in \(\min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} \) in (3.23) does not have any effect, because we are only interested in the region where \(x{\geqq }1\) and \(y{\geqq }1\), due to the fact that the solutions we construct satisfy \(f((0,1))=0\).

By Proposition 3.10, for every \(\varepsilon \) and \(R_*\) there exists a stationary injection solution \(f_{\varepsilon ,R_{*}}\) satisfying

for any test function \(\varphi \in {C}_{c}{({{\mathbb {R}}}_{*})}\). As in the proof of Lemma 2.8 consider any \(z,\delta >0\) and take \(\chi _\delta \in C^\infty ({{\mathbb {R}}}_+)\) satisfying \(\chi _\delta (x) = 1\) if \(x {\leqq }z\), and \(\chi _\delta (x) = 0\) if \(x {\geqq }z+\delta \). Then \(\varphi (x) = x \chi _\delta (x) \) is a valid non-negative test function. Since \(\zeta _{R_*}\le 1\), we may employ the inequality \(\varphi \left( x+y\right) \zeta _{R_{*}}\left( x+y\right) \le \varphi \left( x+y\right) \) in (3.27), and conclude that for these test functions

Using the equalities derived in the proof of Lemma 2.8 and taking \(\delta \rightarrow 0\) then proves that

A lower bound for the left-hand side and an upper bound for the right-hand side of (3.28), both independent of \(R_{*}\), are computed next. Since \({\mathrm{supp}}\, \eta \subset [1,L_\eta ]\) and \(\Vert \eta \Vert \) is bounded, we have

where c is a constant independent of \(R_{*}\), bounded by \(L_{\eta } \Vert \eta \Vert \). On the other hand, we have \(K_{\varepsilon ,R_{*}}\left( x,y\right) {\geqq }\varepsilon >0\) for \(\left( x,y\right) \in \left[ 1,2 R_{*}\right] ^{2}\). Then,

Using that here

we obtain

Since \(x{\geqq }2z/3\) in the domain of integration, we obtain

which implies that

where \(C_{\varepsilon }\) is a numerical constant depending on \(\varepsilon \) but independent of \(R_{*}\). Since the right hand side is integrable on \([1,2R_{*}]\), Lemma 2.10 may be employed to obtain a bound

Since the support of \(f_{\varepsilon ,R_{*}}\) lies in \([1,2 R_{*}]\) we find that for all \(R_{*}\ge 1\)

where \({\bar{C}}_{\varepsilon }\) is a constant independent of \(R_{*}\). Following the same argument for arbitrary lower limit \(y\in [1,2R_{*}]\), we also obtain a decay bound

which obviously extends to \(y> 2R_{*}\) since \(f_{\varepsilon ,R_{*}}((2R_{*},\infty ))=0\).

Thus, estimate (3.32) implies that for each \(\varepsilon \) the family of solutions \(\{f_{\varepsilon ,R_{*}}\}_{R_{*}\ge 1}\) is contained in a closed unit ball of \({\mathcal {M}}_{+,b}\left( {\mathbb {R}}_*\right) \). This is a sequentially compact set in the \(*-\)weak topology, and thus by taking a subsequence if needed, we can find \(f_{\varepsilon }\in {\mathcal {M}}_{+,b}\left( {\mathbb {R}}_*\right) \) such that \(f_{\varepsilon }\left( \left( 0,1\right) \right) =0\) and

with \(R_{*}^{n}\rightarrow \infty \) as \(n\rightarrow \infty \). Note that then we can use the earlier “step-like” test-functions and the bounds (3.32) and (3.33) to conclude that also the limit functions satisfy similar estimates, namely,

Consider next a fixed test function \(\varphi \in C_{c}({{\mathbb {R}}}_*)\). Now for all large enough values of n, we have \(\varphi \left( x+y\right) \zeta _{R^n_{*}}\left( x+y\right) =\varphi \left( x+y\right) \) everywhere, since the support of \(\varphi \) is bounded. We claim that as \(n\rightarrow \infty \), the limit of (3.27) is given by

Since \(f_{\varepsilon ,R_{*}^{n}}\) has support in \([1,2 R_{*}^{n}]\), it follows that we may always replace \(K_{\varepsilon ,R_{*}^{n}}(x,y)\) in (3.27) by \(K_{\varepsilon }(x,y)\) without altering the value of the integral. By the above observations, it suffices to show that

for \(\mu _n(\text {d}x):=f_{\varepsilon ,R^n_{*}}\left( \text {d}x\right) \) and

Note that although \(\phi \in C_b({{\mathbb {R}}}_*^2)\), it typically would not have compact support. However, the earlier tail estimates suffice to control the large values of x, y, as we show in detail next.

We prove (3.37) by showing that every subsequence has a subsequence such that the limit holds. For notational convenience, let \(\mu _n\) denote the first subsequence and consider an arbitrary \(\varepsilon '>0\). We first regularize the support of \(\phi \) by choosing a function \(g:{{\mathbb {R}}}_+\rightarrow [0,1]\) which is continuous and for which \(g(r)=1\), for \(r\le 1\), and \(g(r)=0\), for \(r\ge 2\). We set \(\phi _M(x,y) := g\left( \frac{x}{M}\right) g\left( \frac{y}{M}\right) g\left( \frac{1}{M x}\right) g\left( \frac{1}{M y}\right) \phi (x,y)\). Then for every M, we have \(\phi _M\in C_c({{\mathbb {R}}}_*^2)\) and thus it is uniformly continuous. By (3.35), we may use the dominated convergence theorem to conclude that \(\int \phi _M(x,y)f_{\varepsilon }\left( \text {d}x\right) f_{\varepsilon }\left( dy\right) \rightarrow \int \phi (x,y)f_{\varepsilon }\left( \text {d}x\right) f_{\varepsilon }\left( dy\right) \) as \(M\rightarrow \infty \). Thus for all sufficiently large M, we have \(\big |\int \phi _M(x,y)f_{\varepsilon }\big ( \text {d}x\big ) f_{\varepsilon }\big ( dy\big )- \int \phi (x,y)f_{\varepsilon }\big ( \text {d}x\big ) f_{\varepsilon }( dy)\big |<\varepsilon '\). On the other hand, by the decay bound in (3.33) we can find a constant C which does not depend on \(R_*\) and for which \(\big |\int \phi _M(x,y)\mu _n(\text {d}x)\mu _n(dy)-\int \phi (x,y)\mu _n(\text {d}x)\mu _n(dy)\big |\le C M^{-\frac{1}{2}}\). We fix \(M=M(\varepsilon ')\) to be a value such that this second bound is also less than \(\varepsilon '\) for all n.
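To make the regularization concrete, the following sketch builds \(\phi _M\) from a given \(\phi \) using one admissible choice of g (piecewise linear between 1 and 2). The particular g, the sample function `phi`, and the value of `M` are assumptions made for illustration; the text only requires g to be continuous with the stated values.

```python
def g(r):
    """Continuous cutoff: 1 for r <= 1, 0 for r >= 2, linear in between."""
    if r <= 1.0:
        return 1.0
    if r >= 2.0:
        return 0.0
    return 2.0 - r


def phi_M(phi, M):
    """Return the regularized function phi_M(x, y) defined in the text."""
    def inner(x, y):
        cut = g(x / M) * g(y / M) * g(1.0 / (M * x)) * g(1.0 / (M * y))
        return cut * phi(x, y)
    return inner


# Hypothetical bounded continuous phi without compact support.
phi = lambda x, y: 1.0 / (1.0 + x * y)
M = 4.0
phi4 = phi_M(phi, M)

# phi_M agrees with phi on [1/M, M]^2 ...
assert phi4(1.0, 3.0) == phi(1.0, 3.0)
# ... and vanishes once a variable leaves (1/(2M), 2M).
assert phi4(8.0, 1.0) == 0.0
assert phi4(0.125, 1.0) == 0.0
```

The two factors \(g(x/M)\) and \(g(1/(Mx))\) cut off large and small values of x respectively, which is why \(\phi _M\) is supported in \(\left[ \frac{1}{2M},2M\right] ^{2}\).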

In order to conclude the proof of (3.37) it only remains to show that \(\int \phi _{M}\left( x,y\right) \mu _{n}\left( \text {d}x\right) \mu _{n}\left( dy\right) \) converges to \(\int \phi _{M}\left( x,y\right) f_{\varepsilon }\left( \text {d}x\right) f_{\varepsilon }\left( dy\right) \) as \(n\rightarrow \infty \). This follows from the fact that the convergence \(\mu _{n}\left( \text {d}x\right) \rightharpoonup f_{\varepsilon }\left( \text {d}x\right) \) as \(n\rightarrow \infty \) in the \(*-\)weak topology of the space \({\mathcal {M}} _{+,b}\left( \left[ \frac{1}{2M},2M\right] \right) \) implies the convergence \(\mu _{n}\left( \text {d}x\right) \mu _{n}\left( dy\right) \rightharpoonup f_{\varepsilon }\left( \text {d}x\right) f_{\varepsilon }\left( dy\right) \) as \(n\rightarrow \infty \) in the \(*-\)weak topology of the space \({\mathcal {M}}_{+,b}\left( \left[ \frac{1}{2M},2M\right] ^{2}\right) \). This result can be found, for instance, in [2, Theorem 3.2] for probability measures, which implies the result for arbitrary bounded measures by a simple rescaling argument.

Since \(f_\varepsilon \) is then a stationary solution to (1.3) with \(K=K_{\varepsilon }\), we can apply Lemma 2.8 directly, and conclude that

where c is defined in (3.29) and is independent of \(\varepsilon \). We now observe that (3.22) and (3.23)–(3.24) imply for all sufficiently small \(\varepsilon \)

where \(C_{0}>0\) is independent of \(\varepsilon \) and we used that \(\frac{x}{x+y}\in \left[ \frac{1}{3}, \frac{2}{3}\right] \). Combining this estimate with (3.38) as well as the fact that

we obtain

Therefore, we obtain the following estimates for the measures \(f_{\varepsilon }\left( \text {d}x\right) \):

where \({\tilde{C}}\) is independent of \(\varepsilon \).

Consider first the case \(\gamma \le 0\) and recall that then \(p\ge 0\) and \(z^\gamma \le 1\) for \(z\ge 1\). Since \(f_\varepsilon ((0,1))=0\), the bound (3.39) implies that for all \(z\ge 1\) we have

Since \(\gamma +2 p <1\), Lemma 2.10 implies then that for all \(y\ge 1\),

where the constant C does not depend on \(\varepsilon \). In particular, the measures \(x^{\gamma +p} f_{\varepsilon }\left( \text {d}x\right) \) then belong to a \(*\)-weak compact set, and there exists \(F\in {\mathcal {M}}_{+,b}\left( {\mathbb {R}}_{*}\right) \) such that

for some sequence \(( \varepsilon _{n}) _{n\in {\mathbb {N}}}\) with \(\lim _{n\rightarrow \infty }\varepsilon _{n}=0\). We denote \(f\left( \text {d}x\right) = x^{-\gamma -p} F\left( \text {d}x\right) \), and then \(f \in {\mathcal {M}}_{+}\left( {\mathbb {R}}_{*}\right) \). In addition, \(f((0,1))=0\) and it satisfies the tail estimate

It remains to consider the case \(\gamma >0\). Then (3.39) implies that

Using these bounds in item 3 of Lemma 2.10, we then find that for all \(y\ge 1\),

where the constant C does not depend on \(\varepsilon \). Hence, in this case the family of measures \(\left\{ f_{\varepsilon }\right\} _{\varepsilon >0}\) is contained in a \(*\)-weak compact set in \({\mathcal {M}}_{+,b}\left( {\mathbb {R}}_{*}\right) \). Therefore, there exists \(f\in {\mathcal {M}}_{+,b}\left( {\mathbb {R}}_{*}\right) \) such that

for some sequence \(\left( \varepsilon _{n}\right) _{n\in {\mathbb {N}}}\) with \(\lim _{n\rightarrow \infty }\varepsilon _{n}=0.\)

To obtain better tail bounds for the limit measure, let us first observe that by (3.45), there is a constant C such that for all \(\varepsilon \)

Therefore, applying item 2 of Lemma 2.10 with \(r=\frac{1}{2}\), and using the assumption \(\gamma +2 p <1\), we can adjust the constant C so that

Let then \(y,R\ge 1\) be such that \(y<R\) but they are otherwise arbitrary. We choose a test function \(\varphi \in C_c({{\mathbb {R}}}_*)\), such that \(0{\leqq }\varphi {\leqq }1\), \(\varphi (x)=1\) for \(y{\leqq }x{\leqq }R\), and \(\varphi (x)=0\) for \(x{\geqq }2R\) and for \(x\le \frac{1}{2} y\). Then, if also \(\varepsilon \le (2R)^{-\gamma }\), we have
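One concrete test function with the properties required above is a trapezoid. The sketch below is an assumption about one possible choice (piecewise linear ramps), not the construction used by the authors; the name `make_test_function` is hypothetical.

```python
def make_test_function(y, R):
    """Piecewise-linear phi with phi = 1 on [y, R] and phi = 0 outside (y/2, 2R)."""
    assert 1 <= y < R

    def phi(x):
        if x <= y / 2 or x >= 2 * R:
            return 0.0
        if x < y:
            return (x - y / 2) / (y / 2)  # ramp up on (y/2, y)
        if x <= R:
            return 1.0
        return (2 * R - x) / R            # ramp down on (R, 2R)

    return phi
```

Such a \(\varphi \) is continuous, takes values in [0, 1], and has compact support in \({{\mathbb {R}}}_*\), so it is admissible in the weak formulation.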

where for values \(y\le 2\) the estimate follows by using \(f_{\varepsilon }((0,1))=0\) and then \(a=1\) in (3.49). Applying this with \(\varepsilon =\varepsilon _n\) and then taking \(n\rightarrow \infty \) proves that

Here we may take \(R\rightarrow \infty \), and using the monotone convergence theorem we can conclude that f satisfies a tail estimate identical to the earlier case with \(\gamma \le 0\); namely, also for \(\gamma >0\) we can find a constant C such that

It only remains to take the limit \(\varepsilon _{n}\rightarrow 0\) in (3.36). Suppose that \(\varphi \in C_{c}\left( {\mathbb {R}}_{*}\right) . \) Then, in the term containing \(\varphi (x+y)\) we have that the integrand is different from zero only in a bounded region. Using then that for any \(q\in {{\mathbb {R}}}\) we have \( \lim _{\varepsilon \rightarrow 0} (x y)^{q} K_{\varepsilon }\left( x,y\right) = (x y)^{q} K\left( x,y\right) \) uniformly in compact subsets of \({{\mathbb {R}}}_*\), as well as (3.43) and (3.48), we obtain that the limit of that term is

The terms containing \(\varphi \left( x\right) \) or \(\varphi \left( y\right) \) can be treated analogously due to the symmetry under the transformation \( x\leftrightarrow y.\) We then consider the limit of the term containing \( \varphi \left( x\right) \) where \(\varphi \in C_{c}\left( {\mathbb {R}}_{*}\right) .\) Our goal is to show that the contribution to the integral due to regions \(\{y{\geqq }M\}\) where M is very large, can be made arbitrarily small as \(M\rightarrow \infty \), uniformly in \(\varepsilon .\) Suppose that M is chosen sufficiently large, so that the support of \(\varphi \) is contained in \(\left( 0,M\right) \). We then have the following identity:

Given that only values with \(x{\geqq }1\) may contribute, and x is in a bounded region contained in \(\left[ 1,M\right] \), we obtain, using (3.39), an estimate

Using (3.40) we can bound the second term uniformly,

where the constant C is always independent of \(\varepsilon \), although it might need to be adjusted at each inequality. Therefore, the second term on the right hand side of (3.51) tends to zero as \(\varepsilon \rightarrow 0.\)

In order to estimate the first term we need to consider separately different ranges of the values of the exponents p and \(\gamma \). We claim that for \(1{\leqq }x {\leqq }C_0 {\leqq }M,\) \(y{\geqq }M\), where \({\mathrm{supp}} \varphi \subset [0,C_0]\), the following estimates hold for some constants C, \(C_*>0\) which do not depend on \(\varepsilon ,\) M:

1. If \(\gamma {\leqq }0\) and \(p{\leqq }0\) we have

$$\begin{aligned} \min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} \Phi _{\varepsilon }\left( \frac{x}{x+y}, x\right) {\leqq }C \, . \end{aligned}\tag{3.52}$$

2. If \(\gamma >0\) and \(p{\leqq }0\) we have

$$\begin{aligned}&\min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} \Phi _{\varepsilon }\left( \frac{x}{x+y}, x\right) \\&\quad {\leqq }C\left( y^{\gamma +\lambda }+y^{-\lambda }\right) \chi _{\left\{ y\le \left( \frac{1}{\varepsilon }\right) ^{\frac{1}{\gamma }}\right\} }+\frac{C}{\varepsilon }\left( y^{\lambda }+y^{-\gamma -\lambda }\right) \chi _{\left\{ y>\left( \frac{1}{\varepsilon }\right) ^{\frac{1}{\gamma }}\right\} }\, , \end{aligned}\tag{3.53}$$

where \(\chi _{U}\) is the characteristic function of the set \(U\).

3. If \(\gamma {\leqq }0\) and \(p>0\) we have

$$\begin{aligned} \min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} \Phi _{\varepsilon }\left( \frac{x}{x+y}, x\right) {\leqq }C\left( y^{\gamma +\lambda }+y^{-\lambda }\right) \chi _{\left\{ y{\leqq }C_{*} \varepsilon ^{-\frac{\sigma }{p}}\right\} }\,. \end{aligned}\tag{3.54}$$

4. If \(\gamma >0\) and \(p>0\) we have

$$\begin{aligned} \min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} \Phi _{\varepsilon }\left( \frac{x}{x+y}, x\right) {\leqq }C\left( y^{\gamma +\lambda }+y^{-\lambda }\right) \chi _{\left\{ y{\leqq }C_{*} \varepsilon ^{-\frac{\sigma }{p}}\right\} }\, . \end{aligned}\tag{3.55}$$

In case 1 we use the fact that, since \(p{\leqq }0\), we have \(\sigma =0\). Then (3.25) implies that \(\Phi _{\varepsilon }\left( s,x\right) {\leqq }C\). On the other hand, using that \(\gamma {\leqq }0\) and \(x+y{\geqq }x{\geqq }1\), we have \(\min \left\{ \left( x+y\right) ^{\gamma },\frac{1}{\varepsilon }\right\} {\leqq }1\), whence (3.52) follows.
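The elementary bound on the truncated power weight used in case 1 can be spot-checked numerically. The helper name `truncated_weight` and the sample values of \(\gamma \), \(\varepsilon \), x and y below are illustrative assumptions.

```python
def truncated_weight(x, y, gamma, eps):
    """min{(x + y)^gamma, 1/eps}, the truncated power weight appearing in (3.52)."""
    return min((x + y) ** gamma, 1.0 / eps)


# With gamma <= 0 and x + y >= 1, the weight never exceeds 1.
gamma, eps = -0.5, 0.1
for x in (1.0, 2.0, 5.0):
    for y in (10.0, 100.0, 1e4):
        assert truncated_weight(x, y, gamma, eps) <= 1.0
```

For \(\gamma {\leqq }0\) the cap \(\frac{1}{\varepsilon }\) is never active in this region, in line with the earlier remark that the truncation has no effect when \(x{\geqq }1\) and \(y{\geqq }1\).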