Abstract

In this work, we present purely subword-based alternatives to the fastText word embedding algorithm. The alternatives are modifications of the original fastText model that rely on subword information only, eliminating the reliance on word-level vectors and, at the same time, dramatically reducing the size of the embeddings. The proposed models differ in their subword extraction method: character n-grams, suffixes, and byte-pair encoding units. We test the models on morphological analysis and lemmatization for three morphologically rich languages: Finnish, Russian, and German. The results are compared with those of other recent subword-based models, and the proposed models score consistently higher.
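To make the first two extraction methods concrete, the sketch below shows one possible way to split a word into character n-grams and suffixes. It is an illustration only, not the authors' implementation: the function names, the `<`/`>` boundary markers and the 3 to 6 n-gram range follow common fastText conventions, and the suffix definition (word-final substrings up to `max_len` characters) is an assumption. Byte-pair encoding units are omitted because they require a trained segmentation model.

```python
from typing import List


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> List[str]:
    """Return character n-grams of a word wrapped in boundary markers.

    The "<" and ">" markers and the default 3..6 range mirror the usual
    fastText convention; they are assumptions, not values from the paper.
    """
    marked = f"<{word}>"
    return [
        marked[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(marked) - n + 1)
    ]


def suffixes(word: str, max_len: int = 4) -> List[str]:
    """Return word-final substrings of length 1..max_len.

    This definition of "suffix" is a hypothetical simplification.
    """
    return [word[-k:] for k in range(1, min(max_len, len(word)) + 1)]
```

For example, `char_ngrams("cat", 3, 4)` yields `["<ca", "cat", "at>", "<cat", "cat>"]`, and `suffixes("talossa", 3)` yields `["a", "sa", "ssa"]`. In a subwords-only model, a word's vector would then be composed from the vectors of such units rather than looked up in a word-level table.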

Notes

http://morpho.aalto.fi/projects/morpho/

https://github.com/google/sentencepiece

https://github.com/ispras-texterra/babylondigger

ACKNOWLEDGMENTS

We thank Vladimir Mayorov and Sergey Korolyov for assisting with the development and experiments, and Denis Turdakov, Ivan Andrianov, Hrant Khachatryan, and Vladislav Trifonov for feedback and insightful discussions.

Appendices

APPENDIX A

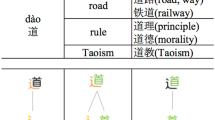

COMPARISON OF VOCABULARIES FOR ENGLISH AND MORPHOLOGICALLY RICH LANGUAGES

APPENDIX B

ILLUSTRATION OF ORIGINAL FASTTEXT AND THE PROPOSED ALTERNATIVES

APPENDIX C

ILLUSTRATION OF THE NEURAL NETWORK FOR MORPHOLOGICAL ANALYSIS AND LEMMATIZATION

APPENDIX D

COMPARISON OF PROPOSED MODELS AND SIMILAR SUBWORDS-ONLY APPROACHES

APPENDIX E

COMPARISON OF FASTTEXT AND PROPOSED MODELS

APPENDIX F

NEURAL NETWORK-BASED ANALYZER’S HYPERPARAMETERS

APPENDIX G

THE PERFORMANCE OF BPE-BASED MODELS

APPENDIX H

THE PERFORMANCE OF BPE-BASED MODELS BASED ON VOCABULARY SIZE AND LANGUAGE

Cite this article

Ghukasyan, T., Yeshilbashyan, Y. & Avetisyan, K. Subwords-Only Alternatives to fastText for Morphologically Rich Languages. Program Comput Soft 47, 56–66 (2021). https://doi.org/10.1134/S0361768821010059
