Abstract

A Markov tree is a probabilistic graphical model for a random vector indexed by the nodes of an undirected tree encoding conditional independence relations between variables. One possible limit distribution of partial maxima of samples from such a Markov tree is a max-stable Hüsler–Reiss distribution whose parameter matrix inherits its structure from the tree, each edge contributing one free dependence parameter. Our central assumption is that, upon marginal standardization, the data-generating distribution is in the max-domain of attraction of the said Hüsler–Reiss distribution, an assumption much weaker than the one that data are generated according to a graphical model. Even if some of the variables are unobservable (latent), we show that the underlying model parameters are still identifiable if and only if every node corresponding to a latent variable has degree at least three. Three estimation procedures, based on the method of moments, maximum composite likelihood, and pairwise extremal coefficients, are proposed for usage on multivariate peaks over thresholds data when some variables are latent. A typical application is a river network in the form of a tree where, on some locations, no data are available. We illustrate the model and the identifiability criterion on a data set of high water levels on the Seine, France, with two latent variables. The structured Hüsler–Reiss distribution is found to fit the observed extremal dependence patterns well. The parameters being identifiable we are able to quantify tail dependence between locations for which there are no data.

Similar content being viewed by others

Notes

According to the report of the Organisation for Economic Co-operation and Development (OECD) Preventing the flooding of the Seine in the Paris – Ile de France region - p.4.

References

Asadi, P., Davison, A., Engelke, S.: Extremes on river networks. Annals. Appl. Stat. 9, 2023–2050 (2015)

Beirlant, J., Goegebeur, Y., Segers, J., Teugels, J., De Waal, D., Ferro, C.: Statistics of Extremes: Theory and Applications. Wiley Series in Probability and Statistics. Wiley (2004)

Coles, S.G., Tawn, J.A.: Modelling extreme multivariate events. J. Royal. Stat. Soc. Ser. B 53(2), 377–392 (1991)

Davison, A.C., Hinkley, D.V.: Bootstrap Methods and their Application. Cambridge Series in Statistical and Probabilistic Mathematics. Cambridge University Press, Cambridge (1997)

de Haan, L., Ferreira, A.: Extreme Value Theory: An Introduction. Springer Series in Operations Research and Financial Engineering. Springer, New York (2007)

Drees, H., Huang, X.: Best attainable rates of convergence for estimators of the stable tail dependence function. J. Multivar. Anal. 64(1), 25–46 (1998)

Einmahl, J., Kiriliouk, A., Segers, J.: A continuous updating weighted least squares estimator of tail dependence in high dimensions. Extremes 21 (2), 205–233 (2018)

Einmahl, J., Krajina, A., Segers, J.: An M-estimator for tail dependence in arbitrary dimensions. Ann. Stat. 40(3), 1764–1793 (2012)

Engelke, S., Hitz, A.S.: Graphical models for extremes. J. Royal. Stat. Soc. Ser. B 82(3), 1–38 (2020)

Engelke, S., Malinowski, A., Kabluchko, Z., Schlather, M.: Estimation of Hüsler–Reiss distributions and Brown–Resnick processes. J. Royal. Stat. Soc. Ser. B 77, 239–265 (2015)

Genton, M.G., Ma, Y., Sang, H.: On the likelihood function of a Gaussian max-stable processes. Biometrika 98(2), 481–488 (2011)

Gissibl, N., Klüppelberg, C.: Max-linear models on directed acyclic graphs. Bernoulli 24(4A), 2693–2720 (2018)

Hüsler, J., Reiss, R.: Maxima of normal random vectors: between independence and complete dependence. Stat. Probab. Lett. 7, 283–286 (1989)

Huang, X.: Statistics of bivariate extremes. Tinbergen Institute Research Series, 22 (1992)

Huser, R., Davison, A.C.: Composite likelihood estimation for the Brown–Resnick process. Biometrika 100(2), 511–518 (2013)

Kiriliouk, A., Segers, J., Tafakori, L.: An estimator of the stable tail dependence function based on the empirical beta copula. Extremes 21 (4), 581–600 (2018)

Klüppelberg, C., Sönmez, E.: Max-linear models on infinite graphs generated by Bernoulli bond percolation. arXiv:1804.06102 (2018)

Koller, D., Friedman, N.: Probabilistic Graphical Models: Principles and Techniques. MIT Press, Cambridge (2009)

Krupskii, H.J.P.: CopulaModel: CopulaModel: Dependence Modeling with Copulas. R package version 0.6 (2014)

Lauritzen, S.: Graphical Models. Oxford University Press, Oxford (1996)

Lee, D., Joe, H.: Multivariate extreme value copulas with factor and tree dependence structures. Extremes 21, 147–176 (2018)

Nikoloulopoulos, A.K., Joe, H., Li, H.: Extreme value properties of multivariate t copulas. Extremes 12(2), 129–148 (2009)

Papastathopoulos, I., Strokorb, K.: Conditional independence among max-stable laws. Stat. Probab. Lett. 108, 9–15 (2016)

Perfekt, R.: Extremal behaviour of stationary Markov chains with applications. Ann. Appl. Probab. 4(2), 529–548 (1994)

Resnick, S.: Extreme values, regular variation, and point processes. Applied probability. Springer (1987)

Rootzén, H., Tajvidi, N.: Multivariate generalized Pareto distributions. Bernoulli 12(5), 917–930 (2006)

Segers, J.: Multivariate regular variation of heavy-tailed Markov chains. arXiv:math/0701411 (2007)

Segers, J.: One- versus multi-component regular variation and extremes of Markov trees. Adv. Appl. Probab., 52(3):to appear (2020)

Smith, R.L.: The extremal index for a Markov chain. J. Appl. Probab. 29(1), 37–45 (1992)

Wainwright, M., Jordan, M.: Graphical models, exponential families, and variational inference. Foundations. Trends. Mach. Learn. 1(1–2), 1–305 (2008)

Yu, H., Uy, W., Dauwels, J.: Modeling spatial extremes via ensemble-of-trees of pairwise copulas. IEEE Trans. Signal. Process. 65(3), 571–585 (2017)

Yun, S.: The extremal index of a higher-order stationary Markov chain. Ann. Appl. Probab. 8(2), 408–437 (1998)

Acknowledgments

We wish to thank the two Reviewers and the Associate Editor for a wealth of valuable remarks and suggestions, which helped us to enhance the understanding of the place of our models in the existing literature and of our contribution to it. Stefka Asenova is also grateful to David Lee for the clarifications and indications on the model in Lee and Joe (2018).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

1.1 A.1 Relation to extremal graphical models in Engelke and Hitz (2020)

Engelke and Hitz (2020) introduce graphical models for extremes in terms of the multivariate Pareto distribution associated to a simple max-stable distribution G. We briefly review their approach and compare it with ours in case G is a Hüsler–Reiss distribution. Let V = {1,…,d} and let X = (Xv,v ∈ V ) be a random vector with unit-Pareto margins. The condition that X ∈ D(G) is equivalent to

By a direct calculation, it follows that

for \(z \in (0, \infty )^{V}\), from which

where Y = (Yv,v ∈ V ) is a random vector whose distribution function is equal to the right-hand side in Eq. 24. The law of Y is a multivariate Pareto distribution, which, upon a change in location, is a special case of the multivariate generalized Pareto distributions arising in Rootzén and Tajvidi (2006) and Beirlant et al. (2004, Section 8.3) as limit distributions of multivariate peaks over thresholds.

Assuming that Y is absolutely continuous, its support is equal to the L-shaped set \(\{ y \in (0, \infty )^{V} : \max \limits _{v \in V} Y_{v} > 1 \}\) or a subset thereof, making conditional independence notions related to density factorizations ill-suited for Y. This is why Engelke and Hitz (2020) study conditional independence relations for the random vector Yu defined in distribution as Y ∣Yu > 1 for u ∈ V. According to Engelke and Hitz (2020, Definition 2), the law of Y is defined to be an extremal graphical model with respect to some graph \(\mathcal {G}\) if for all u ∈ V, the law of Yu satisfies the global Markov property with respect to \(\mathcal {G}\). Note that Y itself is not required to satisfy the said Markov property.

The multivariate Pareto distribution derived through Eq. 24 from the Hüsler–Reiss distribution G = HΛ is referred to in Engelke and Hitz (2020) as the Hüsler–Reiss Pareto distribution. In their article, the term Hüsler–Reiss graphical models is then used for Hüsler–Reiss Pareto distributions that are extremal graphical models.

To show the relation with our approach, note that Eq. 25 implies that, for all u ∈ V, we have

Recall the tail tree (Ξu,v,v ∈ V ∖ u) in Eq. 7 and put Ξu,u = 0. Equations 10 and Eq. 13 in combination with Theorem 2 in Segers (2020) and the continuous mapping theorem imply that

where ζ is a unit-Pareto random variable, independent of the log-normal random vector (Ξu,v,v ∈ V ). Comparing the two previous limit relations, we find that Yu is equal in distribution to (ζΞu,v,v ∈ V ). The representation \(\ln {\Xi }_{u,v} = {\sum }_{e \in ({u} \rightsquigarrow {v})} \ln M_{e}\) as path sums starting from u over independent Gaussian increments \(\ln M_{e}\) along the edges implies that the Gaussian vector \((\ln {\Xi }_{u,v}, v \in V)\) satisfies the global Markov property with respect to \(\mathcal {T}\). Since ζ is independent of (Ξu,v,v ∈ U) this Markov property then also holds for (ζΞu,v,v ∈ V ) and thus also for Yu. But this means exactly that the multivariate Pareto distribution associated to the Hüsler–Reiss distribution with parameter matrix Λ(𝜃) in Eq. 5 is an extremal graphical model with respect to \(\mathcal {T}\).

By way of comparison, the random vector Z∗ constructed via properties (Z1)–(Z2) in Section 2.3 is not max-stable nor multivariate Pareto, but it satisfies the global Markov property with respect to \(\mathcal {T}\) and it belongs to D(HΛ(𝜃)), motivating the chosen structure of Λ(𝜃) in Eq. 5. In Section 2.2, our assumption on ξ after transformation to X with unit-Pareto margins is that X ∈ D(HΛ(𝜃)). In this sense, we require that the extremal dependence of ξ is like the one of the graphical model Z∗. Our approach is thus different from the one in Engelke and Hitz (2020), who postulate a new definition of extremal graphical models for multivariate Pareto vectors, but without regard for the max-domain of attraction of the corresponding max-stable distributions. Still, for graphical models with respect to trees, both methods arrive at the same structure for the Hüsler–Reiss parameter matrix Λ(𝜃).

1.2 A.2 Comparison with the Lee–Joe structured Hüsler–Reiss model

Lee and Joe (2018) already proposed a way to bring structure to the parameter matrix \({\Lambda } = (\lambda _{ij}^{2})_{i,j=1}^{d}\) of a d-variate max-stable Hüsler–Reiss distribution. Recall that Hüsler and Reiss (1989) studied the asymptotic distribution of the component-wise maxima of a triangular array of row-wise independent and identically distributed Gaussian random vectors, the n-th row having correlation matrix ρ(n). Assuming \((1 - \rho _{ij}(n)) \ln (n) \to \lambda _{ij}^{2}\) as \(n \to \infty \), they found the limit to be the distribution bearing their name. Motivated by this property, Lee and Joe (2018) propose to set \(\lambda _{ij}^{2} = (1 - \rho _{ij}) \nu \) where \(\rho = (\rho _{ij})_{i,j=1}^{d}\) is a structured correlation matrix and ν > 0 is a free parameter. They then introduce the Hüsler–Reiss distributions that result from imposing on ρ the structure of a factor model or the one of a p-truncated vine. If p = 1, the latter becomes a Markov tree and we can compare their model with ours. In their case, a free correlation parameter αe ∈ (− 1, 1) is associated to each edge e ∈ E of the tree on V = {1,…,d}. The correlation matrix ρ of the resulting Gaussian graphical model is

The Lee–Joe model for the structured Hüsler–Reiss matrix ΛLJ derived from ρ is therefore

The model in Eq. 26 is to be compared with the one in our Eq. 5. The former has (d − 1) + 1 = d free parameters, (αe,e ∈ E) and ν, whereas the latter has only d − 1 free parameters (𝜃e,e ∈ E). In Eq. 5, the Hüsler–Reiss parameters satisfy

where we write λe = λab for e = (a,b) ∈ E. In contrast, the Lee–Joe parameter matrix in Eq. 26 only satisfies this additivity relation asymptotically as \(\nu \to \infty \). For instance, on a tree with d = 3 nodes and edges (1, 2) and (2, 3), i.e., a chain, their and our models satisfy respectively

Since the Lee–Joe parameter ν > 0 takes the role of \(\ln (n)\) in the Hüsler–Reiss limit relation, we can think of it as being large. In this interpretation, our parametrization becomes a limiting case of the one of Lee and Joe (2018).

Whereas the Lee–Joe parametrization is motivated from the limit result in Hüsler and Reiss (1989) for row-wise maxima of Gaussian triangular arrays, ours is motivated as the max-stable attractor of certain regularly varying Markov trees as in Segers (2020), the vector Z∗ in Section 2.3 serving as example. A possible advantage of our structure is that the resulting multivariate Pareto vector falls into the framework of conditional independence for such vectors is an extremal graphical model as in Engelke and Hitz (2020, Definition 2), as discussed in Appendix A. In general, this is not true for the multivariate Pareto vector induced by the Lee–Joe structure. For the trivariate tree in the preceding paragraph, for instance, the criterion in Proposition 3 in Engelke and Hitz (2020) is easily checked to be verified for our matrix Λ but not for the one of Lee and Joe (2018).

For the Seine data, we compare the fitted Lee–Joe tail dependence model with ours. In order to avoid possible identifiability issues for the Lee–Joe parameters, we suppress the nodes with latent variables and use the right-hand tree in Fig. 10 for the d = 5 observable ones, corresponding to the five locations in the dataset. The estimation method of Lee and Joe (2018) is based on pairwise copulas and annual maxima via composite likelihood. For year y and for variable j ∈{1,…,d}, let my,j be the maximum of all observations for that variable and that year, insofar available. These maxima are reported in Table 2 and their availability depends on the variable, i.e., on the location. For Melun there are only 15 such annual maxima in comparison to 33 for Nemours. For each variable j, transform these maxima to uniform margins \(\hat {u}_{y,j}\) using the empirical cumulative distribution function based on all available maxima for that variable. Note that for this transformation, Lee and Joe (2018) rely on estimated generalized extreme value distributions instead. For variables i,j ∈{1,…,d}, let \(\mathcal {Y}_{ij}\) be the set of years y for which annual maxima are available for both variables. For the pair (Paris, Meaux) this is the period 1999–2019 while for the pair (Paris, Nemours) this is 1990–2019. Let c(u,v; λ2) denote the bivariate Hüsler–Reiss copula density with parameter λ2. Following Lee and Joe (2018), we estimate the free parameters in Eq. 26 by maximizing a composite likelihood: letting \(\lambda _{ij}^{2}(\alpha , \nu )\) denote the right-hand side in Eq. 26, the parameter estimates are

For the implementation, we relied on the R package CopulaModel (Krupskii 2014).

Next, we compute bivariate extremal coefficients and compare them with the non-parametric ones on the one hand and with those obtained using our own model on the other hand. The points in Fig. 13 being some distance away from the diagonal, the two methods indeed seem to give somewhat different results. Moreover, there is less concordance between the non-parametric estimates and the ones from the Lee–Joe model than between the non-parametric ones and those resulting from our model: compare the red crosses in Fig. 13 with those in the left-hand plots in Fig. 11.

Bivariate extremal coefficients comparison: using the modelling and estimation method of Lee and Joe (2018, Section 4–5) and those proposed in this paper

1.3 A.3 Proof of Proposition 2.1

We show that the stdf l of Z∗ is equal to lU in Eq. 4 with U = V and Λ = Λ(𝜃). Since the margins of Z∗ are unit-Fréchet, they are tail equivalent to the unit-Pareto distribution, so that standardization to the unit-Pareto distribution is unnecessary. By the inclusion–exclusion principle,

for \(x \in (0, \infty )^{d}\). For any non-empty \(W\subseteq V\) and any u ∈ W, it holds by Eq. 7 in combination with Theorem 2 in Segers (2020) that

with ζ a unit-Pareto variable independent of (Ξuv,v ∈ V ∖ u). Using the fact that 1/ζ is a uniform variable on [0, 1] and setting Ξuu = 1 we have

upon a change of variable \(y = \exp (-z)\). Since \((\ln {\Xi }_{uv}, v \in V \setminus u)\) is multivariate normal with mean vector μV,u(𝜃) and covariance matrix ΣV,u(𝜃), we obtain from Eq. 27 that the stdf of Z∗ is equal to \(- \ln H_{\Lambda (\theta )}(1/x_{1}, \ldots , 1/x_{d})\), with HΛ the cumulative distribution function in Eqs. 3.5–3.6 in Hüsler and Reiss (1989), but with unit-Fréchet margins rather than Gumbel ones. By Remark 2.5 in Nikoloulopoulos et al. (2009), this stdf is equal to the one given in Eq. 4, as required.

1.4 A.4 Choice of node neighborhoods and parameter identifiability

The MM estimator in Eq. 18 and the CL estimator in Section 4.2 involve the choice of subsets \(W_{u} \subseteq U\) for u ∈ U. These sets or neighborhoods need to be chosen in such a way that the parameter vector 𝜃 is still identifiable from the collection of covariance matrices \({\Sigma }_{W_{u},u}(\theta )\) for u ∈ U and thus from the path sums pa,b for a,b ∈ Wu and u ∈ U. Here we illustrate this issue with an example.

Consider the following structure on five nodes where all variables are observable except for the one on node 2:

Clearly, the parameter vector 𝜃 = (𝜃12,𝜃23,𝜃34,𝜃25) is identifiable from the distribution of the observable variables because the criterion of Proposition 3.1 is satisfied: the only node whose variable is latent has degree three.

First, consider the following subsets Wu for u ∈{1, 3, 4, 5}:

The four 1 × 1 covariance matrices \({\Sigma }_{W_{u},u}(\theta )\) that correspond to these subsets are

We are not able to identify the parameter 𝜃 because the set of path sums {p15,p34} is too small: we have only two equations andfor four unknowns.

Second, consider instead the following node sets

The four covariance matrices \({\Sigma }_{W_{u},u}(\theta )\) are now

Clearly, the four edge parameters are identifiable from these covariance matrices.

1.5 A.5 Finite-sample performance of the estimators

We assess the performance of the three estimators introduced in Section 4 by numerical experiments involving Monte Carlo simulations.

Let \(\xi ^{\prime }=(\xi ^{\prime }_{v}, v\in V)\) be a random vector with continuous joint probability density function and satisfying the global Markov property, Eq. 6, with respect to the graph in Fig. 14. Let fu(xu) for any u ∈ V be the marginal density function of the variable \(\xi ^{\prime }_{u}\) and let xj↦fj∣v(xj∣xv) be the conditional density function of \(\xi ^{\prime }_{j}\) given \(\xi ^{\prime }_{v} = x_{v}\). For any u ∈ V the joint density function of \(\xi ^{\prime }\) is

with \(E_{u} \subseteq E\) the set of edges directed away from u, i.e., (v,j) ∈ Eu if and only if v = u or v separates u and j. The joint density f is determined by d − 1 bivariate densities fvj. It would seem that the joint density f depends on u, but this is not so, as can be confirmed by writing out the bivariate conditional densities. We make two parametric choices: the univariate margins fu are unit Fréchet densities, \(f_{j}(x_{j})=\exp (-1/x_{j})/{x_{j}^{2}}\) for \(x_{j} \in (0, \infty )\), and the bivariate margins for each pair of variables on adjacent vertices j,v are Hüsler–Reiss distributions with parameter 𝜃jv. Hence, \(\xi ^{\prime }\) corresponds to the vector Z∗ in Section 2.3.

Tree used for the graphical model underlying the data-generating process in the simulation study in Appendix A. The value of the parameters are 𝜃12 = 0.1, 𝜃23 = 0.3, 𝜃34 = 0.8, 𝜃35 = 0.5, 𝜃16 = 0.2 and 𝜃17 = 1.2. Variables \(\xi ^{\prime }_{1}\) and \(\xi ^{\prime }_{3}\) are latent

To generate an observation from the left hand-side of Eq. 28 above we use the right hand-side of that equation, proceeding iteratively, walking along paths starting from u using the conditional densities. An observation of \(\xi _{j}^{\prime }\) given \(\xi ^{\prime }_{v} = x_{v}\) is generated via the inverse function of the cdf xj↦Fj|v(xj∣xv), the conditional cdf of \(\xi ^{\prime }_{j}\) given \(\xi ^{\prime }_{v} = x_{v}\). To do so, the equation Fj|v(xj∣xv) − p = 0 is solved numerically as a function in xj for fixed p ∈ (0, 1). The choice of the Hüsler–Reiss bivariate distribution gives the following expression for Fj∣v(xj∣xv):

After generating all the variables \((\xi ^{\prime }_{v})_{v\in V}\) in this way, independent standard normal noise \(\varepsilon \sim \mathcal {N}_{d}(0,I_{d})\) is added. Although the distribution of \(\xi =\xi ^{\prime }+\varepsilon \) is not necessarily a graphical model with respect to the graph in Fig. 14, it is still in the max-domain of attraction of a Hüsler–Reiss distribution with parametric matrix as in Eq. 5. Hence the vector ξ is still in the class of models under consideration in Section 2.2. The data on nodes 1 and 3 are discarded and not used in the estimation so as to mimic a model with two latent variables, ξ1 and ξ3; according to Proposition 3.1, the six dependence parameters are still identifiable. In this way, we generate 200 samples of size n = 1000. The estimators are computed with threshold tuning parameter k ∈{25, 50, 100, 150, 200, 300}.

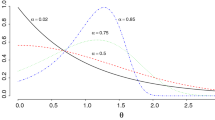

The bias, standard deviation and root mean squared errors of the three estimators are shown in Fig. 15 and Fig. 16 for the six parameters. The MME and CLE are computed with the sets Wu being W2 = {2, 4, 5, 6, 7}, W4 = W5 = {2, 4, 5}, and W6 = W7 = {2, 6, 7}. As is to be expected, the absolute value of the bias is increasing with k, while the standard deviation is decreasing and the mean squared error has a U-shape and eventually increases with k. The MME and CLE have very similar properties. For larger values of the true parameter, e.g. 𝜃34 = 0.8 and 𝜃17 = 1.2, all the three estimators perform in a comparable way. The ECE tends to have larger absolute bias and standard deviation for smaller values of the true parameters.

Bias (left), standard deviation (middle) and root mean squared error (right) of the method of moment estimator (MME), composite likelihood estimator (CLE) and pairwise extremal coefficient estimator (ECE) of the parameters 𝜃12 (top), 𝜃23 (middle), and 𝜃34 (bottom) as a function of the threshold parameter k. Model and settings as described in Appendix A

Bias (left), standard deviation (middle) and root mean squared error (right) of the method of moment estimator (MME), composite likelihood estimator (CLE) and pairwise extremal coefficient estimator (ECE) of the parameters 𝜃35 (top), 𝜃16 (middle), and 𝜃17 (bottom) as a function of the threshold parameter k. Model and settings as described in Appendix A

1.6 A.6 Seine case study: data preprocessing

The data represent water level in centimeters at the five locations mentioned above and were obtained from Banque Hydro, http://www.hydro.eaufrance.fr, a web-site of the Ministry of Ecology, Energy and Sustainable Development of France providing data on hydrological indicators across the country. The dataset encompasses the period from January 1987 to April 2019 with gaps for some of the stations.

Two major floods in Paris make part of our dataset: the one in June 2016 when the water level was measured at 6.01 m and the one at the end of January 2018 with water levels slightly less than 6 m measured in Paris too. A flood of similar magnitude to the ones in 2016 and 2018 occurred in 1982. By way of comparison, the biggest reportedFootnote 1 flood in Paris is the one in 1910 when the level in Paris reached 8.6 m.

Table 1 shows the average and the maximum water level per station observed in the complete dataset. The maxima of Paris, Meaux, Melun and Nemours occurred either during the floods in June 2016 or the floods in January 2018, which can be seen from Table 2 which displays the annual maxima at the five locations and the date of occurrence.

From Table 2 it can be observed that for many of the years the dates of maxima occurrence identify a period of several consecutive days during which the extreme event took place. For instance the maxima in 2007 occurred all in the period 4–8 March, which suggests that they make part of one extreme event. Similar examples are the periods 25–31 Dec 2010, 4–12 Feb 2013, 2–4 June 2016, etc. For most of the years this period spans between 3 and 7 days. We will take this into account when forming independent events from the dataset. In particular we choose a window of 7 consecutive calendar days within which we believe the extreme event have propagated through the seven locations. We have experimented with different length of that window, namely 3 and 5 days event period, but we have found that the estimation and analysis results are robust to that choice.

Figure 17 illustrates the water levels attained at the different locations during selected years from Table 2. The maxima of Sens, Nemours and Meaux seem to be relatively homogeneous compared to the maxima in Paris.

Plot of maxima attained at each location during selected events from Table 2

For all of the stations water level is recorded several times a day and we take the daily average to form a dataset of daily observations. Accounting for the gaps in the mentioned period (see Tables 1 and 2) we end up with a dataset of 3408 daily observations in the period from 1 October 2005 to 8 April 2019. The dataset represents five time series each of length 3408. We consider two sources of non-stationarity: seasonality and serial correlation.

The serial correlation can be due to closeness in time or presence of long term time trend in the observations. We first apply a declustering procedure, similar to the one in Asadi et al. (2015) in order to form a collection of supposedly independent events. As a first step each of the series is transformed to ranks and the sum of the ranks is computed for every day in the dataset. The day with the maximal rank is chosen, say d∗. A period of 2r + 1 consecutive days, centered around d∗ is considered and only the observations falling in that period are selected to form the event. Within this period the station-wise maximum is identified and the collection of the station-wise maxima forms one event. Because there is some evidence that the time an extreme event takes to propagate through the seven nodes in our model is about 3–7 days, we choose r = 3, hence we consider that one event lasts 7 days. In this way we obtain 717 observations of supposedly independent events. As it was mentioned the results are robust to the choice of r = {1, 2, 3}.

We test for seasonality and trends each of the series (each having 717 observations). The season factor is significant across all series and the time trend is marginally significant for some of the locations. We used a simple time series model to remove these non-stationarities. The model is based on season indicators and a linear time trend

where 𝜖t for t = 1, 2,… is a stationary mean zero process. After fitting the model in Eq. 29 to each of the five series through ordinary least squares we obtain the residuals and use those in the estimation of the extremal dependence.

1.7 A.7 ECE-based confidence interval for the dependence parameters

Let \(\hat {\theta }_{n,k} = \hat {\theta }_{n,k}^{\text {ECE}}\) denote the pairwise extremal coefficient estimator in Eq. 22 and let 𝜃0 denote the true vector of parameters. By Einmahl et al. (2018, Theorem 2) with Ω equal to the identity matrix, the ECE is asymptotically normal,

provided \(k = k_{n} \to \infty \) such that k/n → 0 fast enough (Einmahl et al. 2012, Theorem 4.6). The asymptotic covariance matrix takes the form

The matrices \(\dot {L}\) and ΣL depend on 𝜃0 and are described below. For every k and every e ∈ E, an asymptotic 95% confidence interval for the edge parameter 𝜃0,e is given by

First, recall that \(\mathcal {Q} \subseteq \{ J \subseteq U : |J| = 2 \}\) is the set of pairs on which the ECE is based and put \(q = |\mathcal {Q}|\). Define the \(\mathbb {R}^{q}\)-valued map \(L(\theta ) = \left (l_{J}(1,1;\theta ), J \in \mathcal {Q}\right )\) and let \(\dot {L}(\theta ) \in \mathbb {R}^{q \times |E|}\) be its matrix of partial derivatives. For a pair J = {u,v} and an edge e = (a,b), the partial derivative of lJ(1, 1; 𝜃) with respect to 𝜃e is given by

where puv is the path sum as in Eq. 15 and ϕ denotes the standard normal density function. The partial derivatives of lJ(1, 1; 𝜃) with respect to 𝜃e for every e ∈ E form a row of the matrix \(\dot {L}(\theta )\).

Second, ΣL(𝜃0) is the q × q covariance matrix of the asymptotic distribution of the empirical stdf,

The elements of the matrix ΣL(𝜃0) are defined in terms of the stdf evaluated at different coordinates and of the partial derivatives of the stdf l(x; 𝜃) with respect to the elements of x. For details we refer to Einmahl et al. (2018, Section 2.5). Here we note that the partial derivatives just mentioned are

1.8 A.8 Bootstrap confidence interval for the Pickands dependence function

For assessing the goodness-of-fit of the proposed model (Section 5.2), we construct non-parametric 95% confidence intervals for A(w) = l(1 − w,w) for w ∈ [0, 1]. As shown in Kiriliouk et al.(2018, Section 5) this can be achieved by resampling from the empirical beta copula. For every fixed w ∈ [0, 1] we seek with a(w) and b(w) such that

where \(\hat {l}_{n,k}\) is the non-parametric estimator of the stdf. For a(w) and b(w) satisfying the above expression, a point-wise confidence interval is given by

Let \((Y^{\ast }_{v,i})_{v \in U}\), for i = 1,…,n, be a random sample from the empirical beta copula drawn according to steps A1–A4 of Kiriliouk et al. (2018, Section 5). Let the function \(\hat {l}^{\beta }_{n,k}\) be the empirical beta stdf based on the original data and let the function \(\hat {l}^{\ast }_{n,k}\) be the non-parametric estimate of the stdf using the bootstrap sample.

We use the distribution of \(\hat {l}_{n,k}^{\ast }-\hat {l}^{\beta }_{n,k}\) conditionally on the data as an estimate of the distribution of \(\hat {l}_{n,k}-l\). Hence, we estimate a(w) and b(w) by a∗(w) and b∗(w) respectively defined implicitly by

We further estimate the bootstrap distribution of \(\hat {l}^{\ast }_{n,k}\) by a Monte Carlo approximation obtained by N = 1000 samples of size n from the empirical beta copula. As a consequence, the lower and upper bounds for \(\hat {l}^{\ast }_{n,k}(1-w,w)\) above are equated to the empirical 0.025- and 0.975-quantiles, respectively, yielding

Replacing a(w) and b(w) in Eq. 30 by a∗(w) and b∗(w) respectively as solved from Eq. 31 yields the bootstrapped confidence interval for A(w) shown in Fig. 7.

Rights and permissions

About this article

Cite this article

Asenova, S., Mazo, G. & Segers, J. Inference on extremal dependence in the domain of attraction of a structured Hüsler–Reiss distribution motivated by a Markov tree with latent variables. Extremes 24, 461–500 (2021). https://doi.org/10.1007/s10687-021-00407-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10687-021-00407-5

Keywords

- Multivariate extremes

- Tail dependence

- Graphical models

- Latent variables

- Hüsler–Reiss distribution

- Markov tree

- Tail tree

- River network