Abstract

While univariate nonparametric estimation methods have been developed for estimating returns in mean-downside risk portfolio optimization, the problem of handling possible cross-correlations in a vector of asset returns has not been addressed in portfolio selection. We present a novel multivariate nonparametric portfolio optimization procedure using kernel-based estimators of the conditional mean and the conditional median. The method accounts for the covariance structure information from the full set of returns. We also provide two computational algorithms to implement the estimators. Via the analysis of 24 French stock market returns, we evaluate the in-sample and out-of-sample performance of both portfolio selection algorithms against optimal portfolios selected by classical and univariate nonparametric methods for three highly different time periods and different levels of expected return. By allowing for cross-correlations among returns, our results suggest that the proposed multivariate nonparametric method is a useful extension of standard univariate nonparametric portfolio selection approaches.

Similar content being viewed by others

1 Introduction

Modern portfolio theory (MPT) is one of the most applied and recognized investment approaches used by investors today. The theoretical basis on which it relies is not very complicated and easy to apply, which is one of the reasons for its success. Rather than analyzing each investment individually, the key idea is to have a look at the portfolio as a whole and hence take into account the correlation structure between assets. In fact, the MPT originates from the so-called mean–variance (MV) portfolio model of Markowitz (1952); see also Markowitz (1959, 1987). The optimizing asset allocation is simply defined as the process of mixing asset weights of a portfolio within the constraints of an investor’s capital resources to yield the most favorable risk-return trade-off. For typical risk-averse investors, an optimal combination of investment assets that gives a lower risk and a higher return is always preferred.

The variance, which is the deviation above and below the mean return, is used as a risk measure in portfolio optimization to find the trade-off between risk and return. In order to compare investment options, Markowitz developed a mathematical framework to describe each investment or each asset class using unsystematic risk statistics. Indeed, by quantifying investment risk in the form of the mean, variance, and covariance of returns, Markowitz gave investors a mathematical approach to asset selection and portfolio management. He used these statistics to derive a so-called efficient frontier, or risk-reward equation, where every portfolio maximizes the expected return for a given variance, or equivalently minimizes variance for a given expected return. The efficient frontier flattens as it goes higher because there is a limit to the return an investor can expect. In a complete market without riskless lending and borrowing, a whole range of efficient asset portfolios with stochastic dominant features can be determined, which collectively delineates a convex MV frontier. However, variance is a questionable measure of risk for at least two reasons:

-

(i)

it makes no distinction between gains and losses;

-

(ii)

it is an appropriate measure of risk only when the underlying distribution of returns is symmetric with moments of order two.

Markowitz (1959) recognized the asymmetrical inefficiencies inherited in the traditional MV model. To overcome this drawback, he suggested a downside risk (DSR) measured by the semivariance, which takes into consideration the asymmetry and the risk perception of investors. In fact, symmetry of asset return distributions have been widely rejected in practice, see, for example, Eftekhari and Satchell (1996). This fact justifies the use of semivariance when the presence of skewness or any other measure of asymmetry is observed. The semivariance is often considered as a more plausible risk measure than the variance. However, mean–semivariance optimal portfolios cannot be easily derived as the semicovariance matrix is endogenous and not symmetric (see, e.g., Estrada 2004, 2008), and the classical Lagrangian method is not applicable to resolve the optimization problem.

de Athayde (2001) has developed an algorithm to construct a mean–DSR portfolio frontier. Although this frontier is continuous and convex, it has several kinks due to the fact that asset returns are not identically distributed. Clearly, the frontier is made on segments of parabolas (piecewise of quadratic functions), each one becoming steeper and steeper as we move toward the extremes, in either direction. They are connected to each other producing the successive kinks. The more observations we have, the more parabolas will appear and the smaller the segment of each will become. Otherwise, the number of convexity kinks will increase with the number of observations, getting closer and closer to each other, until when we reach the asymptotic limit, they will not be qualified as kinks any more, and the whole portfolio frontier will have a smooth shape which can be compared to the one obtained with the mean–variance portfolio model of Markowitz (1952).

Since, from a practitioner point of view, we always have a finite number of observations, the DSR portfolio frontier will always exhibit such convexity kinks. In order to overcome this problem, de Athayde (2003) used nonparametric techniques to estimate smooth continuous distribution of the portfolio in question. The major contribution is to replace returns by their mean kernel estimates (nonparametric mean regression). The advantage of this technique is to provide an effect similar to the case in which observations are continuous yielding a smoother portfolio frontier. Although this contribution is innovative, the paper is unstructured with no simulations and no applications. Another neglected aspect which deserves serious attention concerns the theory: a great confusion is palpable in the estimators writing. Ben Salah et al. (2018a) revisited de Athayde’s work by making it more rigorous. The mean nonparametric estimator is clarified and its parameters are exhibited as well as their practical choices. The corresponding optimization algorithms were coded using the R programming language and empirically validated on real data. Secondly, taking advantage on the robustness of the median, de Athayde’s work is improved by proposing another method to optimize a portfolio. This new method is based on nonparametric estimation of conditional median based on kernel methods. It is well known that the median is more robust than the mean and less sensitive to outliers. Returns will be replaced by their nonparametric median estimators. Nevertheless, the computing step and the convergence of the algorithm takes a long time due to the construction of the estimators: the asset estimation returns are derived from the estimation of the portfolio returns and they change at each computing step. Convergence is assumed to occur when the portfolio weights stay within some fixed tolerance value across successive iteration of the optimization stage. Contrary to de Athayde (2003), Ben Salah et al. (2018a, b) proposed a new strategy to speed up the convergence of the algorithm: the idea is to start by estimating all the returns of each asset using kernel mean or median estimates. The portfolio return estimates are then obtained as a linear combination of the different asset return estimates, and the overall CPU load is drastically reduced.

Although some progresses have been made, all methods introduced above are univariate and do not take into account any possible correlation between assets. In this paper, an alternative nonparametric method to derive portfolio frontiers is proposed. The proposed approach is multivariate and based on vectorial nonparametric estimation of returns using multivariate mean and median. It has the advantage of taking into consideration the possible correlation between asset returns without specifying any specific dependence structure.

The paper is organized as follows. Section 2 presents standard models of portfolio optimization. Section 3 introduces the univariate and multivariate kernel-based estimators for the conditional mean and median. We also discuss their optimal parameter values. These estimators give an estimator of the DSR. Section 4, based on the previous estimators, exhibits two DSR optimization algorithms to get an optimal portfolio and the corresponding efficient frontier. Based on real data, Sect. 5 provides empirical support for the proposed multivariate nonparametric portfolio selection method and compares its efficiency with classical and univariate nonparametric portfolio selection methods. Section 6 contains some concluding remarks.

2 Standard approaches

2.1 The M–V model

A mean–variance analysis is the process of weighing risk (variance) against expected return. By looking at the expected return and variance of an asset, investors attempt to make more efficient investment choices: seeking the lowest variance for a given expected return or seeking the highest expected return for a given variance level. More precisely, the classical MV portfolio optimization model aims at determining the proportions (weights) \(\omega _{i}\) of a given capital to be invested in each asset i belonging to a predetermined set or market, so as to minimize the risk of the return of the whole portfolio for a specified expected return \(E^{*}\).

Let m denote the number of assets, \(\{R_{i}\}^{m}_{i=1}\) a set of random returns, and \(\{R_{i,t}\}^{T}_{t=1}\) the set of observed returns of size \(T>m\ge 2\). In addition, let \(\mu _{i}\) be the expected return of asset i, and \((\sigma _{ij})\) the (i, j)th coefficient of the m-dimensional variance-covariance matrix \(\mathbf {M}\) of asset returns. Then, for a required level \(E^{*}\) of the portfolio return \(R_{p,t}=\sum ^{m}_{i=1}\omega _{i}R_{i,t}\), the MV model can be written as a convex linear optimization problem with the following form:

where \(\varvec{\omega }=(\omega _1, \ldots , \omega _m)^{\prime }\), \(\varvec{\mu }=(\mu _{1}, \ldots , \mu _{m})^{\prime }\), and \(\mathbf {1}\) is an m-dimensional vector whose elements are all one. The optimization problem can be solved by a number of efficient algorithms with moderate computational effort, even for large values of m. Moreover, (1) can be solved for a specific value of \(E^{*}\) or, alternatively, for several values of \(E^{*}\) and thus generating the minimum variance set. Using the Lagrange multiplier method, the \(m\times 1\) vector of optimal (op) weights is given by

where \(\alpha =\mathbf {1}^{\prime }\mathbf {M}^{-1}\mathbf {1}\), \(\lambda =\varvec{\mu }^{\prime } \mathbf {M}^{-1}\mathbf {1}\), and \(\theta =\varvec{\mu }^{\prime }\mathbf {M}^{-1}\mathbf {1}\). The optimal variance of \(R_{p,t}\) is obtained by pre-multiplying (2) with \(\varvec{\omega }_{\mathrm{op}}^{\prime } \mathbf {M}\), that is

In this case, the efficient frontier curve is continuously convex. It is a parabola in mean–standard deviation space. Either way, it is important to notice that the risk of the portfolio can be expressed as a function of the risk of the individual assets in the portfolio. Moreover, all the variances, covariances, and expected returns of the individual assets are exogenous variables.

2.2 The DSR model

However, the variance is a questionable measure of risk for at least three reasons: (i) it makes no distinction between gains and losses; (ii) it is an appropriate measure of risk only when the underlying distribution of returns is symmetric; and (iii) it can be applied as a risk measure only when the underlying distribution of the returns is symmetric.

Markowitz (1959) recognized the asymmetrical inefficiencies inherited in the traditional MV model. To overcome this drawback, he suggested to use a downside risk (DSR) defined by

where B is any benchmark return chosen by the investor. The benchmark can be equal to 0, or the risk-free rate \(R_{f}\), any stock market index, or the mean \(\mu _{p}\) of the portfolio return \(R_{p,t}\). When \(B=\mu \), DSR is a downside risk measure called semivariance.

The DSR is a more robust measure of asset risk that focuses only on the risks below a target rate of return. This measure of risk is more plausible for several reasons. First, investors obviously do not dislike upside volatility; they only dislike downside volatility. Second, the DSR measure is more useful than the variance when the underlying distribution of returns is asymmetric and just as useful when the underlying distribution is symmetric. In other words, the DSR is at least a measure of risk as useful as the variance. Finally, the DSR combines the information provided by two statistics, variance and skewness, and hence making it possible to use a one-factor model to estimate required returns; see, e.g., Nawrocki (1999) and Estrada (2006). The corresponding optimization problem can be written as follows:

where the elements of the \(m\times m\) matrix \(\mathbf {M}_{\mathrm{SR}}\) are given by

and where \(T_0\) is the period in which the portfolio underperforms the target return B.

The above framework provides an exact estimate of the portfolio semivariance. However, finding the portfolio with a minimum DSR is not an easy task. The major obstacle is that the semicovariance matrix \(\mathbf {M}_{\mathrm{SR}}\) is endogenous; that is, a change in weights affects the periods in which the portfolio underperforms the target rate of return, which in turn affects the elements of \(\mathbf {M}_{\mathrm{SR}}\). To get an approximate solution of (5), Hogan and Warren (1972) define the sample semicovariance between assets i and j as

This definition has two drawbacks. The benchmark return is limited to the risk-free rate \(R_{f}\) and cannot be tailored to any desired benchmark. Moreover, it is usually the case that \(M_{i,j}^{\mathrm{HW}}(\cdot )\ne M_{j,i}^{\mathrm{HW}}(\cdot )\). This second characteristic is particularly limiting both formally, i.e., the semicovariance matrix is usually asymmetric, and intuitively, i.e., it is not clear how to interpret the contribution of assets i and j to the risk of the portfolio. Further, the optimization problem (1) is not quadratic anymore which may cause optimization difficulties.

In order to overcome these drawbacks, Estrada (2004, 2008) defines the sample semicovariance between assets i and j with respect to a benchmark B as

This definition can be tailored to any desired B and generates a symmetric \((M_{i,j}=M_{j,i})\), nonnegative, definite, semicovariance matrix. Next, the solution of the MV problem follows directly.

To get a direct solution of (5), a simple and iterative optimization algorithm was developed by de Athayde (2001) that ensures the convergence to the optimal solution. However, due to some properties of the frontier, when only a finite number of observations is available, the portfolio frontier presents some discontinuity on its convexity. To address this issue, de Athayde (2003) generalizes his algorithm by introducing univariate kernel-based mean estimators of the returns. This idea provides an effect similar to the case in which observations are continuous and, moreover, it establishes a smooth portfolio frontier comparable to that obtained by the MV optimization method. Although de Athayde’s contribution is innovative, his two papers are unstructured with no simulations and no applications. Another neglected aspect which deserves serious attention concerns the theory: a great confusion is palpable in the setup of the estimators. Motivated by these observations, Ben Salah et al. (2018a) revisited de Athayde’s work by making it more rigorous. In particular, they clarify the kernel-based mean estimator, exhibit its tuning parameters, and discuss implementation issues. Then, by taking advantage of the robustness of the median, these authors improved on de Athayde’s results by replacing the kernel-based mean return estimators by their kernel-based median counterparts.

Valuable as the univariate kernel-based estimation methods can sometimes be in portfolio selection, they do not take into account the possible cross-correlations between asset returns. Indeed, it is well known that returns are not mutually independent. It is therefore worth proposing multivariate, or vector, nonparametric techniques to estimate smooth continuous distributions of a set of portfolios under study. This is the topic of the current paper. In particular, we focus on both univariate and multivariate kernel-based mean and median estimators of \(\{R_{i,t}\}\). Next, we use the smoothed continuous distributions of the returns to optimize their corresponding DSR. Finally, given an optimal DSR, we discuss the construction of a new and smooth portfolio frontier, thus providing a more flexible framework for portfolio selection.

3 Nonparametric approaches

In this section, first we introduce the univariate nonparametric estimators of returns of de Athayde (2001, 2003) and Ben Salah et al. (2018a, b). Then we propose two multivariate nonparametric, kernel-based, estimators. Throughout the paper we assume that the vector of random returns \(\mathbf {R}_{t}=(R_{1,t},\ldots ,R_{m,t})^{\prime }\) \((t\in \mathbb {Z})\) consists of strictly stationary and ergodic time series processes taking values in \(\mathbb {R}^{m}\).

3.1 Univariate nonparametric return estimation

Let \(K(\cdot )\) be a probability density function satisfying some regularity conditions, and \(h_{T}>0\) the bandwidth or smoothing parameter. Here, \(K:\mathbb {R}\rightarrow \mathbb {R}\) is a so-called kernel function. Common assumptions on the bandwidth are \(h\equiv h_{T}\rightarrow 0\), and \(Th_{T}\rightarrow \infty \) as \(T\rightarrow \infty \). In addition, let \(\{R_{i,t}\}^{T}_{t=1}\) be a sequence of observations on the process \(\{R_{i,t},t\in \mathbb {Z}\}\) \((i=1,\ldots ,m)\) with each ith subprocess having a continuous distribution function and a proper density. Then, at time t, a kernel smoother of the mean (Mn) of \(\{R_{i,t}, t\in \mathbb {Z}\}\) is defined as

Note that (9) is essentially a weighted average of \(\{R_{i,\ell }\}^{T}_{\ell =1}\) in which the weight given to each \(R_{i,\ell }\) decreases with its distance from the observation in question.

The disadvantage of (9) is that it is sensitive to outliers and may be inappropriate in some cases, such as when the distribution of \(\{R_{i,t}, t\in \mathbb {Z}\}\) is heavy-tailed or asymmetric. In those cases, it may be sensible to use a univariate kernel-based estimator of the median (Mdn) rather than an estimator of the mean. In particular at time t, and given an \(L_{1}\)-loss function, the estimator is defined as

Alternatively, one can obtain \(\widehat{R}^{\,\mathrm{Mdn}}_{i,t}\) by solving the equation

where \(F_{T}(\cdot |\cdot )\) is an estimator of the conditional distribution function of \(R_{i,\ell }\) given \(R_{i,t}\), and \(I(\cdot )\) is the indicator function.

Remark 1

The kernel \(K(\cdot )\) determines the shape of the weighting function. The use of symmetric and unimodal kernels is standard in nonparametric estimation. Throughout the empirical analysis we adopt the Gaussian kernel. For practical problems the choice of the kernel is not so crucial, as compared to the choice of the bandwidth. For all univariate and multivariate kernel-based methods, we use the so-called Sheather–Jones bandwidth, which is generally believed to be a satisfactory way of doing so. Under certain mixing conditions of the process \(\{R_{i,t},t\in \mathbb {Z}\}\), uniform convergence rates and asymptotic normality of the estimators (9) and (10) can be proved; see, e.g., De Gooijer (2017) and Gannoun et al. (2003) and the references therein.

3.2 Multivariate nonparametric return estimation

Multivariate kernel-based mean and median estimation is a straightforward extension of plain univariate estimation. Let \(\mathcal {K}(\cdot ):\mathbb {R}^{m}\rightarrow \mathbb {R}\) be a multivariate kernel density function with a symmetric positive definite \(m\times m\) matrix \(\mathbf {H}\) known as the bandwidth matrix. Then, at time t, the multivariate kernel-based mean estimator (M-Mn) and the multivariate kernel-based median estimator (M-Mdn) of the m-dimensional process \(\{\mathbf {R}_{t},t\in \mathbb {Z}\}\) are respectively defined as

where \(||\, \cdot \,||\) is a matrix or vector norm. In this paper, we adopt the Euclidean norm.

Computing the above estimators requires a numerical procedure, which becomes increasingly difficult to implement as the dimension m increases. As a simplification the matrix \(\mathbf {H}\) is often taken to be a diagonal matrix with values \(\{h_{i}\equiv h_{i,T}\}^{m}_{i=1}\) such that \(h_{i}>0\), \(h_{i}\rightarrow 0\) and \(Th_{i}\rightarrow \infty \), as \(T\rightarrow \infty \). In addition, it is common to consider a product of m univariate kernel functions, i.e., \(\mathcal {K}(u)=\prod ^{m}_{i=1}K(u_{i})\). Then we can write the above estimators as follows

A kernel-based estimate of \(R_{p,t}\) is given by \(\widetilde{R}_{p,t_{}}=\varvec{\omega }^{\prime }\widetilde{\mathbf {R}}_{t}\), where \(\widetilde{\mathbf {R}}_{t}=(\widetilde{R}_{1,t},\ldots ,\widetilde{R}_{m,t})^{\prime }\) denotes either \(\widehat{\mathbf {R}}^{\mathrm{M-Mn}}_{t}\) or \(\widehat{\mathbf {R}}^{\mathrm{M-Mdn}}_{t}\). Given this estimate, a kernel-based estimate of the DSR follows by replacing \(R_{p,t}\) in (4) by \(\widetilde{R}_{p,t}\).

4 Multivariate mean–DSR optimization

4.1 Computational algorithms

Recall the optimization problem in (5). Given the set of kernel-based estimates \(\{\widetilde{R}_{i,t}\}^{m}_{i=1}\), the matrix \(\mathbf {M}_{\mathrm{SR}}\) has the following elements

Using the above framework, we propose two algorithms for the optimization of (5). Compared to the proposal by Ben Salah et al. (2018a), both algorithms account for possible cross-correlations in the asset returns.

Algorithm I:

-

(i)

Initial stage: Start with an arbitrary portfolio with weight vector \(\varvec{\omega }_{0}=(\omega _{0,1},\ldots ,\omega _{0,m})^{\prime }\) and portfolio return \(R^{(0)}_{p,t}= \varvec{\omega }^{\prime }_{0}\mathbf {R}_{t}\). At each time point t, compute the following two univariate nonparametric estimators

$$\begin{aligned} \widehat{R}^{(0),\mathrm{Mn}}_{p,t}&= \frac{\sum ^{T}_{\ell =1}R^{(0)}_{p,\ell } K\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big ) }{\sum ^{T}_{\ell =1}K\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big )}\,\, \hbox {and}\nonumber \\ \widehat{R}^{(0),\mathrm{Mdn}}_{p,t}&= \arg \min _{z\in \mathbb {R}} \frac{\sum _{\ell =1}^{T}|R^{(0)}_{p,\ell }-z|K\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big )}{\sum _{\ell =1}^{T}K\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big )}. \end{aligned}$$(15)In addition, compute the multivariate nonparametric estimators

$$\begin{aligned} \widehat{\mathbf {R}}^{(0),\mathrm{M-Mn}}_{t}&= \frac{\sum _{\ell =1}^{T} \mathbf {R}_{\ell } \mathcal {K}\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big ) }{\sum _{\ell =1}^T\mathcal {K}\Big (\frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\Big ) },\,\, \nonumber \\ \widehat{\mathbf {R}}^{(0),\mathrm{M-Mdn}}_{t}&= \arg \min _{\mathbf {z}\in \mathbb {R}^{m}}\frac{\sum _{\ell =1}^{T} ||\mathbf {R}_{\ell }-\mathbf {z}||\mathcal {K}\left( \frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\right) }{\sum _{\ell =1}^{T}\mathcal {K}\left( \frac{R^{(0)}_{p,t}-R^{(0)}_{p,\ell }}{h}\right) }, \end{aligned}$$(16)where \(\mathbf {R}_{\ell }=(R_{1,\ell },\ldots ,R_{m,\ell })^{\prime }\) and \(\mathcal {K}(\cdot )=K^{m}(\cdot )\). Let \(\widetilde{R}^{(0)}_{p,t}\) denote either \(\widehat{R}^{(0),\mathrm{Mn}}_{p,t}\) or \(\widehat{R}^{(0),\mathrm{Mdn}}_{p,t}\). Then, for a given B and an m-dimensional vector \(\mathbf {V}_{t}=\big ((\widetilde{R}^{(0)}_{1,t}-B),\ldots ,(\widetilde{R}^{(0)}_{m,t}-B)\big )^{\prime }\), compute the positive semidefinite matrix \(\widetilde{\mathbf {M}}_{0}=(1/T)\sum _{t\in S_{0}}\mathbf {V}_{t}\mathbf {V}_{t}^{\prime }\), where \(S_{0}=\{t:1\le t \le T, (\widetilde{R}^{(0)}_{p,t}-B) < 0\}\). Next, similar to (2), compute the \(m\times 1\) portfolio weight vector \(\varvec{\omega }_1\). That is

$$\begin{aligned} \varvec{\omega }_{1}=\frac{\alpha _{0} E^{*}-\lambda _{0}}{\alpha _{0}\theta -\lambda _{0}^{2}} \widetilde{\mathbf {M}}_{0}^{-1}\widetilde{\varvec{\mu }}_{0}+ \frac{\theta _{0}-\lambda _{0}E^{*}}{\alpha _{0}\theta _{0}-\lambda _{0}^{2}}\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}, \end{aligned}$$(17)where \(\alpha _0=\mathbf {1}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}\), \(\lambda _{0}=\widetilde{\varvec{\mu }}_{0}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}\), \(\theta _{0}=\widetilde{\varvec{\mu }}_{0}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\widetilde{\varvec{\mu }}_{0}\), and where \(\widetilde{\varvec{\mu }}_{0}= (\overline{\widetilde{R}}^{(0)}_{1},\ldots ,\overline{\widetilde{R}}^{(0)}_{m})^{\prime }\) is an \(m\times 1\) vector of mean returns with \(\overline{\widetilde{R}}^{(0)}_{i}=(1/T)\sum ^{T}_{t=1}\widetilde{R}^{(0)}_{i,t}\) \((i=1,\ldots ,m)\).

-

(ii)

Using (17), compute the portfolio return at time t, i.e., \(R^{(1)}_{p,t} = \varvec{\omega }^{\prime }_{1}\mathbf {R}_{t}\). Next, compute the univariate nonparametric estimators

$$\begin{aligned} \widehat{R}^{(1),\mathrm{Mn}}_{p,t}&= \frac{\sum ^{T}_{\ell =1}R^{(1)}_{p,\ell } K\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }{\sum ^{T}_{\ell =1}K\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }\,\, \hbox {and}\nonumber \\ \widehat{R}^{(1),\mathrm{Mdn}}_{p,t}&= \arg \min _{z\in \mathbb {R}} \frac{\sum _{\ell =1}^{T}|R^{(1)}_{p,\ell }-z|K\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }{\sum _{\ell =1}^{T}K\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }. \end{aligned}$$(18)Also, compute the multivariate nonparametric estimators

$$\begin{aligned} \widehat{\mathbf {R}}^{(1),\mathrm{M-Mn}}_{t}&= \frac{\sum _{\ell =1}^{T} \mathbf {R}_{\ell } \mathcal {K}\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }{\sum _{\ell =1}^T\mathcal {K}\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }, \nonumber \\ \widehat{\mathbf {R}}^{(1),\mathrm{M-Mdn}}_{t}&= \arg \min _{\mathbf {z}\in \mathbb {R}^{m}}\frac{\sum _{\ell =1}^{T} ||\mathbf {R}_{\ell }-\mathbf {z}||\mathcal {K}\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }{\sum _{\ell =1}^{T}\mathcal {K}\left( \frac{R^{(1)}_{p,t}-R^{(1)}_{p,\ell }}{h}\right) }. \end{aligned}$$(19)Next, similarly to \(S_{0}\) in step (i), construct the set \(S_{1}=\{t:1\le t\le T, (\widetilde{R}^{(1)}_{p,t}-B) < 0\}\). Then, calculate a new positive semidefinite matrix \(\widetilde{\mathbf {M}}_{1}=\big (1/n(S_{1})\big )\sum _{t\in S_{1}}\mathbf {V}_{t}\mathbf {V}_{t}^{\prime }\), where \(\mathbf {V}_{t}=\big ((\widetilde{R}^{(1)}_{1,t}-B),\ldots ,(\widetilde{R}^{(1)}_{m,t}-B)\big )^{\prime }\) and \(n(S_{1})\) is the cardinality of set \(S_{1}\). Finally, compute the \(m\times 1\) portfolio weight vector \(\varvec{\omega }_{2}\), i.e.,

$$\begin{aligned} \varvec{\omega }_{2}=\frac{\alpha _{1} E^{*}-\lambda _{1}}{\alpha _{1}\theta _{1} -\lambda _{1}^{2}}\widetilde{\mathbf {M}}_{1}^{-1}\widetilde{\varvec{\mu }}_{1}+ \frac{\theta _{1}-\lambda _{1} E^{*}}{\alpha _{1}\theta _{1}-\lambda _{1}^{2}}\widetilde{\mathbf {M}}_{1}^{-1}\mathbf {1}, \end{aligned}$$(20)where \(\alpha _{1}=\mathbf {1}^{\prime }\widetilde{\mathbf {M}}_{1}^{-1}\mathbf {1}\), \(\lambda _{1}=\widetilde{\varvec{\mu }}_{1}^{\prime }\widetilde{\mathbf {M}}_{1}^{-1}\mathbf {1}\), \(\theta _{1}=\widetilde{\varvec{\mu }}_{1}^{\prime }\widetilde{\mathbf {M}}_{1}^{-1}\widetilde{\varvec{\mu }}_{1}\), and \(\widetilde{\varvec{\mu }}_{1}= (\overline{\widetilde{R}}^{(1)}_{1},\ldots ,\overline{\widetilde{R}}^{(1)}_{m})^{\prime }\) is an \(m\times 1\) vector of the mean returns associated with the set \(S_{1}\).

-

(iii)

Continue with step (ii) until at iteration step \(u+1\) the matrix \(\widetilde{\mathbf {M}}_{u+1}\) will be the same as \(\widetilde{\mathbf {M}}_{u}\) or, alternatively, if \(||\varvec{\omega }_{u+1}||\approx ||\varvec{\omega }_{u}||\). In that case, the weight vector of the minimum DSR portfolio, with expected return \(E^{*}\), is given by

$$\begin{aligned} \varvec{\omega }_{u+1}=\frac{\alpha _{u} E^{*}-\lambda _{u}}{\alpha _{u}\theta _{u} -\lambda _{u}^{2}}\widetilde{\mathbf {M}}_{u}^{-1}\widetilde{\varvec{\mu }}_{u}+ \frac{\theta _{u}-\lambda _{u}E^{*}}{\alpha _{u}\theta _{u}-\lambda _{u}^{2}} \widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}, \quad (u=1,2,\ldots ), \end{aligned}$$(21)where \(\alpha _{u}=\mathbf {1}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}\), \(\lambda _{u}=\widetilde{\varvec{\mu }}_{u}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}\), \(\theta _{u}=\widetilde{\varvec{\mu }}_{u}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\widetilde{\varvec{\mu }}_{u}\), and \(\widetilde{\varvec{\mu }}_{u}= (\overline{\widetilde{R}}^{(u)}_{1},\ldots ,\overline{\widetilde{R}}^{(u)}_{m})^{\prime }\) is an \(m\times 1\) vector of the mean returns associated with the set \(S_{u}\).

-

(iv)

Finally, employ the quantities in step (iii) to approximate the DSR. Calling the resulting value \(\widehat{\hbox {DSP}}^{\mathrm{(I)}}\), i.e.,

$$\begin{aligned} \widehat{\hbox {DSR}}^{\mathrm{(I)}}(\varvec{\omega }_{u+1})=\frac{\alpha _{u}(E^{*})^{2}-2\lambda _{u} E^{*}+\theta _{u}}{\alpha _{u}\theta _{u}-\lambda _{u}^{2}}. \end{aligned}$$(22)

Clearly, with Algorithm I the estimation of the portfolio returns changes at each iteration step u. This may be time-consuming, in particular, when the set of potential asset returns contains many variables. The following algorithm avoids this problem.

Algorithm II:

-

(i)

Initial stage: Replace the vector \(\mathbf {R}_{t}\) by its nonparametric counterpart \(\widetilde{\mathbf {R}}_{t}\), i.e.,

$$\begin{aligned} \widetilde{\mathbf {R}}_{t}=\frac{\sum _{\ell =1}^{T} \mathbf {R}_{\ell }\mathcal {K}\left( \frac{\mathbf {R}_{\ell }-\mathbf {R}_{t}}{h}\right) }{\sum _{\ell =1}^{T} \mathcal {K}\left( \frac{\mathbf {R}_{\ell }-\mathbf {R}_{t}}{h}\right) }\quad \hbox {or}\quad \widetilde{\mathbf {R}}_{t}=\arg \min _{\mathbf {z}\in \mathbb {R}^{m}}~ \frac{\sum _{\ell =1}^{T}||\mathbf {R}_{\ell }- \mathbf {z} ||\mathcal {K}\Big (\frac{\mathbf {R}_{\ell }-\mathbf {R}_{t}}{h}\Big )}{\sum _{\ell =1}^{T}\mathcal {K} \Big (\frac{\mathbf {R}_{\ell }-\mathbf {R}_t}{h}\Big )}, \end{aligned}$$(23)where \(\mathcal {K}\left( \frac{\mathbf {R}_{\ell }-\mathbf {R}_{t}}{h}\right) = \prod _{i=1}^{m} K\Big (\frac{R_{i,\ell }-R_{i,t}}{h_{i}}\Big )\). Then, for an arbitrary weight vector \(\varvec{\omega }_{0}\), compute the associated portfolio return \(\widetilde{R}^{(0)}_{p,t}=\varvec{\omega }_{0}^{\prime }\widetilde{\mathbf {R}}_{t}\).

-

(ii)

For a given B, and an m-dimensional vector \(\mathbf {V}_{t}=\big ((\widetilde{R}_{1,t}-B),\ldots ,(\widetilde{R}_{m,t}-B)\big )^{\prime }\), compute the positive semidefinite matrix \(\widetilde{\mathbf {M}}_{0}=(1/T)\sum _{t\in S_{0}}\mathbf {V}_{t}\mathbf {V}_{t}^{\prime }\), where \(S_{0}=\{t:1\le t\le T, (\widetilde{R}^{(0)}_{p,t}-B) < 0\}\).

-

(iii)

Obtain an m-dimensional weight vector \(\varvec{\omega }_{1}\) by minimizing \(\varvec{\omega }^{\prime }\widetilde{\mathbf {M}}_{0} \varvec{\omega }\) subject to \(\varvec{\omega }^{\prime }\widetilde{\varvec{\mu }}= E^{*}\), and \(\varvec{\omega }^{\prime }\mathbf {1} = 1\), where \(\widetilde{\varvec{\mu }}=(\overline{\widetilde{R}}_{1},\ldots ,\overline{\widetilde{R}}_{m})^{\prime }\) is the empirical mean of the vector of returns obtained in step (i). An explicit solution is given by

$$\begin{aligned} \varvec{\omega }_{1}=\frac{\alpha _{0} E^{*}-\lambda _{0}}{\alpha _{0}\theta -\lambda _{0}^{2}} \widetilde{\mathbf {M}}_{0}^{-1}\widetilde{\varvec{\mu }}+ \frac{\theta _{0}-\lambda _{0}E^{*}}{\alpha _{0}\theta _{0}-\lambda _{0}^{2}}\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}, \end{aligned}$$(24)where \(\alpha _0=\mathbf {1}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}\), \(\lambda _{0}=\widetilde{\varvec{\mu }}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\mathbf {1}\), and \(\theta _{0}=\widetilde{\varvec{\mu }}^{\prime }\widetilde{\mathbf {M}}_{0}^{-1}\widetilde{\varvec{\mu }}\).

-

(iv)

Repeat steps (ii)–(iii) many times until the algorithm converges, i.e., when at iteration step \(u+1\), \(||\varvec{\omega }_{u+1}||\approx ||\varvec{\omega }_{u}||\). Then

$$\begin{aligned} \varvec{\omega }_{u+1}=\frac{\alpha _{u}E^{*}-\lambda _{u}}{\alpha _{u}\theta _{u}-\lambda _{u} ^{2}}\widetilde{\mathbf {M}}_{u}^{-1}\widetilde{\varvec{\mu }}+\frac{\theta _{u}-\lambda _{u} E^{*}}{\alpha _{u}\theta _{u}-\lambda _{u}^{2}}\widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}, \quad (u=1,2,\ldots ), \end{aligned}$$(25)where \(\alpha _{u}=\mathbf {1}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}\), \(\lambda _{u}=\widetilde{\varvec{\mu }}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\mathbf {1}\), and \(\theta _{u}=\widetilde{\varvec{\mu }}^{\prime }\widetilde{\mathbf {M}}_{u}^{-1}\widetilde{\varvec{\mu }}\). We denote the DSR associated with this algorithm by

$$\begin{aligned} \widehat{\hbox {DSR}}^{\mathrm{(II)}}(\varvec{\omega }_{u+1})=\frac{\alpha _{u}(E^{*})^{2}-2\lambda _{u} E^{*}+\theta _{u}}{\alpha _{u}\theta _{u}-\lambda _{u}^{2}}. \end{aligned}$$(26)

Remark 2

When \(|S_0| < m\), the empirical matrix \({\widetilde{M}}_{0}\) will be singular so that generalized inverse matrices should be used in (17) or equivalent equations.

4.2 Efficient frontier construction

In order to build the portfolio frontier, there is a need to select some other points in the efficient set. As the DSR is a function of the expected return \(E^{*}\), by varying this value one can obtain a new value of \(\widehat{\hbox {DSR}}^{(\cdot )}(\cdot )\) and, hence, the efficient frontier. The equation for \(\widehat{\hbox {DSR}}^{(\cdot )}(\cdot )\) shows that, while the final matrix \(\mathbf {M}_{u}\) does not change, \(\widehat{\hbox {DSR}}^{(\cdot )}(\cdot )\) is a quadratic function of \(E^{*}\). However, if \(E^{*}\) changes considerably, both algorithms end up with a new matrix \(\mathbf {M}_{u}\), and therefore a new quadratic function. Thus, the portfolio frontier will be described by a sequence of segments of different quadratic functions. The more assets are used, the smoother will be the portfolio frontier in question, creating a similar effect as if one is adding more observations to the return series. In addition, it is known that the nonparametric, kernel-based, technique creates a continuous distribution of the returns, and hence gives rise to a new portfolio frontier with a smoother shape.

Remark 3

Algorithms I and II both allow short selling, i.e., there are no constraints on the weights \(\omega _{i}\) \((i=1,\ldots ,m)\). With selling constraints the additional condition \(\omega _{i}\ge 0\) is needed. There are many available software packages to solve the corresponding optimization problem; see, for instance, the R-quadprog package.

5 An empirical illustration

5.1 Data and methods

To illustrate the proposed portfolio selection methods, we present results for a set of French stock prices taken from Thomson Reuters. The data set consists of \(m=24\) daily stock closing prices \(\{P_{i,t}\}\), covering the time period 07/01/2000–06/01/2017. These assets belong to different sectors: banks, insurance, industry, energy, technology, and telecommunication. The stock prices under investigation are components of the CAC 40 index, the benchmark for the Euronext Paris market, reflecting the performance of the 40 largest equities in France. Removing missing values, the data set contains 4,214 observations. For all series, the returns are computed as \(R_{i,t}=(P_{i,t}-P_{i,t-1})/P_{i,t-1}\) with \(P_{i,t}\) adjusted for dividend payments and stock splits.

Figure 1 shows a graph of the CAC 40 index for the complete time period. We see a strong decrease of the index in mid 2008 during the financial crisis. It is also evident that after 2012 a slow recovery of the French economy settles in, as indicated by an upward trend. The grey-shaded areas depict the following three subperiods under investigation: “calm” (I), covering the year 2004 \((T=259)\), “crisis” (II), covering the year 2008 \((T=256)\), and “good” (III), covering the year 2013 \((T=255)\). The number of contemporaneous cross-correlations having a p value smaller than 0.05, out of a total of 276 p values for each subperiod, is 222 (calm), 276 (crisis), and 274 (good). Indeed, there are strong correlations between the individual stocks, which in all cases are positive. These results suggest the use of portfolio selection methods that explicitly take account of cross-correlations.

With respect to the portfolio optimization, we employ the following seven methods:

-

(1)

Naive, i.e., \(R_{p,t}=\sum ^{m}_{i=1}\omega _{i}R_{i,t}\) with \(\omega _{i}=(1/m)\).

-

(2)

Classic MV portfolio optimization. That is, using (1) with \(\varvec{\mu }=\big (\overline{R}_{1},\ldots ,\overline{R}_{m})^{\prime }\), \(\mathbf {M}=(1/T)\sum _{t}\mathbf {U}_{t}\mathbf {U}^{\prime }_{t}\), where \(\mathbf {U}_{t}=\big ((R_{1,t}-\overline{R}_{1}),\ldots ,(R_{m,t}-\overline{R}_{m})\big )^{\prime }\) and \(\overline{R}_{i}=(1/T)\sum ^{T}_{t=1}R_{i,t}\) \((i=1,\ldots ,m)\).

-

(3)

Univariate nonparametric mean DSR. That is, using (4) with \(R_{p,t}\) replaced by \(\widehat{R}_{p,t}=\sum ^{m}_{i=1}\omega _{i}\widehat{R}^{\mathrm{Mn}}_{i,t}\), where \(\widehat{R}^{\mathrm{Mn}}_{i,t}\) is defined by (9).

-

(4)

Univariate nonparametric median DSR. That is, using (4) with \(R_{p,t}\) replaced by \(\widehat{R}_{p,t}=\sum ^{m}_{i=1}\omega _{i}\widehat{R}^{\mathrm{Mdn}}_{i,t}\), where \(\widehat{R}^{\mathrm{Mdn}}_{i,t}\) is defined by (10).

-

(5)

Multivariate nonparametric mean DSR. That is Algorithm II with \(\widehat{R}^{\mathrm{Mn}}_{t}\) and \(\widehat{\mathbf {R}}^{\mathrm{M-Mn}}_{t}\).

-

(6)

Multivariate nonparametric median DSR in two variants. That is

-

(i)

Algorithm I with \(\widehat{R}^{\mathrm{Mdn}}_{t}\) and \(\widehat{\mathbf {R}}^{\mathrm{M-Mdn}}_{t}\), and

-

(ii)

Algorithm II with \(\widehat{R}^{\mathrm{Mdn}}_{t}\) and \(\widehat{\mathbf {R}}^{\mathrm{M-Mdn}}_{t}\).

-

(i)

Throughout all optimization procedures we set the benchmark \(B=0\) and the expected return \(E^{*}=0.01\) \((1\%)\). Moreover, the maximum number of iteration steps is fixed at 50. Note that Algorithm I with \(\widehat{R}^{\mathrm{Mn}}_{t}\) and \(\widehat{\mathbf {R}}^{\mathrm{M-Mn}}_{t}\) is not included in our study. The reason is that for this particular data set Algorithm I does not converge. That is, it oscillates between two different optimal portfolios, albeit equivalent in terms of returns, for the data under study. However, it should be said that theoretically both Algorithms I and II converge.

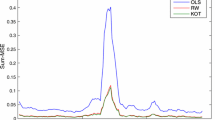

5.2 Efficient frontiers

Figure 2 displays the efficient frontier curves of the multivariate nonparametric median DSR method 6(ii) with no constraints on the portfolio weights. All other nonparametric portfolio optimization methods produced similar curves which are not distinguishable from the ones shown in Fig. 2, and hence they are not shown here. Compared to the classical MV portfolio optimization, the multivariate nonparametric efficient frontier based on median estimation dominates the other curves for any expected return. Long-short positions can be based on the sign of the portfolio weights. Across all nonparametric methods, 8 assets have negative signs for period I, 12 have negative signs for period II, and 11 assets have negative signs for period III. Given these results, one may take profit of the evolution of the stock market by taking short positions on assets with nonnegative weights.

Next, we investigate optimization methods 2–6 with short selling constraint. Short selling is a strategy to speculate if the market value of an asset is going to decline. It can also be used to hedge long positions. To be more specific, the strategy involves borrowing a stock from a broker and then selling it in the market. The stock is bought back and returned to the broker at a later date, called covering the short. If the stock drops, the short-seller buys at a lower price and then he makes money.

Under the short selling constraint, the results of the portfolio optimization methods differ for each subperiod and different levels of expected return. For period I, the optimal portfolio has nine nonzero weights corresponding to the following stocks: ArcelorMital, Klépierre, Pernod Ricard, Safran, Technip, Total, Unibail-Rodamco, Vinci, and Danone. In contrast, for period II the following four stock indices have nonzero weights: Air Liquide, Orange, Sodexo, and Danone. Finally, for period III there are eight stock indices with nonzero weights: Air Liquide, Klépierre, Pernod Ricard, Publicis Groupe, Safran, Sanofi, Sodexo, and Danone. Interestingly, for all three time periods, Danone is part of the optimal portfolio for all levels of expected return.

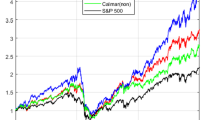

5.3 Forecasting evaluation

As a robustness check, this section reports the out-of-sample forecasting performance of portfolio selection methods 1–6 against the CAC 40 index returns in terms of mean forecast error (MFE), mean squared forecast error (MSFE), and mean absolute forecast error (MAFE). We adopt a rolling window-scheme with a forecast horizon of one quarter. In particular, all optimal portfolios are trained with a quarter and predictions are made on the next quarter. For instance, for period I, the first quarter of 2004 is used to test the performance of an optimal portfolio composed of individual securities selected in the 4th quarter of 2003. The length of the initial in-sample estimation period, having enough observations for reasonably accurate nonparametric estimates, balances with our desire for a relatively long out-of-sample period for forecast evaluation. With such a design, a total of 252 forecasts are generated.

The results are summarized in Table 1. There are some observations worth noting. For Algorithm II the forecasts obtained from the nonparametric mean and median DSR methods are equivalent in terms of the lowest values of the three performance measures, and across all three time periods. For period II, all multivariate methods perform considerably better than their univariate counterparts. This indicates that there is some gain in using multivariate portfolio selection methods that account for possible cross-correlations between asset returns. On the other hand, the forecasting results for the univariate and multivariate methods are qualitatively similar for periods I and III. Finally, we see that it is easy to outperform the naive benchmark method.

6 Conclusion

In this paper, we propose multivariate nonparametric estimators of the conditional mean and conditional median for mean–DSR optimization. In particular, the estimators account for possible interrelationships between asset returns, as for instance quantified by cross-correlations. To implement the proposed estimators, we provide two computational algorithms for efficient portfolio selection. Via the analysis of 24 French stock market returns, we evaluate the in-sample performance of classical and nonparametric portfolio selection methods with and without restrictions on the portfolio weights. In addition, we compare the out-of-sample performance of seven portfolio selection methods in forecasting the CAC 40 index returns during three highly different time periods.

From a theoretical point of view, it is clear that the proposed nonparametric multivariate methods are more natural than their univariate counterparts when asset returns are correlated. This particular extension has not been considered in the current literature. Algorithm II provides an efficient and simple tool for this purpose. Moreover, the algorithm allows for heavy-tailed or asymmetric distribution of portfolio returns by considering univariate and multivariate kernel-based median estimators. Finally, it is good to mention that the computational burden of Algorithm II is minimal.

7 Supplementary material

Data and R codes, as supplementary material, are available at: https://doi.org/www.jandegooijer.nl.

References

de Athayde, G.M.: Building a mean-downside risk portfolio frontier. In: Dunis, C., Timmermann, A., Moody, J. (eds.) Developments in Forecast Combination and Portfolio Choice, pp. 194–211. Wiley, New York (2001). https://doi.org/10.1016/B978-075064863-9.50012-8

de Athayde, G.M.: The mean-downside risk portfolio frontier: a non-parametric approach. In: Satchell, S.E., Scowcroft, A. (eds.) Advances in Portfolio Construction and Implementation, pp. 290–309. Butterworth-Heinemann, Oxford (2003)

Ben Salah, H., Chaouch, M., Gannoun, A., de Peretti, C., Trabelsi, A.: Median-based nonparametric estimation of returns in mean-downside risk portfolio frontier. Ann. Oper. Res. 262(2), 653–681 (2018a). https://doi.org/10.1007/s10479-016-2235-z

Ben Salah, H., Gannoun, A., Ribatet, M.: A new approach in nonparametric estimation of returns in mean-downside risk portfolio frontier. Int. J. Portf. Anal. Manag. 2(2), 169–197 (2018b)

De Gooijer, J.G.: Elem. Nonlinear Time Ser. Anal. Forecast. Springer, New York (2017). https://doi.org/10.1007/978-3-319-43252-6

Eftekhari, B., Satchell, S.E.: Some problems with modelling asset returns using the elliptical class. Appl. Econ. Lett. 3(9), 571–572 (1996). https://doi.org/10.1080/135048596355970

Estrada, J.: Mean-semivariance behavior: an alternative behavioral model. J. Emerg. Mark. Financ. 3(3), 231–248 (2004). https://doi.org/10.1177/097265270400300301

Estrada, J.: Downside risk in practice. J. Appl. Corp. Financ. 18(1), 117–125 (2006)

Estrada, J.: Mean–semivariance optimization: a heuristic approach. J. Appl. Financ. 18(1), 57–72 (2008). https://doi.org/10.2139/ssrn.1028206

Gannoun, A., Saracco, J., Yu, K.: Nonparametric time series prediction by conditional median and quantiles. J. Stat. Plan. Inference 117(2), 207–223 (2003). https://doi.org/10.1016/s0378-3758(02)00384-1

Hogan, W.W., Warren, J.M.: Computation of the efficient boundary in the E-S portfolio selection. J. Financ. Quant. Anal. 7, 1881–1896 (1972). https://doi.org/10.2307/2329623

Markowitz, H.M.: Portfolio selection. J. Financ. 7(1), 77–91 (1952). https://doi.org/10.2307/2975974

Markowitz, H.M.: Portfolio Selection: Efficient Diversification of Investments. Wiley, New York (1959). https://doi.org/cowles.yale.edu/sites/default/files/files/pub/mon/m16-all.pdf

Markowitz, H.M.: Mean-Variance Analysis in Portfolio Choice and Capital Markets. Basil Blackwell, Oxford (1987). Paperback edition: Blackwell, 1990

Nawrocki, D.N.: A brief history of downside risk measures. J. Invest. 8(3), 9–25 (1999). https://doi.org/10.3905/joi.1999.319365

Acknowledgements

We are grateful to the Editor and two anonymous referees for helpful comments.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ben Salah, H., De Gooijer, J.G., Gannoun, A. et al. Mean–variance and mean–semivariance portfolio selection: a multivariate nonparametric approach. Financ Mark Portf Manag 32, 419–436 (2018). https://doi.org/10.1007/s11408-018-0317-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11408-018-0317-4

Keywords

- Downside risk

- Forecasting

- Multivariate kernel-based mean estimation

- Multivariate kernel-based median estimation

- Semivariance