Abstract

Purpose

This article reviews the process of scaling up early developmental preventive interventions with criminological outcomes over the life-course, with a focus on quantifying the scale-up penalty. The scale-up penalty is an empirically based quantification of the amount of attenuation in the effects of interventions as they move from research and demonstration projects to large-scale delivery systems attempting to achieve population-level impacts.

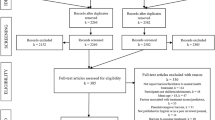

Methods

A systematic review was conducted, which included rigorous criteria for inclusion of studies, comprehensive search strategies to identify eligible studies, and a detailed protocol for coding key study characteristics and factors related to the scale-up penalty.

Results

Six studies met the inclusion criteria, originating in two countries (USA and Norway) and covering a 20-year period (1998 to 2017). The scale-up penalty assigned varied widely across studies, ranging from 0 to 50%, with one study reporting penalties ranging from negative values to 71%. A wide range of factors was considered in quantifying the scale-up penalty, including implementation context, heterogeneity in target populations, heterogeneity of service providers, and fidelity to the model. The most recent studies were more comprehensive in their consideration of factors influencing the scale-up penalty.

Conclusions

It is important for program developers and policymakers to recognize and account for implementation challenges when scaling up early developmental preventive interventions. Further research in this area is needed to help mitigate attenuation of program effects and aid public investments in early developmental preventive interventions.

Notes

When researchers assign a scale-up penalty in the context of simulation models, the figure is not arbitrary. It is based on empirical studies that have documented attenuation of program effects and the associated implementation factors in the scale-up process.
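As a rough illustration of how such a penalty might enter a simulation model, the sketch below treats the penalty as a simple proportional reduction of a trial effect size. This proportional form, the function names, and the effect sizes are assumptions made for illustration only; they are not the method of any study in this review. Only the 0 to 50% range is taken from the results reported above.

```python
def apply_scale_up_penalty(trial_effect: float, penalty: float) -> float:
    """Attenuate a trial effect size by a proportional penalty.

    penalty is a fraction (0.25 means 25%). A negative penalty
    represents a larger effect at scale than in the original trial.
    Assumption: attenuation is proportional to the trial effect.
    """
    if penalty > 1.0:
        raise ValueError("a penalty above 100% would reverse the effect")
    return trial_effect * (1.0 - penalty)


def implied_penalty(trial_effect: float, scaled_effect: float) -> float:
    """Back out the proportional penalty implied by two effect sizes."""
    if trial_effect == 0:
        raise ValueError("trial effect must be non-zero")
    return 1.0 - scaled_effect / trial_effect


if __name__ == "__main__":
    # Hypothetical trial effect size, not taken from any reviewed study.
    trial_effect = 0.40
    # Sensitivity analysis across the 0 to 50% range reported in the review.
    for penalty in (0.0, 0.25, 0.50):
        scaled = apply_scale_up_penalty(trial_effect, penalty)
        print(f"penalty={penalty:.0%} scaled effect={scaled:.2f}")
```

Running the loop over several penalty values, rather than fixing one, reflects the variability across studies: a model's conclusions can then be checked against both optimistic and pessimistic attenuation assumptions.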

It is also important to note that the Perry Preschool project in particular reported large effect sizes for a range of criminological outcomes and was used in two of the six studies included in this review.

Later versions of the cost-benefit model, which first appeared in 2004 and 2006, replaced “scale-up discounts” with a range of adjustments to program effect sizes to account for methodological quality, the relevance or quality of the outcome measure used, and researcher involvement in the program’s design and implementation. The model still reduces program effect sizes if the intervention is deemed not to be a “real-world” trial; this reduction is part of the researcher involvement adjustment.


Acknowledgements

We are grateful to the assigned journal editor, Paul Mazerolle, and three anonymous reviewers for insightful comments.

Cite this article

Yohros, A., Welsh, B.C. Understanding and Quantifying the Scale-Up Penalty: a Systematic Review of Early Developmental Preventive Interventions with Criminological Outcomes. J Dev Life Course Criminology 5, 481–497 (2019). https://doi.org/10.1007/s40865-019-00128-1

DOI: https://doi.org/10.1007/s40865-019-00128-1