Abstract

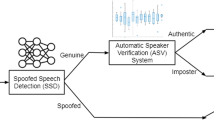

Voice user interfaces (VUIs) have become increasingly popular in recent years. Speaker recognition systems, among the most common VUIs, have emerged as an important technique for security-sensitive applications and services. In this paper, we design, for the first time, a real-time, robust, and adaptive universal adversarial attack against state-of-the-art deep neural network (DNN)-based speaker recognition systems in the white-box setting. Using an audio-agnostic universal perturbation, we cause DNN-based speaker recognition systems to misidentify the speaker as an adversary-chosen target label; a single perturbation can be applied to the voice of any enrolled speaker. In addition, we improve the robustness of our attack by modeling the sound distortions caused by physical over-the-air propagation, which we capture by estimating the room impulse response (RIR). Moreover, we adaptively adjust the perturbation magnitude for each individual utterance via spectral gating, which further improves the imperceptibility of the adversarial perturbations at the cost of a minor increase in attack generation time. Experiments on a public dataset of 109 English speakers demonstrate the effectiveness and robustness of the proposed attack. Our attack achieves an average attack success rate of 90% on both x-vector and d-vector speaker recognition systems. Meanwhile, it achieves a 100× speedup in attack launch time compared with conventional non-universal attacks.
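
The abstract packs three mechanisms into a few sentences: one perturbation reused across arbitrary utterances, a per-utterance spectral gate that adapts its magnitude, and RIR-based modeling of over-the-air playback. The following Python/NumPy code is a minimal sketch of only the forward path an utterance might pass through under such an attack; it is not the authors' implementation. The speaker-recognition DNN, the targeted loss, and the gradient updates are omitted. Several pieces are assumptions: the STFT settings, the gating rule (a per-frequency mean-plus-standard-deviation threshold in the spirit of spectral-gating noise reduction), the `floor` gain outside the mask, and a synthetic exponentially decaying RIR standing in for an estimated one. All function names are hypothetical.

```python
import numpy as np

N_FFT, HOP = 512, 256  # assumed STFT settings (not taken from the paper)


def _window() -> np.ndarray:
    # Periodic sqrt-Hann: analysis x synthesis gives Hann, which sums to 1 at 50% overlap.
    return np.sqrt(np.hanning(N_FFT + 1)[:-1])


def stft(x: np.ndarray) -> np.ndarray:
    n_frames = 1 + (len(x) - N_FFT) // HOP
    frames = np.stack([x[i * HOP:i * HOP + N_FFT] for i in range(n_frames)])
    return np.fft.rfft(frames * _window(), axis=1)


def istft(spec: np.ndarray, length: int) -> np.ndarray:
    frames = np.fft.irfft(spec, n=N_FFT, axis=1) * _window()
    out = np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + N_FFT] += frame  # overlap-add
    return out


def spectral_gate_mask(speech: np.ndarray, n_std: float = 0.5) -> np.ndarray:
    """Hypothetical gate: 1 where a bin's log-magnitude exceeds that frequency's
    mean + n_std * std over time, else 0."""
    log_mag = np.log10(np.abs(stft(speech)) + 1e-10)
    threshold = log_mag.mean(axis=0) + n_std * log_mag.std(axis=0)
    return (log_mag > threshold).astype(float)


def adapt_perturbation(delta: np.ndarray, speech: np.ndarray,
                       eps: float, floor: float = 0.1) -> np.ndarray:
    """Hypothetical per-utterance adaptation of a universal perturbation: keep
    full strength in gated (speech-dominated) bins, scale others by `floor`,
    then re-apply the L-infinity budget `eps`."""
    n = min(len(delta), len(speech))
    gain = floor + (1.0 - floor) * spectral_gate_mask(speech[:n])
    shaped = istft(stft(delta[:n]) * gain, n)
    return np.clip(shaped, -eps, eps)


def synthetic_rir(sr: int, rt60: float = 0.3, length_s: float = 0.25,
                  seed: int = 0) -> np.ndarray:
    """Toy RIR (assumption): direct-path impulse plus noise whose amplitude
    decays by 60 dB after rt60 seconds; stands in for an estimated RIR."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(sr * length_s)) / sr
    rir = 0.1 * rng.standard_normal(t.size) * np.exp(-np.log(1000.0) * t / rt60)
    rir[0] = 1.0  # direct path
    return rir / np.max(np.abs(rir))


def over_the_air(x: np.ndarray, rir: np.ndarray) -> np.ndarray:
    """Simulate playback and recording by convolving with the RIR."""
    return np.convolve(x, rir)[:len(x)]


if __name__ == "__main__":
    sr, eps = 16000, 0.01
    rng = np.random.default_rng(1)
    # Stand-in for a learned universal perturbation (random here, not optimized).
    delta = rng.uniform(-eps, eps, sr)
    rir = synthetic_rir(sr)
    t = np.arange(sr) / sr
    for k in range(3):  # the same delta is reused for every utterance
        speech = 0.3 * np.sin(2 * np.pi * (200 + 50 * k) * t) * (t > 0.5)
        adv = speech + adapt_perturbation(delta, speech, eps)
        received = over_the_air(adv, rir)
        print(k, received.shape, float(np.max(np.abs(adv - speech))))
```

Because `delta` is computed once and only reshaped per utterance, launching the attack in this sketch reduces to one STFT mask and an addition, which is consistent with the real-time claim. In an actual optimization loop, `over_the_air` would sit inside the loss so that the optimizer sees RIR-distorted audio rather than the clean sum.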

Cite this article

Xie, Y., Li, Z., Shi, C. et al. Real-time, Robust and Adaptive Universal Adversarial Attacks Against Speaker Recognition Systems. J Sign Process Syst 93, 1187–1200 (2021). https://doi.org/10.1007/s11265-020-01629-9

DOI: https://doi.org/10.1007/s11265-020-01629-9