Abstract

We consider gradient fields on \({\mathbb {Z}}^d\) for potentials V that can be expressed as

\[ e^{-V(x)}=p\,e^{-\frac{q x^2}{2}}+(1-p)\,e^{-\frac{x^2}{2}}. \]

This representation allows us to associate a random conductance type model to the gradient fields with zero tilt. We investigate this random conductance model and prove correlation inequalities, duality properties, and uniqueness of the Gibbs measure in certain regimes. We then show that there is a close relation between Gibbs measures of the random conductance model and gradient Gibbs measures with zero tilt for the potential V. Based on these results we can give a new proof for the non-uniqueness of ergodic zero-tilt gradient Gibbs measures in dimension 2. In contrast to the first proof of this result we rely on planar duality and do not use reflection positivity. Moreover, we show uniqueness of ergodic zero tilt gradient Gibbs measures for almost all values of p and q and, in dimension \(d\ge 4\), for q close to one or for \(p(1-p)\) sufficiently small.

1 Introduction

Gradient fields are a statistical mechanics model that can be used to model phase separation or, in the case of vector valued fields, solid materials. Formally they can be defined as a random field \((\varphi _x)_{x\in {\mathbb {Z}}^d}\in {\mathbb {R}}^{{\mathbb {Z}}^d}\) with distribution

\[ \frac{1}{Z}\exp \Big (-\sum _{x\sim y}V(\varphi _x-\varphi _y)\Big )\prod _{x\in {\mathbb {Z}}^d}\mathrm {d}\varphi (x). \tag{1.1} \]

Here \(\mathrm {d}\varphi (x)\) denotes the Lebesgue measure, \(V:{\mathbb {R}}\rightarrow {\mathbb {R}}\) a measurable symmetric potential, and \(x\sim y\) indicates that the sum runs over unordered pairs of neighbouring sites of \({\mathbb {Z}}^d\). We can give a meaning to the formal expression (1.1) using the DLR-formalism. The DLR-formalism defines equilibrium distributions, usually called Gibbs measures, for this type of model as measures \(\mu \) on \({\mathbb {R}}^{{\mathbb {Z}}^d}\) such that the conditional probability of the restriction to any finite set is as above. In the setting of gradient interface models, in general no Gibbs measure exists in dimension \(d\le 2\) [33]. Therefore one often considers gradient Gibbs measures [20]. This means that attention is restricted to the \(\sigma \)-algebra generated by the gradient fields

\[ \eta _{x,y}=\varphi _y-\varphi _x\quad \text {for } x\sim y. \tag{1.2} \]

Then infinite volume measures exist if V(s) grows sufficiently fast (linearly is sufficient) as \(s\rightarrow \pm \infty \). Gradient Gibbs measures are also useful to model tilted surfaces. For a translation invariant gradient Gibbs measure \(\mu \) the tilt vector \(u\in {\mathbb {R}}^d\) is defined by

\[ {\mathbb {E}}_\mu \big (\nabla \varphi (x)\big )=u, \tag{1.3} \]

where \(\nabla \varphi (x)\in {\mathbb {R}}^d\) denotes the discrete derivative, i.e., the vector with entries \(\nabla _i\varphi (x)=\varphi (x+e_i)-\varphi (x)\) with \(e_i\) denoting the ith standard unit vector. If the gradient Gibbs measure is ergodic the tilt corresponds to the asymptotic average inclination of almost every realisation of the gradient field.

Gradient interface models have been studied intensively in recent years. In particular, the discrete Gaussian free field with \(V(s)=s^2\), where the fields are Gaussian, has attracted considerable attention. Many of the results obtained in this case were generalized to the class of strictly convex potentials satisfying \(c_1\le V''(s)\le c_2\) for some \(0<c_1<c_2\) and all \(s\in {\mathbb {R}}\). Let us only mention two results for convex potentials and refer to the literature, in particular the reviews [19, 30], for all further results and references. Funaki and Spohn showed in [20] that for every tilt vector u there exists a unique translation invariant gradient Gibbs measure. Moreover, the scaling limit of the model is a massless Gaussian field, as shown by Naddaf and Spencer [28] for zero tilt and generalised to arbitrary tilt by Giacomin et al. [22]. In contrast, for non-convex potentials far less is known because all the techniques seem to rely on convexity in an essential way. For potentials of the form \(V=U+g\), where U is strictly convex and \(g''\in L^q\) for some \(q\ge 1\) with sufficiently small norm, the problem can be reduced to the convex theory by integrating out some degrees of freedom. In this way many results from the convex case can be proved, in particular existence and uniqueness of the Gibbs measure for every tilt and that the scaling limit is Gaussian [11, 12, 15]. This setting corresponds to the high temperature phase. For low temperatures, which correspond to non-convexities far away from the minimum of V, it was shown that the surface tension is strictly convex and the scaling limit is Gaussian [1, 2, 24].

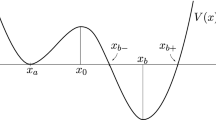

For intermediate temperatures where the potentials can be very non-convex no robust techniques are known. All results to date are restricted to the special class of potentials introduced by Biskup and Kotecky in [6] that can be represented as

\[ e^{-V(s)}=\int _{{\mathbb {R}}_+}e^{-\frac{\kappa s^2}{2}}\,\rho (\mathrm {d}\kappa ), \tag{1.4} \]

where \(\rho \) is a non-negative Borel measure on the positive real line. Biskup and Kotecky mostly considered the simplest nontrivial case, denoting the Dirac measure at \(x\in {\mathbb {R}}\) by \(\delta _x\),

\[ \rho =p\,\delta _q+(1-p)\,\delta _1, \tag{1.5} \]

where \(p\in [0,1]\) and \(q\ge 1\). They show that in dimension \(d=2\) and for \(q>1\) sufficiently large there exist two ergodic zero-tilt gradient Gibbs measures. Later, Biskup and Spohn showed in [7] that nevertheless the scaling limit of every zero-tilt gradient Gibbs measure is Gaussian if the measure \(\rho \) is compactly supported in \((0,\infty )\). In [34] their result was recently extended by Ye to potentials of the form \(V(s)=(1+s^2)^\alpha \) with \(0<\alpha <\frac{1}{2}\) which can be written as in (1.4) for some \(\rho \) with unbounded support.

Our main results concern the phase diagram at zero tilt for this class of potentials. Although our techniques would apply to general \(\rho \) we restrict our attention to the simplest case where \(\rho \) is as in (1.5) and the potential can be written as

\[ e^{-V_{p,q}(s)}=p\,e^{-\frac{q s^2}{2}}+(1-p)\,e^{-\frac{s^2}{2}}. \tag{1.6} \]

For this class of potentials we show uniqueness of the ergodic zero tilt gradient Gibbs measures for almost all p and q and, in dimension \(d\ge 4\) for \(p(1-p)\) or \(q-1\) small. Moreover, we give a new proof for the result that for large q there exists a p such that there are two distinct ergodic gradient Gibbs measures without the use of reflection positivity. For a detailed statement of our main results we refer to Sect. 2. Note that one major drawback is the restriction to zero tilt that applies here and to all earlier results for this model.
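To get a feeling for how non-convex these potentials can be, the following Python sketch evaluates \(V''\) on a grid. It assumes the two-point form \(e^{-V_{p,q}(s)}=p\,e^{-qs^2/2}+(1-p)\,e^{-s^2/2}\) suggested by (1.4) and (1.5); the function names, the grid, and the sample values of p and q are illustrative choices and not part of the original text. Differentiating \(-\log \) of a Gaussian mixture twice gives \(V''(s)={\mathbb {E}}_s[\kappa ]-s^2\,\mathrm {Var}_s(\kappa )\), where the expectation is over \(\kappa \in \{1,q\}\) with weights proportional to the two mixture terms.

```python
import math


def second_derivative_V(s, p, q):
    """V''(s) for exp(-V(s)) = p*exp(-q s^2/2) + (1-p)*exp(-s^2/2) (assumed form)."""
    # Weight w of the stiff conductance kappa = q given the gradient value s.
    a = p * math.exp(-q * s * s / 2.0)
    b = (1.0 - p) * math.exp(-s * s / 2.0)
    w = a / (a + b)
    mean_kappa = w * q + (1.0 - w)
    var_kappa = w * (1.0 - w) * (q - 1.0) ** 2
    # Second derivative of -log(mixture): mean minus s^2 times variance.
    return mean_kappa - s * s * var_kappa


def min_second_derivative(p, q, s_max=6.0, steps=600):
    """Smallest value of V'' on an evenly spaced grid in [0, s_max]."""
    return min(second_derivative_V(i * s_max / steps, p, q) for i in range(steps + 1))


if __name__ == "__main__":
    for p in (0.01, 0.5, 0.99):
        print(p, min_second_derivative(p, 4.0))
```

For \(q=4\) the minimum is positive for \(p=0.01\) and clearly negative for \(p=0.99\), in line with the discussion of convex and very non-convex regimes.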

The main reason to study this class of potentials is that they are much more tractable because the variable \(\kappa \) can be considered as an additional degree of freedom using the representation (1.4). This leads to extended gradient Gibbs measures which are given by the joint law of \((\eta _{e},\kappa _{e})_{e\in {\mathbf {E}}({\mathbb {Z}}^d)}\). These extended gradient Gibbs measures can be represented as a mixture of non-homogeneous Gaussian fields with bond potential \(\kappa _{e}\eta ^2/2\) for every edge \(e\in {\mathbf {E}}({\mathbb {Z}}^d)\) and \(\kappa _{e}\in {\mathbb {R}}_+\). This implies that for a given \(\kappa \) the distribution of the random field is Gaussian with covariance given by the inverse of the operator \(\Delta _\kappa \) where

\[ \Delta _\kappa f(x)=\sum _{y\sim x}\kappa _{\{x,y\}}\big (f(x)-f(y)\big ). \tag{1.7} \]

In all the works mentioned before this structure is frequently used, e.g. in [7] it is proved that the resulting \(\kappa \)-marginal of the extended gradient Gibbs measure is ergodic so that well known homogenization results for random walks in ergodic environments can be applied.

The main purpose of this note is to investigate the properties of the \(\kappa \)-marginal of extended gradient Gibbs measures in more detail. The starting point is the observation that the \(\kappa \)-marginal of an extended gradient Gibbs measure with zero tilt is itself a Gibbs measure for a specification of a certain random conductance model. Moreover, the ergodic Gibbs measures of the random conductance model are in one-to-one correspondence with the ergodic gradient Gibbs measures with zero tilt. In particular, we can lift results about the (simpler) random conductance model to results about gradient Gibbs measures. Let us mention that massive \({\mathbb {R}}\)-valued random fields have earlier been connected to discrete percolation models to analyse the existence of phase transitions [35]. For gradient models the setting is slightly different because we consider a random conductance model on the bonds with long-range correlations, while for massive models one typically considers some type of site percolation with quickly decaying correlations.

The random conductance model is simpler than the corresponding gradient interface model in at least three aspects. Firstly, it has a compact single spin state space which is actually just \(\{1,q\}\) in our main case of interest. Secondly, it satisfies strong correlation inequalities in particular the FKG-inequality while there are no such results for gradient interface models with non-convex interactions. The proofs of the correlation inequalities are further simplified by the observation that the random conductance model is closely related to determinantal processes and they then follow immediately from similar results for the weighted spanning tree. Finally, duality takes a much clearer form for the random conductance model. It was already observed in [6] that in dimension \(d=2\) the gradient interface model with potential \(V_{p,q}\) exhibits a duality property when defined on the torus. Moreover, there is a self dual point \(p_{\mathrm {sd}}=p_{\mathrm {sd}}(q)\in (0,1)\) satisfying the equation

\[ \Big (\frac{p_{\mathrm {sd}}}{1-p_{\mathrm {sd}}}\Big )^4=q, \tag{1.8} \]

where the model agrees with its own dual and two Gibbs measures exist for large q. For the random conductance model duality can be stated for arbitrary planar graphs and similarly to the coexistence proof for the Ising model this duality can be used to show the existence of two distinct Gibbs measures at \(p_{\mathrm {sd}}\). We remark that many of our techniques and results for the random conductance model originated in the study of the Potts model and the random cluster model and we conjecture further similarities. To avoid confusion between the terms random cluster model and random conductance model we will use the term FK random cluster model in the following.

This paper is structured as follows. In Sect. 2 we give a precise definition of gradient Gibbs measures and state our main results. Then, in Sect. 3 we introduce and motivate the random conductance model and its relation to extended gradient Gibbs measures. We prove properties of the random conductance model in Sects. 4 and 5. Finally, in Sect. 6 we use the duality of the model to reprove the phase transition result. Two technical proofs and some results about regularity properties of discrete elliptic equations are relegated to appendices.

2 Model and main results

2.1 Specifications

Let us briefly recall the definition of a specification because the concept will be needed in full generality for the random conductance model (see Sect. 4). We consider a countable set S (mostly \({\mathbb {Z}}^d\) or the edges of \({\mathbb {Z}}^d\)) and a measurable state space \((F,{{\mathcal {F}}})\) (mostly either \(|F|=2\) or \((F,{{\mathcal {F}}})= ({\mathbb {R}},{\mathcal {B}}({\mathbb {R}}))\)). Random fields are probability measures on \((F^S,{{\mathcal {F}}}^S)\) where \({{\mathcal {F}}}^S\) denotes the product \(\sigma \)-algebra. The set of probability measures on a measurable space \((X,{\mathcal {X}})\) will be denoted by \({\mathcal {P}}(X,{\mathcal {X}})\). For any \(\Lambda \subset S\) we denote by \(\pi _\Lambda :F^S\rightarrow F^\Lambda \) the canonical projection. We often consider the \(\sigma \)-algebra \({{\mathcal {F}}}_\Lambda =\pi _\Lambda ^{-1}({{\mathcal {F}}}^\Lambda )\) of events depending on the set \(\Lambda \). Recall that a probability kernel \(\gamma \) from \((X,{\mathcal {B}})\) to \((X,{\mathcal {X}})\), where \({\mathcal {B}}\subset {\mathcal {X}}\) is a sub-\(\sigma \)-algebra, is called proper if \(\gamma (B,\cdot )={\mathbb {1}}_B\) for \(B\in {\mathcal {B}}\).

Definition 2.1

A specification is a family of proper probability kernels \(\gamma _\Lambda \) from \({{\mathcal {F}}}_{\Lambda ^\mathrm {c}}\) to \({{\mathcal {F}}}_{S}\) indexed by finite subsets \(\Lambda \subset S\) such that \(\gamma _{\Lambda _1}\gamma _{\Lambda _2} =\gamma _{\Lambda _1}\) if \(\Lambda _2\subset \Lambda _1\). We define the set of Gibbs measures for the specification \(\gamma \) by

\[ {\mathcal {G}}(\gamma )=\big \{\mu \in {\mathcal {P}}(F^S,{{\mathcal {F}}}^S):\ \mu \gamma _\Lambda =\mu \ \text {for all finite } \Lambda \subset S\big \}. \]

We call measures in \({\mathcal {G}}(\gamma )\) specified by \(\gamma \).

Remark 2.2

There is a well known equivalent definition of Gibbs measures. A cofinal set I is a subset of subsets of S with the property that for any finite set \(\Lambda _0\subset S\) there is \(\Lambda \in I\) such that \(\Lambda _0\subset \Lambda \). Then \(\mu \in {\mathcal {G}}(\gamma )\) if and only if \(\mu \gamma _{\Lambda }=\mu \) for \(\Lambda \in I\) where I is a cofinal subset of subsets of S. See Remark 1.24 in [21] for a proof.
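The two defining properties can be checked by hand in a finite toy setting. The sketch below is only an illustration under simplifying assumptions: S consists of three sites, \(F=\{0,1\}\), and the kernels \(\gamma _\Lambda \) are the conditional distributions of an arbitrary strictly positive measure (the weights are hypothetical). It verifies \(\mu \gamma _\Lambda =\mu \) and the consistency \(\gamma _{\Lambda _1}\gamma _{\Lambda _2}=\gamma _{\Lambda _1}\) for \(\Lambda _2\subset \Lambda _1\) in exact rational arithmetic.

```python
from fractions import Fraction
from itertools import combinations, product

SITES = (0, 1, 2)
CONFIGS = list(product((0, 1), repeat=len(SITES)))

# Hypothetical strictly positive weights; any positive numbers would do.
weights = {c: Fraction(1 + 2 * c[0] + 3 * c[1] * c[2] + c[0] * c[2]) for c in CONFIGS}
total = sum(weights.values())
mu = {c: w / total for c, w in weights.items()}


def kernel(region):
    """gamma_region(sigma | omega): resample `region`, keep omega outside of it."""
    outside = [i for i in SITES if i not in region]
    gamma = {}
    for omega in CONFIGS:
        compatible = [s for s in CONFIGS if all(s[i] == omega[i] for i in outside)]
        norm = sum(mu[s] for s in compatible)
        gamma[omega] = {s: mu[s] / norm for s in compatible}
    return gamma


def apply_measure(nu, gamma):
    """The measure nu gamma, i.e. sum over omega of nu(omega) gamma(. | omega)."""
    out = {c: Fraction(0) for c in CONFIGS}
    for omega, mass in nu.items():
        for sigma, prob in gamma[omega].items():
            out[sigma] += mass * prob
    return out


def compose(gamma1, gamma2):
    """Kernel gamma1 gamma2: first apply gamma1, then gamma2 (zero entries dropped)."""
    return {
        omega: {s: v for s, v in apply_measure(gamma1[omega], gamma2).items() if v}
        for omega in CONFIGS
    }


regions = [set(r) for k in (1, 2, 3) for r in combinations(SITES, k)]
invariant = all(apply_measure(mu, kernel(r)) == mu for r in regions)
consistent = all(
    compose(kernel(big), kernel(small)) == kernel(big)
    for big in regions
    for small in regions
    if small <= big
)
print(invariant, consistent)
```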

2.2 Gradient Gibbs measures

We introduce the relevant notation and the definition of Gibbs and gradient Gibbs measures to state our results. For a broader discussion see [21, 30]. In this paragraph we consider real valued random fields indexed by a lattice \(\Lambda \subset {\mathbb {Z}}^d\). We will denote the set of nearest neighbour bonds of \({\mathbb {Z}}^d\) by \({\mathbf {E}}({\mathbb {Z}}^d)\). More generally, we will write \({\mathbf {E}}(G)\) and \({\mathbf {V}}(G)\) for the edges and vertices of a graph G. To consider gradient fields it is useful to choose an orientation of the edges. We orient the edges \(e=\{x,y\}\in {\mathbf {E}}({\mathbb {Z}}^d)\) from x to y iff \(x\le y\) (coordinate-wise), i.e., we can view the graph \(({\mathbb {Z}}^d,{\mathbf {E}}({\mathbb {Z}}^d))\) as a directed graph, but mostly we work with the undirected graph.

To any random field \(\varphi :{\mathbb {Z}}^d\rightarrow {\mathbb {R}}\) we associate the gradient field \(\eta =\nabla \varphi \in {\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) given by \(\eta _{e}=\varphi _y-\varphi _x\) if \(\{x,y\}\in {\mathbf {E}}({\mathbb {Z}}^d)\) are nearest neighbours and \(x\le y\). We formally write \(\eta _{x,y}=\eta _e=\varphi _y-\varphi _x\) and \(\eta _{y,x}=-\eta _e=\varphi _x-\varphi _y\). The gradient field \(\eta \) satisfies the plaquette condition

\[ \eta _{x_1,x_2}+\eta _{x_2,x_3}+\eta _{x_3,x_4}+\eta _{x_4,x_1}=0 \]

for every plaquette, i.e., every nearest neighbour path \(x_1,x_2,x_3,x_4,x_1\). Vice versa, given a field \(\eta \in {\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) that satisfies the plaquette condition there is, up to constant shifts, a unique field \(\varphi \) such that \(\eta =\nabla \varphi \) (the antisymmetry of the gradient field is contained in our definition). We will refer to those fields as gradient fields and denote the set of gradient fields by \({\mathfrak {X}}\subset {\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}\). To simplify the notation we write \(\varphi _\Lambda \) for \(\Lambda \subset {\mathbb {Z}}^d\) and \(\eta _{E}\) for \(E\subset {\mathbf {E}}({\mathbb {Z}}^d)\) for the restriction of fields and gradient fields. We usually identify a subset \(\Lambda \subset {\mathbb {Z}}^d\) with the graph generated by it and as before we write \({\mathbf {E}}(\Lambda )\) for the bonds with both endpoints in \(\Lambda \).
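The correspondence between fields and gradient fields can be made concrete on a finite box. The following sketch is a toy stand-in for \({\mathbb {Z}}^2\) with hypothetical field values and helper names: it computes \(\eta =\nabla \varphi \) on the edges oriented from x to y with \(x\le y\), checks that the oriented sum around every unit square vanishes (edges traversed against their orientation enter with a minus sign), and recovers \(\varphi \) up to the constant \(\varphi _0\) by summing \(\eta \) along paths.

```python
from fractions import Fraction
from itertools import product

N = 4  # side length of the finite box {0,...,N-1}^2
sites = list(product(range(N), repeat=2))
# Arbitrary exact test field; the particular values are illustrative only.
phi = {(x, y): Fraction((3 * x * x - 5 * y + 7 * x * y) % 11, 2) for x, y in sites}


def gradient(field):
    """eta_e = field(y) - field(x) for every edge oriented from x to y with x <= y."""
    eta = {}
    for (x, y) in sites:
        if x + 1 < N:
            eta[((x, y), (x + 1, y))] = field[(x + 1, y)] - field[(x, y)]
        if y + 1 < N:
            eta[((x, y), (x, y + 1))] = field[(x, y + 1)] - field[(x, y)]
    return eta


def plaquette_sums(eta):
    """Oriented sum of eta around each unit square a -> b -> c -> d -> a."""
    sums = []
    for x in range(N - 1):
        for y in range(N - 1):
            a, b, c, d = (x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)
            sums.append(eta[(a, b)] + eta[(b, c)] - eta[(d, c)] - eta[(a, d)])
    return sums


def integrate(eta):
    """Recover the field that vanishes at the origin by summing eta along paths."""
    psi = {(0, 0): Fraction(0)}
    for x in range(1, N):
        psi[(x, 0)] = psi[(x - 1, 0)] + eta[((x - 1, 0), (x, 0))]
    for x in range(N):
        for y in range(1, N):
            psi[(x, y)] = psi[(x, y - 1)] + eta[((x, y - 1), (x, y))]
    return psi


eta = gradient(phi)
psi = integrate(eta)
print(all(s == 0 for s in plaquette_sums(eta)))
```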

For a subgraph \(H\subset G\) we write \(\partial H\) for the (inner) boundary of H consisting of all points \(x\in {\mathbf {V}}(H)\) such that there is an edge \(e=\{x,y\}\in {\mathbf {E}}(G){\setminus } {\mathbf {E}}(H)\). In the case of a graph generated by \(\Lambda \subset G\) we have \(x\in \partial \Lambda \) if there is \(y\in \Lambda ^{\mathrm {c}}\) such that \(\{x,y\}\in {\mathbf {E}}(G)\). We define \(\Lambda ^\circ =\Lambda {\setminus } \partial \Lambda \). For a finite subset \(\Lambda \subset {\mathbb {Z}}^d\) we denote by \(\mathrm {d}\varphi _\Lambda \) the Lebesgue measure on \({\mathbb {R}}^\Lambda \).

We define for \(\omega \in {\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}_{g} \) and \(\Lambda \) finite and simply connected (i.e., \(\Lambda ^\mathrm {c}\) connected) the following a priori measure on gradient configurations

where \({\tilde{\varphi }}\) is a configuration such that \(\nabla {\tilde{\varphi }}=\omega \) and \(\nabla _*\) the push-forward of this measure along the gradient map \(\nabla :{\mathbb {R}}^{{\mathbb {Z}}^d}\rightarrow {\mathfrak {X}}\). The shift invariance of the Lebesgue measure implies that this definition is independent of the choice of \({\tilde{\varphi }}\) and it only depends on the restriction \(\omega _{{\mathbf {E}}(\Lambda )^{\mathrm {c}}}\) since \(\Lambda ^\mathrm {c}\) is connected. For a potential \(V:{\mathbb {R}}\rightarrow {\mathbb {R}}\) satisfying some growth condition we define the specification \(\gamma _\Lambda \)

where the constant \(Z_\Lambda (\omega _{{\mathbf {E}}(\Lambda )^{\mathrm {c}}})\) ensures the normalization of the measure. We introduce the notation \({\mathcal {E}}_{E}=\pi _E^{-1}(\mathcal {B}({\mathbb {R}})^E)\) for \(E\subset {\mathbf {E}}({\mathbb {Z}}^d)\) for the \(\sigma \)-algebra of events depending only on E. In accordance with Definition 2.1 measures that are specified by the specification \(\gamma \), i.e., measures \(\mu \) that satisfy for simply connected \(\Lambda \subset {\mathbb {Z}}^d\)

will be called gradient Gibbs measures for the potential V.

For \(a\in {\mathbb {Z}}^d\) we define the shifts \(\tau _a:{\mathbb {Z}}^d\rightarrow {\mathbb {Z}}^d\) and \(\tau _a:{\mathbf {E}}({\mathbb {Z}}^d)\rightarrow {\mathbf {E}}({\mathbb {Z}}^d)\) (always using the same symbol \(\tau _a\) for shifts) by

We also consider the extension \(\tau _a:{\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}\rightarrow {\mathbb {R}}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) to gradient fields which is defined by

A measure is translation invariant if \(\mu (\tau _a^{-1}(A))=\mu (A)\) for all a and \(A\in {\mathcal {B}}({\mathbb {R}})^{{\mathbf {E}}({\mathbb {Z}}^d)}\). An event is translation invariant if \(\tau _a(A)=A\) for all \(a\in {\mathbb {Z}}^d\). A gradient measure is ergodic if \(\mu (A)\in \{0,1\}\) for all translation invariant A.

2.3 Main results

Our main results concern the uniqueness and non-uniqueness of gradient Gibbs measures with zero tilt for potentials as in (1.6). Our first main result shows that the shift invariant gradient Gibbs measures are unique for almost all values of p and q.

Theorem 2.3

For every q and \(d\ge 2\) there is an at most countable set \(N(q,d)\subset [0,1]\) such that for any \(p\in [0,1]{\setminus } N(q,d)\) there is a unique shift invariant ergodic gradient Gibbs measure \(\mu \) with zero tilt for the potential \(V_{p,q}\).

This theorem is proved in Sect. 5 below the proof of Theorem 5.1. Moreover, we reprove the non-uniqueness result originally shown in [6] for this type of potential.

Theorem 2.4

There is \(q_0\ge 1\) such that for \(d=2\), \(q\ge q_0\), and \(p=p_{\mathrm {sd}}(q)\) the solution of (1.8), there are at least two shift invariant gradient Gibbs measures with zero tilt.

The proof of this theorem is given at the end of Sect. 6. Moreover we prove uniqueness for ’high temperatures’ and dimension \(d\ge 4\). This corresponds to the regime where the Dobrushin condition holds.

Theorem 2.5

Let \(d\ge 4\). For any \(q\ge 1\) there exists \(p_0=p_0(q,d)>0\) such that for all \(p\in [0,p_0)\cup (1-p_0,1]\) there is a unique shift invariant ergodic gradient Gibbs measure with zero tilt for the potential \(V_{p,q}\). Moreover, there exists \(q_0=q_0(d)>1\) such that for any \(q\in [1,q_0]\) and any \(p\in [0,1]\) there is a unique shift invariant ergodic gradient Gibbs measure with zero tilt for the potential \(V_{p,q}\).

The proof of this theorem is given in Sect. 5 below the proof of Theorem 5.6.

The main tool in the proofs of these theorems is the fact that the structure of the potentials V in (1.4) allows us to consider \(\kappa \) as a further degree of freedom and we consider the joint distribution of the gradient field \(\eta \) and \(\kappa \). We show that the law of the \(\kappa \)-marginal can be related to a random conductance model. The analysis of this model then translates back into the theorems stated before. We will make those statements precise in the next section. Let us end this section with some remarks.

Remark 2.6

1. For spin systems with finite state space and bounded interactions there are general results that show that phase transitions, i.e., non-uniqueness of the Gibbs measure, are rare, see, e.g., [21]. Theorem 2.3 establishes a similar result for a specific class of potentials for an unbounded spin space where no general results are known. As discussed in more detail at the end of Sect. 5 we expect that for every \(q\ge 1\) the Gibbs measure is unique for all \(p\in [0,1]\) except possibly for \(p=p_c\) for some critical value \(p_c=p_c(q)\). Hence, Theorem 2.3 is far from optimal but we hope that the results provided in this paper prove useful to establish stronger results.

2. Let us compare the results to earlier results in the literature. For \(p/(1-p)<1/q\) the potential \(V_{p,q}\) is strictly convex so that uniqueness of the translation invariant, ergodic Gibbs measures is well known and holds for every tilt. The two step integration used by Cotar and Deuschel extends the uniqueness result to the regime \(p/(1-p)<C/\sqrt{q}\) (see Section 3.2 in [11]). In particular the case \(p\in [0,p_0)\) in Theorem 2.5 is included in earlier results. However, the potential becomes very non-convex (has a very negative second derivative at some points) for p close to 1 and the uniqueness result for \(p\in (1-p_0,1]\) and \(d\ge 4\) appears to be new. In this regime the only known result seems to be convexity of the surface tension as a function of the tilt which was shown in [2] (see in particular Proposition 2.4 there). Their results apply to p very close to one, \(q-1\) very small, and \(d\le 3\). The results in [1] remove the restrictions on q and d.

3. The restriction to dimension \(d\ge 4\) arises from the fact that the Green function for inhomogeneous elliptic operators in divergence form decays slower than in the homogeneous case.

3 Extended gradient Gibbs measures and random conductance model

3.1 Extended gradient Gibbs measure

In this work we restrict to potentials of the form introduced in (1.4). As already discussed in more detail in [6, 7] it is possible to use the special structure of V to raise \(\kappa \) to a degree of freedom. Let \(\mu \) be a gradient Gibbs measure for V. For a finite set \(E\subset {\mathbf {E}}({\mathbb {Z}}^d)\) and Borel sets \({\mathbf {A}}\subset {\mathbb {R}}^E\) and \({\mathbf {B}}\subset {\mathbb {R}}_+^E\) we define the extended gradient Gibbs measure as in [6] by

It can be checked that this is a consistent family of measures and thus we can extend \({\tilde{\mu }}\) to a measure on \(({\mathbb {R}}\times {\mathbb {R}}_+)^{{\mathbf {E}}({\mathbb {Z}}^d)}\). It was explained in [6] that \(\tilde{\mu }\) is itself a Gibbs measure for the specification \({\tilde{\gamma }}_\Lambda \) defined by

Note that the distribution \((\mathrm {d}{\bar{\eta }},\mathrm {d}{\bar{\kappa }})_{{\mathbf {E}}(\Lambda )}\) actually only depends on \(\eta _{{\mathbf {E}}(\Lambda )^{\mathrm {c}}}\) and is independent of \(\kappa \). Let us add one remark concerning the notation. In this work we essentially consider three strongly related viewpoints of one model. The first viewpoint is that of gradient Gibbs measures, which are measures on \({\mathfrak {X}}\). They will be denoted by \(\mu \) and the corresponding specification is denoted by \(\gamma \). Then there are extended gradient Gibbs measures for a specification \({\tilde{\gamma }}\). They are measures on \({\mathfrak {X}}\times {\mathbb {R}}_+^{{\mathbf {E}}({\mathbb {Z}}^d)}\) and will be denoted by \({\tilde{\mu }}\). The \(\eta \)-marginal of \({\tilde{\mu }}\) is a gradient Gibbs measure \(\mu \). Finally there is also the \(\kappa \)-marginal of \({\tilde{\mu }}\) which is a measure on \({\mathbb {R}}_+^{{\mathbf {E}}({\mathbb {Z}}^d)}\) and will be denoted by \({\bar{\mu }}\). An important result here is that \({\bar{\mu }}\) is a Gibbs measure for a specification \({\bar{\gamma }}\) if \(\rho \) is a measure as in (1.5). In this case \({\bar{\mu }}\) is a measure on the discrete space \(\{1,q\}^{{\mathbf {E}}({\mathbb {Z}}^d)}\). We expect that this result can be extended to far more general measures \(\rho \) but we do not pursue this matter here. To keep the notation consistent we denote objects with single spin space \({\mathbb {R}}\), e.g., gradient Gibbs measures, without symbol modifier, objects with single spin space \(\{1,q\}\), e.g., the \(\kappa \)-marginal, with a bar, and objects with single spin space \(\{1,q\}\times {\mathbb {R}}\), e.g., extended gradient Gibbs measures, with a tilde.

It was already remarked in [6] that this setting resembles the situation for the Potts model that can be coupled to the FK random cluster model via the Edwards–Sokal coupling measure.

3.2 The random conductance model

As explained before our strategy is to analyse the \(\kappa \)-marginal of extended gradient Gibbs measures and then use the results to deduce properties of the gradient Gibbs measures for \(V_{p,q}\). The key observation is that the \(\kappa \)-marginal of extended gradient Gibbs measures is given by the infinite volume limit of a strongly coupled random conductance model. To motivate the definition of the random conductance model we consider the \(\kappa \)-marginal of the extended specification \(\tilde{\gamma }\) defined in (3.2). For zero boundary value \({\bar{0}}\in {\mathfrak {X}}\) with \({\bar{0}}_e=0\) and \(\lambda \in \{1,q\}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) we obtain

We write \(\Lambda ^w=\bar{\Lambda }/\partial \Lambda \) for the graph where the entire boundary is collapsed to a single point (this is called wired boundary conditions and we will discuss this below in more detail). We denote the lattice Laplacian with conductances \(\lambda \) and zero boundary condition outside of \(\Lambda ^\circ \) by \(\tilde{\Delta }_{\lambda }^{{\Lambda }^w}\), i.e., \(\tilde{\Delta }_{\lambda }^{{\Lambda }^w}\) acts on functions \(f:{\Lambda ^\circ }\rightarrow {\mathbb {R}}\) by \(\tilde{\Delta }_{\lambda }^{{\Lambda }^w}f(x) =\sum _{y\sim x} \lambda _{\{x,y\}}(f(x)-f(y))\) where we set \(f(y)=0\) for \(y\notin \Lambda ^\circ \). The definition (3.2) and an integration by parts followed by Gaussian calculus then imply

It simplifies the presentation to introduce the random conductance model of interest in a slightly more general setting. We consider a finite and connected graph \(G=(V,E)\). The combinatorial graph Laplacian \(\Delta _c\) associated with a set of conductances \(c:E\rightarrow {\mathbb {R}}_+\) is defined by

\[ \Delta _c f(x)=\sum _{y\sim x}c_{\{x,y\}}\big (f(x)-f(y)\big ) \]

for any function \(f:V\rightarrow {\mathbb {R}}\). Note that we defined the graph Laplacian as a non-negative operator which is convenient for our purposes and common in the context of graph theory. In the following we view the Laplacian \(\Delta _c\) as a linear map on the space \(H_0=\{f:V\rightarrow {\mathbb {R}}: \sum _{x\in V} f(x)=0\}\) of functions with vanishing average. We define \(\det \Delta _c\) as the determinant of this linear map. By the maximum principle the Laplacian is injective on \(H_0\), hence \(\det \Delta _c>0\). Sometimes we clarify the underlying graph by writing \(\Delta _c^G\).

Remark 3.1

In the general setting it is more natural to let the Laplacian act on \(H_0\) instead of fixing a point to 0 as in the definition of \(\tilde{\Delta }_{\lambda }^{{\Lambda }^w}\) above where this corresponds to Dirichlet boundary conditions. It would also be possible to fix a point \(x_0\in {\mathbf {V}}(G)\) and consider \(\tilde{\Delta }_c^G\) acting on functions \(f:{\mathbf {V}}(G){\setminus } \{x_0\}\rightarrow {\mathbb {R}}\) defined by \(({\tilde{\Delta }}_c^Gf)(x)=\sum _{y\sim x} c_{\{x,y\}} (f(x)-f(y))\) for \(x\in {\mathbf {V}}(G){\setminus } \{x_0\}\) where we set \(f(x_0)=0\). It is easy to see using, e.g., Gaussian calculus and a change of measure that the determinant of \({\tilde{\Delta }}_c^G\) is independent of \(x_0\) and

\[ \det {\tilde{\Delta }}_c^G=\frac{\det \Delta _c^G}{|{\mathbf {V}}(G)|}. \]

Note that the Gibbs property is harder to verify when restricting the average.
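The relation between the two determinants in this remark can be checked on a small example. The sketch below rests on assumptions that are not in the original text: the example graph and its conductances are hypothetical, and the determinant on \(H_0\) is computed as \(\det (L+J/n)\), where L is the Laplacian matrix, J the all-ones matrix and n the number of vertices. This works because \(L+J/n\) acts as L on mean-zero functions and as the identity on constants. Under the standard matrix-tree theorem the grounded determinant should then equal the determinant on \(H_0\) divided by n, independently of \(x_0\).

```python
from fractions import Fraction


def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def laplacian(n, conductances):
    """Non-negative graph Laplacian: (L f)(x) = sum_y c_xy (f(x) - f(y))."""
    lap = [[Fraction(0)] * n for _ in range(n)]
    for (x, y), c in conductances.items():
        lap[x][x] += c
        lap[y][y] += c
        lap[x][y] -= c
        lap[y][x] -= c
    return lap


def det_grounded(n, conductances, x0):
    """Determinant with f(x0) = 0 imposed, i.e. row and column x0 removed."""
    lap = laplacian(n, conductances)
    keep = [i for i in range(n) if i != x0]
    return det([[lap[i][j] for j in keep] for i in keep])


def det_mean_zero(n, conductances):
    """Determinant of the Laplacian restricted to mean-zero functions, via L + J/n."""
    lap = laplacian(n, conductances)
    return det([[lap[i][j] + Fraction(1, n) for j in range(n)] for i in range(n)])


# Hypothetical example: a 4-cycle with one chord and conductances in {1, q}, q = 3.
example = {(0, 1): 1, (1, 2): 3, (2, 3): 1, (3, 0): 3, (0, 2): 3}
grounded = [det_grounded(4, example, x0) for x0 in range(4)]
print(grounded, det_mean_zero(4, example))
```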

Motivated by (3.4) we fix a real number \(q> 1\) and consider the following probability measure on \(\{1,q\}^E\)

\[ {\mathbb {P}}^{G,p}(\kappa )=\frac{1}{Z}\,\frac{p^{|\{e\in E:\,\kappa _e=q\}|}\,(1-p)^{|\{e\in E:\,\kappa _e=1\}|}}{\sqrt{\det \Delta _\kappa }} \]

where \(Z=Z^{G,p}\) denotes a normalisation constant such that \({\mathbb {P}}^{G,p}\) is a probability measure. In the following we will often drop G and p from the notation and we will always suppress q. We restrict our attention to \(q\ge 1\) because by scaling the model with conductances \(\{1,q\}\) has the same distribution as a model with conductances \(\{\alpha ,\alpha q\}\) for \(\alpha >0\) so that we can set the smaller conductance to 1. Let us state a remark concerning the relation to the FK random cluster model.

Remark 3.2

1. We chose the notation such that the similarity to the FK random cluster model is apparent. Both models have an a priori distribution given by independent Bernoulli distributions with parameter p on the bonds, which is then correlated by a complicated infinite range interaction depending on q. For \(q=1\) the FK random cluster model reduces to Bernoulli percolation. Similarly, as \(q\rightarrow 1\) the pushforward of the distribution of the random conductance model under the map \(\{1,q\}\rightarrow \{0,1\}\) converges to the Bernoulli percolation measure. At the end of Sect. 5 we state a couple of conjectures about the behaviour of this model that show that we expect similarities with the FK random cluster model in many more aspects.

2. While there are several close similarities to the FK random cluster model there is also one important difference that seems to pose additional difficulties in the analysis of this model. The conditional distribution in a finite set depends on the entire configuration of the conductances outside the finite set (not just a partition of the boundary as in the FK random cluster model). In particular the often used argument that the conditional distribution of a random cluster model in a set given that all boundary edges are closed is the free boundary FK random cluster distribution has no analogue in our setting.

3. We refer to the model as a random conductance model since we will (not very surprisingly) use tools from the theory of electrical networks. Note that in the definition of the potential V the parameters correspond to different (random) stiffness of the bonds.
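The measure \({\mathbb {P}}^{G,p}\) can be tabulated exactly on a very small graph. The sketch below assumes the weight \(p^{h}(1-p)^{s}/\sqrt{\det \Delta _\kappa }\), with h and s the numbers of edges with conductance q and 1; this is our reading of the definition motivated by (3.4). The graph, the function names, and the parameter values are hypothetical. The determinant is computed with one vertex grounded, which changes it only by a constant factor that cancels in the normalisation. At \(q=1\) the determinant no longer depends on the configuration and the measure reduces to the Bernoulli product measure, in line with the first item of the remark.

```python
import math
from fractions import Fraction
from itertools import product

N_VERTICES = 4
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]  # hypothetical: 4-cycle plus a chord


def grounded_det(conductances):
    """Exact determinant of the Laplacian with the last vertex fixed to 0."""
    n = N_VERTICES - 1
    m = [[Fraction(0)] * n for _ in range(n)]
    for (x, y), c in zip(EDGES, conductances):
        for v in (x, y):
            if v < n:
                m[v][v] += c
        if x < n and y < n:
            m[x][y] -= c
            m[y][x] -= c
    result = Fraction(1)
    # Gaussian elimination; the matrix is positive definite, so pivots are non-zero.
    for col in range(n):
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n):
                m[r][k] -= factor * m[col][k]
    return result


def measure(p, q):
    """Probabilities of all configurations, keyed by tuples of booleans (True = hard edge)."""
    weights = {}
    for hard in product((False, True), repeat=len(EDGES)):
        kappa = [Fraction(q) if is_hard else Fraction(1) for is_hard in hard]
        h = sum(hard)
        s = len(EDGES) - h
        weights[hard] = p ** h * (1 - p) ** s / math.sqrt(grounded_det(kappa))
    z = sum(weights.values())
    return {config: w / z for config, w in weights.items()}


def hard_marginal(mu, edge_index):
    """Probability that the edge with the given index carries conductance q."""
    return sum(prob for config, prob in mu.items() if config[edge_index])


if __name__ == "__main__":
    print(hard_marginal(measure(0.3, 4), 0), hard_marginal(measure(0.3, 1), 0))
```

Since the determinant is increasing in every conductance, the density with respect to the Bernoulli measure is decreasing, so for \(q>1\) the marginal probability of a hard edge lies below p.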

4 Basic properties of the random conductance model

4.1 Preliminaries

As before we consider a connected graph \(G=(V,E)\). To simplify the notation we introduce for \(E'\subset E\) and \(\kappa \in \{1,q\}^E\) the notation

\[ h(\kappa ,E')=|\{e\in E':\kappa _e=q\}|,\qquad s(\kappa ,E')=|\{e\in E':\kappa _e=1\}| \]

for the number of hard and soft edges respectively and we define \(h(\kappa )=h(\kappa ,E)\) and \(s(\kappa )=s(\kappa ,E)\). Let us introduce the weight of a subset of edges \({\varvec{t}}\subset E\) by defining

\[ w(\kappa ,{\varvec{t}})=\prod _{e\in {\varvec{t}}}\kappa _e. \]

We will denote the set of all spanning trees of a graph by \(\mathrm {ST}(G)\). We identify spanning trees with their edge sets. In the following, we will frequently use the Kirchhoff formula

\[ \det \Delta _c=|V|\sum _{{\varvec{t}}\in \mathrm {ST}(G)}w(c,{\varvec{t}}) \tag{4.4} \]

for the determinant of a weighted graph Laplacian (cf. [32] for a proof). Let us remark that the Kirchhoff formula is frequently used in statistical mechanics and has also been used in the context of gradient interface models for some potentials as in (1.4) in [9].

Remark 4.1

Note that Eq. (4.4) remains true for graphs with multi-edges and loops. Indeed, loops have no contribution on both sides and multi-edges can be replaced by a single edge with the sum of the conductances as conductance.
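The Kirchhoff formula and the remark on multi-edges and loops can be verified by brute force on small graphs. The sketch below is an illustration with hypothetical example graphs. It assumes the normalisation \(\det \Delta _c=|V|\sum _{{\varvec{t}}}\prod _{e\in {\varvec{t}}}c_e\), which is what the matrix-tree theorem gives when the determinant is taken on mean-zero functions, and it computes that determinant as \(\det (L+J/n)\) with J the all-ones matrix.

```python
from fractions import Fraction
from itertools import combinations


def det(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in row] for row in matrix]
    n, result = len(m), Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def is_spanning_tree(n, edges):
    """n-1 edges form a spanning tree iff adding them one by one never closes a cycle."""
    parent = list(range(n))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for x, y, _ in edges:
        rx, ry = find(x), find(y)
        if rx == ry:
            return False
        parent[rx] = ry
    return True


def tree_sum(n, edges):
    """Sum over spanning trees of the product of the conductances on the tree."""
    total = Fraction(0)
    for subset in combinations(edges, n - 1):
        if is_spanning_tree(n, subset):
            weight = Fraction(1)
            for _, _, c in subset:
                weight *= c
            total += weight
    return total


def mean_zero_det(n, edges):
    """det(L + J/n): the determinant of the Laplacian on mean-zero functions."""
    lap = [[Fraction(1, n)] * n for _ in range(n)]
    for x, y, c in edges:
        lap[x][x] += c
        lap[y][y] += c
        lap[x][y] -= c
        lap[y][x] -= c
    return det(lap)


# Edges are (x, y, conductance); parallel edges and loops are allowed.
cycle_with_chord = [(0, 1, 1), (1, 2, 3), (2, 3, 1), (3, 0, 3), (0, 2, 3)]
with_multi_edge = [(0, 1, 1), (0, 1, 3), (1, 2, 3), (2, 0, 1), (2, 2, 5)]
merged = [(0, 1, 4), (1, 2, 3), (2, 0, 1)]
print(tree_sum(4, cycle_with_chord), mean_zero_det(4, cycle_with_chord))
```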

4.2 Correlation inequalities

We will now show correlation inequalities for the measures \({\mathbb {P}}={\mathbb {P}}^{G,p}\). We start by recalling several of the well known correlation inequalities. To state our results we introduce some notation. Let E be a finite or countably infinite set. Let \(\Omega =\{1,q\}^E\) and \({\mathcal {F}}\) the \(\sigma \)-algebra generated by cylinder events. We consider the usual partial order on \(\Omega \) given by \(\omega _1\le \omega _2\) iff \((\omega _1)_e\le (\omega _2)_e\) for all \(e\in E\). A function \(X:\Omega \rightarrow {\mathbb {R}}\) is increasing if \(X(\omega _1)\le X(\omega _2)\) for \(\omega _1\le \omega _2\) and decreasing if \(-X\) is increasing. An event \(A\subset \Omega \) is increasing if its indicator function is increasing. We write \({\bar{\mu }}_1\succsim {\bar{\mu }}_2\) if \({\bar{\mu }}_1\) stochastically dominates \({\bar{\mu }}_2\) which is by Strassen’s Theorem equivalent to the existence of a coupling \((\omega _1,\omega _2)\) such that \(\omega _1\sim {\bar{\mu }}_1\) and \(\omega _2\sim {\bar{\mu }}_2\) and \(\omega _1\ge \omega _2\) (see [31]). We introduce the minimum \(\omega _1 \wedge \omega _2\) and the maximum \(\omega _1\vee \omega _2\) of two configurations given by \((\omega _1 \wedge \omega _2)_e=\min ( (\omega _1)_e, (\omega _2)_e)\) and \((\omega _1 \vee \omega _2)_e=\max ((\omega _1)_e, (\omega _2)_e)\) for any \(e\in E\). We call a measure \({\bar{\mu }}\) on \(\Omega \) strictly positive if \({\bar{\mu }}(\omega )>0\) for all \(\omega \in \Omega \). Finally we introduce for \(f,g\in E\) and \(\omega \in \Omega \) the notation \(\omega _{fg}^{\pm \pm }\in \Omega \) for the configuration given by \((\omega _{fg}^{\pm \pm })_e=\omega _e\) for \(e\notin \{f,g\}\) and \((\omega _{fg}^{+ *})_f= q\), \((\omega _{fg}^{-*})_f = 1\), \((\omega _{fg}^{*+})_g= q\), \((\omega _{fg}^{*-})_g = 1\), where the first superscript refers to the edge f and the second to the edge g. We define \(\omega _f^{\pm }\) similarly. We sometimes drop the edges f, g from the notation.
We write \({\bar{\mu }}(\omega )={\bar{\mu }}(\{\omega \})\) for \(\omega \in \Omega \) and \({\bar{\mu }}(X)=\int _{\Omega }X\,\mathrm {d}{\bar{\mu }}\) for \(X:\Omega \rightarrow {\mathbb {R}}\).

Theorem 4.2

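(Holley inequality) Let \(\Omega =\{1,q\}^E\) be finite and \({\bar{\mu }}_1\), \({\bar{\mu }}_2\) strictly positive measures on \(\Omega \) that satisfy the Holley inequality

\[{\bar{\mu }}_2(\omega _1\vee \omega _2)\,{\bar{\mu }}_1(\omega _1\wedge \omega _2)\ge {\bar{\mu }}_1(\omega _1)\,{\bar{\mu }}_2(\omega _2)\quad \text {for all } \omega _1,\omega _2\in \Omega . \tag{4.5}\]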

Then \({\bar{\mu }}_1\precsim {\bar{\mu }}_2\).

Proof

The original proof appeared in [25]; a simpler proof can be found, e.g., in [23, Theorem 2.1]. \(\square \)

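A strictly positive measure is called strongly positively associated if it satisfies the FKG lattice condition

\[{\bar{\mu }}(\omega _1\vee \omega _2)\,{\bar{\mu }}(\omega _1\wedge \omega _2)\ge {\bar{\mu }}(\omega _1)\,{\bar{\mu }}(\omega _2)\quad \text {for all } \omega _1,\omega _2\in \Omega . \tag{4.6}\]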

Theorem 4.3

A strongly positively associated measure \({\bar{\mu }}\) satisfies the FKG inequality, i.e., for increasing functions \(X,Y:\Omega \rightarrow {\mathbb {R}}\)

\[{\bar{\mu }}(XY)\ge {\bar{\mu }}(X)\,{\bar{\mu }}(Y). \tag{4.7}\]

Proof

A proof can be found in [23, Theorem 2.16]. \(\square \)

The next theorem provides a simple way to verify the assumptions of Theorems 4.2 and 4.3. Basically it states that it is sufficient to check the conditions when varying at most two edges.

Theorem 4.4

Let \(\Omega =\{1,q\}^E\) be finite and \({\bar{\mu }}_1\), \({\bar{\mu }}_2\) strictly positive measures on \(\Omega \). Then \({\bar{\mu }}_1\) and \({\bar{\mu }}_2\) satisfy (4.5) iff the following two inequalities hold

In particular, (4.8) and (4.9) together imply \({\bar{\mu }}_1\precsim {\bar{\mu }}_2\).

Proof

See [23, Theorem 2.3]. \(\square \)

We state one simple corollary of the previous results.

Corollary 4.5

Let \({\bar{\mu }}_1\), \({\bar{\mu }}_2\) be strictly positive measures on \(\Omega =\{1,q\}^E\) such that at least one of the measures \({\bar{\mu }}_1\), \({\bar{\mu }}_2\) is strongly positively associated. Then

implies \({\bar{\mu }}_1\precsim {\bar{\mu }}_2\).

Proof

Assuming that \({\bar{\mu }}_1\) is strongly positively associated we find using first the assumption (4.10) and then (4.6)

Now Theorem 4.4 implies the claim. The proof in the case that \({\bar{\mu }}_2\) is strongly positively associated is similar. \(\square \)

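It is convenient to derive the following correlation results for the measures \({\mathbb {P}}^{G,p}\) from corresponding results for the weighted spanning tree measure. The weighted spanning tree measure on a connected weighted graph \((G,\kappa )\) is a measure on \(\mathrm {ST}(G)\) with distribution

\[{\mathbb {Q}}^G_\kappa ({\varvec{t}})=\frac{w(\kappa ,{\varvec{t}})}{\sum _{{\varvec{t}}'\in \mathrm {ST}(G)}w(\kappa ,{\varvec{t}}')},\qquad w(\kappa ,{\varvec{t}})=\prod _{e\in {\varvec{t}}}\kappa _e.\]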

This model has been studied extensively, see [4] for a survey. An important special case is the uniform spanning tree corresponding to constant conductances \(\kappa \) that assigns equal probability to every spanning tree.
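As a small numerical illustration of the weighted spanning tree measure and of the Kirchhoff formula used throughout this section, the following sketch enumerates the spanning trees of the complete graph on four vertices by brute force and compares the sum of tree weights with the determinant of the Laplacian after deleting one vertex. The graph, the value \(q=3\), and all function names are hypothetical choices made for this example; the computation uses exact rational arithmetic.

```python
from fractions import Fraction
from itertools import combinations


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:  # edge i would close a cycle
                acyclic = False
                break
            parent[a] = b
        if acyclic:  # n - 1 acyclic edges form a spanning tree
            yield subset


def tree_weight(kappa, tree):
    """Product of the conductances along a tree."""
    w = Fraction(1)
    for i in tree:
        w *= kappa[i]
    return w


def tree_weight_sum(n, edges, kappa):
    return sum((tree_weight(kappa, t) for t in spanning_trees(n, edges)), Fraction(0))


def tree_law(n, edges, kappa):
    """Weighted spanning tree measure as a dict: tree -> probability."""
    z = tree_weight_sum(n, edges, kappa)
    return {t: tree_weight(kappa, t) / z for t in spanning_trees(n, edges)}


def det(matrix):
    """Exact determinant by Gaussian elimination on Fractions."""
    m = [row[:] for row in matrix]
    size, result = len(m), Fraction(1)
    for c in range(size):
        pivot = next((r for r in range(c, size) if m[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            m[c], m[pivot] = m[pivot], m[c]
            result = -result
        result *= m[c][c]
        for r in range(c + 1, size):
            factor = m[r][c] / m[c][c]
            for k in range(c, size):
                m[r][k] -= factor * m[c][k]
    return result


def reduced_laplacian_det(n, edges, kappa):
    """Determinant of the weighted graph Laplacian with vertex 0 deleted."""
    lap = [[Fraction(0)] * n for _ in range(n)]
    for (x, y), c in zip(edges, kappa):
        lap[x][x] += c
        lap[y][y] += c
        lap[x][y] -= c
        lap[y][x] -= c
    return det([row[1:] for row in lap[1:]])


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
KAPPA = [1, 3, 1, 3, 3, 1]                              # conductances in {1, q}, q = 3
```

For constant conductances `tree_law` assigns the same probability to every tree, which is the uniform spanning tree mentioned above.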

The following lemma provides the basic estimate to check the condition (4.9) for the measures \({\mathbb {P}}^{G,p}\).

Recall the notation \(\kappa ^{\pm \pm }_{fg}\) introduced before Theorem 4.2 and also the shorthand \(\kappa ^{\pm \pm }\).

Lemma 4.6

For a finite graph G and \(\kappa \in \{1,q\}^E\) as above

Remark 4.7

The proof in fact extends to any \(\kappa \in {\mathbb {R}}_+^E\) and \((\kappa ^{\pm \pm }_{fg})_f=c_f^{\pm }\), \((\kappa ^{\pm \pm }_{fg})_g=c_g^{\pm }\) with \(c_f^-\le c_f^+\) and \(c_g^-\le c_g^+\).

Proof

The lemma can be derived from the fact that the weighted spanning tree has negative correlations. It is well known (see, e.g., [4]) that for all positive weights \(\kappa \) on a finite graph G the measure \(\mathbb {Q}_\kappa ^G\) has negative edge correlations

Simple algebraic manipulations show that this is equivalent to

We introduce the following sums that depend on f, g, and \(\kappa \)

With this notation multiplication by \((A_{fg}+A_f+A_g+A)^2\) shows that (4.15) is equivalent to

It remains to show that the statement in the lemma can be deduced from (4.17) (actually the statements are equivalent). Clearly we can assume \(\kappa =\kappa ^{--}\), i.e., \(\kappa _f=\kappa _g=1\). Using the Kirchhoff formula (4.4) we find the following expression

In the second step we split the sum into four terms depending on whether \(f\in {\varvec{t}}\) and \(g \in {\varvec{t}}\) and use the shorthand introduced in (4.16). Hence we obtain

Subtracting those two identities we find that only the cross-terms between \(A_f,A_g\) and between \(A_{fg}, A\) do not cancel and we get

We can conclude using (4.17). \(\square \)
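The algebra in this proof can be checked numerically on a small graph. The sketch below assumes that the four sums are the tree-weight sums over spanning trees containing both f and g, only f, only g, or neither (computed with \(\kappa _f=\kappa _g=1\)), and that the lemma compares the products of the determinants for \(\kappa ^{++},\kappa ^{--}\) and \(\kappa ^{+-},\kappa ^{-+}\). Under these assumptions the difference of the two products equals \((q-1)^2(A_{fg}A-A_fA_g)\), which is non-positive by negative edge correlation. Determinants are evaluated as tree sums via the Kirchhoff formula; the graph and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def weighted_trees(n, edges, kappa):
    """List of (tree, weight) pairs, weight = product of conductances on the tree."""
    result = []
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        result.append((tree, w))
    return result


def tree_sum(n, edges, kappa):
    return sum((w for _, w in weighted_trees(n, edges, kappa)), Fraction(0))


def split_sums(n, edges, kappa, f, g):
    """Return (A_fg, A_f, A_g, A): tree sums split by whether f and g lie in the tree."""
    sums = {(a, b): Fraction(0) for a in (True, False) for b in (True, False)}
    for tree, w in weighted_trees(n, edges, kappa):
        sums[(f in tree, g in tree)] += w
    return sums[(True, True)], sums[(True, False)], sums[(False, True)], sums[(False, False)]


def lemma_gap(n, edges, kappa, f, g, q):
    """Return (lhs, rhs) with lhs = det(++)det(--) - det(+-)det(-+)
    and rhs = (q - 1)^2 (A_fg * A - A_f * A_g)."""
    def det_with(cf, cg):
        k = list(kappa)
        k[f], k[g] = cf, cg
        return tree_sum(n, edges, k)

    lhs = det_with(q, q) * det_with(1, 1) - det_with(q, 1) * det_with(1, q)
    base = list(kappa)
    base[f], base[g] = 1, 1
    a_fg, a_f, a_g, a = split_sums(n, edges, base, f, g)
    return lhs, (q - 1) ** 2 * (a_fg * a - a_f * a_g)


def check_all(n, edges, q):
    """Check the identity and the sign for every pair of edges and every configuration."""
    for f, g in combinations(range(len(edges)), 2):
        for kappa in product((1, q), repeat=len(edges)):
            lhs, rhs = lemma_gap(n, edges, kappa, f, g, q)
            if lhs != rhs or lhs > 0:
                return False
    return True


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
```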

The previous lemma directly implies that the measures \({\mathbb {P}}^{G,p}\) are strongly positively associated.

Corollary 4.8

The measure \({\mathbb {P}}^{G,p}\) satisfies the FKG lattice condition for any \(\kappa _1,\kappa _2\in \{1,q\}^E\)

and the FKG inequality

for any increasing functions \(X,Y:\{1,q\}^E\rightarrow {\mathbb {R}}\).

Proof

Lemma 4.6 and the trivial observation that \(h(\kappa ^{++})+h(\kappa ^{--})=h(\kappa ^{+-})+h(\kappa ^{-+})\) imply for any \(\kappa \in \{1,q\}^E\) and \(f,g\in E\) the lattice inequality

Then Theorem 4.4 applied to \({\bar{\mu }}_1={\bar{\mu }}_2={\mathbb {P}}^{G,p}\) implies that the FKG lattice condition (4.21) holds and therefore by Theorem 4.3 also the FKG inequality (4.22). \(\square \)
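The corollary can be confirmed by exhaustive enumeration on a small graph. The sketch assumes that \({\mathbb {P}}^{G,p}(\kappa )\) is proportional to \(p^{h(\kappa )}(1-p)^{s(\kappa )}(\det \Delta _\kappa )^{-1/2}\), which is our reading of the description of \({\mathbb {P}}\) as a Gibbs measure with energy \(\ln (\det \Delta _\kappa )/2\) and Bernoulli a priori measure, with the determinant evaluated as a sum over spanning trees. Since \(h\) is additive under maximum and minimum, the lattice condition reduces to an exact inequality between products of determinants. The graph, the parameters, and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product
from math import sqrt


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def tree_sum(n, edges, kappa):
    """Sum of tree weights, equal to the reduced Laplacian determinant."""
    total = Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
    return total


def fkg_lattice_holds(n, edges, q):
    """Exact check of det(max) * det(min) <= det(k1) * det(k2) for all pairs."""
    dets = {k: tree_sum(n, edges, k) for k in product((1, q), repeat=len(edges))}
    for k1, k2 in product(dets, repeat=2):
        upper = tuple(map(max, k1, k2))
        lower = tuple(map(min, k1, k2))
        if dets[upper] * dets[lower] > dets[k1] * dets[k2]:
            return False
    return True


def conductance_law(n, edges, p, q):
    """Assumed form of the measure: weight p^h (1 - p)^s / sqrt(det), normalised."""
    weights = {}
    for kappa in product((1, q), repeat=len(edges)):
        h = sum(1 for c in kappa if c == q)
        s = len(edges) - h
        weights[kappa] = p ** h * (1 - p) ** s / sqrt(tree_sum(n, edges, kappa))
    z = sum(weights.values())
    return {k: w / z for k, w in weights.items()}


def covariance(law, x, y):
    """Covariance of two functions of the configuration under a finite law."""
    ex = sum(pr * x(k) for k, pr in law.items())
    ey = sum(pr * y(k) for k, pr in law.items())
    exy = sum(pr * x(k) * y(k) for k, pr in law.items())
    return exy - ex * ey


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
```

Applying `covariance` to the indicators of two q-bonds gives a floating point instance of the FKG inequality for two increasing functions.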

Let us first state a trivial consequence of this corollary.

Corollary 4.9

The measures \({\mathbb {P}}^{G,p}\) and \({\mathbb {P}}^{G,p'}\) satisfy for \(p\le p'\)

Proof

Using Corollaries 4.8 and 4.5 we only need to check whether (4.10) holds for \({\bar{\mu }}_1={\mathbb {P}}^{G,p}\) and \({\bar{\mu }}_2={\mathbb {P}}^{G,p'}\). This is clearly the case if \(p\le p'\). \(\square \)
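The monotonicity in p can also be tested directly through the Holley inequality (4.5). The sketch below again assumes weights proportional to \(p^{h(\kappa )}(1-p)^{s(\kappa )}(\det \Delta _\kappa )^{-1/2}\). To stay in exact arithmetic it compares squared unnormalised weights, which is legitimate because all weights are positive and the normalising constants cancel on both sides of the inequality. The graph, the parameter values, and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def tree_sum(n, edges, kappa):
    """Sum of tree weights, equal to the reduced Laplacian determinant."""
    total = Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
    return total


def squared_weight(n, edges, kappa, p, q):
    """Square of the assumed unnormalised weight p^h (1 - p)^s / sqrt(det)."""
    h = sum(1 for c in kappa if c == q)
    s = len(kappa) - h
    return p ** (2 * h) * (1 - p) ** (2 * s) / tree_sum(n, edges, kappa)


def holley_holds(n, edges, p_low, p_high, q):
    """Check mu2(max) mu1(min) >= mu1(k1) mu2(k2) with mu1 = P^{p_low}, mu2 = P^{p_high}."""
    configs = list(product((1, q), repeat=len(edges)))
    w1 = {k: squared_weight(n, edges, k, p_low, q) for k in configs}
    w2 = {k: squared_weight(n, edges, k, p_high, q) for k in configs}
    for k1, k2 in product(configs, repeat=2):
        upper = tuple(map(max, k1, k2))
        lower = tuple(map(min, k1, k2))
        if w2[upper] * w1[lower] < w1[k1] * w2[k2]:
            return False
    return True


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
```

Calling `holley_holds` with the two parameters exchanged fails, as expected, since the measure with the smaller p cannot dominate the one with the larger p.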

The next step is to show correlation inequalities with respect to the size of the graph. More specifically we show statements for subgraphs and contracted graphs. This will later easily imply the existence of infinite volume limits. Moreover, we can bound infinite volume states by finite volume measures in the sense of stochastic domination. Let \(F\subset E\) be a set of edges. We define the contracted graph G/F by identifying for every edge \(f\in F\) the endpoints of f. Similarly for a set \(W\subset V\) of vertices we define the contracted graph G/W by identifying all vertices in W. The resulting graphs may have multi-edges. We also consider connected subgraphs \(G'=(V',E')\) of G. Recall the notation \(\kappa ^{\pm }=\kappa _f^\pm \) for \(f\in E\). We use the notation \(\Delta _\kappa ^{G'}\) for the graph Laplacian on \(G'\) where we restrict the conductances \(\kappa \) to \(E'\) and we denote by \(\Delta _\kappa ^{G/F}\) the graph Laplacian on G/F. The following lemma relates the determinants of the different graph Laplacians.

Lemma 4.10

With the notation introduced above we have for \(\kappa \in \{1,q\}^E\)

Remark 4.11

The lemma again extends to \(\kappa \in {\mathbb {R}}_+^E\) and \(\kappa ^\pm _f\) with \((\kappa _f^+)_f=c_+>c_-=(\kappa _f^-)_f\).

Proof

The proof is similar to the proof of Lemma 4.6. We derive the statement from a property of the weighted spanning tree model. For graphs as above and \(e\in E'\) the estimate

holds (see Corollary 4.3 in [4] for a proof). We can rewrite (assuming again \(\kappa _f=1\), i.e., \(\kappa =\kappa ^-\))

Note that

and therefore (using \(\kappa =\kappa ^-\))

Similar statements hold for the graphs G/F and \(G'\). Hence (4.26) implies (4.25). \(\square \)
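The spanning tree estimate behind this lemma is the monotonicity of the edge probability \({\mathbb {Q}}_\kappa (f\in {\varvec{t}})\): it can only increase when passing to a connected subgraph and only decrease when edges are contracted. The following sketch checks this on the complete graph on four vertices, with a 4-cycle as subgraph and a single contracted edge. These choices and all function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:  # also rejects self-loops created by contraction
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def edge_probability(n, edges, kappa, f):
    """Probability that edge f belongs to the weighted spanning tree."""
    total, with_f = Fraction(0), Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
        if f in tree:
            with_f += w
    return with_f / total


def contract(n, edges, kappa, contracted):
    """Identify the endpoints of each edge index in `contracted` and drop those edges.
    Returns the new graph and a map from old edge index to new edge index."""
    label = list(range(n))
    for i in contracted:
        a, b = label[edges[i][0]], label[edges[i][1]]
        label = [a if v == b else v for v in label]
    index = {v: k for k, v in enumerate(sorted(set(label)))}
    kept = [i for i in range(len(edges)) if i not in contracted]
    new_edges = [(index[label[edges[i][0]]], index[label[edges[i][1]]]) for i in kept]
    return len(index), new_edges, [kappa[i] for i in kept], {i: k for k, i in enumerate(kept)}


def compare(kappa, f=0):
    """Return the probability of edge f in the subgraph, in G, and in the contracted graph."""
    cycle = [0, 2, 3, 5]  # indices of the 4-cycle 0-1-2-3-0 inside K4
    p_sub = edge_probability(4, [K4[i] for i in cycle], [kappa[i] for i in cycle], cycle.index(f))
    p_full = edge_probability(4, K4, kappa, f)
    n_c, edges_c, kappa_c, position = contract(4, K4, kappa, {1})
    p_contracted = edge_probability(n_c, edges_c, kappa_c, position[f])
    return p_sub, p_full, p_contracted


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
```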

Let us remark that the probability \({\mathbb {Q}}^G_\kappa (f\in {\varvec{t}})\) can also be expressed as a current in a certain electrical network. In order to avoid unnecessary notation at this point we kept the weighted spanning tree measure and we will only exploit this connection when necessary below.

Again, the previous estimates imply correlation inequalities for the measures \({\mathbb {P}}^{G,p}\). In the following we consider a fixed value of p but different graphs so that we drop only p from the notation but we keep the graph G. We introduce the distribution under boundary conditions for a connected subgraph \(G'=(V',E')\) of G. For \(\lambda \in \{1,q\}^E\) we define the measure \({\mathbb {P}}^{G,E',\lambda }\) on \(\{1,q\}^{E'}\) by

where \((\lambda ,\kappa )\in \{1,q\}^E\) denotes the conductances given by \(\kappa \) on \(E'\) and by \(\lambda \) on \(E{\setminus } E'\). This definition implies that we have the following domain Markov property for \(\omega \in \{1,q\}^{E'}\)

Since the measure \({\mathbb {P}}^G\) is strongly positively associated, (4.31) and Theorem 2.24 in [23] imply that the measure \({\mathbb {P}}^{G,E',\lambda }\) is strongly positively associated. We now state the consequences of Lemma 4.10 on stochastic ordering.

Corollary 4.12

For a finite graph \(G=(V,E)\), a connected subgraph \(G'=(V',E')\), an edge subset \(F\subset E\), and configurations \(\lambda _1, \lambda _2\in \{1,q\}^E\) such that \(\lambda _1\le \lambda _2\) the following holds

More generally, we have for \(\lambda \in \{1,q\}^E\) and \(E''\subset E'\) or \(E''\cap F=\emptyset \) respectively

Proof

From Lemma 4.10 we obtain for \(f\in E'\) and any \(\kappa \in \{1,q\}^{E'}\)

Similarly, Lemma 4.10 implies for \(f\in E{\setminus } F\) and \(\kappa \in \{1,q\}^{E{\setminus } F}\)

Then the strong positive association of \({\mathbb {P}}^G\) and Corollary 4.5 imply the first and the last stochastic orderings claimed in (4.32). The stochastic domination result in the middle of (4.32) follows from (4.31) and a general result for strongly positively associated measures (see [23, Theorem 2.24]). The proof of (4.33) is similar. \(\square \)

4.3 Infinite volume measures

The definition of the measure \({\mathbb {P}}\) shows that it is a finite volume Gibbs measure for the energy \(E(\kappa )=\ln (\det \Delta _\kappa )/2\) and a homogeneous Bernoulli a priori measure. We would like to define infinite volume limits for the measures \({\mathbb {P}}^G\) and define a notion of Gibbs measures in infinite volume. This requires some additional definitions. The \(\sigma \)-algebras \({\mathcal {F}}_E\) for \(E\subset {\mathbf {E}}(G)\) are defined as the \(\sigma \)-algebra generated by \((\kappa _e)_{e\in E}\) and we write \({\mathcal {F}}={\mathcal {F}}_{{\mathbf {E}}(G)}\).

An event \(A\in {\mathcal {F}}\) is called local if it is measurable with respect to \({\mathcal {F}}_E\) for some finite set E, i.e., A depends only on finitely many edges. Similarly we define a local function as a function that is measurable with respect to \({\mathcal {F}}_E\) for a finite set E. We say that a sequence of measures \({\bar{\mu }}_n\) on \(\{1,q\}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) converges in the topology of local convergence to a measure \({\bar{\mu }}\) if \({\bar{\mu }}_n(A)\rightarrow {\bar{\mu }}(A)\) for all local events A. For background on the choice of topologies in the context of Gibbs measures we refer to [21]. The construction of the infinite volume states proceeds similarly to the construction for the Potts model by defining a specification and introducing the notion of free and wired boundary conditions. For simplicity we restrict the analysis to \({\mathbb {Z}}^d\) but the generalisation to more general graphs is straightforward. First, we define infinite volume limits of the finite volume distributions with wired and free boundary conditions. We denote by \(\Lambda _n=[-n,n]^d\cap {\mathbb {Z}}^d\) the ball with radius n in the maximum norm around the origin and by \(E_n={\mathbf {E}}(\Lambda _n)\) the edges in \(\Lambda _n\). We introduce the shorthand \(\Lambda _n^w=\Lambda _n/\partial \Lambda _n\) for the box with wired boundary conditions. We define

for the measures \({\mathbb {P}}\) on \(\Lambda _n\) with free and wired boundary conditions respectively. From Corollary 4.12 and Eq. (4.31) we conclude that for any increasing event A depending only on edges in \(E_n\)

We conclude that for any increasing event A depending only on finitely many edges the limits \(\lim _{n\rightarrow \infty } {\bar{\mu }}_{n,p}^0(A)\) and similarly \(\lim _{n\rightarrow \infty } {\bar{\mu }}_{n,p}^1(A)\) exist. Using standard arguments we can write every local event A as a union and difference of increasing local events and we conclude that \(\lim _{n\rightarrow \infty } {\bar{\mu }}_{n,p}^{0}(A)\) and \(\lim _{n\rightarrow \infty } {\bar{\mu }}_{n,p}^{1}(A)\) exist. It is well known (see [5]) that this implies convergence of \({\bar{\mu }}_{n,p}^{0}\) and \({\bar{\mu }}_{n,p}^1\) to a measure on \(\{1,q\}^{{\mathbf {E}}({\mathbb {Z}}^d)}\) in the topology of local convergence. We denote the infinite volume measures by \({\bar{\mu }}_p^{0}\) and \({\bar{\mu }}_p^1\).

Lemma 4.13

The measures \({\bar{\mu }}_p^0\) and \({\bar{\mu }}_p^1\) satisfy the FKG-inequality and for \(0\le p\le p'\le 1\) the relations

Moreover they are invariant under symmetries of the lattice and ergodic with respect to translations.

Proof

We refer to the proof of Theorem 4.17 and Corollary 4.23 in [23] for a detailed proof for the FK random cluster model which essentially also applies to the model considered here. For the proof of shift invariance and ergodicity our proof is closer to Lemma 1.11 in [17].

The first part of the lemma is a consequence of Corollaries 4.8, 4.9, and 4.12 and a limiting argument.

The invariance under rotations of the lattice follows from the invariance of the finite volume measures under rotations. We now prove the shift invariance of \({\bar{\mu }}^0_p\), i.e.,

Recall that \(\tau _x e = e+x \) for \(e\in {\mathbf {E}}({\mathbb {Z}}^d)\) and \((\tau _x \eta )_e=\eta _{\tau _{-x}e}\) for gradient fields. As before it is sufficient to show shift invariance for increasing local events. Let \(x\in {\mathbb {Z}}^d\) and suppose \(x\in \Lambda _k\) for some \(k>0\). Let A be an increasing local event. Let n be large enough such that A only depends on the edges in \(\Lambda _{n-2k}\). From the relation \(\Lambda _{n-k}\subset \tau _x\Lambda _n \subset \Lambda _{n+k}\) and Corollary 4.12 we conclude that

Taking the limit \(n\rightarrow \infty \) we conclude that \(\lim _{n\rightarrow \infty } {\mathbb {P}}^{\tau _x \Lambda _n}(\tau _x A)={\bar{\mu }}^0(\tau _x A)\). Translation invariance of \({\bar{\mu }}^0\) now follows from

We prove ergodicity of \({\bar{\mu }}^0\) by showing that it is even mixing, i.e., \({\bar{\mu }}^0\) satisfies for all local events A and B

Again, it is sufficient to show this for all decreasing local events. The FKG inequality stated in Corollary 4.8 and translation invariance imply that for all \(x\in {\mathbb {Z}}^d\)

It remains to show the reverse inequality for \(|x|\rightarrow \infty \). We assume that A, B only depend on the edges in \(\Lambda _k\) for some k. Let n and m be integers such that \(n+k< |x|_\infty < m -k \). Then we can write the measure \({\mathbb {P}}^{\Lambda _m}(\cdot \vert \tau _xB)\) as a mixture of measures \({\mathbb {P}}^{\Lambda _m, E_n, \lambda }\) such that \(\lambda \in \tau _xB\) (here we use that \(\tau _x B\) does not depend on the edges in \(E_n\)). By the first statement of Corollary 4.12 the stochastic ordering \({\mathbb {P}}^{\Lambda _m, E_n, \lambda }\succsim {\mathbb {P}}^{\Lambda _n}\) holds true for all \(\lambda \) and therefore (note that A is decreasing)

Sending first \(m\rightarrow \infty \), then \(|x|\rightarrow \infty \) and \(n\rightarrow \infty \) we conclude that

Thus \({\bar{\mu }}^0\) is mixing. The fact that \({\bar{\mu }}^1\) is also translation invariant and mixing can be shown with similar arguments. \(\square \)

4.4 Infinite volume specifications

We now introduce the concept of infinite volume Gibbs measures for this model. We first consider the case of a finite connected graph G. For \(E\subset {\mathbf {E}}(G)\) we consider the finite volume specifications \({\bar{\gamma }}^G_{E}:{\mathcal {F}}\times \{1,q\}^{{\mathbf {E}}(G)}\rightarrow {\mathbb {R}}\)

where the normalisation \(Z_\lambda \) ensures that \({\bar{\gamma }}^G_{E}(\cdot ,\lambda )\) is a probability measure. A careful calculation shows that \({\bar{\gamma }}^G\) is indeed a specification, i.e., \({\bar{\gamma }}^G_{E}\) are proper probability kernels that satisfy for \(E\subset E'\)

Let us remark for readers familiar with the theory of Gibbs measures that this specification is actually a modification of the independent specification with \(\uplambda =p\delta _q+(1-p)\delta _1\) and one can also use Proposition 1.30 in [21] to verify that \({\bar{\gamma }}\) defines a specification. Since \({\bar{\gamma }}_{E}^G(\cdot , \lambda )\) is concentrated on a finite set it is convenient to use the notation \({\bar{\gamma }}_{E}^G(\kappa ,\lambda )={\bar{\gamma }}_{E}^G(\{\kappa \},\lambda )\). The measure \({\mathbb {P}}^{G}\) is a finite volume Gibbs measure, i.e., it satisfies

or put differently for \(\kappa ,\lambda \in \{1,q\}^{E}\)

We would like to call \(\mu \) a Gibbs measure on \(\{1,q\}^{{\mathbb {Z}}^d}\) for the random conductance model if

holds for all \(E\subset {\mathbf {E}}({\mathbb {Z}}^d)\) finite. However, \({\bar{\gamma }}^{G}_E\) is a priori only well defined for finite graphs so that we use an approximation procedure for infinite graphs. Let G be a connected infinite graph. We are a bit sloppy with the notation and do not distinguish between \({\bar{\gamma }}^H_{E}\) for a subgraph H of G and its proper extension to \({\mathcal {F}}\times \{1,q\}^{{\mathbf {E}}(G)}\), i.e., we define for \(\kappa ,\lambda \in \{1,q\}^{{\mathbf {E}}(G)}\)

We denote for \(f\in {\mathbf {E}}(G)\) and \(\kappa \in \{1,q\}^{{\mathbf {E}}(G)}\) by \(\kappa ^+\) and \(\kappa ^-\) as before the configurations such that \(\kappa _e^+=\kappa ^-_e\) for \(e\ne f\) and \(\kappa _f^-=1\), \(\kappa ^+_f=q\).

In the following we assume \(p\in (0,1)\). For \(p\in \{0,1\}\) the measures \({\mathbb {P}}^{G,p}\) agree with the Dirac measure on the constant 1 or constant q configuration. Since we assume that E is finite the specification \({\bar{\gamma }}^H_{E}\) can be uniquely characterized by the following two conditions: Firstly, the kernels \({\bar{\gamma }}^H_E\) are proper and secondly they satisfy for all \(\kappa ,\lambda \in \{1,q\}^{{\mathbf {E}}(H)}\) with \(\kappa _{E^{\mathrm {c}}}=\lambda _{E^{\mathrm {c}}}\)

where we used (4.29) in the second step. We show that we can give meaning to this expression in infinite volume. For this we sketch the definition of spanning trees in infinite volume but we refer to the literature for details (see [4]). A monotone exhaustion of an infinite graph G is a sequence of subgraphs \(G_n\) such that \(G_n\subset G_{n+1}\) and \(G=\bigcup _{n\ge 1} G_n\). It can be shown that for any finite sets \(E_1\subset E_2\subset {\mathbf {E}}(G)\) the limit \(\lim _{n\rightarrow \infty } {\mathbb {Q}}^{G_n}_\kappa ({\varvec{t}}\cap E_2=E_1)\) exists. In fact this is a consequence of (4.26) and the arguments we used for \({\bar{\mu }}_n^0\) above. Hence it is possible to define a measure \({\mathbb {Q}}_\kappa ^{G,0}\) on \(2^{{\mathbf {E}}(G)}\), the power set of \({\mathbf {E}}(G)\), which will be called the weighted free spanning forest on G (as the name suggests, the measure is supported on forests but not necessarily on trees, i.e., on connected subsets of edges). Similarly, we can define the wired spanning forest \({\mathbb {Q}}_\kappa ^{G,1}\) by replacing the subgraphs \(G_n\) with the contracted graphs \(G_n/\partial G_n\). By definition those measures satisfy

for any \(f\in E\). Then it is possible to define two families of proper probability kernels \({\bar{\gamma }}^{G,0}_{E}\) and \({\bar{\gamma }}^{G,1}_{E}\) for \(E\subset {\mathbf {E}}(G)\) finite by the property that for \(f\in E\) and \(\kappa ,\lambda \in \{1,q\}^{{\mathbf {E}}(G)}\) such that \(\kappa _{E^{\mathrm {c}}}=\lambda _{E^{\mathrm {c}}}\)

From (4.52) and the convergence of the spanning tree probabilities stated above we conclude that \({\bar{\gamma }}^{G,0}\) and \({\bar{\gamma }}^{G,1}\) are well defined. Moreover we obtain that these families of probability kernels satisfy for \(\lambda ,\kappa \in \{1,q\}^{{\mathbf {E}}(G)}\)

Note that the concatenation for \({\bar{\gamma }}^{G,0}\) for \(E',E\subset {\mathbf {E}}({\mathbb {Z}}^d)\) is given by

in particular it only involves a finite sum in the case of a finite spin space. We conclude using (4.57) and (4.58) that \({\bar{\gamma }}^{G,0}_E\) and \({\bar{\gamma }}^{G,1}_E\) define two specifications on G.
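In finite volume, the single-edge ratio that characterises these kernels can be made concrete. The sketch below assumes weights proportional to \(p^{h(\kappa )}(1-p)^{s(\kappa )}(\det \Delta _\kappa )^{-1/2}\) and first verifies the exact identity \(\det \Delta _{\kappa ^+}=\det \Delta _{\kappa ^-}\,(1+(q-1){\mathbb {Q}}_{\kappa ^-}(f\in {\varvec{t}}))\), which follows from splitting the Kirchhoff sum according to whether f belongs to the tree. It then evaluates the conditional probability of a q-bond at f given all other conductances. The precise form of the displays in the text may differ from this rewriting; the graph, the parameters, and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations
from math import sqrt


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def tree_sums(n, edges, kappa, f):
    """Return (sum over all trees, sum over trees containing f) of the tree weights."""
    total, with_f = Fraction(0), Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
        if f in tree:
            with_f += w
    return total, with_f


def set_edge(kappa, f, value):
    k = list(kappa)
    k[f] = value
    return k


def flip_identity(n, edges, kappa, f, q):
    """Return (det at kappa^+, det at kappa^- times (1 + (q - 1) Q(f in t)))."""
    det_plus, _ = tree_sums(n, edges, set_edge(kappa, f, q), f)
    det_minus, with_f = tree_sums(n, edges, set_edge(kappa, f, 1), f)
    return det_plus, det_minus * (1 + (q - 1) * with_f / det_minus)


def conditional_q_probability(n, edges, kappa, f, p, q):
    """P(kappa_f = q | other edges) via the spanning tree edge probability."""
    det_minus, with_f = tree_sums(n, edges, set_edge(kappa, f, 1), f)
    ratio = p / (1 - p) / sqrt(1 + (q - 1) * with_f / det_minus)
    return ratio / (1 + ratio)


def direct_conditional(n, edges, kappa, f, p, q):
    """The same conditional probability computed directly from the two weights."""
    w_plus = p / sqrt(tree_sums(n, edges, set_edge(kappa, f, q), f)[0])
    w_minus = (1 - p) / sqrt(tree_sums(n, edges, set_edge(kappa, f, 1), f)[0])
    return w_plus / (w_plus + w_minus)


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
KAPPA = [1, 3, 1, 3, 3, 1]                              # conductances in {1, q}, q = 3
```

Because the ratio depends on the remaining conductances only through an edge probability of the weighted spanning tree, the same expression makes sense once that probability is replaced by its free or wired infinite volume limit.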

Suppose the wired and the free uniform spanning forests on G agree. This implies that also the weighted wired and free spanning forests \({\mathbb {Q}}^{G,0}_\kappa \) and \({\mathbb {Q}}^{G,1}_\kappa \) on G agree if the conductances \(\kappa _e\) are contained in a compact subset of \((0,\infty )\) (see Theorem 7.3 and Theorem 7.7 in [4]). Thus \({\bar{\gamma }}^{G,1}_{E}={\bar{\gamma }}^{G,0}_{E}\) in this case. In particular we obtain that \({\bar{\gamma }}^{{\mathbb {Z}}^d,0}_{E}={\bar{\gamma }}^{{\mathbb {Z}}^d,1}_{E}\) because the free and the wired uniform spanning forests on \({\mathbb {Z}}^d\) agree (Corollary 6.3 in [4]). In the following we will denote this specification by \({\bar{\gamma }}_{E}\). To ensure consistency with the earlier definition of \({\tilde{\gamma }}\) we define for a connected subset \(\Lambda \subset {\mathbb {Z}}^d\) that \({\bar{\gamma }}_{\Lambda }={\bar{\gamma }}_{{\mathbf {E}}(\Lambda )}\). We can now give a formal definition of Gibbs measures for the random conductance model.

Definition 4.14

A measure \({\bar{\mu }}\in {\mathcal {P}}(\{1,q\}^{{\mathbf {E}}({\mathbb {Z}}^d)})\) is an infinite volume Gibbs measure for the random conductance model if it is specified by \({\bar{\gamma }}_E\), i.e., a Gibbs measure in the sense of Definition 2.1 for the specification \({\bar{\gamma }}_E\).

As one would expect the infinite volume measures \({\bar{\mu }}_p^0\) and \({\bar{\mu }}_p^1\) are Gibbs measures.

Lemma 4.15

The measures \({\bar{\mu }}_p^0\) and \({\bar{\mu }}_p^1\) are Gibbs measures as defined in Definition 4.14. Moreover any Gibbs measure \({\bar{\mu }}\) satisfies \({\bar{\mu }}_p^0\precsim {\bar{\mu }}\precsim {\bar{\mu }}_p^1\).

Proof

By Eq. (4.48) we have for \(E\subset E_n\)

We show that both sides converge in the topology of local convergence as \(n\rightarrow \infty \). Let A be an increasing event depending on a finite number of edges. We have seen in (4.37) that \({\bar{\mu }}_n^0(A)\) is an increasing sequence and converges by definition to \({\bar{\mu }}^0(A)\). We derive the convergence of the left hand side of equation (4.60) from the following three observations. First, we conclude from (4.32) and (4.49) that \({\bar{\gamma }}^{\Lambda _n}_E(A,\cdot )\) is an increasing function. Second, using (4.33) and (4.49) we obtain \({\bar{\gamma }}^{\Lambda _{n+1}}_E(A,\kappa )\ge {\bar{\gamma }}^{\Lambda _n}_E(A,\kappa _{E_n})\) for all \(\kappa \in \{1,q\}^{E_{n+1}}\). The third observation is that (4.37) can also be applied to an increasing function instead of an increasing event. These three facts imply

On the other hand, we obtain for any \(m\in \mathbb {N}\)

Sending \(m\rightarrow \infty \) we get

Hence, we have shown that

holds for any increasing and local event A. By standard arguments, (4.64) then holds for all local events. Therefore \({\bar{\mu }}^0\) is a Gibbs measure. The proof for \({\bar{\mu }}^1\) is similar based on the identity

Finally, a limiting argument and the comparison of boundary conditions show that \({\bar{\mu }}_p^0\precsim {\bar{\mu }}\precsim {\bar{\mu }}_p^1\) for any Gibbs measure \({\bar{\mu }}\) (see [23, Proposition 4.10]). \(\square \)

Let us briefly introduce the class of quasilocal specifications which is a natural and useful condition for a specification. For an extensive discussion we refer to the literature [21]. A quasilocal function on a general state space is a bounded function \(X:F^S\rightarrow {\mathbb {R}}\) that can be approximated arbitrarily well by local functions, i.e.,

A specification \(\gamma \) is called quasilocal if \(\gamma _{\Lambda } X\) is a quasilocal function for every local function X. We will show that the specification \({\bar{\gamma }}_{E}\) is quasilocal. This will be a direct consequence of the following result that shows uniform convergence of \({\bar{\gamma }}^{\Lambda _n^w}_E\) to \({\bar{\gamma }}_E\). This convergence will be of independent use later.

Lemma 4.16

The specifications \({\bar{\gamma }}_{E_n}\) and \({\bar{\gamma }}_{E_n}^{\Lambda _N^w}\) satisfy

Proof

First, we claim that it is sufficient to show that

Indeed, using (4.68) in (4.52) we obtain

Since \(E_n\) is finite this implies the claim.

It remains to prove (4.68). This is a consequence of the transfer current theorem (see Theorem 4.1 in [4]) that states in the special case of the occupation property that for \(f=\{x,y\}\in {\mathbf {E}}(G)\)

where the expression \(I_f(f)\) denotes the current through the edge f when one unit of current is injected at one endpoint of f and removed at the other. In the last step we used that \(I_f(f)\) can be calculated by applying the inverse Laplacian to the sources to obtain the potential, which in turn determines the current through f. Now (4.68) follows from the display (4.70) and Lemma B.3. \(\square \)
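On a finite graph the statement used in this proof, namely that the probability of an edge belonging to the weighted spanning tree equals the current through that edge, can be verified exactly. The sketch below grounds one vertex, solves the Laplace equation for a unit source and sink at the endpoints of f, and compares the resulting current with the probability obtained by enumerating spanning trees. The graph, the conductances, and all function names are hypothetical choices for this example.

```python
from fractions import Fraction
from itertools import combinations


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def edge_probability(n, edges, kappa, f):
    """Probability that edge f belongs to the weighted spanning tree."""
    total, with_f = Fraction(0), Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
        if f in tree:
            with_f += w
    return with_f / total


def solve(matrix, rhs):
    """Solve a square linear system exactly by Gauss-Jordan elimination."""
    size = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for c in range(size):
        pivot = next(r for r in range(c, size) if aug[r][c] != 0)
        aug[c], aug[pivot] = aug[pivot], aug[c]
        aug[c] = [v / aug[c][c] for v in aug[c]]
        for r in range(size):
            if r != c:
                factor = aug[r][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    return [row[-1] for row in aug]


def current_through(n, edges, kappa, f):
    """Current through f = {x, y} for a unit source at x and a unit sink at y."""
    x, y = edges[f]
    lap = [[Fraction(0)] * n for _ in range(n)]
    for (a, b), c in zip(edges, kappa):
        lap[a][a] += c
        lap[b][b] += c
        lap[a][b] -= c
        lap[b][a] -= c
    ground = n - 1  # the potential is fixed to zero at the last vertex
    reduced = [row[:ground] for row in lap[:ground]]
    source = [Fraction(int(v == x) - int(v == y)) for v in range(ground)]
    potential = solve(reduced, source) + [Fraction(0)]
    return kappa[f] * (potential[x] - potential[y])


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph
KAPPA = [1, 3, 1, 3, 3, 1]                              # conductances in {1, q}, q = 3
```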

Corollary 4.17

The specification \({\bar{\gamma }}_E\) is quasilocal.

Proof

Let X be a local function. We need to show that \({\bar{\gamma }}_EX\) is quasilocal. Lemma 4.16 implies that the local functions \({\bar{\gamma }}^{\Lambda _N^w}_EX\) satisfy

\(\square \)

4.5 Relation to extended gradient Gibbs measures

In this paragraph we state the results that relate the random conductance model to extended gradient Gibbs measures. This relation is ultimately the justification for considering this model. The proofs of the results in this paragraph are deferred to Appendix A. The first proposition establishes that the \(\kappa \)-marginals of extended gradient Gibbs measures are Gibbs states for the random conductance model.

Proposition 4.18

Let \(\tilde{\mu }\) be an extended gradient Gibbs measure associated to a translation invariant and ergodic gradient Gibbs measure \(\mu \) with zero tilt. Then the \(\kappa \)-marginal \(\bar{\mu }\) of \(\tilde{\mu }\) is a Gibbs measure in the sense of Definition 4.14.

The second main result in this paragraph is a converse of Proposition 4.18: given a Gibbs measure \({\bar{\mu }}\) for the random conductance model with parameters p, q, it is possible to obtain an extended Gibbs measure with zero tilt for the potential \(V_{p,q}\).

Proposition 4.19

Let \({\bar{\mu }}\) be a Gibbs measure in the sense of Definition 4.14 for parameters p and q and \(\kappa \sim {\bar{\mu }}\). Let \(\varphi ^\kappa \) be the random field that for given \(\kappa \) is a Gaussian field with zero average, \(\varphi ^\kappa (0)=0\), and covariance \((\Delta _\kappa )^{-1}\), i.e., \(\varphi ^\kappa \) satisfies for \(f:{\mathbb {Z}}^d\rightarrow {\mathbb {R}}\) with finite support and \(\sum _{x} f(x)=0\)

Let \({\tilde{\mu }}\) be the joint law of \((\kappa ,\nabla \varphi ^\kappa )\). Then \({\tilde{\mu }}\) is an extended Gibbs measure for the potential \(V_{p,q}\) with zero tilt, in particular its \(\eta \)-marginal is a gradient Gibbs measure with zero tilt.

As a last result in this direction we state a very useful result from [7] that characterizes the law of \(\varphi \) given \(\kappa \) for extended gradient Gibbs measures if \(\varphi \) is distributed according to a gradient Gibbs measure.

Proposition 4.20

Let \(\mu \) be a translation invariant, ergodic gradient Gibbs measure with zero tilt and \({\tilde{\mu }}\) the corresponding extended gradient Gibbs measure. Then the conditional law of \(\varphi \) given \(\kappa \) is \({\tilde{\mu }}\)-almost surely Gaussian. It is determined by its expectation

and the covariance given by \((\Delta _\kappa )^{-1}\), i.e., for \(f:{\mathbb {Z}}^d\rightarrow {\mathbb {R}}\) with finite support and \(\sum _{x} f(x)=0\)

Proof

This is Lemma 3.4 in [7]. \(\square \)

In particular those results establish the following. Assume that \(\mu \) is an ergodic zero tilt gradient Gibbs measure. Let \({\bar{\mu }}\) be the \(\kappa \)-marginal of the corresponding extended gradient Gibbs measure \({\tilde{\mu }}\) (which by Proposition 4.18 is Gibbs for the random conductance model). We can use Proposition 4.19 to construct an extended gradient Gibbs measure \({\tilde{\mu }}'\). Using the definition of \({\tilde{\mu }}'\) in Propositions 4.19 and 4.20 we conclude that we get back the extended gradient Gibbs measure we started from, i.e., \({\tilde{\mu }}={\tilde{\mu }}'\).

5 Further properties of the random conductance model

In this section we state and prove more results about the random conductance model considered in this work and use the results from the previous section to derive corresponding results for the associated gradient interface model. We end this section with some conjectures and open questions.

5.1 Uniqueness results for the random conductance model

We start by proving \({\bar{\mu }}_p^0={\bar{\mu }}_p^1\) for \(d\ge 2\) and almost all values of p, which in particular implies uniqueness of the Gibbs measure for those p.

Theorem 5.1

For every \(q\ge 1\) there are at most countably many \(p\in [0,1]\) such that \({\bar{\mu }}_p^1\ne {\bar{\mu }}_p^0\).

Proof

It is a standard consequence of the invariance under lattice symmetries and \({\bar{\mu }}_{p}^0\precsim {\bar{\mu }}_{p}^1\) that \({\bar{\mu }}_p^1={\bar{\mu }}_p^0\) is equivalent to \({\bar{\mu }}_p^1(\kappa _e=q)={\bar{\mu }}_p^0(\kappa _e=q)\) for one and therefore any \(e\in {\mathbf {E}}({\mathbb {Z}}^d)\) (see, e.g., Proposition 4.6 in [23]). Lemma 5.3 below implies for \(e\in {\mathbf {E}}({\mathbb {Z}}^d)\)

for any \(p'>p\). In particular, we can conclude that \({\bar{\mu }}_p^0={\bar{\mu }}_p^1\) holds for all points of continuity of the map \(p\mapsto {\bar{\mu }}^0_p(\kappa _e=q)\). Since this map is increasing by Corollary 4.9 it has only countably many points of discontinuity. \(\square \)

We are now in the position to prove Theorem 2.3.

Proof of Theorem 2.3

We note that a translation invariant zero tilt Gibbs measure exists for any p and q, e.g., as a limit of torus Gibbs states (see the proof of Theorem 2.2 in [6]). It remains to show uniqueness. Consider p such that \(\bar{\mu }^1_p=\bar{\mu }^0_p\) which is true for all but a countable number of \(p\in [0,1]\) by Theorem 5.1 above. Let \(\mu _1\) and \(\mu _2\) be ergodic zero tilt gradient Gibbs measures for \(V=V_{p,q}\). By Proposition 4.18 the corresponding \(\kappa \)-marginals \({\bar{\mu }}_1\) and \({\bar{\mu }}_2\) of the extended Gibbs measures \({\tilde{\mu }}_1\) and \({\tilde{\mu }}_2\) are Gibbs measures in the sense of Definition 4.14 and therefore equal. Using Proposition 4.20 we conclude that since \(\mu _1\) and \(\mu _2\) are ergodic zero tilt gradient Gibbs measures their laws are determined by \({\bar{\mu }}_1\) and \({\bar{\mu }}_2\), hence \(\mu _1=\mu _2\). \(\square \)

Remark 5.2

Similar arguments for this model appeared already in the proof of Theorem 2.4 in [6] where they use the convexity of the pressure to show that the number of q-bonds on the torus is concentrated around its expectation in the thermodynamic limit. However, this is not sufficient to conclude uniqueness.

The key ingredient in the proof of Theorem 5.1 is the following lemma that compares \({\bar{\mu }}_p^1(\kappa _e=q)\) with \({\bar{\mu }}_{p'}^0(\kappa _e=q)\) for \(p<p'\). Intuitively the reason for this result is that a change of p is a bulk effect of order \(|\Lambda |\) while the effect of the boundary conditions is of order \(|\partial \Lambda |\).
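The bulk effect of a change of p can be made explicit: passing from p to \(p'\) reweights every configuration by \(\alpha ^{h(\kappa )}\) with \(\alpha =p'(1-p)/(p(1-p'))\), the constant that appears in the proof of Lemma 5.3, so that expectations under \(p'\) are tilted expectations under p. The sketch below checks this reweighting on a small graph in floating point arithmetic, assuming weights proportional to \(p^{h(\kappa )}(1-p)^{s(\kappa )}(\det \Delta _\kappa )^{-1/2}\). The graph, the parameters, and all names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product
from math import sqrt


def spanning_trees(n, edges):
    """Yield every spanning tree of a connected multigraph as a tuple of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            a, b = find(edges[i][0]), find(edges[i][1])
            if a == b:
                acyclic = False
                break
            parent[a] = b
        if acyclic:
            yield subset


def tree_sum(n, edges, kappa):
    """Sum of tree weights, equal to the reduced Laplacian determinant."""
    total = Fraction(0)
    for tree in spanning_trees(n, edges):
        w = Fraction(1)
        for i in tree:
            w *= kappa[i]
        total += w
    return total


def q_bonds(kappa, q):
    """Number h(kappa) of edges with conductance q."""
    return sum(1 for c in kappa if c == q)


def conductance_law(n, edges, p, q):
    """Assumed form of the measure: weight p^h (1 - p)^s / sqrt(det), normalised."""
    weights = {}
    for kappa in product((1, q), repeat=len(edges)):
        h = q_bonds(kappa, q)
        weights[kappa] = p ** h * (1 - p) ** (len(edges) - h) / sqrt(tree_sum(n, edges, kappa))
    z = sum(weights.values())
    return {k: w / z for k, w in weights.items()}


def expectation(law, x):
    return sum(pr * x(k) for k, pr in law.items())


def tilted_expectation(n, edges, p, p_new, q, x):
    """Expectation under p_new written as an alpha^h-tilted expectation under p."""
    alpha = p_new * (1 - p) / (p * (1 - p_new))
    law = conductance_law(n, edges, p, q)
    numerator = sum(pr * alpha ** q_bonds(k, q) * x(k) for k, pr in law.items())
    denominator = sum(pr * alpha ** q_bonds(k, q) for k, pr in law.items())
    return numerator / denominator


K4 = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]  # hypothetical test graph


def first_edge_is_q(kappa):
    """Indicator that the first edge is a q-bond for q = 3."""
    return float(kappa[0] == 3)
```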

Lemma 5.3

For any \(p<p'\) we have

Proof

The proof follows the proof of Theorem 1.12 in [17] where a similar result for the FK random cluster model is shown. The only difference is that the comparison between free and wired boundary conditions is slightly less direct. We define \(a= {\bar{\mu }}_{p'}^0(\kappa _e=q)\) and \(b= {\bar{\mu }}_{p}^1(\kappa _e=q)\). Comparison between boundary conditions implies \({\bar{\mu }}_{n,p'}^0(\kappa _e=q)\le {\bar{\mu }}_{p'}^0(\kappa _e=q)=a\) for any \(e\in E_n\). Recall that \(h(\kappa )=| \{e\in {\mathbf {E}}(G):\kappa _e=q\} |\) denotes the number of q-bonds and \(s(\kappa )\) similarly the number of 1-bonds. The definition of a and b implies for \(0<\varepsilon <1-a\)

Similarly for \(0<\varepsilon <b\)

Our goal is to show that \(b-\varepsilon \le a+\varepsilon \). We denote by \(\Delta ^0\) and \(\Delta ^1\) the graph Laplacian on \(\Lambda _n\) with free and wired boundary conditions respectively. To compare the boundary conditions we denote by \(T_1=\mathrm {ST}(\Lambda _n^w)\) the set of wired spanning trees on \(\Lambda _n\) and by \(T_0=\mathrm {ST}(\Lambda _n)\) the set of spanning trees on \( \Lambda _n\) with free boundary conditions. There is a map \(\Phi :T_0 \rightarrow T_1\) such that \(\Phi ({\varvec{t}}){\restriction _{\Lambda _{n-1}}}={\varvec{t}}{\restriction _{\Lambda _{n-1}}}\). Indeed, removing all edges in \(E_n{\setminus } E_{n-1}\) from \({\varvec{t}}\) we obtain an acyclic subgraph of \(\Lambda _n^w\), hence we can find a tree \(\Phi ({\varvec{t}})\) such that \({\varvec{t}}{\restriction _{\Lambda _{n-1}}}\subset \Phi ({\varvec{t}})\subset {\varvec{t}}\). The observation \(|{\varvec{t}}{\setminus } \Phi ({\varvec{t}})|=|\partial \Lambda _n|-1\) implies that \(w(\kappa ,{\varvec{t}})\le w(\kappa ,\Phi ({\varvec{t}}))q^{|\partial \Lambda _n|-1}\). Since \(\Phi \) does not change the edges in \(E_{n-1}\) each tree \({\varvec{t}}\in T_1\) has at most \(2^{|E_n{\setminus } E_{n-1}|}\) preimages. We obtain that

Similarly, there is an injective mapping \(\Psi :T_1\rightarrow T_0\) such that \({\varvec{t}}\subset \Psi ({\varvec{t}})\). Indeed, we fix a tree \({\varvec{t}}_b\) in the graph \((\Lambda _n{\setminus } \Lambda _{n-1}, {\mathbf {E}}(\Lambda _n{\setminus } \Lambda _{n-1}))\) and define \(\Psi ({\varvec{t}})={\varvec{t}}\cup {\varvec{t}}_b\in T_0\). We get

Inserting the bound \(|E_n{\setminus } E_{n-1}|\le 2d|\partial \Lambda _n|\) we infer from the definition (3.7) for any \(\kappa \in \{1,q\}^{E_n}\)

We define the constant \(\alpha =p'(1-p)/(p(1-p'))>1\). A simple manipulation shows that for any function \(X:\{1,q\}^{E_n}\rightarrow {\mathbb {R}}\)

Therefore we obtain

From (5.3) and (5.4) we conclude

which implies \(a-b+2\varepsilon \ge 0\) as \(n\rightarrow \infty \) since \(\alpha >1\) and \(|E_n|/|\partial \Lambda _n|\rightarrow \infty \). The lemma follows as \(\varepsilon \rightarrow 0\). \(\square \)
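The role of the constant \(\alpha \) in the proof can be checked on a toy example. The sketch below assumes that the finite-volume measure has the form \(\mu _p(\kappa )\propto p^{h(\kappa )}(1-p)^{s(\kappa )}W(\kappa )\) with a weight W that does not depend on p (the same p-dependence as in the FK random cluster model); under this assumption a change from p to \(p'\) amounts to reweighting by \(\alpha ^{h(\kappa )}\). The weight `W`, the observable `X` and the encoding of \(\kappa \) as a 0/1 tuple (1 marks a q-bond) are hypothetical stand-ins, not objects taken from the paper.

```python
from fractions import Fraction
from itertools import product


def expectation(X, p, W, m):
    """Expectation of X under mu_p(kappa) ~ p^h (1-p)^(m-h) W(kappa) on {0,1}^m."""
    numerator = Fraction(0)
    normalisation = Fraction(0)
    for kappa in product((0, 1), repeat=m):
        h = sum(kappa)  # number of q-bonds; m - h is the number of 1-bonds
        weight = p ** h * (1 - p) ** (m - h) * W(kappa)
        numerator += weight * X(kappa)
        normalisation += weight
    return numerator / normalisation


# Hypothetical p-independent positive weight and test observable.
def W(kappa):
    return Fraction(1 + kappa[0] * kappa[1], 1 + sum(kappa))


def X(kappa):
    return kappa[0] + 2 * kappa[-1]


m = 4
p, p_prime = Fraction(1, 3), Fraction(3, 5)
alpha = p_prime * (1 - p) / (p * (1 - p_prime))

# Left side: expectation at p'. Right side: reweighted expectation at p.
lhs = expectation(X, p_prime, W, m)
rhs = (expectation(lambda k: X(k) * alpha ** sum(k), p, W, m)
       / expectation(lambda k: alpha ** sum(k), p, W, m))
```

Exact rational arithmetic is used so that the two sides can be compared with `==` rather than up to rounding.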

The next result is a non-uniqueness result for the random conductance model.

Theorem 5.4

In dimension \(d=2\) and for \(q>1\) sufficiently large there are two distinct Gibbs measures \({\bar{\mu }}_{p_{\mathrm {sd}}}^1\ne {\bar{\mu }}_{p_{\mathrm {sd}}}^0\) at the self-dual point defined by Eq. (1.8).

The proof uses duality of the random conductance model and can be found in Sect. 6. This result easily implies Theorem 2.4.

Proof of Theorem 2.4

Using Proposition 4.19 we infer from Theorem 5.4 the existence of two translation invariant extended gradient Gibbs measures \(\tilde{\mu }_0\) and \(\tilde{\mu }_1\) constructed from \({\bar{\mu }}_{p_{\mathrm {sd}}}^0\ne {\bar{\mu }}_{p_{\mathrm {sd}}}^1\). Their \(\eta \)-marginals \(\mu _0\) and \(\mu _1\) are not equal, since otherwise the \(\kappa \)-marginals \({\bar{\mu }}_{p_{\mathrm {sd}}}^0\) and \({\bar{\mu }}_{p_{\mathrm {sd}}}^1\) would agree. They both have zero tilt by Proposition 4.19, and the definition of \({\tilde{\mu }}\) shows that \({\tilde{\mu }}\) is translation invariant if \({\bar{\mu }}\) is translation invariant. \(\square \)

Remark 5.5

A proof similar to Lemma 3.2 in [7] shows that ergodicity of \({\bar{\mu }}_{p_{\mathrm {sd}}}^0\) and \({\bar{\mu }}_{p_{\mathrm {sd}}}^1\) implies that \(\mu _0\) and \(\mu _1\) are themselves ergodic. The only difference is that \(\eta \) given \(\kappa \) is not independent (while \(\kappa \) given \(\eta \) is). Instead one has to rely on the decay of correlations for Gaussian fields stated in Appendix B.

Theorem 5.6

For \(d\ge 4\) there is \(q_0>1\) such that for \(p\in [0,1]\) and \(q\in [1,q_0)\) the Gibbs measure for the random conductance model is unique. Similarly, for \(d\ge 4\) and \(q\ge 1\) there is a \(p_0=p_0(q,d)>0\) such that the Gibbs measure is unique for \(p\in [0,p_0)\cup (1-p_0,1]\).

Proof

We are going to apply Dobrushin’s criterion (see, e.g., [21, Theorem 8.7]). The necessary estimate is basically a refined version of the proof of Lemma 4.6. Fix two edges \(f,g\in {\mathbf {E}}({\mathbb {Z}}^d)\). Recall the notation \(\lambda ^{\pm \pm }=\lambda ^{\pm \pm }_{fg}\) and \(\lambda ^\pm =\lambda ^\pm _f\) introduced above Theorem 4.2. We will write \({\bar{\gamma }}_f={\bar{\gamma }}_{\{f\}}\) in the following. Note that (4.52) and \({\bar{\gamma }}_{f}(\lambda ^{+},\lambda )+{\bar{\gamma }}_{f}(\lambda ^{-},\lambda )=1\) imply that

where \({\mathbb {Q}}_{\lambda ^-}\) denotes the weighted spanning forest measure on \({\mathbb {Z}}^d\) with conductances \(\lambda ^-\). We need to bound the entries of the Dobrushin interdependence matrix given by

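Since the derivative of the map \(x\mapsto p/(p+(1-p)\sqrt{x})\) is bounded by \(p(1-p)\) for \(x\ge 1\) (its absolute value equals \(p(1-p)/(2\sqrt{x}\,(p+(1-p)\sqrt{x})^2)\), and both \(\sqrt{x}\) and \(p+(1-p)\sqrt{x}\) are at least 1) we conclude that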

To simplify the notation we assume \(\lambda =\lambda ^{--}\). We can express \(\mathbb {Q}_{\lambda ^{-+}}(f\in {\varvec{t}})\) through the measure \(\mathbb {Q}_{\lambda ^{--}}=\mathbb {Q}_\lambda \) as follows

A sequence of manipulations then shows that

The numerator can be rewritten using the transfer-current Theorem for two edges (see [4, Page 10] and equation below 4.3 in [27])

where \(I_f^\kappa (g)\) denotes the current through g in a resistor network with conductances \(\kappa \) when 1 unit of current is inserted (respectively removed) at the ends of f (using a fixed orientation of the edges here, e.g., lexicographic). Altogether we have shown that

Using electrical network theory we can express for \(f=(x,x+e_i)\) and \(g=(y,y+e_j)\)

where \(G_\kappa \) denotes the inverse of the operator \(\Delta _\kappa \) which exists in dimension \(d\ge 3\) and whose derivative exists in dimension \(d\ge 2\). The second derivative of the Green function can be bounded using Nash–Moser theory. It is shown in Lemma B.2 that there are constants \(C_3(q,d), \alpha (q,d)>0\) depending on the ellipticity contrast of the operator \(\Delta _\kappa \) and the dimension such that

Combining this bound with (5.17) and (5.18) we conclude for \(f\in {\mathbf {E}}({\mathbb {Z}}^d)\) that

In dimension \(d\ge 4\) the sum is finite. Now, for fixed q, the sum becomes smaller than 1 for p sufficiently close to 0 or 1. Therefore there is \(p_0=p_0(q,d)\) such that the Gibbs measure is unique for \(p\in [0,p_0)\cup (1-p_0,1]\). On the other hand, the constant \(C_3(q,d)\) from Lemma B.2 is decreasing in q. Therefore we can estimate uniformly for \(p\in [0,1]\) and for \(q\le 2\)

Hence the Dobrushin criterion is satisfied for q sufficiently close to 1 and all \(p\in [0,1]\).

\(\square \)
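The transfer-current theorem for two edges used in the proof can be checked by brute force on a small finite network. The following sketch enumerates the weighted spanning trees of a hypothetical four-vertex graph and compares the joint inclusion probability of two edges with the determinant built from currents; the graph, the conductances and all function names are illustrative assumptions, and the currents are computed with one vertex grounded, which is a finite-graph stand-in for the setting on \({\mathbb {Z}}^d\).

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical network: a 4-cycle with one chord, edges oriented as listed.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
COND = [Fraction(1), Fraction(2), Fraction(1), Fraction(3), Fraction(2)]
N = 4


def is_spanning_tree(edge_ids):
    """N-1 edges form a spanning tree iff they contain no cycle (union-find)."""
    parent = list(range(N))

    def find(v):
        while parent[v] != v:
            v = parent[v]
        return v

    for i in edge_ids:
        a, b = find(EDGES[i][0]), find(EDGES[i][1])
        if a == b:
            return False
        parent[a] = b
    return True


def tree_probability(required):
    """Probability that all edges in `required` lie in the weighted spanning tree."""
    total = hit = Fraction(0)
    for t in combinations(range(len(EDGES)), N - 1):
        if is_spanning_tree(t):
            weight = Fraction(1)
            for i in t:
                weight *= COND[i]
            total += weight
            if set(required) <= set(t):
                hit += weight
    return hit / total


def potential(f):
    """Solve L v = delta_x - delta_y for f = (x, y), with v = 0 at vertex N-1."""
    x, y = EDGES[f]
    m = N - 1
    A = [[Fraction(0)] * (m + 1) for _ in range(m)]  # augmented reduced Laplacian
    for (u, w), c in zip(EDGES, COND):
        for a, b in ((u, w), (w, u)):
            if a < m:
                A[a][a] += c
                if b < m:
                    A[a][b] -= c
    if x < m:
        A[x][m] += 1
    if y < m:
        A[y][m] -= 1
    for col in range(m):  # Gauss-Jordan elimination in exact arithmetic
        pivot = next(r for r in range(col, m) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        A[col] = [entry / A[col][col] for entry in A[col]]
        for r in range(m):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[r][m] for r in range(m)] + [Fraction(0)]


def current(f, g):
    """Current through edge g when unit current enters and leaves at the ends of f."""
    v = potential(f)
    u, w = EDGES[g]
    return COND[g] * (v[u] - v[w])


f, g = 0, 2
joint = tree_probability([f, g])
determinant = current(f, f) * current(g, g) - current(f, g) * current(g, f)
covariance = joint - tree_probability([f]) * tree_probability([g])
```

In this toy network `joint` and `determinant` coincide, so the covariance of the two inclusion events equals minus the product of the two cross currents. This is the mechanism behind the squared decay of correlations exploited in the Dobrushin estimate.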

Remark 5.7

1. Note that the gradient-gradient correlations in gradient models at best only decay critically with \(|x|^{-d}\) (which is the decay rate for the discrete Gaussian free field). In particular, the sum of the covariances \(\sum _{g\in {\mathbf {E}}({\mathbb {Z}}^d)} \mathrm {Cov}(\eta _f,\eta _g)\) diverges in this type of model. We use crucially in the previous theorem that the decay of correlations is better for the discrete model: they decay with the square of the gradient-gradient correlations.

2. The averaged (annealed) second order derivative of the Green function decays with the optimal decay rate \(|x|^{-d}\) as shown in [14]. For the application of the Dobrushin criterion we, however, need deterministic bounds, which are weaker.

3. To extend the uniqueness result for q close to 1 to dimensions \(d=3\) and \(d=2\) one would need estimates for the optimal Hölder exponent \(\alpha \) depending on the ellipticity contrast of discrete elliptic operators. Here the ellipticity contrast can be bounded by q. There do not seem to be any results in this direction in the discrete setting. In the continuum setting the problem is open for \(d\ge 3\), but has been solved for \(d=2\) in [29]. In this case \(\alpha \rightarrow 1\) as the ellipticity contrast converges to 1. A similar result in the discrete setting would imply uniqueness of the Gibbs measure for q close to 1 in dimension 2.

Note that we can again lift the uniqueness result for the Gibbs measure of the random conductance model to a uniqueness result for the ergodic gradient Gibbs measures with zero tilt.

Proof of Theorem 2.5

The proof follows from the uniqueness of the discrete Gibbs measure proven in Theorem 5.6 in the same way as the proof of Theorem 2.3 which can be found above Remark 5.2. \(\square \)

5.2 Open questions

Let us end this section by stating one further result and two conjectures regarding the phase transitions of this model. They are most easily expressed in terms of percolation properties of the model even though the interpretation as open and closed bonds is somewhat misleading in this context. We write \(x\leftrightarrow y\) for \(x,y\in {\mathbb {Z}}^d\) and \(\kappa \) if there is a path of q-bonds in \(\kappa \) connecting x and y and similarly for sets. Observe that the results of [18] can be applied to the model introduced here and we obtain the existence of a sharp phase transition.
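For a finite configuration the relation \(x\leftrightarrow y\) can be decided by a breadth-first search restricted to q-bonds. The sketch below assumes that \(\kappa \) is stored as a dictionary from unordered edges (given as pairs of sites) to conductance values; this representation and the function name are assumptions made for illustration only.

```python
from collections import deque


def connected_by_q_bonds(kappa, q, x, y):
    """Return True if x and y are joined by a path of edges e with kappa[e] == q."""
    # Build the adjacency lists of the subgraph formed by the q-bonds.
    adjacency = {}
    for (u, v), value in kappa.items():
        if value == q:
            adjacency.setdefault(u, []).append(v)
            adjacency.setdefault(v, []).append(u)
    # Breadth-first search from x inside that subgraph.
    seen = {x}
    queue = deque([x])
    while queue:
        u = queue.popleft()
        if u == y:
            return True
        for v in adjacency.get(u, []):
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return False


# Toy configuration on the unit square with q = 2: two q-bonds and two 1-bonds.
example_kappa = {
    ((0, 0), (1, 0)): 2,
    ((1, 0), (1, 1)): 2,
    ((0, 0), (0, 1)): 1,
    ((0, 1), (1, 1)): 1,
}
```

In the toy configuration the sites (0, 0) and (1, 1) are connected through the two q-bonds, while (0, 1) is attached only by 1-bonds and is therefore not connected to either of them.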

Theorem 5.8

For every q the model undergoes a sharp phase transition in p, i.e., there is \(p_c(q,d)\) such that the following two properties hold. On the one hand there is a constant \(c_1>0\) such that for \(p>p_c\) sufficiently close to \(p_c\)

On the other hand, for \(p<p_c\) there is a constant \(c_p\) such that

Proof

The proof of Theorem 1.2 in [18] for the FK random cluster model applies to this model. Indeed, it only relies on \(\mu _{n,p}^1\) being strongly positively associated and a certain relation for the p derivative of events stated in Theorem 3.12 in [23] which is still true since the p-dependence is the same as for the FK random cluster model. \(\square \)

Remark 5.9

For \(d=2\) the self-dual point defined in (1.8) and the critical point agree: \(p_c=p_{\mathrm {sd}}\). This can be seen based on Theorem 1.5 and the arguments used in the proof of Theorem 1.4 in [18] for the FK random cluster model.

In the FK random cluster model the most interesting phenomena happen for \(p=p_c\) and the subcritical and supercritical phase are much simpler to understand (in particular in \(d=2\)). Due to the differences explained in Remark 3.2 those questions seem to be harder for our random conductance model. Nevertheless we conjecture the following stronger version of Theorems 5.1 and 5.6.

Conjecture 5.10

For \(p\ne p_c\) there is a unique Gibbs measure.

Note that the sharpness result Theorem 5.8 shows that the probability of subcritical q-clusters to be large is exponentially small. Nevertheless it is not clear how this can be used to show uniqueness of the Gibbs measure in our setting.

The behaviour at \(p_c\) is also very interesting. A phase transition is called continuous if \(\mu _{p_c}^1(0\leftrightarrow \infty )=0\) and otherwise it is discontinuous. For the FK random cluster model in dimension \(d=2\) the phase transition is continuous for \(q\le 4\) and otherwise discontinuous. Moreover, the uniqueness of the Gibbs measure at \(p_c\) is equivalent to a continuous phase transition. We do not know whether the same is true for the random conductance model considered here. But we expect the general picture to be true also for the random conductance model and we make this precise in a second conjecture.

Conjecture 5.11

There is a \(q_0=q_0(d)\) such that for \(q> q_0\) there is non-uniqueness of Gibbs measures \({\bar{\mu }}_{p_c,q}^1\ne {\bar{\mu }}_{p_c,q}^0\) at the critical point while for \(q<q_0\) the Gibbs measures agree, i.e., \({\bar{\mu }}_{p_c,q}^1={\bar{\mu }}_{p_c,q}^0\).

Partial results in the direction of this conjecture are Theorem 5.4, which states non-uniqueness for large q in dimension \(d=2\), and Theorem 5.6, which shows uniqueness for q close to 1 and \(d\ge 4\).

6 Duality and coexistence of Gibbs measures

In this section we are going to prove that \(\mu ^0_{p_{\mathrm {sd}}}\ne \mu ^1_{p_{\mathrm {sd}}}\) for large q, which implies the non-uniqueness of gradient Gibbs measures stated in Theorem 2.4. This is a new proof for the result in [6]. The authors of [6] consider conductances \(q_1\), \(q_2\) with \(q_1q_2=1\), which makes the presentation slightly more symmetric.