Abstract

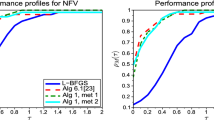

A displacement aggregation strategy is proposed for the curvature pairs stored in a limited-memory BFGS (a.k.a. L-BFGS) method such that the resulting (inverse) Hessian approximations are equal to those that would be derived from a full-memory BFGS method. This means that, if a sufficiently large number of pairs are stored, then an optimization algorithm employing the limited-memory method can achieve the same theoretical convergence properties as when full-memory (inverse) Hessian approximations are stored and employed, such as a local superlinear rate of convergence under assumptions that are common for attaining such guarantees. To the best of our knowledge, this is the first work in which a local superlinear convergence rate guarantee is offered by a quasi-Newton scheme that stores neither all curvature pairs throughout the entire run of the optimization algorithm nor an explicit (inverse) Hessian approximation. Numerical results are presented to show that displacement aggregation within an adaptive L-BFGS scheme can lead to better performance than standard L-BFGS.

Notes

Quasi-Newton methods offer the ability to update Hessian and/or inverse Hessian approximations, which is why we state inverse parenthetically here. For ease of exposition throughout the remainder of the paper, we often drop mention of the inverse, although in many cases it is the approximation of the inverse, not the Hessian approximation, that is used in practice.

By limited-memory-type BFGS algorithm, we mean one that stores and employs a finite set of curvature pairs rather than an explicit Hessian approximation.
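To make the distinction concrete, the following is a minimal sketch, not the aggregation scheme proposed in this paper. It compares the explicit inverse update $H_{k+1} = (I - \rho_k s_k y_k^T) H_k (I - \rho_k y_k s_k^T) + \rho_k s_k s_k^T$, with $\rho_k = 1/(y_k^T s_k)$, against the standard two-loop recursion that uses only stored pairs $(s_k, y_k)$. The function names, the identity initial matrix, and the randomly generated test data are assumptions chosen for illustration.

```python
import numpy as np

def bfgs_inverse_update(H, s, y):
    """One full-memory BFGS update of an explicit inverse Hessian approximation."""
    rho = 1.0 / (y @ s)
    V = np.eye(len(s)) - rho * np.outer(y, s)
    return V.T @ H @ V + rho * np.outer(s, s)

def two_loop(pairs, g, gamma=1.0):
    """Apply the inverse approximation to g using only stored (s, y) pairs.

    `pairs` is ordered oldest first; the initial matrix is gamma * I.
    """
    q = g.copy()
    history = []
    for s, y in reversed(pairs):          # newest pair first
        rho = 1.0 / (y @ s)
        alpha = rho * (s @ q)
        q -= alpha * y
        history.append((alpha, rho))
    r = gamma * q
    for (s, y), (alpha, rho) in zip(pairs, reversed(history)):  # oldest first
        beta = rho * (y @ r)
        r += (alpha - beta) * s
    return r

# Illustrative data (an assumption): y = A s for a positive definite A,
# which guarantees the curvature condition y^T s > 0.
rng = np.random.default_rng(0)
n, m = 6, 4
B = rng.standard_normal((n, n))
A = B @ B.T + n * np.eye(n)
pairs = []
for _ in range(m):
    s = rng.standard_normal(n)
    pairs.append((s, A @ s))

# Full-memory route: build the explicit n-by-n matrix.
H = np.eye(n)
for s, y in pairs:
    H = bfgs_inverse_update(H, s, y)

# Limited-memory route: never form the matrix.
g = rng.standard_normal(n)
max_diff = np.max(np.abs(H @ g - two_loop(pairs, g)))
print(max_diff)
```

When every pair is retained and both routes start from the same initial matrix, the two products agree up to rounding error. A standard limited-memory method loses this equivalence once old pairs are discarded.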

This provides evidence for the belief, held by some optimization researchers, that when solving certain large-scale problems one often observes that consecutive steps lie approximately in low-dimensional subspaces.

This material is based upon work supported by the National Science Foundation under grant numbers CCF–1618717 and CCF–1740796.

Cite this article

Berahas, A.S., Curtis, F.E. & Zhou, B. Limited-memory BFGS with displacement aggregation. Math. Program. 194, 121–157 (2022). https://doi.org/10.1007/s10107-021-01621-6

DOI: https://doi.org/10.1007/s10107-021-01621-6

Keywords

- Nonlinear optimization

- Quasi-Newton algorithms

- Broyden–Fletcher–Goldfarb–Shanno (BFGS)

- Limited-memory BFGS

- Superlinear convergence