Abstract

In this paper, we study representations of ultragraph Leavitt path algebras via branching systems and, using partial skew ring theory, prove the reduction theorem for these algebras. We apply the reduction theorem to show that ultragraph Leavitt path algebras are semiprime and to completely describe faithfulness of the representations arising from branching systems, in terms of the dynamics of the branching systems. Furthermore, we study permutative representations and provide a sufficient criterion for a permutative representation of an ultragraph Leavitt path algebra to be equivalent to a representation arising from a branching system. We apply this criterion to describe a class of ultragraphs for which every representation (satisfying a mild condition) is permutative and has a restriction that is equivalent to a representation arising from a branching system.


1 Introduction

The study of algebras associated to combinatorial objects is a mainstream area in mathematics, with connections to symbolic dynamics, wavelet theory and graph theory, to name a few. Among the most studied algebras arising from combinatorial objects are Cuntz–Krieger algebras, graph C*-algebras, Leavitt path algebras (the algebraic version of graph C*-algebras) and algebras associated to infinite matrices (the so-called Exel–Laca algebras). Aiming at a unified approach to graph C*-algebras and Exel–Laca algebras, Mark Tomforde introduced ultragraphs in [41]. One of the advantages of working with ultragraphs is that they provide a combinatorial picture, very similar to that of graphs, for studying Exel–Laca algebras. Also, new examples appear, as the class of ultragraph algebras is strictly larger than the class of graph and Exel–Laca algebras (both in the C*-context and in the purely algebraic context), although in the C*-algebraic context these three classes agree up to Morita equivalence, see [34].

Ultragraphs can be seen as graphs whose range map takes values in the power set of the vertices, that is, the range of an edge is a subset of the set of vertices. Ultragraphs can also be seen as labelled graphs in which only edges with the same source may carry the same label. This restriction makes objects (such as algebras and topological spaces) associated to ultragraphs much simpler to handle than objects associated to labelled graphs, while interesting properties of labelled graphs still appear in ultragraphs. This is the case, for example, in the study of chaos for shift spaces over infinite alphabets (see [30, 31]). In fact, ultragraphs are key in the study of shift spaces over infinite alphabets, see [25, 26, 29]. Furthermore, the KMS states associated to ultragraph C*-algebras are studied in [11], and the connection of ultragraph C*-algebras with the Perron–Frobenius operator is described in [16], where the theory of representations of ultragraph C*-algebras (arising from branching systems) is also developed. It is worth mentioning that many of the results in [16] lack an algebraic counterpart, and providing one is partially the goal of this paper.

Over the years, many researchers have dedicated efforts to obtaining analogues of results in operator theory in the purely algebraic context and to understanding the relations between these results. For example, Leavitt path algebras, see [1, 2, 4], were introduced as an algebraization of graph C*-algebras and Cuntz–Krieger algebras. Later, Kumjian–Pask algebras, see [6], arose as an algebraization of higher rank graph C*-algebras. Partial skew group rings were studied as an algebraization of partial crossed products, see [12], and Steinberg algebras were introduced in [10, 40] as an algebraization of the groupoid C*-algebras first studied by Renault. Very recently, the algebraization of ultragraph C*-algebras, called ultragraph Leavitt path algebras, was defined, see [33]. Similarly to the C*-algebraic setting, ultragraph Leavitt path algebras generalize Leavitt path algebras and the algebraic version of Exel–Laca algebras, and provide examples that are neither Leavitt path algebras nor Exel–Laca algebras.

As mentioned before, our goal is to study ultragraph Leavitt path algebras. Our first main result is the reduction theorem, which for Leavitt path algebras was proved in [36]. This result is fundamental in Leavitt path algebra theory, and it is also key in our study of representations of ultragraph Leavitt path algebras. (We also use it to prove that ultragraph Leavitt path algebras are semiprime.) The study of representations of algebras associated to combinatorial objects is a subject of much interest. For example, representations of Leavitt path algebras were studied in [3, 9, 32, 39], of Kumjian–Pask algebras in [6], and of Steinberg algebras in [5, 8]. Representations of various algebras, in connection with branching systems, were studied in [13,14,15, 17,18,19,20,21,22, 24, 35, 38]. Describing the connections of representations of ultragraph Leavitt path algebras with branching systems is the second goal of this paper. In particular, we give a description of faithful representations arising from branching systems, define permutative representations, and give conditions for the equivalence between a given representation and representations arising from branching systems. (These are algebraic versions of the results in [16].) The concepts we build here are crucial in the description of perfect irreducible representations of ultragraph Leavitt path algebras in terms of branching systems given in [27, Theorem 6.9]. (Notice that this description is a new result also in the context of Leavitt path algebras of graphs.)

The paper is organized as follows. The next section is a brief overview of the definitions of ultragraphs and the associated ultragraph Leavitt path algebras. In Section 3, we prove the reduction theorem, using partial skew group ring theory and the grading of ultragraph Leavitt path algebras by the free group on the edges (obtained from the partial skew group ring characterization). We notice that the usual proof of the reduction theorem for Leavitt path algebras does not carry over straightforwardly to ultragraph Leavitt path algebras, and so we provide a proof using partial skew group ring theory. (In the case of a graph, our proof is an alternative proof of the reduction theorem for Leavitt path algebras.) In Section 4, we define branching systems associated to ultragraphs and show how they induce representations of the algebra. The study of faithful representations arising from branching systems is done in Section 5. Finally, in Section 6 we define permutative representations and study equivalence of representations of an ultragraph Leavitt path algebra with representations arising from branching systems.

Before we proceed, we remark that we make no countability assumption on the ultragraphs; hence, the results we present generalize the results for Leavitt path algebras in [20] to the case of uncountable graphs.

2 Preliminaries

We start this section with the definition of ultragraphs.

Definition 2.1

([41, Definition 2.1]) An ultragraph is a quadruple \(\mathcal {G}=(G^0, \mathcal {G}^1, r,s)\) consisting of two sets \(G^0, \mathcal {G}^1\), a map \(s:\mathcal {G}^1 \rightarrow G^0\) and a map \(r:\mathcal {G}^1 \rightarrow P(G^0){\setminus } \{\emptyset \}\), where \(P(G^0)\) stands for the power set of \(G^0\).

Before we define the algebra associated with an ultragraph, we need a notion of “generalized vertices”. This is the content of the next definition.

Definition 2.2

([41]) Let \(\mathcal {G}\) be an ultragraph. Define \(\mathcal {G}^0\) to be the smallest subset of \(P(G^0)\) that contains \(\{v\}\) for all \(v\in G^0\), contains r(e) for all \(e\in \mathcal {G}^1\), and is closed under finite unions and finite intersections.

Notice that, since \(\mathcal {G}^0\) is closed under finite intersections, the empty set is in \(\mathcal {G}^0\). We also have the following helpful description of the set of generalized vertices \(\mathcal {G}^0\).

Lemma 2.3

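([41, Lemma 2.12]) Let \(\mathcal {G}\) be an ultragraph. Then
$$\mathcal {G}^0=\Big\{\bigcap _{e\in X_1}r(e)\cup \cdots \cup \bigcap _{e\in X_n}r(e)\cup F:\ X_1,\ldots ,X_n \text { are finite subsets of } \mathcal {G}^1 \text { and } F \text { is a finite subset of } G^0\Big\}.$$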

We can now define the ultragraph Leavitt path algebra associated to an ultragraph \(\mathcal {G}\).

Definition 2.4

([33]) Let \(\mathcal {G}\) be an ultragraph and R be a unital commutative ring. The Leavitt path algebra of \(\mathcal {G}\), denoted by \(L_R(\mathcal {G})\), is the universal R-algebra with generators \(\{s_e,s_e^*:e\in \mathcal {G}^1\}\cup \{p_A:A\in \mathcal {G}^0\}\) and relations

1. \(p_\emptyset =0, p_Ap_B=p_{A\cap B}, p_{A\cup B}=p_A+p_B-p_{A\cap B}\), for all \(A,B\in \mathcal {G}^0\);

2. \(p_{s(e)}s_e=s_ep_{r(e)}=s_e\) and \(p_{r(e)}s_e^*=s_e^*p_{s(e)}=s_e^*\), for each \(e\in \mathcal {G}^1\);

3. \(s_e^*s_f=\delta _{e,f}p_{r(e)}\), for all \(e,f\in \mathcal {G}^1\);

4. \(p_v=\sum \nolimits _{s(e)=v}s_es_e^*\), whenever \(0<\vert s^{-1}(v)\vert < \infty \).

In the previous definition, \(p_A\) is a projection, for each \(A\in \mathcal {G}^0\). Notice that \(\{v\}\in \mathcal {G}^0\) for each vertex v in \(G^0\). For the vertices in \(G^0\), we use the notation \(p_{v}\) instead of \(p_{\{v\}}\), and so, for each edge e, \(p_{s(e)}\) means the projection \(p_{\{s(e)\}}\).

To prove the reduction theorem in the next section, we need the characterization of ultragraph Leavitt path algebras as partial skew group rings. Therefore, we recall this description below (as done in [28]).

2.1 Leavitt ultragraph path algebras as partial skew rings

We begin by recalling some partial skew group ring theory. A partial action of a group G on a set \(\Omega \) is a pair \(\alpha = (\{D_{t}\}_{t\in G}, \ \{\alpha _{t}\}_{t\in G})\), where, for each \(t\in G\), \(D_{t}\) is a subset of \(\Omega \) and \(\alpha _{t}:D_{t^{-1}} \rightarrow D_{t}\) is a bijection such that \(D_{e} = \Omega \), \(\alpha _{e}\) is the identity on \(\Omega \), \(\alpha _{t}(D_{t^{-1}} \cap D_{s})=D_{t} \cap D_{ts}\) and \(\alpha _{t}(\alpha _{s}(x))=\alpha _{ts}(x)\) for all \(x \in D_{s^{-1}} \cap D_{s^{-1} t^{-1}}\); here e denotes the neutral element of G. In case \(\Omega \) is an algebra or a ring, the subsets \(D_t\) are also required to be ideals and the maps \(\alpha _t\) to be isomorphisms. Associated to a partial action of a group G on a ring A, we have the partial skew group ring \(A\rtimes _{\alpha } G\), which is defined as the set of all finite formal sums \(\sum _{t \in G} a_t\delta _t\), where, for all \(t \in G\), \(a_t \in D_t\) and the \(\delta _t\) are symbols. Addition is defined in the usual way and multiplication is determined by \((a_t\delta _t)(b_s\delta _s) = \alpha _t(\alpha _{t^{-1}}(a_t)b_s)\delta _{ts}\).
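To make the multiplication rule concrete, here is a minimal Python sketch of a partial skew group ring in a toy case that is our own illustrative assumption, not taken from the text: the group is \(\mathbb {Z}\), written additively (so \(t^{-1}\) becomes \(-t\) and \(ts\) becomes \(t+s\)), acting partially on \(\Omega =\{0,\ldots ,4\}\) by restricted translation, so that \(D_t=\Omega \cap (\Omega +t)\). Functions supported in \(D_t\) are stored as dictionaries; the names `dom`, `alpha` and `mult` are hypothetical.

```python
OMEGA = set(range(5))  # the finite set Omega = {0, 1, 2, 3, 4}


def dom(t):
    """D_t = Omega ∩ (Omega + t); the domain of alpha_t is dom(-t)."""
    return {x for x in OMEGA if x - t in OMEGA}


def alpha(t, f):
    """alpha_t(f) = f ∘ theta_{-t}, where theta_{-t}(x) = x - t.

    f is a dict on dom(-t) (missing points count as 0); the result lives on dom(t).
    """
    return {x: f.get(x - t, 0) for x in dom(t)}


def mult(a, t, b, s):
    """(a δ_t)(b δ_s) = alpha_t(alpha_{-t}(a) * b) δ_{t+s}.

    a is supported in D_t and b in D_s; returns (coefficient, degree).
    """
    pulled_back = alpha(-t, a)                      # supported in D_{-t}
    product = {x: pulled_back[x] * b.get(x, 0) for x in pulled_back}
    return alpha(t, product), t + s


# Multiply the characteristic functions of D_1 and D_2 (degrees 1 and 2).
a = {x: 1 for x in dom(1)}
b = {x: 1 for x in dom(2)}
coeff, degree = mult(a, 1, b, 2)
support = {x for x, val in coeff.items() if val != 0}
```

The product lands in degree 3 and its coefficient is supported in \(D_1\cap D_3=\{3,4\}\), as the axiom \(\alpha _{t}(D_{t^{-1}} \cap D_{s})=D_{t} \cap D_{ts}\) predicts.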

Next we set up some notation regarding ultragraphs. A finite path is either an element of \(\mathcal {G}^0\) or a sequence of edges \(e_1\ldots e_n\), with length \(|e_1\ldots e_n|=n\), such that \(s(e_{i+1})\in r(e_i)\) for each \(i\in \{1,\ldots ,n-1\}\). An infinite path is a sequence \(e_1e_2e_3\ldots \), with length \(|e_1e_2\ldots |=\infty \), such that \(s(e_{i+1})\in r(e_i)\) for each \(i\ge 1\). The set of finite paths in \(\mathcal {G}\) is denoted by \(\mathcal {G}^*\), and the set of infinite paths in \(\mathcal {G}\) is denoted by \({\mathfrak {p}}^\infty \). We extend the source and range maps as follows: \(r(\alpha )=r(\alpha _{|\alpha |})\) and \(s(\alpha )=s(\alpha _1)\) for \(\alpha \in \mathcal {G}^*\) with \(0<|\alpha |<\infty \), \(s(\alpha )=s(\alpha _1)\) for each \(\alpha \in {\mathfrak {p}}^\infty \), and \(r(A)=A=s(A)\) for each \(A\in \mathcal {G}^0\). An element \(v\in G^0\) is a sink if \(s^{-1}(v) = \emptyset \), and we denote the set of sinks in \(G^0\) by \(G^0_s\). We say that \(A\in \mathcal {G}^0\) is a sink if each vertex in A is a sink. Finally, a ghost edge is an element of \((\mathcal {G}^1)^*\), that is, a symbol \(e^*\) with \(e\in \mathcal {G}^1\).
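The path conditions above are easy to check mechanically. The following Python sketch assumes a finite ultragraph stored as two dictionaries, `source` (edge to vertex, the map s) and `rng` (edge to set of vertices, the map r); these names and the example ultragraph are illustrative only.

```python
def is_path(edges, source, rng):
    """True if e_1 ... e_n (n >= 1) satisfies s(e_{i+1}) ∈ r(e_i) for 1 <= i < n."""
    return len(edges) >= 1 and all(
        source[edges[i + 1]] in rng[edges[i]] for i in range(len(edges) - 1)
    )


def path_source(edges, source):
    """s(alpha) = s(alpha_1) for a path of positive length."""
    return source[edges[0]]


def path_range(edges, rng):
    """r(alpha) = r(alpha_{|alpha|}) for a path of positive length."""
    return rng[edges[-1]]


# Example ultragraph: e goes from v to {v, w}, and f goes from w to {u}.
source = {"e": "v", "f": "w"}
rng = {"e": {"v", "w"}, "f": {"u"}}
```

Here `ee` and `ef` are paths, while `fe` is not, because \(s(e)=v\notin r(f)=\{u\}\).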

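Define the set
$$X={\mathfrak {p}}^\infty \cup \{(\alpha ,v)\in \mathcal {G}^*\times G^0_s:\ |\alpha |\ge 1 \text { and } v\in r(\alpha )\}\cup \{(v,v):v\in G^0_s\}.$$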

We extend the range and source maps to elements \((\alpha ,v)\in X\) by defining \(r(\alpha ,v)=v\) and \(s(\alpha ,v)=s(\alpha )\). Furthermore, we extend the length map to the elements \((\alpha ,v)\) by defining \(|(\alpha ,v)|:=|\alpha |\).

The group acting on the space X is the free group generated by \(\mathcal {G}^1\), which we denote by \(\mathbb {F}\). Let \(W\subseteq \mathbb {F}\) be the set of paths in \(\mathcal {G}^*\) with strictly positive length.

Now we define the following sets:

- for \(a\in W\), let \(X_a=\{x\in X:x_1\ldots x_{|a|}=a\}\);

- for \(b\in W\), let \(X_{b^{-1}}=\{x\in X:s(x)\in r(b)\}\);

- for \(a,b\in W\) with \(r(a)\cap r(b)\ne \emptyset \), let
$$X_{ab^{-1}}=\left\{ x\in X:|x|>|a|,\ x_1\ldots x_{|a|}=a \text { and } s(x_{|a|+1})\in r(a)\cap r(b)\right\} \cup \left\{ (a,v) \in X:v\in r(a)\cap r(b)\right\} ;$$

- for the neutral element 0 of \(\mathbb {F}\), let \(X_0=X\);

- for all the other elements c of \(\mathbb {F}\), let \(X_c=\emptyset \).

Define, for each \(A\in \mathcal {G}^0\) and \(b\in W\), the sets

and

We obtain a partial action of \(\mathbb {F}\) on X by defining the following bijective maps:

- for \(a\in W\), define \(\theta _a:X_{a^{-1}}\rightarrow X_a\) by
$$\theta _a(x)=\begin{cases} ax & \text {if } |x|=\infty ,\\ (a\alpha ,v) & \text {if } x=(\alpha ,v),\\ (a,v) & \text {if } x=(v,v); \end{cases}$$

- for \(a\in W\), define \(\theta _{a^{-1}}:X_a\rightarrow X_{a^{-1}}\) as the inverse of \(\theta _a\);

- for \(a,b\in W\), define \(\theta _{ab^{-1}}:X_{ba^{-1}}\rightarrow X_{ab^{-1}}\) by
$$\theta _{ab^{-1}}(x)=\begin{cases} ay & \text {if } |x|=\infty \text { and } x=by,\\ (a\alpha ,v) & \text {if } x=(b\alpha ,v),\\ (a,v) & \text {if } x=(b,v); \end{cases}$$

- for the neutral element \(0\in \mathbb {F}\), define \(\theta _0:X_0\rightarrow X_0\) as the identity map;

- for all the other elements c of \(\mathbb {F}\), define \(\theta _c:X_{c^{-1}}\rightarrow X_c\) as the empty map.
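The maps \(\theta _{ab^{-1}}\) act by stripping the prefix b and prepending a. The Python sketch below implements this on the finite elements of X only (infinite paths are omitted), under an encoding that is our own assumption: a path is a tuple of edge names, an element \((\alpha ,v)\) is a pair `(alpha, v)`, and \((v,v)\) is encoded as `((), v)`. Taking `b = ()` gives \(\theta _a\), taking `a = ()` gives \(\theta _{b^{-1}}\), and when \(r(a)\cap r(b)=\emptyset \) every input is rejected, which matches the empty map. The function name `theta` is hypothetical.

```python
def theta(a, b, x, source, rng):
    """Apply theta_{a b^{-1}} to a finite element x = (alpha, v) of X.

    a, b: tuples of edges; () stands for the neutral element, so
    theta(a, (), ...) is theta_a and theta((), b, ...) is theta_{b^{-1}}.
    Returns None when x lies outside the domain X_{b a^{-1}}.
    """
    alpha, v = x
    if alpha[:len(b)] != b:          # x must begin with b
        return None
    rest = alpha[len(b):]
    # vertex where the remainder starts: s(rest_1), or the sink v itself
    start = source[rest[0]] if rest else v
    for p in (a, b):                 # start must lie in r(a) ∩ r(b)
        if p and start not in rng[p[-1]]:
            return None
    return (a + rest, v)


# Example: e goes from v to {v, w} and f goes from v to {w}; w is a sink.
source = {"e": "v", "f": "v"}
rng = {"e": {"v", "w"}, "f": {"w"}}
```

For instance, \(\theta _{ef^{-1}}\) sends \((f,w)\) to \((e,w)\), and applying \(\theta _{fe^{-1}}\) returns it to \((f,w)\).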

The above maps together with the subsets \(X_t\) form a partial action of \(\mathbb {F}\) on X, that is, \((\{\theta _t\}_{t\in \mathbb {F}}, \{X_t\}_{t\in \mathbb {F}})\) is such that \(X_0=X\), \(\theta _0=\mathrm {Id}_X\), \(\theta _c(X_{c^{-1}}\cap X_t)=X_{ct}\cap X_c\) and \(\theta _c\circ \theta _t=\theta _{ct}\) on \(X_{t^{-1}}\cap X_{t^{-1}c^{-1}}\). This partial action induces a partial action on the R-algebra F(X) of functions from X to R (with pointwise sum and product). More precisely, let D be the subalgebra of F(X) consisting of all finite sums of finite products of the characteristic maps \(\{1_{X_A}\}_{A\in \mathcal {G}^0}\), \(\{1_{X_{bA}}\}_{b\in W, A\in \mathcal {G}^0}\) and \(\{1_{X_c}\}_{c\in \mathbb {F}}\). Also define, for each \(t\in \mathbb {F}\), the ideal \(D_t\) of D as the set of all finite sums of finite products of the characteristic maps \(\{1_{X_t}1_{X_A}\}_{A\in \mathcal {G}^0}\), \(\{1_{X_t}1_{X_{bA}}\}_{b\in W, A\in \mathcal {G}^0}\) and \(\{1_{X_t}1_{X_c}\}_{c\in \mathbb {F}}\). Now, for each \(c\in \mathbb {F}\), define the R-isomorphism \(\beta _c:D_{c^{-1}}\rightarrow D_c\) by \(\beta _c(f)=f\circ \theta _{c^{-1}}\). Then \((\{\beta _t\}_{t\in \mathbb {F}}, \{D_t\}_{t\in \mathbb {F}})\) is a partial action of \(\mathbb {F}\) on D.

Remark 2.5

From now on we will use the notation \(1_A\), \(1_{bA}\) and \(1_t\) instead of \(1_{X_A}\), \(1_{X_{bA}}\) and \(1_{X_t}\), for \(A\in \mathcal {G}^0\), \(b\in W\) and \(t\in \mathbb {F}\). Also, we have the following description of the ideals \(D_t\):

and, for each \(t\in \mathbb {F}\),

The key result from [28] that we need is the following theorem.

Theorem 2.6

[28] Let \(\mathcal {G}\) be a countable ultragraph, R be a unital commutative ring, and \(L_R(\mathcal {G})\) be the Leavitt path algebra of \(\mathcal {G}\). Then there exists an R-isomorphism \(\phi :L_R(\mathcal {G})\rightarrow D\rtimes _\beta \mathbb {F}\) such that \(\phi (p_A)=1_A\delta _0\), \(\phi (s_e^*)=1_{{e^{-1}}}\delta _{e^{-1}}\) and \(\phi (s_e)=1_e\delta _e\), for each \(A\in \mathcal {G}^0\) and \(e\in \mathcal {G}^1\).

Remark 2.7

To prove Theorem 2.6, the authors first defined, using the universality of \(L_R(\mathcal {G})\), a surjective homomorphism \(\phi :L_R(\mathcal {G})\rightarrow D\rtimes _\beta \mathbb {F}\) such that \(\phi (p_A)=1_A\delta _0\), \(\phi (s_e)=1_e\delta _e\), and \(\phi (s_e^{*})=1_{e^{-1}}\delta _{e^{-1}}\), for each \(A\in \mathcal {G}^0\) and \(e\in \mathcal {G}^1\). Up to this part of the proof, the assumption on the cardinality of the ultragraph was not used. In particular, since \(1_A\delta _0\) (for \(A\ne \emptyset \)), \(1_e\delta _e\) and \(1_{e^{-1}}\delta _{e^{-1}}\) are all nonzero, \(p_A\) (for \(A\ne \emptyset \)), \(s_e\) and \(s_e^*\) are all nonzero in \(L_R(\mathcal {G})\) (for \(\mathcal {G}\) an arbitrary ultragraph). The injectivity of \(\phi \) then followed from Theorem 3.2 of [33], which in turn relied on the graded uniqueness theorem for Leavitt path algebras of countable graphs (Theorem 5.3 of [43]). However, Theorem 5.3 of [43] also holds for arbitrary graphs, with the same proof. So the injectivity of \(\phi :L_R(\mathcal {G})\rightarrow D\rtimes _\beta \mathbb {F}\) also holds for arbitrary \(\mathcal {G}\), and we get the following theorem:

Theorem 2.8

Let \(\mathcal {G}\) be an arbitrary ultragraph, R be a unital commutative ring, and \(L_R(\mathcal {G})\) be the Leavitt path algebra of \(\mathcal {G}\). Then there exists an R-isomorphism \(\phi :L_R(\mathcal {G})\rightarrow D\rtimes _\beta \mathbb {F}\) such that \(\phi (p_A)=1_A\delta _0\), \(\phi (s_e^*)=1_{{e^{-1}}}\delta _{e^{-1}}\) and \(\phi (s_e)=1_e\delta _e\) for each \(A\in \mathcal {G}^0\) and \(e\in \mathcal {G}^1\).

3 The reduction theorem

The reduction theorem for Leavitt path algebras, see [1, 36], is an extremely useful tool in establishing various ring-theoretic properties of Leavitt path algebras. (For example, the uniqueness theorems for Leavitt path algebras follow with mild effort from the reduction theorem.) A version for relative Cohn path algebras was given in [15], where it was also used as an important tool in the study of representations of these algebras. In our context, the reduction theorem allows us to characterize faithful representations of ultragraph Leavitt path algebras arising from branching systems, but we expect it will also have applications in further studies of ultragraph Leavitt path algebras. (For example, in Corollary 3.3 we show that ultragraph Leavitt path algebras are semiprime.) We present the theorem below, but first we recall the following:

Definition 3.1

([42]) Let \(\mathcal {G}\) be an ultragraph. A closed path is a path \(\alpha \in \mathcal {G}^*\) with \(| \alpha | \ge 1\) and \(s(\alpha ) \in r(\alpha )\). A closed path \(\alpha \) is a cycle if \(s(\alpha _i)\ne s(\alpha _j)\) for each \(i\ne j\). An exit for a closed path is either an edge \(e \in \mathcal {G}^1\) such that there exists an i for which \(s(e) \in r(\alpha _{i})\) but \(e \ne \alpha _{i+1}\) or a sink w such that \(w \in r(\alpha _i)\) for some i. We say that the ultragraph \(\mathcal {G}\) satisfies Condition (L) if every closed path in \(\mathcal {G}\) has an exit.
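For a finite ultragraph, the notions of Definition 3.1 can be tested directly on a given closed path. The Python sketch below reuses a hypothetical encoding of s and r as dictionaries `source` and `rng`, and it reads \(\alpha _{n+1}\) as \(\alpha _1\) in the definition of an exit, which is our assumption about the intended cyclic indexing. It only inspects one closed path; verifying Condition (L) in general requires ranging over all closed paths.

```python
def is_closed_path(alpha, source, rng):
    """alpha = (alpha_1, ..., alpha_n) is a path with n >= 1 and s(alpha) ∈ r(alpha)."""
    if len(alpha) < 1:
        return False
    consecutive = all(source[alpha[i + 1]] in rng[alpha[i]]
                      for i in range(len(alpha) - 1))
    return consecutive and source[alpha[0]] in rng[alpha[-1]]


def exits(alpha, source, rng, vertices):
    """Return (edge exits, sink exits) of the closed path alpha."""
    sinks = set(vertices) - set(source.values())   # vertices emitting no edge
    n = len(alpha)
    edge_exits, sink_exits = set(), set()
    for i in range(n):
        following = alpha[(i + 1) % n]   # alpha_{i+1}, reading alpha_{n+1} as alpha_1
        edge_exits |= {e for e in source
                       if source[e] in rng[alpha[i]] and e != following}
        sink_exits |= rng[alpha[i]] & sinks
    return edge_exits, sink_exits


# Example: g is a loop at v, and h goes from v to the sink w.
vertices = {"v", "w"}
source = {"g": "v", "h": "v"}
rng = {"g": {"v"}, "h": {"w"}}
```

In this example the loop g has the exit h; if h is removed, g becomes a closed path without exit, so the ultragraph fails Condition (L).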

Notice that if \(\alpha =\alpha _1...\alpha _n\) is a closed path without exit in an ultragraph, then \(r(\alpha _i)\) is a singleton, for each \(i\in \{1,\ldots ,n\}\).

Theorem 3.2

Let \(\mathcal {G}\) be an arbitrary ultragraph, R be a unital commutative ring and \(0\ne x\in L_R(\mathcal {G})\). Then there are elements \(\mu =\mu _1\ldots \mu _n\) and \(\nu =\nu _1\ldots \nu _m\in L_R(\mathcal {G})\), with \(\mu _i, \nu _j\in \mathcal {G}^1\cup (\mathcal {G}^1)^*\) for each i and j, such that \(0\ne \mu x\nu \) and either \(\mu x\nu =\lambda p_A\), for some \(\lambda \in R\) and \(A\in \mathcal {G}^0\), or \(\mu x\nu =\sum \nolimits _{i=1}^k\lambda _i s_{c}^i\), where \(\lambda _i\in R\) and c is a cycle without exit.

Proof

Recall that, by Theorem 2.8, there is an isomorphism \(\Phi :L_R(\mathcal {G})\rightarrow D\rtimes _\beta \mathbb {F}\) such that \(\Phi (p_A)=1_A\delta _0\) and \(\Phi (s_ap_As_b^*)=1_{ab^{-1}}1_{aA}\delta _{ab^{-1}}\), where a, b are paths in \(\mathcal {G}\) and \(A\in \mathcal {G}^0\). Moreover, \(D\rtimes _\beta \mathbb {F}\) has a natural grading over \(\mathbb {F}\), that is, \(D\rtimes _\beta \mathbb {F}=\bigoplus _{t\in \mathbb {F}}D_t\delta _t\).

Let x be a nonzero element in \(L_R(\mathcal {G})\). We divide the proof into a few steps. The first one is the following.

Claim 1

There is a vertex \(v\in G^0\) such that \(xp_v\ne 0\).

First we prove that for each \(t\in \mathbb {F}\), and \(0\ne f_t\delta _t\in D_t\delta _t\), there is a vertex v such that \(f_t\delta _t1_v\delta _0\ne 0\).

Indeed, notice that for a nonzero element \(f\delta _0\in D_0\delta _0\), since \(f\ne 0\), there exists an element \(y\in X\) such that \(f(y)\ne 0\). Let \(v=s(y)\). Then \((f1_v)(y)\ne 0\) and therefore \(f\delta _01_v\delta _0=f1_v\delta _0\ne 0\). Similarly, for a given element \(0\ne f\delta _{ab^{-1}}\in D_{ab^{-1}}\delta _{ab^{-1}}\), where a, b are paths in \(\mathcal {G}\) (possibly one of them with length zero) notice that \(\beta _{ba^{-1}}(f)\ne 0\), and hence, there is an element \(y\in X_{ba^{-1}}\) such that \((\beta _{ba^{-1}}(f))(y)\ne 0\). Let \(v=s(y)\). Then \((\beta _{ba^{-1}}(f)1_v)(y)=(\beta _{ba^{-1}}(f))(y)\ne 0\), and it follows that \(f\delta _{ab^{-1}}1_v\delta _0=\beta _{ab^{-1}}(\beta _{ba^{-1}}(f)1_v)\delta _{ab^{-1}}\ne 0\).

Now, since \(x \ne 0\), we have that \(0\ne \Phi (x)=\sum \nolimits _{i=1}^n f_{t_i}\delta _{t_i}\), with \(t_i\ne t_j\) for \(i\ne j\), and \(f_{t_i}\delta _{t_i}\ne 0\) for each i. Fix some \(i_0\) and choose a vertex v such that \(f_{t_{i_0}}\delta _{t_{i_0}}1_v\delta _0\ne 0\). Since \(D\rtimes _\beta \mathbb {F}\) is \(\mathbb {F}\)-graded and each product \(f_{t_i}\delta _{t_i}1_v\delta _0\) lies in the homogeneous component of degree \(t_i\), we get \(z=\sum \nolimits _{i=1}^nf_{t_i}\delta _{t_i}1_v\delta _0\ne 0\). Hence, applying \(\Phi ^{-1}\) to z, we get that \(xp_v\ne 0\).

Claim 2

For each nonzero \(x\in L_R(\mathcal {G})\), there exists an element \(y\in L_R(\mathcal {G})\) of the form \(y=y_1\ldots y_n\), with \(y_i\in \mathcal {G}^1\), such that \(x y\ne 0\) and xy has no ghost edges in its composition (recall that a ghost edge is an element of \((\mathcal {G}^1)^*\)), that is, we can write \(xy = \sum \lambda _j s_{a_j}p_{A_j}\), where the \(a_j\) are paths in the ultragraph \(\mathcal {G}\) and \(A_j\in \mathcal {G}^0\).

Write \(x=\sum \lambda _i s_{a_i}p_{A_i}s_{b_i}^*\) with each \(\lambda _i s_{a_i}p_{A_i}s_{b_i}^*\ne 0\), where \(a_i,b_i\) are paths in the ultragraph \(\mathcal {G}\), \(A_i\in \mathcal {G}^0\), and \(\lambda _i\in R\) (see [33, Theorem 2.5]). If \(b_i\) has length zero for each i, then we are done. Suppose that there exists an index i such that \(b_i\) has positive length. Using the \(\mathbb {F}\)-grading of \(L_R(\mathcal {G})\), write \(x=\sum \nolimits _{t\in S} x_t\), where \(x_t=\sum \nolimits _{i:a_ib_i^{-1}=t}\lambda _is_{a_i}p_{A_i}s_{b_i}^*\) and each \(x_t\ne 0\). Then, for each \(t\in S\),
$$\Phi (x_t)=\sum _{i:\,a_ib_i^{-1}=t}\lambda _i1_{a_iA_i}1_t\,\delta _t.$$

Fix some \(t\in S\) and let \(g_t:=\sum \nolimits _{i:a_ib_i^{-1}=t}\lambda _i 1_{a_iA_i}1_t\ne 0\). Since \(\beta _{t^{-1}}(g_t)\ne 0\), there exists some \(y\in X_{t^{-1}}\) such that \(\beta _{t^{-1}}(g_t)(y)\ne 0\). If \(|y|=0\), then \(y=(v,v)\), where v is a sink. Notice that in this case \((\beta _{t^{-1}}(g_t)1_v)(y)=\beta _{t^{-1}}(g_t)(y)\ne 0\), so that \(g_t\delta _t1_v\delta _0\ne 0\); hence \(x_tp_v\ne 0\) and, by the grading, \(xp_v\ne 0\). Notice that, since v is a sink, \(s_b^*p_v=0\) for each \(b\in W\), and so \(xp_v\) has no ghost edges in its composition.

If \(|y|>0\), let \(e=y_1\) and notice that \((\beta _{t^{-1}}(g_t)1_e)(y)=\beta _{t^{-1}}(g_t)(y)\ne 0\), so that \(\beta _{t^{-1}}(g_t)1_e\ne 0\). Therefore \(g_t\delta _t 1_e\delta _e\ne 0\), and so \(x_ts_e\ne 0\).

So we have proved that either there exists a vertex v such that \(xp_v\ne 0\) and \(xp_v\) has no ghost edges in its composition, or there exists an edge e such that \(xs_e\ne 0\). In the latter case, note that \(xs_e\) has fewer ghost edges in its composition than x. Repeating these arguments a finite number of times, we obtain the conclusion of Claim 2.

Claim 3

For each \(0\ne x\in L_R(\mathcal {G})\) which is a sum of elements without ghost edges in its composition, there are elements \(y,z\in L_R(\mathcal {G})\), where \(y=y_1...y_n\), \(z=z_1...z_q\), and \(y_i,z_j\in \mathcal {G}^1\cup (\mathcal {G}^1)^*\cup G^0\), such that \(yxz\ne 0\) and either \(yxz=\lambda p_A\) for some \(\lambda \in R\) and \(A\in \mathcal {G}^0\), or \(yxz=\sum \nolimits _{i=1}^n\lambda _i s_c^i\) where \(\lambda _i\in R\) and c is a cycle without exit.

Let \(0\ne x\in L_R(\mathcal {G})\) be any element without ghost edges in its composition. Write \(x=\sum \nolimits _{j\in M}\beta _jp_{A_j}+\sum \nolimits _{i\in N} \lambda _is_{a_i}p_{B_i}\), where \(A_j,B_i\in \mathcal {G}^0\), \(a_i\) are paths with positive length, \(\beta _j,\lambda _i\) are nonzero elements in R, \(p_{r(a_i)}p_{B_i}\ne 0\) for each i, and the number of summands describing x is the least possible. Notice that M or N could be empty. Define m as the cardinality of M and n as the cardinality of N.

We prove the claim by induction on the (minimal) number of summands of an element \(x\in L_R(\mathcal {G})\) without ghost edges in its composition.

If \(m+n=1\) then \(x=\beta _1p_{A_1}\), or \(x=\lambda _1s_{a_1}p_{B_1}\) (in which case \(s_{a_1}^*x=\lambda _1p_{r(a_1)}p_{B_1}\)), and so we are done.

Now, suppose the induction hypothesis holds and let \(x=\sum \nolimits _{j\in M}\beta _jp_{A_j}+\sum \nolimits _{i\in N} \lambda _is_{a_i}p_{B_i}\) (with minimal number of summands). We prove below that Claim 3 holds for this x.

Suppose that N is empty. By Claim 1 there is a vertex v such that \(0\ne xp_v\). Hence \(xp_v=(\sum \nolimits _{j\in M:v\in A_j}\beta _j)p_v\), and we are done.

Now, suppose that N is nonempty, say \(N=\{1,2,\ldots ,n\}\). Moreover assume, without loss of generality, that \(|a_i|\le |a_{i+1}|\) for each \(i\in \{1,\ldots ,n-1\}\).

By Claim 1, there exists a vertex v such that \(xp_v\ne 0\). If \(m>1\), then
$$xp_v=\Big (\sum _{j\in M:\,v\in A_j}\beta _j\Big )p_v+\sum _{i\in N}\lambda _is_{a_i}p_{B_i}p_v,$$
which has fewer summands than x, and so we may apply the induction hypothesis to \(xp_v\). The same holds if \(s_{a_i}p_{B_i}p_v=0\) for some i. Therefore, we are left with the cases when \(m=0\) or \(m=1\), and \(s_{a_i}p_{B_i}p_v\ne 0\) for each \(a_i\).

Before we proceed, notice that each \(a_i\) in the description of x is of the form \(a_i=a_i^1\ldots a_i^{k_i}\), where the \(a_i^j\) are edges. If there are i, k and j such that \(a_i^j\ne a_k^j\), then \(s_{a_i}^*s_{a_k}=0\), and \(s_{a_i}^*x\ne 0\) (since \(L_R(\mathcal {G})\) is \(\mathbb {F}\)-graded and \(s_{a_i}^*s_{a_i}p_{B_i}=p_{r(a_i)}p_{B_i}\ne 0\)). Therefore \(s_{a_i}^*x\) has fewer summands than x, possibly including ghost edges. Applying Claim 2 to \(s_{a_i}^*x\) and then applying the induction hypothesis, we obtain the desired result for x. So we may suppose that each path \(a_i\) is the beginning of the path \(a_{i+1}\).

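Recall that to finish the proof we need to deal with two cases:
$$xp_v=\sum _{i=1}^n\lambda _is_{a_i}p_{B_i}p_v\qquad \text {or}\qquad xp_v=\beta p_v+\sum _{i=1}^n\lambda _is_{a_i}p_{B_i}p_v,$$
where \(|a_i|\le |a_{i+1}|\) and \(a_i\) is the beginning of \(a_{i+1}\) for each i.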

If \(xp_v=\sum \nolimits _{i=1}^n \lambda _i s_{a_i}p_{B_i}p_v\), then \(s_{a_1}^*xp_v=\lambda _1p_{r(a_1)}p_{B_1}p_v+\sum \nolimits _{i=2}^n\lambda _is_{a_1}^*s_{a_i}p_{B_i}p_v\), where \(\lambda _1p_{r(a_1)}p_{B_1}p_v=\lambda _1p_v\) because \(s_{a_1}p_{B_1}p_v\ne 0\). Therefore it is enough to deal with the case \(xp_v=\beta p_v+\sum \nolimits _{i=1}^n\lambda _i s_{a_i}p_{B_i}p_v\).

Notice that (by the \(\mathbb {F}\)-grading on \(L_R(\mathcal {G})\)) \(p_vxp_v\ne 0\), and \(p_vxp_v=\beta p_v+\sum \nolimits _{i=1}^n \lambda _ip_vs_{a_i}p_{B_i}p_v\). If there is a j such that \(s(a_j)\ne v\), then \(p_vs_{a_j}=0\), and so we may apply the induction hypothesis to \(p_vxp_v\). Therefore we are left with the case when \(v=s(a_i)\) for each i, which implies that each \(a_i\) is a closed path based on v.

Let \(c=a_1\), and write \(c=c_1\ldots c_k\). If c has an exit, then either there exist an index j and an edge \(e\ne c_{j+1}\) such that \(s(e)\in r(c_j)\), or there exists a sink w in \(r(c_i)\) for some i. In the first case, notice that, since each \(a_i\) is of the form \(a_i=c\overline{a_i}\), we have \(s_{c_1\ldots c_je}^*s_{a_i}=0\) for each i. Then
$$s_{c_1\ldots c_je}^*\,xp_v\,s_{c_1\ldots c_je}=\beta \,s_{c_1\ldots c_je}^*s_{c_1\ldots c_je}=\beta p_{r(e)}\ne 0.$$

Now suppose that there is a sink w in \(r(c_q)\) for some q. Then for each i, \(p_ws_{c_1...c_q}^*s_{a_i}p_{B_i}p_vs_{c_1...c_q}=p_ws_{a_i^{q+1}}...s_{a_i^{k_i}}p_{B_i}p_vs_{c_1...c_q}=0\) since w is not the source of any edge. Then \(p_ws_{c_1...c_q}^*xp_vs_{c_1...c_q}=\beta p_w p_{r(c_q)}=\beta p_w\ne 0\).

It remains to consider the case when c has no exit. Since c is based on v and has no exit, and each \(a_i\), for \(i>1\), is a closed path based on v, each \(a_i\) equals \(c^j\) for some j. Moreover, since c is a closed path without exit, \(a_i=d^{n_i}\), where d is a cycle without exit and \(n_i\in \mathbb {N}\). Therefore \(s_{a_1}xp_v\) has the desired form. \(\square \)

As a first example of potential applications of the reduction theorem, we show below that ultragraph Leavitt path algebras are semiprime (for Leavitt path algebras of graphs over fields, this is Proposition 2.3.1 in [1]). Recall that a ring R is said to be semiprime if, for every ideal I of R, \(I^2=0\) implies \(I=0\).

Corollary 3.3

Let \(\mathcal {G}\) be an arbitrary ultragraph and R be a unital commutative ring without zero divisors. Then the ultragraph Leavitt path algebra \(L_R(\mathcal {G})\) is semiprime.

Proof

Let I be a nonzero ideal and let x be a nonzero element of I. By the reduction theorem (Theorem 3.2), there are elements \(\mu =\mu _1\ldots \mu _n\) and \(\nu =\nu _1\ldots \nu _m\in L_R(\mathcal {G})\), with \(\mu _i, \nu _j\in \mathcal {G}^1\cup (\mathcal {G}^1)^*\) for each i and j, such that \(0\ne \mu x\nu \) and either \(\mu x\nu =\lambda p_A\), for some \(\lambda \in R\) and \(A\in \mathcal {G}^0\), or \(\mu x\nu =\sum \nolimits _{i=1}^k\lambda _i s_{c}^i\), where c is a cycle without exit. Note that \(\mu x\nu \in I\). If \(\mu x\nu =\lambda p_A\), then, since \(p_A\) is an idempotent and R has no zero divisors, \((\mu x\nu )^2=\lambda ^2p_A\ne 0\). If \(\mu x\nu =\sum \nolimits _{i=1}^k\lambda _i s_{c}^i\), then, by the \(\mathbb {F}\)-grading (see Theorem 2.8), we have that \((\mu x\nu )^2\ne 0\). In both cases \(0\ne (\mu x\nu )^2\in I^2\), so \(I^2\ne 0\), as desired. \(\square \)

4 Algebraic branching systems and the induced representations

In this section, we start the study of representations of ultragraph Leavitt path algebras via branching systems. Motivated by the relations that define an ultragraph Leavitt path algebra, we get the following definition.

Definition 4.1

Let \(\mathcal {G}\) be an ultragraph, X be a set and let \(\{R_e,D_A\}_{e\in \mathcal {G}^1,A\in \mathcal {G}^0}\) be a family of subsets of X. Suppose that

1. \(R_e\cap R_f =\emptyset \), if \(e \ne f \in \mathcal {G}^1\);

2. \(D_\emptyset =\emptyset , \ D_A \cap D_B= D_{A \cap B}\) and \(D_A \cup D_B= D_{A \cup B}\), for all \(A, B \in \mathcal {G}^0\);

3. \(R_e\subseteq D_{s(e)}\), for all \(e\in \mathcal {G}^1\);

4. \(D_v= \bigcup \nolimits _{e \in s^{-1}(v)}R_e\), if \(0<\vert s^{-1}(v) \vert <\infty \); and

5. for each \(e\in \mathcal {G}^1\), there exist two bijective maps \(f_e:D_{r(e)}\rightarrow R_e\) and \(f_e^{-1}:R_e \rightarrow D_{r(e)}\) such that \(f_e\circ f_e^{-1}=\mathrm {id}_{R_e}\) and \(f_e^{-1}\circ f_e=\mathrm {id}_{D_{r(e)}}\).

We call \(\{R_e,D_A,f_e\}_{e \in \mathcal {G}^1,A \in \mathcal {G}^0}\) a \(\mathcal {G}\)-algebraic branching system on X or, shortly, a \(\mathcal {G}\)-branching system.
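For a finite ultragraph, every subset of \(G^0\) is a generalized vertex, and Condition (2) forces \(D_A=\bigcup _{v\in A}D_v\) together with \(D_v\cap D_w=D_\emptyset =\emptyset \) for \(v\ne w\). The conditions of Definition 4.1 can therefore be checked on finite data. The Python sketch below is an illustration under these observations; the function name `is_branching_system` and the dictionary encoding (each \(f_e\) given as a dictionary from \(D_{r(e)}\) to \(R_e\)) are our own assumptions.

```python
from itertools import combinations


def is_branching_system(vertices, source, rng, D, R, f):
    """Check Conditions (1)-(5) of Definition 4.1 for a finite ultragraph.

    D: vertex -> set D_v;  R: edge -> set R_e;
    f: edge -> dict encoding f_e : D_{r(e)} -> R_e.
    """
    def D_of(A):                        # D_A = union of the D_v, v in A
        return set().union(*(D[v] for v in A))

    # (1) the sets R_e are pairwise disjoint
    if any(R[e] & R[g] for e, g in combinations(R, 2)):
        return False
    # (2) for finite G^0 this amounts to the D_v being pairwise disjoint
    if any(D[v] & D[w] for v, w in combinations(vertices, 2)):
        return False
    # (3) R_e is contained in D_{s(e)}
    if any(not R[e] <= D[source[e]] for e in R):
        return False
    # (4) D_v is the union of the R_e with s(e) = v, whenever v emits edges
    for v in vertices:
        out = [e for e in R if source[e] == v]
        if out and D[v] != set().union(*(R[e] for e in out)):
            return False
    # (5) f_e is a bijection from D_{r(e)} onto R_e
    for e in R:
        fe = f[e]
        if set(fe) != D_of(rng[e]) or set(fe.values()) != R[e]:
            return False
        if len(set(fe.values())) != len(fe):    # injectivity
            return False
    return True


# Example: one edge e from v to {w}, where w is a sink, on X = {0, 1}.
vertices = {"v", "w"}
source = {"e": "v"}
rng = {"e": {"w"}}
D = {"v": {1}, "w": {0}}
R = {"e": {1}}
f = {"e": {0: 1}}   # f_e sends the point 0 of D_w to the point 1 of R_e
```

Enlarging \(D_v\) to \(\{0,1\}\) makes it overlap \(D_w\), so Condition (2) fails.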

Given any ultragraph \(\mathcal {G}\), we deal with the existence of branching systems associated to \(\mathcal {G}\) in the proposition below.

Proposition 4.2

Let \(\mathcal {G}\) be an ultragraph such that \(s^{-1}(v)\) and r(e) are finite or countable for each vertex \(v\in G^0\) and each edge e. Then there exists a \(\mathcal {G}\)-branching system.

Proof

Let \(X=[0,1)\times (\mathcal {G}^1\cup G^0)\). For each edge e define \(R_e=[0,1)\times \{e\}\). For each sink \(u\in G^0\) define \(D_u=[0,1)\times \{u\}\), and for each non-sink \(v\in G^0\) let \(D_v=\bigcup \nolimits _{e\in s^{-1}(v)}R_e=[0,1)\times s^{-1}(v)\). Define \(D_\emptyset =\emptyset \) and, for each non-empty \(A \in \mathcal {G}^0\), let

It is easy to see that \(\{R_e,D_A\}_{e \in \mathcal {G}^1,A \in \mathcal {G}^0}\) satisfies Conditions (1)–(4) of Definition 4.1. We prove that Condition (5) is satisfied.

Fix \(e \in \mathcal {G}^1\). We have to construct \(f_e\) and \(f_e^{-1}\) that satisfy Condition (5). Since \(D_{r(e)}=\bigcup \nolimits _{v\in r(e)}D_v\), and r(e) and each \(s^{-1}(v)\) are finite or countable, we have \(D_{r(e)}=[0,1)\times J\), where J is a finite or countable set. If J is finite, let \(J=\{c_1,\ldots ,c_n\}\), and for each \(i\in \{1,\ldots ,n\}\) define \(F_i:[\frac{i-1}{n},\frac{i}{n})\times \{e\}\rightarrow [0,1)\times \{c_i\}\) by \(F_i(x,e)=(nx-i+1,c_i)\), which is bijective. Piecing together the \(F_i\)’s yields \(f_e^{-1}\), and piecing together the \(F_i^{-1}\)’s yields \(f_e\). If J is infinite, let \(J=\{c_i\}_{i\in \mathbb {N}}\), and for each \(i\in \mathbb {N}\) define \(F_i:[1-\frac{1}{i}, 1-\frac{1}{i+1})\times \{e\}\rightarrow [0,1)\times \{c_i\}\) by \(F_i(x,e)=((i+1)ix-(i+1)(i-1),c_i)\), which is bijective. Again, piecing together the \(F_i\)’s yields \(f_e^{-1}\), and piecing together the \(F_i^{-1}\)’s yields \(f_e\). \(\square \)
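The piecewise affine maps in the proof above can be checked with exact rational arithmetic. In the following Python sketch (the function names are ours, for illustration), only the first coordinate is tracked, the label \(c_i\) is represented by its index i, and \(\mathbb {N}=\{1,2,\ldots \}\) is assumed in the countable case. The maps named `f_inv_*` go from \(R_e\) to \(D_{r(e)}\) (the pieces \(F_i\)), and the maps named `f_*` are their inverses.

```python
import math
from fractions import Fraction


def f_inv_finite(x: Fraction, n: int):
    """R_e -> D_{r(e)} when J = {c_1, ..., c_n}: the piece F_i on [(i-1)/n, i/n).

    The point (x, e) is sent to (n*x - i + 1, c_i); c_i is returned as its index i.
    """
    i = math.floor(x * n) + 1
    return n * x - i + 1, i


def f_finite(y: Fraction, i: int, n: int) -> Fraction:
    """D_{r(e)} -> R_e: inverse of F_i, sending (y, c_i) to ((y + i - 1)/n, e)."""
    return (y + i - 1) / n


def f_inv_countable(x: Fraction):
    """R_e -> D_{r(e)} when J = {c_1, c_2, ...}: the piece F_i on [1 - 1/i, 1 - 1/(i+1)).

    x lies in that interval exactly when i = floor(1/(1 - x)).
    """
    i = math.floor(1 / (1 - x))
    return (i + 1) * i * x - (i + 1) * (i - 1), i


def f_countable(y: Fraction, i: int) -> Fraction:
    """D_{r(e)} -> R_e: inverse of F_i."""
    return (y + (i + 1) * (i - 1)) / ((i + 1) * i)
```

Using `Fraction` rather than floating point lets the identities \(f_e\circ f_e^{-1}=\mathrm {id}_{R_e}\) and \(f_e^{-1}\circ f_e=\mathrm {id}_{D_{r(e)}}\) be tested exactly on sample points.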

Remark 4.3

The result above extends Theorem 3.1 in [20] to uncountable graphs.

Remark 4.4

Another very important example of a branching system associated with an ultragraph is the branching system associated with its algebraic boundary path space X. In fact, this branching system is essentially described by the partial action reviewed in Section 2.1 (where we look at the domain sets associated with edges and their ranges, and the corresponding bijective maps; see [27, Remark 4.3] for details). Furthermore, this branching system is key in describing permutative irreducible representations, see [27, Theorem 6.9].

Next we describe how to construct representations of ultragraph Leavitt path algebras from branching systems.

Let \(\mathcal {G}\) be an ultragraph, R be a commutative unital ring, and X be a \(\mathcal {G}\)-algebraic branching system. Denote by M the set of all maps from X to R. Notice that M is an R-module with the usual operations. For a subset \(Y\subseteq X\), we write \(1_Y\) to denote the characteristic function of Y, that is, \(1_Y(x)=1\) if \(x\in Y\), and \(1_Y(x)=0\) otherwise. (Clearly \(1_Y\) is an element of M.) Finally, we denote by End(M) the set of all R-module homomorphisms from M to M.

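For each \(e\in \mathcal {G}^1\) and \(\phi \in M\) define
\[S_e(\phi )(x)=\begin{cases}\phi (f_e^{-1}(x)), & x\in R_e,\\ 0, & \text {otherwise,}\end{cases}\qquad S_e^*(\phi )(x)=\begin{cases}\phi (f_e(x)), & x\in D_{r(e)},\\ 0, & \text {otherwise,}\end{cases}\]
and for each \(A\in \mathcal {G}^0\) and \(\phi \in M\) define
\[P_A(\phi )=\phi \cdot 1_{D_A}.\]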
Clearly, for each \(e\in \mathcal {G}^1\) and \(A\in \mathcal {G}^0\), we have that \(S_e, S_e^*, \text { and } P_A\in End(M)\).

Remark 4.5

To simplify our notation, for each \(e\in \mathcal {G}^1\) and \(\phi \in M\), we write \(S_e(\phi )=\phi \circ f_{e}^{-1}\) and \(S_e^*(\phi )=\phi \circ f_e\) instead of \(S_e(\phi )=\phi \circ f_{e}^{-1}\cdot 1_{R_e}\) and \(S_e^*(\phi )=\phi \circ f_e \cdot 1_{D_{r(e)}}\).

We end the section by describing the representations induced by branching systems associated to an ultragraph \(\mathcal {G}\).

Proposition 4.6

Let \(\mathcal {G}\) be an ultragraph and let \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) be a \(\mathcal {G}\)-algebraic branching system on X. Then there exists a unique representation \(\pi :L_R(\mathcal {G}) \rightarrow End(M)\) such that \(\pi (s_e)= S_e\), \(\pi (s_e^*)=S_e^*\), and \(\pi (p_A)= P_A\), or equivalently, such that \(\pi (s_e)(\phi )=\phi \circ f_{e}^{-1}\), \(\pi (s_e^*)(\phi )=\phi \circ f_e\) and \(\pi (p_A)(\phi )=\phi 1_{D_A}\), for each \(e\in \mathcal {G}^1\), \(A\in \mathcal {G}^0\), and \(\phi \in M\).

Proof

By the universal property of ultragraph Leavitt path algebras, we only need to verify that the family \(\{S_e, S_e^*, P_A\}_{e\in \mathcal {G}^1, A \in \mathcal {G}^0}\) satisfies relations 1 to 4 in Definition 2.4. We show below how to verify relation 4 and leave the others to the reader.

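Note that for \(e\in \mathcal {G}^1\) and \(\phi \in M\), we get \(\pi (s_e)\pi (s_e^*)(\phi )=\pi (s_e)(\phi \circ f_e)=\phi \circ f_e\circ f_e^{-1}=\phi \cdot 1_{R_e}\). Now, let v be a vertex such that \(0<|s^{-1}(v)|<\infty \). Since the sets \(R_e\), \(e\in s^{-1}(v)\), are pairwise disjoint and their union is \(D_v\) (Conditions (1) and (4) of Definition 4.1), for each \(\phi \in M\) we have that
\[\pi (p_v)(\phi )=\phi \cdot 1_{D_v}=\sum \nolimits _{e\in s^{-1}(v)}\phi \cdot 1_{R_e}=\sum \nolimits _{e\in s^{-1}(v)}\pi (s_e)\pi (s_e^*)(\phi ),\]
and relation 4 in Definition 2.4 is proved. \(\square \)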

Remark 4.7

In the previous proposition, we can also take M to be the R-module \(M_s\) of all maps from X to R with finite support, instead of all maps from X to R. Since \(M_s\) is a submodule of M and the maps \(f_e\) in a branching system are bijections, the operators \(S_e\), \(S_e^*\) and \(P_A\) map \(M_s\) into itself; hence the representation on \(End(M_s)\) induced by a branching system can be obtained by restricting the induced representation on End(M) to \(M_s\).
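A minimal sketch of the operators \(S_e\), \(S_e^*\) and \(P_A\) acting on finitely supported maps, as in the remark above, assuming \(R=\mathbb {Z}\) and a finite branching system. A map \(X\rightarrow R\) is stored as a dictionary {point: value} with zero values dropped, and \(f_e\) as a dictionary from \(D_{r(e)}\) to \(R_e\). The names `S`, `S_star`, `P` and the toy data are hypothetical. The test checks \(\pi (s_e)\pi (s_e^*)=\pi (p_v)\) and \(\pi (s_e^*)\pi (s_e)=\pi (p_{r(e)})\) for an ultragraph with one edge e, s(e)=v and r(e)={w,u}, with \(D_w=\{0\}\), \(D_u=\{1\}\), \(D_v=R_e=\{2,3\}\).

```python
# A finitely supported map X -> R is a dict {point: coefficient}; zero values are omitted.

def S(f_e, phi):
    """S_e(phi) = (phi o f_e^{-1}) * 1_{R_e}: the value at x in D_{r(e)} moves to f_e(x)."""
    return {f_e[x]: c for x, c in phi.items() if x in f_e}


def S_star(f_e, phi):
    """S_e^*(phi) = (phi o f_e) * 1_{D_{r(e)}}: the value at y in R_e moves to f_e^{-1}(y)."""
    f_inv = {y: x for x, y in f_e.items()}
    return {f_inv[y]: c for y, c in phi.items() if y in f_inv}


def P(D_A, phi):
    """P_A(phi) = phi * 1_{D_A}."""
    return {x: c for x, c in phi.items() if x in D_A}
```

Because \(f_e\) is injective, no two points collide in `S`, so the dictionary comprehension really computes \(\phi \circ f_e^{-1}\) extended by zero.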

5 Faithful representations of ultragraph Leavitt path algebras via Branching Systems

In this section, we describe faithfulness of the representations induced by branching systems in terms of dynamical properties of the branching systems and in terms of combinatorial properties of the ultragraph. Our first result follows below, linking faithfulness of the representations with the dynamics of the branching systems.

Theorem 5.1

Let \(\mathcal {G}\) be an ultragraph, and \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) be a branching system such that \(D_A\ne \emptyset \) for each \(\emptyset \ne A\in \mathcal {G}^0\). Then the induced representation of \(L_R(\mathcal {G})\) (from Proposition 4.6) is faithful if, and only if, for each closed cycle without exit \(c=c_1...c_k\), and for each finite set \(F\subseteq \mathbb {N}\), there exists an element \(z_0\in D_{r(c)}\) such that \( f_c^n(z_0)\ne z_0\), for each \(n\in F\) (where \(f_c=f_{c_1}\circ \cdots \circ f_{c_k}\)).

Proof

Let \(\pi :L_R(\mathcal {G})\rightarrow End(M)\) be the homomorphism induced by Proposition 4.6. We prove first that if the condition described in the theorem holds then the representation is faithful.

Let \(0\ne x\in L_R(\mathcal {G})\). By Theorem 3.2 there are elements \(y,z\in L_R(\mathcal {G})\) such that \(0\ne y x z\) and either \(y x z=\lambda p_A\), for some \(A\in \mathcal {G}^0\), or \(y x z=\sum \nolimits _{i=1}^m\lambda _i s_{c}^i\), where c is a cycle without exit.

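If \(yxz=\lambda p_A\), for some \(A\in \mathcal {G}^0\), then \(\lambda \ne 0\) (because \(yxz\ne 0\)), and \(\pi (x)\ne 0\) since
\[\pi (y)\pi (x)\pi (z)(1_{D_A})=\lambda \,\pi (p_A)(1_{D_A})=\lambda \,1_{D_A}\ne 0,\]
as \(D_A\ne \emptyset \).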
Suppose that \(yxz=\sum \nolimits _{i=1}^m\lambda _i s_{c}^i\), where c is a cycle without exit. Let j be the least of the elements i such that \(\lambda _i\ne 0\). Define \(\mu =(s_c^j)^*yxz\), and note that \(\mu =\lambda _j p_v+\sum \nolimits _{i=1}^{m-j} \widetilde{\lambda _i}s_{c^i}\), where \(\widetilde{\lambda _i}=\lambda _{j+i}\) and \(v=r(c)\).

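Let \(z_0\in D_v=D_{r(c)}\) be such that \(f_c^i(z_0)\ne z_0\) for each \(i\in \{1,\ldots ,m-j\}\) (such a \(z_0\) exists by hypothesis). Let \(\delta _{z_0}\in M\) be the map defined by \(\delta _{z_0}(x)=1\) if \(x=z_0\), and \(\delta _{z_0}(x)=0\) otherwise. Notice that, for each \(i\in \{1,\ldots ,m-j\}\), we have
\[\pi (s_{c^i})(\delta _{z_0})=\delta _{z_0}\circ f_c^{-i}=\delta _{f_c^i(z_0)},\quad \text {so that}\quad \pi (s_{c^i})(\delta _{z_0})(z_0)=0.\]
Therefore, since \(\pi (p_v)(\delta _{z_0})=\delta _{z_0}\),
\[\pi (\mu )(\delta _{z_0})(z_0)=\lambda _j\,\delta _{z_0}(z_0)+\sum \nolimits _{i=1}^{m-j}\widetilde{\lambda _i}\,\delta _{f_c^i(z_0)}(z_0)=\lambda _j\ne 0,\]
from where \(\pi (\mu )\ne 0\) and hence \(\pi (x)\ne 0\) (since \(\mu =(s_c^j)^*yxz\)).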

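For the converse, suppose that the condition fails. Then there exist a cycle c without exit, based at w, and a finite set \(F\subseteq \mathbb {N}\) such that each \(z\in D_w\) satisfies \(f_c^n(z)=z\) for some \(n\in F\). Let \(j_0\) be the least common multiple of the elements of F. Since \(f_c^n(z)=z\) implies \(f_c^{kn}(z)=z\) for every \(k\ge 1\), we get \(f_c^{j_0}(z)=z\) for every \(z \in D_w\). Then we have that \(\pi (s_{c^{j_0}})=\pi (p_w)\). To see that \(p_w\ne s_{c^{j_0}}\), use Theorem 2.8 and the \(\mathbb {F}\)-grading of \(D\rtimes _\beta \mathbb {F}\). So, \(\pi \) is not injective.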

\(\square \)
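The least common multiple step in the converse can be seen concretely when \(D_{r(c)}\) is finite. For a cycle without exit, \(D_{r(c)}=R_{c_1}\) by Condition (4) of Definition 4.1, so \(f_c\) is a permutation of \(D_{r(c)}\); every point is then periodic, and taking F to be the (finite) set of orbit lengths shows that the condition of Theorem 5.1 fails. The Python sketch below uses hypothetical helper names and a made-up permutation with orbits of lengths 3 and 2; it assumes Python 3.9 or later for `math.lcm` with several arguments.

```python
from math import lcm


def iterate(f, z, n):
    """Return f^n(z) for a map f given as a dict."""
    for _ in range(n):
        z = f[z]
    return z


def orbit_length(f, z):
    """Least n >= 1 with f^n(z) = z (f is a permutation of a finite set)."""
    n, y = 1, f[z]
    while y != z:
        y, n = f[y], n + 1
    return n


def condition_holds(f, F):
    """Condition of Theorem 5.1 for one cycle without exit, with f = f_c on D_{r(c)}:
    some z0 satisfies f^n(z0) != z0 for every n in F."""
    return any(all(iterate(f, z, n) != z for n in F) for z in f)


def common_period(f):
    """lcm of the orbit lengths; f^N is the identity for this N."""
    return lcm(*{orbit_length(f, z) for z in f})
```

With \(f=\{0\mapsto 1,1\mapsto 2,2\mapsto 0,3\mapsto 4,4\mapsto 3\}\), the condition fails for \(F=\{2,3\}\), while \(f^6\) is the identity, mirroring the choice of \(j_0\) in the proof.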

For ultragraphs that satisfy Condition (L), the above theorem has a simplified version. Recall that an ultragraph \(\mathcal {G}\) satisfies Condition (L) if every closed path in \(\mathcal {G}\) has an exit.

Corollary 5.2

Let \(\mathcal {G}\) be an ultragraph satisfying Condition (L), and let \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) be a branching system with \(D_A\ne \emptyset \), for each \(\emptyset \ne A\in \mathcal {G}^0\). Then the induced representation of \(L_R(\mathcal {G})\) (from Proposition 4.6) is faithful.

For ultragraphs such that \(s^{-1}(v)\) and r(e) are finite or countable (for each vertex v and each edge e), we also get the converse of the previous corollary. This is our next result.

Theorem 5.3

Let \(\mathcal {G}\) be an ultragraph such that \(s^{-1}(v)\) and r(e) are finite or countable for each \(v\in G^0\) and \(e\in \mathcal {G}^1\). Then \(\mathcal {G}\) satisfies Condition (L) if, and only if, for every algebraic branching system \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) with \(D_A\ne \emptyset \), for each \(\emptyset \ne A\in \mathcal {G}^0\), the induced representation of \(L_R(\mathcal {G})\) (from Proposition 4.6) is faithful.

Proof

By Corollary 5.2, we only need to prove the converse. We do this by proving the contrapositive, i.e., we prove that if \(\mathcal {G}\) does not satisfy Condition (L) then there exists an algebraic branching system \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) with \(D_A\ne \emptyset \), for each non-empty \(A\in \mathcal {G}^0\), such that the representation induced by Proposition 4.6 is not faithful.

Suppose that \(\mathcal {G}\) does not satisfy Condition (L). Then there exists a cycle \(\alpha =(\alpha _1,\dots ,\alpha _n)\) such that \(\vert r(\alpha _i) \vert =1\) and \(s^{-1}(s(\alpha _i))=\{\alpha _i\}\), for all \(i=1,\dots ,n\), and \(\alpha _i \ne \alpha _j\) if \(i \ne j\).

By the proof of Proposition 4.2, there is a \(\mathcal {G}\)-branching system on \([0,1)\times (G^0\cup \mathcal {G}^1)\) such that \(D_{r(\alpha _{i-1})}=D_{s(\alpha _i)}=R_{\alpha _i}=[0,1)\times \{\alpha _i\}\), and \(f_{\alpha _i}:D_{r(\alpha _i)}\rightarrow R_{\alpha _i}\) is the bijective affine map \(f_{\alpha _i}(x,\alpha _{i+1})=(x,\alpha _i)\), for each \(i=1,\dots ,n\) (indices taken modulo n, so that \(\alpha _0=\alpha _n\) and \(\alpha _{n+1}=\alpha _1\)). Notice that \(f_{\alpha _1}\circ f_{\alpha _2}\circ \cdots \circ f_{\alpha _n}=\mathrm {id}_{R_{\alpha _1}}\). Let \(\pi \) be the representation associated with this branching system (as in Proposition 4.6). Then, for each \(\phi \in M\), we have
\[\pi (s_{\alpha _n}^*)\cdots \pi (s_{\alpha _1}^*)(\phi )=\phi \circ f_{\alpha _1}\circ \cdots \circ f_{\alpha _n}=\phi \cdot 1_{R_{\alpha _1}}=\phi \cdot 1_{D_{s(\alpha _1)}}=\pi (p_{s(\alpha _1)})(\phi ),\]
and hence \(\pi (s_{\alpha _n}^*)...\pi (s_{\alpha _1}^*)=\pi (p_{s(\alpha _1)})\).

To see that \(\pi \) is not faithful, it remains to show that \(s_{\alpha _n} ^*...s_{\alpha _1}^*\ne p_{s(\alpha _1)}\). For this, we construct another branching system as follows. Let \(\{D_A\}_{A\in \mathcal {G}^0}\) and \(\{R_e\}_{e\in \mathcal {G}^1}\) be as above, keep \(f_e\) for \(e\notin \{\alpha _1,\ldots ,\alpha _n\}\), and choose bijections \({\widetilde{f}}_{\alpha _i}:D_{r(\alpha _i)}\rightarrow R_{\alpha _i}\), \(i=1,\ldots ,n\), such that \({\widetilde{f}}_{\alpha _1}\circ \cdots \circ {\widetilde{f}}_{\alpha _n}\ne \mathrm {id}_{R_{\alpha _1}}\). Let \(x_0\in R_{\alpha _1}\) be such that \(({\widetilde{f}}_{\alpha _1}\circ \cdots \circ {\widetilde{f}}_{\alpha _n})(x_0)\ne x_0\), and choose an element \(\varphi \in M\) such that \(\varphi (x_0)=1\) and \(\varphi (({\widetilde{f}}_{\alpha _1}\circ \cdots \circ {\widetilde{f}}_{\alpha _n})(x_0))=0\). Let \({\widetilde{\pi }}\) be the representation of \(L_R(\mathcal {G})\) obtained by Proposition 4.6 from this branching system. Since \(x_0\in R_{\alpha _1}=D_{s(\alpha _1)}\), we get
\[{\widetilde{\pi }}(s_{\alpha _n}^*)\cdots {\widetilde{\pi }}(s_{\alpha _1}^*)(\varphi )(x_0)=\varphi \big (({\widetilde{f}}_{\alpha _1}\circ \cdots \circ {\widetilde{f}}_{\alpha _n})(x_0)\big )=0\ne 1=\varphi (x_0)={\widetilde{\pi }}(p_{s(\alpha _1)})(\varphi )(x_0),\]
and so \({\widetilde{\pi }}(s_{\alpha _n}^*)...{\widetilde{\pi }}(s_{\alpha _1}^*)\ne {\widetilde{\pi }}(p_{s(\alpha _1)})\). It follows that \(s_{\alpha _n} ^*...s_{\alpha _1}^*\ne p_{s(\alpha _1)}\), and hence the representation \(\pi \) is not faithful. \(\square \)

We end this section showing that it is always possible to construct faithful representations of \(L_R(\mathcal {G})\) arising from branching systems.

Proposition 5.4

Let \(\mathcal {G}\) be an ultragraph such that \(s^{-1}(v)\) and r(e) are finite or countable for each \(v\in G^0\) and \(e\in \mathcal {G}^1\). Then there exists a \(\mathcal {G}\)-branching system \(\{R_e, D_A, f_e\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) such that the representation induced from Proposition 4.6 is faithful.

Proof

Let \(\{R_e,D_A,f_e\}_{e\in \mathcal {G}^1, A \in \mathcal {G}^0}\) be the \(\mathcal {G}\)-branching system obtained in Proposition 4.2; note that \(D_A\ne \emptyset \) for each non-empty \(A\in \mathcal {G}^0\). Following Theorem 5.1, all we need to do is redefine some of the maps \(f_e:D_{r(e)}\rightarrow R_e\) to get a branching system such that for each closed cycle c without exit, and for each finite set \(F\subseteq \mathbb {N}\), there is an element \(z_0\in D_{r(c)}\) such that \(f_c^i(z_0)\ne z_0\) for each \(i\in F\). Let \(c=c_1...c_n\) be a closed cycle without exit, where the \(c_i\) are edges. Recall from the proof of Proposition 4.2 that \(R_e=[0,1)\times \{e\}\) for each edge e, and \(D_v=\bigcup \nolimits _{e\in s^{-1}(v)}R_e=[0,1)\times s^{-1}(v)\) for each non-sink v. Since \(c=c_1...c_n\) is a closed cycle without exit, \(D_{r(c_i)}=[0,1)\times \{c_{i+1}\}\) for each \(i\in \{1,\ldots ,n-1\}\), and \(D_{r(c_n)}=[0,1)\times \{c_1\}\). Now, let \(\theta \in (0,1)\) be an irrational number. Define, for each \(i\in \{1,\ldots ,n-1\}\), \(f_{c_i}:[0,1)\times \{c_{i+1}\}\rightarrow [0,1)\times \{c_i\}\) by \(f_{c_i}(x,c_{i+1})=((x+\theta )\bmod 1, c_i)\), and define \(f_{c_n}:[0,1)\times \{c_1\}\rightarrow [0,1)\times \{c_n\}\) by \(f_{c_n}(x,c_1)=((x+\theta )\bmod 1, c_n)\). Let \(f_c:D_{r(c_n)}\rightarrow R_{c_1}\) be the composition \(f_c=f_{c_1}\circ \cdots \circ f_{c_n}\), and notice that \(f_c^m(x,c_1)=((x+mn\theta )\bmod 1,c_1)\) for each \(x\in [0,1)\) and each \(m\ge 1\). Since \(mn\theta \) is irrational, it is not an integer, and therefore \(f_c^m(x,c_1)\ne (x,c_1)\) for each \(x\in [0,1)\) and each \(m\ge 1\). Hence the condition of Theorem 5.1 holds, with any \(z_0\in D_{r(c)}\). \(\square \)
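The iterates of \(f_c\) in the proof above can be written out explicitly. The following computation uses only the definition of the maps \(f_{c_i}\) (each adds \(\theta \) modulo 1) and the fact that \(((a \bmod 1)+\theta )\bmod 1=(a+\theta )\bmod 1\):

```latex
\[
\begin{aligned}
f_c(x,c_1) &= \big((x+n\theta) \bmod 1,\; c_1\big),\\
f_c^m(x,c_1) &= \big((x+mn\theta) \bmod 1,\; c_1\big)
             = \big(x+mn\theta-k_m,\; c_1\big),
\qquad k_m=\lfloor x+mn\theta\rfloor\in\mathbb{Z}.
\end{aligned}
\]
```

If \(f_c^m(x,c_1)=(x,c_1)\) for some \(m\ge 1\), then \(mn\theta =k_m\), so \(\theta =k_m/(mn)\) would be rational, a contradiction. Thus no point of \(D_{r(c)}\) is periodic under \(f_c\), which is stronger than what Theorem 5.1 requires.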

6 Permutative representations

The goal of this section is to show that under a certain condition over an ultragraph \(\mathcal {G}\), each R-algebra homomorphism \(\pi : L_R(\mathcal {G})\rightarrow A\) has a sub-representation associated to it which is equivalent to a representation induced by a \(\mathcal {G}\)-algebraic branching system.

Recall that, given an R-algebra A (where R is a unital commutative ring), there exist an R-module N and an injective R-algebra homomorphism \(\varphi :A\rightarrow End_R(N)\) (see, for example, Section 5 of [20]). Composing a homomorphism from \(L_R(\mathcal {G})\) to an R-algebra A with the homomorphism \(\varphi \), we get a homomorphism from \(L_R(\mathcal {G})\) to \(End_R(N)\). So we will only consider representations of \(L_R(\mathcal {G})\) with image in \(End_R(N)\), for some R-module N.

Next we set up notation and make a few remarks regarding a representation (an R-homomorphism) \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\), where N is an R-module.

For each \(e\in \mathcal {G}^1\) define \(N_e=\pi (s_es_e^*)(N)\), and for each \(A\in \mathcal {G}^0\) define \(N_A=\pi (p_A)(N)\). Notice that \(N_e\) and \(N_A\) are sub-modules of N and:

-

\(N_e\cap N_f=\{0\}\) for each \(e\ne f\);

-

\(N_e\subseteq N_{s(e)}\) for each \(e\in \mathcal {G}^1\);

-

for each non-sink \(v\in \mathcal {G}^0\) we have \(N_v=\left( \bigoplus \nolimits _{s(e)=v}N_e\right) \bigoplus O_v\), where \(O_v\) is a submodule of \(N_v\). If \(|s^{-1}(v)|<\infty \) then \(O_v=\{0\}\);

-

for each \(A\in \mathcal {G}^0\), \(N_A=\left( \bigoplus \nolimits _{v\in A}N_v\right) \bigoplus O_A\), where \(O_A\) is a submodule of \(N_A\). If A is finite then \(O_A=\{0\}\).

-

\(\pi (s_e^*)_{|_{N_e}}:N_e\rightarrow N_{r(e)}\) is an isomorphism for each \(e\in \mathcal {G}^1\), with inverse \(\pi (s_e)_{|_{N_{r(e)}}}:N_{r(e)}\rightarrow N_e\).

Permutative representations were originally defined by Bratteli and Jorgensen in the context of representations of Cuntz algebras on Hilbert spaces (see [7]). For Bratteli and Jorgensen, a permutative representation is one for which there exists an orthonormal basis of the Hilbert space that is taken to itself by the operators which define the representation (see Section 2 in [7]). Without knowledge of the Bratteli–Jorgensen definition, the authors defined permutative representations, originally in the context of graph C*-algebras and then for Leavitt path algebras and ultragraph C*-algebras (see [16, 20, 21]), as representations that satisfy Condition (B2B). (In the context of Leavitt path algebras, (B2B) is the algebraization/extension of the Bratteli–Jorgensen condition.) We define this condition in the context of ultragraph Leavitt path algebras below.

Definition 6.1

Let \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) be an R-homomorphism. We say that \(\pi \) is permutative if there exist bases B of N, \(B_v\) of \(N_v\), \(B_{r(e)}\) of \(N_{r(e)}\) and \(B_e\) of \(N_e\), for each \(v\in G^0\) and \(e\in \mathcal {G}^1\), such that:

1. \(B_v\subseteq B\) and \(B_{r(e)}\subseteq B\) for each \(v\in G^0\) and \(e\in \mathcal {G}^1\),

2. \(B_v\subseteq B_{r(e)}\) for each \(v\in r(e)\),

3. \(B_e\subseteq B_{s(e)}\) for each \(e\in \mathcal {G}^1\),

4. \(\pi (s_e)(B_{r(e)})=B_e\) for each \(e\in \mathcal {G}^1\) (B2B).

Remark 6.2

The last condition of the previous definition is equivalent to \(\pi (s_e^*)(B_e)=B_{r(e)}\), since the map \(\pi (s_e):N_{r(e)}\rightarrow N_e\) is an R-isomorphism, with inverse \(\pi (s_e^*):N_e\rightarrow N_{r(e)}\).

Notice that associated with a permutative representation \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) there are bases of N, \(N_v\), \(N_{r(e)}\), and \(N_e\), for every \(v\in G^0\) and \(e\in \mathcal {G}^1\). From these bases, we can build a basis of \(N_A\), for every \(A\in \mathcal {G}^0\). More precisely, for each \(A \in \mathcal {G}^0\), following Lemma 2.3, write \(A=\left( \bigcap \nolimits _{e\in X_1}r(e) \right) \cup ...\cup \left( \bigcap \nolimits _{e\in X_n}r(e) \right) \bigcup F\), and define
\[B_A=\left( \bigcap \nolimits _{e\in X_1}B_{r(e)} \right) \cup \cdots \cup \left( \bigcap \nolimits _{e\in X_n}B_{r(e)} \right) \cup \bigcup \nolimits _{v\in F}B_v.\]
We then have the following.

Lemma 6.3

Let \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) be a permutative representation and \(A \in \mathcal {G}^0\). Then the set \(B_A\) is well defined (that is, it does not depend on the description of A) and is a basis of \(N_A\). Moreover, if \(D\in \mathcal {G}^0\) then \(B_{A\cup D}=B_A\cup B_D\) and \(B_{A\cap D}=B_A\cap B_D\).

Proof

First we prove that \(B_A\) is a basis of \(N_A=\pi (p_A)(N)\). For each \(h\in B_v\), with \(v\in F\), we have \(\pi (p_A)(\pi (p_v)(h))=\pi (p_v)(h)=h\) and so \(h\in N_A\). Moreover, for \(h\in \bigcap \nolimits _{e\in X_i}B_{r(e)}\), we get \(\pi (p_A)(\pi (p_{\bigcap \nolimits _{e\in X_i}r(e)})(h))=\pi (p_{\bigcap \nolimits _{e\in X_i}r(e)})(h)=h\) and so \(h\in N_A\). Therefore, \(B_A\subseteq N_A\). Next we show that \(span(B_A)=N_A\). Notice that, by definition, \(N_{r(e)}=span(B_{r(e)})\) for each edge e, and \(N_v=span(B_v)\) for each vertex v. To show that \(span(B_A)=N_A\) for any \(A\in \mathcal {G}^0\) we first need to prove the following:

Claim 1: For each finite set \(X\subseteq \mathcal {G}^1\) it holds that \(span(\bigcap \nolimits _{e\in X}B_{r(e)})=\bigcap \nolimits _{e\in X}span(B_{r(e)})\).

The inclusion \(span(\bigcap \nolimits _{e\in X}B_{r(e)})\subseteq \bigcap \nolimits _{e\in X}span(B_{r(e)})\) is obvious. To prove the other inclusion, let e, f be two edges, and let \(h\in span(B_{r(e)})\cap span(B_{r(f)})\). Write \(h=\sum \nolimits _{i=1}^n\alpha _i h_i=\sum \nolimits _{j=1}^m\beta _j k_j\), with \(h_i\in B_{r(e)}\) for each i, \(k_j\in B_{r(f)}\) for each j, and all \(\alpha _i\) and \(\beta _j\) nonzero. Then \(\sum \nolimits _{i=1}^n\alpha _ih_i-\sum \nolimits _{j=1}^m\beta _j k_j=0\). If \(h_i \notin \{k_1,\ldots ,k_m\}\) for some i then \(\alpha _i=0\) (since \(\{h_1,\ldots ,h_n\}\cup \{k_1,\ldots ,k_m\}\subseteq B\) and B is linearly independent), which is impossible since \(\alpha _i\ne 0\) for each i. So we get \(\{h_1,\ldots ,h_n\}\subseteq \{k_1,\ldots ,k_m\}\). By the same argument, applied to the \(k_j\), we obtain that \(\{k_1,\ldots ,k_m\}\subseteq \{h_1,\ldots ,h_n\}\). Therefore \(\{k_1,\ldots ,k_m\}= \{h_1,\ldots ,h_n\}\), and hence \(\{h_1,\ldots ,h_n\}\subseteq B_{r(e)}\cap B_{r(f)}\) and \(h\in span(B_{r(e)}\cap B_{r(f) })\). The claim now follows by induction on the cardinality of X.

We now prove that \(N_A=span(B_A)\). Since \(B_A\subseteq N_A\), it suffices to show that \(N_A\subseteq span(B_A)\).

Suppose first that \(A=\bigcap \nolimits _{e\in X_1}r(e)\cup \bigcap \nolimits _{f\in X_2}r(f)\). Then, using the defining relations \(p_{C\cap D}=p_Cp_D\) and \(p_{C\cup D}=p_C+p_D-p_{C\cap D}\) of \(L_R(\mathcal {G})\),
\[\pi (p_A)=\prod \nolimits _{e\in X_1}\pi (p_{r(e)})+\prod \nolimits _{f\in X_2}\pi (p_{r(f)})-\prod \nolimits _{e\in X_1}\pi (p_{r(e)})\prod \nolimits _{f\in X_2}\pi (p_{r(f)}).\]

Notice that \(\prod \nolimits _{e\in X_1}\pi (p_{r(e)})(N)\subseteq \pi (p_{r(e)})(N)=span(B_{r(e)})\) for each \(e\in X_1\), and so \(\prod \nolimits _{e\in X_1}\pi (p_{r(e)})(N)\subseteq \bigcap \nolimits _{e\in X_1}span(B_{r(e)})=span(\bigcap \nolimits _{e\in X_1}B_{r(e)})\subseteq span(B_A)\), where the equality follows from Claim 1. Similarly, we get \(\prod \nolimits _{f\in X_2}\pi (p_{r(f)})(N)\subseteq span(B_A)\) and \(\prod \nolimits _{e\in X_1}\pi (p_{r(e)})\prod \nolimits _{f\in X_2}\pi (p_{r(f)})(N)\subseteq span(B_A)\). Therefore \(N_A=\pi (p_A)(N)\subseteq span(B_A)\). The general case, that is, the case \(A=\left( \bigcap \nolimits _{e\in X_1}r(e) \right) \cup ...\cup \left( \bigcap \nolimits _{e\in X_n}r(e) \right) \bigcup F\), follows similarly, and we leave the details to the reader.

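Since the set B is linearly independent and \(B_A\subseteq B\), it follows that \(B_A\) is linearly independent, and hence \(B_A\) is a basis of \(N_A\). Furthermore, \(B_A=B\cap N_A\): indeed, if \(h\in B\cap N_A\) then \(h\in span(B_A)\), and since B is linearly independent and \(B_A\subseteq B\), this forces \(h\in B_A\). As \(B\cap N_A\) does not depend on the description of A, the set \(B_A\) is well defined.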

The last statement of the lemma follows directly from the definition of the sets \(B_A\), \(B_D\), \(B_{A\cup D}\) and \(B_{A\cap D}\), where \(A, D \in \mathcal {G}^0\). \(\square \)

For the next theorem, we recall the following definition:

Definition 6.4

Let \(\pi : L_R(\mathcal {G}) \rightarrow End_R(M)\) and \(\varphi : L_R(\mathcal {G}) \rightarrow End_R(N)\) be representations of \(L_R(\mathcal {G})\), where M and N are R-modules. We say that \(\pi \) is equivalent to \(\varphi \) if there exists an R-module isomorphism \(T:M\rightarrow N\) such that
\[T\circ \pi (a)=\varphi (a)\circ T,\]
that is, the square formed by \(\pi (a)\), \(\varphi (a)\) and T commutes, for each \(a\in L_R(\mathcal {G})\).

It is, in general, not true that each representation of \(L_R(\mathcal {G})\) is equivalent to a representation induced from a \(\mathcal {G}\)-algebraic branching system. See, for example, Remark 5.2 of [20]. However, we get the following theorem:

Theorem 6.5

Let \(\varphi :L_R(\mathcal {G})\rightarrow End_R(N)\) be a permutative homomorphism, and let B be a basis of N satisfying the conditions of Definition 6.1. Suppose that \(\varphi (p_A)(h_x)=0\) for each \(h_x\in B{\setminus } B_A\) and \(A\in \mathcal {G}^0\), and that \(\varphi (s_e^*)(h_x)=0\) for each edge e and \(h_x\in B{\setminus } B_e\). Then there exists a \(\mathcal {G}\)-algebraic branching system X such that the representation \(\pi :L_R(\mathcal {G})\rightarrow End_R(M)\), induced by Proposition 4.6, is equivalent to \(\varphi \), where M is the R-module of all the maps from X to R with finite support.

Proof

Let \(B=\{h_x\}_{x\in X}\) be a basis of N, with subsets \(B_e\), \(B_v\) and \(B_{r(e)}\) for each edge e and vertex v satisfying the conditions of Definition 6.1. For each \(A\in \mathcal {G}^0\), let \(B_A\) be the set defined just before Lemma 6.3. For each \(e\in \mathcal {G}^1\), define \(R_e=\{x\in X:h_x\in B_e\}\) and \(D_{r(e)}=\{x\in X:h_x\in B_{r(e)}\}\) (notice that X is the index set of B). Moreover, for each \(A\in \mathcal {G}^0\) define \(D_A=\{x\in X:h_x\in B_A\}\). For a given edge e recall that the map \(\varphi (s_e):N_{r(e)}\rightarrow N_e\) is an isomorphism and that \(\varphi (s_e)(B_{r(e)})=B_e\). So we get a bijective map \(f_e:D_{r(e)}\rightarrow R_e\), such that \(\varphi (s_e)(h_x)=h_{f_e(x)}\).

It is not hard to see that X, together with the subsets \(\{R_e, D_A\}_{e\in \mathcal {G}^1, A\in \mathcal {G}^0}\) and the maps \(f_e:D_{r(e)}\rightarrow R_e\) defined above, is a \(\mathcal {G}\)-algebraic branching system. For example, to see that \(D_{A\cap C}=D_A\cap D_C\) for \(A,C\in \mathcal {G}^0\), notice that
\[D_{A\cap C}=\{x\in X:h_x\in B_{A\cap C}\}=\{x\in X:h_x\in B_A\cap B_C\}=D_A\cap D_C,\]
where the second equality follows from Lemma 6.3, more precisely, from the fact that \(B_{A\cap C}=B_A\cap B_C\). Similarly, the equality \(D_{A\cup C}=D_A\cup D_C\) also holds. Also, recall that \(B_v\) is a basis of \(N_v=\varphi (p_v)(N)\) for each vertex v and \(B_e\) is a basis of \(N_e=\varphi (s_es_e^*)(N)\) for each edge e. If \(0<|s^{-1}(v)|<\infty \) then \(N_v=\bigoplus \nolimits _{e\in s^{-1}(v)}N_e\), from where \(B_v=\bigcup \nolimits _{e\in s^{-1}(v)}B_e\), and so \(D_v=\bigcup \nolimits _{e\in s^{-1}(v)}R_e\). The verification of the other conditions of Definition 4.1 is left to the reader.

Let M be the R-module of all the maps from X to R with finite support, and let \(\pi :L_R(\mathcal {G})\rightarrow End_R(M)\) be the homomorphism induced as in Proposition 4.6 and Remark 4.7.

Let \(\delta _x\in M\) be the map defined by \(\delta _x(y)=0\) if \(y\ne x\) and \(\delta _x(x)=1\). Notice that \(\{\delta _x\}_{x\in X}\) is a basis of M. Let \(T:M\rightarrow N\) be the isomorphism defined by \(T(\sum \nolimits _{i=1}^n k_i\delta _{x_i})=\sum \nolimits _{i=1}^n k_ih_{x_i}\).

It remains to show that \(\varphi (a)=T\circ \pi (a) \circ T^{-1}\), for each \(a\in L_R(\mathcal {G})\). Notice that for this it is enough to verify that \(\varphi (s_e)=T\circ \pi (s_e)\circ T^{-1}\), \(\varphi (s_e^*)=T\circ \pi (s_e^*) \circ T^{-1}\), and \(\varphi (p_A)=T\circ \pi (p_A) \circ T^{-1}\), for each edge e and \(A\in \mathcal {G}^0\).

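Let \(A\in \mathcal {G}^0\). We show that, for each \(h_x\in B\), \(\varphi (p_A)(h_x)=(T\circ \pi (p_A)\circ T^{-1})(h_x)\). For this, suppose that \(h_x\in B_A\). Then \(x\in D_A\) and, since \(h_x\in N_A=\varphi (p_A)(N)\) and \(\varphi (p_A)\) is idempotent,
\[\varphi (p_A)(h_x)=h_x=T(\delta _x)=T(\delta _x\cdot 1_{D_A})=T(\pi (p_A)(\delta _x))=(T\circ \pi (p_A)\circ T^{-1})(h_x).\]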
If \(h_x\in B{\setminus } B_A\) then \(\varphi (p_A)(h_x)=0\) by hypothesis and, since \(x\notin D_A\), we also have \(\pi (p_A)(\delta _x)=0\). Hence \((T\circ \pi (p_A))(T^{-1}(h_x))=\varphi (p_A)(h_x)\) for each \(h_x\in B\), and therefore \(T\circ \pi (p_A)\circ T^{-1}=\varphi (p_A)\).

Next we show that \(\varphi (s_e)=T\circ \pi (s_e)\circ T^{-1}\). Let \(h_x\in B_{r(e)}\). Then \(\varphi (s_e)(h_x)=h_{f_e(x)}\), and \(T(\pi (s_e)(T^{-1}(h_x)))=T(\pi (s_e)(\delta _x))=T(\delta _x\circ f_e^{-1})=T(\delta _{f_e(x)})=h_{f_e(x)}\). For \(h_x\in B{\setminus } B_{r(e)}\) we get \(\varphi (s_e)(h_x)=\varphi (s_e)\varphi (s_e^*)\varphi (s_e)(h_x)=\varphi (s_e)\varphi (p_{r(e)})(h_x)=0\) (since \(\varphi (p_{r(e)})(h_x)=0\) by hypothesis), and \(\pi (s_e)(T^{-1}(h_x))=\pi (s_e)(\delta _x)=(\delta _x \circ f_e^{-1})\cdot 1_{R_e}=0\), since \(x\notin D_{r(e)}\).

It remains to prove that \(\varphi (s_e^*)=T\circ \pi (s_e^*)\circ T^{-1}\). For \(h_x\in B{\setminus } B_e\), we have that \(\varphi (s_e^*)(h_x)=0\) by hypothesis, and \(T(\pi (s_e^*)(T^{-1}(h_x)))=T(\pi (s_e^*)(\delta _x))=T((\delta _x\circ f_e)\cdot 1_{D_{r(e)}})=0\), since \(x\notin R_e\). For \(h_x\in B_e\), we get \(\varphi (s_e^*)(h_x)=h_{f_e^{-1}(x)}\), and \(T(\pi (s_e^*)(T^{-1}(h_x)))=T(\pi (s_e^*)(\delta _x))=T((\delta _x\circ f_e)\cdot 1_{D_{r(e)}})=T(\delta _{f_e^{-1}(x)})=h_{f_e^{-1}(x)}\). \(\square \)
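In a finite-dimensional toy case, the construction of \(f_e\) in the proof above amounts to reading off a permutation-like matrix. The sketch below assumes that N has a finite basis \(B=\{h_0,\ldots ,h_{n-1}\}\) and that each column of the matrix of \(\varphi (s_e)\) in B is either zero (for \(h_x\notin B_{r(e)}\), as shown in the proof) or has a single entry 1; the function names are hypothetical. With \(T(\delta _x)=h_x\), comparing the matrix of \(\pi (s_e)\) in the basis \(\{\delta _x\}\) with the original matrix is exactly the check \(\varphi (s_e)=T\circ \pi (s_e)\circ T^{-1}\) on basis elements.

```python
def read_off_f(M):
    """Read f_e from the matrix M of phi(s_e) in the basis B = {h_0, ..., h_{n-1}}.

    Column x is either zero (h_x outside B_{r(e)}) or has a single entry 1 in row y,
    meaning phi(s_e)(h_x) = h_y; then f_e(x) = y.
    """
    n = len(M)
    f = {}
    for x in range(n):
        rows = [y for y in range(n) if M[y][x] != 0]
        if not rows:
            continue
        if len(rows) != 1 or M[rows[0]][x] != 1:
            raise ValueError(f"column {x} is not a basis vector")
        f[x] = rows[0]
    if len(set(f.values())) != len(f):
        raise ValueError("phi(s_e) is not injective on B_{r(e)}")
    return f


def induced_matrix(f, n):
    """Matrix of pi(s_e) in the basis {delta_0, ..., delta_{n-1}}: delta_x -> delta_{f(x)}."""
    M = [[0] * n for _ in range(n)]
    for x, y in f.items():
        M[y][x] = 1
    return M
```

Here \(D_{r(e)}\) is the set of keys of the returned dictionary and \(R_e\) its set of values; the matrix of \(\pi (s_e^*)\) is then the transpose, matching \(\pi (s_e^*)(\delta _y)=\delta _{f_e^{-1}(y)}\).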

Remark 6.6

If e is an edge in \(\mathcal {G}\) such that s(e) is a finite emitter then, in the previous theorem, the hypothesis \(\varphi (s_e^*)(h)=0\) for each \(h\in B{\setminus } B_e\) follows from the hypothesis \(\varphi (p_A)(h)=0\) for each \(h\in B{\setminus } B_A\). In fact, let \(v=s(e)\). Since \(\varphi (p_v)=\sum \nolimits _{s(f)=v}\varphi (s_f)\varphi (s_f^*)\) (because \(s^{-1}(v)\) is finite), we have \(B_v=\bigcup \nolimits _{f\in s^{-1}(v)}B_f\), where the union is disjoint. For \(h\in B{\setminus } B_v\), we get \(\varphi (p_v)(h)=0\) (by hypothesis), and hence \(\varphi (s_e^*)(h)=\varphi (s_e^*)\varphi (p_v)(h)=0\), since \(s_e^*=s_e^*p_{s(e)}\). For \(h\in B_v{\setminus } B_e\), let \(f\in s^{-1}(v)\) be such that \(h\in B_f\) and \(f\ne e\). Notice that \(h=\varphi (s_f)\varphi (s_f^*)(h)\), and then \(\varphi (s_e^*)(h)=\varphi (s_e^*)\varphi (s_f)\varphi (s_f^*)(h)=0\), since \(\varphi (s_e^*)\varphi (s_f)=\varphi (s_e^*s_f)=0\).

Therefore, if \(\mathcal {G}\) has no infinite emitters, the hypothesis \(\varphi (s_e^*)(h)=0\) for each \(h\in B{\setminus } B_e\) is unnecessary.

We now proceed to describe ultragraphs for which a large class of representations is permutative. We recall some definitions and propositions from [16].

Definition 6.7

([16], Definition 6.5) Let \(\mathcal {G}\) be an ultragraph. An extreme vertex is an element A satisfying

1. either \(A=r(e)\) for some edge e and \(A\cap r(\mathcal {G}^1{\setminus }\{e\})=\emptyset =A\cap s(\mathcal {G}^1)\); or

2. \(A=s(e)\) for some edge e and \(A\cap s(\mathcal {G}^1{\setminus } \{e\})=\emptyset =A\cap r(\mathcal {G}^1)\).

The edge e associated to an extreme vertex A as above is called the extreme edge of A.

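Let \(\mathcal {G}\) be an ultragraph. Define the set of isolated vertices of \(\mathcal {G}\) to be
\[I_0=\Big \{v\in G^0: v\notin s(\mathcal {G}^1)\cup \bigcup \nolimits _{e\in \mathcal {G}^1}r(e)\Big \},\]
and define the ultragraph \(\mathbb {G}_0:=(G^0 {\setminus } I_0, \mathcal {G}^1,r,s)\). Denote by \(X_1\) the set of extreme vertices of \(\mathbb {G}_0\), let \(\overline{X_1}=\bigcup \nolimits _{A\in X_1}A\), and denote by \(Y_1\) the set of extreme edges of \(\mathbb {G}_0\). Notice that the extreme vertices and the extreme edges of \(\mathcal {G}\) and \(\mathbb {G}_0\) are the same. Denote by \(I_1\) the set of isolated vertices of the ultragraph \(\Big (G^0 {\setminus } (I_0 \cup \overline{X_1}),\mathcal {G}^1{\setminus } Y_1,r,s\Big )\), and define
\[\mathbb {G}_1:=\Big (G^0 {\setminus } (I_0 \cup \overline{X_1}\cup I_1),\mathcal {G}^1{\setminus } Y_1,r,s\Big ).\]

Now, define \(X_2\) and \(Y_2\) as being the extreme vertices and extreme edges of the ultragraph \(\mathbb {G}_1\), let \(\overline{X_2}=\bigcup \nolimits _{A\in X_2}A\), let \(I_2\) be the isolated vertices of the ultragraph
\[\Big (G^0 {\setminus } (I_0 \cup \overline{X_1}\cup I_1\cup \overline{X_2}),\mathcal {G}^1{\setminus } (Y_1\cup Y_2),r,s\Big ),\]
and let
\[\mathbb {G}_2:=\Big (G^0 {\setminus } (I_0 \cup \overline{X_1}\cup I_1\cup \overline{X_2}\cup I_2),\mathcal {G}^1{\setminus } (Y_1\cup Y_2),r,s\Big ).\]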
Inductively, while \(X_n\ne \emptyset \), we define the ultragraphs \(\mathbb {G}_n\) and the sets \(X_{n+1}\), of extreme vertices of \(\mathbb {G}_n\), and \(Y_{n+1}\), of extreme edges of \(\mathbb {G}_n\). We also define the set \(\overline{X_{n+1}}=\bigcup \nolimits _{A\in X_{n+1}}A\) and the set \(I_{n+1}\) of isolated vertices of the ultragraph obtained from \(\mathbb {G}_n\) by removing the vertices in \(\overline{X_{n+1}}\) and the edges in \(Y_{n+1}\).

Notice that there is a bijective correspondence between the sets \(X_n\) and \(Y_n\), associating each extreme vertex \(A\in X_n\) to a unique extreme edge \(e\in Y_n\). For each \(A\in X_n\), let \(e\in Y_n\) be the (unique) edge associated to A. If \(A=r(e)\) then A is called a final vertex of \(X_n\) and, if \(A=s(e)\), then A is called an initial vertex of \(X_n\). We denote the set of initial vertices of \(X_n\) by \(X_n^{\mathrm {ini}}\) and the set of final vertices of \(X_n\) by \(X_n^{\mathrm {fin}}\).
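For a finite ultragraph, the inductive construction above can be run as an algorithm. The Python sketch below is hypothetical: it encodes Definition 6.7 directly, assumes that a vertex is isolated when it is neither a source nor in the range of a remaining edge, and removes the isolated vertices \(I_n\) together with \(\overline{X_n}\) when passing to the next ultragraph; ranges are encoded as frozensets. It then tests the covering hypothesis of Theorem 6.8. Since the sets \(\overline{X_i}\cup I_i\) only accumulate, it suffices to test the union over all nonempty layers.

```python
def _used(edges, E):
    """Vertices that are a source or lie in a range of some edge in E."""
    return {edges[e][0] for e in E} | {v for e in E for v in edges[e][1]}


def layers(vertices, edges):
    """Return (I_0, [(X_1, Y_1, I_1), (X_2, Y_2, I_2), ...]) for a finite ultragraph.

    edges: dict e -> (s(e), frozenset r(e)). Extreme vertices follow Definition 6.7.
    """
    I0 = set(vertices) - _used(edges, edges)
    V, E = set(vertices) - I0, set(edges)
    out = []
    while True:
        X, Y = set(), set()
        sources = {edges[e][0] for e in E}
        for e in E:
            s, r = edges[e]
            others = E - {e}
            r_others = {v for g in others for v in edges[g][1]}
            s_others = {edges[g][0] for g in others}
            if not (r & r_others) and not (r & sources):  # final extreme vertex r(e)
                X.add(r)
                Y.add(e)
            if s not in s_others and s not in (r_others | r):  # initial vertex {s(e)}
                X.add(frozenset({s}))
                Y.add(e)
        if not X:
            return I0, out
        V -= set().union(*X)
        E -= Y
        I = V - _used(edges, E)  # isolated vertices once X_n and Y_n are removed
        V -= I
        out.append((X, Y, I))


def theorem_6_8_hypothesis(vertices, edges):
    """Some n >= 1 with X_1, ..., X_n nonempty covers every source and range vertex."""
    _, out = layers(vertices, edges)
    if not out:
        return False
    covered = set()
    for X, _, I in out:
        covered |= set().union(*X) | I
    return covered == _used(edges, edges)
```

For example, a single loop at a vertex has no extreme vertices, so the hypothesis fails, while a finite path \(u\rightarrow v\rightarrow w\) is covered in one step, with \(I_1=\{v\}\).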

The following theorem is the algebraic version of [16, Theorem 6.8]. Up to this point, the coefficient ring R in the ultragraph Leavitt path algebras appearing in this paper was only assumed to be a unital commutative ring. However, for general modules over commutative rings it is not true that every submodule has a basis; this fact, which we need in the next theorem, does hold if R is a field.

Theorem 6.8

Let \(\mathcal {G}\) be an ultragraph, R be a field, N be an R-module, and let \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) be a representation. Let \(N_{r(e)}\) and \(N_v\) be as in the beginning of this section. Suppose that \(N_{r(e)}=\oplus _{v\in r(e)}N_v\), for each \(e \in \mathcal {G}^1\). If there exists \(n \ge 1\) such that \(X_1,\dots ,X_n \ne \emptyset \), and \(\Big (\bigcup \nolimits _{e \in \mathcal {G}^1}r(e)\Big ) \cup s(\mathcal {G}^1)=\bigcup \nolimits _{i=1}^{n}(\overline{X_i} \cup I_i)\), then \(\pi \) is permutative.

Proof

The proof of this theorem is analogous to the proof of Theorem 6.8 in [16]. The only difference is that in the proof of Theorem 6.8 in [16] the bases are orthonormal bases, while here they are bases only. \(\square \)

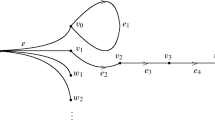

Example 6.9

Let \(\mathcal {G}\) be the ultragraph with edges \(\{e_1,e_2,e_3,e_4\}\cup \{g_i\}_{i\in \mathbb {N}}\), with \(r(g_i)=\{u_n^i:1\le n\le i\}\) as follows.

For this ultragraph, it is not hard to see that for each representation \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) the hypotheses of the previous theorem hold, and so each representation is permutative.

Remark 6.10

The ideas of the proof of the previous theorem may be applied to a larger class of ultragraphs than the one satisfying the hypothesis of the theorem. For example, the ultragraph \(\mathcal {G}\)

where \(s(e_i)=u_{i1}\), \(r(e_i)=\{u_{(i-1)j}\}_{j\in \mathbb {N}}\), \(s(h_1)=u_{11}\), \(s(h_i)=v_i\) for each \(i\ge 2\), and \(r(h_i)=v_{i+1}\) for each \(i\ge 1\), does not satisfy the hypothesis of the previous theorem, but each representation \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) is permutative. See [16, Remark 6.9] for more details.

We end the paper with the following result, regarding equivalence of representations.

Theorem 6.11

Let \(\mathcal {G}\) be an ultragraph and suppose that there exists \(n \ge 1\) such that \(X_1,\dots ,X_n \ne \emptyset \), and \(\Big (\bigcup \nolimits _{e \in \mathcal {G}^1}r(e)\Big ) \cup s(\mathcal {G}^1)=\bigcup \nolimits _{i=1}^{n}(\overline{X_i} \cup I_i)\). Let R be a field, N be an R-module, and let \(\pi :L_R(\mathcal {G})\rightarrow End_R(N)\) be a representation. Let \(N_{r(e)}\), \(N_e\) and \(N_v\) be as in the beginning of this section. Suppose that \(N_{r(e)}=\oplus _{v\in r(e)}N_v\), for each \(e \in \mathcal {G}^1\). Let \(M=\pi (L_R(\mathcal {G}))(N)\), and let \({\widetilde{\pi }}:L_R(\mathcal {G})\rightarrow End_R(M)\) be the restriction of \(\pi \). If \(N_v=\bigoplus _{e\in s^{-1}(v)}N_e\), for each vertex v which is not a sink, then \({\widetilde{\pi }}\) is equivalent to a representation induced by a branching system.

Proof

By Theorem 6.8, \({\widetilde{\pi }}\) is permutative. Let B be the basis obtained in the proof of Theorem 6.8. That is, B is a basis of M, \(B_v\subseteq B\) is a basis of \({\widetilde{\pi }}(p_v)(M)\) for each vertex v, \(B_e\) is a basis of \({\widetilde{\pi }}(s_e){\widetilde{\pi }}(s_e^*)(M)\) for each edge e, and \(B_e\subseteq B_v\) for each \(e\in s^{-1}(v)\). Moreover, by hypothesis, \(B_{r(e)}=\bigcup \nolimits _{v\in r(e)}B_v\). Notice also that \(B=\bigcup \nolimits _{v\in G^0}B_v\). By Theorem 6.5, it suffices to show that \({\widetilde{\pi }}(p_A)(h_x)=0\) for all \(h_x\in B{\setminus } B_A\), and that \({\widetilde{\pi }}(s_e^*)(h_x)=0\) for all \(h_x\in B{\setminus } B_e\).

Let \(h_x\in B{\setminus } B_A\). Since \(B=\bigcup \nolimits _{v\in G^0}B_v\), we have \(h_x\in B_u\) for some vertex \(u\notin A\). Hence \({\widetilde{\pi }}(p_A)(h_x)={\widetilde{\pi }}(p_A){\widetilde{\pi }}(p_u)(h_x)=0\), because \({\widetilde{\pi }}(p_A){\widetilde{\pi }}(p_u)=0\) for \(u\notin A\).

Let e be an edge and \(h_x\in B{\setminus } B_e\). If \(h_x\in B_u\) with \(u\ne s(e)\), then \({\widetilde{\pi }}(s_e^*)(h_x)={\widetilde{\pi }}(s_e^*){\widetilde{\pi }}(p_{s(e)})(h_x)=0\), since \({\widetilde{\pi }}(p_{s(e)})(h_x)=0\). So let \(h_x\in B_u\) with \(u=s(e)\). Since u is not a sink, the hypothesis \(N_u=\bigoplus _{f\in s^{-1}(u)}N_f\) gives \(B_u=\bigcup \nolimits _{f\in s^{-1}(u)}B_f\). Because \(h_x\notin B_e\), there exists \(f\in s^{-1}(u)\) with \(f\ne e\) such that \(h_x\in B_f\). From the proof of Theorem 6.8, we get that \(B_f={\widetilde{\pi }}(s_f)(B_{r(f)})\), and so \(h_x={\widetilde{\pi }}(s_f)(h)\) for some \(h\in B_{r(f)}\). Then \({\widetilde{\pi }}(s_e^*)(h_x)={\widetilde{\pi }}(s_e^*){\widetilde{\pi }}(s_f)(h)=0\), since \(s_e^*s_f=0\) for \(f\ne e\). \(\square \)
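Once a representation is permutative, each generator acts as a partial map on the index set of the basis. The two conditions verified in the proof above can then be tested mechanically. The Python sketch below does this for a hypothetical toy graph: a vertex v emits two edges e and f into sinks w1 and w2, and the representation satisfies \(N_v=N_e\oplus N_f\). The encoding, names and example are our own illustration, not taken from [16] or from the proof. Only singleton sets A are checked.

```python
# Hypothetical toy graph (an ultragraph whose ranges are singletons):
# v emits e and f with r(e) = {w1}, r(f) = {w2}; w1 and w2 are sinks.
# Basis vectors are indexed 0..3: h0 spans N_e, h1 spans N_f, h2 spans N_w1
# and h3 spans N_w2, so that N_v = N_e (+) N_f as required in Theorem 6.11.
BASIS = {0, 1, 2, 3}
B = {"v": {0, 1}, "w1": {2}, "w2": {3}, "e": {0}, "f": {1}}


def proj(indices):
    """Partial map of a projection fixing the given basis vectors."""
    return {x: x for x in indices}


# Each operator is a partial map on basis indices; an index missing from the
# dictionary means that basis vector is sent to 0.
op = {
    "p_v": proj(B["v"]), "p_w1": proj(B["w1"]), "p_w2": proj(B["w2"]),
    "s_e": {2: 0}, "s_e*": {0: 2},
    "s_f": {3: 1}, "s_f*": {1: 3},
}


def compose(a, b):
    """Partial map of the operator product a*b, i.e. x -> a(b(x))."""
    return {x: a[b[x]] for x in b if b[x] in a}


def kills_outside(partial_map, support):
    """True if every basis vector outside `support` is sent to 0."""
    return all(x not in partial_map for x in BASIS - support)


# Relations of the toy algebra. In the last check the two partial maps have
# disjoint domains, so their operator sum is simply their union.
assert compose(op["s_e*"], op["s_e"]) == op["p_w1"]
assert compose(op["s_f*"], op["s_f"]) == op["p_w2"]
assert compose(op["s_e*"], op["s_f"]) == {}  # s_e* s_f = 0
assert {**compose(op["s_e"], op["s_e*"]), **compose(op["s_f"], op["s_f*"])} == op["p_v"]

# Key step of the proof: h1 lies in B_f, equals s_f(h3), and is killed by s_e*.
assert op["s_f"][3] == 1 and 1 not in op["s_e*"]

# The two conditions reduced to via Theorem 6.5 (singleton A only).
print(all(kills_outside(op["p_" + u], B[u]) for u in ("v", "w1", "w2")))  # True
print(kills_outside(op["s_e*"], B["e"]), kills_outside(op["s_f*"], B["f"]))  # True True
```

Storing operators as partial maps rather than matrices mirrors the way a permutative representation moves basis vectors around. This is only suggestive of the branching-system picture; the paper's own definition of branching systems governs.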

Remark 6.12

Note that in the previous theorem, the condition \(N_v=\bigoplus _{e\in s^{-1}(v)}N_e\) is automatically satisfied if \(0<|s^{-1}(v)|<\infty \).
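For completeness, here is the short argument behind this remark. It assumes, as in the proof of Theorem 6.11, that \(N_e=\pi (s_es_e^*)(N)\) and \(N_v=\pi (p_v)(N)\). It also uses the defining relation \(p_v=\sum _{e\in s^{-1}(v)}s_es_e^*\) of \(L_R(\mathcal {G})\), which holds whenever \(0<|s^{-1}(v)|<\infty \).

```latex
Write $Q_e=\pi(s_e s_e^*)$ for $e\in s^{-1}(v)$. Since $s_e^*s_f=\delta_{e,f}\,p_{r(e)}$
and $s_e p_{r(e)}=s_e$,
\[
Q_eQ_f=\pi(s_e s_e^* s_f s_f^*)=\delta_{e,f}\,\pi(s_e p_{r(e)} s_e^*)=\delta_{e,f}\,Q_e,
\qquad
\pi(p_v)=\sum_{e\in s^{-1}(v)}Q_e .
\]
Hence $\pi(p_v)Q_e=Q_e$, so $Q_e(N)\subseteq N_v$; every $x\in N_v$ satisfies
$x=\pi(p_v)x=\sum_e Q_e x$; and if $\sum_e y_e=0$ with $y_e\in Q_e(N)$, applying $Q_f$
gives $y_f=0$. Therefore
\[
N_v=\pi(p_v)(N)=\bigoplus_{e\in s^{-1}(v)}Q_e(N)=\bigoplus_{e\in s^{-1}(v)}N_e .
\]
```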

References

[1] Abrams, G., Ara, P., Siles Molina, M.: Leavitt Path Algebras. Lecture Notes in Mathematics, vol. 2191. Springer, London (2017)

[2] Abrams, G., Aranda Pino, G.: The Leavitt path algebra of a graph. J. Algebra 293, 319–334 (2005)

[3] Ara, P., Rangaswamy, K.M.: Leavitt path algebras with at most countably many irreducible representations. Rev. Mat. Iberoam. 31(4), 1263–1276 (2015)

[4] Ara, P., Moreno, M.A., Pardo, E.: Nonstable K-theory for graph algebras. Algebr. Represent. Theory 10, 157–178 (2007)

[5] Ara, P., Hazrat, R., Li, H., Sims, A.: Graded Steinberg algebras and their representations. Algebra Number Theory 12(1), 131–172 (2018)

[6] Aranda Pino, G., Clark, J., Raeburn, I.: Kumjian–Pask algebras of higher-rank graphs. Trans. Amer. Math. Soc. 365(7), 3613–3641 (2013)

[7] Bratteli, O., Jorgensen, P.E.T.: Iterated function systems and permutation representations of the Cuntz algebra. Memoirs Amer. Math. Soc. 139, 663 (1999)

[8] Brown, J., Clark, L.O., Farthing, C., Sims, A.: Simplicity of algebras associated to étale groupoids. Semigroup Forum 88(2), 433–45 (2014)

[9] Chen, X.W.: Irreducible representations of Leavitt path algebras. Forum Math. 27(1), 549–57 (2015)

[10] Clark, L.O., Farthing, C., Sims, A., Tomforde, M.: A groupoid generalisation of Leavitt path algebras. Semigroup Forum 89, 501–51 (2014)

[11] de Castro, G.G., Gonçalves, D.: KMS and ground states on ultragraph C*-algebras. Integr. Equ. Oper. Theory 90, 63 (2018)

[12] Dokuchaev, M., Exel, R.: Associativity of crossed products by partial actions, enveloping actions and partial representations. Trans. Amer. Math. Soc. 357, 1931–1952 (2005)

[13] Farsi, C., Gillaspy, E., Kang, S., Packer, J.: Separable representations, KMS states, and wavelets for higher-rank graphs. J. Math. Anal. Appl. 434, 241–270 (2016)

[14] Farsi, C., Gillaspy, E., Kang, S., Packer, J.: Wavelets and graph C*-algebras. Appl. Numer. Harmon. Anal. 5, 35–86 (2017)

[15] Gil Canto, C., Gonçalves, D.: Representations of relative Cohn path algebras. J. Pure Appl. Algebra 224, 106310 (2020)

[16] Gonçalves, D., Li, H., Royer, D.: Branching systems and general Cuntz–Krieger uniqueness theorem for ultragraph C*-algebras. Internat. J. Math. 27(10), 1650083 (2016)

[17] Gonçalves, D., Li, H., Royer, D.: Faithful representations of graph algebras via branching systems. Can. Math. Bull. 59, 95–103 (2016)

[18] Gonçalves, D., Li, H., Royer, D.: Branching systems for higher rank graph C*-algebras. Glasg. Math. J. 60(3), 731–751 (2018)

[19] Gonçalves, D., Royer, D.: Perron–Frobenius operators and representations of the Cuntz–Krieger algebras for infinite matrices. J. Math. Anal. Appl. 351, 811–818 (2009)

[20] Gonçalves, D., Royer, D.: On the representations of Leavitt path algebras. J. Algebra 333, 258–272 (2011)

[21] Gonçalves, D., Royer, D.: Unitary equivalence of representations of algebras associated with graphs, and branching systems. Funct. Anal. Appl. 45, 45–59 (2011)

[22] Gonçalves, D., Royer, D.: Graph C*-algebras, branching systems and the Perron–Frobenius operator. J. Math. Anal. Appl. 391, 457–465 (2012)

[23] Gonçalves, D., Royer, D.: Leavitt path algebras as partial skew group rings. Commun. Algebra 42, 127–143 (2014)

[24] Gonçalves, D., Royer, D.: Branching systems and representations of Cohn–Leavitt path algebras of separated graphs. J. Algebra 422, 413–426 (2015)

[25] Gonçalves, D., Royer, D.: Ultragraphs and shift spaces over infinite alphabets. Bull. Sci. Math. 141(1), 25–45 (2017)

[26] Gonçalves, D., Royer, D.: Infinite alphabet edge shift spaces via ultragraphs and their C*-algebras. Int. Math. Res. Not. IMRN 2019, 2177–2203 (2019)

[27] Gonçalves, D., Royer, D.: Irreducible and permutative representations of ultragraph Leavitt path algebras. Forum Math. 32, 417–431 (2020)

[28] Gonçalves, D., Royer, D.: Simplicity and chain conditions for ultragraph Leavitt path algebras via partial skew group ring theory. J. Aust. Math. Soc. 109(3), 299–319 (2020)

[29] Gonçalves, D., Sobottka, M.: Continuous shift commuting maps between ultragraph shift spaces. Discrete Contin. Dyn. Syst. 39, 1033–1048 (2019)

[30] Gonçalves, D., Uggioni, B.B.: Li–Yorke chaos for ultragraph shift spaces. Discrete Contin. Dyn. Syst. 40, 2347–2365 (2020)

[31] Gonçalves, D., Uggioni, B.B.: Ultragraph shift spaces and chaos. Bull. Sci. Math. 158, 102807 (2020)

[32] Hazrat, R., Rangaswamy, K.M.: On graded irreducible representations of Leavitt path algebras. J. Algebra 450, 458–486 (2016)

[33] Imanfar, M., Pourabbas, A., Larki, H.: The Leavitt path algebras of ultragraphs. Kyungpook Math. J. 60, 21–43 (2020)

[34] Katsura, T., Muhly, P.S., Sims, A., Tomforde, M.: Graph algebras, Exel–Laca algebras, and ultragraph algebras coincide up to Morita equivalence. J. Reine Angew. Math. 640, 135–165 (2010)

[35] Marcolli, M., Paolucci, A.M.: Cuntz–Krieger algebras and wavelets on fractals. Complex Anal. Oper. Theory 5(1), 41–81 (2011)

[36] Aranda Pino, G., Martín Barquero, D., Martín González, C., Siles Molina, M.: The socle of a Leavitt path algebra. J. Pure Appl. Algebra 212(3), 500–509 (2008)

[37] Aranda Pino, G., Martín Barquero, D., Martín González, C., Siles Molina, M.: Socle theory for Leavitt path algebras of arbitrary graphs. Rev. Mat. Iberoam. 26, 611–638 (2010)

[38] Ramos, C.C., Martins, N., Pinto, P.R., Ramos, J.S.: Cuntz–Krieger algebras representations from orbits of interval maps. J. Math. Anal. Appl. 341(2), 825–833 (2008)

[39] Rangaswamy, K.M.: Leavitt path algebras with finitely presented irreducible representations. J. Algebra 447, 624–648 (2016)

[40] Steinberg, B.: A groupoid approach to discrete inverse semigroup algebras. Adv. Math. 223, 689–727 (2010)

[41] Tomforde, M.: A unified approach to Exel–Laca algebras and C*-algebras associated to graphs. J. Operator Theory 50, 345–368 (2003)

[42] Tomforde, M.: Simplicity of ultragraph algebras. Indiana Univ. Math. J. 52(4), 901–925 (2003)

[43] Tomforde, M.: Leavitt path algebras with coefficients in a commutative ring. J. Pure Appl. Algebra 215(4), 471–484 (2011)


Additional information


Daniel Gonçalves: This author is partially supported by Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) Grant Numbers 304487/2017-1 and 406122/2018-0 and Capes-PrInt Grant Number 88881.310538/2018-01 - Brazil.


Cite this article

Gonçalves, D., Royer, D. Representations and the reduction theorem for ultragraph Leavitt path algebras. J Algebr Comb 53, 505–526 (2021). https://doi.org/10.1007/s10801-020-01004-8


DOI: https://doi.org/10.1007/s10801-020-01004-8