Abstract

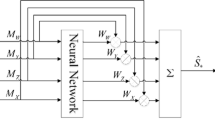

In this paper, we present a new two-stage speech enhancement approach designed to reduce musical noise and other random noise without requiring their localization in the time–frequency domain. The proposed method is motivated by two observations: (1) the energy peaks corresponding to musical noise are randomly scattered in the spectrogram of the processed speech; and (2) Wiener filter gains calculated at different frequencies are correlated. In the first stage, a preliminary gain function is generated using the nonnegative matrix factorization (NMF) algorithm. In the second stage, a modified gain function that is more robust to noise artefacts, referred to as the calibrated filter, is estimated by applying a nonlinear mapping function based on a deep neural network (DNN) to the preliminary gain function. To further decrease the variability of the estimated calibrated filter, we propose to extend the DNN-based extraction of frequency dependencies to a set of preliminary gain functions derived from spectral estimates based on a family of data tapers; the resulting calibrated filter is referred to as the multi-filter. Evaluation of the proposed DNN-based calibrated-filter models under different noise types and input SNR levels shows substantial improvements over the uncalibrated filter in terms of standard speech quality and intelligibility measures.
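The first stage can be illustrated with a minimal sketch. It assumes a supervised NMF setup with Kullback-Leibler multiplicative updates and a Wiener-like ratio of the speech and noise reconstructions; the abstract does not specify the NMF variant, the cost function, or the ranks, so the function names (`nmf_kl`, `preliminary_gain`), the ranks, and the random stand-in spectrograms below are all hypothetical choices made for illustration only.

```python
import numpy as np

def nmf_kl(V, rank, n_iter=200, W=None, seed=0, eps=1e-12):
    """Approximate V by W @ H with KL multiplicative updates.

    If a basis W is supplied it is kept fixed and only H is estimated
    (the `rank` argument is then ignored).
    """
    rng = np.random.default_rng(seed)
    update_W = W is None
    if update_W:
        W = rng.random((V.shape[0], rank)) + eps
    H = rng.random((W.shape[1], V.shape[1])) + eps
    ones = np.ones_like(V)
    for _ in range(n_iter):
        WH = W @ H + eps
        H *= (W.T @ (V / WH)) / (W.T @ ones + eps)
        if update_W:
            WH = W @ H + eps
            W *= ((V / WH) @ H.T) / (ones @ H.T + eps)
    return W, H

def preliminary_gain(V_noisy, W_speech, W_noise, n_iter=200, eps=1e-12):
    """Wiener-like gain from fixed speech and noise bases (an assumed form)."""
    W = np.hstack([W_speech, W_noise])
    _, H = nmf_kl(V_noisy, W.shape[1], n_iter=n_iter, W=W)
    k = W_speech.shape[1]
    S_hat = W_speech @ H[:k]      # estimated speech magnitude spectrogram
    N_hat = W_noise @ H[k:]       # estimated noise magnitude spectrogram
    return S_hat / (S_hat + N_hat + eps)

# Random nonnegative matrices stand in for magnitude spectrograms.
rng = np.random.default_rng(1)
V_speech = rng.random((65, 40))
V_noise = rng.random((65, 40))
W_s, _ = nmf_kl(V_speech, rank=8)   # speech basis learned offline
W_n, _ = nmf_kl(V_noise, rank=4)    # noise basis learned offline
G = preliminary_gain(V_speech + V_noise, W_s, W_n)
print(G.shape, float(G.min()), float(G.max()))
```

In the approach described above, a gain function of this kind would be the input to the second-stage DNN mapping rather than being applied to the noisy spectrum directly.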

Availability of Data and Materials

All data used in our experiments are publicly available. The TSP speech database is covered by a permissive Simplified BSD license and is freely available for download at http://www.mmsp.ece.mcgill.ca/Documents/Data/. The noise data can be found at http://spib.linse.ufsc.br/noise.html.

Notes

Only half of the coefficients are used since the audio signal samples are real-valued and their spectral coefficients exhibit complex conjugate symmetry.
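The symmetry mentioned in this note can be checked numerically. The sketch below uses NumPy's `fft`, `rfft`, and `irfft`; the frame length of 512 samples and the random test frame are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

N = 512                                   # assumed frame length
rng = np.random.default_rng(0)
frame = rng.standard_normal(N)            # one real-valued analysis frame

full = np.fft.fft(frame)                  # N complex coefficients
half = np.fft.rfft(frame)                 # N // 2 + 1 non-redundant coefficients

# Conjugate symmetry of a real signal: X[N - k] == conj(X[k]) for k = 1 .. N-1
symmetric = np.allclose(full[1:][::-1], np.conj(full[1:]))

# The half spectrum equals the first N // 2 + 1 bins of the full spectrum
same = np.allclose(half, full[: N // 2 + 1])

# The frame is fully recoverable from the half spectrum alone
reconstructed = np.fft.irfft(half, n=N)
print(symmetric, same, half.shape)
```

Because the remaining bins are determined by the first `N // 2 + 1`, a gain function only needs to be estimated for that half of the spectrum.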

Funding

This work was supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada, and the Microsemi Corporation [CRD Grant No. CRDPJ 515072-17].

Author information

Contributions

YA and BC conceived and designed the study. YA performed the experiments. YA, BC, and WPZ contributed to the writing, reviewing and editing of the manuscript. All authors read and approved the manuscript.

Ethics declarations

Conflict of interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Attabi, Y., Champagne, B. & Zhu, WP. DNN-Based Calibrated-Filter Models for Speech Enhancement. Circuits Syst Signal Process 40, 2926–2949 (2021). https://doi.org/10.1007/s00034-020-01604-6

DOI: https://doi.org/10.1007/s00034-020-01604-6