Abstract

A general framework for the field-theoretic thermodynamic uncertainty relation was recently proposed and illustrated with the \((1+1)\) dimensional Kardar–Parisi–Zhang equation. In the present paper, the analytical results obtained there in the weak coupling limit are tested via a direct numerical simulation of the KPZ equation with good agreement. The accuracy of the numerical results varies with the respective choice of discretization of the KPZ non-linearity. Whereas the numerical simulations strongly support the analytical predictions, an inherent limitation to the accuracy of the approximation to the total entropy production is found. In an analytical treatment of a generalized discretization of the KPZ non-linearity, the origin of this limitation is explained and shown to be an intrinsic property of the employed discretization scheme.

Similar content being viewed by others

1 Introduction

The thermodynamic uncertainty relation (TUR) was formulated originally for a Markovian dynamics on a discrete set of states [1, 2] and later for overdamped Langevin equations [3]. It describes a lower bound on the entropy production in terms of mean and variance of an arbitrary current, provided the system is in a non-equilibrium steady state (NESS), for recent reviews see, e.g., [4, 5]. Specifically, the TUR product \(\mathcal {Q}\) of entropy production \(\left\langle \varDelta s_\text {tot}\right\rangle \) and precision \(\epsilon ^2\) of a current, both defined precisely below, obeys \(\mathcal {Q}\ge 2\). In [6], we have proposed a general framework for formulating a field-theoretic thermodynamic uncertainty relation. To demonstrate this framework, we have analytically shown the validity of the TUR for the one-dimensional Kardar–Parisi–Zhang equation (KPZ) [7]. As a central result, we found that the TUR product \(\mathcal {Q}\) is equal to 5 in the limit of a small coupling constant, i.e., the TUR is not saturated in this case. As these results are based on a systematic perturbation expansion, they apply a priori to the weak coupling regime (i.e. the linear scaling regime) of the non-linear equation (see e.g. [8]). In the case at hand this is the Edwards-Wilkinson scaling regime of the KPZ equation.

Since its introduction, the KPZ equation has been studied extensively and evolved into one of the most prominent examples in non-equilibrium statistical physics. An overview of the progress made can be found in, e.g., [9,10,11,12]. Regarding more recent theoretical developments on the aspect of the KPZ probability density functions and their respective universality classes we mention, e.g., [13,14,15,16,17,18,19,20]. Another active area of theoretical work deals with different types of correlated noise, see, e.g., [18, 21,22,23]. Regarding experimental studies of the KPZ equation via liquid crystal turbulence, we refer to [24,25,26]. Recent numerical treatment of the KPZ equation is shown in, e.g., [27,28,29,30,31]. From a mathematical point of view, in [32] a space-time discretization scheme for the equivalent Burgers equation has been proposed and its convergence, albeit in a weak distributional sense (which seems to be the best to be expected), has been rigorously proven. Further convergence proofs of discretization schemes are limited to spatially more regular noise, see e.g. [33].

In the present paper, we perform a numerical study of the KPZ–TUR to confirm the analytical results from [6] via a direct numerical simulation based on finite difference approximation in space [34,35,36]. Due to the poor spatial regularity of the KPZ equation the discretization of the non-linearity \((\partial _xh)^2\) is not straightforward. There exists a variety of different procedures, which lead to significantly differing results regarding expressions like the surface width (see, e.g., [34,35,36]). In [37] a generalized discretization of the KPZ non-linearity has been introduced, which covers most of the above mentioned schemes. This generalization uses a real parameter \(0\le \gamma \le 1\) to tune the explicit form of the respective discretization. For \(\gamma =1/2\), one obtains the so-called ‘improved discretization’ introduced in [34], which distinguishes itself by remarkable theoretical properties (see, e.g., [34, 37] and Sect. 3.1). For the equivalent Burgers equation a closely related scheme is proposed in [38] which is now known as the Sasamoto-Spohn discretization of the Burgers non-linearity (see e.g. [32]). Furthermore, in [32] it is shown that this discretization satisfies the assumptions of their convergence theorem.

We perform the numerical simulations in Sect. 5 with this improved discretization scheme (\(\gamma =1/2\)). Moreover, in Sect. 6.5, we also use the boundary cases of \(\gamma =0,\,1\) as these turn out to mark the lower and upper bound, respectively, of the numerical (discrete) TUR product (see Sect. 6.3.4). While the discretization with \(\gamma =1/2\) is the best approximation to the results from [6], we find numerically in Sect. 5.3 that there is still a deviation of roughly \(10\%\) between the numerical results and [6]. Based on an idea presented in [39], we can explain this systematic deviation by an analytical test of the generalized discretization of the KPZ non-linearity. We show that this deviation is an inherent property of the generalized nonlinear discretization operator (see Sect. 6), which we think is an intriguing finding in itself.

The paper is organized as follows. The basic notions for formulating the field theoretic TUR are introduced in Sect. 2. In Sect. 3, we explain the spatial and temporal discretization of the employed numerical scheme. Our way of approximating the TUR constituents and their theoretically expected scaling according to [6] is presented in Sect. 4. In Sect. 5, we show our numerical results; Sect. 6 is devoted to the analytical treatment of the generalized discretization of the KPZ non-linearity. We draw our conclusions in Sect. 7.

2 Basic Notions and Problem Statement

We start with a brief sketch of the underlying continuum problem and the notions needed to formulate the TUR. Consider the \((1+1)\)-dimensional KPZ equation modeling nonlinear surface growth with \(h=h(x,t)\) the surface height on a finite interval in space, \(x\in [0,b]\), \(b>0\),

In (1) we employ periodic boundary conditions, \(h(0,t)=h(b,t)\), and vanishing initial condition, \(h(x,0)=0\), \(x\in [0,b]\), i.e., we start from a flat surface. The KPZ equation from (1) is subject to Gaussian space-time white noise with zero mean, \(\left\langle \eta (x,t)\right\rangle =0\) and covariance given by \(\left\langle \eta (x,t)\,\eta (x^\prime ,t^\prime )\right\rangle =\varDelta _0\delta (x-x^\prime )\delta (t-t^\prime )\), where \(\varDelta _0\) measures the noise strength. The parameter \(\nu \) describes the strength of the diffusive term (surface tension) and \(\lambda \) is the coupling constant of the non-linearity that models surface growth perpendicular to the local surface.

One constituent of the TUR is the so-called fluctuating output, or, equivalently, the time-integrated generalized current, given by a linear functional, which reads [6]

Here \(g(x)\in L^2(0,b)\) describes an arbitrary weight function with non-vanishing mean (i.e., \(\int _0^bdx\,g(x)\ne 0\)). The precision of the output functional from (2) is given by

with \(\left\langle \cdot \right\rangle \) as the average over the noise history. In the NESS, i.e., for \(t\gg 1\), the precision from (3) becomes independent of the weight function g(x) [6]. The second component of the TUR product is the total entropy production in the NESS. For the KPZ equation from (1) the total entropy production is given by [6]

Based on the experience from [1, 3] for a Markovian dynamics, the TUR product \(\mathcal {Q}\) is expected to fulfill

In [6] we have shown analytically for the KPZ equation that for small \(\lambda \)

i.e., the TUR is obviously fulfilled, however not saturated. In the present paper, we numerically obtain the two TUR constituents in (3) and (4), and hence \(\mathcal {Q}\), by direct numerical simulation of (1).

3 Discretization of the KPZ-Equation

Throughout this paper we will use a direct numerical integration technique to simulate the height h(x, t) of the Kardar–Parisi–Zhang equation. There are various approaches to this regarding spatial and temporal discretization (see, e.g., [32, 34, 36,37,38,39,40,41,42,43]). In the following we present the details and reasoning of our approach.

3.1 Spatial Discretization

We consider a one-dimensional grid with grid-points \(x_l\) subject to periodic boundary conditions with lattice-spacing \(\delta \) given by

where b is the fixed length of the grid and L is the number of grid-points. At each grid-point we have for a fixed time t the value of the height field \(h_l(t)\equiv h(x_l,t)=h(l\delta ,t)\), with \(x_l=l\delta \) and \(l=0,\ldots ,L-1\). The time evolution of \(h_l(t)\) is then governed by (1), i.e.,

where \(h_L(t)=h_0(t)\) due to the periodic boundary conditions. Furthermore, \(\mathcal {L}_l\) and \(\mathcal {N}_l\) denote the discretizations of the linear and nonlinear term at the grid-point \(x_l\), respectively, and \(\eta _l(t)\equiv \eta (x_l,t)=\eta (l\delta ,t)\) represents the discretized noise. Regarding the diffusive term \(\mathcal {L}_l\) in (8), we choose the standard discretization, namely the nearest-neighbor discrete Laplacian,

see, e.g., [34, 35, 37, 38, 42]. The discretization of the nonlinear term \(\mathcal {N}_l\) is more subtle. During the last few decades different discretizations of the nonlinear term have been proposed for numerically integrating the KPZ equation [34, 35, 37, 42]. In the case of one spatial dimension, they all belong to the family of so-called generalized discretizations [37],

with \(\gamma \in \mathbb {R}\) and \(0\le \gamma \le 1\). In the following, we will highlight the cases \(\gamma =0\), \(\gamma =1\) and \(\gamma =1/2\).

For \(\gamma =0\), this discretization reads

which is simply the arithmetic mean of the squared forward and backward taken slope, respectively, of the height field at the grid-point \(x_l\) [37].

The case \(\gamma =1\) yields

This is the square of the central difference discretization of \(\partial _xh\), which is a commonly used choice for numerically integrating the KPZ equation, see, e.g., [34, 42].

Finally, \(\gamma =1/2\) leads to

This form was applied to the KPZ equation in, e.g., [34,35,36]. It is closely related to the discretized non-linearity proposed in [32, 38, 41] of the 1d-Burgers equation equivalent to (8). Following [34], we will name (13) the improved discretization (ID), for the following reasons. It has been shown analytically in [34] using (13) that the discrete Fokker-Planck equation corresponding to (8) possesses a steady state probability distribution for all \(\lambda >0\), which is equal to the linear (Edwards-Wilkinson, \(\lambda =0\)) steady state distribution. It was further shown that the stationary solution of the Fokker-Planck equation is reached for a non-vanishing conserved probability current, which indicates a genuine non-equilibrium steady state in the discretized system. This implies that the case \(\gamma =1/2\) accurately mimics the NESS-behavior of the continuous case, with the exact form of the total entropy production \(\left\langle \varDelta s_\text {tot}\right\rangle \) from [6]. The above mentioned properties of the operator \(\mathcal {N}_l^{(1/2)}\) distinguish the case \(\gamma =1/2\) from, e.g., \(\gamma =1\), which does not fulfill the fluctuation-dissipation relation in \((1+1)\) dimensions that is essential for obtaining the discrete NESS probability distribution equivalent to the continuous case. Furthermore, the choice \(\gamma =1/2\) in (10) is the only one that displays the above behavior [37].

We note that for spatially smooth enough functions h, any discretization from (10) (\(0\le \gamma \le 1\)) has an approximation error \(O(\delta ^2)\). This implies that for sufficiently small \(\delta \) the differences between their respective outcomes can be made arbitrarily small. However, the solution h(x, t) of (1) is at every time t a very rough function in space (see also Sect. 6). The various discretizations in (10) thus lead to significantly different results, e.g., with respect to the surface width in [34, 35, 39] and in the present paper with respect to certain integral norms of the KPZ non-linearity being essential for the KPZ–TUR (see Sect. 6).

3.2 Temporal Discretization

Regarding the temporal discretization of (8), we choose the stochastic Heun method (see, e.g., [40, 44]), as its predictor-corrector nature reflects the Stratonovich discretization used in [6]. To be specific, the predictor step applies the Euler forward scheme to (8), which yields the predictor \(y_l(t+\varDelta t)\) according to

Here, \(l=0,\ldots ,L-1\) like above and \(\{\xi _l(t)\}\) are stochastically independent N(0, 1)-distributed random variables (see, e.g., [40, 42, 44]). The prefactor in front of \(\xi _l(t)\) ensures that the noise has the prescribed variance according to (1). The predictor from (14) is then used in the subsequent corrector step as

The form in (15) displays the above mentioned Stratonovich time discretization. For the sake of simplicity, we start at \(t=0\) from a flat profile, in particular \(h_l(0)=0\), \(l=0,\ldots ,L-1\), and we impose periodic boundary conditions, i.e., \(h_L(t)=h_0(t)\). We slightly reformulate the expressions in (14) and (15) by introducing a set of effective input parameters \(\{\widetilde{\nu },\,\widetilde{\varDelta _0},\,\widetilde{\lambda }\}\) given by

with \(\delta \) from (7). Hence, the predictor-corrector Heun method reads

where we set

The effective spatial step-size \(\varDelta x\) in the simulation is now simply given by

which is a common choice, see, e.g. [35, 36, 40, 42]. From the parameter set \(\{\widetilde{\nu },\,\widetilde{\varDelta _0},\,\widetilde{\lambda }\}\), which enters the simulation, the physical parameter set \(\{\nu ,\,\varDelta _0,\,\lambda \}\) can be obtained from (16).

Finally, the calculation of the constituents of the TUR requires expectation values, denoted by \(\left\langle \cdots \right\rangle \). Those are approximated by ensemble-averaging over a certain number E of independent realizations.

4 Approximation and Scaling of the TUR Constituents

4.1 Regularizations

Since the KPZ equation is strictly speaking a singular SPDE (see, e.g., [32, 39, 45]), it has to be regularized in some way. From a physical point of view, this can be done by either introducing a smallest length-scale (e.g., in form of a lattice-spacing \(\delta \) [38]) or, in Fourier-space, by defining an upper cutoff wave number [35]. In the course of the analytical derivation of a KPZ–TUR in [6], we took the second approach and introduced the cutoff wave number \(2\pi \varLambda /b\). This caused the physical entities like output functional, diffusion coefficient and entropy production rate to depend on this cutoff parameter (see eqs. (80), (85) and (110), respectively, in [6]) and to become singular for \(\varLambda \rightarrow \infty \). On the other hand, \(\varLambda \rightarrow \infty \) represents the limit of spatially white noise. Thus, in order to approximate white noise as closely as possible, we have to choose \(\varLambda \) as large as possible. In the present paper, we use the real-space direct numerical simulation, described in Sect. 3, with lattice-spacing \(\delta \) from (7) to calculate the relevant physical quantities, which will depend on \(\delta \) and diverge for \(\delta \rightarrow 0\). For comparison purposes, a relation between the cutoff parameter \(\varLambda \) and the lattice-spacing \(\delta =b/L\) has to be established, keeping in mind that \(\varLambda \) has to be chosen as large as possible for the above reason. However, given a certain lattice spacing \(\delta \) in real-space, \(\varLambda \) is limited by the following consideration. With b and \(\delta \) fixed, our real-space direct simulation determines the values of \((\partial _xh)^2\) at L grid-points \(x_l=l\,\delta \), \(l=0,\ldots ,L-1\). Its Fourier transform is exact if it results in the corresponding Galerkin approximation of the non-linearity in Fourier space (i.e. a convolution with the correct wavenumber restriction for all modes). This is fulfilled if \(\varLambda \) satisfies the condition

The above condition (20) is the content of the 3/2-rule by Orszag [35, 46, 47]. It is used in spectral codes, as the so-called dealiasing procedure (see, e.g., [35]). To sum up, given L, the largest value of \(\varLambda \) for which the Fourier transform of \((\partial _xh)^2\) is exactly represented by its Galerkin approximation reads

Having this exact Galerkin approximation is relevant for determining the correct value of the TUR product in [6]. Therefore, we choose \(\varLambda \) according to (21). With this relation between the number of grid-points L and the wavenumber cutoff \(\varLambda \), we will now proceed with the numerical approximation of the TUR constituents and their respective scaling forms.

4.2 Mean and Variance of the Output Functional

As we showed in [6], the KPZ–TUR is independent of the choice of g(x) in (2) and thus, for the sake of simplicity, we set \(g(x)\equiv 1\) in the following. The output functional

thus becomes essentially the instantaneous spatially averaged height. We define

i.e., via a composite Simpson’s rule, with periodic boundary conditions \(h_L(t)=h_0(t)\), \(\{h_l(t)\}\) obtained via (17) and \(\varDelta x=1\) from (19). This implies that we approximate \(L\,\varPsi (t)/b\), rather than (22) itself, which simplifies the comparison of the numerically obtained results with the theoretical ones.

The expected scaling of \(\left\langle \varPsi ^{(N)}(t)\right\rangle \) is derived as follows. From [6] the corresponding (dimensionless) theoretical prediction \(\left\langle \varPsi _s(t_s)\right\rangle \) is known to lowest non-vanishing order in \(\lambda _\text {eff}\) as

Here, \(x_s\equiv x/b\), \(t_s\equiv t/T\) and \(h_s\equiv h/H\) are scaled, dimensionless quantities with reference values b, \(T=b^2/\nu \) and \(H=(\varDelta _0b/\nu )^{1/2}\), respectively, and

represents an effective, dimensionless coupling constant, see [6]. Hence, after rescaling, (24) can also be written as

where (16) and (21) were used. The left hand side of (26) is what we approximate with \(\left\langle \varPsi ^{(N)}(t)\right\rangle \) from (23), and thus

is the expected scaling behavior in the number of grid-points L and time t for \(t\gg T\).

For the variance of \(\varPsi ^{(N)}(t)\), we know from [6], that for sufficiently large \(\varLambda \)

to lowest non-vanishing order in \(\lambda _\text {eff}\). Hence, by following the same steps as above, we get

for \(t\gg T\). Using (27) and (29) the scaling form for the precision

is given by

for \(t\gg T\).

4.3 The Total Entropy Production

The last entity missing for formulating the numerical version of the KPZ–TUR is the total entropy production \(\left\langle \varDelta s_\text {tot}\right\rangle \). It is given as defined in (4) where we note that the integrand on the r.h.s. is nothing but the square of the KPZ non-linearity \((\partial _xh)^2\) and we thus can approximate the integrand using any of the discretizations from (10). To be specific, by means of the composite Simpson’s rule, we get the approximation

The prefactor of \(L^3/b^3\) arises from the fact that (32) uses (10) with \(\varDelta x=1\). Lastly, the time integral in (4) is approximated via

where \(\varDelta t\) is a discrete time-step and \(t=N\varDelta t\).

Proceeding similarly like in Sect. 4.2, we get from the theoretical predictions in [6] the expected scaling behavior for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) and the TUR product with respect to the number of grid-points L and time t as

for \(t\gg T\), and

5 Numerical Results

5.1 Employed Parameters and Fit Functions

In this section we present the numerical results obtained from (17) by using three different discretizations according to (10). If not explicitly stated otherwise, we employ for the numerical simulations the ID discretization (\(\gamma =1/2\)) from (13). We compare the numerics to the expected scaling forms from Sect. 4. The numerics is performed for the following set of input parameters. For all simulations we set \(\widetilde{\nu }=\widetilde{\varDelta _0}=1\) and take \(\widetilde{\lambda }\) from \(\widetilde{\lambda }=0.01\) to \(\widetilde{\lambda }=0.1\) on a range of grid-points, which varies from \(L=16\) to \(L=1024\). In the range of \(L=16\ldots 64\) we use a time-step size of \(\varDelta t=10^{-4}\) and an ensemble size of \(E=500\). For \(L=128\ldots 1024\) a larger time-step of \(\varDelta t=10^{-2}\) and smaller ensemble size, \(E=250\), is used. This reduction is due to the strongly increasing run-time of the simulations for larger numbers of grid-points.

We test the scaling predictions for the TUR constituents. At first, we check whether (27) and (29) is fulfilled. This is done by fitting the numerical data of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) according to the fit-function \(f_1\), with

and \(f_2\), given by

respectively, where \(a_L\) and \(b_L\) are L-dependent fit-parameters. Subsequently, we compare \(a_L\) and \(b_L\) with \(c_1^2(L)\) and \(c_2(L)\), respectively.

The scaling prediction for the precision \((\epsilon ^2)^{(N)}\) according to (31) is tested by fitting

with fit-parameter \(d_L\), to the numerically obtained data for the precision and comparing \(c_3(L)\) to \(d_L\) for the respective values of L.

\(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) in the range of \(L=16\ldots 1024\). The dots represent the numerical data obtained from (17) using (13), whereas the straight lines are fit-functions according to (36), (37), respectively. The graphs in a and c are obtained with the set of input-parameters \(\{\widetilde{\nu },\widetilde{\varDelta _0},\widetilde{\lambda }\}=\{1.0,1.0,0.1\}\), time-step size \(\varDelta t=10^{-4}\) and ensemble size \(E=500\), whereas b and d show graphs with \(\varDelta t=10^{-2}\) and \(E=250\) for the same set of input-parameters

Precision \((\epsilon ^2)^{(N)}\) and total entropy production \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) in the range of \(L=16\ldots 1024\). The dots represent the numerical data obtained from (17) using (13), whereas the straight lines are fit-functions according to (38), (39), respectively. The graphs in a and c are obtained with the set of input-parameters \(\{\widetilde{\nu },\widetilde{\varDelta _0},\widetilde{\lambda }\}=\{1.0,1.0,0.1\}\), time-step size \(\varDelta t=10^{-4}\) and ensemble size \(E=500\), whereas b and d show graphs with \(\varDelta t=10^{-2}\) and \(E=250\) for the same set of input-parameters

Finally, by fitting the numerical data for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) according to

with \(e_L\) as the fit-parameter and subsequently comparing \(c_4(L)\) to \(e_L\), the scaling prediction for the total entropy production (34) is checked.

5.2 Expectation Squared and Variance for the Spatial Mean of the Height Field

In Fig. 1, we plot the numerical data of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}\right] \). The data of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) displays a clear power-law behavior for all L in time t for \(t\ge 10^{2}\). This implies that the NESS-behavior is reached after this amount of time. In Table 1 we list the results for the respective fit-parameters and scaling predictions as well as their relative deviations. The values given in Table 1 suggest that for all L the predicted scaling form of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) from (27) squared is indeed valid. The approximation becomes more accurate for a growing number of grid-points L as the relative error \(\varDelta _1\) shows the clear trend of decreasing for growing L. The slight deviation in this trend observed between \(L=64\) and \(L=256\) is due to the fact that we changed \(\varDelta t\) from \(\varDelta t=10^{-4}\) for \(L=64\) to \(\varDelta t=10^{-2}\) for \(L=256\) as well as \(E=500\) for \(L=64\) to \(E=250\) for \(L=256\). However, as the effect is rather small, we did not see the need to adjust the parameters \(\varDelta t\) and E for \(L=256\ldots 1024\) in order to achieve a higher accuracy.

We now turn to the variance of the mean height field according to (29). The predicted power-law behavior of \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) in (29) can be observed in Fig. 1(c) and 1(d). The predicted scaling factor \(c_2(L)\) in (29) is reproduced well by the numerical data and its respective fit-functions (37) with fit-parameter \(b_L\). In contrast to the results for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\), no clear trend in the relative error \(\varDelta _2=|c_2(L)-b_L|/c_2(L)\) can be observed, i.e., \(\varDelta _2\) does not become smaller with growing L. An improvement in the approximation may be obtained by an increase of the ensemble-size E and a further decrease of the time-step size \(\varDelta t\). This, however, would lead to a significantly longer run-time of the simulations. As in all cases the relative error is below \(10\%\), the gain from a further improved accuracy following the above mentioned steps may be outweighed by the increasing run-time.

We also performed the same numerical simulation for \(\widetilde{\lambda }=0.01\) (data not explicitly shown) instead of \(\widetilde{\lambda }=0.1\) before. For \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) this causes the system to take longer to reach its NESS-behavior, namely \(t\approx 10^4\) in comparison to \(t\approx 10^2\) for \(\widetilde{\lambda }=0.1\). This is due to the weaker driving by the non-linearity weighted with \(\widetilde{\lambda }=0.01\) opposed to \(\widetilde{\lambda }=0.1\) in Fig. 1 with \(\{\widetilde{\nu },\,\widetilde{\varDelta _0}\}\) fixed. Nevertheless, the general trend that with an increase of the number of grid-points L a decrease in the relative error \(\varDelta _1\) is achieved could still be seen clearly. Regarding the variance of \(\varPsi ^{(N)}(t)\), we could not determine a significant difference between \(\widetilde{\lambda }=0.01\) and \(\widetilde{\lambda }=0.1\).

5.3 Precision and Total Entropy Production

By combining the numerical results of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) according to (31), we obtain the data of the precision \((\epsilon ^2)^{(N)}\) as shown in Figs. 2a, b. As is to be expected considering the observations for the scaling of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \), both graphs display a clear power-law behavior in time t. The compliance of the numerical data with the predicted scaling from (31) can be seen in Table 2. Further, Fig. 2a, b again allow for a rough estimation of the elapsed time until the NESS-behavior is reached. To be specific, the numerical data converges to the asymptotic behavior according to (31) after \(t\approx 10^2\). This is roughly the same time it took for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) in Fig. 1. That these two times coincide is to be expected, since \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) did not show a discernible amount of time to converge to the asymptotic scaling form (see Fig. 1).

We will now turn to the scaling behavior of the total entropy production \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\). In Fig. 2c, d, we show the plots of the numerically obtained data for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) and the according fits. We find that the scaling behavior is recovered nicely, albeit with a significantly greater relative deviation as compared to \((\epsilon ^2)^{(N)}\) (see Table 2).

We also calculated the precision for \(\widetilde{\lambda }=0.01\) (data not explicitly shown), where it could again be seen that the time needed by the system to reach its NESS-behavior is at least one order of magnitude longer for \(\widetilde{\lambda }=0.01\) than for \(\widetilde{\lambda }=0.1\). This is due to the same reason as discussed for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) above. It becomes apparent for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) that the only effect of the reduction of \(\widetilde{\lambda }\) by one order of magnitude from \(\widetilde{\lambda }=0.1\) to \(\widetilde{\lambda }=0.01\) is a rescaling of the scaling factors. While for the other entities like \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) some impact by the change of \(\widetilde{\lambda }\) can be observed in regard to the scaling factors and the relative errors, the relative errors of the scaling factors of \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) do not change significantly with \(\widetilde{\lambda }\). In particular, the only influence on the relative error of \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) is achieved by an increase in the number of grid-points L. It seems, however, that the relative errors do not become smaller than roughly \(10\%\) even for large L. In Sect. 6 we will discuss this observation in more detail and present an analytical explanation for this discrepancy.

5.4 Thermodynamic Uncertainty Relation

In the previous sections we have derived the scaling forms of all the TUR constituents and tested their scaling predictions numerically. Here, we will combine these results for the numerical thermodynamic uncertainty product \(\mathcal {Q}^{(N)}=\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\,(\epsilon ^2)^{(N)}\). In Fig. 3, we plot the TUR product \(\mathcal {Q}^{(N)}\). It can be seen that it approaches a stationary value. Since in the stationary state the data of \(\mathcal {Q}^{(N)}\) fluctuates stochastically around a certain value, we introduce \(\overline{\mathcal {Q}}_\tau ^{(N)}\), i.e., the temporal mean of \(\mathcal {Q}^{(N)}\) for times \(t\ge \tau \). This yields a quantity that can be compared to \(\mathcal {Q}\) from (35) shown as dashed lines in Fig. 3. Note that the value of \(\tau =10^3\) is chosen heuristically based on the observations from Fig. 3. From Table 3, it can be seen that \(\overline{\mathcal {Q}}_\tau ^{(N)}\) ranges from 4.16 to 4.58. Hence, for all calculated configurations the TUR product is significantly greater than 2 and thus the numerical calculations support the theoretical prediction from (35) and [6] well, in the sense that the TUR product is not saturated. It can be further inferred from Table 3 that all the \(\overline{\mathcal {Q}}_\tau ^{(N)}\)’s underestimate the theoretically predicted values. This is due to the above discussed observation that, at least for large L and E, the relative errors of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) tend to zero, whereas the relative error of \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) seems to tend to approximately \(10\%\). Therefore, the TUR product is inherently underestimated by the numerical scheme from (17) and the ID discretization of the non-linearity from (13).

TUR product \(\mathcal {Q}^{(N)}\) in the range of \(L=16\ldots 1024\). The dots represent the numerical data obtained from (17) using (13), whereas the dashed lines are the theoretically expected values of \(\mathcal {Q}\) according to (35). In a the set of input-parameters \(\{\widetilde{\nu },\widetilde{\varDelta _0},\widetilde{\lambda }\}=\{1.0,1.0,0.1\}\), time-step size \(\varDelta t=10^{-4}\) and ensemble size \(E=500\) is used, whereas in b we use a time-step size of \(\varDelta t=10^{-2}\) and an ensemble size of \(E=250\) for the same set of input-parameters

We have shown that for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) the predicted scaling forms from (27) and (29), respectively, fit the numerically obtained data for these two quantities very well. Especially for large values of the number of grid-points L, we observed for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) a clear decrease in the relative error between the theoretical predictions and the numerical results, i.e., \(\varDelta _1\rightarrow 0\) (see Table 1). The relative error \(\varDelta _2\) of the variance \(\text {var}\left[ \varPsi ^{(N)}(t)\right] \) did not depend on L and seems to be solely caused by stochastic fluctuations due to the limited ensemble size E (see Table 1). With the above two quantities, both components of the precision \((\epsilon ^2)^{(N)}\) from (31) were found to follow the predicted scaling forms and thus also the numerically obtained precision behaves as expected (see Table 2). In Table 2 we have seen for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\), that the scaling of the numerical data fits well with the theoretically predicted one from (34). It was observed, however, that even for large L the relative error did not get smaller than roughly \(10\%\). We conclude that this is an inherent issue with the numerical scheme from (17) with the non-linearity according to (13). Further discussion of this point will follow in Sect. 6.

For the TUR product we observe that all simulated systems tend to a stationary value for \(\mathcal {Q}^{(N)}=\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\,(\epsilon ^2)^{(N)}\) (see Fig. 3). However, the numerical value is underestimating the theoretically expected one in all cases (see Table 3). Nevertheless, the numerical data shows clearly that the TUR product is well above the value of 2 and ranges for our simulations roughly between 4 and 5, which is strong support for the analytical calculations from [6].

5.5 Dependence of the TUR-Product on the Coupling Parameter

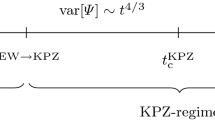

The analytical derivation of the KPZ–TUR in [6] being based on a general perturbation expansion with respect to a small coupling constant \(\lambda _\text {eff}\), may lead to the impression that it will hold only for times t smaller than the Edwards-Wilkinson (EW) to KPZ crossover time \(t^{\text {EW}\rightarrow \text {KPZ}}\) (see [48]) instead for \(t\rightarrow \infty \). To analyze the range of validity of the KPZ–TUR it is instructive to look at the relevant time-regimes of the KPZ equation. There are two distinct crossover times that describe the scaling behavior of \(\text {var}[\varPsi (t)]\) (see [48]), namely for one the EW to KPZ crossover time

(see [48]) i.e. the time at which the system crosses over from the EW scaling regime with dynamical exponent \(z=2\) to the transient KPZ scaling regime with \(z=3/2\). This crossover is reflected in the scaling behavior of \(\text {var}[\varPsi (t)]\), which is given by \(\text {var}[\varPsi (t)]\sim t\) for \(t<t^{\text {EW}\rightarrow \text {KPZ}}\) and \(\text {var}[\varPsi (t)]\sim t^{4/3}\) in the transient regime \(t>t^{\text {EW}\rightarrow \text {KPZ}}\). On the other hand there is the KPZ correlation time

i.e. the time at which the transient KPZ regime turns into the stationary KPZ regime, namely the genuine KPZ–NESS, which restores normal time-scaling for the variance, thus \(\text {var}[\varPsi (t)]\sim t\) for \(t>t_\text {c}^\text {KPZ}>t^{\text {EW}\rightarrow \text {KPZ}}\) [48]. Here both times in (40) and (41) are given in dimensionless form for the sake of simplicity. With (40) and (41) we define a critical value for \(\lambda _\text {eff}\) at which both crossover times coincide, given by

With this critical threshold \(\lambda _\text {eff}^\text {c}\) we divide the scaling analysis of \(\text {var}[\varPsi (t)]\) in two distinct regimes, namely one where \(\lambda _\text {eff}<\lambda _\text {eff}^\text {c}\) and another where \(\lambda _\text {eff}>\lambda _\text {eff}^\text {c}\).

We first discuss the case corresponding to our effective input parameters \(\{\widetilde{\nu },\widetilde{\varDelta _0},\widetilde{\lambda }\}=\{1,1,0.1\}\) which lead to \(\lambda _\text {eff}=0.1\sqrt{L}\), implying a range from \(\lambda _\text {eff}=0.4\) to \(\lambda _\text {eff}=3.2\) for system-sizes of \(L=16\) and \(L=1024\), respectively. Thus our numerical analysis is performed significantly below the critical \(\lambda _\text {eff}^\text {c}\). Being below the critical threshold implies that formally \(t^{\text {EW}\rightarrow \text {KPZ}}>t_\text {c}^\text {KPZ}\), which causes the system to become stationary before the crossover from EW to KPZ takes place. For this formal ordering of relevant time-scales the dynamic behavior is described by the Edwards-Wilkinson correlation time, given by \(t_\text {c}^\text {EW}=\pi \,L^2/(288\,\nu )\) [48], or in dimensionless form,

It can be checked that indeed \(t_\text {c}^\text {EW}<t_\text {c}^\text {KPZ}\) for \(\lambda _\text {eff}\ll \lambda _\text {eff}^\text {c}\), e.g. for \(L=1024\) we have \(t_\text {c}^\text {EW}/t_\text {c}^\text {KPZ}\approx 0.26\). Thus the system reaches a stationary state described by the dynamical exponent \(z=2\) corresponding to the Edwards-Wilkinson scaling regime. Therefore, \(\text {var}[\varPsi (t)]\) is given by (29) for all times \(t>t_\text {c}^\text {EW}\), provided \(\lambda _\text {eff}\ll \lambda _\text {eff}^\text {c}\) and thus the TUR-product \(\mathcal {Q}\) from (35) is valid for large-enough times as well, especially for \(t\rightarrow \infty \).

For the case of \(\lambda _\text {eff}>\lambda _\text {eff}^\text {c}\) the ‘normal’ ordering of time-scales is in place, that is \(t^{\text {EW}\rightarrow \text {KPZ}}<t_\text {c}^\text {KPZ}\). Then for \(t^{\text {EW}\rightarrow \text {KPZ}}<t<t_\text {c}^\text {KPZ}\), \(\text {var}[\varPsi (t)]\sim t^{4/3}\), which implies that for \(t\gg t_\text {c}^\text {KPZ}\), i.e. when the system has reached its genuine KPZ–NESS where again \(\text {var}[\varPsi (t)]\sim t\), the slope is greater than in the EW-regime, namely we have [48]

in dimensionless form.

We thus expect for sufficiently large times t the following \(\lambda _\text {eff}\)-dependence of the KPZ–TUR product

The relations in (45) are based for one on the above scaling analysis of the variance of \(\varPsi (t)\). On the other hand it is known that the 1d stationary distribution \(p_s[h]\) of the EW and KPZ equation are identical (see e.g. (100) in [6]). Thus stationary KPZ correlations are given by the corresponding Edwards-Wilkinson correlations. This implies that the expressions for \(\left\langle \varPsi \right\rangle \) and \(\left\langle \varDelta s_\text {tot}\right\rangle \) from [6] (see (24) and (34)) are not only perturbative approximations but in fact the exact expressions for the 1d stationary case.

6 Analytical Test of the Generalized Discretization of the KPZ-Non-linearity

6.1 Implications of Poor Regularity

As we have already mentioned in Sect. 3, the solution h(x, t) to the KPZ equation from (1) is a very rough function for all times t. The spatial regularity of h(x, t) cannot be higher than that of the solution to the corresponding Edwards-Wilkinson equation, \(h^{(0)}(x,t)\), i.e., the KPZ equation with a vanishing coupling constant, \(\lambda =0\) in (1) (see, e.g., [39, 45, 49]). For \(h^{(0)}(x,t)\) it can be checked that for all \(t>0\)

where \(H^{s}\) denotes the Sobolev space of order \(s\in \mathbb {R}\) of 1-periodic functions,

This implies that \(h^{(0)}(x,t)\in H^{s}\) with \(s<1/2\), and thus \(h^{(0)}(x,t)\in L^2\), however \(h^{(0)}(x,t)\notin H^1\). Therefore, \(\left\langle \Vert \partial _xh^{(0)}\Vert ^2_{L^2}\right\rangle =\left\langle \int dx\,(\partial _xh^{(0)})^2\right\rangle \), is not a well-defined quantity. Using Hölders inequality for the expectation, one gets \(\left\langle \int dx\,(\partial _xh^{(0)})^2\right\rangle \le \left( \left\langle \int dx\,(\partial _xh^{(0)})^4\right\rangle \right) ^{1/2}\), which shows that \(\left\langle \int dx\,(\partial _xh^{(0)})^4\right\rangle =\left\langle \Vert (\partial _xh^{(0)})^2\Vert ^2_{L^2}\right\rangle \) is not well defined either. These two expressions do, however, play an important role in determining the TUR constituents. Hence, some form of regularization is needed to make these expressions well-defined. In [6], this was accomplished by introducing a cutoff \(\varLambda \) of the Fourier-spectrum, i.e., \(|k|\le \varLambda \).

Here, we will follow a different path. Since the solution of the Edwards-Wilkinson equation given by

is expected to be a reasonable approximation to the solution of the KPZ equation (1) for \(\lambda \ll 1\), we approximate the KPZ non-linearity \((\partial _xh)^2\) by \((\partial _xh^{(0)})^2\). The Fourier-coefficients \(h^{(0)}_k\) are given by

where \(\mu _k=-4\pi ^2k^2\) (see [6]) and \(\eta _k\) is the k-th Fourier-coefficient of \(\eta (x,t)=\sum _k\eta _ke^{2\pi ikx}\), i.e., the Fourier-series of the KPZ noise from (1). This procedure is equivalent to solving the KPZ equation by a low order perturbation solution with respect to \(\lambda \), which was performed in [6]. We then replace in \(\left\langle \int _0^1dx\,(\partial _xh^{(0)})^2\right\rangle \) and \(\left\langle \int _0^1dx\,(\partial _xh^{(0)})^4\right\rangle \) the non-linearity \((\partial _xh^{(0)})^2\) with any of its generalized discretizations \(\mathcal {N}_\delta ^{(\gamma )}[h^{(0)}]\),

where we have defined

as the continuum variant of (10). For simplicity, as the operator only acts on x we omit the time t in the above equation. Of course, the expressions from (50), (51) will diverge for \(\delta \rightarrow 0\). The necessary regularization of these expressions is performed by introducing a smallest \(\delta >0\).

Both ways of regularization are based on the physical idea of introducing a smallest length scale [38], here in real space and in [6] in Fourier space, so their respective results are directly comparable to one another.

6.2 Expected Integral Norms of the Non-linearity

The expectation of the \(L^1\)-norm of \(\mathcal {N}_\delta ^{(\gamma )}[h^{(0)}]\) from (50) is evaluated for \(t\gg 1\) and \(\delta \ll 1\) as

where the details of the calculation are given in Appendix A. Similarly, the expectation of the \(L^2\)-norm squared from (51) reads

as shown in Appendix B. The expressions in (53) and (54) being divergent for \(\delta \rightarrow 0\) reflect the regularity issues from above.

6.3 Approximations of the TUR Constituents

We now establish how the expressions in (50) and (51) are related to the respective constituents of the thermodynamic uncertainty relation.

6.3.1 The Output Functional

Consider the dimensionless form of the KPZ equation from (1) (see, e.g., also [6]). Performing a spatial integration within the boundaries (0, 1) yields with the definition of the output functional \(\varPsi (t)\) from (22)

Due to the periodic boundary conditions the diffusive term vanishes. A subsequent averaging leads to

In the NESS, (56) becomes

We now approximate the right hand side of (57) by (50) and thus we find with (53) for the output functional in lowest non-vanishing order of \(\lambda _\text {eff}\) and for \(t_s\gg 1\)

6.3.2 The Total Entropy Production

From [6] we know that in the NESS \(\left\langle \varDelta s_\text {tot}\right\rangle =\sigma \,t_s\) holds with \(\sigma \) as the dimensionless entropy production rate given by (see [6])

where we now approximate the right hand side of (59) by (51). Hence, with (54) we obtain for the entropy production rate for \(t_s\gg 1\)

6.3.3 The Variance

We have

where

with \(h_0(t)\) the 0-th coefficient of the Fourier series \(h(x,t)=\sum _kh_k(t)e^{2\pi ikx}\) for the KPZ solution. The \(h_k\) may be expanded in terms of the effective coupling constant \(\lambda _\text {eff}\) and to lowest non-vanishing order it reduces to \(h_k(t)\approx h^{(0)}_k(t)\), where the latter corresponds to the solution of the Edwards-Wilkinson equation \((\lambda _\text {eff}=0)\). Hence, to lowest non-vanishing order we get from (62)

where (49) and the relation \(\left\langle \eta _0(r)\eta _0(s)\right\rangle =\delta (r-s)\) have been used. The second term in (61) is known from (58) and thus gives no contribution to the \(O(\lambda _\text {eff}^0)\)-term from (63). However, for completeness we note that the next non-vanishing term in a \(\lambda _\text {eff}\)-expansion of \(\left\langle (\varPsi _s(t_s))^2\right\rangle \) is \(O(\lambda _\text {eff}^2)\) and the prefactor of \(\lambda _\text {eff}^2\) contains the contribution \(1/((\gamma +1)^2\delta ^2)t_s^2\), which cancels the second term in (61). This is similar to the continuum case in [6]. Thus, to lowest non-vanishing order in \(\lambda _\text {eff}\), the variance is for all \(0\le \gamma \le 1\) given by

in dimensionless form.

6.3.4 The Discrete TUR Product

As the variance from (64) is to lowest order identical to the theoretically predicted one, the TUR product as a function of \(\gamma \), denoted by \(\mathcal {Q}^{(\gamma )}_\delta \), reads with (58) and (60) for \(\delta \ll 1\), \(t_s\gg 1\)

Since \(\mathcal {Q}^{(\gamma )}_\delta \) is monotonously increasing with \(\gamma \), the case of \(\gamma =0\) represents a lower bound on the TUR product. Hence, the TUR is clearly not saturated as was predicted in [6].

Compared to [6], we here follow an independent path in obtaining the TUR product in (65). Instead of using a Fourier space representation, we derive the TUR product from real space calculations. In particular, we introduce a smallest length scale \(\delta \) in real space as the regularizing parameter opposed to a cutoff parameter in the Fourier spectrum in [6]. In other words, we work here with the full Fourier spectrum but have to approximate the non-linearity by (52), whereas in [6] we calculated the exact non-linearity, but only on a finite Fourier spectrum with \(|k|\le \varLambda \). Below we connect these two approaches. We note that the divergences of \(\left\langle \varPsi (t)\right\rangle ^2\) and \(\left\langle \varDelta s_\text {tot}\right\rangle \) are in the present representation in \(1/\delta ^2\) for \(\delta \rightarrow 0\), whereas in [6] these expressions diverge in \(\varLambda ^2\) for \(\varLambda \rightarrow \infty \). Thus, the analysis above may well be understood as an alternative way of calculating the thermodynamic uncertainty relation.

6.4 Comparison of the Approximated TUR Constituents with the Theoretical Predictions

To compare the results of the approximated TUR components in (58) and (60) to the theoretical predictions from [6], we need to express the lattice-spacing \(\delta \) in terms of the Fourier-cutoff \(\varLambda \) used in [6]. On the interval (0, 1), the lattice-spacing is given by \(\delta =1/L\), with L the number of grid-points. Using the link between L and \(\varLambda \) from (21) leads to

where the last step holds for large enough \(\varLambda \). Thus, with the theoretical predictions for \(\left\langle \varPsi _s(t_s)\right\rangle ^2\) and \(\left\langle \varDelta s_\text {tot}\right\rangle \) from [6] given by

we can calculate the relative deviation \(\varDelta \) of \(\left( \left\langle \varPsi (t)\right\rangle ^{(\gamma )}_\delta \right) ^2\) and \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(\gamma )}_\delta \), respectively. For the expectation of the output functional squared we obtain for \(\varLambda \gg 1\) with (66), (67) and (58)

Analogously, we get for the relative deviation of \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(\gamma )}_\delta \) for \(\varLambda \gg 1\) and with (66), (68) and (60)

With the theoretical prediction from [6],

where the last step holds for \(\varLambda \gg 1\), we calculate the relative deviation of the thermodynamic uncertainty product according to

In Table 4 we show the relative deviations of all three quantities for some significant values of \(\gamma \).

As can be seen, the overall best result is obtained for \(\gamma =1/2\). For all other choices of \(\gamma \) as displayed in Table 4, either all the relative errors are greater or, if one of the three errors is chosen to be zero, the two others turn out to be larger than the respective ones for \(\gamma =1/2\). In fact, \(\gamma =1/2\) minimizes the target function

for \(w=2\). Choosing, e.g., \(w=1,\,3\) results in \(\gamma =0.48,\,0.52\), respectively. Hence, \(\gamma =1/2\) provides in a natural sense a much better approximation than \(\gamma =0,\,1\).

\(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) from (27) and (34), respectively, for \(\mathcal {N}^{(\gamma )}_l\) with \(\gamma =0,\,1/2,\,1\). The dots represent the numerical data for \(\{\widetilde{\nu },\widetilde{\varDelta _0},\widetilde{\lambda }\}=\{1.0,1.0,0.1\}\), \(\varDelta t=10^{-2}\), \(E=250\) and \(L=128\). The straight lines show fits according to (36) and (39) with fit-parameters \(a_L\) and \(e_L\), respectively

The main purpose of the above analysis was to confirm and explain our key numerical findings from Fig. 1, Table 1 and Fig. 2, Table 2. Namely, that for \(\gamma =1/2\) the error of \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) nearly vanishes, while \(\left\langle \varDelta s_\text {tot}\right\rangle \) is underestimated by roughly \(10\%\) and consequently the TUR product is underestimated by roughly \(10\%\) as well (see Table 3). These findings are confirmed by the corresponding analytical results in Table 4 and (65). Furthermore, we infer from the analysis above that the deviation for \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) (and thus for the TUR) cannot be reduced by changing the parameters of the numerical scheme like lattice-size \(\delta =\varDelta x\) or the time step \(\varDelta t\). It is instead caused by an intrinsic property of the non-linear operator \(\mathcal {N}_\delta ^{(1/2)}\) which recovers the correct scaling of \(\left\langle \int _0^1dx\,(\partial _xh^{(0)})^2\right\rangle \), but underestimates the prefactor in the scaling form of \(\left\langle \int _0^1dx\,(\partial _xh^{(0)})^4\right\rangle \) by exactly \(10\%\).

6.5 Numerical Results for the Generalized Discretization of the Non-linearity

The above analytical results from (65) as well as Table 4 are confirmed by additional numerical simulations for \(\mathcal {N}^{(\gamma )}_l\) with \(\gamma =0,\,1/2,\,1\). Fig. 4 shows the data for \(\left\langle \varPsi ^{(N)}(t)\right\rangle ^2\) and \(\left\langle \varDelta s_\text {tot}\right\rangle ^{(N)}\) from (27) and (34), respectively. We quantify the significant differences between the respective graphs in Table 5.

A comparison of the numerically found relative errors \(\varDelta \) in Table 5 to those analytically obtained in Table 4 shows very good agreement, which supports the above analysis. As the variance is not dependent on the respective choice of \(\gamma \) (see (64)), which was also reproduced by the numerics, we refrain from explicitly showing this plot as there is no discernible difference in the three graphs. Finally, we show the TUR product \(\mathcal {Q}^{(N)}\) for the three different choices of \(\gamma \) in Fig. 5, indicating a clear distinction between the three different discretizations and good agreement with the analytically calculated values from (65) represented by the dashed lines in the plot.

7 Conclusion

We have performed direct numerical simulation of the KPZ equation driven by space-time white noise on a spatially finite interval in order to test the analytical results from [6] regarding the KPZ–TUR, strictly speaking valid in the EW-scaling regime of the KPZ equation as being based on a perturbation expansion in Fourier space.

Due to the spatial roughness of the solution to the KPZ equation (see Sect. 6), the discretization of the nonlinear term is of great importance. It may be chosen from a set of different variants introduced over the last few decades, which all belong to the so-called generalized discretization \(\mathcal {N}^{(\gamma )}_\delta \), with \(0\le \gamma \le 1\) [37]. The numerical data in Sect. 5 was obtained with \(\gamma =1/2\), whereas in Sect. 6.5 we also used \(\gamma =0,\,1\), to illustrate the lower and upper bounds of the KPZ–TUR product, respectively. The choice of \(\gamma =1/2\) leads to the so-called improved discretization [34] for the KPZ equation. \(\mathcal {N}^{(1/2)}_\delta \) is distinguished by the fact that it preserves the continuum steady state probability distribution of h(x, t) [34, 37, 38]. This implies that also the continuum expression for the total entropy production as derived in [6] remains true in the discrete case (\(\delta >0\)). As the limit \(\delta \rightarrow 0\) inherently diverges due to the surface roughness, we believe this feature of \(\mathcal {N}^{(1/2)}_\delta \) to be of importance. We have further analytically shown in Sect. 6 and confirmed numerically in Sect. 6.5 that the discretization with \(\gamma =1/2\) leads to the most accurate approximation of the results in [6], which again highlights the significance of \(\mathcal {N}^{(1/2)}_\delta \).

A central result of this paper, numerically obtained in Sect. 5.4 and analytically shown in Sect. 6.3.4, is that for all choices of \(\gamma \) the TUR product clearly does not saturate the lower bound \(\mathcal {Q}=2\). In particular, we have found as lowest value 4 for \(\gamma =0\) and as largest value 6 for \(\gamma =1\). Our preferred choice of \(\gamma =1/2\) leads to a TUR product of 9/2 (see Sect. 6.3.4), which is also found within the numerical data in Sect. 5.4. This \(10\%\) underestimation of the theoretical prediction from [6] is independent of the lattice spacing \(\delta \) and the time-step size \(\varDelta t\). By using an idea presented in [39], which consists basically of testing how the discretized non-linearity of a rough SPDE acts on the solution of the corresponding linearized equation, we were able to show analytically that this deviation is an intrinsic property of the \(\mathcal {N}^{(1/2)}_\delta \)-operator. Whereas it recovers the correct scaling of \(\left\langle \varPsi (t)\right\rangle ^2\) it underestimates the scaling factor of \(\left\langle \varDelta s_\text {tot}\right\rangle \) by \(10\%\) (see Sect. 6). Furthermore, the analysis in Sect. 6 may be seen as an alternative way, compared to [6], of deriving the KPZ–TUR.

We thus conclude that the value 9/2 for the TUR product obtained with \(\gamma =1/2\) is the most reliable result that can be achieved by direct numerical simulation of the KPZ equation. Regarding future work, the findings in [34,35,36] lead us to believe that a pseudo spectral simulation of the KPZ equation might yield an even closer approximation to the value 5 as found in [6] than the one obtained in this paper by direct numerical simulation.

The numerical verification of the \(\lambda _\text {eff}\)-dependent TUR in Sect. 5.5 as well as approaching the intermediate regime \(\lambda _\text {eff}\approx \lambda _\text {eff}^\text {c}\) analytically and numerically will be pursued in future work.

References

Barato, A.C., Seifert, U.: Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015)

Gingrich, T.R., Horowitz, J.M., Perunov, N., England, J.L.: Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016)

Gingrich, T.R., Rotskoff, G.M., Horowitz, J.M.: Inferring dissipation from current fluctuations. J. Phys. A 50(18), 184004 (2017)

Horowitz, J.M., Gingrich, T.R.: Thermodynamic uncertainty relations constrain non-equilibrium fluctuations. Nat. Phys. 16(1), 15–20 (2019)

Seifert, U.: Stochastic thermodynamics: from principles to the cost of precision. Physica A 504, 176–191 (2018)

Niggemann, O., Seifert, U.: Field-theoretic thermodynamic uncertainty relation. J. Stat. Phys. 178, 1142–1174 (2020)

Kardar, M., Parisi, G., Zhang, Y.-C.: Dynamic scaling of growing interfaces. Phys. Rev. Lett. 56, 889–892 (1986)

Hentschel, H.G.E., Family, F.: Scaling in open dissipative systems. Phys. Rev. Lett. 66, 1982–1985 (1991)

Halpin-Healy, T., Takeuchi, K.A.: A KPZ Cocktail–Shaken, not stirred. J. Stat. Phys. 160(4), 794–814 (2015)

Sasamoto, T.: The 1D Kardar—Parisi-Zhang equation: height distribution and universality. Progress Theoret. Exp. Phys. 2, 2016 (2016)

Takeuchi, Kazumasa A.: An appetizer to modern developments on the Kardar–Parisi–Zhang universality class. Physica A, 504, 77–105 (2018). Lecture Notes of the 14th International Summer School on Fundamental Problems in Statistical Physics

Spohn, H.: The 1+1 dimensional Kardar–Parisi–Zhang equation: more surprises. J. Stat. Mech. 2020(4), 044001 (2020)

Prähofer, M., Spohn, H.: Exact scaling functions for one-dimensional stationary KPZ growth. J. Stat. Phys. 115, 255–279 (2004)

Sasamoto, T., Spohn, H.: Exact height distributions for the KPZ equation with narrow wedge initial condition. Nucl. Phys. B 834(3), 523–542 (2010)

Amir, G., Corwin, I., Quastel, J.: Probability distribution of the free energy of the continuum directed random polymer in 1 + 1 dimensions. Commun. Pure Appl. Math. 64(4), 466–537 (2011)

Takeuchi, K.A., Sano, M.: Evidence for geometry-dependent universal fluctuations of the Kardar–Parisi–Zhang interfaces in liquid-crystal turbulence. J. Stat. Phys. 147, 853–890 (2012)

Imamura, T., Sasamoto, T.: Exact solution for the stationary Kardar–Parisi–Zhang equation. Phys. Rev. Lett. 108, 190603 (2012)

Meerson, B., Sasorov, P.V., Vilenkin, A.: Nonequilibrium steady state of a weakly-driven Kardar–Parisi–Zhang equation. J. Stat. Mech. 2018(5), 053201 (2018)

Saberi, A.A., Dashti-Naserabadi, H., Krug, J.: Competing universalities in Kardar–Parisi–Zhang growth models. Phys. Rev. Lett. 122, 040605 (2019)

Rodríguez-Fernández, E., Cuerno, R.: Non-KPZ fluctuations in the derivative of the Kardar–Parisi–Zhang equation or noisy Burgers equation. Phys. Rev. E 101, 052126 (2020)

Mathey, S., Agoritsas, E., Kloss, T., Lecomte, V., Canet, L.: Kardar–Parisi–Zhang equation with short-range correlated noise: emergent symmetries and nonuniversal observables. Phys. Rev. E 95, 032117 (2017)

Niggemann, O., Hinrichsen, H.: Sinc noise for the Kardar–Parisi–Zhang equation. Phys. Rev. E 97, 062125 (2018)

Squizzato, D., Canet, L.: Kardar–Parisi–Zhang equation with temporally correlated noise: a nonperturbative renormalization group approach. Phys. Rev. E 100, 062143 (2019)

Fukai, Y.T., Takeuchi, K.A.: Kardar–Parisi–Zhang interfaces with inward growth. Phys. Rev. Lett. 119, 030602 (2017)

Fukai, Y.T., Takeuchi, K.A.: Kardar–Parisi–Zhang interfaces with curved initial shapes and variational formula. Phys. Rev. Lett. 124, 060601 (2020)

Iwatsuka, T., Fukai, Y.T., Takeuchi, K.A.: Direct evidence for universal statistics of stationary Kardar–Parisi–Zhang interfaces. Phys. Rev. Lett. 124, 250602 (2020)

Marinari, E., Pagnani, A., Parisi, G.: Critical exponents of the KPZ equation via multi-surface coding numerical simulations. J. Phys. A 33(46), 8181–8192 (2000)

Prolhac, S., Spohn, H.: Height distribution of the Kardar–Parisi–Zhang equation with sharp-wedge initial condition: Numerical evaluations. Phys. Rev. E 84, 011119 (2011)

Hartmann, A.K., Le Doussal, P., Majumdar, S.N., Rosso, A., Schehr, G.: High-precision simulation of the height distribution for the KPZ equation. EPL (Europhys. Lett.) 121(6), 67004 (2018)

Priyanka., Täuber., Uwe C., Pleimling, M.: Feedback control of surface roughness in a one-dimensional Kardar–Parisi–Zhang growth process. Phys. Rev. E 101, 022101 (2020)

Ebrahimi Viand, R., Dortaj, S., Nedaaee O., Seyyed E., Nedaiasl, K., Sahimi, M.: Numerical Simulation and the Universality Class of the KPZ Equation for Curved Substrates. arXiv e-prints, arXiv:2007.09761 (2020)

Cannizzaro, G., Matetski, K.: Space-time discrete KPZ equation. Commun. Math. Phys. 358, 521–588 (2018)

Blömker, D., Kamrani, M., Hosseini, S.M.: Full discretization of the stochastic Burgers equation with correlated noise. IMA J. Numer. Anal. 33(3), 825–848, 01 (2013)

Lam, C.-H., Shin, F.G.: Improved discretization of the Kardar–Parisi–Zhang equation. Phys. Rev. E 58, 5592–5595 (1998)

Giada, L., Giacometti, A., Rossi, M.: Pseudospectral method for the Kardar–Parisi–Zhang equation. Phys. Rev. E 65, 036134 (2002)

Gallego, R., Castro, M., López, J.M.: Pseudospectral versus finite-difference schemes in the numerical integration of stochastic models of surface growth. Phys. Rev. E 76, 051121 (2007)

Buceta, R.C.: Generalized discretization of the Kardar–Parisi–Zhang equation. Phys. Rev. E 72, 017701 (2005)

Sasamoto, T., Spohn, H.: Superdiffusivity of the 1D lattice Kardar–Parisi–Zhang equation. J. Stat. Phys. 137, 917 (2009)

Hairer, M., Voss, J.: Approximations to the stochastic Burgers equation. J. Nonlinear Sci. 21, 897–920 (2011)

Greiner, A., Strittmatter, W., Honerkamp, J.: Numerical integration of stochastic differential equations. J. Stat. Phys. 51, 95–108 (1988)

Krug, J., Spohn, H.: Kinetic roughening of growing surfaces. In: Godrèche, C. (ed.) Solids far from equilibrium. Cambridge Univerity Press, Cambridge (1991)

Moser, K., Kertész, J., Wolf, D.E.: Numerical solution of the Kardar—Parisi–Zhang equation in one, two and three dimensions. Physica A 178(2), 215–226 (1991)

Miranda, V.G., Reis, F.D.A.: Numerical study of the Kardar–Parisi–Zhang equation. Phys. Rev. E 77, 031134 (2008)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Stochastic Modelling and Applied Probability, vol. 23. Springer, Berlin (1992)

Corwin, I., Shen, H.: Some recent progress in singular stochastic PDEs. Bull. Am. Math. Soc. (N. S.) 57(3), 409–454 (2020)

Orszag, S.A.: On the elimination of aliasing in finite-difference schemes by filtering high-wavenumber components. J. Atmos. Sci. 28(6), 1074 (1971)

Basdevant, C., Deville, M., Haldenwang, P., Lacroix, J.M., Ouazzani, J., Peyret, R., Orlandi, P., Patera, A.T.: Spectral and finite difference solutions of the Burgers equation. Comput. Fluids 14(1), 23–41 (1986)

Krug, J.: Origins of scale invariance in growth processes. Adv. Phys. 46(2), 139–282 (1997)

Poças, D., Protas, B.: Transient growth in stochastic Burgers flows. Discrete Contin. Dyn. Syst. B 23, 2371 (2018)

Acknowledgements

We would like to thank an anonymous reviewer for his/her valuable remarks.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Chris Jarzynski.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Expectation of the \(L^1\)-Norm of \(\mathcal {N}_\delta ^{(\gamma )}[h^{(0)}(x)]\)

To obtain the result in (53), we define

then the expression in (52) may also be written as

Using (75), (50) for \(0\le \gamma \le 1\) reads

The first two terms in (76) are calculated via

where

Denoting by \(\overline{(\cdot )}\) the complex conjugate, the right hand side of (77) is evaluated as follows

where we have used in the second step that \(\int _0^1dx\,e^{2\pi i(k-l)x}=\delta _{k,l}\) with \(\delta _{k,l}\) the Kronecker symbol. The third step employs the two-point correlation function of \(h^{(0)}_k\) from (87) for \(t\gg 1\), with \(\mu _k=-(2\pi k)^2\). Using (78) we get

With the substitution \(x=2\pi k(p+q)\delta \) and \(\delta \ll 1\), we may rewrite (80) as

The integral in (81) can be evaluated by either using the residue theorem or by employing an adequate CAS, and yields \(\pi /2\). Hence, the expression of (77) is given for \(t\gg 1\) and \(\delta \ll 1\) by

The last term in (76) is evaluated analogously. Thus by using again the property of the Fourier eigenbasis and (87) for \(t\gg 1\) we get

where we have substituted \(x=2\pi k\delta \) with \(\delta \ll 1\) and used the value of the integral in (81). Combining (82) and (83) gives (53).

Appendix B: Expectation of the \(L^2\)-Norm Squared of \(\mathcal {N}_\delta ^{(\gamma )}[h^{(0)}(x)]\)

To ease the calculation of (51), let us first rewrite (52) in the following way,

Hence,

Consequently,

where we have used (78). The four point correlation function can be evaluated via Wick’s theorem and

with

\(\mu _k=-4\pi ^2k^2\) as above [6]. With (87) and (88), the expression in (86) becomes

i.e., (89) results in

where we have substituted \(x=2\pi l\delta \) for \(\delta \ll 1\) and used (88) for \(t\gg 1\). Next, we will calculate

where we have again used Wick’s theorem and (87) with (88) as well as an index shift \(k-l\leftrightarrow l\) to obtain the prefactor of two in the second term. The first term in (91) reads for \(t\gg 1\)

since the second sum in (92) has the same form like the one in (83). The second term in (91) may be evaluated with (88) for \(t\gg 1\) by substituting \(x=2\pi l\delta \) for \(\delta \ll 1\) according to

where we have again used the value of the integral in (81). Lastly, with Wick’s theorem, (87), (88) and (78) we get

Here, we used again an index shift \(k-l\leftrightarrow l\) to obtain the factor of two in front of the first sum of (94). The first term in (94) has, after inserting (88) for \(t\gg 1\) and substituting \(x=2\pi l\delta \) the same form as the second sum in (92) and thus vanishes. The second term in (94) becomes for \(t\gg 1\)

where we substituted in the second step \(x=\pi l\delta \). Hence, combining (90), (92), (93) and (95) leads to

which is the result given in (54).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Niggemann, O., Seifert, U. Numerical Study of the Thermodynamic Uncertainty Relation for the KPZ-Equation. J Stat Phys 182, 25 (2021). https://doi.org/10.1007/s10955-020-02692-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-020-02692-z