Abstract

As basic data for seismic activity analysis, hypocenter catalogs need to be accurate, complete, homogeneous, and consistent. Therefore, clarifying systematic errors in catalogs is an important discipline of seismicity research. This review presents a systematic model-based methodology to reveal various biases and the results of the following analyses. (1) It is critical to examine whether there is a non-stationary systematic estimation bias in earthquake magnitudes in a hypocenter catalog. (2) Most earthquake catalogs covering long periods are not homogeneous in space, time, and magnitude ranges. Earthquake network structures and seismometers change over time, and therefore, earthquake detection rates change over time and space. Even in the short term, many aftershocks immediately following large earthquakes are often undetected, and the detection rate varies, depending on the elapsed time and location. By establishing a detection rate function, the actual seismic activity and the spatiotemporal heterogeneity of catalogs can be discriminated. (3) Near real-time correction of source locations, far from the seismic observation network, can be implemented based on better determined source location comparisons of other catalogs using the same identified earthquakes. The bias functions were estimated using an empirical Bayes method. I provide examples showing different conclusions about the changes in seismicity from different earthquake catalogs. Through these analyses, I also present actual examples of successful modifications as well as various misleading conclusions about changes in seismic activity. In particular, there is a human made magnitude shift problem included in the global catalog of large earthquakes in the late nineteenth and early twentieth centuries.

Similar content being viewed by others

1 Introduction

Research on seismic activity requires hypocenter data on the occurrence of an earthquake, with the elements of time, longitude, latitude, depth, and magnitude, which are calculated from a set of seismic wave records. Earthquake hypocenters are published in quasi-real-time or regularly as hypocenter catalogs by relevant national and international organizations. For example, the Japan Meteorological Agency (JMA) is responsible for earthquake observations in, and around, Japan.

Earthquake hypocenters provide basic data for seismicity analyses, and therefore, earthquake catalogs should be precise, complete, homogeneous, and consistent. In addition to precision and completeness, homogeneity is crucial for ensuring the accuracy and applicability of the collected data; homogeneity implies that a catalog maintains a similar quality and consistency throughout all data for a particular region and time span, which leads to good convertibility between different earthquake catalogs from the same region and time period.

This review focuses on (1) data quality issues in existing catalogs, (2) techniques and methods for improving catalog quality, and (3) methods for avoiding pitfalls caused by data deficits. Solutions to these problems are invaluable for understanding complex seismic processes, forecasting seismicity, and thus, producing reliable earthquake hazard evaluations.

There are two types of errors that arise when estimating earthquake elements, which are random errors and systematic errors. Random errors can be reduced by increasing the number of data points. Systematic errors are a serious research focus in statistical seismology for clarifying observational reasons. It is unavoidable that the properties of biased elements described in the catalog differ to some extent depending on the region, but it is troublesome if they change over time. Without acknowledging this, it has sometimes been misunderstood that seismic activity in a local crust is abnormally quiet. The loss of homogeneity or uniformity of such catalogs has often been caused by artificial changes in the observation and data processing methods of observation stations and seismometers.

It is useful to compare elements of the same earthquake, such as its magnitude, to those in other catalogs for determining whether transient biases in magnitude evaluation exist. The time-dependent systematic differences in the magnitudes of the same earthquake in different catalogs suggest that one of the catalogs has inconsistent factors. The present study uses statistical models and methods to reveal bias or other systematic inhomogeneities of the respective hypocenter elements among various catalogs.

2 Review

2.1 Magnitude shift

Magnitude estimates contain both random and systematic errors (biases). Random errors have many causes, such as different site conditions, radiation patterns, and reading errors. A number of studies have shown that these errors are normally distributed (Freedman 1967; von Seggern 1973; Ringdal 1976). Systematic errors arise due to several causes, with the most obvious being the changes in the magnitude calculation technique, station characteristics, and station installation or removal. It has recently become clear that systematic errors in magnitudes exhibit considerable temporal variation in most seismicity catalogs, despite efforts by network operators to maintain consistency (e.g., Haberman and Craig 1988).

2.1.1 Statistical models and methods for detecting magnitude biases

One significant area of study regarding earthquake prediction in the last century is seismic quiescence in the background seismic activity of a region. Seismic quiescence is a phenomenon in which the seismic activity becomes abnormally low for a certain period of time in a region where there is a certain amount of seismic activity (occurrence of small and medium-sized earthquakes). It is considered to be one of the intermediate-term precursors of earthquakes because the epicenter is sometimes located in all or part of the area (including adjacent areas).

For example, Habermann (1988), Wyss and Habermann (1988), and Matthews and Reasenberg (1988) discuss a quantitative (statistical) analysis for detecting seismicity quiescence. However, there are at least two issues in their theories and methods. The first issue is algorithm declustering of earthquake occurrence data to obtain background seismicity and then testing the stationary Poisson process against the piecewise stationary Poisson process that represents the seismic rate lowering from the preceding period (see the next subsubsection for further comments). The second issue is the discrimination of genuine seismic quiescence from apparent seismic quiescence caused by a biased magnitude evaluation (magnitude shift) within the assumed period. There is concern regarding whether the sizes of observed earthquakes can be expected to be homogeneously evaluated (referred to herein as the magnitude shift issue).

Catalogs by different institutions adopt magnitude scales that are defined differently owing to the emphasis and measurement of various aspects of seismic waves and different estimation methods. For example, Utsu (1982c, 2002) investigated the systematic mean differences of magnitude scales between different catalogs using the same earthquakes from global and regional catalogs that cover a common region. These differences are dependent on magnitude scale. Despite systematic differences in magnitude between the catalogs due to differently defined magnitude scales, the aim of this study is to examine whether time-dependent systematic differences exist. If a time-dependent change in magnitude difference occurs for a particular period and magnitude range, it indicates a change in magnitude evaluations (magnitude shift) in at least one of the two compared catalogs.

Firstly, the time-dependent systematic difference ψ(t, M(1)) in the magnitude M(1) of an earthquake in catalog #1 compared to its magnitude M(2) in catalog #2 was considered. To examine this, the differences in the use of\( {M}_i^{(2)}-{M}_i^{(1)} \)for the same earthquake i in the two catalogs were noted. As discussed in Ogata and Katsura (1988), a two-dimensional cubic B-spline function \( {\psi}_{\theta}\equiv {\psi}_{\theta}\left({t}_i,{M}_i^{(1)}\right) \) of time and magnitude for systematic difference between the magnitudes was considered.

To estimate the bias ψθ, the residual sum of squares

was first considered for the goodness-of-fit of the surface for the biases. Here, if necessary, the systematic difference as an extended function \( {\psi}_{\theta}\equiv {\psi}_{\theta}\left({t}_i^{(1)},{x}_i^{(1)},{y}_i^{(1)},{z}_i^{(1)}:{M}_i^{(1)}\right) \) of the time, location, depth, and magnitude could be considered. Haberman and Craig (1988) considered only time-dependent biases of the magnitude differences for applying a traditional test statistic, which is equivalent to assuming a piecewise constant function ψθ = ψ(t) of time.

Then, the penalized residual sum of squares was considered

to stabilize the convergence of the unique solution. Here, the penalty function

with penalty weights w = (w1, ⋯, w5) works against the roughness of the function (or smoothness constraint). The weights of the penalty are optimized by considering the following Bayesian framework: First, consider the prior probability density function:

where the adjusting weight vector w is called a hyper-parameter. Then, together with the likelihood function,

the posterior function is given by

which corresponds to the penalized log likelihood. Here, the optimal selection of adjusted weights \( \hat{\mathbf{w}} \) (called hyper-parameters in the Bayesian framework) is derived by minimizing the Akaike Bayesian information criterion (ABIC; Akaike 1980; Parzen et al. 1998, pp. 309–332):

where dim(w) is the number of adjusting hyper-parameters w for maximization.

An efficient optimization calculation for the posterior model (2) is given in Murata (1993). In the case of the general log likelihood function, instead of the residual sum of squares in (1), this procedure requires many computational repetitions, including the incomplete conjugate gradient method and the quasi-Newton linear search method that were applied in Ogata and Katsura (1988, 1993) and Ogata et al. (1991, 2003).

Thus, the empirical Bayesian model with the smallest ABIC is regarded as having the best fit with respect to the hyper-parameters. This ABIC value can be compared with the ABIC value of the case in which some hyper-parameters (weights) are very large for very restricted cases, such as w2 = w4 = w5 = 108 in (3), which is equivalent to the case in which no temporal systematic change in ψθ occurred, namely, no magnitude shift.

For the optimized hyper-parameters (weights), the minimized PRSS in (2) provides the solution of the coefficients \( \hat{\theta} \) of the smoothed spline function ψθ, which is known as the maximum a posteriori (MAP) solution, namely, the ABIC of which should be compared with that of the time-constant case for significance. The penalized log-likelihood functions have a quadratic form with respect to the parameter, θ, and so, the posterior function is proportional to the normal distribution, its integration is reduced to the determinant of the variance-covariance matrix (details are provided in Murata (1993), Ogata and Katsura (1988, 1993), and Ogata et al. (1998, 2003)).

2.1.2 Magnitude shift issues regarding the JMA hypocenter catalog

Ogata et al. (1998) applied this method to examine the differences between the magnitudes MJ reported by the JMA and body-wave magnitudes (mb) reported by the National Earthquake Information Center (NEIC) of the US Geological Survey (USGS) Preliminary Determination of Epicenters (PDE) catalog in and around Japan during the period 1963–1989. Here, it is assumed that the global NEIC catalog in this period is homogeneous after the installation of the World-Wide Standardized Seismograph Network. The magnitude scale MJ of the JMA catalog before the mid-1970s is based on the definition of Tsuboi (1954), determined by the amplitude of the displacement-type seismometer. The optimally estimated function ψθin Fig. 1a for the bias indicates that the JMA magnitudes below MJ 5.5 are substantially underestimated in the period after 1976. This occurred by mixing the calibrated magnitudes for the velocity-type seismographs that measure smaller earthquakes. This magnitude change (magnitude shift) for earthquakes caused the appearance of a seismic gap offshore from Tokai District, in the eastern part of the Nankai Trough. This gap was reported by Mogi (1986) and others who were concerned with the expected Tokai earthquake.

Smoothed magnitude differences of earthquakes from JMA and USGS PDE catalogs from July 1965–1990 (a, c). The original version of the JMA catalog was revised in September 2004. The smoothed magnitude difference of earthquakes in the revised catalog and the original catalog is shown in panel b. Panel a compares the magnitude of the original JMA hypocenter catalog and c uses the revised JMA catalog. Contours show equidistance of 0.1 difference magnitude unit in the smoothed surface. Higher JMA magnitudes than USGS magnitudes are shown in purple. Light blue is used to show the opposite. The vertical red dotted line denotes 1977, when the significant change of the original JMA magnitude difference pattern in the range between mb 3.5–5

The epidemic type aftershock sequence (ETAS) model usually fits well to aftershocks and ordinary seismic activities in a self-contained seismogenic region. It stochastically decomposes the background seismicity and clustering components. The ETAS model is also useful for detecting the period of misfit to the earthquake occurrence data. In particular, it is extended to a simple non-stationary ETAS model, meaning that the two-stage ETAS model is applied to estimate a change-point and examine its significance by evaluating whether the two models applied to the data in the periods before and after the change point are statistically significantly different.

Ogata et al. (1998) applied the two-stage ETAS model to the JMA earthquake dataset of MJMA ≥ 4.5 for the period 1926–1995 and the central Japan region at 136–141 °E and 33–37 °N, with a depth of 50 km or shallower, and quiescence relative to the ETAS model was observed after approximately 1975–1976. In contrast, there was no relative quiescence by applying the ETAS model to the USGS-PDE data with mb ≥ 4.4 and depth≤ 100 km in the same region for the target period of 1963–1995. Furthermore, the significant relative quiescence in the JMA data for the same target period, depth range, and cutoff magnitude as those of the USGS data was confirmed. These suggested the magnitude shift of the JMA catalog after the mid-1970s, and therefore, whether a magnitude shift occurred in the JMA catalog after the mid-1970s was explored.

On September 25, 2003, the JMA revised magnitudes based on velocity seismometers so that new velocity magnitudes must be connected smoothly to the traditional magnitudes based on displacement seismometers (see Funasaki 2004). The smoothed differences of JMA magnitudes by the revision are shown in Fig. 1b, which indicates an increase in the new magnitude scale with time. Therefore, in the same way, the systematic magnitude difference of the new JMA hypocenter catalog against the PDE catalog was reanalyzed. Figure 1c shows that a significant bias in time remained, although the substantial change in the band from M 4.0 to 5.5 moved approximately 3 years ahead.

2.1.3 Magnitude shift issues regarding global hypocenter catalogs

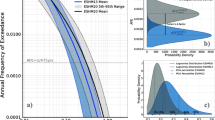

Bansal and Ogata (2013) identified seismicity changes approximately 4.5–6 years before the 2004 M 9.1 Sumatra earthquake in the Sumatra–Andaman Islands region (90–100 oE, 2–15 °N) using the USGS-NEIC and the International Seismological Centre (ISC) catalogs. These authors found that the two-stage ETAS model, with the change point at the middle of the year 2000, provided a significantly better statistical fit to both datasets of M ≥ 4.7 than the stationary ETAS model throughout the entire period. However, Fig. 2 shows that the results after the change point differed significantly. The former catalog implied the activation relative to the predicted ETAS seismicity after the change point, whereas the latter indicated the diametrically opposite, namely, the relative quiescence.

Opposite seismicity changes between NEIC and ISC catalogs. Estimated cumulative curves of the ETAS model to data from July 1976 until the M9 Smatra earthquake (December 25, 2004) in the Sumatra-Andaman Island region (see text for the region). The data presented are from the period up to the maximum likelihood estimate of change-point (red vertical dashed line for July 18, 2000) and the extrapolated ones to the rest of the period, for the data from a the NEIC PDE catalog and b the ISC catalog

Magnitude shifts either in the ISC catalog or in the NEIC catalog after the change point time were, therefore, suspected. Figure 3a, b shows significantly different distributions (both mean and variability) of the magnitude differences between the NEIC catalog and the ISC catalog before and after the change point time. Figure 3c, d demonstrates that the smoothed magnitude differences for a bias estimate are different in the range above magnitude Mc = 4.7 of nearly completely detected earthquakes.

Magnitude differences in USGS body wave magnitude mb and ISC body wave magnitude Mb (1973–2005). Mb–mb is shown in the a Sumatra area and b Japan area. Because the magnitude differences are 0.1 interval unit, the magnitude differences are plotted with small perturbations. The horizontal red lines are mean differences during the earlier period until 8500 days. The smoothed surfaces of Mb–mb on the plane of mb over the period are shown in c Sumatra area and d Japan area. Contours show an equidistance of 0.1 magnitude unit differences of the smoothed surface, and thin and thick purple show higher magnitudes differences than thin and thick light blue. The horizontal light blue line represents the lowest magnitude of completeness, Mc = 4.7. The vertical dark blue lines show changes of the magnitude difference pattern in the range where plots were highly changed

To examine which had changed, a third catalog was required. Access to any other available catalog for the Sumatra-Andaman Islands area to investigate the magnitude shift was not possible; therefore, Japan and its surrounding areas were chosen as the study region, with the assumption that the magnitude shift occurred globally and was not limited to the Sumatra-Andaman Islands area. Here, earthquake magnitudes were provided by the USGS-NEIC, ISC, and JMA catalogs. Figure 4a, b shows the magnitude differences Mb–MJMA and mb–MJMA of the same earthquakes besides Mb–mb in the Japan area, respectively, and shows a significantly different mean (bias) of the magnitude difference before and after the same time as the change point time in the Sumatra-Andaman Islands region. Figure 3c, d indicates that the smoothed magnitude differences for bias estimations of Mb–MJMA are significantly larger than those of mb–MJMA in the range above about magnitude Mc = 4.7 of nearly completely detected earthquakes.

Magnitude differences of ISC and USGS magnitudes against JMA magnitude MJMA in the Japan area. Magnitude differences a MJMA–Mb, b MJMA–mb of the same earthquakes in ISC body wave magnitude Mb and USGS body wave magnitude mb, respectively, relative to the JMA magnitude (1973–2005), c smoothed surfaces of Mb–MJMA on the plane of MJMA, d shows the same as c, replacing Mb with mb. Contours show an equidistance of 0.1 magnitude unit differences of the smoothed surface, and higher magnitudes differences are shown in purple (in contrast to light blue). The vertical solid lines in a–d show changes of the magnitude difference pattern in the range where plots were highly changed

It was concluded that the magnitude shift was likely to have taken place in the ISC catalog and that Mb was underestimated during this time. The lowering of the ISC magnitudes in the year 1996 was likely due to the use of the International Data Centre (IDC) amplitude data of the Comprehensive Nuclear Test Ban Treaty Organization (CTBTO). In contrast, the NEIC discontinued the use of the IDC data because the IDC amplitudes were obtained with a different amplitude measuring procedure than that used at the NEIC, and implied a systematic lowering of the magnitude using IDC amplitudes (Dewey J.W. April 1, 2011, personal communication). The adoption of the IDC data took place simultaneously globally, including both the Sumatra-Andaman Islands and Japan.

2.1.4 Hypocenter catalog of global shallow large earthquakes in the twentieth century

Based on the fractal and self-similar features of earthquakes, it is statistically known that the occurrence of earthquakes is long-range dependent in time as well as in space. This applies to large earthquake sequences worldwide. This section criticizes conventional statistical tests that use the null hypothesis of the stationary Poisson process for great earthquakes, which resulted in the magnitude heterogeneity of the earlier global catalog of the twentieth century of large earthquakes.

Abe (1981) edited a catalog of global earthquakes of magnitude 7 and over for the period 1904–1980. In homogenizing the catalog, the author consistently adhered to the original definitions of surface-wave magnitude (MS) given by Gutenberg (1945) and Gutenberg (1956) to determine surface wave magnitude MS based on the amplitude and period data listed in Gutenberg and Richter’s unpublished notes, bulletins, from stations worldwide, and other sources. Later, Abe and Noguchi (1983a, 1983b) added earthquakes that occurred in the early period 1897–1917. Some corrections were made by Abe (1984). These catalogs provide a complete list of large shallow earthquakes for the period 1897–1980 (magnitude Ms ≥ 7, depth ≤ 70 km) on a uniform basis. All these catalogs are mutually consistent in their use of the magnitude scale, hereafter collectively called “Abe’s catalog.”

Having examined the broken line-shaped cumulative curve of M ≥ 7 earthquakes in Abe (1981) (Fig. 5a), Pérez and Scholz (1984) suspected the existence of magnitude shifts in Abe’s catalog. This was based on the assumption that the collection of such a large global earthquake should appear as a stationary Poisson process (uniformly random), which corresponds to a straight linear cumulative curve. These authors conventionally tested Abe’s catalog against the null hypothesis as a stationary Poisson process (uniformly random) to reject the homogeneity of the catalog and then claimed that two remarkable changes in the rate around 1922 and 1948 resulted from the inhomogeneity of earthquake catalogs for some instrumental-related reasons. However, they did not consider the additions and corrections by Abe and Noguchi (1983a, 1983b). The excess before 1908 was almost consistent with the corrections of Abe and Noguchi, which was due to the amplitude data collected by Gutenberg from the un-dumping Milne seismometers before 1909 being too large due to the underestimation of seismometer magnification. In consideration of this, the aim of Ogata and Abe (1991) was to determine whether the changes around 1922 and 1948 did not change in seismicity.

Cumulative number of shallow earthquakes. Panels are a with MS ≥ 7 globally, b with MJMA ≥ 6 in the regional area around Japan (1885–1980) based on Utsu’s catalog, c with Ms ≥ 7 in low latitude areas, |θ | ≤ 20o, of the world (1897–1980), and d Ms ≥ 7 in high latitude areas, |θ | > 20o, of the world (1897–1980), where θ is the latitude

When hypothesizing that the change in the occurrence rate occurred due to magnitude determination bias, Pérez and Scholz (1984) concluded that the MS of the large earthquake in Abe (1981) before 1908, compared to those after 1948, was approximately 0.5 MS unit overestimated as well as a 0.2 MS unit overestimated in the period 1908–1948. The smoothed magnitude differences between the Pérez and Scholz catalog and Abe’s catalog by the estimation method in this study is shown in Fig. 6a, whereby, the quasi-periodic vibration of the Gibbs phenomena occurred in smoothing the discontinuous jumps at 1908 and 1948, as expected. It is clear that the argument presented by Pérez and Scholz (1984) strongly depends on their a priori assumption of an independent (nonstationary Poisson process) or short-range dependence of earthquake occurrence. They used the conventional sample mean difference test (z test), but this should take a higher significance level than the standard hypothesis, considering the effect of choosing a change point at which the difference is most pronounced (Matthews and Reasenberg 1988). Furthermore, the z test is inappropriate for long-term (inverse power decaying) correlated data, such as seismic activity, as discussed below.

Smoothed surfaces of magnitude differences of P&S and E&V catalogs against Abe catalog. a MP&S–MABE, b ME&V–MABE on the plane MABE against the elapsed time (1897–1980) for global earthquakes, c MABE–MUTSU, and d ME&V–MUTSU on the plane MUTSU over the period in years for Japan region. High magnitude differences are shown in purple (in contrast to than light blue). The vertical dotted lines in a–d denote 1922 and 1948

If the heterogeneity of the catalog occurs because of instrument error, these two cumulative patterns are expected to have similar shapes in multiple wide regions. Figure 5c, d indicates that there were clearly different trends in the regions of low latitude bounded by ± 20° and high latitude regions. Furthermore, it is clear that the space-time magnitude pattern of global seismicity appears non-stationary and non-homogeneous during a one-century period (Fig. 5c, d). This heterogeneity was discussed by Mogi (1974, 1979).

A twentieth-century catalog of earthquakes in and around Japan was edited by Utsu (1982b); this catalog included very many different earthquakes down to approximately M6, much smaller than M7 in Abe’s catalog, and the magnitude scale used was independently determined based on the definition of MJMA by Tsuboi (1954). The MJMA scale is close to MS, but there is a systematic linear deviation (Utsu 1982b). On average, MJMA differs from MS by 0.23 units at MJMA = 6.0 and - 0.30 units at MJMA = 8.0 (Hayashi and Abe 1984). The magnitudes of earthquakes for the period 1926–1980 were given by the Seismological Bulletins of the JMA, and the magnitudes for the period 1885–1925 were determined by Utsu (1979, 1982a). Utsu (1982b) compiled these catalogs into one complete catalog with M ≥ 6 for the period 1885–1980. Its catalog for shallow earthquakes (magnitude MJMA ≥ 6, depth 60 km, or shallow depth) in the region 128°–148 °E and 30°–46 °N of Utsu (1982b, 1985) is hereafter called “Utsu’s catalog.”

It is remarkable that seemingly similar seismic trends are observed despite different magnitude determinations during the same period in Japan (Fig. 5b) and high-latitude regions of the world (Fig. 5d). It is notable that, above a magnitude threshold of completely detected, the number of earthquakes in Japan and neighboring regions comprises approximately 20% of the globe, and also that the Utsu’s catalog (M ≥ 6) includes approximately 10 times the number of earthquakes in the same area than in Abe’s catalog (M ≥ 7). Despite such different magnitude thresholds, we see the similarity between Fig. 5b, d whereby, the seismicity rates in Japan and globally high latitude areas were high in the 1920s to the 1940s and gradually decreased until 1980. When assuming the Gutenberg–Richter law, this similarity in the two independent catalogs suggests that the catalogs reflect the change in the real seismic activity rather than the suspected editorial heterogeneity of the global catalog. Furthermore, the smoothed magnitude differences between Utsu’s and Abe’s catalogs (Fig. 6c) ensure that both have no magnitude shifts over time, which implies the magnitude shift of the Pérez and Scholz catalog due to Fig. 6a, as relative to those of Utsu’s catalog in Japan. The synchronous variation of seismic frequency in high-latitude regions and in the regional area around Japan, obtained from independent catalogs, suggests an external effect, such as large-scale motion of the Earth rather than the presupposed heterogeneity of the catalogs.

The broken line-type cumulative curve for major global earthquakes as appeared in Abe’s catalog (Fig. 5a) can be interpreted as follows. This persistent fluctuation or trend in appearance being very common in various geophysical records can be understood in relation to the long-range dependence. This is supported by the dispersion-time diagrams, spectrum, and analysis of the R/S statistic (Ogata and Abe 1991) for the occurrence times. The apparent long-term fluctuation can be reproduced by a set of samples from a stationary self-similar process.

The self-similarity or long-range dependence of a time series can be examined statistically by empirical plots of earthquake sequences (Hurst 1951; Mandelbrot and Wallis 1969a, 1969b, 1969c; Ogata 1988; Ogata and Katsura 1991; Ogata and Abe 1991). According to these authors, a much higher significance level is required for the null hypothesis of stationary long-range dependence of the data regardless of declustering, because the majority of decluster algorithms seem to remove short-range dependence, and complete removal of dependent shocks other than aftershocks is difficult for such data with long-range dependence. Furthermore, the implementation of a z test procedure should be carefully checked in seismicity studies.

Nevertheless, this claim of the heterogeneity of Abe’s catalog by Pérez and Scholz (1984) was adopted by Pacheco and Sykes (1992), who essentially followed the same magnitude-shift modification for the period 1900–1980 to convert surface wave magnitudes to moment magnitudes by the empirical relation (Ekström and Dziewonski 1988). Incidentally, there is an apparent change-point in 1978 for their catalog for the period 1969–1990 in Pacheco and Sykes (1992) (Fig. 5b), but they made no interpretation for this particular change in the cumulative curve.

Engdahl and Villaseñor (2002) adopted these moment magnitudes and compiled the CENT7.CAT database in the centennial catalog. However, 62% of earthquakes with Mw ≥ 7 before 1976 were based on the seismic moment catalog by Pacheco and Sykes (1992), most of which was based on the conversion of surface wave magnitude MS to seismic moment M0 and then to Mw through an empirical formula (see Noguchi (2009) for more issues and details). Incidentally, CENT7.CAT led to a seemingly broken line shape in 1948 for the cumulative number throughout the twentieth century (e.g., see Fig. 4 in Noguchi 2009, M ≧ 7.0)). Figure 6b, d shows the magnitude differences of the Engdahl and Villasenor catalog relative to Abe’ catalog and Utsu’s catalog, respectively; both of which remain the effect of the discontinuous magnitude shift between Abe’s and the Pérez and Scholz catalogs in the magnitude range greater than M6.5. Such earthquake catalogs in the twentieth century should, therefore, be used as basic data to evaluate damaging earthquakes, while carefully considering the issues outlined here.

2.2 Location correction of earthquake catalogs

Similar to replacing magnitudes by location (longitude, latitude, or depth) in the penalized sum of squares discussed above, a quasi-real-time correction of the hypocenter location can be performed. Revealing systematic errors in a catalog is a serious subject of seismological research.

2.2.1 Real-time correction of the epicenter location to calibrate global earthquake locations

A similar analysis was applied for the real-time correction of epicenter locations. For example, Ogata et al. (1998) utilized this model to calibrate global earthquake location estimates from a small seismic array at Matsushiro, central Japan. The Seismological Observatory of the JMA at Matsushiro has an array of stations called the Matsushiro Seismic Array System (MSAS), which consists of seven telemetering stations located equidistantly on a circle of radius of approximately 5 km, and at its center. The MSAS locates a focus by a combination of the azimuth of the wave approach and the epicentral distance (i.e., distance to the epicenter from the origin of the array), and thus, the MSAS plays various roles not only in reporting the seismic wave arrival times and related data to central institutions in Japan and the world, but also in quickly detecting and determining locations. This includes those of possible nuclear experiments as one of the IDC of CTBT.

Let an epicenter location estimated by the MSAS be (Δi, ϕi) in the polar coordinates centered at the MSAS origin, and the corresponding true location of be\( \left({\Delta}_i^0,{\phi}_i^0\right) \). Here, it is assumed that each earthquake location of the USGS-PDE catalog is the true location for the period 1984–1988. The structure of the bias was then analyzed with

for i = 1 , 2, . . . , N, where εi and ηi are unbiased mutually independent errors owing to the reading errors, whereas the functions f and g are the bias functions that are dependent upon the locations determined by the MSAS.

Whether these error data are useful for estimating the standard deviation of the error {ηi} in (9) was then investigated. That is to say, whether ηi ~ N(0,\( {s}^2{\sigma}_i^2 \)) for some constant s2. In contrast, for the other error εi in (8), it has been empirically determined that the estimation error of the epicentral distance Δ may be roughly proportional to Δ1/2. Whether this could improve the fit to the data was also investigated.

Each spatial function f and g was parameterized by cubic two-dimensional B-spline functions in the following way: A disc A with radius R of epicentral distance from the origin in polar coordinates was regarded as a rectangle [0, R] × [0°, 360°) in the ordinary Cartesian coordinates and was equally divided into MΔ × Mϕ rectangular subregions. For each f and g, the spline coefficients θ whose number is M = (MΔ + 3) × (Mϕ + 3) was estimated. For the explicit definition of the bi-cubic B-spline function, see Inoue (1986), Ogata and Katsura (1988, 1993), and Ogata and Abe (1991). Then, the sums of squares of the residuals was considered, given by

where 9801 parameters (i.e., MΔ = Mϕ = 96) were used for estimations. It was assumed that the functions f and g for the biases were sufficiently smooth for the stable estimation of a large number of coefficients. The same penalties were considered for functions f and g, as in Eq. 1 replacing ψ by f and g, for (10) and (11), and x and t by Δ and ϕ, respectively. Then, the optimal minimizations of the penalized square sum of residuals by the same method as given in the magnitude shift, subsubsection Statistical models and methods for detecting magnitude biases. More details and computational methods of the ABIC procedure with an efficient conjugate gradient algorithm are described in Ogata et al. (1998).

Real-time correction of the epicenter location utilized this model to calibrate the global earthquake location estimates from a small seismic array at the Japan Meteorological Agency Matsushiro Seismological Observatory, and this has been adopted for operational determinations for the Matsushiro catalog.

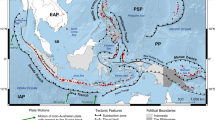

The USGS epicenters in Fig. 7a are much more accurate than those of the MSAS locations of earthquakes, where their differences are shown in Fig. 7b. Using the model and method described above, we had smoothed biases in polar coordinates, as shown in Fig. 7d, e, which can be used for the automatic modification of the future MSAS coordinates, as shown in Fig. 7c, without knowing the USGS epicenters. This supports the stability of the solutions of many parameters, as shown in Fig. 7d, e.

Correction of the epicenter locations determined by the local MSAS array for global earthquakes. Epicenter locations (gray disks) for earthquakes determined by MSAS; a earthquakes occurring in 1984–1988 and c earthquakes occurring in 1989–1992. The geographical world map is drawn by a polar coordinate system whose center is the location of the MSAS origin in central Japan. Black disks in a indicate USGS locations and b difference of epicenter locations of the same earthquake. An arrow indicates the shift from the MSAS location to the USGS location, provided that the differences of epicentral distance and azimuth are within 2o and 25o, respectively. These are used to minimize by the sum of least squares under a smoothness constraint (see text). The black disks in c are compensated locations from the MSAS data (gray disks) in c by the estimated bias functions f and g, shown in d and e, respectively. Contour plots in the panels d and e show the bias functions f for epicentral distance and g for azimuth in Eqs. (10) and (11), respectively, which are estimated using the data during 1984–1988 in Fig. 7a. The function f varies from − 3.5° to 2.5° in the angular distance, and the contour interval is 0.5°. The function g varies from − 45.0° to 50.0°, and the contour interval is 5.0°. Areas of negative function values are white

This result suggests that such systematic biases of MSAS epicenter locations reflect the accurate use of the vertical structure of the P wave velocity model for the Earth’s interior (Kobayashi et al. 1993). In addition, we provided discussions with figures of small regional biases of the residuals in Ogata et al. (1998).

2.2.2 Quasi-real-time correction of the depth location of offshore earthquakes

A correction method for the routinely determined hypocenter coordinates in the far offshore region is given by considering the source coordinates of an earthquake that occurred offshore. Offshore earthquakes are determined only on one side from the observation point in the inland area, and therefore, the depth is determined with low accuracy and with large deviations. The aim was to compensate for biases that are location-dependent.

Let \( \left({x}_i^{JMA},{y}_i^{JMA},{z}_i^{JMA}\right) \) be the hypocenter coordinates of the earthquake i = 1, 2,…, N, and be \( \left({x}_i^0,{y}_i^0,{z}_i^0\right) \) the true hypocenter coordinates of the corresponding earthquake. For simplicity, it is assumed that the epicenters are very close to each other, but the depths are significantly different. Thus, the following model to determine the depth bias was considered:

for i = 1, 2, ..., N, where h is the bias function that is dependent on the locations determined by the JMA network, whereas εi~N(0, σ2) represents unbiased independent residuals. Here, the bias function h (x, y, z) is a 3-dimensional piece-wise linear function defined on a 3D Delaunay tessellation consisting of tetrahedrons whose vertices are the nearest four earthquake hypocenters. Namely, each Delaunay tetrahedron provides a flat surface (piecewise linear function), where the four heights of the flat surface are determined at the tetrahedron vertices, and the height of the piecewise flat surface at any point location is linearly interpolated within the tetrahedron that includes a JMA hypocenter. The residual sum of squares was considered

with a penalty function for the smoothness constraint

Then, the optimal minimizations of the penalized square sum of residuals by the same method as given in the subsection “Magnitude shift.”

Two particular catalogs to compensate for the routine JMA catalog were then utilized in the following examples. First, offshore earthquakes were determined from the observation network in the inland area of the Tohoku region, and therefore, the depths were determined with large deviations in the vertical direction. Umino et al. (1995) determined depths of the epicenters using the sP wave; that is, the S wave from the epicenter reflected on the surface of the ground and converted to a P wave and propagated to the observation point. According to this method, the offshore plate boundary earthquake and the double seismic surface in the Pacific Plate are accurately determined.

Ogata (2009) compared the hypocenters \( \left({x}_i^0,{y}_i^0,{z}_i^0\right) \) of the Umino catalog and the earthquakes determined this way with those of the JMA catalog \( \left({x}_i^{JMA},{y}_i^{JMA},{z}_i^{JMA}\right) \) and then estimated the depth deviation in a Bayesian manner by assuming model (13). The constraint that the deviation of the nearest earthquake, which is the vertex of each tetrahedron by 3D Delaunay division was almost the same value as the prior distribution, optimized by the ABIC method, and the optimal strength of the constraint w = (w1, w2, w3) in (14) was determined.

Then, the result of the transformation works \( {\hat{z}}_i={z}_i+{h}_{\hat{\theta}}\left({x}_i,{y}_i,{z}_i\right) \) for any JMA hypocenters that are linearly interpolated depending on the Delaunay tetrahedron that the hypocenter location was included. Figure 8 indicates how the original JMA hypocenters were modified in each region. In addition, in the Nankai Trough area, hypocenter correction is also desired to compare the past JMA catalog from the viewpoint of the forecast strategy of the expected large earthquake. It is hoped that the epicenter data accumulated by ocean bottom seismometers, such as the Dense Ocean floor Network system for Earthquakes and Tsunamis (DONET) and the Seafloor Observation Network for Earthquakes and Tsunamis along the Japan Trench (S-net), will be effectively used as the target earthquake for depth correction of the JMA epicenter.

Depth corrections of offshore earthquakes from the JMA catalog (example 1). a Routinely determined M ≥ 3 earthquake hypocenters in JMA catalog during 2000–2006 and b corrected hypocenters using the sP waves by Umino et al. (1995) for the same earthquakes (left panels)

Second, the F-net Broadband Seismograph Network catalog of NIED (2016) is another useful catalog to compensate the JMA catalog. Each earthquake depth of the F-net was optimized by waveform fitting, given the fixed epicenter coordinate. Although the accuracy of the depths of F-net is given in a 3-km unit, this is still useful for removing the offshore biases of the JMA depths. Figure 9 indicates how the original JMA hypocenters are modified in, and around, the mainland Japan region.

2.3 Detection rates of earthquakes and b values of the magnitude frequency

Most catalogs covering a long time period are not homogeneous because the detection rate of smaller earthquakes changes in time and space due to the development of seismic networks changing over a long period of time. In contrast, many aftershocks occur immediately after a large earthquake, with waveforms that overlap on the seismometer records, making it difficult to effectively determine the hypocenters and magnitudes of smaller aftershocks immediately following earthquakes. Therefore, many aftershocks immediately after a large earthquake are not detected or located.

The aim was to separate real seismicity from the catalog heterogeneity. One method for doing so is a traditional method that restricts earthquakes above a completely detected magnitude, but this loses a large amount of data. A frequency distribution was used for all detected earthquakes by assuming the Gutenberg–Richter law for magnitude frequency and modeling detection rate of earthquakes of magnitude such that 0 ≤ q(M| μ, σ) ≤ 1, which is a cumulative function of the normal distribution where the parameter μ represents the magnitude value at which 50% of earthquakes are detected and σ relates to a range of magnitudes at which earthquakes are partially detected (Ringdal 1975), so that μ + 2σ provides the magnitude at which 97.5% of earthquakes are detected. Then, a model of magnitude frequency distribution for observed earthquakes

was considered, to examine space-time inhomogeneity by assuming that the parameters (b, μ, σ) are not constants but represented by functions of time t and/or location (x, y). Then, given a data set of times, epicenters, and magnitudes (ti, xi, yi, Mi), log-likelihood was considered (Ogata and Katsura 2006), given in the form

for time evolution or

for spatial variation, in place of the sum of squares in the above, with penalties against the roughness of b ‐ , μ‐ and functions σ‐ of time t and/or location (x, y). The optimal strengths of the roughness penalties, or smoothing constraints, are obtained, and the solutions are useful for analyzing b value changes and seismicity.

2.3.1 Modeling detection rate changes over time

The south coast of Urakawa (Urakawa-Oki, Fig. 10a) is located approximately 142.5 °E, 42 °N in Hokkaido, Japan, and has been an active swarm-like earthquake zone for a long period of time. The observed swarm activity from 1926 to September 1990, with a depth of h ≤ 100 km, was examined, and the data were chosen from the JMA Hypocenter Catalog. Figure 10b includes the cumulative curve and magnitudes versus occurrence times of all earthquakes with determined magnitudes. The apparently increasing rate of events (i.e., increasing slope of cumulative number curve in Fig. 10b) does not always mean an increase in seismic activity. From the magnitude versus time plot (Fig. 10b), it was observed that the number of smaller earthquakes increased with time, indicating an increase in the detection capability of earthquakes in the area. In contrast, the steepest rise of the cumulative curve in March 1982 includes the aftershock activity of the 1982 M 7.1 Urakawa-Oki earthquake.

Temporal detection rate analysis of earthquakes. Detected earthquakes off the coast of southern Urakawa (Urakawa-Oki, (A)), Hokkaido (1926–1990), B cumulative number of earthquakes by year since 1926 with the corresponding magnitude versus occurrence time plot, Ca b values (b = β loge 10) against time in years, Cb μ values (50% detection rate) against time in years, Cc σ value against time in years, and D magnitude frequency histograms (“+” sign) of in time spans Da 1926–1945, Db 1946–1965, Dc 1966–1975, Dd 1976–1983, and De 1984–1990 with the estimated frequency-density curves at the middle time (indicated by the squared a–e in panel C) in the corresponding time spans, where x-axis is for magnitude and y-axis is the frequencies given in logarithmic scale. Theoretically calculated two-fold standard error curves are provided for each interval

To conventionally obtain the b value change of the real seismic activity by removing the effect of detection capability, it is necessary to use data from earthquakes whose magnitudes exceeded the cutoff magnitude above which almost earthquakes were detected throughout the observed time span and region. The cutoff magnitude for the complete earthquake detection in this area since 1926 is MJ 5.0. This leads to significant data waste (cf., Fig. 10B(a)) so instead, all the data of detected earthquakes for an unlimited range of magnitudes were included. In total, 1249 events were considered for this example.

The entire time interval was divided into 20 subintervals of equal lengths for the knots of the cubic B-spline (described in the Appendix of Ogata and Katsura (1993)). Further subdivisions did not provide any significant improvement in the ABIC value, and hence, the number of B-spline coefficients of the three functions, b, μ, and σ of time t is 3 × (20 + 3).

Firstly, the ABIC values for the goodness-of-fit of the penalized log likelihood (Good and Gaskins 1971) were compared between the same weight values and independently adjusted distinctive weights penalties posed to the b-, μ-, and σ-functions for the strength of the smoothness constraint. In this case, the latter approach was better, and thus, the estimated function in Fig. 10C(a) shows that the trend of b value change appears to decrease linearly in the range of 65 years. Figure 10C(b) shows that the magnitude of 50% detected earthquakes decreased from MJ 5.0 to MJ 3.0 by 1990. Figure 10C(c) shows the range of the partially detected magnitude, which tends to be large when the seismic activity is low.

To determine the significance of the patterns of the b, μ, and σ values, their variability distributions of the estimates were computed by the Hessian matrix of posterior distribution (6) around the MAP solution, where the likelihood function is replaced by the one in (16). The two-fold standard error curves are shown in Fig. 10C(a)–(c), which indicates that the standard error is small when the event occurrences are densely observed.

To confirm the goodness-of-fit of the estimated model, the entire time interval from 1926 to the end of September 1990 was divided into five subintervals at the end of 1945, 1965, 1975, and 1983. Then, the histogram of the magnitude frequency for each subinterval was compared with the estimated frequency function of magnitude in (15) at the middle time point t (squared a–e) of the corresponding subinterval in Fig. 10C(a)–(c). Figure 10D(a)–(e) demonstrates such a comparison, where the error bands for the estimated curve are of the first-order approximation around the marginal posterior mode (details are provided in Ogata and Katsura (1993). A good coincident between the histogram and estimated curves is transparent from Fig. 10D(a)–(e).

2.3.2 Spatial detection rate changes immediately following a large earthquake

Omi et al. (2013) demonstrated the retrospective forecasting aftershock probability in time within 24 h by using the USGS-PDE catalog aftershock activity immediately after the 2011 Tohoku-Oki Earthquake of M 9.0 in Japan. Although the JMA provides many more aftershocks, spatial heterogeneity is conspicuous, and the time evolution of aftershock detection becomes complex. Here, the focus was on the spatial detection rate within one day after the M 9.0 event.

The green and red dots in all panels in Fig. 11 indicate the epicenter locations of all detected earthquakes by the JMA network in mainland Japan, and its vicinity with a depth up to 65 km after the M 9.0 earthquake until the end of the day. Earthquakes are highly clustered in space, and therefore, the Delaunay-based functions rather than cubic spline functions were adopted.

Examples of spatial detection rate analysis of aftershocks. Estimated surfaces for earthquakes shallower than 65 km detected by the JMA network 1 day after the 2011 M9 Tohoku-Oki earthquake. Earthquakes are shown as green and red dots. a b values with contour interval 0.05, b μ value (magnitude with 50% detection rate) with contour interval 0.05, c σ value with contour interval 0.01, and d–f 97.5% detection rate of M 2.0, M 3.0, and M 4.0 earthquakes, respectively, with contour interval 0.1 (10%)

A 2D Delaunay tessellation, based on these and additional locations on the rectangular boundary, including the corners, was developed. Then, the Delaunay-based functions for the location-dependent parameters were defined. The coefficients are values at the epicenters, and the parameter value at any location is linearly interpolated. Here, the piecewise linear functions fk(x,y), k = 1,2,3, are defined in the following forms: b(x,y) = exp{f1(x,y)}, μ(x,y) = exp{f2(x,y)}, and σ(x,y) = exp{f3(x,y)}, to avoid negative values, where each triangle provides a flat surface for which the height of the surface at the three vertices. At any other point on the surface of the triangle, the height of the surface can be determined using linear interpolation.

The log likelihood function (17) of the coefficients of the parameter functions is considered, and considers the penalty function of the form

for the smoothness constraints with K = 3, where the weights w = (w1, w2, w3) are adjusted as explained for (4)–(7), where the penalty and likelihood function is replaced by (18) and the one in (17), respectively. In general, the variability is small where the epicenters are densely distributed, and large where they are sparse, in particular, about the boundary of the considered region.

Figure 11d–f shows the interpolated images of the optimal MAP estimates (solutions) of the parameter functions and spatial distribution of 97.5% detected earthquakes of the M 2.0, M 3.0, and M 4.0 earthquakes, respectively. The value at each pixel of the graph is calculated as the linear interpolation of the three coefficients of the nearest Delaunay triangle. The detailed images can be shown by magnifying the region where the earthquakes are densely distributed or highly clustered, as demonstrated in Ogata and Katsura (1988) (Fig. 5). This is an advantage of the Delaunay-based function.

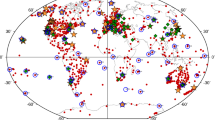

Finally, the Global Centroid Moment Tensor (CMT) catalog was considered (https://www.globalcmt.org/CMTsearch.html). The earthquakes are highly clustered along the trench curves in space, and so Delaunay-based parameterization, as before, was adopted, rather than cubic spline and kernel functions. First, the b values using all earthquakes of M ≥ 5.4 for the period 1976–2006 were estimated, above which the earthquakes of the entire period throughout world are completely detected. The likelihood function for the b value was used by Aki (1965). Alternatively, this is equivalent to the limited case of (15), where for q(M| b, μ, σ) = 1 M ≥ 5.4, and = 0 otherwise; subsequently, consider the log likelihood (17), which is dependent only on the b function. Thus, by the same optimization procedure, assuming the smoothness constraint of the Delaunay-based b function in (18) with K = 1, the optimal MAP solution was obtained (Fig. 12a). Furthermore the general case of (15) was considered and simultaneously estimated b, μ, and σ by using the penalized log likelihood whose weights are optimized by means of the ABIC. The MAP solutions are shown in Fig. 12b–d. One of the conspicuous features is that both b values are quite similar, and both b values are large in oceanic ridge zones but are small in subduction zones. Furthermore, it is notable that b values are clearly detailed owing to using all detected earthquakes. There are differences of 50% detection rate mu between the northern and southern hemisphere, with the northern hemisphere having a higher detection rate than the southern hemisphere. There are only slight differences in σ values at these scales.

Optimal MAP solutions for Global CMT catalog. a Estimated b values by using all completely detected M ≥ 5.4 earthquakes and b–d estimated b, μ, and σ values by using all detected M ≥ 0 earthquakes, respectively. Rainbow colors represent ordered estimates in respective quantities, and the estimated values are shown by the corresponding color tables

Iwata (2008, 2012) showed, by a similar temporal analysis that uses the 1st-order spline modeling, that the temporal lowering of detection rates takes place within half a day after large earthquakes throughout the world at all depths from the global CMT catalog. This implies that, immediately after a large earthquake, waveforms at the stations in a very wide region are too badly affected to get a CMT solution, comparing to using a part of wave signals for the ordinal hypocenter determination

3 Conclusions

High-quality earthquake catalogs must be precise, complete, homogeneous, and consistent, because they provide essential data for seismicity analysis. However, most catalogs covering long periods are not homogeneous because the detection rate enhancement increases the identified smaller earthquakes in time due to an improved seismic network. This can be seen by modeling the evolution of detection rate earthquakes in time and space, which is useful for the study of real seismicity activity for a long time period. This model can also be applied to the real-time short-term forecast of aftershocks immediately after major earthquakes.

I have serious concerns regarding the issue of whether earthquake sizes (magnitudes) are consistently determined throughout the entire period, which has been widely analyzed as a magnitude shift issue in seismicity studies. The present review criticizes the use of conventional statistical tests of the magnitude shift that use a single catalog based on the assumption that the earthquake series are independent or short-range auto-correlated regardless of whether a declustering algorithm is applied. An alternate method is to compare the magnitudes of two or more catalogs that examine common seismogenic areas, even if they adopt different magnitude evaluations. Such a test is discussed in Haberman and Craig (1988), which takes more significant weight in the differences between small earthquakes because of more earthquakes. However, the present model (1) considers the magnitude difference as a function of magnitude, possibly of locations. This helps promote the convertibility between different earthquake catalogs from the same region and time period. Solutions to these problems are invaluable for producing reliable earthquake hazard evaluations.

By replacing magnitudes in the abovementioned similar model by location (longitude, latitude, and depth), a similar analysis can be applied for quasi-real-time correction of the hypocenter location. For the near real-time correction of source locations, particularly, the correction of seismic sources far from the network of seismic observations can be applied based on better determined source location comparisons of other catalogs using the same identified earthquakes.

The richer the data, the more prominent the regionality and heterogeneity of earthquake occurrences, thereby making it difficult to understand them uniformly. In order to extract essential information, statistical models, including non-stationarity and non-uniformity, are inevitable. As shown in this review, the aid of the hierarchical Bayesian method, which deals with a flexible model with a large number of parameters related to the inverse problem, is needed.

Availability of data and materials

The following are the datasets supporting the conclusions of this article:

● Earthquake data from the JMA Hypocenter catalog [https://earthquake.usgs.gov/earthquakes/search/]

● The NIED F-net catalog: [https://www.fnet.bosai.go.jp/event/search.php?LANG=en.]

● The USGS-PDE catalog: [https://earthquake.usgs.gov/earthquakes/search/.]

● The ISC hypocenter catalog: [http://www.isc.ac.uk/iscbulletin/search/bulletin/.]

● The global centroid moment catalog: [https://www.globalcmt.org/CMTsearch.htm.]

Abbreviations

- ABIC:

-

Akaike Bayesian information criterion

- B-spline:

-

Basis spline

- CENT7.CAT:

-

Centennial Earthquake Catalog of Magnitude 7 or Larger

- CTBTO:

-

Comprehensive Nuclear Test Ban Treaty Organization

- CMT:

-

Centroid Moment Tensor

- DONET:

-

Dense Oceanfloor Network system for Earthquakes and Tsunamis

- IDC:

-

International Data Centre

- ISC:

-

International Seismological Center

- JMA:

-

Japan Meteorological Agency

- MAP solution:

-

Maximum a posteriori solution

- MSAS:

-

Matsushiro Seismic Array System

- NEIC:

-

National Earthquake Information Center

- NIED:

-

National Research Institute for Earth Science and Disaster Resilience

- PDE:

-

Preliminary determination of epicenters

- PRSS:

-

Penalized residual sum of squares

- S-net:

-

Seafloor Observation Network for Earthquakes and Tsunamis along the Japan Trench

- USGS:

-

United States Geological Survey

References

Abe K (1981) Magnitudes of large shallow earthquakes from 1904 to 1980. Phys Earth Planet Inter 27:72–92

Abe K (1984) Complements to magnitudes of large shallow earthquakes from 1904 to 1980. Phys Earth Planet Inter 34:17–23

Abe K, Noguchi S (1983a) Determination of magnitudes of large shallow earthquakes 1898–1917. Phys Earth Planet Inter 32:45–59

Abe K, Noguchi S (1983b) Revision of magnitudes of large earthquakes 1897--1912. Phys Earth Planet Inter 33:1–11

Akaike H (1980) Likelihood and Bayes procedure. In: Bernard JM, De Groot MH, Lindley DU, Smith AFM (eds) Bayesian statistics. University Press, Valencia

Aki K (1965) Maximum likelihood estimate of b in the formula log N = a-bM and its confidence limits. Bull Earthq Res Inst, Univ Tokyo 43:237–239

Bansal AR, Ogata Y (2013) A non-stationary epidemic type aftershock sequence model for seismicity prior to the December 26, 2004 M9.1 Sumatra-Andaman Islands mega-earthquake. J Geophys Res 118:616–629 https://doi.org/10.1002/jgrb.50068

Ekström G, Dziewonski AM (1988) Evidence of bias in estimations of earthquake size. Nature 332:319–323

Engdahl ER, Villaseñor A (2002) Global Seismicity: 1900-1999. In: Lee WHK, Kanamori H, Jennings PC, Kisslinger C (eds) International Handbook of Earthquake & Engineering Seismology, Part A. Academic Press, San Diego, pp 665–690

Freedman H (1967) Estimating earthquake magnitude. Bull Seismol Soc Am 57:747–760

Funasaki J (2004) Revision of the JMA velocity magnitude. Quart J Seismol (Kenshin-Jihou) 67:11–20 https://www.jma.go.jp/jma/kishou/books/kenshin/vol67p011.pdf, Accessed 23 Nov 2020

Good IJ, Gaskins RA (1971) Nonparametric roughness penalties for probability densities. Biometrika 58:255–277

Gutenberg B (1945) Amplitudes of surface waves and magnitudes of shallow earthquakes. Bull Seismol Soc Am 35:3–12

Gutenberg B (1956) Magnitude and energy of earthquakes. Ann Geofis (Rome) 9:1–15

Haberman RE, Craig MS (1988) Comparison of Berkeley and CALNET magnitude estimates as a means of evaluating temporal consistency of magnitudes in California. Bull Seismol Soc Am 78:1255–1267

Habermann RE (1988) Precursory seismic quiescence: past, present, and future. Pure Appl Geophys 126:279–318

Hayashi Y, Abe K (1984) A method of M determination from JMA data (in Japanese). J Seismol Soc Japan 37:429–439

Hurst HE (1951) Long-term storage capacity of reservoirs. Trans Am Soc Civ Eng 116:770–808

Inoue H (1986) A least squares smooth fitting for irregularly spaced data: finite element approach using B-spline basis. Geophysics 51:2051–2066

Iwata T (2008) Low detection capability of global earthquake after the occurrence of large earthquakes: investigation of the Harvard CMT catalogue. Geophys J Int 174:849–856

Iwata T (2012) Revisiting the global detection capability of earthquakes during the period immediately after a large earthquake: considering the influence of intermediate-depth and deep earthquakes. Res Geophys 2:e4 https://doi.org/10.4081/rg.2012.e4

Kobayashi A, Mikami N, Ishikawa Y (1993) Epicentral correction for the hypocenter of Matsushiro seismic Array system (in Japanese with English captions for figures). Tech Rep Matsushiro Seismol Obs Japan Meteorol Agency 12:1–9

Mandelbrot BB, Wallis JR (1969a) Some long-run properties of geophysical records. Water Resour Res 5:321–340

Mandelbrot BB, Wallis JR (1969b) Computer experiments with fractional Gaussian noises. Part 1, averages and variances. Water Resour Res 5:228–241

Mandelbrot BB, Wallis JR (1969c) Robustness of the rescaled range RIS in the measurement of noncyclic long run statistical dependence. Water Resour Res 5:967–988

Matthews MV, Reasenberg PA (1988) Statistical methods for investigating quiescence and other temporal seismicity patterns. Pure Appl Geophys 126:357–372

Mogi K (1974) Active periods in the world’s chief seismic belts. Tectonophys 22:265–282

Mogi K (1979) Global variation of seismic activity. Tectonophys 57:43–50

Mogi K (1986) Recent seismic activity in the Tokai district: 273–277, report of the coordinating Committee for Earthquake Prediction, Japan. https://cais.gsi.go.jp/YOCHIREN/report/kaihou35/05_03.pdf

Murata Y (1993) Estimation of optimum average surficial density from gravity data: an objective Bayesian approach. Geophys Res 98:12097–12109

Noguchi S (2009) Note on the heterogeneity of earthquake magnitudes in the global centennial catalog (1900–1999). Rep Natl Res Inst Earth Sci Dis Prev 73:1–18 https://dil-opac.bosai.go.jp/publication/nied_report/PDF/73/73-1noguchi.pdf

Ogata Y (1988) Statistical models for earthquake occurrences and residual analysis for point processes. J Am Stat Assoc Appl 83(401):9–27

Ogata Y (2009) A correction method of routinely determined hypocenter coordinates in far offshore region. Rep Coordinat Committee Earthq Predict 82(3-3):91–93 http://cais.gsi.go.jp/YOCHIREN/report/kaihou83/01_02.pdf

Ogata Y, Abe K (1991) Some statistical features of the long-term variation of the global and regional seismic activity. Int Stat Rev 59:139–161

Ogata Y, Imoto M, Katsura K (1991) 3-D spatial variation of b-values of magnitude-frequency distribution beneath the Kanto District, Japan. Geophys J Int 104:135–146

Ogata Y, Katsura K (1988) Likelihood analysis of spatial inhomogeneity for marked point patterns. Ann Inst Stat Math 40:29–39

Ogata Y, Katsura K (1991) Maximum likelihood estimates of the fractal dimension for random spatial patterns. Biometrika 78:463–474

Ogata Y, Katsura K (1993) Analysis of temporal and spatial heterogeneity of magnitude frequency distribution inferred from earthquake catalogs. Geophys J Int 113:727–738

Ogata Y, Katsura K (2006) Immediate and updated forecasting of aftershock hazard. Geophys Res Lett 33:L10305

Ogata Y, Katsura K, Tanemura M (2003) Modelling heterogeneous space-time occurrences of earthquakes and its residual analysis. Appl Stat (JRSSC) 52:499–509

Ogata Y, Kobayashi A, Mikami N, Murata Y, Katsura K (1998) Correction of earthquake location estimation in a small-seismic-array system. Bernoulli 4(2):167–184 https://projecteuclid.org/download/pdf_1/euclid.bj/1174937293

Omi T, Ogata Y, Hirata Y, Aihara K (2013) Forecasting large aftershocks within one day after the main shock. Sci Rep 3:2218 https://doi.org/10.1038/srep02218

Pacheco JF, Sykes LR (1992) Seismic moment catalog of large shallow earthquakes 1900 to 1989. Bull Seismol Soc Am 82:1306–1349

Parzen E, Tanabe K, Kitagawa G (1998) Selected papers of Hirotugu Akaike. Springer, New York

Pérez OJ, Scholz CH (1984) Heterogeneities of the instrumental seismicity catalog (1904-1980) for strong shallow earthquakes. Bull Seismol Soc Am 74:669–686

Ringdal F (1975) On the estimation of seismic detection thresholds. Bull Seismol Soc Am 65:1631–1642

Ringdal F (1976) Maximum-likelihood estimation of seismic magnitude. Bull Seismol Soc Am 66:789–802

Tsuboi C (1954) Determination of the Gutenberg-Richter’s magnitude of earthquakes occurring in and near Japan. Zisin II (J Seismol Soc Japan) 7:185–193 in Japanese with English summary and figure captions

Umino N, Hasegawa A, Matsuzawa T (1995) Article navigation shear-wave polarization anisotropy beneath the north-eastern part of Honshu, Japan. Geophys J Int 120:356–366

Utsu T (1979) Seismicity of Japan from 1885 through 1925: a new catalog of earthquakes of M ≥ 6 felt in Japan and smaller earthquakes which caused damage in Japan (in Japanese). Bull Earthq Res Inst, Univ Tokyo 54:253–308

Utsu T (1982a) Seismicity of Japan from 1885 through 1925: correction and supplement (in Japanese). Bull Earthq Res Inst, Univ Tokyo 57:1–17

Utsu T (1982b) Catalog of large earthquakes in the region of Japan from 1885 through 1980 (in Japanese). Bull Earthq Res Inst, Univ Tokyo 57:401–463

Utsu T (1982c) Relationships between earthquake magnitude scales. Bull Earthq Res Inst, Univ Tokyo 57:465–497 https://repository.dl.itc.u-tokyo.ac.jp/?action=repository_uri&item_id=32981&file_id=19&file_no=1

Utsu T (1985) Catalog of large earthquakes in the region of Japan from 1885 through 1980: correction and supplement. Bull Earthq Res Inst, Univ Tokyo 60:639–642 in Japanese with English summary and figure captions

Utsu T (2002) Statistical features of seismicity. In: Lee WHK, Kanamori H, Jennings PC, Kisslinger C (eds) International Handbook of Earthquake & Engineering Seismology Part A. Academic Press, San Diego, pp 719–732

von Seggern D (1973) Joint magnitude determination and the analysis of variance for explosion magnitude estimates. Bull Seismol Soc Am 63:827–845

Wyss M, Habermann RE (1988) Precursory seismic quiescence. Pure Appl Geophys 126:319–332

Acknowledgements

The author expresses his gratitude to Koichi Katsura for assisting with calculations and figures and Prof. Umino of Tohoku University for providing hypocenter data using the sP waves off the coast of Tohoku District, Japan. The author also thanks Jiancang Zhuang for his invitation to present on the subject at the 2018 AGU Fall meeting (S11C-0372). The author would like to further thank two anonymous reviewers for their useful comments. Finally, the author is grateful for the support of the JSPS KAKENHI (Grant No. 17H00727).

Funding

This work was supported by the JSPS KAKENHI Grant Number 17H00727.

Author information

Authors and Affiliations

Contributions

Y.O. conducted the data analysis and interpretation of the results and drafted the manuscript. The author has read and approved the final manuscript.

Author information

The author is Professor Emeritus of the Institute of Statistical Mathematics, 10-3 Midori-Cho, Tachikawa city, Tokyo, 190-8562 Japan.

Corresponding author

Ethics declarations

Competing interests

The author declares that he has no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ogata, Y. Visualizing heterogeneities of earthquake hypocenter catalogs: modeling, analysis, and compensation. Prog Earth Planet Sci 8, 8 (2021). https://doi.org/10.1186/s40645-020-00401-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40645-020-00401-8