Abstract

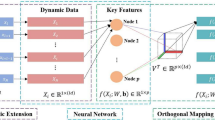

Visual process monitoring allows operators to identify and diagnose faults intuitively and quickly. Its performance depends on the quality of the extracted features and on how well the visual model displays them. In this study, we propose a deep model for feature extraction. First, a stacked auto-encoder is used to obtain a feature representation of the input data. Second, the feature representation is fed into a multi-layer perceptron (MLP) with the Fisher criterion as the objective function. The outputs of the MLP are the extracted discriminant features. For visual process monitoring, we combine the proposed feature extraction method with a visual model (t-SNE-BP) consisting of t-distributed stochastic neighbor embedding (t-SNE) and a back-propagation (BP) neural network. In this method, an industrial data set is first reorganized into a dynamic sample set that captures fault trends. Subsequently, the proposed feature extraction method extracts discriminant features from the dynamic sample set. Finally, the t-SNE-BP model maps these discriminant features into a two-dimensional space in which the normal and fault states are represented by different regions. Visual process monitoring is performed in this two-dimensional space. The Tennessee Eastman process is used to demonstrate the performance of the proposed feature extraction and visual process monitoring methods.

Acknowledgements

The authors are grateful for the support of the National Key Research and Development Program of China (2020YFA0908300) and the National Natural Science Foundation of China (21878081).

Appendix

The detailed derivation of the parameter updates of the MLP is as follows.

1. For the output data \( \text{y}_{j}^{(l)} \), \( M(\text{y}_{j}^{(l)}) \) and \( N(\text{y}_{j}^{(l)}) \) denote the average and the number of samples of the class to which \( \text{y}_{j}^{(l)} \) belongs, respectively. For example, if \( \text{y}_{j}^{(l)} \) belongs to class \( \text{Y}_{c}^{(l)} \), then \( M(\text{y}_{j}^{(l)}) = \bar{y}_{c}^{(l)} \) and \( N(\text{y}_{j}^{(l)}) = n_{c} \). The derivative \( \partial \tilde{J}(\text{W},\text{b}) / \partial \text{y}_{j}^{(l)} \) is calculated as follows:

$$ \frac{\partial \tilde{J}(\text{W},\text{b})}{\partial \text{y}_{j}^{(l)}} = -\frac{\partial \left[ \frac{tr(\tilde{S}_{b})}{tr(\tilde{S}_{w})} \right]}{\partial \text{y}_{j}^{(l)}} = -\frac{tr(\tilde{S}_{w}) \cdot \frac{\partial tr(\tilde{S}_{b})}{\partial \text{y}_{j}^{(l)}} - tr(\tilde{S}_{b}) \cdot \frac{\partial tr(\tilde{S}_{w})}{\partial \text{y}_{j}^{(l)}}}{\left( tr(\tilde{S}_{w}) \right)^{2}}, \tag{12} $$

where

$$ \begin{aligned} \frac{\partial tr(\tilde{S}_{w})}{\partial \text{y}_{j}^{(l)}} & = \frac{\partial}{\partial \text{y}_{j}^{(l)}} \frac{1}{2} \sum\limits_{i = 1}^{c} \sum\limits_{\text{y}^{(l)} \in \text{Y}_{i}^{(l)}} \left\| \text{y}^{(l)} - \bar{y}_{i}^{(l)} \right\|^{2} \\ & = \left( \text{y}_{j}^{(l)} - M(\text{y}_{j}^{(l)}) \right) \cdot \left( 1 - \frac{1}{N(\text{y}_{j}^{(l)})} \right), \end{aligned} \tag{13} $$

and

$$ \begin{aligned} \frac{\partial tr(\tilde{S}_{b})}{\partial \text{y}_{j}^{(l)}} & = \frac{\partial}{\partial \text{y}_{j}^{(l)}} \frac{1}{2} \sum\limits_{i = 1}^{c} \sum\limits_{v = i + 1}^{c} \left\| \bar{y}_{i}^{(l)} - \bar{y}_{v}^{(l)} \right\|^{2} \\ & = \sum\limits_{v = 1}^{c} \left( M(\text{y}_{j}^{(l)}) - M(\text{y}_{v}^{(l)}) \right) \cdot \frac{1}{N(\text{y}_{j}^{(l)})}. \end{aligned} \tag{14} $$

2. The value of \( \delta^{(l)} \) for the output layer is calculated:

$$ \delta^{(l)} = \frac{\partial \tilde{J}(\text{W},\text{b})}{\partial \text{y}_{j}^{(l)}} \cdot \frac{\partial \text{y}_{j}^{(l)}}{\partial \text{z}_{j}^{(l)}} = \frac{\partial \tilde{J}(\text{W},\text{b})}{\partial \text{y}_{j}^{(l)}} \cdot f^{\prime}\left( \text{z}_{j}^{(l)} \right), \tag{15} $$

where the operator \( \cdot \) denotes element-wise multiplication.

3. For layers \( k = l - 1, l - 2, \ldots, 2 \), \( \delta^{(k)} \) is calculated as follows:

$$ \delta^{(k)} = \left( \left( \text{W}^{(k)} \right)^{\text{T}} \delta^{(k + 1)} \right) \cdot f^{\prime}\left( \text{z}_{j}^{(k)} \right). \tag{16} $$

4. The gradients are calculated:

$$ \begin{aligned} \nabla_{\text{W}^{(k)}} \tilde{J}(\text{W}, \text{b}, \text{y}_{j}^{(k)}) & = \delta^{(k + 1)} \left( \text{y}_{j}^{(k)} \right)^{\text{T}}, \\ \nabla_{\text{b}^{(k)}} \tilde{J}(\text{W}, \text{b}, \text{y}_{j}^{(k)}) & = \delta^{(k + 1)}. \end{aligned} \tag{17} $$

5. The gradients of all samples in the batch are accumulated:

$$ \begin{aligned} \Delta \text{W}^{(k)} & = \Delta \text{W}^{(k)} + \nabla_{\text{W}^{(k)}} \tilde{J}(\text{W}, \text{b}, \text{y}), \\ \Delta \text{b}^{(k)} & = \Delta \text{b}^{(k)} + \nabla_{\text{b}^{(k)}} \tilde{J}(\text{W}, \text{b}, \text{y}). \end{aligned} \tag{18} $$

6. The parameters are updated:

$$ \begin{aligned} \text{W}^{(k)} & = \text{W}^{(k)} - \eta \left[ \left( \frac{1}{N} \Delta \text{W}^{(k)} \right) + \lambda_{1} \text{W}^{(k)} \right], \\ \text{b}^{(k)} & = \text{b}^{(k)} - \eta \left( \frac{1}{N} \Delta \text{b}^{(k)} \right), \end{aligned} \tag{19} $$

where \( \eta \) is the learning rate, \( \lambda_{1} \) is the weight-decay coefficient that penalizes large weights to prevent overfitting, and \( N \) is the total number of samples in the batch.
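The first step and the final update can be tried out on a toy batch. The following is a minimal Python sketch, not the authors' implementation: the function names (`class_stats`, `trace_sw`, `trace_sb`, `fisher_output_gradient`, `update_weights`), the list-of-lists data layout, and the toy data are all assumptions made for illustration. It evaluates Eqs. (12) to (14) as printed for one output sample and applies the weight update of Eq. (19).

```python
from itertools import combinations


def class_stats(Y, labels):
    """Return ({class: mean vector}, {class: sample count}) for outputs Y."""
    sums, counts = {}, {}
    for y, c in zip(Y, labels):
        prev = sums.get(c, [0.0] * len(y))
        sums[c] = [s + v for s, v in zip(prev, y)]
        counts[c] = counts.get(c, 0) + 1
    means = {c: [s / counts[c] for s in sums[c]] for c in sums}
    return means, counts


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def trace_sw(Y, labels):
    """tr(S_w): half the summed squared distance of samples to their class mean."""
    means, _ = class_stats(Y, labels)
    return 0.5 * sum(sq_dist(y, means[c]) for y, c in zip(Y, labels))


def trace_sb(Y, labels):
    """tr(S_b): half the summed squared distance over all pairs of class means."""
    means, _ = class_stats(Y, labels)
    return 0.5 * sum(sq_dist(means[a], means[b])
                     for a, b in combinations(sorted(means), 2))


def fisher_output_gradient(Y, labels, j):
    """dJ/dy_j for J = -tr(S_b)/tr(S_w), following Eqs. (12)-(14) as printed."""
    means, counts = class_stats(Y, labels)
    m, n = means[labels[j]], counts[labels[j]]
    # Eq. (13): (y_j - M(y_j)) * (1 - 1/N(y_j))
    d_sw = [(y - mu) * (1.0 - 1.0 / n) for y, mu in zip(Y[j], m)]
    # Eq. (14): sum over class means of (M(y_j) - mean_v), scaled by 1/N(y_j)
    d_sb = [sum(m[d] - means[c][d] for c in means) / n for d in range(len(m))]
    sw, sb = trace_sw(Y, labels), trace_sb(Y, labels)
    # Eq. (12): quotient rule with the leading minus sign
    return [-(sw * b - sb * w) / sw ** 2 for b, w in zip(d_sb, d_sw)]


def update_weights(W, dW, n_batch, eta, lam):
    """Eq. (19): averaged gradient step plus weight decay on W."""
    return [[w - eta * (g / n_batch + lam * w) for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(W, dW)]


# Toy batch (assumed data): two classes with two 2-D outputs each.
Y = [[0.0, 0.0], [2.0, 0.0], [4.0, 4.0], [6.0, 4.0]]
labels = [0, 0, 1, 1]
print(trace_sw(Y, labels), trace_sb(Y, labels))  # 2.0 16.0
print(fisher_output_gradient(Y, labels, 0))      # [-1.0, 1.0]
```

On this toy batch, a descent step along the negative gradient moves the first sample toward its own class mean in the first coordinate and away from the other class in the second, which matches the intent of the Fisher criterion: smaller within-class scatter and larger between-class scatter.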

Cite this article

Lu, W., Yan, X. Visual high dimensional industrial process monitoring based on deep discriminant features and t-SNE. Multidim Syst Sign Process 32, 767–789 (2021). https://doi.org/10.1007/s11045-020-00758-5

DOI: https://doi.org/10.1007/s11045-020-00758-5