Abstract

In this paper, the expression for the SNR has been developed through the imaging model. It is concluded that the image SNR decreases with the increase of the number of light-emitting points of the target under the same hardware conditions and experimental parameters. Using uniform bright squares of different sizes as the target, the SNR of the reconstructed image is calculated. Simulation and prototype experiments have proved the correctness of the conclusion. Based on this conclusion, a method of segmented area imaging is proposed to improve the reconstructed image quality. The quality of all the images using this method with Wiener inverse filtering, R-Lucy deconvolution, and ADMM is better than the image quality obtained by full-area imaging.

Similar content being viewed by others

1 Introduction

The pinhole imaging system is the simplest imaging structure. Only one pinhole realizes the imaging function, but because the luminous flux is very small and the resolution is also limited, its application is greatly limited. To solve the problem, the concept of coded aperture imaging is proposed by Dick [1], which is widely used in X-ray and γ-ray imaging[2,3,4]. Since the coded aperture imaging system is not limited by the lens, the ultra-thin camera is possible. Receiving more attention in recent years, it is proposed to apply the coded aperture imaging system to the visible light [5,6,7,8,9], using URA [10]and MURA [11] as coded apertures, and other forms of coded aperture, such as diffuser [12,13,14], protective glass [15] or optical fiber [16]. The main advantages of this imaging system are the large field of view and miniaturization, but the image quality is not better than a lens. In theory, a lens-less imaging system can also achieve perfect imaging, but there is a large gap between its imaging quality and the lens imaging system. Because the reconstruction image is easily affected by noise, obtaining a good image requires a suitable reconstruction algorithm, which not only suppresses the noise, but also ensures the image resolution. Thanks to the development of computational imaging technology, many excellent algorithms are used to improve the image quality of the coded aperture imaging system, including algorithms such as ADMM [8, 12, 17] and neural networks [7, 8, 18], which can obtain better images. But at the same time, it brings new problems. To suppress the noise, the resolution of the reconstructed image will make some sacrifices. So, the SNR of the coded aperture system is a significant factor in resolution.

In this article, we have established the theoretical model and prototype of the coded aperture lens-less imaging system, analyzed the SNR of the coded aperture imaging system, and mainly considered the Poisson noise and calibration error to the SNR of the reconstructed image. The results show that the SNR is a function of the number of light-emitting points. Using LCD as the target simulator, display uniform bright squares of different sizes, and calculate the SNR of the uniform area after reconstructing the image. Simulation and experiment have relatively consistent results, more points in the scene, more noise. If the scene fills the field of view, a poor image that needs noise reduction and resolution sacrifice will be displayed. It is also mentioned in the literature [12] that the non-linear algorithm reconstructed image may cause the resolution of multiple points to decrease. We simulated the effect of increasing the number of light-emitting points on the resolution. With the increase of the number of light-emitting points, it is indeed impossible to resolve the striped target, but it can still be recognized after multi-frame superposition, indicating that for the linear algorithm, the resolution does not decrease, but is disturbed by strong noise. However, after adding constraints or using a non-linear algorithm, the SNR will be greatly improved, while the resolution is indeed reduced. To solve this problem, we analyze the properties of the SNR and proposed a segmented area imaging method to improve the quality of images.

2 The coded aperture imaging system

2.1 Optical system

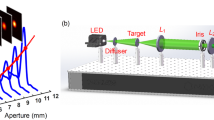

The coded aperture imaging system is a generalization of pinhole imaging. Multiple pinholes are used to replace a single pinhole. The distribution of pinholes is specially designed, so it is called a coded aperture. The system composition is shown below (Fig. 1).

The entire imaging system consists only of a sensor and a coded aperture. The coded aperture is composed of multiple light-transmitting small holes and is parallel to the surface of the sensor. After the light emitted from a point target on the object side passes through the coded template, a pattern will be generated on the photosensitive surface of the detector, and this pattern is highly related to the coded aperture.

According to the composition of the system, the propagation of the light field is divided into three steps. First, the spherical wave emitted by the target passes through free space to reach the coded aperture, then is modulated by the coded aperture, and then diffracts to reach the sensor. The light emitted by the target is non-coherent, so the response of the light emitted by a point on the sensor, the HPSF, can be calculated first, and then the convolution of the HPSF with the scene image is used to obtain the image data recorded by the sensor. To reduce the impact of discrete sampling on simulation results, we up-sampled the simulation image and the coded aperture by ten times and used ten-time down-sampling when the sensor output data (Fig. 2).

The point target \(P_0\) emits a spherical wave with a complex amplitude in the X–Y plane where the coded aperture is:

The light field behind the mask is:

The distance between the coded aperture and the sensor is relatively close, which is generally smaller than the effective size of the coded aperture. Theoretically, the Fresnel diffraction conditions are not met. Rayleigh Sommerfeld diffraction [19,20,21,22] should be used, but considering that the diffraction energy of a pinhole is mainly concentrated in the Fresnel diffraction area, so it is possible to use Fresnel diffraction and Rayleigh Sommerfeld diffraction for the simulation of the diffraction from the coded aperture to the sensor. The pinhole simulation results also show that the results of the two methods are more consistent. Due to the upsampling, the calculation is relatively large in the simulation process, and the relative calculation speed of Fresnel diffraction is fast, so in this paper, we use Fresnel diffraction to simulate the diffraction process. Therefore, the complex amplitude of the light field obtained on the sensor is:

Based on the above analysis, we define the impulse response of the optical system as hardware PSF (HPSF),

When the system captures the target X, the light intensity distribution recorded by the sensor:

where t is a coefficient related to exposure time, X is the scene, and N is noise from the sensor. The noise here represents the deviation between the measured value and the true value, including both Poisson noise and additive noise. Reconstructing X can use a deconvolution-related algorithm. For ease of analysis, we write the above formula in matrix form,

H is the matrix form of the HPSF. In an ideal geometric optical model, for a coded Aperture lens-free imaging system, H consists of ‘0’ and ‘1’. But in the case of diffraction, it no longer has ‘0’ elements. Each row of H contains some or all of the elements in HPSF, and the elements arrangement order between rows is different, and x in formula (6) is the vector form of the scene, and all the elements in the scene are arranged in a certain order, which is related to the convolution operation rules. If the size of HPSF is m × n and the size of X is p × q, the size of H is [(m + p − 1)(n + q − 1)] × (p × q). For example, if X contains 10,000 pixels, H will contain more than 100 million elements, which will cause a lot of calculation. One way to reduce the complexity is to use a separable mask[5]. While this expression is mainly for the convenience of analysis, and conv2 function in MATLAB is used for calculation in the simulation experiment.

Measured value of H is expressed by \(\tilde{H}\). The reconstructed image of the target can be expressed as:

Due to the existence of noise and measurement error, there is a certain deviation(h) between \(\stackrel{\sim }{H}\) and H, so \(\stackrel{\sim }{H}\)=H–h, the above formula can be rewritten as:

where t is the exposure time, which ensures that the detector receives enough photons, x in the first term is the ideal image of the target, and the second term is the deviation caused by the measurement error of the HPSF and is only related to the target X since h is fixed after \(\stackrel{\sim }{H}\) was measurement. The third term is the deviation caused by noise.

2.2 The SNR of the reconstructed image

To facilitate analysis, we rewrite Eq. (8) as:

where \(a_m\), \(b_m\) are all row vectors. Then we can get the value of the jth pixel,

Noting that \(S_1 \left( j \right) = t\sum_{i = 1}^m {a_j \left( i \right)} x_i\), which is the deviation caused by h, \(S_2 \left( j \right) = \sum_{i = 1}^m {b_j \left( i \right)} n_i\) which is the deviation caused by noise. The SNR can be defined as the ratio of the true value to the standard deviation:

where d and p are the brightness and the number of lighting points. E(•) is the mean function, and D(•) is the variance function. \(j \in 1:p\) means that j belongs to an integer in the closed interval [1, p], and p is the number of light points. Because \(x_j\), S1 and S2 are not related to each other, there is no covariance in the above formula, and \(x_j - E(x_j ) = 0\). When calibrating H, multiple measurements can be performed to make the elements of h as small as possible, so that its influence can be reduced as much as possible. It can be considered as the disturbance caused by h, \(D\left[ {S_1 \left( j \right){| }j \in 1:p} \right] \approx 0\), which means the variance of all \(S_1 \left( j \right)\) so

Besides, \(n_i\) can be rewritten as \(n_i { = }\tilde{y}_i - y_i + n_i^g\), where \(y_i\) is the truth of the response of the detector to photons, and \(\tilde{y}_i\) is the measured value of \(y_i\), according to Poisson distribution \({\text{Possion}}\left( {y_i ,y_i } \right)\). So \(\tilde{y}_i - y_i\) is Poisson noise, and its variance is equal to \(y_i\). \(n_i^g\) is additive noise. Noting that \(S_2^p \left( j \right){ = }\sum_{i = 1}^m {b_j \left( i \right)} \tilde{y}_i\), \(S_2^g \left( j \right){ = }\sum_{i = 1}^m {b_j \left( i \right)} n_i^g\), \(S_2^r \left( j \right){ = }\sum_{i = 1}^m {b_j \left( i \right)} y_i = tx_j\). Because \(\tilde{y}_i \sim {\text{Possion}}\left( {y_i ,y_i } \right)\),

where \(S_2^p \left( j \right)\) and \(S_2^g \left( j \right)\), respectively, conform to skellam distribution and normal distribution, \(\sigma_i\) is the variance of \(n_i^g\), and \(n_i^g\) is assumed to conform to a normal distribution. Then we can get that,

Because the elements of H are all greater than 0, there must be \(y_i \left( {p = n} \right) \ge y_i \left( {p = n{ - }1} \right)\). If \(\sigma_i\) does not change, it is obvious that \(D\left( {S_2 {|}n = p} \right) \ge D\left( {S_2 {|}n = p - 1} \right)\).

Through the above derivation, it shows that SNR decreases as the number of luminous points increases. However, when the luminous point decreases, the SNR will not increase indefinitely. It is affected by additive noise, and there is an extreme value of SNR.

When HPSF has only one element, namely point-to-point imaging, such as lens imaging or hole imaging under ideal conditions, H is close to the identity matrix, and its SNR is no longer affected by the number of luminous points. Formula (16) also indicates that reducing the absolute value of b is beneficial to control the SNR of the reconstructed image, which provides inspiration for the design of the coding template, and can give full play to the designability of the coding template in coded aperture imaging.

3 Simulation

Formula (12) can only reveal that the SNR of each pixel decreases as the number of luminous points increases. We can infer that the SNR of each pixel becomes worse, and the SNR of the entire image will also become worse. To confirm this conjecture, the simulation experiment is carried out by using the model. The target adopts a rectangular pattern with a side length of 20 pixels to 126 pixels and applies Poisson noise to the energy distribution on the detector. Reconstructing image using wiener deconvolution with consistency parameter, the SNR of 20 × 20 pixels in the central area of the reconstructed image is counted and repeated 60 times to calculate the average value of the SNR of the reconstructed image. The result is shown in the Fig. 3.

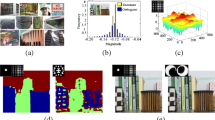

The bigger the target is, the worse the SNR will be. To achieve the same SNR as the small target, R-Lucy deconvolution can be used to suppress noise. while the three-line target cannot be distinguished showing as Fig. 4(b). If the noise is not suppressed, the noise may overwhelm the signal, making it impossible to distinguish between different light-emitting points.

To improve the SNR of the reconstructed image, it can only be achieved by reducing S1 or S2. Among them, reducing S1 can make multiple measurements on HPSF, or use a modulated coded aperture to make multiple measurements and reconstruct[9] to reduce the impact of h. Reducing S2 can only be achieved by reducing the power of noise, which can be realized by reducing the luminous point number of the target.

4 Experimental settings

We built a prototype and used our method to improve image quality. The unit size of the coded aperture is 13 um, which is generated using the M sequence of 127 and extended by one cycle, as shown in the Fig. 5.

The detector is B1923 CCD from Imperx, using a KAI-02170 monochrome chip. The detector has 1920 × 1080 pixels with a size of 7.4um. The angle response of the pixel drops to 50% at 18°, and the distance from the photosensitive surface to the coded aperture is 3.37 mm. Although the field of view of the coded aperture camera is very large, it is limited to the angle response of the detector, and the field of view is only about 36°. We use an LCD monitor as the target simulator. The unit size of the monitor is 0.275 mm, and the target image maintains the original resolution without zooming in and out.

Firstly, we use a point light source to illuminate, and the averaged 100 frames of data are collected as HPSF. Then to image uniform rectangular targets of different sizes, reconstruct the image, select the target area to calculate the SNR.

The results in Fig. 6 are consistent with the simulation results. Based on this result, we propose a method to improve the quality of lens-less imaging of the coded aperture. This method, we called segmented area imaging, divides a target into several regions, images them separately, and then splicing them together. Theoretically, the imaging quality is better than that of the whole region directly. Figure 7 compares the results of segmented area imaging and full-area imaging (snapshotting to get the full image), and the reconstructed images using the three algorithms all show that the image quality of the segmented area imaging is improved compared with the full-area imaging method.

5 Summary

In this paper, we build a simulation model of the coded aperture imaging system based on the theory of light propagation, simulate the imaging process, and analyze the SNR characteristics of the coded aperture lens-less imaging system. Both simulation experiments and prototype experiments imaged uniform targets of different sizes and reconstructed the image. The experimental results and the theoretical analysis results were more consistent. Both showed that the larger the target size, the SNR of the reconstructed image lower. Although this conclusion was the result of research on coded aperture imaging, due to its extensive mathematical model, this conclusion is also applicable to other lens-less imaging, such as scattering imaging and diffuser camera. Also, this conclusion can help researchers find better masks.

The segmented area imaging can be used to image the target in different regions to improve the image quality, but at the same time, the temporal resolution will be sacrificed, and appropriate means are needed to partition the observed target, which is more suitable for the microscopic imaging scene of static observation.

References

Dicke, R.H.: scatter-hole cameras for x-rays and gamma rays. Astrophys. J. 153, 101 (1968)

Cannon, T.M., Fenimore, E.E.: Coded aperture imaging: many holes make light work. Opt. Eng. 19(3), 193283 (1980)

Durrant, P.T., Dallimore, M., Jupp, I.D., Ramsden, D.: The application of pinhole and coded aperture imaging in the nuclear environment. Nuclear Instrum. Methods Phys. Res. Sect. A 422(1), 667–671 (1999)

Mojica, E., Pertuz, S., Arguello, H.: High-resolution coded-aperture design for compressive x-ray tomography using low resolution detectors. Opt. Commun. 404, 103–109 (2017)

Ahmad, F., DeWeert, M. J., Farm, B.P.: Lensless coded aperture imaging with separable doubly Toeplitz masks. In: Compressive Sensing III. pp 1–12 (2014)

Adams, J.K., Boominathan, V., Avants, B.W., Vercosa, D.G., Ye, F., Baraniuk, R.G., Robinson, J.T., Veeraraghavan, A.: Single-frame 3D fluorescence microscopy with ultraminiature lensless FlatScope. Sci Adv 3(12), e1701548 (2017)

Sinha, A., Lee, J., Li, S., Barbastathis, G.: Lensless computational imaging through deep learning. Optica 4(9), 1117–1125 (2017)

Monakhova, K., Yurtsever, J., Kuo, G., Antipa, N., Yanny, K., Waller, L.: Learned reconstructions for practical mask-based lensless imaging. Opt. Express 27(20), 28075–28090 (2019)

Jiang, Z., Yang, S., Huang, H., He, X., Kong, Y., Gao, A., Liu, C., Yan, K., Wang, S.: Programmable liquid crystal display based noise reduced dynamic synthetic coded aperture imaging camera (NoRDS-CAIC). Opt. Express 28(4), 5221–5238 (2020)

Fenimore, T.M.C.E.E.: Uniformly redundant arrays, in Digital signal processing symposium. Los Alamos Scientific Lab., N. Mex. (USA), Albuquerque (1977)

Gottesman, S.R., Fenimore, E.E.: New family of binary arrays for coded aperture imaging. Appl. Opt. 28(20), 4344–4352 (1989)

Antipa, N., Kuo, G., Heckel, R., Mildenhall, B., Bostan, E., Ng, R., Waller, L.: DiffuserCam: lensless single-exposure 3D imaging. Optica 5(1), pp 1–9 (2017)

Kwon, H., Arbabi, E., Kamali, S.M., Faraji-Dana, M., Faraon, A.: Computational complex optical field imaging using a designed metasurface diffuser. Optica 5(8), 924–931 (2018)

Singh, A.K., Pedrini, G., Takeda, M., Osten, W.: Scatter-plate microscope for lensless microscopy with diffraction limited resolution. Sci. Rep. 7(1), 10687 (2017)

Kim, G., Isaacson, K., Palmer, R., Menon, R.: Lensless photography with only an image sensor. Appl. Opt. 56(23), 6450–6456 (2017)

Kürüm, U., Wiecha, P.R., French, R., Muskens, O.L.: Deep learning enabled real time speckle recognition and hyperspectral imaging using a multimode fiber array. Opt. Express 27(15), 20965–20979 (2019)

Bostan, E., Froustey, E., Rappaz, B., Shaffer, E., Sage, D., Unser, M.: Phase retrieval by using transport-of-intensity equation and differential interference contrast microscopy. In: 2014 IEEE International Conference on Image Processing (ICIP), Vol. of 2014 paper 3939–3943

Fu, H., Bian, L., Cao, X., Zhang, J.: Hyperspectral imaging from a raw mosaic image with end-to-end learning. Opt. Express 28(1), 314–324 (2020)

Mehrabkhani, S., Schneider, T.: Is the Rayleigh-Sommerfeld diffraction always an exact reference for high speed diffraction algorithms? Opt. Express 25(24), 30229–30240 (2017)

Nascov, V., Logofătu, P.C.: Fast computation algorithm for the Rayleigh-Sommerfeld diffraction formula using a type of scaled convolution. Appl. Opt. 48(22), 4310–4319 (2009)

Lucke, R.L.: Rayleigh-Sommerfeld Fraunhofer diffraction. Phys. Educ. pp 1–8 (2006)

Shen, F., Wang, A.: Fast-Fourier-transform based numerical integration method for the Rayleigh-Sommerfeld diffraction formula. Appl. Opt. 45(6), 1102–1110 (2006)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, J., Zhao, Y. SNR of the coded aperture imaging system. Opt Rev 28, 106–112 (2021). https://doi.org/10.1007/s10043-020-00639-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10043-020-00639-z