Abstract

Purpose

Automated clipping of surgical nasal endoscopic video is challenging because many hard frames have non-discriminative visual features, which leads to misclassification. Prior work mainly classifies these hard frames together with all other frames, which seriously degrades classification performance.

Methods

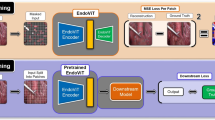

We propose a hard frame detection method using a convolutional LSTM network (called HFD-ConvLSTM) to remove invalid video frames automatically. First, a new separator based on a coarse-grained classifier is defined to remove the invalid frames. Meanwhile, hard frames are detected by measuring the blurring score of each video frame. Then, squeeze-and-excitation is used to select the informative spatial–temporal features of the endoscopic videos, and the video frames are further classified with a fine-grained ConvLSTM that learns from the reconstructed training set with hard frames.
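The abstract does not give the formula for the blurring score. As a minimal sketch only, the following assumes one common re-blur formulation: a frame is blurred again with a small mean filter, and the share of neighbouring-pixel variation that survives is taken as the score. The names `box_blur` and `reblur_score`, the 3 x 3 kernel, and the convention that a higher score means a blurrier frame are illustrative assumptions, not the authors' implementation.

```python
from typing import List

Image = List[List[float]]  # grayscale frame as rows of pixel intensities


def box_blur(img: Image, k: int = 3) -> Image:
    """Mean filter with edge-replicated borders (k is rounded up to an odd size)."""
    h, w = len(img), len(img[0])
    r = k // 2
    n = 2 * r + 1  # effective (odd) kernel width
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total = 0.0
            for dy in range(-r, r + 1):
                yy = min(max(y + dy, 0), h - 1)
                for dx in range(-r, r + 1):
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            row.append(total / (n * n))
        out.append(row)
    return out


def _abs_diffs(img: Image) -> List[float]:
    """Absolute differences between horizontally, then vertically, adjacent pixels."""
    h, w = len(img), len(img[0])
    diffs = [abs(img[y][x + 1] - img[y][x]) for y in range(h) for x in range(w - 1)]
    diffs += [abs(img[y + 1][x] - img[y][x]) for y in range(h - 1) for x in range(w)]
    return diffs


def reblur_score(gray: Image, k: int = 3) -> float:
    """Assumed re-blur score in [0, 1]; higher means the frame is already blurry."""
    d_orig = _abs_diffs(gray)
    total = sum(d_orig)
    if total == 0.0:
        return 1.0  # no edges at all: treat as maximally blurred
    d_blur = _abs_diffs(box_blur(gray, k))
    # Variation removed by re-blurring; a sharp frame loses a lot, a blurry one little.
    lost = sum(max(0.0, a - b) for a, b in zip(d_orig, d_blur))
    return 1.0 - lost / total
```

Under this assumed convention, a frame would be flagged as hard when its score exceeds a threshold chosen on validation data; the abstract states no threshold value.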

Results

We evaluate the proposed solution through extensive experiments on 12 surgical videos (duration: 8501 s). The experiments cover both hard frame detection and video frame classification. Nearly 88.3% of fuzzy frames are detected, and the classification accuracy is boosted to 95.2%. HFD-ConvLSTM achieves superior performance compared with other methods.

Conclusion

HFD-ConvLSTM provides a new paradigm for video clipping by breaking the complex clipping problem into smaller, more easily managed two-class classification problems. Our investigation reveals that hard frame detection based on blurring score calculation is effective for nasal endoscopic video clipping.
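The decomposition into two-class decisions can be pictured as a cascade. The sketch below is a hypothetical illustration, not the published pipeline: `is_invalid`, `blur_score`, and `is_positive` are placeholder callables standing in for the coarse-grained separator, the blurring score, and the fine-grained classifier, and the label names and default threshold are assumptions. In particular, the real fine-grained stage is a ConvLSTM over frame sequences, whereas this sketch decides frame by frame.

```python
from typing import Callable, List, Sequence, TypeVar

Frame = TypeVar("Frame")


def cascade_labels(
    frames: Sequence[Frame],
    is_invalid: Callable[[Frame], bool],   # placeholder: coarse-grained separator
    blur_score: Callable[[Frame], float],  # placeholder: blurring score, higher = blurrier
    is_positive: Callable[[Frame], bool],  # placeholder: fine-grained two-class decision
    blur_threshold: float = 0.5,           # assumed value, not taken from the paper
) -> List[str]:
    """Label each frame through a chain of two-class decisions."""
    labels = []
    for frame in frames:
        if is_invalid(frame):
            labels.append("invalid")   # stage 1: discard invalid frames
        elif blur_score(frame) > blur_threshold:
            labels.append("hard")      # stage 2: flag blurry frames as hard
        elif is_positive(frame):
            labels.append("positive")  # stage 3: fine-grained decision
        else:
            labels.append("negative")
    return labels
```

Each stage only has to separate two groups, which is the property the conclusion credits for making the clipping problem easier to manage.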

Ethics declarations

Conflict of interest

The authors Hongyu Wang, Xiaoying Pan, Hao Zhao, Cong Gao, and Ni Liu declare that they have no conflict of interest.

Human rights statement

The included human study was approved and performed in accordance with ethical standards.

Informed consent

Informed consent was obtained.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was partially supported by the Youth Program of the National Natural Science Foundation of China (No. 62001380), the National Key R&D Program of China (No. 2019YFC0121502), and the Scientific Research Project of the Education Department of Shaanxi Provincial Government (No. 19JK0808).

About this article

Cite this article

Wang, H., Pan, X., Zhao, H. et al. Hard frame detection for the automated clipping of surgical nasal endoscopic video. Int J CARS 16, 231–240 (2021). https://doi.org/10.1007/s11548-021-02311-6

DOI: https://doi.org/10.1007/s11548-021-02311-6