Abstract

With the popularity of free apps, Android has become the most widely used smartphone operating system, and this has naturally invited cyber-criminals to build malware-infected apps that can steal vital information from these devices. The most critical problem is to detect malware-infected apps and keep them out of the Google Play store. The vulnerability lies in the underlying permission model of Android apps. Consequently, it has become the responsibility of app developers to specify precisely the permissions that their apps will demand at installation and execution time. In this study, we examine the permission-induced risk that arises from granting unnecessary permissions to Android apps. The experimental work in this paper includes the development of an effective malware detection system, which helps to determine and investigate the detection capability of numerous well-known and broadly used sets of features for malware detection. To select the best features from our collected feature data set, we implement ten distinct feature selection approaches. Further, we develop the malware detection model using the LSSVM (Least Squares Support Vector Machine) learning approach with three distinct kernel functions, i.e., linear, radial basis and polynomial. Experiments were performed on 2,00,000 distinct Android apps. Empirical results reveal that the model built using LSSVM with the RBF (radial basis function) kernel, named FSdroid, is able to detect 98.8% of malware when compared to distinct anti-virus scanners, and also achieves a 3% higher detection rate than the different frameworks or approaches proposed in the literature.

1 Introduction

Today, a smartphone is not only a cellular telephone; it also runs a computer-like operating system and can perform various tasks with the help of apps. Symbian was the first modern mobile operating system for smartphones, entering the market in the year 2000. After that, a few companies, such as Nokia, Microsoft, Apple, and Google, followed and launched their own mobile operating systems. Among these, the Android operating systemFootnote 1, launched by Google in the year 2008, is quite popular because it is freely available, open source, and has a wide range of free apps in its play store. According to Stat Counter,Footnote 2 Android holds a 74.92% share of the market to date. The success of Android in the market is mainly due to its apps. Currently, about 2.6 million apps are present in the official play store of Android,Footnote 3 which users can download and install for various purposes.

Android is a privilege-separated operating system in which every app has its own individual system identity, i.e., a Group-ID and a Linux user-ID.Footnote 4 Each Android app runs in a process sandbox and requests permissions to use resources that are not present in its sandbox. Depending on the sensitivity of a permission, the system either grants it automatically or prompts the user to approve or reject the request. Cyber-criminals take advantage of these permissions to target user privacy. As stated in,Footnote 5 G-Data security experts counted 3,246,284 malware apps until the end of the year 2018 and discovered over 7,50,000 new malware apps at the end of 2019. To defend the Google play storeFootnote 6 from malware apps, Google introduced Google Bouncer in the year 2012, which scans new apps at the time of their launch. However, it has limitations, e.g., Bouncer can easily be fingerprinted.Footnote 7 It is not hard to bypass Google’s security check, so malicious Android apps can make their way to the Google Play store Footnote 8 and ultimately to users’ devices. Exploiting these permissions, cyber-criminals build malware apps on a daily basis and invite users to install them. More than two billion active Android devices are present in the market.Footnote 9 To overcome the drawbacks of Bouncer and to protect Android devices, Google introduced Google Play Protect.Footnote 10 It is capable of scanning apps in real time, but it also has limitations, as stated in [28].

Android apps work on the principle of a permission model [11]. Android provides protection at four levels, which categorize permissions asFootnote 11 “signature”, “signature or system”, “normal” and “dangerous”. In our study, we do not consider “signature” and “signature or system” permissions because they are granted by the system. We consider only “normal” and “dangerous” permissions. Normal permissions do not pose any risk to the user’s privacy; if such a permission is listed in the app’s manifest, the system grants it automatically. Dangerous permissions, on the other hand, give access to the user’s confidential data, and it is entirely up to the user to grant or revoke a permission or set of permissions.

The performance of malware detection relies on selecting the right set of features: the features given as input have a great effect on how well malware is detected. To select appropriate features or feature sets, in this study we use distinct feature selection approaches. Feature selection approaches are divided into two classes: feature ranking approaches and feature subset selection approaches. Feature ranking approaches use a decisive criterion to rank each feature individually, and the highest-ranking features are then selected for the task at hand. Feature subset selection approaches, in contrast, search for a subset of features that collectively improves detection capability. In this work, six distinct feature ranking approaches and four distinct feature subset selection approaches are used to select the right feature sets. The selected feature sets help to minimize misclassification errors, since irrelevant features are eliminated and only features with excellent discriminative power are retained.
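The contrast between the two classes of approaches can be sketched in Python. This is only an illustration under stated assumptions: the ranking criterion (difference in feature frequency between malware and benign apps) and the subset criterion (a consistency measure with greedy forward search) are stand-ins chosen for brevity, not the ten approaches used in this study, and the toy data set is hypothetical.

```python
from collections import Counter, defaultdict

def rank_features(X, y):
    """Feature ranking: score every feature on its own, then sort by score."""
    mal = [row for row, label in zip(X, y) if label == 1]
    ben = [row for row, label in zip(X, y) if label == 0]
    scores = []
    for j in range(len(X[0])):
        p_mal = sum(r[j] for r in mal) / len(mal)
        p_ben = sum(r[j] for r in ben) / len(ben)
        scores.append(abs(p_mal - p_ben))
    order = sorted(range(len(scores)), key=lambda j: -scores[j])
    return order, scores

def inconsistency(X, y, subset):
    """Count apps that share a projected vector but not the majority label."""
    groups = defaultdict(Counter)
    for row, label in zip(X, y):
        groups[tuple(row[j] for j in subset)][label] += 1
    return sum(sum(c.values()) - max(c.values()) for c in groups.values())

def forward_subset_selection(X, y):
    """Feature subset selection: grow a subset that is judged as a whole."""
    selected = []
    best = inconsistency(X, y, selected)
    while best > 0:
        candidates = [j for j in range(len(X[0])) if j not in selected]
        if not candidates:
            break
        score, j = min((inconsistency(X, y, selected + [j]), j) for j in candidates)
        if score >= best:  # no candidate improves the subset
            break
        selected.append(j)
        best = score
    return selected

# Hypothetical toy data: feature 1 duplicates feature 0, feature 3 is noise.
X = [[1, 1, 0, 0], [1, 1, 0, 1], [1, 1, 1, 0], [0, 0, 1, 1],   # malware
     [0, 0, 0, 0], [0, 0, 0, 1], [0, 0, 0, 1], [0, 0, 0, 0]]   # benign
y = [1, 1, 1, 1, 0, 0, 0, 0]

order, scores = rank_features(X, y)
print("top-2 by ranking:", order[:2])
print("forward subset  :", forward_subset_selection(X, y))
```

On this toy data the ranking keeps both copies of the duplicated feature, while the subset search skips the redundant copy in favor of a complementary feature, which is the practical difference between the two classes.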

In the literature, a number of researchers have applied different machine learning algorithms to detect malware. Some of the broadly used algorithms are decision tree learning algorithms [60], neural networks [54, 59], clustering [14], regression and classification. Constructing an appropriate malware detection model that can detect apps that are really infected with malware is still a challenging task in the field of cyber-security. In this work, we therefore implement LSSVM with three distinct kernel functions, viz., polynomial, linear, and RBF, to build a model for malware detection. LSSVM is a variant of the SVM, which is founded on statistical learning theory.
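To make the idea concrete, the following is a minimal pure-Python sketch of an LSSVM classifier in its standard textbook formulation, where training reduces to solving one linear system instead of a quadratic program. It is not the implementation used in this study: the regularization value `gamma`, the kernel parameters (`degree`, `coef0`, `sigma`), the helper names and the four-app toy data set are all assumptions made for illustration.

```python
import math

def linear_kernel(a, b):
    return sum(x * y for x, y in zip(a, b))

def polynomial_kernel(a, b, degree=2, coef0=1.0):
    return (linear_kernel(a, b) + coef0) ** degree

def rbf_kernel(a, b, sigma=1.0):
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (M[r][n] - s) / M[r][r]
    return z

def lssvm_train(X, y, kernel, gamma=10.0):
    """Build and solve [[0, y^T], [y, Omega + I/gamma]] [b, alpha] = [0, 1]."""
    n = len(X)
    A = [[0.0] + [float(v) for v in y]]
    for i in range(n):
        row = [float(y[i])]
        for j in range(n):
            omega = y[i] * y[j] * kernel(X[i], X[j])
            row.append(omega + (1.0 / gamma if i == j else 0.0))
        A.append(row)
    sol = solve(A, [0.0] + [1.0] * n)
    return sol[0], sol[1:]  # bias b, support values alpha

def lssvm_predict(x, X, y, b, alpha, kernel):
    score = b + sum(a * yi * kernel(x, xi) for a, yi, xi in zip(alpha, y, X))
    return 1 if score >= 0 else -1

# Hypothetical toy data: Boolean feature vectors, +1 = malware, -1 = benign.
X = [[1, 1, 0, 0], [1, 0, 1, 0], [0, 0, 1, 1], [0, 1, 0, 1]]
y = [1, 1, -1, -1]
KERNELS = {"linear": linear_kernel, "polynomial": polynomial_kernel, "rbf": rbf_kernel}

for name, kernel in KERNELS.items():
    b, alpha = lssvm_train(X, y, kernel)
    print(name, [lssvm_predict(x, X, y, b, alpha, kernel) for x in X])
```

Only the kernel function changes between the three variants; the training and prediction code is shared. A dense solver like this one scales cubically with the number of apps, so a data set of realistic size would need an iterative or approximate solver.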

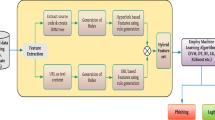

The phases we follow in building an effective Android malware detection model are shown in Fig. 1. To conduct an empirical study on a large data set, we collect Android application package (.apk) files from distinct promised repositories. We then divide the collected .apk files into two classes, i.e., benign and malware, based on the results of different anti-virus scanners (Microsoft Windows DefenderFootnote 12 and VirusTotalFootnote 13). In the next phase, we extract permissions and API calls (considered as features in our work) using distinct publicly available tools. The right set of features is then selected by applying feature selection approaches to our collected data set. Further, the selected feature sets (i.e., permissions and API calls) are used as input to build a model using LSSVM with three distinct kernel functions. Finally, to validate whether our proposed model is capable of detecting malware, we compare it with existing frameworks or approaches developed in the literature and with different anti-virus scanners available in the market.

The novel contributions of this research work are as follows:

- To the best of our knowledge, this is the first work in which 2,00,000 unique apps, belonging to thirty different categories of Android apps, are collected. To build an effective and efficient malware detection model, we extract permissions and API calls and consider them as features.

- We propose a new framework that applies a machine learning algorithm to relevant features chosen by feature selection approaches. Empirical results reveal that our proposed framework is able to detect 98.75% of unknown malware from real-world apps.

- Our proposed framework detects malware from real-world apps in less time than distinct anti-virus scanners available in the market.

- Our proposed framework achieves a 3% higher detection rate than different frameworks or approaches proposed in the literature.

- We apply t-test analysis to investigate whether the features selected by the feature selection approaches differ significantly.

The rest of the paper is organized as follows. Section 2 discusses previously developed approaches or frameworks for malware detection, along with the gaps present in the literature, and gives a brief overview of our proposed model based on those gaps. Section 3 explains the different feature ranking approaches. Section 4 explains the different feature subset selection approaches used in this paper. Section 5 explains LSSVM with different kernels for malware detection. In Section 6, we discuss the methods used to compare the proposed framework with existing techniques available in the literature. The performance parameters used for evaluation are described in Section 7. Sections 8 and 9 give the experimental setup of our proposed framework and the outcomes. In Section 10, we discuss threats to validity, and in Section 11 we summarize our work and its future scope.

2 Related work and overview of proposed framework

This section presents the approaches or frameworks developed by previous researchers to detect malware in Android apps. To find and overcome the gaps in the existing approaches, we divide this section into two subsections. In the first subsection, we discuss the frameworks or approaches developed in the literature. In the second subsection, we first discuss the data sets used in earlier studies and then describe the collection of Android apps, the formulation of our data set, the extraction and formulation of feature sets, the capability of features, and the feature selection approaches implemented in the literature. The research questions answered in this study are also formulated in this section.

2.1 Related work

In this subsection, we discuss the types of analysis used for Android malware. We then discuss the detection techniques suggested by previous researchers and academicians.

2.1.1 Analysis of Android apps

There are three different ways to analyze Android apps: static [29, 50, 72], dynamic [29] and hybrid [9, 34]. Static analysis examines an app without executing it, dynamic analysis examines the app during its execution, and the hybrid approach combines both. Petsas et al. [68] showed that malicious apps targeting the Android platform can evade dynamic analysis. They implemented and tested heuristics of varying sophistication by integrating them into existing malware samples, which then attempt to conceal their presence when examined in an emulated environment. Bläsing et al. [12] suggested the Android Application Sandbox (AASandbox), which performs both static and dynamic analysis to automatically identify suspicious Android apps. In this study, we perform dynamic analysis of Android apps to build a malware detection model.

2.1.2 Android Malware detection

Chen et al. [18] suggested Pegasus, which uses app permissions to detect malware apps. They formed a Permission Event Graph (PEG) using static analysis and models of the APIs. Peiravian and Zhu [67] employed machine learning methods to detect malicious Android apps. They performed experiments on 1200 real malware-infected apps and validated their performance. Chakradeo et al. [17] introduced Mobile Application Security Triage (MAST), a framework that helps to direct malware analysis resources toward the apps with the greatest potential to exhibit malicious behavior. MAST is a statistical method that measures the correlation between multiple categorical data using Multiple Correspondence Analysis (MCA).

Wang et al. [84] studied the risk of single permissions and of groups of permissions. They employed feature ranking methods to rank individual Android permissions based on the risk involved. Enck et al. [25] built a framework named Kirin, which uses lightweight certification of apps to detect malware at installation time. Ongtang et al. [65] presented Secure Application INTeraction (Saint), which governs install-time and run-time permissions. Grace et al. [35] proposed Woodpecker, which examines how the Android permission-based security model is applied to pre-installed apps. Bugiel et al. [13] developed a model for policy-driven, system-centric run-time monitoring of communication channels among apps at multiple layers. Zhou et al. [98] presented a systematic characterization of existing Android malware, covering behaviors such as incurring charges on devices by subscribing to services and abusing SMS-related Android permissions. Barrera et al. [8] proposed a methodology for the empirical analysis of permission-based security models, which help to control access to different system resources. They applied it to 1,100 Android apps, making novel use of self-organizing maps.

In a recent study [52], Papilio was introduced, a new approach for visualizing the permissions of real-world Android apps. The authors built a new layout approach that includes node-link diagrams, matrix layouts and aspects of set membership. Matsudo et al. [61] presented a system model for supporting users’ approval decisions when an app is installed. They introduced a reputation-based security evaluation, which analyzes permissions to judge whether an app is malicious. Arp et al. [4] proposed DREBIN, a lightweight approach for the detection of Android malware. They combined machine learning and static analysis to improve malware detection. DREBIN can scan a large number of apps and can protect users who install apps from untrusted sources. Jeon et al. [40] addressed the issue of finer-grained permissions in Android. Their proposed framework was based on four major groups of Android permissions, and experiments were performed on top Android apps to differentiate between benign and malware-infected apps. PUMA, presented in [74], is a framework for detecting malware-infected Android apps by applying machine learning algorithms to the permissions extracted from the apps themselves. Grace et al. [34] developed RiskRanker, a proactive approach to accurately and scalably sift through a large number of apps in existing app stores to spot zero-day malware. They report that, among 118,318 apps, 322 zero-day specimens from 11 distinct families were successfully discovered. TaintDroid [26] is an information flow tracking tool that can simultaneously track multiple sources of sensitive data. A new model to protect smartphones was discussed in [70]. This model performs attack detection on a remote server, where the execution of the app on the smartphone is mirrored in a virtual machine. Schmidt et al. [5] presented anomaly detection using machine learning, in which monitored system-based information and behavior are gathered and processed by a remote system. Zheng et al. [95] focused on the demanding task of triggering a particular behavior through automated UI interactions. They proposed a hybrid analysis approach to reveal UI-based trigger conditions in Android apps. To discover malware at the kernel level and the user level, a technique named MADAM was developed in [24], which is able to distinguish malware from benign apps. A fine-grained dynamic binary instrumentation tool for Android named DroidScope is presented in [91]; it reconstructs two levels of semantic information, i.e., Java and operating system. A framework for monitoring system calls named Crowdroid is introduced in [14]. Crowdroid tracks information flows and performs API analysis, which has a great impact on finding malware activities in the network.

A root privilege management scheme called Root Privilege Manager (RPM) was proposed in [80]. It protects Android apps from the risks raised by permissions, i.e., normal or dangerous. Wang et al. [82] analyzed used permissions and proposed a support-based permission candidate method to detect Android malware. A hybrid feature selection approach based on the Rough Set Quick Reduct algorithm was proposed in [10] to detect malware. Wang et al. [85] extracted 11 kinds of static features from each app to characterize its behavior and then applied classification algorithms to separate malware from benign apps. Kirubavathi et al. [46] proposed a structural-analysis-based learning approach, which uses machine learning algorithms to distinguish malware from benign apps. They adopted botnet-linked patterns of requested permissions as features to evaluate benign and malware apps. Jerlin et al. [41] suggested a new approach to detect malware using Application Programming Interfaces (APIs). They adopted upper and lower boundaries as one of the features to detect Android malware. Mahindru and Singh [60] applied supervised machine learning algorithms to 172 permissions extracted from Android apps during their installation and start-up time.

Xiao et al. [88] proposed a deep learning approach to distinguish between benign and malware apps. They consider system calls as features and train Long Short-Term Memory (LSTM) models on system call sequences from malware and benign apps. Experiments were performed on 3567 malware-infected and 3536 benign apps and achieved a recall of 96.6%. Mahindru and Sangal [54] proposed DeepDroid, a framework that works on the principle of deep learning. They extract permissions and API calls as features from collected Android application packages (.apk). To select significant features for the malware detection model, six distinct feature ranking approaches are applied to the extracted features. Experiments were performed on 1,00,000 benign apps and 20,000 malware-infected apps. The framework developed using Principal Component Analysis (PCA) as the feature ranking approach achieved a detection rate of 94%. Letteri et al. [49] proposed a botnet detection methodology for the Internet of Things (IoT) based on deep learning techniques, tested on a new, SDN-specific data set with a high (up to 97%) classification accuracy. Devpriya and Lingamgunta [23] proposed a novel hash-based multifactor secure mutual authentication scheme that includes hashing properties, certificates, nonce values, traditional user IDs, and password mechanisms, and that resists MITM attacks, replay attacks, and forgery attacks.

Ma et al. [53] presented an Android malware detection model based on API information. Using API information, they construct three distinct data sets related to Boolean, frequency and time-series representations. Based on these three data sets, three distinct detection models are developed. Experiments were performed using 10010 benign and 10683 malware apps and achieved an accuracy of 98.98% with an ensemble approach. Mahindru and Sangal [59] proposed PerbDroid, which is built using features selected by feature ranking approaches and deep learning as the classifier. Experiments were performed on 2,00,000 distinct Android apps and achieved a detection rate of 97.8%. Wang et al. [86] proposed a hybrid model based on a convolutional neural network (CNN) and a deep autoencoder (DAE). To improve the accuracy of the malware detection model, they employed multiple CNNs to select features from the high-dimensional features of Android apps. Experiments were performed on 10,000 benign and 13,000 malware-infected apps, and the model was trained with a serial convolutional neural network architecture (CNN-S). Mahindru and Sangal [56] proposed a malware detection model based on semi-supervised machine learning techniques. They applied the LLGC algorithm to 2,00,000 distinct Android apps and achieved an accuracy of 97.8%.

Yamaguchi and Gupta [90] discussed the properties of IoT devices that make them more vulnerable to malware attacks, i.e., large volume and pervasiveness, and proposed a method to mitigate attacks on IoT-based devices. Gupta et al. [36] published a book on the security measures for, and challenges faced by, different communication devices. They also discussed different methods to mitigate attacks. In [32], feature selection was seen to have a great effect on model development; the authors implemented Principal Component Analysis (PCA) to reduce the complexity of the model. Advances in machine learning algorithms such as SVM [20, 94] and deep learning models [32, 38, 96] have helped not only in intrusion detection [45] and the detection of cyber attacks, but also in the health sector and in wireless routing. Distinct researchers applied deep learning models and hybrid methods [32, 38, 96] in their studies and achieved remarkable results.

Arora et al. [3] proposed PermPair, in which they construct and compare graphs built from permissions extracted from benign and malware-infected apps. Empirical results reveal that the proposed malware detection model achieved an accuracy of 95.44% when compared to other similar approaches and popular mobile anti-malware apps. Mahindru and Sangal [55] proposed the DLDroid malware detection model, which is based on feature selection approaches and a Deep Neural Network (DNN). In their study, they collected Android apps developed during COVID-19. Experiments were performed on 11,000 distinct Android apps, and the model developed using DNN and rough set analysis achieved a detection rate of 97.9% when compared to distinct anti-virus scanners available in the market.

Table 1 gives brief details of some existing Android malware detection techniques in the literature, including the type of monitoring and the type of analysis each technique uses. The conclusions drawn from these techniques are presented in the last column of the table.

2.2 Gaps and overview of our proposed framework

In this subsection, we discuss the gaps present in previous studies and how we overcome them while developing an efficient and effective Android malware detection framework.

2.2.1 Gaps present in the previous frameworks/approaches

Earlier research in this field had the following limitations: use of limited data sets, high computational burden, and inability to detect sophisticated malware. To overcome the first limitation, in this study we collect 2,00,000 Android apps belonging to thirty different categories from the different promised repositories mentioned in Table 3. Further, to select significant features and so reduce the computational burden, we implement ten distinct feature selection approaches on the extracted feature data set (i.e., permissions, API calls, number of user downloads of the app, and rating of the app). Next, the selected features are used as input to build models with distinct machine learning algorithms, so that a suitable framework is built to detect malware in real-world apps. In previous studies, the developed frameworks or approaches were not tested on real-world apps.

2.2.2 Description of the collected Android apps

Previous frameworks or studies, mentioned in Table 2, used only limited data sets of Android apps to examine their association with the malware or benign class. Therefore, they cannot support generic conclusions relevant to all Android apps and the Android system. To overcome this gap, we collect apps belonging to thirty different categories, which are used to generalize and strengthen our outcomes. To develop an efficient and effective Android malware detection model, we collect Android application packages (.apk) from different promised repositories. We collected 2,00,000 .apk files from Google’s play store, hiapk,Footnote 14 appchina,Footnote 15 Android,Footnote 16 mumayi,Footnote 17 gfan,Footnote 18 slidemeFootnote 19 and pandaapp.Footnote 20 Among these 2,00,000 benign .apk files, 1,75,000 are distinct. Features are extracted after deleting the virus-infected apps reported by VirusTotal and Microsoft Windows Defender. VirusTotal identifies malware-affected apps using antivirus engines; it contains the definitions of 70 antivirus software products. A total of 35,000 malware samples are collected from three different promised repositories. Kadir et al. [43] introduced an Android sample set of 1929 botnets, consisting of 14 distinct botnet families. The Android Malware Genome project [98] contains a data set of 1200 malware samples that cover the currently present Android malware families. We collected about 17,871 samples from AndroMalShareFootnote 21 along with their package names. After removing duplicate packages from the collected data set, 25,000 unique malware samples remain in our study. Both benign and malware apps were collected from the above-mentioned sources at the end of December 2018. Table 3 shows the number of .apk files belonging to different categories, i.e., business, comics, communication, education and so on. To better differentiate between benign and malware apps, we consider .apk files belonging to the normal, trojan, backdoor, worm, botnet and spyware familiesFootnote 22 mentioned in Table 3.
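The duplicate-removal step can be sketched as follows. This is a minimal illustration that assumes a sample is identified by its package name alone; the package names and source labels are hypothetical placeholders, and the study may have used additional criteria.

```python
def deduplicate_by_package(samples):
    """Keep the first sample seen for each package name, preserving order."""
    seen = set()
    unique = []
    for package, source in samples:
        if package not in seen:
            seen.add(package)
            unique.append((package, source))
    return unique

# Hypothetical (package name, repository) pairs.
collected = [
    ("com.example.flashlight", "repository_a"),
    ("com.example.sms_helper", "repository_b"),
    ("com.example.flashlight", "repository_c"),  # same package, other source
]

unique = deduplicate_by_package(collected)
print(len(collected), "collected,", len(unique), "unique")
```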

2.2.3 Formulation of data set

After collecting unique samples of .apk files from the sources mentioned in the previous subsection, we extract permissions and API calls from each .apk file. The extraction is performed with the help of an emulator (in our study we use Android Studio). The emulator provides the same API level and execution environment as a smartphone. To extract permissions and API calls from Android apps and form our data set for experiments, we use Android 6.0 Marshmallow (i.e., API level 23). Previously developed frameworks or approaches used earlier versions of Android for feature extraction. There are two reasons for selecting this Android version: first, it asks the user to grant or revoke permission to use the resources of the smartphone, and second, it covers 28.1% of Android devices, which is higher than the other versions present in the market.Footnote 23 A flowchart showing how an app is installed and demands permissions on Android 6.0 is presented in Fig. 2. When the installed app is started for the very first time, it demands the permissions it requires to function properly. Next, the SDK level is checked: if the SDK version is ≥ 23, Android asks the user to grant or revoke the permission. If the user grants the permission, the call command is executed; otherwise it is not. This facility was not available in earlier versions of Android.
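The decision flow described above can be written as a small Python sketch. It models only the logic, not the Android API: the function name, its parameters and the `ask_user` callback are hypothetical, and the branch for SDK levels below 23 assumes the install-time model of earlier Android versions, in which declared permissions were accepted when the app was installed.

```python
def may_execute_call(permission, protection_level, declared_in_manifest,
                     sdk_level, ask_user):
    """Return True if the call guarded by `permission` may be executed."""
    if not declared_in_manifest:
        return False  # an app cannot use a permission it never declared
    if protection_level == "normal":
        return True   # granted automatically by the system
    if sdk_level >= 23:
        # Dangerous permission on Android 6.0+: the user grants or revokes it.
        return ask_user(permission)
    # Assumption: before API level 23 there is no run-time prompt.
    return True

def user_denies(permission):
    return False

def user_grants(permission):
    return True

print(may_execute_call("android.permission.READ_SMS", "dangerous", True, 23, user_denies))
print(may_execute_call("android.permission.READ_SMS", "dangerous", True, 23, user_grants))
print(may_execute_call("android.permission.INTERNET", "normal", True, 23, user_denies))
```

The third call shows why only dangerous permissions reach the user: a normal permission is granted without the callback ever being consulted.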

Figure 3 shows the phases followed in extracting features from Android apps. In the first phase, to extract features from the collected .apk files, we perform dynamic analysis using Android Studio as an emulator. To extract permissions and API calls, we use Android 6.0 (Marshmallow), as mentioned above. We write a program in Java that extracts the permissions and API calls and saves them to .csv files [58], which are publicly available for researchers and academicians.Footnote 24 These permissions are demanded by apps during their installation and start-up time. By repeating the same process, we extract permissions from 2,00,000 different Android apps and record them in the .csv file format. Previous researchers used limited sets of features to develop models for malware detection. To overcome this gap, in this study we collect 1532 permissions, 310 API calls, the number of user downloads of the app and the rating of the app,Footnote 25 which help to build a model using feature selection approaches. Hence, each collected app can be represented as a 1844-dimensional Boolean vector, where “1” implies that the app requires the permission and “0” implies that the permission is not required. It is very common for distinct apps to request similar sets of permissions for their execution. The permissions overview given by GoogleFootnote 26 is used to describe the behavior of a permission, i.e., “dangerous” or “normal”.
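The Boolean encoding can be illustrated with a short Python sketch. The four-entry feature list stands in for the full feature vocabulary, and the package names, function names and CSV layout are assumptions made for illustration rather than the format of the published .csv files.

```python
import csv
import io

# Tiny stand-in for the full feature vocabulary.
FEATURES = [
    "android.permission.INTERNET",
    "android.permission.READ_SMS",
    "android.permission.SEND_SMS",
    "android.permission.ACCESS_FINE_LOCATION",
]

def to_boolean_vector(requested, features=FEATURES):
    """1 if the app requests the feature, 0 otherwise, in vocabulary order."""
    requested = set(requested)
    return [1 if f in requested else 0 for f in features]

def to_csv(apps):
    """Serialize {package: requested features} as one CSV row per app."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["package"] + FEATURES)
    for package, requested in apps.items():
        writer.writerow([package] + to_boolean_vector(requested))
    return buf.getvalue()

# Hypothetical apps and the permissions they request.
apps = {
    "com.example.weather": ["android.permission.INTERNET",
                            "android.permission.ACCESS_FINE_LOCATION"],
    "com.example.sms_helper": ["android.permission.INTERNET",
                               "android.permission.SEND_SMS"],
}
print(to_csv(apps))
```

Because every app is encoded against the same ordered vocabulary, two apps that request the same permissions produce identical vectors, which is what makes the rows directly comparable by a classifier.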

2.2.4 Formulation of feature sets

Several approaches have been developed for Android malware detection [1, 8, 87]. In this study, we divide the extracted API calls and permissions into thirty different feature sets, which help in developing the malware detection model.Footnote 27 Table 4 gives a basic description of the feature sets considered in our work.

2.2.5 Capability of features

The objective of this work is to study the relationship between the features and their associated classes (i.e., benign or malware), which helps in malware detection. In this paper, malware is considered the dependent variable and the feature sets are taken as independent variables. Malware is assumed to be a function of numerous feature sets, for instance S1, S2, S3, S4, S5, ..., up to S30. To investigate the efficacy of the feature sets used, three different analyses are carried out, as described below:

a. Analysis 1 (A1): The efficacy of the feature sets is assessed at the class level. The relationship between malware and the feature sets can be outlined as follows:

Malware = f (S1, S2, S3, S4, S5, ..., S30)

b. Analysis 2 (A2): The reduced set of features obtained with feature ranking approaches is used as input for building an Android malware detection model. To rank the features based on their performance, we consider six distinct feature ranking approaches in the present work. According to [33, 76], it may be appropriate to choose the top \(\lceil \log _{2} q \rceil \) features given by a feature ranking approach. In the present study, we also use the top \(\lceil \log _{2} q \rceil \) features to detect whether an app is benign or malware. Here, q denotes the number of features present in the original data set. The association can be presented in the following way:

Malware = f (reduced set of features utilizing feature ranking approaches)

c. Analysis 3 (A3): Feature subset selection approaches are used to reduce the size of the feature sets, which can then be used as input to build a model for detecting Android malware. In the present research, we consider four distinct feature subset selection approaches to discover a reduced subset of features. The association can be presented in the following way:

Malware = f (reduced set of features utilizing feature subset selection approaches)
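The cut-off used in Analysis 2 is easy to make concrete. The sketch below computes the number of retained features for a given q and keeps the highest-scoring ones; the function names, feature names and scores are hypothetical, and the values of q are only examples.

```python
import math

def top_k_count(q):
    """Number of top-ranked features to keep: ceil(log2(q))."""
    return math.ceil(math.log2(q))

def select_top_features(scores):
    """Keep the ceil(log2(q)) highest-scoring features of a {name: score} map."""
    k = top_k_count(len(scores))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]

# Example values of q.
for q in (30, 1842):
    print(q, "->", top_k_count(q))

# Hypothetical ranking scores for eight features (q = 8, so three are kept).
scores = {"f1": 0.10, "f2": 0.80, "f3": 0.35, "f4": 0.05,
          "f5": 0.60, "f6": 0.20, "f7": 0.01, "f8": 0.45}
print(select_top_features(scores))
```

Because the cut-off grows logarithmically, even a data set with well over a thousand features is reduced to about a dozen inputs, which is where most of the saving in computation comes from.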

2.2.6 Feature selection approaches

On the basis of Table 5, it is seen that in previous studies a number of authors applied different feature ranking approaches to detect malware in Android apps and achieved a good detection rate. This indicates that the outcome of a malware detection model relies on the features that are taken as input to design the model. Selecting suitable feature sets is an essential data preprocessing task in machine learning. In the field of malware detection, some researchers have used selection approaches to select an appropriate set of features. Allix et al. [2] performed an empirical validation of Android apps for malware detection. They performed static analysis of Android apps’ bytecode to extract a representation of the program control-flow graph (CFG). The extracted CFG is then expressed as character strings in their study on establishing similarity between Android apps. They argued that the string representation of the CFG is an abstraction of the app’s code that retains information about the structure of the code but discards low-level details such as variable names or register numbers. This is a desirable property in the context of malware detection. They performed malware detection on 50,000 Android apps. Shabtai et al. [76] investigated whether feature extraction at the app level, operating system level, scheduling level, etc. helps to detect malware. They performed experiments on a small number of Android apps, used a small number of features and implemented only a few feature selection approaches to detect malware. Also, none of the earlier studies in this direction used feature subset selection approaches.
So, in this paper we implement ten distinct feature selection approaches on a large collection of 1842 features (divided into thirty distinct feature sets) to identify the best subset of features, which helps us to detect malware with a better detection rate and to minimize the number of misclassification errors. Feature ranking approaches and feature subset selection approaches can be defined in the following manner:

-

Feature ranking approaches: These approaches use certain decisive criteria to rank the features. On the basis of their ranks, appropriate features can then be selected to build the model.

-

Feature subset selection approaches: These approaches aim to search for a subset of features that has good detection capability.

2.2.7 Research questions

To develop a model that detects Android malware in real-world apps with a better detection rate, and to cover the gaps in the literature (i.e., selection of the right feature sets to develop a model, implementation on a large data set, and implementation of the proposed framework on real-world apps), we consider the following research questions in this paper:

-

RQ1. Which malware detection model is most appropriate to detect malware from real-world apps?

This question helps in finding the model that is most suitable for malware detection in Android. In this work, we build 30 distinct models by considering ten distinct feature selection approaches and three different machine learning techniques. To identify which model is more appropriate for malware detection, we consider two performance parameters, i.e., F-measure and Accuracy.

-

RQ2. Is the presented malware detection framework effective in detecting malware on Android devices?

The goal of this question is to investigate the performance of our malware detection approach. For this, we compare the performance of our developed model with some existing techniques available in the literature.

-

RQ3. Does a subset of features perform better than all extracted features for the task of detecting whether an app is malware or not?

The aim of this question is to evaluate the features and investigate their relationship with benign and malware apps. Distinct kinds of feature reduction approaches are considered for finding subsets of features that are able to detect whether an app is benign or malware.

-

RQ4. Among the implemented feature ranking approaches, which approach works best for detecting whether an Android app belongs to the benign or the malware class?

In feature ranking, the efficiency of the machine learning algorithms is affected by the characteristics and nature of the malware data set. Distinct approaches with various criteria are implemented to rank the collected feature sets. Two performance criteria, i.e., F-measure and Accuracy, are considered in this study to compare the feature ranking approaches.

-

RQ5. Among the applied feature subset selection approaches, which approach performs best for detecting malware in Android apps?

To determine the subset of features that is appropriate for detecting whether an Android app is benign or malware, we consider feature subset selection approaches. In this work, we compare distinct approaches by using two performance criteria, i.e., F-measure and Accuracy.

-

RQ6. How do the feature subset selection approaches compare with feature ranking approaches?

In this paper, a pair-wise t-test is used to determine whether the feature subset selection approaches are more appropriate than the feature ranking approaches, or whether both behave equally well.

-

RQ7. Do the feature selection approaches affect the outcome of the supervised machine learning approaches?

It is seen that a number of feature selection approaches perform extremely well with specific supervised machine learning methods. Therefore, in this work distinct feature selection approaches are evaluated using distinct supervised machine learning approaches to measure their performance. The question also focuses on the variation in performance across the distinct supervised machine learning approaches.

3 Feature ranking approaches

These approaches rank the features individually, without applying any training algorithm. The ranking of the features depends on their scores. Based on our investigation of previous studies, the majority of these approaches are capable of calculating a score for every feature. In this research, we employ six different approaches to rank the features. They are explained below:

3.1 Gain-ratio feature selection

In this selection approach, features are ranked on the basis of their gain-ratio with respect to the class [64]. The gain-ratio of a feature “Z” is determined as:

$$ Gain\text{-}Ratio(Z)=\frac{Gain(Z)}{SplitInfo_{Z}(X)}, $$

where Gain(Z) = I(X) − E(Z) and X denotes the set containing z instances belonging to n different classes. The expected information needed to classify a given sample is calculated by using the following equation:

$$ I(X)=-\sum \limits _{i=1}^{n} P_{i}\log _{2}(P_{i}). $$

Here, Pi is the probability that a random sample is a member of class Ci, and it is estimated by zi/z, where zi is the number of instances of class Ci.

Let the feature Z partition X into t subsets N1, ..., Nt, and let zij be the number of instances of class Ci in subset Nj. The expected information based on this partitioning by Z is given by

$$ E(Z)=\sum \limits _{j=1}^{t}\frac{z_{1j}+z_{2j}+\dots +z_{nj}}{z}\, I(N_{j}). $$

SplitInfoZ(X) is measured by using the following equation:

$$ SplitInfo_{Z}(X)=-\sum \limits _{j=1}^{t}\frac{|N_{j}|}{|X|}\log _{2}\left (\frac{|N_{j}|}{|X|}\right ). $$

The value of SplitInfoZ(X) represents the information generated by dividing the training data set X into t portions corresponding to the t outcomes of a test on the attribute Z.
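As an illustration of the description above, the following is a minimal Python sketch of the gain-ratio score for a single discrete feature, assuming the usual entropy-based definitions of I(X), E(Z) and SplitInfo. The function names and the toy data are hypothetical and are not taken from the paper's implementation.

```python
import math
from collections import Counter

def entropy(labels):
    """I(X): expected information needed to classify a sample."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(labels).values())

def gain_ratio(feature_values, labels):
    """Gain(Z) / SplitInfo_Z(X) for one discrete feature Z."""
    total = len(labels)
    partitions = {}
    for value, label in zip(feature_values, labels):
        partitions.setdefault(value, []).append(label)
    # E(Z): entropy remaining after partitioning X by the values of Z.
    expected = sum(len(p) / total * entropy(p) for p in partitions.values())
    gain = entropy(labels) - expected
    split_info = -sum(len(p) / total * math.log2(len(p) / total)
                      for p in partitions.values())
    # A feature with a single value carries no split information.
    return gain / split_info if split_info > 0 else 0.0

# Toy data: 'm' = malware, 'b' = benign (illustrative only).
labels = ["m", "m", "b", "b"]
informative = gain_ratio([1, 1, 0, 0], labels)    # separates the classes
uninformative = gain_ratio([1, 0, 1, 0], labels)  # independent of the class
```

A feature that perfectly separates the two classes obtains the highest score, while a feature that is independent of the class obtains a score of zero.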

3.2 Chi-Squared test

This test is employed to examine the independence between two events [69]. In our work, features are ranked by the value of the Chi-squared statistic with respect to the class. A higher calculated value implies rejection of the hypothesis of independence, and consequently such features can be regarded as more relevant for detecting malware in Android apps.
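A minimal sketch of how such a score could be computed is given below, assuming the standard Pearson Chi-squared statistic on the contingency table of a discrete feature against the class. The function name `chi_squared` and the toy data are hypothetical.

```python
def chi_squared(feature_values, labels):
    """Pearson chi-squared statistic of a discrete feature against the class."""
    n = len(labels)
    f_levels = sorted(set(feature_values))
    c_levels = sorted(set(labels))
    observed = {(f, c): 0 for f in f_levels for c in c_levels}
    for f, c in zip(feature_values, labels):
        observed[(f, c)] += 1
    stat = 0.0
    for f in f_levels:
        row_total = sum(observed[(f, c)] for c in c_levels)
        for c in c_levels:
            col_total = sum(observed[(f2, c)] for f2 in f_levels)
            # Expected count under independence of feature and class.
            expected = row_total * col_total / n
            stat += (observed[(f, c)] - expected) ** 2 / expected
    return stat

# Toy data: class 1 = malware, 0 = benign (illustrative only).
dependent = chi_squared([1, 1, 0, 0], [1, 1, 0, 0])
independent = chi_squared([1, 0, 1, 0], [1, 1, 0, 0])
```

Features would then be ranked by decreasing statistic, so the first toy feature would be ranked above the second.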

3.3 Information-gain feature selection

In information gain, features are selected on the basis of their relation to the class [64].

3.4 OneR feature selection

The OneR feature selection approach is used for grading the features [64]. It uses a classification mechanism to rank individual features. In it, numeric-valued features are treated as continuous, and their range of values is divided into a few disjoint intervals by a straightforward method. In this study, we consider the features with better classification rates.

3.5 Principal Component Analysis (PCA)

Attribute reduction is accomplished by applying PCA to our collected data set. PCA transforms a high-dimensional data space into a low-dimensional data space. The features retained in the low-dimensional space are highly important for detecting malware [83]. Because the correlation among several features is high, PCA is used to transform them into features that are not highly correlated. The features obtained are termed principal component domain features. Further, a small number of principal components is sufficient to identify significant patterns in the data. The detailed phases of PCA are shown in Fig. 4.

The feature data set is collected in the form of an m ∗ n matrix that contains n data samples and m extracted features. In the second phase, the feature data set is normalized by computing the mean of each feature,

$$ \mu _{j}=\frac{1}{n}\sum \limits _{i=1}^{n} x_{j}^{(i)}, $$

and replacing xj with (xj − μj). Next, we calculate the eigenvalues and eigenvectors of the covariance matrix in the MATLAB environment. Then, to select the first k principal components, we perform the following steps:

for k = 1 to m do
evaluate cumvar = \(\displaystyle \sum \limits _{i=1}^{k} \lambda _{ii}\)
if (cumvar ≥ 0.99) or (1 − cumvar ≤ 0.01) then return k (99% of the variance is retained)
end if
end for

Here cumvar denotes the cumulative variance, expressed as a fraction of the total variance, and λ represents the eigenvalues sorted in descending order. After this evaluation, the reduced feature sets are selected for training.
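The selection of k can be sketched in a few lines of Python. This is only an illustration: it assumes the eigenvalues of the covariance matrix are already available, and it divides by their sum explicitly so that the cumulative variance is a fraction of the total. The function name and the eigenvalues below are hypothetical.

```python
def components_for_variance(eigenvalues, threshold=0.99):
    """Smallest k whose top-k eigenvalues explain at least `threshold` of the variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for k, value in enumerate(ordered, start=1):
        running += value
        if running / total >= threshold:
            return k
    return len(ordered)

# Hypothetical eigenvalues: the first three explain 99.5% of the variance.
example_eigenvalues = [6.0, 3.0, 0.95, 0.03, 0.02]
k = components_for_variance(example_eigenvalues)
```

For these illustrative eigenvalues the first two components explain only 90% of the variance, so three components are retained.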

3.6 Logistic regression analysis

For feature ranking, Univariate Logistic Regression (ULR) analysis is used to verify the degree of importance of every feature set [21]. In the current work, we consider two parameters of the LR model to discover the importance of every feature and to rank each feature set. The parameters for logistic regression analysis are as follows:

-

1.

Value of regression coefficient: The coefficient of a feature indicates the degree of correlation of each feature set with malware.

-

2.

P-value: The P-value, i.e., the level of significance, shows the significance of the correlation.

4 Feature subset selection approaches

These approaches are employed to find an appropriate subset of features that jointly have the best detection capability. They rest on the hypothesis that a developed model has a better detection rate and fewer misclassification errors when a feature is combined with a few other features than when it is used by itself. Several approaches are available to identify the right subset of features for detecting malware. In this work, four distinct feature subset selection approaches are considered to calculate the score of the features. The implemented approaches are described below:

4.1 Correlation based feature selection

This approach is based on correlation and selects a subset of features that are particularly related to the class (i.e., benign or malware). In this paper, Pearson’s correlation (r: coefficient of correlation) is used to find the dependency among features. A higher value of “r” among the feature sets indicates a strong relation among these features. It further implies that there is a statistical reason to expect classes that have low (or high) values of one feature to also have low (or high) values of the other highly correlated features.

4.2 Rough set analysis (RSA)

This approach is an approximation of a conventional set in terms of a pair of sets that provide the upper and the lower approximation of the original data set [66]. This formal approximation gives the upper and lower limits of the original data set. The approach is applied in mining data from imperfect data. Here it is used to select the reduced set of features from the extracted feature sets. RSA uses three distinct notions: approximations, reduced attributes and information system. The steps followed to obtain the reduced subset by using RSA are given below and are also shown in Fig. 5.

-

i.

Approximation: Let \(A=(C,Z), X\subseteq Z\) and \(Y\subseteq C.\) The X-upper (\(\bar{X}Y\)) and X-lower (\(\underline{X}Y\)) approximations are used to estimate Y. The upper approximation includes all the objects that may be part of the set, and the lower approximation includes all the objects that are certainly part of the set. \(\bar{X}Y\) and \(\underline{X}Y\) are computed by using the following equations:

$$ \bar{X}Y=\{y_i\in U|[y_i]_{Ind(B)}\cap Y\neq \emptyset \} $$(5)

$$ \underline{X}Y=\{y_i\in U|[y_i]_{Ind(B)}\subseteq Y\}, $$(6)

where \([y_i]_{Ind(B)}\) denotes the equivalence class of yi under the relation Ind(B).

-

ii.

Reduced attributes: The accuracy of approximation of the set Z (Acc(Z)) in \(A\subseteq B\) is determined as:

$$ \mu_B(A)=\frac{card(\underline{B}Z)}{card(\bar{B}Z)} $$(7)

The number of elements contained in the upper (or lower) approximation of the set Z is called the cardinality of that set. Further, all possible feature sets are considered whose accuracy is equal to the accuracy of the extracted feature sets.

-

iii.

Information system: It is defined as Z = (C,B), where C is a universe consisting of a non-empty, finite set of objects and B is a set of attributes with a finite number of elements. For each b ∈ B, there exists a function \(F_{b}:C\rightarrow V_{b}\), where Vb denotes the set of values of attribute b. For each A ⊂ B, there exists an equivalence relation known as the A-indiscernibility relation, which can be defined as:

$$ IND_A(Z)=\{(x, y) \in C^2 \left| \forall a\in A,\right. a(x)=a(y)\}. $$(8)
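The three notions above can be illustrated with a small Python sketch. It groups objects into indiscernibility classes on a chosen attribute subset and then derives the lower and upper approximations of a target set, together with the accuracy ratio of Eq. (7). The function names, attribute names (`sms`, `net`) and app identifiers are hypothetical.

```python
from collections import defaultdict

def approximations(objects, attributes, target):
    """Lower and upper approximations of `target` under indiscernibility on `attributes`.

    objects: dict mapping object id -> dict of attribute values
    target:  set of object ids to approximate
    """
    classes = defaultdict(set)
    for obj_id, values in objects.items():
        # Objects with equal values on all chosen attributes are indiscernible.
        classes[tuple(values[a] for a in attributes)].add(obj_id)
    lower, upper = set(), set()
    for members in classes.values():
        if members <= target:   # class lies entirely inside the target
            lower |= members
        if members & target:    # class overlaps the target
            upper |= members
    return lower, upper

def approximation_accuracy(lower, upper):
    """card(lower) / card(upper); defined as 1.0 for an empty upper approximation."""
    return len(lower) / len(upper) if upper else 1.0

# Toy information system: four apps described by two binary attributes.
apps = {"a1": {"sms": 1, "net": 1}, "a2": {"sms": 1, "net": 1},
        "a3": {"sms": 0, "net": 1}, "a4": {"sms": 0, "net": 0}}
malware = {"a1", "a3"}
lower, upper = approximations(apps, ["sms", "net"], malware)
accuracy = approximation_accuracy(lower, upper)
```

Here a1 and a2 are indiscernible but only a1 is malware, so both fall in the upper approximation and neither in the lower one.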

4.3 Consistency subset evaluation approach

This technique measures the importance of a subset of attributes by the level of consistency in the class values when the training instances are projected onto that subset of attributes. The consistency rate is calculated from the inconsistency rate, where two data elements are considered inconsistent if they have the same feature values but belong to different class labels (i.e., benign or malware). In this work, the target variable, i.e., the app, takes two distinct values (0 for benign apps and 1 for malware apps). Suppose a group of features (GF) has Z samples, and there are z distinct instances such that Z = X1 + X2 + … + Xz. Instance Xi appears in A samples in total, of which A0 samples are labelled 0 and A1 samples are labelled 1, so that A = A0 + A1. If A1 is less than A0, then the inconsistency count for the instance Xi is INC = A − A0. The inconsistency rate (INCR) of the feature set is computed by using the following equation:

$$ INCR=\frac{\sum \nolimits _{i=1}^{z} INC_{i}}{Z}. $$
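A minimal Python sketch of the inconsistency rate follows, assuming that the inconsistency count of each distinct feature pattern is its number of samples minus the count of its majority class, and that the rate is the sum of these counts divided by the total number of samples. The function name and the toy rows are hypothetical.

```python
from collections import Counter, defaultdict

def inconsistency_rate(rows, labels):
    """Sum over distinct feature patterns of (count - majority class count), over all samples."""
    by_pattern = defaultdict(Counter)
    for row, label in zip(rows, labels):
        by_pattern[tuple(row)][label] += 1
    inconsistent = sum(sum(counts.values()) - max(counts.values())
                       for counts in by_pattern.values())
    return inconsistent / len(labels)

# Toy data: pattern (1, 0) appears three times with labels 1, 1, 0,
# so it contributes one inconsistent sample; pattern (0, 1) is consistent.
rows = [(1, 0), (1, 0), (1, 0), (0, 1), (0, 1)]
labels = [1, 1, 0, 0, 0]
rate = inconsistency_rate(rows, labels)
```

A feature subset with a lower rate is more consistent with the class labels.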

4.4 Filtered subset evaluation

Filtered subset evaluation is based on the principle of running an arbitrary subset evaluator on data that has been passed through an arbitrary filter [47]. The filtering technique is not based on any induction algorithm. The filtered subset evaluation technique is scalable and fast. Figure 6 shows the steps followed to find a subset of features by using the filter method.

5 Machine learning techniques

In previous studies, a number of frameworks were developed by implementing various machine learning algorithms, such as K-Nearest Neighbour (KNN), Decision Tree (J48), Naïve Bayes (NB), Multi-Layer Perceptron (MLP) and Random Forest (RF) by [81]; Bayesian classification by [92]; Bayes network, Multi-Layer Perceptron, J48, Random Forest and K-Nearest Neighbours by [63]; and Support Vector Machine (SVM), Decision Tree (J48), Logistic Regression, Random Forest (RF), K-Nearest Neighbours, Naïve Bayes (NB), Adaboost and Deep Learning by [30]. It is reported in the literature that the performance of LSSVM [42, 48] in fault prediction is better than that of other machine learning algorithms. So, in this study, we use the LSSVM classifier with three distinct kernel functions, i.e., linear, RBF and polynomial, as described below:

5.1 LSSVM classifier

LSSVM, a supervised machine learning algorithm, is used in different fields, for example regression, classification and outlier detection [78]. The fundamental design of LSSVM is based on two-class problems, where the data are separated by an optimum hyperplane determined by support vectors. The support vectors define the boundary of the training set between the two classes. In this paper, LSSVM with distinct kernels is used as the classifier for building a model to classify benign and malware apps. The general form of the LSSVM function is defined as:

$$ z=a^{T}\phi (y)+b, $$

where z is the output vector and y is the input vector; ϕ(y) is a non-linear mapping function that maps the input data into a higher-dimensional feature space; a denotes the adjustable weight vector and b the scalar threshold value. The weights are obtained by minimizing the following function:

$$ \frac{1}{2}a^{T}a+\frac{\gamma }{2}\sum \limits _{x=1}^{n}E_{x}^{2}\quad \text{subject to}\quad z_{x}=a^{T}\phi (y_{x})+b+E_{x},\ x=1,\dots ,n, $$

where Ex is the error of the x-th input sample and γ is the cost (regularization) parameter. By solving this problem, the malware detection values are obtained from the equation given below:

$$ z=\sum \limits _{x=1}^{n}\alpha _{x}K(y_{x},y)+b, $$

where αx are the Lagrange multipliers and K(yx, y) is the kernel function, which enables the inner product to be carried out in the high-dimensional feature space by using the low-dimensional data space. The kernel functions considered in the present paper are as follows:

-

1.

Linear function:

$$ K(x_i,x_j)=x_i^Tx_j $$(13)

-

2.

Polynomial function:Footnote 28

$$ K(x_i,x_j)=(x_i^Tx_j+C)^d $$(14)

-

3.

Radial basis function or RBF Kernel:Footnote 29

$$ K(x_i,x_j)=e^{-\gamma \|x_i-x_j\|^{2}}, \ \gamma>0 $$(15)
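To make the role of the three kernels concrete, the following is a minimal pure-Python sketch of an LSSVM trained on ±1 targets. It assumes the usual LS-SVM formulation, in which training reduces to solving one linear system in the threshold and the multipliers, and it takes the RBF kernel in its usual form exp(−γ‖·‖²). All function names, the parameter values (`gamma=0.5`, `reg=10.0`) and the four toy samples are hypothetical; the paper's own models were built in MATLAB.

```python
import math

def linear_kernel(u, v):
    return sum(a * b for a, b in zip(u, v))

def polynomial_kernel(u, v, c=1.0, d=2):
    return (linear_kernel(u, v) + c) ** d

def rbf_kernel(u, v, gamma=0.5):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))

def solve(matrix, rhs):
    """Solve a dense linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def train_lssvm(samples, targets, kernel, reg=10.0):
    """Solve [[0, 1^T], [1, K + I/reg]] [bias; alphas] = [0; targets]."""
    n = len(samples)
    system = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0] + [kernel(samples[i], samples[j]) for j in range(n)]
        row[i + 1] += 1.0 / reg  # regularization on the diagonal
        system.append(row)
    solution = solve(system, [0.0] + list(targets))
    return solution[0], solution[1:]

def predict(x, samples, bias, alphas, kernel):
    score = sum(a * kernel(s, x) for a, s in zip(alphas, samples)) + bias
    return 1 if score >= 0 else -1

# Toy XOR-like data: +1 = malware, -1 = benign (illustrative only).
samples = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
targets = [-1, -1, 1, 1]
bias, alphas = train_lssvm(samples, targets, rbf_kernel)
predictions = [predict(s, samples, bias, alphas, rbf_kernel) for s in samples]
```

The toy data are not linearly separable, which is why the RBF kernel is used in the usage lines; swapping in `linear_kernel` or `polynomial_kernel` only changes the `kernel` argument.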

6 Comparison of proposed model with different existing techniques

To validate whether our proposed framework achieves a higher detection rate, we compare the results of our proposed model in the three different ways mentioned below:

-

a.

Comparison of results with previously used classifiers and frameworks: To validate whether our proposed model can detect malware as well as previously used classifiers, we calculate two performance parameters, Accuracy and F-measure, for the newly proposed model and the existing models. In addition, we compare our developed malware detection model with existing frameworks and approaches.

-

b.

Comparison of results with different anti-virus scanners: To compare the performance of our model for malware detection, we chose ten distinct available anti-virus scanners and compared their detection rates with the detection rate of our proposed framework.

-

c.

Detection of known and unknown malware families: Further, to evaluate how reliably our proposed framework detects known and unknown malware families, we test it on known and unknown malware families and calculate its detection accuracy.

7 Evaluation of performance parameters

In this section, we discuss the fundamental definitions of the performance parameters used to evaluate our proposed model for malware detection. A confusion matrix is used to calculate all these parameters. It contains the actual and detected classification information produced by the detection models. Table 6 shows the confusion matrix for the malware detection model. In the present work, two performance parameters, namely F-measure and Accuracy, are used to measure the performance of the malware detection approaches.

-

True Positives (TP): A true positive is an outcome where the model correctly predicts the positive class.

-

True Negative (TN) : A true negative is an outcome where the model correctly predicts the negative class.

-

False Positive (FP): A false positive is an outcome where the model incorrectly predicts the positive class.

-

False Negative (FN): A false negative is an outcome where the model incorrectly predicts the negative class.

-

Precision: Precision quantifies the number of positive class predictions that actually belong to the positive class.

$$ Precision =\frac{a}{a + b}. $$(16)

-

Recall: Recall quantifies the number of positive class predictions made out of all positive examples in the dataset.

$$ Recall =\frac{a}{a + c}, $$(17)

where \(a= N_{Malware\rightarrow Malware},\) \(b= N_{Benign\rightarrow Malware},\) \(c= N_{Malware\rightarrow Benign}\)

Accuracy

Accuracy is defined as the proportion of correctly classified apps out of the total number of benign and malware-infected apps. For supervised, semi-supervised and hybrid machine learning techniques it is given by

$$ Accuracy=\frac{a+d}{N_{classes}}, $$

where Nclasses = a + b + c + d, \(d= N_{Benign\rightarrow Benign}\)

F-measure

In this paper, we develop distinct malware detection models by implementing distinct machine learning algorithms. It is difficult to compare two models when one has high recall and low precision and the other the reverse. Therefore, in this study we use the F-measure to compare models. The F-measure captures Precision and Recall at the same time. It uses the harmonic mean in place of the arithmetic mean, which penalizes extreme values more, and is defined by

$$ F\text{-}measure=\frac{2*Precision*Recall}{Precision+Recall}. $$
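The four parameters can be computed directly from the confusion-matrix counts a, b, c and d defined above. The sketch below is illustrative only: the function name and the counts are hypothetical, and Accuracy and F-measure are taken in their usual forms, (a + d)/(a + b + c + d) and the harmonic mean of Precision and Recall.

```python
def detection_metrics(a, b, c, d):
    """a: malware->malware, b: benign->malware, c: malware->benign, d: benign->benign."""
    precision = a / (a + b)
    recall = a / (a + c)
    accuracy = (a + d) / (a + b + c + d)
    f_measure = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f_measure": f_measure}

# Hypothetical counts for 200 apps (illustrative values only).
metrics = detection_metrics(a=90, b=10, c=30, d=70)
```

For these counts Precision is 0.9 and Recall is 0.75, and the F-measure (9/11, about 0.818) lies closer to the lower of the two than their arithmetic mean does.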

8 Experimental setup

In this section, we describe the experimental setup used to evaluate the performance of our developed malware detection models. LSSVM with three distinct kernel functions (i.e., polynomial, RBF and linear) is applied to the thirty different categories of Android apps listed in Table 3. Each of these data sets has a varying number of benign and malware apps, which is adequate for our analysis. Figure 7 shows the framework of our proposed model, named FSDroid.

In the first step, feature ranking and feature subset selection approaches are applied to the extracted feature data set. In the next step, we use the Min-max normalization approach to normalize the data. This approach is based on a linear transformation, which maps each data point \(D_{q_{i}}\) of feature Q to a normalized value \(D^{\prime }_{q_{i}}\) that lies between 0 and 1. The following equation is used to find the normalized value of \(D_{q_{i}}:\)

$$ D^{\prime }_{q_{i}}=\frac{D_{q_{i}}-min(Q)}{max(Q)-min(Q)}, $$

where min(Q) and max(Q) are the minimum and maximum values of attribute Q, respectively. In the third step, we train models on the significant features by implementing distinct machine learning techniques. In the next step, we construct a confusion matrix and calculate the performance parameters, i.e., Accuracy and F-measure. Next, we compare the performance of the developed malware detection models and select the best one. Finally, we compare the performance of our proposed malware detection model with existing techniques available in the literature and with distinct anti-virus scanners. If the performance of our proposed malware detection model is better than that of the existing techniques, then it is useful; otherwise, the proposed model is not useful.
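The Min-max normalization step can be sketched as follows. The function name is hypothetical, and mapping a constant feature to 0.0 is an assumption made here to avoid division by zero; the paper does not state how that case is handled.

```python
def min_max_normalize(values):
    """Linearly rescale the values of one feature to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant feature carries no information, map it to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical values of a single feature Q.
normalized = min_max_normalize([2, 4, 10])
```

Here the minimum 2 maps to 0, the maximum 10 maps to 1, and 4 maps to (4 − 2)/(10 − 2) = 0.25.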

The following steps are followed when selecting a subset of features to build the malware detection model that detects whether an app is benign or malware. Feature selection approaches are applied to 30 different data sets of Android apps. Hence, a total of 990 ((1 selecting all extracted features + 10 feature selection approaches) * 30 data sets (subsets of different feature sets particular to the data sets, determined after conducting feature selection) * 3 detection methods) different detection models have been built in this work. Below we provide step-by-step details of our approach:

-

1.

In the present work, four feature subset selection approaches and six feature ranking approaches are implemented on 30 different feature sets to select the right set of features for malware detection.

-

2.

The subsets of features obtained from the aforementioned procedure are given as input to the machine learning classifiers. To compare the developed models, we use the 20-fold cross-validation method. Cross-validation is a statistical learning approach used to evaluate and compare models by dividing the data into two portions [47]. One portion is used to train the model and the remaining portion is used to validate the built model [47]. The data is initially separated into K equally sized segments (folds). K-1 folds are used to train the model and the remaining fold is used for testing. K-fold cross-validation is significant in that it uses the data set for both testing and training. For this study, 20-fold cross-validation is used to analyze the models, i.e., the data sets are divided into 20 portions. The outcomes of all built malware detection models are compared with each other by employing two performance parameters: F-measure and AccuracyFootnote 30.

-

3.

FSDroid, i.e., the proposed model built by using the above two steps, is validated against the existing techniques in the literature to assess whether the built malware detection model is useful or not.
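The fold construction used in step 2 can be sketched in Python as below. The generator name is hypothetical, and the sketch splits the samples in their given order without shuffling or stratification, which is an assumption; the paper does not state how the folds were drawn.

```python
def k_fold_indices(n_samples, k=20):
    """Yield (train_indices, test_indices) for each of k near-equal folds."""
    indices = list(range(n_samples))
    # The first (n_samples % k) folds receive one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

# 100 hypothetical apps split into 20 folds of 5 test samples each.
folds = list(k_fold_indices(100, k=20))
```

Each sample appears in exactly one test fold, so every app is used both for training (in 19 folds) and for testing (in one fold).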

9 Results of performed experiment

In this section, the relationship between the different feature sets and malware detection at the class level is presented. The sets of features are used as input, and the ratio of benign and malware apps within each experiment is reported. F-measure and Accuracy are used as performance assessment parameters to compare the performance of the malware detection models built by using LSSVM with distinct kernel functions (i.e., polynomial, RBF and linear). To present the experimental results, we use the abbreviations given in Table 7 in place of the actual names.

9.1 Feature ranking approaches

Six feature ranking approaches, namely gain-ratio feature evaluation, Chi-squared test, information gain feature evaluation, logistic regression analysis, OneR feature evaluation and principal component analysis, are applied to the distinct feature sets. Each approach uses distinct performance parameters to rank the features. Moreover, the top \(\lceil \log _{2} a \rceil \) features out of “a” features are used to build a model for detecting malware. For four of the feature ranking approaches (gain-ratio feature evaluation, Chi-squared test, OneR feature evaluation and information gain), the top \(\lceil \log _{2} a \rceil \) features are selected as the subset of features, where a is the number of features in the original data set (for this work a= 20). However, in the case of ULR, those features are selected that possess a positive regression coefficient and a p-value below 0.05, and in the case of PCA, only those features are selected that have an eigenvalue greater than 1. The features selected by the feature ranking approaches are shown in Fig. 8.

9.2 Feature subset selection approaches

In the present work, four distinct kinds of feature subset selection approaches are applied to the thirty data sets of Android apps one after another. Feature subset selection approaches work on the hypothesis that selecting the best features from the available features yields models with better accuracy and fewer misclassification errors. These individual subsets of features are then used as input for building a model to detect whether an app is benign or malware. The sets of features retained after applying the feature subset selection approaches are shown in Fig. 9.

9.3 Machine learning techniques

Eleven subsets of features (1 considering all extracted features + 10 resulting from the implemented feature selection approaches) are used as input to build models for malware detection. The hardware used to carry out this study is a Core i7 processor with a 1TB hard disk and 16GB RAM. The detection models are built in the MATLAB environment. The performance of each detection model is measured by using two performance parameters, F-measure and Accuracy; in addition, we also measure the time taken to build and test each model. Tables 8, 9, 10, 11, 12 and 13 present the performance values obtained for the distinct data sets by using LSSVM with linear, RBF and polynomial kernel functions, together with the time taken to build and test the models. On the basis of Tables 8–13, it may be concluded that:

-

Models developed with features selected by the feature selection approaches as input detect malware more effectively than the model developed with all extracted feature sets.

-

Models constructed with LSSVM (polynomial, RBF and linear kernels) and FS4 as input achieve a higher detection rate than models developed with the other feature selection approaches.

-

The model built with LSSVM with the RBF kernel and FS4 as input achieves a higher detection rate than the models developed with the polynomial and linear kernels.

In this paper, LSSVM with three kernel functions and ten distinct feature selection approaches are considered in order to select features that help to detect Android malware more effectively. To find out which developed model is more capable of detecting malware, we construct box-plot diagrams for the individual models. Box-plot diagrams help to identify which model is best suited for malware detection on the basis of a small number of outliers and a better median value. Figure 10a–f shows the box-plot diagrams of F-measure and Accuracy for every developed model. The x-axis of the diagrams presents the feature selection techniques. Each figure includes eleven box-plots: one for all extracted feature sets, four for the feature subset selection approaches and six for the feature ranking approaches. On the basis of the box-plot diagrams, we make the following observations:

-

Models constructed with LSSVM with the polynomial and linear kernels and FS4 achieve a higher median value along with few outliers. On the basis of the box-plot diagrams shown in Fig. 10a–f, the model developed with FS4 as the feature selection approach gives a better detection rate than the other developed approaches.

-

From the box-plot diagrams, we observe that the model built with LSSVM with the RBF kernel and FS4 has few outliers and a higher median value. This means that the model developed by using FS4 for detecting malware and benign apps achieves better results than the other models developed in this study.

-

In this study, we implement six distinct feature ranking approaches to detect malware in Android apps. Among the implemented feature ranking approaches, we can conclude from the box-plot diagrams that the model developed by using FR6 achieves a higher median value along with fewer outliers.

-

On the basis of the box-plot diagrams, among all implemented feature subset selection approaches, the model developed by using FS4 gives the best results.

9.4 Comparison of results

To identify which of the implemented feature selection approaches and machine learning algorithms work best, or whether all of the techniques perform equally well, we employ the pair-wise t-test in our study.

-

1.

Feature Selection Approaches: In this study, two sets are formed for each of the feature selection approaches, and each feature selection approach has 90 distinct data points (3 machine learning techniques * 30 data sets). The t-test is performed on pairs of feature selection approaches, and the respective p-values are compared to assess statistical significance. The outcome of the t-test study is shown in Fig. 11a. In the figure, we use two different symbols to represent the p-value: a circle filled with green has p-value > 0.05 (no significant difference) and a circle filled with red has p-value ≤ 0.05 (significant difference). From Fig. 11a, it is clear that the majority of the cells contain a green circle. This means that there is no significant difference among most of the employed feature selection approaches. Further, from the mean differences given in Table 14, we observe that the feature sets obtained by using FS4 give the best outcomes when compared with the other implemented feature selection approaches.

In the present work, we also compare the developed models on the basis of cost-benefit analysis. For every feature selection approach, the cost-benefit value is computed using the following equation:

$$ \text{Cost-Benefit}=\frac{Based_{cost}+Benefit_{cost}}{2}. $$(20)

Here, $Based_{cost}$ depends on the correlation between the selected feature set and the error in the class. It is calculated from the following equation:

$$ Based_{cost}=Accuracy \ (SM)*\rho_{SM.fault}. $$(21)

Here, Accuracy (SM) is the classification accuracy of the malware detection model built using the selected feature set, and $\rho_{SM.fault}$ is the multiple correlation coefficient between the selected feature set and the error. A model with higher accuracy and a higher multiple correlation coefficient therefore achieves a higher $Based_{cost}$. Let NAM be the number of features in the full feature set and NSM the number of features selected after applying a feature selection approach. $Benefit_{cost}$ is calculated from the following equation:

$$ Benefit_{cost}=\frac{NAM-NSM}{NAM}. $$(22)

The feature selection approach that achieves the highest cost-benefit value is the foremost feature selection approach, as proposed in [16]. Figure 12a-b shows the cost-benefit of the distinct feature selection approaches. On the basis of Fig. 12a-b, we observed that FS4 achieved a higher median cost-benefit measure than the other approaches.

-

2.

Machine Learning Techniques: In our study, we applied eleven different feature subsets (i.e., 1 with all features + 10 from feature selection approaches) to thirty different Android app data sets and examined two performance parameters, F-measure and Accuracy, each with 330 data points ((1 with all features + 10 feature selection approaches) * 30 data sets). Figure 11b shows the outcomes of the t-test analysis. From Fig. 11b, it is noticeable that there is a significant difference among these techniques, because the p-value is smaller than 0.05. In addition, the mean difference values given in Table 15 show that LSSVM with the RBF kernel gives the best outcome among the machine learning techniques.

-

3.

Feature subset selection and feature ranking approaches: In this study, a pair-wise t-test is used to identify which type of feature selection approach works better. For both types (i.e., feature subset selection and feature ranking), sample pairs of performance values are studied. The averaged performance of the feature subset selection and feature ranking techniques and the outcomes of the t-test analysis are summarized in Table 16. In this research paper, three distinct machine learning algorithms are applied to thirty different Android app categories with Accuracy and F-measure as performance parameters. Two sets are therefore used: feature subset selection with 360 distinct data points (4 feature subset selection approaches * 3 machine learning techniques * 30 data sets) and feature ranking with 540 distinct data points (3 machine learning techniques * 6 feature ranking approaches * 30 data sets). From Table 16, there is no significant difference between the two types of approaches, because the p-value is greater than 0.05. Comparing the mean difference values, feature subset selection approaches give better results than feature ranking approaches. The Cost-Benefit analysis in Fig. 12 shows that feature subset selection and feature ranking have nearly the same Cost-Benefit value. This indicates that the averaged cost and benefit of the models built from features selected by feature subset selection and by feature ranking are nearly the same.
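The cost-benefit measure of Eqs. 20-22 is simple arithmetic and can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it reads the second term of Eq. 20 as the fraction of features removed, (NAM − NSM)/NAM, and treats NAM and NSM as feature counts. The function names and the numeric inputs are hypothetical.

```python
def based_cost(accuracy, rho):
    """Eq. 21: accuracy of the model on the selected features times the
    multiple correlation coefficient between those features and the error."""
    return accuracy * rho


def benefit_cost(n_all, n_selected):
    """Eq. 22, read as the fraction of features removed (an assumption)."""
    if n_all <= 0 or not 0 <= n_selected <= n_all:
        raise ValueError("expected 0 <= n_selected <= n_all and n_all > 0")
    return (n_all - n_selected) / n_all


def cost_benefit(accuracy, rho, n_all, n_selected):
    """Eq. 20: mean of the two terms."""
    return (based_cost(accuracy, rho) + benefit_cost(n_all, n_selected)) / 2


# Hypothetical inputs: 98% accuracy, correlation 0.5, 250 of 1000 features kept.
score = cost_benefit(accuracy=0.98, rho=0.5, n_all=1000, n_selected=250)
print(f"cost-benefit: {score:.3f}")
```

With this reading, an approach scores higher when it keeps accuracy and correlation high while discarding a larger share of the original features.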

9.5 Evaluation of FSDroid

9.5.1 Comparison of results with previously used classifiers and frameworks

In addition to finding the best approach for building an accurate malware detection model, this study also compares the results with the supervised machine learning approaches most often used in the literature: SVM with three distinct kernels (linear, polynomial and RBF), Decision tree analysis, Logistic regression, Neural network and Naïve Bayes classifier. Figure 13 shows the box-plot diagrams for the F-measure and Accuracy of these commonly used classifiers and of LSSVM with linear, RBF and polynomial kernels. On the basis of Fig. 13, we observed that LSSVM with the three distinct kernels has higher median values along with some outliers.

A pair-wise t-test is also used to decide which machine learning approach yields the best performance. The outcomes of the t-test study for the distinct machine learning approaches are shown in Fig. 14. In a number of cases there is a significant difference among these machine learning techniques, because the p-value is smaller than 0.05. In addition, the mean difference values in Table 17 show that LSSVM with the RBF kernel achieved better results than the other supervised machine learning techniques.

We also compare our proposed malware detection model (i.e., FSDroid) with existing frameworks and approaches developed in the literature. Table 18 shows the name, goal, methodology, deployment, data set and detection rate of these approaches or frameworks. The experiment was performed with our collected data set, and the empirical results reveal that our proposed framework achieved a 3% higher detection rate than the frameworks available in the literature.
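The pair-wise t-test used throughout this section can be sketched with the Python standard library alone. The sketch assumes a two-sided paired test at the 0.05 level and obtains the p-value by numerically integrating the Student t density with Simpson's rule; the paper does not say which software or test variant was used. The function names and the accuracy values are hypothetical.

```python
import math
import statistics


def t_pdf(x, df):
    """Density of the Student t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_two_sided_p(t_stat, df, steps=2000):
    """Two-sided p-value via Simpson integration of the density on [0, |t|]."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def paired_t_test(a, b):
    """Return (mean difference, t statistic, two-sided p-value) for paired samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    t_stat = mean_diff / (sd / math.sqrt(n))
    return mean_diff, t_stat, t_two_sided_p(t_stat, n - 1)


# Hypothetical accuracies of two classifiers on the same six data sets.
acc_a = [0.98, 0.97, 0.99, 0.96, 0.98, 0.97]
acc_b = [0.95, 0.96, 0.97, 0.93, 0.96, 0.94]
mean_diff, t_stat, p_value = paired_t_test(acc_a, acc_b)
print("significant" if p_value <= 0.05 else "not significant", round(mean_diff, 4))
```

The sign of the mean difference indicates which technique performed better on average, which is how the mean difference tables are used alongside the p-values.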

9.5.2 Comparison of results with different Anti-Virus scanners

Although our proposed framework, LSSVM with RBF kernel (i.e., FSDroid), gives a better detection rate than the machine learning techniques used in the literature, it must ultimately be compared with the common anti-virus products used in practice for Android malware detection. For this experiment, we selected 10 different anti-virus scanners available in the market and applied them to our collected data set.Footnote 31 The performance of our proposed framework is better than that of the anti-virus scanners available in the market. Table 19 shows the results of the experiment with the anti-virus scanners. The detection rate of the anti-virus scanners varies considerably. The best anti-virus scanner detected 97.1% of the Android malware, while certain scanners identified only 82% of the malicious samples, likely because they are not specialized in detecting Android malware. Our proposed framework, FSDroid, achieves a detection rate of 98.8% and, since the best scanner reaches 97.1%, outperforms all 10 anti-virus scanners. From this, we can say that our proposed framework detects malware more effectively than the manually created signatures of many anti-virus scanners. In addition, we compared the complexity of FSDroid with that of the anti-virus scanners. The experiment was performed on 1000 distinct real-world Android apps, and the empirical results reveal that our proposed framework detects malware-infected apps in less time than the anti-virus scanners.

9.5.3 Detection of known and unknown malware families

Detection of known malware families

In this section, we check whether our proposed framework is able to detect malware of known families. For this experiment, we selected 20 samples from each family (in our study, we consider samples of 81 different families, shown in Table 20) and trained our selected model on them. LSSVM with the RBF kernel is able to detect an average of 98.7% of malware apps. The names of the families and the samples used for each family can be found in Table 20, and the detection rate of our proposed framework for each family is illustrated in Figs. 15a and b.

Detection of unknown malware families

To check whether LSSVM with the RBF kernel is able to detect unknown malware families, we trained our proposed framework on a random selection of 10 different families, obtained by the principle of counting, and tested it on the remaining 71 families present in the data set. Table 21 shows the results of LSSVM with the RBF kernel when trained with the 10 selected families. From Table 21, we can say that when LSSVM with the RBF kernel is trained with samples from a small number of known families, which are sufficient to generalize the behavior of most malware families, it gives a better detection rate.

In summary, our proposed framework detects Android malware more effectively than several anti-virus scanners that regularly update their signature definitions. In addition, our proposed framework identifies Android malware efficiently even when it is trained with a limited number of malware families.
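The unknown-family protocol (train on 10 randomly chosen families, test on the remaining 71) amounts to a split by family rather than by sample. The sketch below illustrates that split only; it assumes each sample carries a family label, uses placeholder family and app names, and uses a fixed random seed for repeatability. None of these details come from the paper, and the classifier itself is out of scope here.

```python
import random


def split_by_family(samples, n_train_families, seed=0):
    """Split (family, sample_id) pairs so that train and test share no family."""
    families = sorted({family for family, _ in samples})
    if not 0 < n_train_families < len(families):
        raise ValueError("n_train_families must be between 1 and len(families) - 1")
    rng = random.Random(seed)  # fixed seed: an assumption, for repeatability
    train_families = set(rng.sample(families, n_train_families))
    train = [s for s in samples if s[0] in train_families]
    test = [s for s in samples if s[0] not in train_families]
    return train, test, train_families


# Placeholder data: 81 families with 20 samples each (names are hypothetical).
samples = [(f"family_{i:02d}", f"app_{i:02d}_{j:02d}")
           for i in range(81) for j in range(20)]
train, test, train_families = split_by_family(samples, n_train_families=10)
print(len(train_families), "training families,", len(test), "test samples")
```

Splitting by family keeps every test sample in a family the model has never seen, which is what distinguishes this experiment from the known-family evaluation.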

9.5.4 Experimental findings

The comprehensive conclusion of our experimental work is presented in this section. The empirical study was conducted for thirty different categories of Android apps by considering LSSVM with three distinct kinds of kernel functions i.e., linear, RBF and polynomial. On the basis of the experimental results, this research paper is able to answer the questions mentioned in Section 2.

-

RQ1: In this paper, we applied three distinct machine learning algorithms to build a model that detects whether an app is benign or malware. On the basis of Tables 8-13, it can be inferred that the model built using LSSVM with the RBF kernel, with the feature set selected by FS4 as input, gives better outcomes than the others.

-

RQ2: To answer RQ2, Fig. 15 and Tables 18 and 19 were analyzed. It is found that the model built using LSSVM with the RBF kernel is able to detect malware in real-world apps.

-

RQ3: In the present paper, four distinct feature subset selection approaches and six distinct feature ranking approaches are used to identify a smaller subset of features. Using these approaches, we considered the best possible subsets of features for building a model that identifies whether an app is benign or malware. On the basis of Tables 8-13, in a number of cases a reduced subset of features is better for building a detection model than the full set of extracted features.

-

RQ4: In the present paper, six distinct feature ranking approaches are used to find a reduced subset of features. On the basis of the t-test study, feature selection using PCA (i.e., the FR6 approach) gives better outcomes than the other approaches.

-

RQ5: In this paper, four distinct feature subset selection approaches are used to find a reduced subset of features. On the basis of the t-test study, feature selection using FS4 gives clearly better outcomes than the other approaches.

-

RQ6: In this work, a pair-wise t-test is used to identify whether feature subset selection approaches perform better than feature ranking approaches or whether both perform equally well. On the basis of the t-test outcomes, it is seen that there is a significant difference between the feature subset selection and feature ranking approaches. Moreover, the value of the mean difference shows that feature ranking gives better results than the feature subset selection approaches.

-

RQ7: On the basis of Section 9, we can observe that the performance of the feature selection approaches varies with the machine learning technique used. Further, it is also observed that the choice of machine learning algorithm for building a model that detects whether an app is malware or not depends on the feature selection approach.

10 Threat to validity

In this section, the threats to validity encountered while performing the experiments are presented and discussed:

-

i.

Construct validity: In this work, the presented malware detection models only detect whether an app is benign or malware; they do not state how many permissions and API calls are required to detect malware.

-

ii.

External Validity: Cyber-criminals develop malware on a daily basis to misuse user information. In this work, we considered 81 different malware families to train the model, and our proposed model is able to detect malware from known and unknown families. Further research can extend the training to more malware families so that the model can detect more malware apps in the real world.

-

iii.

Internal Validity: The third threat lies in the consistency of the data used in this study. We collected data from the different promising repositories mentioned in Section 2.3. Any errors in the information that are not mentioned in the sources were not considered in this work. Although we cannot claim that the data considered for the experiments is 100% accurate, we believe that it has been collected consistently.

11 Conclusion

This work focuses on designing a malware detection framework that uses a selected set of features to identify whether an Android app belongs to the malware class or the benign class. The experiments were performed on thirty different categories of Android apps.

Our findings from the experiments are as follows:

-

Empirical results indicate that it is feasible to determine a small subset of features. The malware detection model built from this selected set of features is able to distinguish malware from benign apps with fewer misclassification errors and better accuracy.

-