Abstract

The semidiscretization of a sound soft scattering problem modelled by the wave equation is analyzed. The spatial treatment is done by integral equation methods. Two temporal discretizations based on Runge–Kutta convolution quadrature are compared: one relies on the incoming wave as input data and the other one is based on its temporal derivative. The convergence rate of the latter is shown to be higher than previously established in the literature. Numerical results indicate sharpness of the analysis. The proof hinges on a novel estimate on the Dirichlet-to-Impedance map for certain Helmholtz problems. Namely, the frequency dependence can be lowered by one power of \(\left| s\right| \) (up to a logarithmic term for polygonal domains) compared to the Dirichlet-to-Neumann map.


1 Introduction

Boundary element methods have established themselves as one of the standard methods for scattering problems, especially if the domain of interest is unbounded. First introduced for stationary problems, these methods have steadily been extended to time-dependent problems, beginning with the seminal works [3, 4]; see [32] for an overview. The method of convolution quadrature (CQ), introduced by Lubich in [22, 23], is a convenient way of extending stationary results to a time-dependent setting.

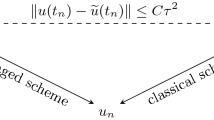

It is well known that the convergence rate of a Runge–Kutta convolution quadrature (RK-CQ) as introduced in [20] is determined by bounds on the convolution symbol K in the Laplace domain. Namely, a bound of the form

$$\begin{aligned} \left\| K(s)\right\| \le C \left| s\right| ^{\mu } \end{aligned}$$

leads to convergence rate \(q+1-\mu \), with q the stage order of the RK-method, as was proven in [7]; see also [5, 20] for earlier results in this direction. Thus one might expect that replacing the symbol by \(s^{-1} K(s)\) increases the convergence order by one.

When considering discretizations of the wave equation using boundary integral methods, this is not always the case. Instead, it has been observed that sometimes a “superconvergence phenomenon” appears, where the observed convergence rate surpasses the predicted one; see [29, 30, 31].

In this paper, we give a first explanation of why such a phenomenon occurs in the model problem of sound soft scattering, i.e., for the discretization of the Dirichlet-to-Neumann map. We expect that similar phenomena can also explain the improved convergence rates for the Neumann problem or for more complex scattering problems. The proof relies on the observation that the \(s^{-1}\)-weighted Dirichlet-to-Neumann map can be decomposed into a Dirichlet-to-Impedance map plus the identity operator. For the Dirichlet-to-Impedance operator, it was observed in [2] that an improved bound, compared to the Dirichlet-to-Neumann map, holds if the geometry is a sphere or a half-space, and it was conjectured there that a similar bound holds for smooth, convex geometries. Restricting the Laplace parameter s to a sector, we generalize this result to a much broader class of geometries, namely smooth or polygonal ones, without any convexity assumption. This immediately yields the stated improved bound for the convolution quadrature discretization of the scattering problem. For polygons, the result holds in a slightly weaker form, as it contains an additional logarithmic factor.

As a consequence of this observation, it may often be beneficial to select a problem formulation with an extra time derivative. In many situations, such formulations are even the natural choice, see, e.g., [6, 8, 9], and especially when one works with the wave equation as a first order system as in [31].

Another way of looking at this phenomenon is that, when using a standard formulation (i.e., taking \(\lambda ^k\) as in Proposition 3.2 without a time derivative on the data), the discrete integral exhibits a superconvergence effect.

We point out that the present paper focuses on a semidiscretization of the problem with respect to the time variable. For practical purposes, one would also have to take into account the discretization in space using boundary elements. We also mention that, while popular, CQ is only one way of applying boundary integral techniques to wave propagation problems; notably, space-time methods have also gained popularity in recent years [13, 14, 18].

2 Model problem and notation

We consider a sound soft scattering problem for acoustic waves. For a bounded Lipschitz domain \(\varOmega ^- \subseteq \mathbb {R}^d\) with \(\varOmega ^+:=\mathbb {R}^d{\setminus } \overline{\varOmega ^-}\), the problem reads: Find \(u^{\mathrm {tot}}\) such that

Here \(u^{\mathrm {inc}}\) is a given incoming wave, i.e., \(u^{\mathrm {inc}}\) also solves the wave equation, and we assume that for \(t\le 0\) it has not reached the scatterer yet. The problem can be recast by decomposing the total wave into the incoming and outgoing wave, \(u^{\mathrm {tot}}=u^{\mathrm {inc}}+ u\), where u solves:

This will be the problem we are discretizing. For simplicity, we consider two cases: either the bounded Lipschitz domain \(\varOmega ^- \subseteq \mathbb {R}^d\) has a smooth boundary, or \(\varOmega ^- \subseteq \mathbb {R}^2\) is a polygon. While we expect that the results and techniques can be generalized to piecewise smooth geometries, such an extension would considerably increase the level of technicality of the present paper. Although we focus on the exterior scattering problem as our motivating model problem, all of the main results also hold for the interior Dirichlet problem.

We end the section by fixing some notation. We write \(H^{m}(\varOmega ^\pm )\) for the usual (complex valued) Sobolev spaces on \(\varOmega ^+\) or \(\varOmega ^-\). On the interface \(\varGamma :=\partial \varOmega ^{-}\) we also need fractional spaces \(H^{s}(\varGamma )\) for \(s \in [-1,1]\), see, e.g., [1, 24] for precise definitions. We also set \(H^{1}_{\varDelta }(\varOmega ^\pm ):=\{u \in H^1(\varOmega ^\pm ): \; \varDelta u \in L^2(\varOmega ^\pm )\}\). We write \(\gamma ^\pm : H^1(\varOmega ^\pm ) \rightarrow H^{1/2}(\varGamma )\) for the exterior and interior trace operator, and \(\partial _n^{\pm }: H^{1}_{\varDelta }(\varOmega ^\pm ) \rightarrow H^{-1/2}(\varGamma )\) for the normal derivative. We note that in both cases, we take the normal to point out of the bounded domain \(\varOmega ^-\). We write \({\llbracket \gamma u \rrbracket }:=\gamma ^+ u - \gamma ^- u\) and \(\left\{ \!\!\left\{ \gamma u \right\} \!\! \right\} :=\frac{1}{2}\left( \gamma ^+ u + \gamma ^- u\right) \) for the trace jump and mean, and \({\llbracket \partial _n u \rrbracket }:=\partial _n^+ u - \partial _n^- u\) for the jump of the normal derivative.

The notation \(A \lesssim B\) abbreviates \(A \le C B\) with implied constants independent of critical parameters, in particular the parameter s that appears throughout this work. For (relatively) open sets \(\mathcal {O}\) we introduce the \(L^2(\mathcal {O})\) scalar product \((u,v)_{L^2(\mathcal {O})}:= \int _{\mathcal {O}} u {\overline{v}}\).

2.1 Boundary integral methods and convolution quadrature

It is well-known that scattering problems of the form presented in Sect. 2 can be solved by employing boundary integral methods, see [32] for a detailed time domain treatment. For the frequency domain, results can be found in most textbooks on the subject, see [15, 16, 24, 33, 34].

The use of boundary integral methods for discretizing the time domain scattering problem dates back to the works [3, 4], where also important Laplace domain estimates of the form (3.2) were first shown.

For \(s \in \mathbb {C}_+:=\big \{z \in \mathbb {C}: \,{\text {Re}}(z) > 0\big \}\), we introduce the single and double layer potentials

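where \(\Phi \) is the fundamental solution for the operator \(-\varDelta + s^2\):

$$\begin{aligned} \Phi (z):={\left\{ \begin{array}{ll} \frac{i}{4} H_0^{(1)}\big (i s\left| z\right| \big ), & d=2,\\ \frac{e^{-s\left| z\right| }}{4\pi \left| z\right| }, & d=3; \end{array}\right. } \end{aligned}$$

here \(H_0^{(1)}\) denotes the Hankel function of the first kind and order zero, see [24, Chap. 9]. Finally, we introduce the boundary integral operators induced by the potentials: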

In practice, these operators can be realized explicitly as integrals over the boundary \(\varGamma \) since for sufficiently smooth functions \(\psi \), \(\varphi \) the following equations hold:

The operator we consider for discretizing (2.1) is the Dirichlet-to-Neumann map.

Definition 2.1

For \(s \in \mathbb {C}_+\), given \(g \in H^{1/2}(\varGamma )\), let u solve

We then define the operators

In practice, the following well-known proposition gives an explicit way to calculate \({\text {DtN}}\).

Proposition 2.2

(see, e.g., [21, Appendix 2]) The Dirichlet-to-Neumann map can be written as

RK-CQ was introduced by Lubich and Ostermann in [20]. It provides a simple and general way of approximating convolution integrals by a high order method and has the great advantage that only the Laplace transform of the convolution symbol is required. We only very briefly introduce the method and notation.

Let K be a holomorphic function in the half plane \({\text {Re}}(s)> \sigma _0 >0\). Let \({\mathscr {L}}\) denote the Laplace transform and \({\mathscr {L}}^{-1}\) its inverse. We (formally) introduce the operational calculus by defining

$$\begin{aligned} K(\partial _t) g :={\mathscr {L}}^{-1}\big (K\, {\mathscr {L}} g\big ), \end{aligned}$$

where \(g \in {\text {dom}}\left( K(\partial _t)\right) \) is such that the inverse Laplace transform exists and the expression above is well defined.

For a Runge–Kutta method given by the Butcher tableau \(A\), \(b^T,\) \(c\), the convolution quadrature approximation of \(K(\partial _t)\) with time step size \(k>0\) is given, for any function \(g: \mathbb {R}\rightarrow \mathbb {R}\) with \(g(t)=0\) for \(t \le 0\), by the expression

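where the matrix valued function \(\varDelta \) is given by

$$\begin{aligned} \varDelta (\zeta ):=\Big (A+\frac{\zeta }{1-\zeta }\mathbb {1}b^T\Big )^{-1}. \end{aligned}$$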

The extension to operator valued functions K is straightforward. In practice, we only consider evaluating \(K(\partial _t^k) g\) at the discrete time steps \(t_j:=j\,k\).
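The following Python sketch illustrates RK-CQ for a scalar symbol; it is not the authors' implementation. It assumes the two-stage Radau IIA method, the standard matrix function \(\varDelta (\zeta )=(A+\frac{\zeta }{1-\zeta }\mathbb {1}b^T)^{-1}\), weights defined by \(K(\varDelta (\zeta )/k)=\sum _j W_j\zeta ^j\), and the convention that the n-th stage vector \(\sum _{j\le n}W_{n-j}\,g(t_j+c\,k)\) approximates \(K(\partial _t)g\) at the stage times \(t_n+c_i k\). The weights are approximated by the trapezoidal rule on a circle of radius \(\rho <1\) (a common implementation choice; the tolerance `tol` is a hypothetical parameter). The names `delta`, `cq_weights`, and `rk_cq` are illustrative.

```python
import numpy as np

# Two-stage Radau IIA tableau (p = 3, q = 2); stiffly accurate, c_m = 1.
A = np.array([[5 / 12, -1 / 12],
              [3 / 4, 1 / 4]])
b = np.array([3 / 4, 1 / 4])
c = A.sum(axis=1)            # c = A 1 = (1/3, 1)
m = len(b)


def delta(zeta):
    """Delta(zeta) = (A + zeta / (1 - zeta) * 1 b^T)^{-1}."""
    return np.linalg.inv(A + zeta / (1 - zeta) * np.outer(np.ones(m), b))


def cq_weights(K_of_matrix, k, N, tol=1e-10):
    """Approximate W_0, ..., W_N in K(Delta(zeta) / k) = sum_j W_j zeta^j.

    K_of_matrix maps an m x m matrix S to the matrix function K(S).
    The Cauchy integrals for the Taylor coefficients are discretized by the
    trapezoidal rule on |zeta| = rho with rho**L = tol, evaluated by one FFT.
    """
    L = 2 * (N + 1)
    rho = tol ** (1.0 / L)
    zetas = rho * np.exp(2j * np.pi * np.arange(L) / L)
    vals = np.array([K_of_matrix(delta(z) / k) for z in zetas])  # (L, m, m)
    coeffs = np.fft.fft(vals, axis=0) / L
    coeffs *= (rho ** -np.arange(L))[:, None, None]               # undo radius
    return coeffs[: N + 1]


def rk_cq(K_of_matrix, g, T, N):
    """RK-CQ approximation of (K(d_t) g)(t_n) for n = 1, ..., N, k = T / N.

    Convention (an assumption of this sketch): the n-th stage vector
    sum_j W_{n-j} g(t_j + c k) approximates the values at t_n + c_i k.
    Since c_m = 1, its last entry approximates the value at t_{n+1}.
    """
    k = T / N
    W = cq_weights(K_of_matrix, k, N)
    G = np.array([g(j * k + c * k) for j in range(N)])            # (N, m)
    out = np.empty(N, dtype=complex)
    for n in range(N):
        stages = sum(W[n - j] @ G[j] for j in range(n + 1))
        out[n] = stages[-1]
    return out.real    # real data and real symbol: imaginary part is round-off


# K(s) = 1/s (time integration), applied to a matrix argument as its inverse.
errors = []
for N in (10, 20):
    approx = rk_cq(np.linalg.inv, np.sin, 1.0, N)
    errors.append(abs(approx[-1] - (1 - np.cos(1.0))))
print(errors[0] / errors[1])   # roughly 2**3 = 8

# Constant symbol K(s) = 2.5: reproduces 2.5 * g(t_n) up to round-off.
print(rk_cq(lambda S: 2.5 * np.eye(m), np.sin, 1.0, 10)[:3])
```

With \(K(s)=s^{-1}\) the scheme reduces to Radau IIA quadrature of \(\int _0^t g\), so halving k should reduce the error at t = 1 by roughly \(2^3\) (classical order p = 3). With a constant symbol the weights are \(W_j=\delta _{j,0}B\), and the output reproduces \(B\,g(t_n)\) up to round-off because \(c_m=1\); this is the exactness of constant symbols used in the proof of Theorem 3.3.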

We make the following assumptions on the Runge–Kutta method, which are slightly stronger than those in [7].

Assumption 2.3

(i) The Runge–Kutta method is A-stable with (classical) order \(p\ge 1\) and stage order \(q\le p\).

(ii) The stability function \(R(z):=1+z b^T({\text {I}}- z A)^{-1} \mathbb {1}\) satisfies \(\left| R(it)\right| <1\) for \(0\ne t \in \mathbb {R}\).

(iii) The Runge–Kutta coefficient matrix \(A\) is invertible.

(iv) The method is stiffly accurate, i.e., \(b^T A^{-1}=(0,\dots ,0,1)\).

Remark 2.4

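Assumption 2.3 is satisfied by the Radau IIA and Lobatto IIIC methods, see [17]. Also note that \(c_{m}=1\) for such methods: with the row-sum condition \(c=A\mathbb {1}\) and the first order condition \(b^T\mathbb {1}=1\), stiff accuracy gives \(c_{m}=b^T A^{-1}A\mathbb {1}=b^T\mathbb {1}=1\). \(\square \)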
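Assumption 2.3 and the identity \(c_m=1\) can be checked numerically for a concrete method. The sketch below uses the two-stage Radau IIA tableau (textbook data, not taken from this paper), checks (iii), (iv) and \(c_m=1\) directly, and checks (ii) only at finitely many sample points, so it is a sanity check rather than a proof; A-stability (i) is not tested. For this method one also has the closed form \(R(z)=(1+z/3)/(1-2z/3+z^2/6)\), which gives \(\left| R(it)\right| ^2=(1+t^2/9)/(1+t^2/9+t^4/36)<1\) for \(t\ne 0\).

```python
import numpy as np

# Two-stage Radau IIA tableau: classical order p = 3, stage order q = 2.
A = np.array([[5 / 12, -1 / 12],
              [3 / 4, 1 / 4]])
b = np.array([3 / 4, 1 / 4])
c = A @ np.ones(2)          # row-sum condition c = A 1, here c = (1/3, 1)


def stability_function(z):
    """R(z) = 1 + z b^T (I - z A)^{-1} 1 for a complex scalar z."""
    m = len(b)
    return 1 + z * (b @ np.linalg.solve(np.eye(m) - z * A, np.ones(m)))


def check_assumption_2_3():
    """Check (iii), (iv) and c_m = 1 directly, and (ii) at sample points only.

    A-stability, i.e. (i), is not tested here.
    """
    invertible = abs(np.linalg.det(A)) > 1e-12                    # (iii)
    stiffly_accurate = np.allclose(b @ np.linalg.inv(A), [0, 1])  # (iv)
    last_node_is_one = np.isclose(c[-1], 1.0)                     # Remark 2.4
    t = np.logspace(-2, 3, 400)                                   # excludes t = 0
    on_axis = all(abs(stability_function(sign * 1j * ti)) < 1
                  for ti in t for sign in (1, -1))                # (ii), sampled
    return (bool(invertible), bool(stiffly_accurate),
            bool(last_node_is_one), bool(on_axis))


print(check_assumption_2_3())   # (True, True, True, True)
```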

Our analysis will employ the following result on RK-CQ using Laplace domain estimates:

Proposition 2.5

([7, Thm. 3]) Assume that K is holomorphic in the half plane \({\text {Re}}(s)> \sigma _0 >0\) and that there exist \(\mu _1,\mu _2 \in \mathbb {R}\) such that K(s) satisfies the following bounds for all \(\delta >0\):

Assume that the Runge–Kutta method satisfies Assumption 2.3. Let \(r > \max \big (p+\mu _1,p,q+1\big )\) and \(g \in C^{r}([0,T])\) satisfy \(g(0)={\dot{g}}(0)=\dots = g^{(r-1)}(0)=0\). Then there exists \({\bar{k}} >0\) such that for \(0<k<{\bar{k}}\),

The constant C depends on \(t_n\), \(\sigma _0\), \({\bar{k}}\), the constants \(C_{\sigma _0}\), \(C_{\delta }\), and the Runge–Kutta method.

3 Main results

To simplify the notation, we introduce a symbol for the sectors in Proposition 2.5. Throughout this work we fix \(\sigma _0>0\) and \(\delta >0\) and set

Remark 3.1

The choice of \(\sigma _0>0\) and \(\delta >0\) in the definition of \({\mathscr {S}}\) is arbitrary, and all our estimates will hold for any choice, although all the constants will depend on \(\sigma _0\) and \(\delta \). \(\square \)

We are now able to state the main result of the paper. We start by stating the standard convergence result for discretizing the Dirichlet-to-Neumann map.

Proposition 3.2

(Standard method) Let \(g \in C^{r}([0,T],H^{1/2}(\varGamma ))\) for some \(r > p+2\) and \(g(0)={\dot{g}}(0)=\dots = g^{(r)}(0)=0\). Let \(\lambda :={\text {DtN}}^\pm (\partial _t) g\) be the exact normal derivative and \(\lambda ^k:={\text {DtN}}^\pm (\partial _t^k) g\) denote the standard CQ-approximation. There exists a constant \( \overline{k}>0\) such that the following estimate holds for \(0\le t \le T\) and \(0<k<{\overline{k}}\):

with a constant C(T) depending on the terminal time T, the Runge–Kutta method, \(\varGamma \), and \({\overline{k}}\).

Proof

Follows from the well-known bound

(see for example [21]) and Proposition 2.5. \(\square \)

We will observe numerically in Sect. 5 that Proposition 3.2 is essentially sharp. Thus, when considering the differentiated equation, one expects the order to increase by one, which follows directly from Proposition 2.5. For the Dirichlet-to-Neumann map, however, the increase in order is even greater, provided that the data are slightly more regular.

Theorem 3.3

(Method based on differentiated data) Let \(r > p+2\). Let \(g \in C^{r}\big ([0,T],H^1(\varGamma )\big )\) satisfy \(g(0)={\dot{g}}(0)=\dots = g^{(r)}(0)=0\). Let \(\lambda :={\text {DtN}}^\pm (\partial _t) g\) be the exact normal derivative and \(\lambda ^k:=[[\partial _t^k]^{-1}{\text {DtN}}^\pm (\partial _t^k)] {\dot{g}}\) denote the CQ-approximation using \({\dot{g}}\) as input data. Then, for all \(\varepsilon > 0\), there exists a constant \( \overline{k}>0\) such that the following estimate holds for \(0\le t \le T\) and \(0<k<{\overline{k}}\):

The constant \(C(T,\varepsilon )\) depends on \(\varepsilon \), the terminal time T, the Runge–Kutta method, \(\varGamma \), and \({\overline{k}}\). If \(\varGamma \) is smooth, one can take \(\varepsilon =0\).

Proof

We apply Proposition 2.5. By linearity, we can write the Dirichlet-to-Neumann operator as

The second operator (in the frequency domain) is independent of s. A simple calculation shows that in such cases, i.e., if \(K(s)=B\) for all s, the convolution weights satisfy \(W_j=\delta _{j,0} B\). Thus, we have

Since the method is stiffly accurate, i.e., \(b^T A^{-1}=(0,\dots ,0,1)\), and \(c_{m}=1\), the operator \({\text {I}}\) is reproduced exactly by the CQ. A similar decomposition was already invoked in [7] to explain a superconvergence phenomenon for a scalar problem. Combining standard estimates, e.g., [21, Table 1], with Theorem 3.4 shows that the Dirichlet-to-Impedance map satisfies

By Proposition 2.5 and by estimating the logarithmic term by \(C |s|^\varepsilon \) for arbitrary \(\varepsilon >0\), we obtain (3.3). \(\square \)
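To make the splitting in the proof explicit, consider the interior case and write, as a shorthand introduced only here, \({\text {DtI}}^-(s):={\text {DtN}}^-(s)-s\,{\text {I}}\) for the Dirichlet-to-Impedance map. This sign convention is an assumption, chosen to match the impedance trace \(\partial _n^- u - s\gamma ^- u\) of Lemma 4.9 (ii) and Corollary 4.11; the exterior case is analogous with \(-s\) in place of \(s\). Then

$$\begin{aligned} s^{-1}{\text {DtN}}^-(s) = s^{-1}\big ({\text {DtN}}^-(s)-s\,{\text {I}}\big ) + {\text {I}} = s^{-1}{\text {DtI}}^-(s) + {\text {I}}. \end{aligned}$$

The constant symbol \({\text {I}}\) contributes no CQ error, so the convergence rate is governed by the Laplace domain bound on \(s^{-1}{\text {DtI}}^-(s)\), which is where Theorem 3.4 enters.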

While Theorem 3.3 is the main motivation for this paper, its proof is based on another result, which may be of independent interest.

Theorem 3.4

Let \(s \in {\mathscr {S}}\). Let \(\varOmega ^{-} \subseteq \mathbb {R}^d\) be a bounded domain with smooth boundary. The following estimate holds for the Dirichlet-to-Neumann map:

If \(\varOmega ^{-} \subseteq \mathbb {R}^2\) is a bounded Lipschitz polygon, then one has

The constant C depends only on \(\varOmega ^-\) and the parameters \(\sigma _0\), \(\delta \) defining the sector \({\mathscr {S}}\).

Proof

Due to its lengthy and technical nature, we defer the proof to Sect. 4. For smooth geometries it is shown as Corollary 4.11. Polygonal domains are handled in Corollary 4.19. \(\square \)

Remark 3.5

The regularity requirement \(g \in H^{1}(\varGamma )\) is stronger than the expected requirement \(g \in H^{1/2}(\varGamma )\). This is due to the construction of the boundary layer function [see (4.15)]. \(\square \)

Since all our results hold for both the interior and exterior problem, we can also easily treat the case of an indirect BEM formulation.

Corollary 3.6

(Indirect formulation) Let \(s\in {\mathscr {S}}\) and assume that \(\varOmega ^{-}\subseteq \mathbb {R}^d\) is smooth. Then, the operator \(V^{-1}(s)-2s\) satisfies the bound

Let \(g \in C^{r}([0,T],H^1(\varGamma ))\) for some \(r > p+2\) with \(g(0)={\dot{g}}(0)=\dots = g^{(r)}(0)=0\). Let \(\varphi :=V^{-1}(\partial _t) g\) be the exact density and \(\varphi ^k:=[[\partial _t^k]^{-1}V^{-1}(\partial _t^k)] {\dot{g}}\) be its CQ-approximation.

Then there exists a constant \(\overline{k}>0\) such that the following estimate holds for \(0\le t \le T\) and \(0<k<{\overline{k}}\):

The constant C(T) depends on T, the Runge–Kutta method, \(\varGamma \), and \({\overline{k}}\).

If \(\varOmega ^{-}\subseteq \mathbb {R}^2\) is a polygon, then

and

where \(\varepsilon >0\) is arbitrary, and \(C(T,\varepsilon )\) depends additionally on \(\varepsilon \).

Proof

We can write \(V^{-1}(s)={\text {DtN}}^-(s) - {\text {DtN}}^+(s)\). Thus the statements follow from Theorems 3.3 and 3.4. \(\square \)
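Spelled out, under the sign conventions of Corollary 4.10 (impedance traces \(\partial _n^- u - s\gamma ^- u\) in \(\varOmega ^-\) and \(\partial _n^+ u + s\gamma ^+ u\) in \(\varOmega ^+\)), the operator in Corollary 3.6 splits into an interior and an exterior Dirichlet-to-Impedance part:

$$\begin{aligned} V^{-1}(s)-2s = \big ({\text {DtN}}^-(s)-s\big ) - \big ({\text {DtN}}^+(s)+s\big ), \qquad s^{-1}V^{-1}(s) = s^{-1}\big (V^{-1}(s)-2s\big ) + 2\,{\text {I}}. \end{aligned}$$

The first identity and the triangle inequality reduce the bound for \(V^{-1}(s)-2s\) to the interior and exterior estimates of Theorem 3.4; in the second, the constant term \(2\,{\text {I}}\) is again reproduced exactly by the CQ.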

4 Proofs

The proof of Theorem 3.4 hinges on three main observations, which require some technical work to be made rigorous:

1. In 1d on \(\mathbb {R}_+\), the interior Dirichlet-to-Neumann map is given by \(g \mapsto s g\).

2. The poor s-dependence of the existing \({\text {DtN}}\)-estimate is mainly caused by boundary layers.

3. Boundary layers are essentially a 1d phenomenon, so observation 1 applies.
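Observation 1 can be verified by hand; the following 1d computation only illustrates observations 1 and 3 and is not part of the proof. For \(s\in \mathbb {C}_+\) and \(g\in \mathbb {C}\), the decaying solution of \(-u''+s^2u=0\) on \((0,\infty )\) with \(u(0)=g\) is \(u(x)=g\,e^{-sx}\); the outer normal at \(x=0\) is \(-1\), so

$$\begin{aligned} \partial _n u(0)=-u'(0)=s\,g, \qquad \partial _n u(0)-s\,u(0)=0, \end{aligned}$$

i.e., the impedance trace vanishes identically. For comparison, on the bounded interval \((0,1)\) with \(u(0)=g\), \(u(1)=0\), one has \(u(x)=g\sinh (s(1-x))/\sinh (s)\) and

$$\begin{aligned} \partial _n u(0)-s\,g = s\big (\coth (s)-1\big )g = \frac{2s}{e^{2s}-1}\,g, \end{aligned}$$

which is exponentially small in \(\left| s\right| \) whenever \({\text {Re}}(s)\sim \left| s\right| \), as in the sector \({\mathscr {S}}\).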

4.1 Preliminaries

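There are many ways of defining fractional order Sobolev spaces. A convenient way of working with them is to introduce them via the real method of interpolation. Given Banach spaces \(\mathcal {X}_1 \subseteq \mathcal {X}_0\) with continuous embedding and parameters \(\theta \in (0,1)\), \(q\in [1,\infty )\), we define the interpolation norm and space as follows:

$$\begin{aligned} K(t,u)&:=\inf _{v \in \mathcal {X}_1} \Big (\left\| u-v\right\| _{\mathcal {X}_0}+t\left\| v\right\| _{\mathcal {X}_1}\Big ), \qquad t>0,\\ \left\| u\right\| _{(\mathcal {X}_0,\mathcal {X}_1)_{\theta ,q}}&:=\Big (\int _0^\infty \big (t^{-\theta }K(t,u)\big )^q\,\frac{dt}{t}\Big )^{1/q},\\ (\mathcal {X}_0,\mathcal {X}_1)_{\theta ,q}&:=\big \{u \in \mathcal {X}_0: \left\| u\right\| _{(\mathcal {X}_0,\mathcal {X}_1)_{\theta ,q}}<\infty \big \}. \end{aligned}$$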

When working with the Helmholtz equation, it is convenient to work with \(\left| s\right| \)-weighted norms:

Definition 4.1

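For an open (or relatively open) set \(\mathcal {O}\), parameters \(s \in \mathbb {C}_+\) and \(\theta \in \{0,1\}\), we define the weighted Sobolev norms

$$\begin{aligned} \left\| v\right\| _{\left| s\right| ,0,\mathcal {O}}:=\left\| v\right\| _{L^2(\mathcal {O})}, \qquad \left\| v\right\| ^2_{\left| s\right| ,1,\mathcal {O}}:=\left\| \nabla v\right\| ^2_{L^2(\mathcal {O})}+\left| s\right| ^2\left\| v\right\| ^2_{L^2(\mathcal {O})}. \end{aligned}\tag{4.2}$$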

For \(\theta \in (0,1)\), the corresponding norms are defined via interpolation. If we want to include homogeneous boundary conditions, we write \( \widetilde{H}^{\theta } (\mathcal {O})\) for the interpolation space between \(L^2(\mathcal {O})\) and \(H^1_0(\mathcal {O})\). If the spaces are equipped with the weighted norms (4.2), we write \(\Vert v \Vert _{|s|,\theta , \sim ,\mathcal {O}}\), for the corresponding interpolation norm.

The dual norms are defined by

For the most part we will be working with the closed surface \(\varGamma \). There, the norms \(\left\| \cdot \right\| _{\left| s\right| ,\theta ,\varGamma }\) and \(\left\| \cdot \right\| _{\left| s\right| ,\theta ,\sim ,\varGamma }\) coincide. By Lemma A.1 we also have for \(\theta \in (0,1)\) and bounded domains \(\mathcal {O}\) the norm equivalence

with implied constants independent of |s|.

We start with some well-known s-explicit estimates for the (modified) Helmholtz equation.

Lemma 4.2

(Well posedness) Let \(s \in {\mathscr {S}}\). The sesquilinear form

associated to \(-\varDelta + s^2\) is elliptic in the sense that, using \(\zeta :=\frac{{\overline{s}}}{\left| s\right| }\), it satisfies

Proof

We calculate:

Since \({\text {Re}}(s) \sim \left| s\right| \) in the sector \({\mathscr {S}}\), this concludes the proof. \(\square \)
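For the reader's convenience, the calculation can be written out as follows, assuming \(a_s(u,v)=(\nabla u,\nabla v)_{L^2(\mathcal {O})}+s^2(u,v)_{L^2(\mathcal {O})}\) on a domain \(\mathcal {O}\) and the weighted norm \(\left\| u\right\| ^2_{\left| s\right| ,1,\mathcal {O}}=\left\| \nabla u\right\| ^2_{L^2(\mathcal {O})}+\left| s\right| ^2\left\| u\right\| ^2_{L^2(\mathcal {O})}\). Using \({\overline{s}}s^2=\left| s\right| ^2 s\),

$$\begin{aligned} {\text {Re}}\big (\zeta \,a_s(u,u)\big ) ={\text {Re}}\Big (\frac{{\overline{s}}}{\left| s\right| }\Big )\left\| \nabla u\right\| ^2_{L^2(\mathcal {O})} +{\text {Re}}\big (\left| s\right| s\big )\left\| u\right\| ^2_{L^2(\mathcal {O})} =\frac{{\text {Re}}(s)}{\left| s\right| }\left\| u\right\| ^2_{\left| s\right| ,1,\mathcal {O}}. \end{aligned}$$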

Lemma 4.3

(Trace estimates) For \({\text {Re}}(s)\ge 0\) and \(\left| s\right| >\sigma _0\), let \(u \in H^1(\varOmega ^\pm )\) satisfy

Then the following estimates hold for the traces of u:

Proof

We start with the normal derivative. For any \(\xi \in H^{1/2}(\varGamma )\) and any v with \(\gamma ^\pm v=\xi \) we calculate:

Next we select v as the minimal energy extension, satisfying

By [32, Prop. 2.5.1], v admits the estimate \(\left\| v\right\| _{\left| s\right| ,1,\varOmega ^\pm }\lesssim \left| s\right| ^{1/2} \Vert \xi \Vert _{H^{1/2}(\varGamma )},\) and (4.5) follows.

For the Dirichlet trace, we get using the multiplicative trace estimate and the same lifting v:

The estimate for the impedance trace then follows trivially. \(\square \)

The previous lemma shows that for a priori estimates in terms of standard Sobolev norms the constants involved have some s dependence. The next lemmas show that the use of the weighted norms introduced in Definition 4.1 avoids such dependencies:

Lemma 4.4

The operators \(\gamma ^\pm : H^{1}(\varOmega ^\pm ) \rightarrow H^{1/2}(\varGamma )\) satisfy the bounds:

Proof

The multiplicative trace estimate and Young’s inequality give

Combining this with the standard trace estimate concludes the proof in view of (4.3). \(\square \)
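A sketch of the \(L^2\)-part of this argument, assuming the multiplicative trace inequality \(\left\| \gamma u\right\| ^2_{L^2(\varGamma )}\lesssim \left\| u\right\| _{L^2(\varOmega ^\pm )}\left\| u\right\| _{H^1(\varOmega ^\pm )}\), the weighted norm \(\left\| u\right\| ^2_{\left| s\right| ,1,\varOmega ^\pm }=\left\| \nabla u\right\| ^2_{L^2(\varOmega ^\pm )}+\left| s\right| ^2\left\| u\right\| ^2_{L^2(\varOmega ^\pm )}\), and \(\left| s\right| \ge \sigma _0\): Young's inequality gives

$$\begin{aligned} \left\| \gamma u\right\| ^2_{L^2(\varGamma )} \lesssim \left\| u\right\| _{L^2}\left\| u\right\| _{H^1} \le \frac{\left| s\right| }{2}\left\| u\right\| ^2_{L^2}+\frac{1}{2\left| s\right| }\left\| u\right\| ^2_{H^1} \lesssim \left| s\right| ^{-1}\Big (\left| s\right| ^2\left\| u\right\| ^2_{L^2}+\left\| \nabla u\right\| ^2_{L^2}\Big ) = \left| s\right| ^{-1}\left\| u\right\| ^2_{\left| s\right| ,1,\varOmega ^\pm }, \end{aligned}$$

where the last \(\lesssim \) uses \(\left\| u\right\| ^2_{L^2}\le \sigma _0^{-2}\left| s\right| ^2\left\| u\right\| ^2_{L^2}\). Hence \(\left| s\right| ^{1/2}\left\| \gamma u\right\| _{L^2(\varGamma )}\lesssim \left\| u\right\| _{\left| s\right| ,1,\varOmega ^\pm }\) with constants independent of \(\left| s\right| \).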

Lemma 4.5

(Dirichlet problem) Let \(g \in H^{1/2}(\varGamma )\), \(f \in L^2(\varOmega ^\pm )\). For any \(s \in {\mathscr {S}}\) there exists a unique solution to the problem

The function satisfies the a priori bound

The implied constant depends only on \(\varOmega ^{\pm }\) and the constants \(\sigma _0\), \(\delta \) characterizing \({\mathscr {S}}\).

Proof

Existence follows from the usual theory of elliptic problems. For the a priori bound, we first note that by [28, Lemma 4.22], there exists a lifting \(u_D\) satisfying

Thus the remainder \({\widetilde{u}}:=u-u_D\) solves:

As the sesquilinear form \(a_s\) from Lemma 4.2 is elliptic, we get with \(\zeta \) defined there

Lemma 4.6

(Neumann problem) Let \(h \in H^{-1/2}(\varGamma )\). Then for every \(s \in {\mathscr {S}}\) there exists a unique solution to the problem

u satisfies the a priori bound

The implied constant depends only on \(\varOmega ^{\pm }\) and on \(\sigma _0\), \(\delta \) characterizing \({\mathscr {S}}\).

Proof

Follows easily from the weak formulation and (4.8). \(\square \)

We also have the following trace inequality in a weighted \(H^{-1/2}\)-norm:

Lemma 4.7

If \(-\varDelta u + s^2 u =0\) we can estimate:

Proof

Follows easily from the weak definition of \(\partial _n^- u \), the Cauchy–Schwarz inequality, and (4.9). \(\square \)

4.2 Smooth geometries

In order to prove a first version of Theorem 3.4, we consider a simplified setting of smooth geometry and Dirichlet trace. Closely following the ideas from [25, 27], we construct a lowest order boundary layer function that will be the basis for all further estimates.

Lemma 4.8

(Boundary fitted coordinates) Let \(T: \mathcal {O} \subseteq \mathbb {R}^{d-1} \rightarrow \varGamma \) be a smooth local parametrization of \(\varGamma \). Define \(F: \mathcal {O} \times (-\varepsilon , \varepsilon ) \rightarrow \mathbb {R}^d\) as

where \(n(\widehat{x})\) is the outer normal vector to \(\varOmega ^-\) at the point \(T(\widehat{x})\).

For \(\varepsilon >0\) sufficiently small, F is a smooth diffeomorphism onto \(F\big (\mathcal {O}\times (-\varepsilon ,\varepsilon )\big )\). It holds that \(F(\mathcal {O} \times (0,\varepsilon )) \subseteq \varOmega ^-\) and \(F(\mathcal {O} \times (-\varepsilon ,0)) \subseteq \varOmega ^+\). Additionally, F satisfies

where \({\widetilde{T}}\) and \({\widetilde{R}}\) are smooth and \({\widetilde{T}}({\widehat{x}})\) is invertible.

Proof

We only show (4.12). We select a smooth orthonormal basis of the tangent space at \(T(\widehat{x})\), denoted by \(e_1(\widehat{x}),\dots , e_{d-1}(\widehat{x})\). This implies that \(Q:=\big (e_1(\widehat{x}), \dots , e_{d-1}(\widehat{x}), n(\widehat{x})\big )\) is orthogonal.

Here \({\widetilde{T}}_1:=(e_1,\dots ,e_{d-1})^T D_{\widehat{x}}T(\widehat{x})\), and thus \(\Vert {{\widetilde{T}}_1}\Vert _2\le \left\| D_{\widehat{x}}T(\widehat{x})\right\| _{2}\). We further compute:

where \(R_1\) collects the remaining terms. For sufficiently small \(\rho >0\), depending only on \(\left\| D_{\widehat{x}}T\right\| _{2}\) and \(\left\| D_{\widehat{x}}n\right\| _{2}\), we can linearize the inverse in (4.13) to get (4.12) with \({\widetilde{T}}:=\big ({\widetilde{T}}_1{\widetilde{T}}_1^T\big )^{-1}\) (the latter inverse exists since \(D_{\widehat{x}} T\) and thus also \({\widetilde{T}}_1\) has full rank). \(\square \)

Lemma 4.9

Assume that \(\varOmega ^-\) has a smooth boundary \(\varGamma \). For any \(s \in {\mathscr {S}}\) and for every \(u \in H^1(\varOmega ^-)\) solving

together with \(\gamma ^- u \in H^2(\varGamma )\) there exists a function \(u_{BL} \in H^1(\varOmega ^-)\) with the following properties:

(i) \(\gamma ^- u_{BL} = \gamma ^- u\),

(ii) \(\partial _n^- u_{BL} - s \gamma ^- u_{BL} =0\),

(iii) \(-\varDelta u_{BL} + s^2 u_{BL} = f\) with

$$\begin{aligned} \left\| f\right\| _{L^2(\varOmega ^-)} \lesssim \left| s\right| ^{1/2}\left\| \gamma ^- u\right\| _{H^1(\varGamma )} + \left| s\right| ^{-1/2} \left\| \gamma ^- u\right\| _{H^2(\varGamma )}. \end{aligned}\tag{4.14}$$

The implied constant depends only on \(\varOmega ^-\) and \(\sigma _0\), \(\delta \) characterizing \({\mathscr {S}}\).

(iv) For \(\varepsilon > 0\) define the set \(\varOmega ^-_\varepsilon :=\{ x \in \varOmega ^-: {\text {dist}}(x,\varGamma ) > \varepsilon \}\). Then the following estimates hold for all \(\ell \in \mathbb {R}\) with constants independent of s:

$$\begin{aligned} \left\| u_{BL}\right\| _{H^{2}(\varOmega ^-_{\varepsilon })}&\le C_{\varepsilon ,\ell } \left| s\right| ^{-\ell }\left\| \gamma ^- u\right\| _{H^2(\varGamma )}. \end{aligned}$$

(v) The analogous statement also holds for the exterior problem in \(\varOmega ^+\), replacing \(-s\) by s in (ii).

Proof

We only show the case of the interior problem and abbreviate \(g:=\gamma ^- u\). We work in boundary fitted coordinates \((\widehat{x},\rho )\) as described in Lemma 4.8. First assume that \({\text {supp}}(g) \subset T(\mathcal {O})\), i.e., g is supported by the part of the boundary parametrized by T. The change of variables formula shows that if u solves \(-\varDelta u + s^2 u=f\), then \(\widehat{u}:=u\circ F\) solves:

with \(J:={{\text {det}}(DF)}\) and \({\widehat{f}} = f \circ F\) (see, e.g., [12, Step 7 of proof of Thm. 4, Sec. 6.3.2]).

On the other hand, if \(\widehat{u}_{BL}\) satisfies

then \(u_{BL}:=\widehat{u}_{BL} \circ F^{-1}\) solves

We set \(\widehat{A}:=DF^{-1} DF^{-T}\) and define with \({\widehat{g}}:= g \circ T\) the function

in the boundary fitted coordinates.

By (4.12), we have \({\widehat{A}}_{d,d} = 1 + \rho {\widetilde{R}}_{d,d}\). Differentiating out we obtain for some smooth functions \(c_{ij}\), \(a_i\), \(b_i\), \(d_i\), and b

where, in the last step, we exploited the definition of \(\widehat{u}_{BL}\). From its definition and the fact that \( \left| s\right| \sim {\text {Re}}(s)\) for \(s \in \mathscr {S}\), one can easily see that \(\widehat{u}_{BL}\) satisfies the estimates

Transforming back gives (4.14) for the part of \(\varOmega ^-\) parametrized by F. Assertion (iv) follows easily from the definition, as the exponential decay dominates all powers of \(\left| s\right| \). This allows us to smoothly cut off \(u_{BL}\) for large \(\rho \) and extend it by 0 to the whole domain. For general g, we use a smooth partition of unity to decompose g into functions with local support. \(\square \)
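To quantify why the exponential decay dominates every power of \(\left| s\right| \), assume (as a simplification for illustration) that on \(\varOmega ^-_\varepsilon \) the boundary layer carries a factor \(e^{-s\rho }\) with \(\rho >\varepsilon \), and that \({\text {Re}}(s)\ge c\left| s\right| \) on \({\mathscr {S}}\) for some \(c>0\), which is the relation \({\text {Re}}(s)\sim \left| s\right| \) used above. For \(\ell >0\),

$$\begin{aligned} \left| s\right| ^{\ell }\left| e^{-s\rho }\right| = \left| s\right| ^{\ell }e^{-{\text {Re}}(s)\rho } \le \left| s\right| ^{\ell }e^{-c\varepsilon \left| s\right| } \le \sup _{x>0} x^{\ell }e^{-c\varepsilon x} = \Big (\frac{\ell }{c\varepsilon e}\Big )^{\ell }, \end{aligned}$$

a bound independent of s. Each derivative of \(e^{-s\rho }\) only produces an extra factor of at most \(\left| s\right| \), which is absorbed in the same way; this is the mechanism behind the constants \(C_{\varepsilon ,\ell }\) in (iv).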

As the next step, we lower the regularity requirement on \(\gamma ^- u\).

Corollary 4.10

Let \(\varOmega ^-\) have a smooth boundary \(\varGamma \). For any \(s \in {\mathscr {S}}\) and for every \(u \in H^1(\varOmega ^-)\) with \(\gamma ^- u \in H^{1}(\varGamma )\) solving

there exists a function \(u_{BL} \in H^2(\varOmega ^-)\) with the following properties:

(i) \(\partial _n^- u_{BL} - s \gamma ^- u_{BL} =0\).

(ii) \( \left\| \partial _n^- (u-u_{BL}) - s\big (\gamma ^- u - \gamma ^- u_{BL}\big )\right\| _{H^{-1/2}(\varGamma )} \lesssim \left\| \gamma ^- u\right\| _{H^{1}(\varGamma )}. \)

(iii) \(\left\| u-u_{BL}\right\| _{\left| s\right| ,1,\varOmega ^-} \lesssim \left| s\right| ^{-1/2}\left\| \gamma ^- u\right\| _{H^{1}(\varGamma )}.\) The implied constants in (ii) and (iii) depend only on \(\varOmega ^-\) and the constants \(\sigma _0\), \(\delta \) characterizing \({\mathscr {S}}\).

(iv) For \(\varepsilon > 0\) introduce \(\varOmega ^-_\varepsilon :=\{ x \in \varOmega ^-:{\text {dist}}(x,\varGamma ) > \varepsilon \}\). Then the following estimates hold for all \(\ell \in \mathbb {R}\) with constants independent of s:

$$\begin{aligned} \left\| u_{BL}\right\| _{H^{2}(\varOmega ^-_{\varepsilon })}&\le C_{\varepsilon ,\ell } \left| s\right| ^{-\ell }\left\| \gamma ^- u\right\| _{H^1(\varGamma )}. \end{aligned}$$

(v) The analogous statement also holds in the case of the exterior problem upon replacing s by \(-s\) in (i) and (ii).

Proof

In order to apply Lemma 4.9, we need \(H^2\)-regularity of \(g:=\gamma ^- u\). We fix a function \({\widetilde{g}} \in H^2(\varGamma )\) with the following properties:

This can be seen either by realizing \(H^1(\varGamma )\) as the interpolation space between \(L^2(\varGamma )\) and \(H^2(\varGamma )\) and using [10, Lemma], or by a direct construction via the usual mollifiers as in [1, Thm. 2.29]: the approximation estimate follows from [1, Eqn (20)] and an interpolation argument. See also [1, Sec. 7.48] on how to trade Sobolev regularity for approximation properties of the mollified function.

Let \({\widetilde{u}}\) denote the solution to

Since \({\widetilde{g}} \in H^2(\varGamma )\), we can apply Lemma 4.9 to construct \(u_{BL}\). Assertion (i) then follows by construction. For (iii) we note that by Lemmas 4.5 and 4.9:

For (ii), we use Lemma 4.3 and (4.9) to get that

Similarly, we have

Assertion (iv) follows directly from Lemma 4.9 (iv) and (4.16). \(\square \)

Corollary 4.11

Let \(\varOmega ^-\subset \mathbb {R}^d\) be smooth and \(s \in {\mathscr {S}}\). Let \(g \in H^1(\varGamma )\) and u solve

Then

The analogous statement holds for the exterior problem upon replacing s by \(-s\) in (4.17).

Proof

Follows by writing \(u=u_{BL} + (u-u_{BL})\). The impedance trace of \(u_{BL}\) vanishes by Corollary 4.10 (i). The impedance trace of the remainder is uniformly bounded with respect to s via Corollary 4.10 (ii). \(\square \)

4.3 Polygons

In this section, we consider a polygonal domain \(\varOmega ^{-}\subset {{\mathbb {R}}}^2\) as an example of a non-smooth domain. In order to match the boundary layer solutions from Lemma 4.9 at corners, we solve an appropriate transmission problem, similarly to what was done in [25]. We refer to Fig. 1b for the geometric situation.

We first need one additional Sobolev space. For a smooth curve \(\varGamma '\) and \(\theta \in [0,1]\), we introduce

where \(d_{\partial \varGamma '}\) denotes the distance to the endpoints of \(\varGamma '\).

4.3.1 A transmission problem in a cone

In this section, we investigate certain transmission problems. These will allow us to match different boundary layer functions in the vicinity of a corner of the domain. We start by investigating the special case of a transmission problem on a sector or an infinite cone. Due to its special structure, we can derive sharper estimates for the normal derivative than what can be obtained from the energy methods used in Lemma 4.16 below.

We introduce some notation. Given \(\omega \in (0,\pi )\), we define the infinite cone

with opening angle \(2 \omega \), and we obtain \(\mathcal {C}^\prime \) by removing its bisector from \(\mathcal {C}\):

Next, we define the sector \(S_{\omega }:=\{(r\cos \varphi ,r\sin \varphi ), \, r\in (0,1), \left| \varphi \right| \in (0,\omega )\}\), which is just the truncated cone \(\mathcal {C} \cap B_{1}(0)\). For its boundary, we write \(\varGamma _{\pm \omega }:=\big \{ (r \cos (\pm \omega ),r \sin (\pm \omega )), \; r\in (0,1)\big \}\) for the two parts of the boundary of the sector that are adjacent to the origin and set \(\varGamma _{S}:= \varGamma _\omega \cup \varGamma _{-\omega }\). Finally, we need to define the normal jump across interfaces. If \(\varGamma '\) denotes a smooth interface separating domains \(\mathcal {O}_1\) and \(\mathcal {O}_2\) we define the normal jump across \(\varGamma '\) via

where the normal vectors \(n_{j}\) are taken to point out of \(\mathcal {O}_j\) respectively.

Lemma 4.12

Consider the solution \(\widehat{u}\in H^1(\mathcal {C})\) to the following problem on the infinite cone for \(\mu >0\) and \(\widehat{s} \in {\mathscr {S}}\) with \(\left| \widehat{s}\right| =1\):

Then, the following statements hold for \(\widehat{u}\):

(i) For each \(\ell \in {{\mathbb {N}}}\) there exist constants \(C_{\ell }\), \(\alpha _{\ell } >0\) such that for all \(r \ge 1\)

$$\begin{aligned} \Vert \widehat{u}\Vert _{W^{\ell ,\infty }(\mathcal {C}^{\prime }{\setminus } B_r(0))}&\le C_{\ell } e^{-\alpha _{\ell } r}. \end{aligned}$$

(ii) There exists a constant \(C>0\) such that the outer normal derivative \(\partial _n \widehat{u}\) satisfies the estimate

$$\begin{aligned} \left\| \partial _n \widehat{u}\right\| _{L^2(\partial \mathcal {C})} + \left\| \partial _n \widehat{u}\right\| _{L^1(\partial \mathcal {C})}&\le C. \end{aligned}\tag{4.21}$$

The constants depend only on the opening angle \(2\omega \), the parameter \(\mu \), and the choices of \(\sigma _0\) and \(\delta \) in the definition of \({\mathscr {S}}\).

Proof

We first show (ii) in Steps 1–3 and then (i) in Step 4.

Step 1: We start with an energy estimate in exponentially weighted spaces, namely, for any \(0<\alpha < \mu {\text {Re}}(\widehat{s})\) the following estimate holds:

with a constant C only depending on \(\alpha \), \(\mu \), \(\omega \), and \(\widehat{s}\).

We fix some notation. We write \(\left\| u \right\| ^2_{1,\alpha }:=\left\| e^{\alpha r}\nabla u\right\| ^2_{L^2(\mathcal {C})} + \left\| e^{\alpha r}u\right\| ^2_{L^2(\mathcal {C})}\), and analogously for \(\left\| u \right\| _{1,-\alpha }\). Also we set \(h(x_1,x_2):=e^{-\widehat{s} \mu x_1}\) for the transmission data.

The proof follows [25, Prop. 6.4.6] verbatim. The sesquilinear form \(B(u,v):=(\nabla u,\nabla v)_{L^2(\mathcal {C})}+ {\hat{s}}^2 (u,v)_{L^2(\mathcal {C})}\) satisfies an inf-sup condition: there is \(c > 0\) depending only on \(\alpha \in [0,1)\) such that

This can be seen by taking, for a given u in the infimum, the function \(v:= {\hat{s}}e^{2\alpha r} u\) in the supremum and performing elementary calculations. Next, we show that \(|(h,\gamma v)_{L^2({{\mathbb {R}}}^+\times \{0\})}| \le C \Vert v\Vert _{1,-\alpha }\). This also follows verbatim from [25, Prop. 6.4.6] using [25, Lemma A.1.8]. Specifically, by [25, Lemma A.1.8] it suffices to ascertain that for \(\alpha < \mu {\text {Re}}(\widehat{s})\) we have

We conclude that the solution \(\widehat{u}\) satisfies \(\Vert \widehat{u}\Vert _{1,\alpha } \le C\) for some constant \(C > 0\) depending only on the choice of \(\alpha < \mu {\text {Re}}(\widehat{s})\).
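For illustration, the restriction \(\alpha < \mu {\text {Re}}(\widehat{s})\) can be traced to an elementary computation with the transmission data \(h(x_1,x_2)=e^{-\widehat{s} \mu x_1}\) on the positive \(x_1\)-axis: its exponentially weighted \(L^2\)-norm is finite exactly in this range. This is only a sketch of the mechanism; the precise condition required by [25, Lemma A.1.8] is not reproduced here.

$$\begin{aligned} \Vert e^{\alpha x_1} h\Vert ^2_{L^2({{\mathbb {R}}}^+\times \{0\})} = \int _0^\infty e^{2\alpha x_1}\big |e^{-\widehat{s}\mu x_1}\big |^2\,dx_1 = \int _0^\infty e^{-2(\mu {\text {Re}}(\widehat{s})-\alpha )x_1}\,dx_1 = \frac{1}{2(\mu {\text {Re}}(\widehat{s})-\alpha )}. \end{aligned}$$

The right-hand side is finite for \(\alpha < \mu {\text {Re}}(\widehat{s})\) and blows up as \(\alpha \) approaches \(\mu {\text {Re}}(\widehat{s})\); for larger \(\alpha \) the integral diverges.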

Step 2: For a ball \(B_\rho (x)\) of radius \(\rho =\mathcal {O}(1)\) around any point \(x \in \mathcal {C}\) with \({\text {dist}}(x,0)> 2\rho \) we can apply standard elliptic regularity (interior regularity, regularity for homogeneous Dirichlet conditions, and regularity for transmission problems—see, e.g., [25, Lemmas 5.5.5, 5.5.7, 5.5.8]) to get

A Besicovitch covering argument by such balls (see, e.g., [25, Lemma 4.2.14] for details), together with local trace estimates, shows that for \(\mathcal {C}_{\infty }:={\mathcal {C}} {\setminus } \overline{B_1(0)}\)

where the implied constant depends only on \(\alpha \), \(\omega \), \(\widehat{s}\), and \(\mu \).

Step 3: We show that \(\left\| \partial _n \widehat{u}\right\| _{L^2(\partial \mathcal {C} \cap B_1(0))}< \infty \). Fix a cut-off function \(\chi \) with \(\chi \equiv 1\) on \(B_1(0)\) and \({\text {supp}}(\chi ) \subseteq B_2(0)\). We consider the following lifting of the jump h using a single layer potential for the Laplacian:

Since \(h \in L^2(({{\mathbb {R}}}^+ \times \{0\}) \cap B_2(0))\), we have by the mapping properties of the single layer potential (see [11, Thm. 2.17]) that \(j|_{\partial ( \mathcal {C} \cap B_2(0))} \in H^1(\partial \mathcal {C} \cap B_2(0))\) with

for some \(C > 0\) depending on \(\mu \) and \({{\hat{s}}}\). The jump relations of the single layer potential (see, e.g., [24, Thm. 6.11]) give \(\llbracket \gamma j \rrbracket =0\) and \(\llbracket \partial _n j\rrbracket =h\) on \((0,2) \times \{0\}\). Since \(-\varDelta j = 0\), \(j \in H^1_{loc}({{\mathbb {R}}}^2)\), and \({\text {supp}} \chi \subset B_2(0)\), we see that \({{\widetilde{u}}}:= \chi (\widehat{u}- j)\) is the \(H^1\)-function solving

Since the right-hand side \({{\widetilde{f}}} \in L^2(\mathcal {C} \cap B_2(0))\) and the Dirichlet boundary conditions are in \(H^1(\partial (B_2(0) \cap \mathcal {C}))\), standard elliptic regularity theory (see, e.g., [24, Thm. 4.24]) shows \(\partial _n {{\widetilde{u}}} \in L^2(\partial (B_2(0)\cap \mathcal {C}))\).

Step 4 (proof of (i)): The 2D Sobolev embedding theorem \(H^2 \subset L^\infty \) and (4.23) show the desired estimate for \(\ell = 0\). The argument leading to (4.23) can be iterated and thus yields the stated estimates for any fixed \(\ell \).

Step 5: Inspection of the proof reveals that all constants depend (if at all) continuously on \({\hat{s}}\). Since we are only interested in \({\hat{s}}\) in a compact set determined by the constants from the definition of \({\mathscr {S}}\), we can make all constants independent of \({{\hat{s}}}\). \(\square \)
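The jump relation \(\llbracket \partial _n j\rrbracket = h\) used in Step 3 can be checked numerically in a simple model. The sketch below assumes the fundamental solution \(G(z) = -\frac{1}{2\pi }\log |z|\) of \(-\varDelta \), a density on the segment \((0,2)\times \{0\}\), and that the normal jump is the sum of the two outward normal derivatives (normals pointing out of the upper and lower half-planes); the density \(e^{-\mu y}\) (taking \(\widehat{s}=1\)) is only an example, and the helper names are hypothetical. The substitution \(y = x_1 + |x_2|\tan \phi \) removes the near-singularity of the kernel.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule (written out to avoid NumPy version differences)."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)

def dj_dx2(x1, x2, h, a=0.0, b=2.0, n=200_001):
    """x2-derivative of j(x) = int_a^b G(x - (y, 0)) h(y) dy, G(z) = -log|z| / (2*pi),
    at a point (x1, x2) with x2 != 0.

    d/dx2 G(x - (y, 0)) = -x2 / (2*pi*((x1 - y)**2 + x2**2)); the substitution
    y = x1 + |x2| * tan(phi) turns the integral into -sign(x2)/(2*pi) * int h dphi.
    """
    eps = abs(x2)
    phi = np.linspace(np.arctan((a - x1) / eps), np.arctan((b - x1) / eps), n)
    return -np.sign(x2) * trapezoid(h(x1 + eps * np.tan(phi)), phi) / (2.0 * np.pi)

def normal_jump(x1, h, eps=1e-5):
    """Sum of the outward normal derivatives of j from the upper half-plane
    (normal (0, -1)) and the lower half-plane (normal (0, +1)), at distance eps."""
    return -dj_dx2(x1, eps, h) + dj_dx2(x1, -eps, h)

mu = 1.0
h = lambda y: np.exp(-mu * y)  # example density e^{-mu*y}, i.e. hat{s} = 1
for x1 in (0.3, 0.7, 1.5):
    print(f"x1={x1}: jump={normal_jump(x1, h):.6f}, h(x1)={h(x1):.6f}")
```

Each printed jump should agree with \(h(x_1)\) up to an error of order \(\varepsilon \).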

Having studied the transmission problem in a dimensionless form in Lemma 4.12, we can transfer the results to the setting we actually require using a scaling argument.

Lemma 4.13

Fix \(\omega \in (0,\pi )\). For \(s \in {\mathscr {S}}\) and \(\mu >0\), let \(u \in H^1(S_\omega )\) solve the transmission problem on the sector \(S_{\omega }\):

Recall that \(\varGamma _S\) denotes the parts of \(\partial S_{\omega }\) adjacent to the origin. Then

The constants \(C>0\) depend only on \(\omega \), the parameter \(\mu \), and the choices of \(\sigma _0\) and \(\delta \) in the definition of \({\mathscr {S}}\).

Proof

We denote by \(\varGamma _{1} = \partial B_1(0) \cap \partial S_\omega \) the circular arc that is part of \(\partial S_\omega \). Write \(s= |s| {{\hat{s}}}\) with \({{\hat{s}}} \in \{{{\hat{s}}} \in {\mathscr {S}}: |{{\hat{s}}}|= 1\}\). Let \(\widehat{u}\) be the function solving

that is given by Lemma 4.12. Then we define \(u_1(x):=\widehat{u}(|s| \,x)\). Lemma 4.12 and a simple scaling argument give the following estimates for \(u_1\) (for any j):

The remainder \(\delta := u-u_1 \in H^1(S_\omega )\) then solves

We note that for this Dirichlet problem with piecewise smooth data that are exponentially small in |s|, Lemma 4.5 gives \(\left\| \delta \right\| _{|s|,1,S_\omega } \lesssim \Vert u_1\Vert _{|s|,1/2,\varGamma _1}\), which is exponentially small in |s|. Applying [24, Thm 4.24] then gives, since \(u_1\) vanishes on \(\varGamma _S\):

which is again exponentially small in |s|. The estimate (4.26) follows. \(\square \)
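The scaling argument used in this proof rests on the change of variables \(y = |s|x\). For \(u_1(x) = \widehat{u}(|s|x)\), a planar region \(D\), and a boundary curve \(E\) (the letters \(D\), \(E\) are introduced only for this remark), the gradient picks up a factor \(|s|\), areas scale by \(|s|^{-2}\), and lengths by \(|s|^{-1}\), so that

$$\begin{aligned} \Vert \nabla u_1\Vert _{L^2(D)}&= \Vert \nabla \widehat{u}\Vert _{L^2(|s|D)},&\Vert u_1\Vert _{L^2(D)}&= |s|^{-1}\Vert \widehat{u}\Vert _{L^2(|s|D)},\\ \Vert \partial _n u_1\Vert _{L^2(E)}&= |s|^{1/2}\Vert \partial _n \widehat{u}\Vert _{L^2(|s|E)},&\Vert \partial _n u_1\Vert _{L^1(E)}&= \Vert \partial _n \widehat{u}\Vert _{L^1(|s|E)}. \end{aligned}$$

Since \(|s|\varGamma _S \subset \partial \mathcal {C}\), estimate (4.21) then yields \(\Vert \partial _n u_1\Vert _{L^2(\varGamma _S)} \lesssim |s|^{1/2}\) and \(\Vert \partial _n u_1\Vert _{L^1(\varGamma _S)} \lesssim 1\): the \(L^1\)-bound on the normal derivative is scale invariant, while the \(L^2\)-bound grows like \(|s|^{1/2}\).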

We need the following modification of [26, Lemma 3.13].

Proposition 4.14

Let \(\mathcal {O}\) be a Lipschitz domain. Define the Besov space (cf. (4.1))

For \(\varepsilon \in (0, 1)\) and every \(w \in H^{1/2}(\mathcal {O})\), there exists a function \(w_{\varepsilon } \in H^1(\mathcal {O})\) with

The constant depends only on the domain \(\mathcal {O}\).

Proof

This is essentially [26, Lemma 3.13]. The only modification needed is that we consider the \(H^{1/2}(\mathcal {O})\)-norm on the right-hand side instead of the \(B^{1/2}_{2,\infty }\)-norm, which is the reason for getting penalized by a factor \(\sqrt{\left| \log (\varepsilon )\right| }\) instead of a factor \(\left| \log (\varepsilon )\right| \) as in [26, Lemma 3.13]. The result follows from the same proof, only noting that one can bound using the Cauchy-Schwarz inequality

and the last factor produces a factor \(1+\sqrt{|\log (\varepsilon )|}\). \(\square \)
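The mechanism behind the factor \(\sqrt{|\log (\varepsilon )|}\) can be illustrated by a periodic Fourier toy model; this is an assumption-laden analogue of the Cauchy–Schwarz step only, not the construction of [26, Lemma 3.13]. For a trigonometric polynomial \(w_N(x) = \sum _{k=1}^{N} c_k \cos (kx)\) with frequencies up to \(N \sim \varepsilon ^{-1}\), Cauchy–Schwarz gives \(|w_N(0)| \le \sum _k |c_k| \le (\sum _k k|c_k|^2)^{1/2}(\sum _{k\le N} k^{-1})^{1/2}\), where the first factor is proportional to the \(H^{1/2}\)-seminorm and the second factor is at most \(\sqrt{1+\log N}\). The hypothetical helper below computes the extremal coefficients \(c_k \propto 1/k\), for which equality holds.

```python
import math
import numpy as np

def extremal_trig_poly(N):
    """Coefficients c_k of w_N(x) = sum_{k=1}^N c_k cos(k x) maximising w_N(0)
    under the constraint sum_k k c_k^2 = 1 (equality case of Cauchy-Schwarz: c_k ~ 1/k)."""
    k = np.arange(1, N + 1, dtype=float)
    harmonic = float(np.sum(1.0 / k))          # H_N = sum_{k <= N} 1/k <= 1 + log N
    return k, (1.0 / k) / math.sqrt(harmonic)

for N in (10, 100, 10_000, 1_000_000):
    k, c = extremal_trig_poly(N)
    seminorm_sq = float(np.sum(k * c**2))      # discrete H^{1/2}-type seminorm squared, = 1
    w0 = float(np.sum(c))                      # w_N(0) = sqrt(H_N)
    print(N, round(seminorm_sq, 12), w0, math.sqrt(1.0 + math.log(N)))
```

With \(N \approx \varepsilon ^{-1}\), the value at the origin grows like \(\sqrt{\log N}\), which mirrors the factor \(1+\sqrt{|\log (\varepsilon )|}\) above.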

Lemma 4.15

Let u solve (4.25). Then, there exists a constant \(C>0\) depending only on \(\omega \), \(\mu \) and the parameters in the definition of \({\mathscr {S}}\) such that

Proof

For \(w \in H^{1/2}(\varGamma )\), select \(w_{\varepsilon }\) as in Proposition 4.14 with \(\varepsilon >0\) to be chosen later. We calculate for \(\varGamma _{\pm \omega }\), i.e., the two parts of \(\partial S_\omega \) adjacent to the origin:

Since \(\varGamma _{\pm \omega }\) is a one-dimensional line segment, we can use the Sobolev embedding [35, Sec. 32] to estimate

Overall, we get using the properties of \(w_{\varepsilon }\) from Proposition 4.14 and the estimates on \(\partial _n u\) from (4.24):

Choosing \(\varepsilon = \left| s\right| ^{-1}\) completes the proof. \(\square \)

We are now in a position to study a more general transmission problem, namely, allowing for Dirichlet jumps and more general Neumann transmission data.

Lemma 4.16

(Transmission problem) Let \(\mathcal {O}\subset \mathbb {R}^2\) be an open Lipschitz domain. Let \(\varGamma ' \subset \mathcal {O}\) be a smooth interface that splits \(\mathcal {O}\) into two disjoint Lipschitz domains \(\mathcal {O}_{1}\) and \(\mathcal {O}_{2}\).

Given \(g \in {\widetilde{H}}^{1/2}(\varGamma ')\), \(h \in H^{-1/2}(\varGamma ')\), there exists a unique solution \(u \in H^1(\mathcal {O}_1 \cup \mathcal {O}_2)\) to the following problem:

Additionally, the following estimate holds:

If \(\mathcal {O}_1\) and \(\mathcal {O}_2\) are polygons, \(\varGamma '\) is a straight line, and h can be decomposed as

for some \(h_1 \in \mathbb {C}\), \(\mu >0\), and \(h_2 \in H^{-1/2}(\varGamma ')\), then \(\partial _n u\) exists pointwise almost everywhere and

Proof

Proof of (4.27): Since \(g \in {\widetilde{H}}^{1/2}(\varGamma ')\) by assumption, we can extend it by 0 to a function \({\widetilde{g}} \in H^{1/2}(\partial \mathcal {O}_1)\) such that (see for example [24, Thm. 3.33])

We solve a Dirichlet problem on \(\mathcal {O}_1\) with data \({\widetilde{g}}\) to obtain \(u_1\) and extend it by 0 to \(\mathcal {O}_2\). Then we solve the following problem on \(\mathcal {O}\): Find \(u_2 \in H_0^1(\mathcal {O})\) such that

where \(\gamma _{\varGamma '}\) denotes the trace operator on \(\varGamma '\). The function \(u:=u_1+u_2\) then solves the transmission problem. The estimate (4.27) follows from Lemma 4.5, which bounds \(u_1\), and, for \(u_2\), from the ellipticity of the sesquilinear form (see Lemma 4.2) together with the trace estimate (Lemma 4.4), which controls the contribution \(\langle {h},{\gamma _{{\varGamma '}} v}\rangle _{\varGamma '}\).
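The two-step construction in the proof of (4.27) (first lift the Dirichlet jump by a solution on \(\mathcal {O}_1\) extended by zero, then correct the Neumann jump by a problem in \(H^1_0(\mathcal {O})\)) can be illustrated in a one-dimensional analogue. This is a simplification, not the setting of the lemma: \(\mathcal {O}=(-1,1)\), \(\mathcal {O}_1=(0,1)\), \(\mathcal {O}_2=(-1,0)\), interface \(\{0\}\), real \(s>0\), and jumps \([w] = w(0^+) - w(0^-)\). If the normal jump is the sum of the two outward normal derivatives, it equals \(-[u'](0)\) here. The second step uses the Dirichlet Green's function \(G(x) = \sinh (s(1-|x|))/(2s\cosh s)\) of \(-\partial _x^2 + s^2\), whose derivative jumps by \(-1\) at the origin; the function name is hypothetical.

```python
import math

def transmission_solution_1d(g, h, s):
    """1D analogue of the construction for (4.27): -u'' + s^2 u = 0 on (-1,0) and (0,1),
    u(-1) = u(1) = 0, with jumps [u](0) = g and [u'](0) = h, where [w] = w(0+) - w(0-).
    Returns callables (u, du). Assumes real s > 0."""
    # Step 1: Dirichlet lift on O_1 = (0,1): u1(0+) = g, u1(1) = 0; extended by 0 to (-1,0).
    def u1(x):
        return g * math.sinh(s * (1 - x)) / math.sinh(s) if x > 0 else 0.0
    def du1(x):
        return -g * s * math.cosh(s * (1 - x)) / math.sinh(s) if x > 0 else 0.0
    jump_du1 = -g * s / math.tanh(s)  # [u1'](0) = u1'(0+) - 0

    # Step 2: u2 in H^1_0(-1,1) with -u2'' + s^2 u2 = c * delta_0, i.e. u2 = c * G,
    # where G is continuous and has [G'](0) = -1.
    c = jump_du1 - h  # chosen so that [u'] = [u1'] - c = h
    def G(x):
        return math.sinh(s * (1 - abs(x))) / (2 * s * math.cosh(s))
    def dG(x):
        return -math.copysign(1.0, x) * math.cosh(s * (1 - abs(x))) / (2 * math.cosh(s))

    return (lambda x: u1(x) + c * G(x)), (lambda x: du1(x) + c * dG(x))

u, du = transmission_solution_1d(g=0.7, h=-1.3, s=3.0)
eps = 1e-12
print("[u](0) ~", u(eps) - u(-eps), " [u'](0) ~", du(eps) - du(-eps))
```

As in the proof, the first step alone produces the correct Dirichlet jump but a wrong Neumann jump, which the second step repairs without changing the Dirichlet jump.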

Proof of (4.28) for \(h_1 = 0\): Introduce \(\mathcal {V}:= \partial \varGamma ^\prime \cup \{\hbox { vertices of}\ \varOmega \}\). Since \(\mathcal {O}_1\) and \(\mathcal {O}_2\) are piecewise smooth, \(u = 0\) on \(\partial \mathcal {O}\), and the right-hand side is homogeneous, the solution u is smooth up to the boundary except at the vertices of \(\varOmega \) and near the interface \(\varGamma ^\prime \). Hence, \(\partial _n u\) exists pointwise everywhere on \(\partial \mathcal {O}{\setminus } \mathcal {V}\). To show the estimate (4.28), we consider test functions \(v \in V:= \{v \in C^\infty (\overline{\mathcal {O}})\,|\, v \text { vanishes in a neighborhood of } \mathcal {V}\}\). Since \(\partial _n u\) exists pointwise on \(\partial \mathcal {O}{\setminus }\mathcal {V}\) and \(v \in V\) vanishes in a neighborhood of \(\mathcal {V}\), the duality pairing \(\langle \partial _n u,v\rangle _{\partial \mathcal {O}}\) is well-defined, and an integration by parts gives

Since V is dense in \(H^1(\mathcal {O})\) (because \(\mathcal {V}\) consists of finitely many points; see [35, Sec. 17]), equation (4.29) holds for all \(v \in H^1(\mathcal {O})\). Given \(\xi \in H^{1/2}(\partial \mathcal {O})\), we select \(v_\xi \in H^1(\mathcal {O})\) with \(v_\xi |_{\partial \mathcal {O}} = \xi \) as the lifting given by (4.7) in Lemma 4.3, which satisfies \(\Vert v_\xi \Vert _{|s|,1,\mathcal {O}} \lesssim |s|^{1/2} \Vert \xi \Vert _{H^{1/2}(\partial \mathcal {O})}\). This implies

By the trace theorem we have \(\Vert v_\xi \Vert _{|s|,1/2,\varGamma '} \lesssim \Vert v_\xi \Vert _{|s|,1,\mathcal {O}}\). Taking the supremum over all \(\xi \in H^{1/2}(\partial \mathcal {O})\) yields (4.28) for the case \(h_1 = 0\).

Proof of (4.28) for \(h_1 \ne 0\): If \(h_1\ne 0\), we lift this contribution separately by solving the corresponding transmission problem (4.25). The estimate follows by applying Lemma 4.15 and a suitable localization. \(\square \)

4.3.2 Corner layers

Before we can bound the functions used to match boundary layers, we must control the jump between two boundary layer solutions. We start with a very simple geometric situation.

Lemma 4.17

Fix \(\omega \in (0,\pi )\). Consider the sector \(S_{\omega }\) and let \(g \in H^1(-1,1)\). Consider the two Cartesian coordinate systems \((\zeta _1,\zeta _2)\) and \(({\widehat{\zeta }}_1,{\widehat{\zeta }}_2)\), each aligned with one of the two straight sides of the sector and oriented such that the points of the bisector \(\{(r,0):r \in (0,1)\}\) have positive \(\zeta _2\)- and \({{\widehat{\zeta }}}_2\)-coordinates. In polar coordinates \((r,\varphi )\) (with \(r > 0\), \(\varphi \in (-\omega ,\omega )\)) these coordinates are given by

For \(\mu >0\) define

(i) On the line segment \(\varGamma ':=\{(r,0):r \in (0,1)\}\) the following estimates hold with \(d_0:={\text {dist}}(\cdot ,(0,0)) = r\):

$$\begin{aligned} \left\| u_1 - u_2\right\| _{\left| s\right| ,1/2,\varGamma '} + \left\| d_{0}^{-1/2}(u_1 - u_2)\right\| _{L^2(\varGamma ')}&\lesssim \left| s\right| ^{-1/2}\left\| g\right\| _{H^{1}(-1,1)}. \end{aligned}\tag{4.31}$$

(ii) The difference of normal derivatives across \(\varGamma '\) can be decomposed as

$$\begin{aligned} \partial _n u_1(x) - \partial _n u_2(x)&= h_1 sg(0) e^{-\mu s \left| x\right| } + h_2(x) \quad \text { with} \\ \left\| h_2\right\| _{\left| s\right| ,-1/2,\varGamma '}&\lesssim \left| s\right| ^{-1/2}\left\| g\right\| _{H^{1}(-1,1)}, \end{aligned}$$

where the orientation of the normal is fixed arbitrarily and \(h_1 \in \mathbb {C}\) is independent of g.

(iii) We have \(\Vert e^{-\mu s |x|}\Vert _{H^{-1/2}(\varGamma ')} \lesssim |s|^{-1}\).
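The splitting in (ii) can be checked numerically once the coordinates are fixed. Since (4.30) and the definitions of \(u_1\), \(u_2\) are not reproduced here, the sketch makes an explicit, hypothetical choice that is consistent with the constants \(c_1 = \cos \omega \), \(c_2 = \sin \omega \) and with the term \(-2sg(0)\mu c_1 e^{-s\mu c_2 r}\) isolated in the proof below: \(\zeta _1 = -r\cos (\omega -\varphi )\), \(\zeta _2 = r\sin (\omega -\varphi )\), \({\widehat{\zeta }}_1 = r\cos (\omega +\varphi )\), \({\widehat{\zeta }}_2 = r\sin (\omega +\varphi )\), with boundary layers \(u_1 = g(\zeta _1)e^{-s\mu \zeta _2}\) and \(u_2 = g({\widehat{\zeta }}_1)e^{-s\mu {\widehat{\zeta }}_2}\). It compares \(r^{-1}(\partial _\varphi u_1 - \partial _\varphi u_2)\) on the bisector, computed by central differences, with the hand-derived split into an \(h_1\)-term and a remainder \(h_2\); the overall sign depends on the orientation of the normal.

```python
import cmath
import math

# HYPOTHETICAL coordinates for this sketch (not quoted from (4.30)):
#   zeta_1 = -r cos(omega - phi), zeta_2 = r sin(omega - phi)
#   hat_zeta_1 = r cos(omega + phi), hat_zeta_2 = r sin(omega + phi)

def layers(g, omega, mu, s, r):
    """Boundary layers u1, u2 as functions of the angle phi at fixed radius r."""
    def u1(phi):
        return g(-r * math.cos(omega - phi)) * cmath.exp(-s * mu * r * math.sin(omega - phi))
    def u2(phi):
        return g(r * math.cos(omega + phi)) * cmath.exp(-s * mu * r * math.sin(omega + phi))
    return u1, u2

def angular_jump_fd(g, omega, mu, s, r, dphi=1e-6):
    """r^{-1} (d/dphi u1 - d/dphi u2) at phi = 0 via central differences."""
    u1, u2 = layers(g, omega, mu, s, r)
    d1 = (u1(dphi) - u1(-dphi)) / (2 * dphi)
    d2 = (u2(dphi) - u2(-dphi)) / (2 * dphi)
    return (d1 - d2) / r

def angular_jump_split(g, dg, omega, mu, s, r):
    """The same jump, differentiated by hand and split into h1-term + remainder h2."""
    c1, c2 = math.cos(omega), math.sin(omega)
    e = cmath.exp(-s * mu * c2 * r)
    h1_term = 2 * s * mu * c1 * g(0.0) * e
    h2 = (-c2 * (dg(-r * c1) - dg(r * c1))
          + s * mu * c1 * (g(-r * c1) + g(r * c1) - 2 * g(0.0))) * e
    return h1_term, h2

g = lambda t: math.cos(t) + t**2       # sample smooth trace
dg = lambda t: -math.sin(t) + 2 * t    # its derivative
omega, mu, s, r = 2.0, 1.0, 10 + 5j, 0.3
h1_term, h2 = angular_jump_split(g, dg, omega, mu, s, r)
print(angular_jump_fd(g, omega, mu, s, r), h1_term + h2)
```

In this model the remainder \(h_2\) only involves differences of g and g' at the points \(\pm rc_1\), so it vanishes as \(r \rightarrow 0\), while the \(h_1\)-term does not; this is why the \(h_1\)-contribution has to be lifted separately by Lemma 4.15.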

Proof

We work in polar coordinates, which are related to the coordinates \((\zeta _1,\zeta _2)\) and \(({{\widehat{\zeta }}}_1,{{\widehat{\zeta }}}_2)\) by (4.30). For brevity of notation we introduce the constants \(c_1:=\cos (\omega )\), \(c_2:=\sin (\omega )\) and note \(c_2>0\).

Proof of (i): We start with the estimate for the Dirichlet jump and calculate on \(\varGamma '\):

We estimate:

An analogous computation gives:

Next we compute the tangential derivative of \({\llbracket \gamma u \rrbracket }\) on \(\varGamma '\):

The first term is handled analogously to the \(L^2\)-term. For the second term we use the crude estimate \(\left| e^{-s \mu r c_2}\right| \lesssim 1\) and get:

Interpolating (4.32) and (4.33) then gives (4.31).

Proof of (ii): In polar coordinates, the normal derivative of a function on \(\varGamma ^\prime \) is (up to the sign) given by \(\partial _{n_{\varGamma '}} u = r^{-1}\frac{\partial u}{\partial \varphi }\). Thus it suffices to estimate the angular derivatives.

On \(\varGamma '\), we calculate for the angular derivative:

After subtracting the contribution \(- 2 s g(0) \mu c_1 e^{-s \mu c_2 r}=: h_1 s g(0) e^{-s \mu c_2 r}\) from the second term, the two terms are structurally similar to the derivative of \({\llbracket \gamma u \rrbracket }\). Hence, we analogously get for \(h_2:={\llbracket \partial _n u \rrbracket } - h_1 s g(0) e^{-s \mu c_2 r}\):

To control \(\Vert h_2\Vert _{|s|,-1/2,\varGamma '}\) we calculate for \(\xi \in H^{1/2}(\varGamma ')\):

Proof of (iii): We identify \(\varGamma '\) with the interval (0, 1). A direct calculation shows \(\Vert e^{-\mu s r}\Vert _{L^2(0,1)} \lesssim |s|^{-1/2}\). A test function \(v \in H^1_0(0,1)\) can be represented as \(v(r) = \int _0^r v^\prime (t)\,dt\). Hence, an integration by parts yields

Thus, \(\Vert e^{-\mu s r}\Vert _{H^{-1}(0,1)} \lesssim |s|^{-3/2}\). Interpolating between this bound and the bound \(\Vert e^{-\mu s r}\Vert _{L^2(0,1)} \lesssim |s|^{-1/2}\) yields \(\Vert e^{-\mu s r}\Vert _{H^{-1/2}(0,1)} \lesssim |s|^{-1}\). \(\square \)
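The rates in the proof of (iii) can be checked with closed-form expressions. The sketch takes s on rays \(s = t e^{i\phi }\) with \(|\phi | < \pi /2\) (an assumed stand-in for \(s \in {\mathscr {S}}\)) and writes \(b = \mu s\), \(c = \int _0^1 e^{-br}\,dr = (1-e^{-b})/b\). Then \(\Vert e^{-br}\Vert ^2_{L^2(0,1)} = (1-e^{-2{\text {Re}}\, b})/(2{\text {Re}}\, b)\). Measuring \(H^{-1}(0,1)\) as the dual of \(H^1_0(0,1)\) with norm \(\Vert v'\Vert _{L^2}\), the integration by parts from the proof gives \(\Vert e^{-br}\Vert _{H^{-1}(0,1)} = \Vert F - {\overline{F}}\Vert _{L^2(0,1)}\) with \(F(r) = \int _0^r e^{-bt}\,dt\), and this evaluates to \(\big ((\Vert e^{-br}\Vert ^2_{L^2} - |c|^2)/|b|^2\big )^{1/2}\). The interpolation inequality bounds the \(H^{-1/2}\)-norm by a constant times the geometric mean of the two norms. The helper names are hypothetical.

```python
import numpy as np

def exp_norms(s, mu=1.0):
    """Closed-form L^2(0,1) and H^{-1}(0,1) norms of f(r) = exp(-mu*s*r), Re(s) > 0.
    H^{-1} is the dual of H^1_0(0,1) normed by ||v'||_{L^2}; then ||f||_{H^{-1}} equals
    the L^2 norm of F - mean(F) with F(r) = int_0^r f."""
    b = mu * s
    l2_sq = (1.0 - np.exp(-2.0 * b.real)) / (2.0 * b.real)
    c = (1.0 - np.exp(-b)) / b                 # mean of f over (0, 1)
    hm1_sq = (l2_sq - abs(c) ** 2) / abs(b) ** 2
    return np.sqrt(l2_sq), np.sqrt(hm1_sq)

def loglog_slope(x, y):
    """Least-squares slope of log(y) against log(x)."""
    return np.polyfit(np.log(x), np.log(y), 1)[0]

ts = np.logspace(2, 5, 7)                      # |s| from 1e2 to 1e5
for phi in (0.0, 0.5):                         # rays s = |s| e^{i phi} in the right half-plane
    l2, hm1 = np.array([exp_norms(t * np.exp(1j * phi)) for t in ts]).T
    print(phi, loglog_slope(ts, l2), loglog_slope(ts, hm1), loglog_slope(ts, np.sqrt(l2 * hm1)))
```

The fitted slopes should come out close to \(-1/2\), \(-3/2\), and \(-1\), matching the three bounds in the proof.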

4.3.3 Decomposing the DtN-operator

In Sect. 4.2 we discussed the DtN-operator for smooth geometries. Here, we study the case of polygonal domains. We will do so by introducing corner layers, similarly to what was done in [25, Sec. 7.4.3].

The following Theorem 4.18 presents a decomposition of the DtN operator into several contributions. To describe them, we need some notation as illustrated in Fig. 1b. The polygon \(\varOmega ^{-}\) has vertices \(A_1,\ldots ,A_J\), and edge \(\varGamma _j\) connects \(A_{j-1}\) with \(A_{j}\) (we set \(A_{J+1}= A_1\) and \(\varGamma _{J+1}= \varGamma _1\) and, for simplicity of notation, assume that \(\partial \varOmega ^{-}\) is connected). \(\varGamma _j^\prime \) is the bisector of the angle at vertex \(A_j\). The subdomains \(\varOmega _{j}\) are bounded by four curves: \(\varGamma _{j}\), the bisectors at \(A_j\) and \(A_{j-1}\) (dashed black in Fig. 1a), and a fourth curve completely contained in \(\varOmega ^{-}\) and sufficiently close to \(\varGamma _{j}\) (dashed blue in Fig. 1a). We set \(\varOmega _0:= \varOmega ^{-} {\setminus } \cup _{i=1}^J {\overline{\varOmega }}_i\) and, for convenience, \(\varOmega _{J+1}= \varOmega _1\). We fix \(\chi _{BL} \in C^\infty (\mathbb {R}^2)\) with \({\text {supp}} \chi _{BL} \subset \cup _{i=1}^{J} \varOmega _i\) and \(\chi _{BL} \equiv 1\) near \(\varGamma \). Finally, for each vertex \(A_j\) we let \(\chi _{CL,j} \in C^\infty (\mathbb {R}^2)\) be a cut-off function with \({\text {supp}} \chi _{CL,j} \cap \{A_{j'} \} = \emptyset \) for \(j' \ne j\) such that \(\chi _{CL,j} \equiv 1\) on \(\varGamma _j^\prime \cap {\text {supp}} (\chi _{BL})\).

Theorem 4.18

Let \(\varOmega ^- \subseteq \mathbb {R}^2\) be a polygon, \(s \in {\mathscr {S}}\). Let \(g \in H^1(\varGamma )\) with \(g|_{\varGamma _i} \in H^2(\varGamma _i), i=1, \dots ,J\). Let u solve

Then u can be decomposed as \(u=\chi _{BL} u_{BL} + \sum _{j=1}^J \chi _{CL,j} u_{CL,j} + r\) such that for a \(C > 0\) depending only on \(\varOmega ^{-}\):

(i) \(u_{BL}|_{\varOmega _i} \in H^2(\varOmega _i)\) and \(\Vert u_{BL}\Vert _{|s|,1,\varOmega _i} \le C |s|^{1/2} \Vert g\Vert _{H^1(\varGamma _i)}\) for each \(i=1,\dots ,J\). Additionally, \(\partial _n^- u_{BL} - s \gamma ^- u_{BL} =0\).

(ii) For each \(j \in \{1,\ldots ,J\}\) the function \(u_{CL,j}\) is in \(H^1(\varOmega _i)\) for each \(i=0,\ldots ,J\) and \(u_{CL,j}|_{\varGamma } = 0\). Furthermore, \(-\varDelta u_{CL,j} + s^2 u_{CL,j} = 0\) in \(\varOmega _j \cup \varOmega _{j+1}\), the normal derivative \(\partial _n (\chi _{CL,j} u_{CL,j})\) exists on each edge \(\varGamma _i\), \(i =1,\ldots ,J\), and

$$\begin{aligned} \left\| \partial _n^- (\chi _{CL,j} u_{CL,j}) - s \gamma ^- (\chi _{CL,j}u_{CL,j}) \right\| _{H^{-1/2}(\varGamma )} \le C \sqrt{\ln (|s|+2)} \Vert g\Vert _{H^1(\varGamma )}. \end{aligned}$$

Moreover, \(\sum _{j=1}^J \Vert u_{CL,j}\Vert _{|s|,1,\varOmega _j \cup \varOmega _{j+1}} \le C \Vert g\Vert _{H^1(\varGamma )}\).

(iii) The remainder r satisfies

$$\begin{aligned} \left\| \partial _n^- r - s \gamma ^- r \right\| _{H^{-1/2}(\varGamma )}&\le C \,\Big ( \left\| g\right\| _{H^1(\varGamma )} + |s|^{-1} \sum _{j=1}^J \Vert g\Vert _{H^{2}(\varGamma _j)}\Big ). \end{aligned}$$

The analogous statement holds for the exterior problem upon replacing s by \(-s\) in (i)-(iii).

Proof

Step 1 (construction of \(u_{BL}\)): For each \(\varOmega _i\), let \((\theta _i,\rho _i)\) be boundary fitted coordinates, where \(\theta _i\) is an affine parametrization of the line containing \(\varGamma _i\) and \(\rho _i\) denotes the (signed) distance from that line. Write \({\widehat{g}}(\theta _i)\) for the function g on \(\varGamma _i\) in these coordinates and extend it to the whole line in an \(H^1\)- and \(H^2\)-stable way. We define, in the coordinates \((\theta _i,\rho _i)\), the function \(u_{BL}(\theta _i,\rho _i):={\widehat{g}}(\theta _i) e^{-s \rho _i}\); that is, \(u_{BL}\) is given by the construction from Lemma 4.9. By construction, \(\partial _n u_{BL} - s u_{BL} = 0\) on \(\varGamma \) and \(\Vert u_{BL}\Vert _{|s|,1,\varOmega _i} \lesssim \sqrt{|s|} \Vert g\Vert _{H^1(\varGamma _i)}\).

Step 2 (construction of \(u_{CL}\)): The function \(u_{BL}\) is discontinuous across the bisectors \(\varGamma _j^\prime \); the corner layers \(u_{CL,j}\) correct this. Focusing on the bisector \(\varGamma _j^\prime \), let \(\varGamma _j\) and \(\varGamma _{j+1}\) be the edges meeting at \(A_j\). Fix \(\kappa \) such that \(\chi _{BL} u_{BL} \equiv 0\) on \(\varGamma _j^\prime {\setminus } B_\kappa (A_j)\). On the sector \(S_{\omega }=B_{\kappa }(A_j) \cap \varOmega ^- \) define \(u_{CL,j}\) as the solution of the following transmission problem:

Up to translation and rotation, we are essentially in the setting of Lemmas 4.16 and 4.17. That is, on \(\varOmega _j\) and \(\varOmega _{j+1}\) the function \(u_{BL}\) has the form given in Lemma 4.17 so that (taking additionally the effect of \(\chi _{BL}\) into account) we arrive at

where \(r_j = {\text {dist}}(\cdot ,A_j)\). For the normal derivative, Lemma 4.17 provides the representation \({\llbracket \partial _n (\chi _{BL} u_{BL}) \rrbracket }(x)= h_1 s g(0) e^{-s \mu \left| x\right| }+ h_2(x)\) with

By Lemma 4.16, we therefore get

Furthermore, Lemma 4.16 provides for \(\partial _n (\chi _{CL,j} u_{CL,j})\) on \((\varGamma _j \cup \varGamma _{j+1} \cup \{A_j\}) \cap B_{\kappa }(A_j)\) the bound

Noting that \(u_{CL,j} = 0\) on \(\varGamma \), the assertion of (ii) follows.

Step 3 (construction of r): The function \(r \in H^1_0(\varOmega ^-)\) is defined as \(r:= u - \chi _{BL} u_{BL} - \sum _{j=1}^J \chi _{CL,j} u_{CL,j}\). It satisfies the equation \( - \varDelta r + s^2 r= f \) with f satisfying

The bounds of Lemmas 4.3 and 4.5 then conclude the proof.

Step 4 (exterior domains): The result for the exterior problem follows along the same lines. \(\square \)
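The two properties of \(u_{BL}\) stated in Step 1 can be confirmed by a direct computation in a model situation. The sketch assumes a half-plane \(\{\rho > 0\}\) in boundary fitted coordinates \((\theta ,\rho )\) with \(\rho > 0\) inside \(\varOmega ^-\), so that the outer normal derivative on the edge is \(\partial _n = -\partial _\rho \). It further assumes the weighted norm \(\Vert v\Vert ^2_{|s|,1,D} = \Vert \nabla v\Vert ^2_{L^2(D)} + |s|^2\Vert v\Vert ^2_{L^2(D)}\) (our reading of Definition 4.1, which is not reproduced here) and \({\text {Re}}\, s \ge c|s|\), \(|s| \ge c\) for \(s \in {\mathscr {S}}\) (an assumption about the role of \(\sigma _0\) and \(\delta \)). Then

$$\begin{aligned} \partial _n u_{BL}\big |_{\rho =0} = -\partial _\rho \big ({\widehat{g}}(\theta ) e^{-s\rho }\big )\big |_{\rho =0} = s\,{\widehat{g}}(\theta ) = s\, u_{BL}\big |_{\rho =0}, \end{aligned}$$

and, since \(\int _0^\infty |e^{-s\rho }|^2\,d\rho = (2{\text {Re}}\, s)^{-1}\),

$$\begin{aligned} \Vert u_{BL}\Vert ^2_{|s|,1} = \int _0^\infty \!\!\int _{{\mathbb {R}}} \big (|{\widehat{g}}'(\theta )|^2 + 2|s|^2|{\widehat{g}}(\theta )|^2\big )|e^{-s\rho }|^2\,d\theta \,d\rho = \frac{\Vert {\widehat{g}}'\Vert ^2_{L^2} + 2|s|^2\Vert {\widehat{g}}\Vert ^2_{L^2}}{2{\text {Re}}\, s} \lesssim |s|\,\Vert {\widehat{g}}\Vert ^2_{H^1}. \end{aligned}$$

Taking square roots gives the factor \(\sqrt{|s|}\) in the bound on \(u_{BL}\).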

From Theorem 4.18 we deduce the following result for the DtN operator under slightly lower regularity requirements:

Corollary 4.19

Let \(\varOmega ^{-}\subset \mathbb {R}^2\) be a polygon and \(s \in {\mathscr {S}}\). Let \(g \in H^1(\varGamma )\) and u solve

Then

The analogous statement holds for the exterior problem upon replacing s by \(-s\).

Proof

We employ the smoothing technique as in Corollary 4.10: by smoothing on the length scale \(|s|^{-1}\) (for \(|s| \ge 1\)) or by interpolation, one can construct a function \({{\widetilde{g}}} \in H^1(\varGamma )\) such that \({{\widetilde{g}}} \in H^2(\varGamma _i)\) for each edge \(\varGamma _i\) and such that

This can be done in two steps: first, one defines the approximation edgewise, and then one ensures continuity at the vertices of \(\varOmega ^{-}\) by an appropriate correction, e.g., a piecewise linear function. The remainder of the proof is then as in Corollary 4.10. \(\square \)
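The choice of the smoothing length can be made explicit with standard mollifier estimates. As a sketch, assume that \({{\widetilde{g}}}\) is obtained on each edge by mollifying an \(H^1\)-stable extension of g at scale \(h \in (0,1]\) (the vertex correction is ignored here), so that \(\Vert g - {{\widetilde{g}}}\Vert _{L^2(\varGamma _i)} \lesssim h \Vert g\Vert _{H^1(\varGamma _i)}\) and \(\Vert {{\widetilde{g}}}\Vert _{H^2(\varGamma _i)} \lesssim h^{-1}\Vert g\Vert _{H^1(\varGamma _i)}\). The \(H^2\)-term in Theorem 4.18 (iii) then satisfies, for \(|s| \ge 1\),

$$\begin{aligned} |s|^{-1}\sum _{j=1}^J \Vert {{\widetilde{g}}}\Vert _{H^{2}(\varGamma _j)} \lesssim |s|^{-1}h^{-1}\Vert g\Vert _{H^1(\varGamma )} = \Vert g\Vert _{H^1(\varGamma )} \qquad \text {for } h = |s|^{-1}, \end{aligned}$$

so the length scale \(|s|^{-1}\) balances the \(H^2\)-term against \(\Vert g\Vert _{H^1(\varGamma )}\), while the discrepancy \(g - {{\widetilde{g}}}\) is of size \(|s|^{-1}\Vert g\Vert _{H^1(\varGamma )}\) in \(L^2(\varGamma )\).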

5 Numerical examples

In this section, we compare the performance of the numerical schemes of Theorem 3.3 with the more standard method of Proposition 3.2 for an interior scattering problem. That is, we compare the Runge–Kutta convolution quadrature approximation by the following two methods:

- \([{\text {DtN}}^-(\partial _t^k)] {u^{\mathrm {inc}}}\), referred to as the “standard method”, and
- \([[\partial _t^k]^{-1}{\text {DtN}}^-(\partial _t^k)] {{\dot{u}}^{\mathrm {inc}}}\), referred to as the “differentiated method”.

We use two different Runge–Kutta methods of the Radau IIA family, one with 3 and one with 5 stages. For the 3-stage version, we have \(q=3\) and \(p=5\). We therefore expect a convergence rate of 3 for the standard method and full classical order 5 up to logarithmic terms for the differentiated scheme.

In order to show that our theoretical estimates are sharp, we also look at the 5-stage method. There, the stage order is \(q=5\) and the classical order \(p=9\). The expected rates are therefore 5 and 7 respectively for the two numerical schemes up to logarithmic terms.
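The stage and classical orders quoted above can be reproduced numerically. The sketch builds the m-stage Radau IIA tableau as a collocation method at the right Radau points, i.e., the zeros of \(P_m(2x-1) - P_{m-1}(2x-1)\) (see, e.g., [17]; for three stages these are \((4\mp \sqrt{6})/10\) and 1). It reads off the stage order q from the simplifying condition C(q) and the classical order p from the quadrature condition B(p), which for collocation methods coincides with the classical order. The helper names are hypothetical, and the mapping from (q, p) to expected rates (q for the standard method, \(\min (q+2,p)\) for the differentiated one) is only inferred from the two cases stated in the text, not quoted from Theorem 3.3.

```python
import numpy as np
from numpy.polynomial import legendre

def radau_iia(m):
    """Butcher tableau (A, b, c) of the m-stage Radau IIA method, built as a collocation method."""
    # Right Radau nodes: zeros of P_m(2x-1) - P_{m-1}(2x-1) in [0, 1]; the last node is x = 1.
    coeffs = np.zeros(m + 1)
    coeffs[m], coeffs[m - 1] = 1.0, -1.0
    c = (np.sort(legendre.legroots(coeffs).real) + 1.0) / 2.0
    k = np.arange(1, m + 1)
    V = c[:, None] ** (k[None, :] - 1)          # V[j, k-1] = c_j^(k-1)
    R = c[:, None] ** k[None, :] / k[None, :]   # R[i, k-1] = c_i^k / k
    A = np.linalg.solve(V.T, R.T).T             # A @ V = R: simplifying condition C(m)
    b = np.linalg.solve(V.T, 1.0 / k)           # b @ V = 1/k: quadrature condition B(m)
    return A, b, c

def classical_order(b, c, tol=1e-10):
    """Largest p such that B(p) holds: sum_i b_i c_i^(k-1) = 1/k for k = 1, ..., p."""
    p = 0
    while abs(b @ c**p - 1.0 / (p + 1)) < tol:
        p += 1
    return p

def stage_order(A, c, tol=1e-10):
    """Largest q such that C(q) holds: sum_j a_ij c_j^(k-1) = c_i^k / k for k = 1, ..., q."""
    q = 0
    while np.max(np.abs(A @ c**q - c**(q + 1) / (q + 1))) < tol:
        q += 1
    return q

for m in (3, 5):
    A, b, c = radau_iia(m)
    q, p = stage_order(A, c), classical_order(b, c)
    # Assumed bookkeeping, inferred from the rates quoted in the text (not from Theorem 3.3):
    # standard method ~ q, differentiated method ~ min(q + 2, p), up to logarithmic terms.
    print(f"{m} stages: q = {q}, p = {p}, expected rates {q} and {min(q + 2, p)}")
```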

For simplicity, we consider the interior scattering problem and prescribe an exact solution as the travelling wave

The wave direction is selected as \({\varvec{d}}:=(\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\), and the other parameters were \(\tau _0:=4\) and \(\alpha :=0.05\). We integrated until the end time \(T=12\). In order to show that the method works with the predicted rates, even for non-convex geometries, we consider the classical L-shaped geometry, given by the vertices

As the space discretization, we employ a Galerkin boundary element method of order 5, based on a code developed by F.-J. Sayas and his group at the University of Delaware. The grid is chosen sufficiently fine for the temporal error to dominate. Instead of evaluating the \(H^{-1/2}\)-error, we compute the quantity

Here \(\Pi _{L^2}\) denotes the \(L^2\)-orthogonal projection onto the BEM space. Since the grid is sufficiently fine and fixed, this should not impact the observed convergence rates. The operator V(1) was taken because it gives an (s-independent) equivalent norm on \(H^{-1/2}(\varGamma )\).

In Fig. 2, we observe that the rates predicted by Proposition 3.2 and Theorem 3.3 are attained. That the rate improves by two orders although the modification of the scheme amounts to only one order is at first surprising; Theorem 3.3 explains this rigorously. Observations of this type provided the main motivation for the investigations in this work.
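Observed rates such as those in Fig. 2 are commonly quantified by the experimental order of convergence between successive step sizes, \({\text {EOC}} = \log (e_{k_1}/e_{k_2})/\log (k_1/k_2)\). The helper below is a generic sketch, not the code used for Fig. 2; the step sizes use the end time \(T=12\) from above, and the synthetic errors in the usage example are made up to exercise the function and are not results from this paper.

```python
import math

def eoc(step_sizes, errors):
    """Experimental orders of convergence log(e_i/e_{i+1}) / log(k_i/k_{i+1})."""
    pairs = list(zip(step_sizes, errors))
    return [math.log(e0 / e1) / math.log(k0 / k1)
            for (k0, e0), (k1, e1) in zip(pairs, pairs[1:])]

# Synthetic errors behaving like C * k^5 with a small perturbation (made-up data).
T = 12.0
ks = [T / n for n in (50, 100, 200, 400)]
errs = [3.0 * k**5 * (1.0 + 0.01 * i) for i, k in enumerate(ks)]
print([round(r, 3) for r in eoc(ks, errs)])
```

Rates computed this way from consecutive refinements are what one compares with the predicted orders 3 and 5 (three stages) or 5 and 7 (five stages).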

References

Adams, R.A., Fournier, J.J.F.: Sobolev spaces. Pure and Applied Mathematics, vol. 140, 2nd edn. Elsevier/Academic Press, Amsterdam (2003)

Banjai, L.: Time-domain Dirichlet-to-Neumann map and its discretization. IMA J. Numer. Anal. 34(3), 1136–1155 (2014)

Bamberger, A., Ha Duong, T.: Formulation variationelle espace-temps pour le calcul par potentiel retardé d’une onde acoustique. Math. Meth. Appl. Sci. 8, 405–435 (1986)

Bamberger, A., Ha Duong, T.: Formulation variationnelle pour le calcul de la diffraction d’une onde acoustique par une surface rigide. Math. Methods Appl. Sci. 8(4), 598–608 (1986)

Banjai, L., Lubich, C.: An error analysis of Runge-Kutta convolution quadrature. BIT 51(3), 483–496 (2011)

Banjai, L., Lubich, C.: Runge–Kutta convolution coercivity and its use for time-dependent boundary integral equations. IMA J. Numer. Anal. 39(3), 1134–1157 (2019)

Banjai, L., Lubich, C., Melenk, J.M.: Runge–Kutta convolution quadrature for operators arising in wave propagation. Numer. Math. 119(1), 1–20 (2011)

Banjai, L., Lubich, C., Sayas, F.-J.: Stable numerical coupling of exterior and interior problems for the wave equation. Numer. Math. 129(4), 611–646 (2015)

Banjai, L., Rieder, A.: Convolution quadrature for the wave equation with a nonlinear impedance boundary condition. Math. Comput. 87(312), 1783–1819 (2018)

Bramble, J., Scott, R.: Simultaneous approximation in scales of Banach spaces. Math. Comput. 32, 947–954 (1978)

Chandler-Wilde, S.N., Graham, I.G., Langdon, S., Spence, E.A.: Numerical-asymptotic boundary integral methods in high-frequency acoustic scattering. Acta Numer. 21, 89–305 (2012)

Evans, L.: Partial Differential Equations. American Mathematical Society, Providence, RI (1998)

Gimperlein, H., Meyer, F., Özdemir, C., Stark, D., Stephan, E.P.: Boundary elements with mesh refinements for the wave equation. Numer. Math. 139(4), 867–912 (2018)

Gimperlein, H., Nezhi, Z., Stephan, E.P.: A priori error estimates for a time-dependent boundary element method for the acoustic wave equation in a half-space. Math. Methods Appl. Sci. 40(2), 448–462 (2017)

Gwinner, J., Stephan, E.P.: Advanced boundary element methods. Springer Series in Computational Mathematics. Treatment of boundary value, transmission and contact problems, vol. 52. Springer, Cham (2018)

Hsiao, G.C., Wendland, W.L.: Boundary integral equations. Applied Mathematical Sciences, vol. 164. Springer, Berlin (2008)

Hairer, E., Wanner, G.: Solving ordinary differential equations. II. Springer Series in Computational Mathematics. Stiff and differential-algebraic problems, vol. 14, 2nd edn. Springer, Berlin (2010)

Joly, P., Rodríguez, J.: Mathematical aspects of variational boundary integral equations for time dependent wave propagation. J. Integral Equ. Appl. 29(1), 137–187 (2017)

Karkulik, M., Melenk, J.M., Rieder, A.: Stable decompositions of \(hp\)-BEM spaces and an optimal Schwarz preconditioner for the hypersingular integral operator in 3D. ESAIM Math. Model. Numer. Anal. 54(1), 145–180 (2020)

Lubich, C., Ostermann, A.: Runge–Kutta methods for parabolic equations and convolution quadrature. Math. Comput. 60(201), 105–131 (1993)

Laliena, A.R., Sayas, F.-J.: Theoretical aspects of the application of convolution quadrature to scattering of acoustic waves. Numer. Math. 112(4), 637–678 (2009)

Lubich, C.: Convolution quadrature and discretized operational calculus. I. Numer. Math. 52(2), 129–145 (1988)

Lubich, C.: Convolution quadrature and discretized operational calculus. II. Numer. Math. 52(4), 413–425 (1988)

McLean, W.: Strongly elliptic systems and boundary integral equations. Cambridge University Press, Cambridge (2000)

Melenk, J.M.: \(hp\)-finite element methods for singular perturbations. Lecture Notes in Mathematics, vol. 1796. Springer, Berlin (2002)

Melenk, J.M., Praetorius, D., Wohlmuth, B.: Simultaneous quasi-optimal convergence rates in FEM–BEM coupling. Math. Methods Appl. Sci. 40(2), 463–485 (2017)

Melenk, J.M., Schwab, C.: Analytic regularity for a singularly perturbed problem. SIAM J. Math. Anal. 30(2), 379–400 (1999)

Melenk, J.M., Sauter, S.: Wavenumber explicit convergence analysis for Galerkin discretizations of the Helmholtz equation. SIAM J. Numer. Anal. 49(3), 1210–1243 (2011)

Rieder, A.: Convolution Quadrature and Boundary Element Methods in wave propagation: a time domain point of view. PhD Thesis, Technische Universität Wien (2017)

Rieder, A., Sayas, F.-J., Melenk, J.M.: Runge–Kutta approximation for \(C_0\)-semigroups in the graph norm with applications to time domain boundary integral equations. SN Partial Differential Equations and Applications (in press). arXiv:2003.01996 (2020)

Rieder, A., Sayas, F.-J., Melenk, J.M.: Time domain boundary integral equations and convolution quadrature for scattering by composite media. arXiv:2010.14162 (2020)

Sayas, F.-J.: Retarded Potentials and Time Domain Boundary Integral Equations. Springer Series in Computational Mathematics, vol. 50. Springer, Heidelberg (2016)

Sauter, S.A., Schwab, C.: Boundary element methods. Springer Series in Computational Mathematics, vol. 39. Springer, Berlin (2011). Translated and expanded from the 2004 German original

Steinbach, O.: Numerical approximation methods for elliptic boundary value problems. Finite and boundary elements. Springer, New York (2008). Translated from the 2003 German original

Tartar, L.: An introduction to Sobolev spaces and interpolation spaces. Lecture Notes of the Unione Matematica Italiana, vol. 3. Springer, UMI, Bologna, Berlin (2007)

Acknowledgements

The authors gratefully acknowledge financial support by the Austrian Science Fund (FWF) through the research program “Taming complexity in partial differential systems” (grant SFB F65).

Funding

Open access funding provided by University of Vienna.


A. Norm equivalence of interpolation spaces


Lemma A.1

Let \({{\mathcal {O}}}\) be a bounded domain. For \(\rho > 0\) and \(\theta \in (0,1)\) let \(\Vert \cdot \Vert _{\rho ,\theta ,\mathcal {O}}\) be defined as in Definition 4.1. Define

Then there are constants \(c_1\), \(c_2\) depending only on \(\mathcal {O}\) and \(\theta \) such that for all \(u \in H^\theta (\mathcal {O})\)

Proof

We apply [19, Lemma 4.1] with \(X_0 = (L^2(\mathcal {O}), \Vert \cdot \Vert _{L^2(\mathcal {O})})\) and \(X_1 = (H^1(\mathcal {O}), \Vert \cdot \Vert _{1,\mathcal {O}})\). As in [19], we set \(K(u,t):= \inf _{v \in H^1} \bigl(\Vert u - v\Vert _{L^2} + t\Vert v\Vert _{H^1}\bigr)\) and \(k(u,t):= \inf _{v \in H^1} \bigl(\Vert u - v\Vert _{L^2} + t|v|_{H^1}\bigr)\), where the argument \(\mathcal {O}\) is omitted for brevity. In [19, Lemma 4.1], the interpolation norm \(\Vert \cdot \Vert _\theta \) is based on K, and the interpolation seminorm \(|\cdot |_\theta \) is based on k. We note that \(\Vert \cdot \Vert _\theta \sim \Vert \cdot \Vert _{\theta ,\mathcal {O}}\).
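The K-method behind these interpolation norms can be illustrated on a finite-dimensional analogue. The Python sketch below is not the construction used in the paper; it assumes a diagonal couple with \(X_0 = \mathbb{R}^n\) (Euclidean norm) and \(\Vert v\Vert _1^2 = \sum _k \lambda _k v_k^2\), where the weights \(\lambda _k > 0\) are hypothetical stand-ins for an \(H^1\)-type norm. It also uses the quadratic variant \(K(u,t)^2 = \inf _v \bigl(\Vert u - v\Vert ^2 + t^2\Vert v\Vert _1^2\bigr)\), which differs from the linear K above by at most a factor \(\sqrt{2}\). For this couple the minimiser is explicit, and the (unnormalised) interpolation norm \(\Vert u\Vert _\theta ^2 = \int _0^\infty (t^{-\theta }K(u,t))^2\,dt/t\) has the closed form \(\frac{\pi }{2\sin (\pi \theta )}\sum _k \lambda _k^\theta u_k^2\). The code checks this closed form against direct quadrature.

```python
import math

import numpy as np


def k_functional_sq(u, lam, t):
    """Quadratic K-functional K(u, t)^2 = min_v |u - v|^2 + t^2 * sum(lam * v^2).

    For this diagonal couple the minimiser is v_k = u_k / (1 + t^2 lam_k),
    which gives the closed-form value returned here.
    """
    w = t * t * lam
    return float(np.sum(u * u * w / (1.0 + w)))


def interp_norm_sq_quadrature(u, lam, theta, h=0.01):
    """(Unnormalised) ||u||_theta^2 = int_0^inf (t^-theta K(u, t))^2 dt / t.

    The substitution t = exp(x) turns dt / t into dx; the integrand then
    decays exponentially as x -> +-inf, so the trapezoidal rule on a
    truncated interval is very accurate. Exponents are combined in log
    form so that no intermediate quantity overflows.
    """
    half_width = 25.0 / min(theta, 1.0 - theta) + np.abs(np.log(lam)).max()
    n = int(2.0 * half_width / h) + 1
    x = np.linspace(-half_width, half_width, n)
    z = 2.0 * x[:, None] + np.log(lam)[None, :]      # log(t^2 * lam_k)
    log_ratio = z - np.logaddexp(0.0, z)             # log(w / (1 + w)), w = t^2 lam_k
    f = np.exp(-2.0 * theta * x[:, None] + log_ratio) @ (u * u)
    step = x[1] - x[0]
    return float(step * (f.sum() - 0.5 * (f[0] + f[-1])))


def interp_norm_sq_closed_form(u, lam, theta):
    """Spectral formula: ||u||_theta^2 = pi / (2 sin(pi theta)) * sum(lam^theta * u^2)."""
    return float(math.pi / (2.0 * math.sin(math.pi * theta)) * np.sum(lam**theta * u * u))


u = np.array([1.0, -2.0, 0.5])
lam = np.array([1.0, 4.0, 100.0])  # hypothetical H^1-type weights
for theta in (0.25, 0.5, 0.75):
    print(theta, interp_norm_sq_quadrature(u, lam, theta),
          interp_norm_sq_closed_form(u, lam, theta))
```

The spectral weight \(\lambda _k^\theta \) shows how the \(\theta \)-norm sits "between" the \(L^2\) norm (\(\lambda ^0\)) and the \(X_1\) norm (\(\lambda ^1\)).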

Step 1: We claim that \(\Vert u - {\overline{u}}\Vert _{\theta } \sim |u|_\theta \). This claim follows from the following two estimates, which rely on the Poincaré inequality:

Conversely,

Step 2: The norm equivalence (5.1) then follows from [19, Lemma 4.1]. \(\square \)
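For orientation, the two estimates of step 1 can be obtained along the following lines. This is a sketch under stated assumptions rather than the authors' own argument. We take \(\overline{u}\) to be the mean value of u over \(\mathcal {O}\), and we assume \(\mathcal {O}\) is connected with Lipschitz boundary, so that the Poincaré inequality \(\Vert v - \overline{v}\Vert _{L^2} \le C_P |v|_{H^1}\) holds for all \(v \in H^1(\mathcal {O})\). We also use \(\Vert v\Vert _{H^1}^2 = \Vert v\Vert _{L^2}^2 + |v|_{H^1}^2\). Since constants have vanishing seminorm, replacing v by \(v - \overline{u}\) in the infimum shows \(k(u - \overline{u},t) = k(u,t)\). Together with \(|v|_{H^1} \le \Vert v\Vert _{H^1}\), this gives

\[ k(u,t) = k(u-\overline{u},t) \le K(u-\overline{u},t) \quad \text{for all } t>0, \qquad \text{hence} \qquad |u|_\theta \le \Vert u-\overline{u}\Vert _\theta . \]

Conversely, for any \(v \in H^1\) the function \(v - \overline{v}\) is admissible in \(K(u-\overline{u},t)\). Because \(w \mapsto w - \overline{w}\) is the \(L^2\)-orthogonal projection onto mean-zero functions,

\[ \Vert (u-\overline{u}) - (v-\overline{v})\Vert _{L^2} \le \Vert u-v\Vert _{L^2}, \qquad \Vert v-\overline{v}\Vert _{H^1} \le \sqrt{1+C_P^2}\,|v|_{H^1}, \]

so that \(K(u-\overline{u},t) \le \sqrt{1+C_P^2}\, k(u,t)\) for all \(t>0\), and therefore \(\Vert u-\overline{u}\Vert _\theta \le \sqrt{1+C_P^2}\,|u|_\theta \).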

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Melenk, J.M., Rieder, A. On superconvergence of Runge–Kutta convolution quadrature for the wave equation. Numer. Math. 147, 157–188 (2021). https://doi.org/10.1007/s00211-020-01161-9

DOI: https://doi.org/10.1007/s00211-020-01161-9