Abstract

Objectives

Providing detailed information about sentencing reduces punitive attitudes of laymen (the information effect). We assess whether this extends to modest information treatments and probe which specific informational types matter most. Going beyond previous studies, we include affective measures and trust in judges.

Methods

In four survey experiments, 1778 Dutch participants were exposed to a sentence concerning a serious traffic offense resulting in a fatal accident. Studies 1 and 2 explore the effect of a press release on negative and positive affect. Studies 3 and 4 explore the effects of various types of information on affect and trust in judges.

Results

Modest information treatments generally heightened positive affect, reduced negative affect and—sometimes—increased trust in judges. Providing procedural cues and reference points about the sentence had a sizable effect on legal attitudes.

Conclusions

The information effects pertain to a broader set of attitudes than currently presumed in the literature. Furthermore, subtle treatments containing procedural cues and reference points can change attitudes towards judicial verdicts. Future research needs to explore if this finding extends to other contexts.

Introduction and background

A majority of the public often believes that criminal courts punish too mildly (e.g., Roberts and Stalans 1997; Cullen et al. 2000; Elffers and De Keijser 2004; Feilzer 2015). People tend to think judges are lenient punishers and overestimate the effectiveness of prison time (Hough and Park 2002). Researchers have also found that, most of the time, people rely on stereotypes to characterize judges, such as their being elitist and ‘out of touch’ with their community (Roberts et al. 2002; Van de Walle 2009). In addition, many people think that judges are not responsive to society’s calls for harsh punishment, whereas judges have in fact become more punitive in recent years (Van Tulder 2005, p. 5). One of the possible causes of these misunderstandings and lack of appreciation for court sentencing is limited public knowledge about the courts and the way they come to their sentencing decisions.

Indeed, it is well-documented that, by and large, the public is ignorant with regard to court sentencing (e.g., Cullen et al. 2000; Hough and Park, 2002; Elffers et al. 2007; Roberts et al. 2012; Hough et al. 2013). This ignorance has consequences for how people view punishment and the criminal justice system. For instance, Mitchell and Roberts (2011) found that people who were misinformed about murder rates were also more likely to favor harsher punishment. Furthermore, Hough and Roberts (1998) found that poor knowledge about crime correlated with more negative ratings of courts.

For a long time, this lack of knowledge on court sentencing has led to calls to better educate the public about court sentencing (e.g., Roberts and Stalans 1997; Cullen et al. 2000, p. 58). Since these calls, made more than twenty years ago, a range of empirical studies have established the existence of an information effect. That is, providing the public with information changes their views of courts and sentencing. Research on the information effect shows that people use information to update their beliefs about both specific cases (the micro-level) and, to a lesser extent, sentencing in general (the macro-level).

An example of a macro-level experiment is a study by Chapman, Mirrlees-Black and Brawn (2002) that tested whether booklets with factual information about crimes and court sentences affected people’s views in general. Chapman et al. concluded that the information led to increases in knowledge and somewhat more satisfaction with courts and sentencing practices in general. An experiment by Singer and Cooper (2008) confirmed the effectiveness of booklets with factual information. Other modes of information provision have been found to affect public attitudes towards sentencing too. Roberts et al. (2002) found that deliberative polling is an effective way to increase knowledge and reduce generic punitive judgments of laymen. Finally, Gainey and Payne (2003) found that students who received a lecture about alternative sentencing changed their attitudes.

Providing information about specific cases also affects public attitudes towards sentencing. St. Amand and Zamble (2001) found that providing case-specific information moderated sentences in a mock court case. Furthermore, an experiment by De Keijser, Koppen and Elffers (2007) showed that providing in-depth information about a crime case reduced punitive judgments of laymen, although their sentencing was still stricter than the sentencing of real judges.

Research in the past decade has continued to advance our understanding of the information effect. Mitchell and Roberts (2011) found a strong correlation between being informed about sentencing and attitudes towards it. Furthermore, they found that participants who were provided with information about sentencing were less punitive in their sentencing preferences. In line with this finding, Roberts, Hough, Jackson and Gerber (2012) found that providing information about sentencing guidelines decreased punitive judgments by the public for both micro- and macro-level attitudes.

Overall, the literature has found that there is a robust information effect affecting public views on sentencing and sentencing preferences in specific cases. This is in line with the general idea that such attitudes are adaptive and flexible (Cullen et al. 2000; Hough and Park 2002). However, it is less clear to what extent (a) the information effect extends to other relevant attitudes beyond sentencing preferences and (b) the information effect also holds with more subtle informational treatments and which types of information are important components of the information effect. Therefore, this article has two core objectives:

1) Determining whether the information effect extends to a broader set of attitudes.

2) Probing the information effect by testing more modest informational treatments and more specific components of information.

We will discuss both objectives in more detail in the next two sections.

Broadening the information effect to trust in judges and affective responses

This article further increases our understanding of the information effect by including a broader set of attitudes than used in prior studies: trust in judges and affective responses to specific sentences. First, we know very little about affective responses to verdicts and how they are affected by information. The literature has thus largely ignored the emotional component of sentencing. High-profile cases in particular may cause public anger or relief about the perceived leniency or strictness of a certain sentence. It is important to better understand how the information effect appeals to emotions because affective states have been found to predict a wide range of judgments and behaviors (e.g., Schwarz and Bless 1991; Carr et al. 2003; Siamagka et al. 2015). Specifically, work by Schwarz and colleagues has shown that assessing “how you feel about it” is an important way for people to make complex evaluative judgments (Schwarz and Clore 1988; Schwarz 1990). For instance, anger about an alleged “mild verdict” or relief when a suspect gets what he/she deserves is arguably, to a large extent, how people unconsciously evaluate a sentence. To our knowledge, we are the first to assess whether providing people with information treatments changes such affective evaluations.

Secondly, we include trust in judges as an important, yet largely neglected, dependent variable. There is academic consensus that trust is a central component of legitimacy, which eventually is crucial for voluntary acceptance of judicial decisions (Tyler 2006). In spite of its importance, there has been surprisingly little research into the information effect on trust in judges. Most research on trust in judges or courts has been carried out in political science, where there is cross-sectional evidence that more knowledge about courts has a positive relation with confidence in courts (Benesh 2006). Personal experience with being on a jury also has a positive correlation with support for local courts (Benesh and Howell 2001; Wenzel, Bowler and Lanoue 2003). Furthermore, a field experiment by Grimmelikhuijsen and Klijn (2015) showed that people who have been instructed to watch an informative television show about the work of judges have more trust than a control group that has not received any such instructions. This means that there is some, though scarce, evidence that exposing people to information about the work of judges is positively linked with trust in judges. At the same time, there is evidence that disputes the relation between information, sentencing opinions and trust. For example, Elffers et al. (2007) used a quasi-experimental design in which subscribers of a regional newspaper were recruited to go to court sessions of various criminal cases for the duration of one year. During this year, these so-called “newspaper jurors” would report in newspaper articles on the criminal cases they witnessed. Their findings showed that, after the treatment interval of one year, no attitude change among the newspaper subscribers could be identified.

Indeed, there has been some discussion as to whether punitive judgments are related to more general attitudes, such as trust (Ryberg and Roberts 2014). Intuitively, one might expect that trust in judges is fostered when laymen’s judgments are more closely aligned with actual sentences. However, the connection between opinions about sentencing and trust has been debated in the literature. For instance, most people express highly critical opinions about the height of sentencing, yet overall trust in judges is relatively stable and high compared with other institutions. According to survey results, a large majority of the public (71%) want judges to focus on specifics of the case instead of public opinion (De Keijser 2014). This suggests that there is a disconnection between punitive judgments and other attitudes such as trust and affect (cf. De Keijser and Elffers 2009).

By including affective responses and trust in judges into research on the information effect, we aim to shed light on the question whether these attitudes are as malleable as punitive judgments or perhaps are disconnected from it. More specifically, we focus on “trust in judges” in a specific case (i.e., on the micro-level) and not on generic attitudes, such as confidence in the courts or court system in general (i.e., macro-level). In this article, we will focus on the micro-level information effect, that is, providing information about a specific case. Therefore, it makes sense to match this with a micro-level trust variable (trust in judges) as opposed to a macro-level variable (confidence in the courts).

Specifying the information effect by probing modest and more specific treatments

Most experiments on information effects so far have used high-intensity treatments, such as elaborate booklets, deliberative polling, television shows or reading complete case files (e.g., Grimmelikhuijsen and Klijn 2015; Chapman et al. 2002; De Keijser et al. 2007). An exception is an experiment by Roberts et al. (2012), but even their experimental treatment contained an information leaflet of about 700 words. In practical terms, such information-intensive treatments are less likely to reach an intended audience of journalists or the general public who have limited time and cognitive resources to process information.

Theoretically, it is interesting to test more subtle informational treatments, too. So far, scholars have concluded that people’s views on courts and especially sentencing are adaptive and flexible (e.g., Cullen et al., 2000). At the same time, this conclusion is mostly based on rather strong treatments. By exploring if shorter pieces of information have the same effect we try to establish if such attitudes are really as malleable as is commonly assumed.

In addition, there have been no studies systematically varying types of information about the same court case, so what kind of information causes attitudes to change is as of yet unknown. Much of the current literature seems to suggest that providing more detail and context nuances people’s views on sentencing. One reason is that when less detail is provided, more global considerations will be at the top of people’s minds, causing people to focus on stereotypes and worst case scenarios (cf. Roberts et al. 2002). Furthermore, authors have argued that public opinion polls asking about sentencing in general have limited validity and may actually tap into other generic attitudes too, such as fear of crime (Indermauer and Hough 2002) or worries about rising crime (Elffers et al. 2007). Thus, when more specificity about a case is provided, more specific considerations come into play, and this decreases negative attitudes towards courts and sentencing (Indermauer and Hough 2002; Mirrlees-Black 2002).

However, it is uncertain to what extent merely providing more details causes the information effect or whether there are specific qualities present in information that drive this effect. First, when revealing details about a case, the information might also reveal certain procedural cues about judicial decision-making. There is a large literature on procedural justice that has empirically shown that, when people perceive judges as more procedurally just, they tend to trust them more (Tyler 2006). More specifically, procedural fairness has been found to be related to various human reactions such as voluntary acceptance of authorities’ decisions and commitment to groups, organizations and society (Tyler et al. 1997).

In legal contexts, procedural justice judgments are strongly associated with acceptance of court-ordered arbitration awards (Lind et al. 1993), obedience to laws (Tyler 2006) and outcome satisfaction (Casper et al. 1988). Furthermore, the amount of information people have available about their social environment tends to affect their procedural fairness judgments (Van den Bos et al. 1997; Van den Bos 1999; Van den Bos and Lind 2002), which, in turn, impacts their trust in societal authorities (Grootelaar and Van den Bos 2018). Building on this literature, we test whether providing procedural cues in an informational treatment has a distinct effect on public attitudes in legal contexts.

Second, we test the effect of reference points. One reason for people’s punitive attitudes and negative views on sentencing may be that it is relatively unknown whether a sentence is harsh or lenient in a comparative sense. How does the public place sentences on a subjective scale from ‘too lenient’ to ‘too strict’? To come to such a judgment, it is argued, people need to compare absolute numbers (such as a sentence) to a relative benchmark (such as sentences in other cases). Psychologists have long shown that an important part of making judgments is using reference points as a judgmental shortcut. These reference points enable people to evaluate absolute numbers when they possess no deeper knowledge about this number (Tversky and Kahneman 1991; Mussweiler 2003). This implies that the same absolute sentence can be evaluated differently depending on the framing of the reference point. For instance, three years of imprisonment for causing a deadly traffic accident may not sound like much to most citizens, but, when you know that, in the Dutch context, this is a high sentence compared with other traffic crime sentences, the exact same sentence may be judged very differently.

The effect of reference points has also been found in applied settings. For instance, Olsen (2017) found that providing comparative information heavily influences the way people are affected by performance ratings of public organizations. Olsen’s work also shows that so-called “social reference points” are particularly indicative for people’s judgments. In other words, when the performance of a given public organization is compared with other organizations, this strongly affects judgments more so than, for instance, comparison through time (historical reference points). Given the potential importance of reference points in judging numerical information, such as sentences, this article tests the effect of a social reference point—that is, how does the sentence compare with other cases—on how sentencing and judges are evaluated.

Overall, this provides fresh theoretical insight on the literature on the information effect by broadening the literature with less-investigated attitudes (trust and affect), by further calibrating the information effect by investigating shorter information and by systematically testing how various information qualities—such as reference points and procedural information—affect these attitudes. Before we continue explaining the experimental design in more detail, the next section introduces the context in which we conducted our studies.

The current study: Serious traffic offenses in the Netherlands

The experiments employ real materials taken from press releases in which a court decided on a driving offense causing death. In the Netherlands, as in most countries, causing deadly traffic accidents is punishable by imprisonment yet is often punished much more mildly than other deadly crimes such as manslaughter and homicide, mainly because there usually is no intention to cause a deadly accident. We hypothesize that a lack of understanding of how the degree of blameworthiness in causing a deadly traffic accident determines a sentence is an important source of criticism.

First, it should be noted that motor vehicle crimes, like any other crime in the Netherlands, are tried only by professional judges. All judges in the Netherlands are appointed until a set retirement age and cannot be removed from their position (except for serious unethical behavior). There are no elections for the judiciary nor are there political appointments, jury trials or other forms of laymen involvement in judicial decision-making.

Second, criminal court cases are in the first instance tried by district courts. Simple cases, such as shoplifting, are tried by one judge. More serious and complex cases, such as when deadly traffic accidents are tried before a court, are assessed by three judges (meervoudige kamer). The Dutch criminal system does not have plea bargaining, which means that all cases are fully tried. Decisions of a district court are open to appeal at a Court of Appeal (Gerechtshof). In appeal, defendant and prosecutor can contest both the facts of the case and the interpretation/application of the law. After this first appeal, the prosecutor or defendant can go for a second appeal at the Supreme Court; however, this appeal is restricted to interpretation/application of the law. One cannot contest the case facts at the Supreme Court. In the current study, however, we only presented participants with cases that were judged by a regular court (Rechtbank).

A third relevant aspect of the Dutch criminal court pertaining to this study is that judges have a great deal of discretionary power in choosing punishment (De Keijser 2000). Maximum sentences are specified by law, but there are no mandatory (minimum) sentences. However, judges try to be consistent in their decision-making across courts by formulating sentencing policies for specific types of crimes and circumstances, although they remain free to depart from these guidelines when considered appropriate. This discretionary power makes it particularly important to justify sentencing to the public.

Fourth and finally, it is noteworthy that the Netherlands is no different than other countries with respect to public opinion about punitive attitudes. A majority of around 71% (2016) found that judges punished too mildly. In spite of this still being a large majority, it is interesting to see that this percentage has come down 10 percentage points from 81% in 2004 (Van Noije 2018).

Altogether, in this article, we probe and theoretically extend the information effect, by testing it in the context of motor vehicle crimes in the Netherlands. Although we argue that this is a highly relevant case to further test the information effect, we will highlight potential limitations to external validity of this choice in the “Discussion.”

In this article, we present data from four experiments conducted in survey samples representative of the Dutch population (total N = 1778). Taken together, these four studies provide evidence that relatively short information treatments (87–300 words) significantly improve affective responses to a verdict (higher positive affect, lower negative affect) and trust in judges. We also find specific types of information that drive this effect: sentence reference points (how does this particular sentence compare with others?) and procedural information (which decision procedures led to this particular sentence?) both have an effect on legal attitudes.

Method

Design and materials

In this section, we outline the general design choices of the four studies. Any specific design choices will be discussed with the results of each experiment. Further details, such as specifics of our treatment materials, samples and measures, are available in the Online Appendices.

We used real-world case materials to maximize experimental realism among our participants. Studies 1–3 concerned a case in which a man caused a deadly accident in which two people died. Despite the tragedy, the man was sentenced “only” to a conditional sentence of six months and community service of 240 hours. We chose this case because, at first glance, the disastrous accident contrasts with the mild punishment, which we expected to trigger an initial negative response. The justification for this mild verdict is nuanced, with a clearly limited degree of blame of the offender.

For Study 4, we used another traffic law case, with a higher degree of blame of the offender, to assess the robustness of our findings. In this case, a man was sentenced to three years imprisonment for reckless driving (driving under influence, total neglect of traffic rules and circumstances). Three years is a long sentence for traffic violations, but the higher degree of culpability makes this a ‘harder’ test than the case tested in Studies 1–3. Table 1 provides a short overview of the experiments. Full experimental materials for all experiments are found in Appendix A.

Data collection

We recruited participants from PanelClix, an online survey company in the Netherlands. PanelClix holds one of the biggest active panels in the Netherlands, with 100,000 active members for online market research. PanelClix used stratification based on age, gender and region to create sample representativeness for the Dutch population. In Appendix B, we compare the sample with the population of the Netherlands (based on the figures of the Dutch Bureau for Statistics), and we find that, by and large, our sample matches the general population on age, gender and educational attainment, although people with middle or higher educational attainment tend to be slightly overrepresented in our sample (for details, see Appendix B).

Because of general concerns with the quality of responses in online panels, we included an instructional manipulation check (IMC) at the start of each experiment (Oppenheimer et al. 2009; Berinsky et al. 2014). Only participants who read the instruction in the IMC and gave the correct answer could participate and others were screened out. Appendix C contains the IMC used in our experiments.

Measures

We measured three dependent variables: negative affect about a verdict (Studies 1–4), positive affect about a verdict (Studies 1–4) and trust in judges (Studies 3–4). To measure the affective states of people after reading the verdict, we used a shortened and adapted form of the international I-PANAS-SF scale (Thompson 2007). We used four items from the original scale and added six new items that better reflect the potential emotional responses to a judicial verdict (such as “satisfied” or “furious”). All items were measured on a 5-point Likert scale from “not or very weakly” to “very strongly.” Details about how positive and negative affect were measured can be found in Appendix C. Scale reliability was calculated based on data from the first experiment and resulted in Cronbach’s alpha of 0.94 for negative affect and 0.73 for positive affect.

To measure trust in judges, we used several items based on earlier work by Tyler (2001, p. 218) with items tapping into various components of trust in judges, such as impartiality and competence of judges. We measured this using a Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree) and asked, for instance, “I have confidence in these judges” and “These judges passed their judgment about the defendant in an impartial manner.” The reliability of the scale was α = 0.89 in both Study 3 and Study 4.
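The reliability coefficients reported for these scales follow the standard Cronbach's alpha formula. A minimal sketch in Python is given here for readers who wish to compute such a coefficient on their own item-level data. The function name `cronbach_alpha` and the example scores are hypothetical illustrations and are not taken from the study's data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of columns, one per scale item; each column holds
    the scores of the same respondents in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of respondents")

    # Sum score per respondent across all items.
    totals = [sum(col[i] for col in items) for i in range(n)]

    # alpha = k/(k-1) * (1 - sum of item variances / variance of sum score)
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical scores of five respondents on two items (not study data).
example_items = [
    [1, 2, 3, 4, 5],
    [2, 1, 4, 3, 5],
]
alpha = cronbach_alpha(example_items)
print(round(alpha, 3))
```

Sample variances (n − 1 in the denominator) are used for both the items and the sum score, so the ratio is unaffected by that choice.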

Manipulation check

We added two perception-based checks into the survey. First, we asked the following question (translated from Dutch) “after reading the verdict I have a somewhat better understanding of how judges decide on a sentence in case of traffic offences” and, second, “I understand a little better why judges – in the eye of the public – sometimes give mild sentences.” We combined these two items into one index. Cronbach’s alphas ranged from α = 0.70 to α = 0.85 (full details in Appendix C).

We found the following results for the manipulation checks in each of the four studies. Please note that detailed accounts of the designs of each study can be found in the results section of each study:

- Study 1: perceived understanding was higher in the treatment group (M = 3.57, SD = 0.74) than in the control group (M = 2.28, SD = 0.83), an effect that was statistically significant (t(1) = 14.28, p < .001).

- Study 2: perceived understanding was higher in the information treatment group (M = 4.47, SD = 1.42) than in the control group (M = 2.85, SD = 1.46), an effect that was statistically significant (t(1) = 11.23, p < .001). As expected, there was no significant difference (t(1) = 0.33, p = .739) between the perspective-taking group (M = 3.61, SD = 1.66) and the non-perspective-taking group (M = 3.67, SD = 1.63).

- Study 3: perceived understanding was higher in the three treatment groups than in the control group (F(3,409) = 21.11, p < .001, ηp2 = .134). Means were as follows: Mcontrol = 2.97 (1.17), Mcircumstances = 3.83 (1.40), Mprocedural = 4.35 (1.30), Mdetail = 3.50 (1.28).

- Study 4: both providing a reference point (F(1,645) = 19.51, p < .001, ηp2 = .029) and information (F(1,645) = 38.93, p < .001, ηp2 = .057) have an independent effect on perceived understanding.

Participants in all four studies perceived the sentences as more understandable when they were provided with additional information. In “Results,” we report how the treatments affected our core attitudinal measures: negative affect, positive affect, and trust in judges.
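As a reading aid, partial eta squared can be recovered from an F statistic and its degrees of freedom with the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the manipulation-check F tests reported for Studies 3 and 4; the function name `partial_eta_squared` is a hypothetical helper, not part of the authors' analysis code.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    explained = f_value * df_effect
    return explained / (explained + df_error)


# F tests as reported in the manipulation checks above.
reported = {
    "Study 3, treatment": (21.11, 3, 409),
    "Study 4, reference point": (19.51, 1, 645),
    "Study 4, information": (38.93, 1, 645),
}

for label, (f_value, df_effect, df_error) in reported.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: {eta:.3f}")
```

Rounded to three decimals, the computed values agree with the effect sizes reported in the text (.134, .029 and .057).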

Results

Study 1

For the first experiment, we recruited 303 participants. As an initial test for whether the information effect extends beyond punitive judgments, we developed a basic experiment with one control and one treatment group. The control group received only a sentence without further information, plus a placebo text to control for text length. The treatment group received the exact same verdict but included explanatory information from a real press release. As a first step, we only measured affective responses to the verdict in this experiment using an adjusted version of the I-PANAS-SF scale (Thompson 2007). We found a lower negative affect in the treatment group (M = 2.14, SD = 1.03) than in the control group (M = 2.95, SD = 1.24). This effect was statistically significant (F(1,301) = 38.73, p < .001), and the effect size was medium to large (ηp2 = .114). We also found an effect on positive affect, although this was smaller than the effect on negative affect (Mcontrol = 1.35, SD = 0.62/Mtreatment = 1.63, SD = 0.79, F(1,301) = 11.40, p = .001, ηp2 = .036).
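For readers more used to standardized mean differences, the negative-affect means and standard deviations above can be converted into an approximate Cohen's d. The sketch assumes equal group sizes, which the text does not report, so the result is indicative only; `cohens_d_equal_n` is a hypothetical helper name.

```python
from math import sqrt


def cohens_d_equal_n(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d using a pooled SD that ASSUMES equal group sizes."""
    pooled_sd = sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Negative affect in Study 1: control (M = 2.95, SD = 1.24)
# versus treatment (M = 2.14, SD = 1.03), as reported above.
d_negative_affect = cohens_d_equal_n(2.95, 1.24, 2.14, 1.03)
print(round(d_negative_affect, 2))
```

Under the equal-group-size assumption this gives a d of roughly 0.7, which is in keeping with the medium-to-large effect size the text reports for negative affect.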

We included a typical punitiveness variable in Study 1 to better compare this study with the extant literature on the information effect. We asked participants “if you were the judge, which sentence would you find appropriate?”; next, they were asked to rate the appropriate number of months imprisonment and the number of months the driver’s license should be withdrawn. In line with the literature, the public’s punitive judgments became much more lenient; we see a drop from 35.9 months imprisonment to 15.3 months in the treatment group (F(1,300) = 21.77, p < .001, ηp2 = .068) and a drop in the number of months of driver’s license withdrawal from 76.6 to 24.8 months (F(1,300) = 14.40, p < .001, ηp2 = .046).

Overall, Study 1 provides evidence for a sizable effect of justifications on reducing negative affect, increasing positive affect and improving subjective and objective understanding of judicial decision-making (see Table 2 for an overview).

Study 2

Study 2 had two objectives. First, we wanted to replicate the results of Study 1 to determine the robustness of the results reported. Second, we introduced a perspective-taking treatment to account for an alternative explanation of our initial finding. That is, it might be argued that the positive effect of decision information was caused by people taking the perspective of a judge, which could cause them to be more nuanced in their reactions. It is known that perspective taking helps people to better empathize and gives them more positive views of the person whose perspective is taken, such as refugees (e.g., Adida et al. 2018). Therefore, in our second experiment (N = 406), we employed a 2 (information vs. no information) × 2 (perspective-taking vs. no perspective-taking) between-subjects factorial design. The court case was the same as in Study 1. For the perspective-taking treatment, we encouraged people to think about how a judge prepares for a trial and comes to a decision. The control condition contained a placebo that asked people to think about how a judge travels to work every day.

To replicate the results of Study 1, we assessed the effect of information, and the results were indeed replicated for both negative affect (F(1,402) = 27.08, p < .001, ηp2 = .063) and positive affect (F(1,402) = 16.97, p < .001, ηp2 = .040). The perspective-taking treatment had no reliable effect on negative and positive affect (see Table 3).

In addition, we measured punitiveness by asking which sentence participants would find appropriate. In line with Study 1, the public became milder when they received more information; participants in the control group proposed 22.8 months imprisonment, compared with only 11.57 months in the treatment group (F(1,397) = 12.82, p = .001, ηp2 = .030), and the proposed driver’s license withdrawal dropped from 43.5 to 17.6 months (F(1,394) = 22.52, p < .001, ηp2 = .054).

Overall, we replicated our findings from Study 1 for both negative and positive affect. Furthermore, we investigated whether perspective-taking could produce similar positive results, yet no effect was found. Finally, we also find that information reduced the punitive gap between judges and the public.

Study 3

After having found a reliable effect of providing rather extensive information (305 words), we designed Study 3 (N = 420) to assess whether more subtle information and varying types of information have a similar positive effect. If shorter justifications have an effect as well, this extends the boundaries of the information effect. Furthermore, this experiment assessed whether a decision justification not only affects affective responses but also the higher order construct of trust in judges.

There were four experimental conditions. A control condition only provided the sentence without further information. The first treatment contained a procedural cue of 87 words that explained the general procedure that judges employ to come to a decision and how this procedure was applied to the case. Treatment 2 provided an explanation of 63 words outlining the mitigating circumstances that influenced sentence length. Finally, treatment 3 (case detail cue, 70 words) only provided more details about the facts of the case, such as that there was a relatively small error of judgment of the driver that caused the accident.

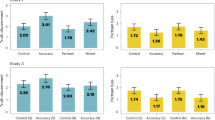

We found that providing a justification had an overall significant effect on negative affect (F(3,416) = 4.48, p = .004, ηp2 = .031). Contrasts comparing each treatment group with the control group (with Sidak adjustment for multiplicity) revealed that the strongest effect was produced by providing a procedural cue (t(411) = 3.31, p = .003, d = 0.484), and a marginally significant effect was found for providing a cue about mitigating circumstances (t(411) = 2.37, p = .053, d = 0.309). Contrary to what the literature seems to suggest, merely providing more details and facts about the case yielded no significant effect at all (t(411) = .086, p = .774) compared with the control group. In Study 3, we found no significant overall effect on positive affect (F(3,416) = 1.27, p = .284).

We found an overall effect of justification on trust in the judges (F(3,411) = 3.47, p = .016, ηp2 = .025). Contrasts with Sidak adjustment for multiple comparisons revealed that, compared with the control group, this effect was statistically significant for the procedural cue (t(411) = 2.41, p = .049, d = 0.333) but not for the mitigating circumstances cue (t(411) = 1.79, p = .209) or the case detail cue (t(411) = −0.30, p = .988). We depict the estimated marginal means for positive and negative affect and trust in Fig. 1; full ANOVA results are shown in Table 4.
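For readers unfamiliar with the Sidak adjustment mentioned for these contrasts, the following sketch shows the standard formula, in which an unadjusted p-value is converted to 1 − (1 − p)^m for m comparisons. It assumes m = 3 (three treatments each compared with the control); the unadjusted p-values in the example are purely illustrative and are not taken from the article, which reports adjusted values only.

```python
def sidak_adjust(p_unadjusted: float, n_comparisons: int) -> float:
    """Sidak-adjusted p-value for one of n_comparisons tests."""
    return 1.0 - (1.0 - p_unadjusted) ** n_comparisons


def sidak_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison significance level that keeps the family-wise rate at alpha."""
    return 1.0 - (1.0 - alpha) ** (1.0 / n_comparisons)


# Illustrative (hypothetical) unadjusted p-values for three contrasts.
illustrative_p = [0.001, 0.018, 0.39]
for p in illustrative_p:
    print(f"unadjusted {p:.3f} -> Sidak-adjusted {sidak_adjust(p, 3):.3f}")

print(f"per-comparison alpha for m = 3: {sidak_alpha(0.05, 3):.4f}")
```

The adjustment is slightly less conservative than Bonferroni, which simply multiplies each p-value by m.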

Overall, Study 3 reveals that providing a procedural cue mitigates negative emotional responses and increases trust in judges but does not increase positive affect. In this experiment, we also examined what kind of information component is most effective and found that outlining the judicial procedure and how it applies to the case influences both affective responses and trust.

Study 4

To get some indication of the generalizability of the results reported thus far, we used a different case in the realm of traffic crime. In Study 4 (N = 649), the suspect bore a considerable degree of blame: he had been driving under the influence and caused the death of one person. He was sentenced to three years of imprisonment and the maximum revocation of his driver’s license of five years. This means that Study 4 differs in two important respects from Studies 1–3.

First, we chose a different criminal court case to test to what extent the effects reported in the earlier studies are robust to changes in the case material. Importantly, we argue that Study 4 provides a ‘hard test’ for our line of reasoning because the stimulus materials of Study 4 depict a case with serious misbehavior (drunk driving), and this might well weaken the information effect. Second, we added a novel information treatment by providing a reference point. As argued in the theory section, reference points can be viewed as a judgmental shortcut that allows people to form evaluations of numerical information of which they possess no deeper knowledge, such as the length of a sentence. In other words, because people generally do not possess deep knowledge about judicial sentencing, providing a reference point helps them to get a better sense of whether a sentence is high or not.

We employed a 2 × 2 factorial between-subjects design for the experiment. The first independent variable comprised a procedural cue (present or absent); the second independent variable was a reference point cue stating that the sentence was high for this type of case (absent or present). In the condition in which the reference point was present, there was a statement saying “three years imprisonment for a traffic felony is extraordinarily high, but is justified according to the court because the suspect drove very dangerously and irresponsibly by driving while intoxicated and at high speed.” The only difference from the no-reference point condition is that the words “extraordinarily high” were left out. This means the sentence in the control condition read as follows: “Three years imprisonment for a traffic felony is justified according to the court because the suspect drove very dangerously and irresponsibly by driving while intoxicated and at high speed.”
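The structure of this design can be made concrete with a small sketch that enumerates the four cells and builds the reference-point statement from the wording quoted above. The function names and the simple randomization helper are our own assumptions for illustration; the text of the procedural cue is not reproduced here, so it is represented only as a flag.

```python
import random
from itertools import product

# Wording quoted from the manipulation described above; the reference-point
# version inserts the phrase "is extraordinarily high, but".
BASE = (
    "Three years imprisonment for a traffic felony {ref}is justified according "
    "to the court because the suspect drove very dangerously and irresponsibly "
    "by driving while intoxicated and at high speed."
)
REFERENCE_PHRASE = "is extraordinarily high, but "


def reference_statement(reference_point: bool) -> str:
    """Return the statement with or without the reference-point phrase."""
    return BASE.format(ref=REFERENCE_PHRASE if reference_point else "")


def build_cells() -> list:
    """Enumerate the four cells of the 2 x 2 between-subjects design."""
    return [
        {
            "procedural_cue": procedural,  # cue text not reproduced (flag only)
            "reference_point": reference,
            "statement": reference_statement(reference),
        }
        for procedural, reference in product([False, True], repeat=2)
    ]


def assign(participant_ids, seed: int = 0) -> dict:
    """Hypothetical simple randomization: one random cell per participant."""
    rng = random.Random(seed)
    cells = build_cells()
    return {pid: rng.choice(cells) for pid in participant_ids}


for cell in build_cells():
    print(cell["procedural_cue"], cell["reference_point"])
```

Because each participant sees exactly one cell, the two main effects and their interaction can be estimated from between-group comparisons alone.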

Table 5 provides the summary statistics for the ANOVA analyses for negative affect, positive affect and trust in judges. It shows that we find consistent and strong effects of providing reference point information about the sentence (positive affect: F(1,645) = 37.03, p < .001, ηp2 = .054; negative affect: F(1,645) = 28.64, p < .001, ηp2 = .042; trust: F(1,645) = 23.12, p < .001, ηp2 = .034). Furthermore, the table shows that the effect of providing a short justification is significant for negative affect, marginally significant for trust and not significant for positive affect.

The estimated marginal means are shown in Fig. 2 and aid the interpretation of the ANOVA results from Table 5. There are clear and robust effects of providing reference information about the sentence; it lowers negative affect and strengthens trust and positive affect. There are small or no significant effects with regard to providing a (short) justification for the sentence. Although the patterns are in line with the findings from Studies 1–3, they are somewhat weaker. In the section below, we compare the experimental results.

Overall comparison of studies 1–4

First, we found a robust effect of various information treatments on negative affect in all four studies. For positive affect and trust in judges, findings are more nuanced. In Study 1, a justification of around 300 words reduced negative affect and increased positive affect toward the sentence. In Study 2, we replicated this effect and also found that perspective-taking did not provide an alternative explanation for the effect we found in Study 1. In Study 3, we further probed the information effect by testing (a) shorter treatments and (b) different informational qualities. Providing details about the facts of the case or about mitigating circumstances hardly affected people’s judgment of judges and the sentence. We will discuss theoretical implications of this finding in the next section. In contrast, procedural information influenced both negative affect and trust in judges. In contrast with Studies 1 and 2, Study 3 did not replicate the effect on positive affect. Finally, Study 4 again found an effect of providing a procedural justification on negative affect, yet only a marginal effect on trust in judges and no effect at all on positive affect.

With regard to objective 1 (extending the information effect to other attitudes), we conclude that the information effect is most reliable with regard to reducing negative affect. In other words, more information about a sentence reduces negative emotional responses to a verdict. At the same time, we find indications, though less reliable than for negative affect, that positive affect and trust in judges are also affected by information. Our results thus suggest that it is harder to increase positive feelings about a sentence than it is to mitigate negative feelings. Concerning objective 2 (probing what specific informational qualities have an effect), we conclude that both providing a reference point and procedural cues are important components.

Overall, the main conclusion is that information effects extend beyond punitive judgments to affective responses and, in some instances, trust in judges. Furthermore, the specific elements of information that contribute most to the effect are a reference point and an explanation of the judge’s decision procedure. When such elements are provided, information can also engender trust in judges. We will discuss implications in the next section.

Discussion of findings

Overall, our experiments have various implications for the literature on the information effect. First, we specify the information effect by showing which elements of information have reliable effects on laymen’s judgments. Previous studies have not attempted to test what kind of information affects laymen’s attitudes. We find that information outlining the procedural context of the decision both affects trust in judges and mitigates negative affect about a verdict. This finding connects with literature that has shown that experience with a fair decision-making procedure engenders trust in authorities (e.g., Tyler 2006; Grootelaar and Van den Bos 2018). In contrast with suggestions by the extant literature on the information effect (e.g., Indermauer and Hough 2002; Mirrlees-Black 2002; De Keijser et al. 2007), this implies that it is not merely the provision of more factual details or circumstances of a case that drives the information effect. The information effect may be more nuanced than currently assumed: certain elements of information have the potential to change people’s views on a sentence and judges, yet others do not. Future research should further explore these specifics to better understand what precisely the information effect consists of.

Second, our findings extend the scope of the information effect to more modest and thus less intensive treatments. We find that providing information in the form of a press release of 70 to 300 words decreases negative affect and, most of the time, increases positive affect towards a verdict. Our information treatment was much less intensive than earlier information treatments, which consisted of 700-word texts, deliberative polling or TV shows (e.g., Roberts et al. 2012); this extends the boundaries of the information effect.

At the same time, our findings indicate that the information effect may be limited to certain attitudes and certain cases. The information effect might be less persistent than currently assumed in the literature. That is, we find that the information effect is weaker when the defendant bore a large degree of blame for causing a deadly accident. Essentially, providing information may work best when it is easier to sympathize with the defendant.

Finally, our studies call for tighter theoretical integration with adjacent disciplines, such as social psychology. We find that providing reference-point information about the sentence (presenting the sentence as a relatively severe punishment for a traffic case) strongly affected negative and positive affect and trust in judges. Although the influence of reference points is commonly accepted knowledge in social psychology (e.g., Tversky and Kahneman 1991; Mussweiler 2003), literature on the information effect has so far not included this specific issue. We think that pushing disciplinary boundaries and including psychological insights like these in studies on informational effects is a promising line for future research (see also Grimmelikhuijsen et al. 2017).

Research limitations and future research suggestions

Some limitations apply to the studies presented in this article. Although survey experiments are said to combine the strength of lab experiments (internal validity) and field experiments (external validity) (Mullinix et al. 2015), they have their limits. Survey experiments are mostly limited by their modesty in terms of treatment intensity and their scale (Sniderman 2018). Some of these criticisms apply to our study, too.

First, we only tested the information effect in a specific type of case. We do not know whether the effects we found apply to different types of criminal cases, too. According to Cullen et al. (2000) there is a ‘great divide’ between punitive judgments in violent versus non-violent crimes: “[The American public] sees no reason to ‘take chances’ with offenders who have shown that they will physically hurt others.” But what does that say about the context of motor vehicle crimes? In a sense, they can be violent crimes, yet the degree of intentionality is different from many other violent crimes. Therefore, the information effect we found in our studies may be more pronounced than in crimes that involve more intentional violence. Future research could address this limitation by systematically comparing traffic crime sentences with other types of sentences.

We found some indications that the circumstances of the case influence the information effect. In Studies 1–3, there was a tragic accident caused by somebody who engaged in risky driving and overestimated himself, but no alcohol or substance abuse was involved. Study 4 concerned a case with drunk driving, and, indeed, the effect of the justification in this study was weaker than in Studies 1–3. At the same time, we find a strong effect of including a reference point in Study 4. This makes reference points a promising lead to explore further. To what extent can reference points as an informational treatment change people’s attitudes towards various types of criminal behavior? Further study is needed to more systematically investigate how various types of crimes and degrees of intentionality moderate the effect of reference points.

Furthermore, external validity is an issue for our experiments, just as for most other experiments, and we put in much effort to secure at least a modest level of external validity. For instance, we used real-world materials from existing cases and explanations from actual press releases. Furthermore, we did not use a convenience sample of students but a diverse sample in terms of education and age. Finally, we empirically validated our own work by using a different court case in Study 4; this way, we empirically tested the external validity of our findings from Studies 1–3.

Next to external validity, our experiments are limited to measuring short-term effects of the information effect. We cannot say how long the effect will last, although it is likely that, given the modesty of survey experiments in general, the effect will not last very long after the experiment (Sniderman 2018). Future research could focus on determining ways to foster more durable information effects on attitudinal variables. Experiments employing multiple waves, perhaps combined with field experiments, could be used to uncover any potential long-term effects.

A final potential limitation is based on the critique that positive results in survey experiments stem from experimenter demand effects (Orne 1962; Iyengar 2011). The concern is that experimental participants deduce the answers researchers are expecting and are inclined to behave in line with these expectations. Such behavior would result in biased conclusions since participants supposedly behave differently in an experiment than in the “real world.” This assumes, however, that participants in a survey experiment (a) know they participate in an experiment at all, (b) know the true goal of the experiment and (c) are able to guess to which condition they are assigned. We argue that all three of these conditions are unlikely to hold. Our argument is backed by a recent study by Mummolo and Peterson (2019), who empirically tested experimenter demand effects in survey experiments based on five experimental designs with a combined total of 12,000 participants. Even when participants were explicitly informed about the goal of the experiment, the research hypotheses and the condition they were in, participants were not more likely to comply with experimenter demands. We find it safe to assume that experimenter demand effects in our studies are unlikely.

Conclusion

In the introduction, we raised the more general question of whether providing information can resolve the negative attitudes about judges and judicial sentencing. For instance, most people believe that criminal courts punish too mildly (e.g., Elffers and De Keijser 2004), while, at the same time, there seems to be widespread public ignorance about crime and justice (e.g., Elffers et al. 2007; Van de Walle 2009; Hough et al. 2013; Feilzer 2015). Altogether, the four studies we carried out provide a nuanced reflection on the boundaries of the information effect on laymen’s attitudes. Our findings provide greater depth to the literature on the information effect as we show, for instance, that the effect is more strongly present for some kinds of information, particularly procedural information and reference-point information, than for other types of content. At the same time, we show that even modest information treatments mitigate affective responses to a sentencing decision.

These findings hold important policy implications as courts across the globe are now trying to find ways to earn trust by improving the public’s understanding of what judges do and how they decide. For instance, the Center for Court Innovation in the U.S. and the Centre for Justice Innovation in the U.K. advocate improved courtroom communication (see Notes). Indeed, courts have adopted innovative and proactive communication strategies to reach out to a broad public and better explain their decisions and procedures (Green 2011). For instance, high courts such as the International Criminal Court and the Supreme Courts of the U.S. and U.K. now have Twitter accounts, all with well over 150,000 followers, to directly reach the public. Providing short explanations using procedural cues and reference points through such platforms may help to change public attitudes towards judges and sentencing.

Notes

See, for instance, https://www.courtinnovation.org/sites/default/files/communications_toolkit.pdf, last accessed October 10, 2019.

References

Adida, C. L., Lo, A., Platas, M. R. (2018). Perspective taking can promote short-term inclusionary behavior toward Syrian refugees. Proceedings of the National Academy of Sciences, 201804002.

Benesh, S. C., & Howell, S. E. (2001). Confidence in the courts: A comparison of users and non-users. Behavioral Sciences & the Law, 19(2), 199–214.

Benesh, S. C. (2006). Understanding public confidence in American courts. The Journal of Politics, 68(3), 697–707.

Berinsky, A. J., Margolis, M. F., Sances, M. W. (2014). Separating the shirkers from the workers? Making sure respondents pay attention on self-administered surveys. American Journal of Political Science, 58(3), 739–753.

Carr, J. Z., Schmidt, A. M., Ford, J. K., DeShon, R. P. (2003). Climate perceptions matter: A meta-analytic path analysis relating molar climate, cognitive and affective states, and individual level work outcomes. Journal of Applied Psychology, 88(4), 605–619.

Casper, J. D., Tyler, T. R., Fisher, B. (1988). Procedural justice in felony cases. Law and Society Review, 22, 483–507.

Chapman, B., Mirrlees-Black, C., Brawn, C. (2002). Improving public attitudes to the criminal justice system: The impact of information. London: Home Office.

Cullen, F. T., Fisher, B. S., Applegate, B. K. (2000). Public opinion about punishment and corrections. Crime and Justice, 27, 1–79.

De Keijser, J. W., & Elffers, H. (2009). Punitive public attitudes: A threat to the legitimacy of the criminal justice system? In M. E. Oswald, S. Bieneck, & J. Hupfeld-Heineman (Eds.), Social psychology of punishment of crime (pp. 55–74). New York: Wiley and Sons.

De Keijser, J. W. (2014). Penal theory and popular opinion. The deficiencies of direct engagement. In J. Ryberg & J. V. Roberts (Eds.), Popular punishment. On the normative significance of public opinion (pp. 101–118). Oxford: Oxford University Press.

De Keijser, J. W., Van Koppen, P. J., Elffers, H. (2007). Bridging the gap between judges and the public? A multi-method study. Journal of Experimental Criminology, 3, 131–161.

De Keijser, J. W. (2000). Punishment and purpose: From moral theory to punishment in action. Amsterdam: Thela Thesis.

Elffers, H., & de Keijser, J. W. (2004). Het geloof in de kloof: Wederzijdse beelden van rechters en publiek. In J. W. de Keijser & H. Elffers (Eds.), Het maatschappelijk oordeel van de strafrechter: De wisselwerking tussen rechter en samenleving (pp. 53–84). Den Haag: Boom Juridische Uitgevers.

Elffers, H., De Keijser, J. W., Van Koppen, P. J., Van Haeringen, L. (2007). Newspaper juries: A field experiment concerning the effect of information on attitudes towards the criminal justice system. Journal of Experimental Criminology, 3, 163–182.

Feilzer, M. (2015). Exploring public knowledge of sentencing practices. In J. V. Roberts (Ed.), Exploring sentencing practice in England and Wales (pp. 61–75). Palgrave Macmillan.

Gainey, R., & Payne, B. (2003). Changing attitudes toward house arrest with electronic monitoring: The impact of a single presentation. International Journal of Offender Therapy and Comparative Criminology, 47, 196–209.

Green, R. (2011). Catching the wave: State supreme court outreach efforts. Faculty Publications.1708. http://scholarship.law.wm.edu/facpubs/1708. Accessed January 7, 2019.

Grimmelikhuijsen, S. G., & Klijn, A. (2015). The effects of judicial transparency on public trust: Evidence from a field experiment. Public Administration, 93(4), 995–1011.

Grimmelikhuijsen, S., Jilke, S., Olsen, A. L., Tummers, L. (2017). Behavioral public administration: Combining insights from public administration and psychology. Public Administration Review, 77(1), 45–56.

Grootelaar, H. A. M., & van den Bos, K. (2018). How litigants in Dutch courtrooms come to trust judges: The role of perceived procedural justice, outcome favorability, and other sociolegal moderators. Law & Society Review, 52(1), 234–268.

Hough, M., & Roberts, J. V. (1998). Attitudes to punishment: Findings from the British Crime Survey. Home Office Research Study no. 179. London: Home Office.

Hough, M., & Park, A. (2002). How malleable are public attitudes to crime and punishment? In J. V. Roberts & M. Hough (Eds.), Changing attitudes to punishment: Public opinion around the globe. Cullompton: Willan Publishing.

Hough, M., Bradford, B., Jackson, J., Roberts, J. V. (2013). Attitudes to sentencing and trust in justice. In Ministry of Justice Analytical Series. London: Crown Copyright. http://eprints.bbk.ac.uk/5195/1/5195.pdf. Accessed August 30, 2018.

Indermauer, D., & Hough, M. (2002). Strategies for changing public attitudes to punishment. In J. V. Roberts & M. Hough (Eds.), Changing attitudes to punishment: Public opinion, crime and justice (pp. 198–214). Cullompton: Willan.

Iyengar, S. (2011). Laboratory experiments in political science. In J. N. Druckman, D. P. Green, J. H. Kuklinski, & A. Lupia (Eds.), Cambridge handbook of experimental political science (pp. 73–88). New York: Cambridge University Press.

Lind, E. A., Kulik, C. T., Ambrose, M., De Vera Park, M. V. (1993). Individual and corporate dispute resolution: Using procedural fairness as a decision heuristic. Administrative Science Quarterly, 38, 224–251.

Mirrlees-Black, C. (2002). Improving public knowledge about crime and punishment. In J. V. Roberts & M. Hough (Eds.), Changing attitudes to punishment: Public opinion, crime and justice (pp. 184–197). Cullompton: Willan.

Mitchell, B., & Roberts, J. V. (2011). Public attitudes towards the mandatory life sentence for murder: Putting received wisdom to the empirical test. Criminal Law Review, 6, 454–465.

Mummolo, J., & Peterson, E. (2019). Demand effects in survey experiments: An empirical assessment. American Political Science Review, 113(2), 517–529.

Mullinix, K. J., Leeper, T. J., Druckman, J. N., Freese, J. (2015). The generalizability of survey experiments. Journal of Experimental Political Science, 2(2), 109–138.

Mussweiler, T. (2003). Comparison processes in social judgment: Mechanisms and consequences. Psychological Review, 110, 472–489.

Noije, L. van (2018). Sociale veiligheid. In: De sociale staat van Nederland 2018. [“the social state of the Netherlands 2018”]. Accessed on November 3, 2019 via https://digitaal.scp.nl/ssn2018/sociale-veiligheid.

Olsen, A. L. (2017). Compared to what? How social and historical reference points affect citizens’ performance evaluations. Journal of Public Administration Research and Theory, 27(4), 562–580.

Oppenheimer, D. M., Meyvis, T., Davidenko, N. (2009). Instructional manipulation checks: Detecting satisficing to increase statistical power. Journal of Experimental Social Psychology, 45(4), 867–872.

Orne, M. T. (1962). On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications. American Psychologist, 17(11), 776–783.

Roberts, J., Hough, M., Jackson, J., Gerber, M. M. (2012). Public opinion towards the lay magistracy and the sentencing council guidelines: The effects of information on attitudes. British Journal of Criminology, 52(6), 1072–1091.

Roberts, J. V., & Stalans, L. J. (1997). Public opinion, crime, and criminal justice. Boulder: Westview.

Roberts, J. V., Stalans, L. J., Indermauer, D., Hough, M. (2002). Penal populism and public opinion: Lessons from five countries. New York: Oxford University Press.

Ryberg, J., & Roberts, J. V. (2014). Popular punishment. On the normative significance of public opinion. Oxford: Oxford University Press.

Schwarz, N. (1990). Feelings as information: Informational and motivational functions of affective states. In E. T. Higgins & R. Sorrentino (Eds.), Handbook of motivation and cognition: Foundations of social behavior (Vol. 2). New York: Guilford.

Schwarz, N., & Bless, H. (1991). Happy and mindless, but sad and smart? The impact of affective states on analytic reasoning. In J. Forgas (Ed.), Emotion & social judgments (pp. 55–72). Oxford: Pergamon Press.

Schwarz, N., & Clore, G. L. (1988). How do I feel about it? Informative functions of affective states. In K. Fiedler & J. Forgas (Eds.), Affect, cognition, and social behavior (pp. 44–62). Toronto: Hogrefe International.

Siamagka, N. T., Christodoulides, G., Michaelidou, N. (2015). The impact of comparative affective states on online brand perceptions: A five-country study. International Marketing Review, 32(3/4), 438–454.

Singer, L., & Cooper, S. (2008). Inform, persuade and remind. An evaluation of a project to improve public confidence in the criminal justice system. London: Ministry of Justice.

Sniderman, P. M. (2018). Some advances in the design of survey experiments. Annual Review of Political Science, 21, 259–275.

St. Amand, M., & Zamble, E. (2001). Impact of information about sentencing decisions on public attitudes to the criminal justice system. Law and Human Behavior, 25, 515–528.

Thompson, E. R. (2007). Development and validation of an internationally reliable short-form of the positive and negative affect schedule (PANAS). Journal of Cross-Cultural Psychology, 38(2), 227–242.

Tversky, A., & Kahneman, D. (1991). Loss aversion in riskless choice: A reference-dependent model. The Quarterly Journal of Economics, 106, 1039–1061.

Tyler, T. R. (2001). Public trust and confidence in legal authorities: What do majority and minority group members want from the legal and legal institutions? Behavioral Sciences & the Law, 19(2), 215–235.

Tyler, T. R. (2006). Why people obey the law. Princeton University Press.

Tyler, T. R., Boeckmann, R. J., Smith, H. J., Huo, Y. J. (1997). Social justice in a diverse society. Boulder: Westview.

Van Tulder, F. (2005). Is de rechter zwaarder gaan straffen? [Have judges been passing harsher punishments?]. Trema, 28, 1–5.

Van de Walle, S. (2009). Confidence in the criminal justice system: Does experience count? The British Journal of Criminology, 49(3), 384–398.

Van den Bos, K. (1999). What are we talking about when we talk about no-voice procedures? On the psychology of the fair outcome effect. Journal of Experimental Social Psychology, 35, 560–577.

Van den Bos, K., & Lind, E. A. (2002). Uncertainty management by means of fairness judgments. In M. P. Zanna (Ed.), Advances in experimental social psychology (Vol. 34, pp. 1–60). San Diego: Academic Press.

Van den Bos, K., Lind, E. A., Vermunt, R., Wilke, H. A. M. (1997). How do I judge my outcome when I do not know the outcome of others? The psychology of the fair process effect. Journal of Personality and Social Psychology, 72, 1034–1046.

Wenzel, J. P., Bowler, S., Lanoue, D. J. (2003). The sources of public confidence in state courts: Experience and institutions. American Politics Research, 31(2), 191–211.

Acknowledgments

The authors would like to thank Paul Boselie, Barbara Vis, Lars Brummel and Hilke Grootelaar for their valuable feedback on earlier versions of this article.

Funding

Stephan Grimmelikhuijsen received a grant from the Dutch Science Foundation (NWO VENI-451-15-024).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

ESM 1 (DOCX 34 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Grimmelikhuijsen, S., van den Bos, K. Specifying the information effect: reference points and procedural justifications affect legal attitudes in four survey experiments. J Exp Criminol 17, 321–341 (2021). https://doi.org/10.1007/s11292-019-09407-9

DOI: https://doi.org/10.1007/s11292-019-09407-9