Abstract

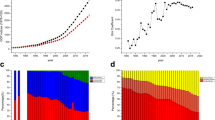

From a sociocognitive perspective, item parameters in a test represent regularities in examinees’ item responses. These regularities originate from the experiences that individuals share in interacting with their environment. Theories explaining the relationship between culture and cognition also acknowledge these shared experiences as the source of human cognition. In this context, this study argues that if human cognition is a cultural phenomenon and is not the same everywhere, then item parameters in cross-cultural surveys may inevitably fluctuate across culturally different populations. The investigation of item parameter equivalence in TIMSS 2015 supports this argument. The multidimensional scaling representation of the similarity in item parameters across countries in TIMSS 2015 shows that the item parameters are more similar within Arab, Western, East Asian and post-Soviet country clusters and markedly less similar between these clusters. The similar structure of fluctuation across countries in both discrimination and difficulty parameters, and in both mathematics and science, points to the vital role of cultural differences in item parameter nonequivalence in cross-cultural surveys. The study concludes that it is very difficult for cross-cultural surveys to achieve the highest level of measurement invariance, the level that guarantees meaningful comparisons of scale scores across countries.
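The following is a minimal sketch of this kind of similarity analysis, written in R like the study's appendix. It is illustrative only and rests on assumptions that are not taken from the study.

* The item parameters are simulated for six hypothetical countries (A1 to B3) in two hypothetical clusters.
* Correlations are converted to dissimilarities as $\delta_{ij} = \sqrt{1 - r_{ij}}$. This is our understanding of what `smacof::sim2diss(..., method = "corr")` computes.
* Base R's classical (Torgerson) scaling, `stats::cmdscale()`, is swapped in for the interval SMACOF scaling used in the study.

```r
## Hypothetical data: simulated item parameters for 6 countries in 2 clusters
set.seed(1)
n_items <- 40
common  <- rnorm(n_items)  # item profile shared by all countries
clust_a <- rnorm(n_items)  # extra profile shared within hypothetical cluster A
clust_b <- rnorm(n_items)  # extra profile shared within hypothetical cluster B
make_country <- function(cluster_profile) {
  common + 0.7 * cluster_profile + rnorm(n_items, sd = 0.3)  # plus country-specific noise
}
params <- cbind(A1 = make_country(clust_a), A2 = make_country(clust_a),
                A3 = make_country(clust_a), B1 = make_country(clust_b),
                B2 = make_country(clust_b), B3 = make_country(clust_b))

## Pairwise correlations of item parameters between countries (columns)
r <- cor(params)

## Correlations -> dissimilarities, delta_ij = sqrt(1 - r_ij); pmax() guards against rounding below 0
delta <- sqrt(pmax(1 - r, 0))

## Two-dimensional classical MDS (a stand-in for interval SMACOF)
conf <- cmdscale(as.dist(delta), k = 2)
colnames(conf) <- c("D1", "D2")
print(round(conf, 2))

## Countries from the same hypothetical cluster should lie closer together
d <- as.matrix(dist(conf))
within  <- mean(c(d["A1", "A2"], d["A1", "A3"], d["A2", "A3"],
                  d["B1", "B2"], d["B1", "B3"], d["B2", "B3"]))
between <- mean(d[c("A1", "A2", "A3"), c("B1", "B2", "B3")])
print(c(within = within, between = between))
```

In this toy setting, the clustered structure of the item parameters shows up as smaller within-cluster than between-cluster distances in the two-dimensional configuration.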

Data availability

The data sets analysed in the present study are available in the TIMSS 2015 international database: https://timssandpirls.bc.edu/timss2015/international-database/

Acknowledgements

I am grateful to Dr. Norman Verhelst for his thoughtful comments on the analyses. I would also like to thank Dr. Selda Yıldırım for her help with the TIMSS 2015 dataset and Fazıl Yıldırım for his help in creating the graphics. They are, however, not responsible for the ways in which I have analysed or represented the data.

Author information

Contributions

The author carried out the study, drafted the manuscript, and read and approved the final version.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. Summary of the R commands used in the study to run the SMACOF package

```r
library(smacof)  ## provides sim2diss(), mds(), Procrustes(), biplotmds(), permtest(), jackknife()
## Read matrices of pairwise correlations in item parameters between countries, and read countries' HDI index
MATa <- read.csv("Math_a.csv", row.names = 1, stringsAsFactors = FALSE)
MATb <- read.csv("Math_b.csv", row.names = 1, stringsAsFactors = FALSE)
CULTURE <- read.csv("HDI.csv", row.names = 1, stringsAsFactors = FALSE)
## Convert correlations into dissimilarities
disMATa <- sim2diss(MATa, method = "corr")
disMATb <- sim2diss(MATb, method = "corr")
## Run interval MDS and store the result in object mdsMATa
mdsMATa <- mds(disMATa, ndim = 2, type = "interval")
## For the Procrustean transformation, run MDS starting from the target configuration
mdsMATb <- mds(disMATb, ndim = 2, type = "interval", init = mdsMATa$conf)
fit <- Procrustes(mdsMATa$conf, mdsMATb$conf)
## Eliminate meaningless differences between configurations; keep the Procrustes-fitted MDS solution
mdsMATb$conf <- fit$Yhat
## Map the variable HDI onto the MDS configuration
HDIbiplot <- biplotmds(mdsMATa, extvar = CULTURE)
## Plot the MDS configurations and the biplot
plot(mdsMATa); plot(mdsMATb); plot(HDIbiplot)
## Test the goodness of fit and the stability of the MDS configuration
permtest(mdsMATa, nrep = 500); jackknife(mdsMATa)
```
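The Procrustean step removes differences between two configurations that carry no information about the data: rotation or reflection, uniform stretching, and shifting.

The base-R sketch below illustrates that idea using the classical least-squares similarity transformation obtained from a singular value decomposition. The function name `procrustes_fit()` is hypothetical. It is intended to mirror the role of `Yhat` returned by `smacof::Procrustes()`, but it is not that package's code.

```r
## Hypothetical helper: fit configuration Y to target X by an orthogonal
## transformation (rotation, possibly with reflection), a uniform dilation and a translation
procrustes_fit <- function(X, Y) {
  n <- nrow(X)
  J <- diag(n) - matrix(1 / n, n, n)   # centring matrix
  C <- t(X) %*% J %*% Y                # cross-products of the centred configurations
  s <- svd(C)
  R <- s$v %*% t(s$u)                  # optimal orthogonal matrix
  dil <- sum(diag(C %*% R)) / sum(diag(t(Y) %*% J %*% Y))  # least-squares dilation
  tvec <- colMeans(X - dil * Y %*% R)  # translation vector
  Yhat <- dil * Y %*% R + matrix(tvec, n, ncol(X), byrow = TRUE)
  list(Yhat = Yhat, rotation = R, dilation = dil, translation = tvec)
}

## Usage: Y is X rotated by 30 degrees, stretched by a factor of 2 and shifted
set.seed(2)
X <- matrix(rnorm(20), ncol = 2)
theta <- pi / 6
Rot <- matrix(c(cos(theta), sin(theta), -sin(theta), cos(theta)), 2, 2)
Y <- 2 * X %*% Rot + matrix(c(3, -1), nrow(X), 2, byrow = TRUE)
fit_demo <- procrustes_fit(X, Y)
print(max(abs(fit_demo$Yhat - X)))  # close to zero: only meaningless differences existed
```

Because the transformation only rotates or reflects, rescales and shifts $Y$, it leaves the ratios of interpoint distances unchanged. The fitted configuration can then be compared with the target point by point.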

About this article

Cite this article

Yıldırım, H.H. Re-reviewing item parameter equivalence in TIMSS 2015 from a sociocognitive perspective. Educ Asse Eval Acc 33, 27–48 (2021). https://doi.org/10.1007/s11092-020-09350-8

DOI: https://doi.org/10.1007/s11092-020-09350-8