Abstract

The COVID-19 outbreak has had an enormous impact on the global supply chain. It is crucial for governments to adopt policy recommendations that enhance supply chain resilience and thereby mitigate the negative impact of COVID-19. For such a major issue, a large number of decision-makers (DMs) are commonly invited to participate in the decision-making process to ensure the comprehensiveness and reliability of the decision results. Since the attitudinal characteristics of DMs are important factors affecting decision results, this study focuses on capturing the attitudinal characteristics of DMs in the large-scale group decision making process. The capturing process combines the ordinal k-means clustering algorithm, the gained and lost dominance score method and personalized quantifiers. To enable DMs to express their cognitions in depth, we use the probabilistic linguistic term set to express the evaluation information of DMs. A case study on selecting the optimal policy recommendation for improving the integration capability of the supply chain illustrates the applicability of the proposed process. The superiority of the proposed algorithm is highlighted through sensitivity analysis and comparative analysis.

1 Introduction

The COVID-19 pandemic is a stress test for the global supply chain. This “black swan” event disrupted the global supply chain and industrial chain. The economic and financial turbulence triggered by COVID-19 caused significant negative effects on production and circulation, production capacity cooperation, labor mobility and foreign investment. The manufacturing supply chain faces many uncertainties in returning to normal (Hong 2020). As Ivanov and Dolgui (2020) pointed out, the outbreak of COVID-19 is an unprecedented abnormal situation, which clearly shows the necessity of promoting the research and practice of supply chain elasticity. It is crucial for governmental bodies, health-care organizations, hospitals and their business stakeholders to take appropriate measures to enhance supply chain resilience (Khan et al. 2019) so as to mitigate the negative impact of COVID-19 on the supply chain.

In the existing literature, Barbieri et al. (2020) analyzed the impact of the COVID-19 epidemic on supply chains in various industries around the world. Ivanov (2020) provided the results of a simulation study concerning the impact of COVID-19 on the global supply chain. Ivanov and Dolgui (2020) pointed out that not only the survivability of the supply chain but also its resistance to abnormal damage should be considered in the study of supply chain elasticity. Hobbs (2020) presented an early assessment of the implications of COVID-19 for food supply chains and supply chain resilience. To alleviate medical supply chain disruptions during the COVID-19 pandemic, Govindan et al. (2020) introduced a practical decision support system based on the knowledge of physicians and a fuzzy inference system. van Hoek (2020) developed a path towards a resilient supply chain that closes the gap between research findings and industry practice. Hong (2020) analyzed the impact of the COVID-19 epidemic on a supply chain and put forward relevant policy recommendations to improve the integration ability of the supply chain. These studies provided suggestions for alleviating the negative impact of the COVID-19 epidemic on the supply chain, but few studies have examined how to evaluate and select among diverse recommendations, a step that is conducive to their effective implementation. In this regard, this study investigates the selection of policy recommendations for improving the integration ability of the supply chain under the COVID-19 epidemic.

Due to the increasing uncertainty and fuzziness in major decision problems, the scale of a decision-making group is commonly expanded to maintain the comprehensiveness and reliability of decision results, which leads to a large-scale group decision making (LSGDM) problem (Zhou and Chen 2020). At present, the research on LSGDM has attracted great attention (Tang and Liao 2020). Many LSGDM methods determine the weight of each subgroup based on the number of decision-makers (DMs) in the subgroup (Xu et al. 2015; Shi et al. 2018; Wu and Xu 2018; Liu et al. 2019). This approach is easy to understand, but the veracity of the aggregated results is limited: subgroups that have the same number of DMs but different attitudinal characteristics receive the same weight (Tang and Liao 2020). The attitudinal characteristics of DMs are important factors that affect decision results. Nevertheless, few studies have examined the attitudinal characteristics of DMs in a subgroup. Hence, this paper focuses on capturing the attitudinal characteristics of DMs in an LSGDM process. According to the attitudinal characteristics of DMs in each subgroup, the weight of each subgroup can be assigned reasonably.

The personalized quantifier is an effective tool to capture the attitudinal characteristics of DMs. It determines a DM’s attitudinal weight according to the distances between the subjective expectation values of alternatives under each criterion and the corresponding evaluation values provided by the DM. In this sense, the subjective expectation values are the main factor reflecting the attitudinal characteristics of DMs; however, it is difficult for DMs to give crisp subjective expectation values directly. In this regard, Guo (2019) calculated the relative closeness coefficients of alternatives by the technique for order preference by similarity to ideal solution (TOPSIS) method and then normalized these relative closeness coefficients as the weights of alternatives to derive the subjective expectation values of alternatives on each criterion. In this process, larger weights were assigned to the alternatives with higher subjective ranks and lower weights were assigned to the alternatives with lower subjective ranks. In other words, this process amounts to subjectively giving a high weight to the top alternative and a low weight to the bottom alternative, which limits the rationality of the derived subjective expectation values. To overcome this limitation, in this study we use the gained and lost dominance score (GLDS) method (Wu and Liao 2019) to determine the objective ranks of alternatives, and then assign weights to alternatives based on the differences between the objective and subjective ranks of each alternative. We assign higher weights to the overestimated alternatives, that is, those whose subjective ranks are higher than their corresponding objective ranks.

Since there are many DMs in an LSGDM process, a lot of computation will be needed to capture the attitudinal characteristic of each DM. Consequently, we need to reduce the dimension of the decision-making problem based on cluster analysis. Clustering is the main component of an LSGDM method, which can improve the efficiency of decision making. Partitioning clustering methods and hierarchical methods are two popular clustering methods. In partitioning clustering methods, the k-means clustering (Sarrazin et al. 2018), fuzzy c-means clustering (Dunn 1973) and fuzzy equivalence relation (Liang et al. 2005) have been widely used in LSGDM (Tang and Liao 2020). Because the k-means clustering algorithm is simpler than the algorithms of fuzzy c-means clustering and fuzzy equivalence relation, we use the ordinal k-means clustering algorithm (Tang et al. 2019) to cluster the ranking preferences of DMs on alternatives in the study. In the k-means clustering algorithm, the number of initial clustering centers (k value) and clustering centers are determined randomly, which limits the veracity of clustering results. For this problem, Tang et al. (2019) presented an improved max-min method to determine the initial clustering centers and used the sum of squared errors and the silhouette coefficient to determine the k value. This method to determine the k value requires several iterations and the calculation is complicated. To further improve the accuracy and simplicity of this algorithm, we set a distance threshold to determine the k value so as to maintain a reasonable number of subgroups. In addition, due to the advantages of the probabilistic linguistic term set (PLTS, Pang et al. 2016) in expressing DMs’ cognitions scientifically, this study takes the PLTS as a tool for DMs to evaluate the performances of policy recommendations in improving the integration ability of supply chain under each criterion.

To sum up, this study makes the following contributions:

- a) We propose an algorithm to capture the attitudinal characteristics of DMs in an LSGDM process. According to the attitudinal characteristics of DMs in each subgroup, the weights of subgroups can be assigned reasonably.

- b) We set a distance threshold to determine the k value in the ordinal k-means clustering algorithm so as to maintain a reasonable number of subgroups.

- c) We determine the weights of alternatives based on the differences between the objective and subjective ranks of alternatives. This improves the rationality of the subjective expectation values that are derived from alternative ranking.

- d) We apply the proposed algorithm to select the policy recommendations on improving the integration ability of supply chain to enhance the supply chain resilience under the COVID-19 epidemic outbreak.

The rest of the study is structured as follows: Section 2 reviews the relevant knowledge. Section 3 elaborates the process of capturing the attitudinal characteristics of DMs in LSGDM. Section 4 gives a case study on the selection of recommendations for mitigating the negative impact of the COVID-19 epidemic on the supply chain. Section 5 discusses the sensitivity of the results to the distance threshold and the superiority of the proposed algorithm. Section 6 draws the conclusions of this study and outlines future research.

2 Preliminaries

This section reviews the relevant knowledge of the PLTS, gained and lost dominance score method, ordinal k-means clustering algorithm and personalized quantifier with cubic spline interpolation.

2.1 Probabilistic linguistic term set

The PLTS introduced by Pang et al. (2016) is a useful tool to express the cognitions of DMs. It consists of a set of ordered and continuous linguistic terms with corresponding probabilities. Let \( S=\left\{{s}_{\alpha}\mid \alpha =0,1,\cdots, 2\tau \right\} \) be a linguistic term set. A PLTS corresponding to the DM d can be denoted as \( {L}^d(p)=\left\{{s}_{\alpha}^{(t)d}\left({p}_{\alpha}^{(t)d}\right)\mid {s}_{\alpha}^{(t)d}\in S;\ {p}_{\alpha}^{(t)d}\ge 0;\ t=1,2,\cdots, T;\ {\sum}_{t=1}^T{p}_{\alpha}^{(t)d}\le 1\right\} \), in which \( {s}_{\alpha}^{(t)d}\left({p}_{\alpha}^{(t)d}\right) \) represents the tth linguistic term \( {s}_{\alpha}^{(t)d} \) with the probability \( {p}_{\alpha}^{(t)d} \), and T represents the number of linguistic terms in \( {L}^d(p) \). Since the PLTS was proposed, it has been studied extensively; a state-of-the-art survey of the PLTS and its applications is given by Liao et al. (2020b).

In terms of aggregating PLTSs provided by multiple DMs, Pang et al. (2016) adopted an approach that first aggregated each PLTS provided by DMs into a linguistic term by the sum of the product of the subscript and probability of each linguistic term in the PLTS, and then aggregated the linguistic terms of all DMs by the weight of each DM. Alternatively, Wu et al. (2018) introduced an expectation function given as Eq. (1) to translate PLTSs into crisp values within the interval [0, 1]:

where \( {\alpha}^{(t)} \) and \( {p}_{\alpha^{(t)}} \) respectively represent the subscript value and probability of the tth linguistic term in the PLTS \( {L}^d(p) \). This function assumes that the semantics of linguistic terms are uniformly distributed. After each PLTS provided by DMs is translated into a corresponding crisp value by the expectation function, these values are aggregated with the DMs’ weights. These two aggregation approaches are simple but do not sufficiently preserve the original information. In this regard, Wu and Liao (2019) presented an aggregation operator as

where λd represents the weight of the DM d and d = 1, 2, ..., D.
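The expectation function can be illustrated with a short Python sketch. Eq. (1) itself is not reproduced in this text, so the form used here is an assumption that is merely consistent with the description above: each subscript α is mapped linearly to α/(2τ), which matches the uniformly distributed semantics and the range [0, 1], and the result is divided by the total probability to handle partial probability information. The function name `plts_expectation` and the pair-list representation are hypothetical.

```python
def plts_expectation(plts, tau):
    """Crisp value in [0, 1] for a PLTS over the term set s_0..s_{2*tau}.

    plts: list of (subscript, probability) pairs.
    ASSUMPTION: subscripts are mapped linearly to alpha / (2 * tau) and the
    result is divided by the total probability; Eq. (1) of the paper is not
    available here, so this form is illustrative only.
    """
    total_p = sum(p for _, p in plts)
    if total_p <= 0:
        raise ValueError("a PLTS needs positive total probability")
    return sum((alpha / (2 * tau)) * p for alpha, p in plts) / total_p


# A DM who hesitates between s_3 and s_4 with equal probability (tau = 3).
value = plts_expectation([(3, 0.5), (4, 0.5)], tau=3)
```

Dividing by the total probability is a design choice that keeps the value in [0, 1] even when the probabilities sum to less than one.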

2.2 Gained and lost dominance score method

The GLDS method proposed by Wu and Liao (2019) is an efficient multi-criteria decision-making method which considers both the “group utility” and “individual regret” values. The core of this method is to calculate the gained and lost dominance scores between alternatives according to the distances between alternatives under different criteria. The GLDS method has been combined with various methods to solve decision-making problems in various fields. For example, Fang et al. (2019) combined the GLDS method with the generalized probabilistic linguistic evidential reasoning approach to screen the high risk group of lung cancer. Liao et al. (2019) combined the probabilistic linguistic GLDS method with the logarithm-multiplicative analytic hierarchy process. Liao et al. (2020c) combined the GLDS method with q-rung orthopair fuzzy sets to evaluate the investment of the BE angle capital. Liao et al. (2020a) proposed a Choquet integral-based hesitant fuzzy GLDS method to evaluate higher business school education. Liang et al. (2020) improved the GLDS method by a probability distribution and then applied it to selecting the sites of electric vehicle charging stations. These studies demonstrate the flexibility and efficiency of the GLDS method in application.

The advantages of the GLDS method are threefold: a) the optimal alternative derived by this method dominates all other alternatives; b) this method uses the dominance flow function to accurately describe the degree to which one alternative is superior to another; c) the aggregation formula introduced in this method synthesizes the “group utility” values, “individual regret” values and corresponding subordinate set, which enhances the robustness of the results. The specific implementation steps of the GLDS method are displayed in the Appendix.

2.3 Personalized quantifier with cubic spline interpolation

The personalized quantifier introduced by Guo (2014) depicts an individual’s decision attitude through a function built on the information given by that individual. Several functions have been employed to represent personalized quantifiers, such as the piecewise linear interpolation function (Guo 2014; Guo 2019), Bernstein polynomials (Guo 2016), and the combination of the Bernstein polynomials with interpolation spline (Guo and Xu 2018). As Wen and Liao (2020) pointed out, the personalized quantifiers described by these functions are limited in smoothness, accuracy and simplicity. To overcome these limitations, Wen and Liao (2020) presented a personalized quantifier with cubic spline interpolation function.

Let \( {x}_{ij} \) denote the normalized evaluation information of the ith alternative on the jth criterion and \( {\hat{x}}_j \) denote the subjective expectation information associated with the DM. The personalized quantifier with cubic spline interpolation function Q(x) can be expressed as:

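where Q(0) = 0, Q(1) = 1. The coefficients \( {\delta}_0={\delta}_m=0 \) and \( {\delta}_1,{\delta}_2,\dots, {\delta}_i,\dots, {\delta}_m \) are determined by \( 0.5{\delta}_{i-1}+2{\delta}_i+0.5{\delta}_{i+1}=3{m}^2\left({\sigma}_{i+1}-{\sigma}_i\right) \), for 0 < i < m. \( {\sigma}_i \) denotes the attitudinal weight and can be calculated by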

Then, the attitudinal characteristic (AC) value of a DM can be determined by

AC lies in the interval [0, 1]. When AC tends to 0, it indicates that the attitudinal characteristic of the DM tends to be negative. When AC tends to 0.5, it indicates that the attitudinal characteristic of the DM tends to be neutral. When AC tends to 1, it indicates that the attitudinal characteristic of the DM tends to be positive.
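The condition on the spline coefficients stated above is a tridiagonal linear system, which can be solved by forward elimination and back substitution. The following Python sketch is one way to do this and is not the authors’ implementation; the function name `spline_second_derivatives` and the list layout (`sigma[i-1]` holds the attitudinal weight of index i, for i = 1, …, m) are assumptions, and the attitudinal weights are taken as given inputs because their defining equation is not reproduced here.

```python
def spline_second_derivatives(sigma):
    """Solve 0.5*d[i-1] + 2*d[i] + 0.5*d[i+1] = 3*m^2*(sigma_{i+1} - sigma_i)
    for 0 < i < m, with d[0] = d[m] = 0.

    sigma: list of m attitudinal weights, sigma[i-1] holding sigma_i
    (hypothetical layout). Returns [d_0, d_1, ..., d_m].
    """
    m = len(sigma)
    n = m - 1  # number of interior unknowns d_1..d_{m-1}
    if n <= 0:
        return [0.0] * (m + 1)
    rhs = [3 * m ** 2 * (sigma[i] - sigma[i - 1]) for i in range(1, m)]
    # Thomas algorithm for a tridiagonal system with constant bands
    # (sub-diagonal 0.5, diagonal 2, super-diagonal 0.5).
    c = [0.0] * n  # modified super-diagonal
    g = [0.0] * n  # modified right-hand side
    c[0] = 0.5 / 2.0
    g[0] = rhs[0] / 2.0
    for k in range(1, n):
        denom = 2.0 - 0.5 * c[k - 1]
        c[k] = 0.5 / denom
        g[k] = (rhs[k] - 0.5 * g[k - 1]) / denom
    x = [0.0] * n
    x[-1] = g[-1]
    for k in range(n - 2, -1, -1):
        x[k] = g[k] - c[k] * x[k + 1]
    return [0.0] + x + [0.0]


# Equal attitudinal weights give a zero right-hand side, hence zero coefficients.
flat = spline_second_derivatives([0.25, 0.25, 0.25, 0.25])
```

With equal attitudinal weights all coefficients vanish, so no curvature is introduced between the interpolation nodes.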

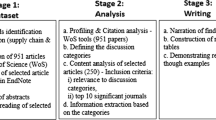

3 Capturing the attitudinal characteristics of decision-makers in group decision making

In this section, we introduce an algorithm to capture the attitudinal characteristics of DMs in LSGDM problems. This algorithm can be divided into three stages. In the first stage, the probabilistic linguistic evaluation information and subjective ranking of alternatives about the decision-making problem provided by DMs are collected. Then, the DMs are clustered based on the ordinal k-means clustering algorithm, and the alternative ranks in the clustering centers are aggregated to form collected subjective alternative ranks. The second stage applies the GLDS method to obtain an objective ranking of alternatives according to the evaluation information provided by the DMs. The third stage captures the attitudinal characteristics of the DMs in a cluster by the personalized quantifiers with cubic spline interpolation.

3.1 Classifying decision-makers by the ordinal k-means clustering algorithm

For a complex LSGDM problem with a set of alternatives A1, A2, ⋯, Ai, ⋯, Am and criteria C1, C2, ⋯, Cj, ⋯, Cn, a group of DMs E1, E2, ⋯, Ed, ⋯, ED are invited to provide information. On the one hand, we need to collect the probabilistic linguistic evaluation information of each DM about the performances of alternatives under each criterion based on a given linguistic term set. Then, the individual probabilistic linguistic decision matrix corresponding to Ed can be constructed as:

where \( {L}_{ij}^d(p) \) represents the probabilistic linguistic evaluation information of alternative Ai under criterion Cj. On the other hand, it is needed to collect the subjective ranks of alternatives corresponding to each DM. To better extract the individual attitudinal characteristics of the DMs, this information needs to be given by the DMs without communication.

Subsequently, we calculate the distance between each alternative rank and the other alternative ranks provided by the DMs. The Euclidean distance and cosine distance are two widely used distance measures in the k-means clustering algorithm (Tang et al. 2019). The Euclidean distance measures the absolute distance between points in a space, which is directly related to the position coordinates of each point, while the cosine distance measures the angle between two vectors. Because we are interested in the absolute distance, we use the Euclidean distance measure to calculate the distance between two alternative ranks:

where \( {r}^d \) and \( {r}^v \) respectively represent the alternative ranks provided by DMs \( {E}_d \) and \( {E}_v \). The number of alternatives m > 1; if m is odd, then φ = 0, and if m is even, then φ = 1. \( 2\left[{\left(m-1\right)}^2+{\left(m-3\right)}^2+\cdots +\varphi \right] \) represents the farthest distance between two alternative ranks.
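The normalizing term can be checked numerically: it equals the sum of squared rank differences between a ranking and its exact reverse. The Python sketch below evaluates the bracketed expression and compares it with that reversed-ranking case; the function names are hypothetical and chosen only for illustration.

```python
def max_squared_rank_distance(m):
    """Evaluate 2[(m-1)^2 + (m-3)^2 + ... + phi] for m > 1 alternatives.

    The loop stops at 1 for even m (phi = 1) and at 2 for odd m, where the
    remaining term is 0 (phi = 0).
    """
    total = 0
    step = m - 1
    while step > 0:
        total += step ** 2
        step -= 2
    return 2 * total


def squared_rank_distance(r_d, r_v):
    """Sum of squared differences between two rank vectors."""
    return sum((a - b) ** 2 for a, b in zip(r_d, r_v))


# With four alternatives, the ranks {1, 2, 3, 4} and {4, 3, 2, 1} are farthest apart.
farthest = squared_rank_distance([1, 2, 3, 4], [4, 3, 2, 1])  # 9 + 1 + 1 + 9 = 20
```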

Then, we need to determine the initial clustering center. In this regard, we adopt the improved max-min method (Tang et al. 2019) presented as follows:

- a. Select the rank with the minimum average distance between each rank and other ranks as the first clustering center, which can be denoted as:

- b. Select the ranking with the maximum average distance between each rank and the first clustering center as the second clustering center, which can be denoted as:

where \( {r}_i^{K_1} \) is the rank of the alternative selected as the first clustering center.

- c. Calculate the distances between the remaining ranks and each clustering center, keep the one with the minimum distance, and then select the rank with the maximum distance as the next clustering center. Cycle this process until the k-th clustering center is selected.

where \( {r}_i^{K_{\varepsilon }} \) is the rank of the alternative selected as the εth clustering center.

To determine the number of clustering centers k, we set a distance threshold ϕ. When the maximum distance is less than ϕ, the selection of further clustering centers stops. We discuss the value of the distance threshold ϕ in Section 5.

After each clustering center is determined, all alternative ranks are clustered to the nearest clustering centers. This means that the DMs who provide corresponding alternative ranks are clustered. Then, we aggregate the alternative ranks provided by the DMs in a cluster according to the ascending order of rank sum of each alternative, such that there is an aggregated subjective rank of each alternative in a cluster.
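The center selection and assignment steps described above can be sketched in Python as follows. This is an illustrative sketch on toy data, not the authors’ implementation. Two points are assumptions: the distance is taken as the square root of the summed squared rank differences divided by the farthest distance (the distance equation itself is not reproduced in this text), and the threshold test is applied to every center after the first. The names `rank_distance`, `select_centers` and `assign_to_centers` are hypothetical.

```python
import math


def rank_distance(r_d, r_v):
    """ASSUMED normalised Euclidean distance between two rank vectors (m > 1)."""
    m = len(r_d)
    farthest = 2 * sum(s ** 2 for s in range(m - 1, 0, -2))
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(r_d, r_v)) / farthest)


def select_centers(ranks, threshold, dist=rank_distance):
    """Max-min selection of clustering centers, returned as indices into ranks."""
    n = len(ranks)
    # Step a: the rank with the minimum average distance to all other ranks.
    avg = [sum(dist(ranks[i], ranks[j]) for j in range(n) if j != i) / (n - 1)
           for i in range(n)]
    centers = [min(range(n), key=avg.__getitem__)]
    # Steps b and c: for each remaining rank keep its distance to the nearest
    # center, then pick the rank for which this distance is largest.
    while len(centers) < n:
        nearest = {i: min(dist(ranks[i], ranks[c]) for c in centers)
                   for i in range(n) if i not in centers}
        candidate = max(nearest, key=nearest.get)
        if nearest[candidate] < threshold:  # stopping rule with threshold phi
            break
        centers.append(candidate)
    return centers


def assign_to_centers(ranks, centers, dist=rank_distance):
    """Label each rank with the position of its nearest center."""
    return [min(range(len(centers)), key=lambda k: dist(r, ranks[centers[k]]))
            for r in ranks]


# Toy data: five DMs ranking four alternatives (not the case-study data).
toy_ranks = [(1, 2, 3, 4), (1, 2, 4, 3), (4, 3, 2, 1), (4, 3, 1, 2), (2, 1, 3, 4)]
toy_centers = select_centers(toy_ranks, threshold=0.7)
toy_labels = assign_to_centers(toy_ranks, toy_centers)
```

Passing the distance as a parameter keeps the selection logic independent of the particular distance measure, so a different normalization can be substituted without changing the rest of the sketch.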

3.2 Deducing the objective alternative ranks by the GLDS method

Aggregating the individual probabilistic linguistic decision matrices related to all DMs by Eq. (2), we can establish a group probabilistic linguistic decision matrix. Based on Eq. (1), we measure the dominance flow of alternative Ai over alternative Az under criterion Cj by

where \( {\alpha}^{(t)i} \) and \( {\alpha}^{(t)z} \) respectively represent the subscript values of the tth linguistic terms related to the performance of alternative \( {A}_i \) and alternative \( {A}_z \) under criterion \( {C}_j \). \( {p}_{\alpha^{(t)i}} \) and \( {p}_{\alpha^{(t)z}} \) represent the probabilities corresponding to the tth linguistic terms.

Normalizing the dominance flow by the vector normalization, we have

Combining the weight of each criterion, we can get the net gained dominance score and net lost dominance score of each alternative by Eqs. (3) and (4), respectively. Then, calculating the overall dominance score of each alternative by Eq. (5), we can obtain the objective ranks of alternatives.
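The first two steps, measuring and normalizing the dominance flows under one criterion, can be sketched in Python on crisp scores. The equations are not reproduced in this text, so the sketch rests on labeled assumptions: the dominance flow of one alternative over another is taken as the positive part of the difference of their expectation values (with higher values treated as better), and the vector normalization divides every flow by the square root of the sum of all squared flows under that criterion. The function names are hypothetical, and the gained, lost and overall dominance scores are not covered.

```python
import math


def dominance_flows(scores):
    """Pairwise dominance flows under one criterion.

    scores[i] is the crisp expectation value of alternative i.
    ASSUMPTION: flow(i over z) = max(scores[i] - scores[z], 0).
    """
    m = len(scores)
    return [[max(scores[i] - scores[z], 0.0) for z in range(m)] for i in range(m)]


def vector_normalize(flows):
    """Divide every flow by the Euclidean norm of all flows (assumed form)."""
    norm = math.sqrt(sum(v * v for row in flows for v in row))
    if norm == 0:
        return [row[:] for row in flows]  # all alternatives tie under this criterion
    return [[v / norm for v in row] for row in flows]


# Three alternatives scored under a single criterion (toy values).
flows = dominance_flows([0.8, 0.5, 0.5])
normalized = vector_normalize(flows)
```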

3.3 Capturing the attitudinal characteristics of decision-makers based on personalized quantifiers

Since the subjective expectation of DMs is an important factor to extract the attitudinal characteristics of DMs, we need to deduce the subjective expectation values of DMs on each criterion based on the alternative ranks. Firstly, we can compute the relative importance \( {I}_i \) of each alternative according to the difference between the subjective rank \( {r}_{SUB}^{K_{\varepsilon }}\left({A}_i\right) \) in the cluster \( {K}_{\varepsilon } \) and the objective rank \( {r}_{OB}\left({A}_i\right) \) by

In Eq. (11), if \( {r}_{SUB}^{K_{\varepsilon }}\left({A}_i\right)-{r}_{OB}\left({A}_i\right)>0 \), the DM underestimates alternative Ai, and the relative importance of Ai is low; if \( {r}_{SUB}^{K_{\varepsilon }}\left({A}_i\right)-{r}_{OB}\left({A}_i\right)<0 \), the DM overestimates alternative Ai, and the relative importance of Ai is high.

To take the relative importance of alternatives as their weights, we normalize these values by a linear normalization as:

Afterwards, we aggregate the individual decision matrices related to the DMs in a cluster by Eq. (2) and transform these probabilistic linguistic decision matrices into crisp matrices by Eq. (1). For these k subgroup decision matrices, we combine them with the relative importance of alternatives calculated in Eq. (12). Then, the subjective expectation value of each criterion corresponding to the DMs in the cluster Kε can be obtained by

where \( {x}_{ij}^{K_{\varepsilon }} \) represents the evaluation value of alternative \( {A}_i \) under criterion \( {C}_j \) in the subgroup decision matrix associated with the cluster \( {K}_{\varepsilon } \).

Next, by Eq. (3), we can generate the personalized quantifier with cubic spline interpolation related to the DMs in a cluster. Furthermore, the attitudinal characteristics of the DMs in the cluster Kε can be captured according to Eq. (4). Generally, the attitudinal characteristics of DMs in a cluster can be classified into five categories: positive, neutral to positive, neutral, neutral to negative, and negative. After data simulation, we find that the attitudinal characteristic value, AC, is generally stable within the interval [0.3, 0.7]. Suppose that AC is uniformly distributed in the interval. Under this premise, if AC falls in the interval [0.3, 0.38), the attitudinal characteristic is regarded as negative; if AC falls in the interval [0.38, 0.46), the attitudinal characteristic is neutral to negative; if AC lies in the interval [0.46, 0.54), the attitudinal characteristic is neutral; if AC lies in the interval [0.54, 0.62), the attitudinal characteristic is neutral to positive; if AC lies in the interval [0.62, 0.7], the attitudinal characteristic is positive.
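The five-way classification above translates directly into a lookup over the interval boundaries. The Python sketch below uses the boundaries stated in the text; the function name `classify_ac` is hypothetical, and treating values below 0.3 as negative and values above 0.7 as positive is an added assumption, since the text only states that AC is generally stable within [0.3, 0.7].

```python
def classify_ac(ac):
    """Map an attitudinal characteristic value to one of five categories.

    Boundaries follow the intervals [0.3, 0.38), [0.38, 0.46), [0.46, 0.54),
    [0.54, 0.62) and [0.62, 0.7]. ASSUMPTION: values outside [0.3, 0.7] fall
    into the nearest extreme category.
    """
    upper_bounds = [
        (0.38, "negative"),
        (0.46, "neutral to negative"),
        (0.54, "neutral"),
        (0.62, "neutral to positive"),
    ]
    for upper, label in upper_bounds:
        if ac < upper:
            return label
    return "positive"


labels = [classify_ac(v) for v in (0.35, 0.50, 0.65)]
```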

For the clusters with different attitudinal characteristics, we can assign different weights to aggregate the decision results of these clusters and get a final decision. In this regard, we can calculate the aggregated value of each cluster by the ordered weighted averaging (OWA) operator (Yager 1996) given as Eq. (14), in which the weight of each criterion is derived from the personalized quantifier.

where \( {\hat{x}}_{ij}^{\left(\varepsilon \right)} \) denotes the jth largest value of all criteria.

Next, we further aggregate the collected values of different clusters by the weighted average (WA) operator given as Eq. (15), in which the weights of clusters are determined according to risk preferences. Risk-seekers can assign higher weights to the clusters with negative attitudinal characteristics, while risk-averters can assign lower weights to the clusters with positive attitudinal characteristics.

where ϖε denotes the weight of cluster Kε, for ε = 1, 2, ⋯, k and \( {\sum}_{\varepsilon =1}^k{\varpi}_{\varepsilon }=1 \).
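The two aggregation steps can be sketched in Python as follows. Eqs. (14) and (15) are not reproduced in this text, so the sketch relies on the standard quantifier-guided construction of OWA weights, in which the jth weight is Q(j/n) − Q((j − 1)/n) for n criteria; whether the paper uses exactly this form is an assumption. The function names are hypothetical, and a simple power function stands in for the personalized quantifier.

```python
def owa(values, quantifier):
    """Quantifier-guided OWA aggregation of one alternative's criterion values.

    ASSUMPTION: weights follow w_j = Q(j/n) - Q((j-1)/n) and are applied to
    the values sorted in descending order.
    """
    n = len(values)
    ordered = sorted(values, reverse=True)
    weights = [quantifier(j / n) - quantifier((j - 1) / n) for j in range(1, n + 1)]
    return sum(w * v for w, v in zip(weights, ordered))


def weighted_average(cluster_values, cluster_weights):
    """WA aggregation across clusters; the weights must sum to one."""
    if abs(sum(cluster_weights) - 1.0) > 1e-9:
        raise ValueError("cluster weights must sum to 1")
    return sum(w * v for w, v in zip(cluster_weights, cluster_values))


# Stand-in quantifier Q(x) = x^2, which shifts weight towards the smaller values.
cluster_value = owa([0.2, 0.8], lambda x: x * x)
overall = weighted_average([cluster_value, 0.65], [0.5, 0.5])
```

With the identity quantifier Q(x) = x all weights equal 1/n and the OWA value reduces to the arithmetic mean, which corresponds to a neutral attitude.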

It is assumed that the alternative ranks and the alternative evaluation information provided by the DMs are consistent. The attitudinal characteristics of other DMs who provide information about the same group decision-making problem can then be captured directly: we only need to find the clustering center nearest to the alternative ranks provided by these DMs, and the attitudinal characteristics of the DMs previously clustered to that center are taken as the attitudinal characteristics of these DMs.

Based on the above analysis, the procedure to capture the attitudinal characteristics of DMs for LSGDM problems can be summarized as Algorithm 1, and the flow chart of this algorithm is shown as Fig. 1.

Algorithm 1

4 Case study: Selecting policy recommendations for improving supply chain resilience under the COVID-19 outbreak

The global spread of COVID-19 has made it a global pandemic, as announced by the World Health Organization on March 11, 2020 (Ivanov 2020). In today’s globalized production environment, the supply chains of many companies are particularly vulnerable to the impact of the epidemic outbreak (Ivanov 2020). As reported in Fortune (2020), the supply chains of 94% of Fortune 1000 companies have been disrupted by the coronavirus. With the severity of the COVID-19 pandemic becoming apparent, governments and organizations need to act quickly in a changing context (Sarkis et al. 2020). In general, the impact of the epidemic outbreak on the global supply chain is mainly reflected in the following aspects: 1) the global COVID-19 epidemic reduced demand along the industrial value chain and made supply uncertain; 2) upstream factories stopped or reduced production, which disrupted the supply and production of downstream firms; 3) city lockdowns interrupted and delayed regional and international logistics, further leading to mass shortages or backlogs (https://www.zhihu.com/question/375078869/answer/1150204862). The theoretical basis for the impact of COVID-19 on the supply chain is shown in Fig. 2 (Hong 2020).

The impact of the COVID-19 outbreak on the global supply chain shows that, in the face of sudden force majeure events, the temporary contraction, deviation or rupture of local links can unbalance the whole supply chain system built on a highly complex vertical and horizontal division of industrial labor. In this sense, recommendations on enhancing supply chain resilience are crucial for maintaining the resilience, flexibility and agility of a supply chain system. For this problem, van Hoek (2020) put forward suggestions to enhance supply chain resilience in various industries; Hobbs (2020) and Govindan et al. (2020) increased the resilience of the food supply chain and the medical supply chain, respectively, by several methods. These recommendations are aimed at specific industries and are not widely applicable.

Hong (2020) presented four policy recommendations to improve the integration capability of a supply chain for enhancing supply chain resilience under the COVID-19 epidemic outbreak from the perspective of the government. Since these four policy recommendations are considered from multiple perspectives and have wide applicability, we chose them as alternatives. The specific contents of the four policy recommendations are as follows:

- A1: Improve the capacity of resource link and relation network construction of various market entities, and flexibly adjust the supply chain network according to the changes of constraints.

- A2: By optimizing the business environment and trade liberalization, we provide relaxed market and policy conditions for the integration of manufacturing supply chain, and encourage all kinds of manufacturing enterprises to improve the depth, breadth and level of embedding in the global industry chain, value chain and supply chain through technological innovation, product innovation, process innovation, model innovation, management innovation and channel innovation.

- A3: Strengthen the construction of various public service platforms, optimize the carrier support system, focus on solving the basic technology and common technology bottlenecks restricting the development of the manufacturing industry, actively guide the core enterprises of the supply chain to “empower” the upstream and downstream small and medium-sized enterprises, and effectively reduce the network transaction cost of the supply chain.

- A4: Design and optimize the governance rules, standards and systems in line with the development characteristics of the manufacturing industry, strengthen the governance of the supply chain, and provide an important guarantee for improving the integration capacity of the supply chain.

Due to limited human, material and financial resources, some countries or regions may be able to implement only the single best recommendation. Hence, we need to determine which of these four recommendations is the best in an LSGDM setting.

Assume that three criteria are set to evaluate these recommendations, namely, feasibility (C1), implementation benefit (C2) and implementation cost (C3). Twenty DMs are invited: d1, d2, d3, d4, d5, d6, d7, d8, d9 and d10 are relevant government staff, and d11, d12, d13, d14, d15, d16, d17, d18, d19 and d20 are experts in the supply chain. According to the linguistic term set {s0 : very good, s1 : good, s2 : slightly good, s3 : medium, s4 : slightly poor, s5 : poor, s6 : very poor} given in advance, the DMs provide the probabilistic linguistic evaluation information of the four policy recommendations under the three criteria, and the probabilistic linguistic decision matrices associated with the DMs are as follows:

The ranks of the recommendations provided by the DMs are as follows:

d1, d7, and d12: {3, 1, 2, 4};

d2: {1, 2, 4, 3};

d3 and d15: {1, 3, 2, 4};

d4: {2, 1, 3, 4};

d5: {4, 1, 2, 3};

d6: {1, 2, 3, 4};

d8: {2, 4, 1, 3};

d9: {2, 3, 1, 4};

d10: {1, 4, 2, 3};

d11: {2, 3, 4, 1};

d13: {4, 2, 3, 1};

d14: {2, 4, 3, 1};

d16: {4, 3, 1, 2};

d17 and d18: {1, 3, 4, 2};

d19: {3, 4, 2, 1};

d20: {4, 1, 3, 2}.

Calculate the distances between the ranks of these recommendations by Eq. (5), and the results are listed in Table 1.

Set the distance threshold ϕ as 0.7. We can determine three clustering centers by Eqs. (6)–(8), which are d3, d13 and d1. Then, we assign the ranks of recommendations provided by the remaining DMs to the cluster with the nearest clustering center, which forms three clusters of DMs: K1 = {d2, d3, d6, d8, d9, d10, d15, d17, d18}; K2 = {d11, d13, d14, d16, d19, d20}; K3 = {d1, d4, d5, d7, d12}. After aggregating the ranks of recommendations provided by the DMs in each cluster, the subjective ranks of recommendations with respect to each cluster can be obtained as K1 : {1, 3, 2, 4}; K2 : {4, 3, 2, 1}; K3 : {3, 1, 2, 4}.
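The rank-sum aggregation within a cluster can be reproduced with a few lines of Python. The sketch below sums the ranks each recommendation receives in a cluster and re-ranks the recommendations by ascending rank sum, using the ranks listed above for cluster K3. The function name `aggregate_ranks` is hypothetical, and breaking ties by the index of the recommendation is an assumption, since the text does not state a tie rule.

```python
def aggregate_ranks(rank_lists):
    """Aggregate individual ranks into one subjective rank per alternative.

    rank_lists: one rank vector per DM in the cluster.
    ASSUMPTION: ties in the rank sums are broken by alternative index.
    """
    m = len(rank_lists[0])
    sums = [sum(r[i] for r in rank_lists) for i in range(m)]
    order = sorted(range(m), key=lambda i: sums[i])  # stable sort keeps index order on ties
    aggregated = [0] * m
    for position, i in enumerate(order, start=1):
        aggregated[i] = position
    return aggregated


# Cluster K3 = {d1, d4, d5, d7, d12}, with the ranks given in the case study.
k3 = [
    [3, 1, 2, 4],  # d1
    [2, 1, 3, 4],  # d4
    [4, 1, 2, 3],  # d5
    [3, 1, 2, 4],  # d7
    [3, 1, 2, 4],  # d12
]
print(aggregate_ranks(k3))  # rank sums are 15, 5, 11, 19, giving [3, 1, 2, 4]
```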

Next, aggregate the probabilistic linguistic decision matrices provided by these twenty DMs based on Eq. (2). A probabilistic linguistic group decision matrix can be constructed as G.

Measure the dominance flow between two recommendations under each criterion by Eq. (9), and normalize the dominance flow by Eq. (10). The calculation results are displayed in Tables 2 and 3, respectively. Based on Eqs. (A.1)-(A.3), calculate the dominance score of each recommendation and obtain the objective ranks of recommendations as shown in Table 4 (Here we assume that each criterion has the same weight).

Afterwards, aggregate the probabilistic linguistic decision matrices related to the DMs in the same cluster by Eq. (2). Then, three collected decision matrices can be formed as G′, G″ and G‴, respectively.

Transform the probabilistic linguistic decision matrices into crisp matrices by Eq. (1):

According to Eqs. (11) and (12), the relative importance of the recommendations related to the three clusters are calculated, and the results are shown in Table 5.

Then, by Eq. (13), the subjective expectation values of the criteria related to clusters K1, K2 and K3 are determined as: \( {\hat{x}}_1^{K_1} \)= 0.767; \( {\hat{x}}_2^{K_1} \)= 0.674; \( {\hat{x}}_3^{K_1} \)= 0.637; \( {\hat{x}}_1^{K_2} \)= 0.706; \( {\hat{x}}_2^{K_2} \)= 0.738; \( {\hat{x}}_3^{K_2} \)= 0.595; \( {\hat{x}}_1^{K_3} \)= 0.706; \( {\hat{x}}_2^{K_3} \)= 0.660; \( {\hat{x}}_3^{K_3} \)= 0.578. Based on the subjective expectation values, we can respectively generate the personalized quantifiers with cubic spline interpolation related to the DMs in clusters K1, K2 and K3 by Eq. (3):

The function images of these personalized quantifiers are shown in Fig. 3.

Furthermore, the attitudinal characteristic values of the DMs in clusters K1, K2 and K3 can be respectively captured according to Eq. (4), shown as: AC(K1)= 0.511; AC(K2)= 0.588; AC(K3)= 0.429. Hence, we can determine that the attitudinal characteristic of the DMs in cluster K1 is neutral, the attitudinal characteristic of the DMs in cluster K2 is neutral to positive and the attitudinal characteristic of the DMs in cluster K3 is neutral to negative. Based on Eq. (14), the OWA aggregation values of clusters K1, K2 and K3 are calculated as:

Suppose that the weights of the three clusters are 0.3, 0.3 and 0.4. Then, the final aggregated values of the recommendations can be obtained by Eq. (15) as: FWA(A1) = 0.693, FWA(A2) = 0.653, FWA(A3) = 0.711, FWA(A4) = 0.626. Therefore, the final ranking of the recommendations is A3 ≻ A1 ≻ A2 ≻ A4, and the optimal policy recommendation is A3.

5 Discussions

Based on the data in Section 4, we discuss the LSGDM process by sensitivity analysis and comparative analysis in terms of capturing the attitudinal characteristics of DMs. Practical management implications are also derived from the case study.

5.1 Sensitivity analysis

In the process of clustering, we set the distance threshold ϕ as 0.7, and the DMs were divided into three clusters. This section discusses the clustering of the DMs and the attitudinal characteristics of the DMs in each cluster when the distance threshold ϕ is another value.

If we set ϕ as 0.6, we can determine four clustering centers, which are d3, d13, d1 and d17; if we set ϕ as 0.5, we can determine six clustering centers, which are d3, d13, d1, d17, d19 and d8; if we set ϕ as 0.4, we can determine seven clustering centers, which are d3, d13, d1, d17, d19, d8 and d16. Since 0.316 is the shortest non-zero distance between the ranks of recommendations, the minimum distance threshold can be set as 0.4. The clusters under different distance thresholds are shown in Fig. 4. When ϕ = 0.6, the attitudinal characteristic values of the DMs in the clusters are AC(K1) = 0.541, AC(K2) = 0.528, AC(K3) = 0.548, AC(K4) = 0.480, and the corresponding attitudinal characteristics of the DMs in the clusters are neutral to positive, neutral, neutral to positive, and neutral, respectively. When ϕ = 0.5, the attitudinal characteristic values of the DMs in the clusters are AC(K1) = 0.552, AC(K2) = 0.640, AC(K3) = 0.548, AC(K4) = 0.501, AC(K5) = 0.503, AC(K6) = 0.475, and the corresponding attitudinal characteristics of the DMs in the clusters are neutral, positive, neutral to positive, neutral, neutral, and neutral, respectively. When ϕ = 0.4, the attitudinal characteristic values of the DMs in the clusters are AC(K1) = 0.552, AC(K2) = 0.640, AC(K3) = 0.548, AC(K4) = 0.501, AC(K5) = 0.524, AC(K6) = 0.475, AC(K7) = 0.482, and the corresponding attitudinal characteristics of the DMs in the clusters are neutral, positive, neutral to positive, neutral, neutral, neutral, and neutral, respectively.

To highlight the influence of different distance thresholds on the final decision results, each cluster is assigned the same weight here. In this case, when ϕ = 0.7, the final aggregated values of the recommendations are FWA(A1) = 0.698, FWA(A2) = 0.656, FWA(A3) = 0.709, and FWA(A4) = 0.635, respectively. When ϕ = 0.6, the final aggregated values of the recommendations are FWA(A1) = 0.706, FWA(A2) = 0.659, FWA(A3) = 0.713, and FWA(A4) = 0.651, respectively. When ϕ = 0.5, the final aggregated values of the recommendations are FWA(A1) = 0.715, FWA(A2) = 0.667, FWA(A3) = 0.679, and FWA(A4) = 0.663, respectively. When ϕ = 0.4, the final aggregated values of the recommendations are FWA(A1) = 0.707, FWA(A2) = 0.658, FWA(A3) = 0.693, and FWA(A4) = 0.669, respectively. Then, the final ranks of the recommendations under different distance thresholds can be determined, which are shown in Fig. 5.

We can see from Fig. 5 that changes in the distance threshold affect the ranking results of the recommendations. When ϕ = 0.4 and ϕ = 0.5, the optimal policy recommendation is A1, while when ϕ = 0.6 and ϕ = 0.7, the optimal policy recommendation is A3. This may be because, as the distance threshold decreases, a few DMs are split off into clusters of their own, which increases the impact of the information provided by those DMs on the results. Besides, we find that as the distance threshold decreases, the ranking result becomes unstable, which indicates that the robustness of a small threshold is low. Furthermore, if there are hundreds of DMs, a small threshold will greatly increase the amount of calculation. Since the ranking is relatively stable when the distance threshold takes a middle value in this case, the median distance threshold is recommended.
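The rankings discussed here can be re-derived from the final aggregated values reported in this section. The following Python sketch simply sorts those reported values for each threshold; the dictionary layout and the function name are illustrative choices, not part of the proposed algorithm.

```python
# Final aggregated values FWA(A1..A4) reported for each distance threshold
# (equal cluster weights), copied from the text of Section 5.1.
FWA = {
    0.7: (0.698, 0.656, 0.709, 0.635),
    0.6: (0.706, 0.659, 0.713, 0.651),
    0.5: (0.715, 0.667, 0.679, 0.663),
    0.4: (0.707, 0.658, 0.693, 0.669),
}


def ranking(values):
    """Return recommendation labels sorted by descending aggregated value."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    return [f"A{i + 1}" for i in order]


rankings = {phi: ranking(values) for phi, values in FWA.items()}

for phi, order in rankings.items():
    print(f"phi = {phi}: " + " > ".join(order))
```

With these values, A3 ranks first for ϕ = 0.7 and ϕ = 0.6, A1 ranks first for ϕ = 0.5 and ϕ = 0.4, and the order of A2 and A4 also flips at ϕ = 0.4.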

5.2 Comparative analysis

In the process of determining the subjective expectation values of criteria, we calculate the difference between the objective rank and subjective rank of each recommendation. In this regard, we use the cubic spline interpolation to characterize personalized quantifiers. Guo (2019) determined the subjective expectation values of criteria based on the relative closeness coefficient of each recommendation, and used the piecewise linear interpolation to characterize personalized quantifiers. This section compares our method with Guo's (2019) method in terms of determining the subjective expectation values of criteria.

In Guo's (2019) method, the TOPSIS method was used to calculate the relative closeness coefficient of each recommendation. With the data in Section 4, the relative closeness coefficients of the recommendations are calculated as 0.296, 0.239, 0.278, and 0.187, respectively. Then, these values are converted into the weights of the recommendations by linear normalization. The aggregated subjective ranks of recommendations, obtained by aggregating the subjective ranks of recommendations provided by the 20 DMs, are {1, 2, 3, 4}. Higher weights are assigned to the recommendations with higher ranks in the subjective ranks of recommendations provided by the DMs. Based on the WA operator, the subjective expectation values of each cluster are computed as: \( {\hat{x}}_1^{\ast {K}_1}=0.773 \), \( {\hat{x}}_2^{\ast {K}_1}=0.687 \), \( {\hat{x}}_3^{\ast {K}_1}=0.618 \), \( {\hat{x}}_1^{\ast {K}_2}=0.701 \), \( {\hat{x}}_2^{\ast {K}_2}=0.704 \), \( {\hat{x}}_3^{\ast {K}_2}=0.587 \), \( {\hat{x}}_1^{\ast {K}_3}=0.714 \), \( {\hat{x}}_2^{\ast {K}_3}=0.691 \), \( {\hat{x}}_3^{\ast {K}_3}=0.555 \). Next, we can generate the personalized quantifier with piecewise linear interpolation related to the DMs in the clusters K1, K2 and K3 by \( {Q}^{\ast }(x)=\left( mx-i\right){\sigma}_i+{\sum}_{z=1}^i{\sigma}_z \) ((i − 1)/m ≤ x ≤ i/m, i = 1, 2, ⋯, m) shown as:

The function graphics of these personalized quantifiers are shown in Fig. 6.

Since \( A{C}^{\ast }={\int}_0^1{Q}^{\ast }(x) dx=1-\left(1/m\right){\sum}_{i=1}^mi{\sigma}_i+1/(2m) \), we can calculate the attitudinal characteristic values of the DMs in the clusters as: AC∗(K1) = 0.525, AC∗(K2) = 0.485, AC∗(K3) = 0.452. We can determine that the attitudinal characteristic of the DMs in cluster K1 is neutral, the attitudinal characteristic of the DMs in cluster K2 is neutral, and the attitudinal characteristic of the DMs in cluster K3 is neutral to negative. By Eq. (15), the final aggregated values of the recommendations are: \( {F}_{WA}^{\ast}\left({A}_1\right) \) = 0.689, \( {F}_{WA}^{\ast}\left({A}_2\right) \) = 0.649, \( {F}_{WA}^{\ast}\left({A}_3\right) \) = 0.708, and \( {F}_{WA}^{\ast}\left({A}_4\right) \) = 0.623. The final ranking of the recommendations derived by Guo's (2019) method is A3 ≻ A1 ≻ A2 ≻ A4.
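As an illustration of the piecewise linear quantifier Q∗(x) and the closed-form expression for AC∗ used in this comparison, the following Python sketch evaluates both and checks the closed form against a direct integration. It assumes that the σ_i are non-negative weights summing to 1; the example weights are hypothetical and are not taken from the case study.

```python
from math import ceil


def quantifier(x, sigma):
    """Piecewise linear Q*(x) = (m*x - i) * sigma_i + sum_{z<=i} sigma_z
    on the interval (i - 1)/m <= x <= i/m."""
    m = len(sigma)
    i = min(m, max(1, ceil(m * x)))  # index of the interval containing x
    return (m * x - i) * sigma[i - 1] + sum(sigma[:i])


def attitudinal_character(sigma):
    """Closed form AC* = 1 - (1/m) * sum_i i * sigma_i + 1/(2m)."""
    m = len(sigma)
    return 1 - sum(i * s for i, s in enumerate(sigma, start=1)) / m + 1 / (2 * m)


def trapezoid_area(sigma):
    """Integrate Q* over [0, 1]; exact here because Q* is linear between knots."""
    m = len(sigma)
    knots = [quantifier(k / m, sigma) for k in range(m + 1)]
    return sum((knots[k - 1] + knots[k]) / 2 for k in range(1, m + 1)) / m


sigma_example = [0.5, 0.3, 0.2]  # hypothetical weights (assumed to sum to 1)
print(attitudinal_character(sigma_example), trapezoid_area(sigma_example))
```

With equal weights σ_i = 1/m the closed form gives AC∗ = 0.5, and placing more weight on the early σ_i pushes AC∗ above 0.5.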

Through the above analysis, we find that the ranking result derived by our method is the same as that derived by Guo's (2019) method, which supports the reliability of our method. However, the attitudinal characteristics of the DMs in cluster K2 deduced by the two methods are different. This may result from the fact that the two methods use different interpolation functions to generate the personalized quantifiers. Comparing Figs. 3 and 6, it is not difficult to see that the personalized quantifiers depicted by the cubic spline interpolation are smoother and more interpretable than those depicted by the piecewise linear interpolation. This demonstrates the advantages of our method in terms of geometric representation.

5.3 Practical management implications

According to the research results of this study, we attain practical management implications from three aspects:

First, in the case that multifarious recommendations already exist, the selection of recommendations may be more important than the presentation of recommendations. In the face of the impact of COVID-19 epidemic on supply chains, many studies (Hobbs 2020; Govindan et al. 2020; van Hoek 2020; Hong 2020) focused on putting forward a variety of response recommendations. However, for the government or an organization, it is unrealistic to implement all these recommendations, and selecting the optimal recommendation for implementation or ranking these recommendations to implement them in turn can effectively enhance the supply chain resilience under the COVID-19 epidemic.

Second, in a decision-making process, the number of subgroups affects the ranking results. According to the characteristics of subgroups, different weights are assigned to these subgroups to obtain the ranking results. This is more reasonable than obtaining the ranking results by directly aggregating the information provided by DMs. However, different numbers of subgroups may result in different ranking results. To solve this problem, we can obtain relatively stable ranking results by controlling the number of subgroups through the median distance threshold, which is simpler than iterative methods.

Third, the policy recommendation selection should consider the attitudinal characteristics of DMs. DMs with an optimistic attitude tend to make risky evaluations of recommendations, while DMs with a negative attitude tend to make conservative evaluations of recommendations. The results based on the evaluation information of these two kinds of decision-makers may be opposite. In this regard, capturing the attitudinal characteristics of DMs, so that the evaluations made by DMs with different attitudinal characteristics are taken into account, is conducive to obtaining reasonable results.

6 Conclusions

This study introduced an algorithm that integrates the PLTS, the ordinal k-means clustering algorithm, the GLDS method, and the personalized quantifier with cubic spline interpolation to capture the attitudinal characteristics of DMs in an LSGDM process. The PLTS was used as a tool to represent the evaluation information of DMs on alternatives. The ordinal k-means clustering algorithm was used to form subgroups of DMs according to the ranks of alternatives associated with the DMs. The GLDS method was implemented to determine the objective ranks of alternatives. The personalized quantifier with cubic spline interpolation was employed to capture the attitudinal characteristics of the DMs in each subgroup. We applied the proposed algorithm to select a suitable policy recommendation to improve the integration capability of the supply chain under the COVID-19 outbreak. The ranking results of the proposed algorithm can be used not only to select the optimal recommendation to carry out, but also to prioritize recommendations so that they are implemented in an orderly manner, which is more efficient than implementing several suggestions regardless of priority. Through discussions, we found that in the ordinal k-means clustering algorithm, the ranking results may become unstable as the distance threshold decreases, and the median distance threshold is recommended in this regard. The DMs’ attitudinal weights for alternatives were determined based on the differences between subjective alternative ranks and objective alternative ranks, which is more reliable than relying only on subjective alternative ranks.

In this study, to simplify the procedure of the proposed algorithm, the consensus of the DMs in each subgroup was not considered. In future research, we will capture the attitudinal characteristics of DMs in subgroups based on the consensus reaching process. Besides, in the proposed algorithm, DMs were required to provide complete alternative ranking information, but in practice, some DMs may only provide part of the alternative ranking information, which limits the practical application of the proposed algorithm. To address this limitation, we will further study the attitudinal characteristics of DMs based on partial rankings of alternatives.

References

Barbieri P, Boffelli A, Elia S, Fratocchi L, Kalchschmidt M, Samson D (2020) What can we learn about reshoring after Covid-19? Oper Manag Res 13:131–136. https://doi.org/10.1007/s12063-020-00160-1

Dunn JC (1973) A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J Cybern 3(3):32–57. https://doi.org/10.1080/01969727308546046

Fang R, Liao HC, Yang JB, Xu DL (2019) Generalised probabilistic linguistic evidential reasoning approach for multi-criteria decision-making under uncertainty. J Oper Res Soc:1–15. https://doi.org/10.1080/01605682.2019.1654415

Fortune (2020) https://fortune.com/2020/02/21/fortune-1000-coronavirus-china-supply-chain-impact/. accessed March 2020

Govindan K, Mina H, Alavi B (2020) A decision support system for demand management in healthcare supply chains considering the epidemic outbreaks: a case study of coronavirus disease 2019 (COVID-19). Transport Res E-Log Transp Rev 138:101967. https://doi.org/10.1016/j.tre.2020.101967

Guo KH (2014) Quantifier induced by subjective expected value of sample information. IEEE Trans Cybern 44(10):1784–1794. https://doi.org/10.1109/TCYB.2013.2295316

Guo KH (2016) Quantifier induced by subjective expected value of sample information with Bernstein polynomials. Eur J Oper Res 254:226–235. https://doi.org/10.1016/j.ejor.2016.03.015

Guo KH (2019) Expected value from a ranking of alternatives for personalized quantifier. IEEE Intell Syst 34(6):24–33. https://doi.org/10.1109/MIS.2019.2949266

Guo KH, Xu H (2018) Personalized quantifier by Bernstein polynomials combined with interpolation spline. Int J Intell Syst 33:1507–1533. https://doi.org/10.1002/int.21991

Hobbs JE (2020) Food supply chains during the COVID-19 pandemic. Canadian Journal of Agricultural Economics/Revue Canadienne D’agroeconomie 68:171–176. https://doi.org/10.1111/cjag.12237

Hong W (2020) Analysis of the impact of epidemic impact on the supply chain of manufacturing industry and the policy orientation in “post epidemic era”. Southwest Finance 6:3–12. http://kns.cnki.net/kcms/detail/51.1587.F.20200422.1057.002.Html

Ivanov D (2020) Predicting the impact of epidemic outbreaks on the global supply chains: a simulation-based analysis on the example of coronavirus (COVID-19 / SARS-CoV-2) case. Transp Res E 136:101922. https://doi.org/10.1016/j.tre.2020.101922

Ivanov D, Dolgui A (2020) Viability of intertwined supply networks: extending the supply chain resilience angles towards survivability. A position paper motivated by COVID-19 outbreak. Int J Prod Res 58(10):2904–2915. https://doi.org/10.1080/00207543.2020.1750727

Khan SAR, Sharif A, Hêriş G, Kumar A (2019) A green ideology in Asian emerging economies: from environmental policy and sustainable development. Sustain Dev 27(2):1063–1075. https://doi.org/10.1002/sd.1958

Liang GS, Chou TY, Han TC (2005) Cluster analysis based on fuzzy equivalence relation. Eur J Oper Res 166(1):160–171. https://doi.org/10.1016/j.ejor.2004.03.018

Liang XD, Wu XL, Liao HC (2020) A gained and lost dominance score II method for modelling group uncertainty: case study of site selection of electric vehicle charging stations. J Clean Prod 262:121239. https://doi.org/10.1016/j.jclepro.2020.121239

Liao HC, Yu JY, Wu XL, Al-Barakati A, Altalhi A, Herrera F (2019) Life satisfaction evaluation in earthquake-hit area by the probabilistic linguistic GLDS method integrated with the logarithm-multiplicative analytic hierarchy process. Int J Disaster Risk Reduct 38:101190. https://doi.org/10.1016/j.ijdrr.2019.101190

Liao ZQ, Liao HC, Tang M, Al-Barakati A, Llopis-Albert C (2020a) A Choquet integral-based hesitant fuzzy gained and lost dominance score method for multi-criteria group decision making considering the risk preferences of experts: case study of higher business education evaluation. Inf Fusion 62:121–133. https://doi.org/10.1016/j.inffus.2020.05.003

Liao HC, Mi XM, Xu ZS (2020b) A survey of decision-making methods with probabilistic linguistic information: Bibliometrics, preliminaries, methodologies, applications and future directions. Fuzzy Optim Decis Making 19(1):81–134. https://doi.org/10.1007/s10700-019-09309-5

Liao HC, Zhang HR, Zhang C, Wu XL, Mardani A, Al-Barakat A (2020c) A q-rung orthopair fuzzy GLDS method for investment evaluation of BE angel capital in China. Technol Econ Dev Econ 26(1):103–134. https://doi.org/10.3846/tede.2020.11260

Liu X, Xu YJ, Herrera F (2019) Consensus model for large-scale group decision making based on fuzzy preference relation with self-confidence: detecting and managing overconfidence behaviors. Inf Fusion 52:245–256. https://doi.org/10.1016/j.inffus.2019.03.001

Pang Q, Wang H, Xu ZS (2016) Probabilistic linguistic term sets in multi-attribute group decision making. Inf Sci 369:128–143. https://doi.org/10.1016/j.ins.2016.06.021

Sarkis J, Dewick P, Hofstetter JS, Schröder P (2020) Overcoming the arrogance of ignorance: supply-chain lessons from COVID-19 for climate shocks. One Earth 3(1):9–12. https://doi.org/10.1016/j.oneear.2020.06.017

Sarrazin R, De Smet Y, Rosenfeld J (2018) An extension of PROMETHEE to interval clustering. Omega 80:12–21. https://doi.org/10.1016/j.omega.2017.09.001

Shi ZJ, Wang XQ, Palomares I, Guo SJ, Ding RX (2018) A novel consensus model for multi-attribute large-scale group decision making based on comprehensive behavior classification and adaptive weight updating. Knowl-Based Syst 158:196–208. https://doi.org/10.1016/j.knosys.2018.06.002

Tang M, Liao HC (2020) From conventional group decision making to large-scale group decision making: what are the challenges and how to meet them in big data era? A state-of-the-art survey. Omega https://doi.org/10.1016/j.omega.2019.102141

Tang M, Zhou XY, Liao HC, Xu JP, Fujita H, Herrera F (2019) Ordinal consensus measure with objective threshold for heterogeneous large-scale group decision making. Knowl-Based Syst 180:62–74. https://doi.org/10.1016/j.knosys.2019.05.019

van Hoek R (2020) Research opportunities for a more resilient post-COVID-19 supply chain - closing the gap between research findings and industry practice. Int J Oper Prod Manag 40:341–355. https://doi.org/10.1108/IJOPM-03-2020-0165

Wen Z, Liao HC (2020) Information representation of blockchain technology: risk evaluation of investment by personalized quantifier with cubic spline interpolation. Information Processing and Management, Technical Report

Wu XL, Liao HC (2019) A consensus-based probabilistic linguistic gained and lost dominance score method. Eur J Oper Res 272:1017–1027. https://doi.org/10.1016/j.ejor.2018.07.044

Wu ZB, Xu JP (2018) A consensus model for large-scale group decision making with hesitant fuzzy information and changeable clusters. Inf Fusion 41:217–231. https://doi.org/10.1016/j.inffus.2017.09.0111

Wu XL, Liao HC, Xu ZS, Hafezalkotob A, Herrera F (2018) Probabilistic linguistic MULTIMOORA: a multicriteria decision making method based on the probabilistic linguistic expectation function and the improved Borda rule. IEEE Trans Fuzzy Syst 26(6):3688–3702. https://doi.org/10.1109/tfuzz.2018.2843330

Xu XH, Du ZJ, Chen XH (2015) Consensus model for multi-criteria large-group emergency decision making considering non-cooperative behaviors and minority opinions. Decis Support Syst 79:150–160. https://doi.org/10.1016/j.dss.2015.08.009

Yager RR (1996) Quantifier guided aggregation using OWA operators. Int J Intell Syst 11(1):49–73. https://doi.org/10.0884-8173/010049-25

Zhou JL, Chen JA (2020) A consensus model to manage minority opinions and noncooperative behaviors in large-scale GDM with double hierarchy linguistic preference relations. IEEE Trans Fuzzy Syst:1. https://doi.org/10.1109/TFUZZ.2020.2984188

Acknowledgements

The work was supported by the National Natural Science Foundation of China under Grant 71771156, 71971145.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

For a decision problem with m alternatives and n criteria, the implementation steps of the GLDS method are as follows:

Step 1. Construct a collective decision matrix based on the evaluation information provided by DMs.

Step 2. Calculate the dominance flow, Hj(i, z), between alternatives i and z according to the difference value between these two alternatives under each criterion (if the difference value is negative, Hj(i, z) = 0). Then, normalize the dominance flow by the vector normalization and get the normalized value \( {\overline{H}}_j\left(i,\kern0.33em z\right) \).

Step 3. Compute the net gained dominance score GDi and the lost dominance score LDi between alternatives i and z by

where wj is the weight of the jth criterion and \( {\sum}_{j=1}^n{w}_j=1 \).

Step 4. Obtain the dominance score CSi by

where r(GDi) and r(LDi) are the ranks of GDi and LDi, respectively. Then, determine the alternative ranking in descending order of CSi for i = 1, 2, ⋯, m.
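Step 2 above can be sketched in Python as follows. The sketch works on a crisp decision matrix and treats every criterion as benefit-type, which is a simplifying assumption. The vector normalization is also assumed to divide each flow by the square root of the sum of squared flows over all pairs of alternatives under the same criterion. The function name and the toy scores are hypothetical.

```python
from math import sqrt


def dominance_flows(scores):
    """Return normalized dominance flows, indexed as flows[j][i][z].

    scores[i][j] is the crisp value of alternative i under criterion j
    (benefit-type criteria assumed). A negative difference gives a flow of 0.
    """
    m, n = len(scores), len(scores[0])
    flows = []
    for j in range(n):
        # Raw dominance flow H_j(i, z): positive part of the difference.
        h = [[max(scores[i][j] - scores[z][j], 0.0) for z in range(m)]
             for i in range(m)]
        # ASSUMED vector normalization over all pairs (i, z) for criterion j.
        norm = sqrt(sum(v * v for row in h for v in row))
        flows.append([[v / norm if norm else 0.0 for v in row] for row in h])
    return flows


# Hypothetical crisp scores: 3 alternatives, 2 criteria.
toy_scores = [[0.7, 0.6], [0.5, 0.8], [0.6, 0.6]]
flows = dominance_flows(toy_scores)
print(flows[0])
```

In the toy data, the first alternative dominates the other two under the first criterion, so the flows in the opposite direction are zero, and the squared normalized flows under each criterion sum to 1.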

Cite this article

Wen, Z., Liao, H. Capturing attitudinal characteristics of decision-makers in group decision making: application to select policy recommendations to enhance supply chain resilience under COVID-19 outbreak. Oper Manag Res 15, 179–194 (2022). https://doi.org/10.1007/s12063-020-00170-z

DOI: https://doi.org/10.1007/s12063-020-00170-z