Abstract

In manifold learning, the intrinsic geometry of the manifold is explored and preserved by identifying the optimal local neighborhood around each observation. It is well known that when a Riemannian manifold is unfolded correctly, observations that lie close together on the manifold should remain close in the lower-dimensional space as well. Because of the nonlinear structure of the manifold around each observation, finding such an optimal neighborhood is a challenge, and a sub-optimal neighborhood may lead to erroneous representations and incorrect inferences. In this paper, we propose a rotation-based affinity metric for accurate graph Laplacian approximation. It exploits the property that the tangent spaces of observations in an optimal neighborhood are aligned to approximate the correct affinity between them. Extensive experiments on both synthetic and real-world datasets have been performed. The proposed method outperforms existing nonlinear dimensionality reduction techniques in low-dimensional representation on most of the synthetic datasets. The results on real-world datasets such as COVID-19 show that our approach increases classification accuracy by enhancing Laplacian regularization.


1 Introduction

A semi-supervised method utilizes unlabeled data for training along with the given labeled data to exploit the hidden intrinsic geometrical information. It implicitly assumes that the underlying data satisfies one of three assumptions: smoothness, clustering, or manifold [27]. Manifold learning methods exploit the manifold assumption. These methods attempt to preserve geometric properties such as distances, proximity, angles, or local patches [22].

Real-world data gathered from imaging devices, medical science, and business applications usually lie in a high-dimensional space, which causes the curse of dimensionality. One of the main objectives in the analysis of such high-dimensional datasets is to learn their geometrical and topological structure. Generally, the data is parameterized as points in \({\mathbb {R}}^{D}\). The correlation between parameters often suggests the manifold assumption: the data points are distributed on a low-dimensional Riemannian manifold of intrinsic dimension m embedded in \({\mathbb {R}}^{D}\), with \(m\ll D\) [2, 5, 6, 30, 33]. Manifold learning algorithms transform the high-dimensional data into a low-dimensional embedding space using dimensionality reduction methods. Principal component analysis (PCA) [9, 35, 40, 47], multidimensional scaling (MDS) [13,14,15, 19], and linear discriminant analysis (LDA) [3, 7, 20] are some popular linear dimensionality reduction algorithms. They provide a true representation in the case of a linear manifold, but fail to discover nonlinear or curved structures in the input data. For handwritten characters, spoken letters, medical images, etc., the manifolds have a nonlinear structure. The intrinsic geometry of a nonlinear manifold is explored by identifying the optimal local neighborhood around each observation. We assume that the data samples \(x_{i}\in X\) are drawn from a smooth Riemannian manifold \({\mathcal {M}}\subset {\mathbb {R}}^{D}\). If a smooth Riemannian manifold is unfolded correctly, observations that lie close together on the manifold should remain close in the lower-dimensional space as well, with their tangent spaces aligned.

Generally, due to the varying curvature of the manifold around each observation, finding such an optimal neighborhood on the manifold is a challenge. Thus, the affinities calculated between these observations on the manifold may be erroneous, as they can be affected by noise. On such a manifold, the tangent planes at neighboring observations are not aligned.

Manifold learning approaches are suitable for unfolding nonlinear structures into a flat low-dimensional embedding space [22]. The aim of these approaches is to identify and exploit locally linear regions. Existing state-of-the-art algorithms such as isometric feature mapping (ISOMAP) [33], local linear embedding (LLE) [29], Laplacian eigenmap (LE) [4], local tangent space alignment (LTSA) [36, 45], Hilbert–Schmidt independence criterion-regularized LTSA (HSIC–LTSA) [46], graph-regularized linear discriminant analysis (GRLDA) [16], jerk-based manifold regularization [39], and robust Laplacian [1] identify and exploit such local structures. These methods have been applied to a wide variety of applications, for instance face recognition, facial expression transfer, handwriting identification, 3D body pose recovery, and medical imaging. One such approach for face recognition is two-dimensional neighborhood preserving projection (2DNPP) [42].

These state-of-the-art manifold learning methods can be categorized into distance-preserving, angle-preserving, and proximity-preserving methods, which align the local neighborhood of each data point into a global coordinate space. Each method focuses on one perspective in order to preserve a single geometric property. For instance, Isomap is a distance-preserving method; LE, LLE and LTSA are proximity-preserving methods, which assume that unfolding the manifold results in aligned tangent planes for all neighboring observations on the manifold [44]. LTSA assumes that the given data is uniformly distributed and that data in a local neighborhood of the manifold is approximately linear, i.e., lies in or close to a linear subspace.

In LE, diffusion map (DM) [23] and vector diffusion map (VDM) [31], the data is represented as a weighted undirected graph. The vertices of the graph correspond to the data observations, and the weights on the edges quantify the affinity between them. In a locally linear manifold, the affinity metric is based on the Euclidean distance and can be described as a kernel function of that distance. If the data \(\{x_{i}\}_{i=1}^{n}\) consists of n observations in \(L_{2}({\mathbb {R}}^{3})\), then the distance between points \(x_i\) and \(x_j\) is calculated using Eq. (1)

and affinity of edges is calculated by Eq. (2)

To call an embedding faithful, we check whether it preserves the local structure of neighborhoods on the manifold, i.e., handles distances, angles, and neighborhoods in a comprehensive way.

LTSA assumes the local linearity of the manifold. Thus, local linear approximations of a manifold are constructed as a collection of overlapping approximate tangent spaces at every observation. These are, then, globally aligned to construct the global coordinate system for the underlying nonlinear manifold [45]. Here, the local tangent space is used to provide a low-dimensional linear approximation of the local geometric structure of the nonlinear manifold. The proposed rotation-based regularization method is based on the observation that the Riemannian assumption of local linearity of the manifold may not hold in a kNN neighborhood. Hence, a dimensionality reduction method, which relies on this assumption may yield suboptimal performance. This entails that the local neighborhood must be flattened so that the Euclidean distance is an accurate measure of affinity. Diffusion map assumes that the Euclidean distance between observations is approximated by the diffusion distance in the original feature space between probability distributions centered at those observations [23]. It assumes a nonlinear geometry and measures the similarity between two points at a specific scale through a diffusion metric.

In this paper, we determine the accurate pairwise affinity by aligning the tangent spaces of all the local points with respect to the point of interest, exploiting the property that the tangent spaces of observations in an optimal neighborhood are aligned. Rotation is used to align the neighbors that deviate from the tangent plane of the point of interest, so that they lie on the same Euclidean plane. If the points are already on the plane, they are unaffected by the rotation. This gives an enhanced affinity for the Laplacian, which is useful when the data is affected by noise and the manifold curvature varies.

The contributions of this work are as follows:

1. In the proposed approach, the Riemannian manifold assumption of local linearity of the kNN graph neighborhoods around data points is ensured by flattening the manifold, i.e., by rotating the tangent spaces of the neighbors to align with the tangent space of the data point of interest. The pairwise Euclidean distance between data points then becomes an accurate measure of the geodesic distance between vertices.

2. The updated affinities based on the pairwise Euclidean distances are used in the graph Laplacian-based manifold regularization. This yields higher classification accuracy, as the modified graph Laplacian, the rotation-based Laplacian, is able to give a better estimate of the underlying marginal distribution.

The remainder of this paper is organized as follows: Sect. 2 defines the problem to be solved in this work. In Sect. 3, we propose our rotation-based Laplacian regularization approach for manifold learning and regularization. Section 4 contains the results obtained using our method and its comparison with state-of-the-art methods. Finally, Sect. 5 concludes our work by highlighting the salient features of rotation-based regularization.

2 Problem definition

On a Riemannian manifold, the local linear neighborhood assumption allows Euclidean distance as a measure of affinity between neighboring data points. Due to the unknown properties of the manifold, the identified neighbors may not lie in the locally linear patch around the point of interest. As the extent of the locally linear patch is unknown, kNN or \(\epsilon\) neighborhood is chosen heuristically as the linear neighborhood. Euclidean distance is computed and used as the affinity measure between data points in the kNN or \(\epsilon\) neighborhood, which is assumed to be linear. In such cases, the Euclidean distance between data points in the neighborhood fails to represent the affinity between them accurately. This requires either accurate determination of the linear region, which is difficult; or, linearization of the kNN or \(\epsilon\) neighborhood.

3 Rotation-based regularization method

Manifold regularization uses the smoothness assumption that a function, f, should change slowly where the marginal probability density is high. This requires estimation of the marginal probability density. In semi-supervised learning, the unknown marginal distribution is estimated using the given data, especially the ample amount of unlabeled data. If the data points on the manifold are represented by a graph, the smoothness of the function, f, on the graph can be measured in terms of a quadratic form of the graph Laplacian. Specifically, the graph Laplacian can be used to estimate the marginal distribution. The data points are the vertices of the graph, and a distance needs to be associated with each pair of adjacent vertices. This entails an accurate estimation of the geodesic distance between vertices. On the Riemannian manifold, the Euclidean distance is an accurate measure of the geodesic distance in a locally linear region. Thus, the problem of determining the geodesic distance between adjacent vertices of the graph reduces to finding the locally linear region. If a small region around a data point is flattened, the Euclidean distance is an accurate measure of the geodesic distance between the vertices. This leads to accurate estimation of the graph Laplacian and, through it, the underlying marginal distribution. In the proposed rotation-based regularization method, the kNN neighborhood is linearized by rotating the tangent planes of data points in the neighborhood, followed by semi-supervised classification using the updated affinities computed between the tangent-space-aligned data points.

3.1 Neighborhood linearization through rotation

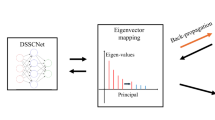

The proposed linearization method flattens the local kNN neighborhood around each data point. This is achieved by rotating the tangent planes of neighboring data points with respect to the tangent plane of the point under consideration. A local linear graph of the dataset is created by fixing the neighborhood of every data point using kNN. The tangent plane of a point is found using local PCA. The tangent planes of all the k neighboring points are also found. Once the tangent planes are determined, the tangent plane of the point of interest is fixed and the other, misaligned tangent planes are rotated to align them with it. This flattens the chosen neighborhood, so that the Euclidean distance can be used as a measure of affinity between data points.

We are given n data samples with l labeled and \((n - l)\) unlabeled points, where \((n - l) \gg l\), on a smooth Riemannian manifold \({\mathcal {M}}\), i.e., \(\{x_{i}\}_{i=1}^{n}\subset {\mathbb {R}}^{D}\), that actually lie on a much lower-dimensional space of dimension m, with \(m \ll D\). The data can be represented by

where C is a compact subset of \({\mathbb {R}}^{m}\) and f is the data generation function, i.e.,

where \(\tau _{i}\) are original feature vectors or the lower-dimensional complement information and \(\eta _{i}\) is redundant data or noise. The noise may be introduced during various stages of data collection and preprocessing and may vary with the distance.

A manifold can be approximated with a graph by using a smooth function defined on the graph. This depends on the affinity matrix W as

where the elements of the affinity matrix W are calculated using the heat kernel \(w_{ij} = \frac{1}{C}\exp \left( -\frac{d_{ij}}{\epsilon ^2}\right)\), where \(d_{ij}\) is the distance between points \(x_i\) and \(x_j\), and the diagonal matrix D has entries \(D_{ii}=\sum _{j=1}^{n}w_{ij}\). kNN is used to create the undirected graph over the given data points, including both labeled and unlabeled ones.
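The construction described here can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes that \(d_{ij}\) is the squared Euclidean distance, that the normalizing constant is \(C = 1\), that the kNN graph is made undirected by keeping an edge if either endpoint selects the other, and that the graph Laplacian takes the unnormalized form \(L = D - W\). The function names are hypothetical.

```python
import numpy as np

def knn_heat_affinity(X, k=6, eps=1.0):
    """Symmetric kNN affinity matrix with heat-kernel weights.

    Assumptions (not taken from the paper): d_ij is the squared
    Euclidean distance, C = 1, and the graph is symmetrized with max.
    """
    n = X.shape[0]
    # Pairwise squared Euclidean distances, shape (n, n).
    sq = np.sum(X**2, axis=1)
    d = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    # Exclude each point from its own neighbor list before sorting.
    d_sort = d.copy()
    np.fill_diagonal(d_sort, np.inf)
    nbrs = np.argsort(d_sort, axis=1)[:, :k]
    # Fill heat-kernel weights on the kNN edges only.
    W = np.zeros((n, n))
    rows = np.repeat(np.arange(n), k)
    cols = nbrs.ravel()
    W[rows, cols] = np.exp(-d[rows, cols] / eps**2)
    # Keep an edge if either endpoint chose the other (undirected graph).
    return np.maximum(W, W.T)

def graph_laplacian(W):
    """Unnormalized graph Laplacian L = D - W with D_ii = sum_j w_ij."""
    return np.diag(W.sum(axis=1)) - W
```

With this form of L, the quadratic form \(f^T L f\) equals \(\frac{1}{2}\sum _{i,j} w_{ij}(f_i - f_j)^2\), which is the smoothness measure that the regularizer in Sect. 3.2 relies on.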

Proposition 1

According to manifold learning assumption, on a manifold \({\mathcal {M}}\) , the tangent planes of the points \(x_i\) and \(x_k\) lying on a locally linear region are aligned.

Given a datapoint \(x_i\) and its neighbor \(x_k\in N(x_i)\) , the tangent planes of \(x_i\) and each \(x_k\) should be aligned.

To find the tangent plane \({T_{x_{i}}}\) of the point \(x_i\), local PCA is performed on the set of k nearest neighbors \(N_k( x_i )\) of point \(x_i\).

where V is a weight matrix and \(T_{x_i}\) contains the principal component scores. The m leading eigenvectors correspond to an orthogonal basis of \({T_{x_{i}}}\).
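As an illustration of the local PCA step, the sketch below estimates an orthonormal tangent basis at one point from its k nearest neighbors. It is a sketch under assumptions: the neighborhood is centered on its mean, the basis is read off from the SVD of the centered patch (whose right singular vectors are the eigenvectors of the local covariance), and the function name and return layout are hypothetical rather than the paper's notation.

```python
import numpy as np

def local_tangent_basis(X, i, k=6, m=2):
    """Estimate the tangent plane at X[i] by PCA on its k nearest neighbors.

    Returns (neighbor indices, basis of shape (D, m), scores of shape (k, m)).
    Requires k >= m and D >= m.
    """
    # Squared distances from X[i] to every point; skip the point itself.
    diff = X - X[i]
    dist = np.einsum("ij,ij->i", diff, diff)
    dist[i] = np.inf
    nbrs = np.argsort(dist)[:k]
    # Center the neighborhood patch on its mean.
    patch = X[nbrs]
    centered = patch - patch.mean(axis=0)
    # Rows of vt are the principal directions, ordered by singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:m].T            # m leading directions as columns
    scores = centered @ basis   # coordinates of the neighbors in the plane
    return nbrs, basis, scores
```

For data sampled from a flat patch, the recovered basis spans that patch exactly; on a curved patch it is only an approximation whose quality depends on k.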

It is known that on a manifold, geodesic distance is the shortest distance between any two data points which is assumed to be Euclidean if the data points are lying in a local linear region. However, since the extent of the linear region around a data point is not known, the geodesic distance may not be Euclidean in a chosen neighborhood.

To find the correct Euclidean distance between the point \(x_i\) and its neighbor \(x_k\), we rotate \({T_{x_{k}}}\) w.r.t. \({T_{x_{i}}}\) and align them.

where \(\gamma\) is the orthogonal rotation matrix calculated using Procrustes analysis [28],

where \(\phi\) denotes translation, \(\gamma\) denotes rotation, and \(\rho\) denotes scaling. In the ideal case of local linear neighborhood, \(\phi\) will be a zero matrix, and \(\gamma\) and \(\rho\) will be unit matrices. But due to nonlinear surface, we optimize the parameters using

This idea is depicted in Fig. 1. To give the optimal rotation \({{\overline{\gamma }}}\), eigenvalue decomposition of same centroid matrices is calculated.

where k is the fixed number of neighbors. Putting these values in Eq. (10)

Let

To minimize \(\xi ({\overline{\gamma }},{\overline{\phi }},{\overline{\rho }})\), the term P is maximized; if its eigenvalue decomposition is given by \(\nu \omega \nu ^T\), the optimal rotation will be

Since the affinity between points \(x_i\) and \(x_k\) on the tangent spaces is not the same as the affinity on the manifold, we reconstruct the point \(x_k\) in the original space using Eq. (16),

where \(T^{\prime}\) is the rotated tangent plane and V is the same weight matrix taken in Eq. (7).
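The rotation step can be illustrated with the standard orthogonal Procrustes solution. Note that this sketch uses the SVD form of that solution rather than the eigenvalue decomposition described above, handles translation by centering, and fixes the scaling \(\rho\) to 1. These are simplifying assumptions, and the function names are hypothetical.

```python
import numpy as np

def procrustes_rotation(A, B):
    """Orthogonal matrix R minimizing ||A @ R - B||_F.

    Standard orthogonal Procrustes solution: R = U @ Vt, where
    U, S, Vt is the SVD of A.T @ B.
    """
    U, _, Vt = np.linalg.svd(A.T @ B)
    return U @ Vt

def align_tangent_scores(T_k, T_i):
    """Rotate neighbor coordinates T_k onto the reference coordinates T_i.

    Both inputs have shape (k, m). Translation is removed by centering;
    scaling is not estimated (rho = 1 is assumed).
    """
    A = T_k - T_k.mean(axis=0)
    B = T_i - T_i.mean(axis=0)
    R = procrustes_rotation(A, B)
    return A @ R + T_i.mean(axis=0), R
```

When the two coordinate sets are already aligned, the recovered rotation is the identity, which matches the ideal locally linear case described for \(\gamma\).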

We calculate the squared Euclidean distance between data points \(x_i\) and \(x^{\prime}_k\) as \(d^{\prime}_{ik} = \parallel x_i - x^{\prime}_k \parallel _2 ^2\).

This distance \(d^{\prime}_{ik}\) is used to calculate the revised affinity matrix using

The revised affinity enforces smoothness of the function in semi-supervised learning by identifying the affinity between neighboring data points accurately.

3.2 Laplacian regularized least squares classifier (LapRLSC)

Let l labeled data points be given as \(\{x_i,y_i\}_{i=1}^l\) and \((n - l)\) unlabeled points be given as \(\{x_u\}_{u=l+1}^{n}\). The prediction function is trained using the given labeled data points [6]

where \(\parallel f\parallel _A^2\) and \(\parallel f\parallel _I^2\) are penalty terms in ambient space and intrinsic space, respectively. The unlabeled input data is used in prediction function by applying manifold assumption on the graph structure, considering the points in \(\{x_u\}\) as nodes and the distances between them as weights. The intrinsic space regularization term R(f) is calculated using [25]

where \(w^{\prime}_{ik}\) is calculated using Eq. (18). Expanding Eq. (20) using Eq. (5), we get

where f is \([f(x_1),f(x_2),\dots ,f(x_n)]^T\). After putting this value in Eq. (19), we get

where \({\mathbf{Y }}_l\) is vector of true labels of the labeled points. According to the classic Representer theorem [6],

where \(\alpha _i\)’s are representation coefficients and \({\mathcal {K}}\) is a Mercer kernel. According to this, Eq. (23) can be rewritten as

where \({\mathbf{K }}\) is the kernel Gram matrix and \({\mathbf{a }}\) is the representation coefficient vector. According to the kernel property \({\mathcal {K}}(x_i,x) = \langle \phi (x_i),\phi (x) \rangle = \phi (x_i)^T\phi (x)\), where \(\phi\) is the kernel mapping, we can write the second term of Eq. (19) as

and

Substituting the values from Eqs. (24) and (25) in Eq. (22), we obtain

The optimum solution, after setting the partial derivative \(\frac{\partial f}{\partial {\mathbf{a}} } = 0\) is obtained as
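For orientation, the sketch below implements the standard closed-form Laplacian RLS solution of Belkin et al. [6], \({\mathbf{a}}^{*} = (JK + \lambda _A l I + \frac{\lambda _I l}{n^2} LK)^{-1} Y\), where J is a diagonal matrix with ones for the labeled points and Y holds the labels padded with zeros. It is assumed, not confirmed, that the paper's final expression has this form. The dense Laplacian helper is a stand-in for the rotation-based Laplacian, the default values of \(\lambda _A\) and \(\lambda _I\) are the fixed values quoted in Sect. 4.2, and all function names are hypothetical.

```python
import numpy as np

def rbf_kernel(X, Z, sigma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of X and the rows of Z."""
    sq = (np.sum(X**2, axis=1)[:, None]
          + np.sum(Z**2, axis=1)[None, :]
          - 2.0 * X @ Z.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))

def dense_laplacian(K):
    """Unnormalized Laplacian from a dense affinity (illustration only;
    the method described in the text uses a kNN graph with revised weights)."""
    W = K.copy()
    np.fill_diagonal(W, 0.0)
    return np.diag(W.sum(axis=1)) - W

def lap_rlsc_fit(K, L, y_labeled, lam_A=0.005, lam_I=0.045):
    """Solve for the expansion coefficients of Laplacian RLS.

    Assumes the labeled points are the first l rows of K and L.
    """
    n = K.shape[0]
    l = len(y_labeled)
    J = np.zeros((n, n))
    J[np.arange(l), np.arange(l)] = 1.0   # selects the labeled points
    Y = np.zeros(n)
    Y[:l] = y_labeled                     # labels padded with zeros
    M = J @ K + lam_A * l * np.eye(n) + (lam_I * l / n**2) * (L @ K)
    return np.linalg.solve(M, Y)

def lap_rlsc_predict(alpha, K_new):
    """Binary prediction sign(f(x)) with f(x) = sum_j alpha_j K(x_j, x)."""
    return np.sign(K_new @ alpha)
```

A typical call would build `K = rbf_kernel(X, X)`, pass a graph Laplacian over all n points, and evaluate new points with `lap_rlsc_predict(alpha, rbf_kernel(X_new, X))`.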

3.3 Complexity analysis

The complexity of \(rLap\) depends upon the number of data points n, their dimension d, the number of nearest neighbors k, and Procrustes analysis. We can calculate the complexity of \(rLap\) using the following steps:

1. The kNN algorithm requires \(O(nd+kn)\) time (Footnote 1).

2. As PCA takes \(O(d^2n+d^3)\) time, and \(d \ll n\), the total time complexity is \(O(d^2n)\).

3. The upper bound for Procrustes analysis is \(O(d^3)\).

Thus, \(rLap\) is bounded by complexity \(O(n(d^2n + k(d^2n + d^3)))\). As \(n \gg k\) and \(n \gg d\), we say that the complexity of \(rLap\) is \(O(n^2)\).

4 Experiments and results

In this section, the proposed rotation-based Laplacian regularization technique \(rLap\) (Footnote 2) is compared with existing state-of-the-art manifold learning and regularization methods on various real-world and synthetic datasets. For data visualization, various 3D synthetic datasets have been projected onto 2D. The performance is evaluated by comparing the intrinsic dimensional representations of all the methods. Further, real-world classification datasets have been used to train the RLSC model using all graph Laplacian variants. Their performance has been evaluated by calculating their root-mean-square error (RMSE) using Eq. (28)

where \(\hat{y_i}\) is the predicted label.
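For reference, RMSE in its usual form, \(\sqrt{\frac{1}{n}\sum _{i}(y_i - \hat{y_i})^2}\), can be computed as below. This assumes the evaluation averages over all evaluated points; the function name is illustrative.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root-mean-square error between true labels and predicted labels."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))
```

With labels in \(\{-1, +1\}\), every misclassified point contributes a squared error of 4, so the value ranges from 0 (all correct) to 2 (all wrong).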

4.1 Dimensionality reduction

In the following experiments, the proposed algorithm \(rLap\) has been compared with the existing state-of-the-art manifold learning approaches including Laplacian, DM, LTSA, entropy affinity \(EA\) [37], \(K _5\) [32], \(K _7\) [41] and min–max–mean (\(MMM\)) [43]. The algorithms have been applied on five synthetic datasets, namely swiss roll, swiss hole, punctured sphere, twin peaks, and elevated swiss roll, where the original 2D structure has been embedded in 3D space. The performance of proposed method on dimensionality reduction exhibits the extent to which the method is capable of preserving the local geometrical properties.

All datasets except the twin peaks dataset have 4000 data points. The twin peaks dataset consists of 7225 data points. The number of neighbors (k) is varied to find the best representation of the data in the lower-dimensional space. Table 1 shows the visualization results.

Swiss roll The swiss roll data is basically a 2D flat strip which is rotated as shown in the first column of Fig. 1 to make it a 3D structure. The dataset consists of 4000 points. Among all the methods, the \(rLap\) method gives the most accurate 2D representation. Laplacian gives a reasonable representation compared to the other methods using \(k=6\). DM gives a grossly inaccurate 2D representation, exhibiting its inability to preserve global connectivity, and hence the rotated strip could not be unfolded correctly.

Swiss hole The swiss hole dataset is the swiss roll dataset with a hole. It consists of 4000 points with a circular hole. Here, LTSA preserves the most intrinsic structure of the data. The \(rLap\) method does not retain the shape of the hole. However, \(rLap\) performs better than the other methods, which fail to preserve the shape of the strip and give an inaccurate 2D representation with disconnected data points.

Elevated swiss roll The elevated swiss roll data is also a 2D flat strip, similar to the swiss roll, with a varying third dimension. The 2D representation using the \(rLap\) method gives a better result than all other methods. This suggests that \(rLap\) holds properties similar to Laplacian, which can be used to exploit the intrinsic geometry of the data.

Punctured sphere The surface of a punctured sphere can be represented as a 2D flat surface. The best representation from \(rLap\) method comes at \(k=14\). \(rLap\) outperformed all the other methods except LTSA. The corners represented by \(rLap\) are smooth.

Twin peaks The twin peaks dataset containing 7225 points is originally a 2D flat surface with peaks at the two corners. \(rLap\) method gives comparable results to DM, which in turn remains the best performer.

It is evident from the results that LTSA and DM cannot preserve distances and angles due to their proximity-preserving nature. \(EA\) completely fails to unfold any dataset except the punctured sphere. The \(rLap\) method attempts to preserve distances and isometry. For the punctured sphere dataset, flattening curved data onto a flat surface violates the distance-preserving criterion; still, our method gives comparable results.

4.2 Real-world datasets

The real-world classification datasets span different categories such as image, sound, text and medical datasets. During the experimental phase, we used the ‘RBF’ kernel with varying kernel width for all the datasets. The optimal results were found for kernel width \(\sigma = 1\). The regularization parameters \(\lambda _A\) and \(\lambda _I\) correspond to the ambient geometry and the intrinsic geometry, respectively. During regularization, the value of \(\lambda _A\) was varied between 0.001 and 0.009 and \(\lambda _I\) was varied between 0.01 and 0.09. For the results calculated using other state-of-the-art methods, we fixed the values \(\lambda _A = 0.005\) and \(\lambda _I = 0.045\). For each class, the number of labeled examples is set to 2, selected randomly across 10 rounds of classification. The \(rLap\) method is executed for RLSC and compared with Laplacian (Lap), p-Laplacian (p-Lap) [24], higher order Laplacian (\(L^{m}\)) [48], ensemble manifold regularization EMR-RLS-24G (\(EMR24\)) [11], \(EA\), \(K _5\), \(K _7\) and \(MMM\) methods.
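The labeling protocol above (two labeled examples per class, redrawn for each of 10 rounds) can be sketched as follows. The function name, the seed handling, and the choice to sample without replacement are assumptions made for illustration; the paper does not specify them.

```python
import numpy as np

def sample_labeled_indices(y, per_class=2, rounds=10, seed=0):
    """Draw `per_class` labeled indices from every class, once per round.

    Returns a list of `rounds` sorted index arrays; all remaining
    points are treated as unlabeled in that round.
    """
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    splits = []
    for _ in range(rounds):
        idx = np.concatenate([
            rng.choice(np.flatnonzero(y == c), size=per_class, replace=False)
            for c in np.unique(y)
        ])
        splits.append(np.sort(idx))
    return splits
```

Reported errors would then be averaged over the rounds, so that the result does not hinge on one particular choice of labeled points.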

Isolet dataset The Isolet dataset is a collection of English letters spoken in isolation by 150 people [10]. Each letter of the alphabet was spoken twice by each speaker. Speakers were divided into five groups of equal size, and the recordings of each group are termed Isolet1 to Isolet5. Each Isolet set has 1560 samples, and each sample is represented by 617 features. The experiment was repeated 10 times, each time with a different set of labels. Isolet1 was used for training and Isolet5 for testing.

USPS handwritten digit dataset The USPS dataset contains digits (0–9) digitized from handwritten zip codes [18]. The same digit has been sampled from different handwritings, for a total of 7291 samples. In the experiment, we applied one-vs-all classification for predicting a digit. A total of 4000 images, 400 instances per digit, are used for training and the remaining 3291 images for testing.

MNIST dataset The MNIST dataset (Footnote 3) consists of handwritten digits, with a training set of 60,000 and a test set of 10,000 examples. In this experiment, we have taken all 70,000 images, out of which 4000 examples per digit have been used for training and the remaining 30,000 for testing.

HASYv2 dataset The HASY dataset contains single symbols similar to MNIST. It contains 168,233 instances of 369 classes, including Arabic numerals and Latin characters, each of size \(32 \times 32\). HASYv2 has far fewer samples per class [34]. The experiment uses only nine symbols (classes) that have a minimum of 500 samples per class. Each symbol is classified pairwise against every other one, yielding 36 binary classification problems.
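The count of 36 follows from choosing 2 of the 9 classes, \(\binom{9}{2} = 36\). A short sketch of enumerating such one-vs-one tasks is given below; the integer class labels are placeholders, not the actual HASYv2 symbol identifiers.

```python
from itertools import combinations

def pairwise_tasks(classes):
    """List every unordered pair of classes, one binary problem per pair."""
    return list(combinations(classes, 2))

tasks = pairwise_tasks(range(9))
print(len(tasks))  # 36
```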

BCI dataset The brain–computer interface (BCI) dataset is an electroencephalographic mental imagery dataset [17]. It contains 60 hours of EEG BCI recordings spread across 75 experiments and 13 participants, featuring 60,000 mental imagery examples in four different BCI interaction paradigms with up to 6 EEG BCI interaction states. In our experiment, we used the HaLT interaction paradigm, which consists of imageries of left and right hand movement, left and right leg movement, and tongue movement, for a total of six mental states to be used for interaction with the BCI. All readings of subjectA have been used for pairwise classification, yielding 10 binary classification problems. A total of 1175 readings have been used for training and 1275 for testing.

COIL-20 dataset The COIL-20 dataset consists of 1440 grayscale images of 20 different everyday objects of size \(128\times 128\) [26]. Forty-five instances per object, i.e., 900 images, were taken for training. The remaining 27 instances per object, i.e., 540 images, were taken for testing.

Cifar-10 dataset The Cifar-10 dataset contains 60,000 color images of 10 classes of size \(32\times 32\) pixels [21]. Every class consists of 6000 images. 50,000 images are given for training and 10,000 images for testing. In this experiment, only 6000 images are used for training, i.e., 600 images per class, and a total of 4000 images are used for testing.

Fashion MNIST dataset The Fashion MNIST [38] dataset consists of 70,000 images from 10 different fashion categories. All the images are in grayscale and of \(28\times 28\) pixel size. In the experiment, for each of the 10 rounds, a total of 42,469 data instances were selected randomly for training and the rest were used for testing.

Lego brick dataset Lego bricks dataset contains total 16 categories of building bricks of different shapes, which are manufactured by Lego [12]. There are \(\approx \,400\) images for each category. Images are in grayscale and size of each image is \(200\times 200\) pixels.

Figures 2, 3, 4, 5, 6, 7, 8, 9 and 10 show the root-mean-square error (RMSE) results for test and unlabeled data of the datasets at k = 6 for RLSC (Footnote 4). The parametric analysis of \(\lambda _A\) and \(\lambda _I\) is also depicted in the figures.

While tuning \(\lambda _A\) for a fixed \(\lambda _I\), it is observed that RMSE increases gradually with increasing \(\lambda _A\) for the MNIST, BCI, Fashion MNIST, and Lego bricks datasets, whereas RMSE decreases for the ISOLET and USPS datasets. A change in \(\lambda _A\) does not affect RMSE for the HASY dataset, and for the COIL20 dataset RMSE does not change after \(\lambda _A =0.005\). While tuning \(\lambda _I\), RMSE increases for all the datasets except ISOLET and USPS.

Tables 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18 and 19 contain the RMSE values of all the methods compared with \(rLap\) for \(k=6\) to \(k=20\). For the spoken letter dataset Isolet, \(rLap\) outperforms other methods for all the NN values. It gives \(\approx 80.34\%\) and \(\approx 85.99\%\) accuracy for test and unlabeled data respectively.

For the handwritten datasets USPS, MNIST and HASYv2, test data accuracy is \(\approx 97.98\%\), \(\approx 88.58\%\) and \(\approx 96.82\%\), respectively; unlabeled data accuracy is \(\approx 98.63\%\), \(\approx 98.07\%\) and \(\approx 96.94\%\), respectively. For the USPS dataset, \(rLap\) performs better than the rest of the methods for \(k=6\) to \(k=12\); beyond that, it is dominated by the \(MMM\) and \(K_7\) methods. For the MNIST dataset, \(EA\) outperforms \(rLap\) beyond \(k=12\).

For BCI test data \(rLap\) performs better than the rest of the methods at \(k=6\) and \(k=12\). For unlabeled data \(EMR24\) outperforms other methods. For both test and unlabeled datasets, the classification accuracy is \(\approx 75\%\).

For the object detection datasets Fashion MNIST and Lego brick, \(rLap\) outperforms all the other methods. The achieved accuracy for test data is \(\approx 93.21\%\) and \(\approx 85.52\%\), respectively, whereas for unlabeled data it is \(\approx 94.07\%\) and \(\approx 93.75\%\), respectively. For the other two object detection datasets, COIL-20 and Cifar, test data accuracies are \(\approx 98.73\%\) and \(\approx 75.43\%\) and unlabeled data accuracies are \(\approx 98.79\%\) and \(\approx 75.71\%\). The results for COIL-20 test data show that \(rLap\) has the minimum RMSE for \(k=6\) to \(k=16\). For unlabeled data, \(Lap\) performs better than \(rLap\) beyond \(k=12\). For Cifar unlabeled data, \(rLap\) performs better, whereas for test data p-Lap gives the best results from \(k=12\) onwards; still, the \(rLap\) method gives results comparable to p-Lap.

4.3 Medical image datasets

In case of medical image classification, we find limited number of labeled datasets. So it is important for a model to classify these medical images accurately using semi-supervised approach. In our experiment, we have considered five benchmark medical image datasets for binary classification.

Mammography images dataset This dataset is taken from Mammographic Image Analysis Society (Mini-MIAS)Footnote 5 to predict breast cancer. The dataset consisted of 322 mammography images having \(1024 \times 1024\) dimensions each. The dataset was divided into 200 and 122 images for training and testing respectively.

Diabetic retinopathy images This dataset is taken from Indian Diabetic Retinopathy Image Dataset (IDRiD) Web site.Footnote 6 This abnormality of eyes affects the retina of patients by increasing the amount of insulin in their blood. In this experiment, a subset containing 516 images was used, where each image is of resolution \(4288 \times 2848\) pixels. The training set consisted of 344 images and remaining 172 images were used for testing.

Chest X-ray images This dataset contains 338 chest X-ray images,Footnote 7 used to classify data for COVID-19. Though the dataset contains the images for SARS (Severe acute respiratory syndrome), ARDS (acute respiratory distress syndrome) and other classes [8], etc; we have applied the concept of one-vs-all classification for predicting COVID-19 only. Out of 422 X-ray and CT Scan images of 216 patients, we have taken only X-ray images of 194 patients, containing 272 COVID-19 positive images.

COVID CT Scan images This dataset contains COVID CT Scan images.Footnote 8 A total of 349 images of 216 patients are COVID-positive and 397 images are non-COVID images. All the images are of different resolutions, varying from minimum \(153 \times 124\) pixels to maximum of \(1853 \times 1485\) pixels; averaging \(491 \times 383\) pixels. Total 400 images are considered in training and remaining 346 for testing.

Alzheimer’s Brain MRI This dataset consists of 6400 Alzheimer’s brain MRI images (Footnote 9) of resolution \(176 \times 208\) pixels each, with 5121 images for training and 1279 for testing. In Alzheimer’s disease, cognitive impairment can be very mild, mild or moderate, so the dataset has four classes of images: non demented, very mild demented, mild demented and moderate demented. In the experiment, non demented and very mild demented images are treated as negative and the rest as positive.
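The negative/positive grouping described above can be written as a small label-mapping helper. This is only an illustrative sketch: the function name `binarize`, the \(\pm 1\) target encoding, and the exact class-name strings are assumptions and are not taken from the authors' code.

```python
# Hypothetical helper: collapse a multi-class label into a binary target.
# The +1 / -1 encoding and the class-name strings are assumptions.
ALZHEIMER_POSITIVE = frozenset({"mild demented", "moderate demented"})


def binarize(label, positive_classes):
    """Return +1 if `label` belongs to `positive_classes`, otherwise -1."""
    return 1 if label.strip().lower() in positive_classes else -1


labels = ["non demented", "very mild demented", "mild demented", "moderate demented"]
targets = [binarize(name, ALZHEIMER_POSITIVE) for name in labels]
```

Passing a single-element set, for example `frozenset({"covid-19"})`, expresses a one-vs-all scheme of the kind used for the chest X-ray data.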

The images in Fig. 11 show examples from each medical image dataset. Results obtained on these datasets for \(k=6\) are summarized in Table 20 for test data and in Table 21 for unlabeled data. On the unlabeled data, \(rLap\) gives the best results for all the medical image datasets. On the test data, \(EMR\) performs better than \(rLap\) on the Mini-MIAS dataset, and \(MMM\) gives the highest accuracy on the Alzheimer’s dataset. For COVID-19 and diabetic retinopathy, \(rLap\) performs better than all the compared state-of-the-art methods.
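To make the role of \(k\) concrete, the following is a minimal sketch of a standard \(k\)-nearest-neighbor graph Laplacian with heat-kernel weights, the kind of baseline Laplacian that manifold regularization builds on. It assumes that \(k\) denotes the number of nearest neighbors; the function name, the kernel width `sigma`, and the symmetrization rule are illustrative assumptions, and this is not the \(rLap\) affinity itself.

```python
import numpy as np


def knn_graph_laplacian(X, k=6, sigma=1.0):
    """Unnormalized Laplacian L = D - W of a symmetrized k-NN graph.

    X is an (n, D) array of samples. Weights use a heat kernel on the
    squared Euclidean distance (an assumed, commonly used choice).
    """
    n = X.shape[0]
    # Pairwise squared Euclidean distances (dense; fine for small n only).
    sq = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    W = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(sq[i])[1:k + 1]      # skip the point itself
        W[i, nbrs] = np.exp(-sq[i, nbrs] / (2.0 * sigma ** 2))
    W = np.maximum(W, W.T)                     # keep an edge if either end selects it
    return np.diag(W.sum(axis=1)) - W


# Toy usage on random points standing in for image feature vectors.
rng = np.random.default_rng(0)
L = knn_graph_laplacian(rng.normal(size=(30, 5)), k=6)
```

A larger `k` connects points that may lie far apart on the manifold, while a smaller `k` can fragment the graph, which is one way to see why the neighborhood size matters.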

Remarks Based on the experimental results, the proposed \(rLap\) method outperforms the existing manifold regularization methods on most of the datasets. Classification accuracy remained high for the spoken alphabet (Isolet) and image (Fashion-MNIST, Lego bricks, and COVID-19 medical images) datasets, while a moderate improvement in accuracy was achieved for the unlabeled data of many handwritten datasets. Performance of the trained model on test data shows a significant improvement on the COIL20 dataset, suggesting that the model does not overfit. For manifold learning as well, our method better unfolds the synthetic datasets in 2D. In summary, \(rLap\) performed better than existing state-of-the-art manifold learning and regularization methods for most of the datasets.

5 Conclusion

It is known that the extent of a locally linear neighborhood on a Riemannian manifold is difficult to ascertain. In this paper, we flatten a heuristically chosen neighborhood by aligning the tangent spaces of its points, so that Euclidean distance can serve as a measure of pairwise affinity. Extensive experiments on both synthetic and real-world datasets show that the proposed method performs well in both manifold learning and regularization. For dimensionality reduction, our algorithm gives a better representation of synthetic datasets than the Laplacian, LTSA, DM, EA, \(K_5\), \(K_7\) and MMM approaches. The reduced classification error for RLSC indicates that the \(rLap\)-based Euclidean distance, computed between neighbors after aligning the tangent spaces of misaligned neighbors, is a good representation of affinity. However, the choice of the neighborhood size is vital for the success of the alignment-based affinity measure.
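The general idea of aligning tangent spaces before measuring distance can be sketched as follows. This is not the authors' \(rLap\) algorithm (their code is linked in the Notes); it is a simplified illustration that assumes tangent spaces are estimated by local PCA, aligned by orthogonal Procrustes, and turned into an affinity with a heat kernel. All function names and parameters (`k`, `m`, `sigma`) are hypothetical.

```python
import numpy as np


def tangent_basis(X, i, k, m):
    """Local PCA: orthonormal (D x m) basis of the tangent space at X[i]."""
    dist = np.linalg.norm(X - X[i], axis=1)
    nbrs = np.argsort(dist)[1:k + 1]           # k nearest neighbors, skipping X[i]
    P = X[nbrs] - X[i]                         # neighbors relative to X[i]
    _, _, Vt = np.linalg.svd(P, full_matrices=False)
    return Vt[:m].T                            # top-m right singular vectors


def procrustes_alignment(Ti, Tj):
    """Orthogonal (m x m) matrix O minimizing ||Ti @ O - Tj||_F."""
    U, _, Vt = np.linalg.svd(Ti.T @ Tj)
    return U @ Vt


def flattened_distance(X, i, j, k=6, m=2):
    """Distance between X[i] and X[j] in aligned tangent coordinates."""
    Ti, Tj = tangent_basis(X, i, k, m), tangent_basis(X, j, k, m)
    O = procrustes_alignment(Ti, Tj)
    delta = X[j] - X[i]
    ci = Ti.T @ delta                          # displacement in i's tangent frame
    cj = O @ (Tj.T @ delta)                    # j's frame coordinates rotated into i's frame
    return float(np.linalg.norm(0.5 * (ci + cj)))


def affinity(X, i, j, k=6, m=2, sigma=1.0):
    """Heat-kernel weight on the flattened distance (kernel choice is assumed)."""
    d = flattened_distance(X, i, j, k, m)
    return float(np.exp(-d ** 2 / (2.0 * sigma ** 2)))


# Toy check: a flat 2D patch rotated into 3D.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
X_flat = np.hstack([rng.normal(size=(40, 2)), np.zeros((40, 1))]) @ Q.T
d_flat = flattened_distance(X_flat, 0, 1)
```

On a flat patch the two tangent frames span the same subspace, so the flattened distance reduces to the ordinary Euclidean distance; on a curved patch the two generally differ.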

Notes

Experimental code is available at https://github.com/imprashantshukla/rLap.

ET and EU represent RMSE for test and unlabeled data respectively.
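For reference, RMSE between true targets and predictions can be computed as in the minimal sketch below. The function name and the use of plain Python sequences are illustrative assumptions; the source does not specify how the targets or predictions are encoded.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length numeric sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))
```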

References

Abhishek, Verma S (2019) Optimal manifold neighborhood and kernel width for robust non-linear dimensionality reduction. Knowl Based Syst 18:104953. https://doi.org/10.1016/j.knosys.2019.104953

Ando R, Zhang T (2006) Learning on graph with Laplacian regularization. Adv Neural Inf Proc Syst 19:25–32

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces versus fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Belkin M, Niyogi P (2003) Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput 15(6):1373–1396

Belkin M, Niyogi P, Sindhwani V (2005) On manifold regularization. In: AISTATS, p 1

Belkin M, Niyogi P, Sindhwani V (2006) Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J Mach Learn Res 7(Nov):2399–2434

Ching WK, Chu D, Liao LZ, Wang X (2012) Regularized orthogonal linear discriminant analysis. Pattern Recognit 45(7):2719–2732

Cohen JP, Morrison P, Dao L (2020) COVID-19 image data collection. arXiv:2003.11597. https://github.com/ieee8023/covid-chestxray-dataset. Accessed April 2020

De la Torre F, Black MJ (2001) Robust principal component analysis for computer vision. In: Proceedings of eighth IEEE international conference on computer vision. ICCV 2001, vol 1. IEEE, pp 362–369

Fanty M, Cole R (1991) Spoken letter recognition. In: Lippmann RP, Moody J, Touretzky D (eds) Advances in neural information processing systems, vol 3. Morgan-Kaufmann, pp 220–226. https://proceedings.neurips.cc/paper/1990/file/49182f81e6a13cf5eaa496d51fea6406-Paper.pdf

Geng B, Tao D, Xu C, Yang L, Hua XS (2012) Ensemble manifold regularization. IEEE Trans Pattern Anal Mach Intell 34(6):1227–1233

Hazelzet J (2019) Images of lego bricks. https://www.kaggle.com/joosthazelzet/lego-brick-images. Accessed January 2020

Holm L, Sander C (1996) Mapping the protein universe. Science 273(5275):595–602

Hou J, Jun SR, Zhang C, Kim SH (2005) Global mapping of the protein structure space and application in structure-based inference of protein function. Proc Natl Acad Sci 102(10):3651–3656

Hou J, Sims GE, Zhang C, Kim SH (2003) A global representation of the protein fold space. Proc Natl Acad Sci 100(5):2386–2390

Huang S, Yang D, Zhou J, Zhang X (2015) Graph regularized linear discriminant analysis and its generalization. Pattern Anal Appl 18(3):639–650

Kaya M, Binli MK, Ozbay E, Yanar H, Mishchenko Y (2018) A large electroencephalographic motor imagery dataset for electroencephalographic brain computer interfaces. Sci Data 5:180211

Kaynak C (1995) Methods of combining multiple classifiers and their applications to handwritten digit recognition. Unpublished master’s thesis, Bogazici University

Kim J, Ahn Y, Lee K, Park SH, Kim S (2010) A classification approach for genotyping viral sequences based on multidimensional scaling and linear discriminant analysis. BMC Bioinform 11(1):434

Kosinov S, Pun T (2008) Distance-based discriminant analysis method and its applications. Pattern Anal Appl 11(3–4):227–246

Krizhevsky A et al (2009) Learning multiple layers of features from tiny images, vol 7. Citeseer

Lin T, Liu Y, Wang B, Wang L, Zha H (2016) Local orthogonality preserving alignment for nonlinear dimensionality reduction

Lin T, Zha H, Lee SU (2006) Riemannian manifold learning for nonlinear dimensionality reduction. In: European conference on computer vision. Springer, Berlin, pp 44–55

Liu W, Ma X, Zhou Y, Tao D, Cheng J (2018) \(p\)-Laplacian regularization for scene recognition. IEEE Trans Cybern 49(8):2927–2940

Liu X, Zhai D, Zhao D, Zhai G, Gao W (2014) Progressive image denoising through hybrid graph Laplacian regularization: a unified framework. IEEE Trans Image Process 23(4):1491–1503

Nene SA, Nayar SK, Murase H (1996) Columbia object image library (COIL-20). Tech. rep

Chapelle O, Schölkopf B, Zien A (2006) Semi-supervised learning. IEEE Trans Neural Netw 20:542

Saeed N, Nam H (2016) Cluster based multidimensional scaling for irregular cognitive radio networks localization. IEEE Trans Signal Process 64(10):2649–2659

Saul LK, Roweis ST (2000) An introduction to locally linear embedding. Unpublished. http://www.cs.toronto.edu/~roweis/lle/publications.html. Accessed May 2019

Saul LK, Roweis ST (2003) Think globally, fit locally: unsupervised learning of low dimensional manifolds. J Mach Learn Res 4(June):119–155

Singer A, Wu HT (2012) Vector diffusion maps and the connection Laplacian. Commun Pure Appl Math 65(8):1067–1144

Taşdemir K, Yalçin B, Yildirim I (2015) Approximate spectral clustering with utilized similarity information using geodesic based hybrid distance measures. Pattern Recognit 48(4):1465–1477. https://doi.org/10.1016/j.patcog.2014.10.023

Tenenbaum JB, De Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290(5500):2319–2323

Thoma M (2017) The HASYv2 dataset. arXiv:1701.08380

Turk MA, Pentland AP (1991) Face recognition using eigenfaces. In: Proceedings. 1991 IEEE Computer society conference on computer vision and pattern recognition. IEEE, pp 586–591

Vidya G, Omprakash S (2016) Survey on recent researches on high level image retrieval. Int J Comput Sci Eng 4(9):72–77

Vladymyrov M, Carreira-Perpinan M (2013) Entropic affinities: properties and efficient numerical computation. In: International conference on machine learning, pp 477–485

Xiao H, Rasul K, Vollgraf R (2017) Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. arXiv:1708.07747

Yadav RK, Verma S, Venkatesan S et al (2020) Regularization on a rapidly varying manifold. Int J Mach Learn Cybern 1–20

Yeung KY, Ruzzo WL (2001) Principal component analysis for clustering gene expression data. Bioinformatics 17(9):763–774

Zelnik-Manor L, Perona P (2004) Self-tuning spectral clustering. In: Advances in neural information processing systems 17, NIPS 2004, December 13–18, 2004, Vancouver, British Columbia, Canada, pp 1601–1608. http://papers.nips.cc/paper/2619-self-tuning-spectral-clustering

Zhang H, Wu QJ, Chow TW, Zhao M (2012) A two-dimensional neighborhood preserving projection for appearance-based face recognition. Pattern Recognit 45(5):1866–1876

Zhang L, Lin J, Karim R (2018) Adaptive kernel density-based anomaly detection for nonlinear systems. Knowl Based Syst 139:50–63

Zhang Z, Zha H (2003) Nonlinear dimension reduction via local tangent space alignment. In: International conference on intelligent data engineering and automated learning. Springer, Berlin, pp 477–481

Zhang Z, Zha H (2004) Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM J Sci Comput 26(1):313–338

Zheng X, Ma Z, Li L (2020) Local tangent space alignment based on Hilbert–Schmidt independence criterion regularization. Pattern Anal Appl 23(2):855–868. https://doi.org/10.1007/s10044-019-00810-6

Zheng-Bradley X, Rung J, Parkinson H, Brazma A (2010) Large scale comparison of global gene expression patterns in human and mouse. Genome Biol 11(12):R124

Zhou X, Belkin M (2011) Semi-supervised learning by higher order regularization. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics, pp 892–900

Cite this article

Shukla, P., Abhishek, Verma, S. et al. A rotation based regularization method for semi-supervised learning. Pattern Anal Applic 24, 887–905 (2021). https://doi.org/10.1007/s10044-020-00947-9

DOI: https://doi.org/10.1007/s10044-020-00947-9